
Chapter 16. Managing TLS certificates

Streams for Apache Kafka supports TLS for encrypted communication between Kafka and Streams for Apache Kafka components.

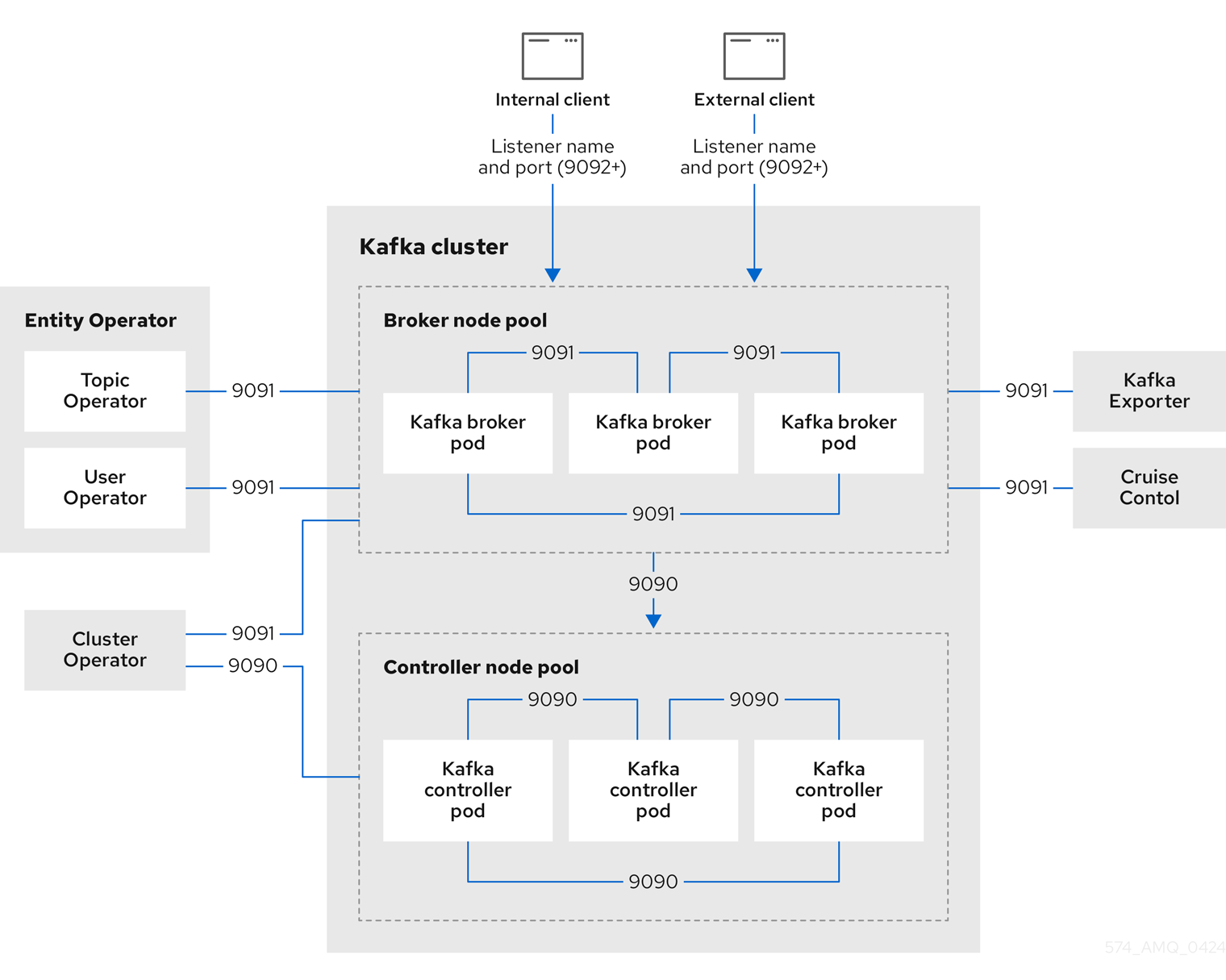

Streams for Apache Kafka establishes encrypted TLS connections for communication between the following components when using Kafka in KRaft mode:

- Kafka brokers

- Kafka controllers

- Kafka brokers and controllers

- Streams for Apache Kafka operators and Kafka

- Cruise Control and Kafka brokers

- Kafka Exporter and Kafka brokers

Connections between clients and Kafka brokers use listeners that you must configure to use TLS-encrypted communication. You configure these listeners in the Kafka custom resource, and each listener name and port number must be unique within the cluster. Communication between Kafka brokers and Kafka clients is encrypted according to how the tls property is configured for the listener. For more information, see Chapter 14, Setting up client access to a Kafka cluster.

The following diagram shows the connections for secure communication.

Figure 16.1. KRaft-based Kafka communication secured by TLS encryption

The ports shown in the diagram are used as follows:

- Control plane listener (9090): The internal control plane listener on port 9090 handles communication between Kafka controllers and between brokers and controllers. The Cluster Operator also communicates with the controllers through this listener. This listener is not accessible to Kafka clients.

- Replication listener (9091): Data replication between brokers, as well as internal connections to the brokers from the Streams for Apache Kafka operators, Cruise Control, and Kafka Exporter, use the replication listener on port 9091. This listener is not accessible to Kafka clients.

- Listeners for client connections (9092 or higher): Internal and external clients connect to Kafka brokers through these listeners, using TLS encryption when it is enabled in the listener configuration. External clients (producers and consumers) connect to the Kafka brokers through the advertised listener port.

When configuring listeners for client access to brokers, you can use port 9092 or higher (9093, 9094, and so on), with a few exceptions. Listeners cannot use the ports reserved for internal communication (9090 and 9091), Prometheus metrics (9404), or JMX (Java Management Extensions) monitoring (9999).
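The port rules above can be checked before you edit a listener. The following Bash function is a minimal sketch: its name is hypothetical, and it only checks the reserved ports listed in this section. It does not check that listener names and ports are unique across the cluster.

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeeds (exit status 0) if PORT may be used for a
# client listener according to the rules above, and fails otherwise.
is_client_listener_port() {
  local port="$1"
  # Accept only positive integers without leading zeros.
  [[ "$port" =~ ^[1-9][0-9]*$ ]] || return 1
  # Client listeners use 9092 or higher. This also excludes 9090 and 9091,
  # which are reserved for the control plane and replication listeners.
  (( port >= 9092 && port <= 65535 )) || return 1
  case "$port" in
    9404) return 1 ;;  # reserved for Prometheus metrics
    9999) return 1 ;;  # reserved for JMX monitoring
  esac
  return 0
}
```

For example, is_client_listener_port 9094 succeeds, while 9091, 9404, and 9999 make the function fail.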

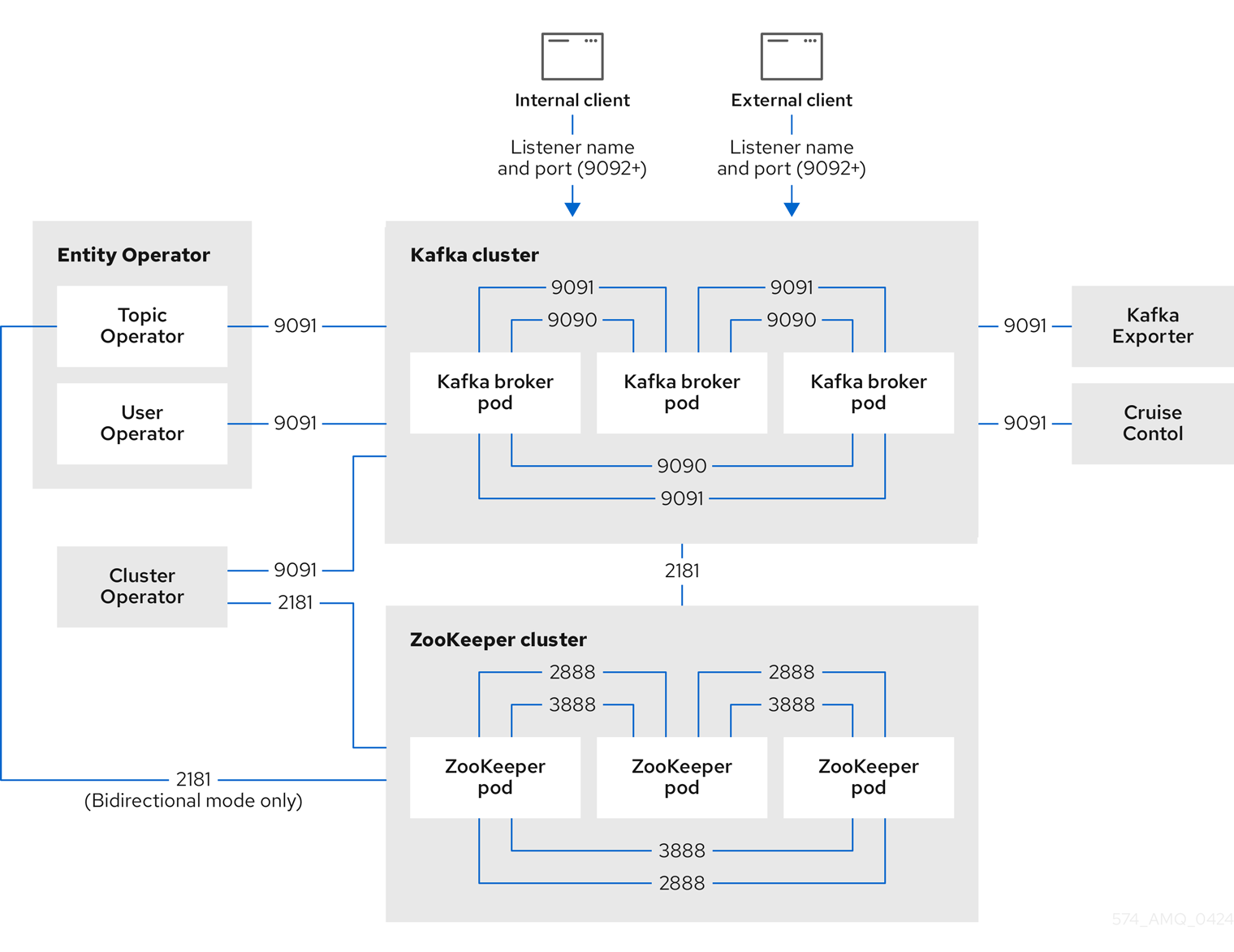

If you are using ZooKeeper for cluster management, TLS connections are also established between ZooKeeper and the Kafka brokers, and between ZooKeeper and the Streams for Apache Kafka operators.

The following diagram shows the connections for secure communication when using ZooKeeper.

Figure 16.2. Kafka and ZooKeeper communication secured by TLS encryption

The ZooKeeper ports are used as follows:

- ZooKeeper port (2181): ZooKeeper port for connection to Kafka brokers. Additionally, the Cluster Operator communicates with ZooKeeper through this port. If you are using the Topic Operator in bidirectional mode, it also communicates with ZooKeeper through this port.

- ZooKeeper internodal communication port (2888): ZooKeeper port for internodal communication between ZooKeeper nodes.

- ZooKeeper leader election port (3888): ZooKeeper port for leader election among ZooKeeper nodes in a ZooKeeper cluster.

16.1. Internal cluster CA and clients CA

To support encryption, each Streams for Apache Kafka component needs its own private keys and public key certificates. All component certificates are signed by an internal CA (certificate authority) called the cluster CA.

CA (Certificate Authority) certificates are generated by the Cluster Operator to verify the identities of components and clients.

Similarly, each Kafka client application connecting to Streams for Apache Kafka using mTLS needs to use private keys and certificates. A second internal CA, named the clients CA, is used to sign certificates for the Kafka clients.

Both the cluster CA and clients CA have a self-signed public key certificate.

Kafka brokers are configured to trust certificates signed by either the cluster CA or clients CA. Components that clients do not need to connect to, such as ZooKeeper, only trust certificates signed by the cluster CA. Unless TLS encryption for external listeners is disabled, client applications must trust certificates signed by the cluster CA. This is also true for client applications that perform mTLS authentication.

By default, Streams for Apache Kafka automatically generates and renews CA certificates issued by the cluster CA or clients CA. You can configure the management of these CA certificates using Kafka.spec.clusterCa and Kafka.spec.clientsCa properties.

If you don’t want to use the CAs generated by the Cluster Operator, you can install your own cluster and clients CA certificates. Any certificates you provide are not renewed by the Cluster Operator.

16.2. Secrets generated by the operators

The Cluster Operator automatically sets up and renews TLS certificates to enable encryption and authentication within a cluster. It also sets up other TLS certificates if you want to enable encryption or mTLS authentication between Kafka brokers and clients.

Secrets are created when custom resources such as Kafka and KafkaUser are deployed. Streams for Apache Kafka uses these secrets to store private and public key certificates for Kafka clusters, clients, and users. The secrets are used for establishing TLS-encrypted connections between Kafka brokers, and between brokers and clients. They are also used for mTLS authentication.

Cluster and clients secrets are always pairs: one contains the public key and one contains the private key.

- Cluster secret: A cluster secret contains the cluster CA to sign Kafka broker certificates. Connecting clients use the certificate to establish a TLS-encrypted connection with a Kafka cluster. The certificate verifies broker identity.

- Client secret: A client secret contains the clients CA for a user to sign its own client certificate. This allows mutual authentication against the Kafka cluster. The broker validates a client’s identity through the certificate.

- User secret: A user secret contains a private key and certificate. The secret is created and signed by the clients CA when a new user is created. The key and certificate are used to authenticate and authorize the user when accessing the cluster.

You can provide Kafka listener certificates for TLS listeners or external listeners that have TLS encryption enabled. Use Kafka listener certificates to incorporate the security infrastructure you already have in place.

16.2.1. TLS authentication using keys and certificates in PEM or PKCS #12 format

The secrets created by Streams for Apache Kafka provide private keys and certificates in PEM (Privacy Enhanced Mail) and PKCS #12 (Public-Key Cryptography Standards) formats. PEM and PKCS #12 are standard formats for storing the keys and certificates used to establish TLS connections.

You can configure mutual TLS (mTLS) authentication that uses the credentials contained in the secrets generated for a Kafka cluster and user.

To set up mTLS, you need the credentials stored in the following secrets:

- When you deploy a Kafka cluster, a <cluster_name>-cluster-ca-cert secret is created with the public key to verify the cluster. You use the public key to configure a truststore for the client.

- When you create a KafkaUser, a <kafka_user_name> secret is created with the keys and certificates to verify the user (client). Use these credentials to configure a keystore for the client.

With the Kafka cluster and client set up to use mTLS, you extract credentials from the secrets and add them to your client configuration.

- PEM keys and certificates

  For PEM, you add the following to your client configuration:

  - Truststore
    - ca.crt from the <cluster_name>-cluster-ca-cert secret, which is the CA certificate for the cluster.
  - Keystore
    - user.crt from the <kafka_user_name> secret, which is the public certificate of the user.
    - user.key from the <kafka_user_name> secret, which is the private key of the user.

- PKCS #12 keys and certificates

  For PKCS #12, you add the following to your client configuration:

  - Truststore
    - ca.p12 from the <cluster_name>-cluster-ca-cert secret, which is the CA certificate for the cluster.
    - ca.password from the <cluster_name>-cluster-ca-cert secret, which is the password to access the public cluster CA certificate.
  - Keystore
    - user.p12 from the <kafka_user_name> secret, which is the public key certificate of the user.
    - user.password from the <kafka_user_name> secret, which is the password to access the public key certificate of the Kafka user.
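The following Bash sketch pulls the fields listed above out of the secrets with oc and decodes them into local files, using the same jsonpath and base64 -d pattern as the validity checks later in this chapter. The function names, their arguments, and the output directory layout are illustrative assumptions, not part of Streams for Apache Kafka.

```shell
#!/usr/bin/env bash

# Hypothetical helper: decode one data field of a secret into a local file.
# Dots in the field name are escaped for jsonpath (ca.crt becomes ca\.crt).
extract_secret_field() {
  local namespace="$1" secret="$2" field="$3" out="$4" escaped data
  escaped=$(printf '%s' "$field" | sed 's/\./\\./g')
  data=$(oc get secret "$secret" -n "$namespace" -o "jsonpath={.data.${escaped}}") || return 1
  # Fail instead of writing an empty file if the field is missing.
  [[ -n "$data" ]] || return 1
  printf '%s' "$data" | base64 -d > "$out"
}

# Hypothetical helper: write the truststore files (from the cluster CA
# certificate secret) and keystore files (from the KafkaUser secret) into DIR.
extract_client_credentials() {
  local namespace="$1" cluster="$2" user="$3" dir="$4" field
  mkdir -p "$dir" || return 1
  for field in ca.crt ca.p12 ca.password; do
    extract_secret_field "$namespace" "${cluster}-cluster-ca-cert" "$field" "$dir/$field" || return 1
  done
  for field in user.crt user.key user.p12 user.password; do
    extract_secret_field "$namespace" "$user" "$field" "$dir/$field" || return 1
  done
}
```

For example, extract_client_credentials my-project my-cluster my-user ./creds writes the files into ./creds. Treat that directory as sensitive, because it contains the user's private key and the store passwords.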

PKCS #12 is supported by Java, so you can add the values of the certificates directly to your Java client configuration. You can also reference the certificates from a secure storage location. With PEM files, you must add the certificates directly to the client configuration in single-line format. Choose a format that’s suitable for establishing TLS connections between your Kafka cluster and client. Use PKCS #12 if you are unfamiliar with PEM.
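For PEM, one way to produce single-line values is to replace each line break with the two characters \n, which java.util.Properties turns back into a line break when it loads a properties file. The sketch below assumes a Kafka client that supports PEM stores (ssl.truststore.type=PEM, available from Apache Kafka 2.7), credential files already extracted into a directory, and a user.key that is an unencrypted PKCS #8 key. The function names are hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print a PEM file as one line, replacing each line break
# with the two characters "\n" (java.util.Properties restores the line breaks).
pem_to_single_line() {
  # ORS is a literal backslash followed by "n"; empty lines are skipped.
  awk -v ORS='\\n' 'NF' "$1"
}

# Hypothetical helper: write a Java client properties file that embeds the
# PEM truststore and keystore material found in DIR.
write_pem_client_properties() {
  local dir="$1" out="$2" f
  for f in ca.crt user.crt user.key; do
    [[ -r "$dir/$f" ]] || return 1
  done
  {
    printf '%s\n' "security.protocol=SSL"
    printf '%s\n' "ssl.truststore.type=PEM"
    printf '%s\n' "ssl.truststore.certificates=$(pem_to_single_line "$dir/ca.crt")"
    printf '%s\n' "ssl.keystore.type=PEM"
    printf '%s\n' "ssl.keystore.certificate.chain=$(pem_to_single_line "$dir/user.crt")"
    printf '%s\n' "ssl.keystore.key=$(pem_to_single_line "$dir/user.key")"
  } > "$out"
}
```

If your key is encrypted, Kafka also expects ssl.key.password; check the SSL settings of your client version before relying on this layout.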

All keys are 2048 bits in size, and by default the certificates are valid for 365 days from the initial generation. You can change the validity period.

16.2.2. Secrets generated by the Cluster Operator

The Cluster Operator generates the following certificates, which are saved as secrets in the OpenShift cluster. Streams for Apache Kafka uses these secrets by default.

The cluster CA and clients CA have separate secrets for the private key and public key.

- <cluster_name>-cluster-ca: Contains the private key of the cluster CA. Streams for Apache Kafka and Kafka components use the private key to sign server certificates.

- <cluster_name>-cluster-ca-cert: Contains the public key of the cluster CA. Kafka clients use the public key to verify the identity of the Kafka brokers they are connecting to with TLS server authentication.

- <cluster_name>-clients-ca: Contains the private key of the clients CA. The private key is used to sign new user certificates for mTLS authentication when connecting to Kafka brokers.

- <cluster_name>-clients-ca-cert: Contains the public key of the clients CA. Kafka brokers use the public key to verify the identity of clients accessing the Kafka brokers when mTLS authentication is used.

Secrets for communication between Streams for Apache Kafka components contain a private key and a public key certificate signed by the cluster CA.

- <cluster_name>-kafka-brokers: Contains the private and public keys for Kafka brokers.

- <cluster_name>-zookeeper-nodes: Contains the private and public keys for ZooKeeper nodes.

- <cluster_name>-cluster-operator-certs: Contains the private and public keys for encrypting communication between the Cluster Operator and Kafka or ZooKeeper.

- <cluster_name>-entity-topic-operator-certs: Contains the private and public keys for encrypting communication between the Topic Operator and Kafka or ZooKeeper.

- <cluster_name>-entity-user-operator-certs: Contains the private and public keys for encrypting communication between the User Operator and Kafka or ZooKeeper.

- <cluster_name>-cruise-control-certs: Contains the private and public keys for encrypting communication between Cruise Control and Kafka or ZooKeeper.

- <cluster_name>-kafka-exporter-certs: Contains the private and public keys for encrypting communication between Kafka Exporter and Kafka or ZooKeeper.

You can provide your own server certificates and private keys to connect to Kafka brokers using Kafka listener certificates rather than certificates signed by the cluster CA.

16.2.3. Cluster CA secrets

Cluster CA secrets are managed by the Cluster Operator in a Kafka cluster.

Only the <cluster_name>-cluster-ca-cert secret is required by clients. All other cluster secrets are accessed by Streams for Apache Kafka components. You can enforce this using OpenShift role-based access controls, if necessary.
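As a sketch of that restriction, the following Bash function creates a Role that can only get the <cluster_name>-cluster-ca-cert secret, and binds it to a client's service account. The role and binding names are hypothetical. This grants minimal read access; it does not remove broader permissions that other roles may already give.

```shell
#!/usr/bin/env bash

# Hypothetical helper: allow one service account to read only the
# <cluster_name>-cluster-ca-cert secret in a namespace.
grant_cluster_ca_cert_read() {
  local namespace="$1" cluster="$2" service_account="$3"
  local role="${cluster}-cluster-ca-cert-reader"   # hypothetical role name
  oc create role "$role" -n "$namespace" \
    --verb=get --resource=secrets \
    --resource-name="${cluster}-cluster-ca-cert" || return 1
  oc create rolebinding "${role}-${service_account}" -n "$namespace" \
    --role="$role" --serviceaccount="${namespace}:${service_account}"
}
```

For example, grant_cluster_ca_cert_read my-project my-cluster my-client lets pods that run as the my-client service account read the cluster CA certificate, and nothing else through this role.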

The CA certificates in <cluster_name>-cluster-ca-cert must be trusted by Kafka client applications so that they validate the Kafka broker certificates when connecting to Kafka brokers over TLS.

Fields in the <cluster_name>-cluster-ca secret

| Field | Description |
|---|---|
| | The current private key for the cluster CA. |

Fields in the <cluster_name>-cluster-ca-cert secret

| Field | Description |
|---|---|
| ca.p12 | PKCS #12 store for storing certificates and keys. |
| ca.password | Password for protecting the PKCS #12 store. |
| ca.crt | The current certificate for the cluster CA. |

Fields in the <cluster_name>-kafka-brokers secret

| Field | Description |
|---|---|
| | PKCS #12 store for storing certificates and keys. |
| | Password for protecting the PKCS #12 store. |
| | Certificate for Kafka broker pod <num>. Signed by a current or former cluster CA private key in <cluster_name>-cluster-ca. |
| | Private key for a Kafka broker pod. |

Fields in the <cluster_name>-zookeeper-nodes secret

| Field | Description |
|---|---|
| | PKCS #12 store for storing certificates and keys. |
| | Password for protecting the PKCS #12 store. |
| | Certificate for ZooKeeper node <num>. Signed by a current or former cluster CA private key in <cluster_name>-cluster-ca. |
| | Private key for a ZooKeeper pod. |

Fields in the <cluster_name>-cluster-operator-certs secret

| Field | Description |
|---|---|
| | PKCS #12 store for storing certificates and keys. |
| | Password for protecting the PKCS #12 store. |
| | Certificate for mTLS communication between the Cluster Operator and Kafka or ZooKeeper. Signed by a current or former cluster CA private key in <cluster_name>-cluster-ca. |
| | Private key for mTLS communication between the Cluster Operator and Kafka or ZooKeeper. |

Fields in the <cluster_name>-entity-topic-operator-certs secret

| Field | Description |
|---|---|
| | PKCS #12 store for storing certificates and keys. |
| | Password for protecting the PKCS #12 store. |
| | Certificate for mTLS communication between the Topic Operator and Kafka or ZooKeeper. Signed by a current or former cluster CA private key in <cluster_name>-cluster-ca. |
| | Private key for mTLS communication between the Topic Operator and Kafka or ZooKeeper. |

Fields in the <cluster_name>-entity-user-operator-certs secret

| Field | Description |
|---|---|
| | PKCS #12 store for storing certificates and keys. |
| | Password for protecting the PKCS #12 store. |
| | Certificate for mTLS communication between the User Operator and Kafka or ZooKeeper. Signed by a current or former cluster CA private key in <cluster_name>-cluster-ca. |
| | Private key for mTLS communication between the User Operator and Kafka or ZooKeeper. |

Fields in the <cluster_name>-cruise-control-certs secret

| Field | Description |
|---|---|
| | PKCS #12 store for storing certificates and keys. |
| | Password for protecting the PKCS #12 store. |
| | Certificate for mTLS communication between Cruise Control and Kafka or ZooKeeper. Signed by a current or former cluster CA private key in <cluster_name>-cluster-ca. |
| | Private key for mTLS communication between Cruise Control and Kafka or ZooKeeper. |

Fields in the <cluster_name>-kafka-exporter-certs secret

| Field | Description |
|---|---|
| | PKCS #12 store for storing certificates and keys. |
| | Password for protecting the PKCS #12 store. |
| | Certificate for mTLS communication between Kafka Exporter and Kafka or ZooKeeper. Signed by a current or former cluster CA private key in <cluster_name>-cluster-ca. |
| | Private key for mTLS communication between Kafka Exporter and Kafka or ZooKeeper. |

16.2.4. Clients CA secrets

Clients CA secrets are managed by the Cluster Operator in a Kafka cluster.

The certificates in <cluster_name>-clients-ca-cert are those which the Kafka brokers trust.

The <cluster_name>-clients-ca secret is used to sign the certificates of client applications. This secret must be accessible to the Streams for Apache Kafka components, and for administrative access if you intend to issue application certificates without using the User Operator. You can enforce this using OpenShift role-based access controls, if necessary.

Fields in the <cluster_name>-clients-ca secret

| Field | Description |
|---|---|
| | The current private key for the clients CA. |

Fields in the <cluster_name>-clients-ca-cert secret

| Field | Description |
|---|---|
| ca.p12 | PKCS #12 store for storing certificates and keys. |
| ca.password | Password for protecting the PKCS #12 store. |
| ca.crt | The current certificate for the clients CA. |

16.2.5. User secrets generated by the User Operator

User secrets are managed by the User Operator.

When a user is created using the User Operator, a secret is generated using the name of the user.

| Secret name | Field within secret | Description |
|---|---|---|
| <kafka_user_name> | user.p12 | PKCS #12 store for storing certificates and keys. |
| | user.password | Password for protecting the PKCS #12 store. |
| | user.crt | Certificate for the user, signed by the clients CA. |
| | user.key | Private key for the user. |

16.2.6. Adding labels and annotations to cluster CA secrets

By configuring the clusterCaCert template property in the Kafka custom resource, you can add custom labels and annotations to the Cluster CA secrets created by the Cluster Operator. Labels and annotations are useful for identifying objects and adding contextual information. You configure template properties in Streams for Apache Kafka custom resources.

Example template customization to add labels and annotations to secrets

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    template:
      clusterCaCert:
        metadata:
          labels:
            label1: value1
            label2: value2
          annotations:
            annotation1: value1
            annotation2: value2
    # ...
```

16.2.7. Disabling ownerReference in the CA secrets

By default, the cluster and clients CA secrets are created with an ownerReference property that is set to the Kafka custom resource. This means that, when the Kafka custom resource is deleted, the CA secrets are also deleted (garbage collected) by OpenShift.

If you want to reuse the CA for a new cluster, you can disable the ownerReference by setting the generateSecretOwnerReference property for the cluster and clients CA secrets to false in the Kafka configuration. When the ownerReference is disabled, CA secrets are not deleted by OpenShift when the corresponding Kafka custom resource is deleted.

Example Kafka configuration with disabled ownerReference for cluster and clients CAs

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
# ...
spec:
  # ...
  clusterCa:
    generateSecretOwnerReference: false
  clientsCa:
    generateSecretOwnerReference: false
  # ...
```
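Before you delete a Kafka custom resource whose CA you intend to reuse, you might confirm that the CA secrets no longer carry an ownerReference. The following Bash function is a minimal sketch with a hypothetical name; it prints one Kind/name line for each owner of a secret.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print "Kind/name" for each ownerReference of a secret.
# Empty output means the secret has no owner, so OpenShift does not garbage
# collect it when the Kafka custom resource is deleted.
secret_owners() {
  local namespace="$1" secret="$2"
  oc get secret "$secret" -n "$namespace" \
    -o jsonpath='{range .metadata.ownerReferences[*]}{.kind}/{.name}{"\n"}{end}'
}
```

For example, run it for my-cluster-cluster-ca, my-cluster-cluster-ca-cert, my-cluster-clients-ca, and my-cluster-clients-ca-cert, and expect no output from any of them.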


16.3. Certificate renewal and validity periods

Cluster CA and clients CA certificates are only valid for a limited time period, known as the validity period. This is usually defined as a number of days since the certificate was generated.

For CA certificates automatically created by the Cluster Operator, you can configure the validity period of:

- Cluster CA certificates in Kafka.spec.clusterCa.validityDays
- Clients CA certificates in Kafka.spec.clientsCa.validityDays

The default validity period for both certificates is 365 days. Manually installed CA certificates should have their own validity periods defined.

When a CA certificate expires, components and clients that still trust that certificate will not accept connections from peers whose certificates were signed by the CA private key. The components and clients need to trust the new CA certificate instead.

To allow the renewal of CA certificates without a loss of service, the Cluster Operator initiates certificate renewal before the old CA certificates expire.

You can configure the renewal period of the certificates created by the Cluster Operator:

- Cluster CA certificates in Kafka.spec.clusterCa.renewalDays
- Clients CA certificates in Kafka.spec.clientsCa.renewalDays

The default renewal period for both certificates is 30 days.

The renewal period is measured backwards, from the expiry date of the current certificate.

Validity period against renewal period

```text
Not Before                                     Not After
    |                                              |
    |<--------------- validityDays --------------->|
                              <--- renewalDays --->|
```
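Because the renewal period is measured backwards from the Not After date, the renewal period starts on the Not After date minus renewalDays. The sketch below computes that date. It assumes GNU date (for -d and @epoch input), and the function name is hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical helper (GNU date assumed): given a certificate's notAfter date
# and renewalDays, print the UTC date on which the renewal period starts.
# Example input from a secret:
#   renewal_start_date "$(oc get secret my-cluster-cluster-ca-cert -n my-project \
#     -o=jsonpath='{.data.ca\.crt}' | base64 -d \
#     | openssl x509 -noout -enddate | cut -d= -f2)" 30
renewal_start_date() {
  local not_after="$1" renewal_days="$2" expiry_epoch
  [[ "$renewal_days" =~ ^[0-9]+$ ]] || return 1
  renewal_days=$(( 10#$renewal_days ))   # avoid octal parsing of leading zeros
  expiry_epoch=$(date -u -d "$not_after" +%s) || return 1
  # The renewal period is measured backwards from the expiry date.
  date -u -d "@$(( expiry_epoch - renewal_days * 86400 ))" +%Y-%m-%d
}
```

With the default renewalDays of 30, a certificate that expires on 2025-03-10 enters its renewal period on 2025-02-08.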

To make a change to the validity and renewal periods after creating the Kafka cluster, you configure and apply the Kafka custom resource, and manually renew the CA certificates. If you do not manually renew the certificates, the new periods will be used the next time the certificate is renewed automatically.

Example Kafka configuration for certificate validity and renewal periods

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
# ...
spec:
  # ...
  clusterCa:
    renewalDays: 30
    validityDays: 365
    generateCertificateAuthority: true
  clientsCa:
    renewalDays: 30
    validityDays: 365
    generateCertificateAuthority: true
  # ...
```

The behavior of the Cluster Operator during the renewal period depends on the settings for the generateCertificateAuthority certificate generation properties for the cluster CA and clients CA.

- true: If the properties are set to true, a CA certificate is generated automatically by the Cluster Operator, and renewed automatically within the renewal period.

- false: If the properties are set to false, a CA certificate is not generated by the Cluster Operator. Use this option if you are installing your own certificates.

16.3.1. Renewal process with automatically generated CA certificates

The Cluster Operator performs the following processes in this order when renewing CA certificates:

1. Generates a new CA certificate, but retains the existing key.

   The new certificate replaces the old one with the name ca.crt within the corresponding Secret.

2. Generates new client certificates (for ZooKeeper nodes, Kafka brokers, and the Entity Operator).

   This is not strictly necessary because the signing key has not changed, but it keeps the validity period of the client certificate in sync with the CA certificate.

3. Restarts ZooKeeper nodes so that they will trust the new CA certificate and use the new client certificates.

4. Restarts Kafka brokers so that they will trust the new CA certificate and use the new client certificates.

5. Restarts the Topic and User Operators so that they will trust the new CA certificate and use the new client certificates.

User certificates are signed by the clients CA. User certificates generated by the User Operator are renewed when the clients CA is renewed.

16.3.2. Client certificate renewal

The Cluster Operator is not aware of the client applications using the Kafka cluster.

When connecting to the cluster, and to ensure they operate correctly, client applications must:

- Trust the cluster CA certificate published in the <cluster>-cluster-ca-cert Secret.
- Use the credentials published in their <user-name> Secret to connect to the cluster.

The User Secret provides credentials in PEM and PKCS #12 format, or it can provide a password when using SCRAM-SHA authentication. The User Operator creates the user credentials when a user is created.

You must ensure clients continue to work after certificate renewal. The renewal process depends on how the clients are configured.

If you are provisioning client certificates and keys manually, you must generate new client certificates and ensure the new certificates are used by clients within the renewal period. Failure to do this by the end of the renewal period could result in client applications being unable to connect to the cluster.

For workloads running inside the same OpenShift cluster and namespace, Secrets can be mounted as a volume so the client Pods construct their keystores and truststores from the current state of the Secrets. For more details on this procedure, see Configuring internal clients to trust the cluster CA.

16.3.3. Manually renewing Cluster Operator-managed CA certificates

Cluster and clients CA certificates generated by the Cluster Operator auto-renew at the start of their respective certificate renewal periods. However, you can use the strimzi.io/force-renew annotation to manually renew one or both of these certificates before the certificate renewal period starts. You might do this for security reasons, or if you have changed the renewal or validity periods for the certificates.

A renewed certificate uses the same private key as the old certificate.

If you are using your own CA certificates, the force-renew annotation cannot be used. Instead, follow the procedure for renewing your own CA certificates.

Prerequisites

- The Cluster Operator must be deployed.

- A Kafka cluster in which CA certificates and private keys are installed.

- The OpenSSL TLS management tool to check the period of validity for CA certificates.

In this procedure, we use a Kafka cluster named my-cluster within the my-project namespace.

Procedure

1. Apply the strimzi.io/force-renew annotation to the secret that contains the CA certificate that you want to renew.

Renewing the Cluster CA secret

```shell
oc annotate secret my-cluster-cluster-ca-cert -n my-project strimzi.io/force-renew="true"
```

Renewing the Clients CA secret

```shell
oc annotate secret my-cluster-clients-ca-cert -n my-project strimzi.io/force-renew="true"
```

2. At the next reconciliation, the Cluster Operator generates new certificates.

If maintenance time windows are configured, the Cluster Operator generates the new CA certificate at the first reconciliation within the next maintenance time window.

3. Check the period of validity for the new CA certificates.

Checking the period of validity for the new cluster CA certificate

```shell
oc get secret my-cluster-cluster-ca-cert -n my-project -o=jsonpath='{.data.ca\.crt}' | base64 -d | openssl x509 -noout -dates
```

Checking the period of validity for the new clients CA certificate

```shell
oc get secret my-cluster-clients-ca-cert -n my-project -o=jsonpath='{.data.ca\.crt}' | base64 -d | openssl x509 -noout -dates
```

The command returns a notBefore and notAfter date, which is the valid start and end date for the CA certificate.

4. Update client configurations to trust the new cluster CA certificate.

See Section 16.4, “Configuring internal clients to trust the cluster CA” and Section 16.5, “Configuring external clients to trust the cluster CA”.
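The annotate and check steps of this procedure can be combined into one script that waits for the notAfter date of ca.crt to change. This is a sketch under stated assumptions: the function names and the polling defaults (60 checks, 10 seconds apart) are hypothetical, and it needs oc, base64, and openssl on the path.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the notAfter date of ca.crt in a CA certificate secret.
ca_not_after() {
  local namespace="$1" secret="$2"
  oc get secret "$secret" -n "$namespace" -o=jsonpath='{.data.ca\.crt}' \
    | base64 -d | openssl x509 -noout -enddate | cut -d= -f2
}

# Hypothetical helper: request renewal of a CA certificate secret, then poll
# until the notAfter date of ca.crt changes. Gives up after MAX_CHECKS polls.
force_renew_and_wait() {
  local namespace="$1" secret="$2" max_checks="${3:-60}" interval="${4:-10}"
  local before after i
  before=$(ca_not_after "$namespace" "$secret")
  [[ -n "$before" ]] || return 1
  oc annotate secret "$secret" -n "$namespace" strimzi.io/force-renew="true" || return 1
  for (( i = 0; i < max_checks; i++ )); do
    after=$(ca_not_after "$namespace" "$secret")
    if [[ -n "$after" && "$after" != "$before" ]]; then
      printf '%s\n' "$after"   # the new notAfter date
      return 0
    fi
    sleep "$interval"
  done
  return 1
}
```

If maintenance time windows are configured, renewal can be delayed until the next window, so choose the number of checks and the interval accordingly.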

16.3.4. Manually recovering from expired Cluster Operator-managed CA certificates

The Cluster Operator automatically renews the cluster and clients CA certificates when their renewal periods begin. Nevertheless, unexpected operational problems or disruptions, such as prolonged downtime of the Cluster Operator or unavailability of the Kafka cluster, may prevent the renewal process. If CA certificates expire, Kafka cluster components cannot communicate with each other and the Cluster Operator cannot renew the CA certificates without manual intervention.

To promptly perform a recovery, follow the steps outlined in this procedure in the order given. You can recover from expired cluster and clients CA certificates. The process involves deleting the secrets containing the expired certificates so that new ones are generated by the Cluster Operator. For more information on the secrets managed in Streams for Apache Kafka, see Section 16.2.2, “Secrets generated by the Cluster Operator”.

If you are using your own CA certificates and they expire, the process is similar, but you need to renew the CA certificates rather than use certificates generated by the Cluster Operator.

Prerequisites

- The Cluster Operator must be deployed.

- A Kafka cluster in which CA certificates and private keys are installed.

- The OpenSSL TLS management tool to check the period of validity for CA certificates.

In this procedure, we use a Kafka cluster named my-cluster within the my-project namespace.

Procedure

1. Delete the secret containing the expired CA certificate.

Deleting the Cluster CA secret

```shell
oc delete secret my-cluster-cluster-ca-cert -n my-project
```

Deleting the Clients CA secret

```shell
oc delete secret my-cluster-clients-ca-cert -n my-project
```

2. Wait for the Cluster Operator to generate new certificates.

   - A new CA cluster certificate to verify the identity of the Kafka brokers is created in a secret of the same name (my-cluster-cluster-ca-cert).
   - A new CA clients certificate to verify the identity of Kafka users is created in a secret of the same name (my-cluster-clients-ca-cert).


3. Check the period of validity for the new CA certificates.

Checking the period of validity for the new cluster CA certificate

```shell
oc get secret my-cluster-cluster-ca-cert -n my-project -o=jsonpath='{.data.ca\.crt}' | base64 -d | openssl x509 -noout -dates
```

Checking the period of validity for the new clients CA certificate

```shell
oc get secret my-cluster-clients-ca-cert -n my-project -o=jsonpath='{.data.ca\.crt}' | base64 -d | openssl x509 -noout -dates
```

The command returns a notBefore and notAfter date, which is the valid start and end date for the CA certificate.

4. Delete the component pods and secrets that use the CA certificates.

   - Delete the ZooKeeper secret.
   - Wait for the Cluster Operator to detect the missing ZooKeeper secret and recreate it.
   - Delete all ZooKeeper pods.
   - Delete the Kafka secret.
   - Wait for the Cluster Operator to detect the missing Kafka secret and recreate it.
   - Delete all Kafka pods.

If you are only recovering the clients CA certificate, you only need to delete the Kafka secret and pods.

You can use the following oc command to find resources and also verify that they have been removed.

```shell
oc get <resource_type> --all-namespaces | grep <kafka_cluster_name>
```

Replace <resource_type> with the type of the resource, such as Pod or Secret.

5. Wait for the Cluster Operator to detect the missing Kafka and ZooKeeper pods and recreate them with the updated CA certificates.

On reconciliation, the Cluster Operator automatically updates other components to trust the new CA certificates.

6. Verify that there are no issues related to certificate validation in the Cluster Operator log.

7. Update client configurations to trust the new cluster CA certificate.

See Section 16.4, “Configuring internal clients to trust the cluster CA” and Section 16.5, “Configuring external clients to trust the cluster CA”.
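The secret and pod deletions in this procedure can be scripted along the following lines. This is a sketch: the function names and polling defaults are hypothetical, the secret names come from Section 16.2.2, “Secrets generated by the Cluster Operator”, and the pod label selector strimzi.io/name=<cluster_name>-kafka (or -zookeeper) is an assumption. Confirm the labels with oc get pods --show-labels before use, especially if you use node pools.

```shell
#!/usr/bin/env bash

# Hypothetical helper: poll until a resource exists again, for example after
# the Cluster Operator recreates a deleted secret.
wait_for_resource() {
  local namespace="$1" kind="$2" name="$3" max_checks="${4:-60}" interval="${5:-10}" i
  for (( i = 0; i < max_checks; i++ )); do
    oc get "$kind" "$name" -n "$namespace" > /dev/null 2>&1 && return 0
    sleep "$interval"
  done
  return 1
}

# Hypothetical helper: for each component (ZooKeeper first, then Kafka), delete
# its secret, wait for the Cluster Operator to recreate it, then delete its pods.
# Pass "kafka" as the third argument when recovering only the clients CA
# certificate, or for a cluster without ZooKeeper.
recreate_component_secrets_and_pods() {
  local namespace="$1" cluster="$2" components="${3:-zookeeper kafka}" component secret
  for component in $components; do
    case "$component" in
      zookeeper) secret="${cluster}-zookeeper-nodes" ;;
      kafka) secret="${cluster}-kafka-brokers" ;;
      *) return 1 ;;
    esac
    oc delete secret "$secret" -n "$namespace" || return 1
    wait_for_resource "$namespace" secret "$secret" || return 1
    # Assumed label; confirm with: oc get pods -n <namespace> --show-labels
    oc delete pod -n "$namespace" -l "strimzi.io/name=${cluster}-${component}" || return 1
  done
}
```

Deleting all pods of a component at once causes downtime for that component, as the manual procedure does.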

16.3.5. Replacing private keys used by Cluster Operator-managed CA certificates

You can replace the private keys used by the cluster CA and clients CA certificates generated by the Cluster Operator. When a private key is replaced, the Cluster Operator generates a new CA certificate for the new private key.

If you are using your own CA certificates, the force-replace annotation cannot be used. Instead, follow the procedure for renewing your own CA certificates.

Prerequisites

- The Cluster Operator is running.

- A Kafka cluster in which CA certificates and private keys are installed.

Procedure

1. Apply the strimzi.io/force-replace annotation to the Secret that contains the private key that you want to replace.

Table 16.13. Commands for replacing private keys

| Private key for | Secret | Annotate command |
|---|---|---|
| Cluster CA | <cluster_name>-cluster-ca | oc annotate secret <cluster_name>-cluster-ca strimzi.io/force-replace="true" |
| Clients CA | <cluster_name>-clients-ca | oc annotate secret <cluster_name>-clients-ca strimzi.io/force-replace="true" |

2. At the next reconciliation, the Cluster Operator will:

   - Generate a new private key for the Secret that you annotated
   - Generate a new CA certificate

If maintenance time windows are configured, the Cluster Operator will generate the new private key and CA certificate at the first reconciliation within the next maintenance time window.

Client applications must reload the cluster and clients CA certificates that were renewed by the Cluster Operator.

16.4. Configuring internal clients to trust the cluster CA

This procedure describes how to configure a Kafka client that resides inside the OpenShift cluster, and connects to a TLS listener, so that it trusts the cluster CA certificate.

The easiest way to achieve this for an internal client is to use a volume mount to access the Secrets containing the necessary certificates and keys.

Follow the steps to configure trust certificates that are signed by the cluster CA for Java-based Kafka Producer, Consumer, and Streams APIs.

Choose the steps to follow according to the certificate format of the cluster CA: PKCS #12 (.p12) or PEM (.crt).

The steps describe how to mount the Cluster Secret that verifies the identity of the Kafka cluster to the client pod.

Prerequisites

- The Cluster Operator must be running.
- There needs to be a Kafka resource within the OpenShift cluster.
- You need a Kafka client application inside the OpenShift cluster that will connect using TLS, and needs to trust the cluster CA certificate.
- The client application must be running in the same namespace as the Kafka resource.

Using PKCS #12 format (.p12)

Mount the cluster Secret as a volume when defining the client pod.

For example:

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: client-pod
spec:
  containers:
  - name: client-name
    image: client-name
    volumeMounts:
    - name: secret-volume
      mountPath: /data/p12
    env:
    - name: SECRET_PASSWORD
      valueFrom:
        secretKeyRef:
          name: my-cluster-cluster-ca-cert
          key: ca.password
  volumes:
  - name: secret-volume
    secret:
      secretName: my-cluster-cluster-ca-cert
```

Here we’re mounting the following:

- The PKCS #12 file into an exact path, which can be configured (/data/p12/ca.p12 in this example)
- The password, stored under the ca.password key of the same Secret, into an environment variable, where it can be used for Java configuration

Configure the Kafka client with the following properties:

- A security protocol option:
  - security.protocol=SSL when using TLS for encryption (with or without mTLS authentication).
  - security.protocol=SASL_SSL when using SCRAM-SHA authentication over TLS.
- ssl.truststore.location with the path of the PKCS #12 truststore file (for a mounted Secret, ca.p12 under the mount path).
- ssl.truststore.password with the password for accessing the truststore.
- ssl.truststore.type=PKCS12 to identify the truststore type.
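As a sketch, these properties can be written out inside the client container by a small bash helper. It assumes the pod spec mounts the cluster CA certificate Secret at /data/p12 and exposes the truststore password as SECRET_PASSWORD, as in the example pod; the function name is hypothetical. For SASL_SSL you would still need to add the SCRAM-SHA SASL settings, which are not shown.

```shell
#!/usr/bin/env bash
# Sketch: write client TLS properties for a PKCS #12 truststore mounted at /data/p12.
# Assumes SECRET_PASSWORD holds the truststore password (see the example pod).
write_p12_client_properties() {
  local out="$1" protocol="${2:-SSL}"   # SSL, or SASL_SSL for SCRAM-SHA over TLS
  if [ -z "${SECRET_PASSWORD:-}" ]; then
    echo "SECRET_PASSWORD is not set" >&2
    return 1
  fi
  # Assumes the password needs no escaping in Java properties syntax
  cat > "$out" <<EOF
security.protocol=${protocol}
ssl.truststore.location=/data/p12/ca.p12
ssl.truststore.password=${SECRET_PASSWORD}
ssl.truststore.type=PKCS12
EOF
}
```

The resulting file can then be passed to a client, for example through the --producer.config option of kafka-console-producer.sh.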

Using PEM format (.crt)

Mount the cluster Secret as a volume when defining the client pod.

For example:

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: client-pod
spec:
  containers:
  - name: client-name
    image: client-name
    volumeMounts:
    - name: secret-volume
      mountPath: /data/crt
  volumes:
  - name: secret-volume
    secret:
      secretName: my-cluster-cluster-ca-cert
```

Use the mounted certificate (/data/crt/ca.crt in this example) to configure a TLS connection in clients that use certificates in X.509 format.
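Java clients from Apache Kafka 2.7 onwards can read a PEM truststore directly (ssl.truststore.type=PEM, added by KIP-651), so the mounted ca.crt can be used without conversion. A minimal sketch, assuming such a client and the /data/crt mount from the example pod; the helper name and its optional third argument (a different certificate path) are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write client TLS properties that use the mounted PEM certificate directly.
# Assumes an Apache Kafka Java client 2.7 or later (PEM truststore support).
write_pem_client_properties() {
  local out="$1" protocol="${2:-SSL}" ca_file="${3:-/data/crt/ca.crt}"
  # Fail early if the mount is missing or does not contain a PEM certificate
  if ! grep -q -- '-----BEGIN CERTIFICATE-----' "$ca_file" 2>/dev/null; then
    echo "no PEM certificate found in $ca_file" >&2
    return 1
  fi
  cat > "$out" <<EOF
security.protocol=${protocol}
ssl.truststore.type=PEM
ssl.truststore.location=${ca_file}
EOF
}
```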

16.5. Configuring external clients to trust the cluster CA

This procedure describes how to configure a Kafka client that runs outside the OpenShift cluster and connects to an external listener to trust the cluster CA certificate. Follow this procedure when setting up the client and during the renewal period, when the old cluster CA certificate is replaced.

Follow the steps to configure trust certificates that are signed by the cluster CA for Java-based Kafka Producer, Consumer, and Streams APIs.

Choose the steps to follow according to the certificate format of the cluster CA: PKCS #12 (.p12) or PEM (.crt).

The steps describe how to obtain the certificate from the cluster CA certificate Secret, which verifies the identity of the Kafka cluster.

The <cluster_name>-cluster-ca-cert secret contains more than one CA certificate during the CA certificate renewal period. Clients must add all of them to their truststores.

Prerequisites

- The Cluster Operator must be running.

- There needs to be a Kafka resource within the OpenShift cluster.
- You need a Kafka client application outside the OpenShift cluster that will connect using TLS, and needs to trust the cluster CA certificate.

Using PKCS #12 format (.p12)

Extract the cluster CA certificate and password from the <cluster_name>-cluster-ca-cert Secret of the Kafka cluster.

oc get secret <cluster_name>-cluster-ca-cert -o jsonpath='{.data.ca\.p12}' | base64 -d > ca.p12

oc get secret <cluster_name>-cluster-ca-cert -o jsonpath='{.data.ca\.password}' | base64 -d > ca.password

Replace <cluster_name> with the name of the Kafka cluster.

Configure the Kafka client with the following properties:

- A security protocol option:
  - security.protocol=SSL when using TLS.
  - security.protocol=SASL_SSL when using SCRAM-SHA authentication over TLS.
- ssl.truststore.location with the truststore location where the certificates were imported (for example, the path of the extracted ca.p12 file).
- ssl.truststore.password with the password for accessing the truststore (the contents of the extracted ca.password file). This property can be omitted if it is not needed by the truststore.
- ssl.truststore.type=PKCS12 to identify the truststore type.
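The extraction commands and the properties above can be combined in one script. This is a sketch under assumptions: bash, an oc session with read access to the Secret, and a client that reads a Java properties file. The function name fetch_cluster_ca_truststore and the client-ssl.properties file name are hypothetical. Because the password ends up in the file, the sketch creates it with owner-only permissions.

```shell
#!/usr/bin/env bash
# Sketch: extract the cluster CA truststore and password, then write client properties.
fetch_cluster_ca_truststore() {
  local cluster="$1" dir="$2" protocol="${3:-SSL}"
  local secret="${cluster}-cluster-ca-cert"
  mkdir -p "$dir" || return 1
  # Same extraction commands as in the procedure, writing into $dir
  oc get secret "$secret" -o jsonpath='{.data.ca\.p12}' | base64 -d > "$dir/ca.p12"
  oc get secret "$secret" -o jsonpath='{.data.ca\.password}' | base64 -d > "$dir/ca.password"
  # The pipeline status is that of base64, so check that both files are non-empty
  if [ ! -s "$dir/ca.p12" ] || [ ! -s "$dir/ca.password" ]; then
    echo "could not extract the truststore from $secret" >&2
    return 1
  fi
  local abs_dir
  abs_dir="$(cd "$dir" && pwd)"
  # umask 077 in a subshell: a newly created properties file is readable only by the owner
  (
    umask 077
    cat > "$dir/client-ssl.properties" <<EOF
security.protocol=${protocol}
ssl.truststore.location=${abs_dir}/ca.p12
ssl.truststore.password=$(cat "$dir/ca.password")
ssl.truststore.type=PKCS12
EOF
  )
}
```

For example, fetch_cluster_ca_truststore my-cluster ./truststore writes ca.p12, ca.password, and client-ssl.properties into ./truststore.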

Using PEM format (.crt)

Extract the cluster CA certificate from the <cluster_name>-cluster-ca-cert Secret of the Kafka cluster.

oc get secret <cluster_name>-cluster-ca-cert -o jsonpath='{.data.ca\.crt}' | base64 -d > ca.crt

Use the extracted certificate to configure a TLS connection in clients that use certificates in X.509 format.
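Because the Secret can hold more than one CA certificate during the renewal period, a client that uses PEM files may need all of them in one bundle. One way to get every key of the Secret as a separate file is oc extract (for example, oc extract secret/<cluster_name>-cluster-ca-cert --to=./cluster-ca --confirm); the sketch below then concatenates the .crt files. The directory layout and the bundle_ca_certs name are assumptions, and whether a client accepts a multi-certificate PEM file depends on the client library.

```shell
#!/usr/bin/env bash
# Sketch: concatenate every *.crt file in a directory into one PEM bundle.
# Intended for the output of: oc extract secret/<cluster_name>-cluster-ca-cert --to=<dir>
bundle_ca_certs() {
  local dir="$1" out="$2" f count=0
  : > "$out" || return 1
  for f in "$dir"/*.crt; do
    [ -e "$f" ] || continue              # no match: the glob stays literal
    [ "$f" -ef "$out" ] && continue      # never append the bundle to itself
    if ! grep -q -- '-----BEGIN CERTIFICATE-----' "$f"; then
      echo "not a PEM certificate: $f" >&2
      return 1
    fi
    cat "$f" >> "$out"
    # Make sure the next certificate starts on a new line
    if [ -n "$(tail -c 1 "$f")" ]; then
      echo >> "$out"
    fi
    count=$((count + 1))
  done
  if [ "$count" -eq 0 ]; then
    echo "no .crt files found in $dir" >&2
    return 1
  fi
  echo "$count"
}
```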

16.6. Using your own CA certificates and private keys

Install and use your own CA certificates and private keys instead of using the defaults generated by the Cluster Operator. You can replace the cluster and clients CA certificates and private keys.

You can switch to using your own CA certificates and private keys in the following ways:

- Install your own CA certificates and private keys before deploying your Kafka cluster

- Replace the default CA certificates and private keys with your own after deploying a Kafka cluster

The steps to replace the default CA certificates and private keys after deploying a Kafka cluster are the same as those used to renew your own CA certificates and private keys.

If you use your own certificates, they won’t be renewed automatically. You need to renew the CA certificates and private keys before they expire.

Renewal options:

- Renew the CA certificates only

- Renew CA certificates and private keys (or replace the defaults)

16.6.1. Installing your own CA certificates and private keys

Install your own CA certificates and private keys instead of using the cluster and clients CA certificates and private keys generated by the Cluster Operator.

By default, Streams for Apache Kafka uses the following cluster CA and clients CA secrets, which are renewed automatically.

Cluster CA secrets

- <cluster_name>-cluster-ca (private key)
- <cluster_name>-cluster-ca-cert (CA certificate)

Clients CA secrets

- <cluster_name>-clients-ca (private key)
- <cluster_name>-clients-ca-cert (CA certificate)

To install your own certificates, use the same names.

Prerequisites

- The Cluster Operator is running.
- A Kafka cluster is not yet deployed.

  If you have already deployed a Kafka cluster, you can replace the default CA certificates with your own.

- Your own X.509 certificates and keys in PEM format for the cluster CA or clients CA.

  If you want to use a cluster or clients CA which is not a root CA, you have to include the whole chain in the certificate file. The chain should be in the following order:

  - The cluster or clients CA
  - One or more intermediate CAs
  - The root CA

  All CAs in the chain should be configured using the X509v3 Basic Constraints extension. Basic Constraints marks a certificate as a CA and can limit the path length of a certificate chain.

- The OpenSSL TLS management tool for converting certificates.
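If your cluster or clients CA is not a root CA, the chain file can be assembled by concatenating PEM files in the order listed above. A minimal sketch; the function name and file names are placeholders, and it only checks that each input looks like a PEM certificate, not that the chain is valid (a tool such as openssl verify can check that).

```shell
#!/usr/bin/env bash
# Sketch: build a CA certificate file with the chain in the required order:
# the cluster or clients CA first, then intermediates, then the root CA last.
build_ca_chain() {
  local out="$1"; shift
  if [ "$#" -lt 2 ]; then
    echo "usage: build_ca_chain <out> <ca.crt> [<intermediate.crt> ...] <root.crt>" >&2
    return 2
  fi
  local f
  : > "$out" || return 1
  for f in "$@"; do
    if ! grep -q -- '-----BEGIN CERTIFICATE-----' "$f" 2>/dev/null; then
      echo "not a PEM certificate: $f" >&2
      return 1
    fi
    cat "$f" >> "$out"
    # Keep each certificate on its own lines
    if [ -n "$(tail -c 1 "$f")" ]; then
      echo >> "$out"
    fi
  done
}
```

For example: build_ca_chain ca.crt cluster-ca.crt intermediate-ca.crt root-ca.crt.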

Before you begin

The Cluster Operator generates keys and certificates in PEM (Privacy Enhanced Mail) and PKCS #12 (Public-Key Cryptography Standards) formats. You can add your own certificates in either format.

Some applications cannot use PEM certificates and support only PKCS #12 certificates. If you don’t have a cluster certificate in PKCS #12 format, use the OpenSSL TLS management tool to generate one from your ca.crt file.

Example certificate generation command

openssl pkcs12 -export -in ca.crt -nokeys -out ca.p12 -password pass:<P12_password> -caname ca.crt

Replace <P12_password> with your own password.

Procedure

Create a new secret that contains the CA certificate.

Client secret creation with a certificate in PEM format only

oc create secret generic <cluster_name>-clients-ca-cert --from-file=ca.crt=ca.crt

Cluster secret creation with certificates in PEM and PKCS #12 format

```shell
oc create secret generic <cluster_name>-cluster-ca-cert \
  --from-file=ca.crt=ca.crt \
  --from-file=ca.p12=ca.p12 \
  --from-literal=ca.password=<P12_password>
```

Replace <cluster_name> with the name of your Kafka cluster and <P12_password> with the password used to create ca.p12.

Create a new secret that contains the private key.

oc create secret generic <ca_key_secret> --from-file=ca.key=ca.key

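Label the secrets, where <ca_certificate_secret> is the CA certificate secret (<cluster_name>-cluster-ca-cert or <cluster_name>-clients-ca-cert) and <ca_key_secret> is the private key secret (<cluster_name>-cluster-ca or <cluster_name>-clients-ca).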

oc label secret <ca_certificate_secret> strimzi.io/kind=Kafka strimzi.io/cluster="<cluster_name>"

oc label secret <ca_key_secret> strimzi.io/kind=Kafka strimzi.io/cluster="<cluster_name>"

- Label strimzi.io/kind=Kafka identifies the Kafka custom resource.
- Label strimzi.io/cluster="<cluster_name>" identifies the Kafka cluster.

Annotate the secrets.

oc annotate secret <ca_certificate_secret> strimzi.io/ca-cert-generation="<ca_certificate_generation>"

oc annotate secret <ca_key_secret> strimzi.io/ca-key-generation="<ca_key_generation>"

- Annotation strimzi.io/ca-cert-generation="<ca_certificate_generation>" defines the generation of a new CA certificate.
- Annotation strimzi.io/ca-key-generation="<ca_key_generation>" defines the generation of a new CA key.

Start from 0 (zero) as the incremental value (strimzi.io/ca-cert-generation=0) for your own CA certificate. Set a higher incremental value when you renew the certificates.

Create the Kafka resource for your cluster, configuring either the Kafka.spec.clusterCa or the Kafka.spec.clientsCa object to not use generated CAs.

Example fragment of a Kafka resource configuring the cluster CA to use certificates you supply

```yaml
kind: Kafka
apiVersion: kafka.strimzi.io/v1beta2
spec:
  # ...
  clusterCa:
    generateCertificateAuthority: false
```
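The secret creation, labeling, and annotation steps of this procedure can be collected into one script, run before you create the Kafka resource. A sketch under assumptions: bash, an oc session in the target namespace, PEM files only (the optional ca.p12 and ca.password keys for the cluster CA are not added), and generation 0 for a first install. install_own_ca is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: create, label, and annotate the Secrets for your own CA (PEM only).
install_own_ca() {
  local cluster="$1" ca_type="$2" crt="$3" key="$4"
  case "$ca_type" in
    cluster|clients) ;;
    *) echo "CA type must be cluster or clients" >&2; return 2 ;;
  esac
  if [ ! -s "$crt" ] || [ ! -s "$key" ]; then
    echo "certificate or key file is missing" >&2
    return 1
  fi
  local cert_secret="${cluster}-${ca_type}-ca-cert"   # CA certificate
  local key_secret="${cluster}-${ca_type}-ca"         # CA private key
  oc create secret generic "$cert_secret" --from-file=ca.crt="$crt" || return 1
  oc create secret generic "$key_secret" --from-file=ca.key="$key" || return 1
  # Labels tell the Cluster Operator which Kafka cluster the Secrets belong to
  oc label secret "$cert_secret" strimzi.io/kind=Kafka strimzi.io/cluster="$cluster" || return 1
  oc label secret "$key_secret" strimzi.io/kind=Kafka strimzi.io/cluster="$cluster" || return 1
  # Start both generation counters at 0 for a first install
  oc annotate secret "$cert_secret" strimzi.io/ca-cert-generation="0" || return 1
  oc annotate secret "$key_secret" strimzi.io/ca-key-generation="0"
}
```

For example, install_own_ca my-cluster clients ca.crt ca.key creates my-cluster-clients-ca-cert and my-cluster-clients-ca.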

16.6.2. Renewing your own CA certificates

If you are using your own CA certificates, you need to renew them manually. The Cluster Operator will not renew them automatically. Renew the CA certificates in the renewal period before they expire.

Perform the steps in this procedure when you are renewing CA certificates and continuing with the same private key. If you are renewing your own CA certificates and private keys, see Section 16.6.3, “Renewing or replacing CA certificates and private keys with your own”.

The procedure describes the renewal of CA certificates in PEM format.

Prerequisites

- The Cluster Operator is running.

- You have new cluster or clients X.509 certificates in PEM format.

Procedure

Update the Secret for the CA certificate.

Edit the existing secret to add the new CA certificate and update the certificate generation annotation value.

oc edit secret <ca_certificate_secret_name>

<ca_certificate_secret_name> is the name of the Secret, which is <kafka_cluster_name>-cluster-ca-cert for the cluster CA certificate and <kafka_cluster_name>-clients-ca-cert for the clients CA certificate.

The following example shows a secret for a cluster CA certificate that’s associated with a Kafka cluster named my-cluster.

Example secret configuration for a cluster CA certificate

```yaml
apiVersion: v1
kind: Secret
data:
  ca.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0F...
metadata:
  annotations:
    strimzi.io/ca-cert-generation: "0"
  labels:
    strimzi.io/cluster: my-cluster
    strimzi.io/kind: Kafka
  name: my-cluster-cluster-ca-cert
  #...
type: Opaque
```

Encode your new CA certificate into base64.

cat <path_to_new_certificate> | base64

Update the CA certificate.

Copy the base64-encoded CA certificate from the previous step as the value for the ca.crt property under data.

Increase the value of the CA certificate generation annotation.

Update the strimzi.io/ca-cert-generation annotation with a higher incremental value. For example, change strimzi.io/ca-cert-generation=0 to strimzi.io/ca-cert-generation=1. If the Secret is missing the annotation, the value is treated as 0, so add the annotation with a value of 1.

When Streams for Apache Kafka generates certificates, the certificate generation annotation is automatically incremented by the Cluster Operator. For your own CA certificates, set the annotations with a higher incremental value. The annotation needs a higher value than the one from the current secret so that the Cluster Operator can roll the pods and update the certificates. The strimzi.io/ca-cert-generation annotation has to be incremented on each CA certificate renewal.

Save the secret with the new CA certificate and certificate generation annotation value.

Example secret configuration updated with a new CA certificate

```yaml
apiVersion: v1
kind: Secret
data:
  ca.crt: GCa6LS3RTHeKFiFDGBOUDYFAZ0F...
metadata:
  annotations:
    strimzi.io/ca-cert-generation: "1"
  labels:
    strimzi.io/cluster: my-cluster
    strimzi.io/kind: Kafka
  name: my-cluster-cluster-ca-cert
  #...
type: Opaque
```
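As a non-interactive alternative to oc edit, the same update can be applied with oc patch. A sketch under assumptions: bash, an oc session, and a PEM certificate file; renew_own_ca_cert and next_generation are hypothetical helpers. The sketch reads the current strimzi.io/ca-cert-generation value (treating a missing annotation as 0), increments it, and sends the new certificate as single-line base64, because base64 from GNU coreutils wraps its output at 76 characters by default.

```shell
#!/usr/bin/env bash
# Sketch: replace ca.crt in a CA certificate Secret and bump the generation annotation.
next_generation() {
  local current="${1:-0}"          # a missing annotation is treated as 0
  case "$current" in
    *[!0-9]*) echo "invalid generation: $current" >&2; return 1 ;;
  esac
  echo $((10#$current + 1))        # 10# avoids octal parsing of values like "08"
}

renew_own_ca_cert() {
  local secret="$1" cert_file="$2" current next b64
  current="$(oc get secret "$secret" \
    -o jsonpath='{.metadata.annotations.strimzi\.io/ca-cert-generation}')" || return 1
  next="$(next_generation "$current")" || return 1
  # Encode without line breaks so the value is a single JSON string
  b64="$(base64 < "$cert_file" | tr -d '\n')"
  oc patch secret "$secret" --type=merge -p \
    "{\"data\":{\"ca.crt\":\"${b64}\"},\"metadata\":{\"annotations\":{\"strimzi.io/ca-cert-generation\":\"${next}\"}}}"
}
```

For example: renew_own_ca_cert my-cluster-cluster-ca-cert new-ca.crt.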

On the next reconciliation, the Cluster Operator performs a rolling update of ZooKeeper, Kafka, and other components to trust the new CA certificate.

If maintenance time windows are configured, the Cluster Operator will roll the pods at the first reconciliation within the next maintenance time window.

16.6.3. Renewing or replacing CA certificates and private keys with your own

If you are using your own CA certificates and private keys, you need to renew them manually. The Cluster Operator will not renew them automatically. Renew the CA certificates in the renewal period before they expire. You can also use the same procedure to replace the CA certificates and private keys generated by the Streams for Apache Kafka operators with your own.

Perform the steps in this procedure when you are renewing or replacing CA certificates and private keys. If you are only renewing your own CA certificates, see Section 16.6.2, “Renewing your own CA certificates”.

The procedure describes the renewal of CA certificates and private keys in PEM format.

Before going through the following steps, make sure that the CN (Common Name) of the new CA certificate is different from the current one. For example, when the Cluster Operator renews certificates automatically it adds a v<version_number> suffix to identify a version. Do the same with your own CA certificate by adding a different suffix on each renewal. By using a different key to generate a new CA certificate, you retain the current CA certificate stored in the Secret.

Prerequisites

- The Cluster Operator is running.

- You have new cluster or clients X.509 certificates and keys in PEM format.

Procedure

Pause the reconciliation of the Kafka custom resource.

Annotate the custom resource in OpenShift, setting the pause-reconciliation annotation to true:

oc annotate Kafka <name_of_custom_resource> strimzi.io/pause-reconciliation="true"

For example, for a Kafka custom resource named my-cluster:

oc annotate Kafka my-cluster strimzi.io/pause-reconciliation="true"

Check that the status conditions of the custom resource show a change to ReconciliationPaused:

oc describe Kafka <name_of_custom_resource>

The type condition changes to ReconciliationPaused at the lastTransitionTime.
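Instead of reading the oc describe output by eye, a script can poll for the condition. A sketch, assuming bash and that the paused state is reported as a ReconciliationPaused condition with status True; the helper name and the default attempt count and interval are arbitrary choices.

```shell
#!/usr/bin/env bash
# Sketch: poll the Kafka resource until it reports a ReconciliationPaused condition.
wait_for_paused() {
  local name="$1" attempts="${2:-30}" interval="${3:-5}" status i
  for ((i = 1; i <= attempts; i++)); do
    status="$(oc get kafka "$name" \
      -o jsonpath='{.status.conditions[?(@.type=="ReconciliationPaused")].status}')"
    if [ "$status" = "True" ]; then
      echo "reconciliation of $name is paused"
      return 0
    fi
    sleep "$interval"
  done
  echo "timed out waiting for ReconciliationPaused on $name" >&2
  return 1
}
```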

Check the settings for the generateCertificateAuthority properties in your Kafka custom resource.

If a property is set to false, a CA certificate is not generated by the Cluster Operator. You require this setting if you are using your own certificates.

If needed, edit the existing Kafka custom resource and set the generateCertificateAuthority properties to false.

oc edit Kafka <name_of_custom_resource>

The following example shows a Kafka custom resource with both cluster and clients CA certificates generation delegated to the user.

Example Kafka configuration using your own CA certificates

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
# ...
spec:
  # ...
  clusterCa:
    generateCertificateAuthority: false
  clientsCa:
    generateCertificateAuthority: false
  # ...
```

Update the Secret for the CA certificate.

Edit the existing secret to add the new CA certificate and update the certificate generation annotation value.

oc edit secret <ca_certificate_secret_name>

<ca_certificate_secret_name> is the name of the Secret, which is <kafka_cluster_name>-cluster-ca-cert for the cluster CA certificate and <kafka_cluster_name>-clients-ca-cert for the clients CA certificate.

The following example shows a secret for a cluster CA certificate that’s associated with a Kafka cluster named my-cluster.

Example secret configuration for a cluster CA certificate

```yaml
apiVersion: v1
kind: Secret
data:
  ca.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0F...
metadata:
  annotations:
    strimzi.io/ca-cert-generation: "0"
  labels:
    strimzi.io/cluster: my-cluster
    strimzi.io/kind: Kafka
  name: my-cluster-cluster-ca-cert
  #...
type: Opaque
```

Rename the current CA certificate to retain it.

Rename the current ca.crt property under data as ca-<date>.crt, where <date> is the certificate expiry date in the format YEAR-MONTH-DAYTHOUR-MINUTE-SECONDZ, for example ca-2023-01-26T17-32-00Z.crt. Leave the value for the property as it is to retain the current CA certificate.

Encode your new CA certificate into base64.

cat <path_to_new_certificate> | base64
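The encoded value must go under data as one unbroken string. base64 from GNU coreutils wraps lines at 76 characters by default (its -w 0 option turns wrapping off), so a portable approach is to strip the newlines. A minimal sketch; b64_oneline is a hypothetical helper that also decodes the result to confirm it round-trips.

```shell
#!/usr/bin/env bash
# Sketch: base64-encode a PEM file as one line, suitable for a Secret's data field.
b64_oneline() {
  local file="$1" encoded
  encoded="$(base64 < "$file" | tr -d '\n')"
  # Sanity check: decoding must reproduce the original file byte for byte
  if ! printf '%s' "$encoded" | base64 -d | cmp -s - "$file"; then
    echo "base64 round trip failed for $file" >&2
    return 1
  fi
  printf '%s\n' "$encoded"
}
```

The same helper works for the CA key file later in the procedure.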

Update the CA certificate.

Create a new ca.crt property under data and copy the base64-encoded CA certificate from the previous step as the value for the ca.crt property.

Increase the value of the CA certificate generation annotation.

Update the strimzi.io/ca-cert-generation annotation with a higher incremental value. For example, change strimzi.io/ca-cert-generation=0 to strimzi.io/ca-cert-generation=1. If the Secret is missing the annotation, the value is treated as 0, so add the annotation with a value of 1.

When Streams for Apache Kafka generates certificates, the certificate generation annotation is automatically incremented by the Cluster Operator. For your own CA certificates, set the annotations with a higher incremental value. The annotation needs a higher value than the one from the current secret so that the Cluster Operator can roll the pods and update the certificates. The strimzi.io/ca-cert-generation annotation has to be incremented on each CA certificate renewal.

Save the secret with the new CA certificate and certificate generation annotation value.

Example secret configuration updated with a new CA certificate

```yaml
apiVersion: v1
kind: Secret
data:
  ca.crt: GCa6LS3RTHeKFiFDGBOUDYFAZ0F...
  ca-2023-01-26T17-32-00Z.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0F...
metadata:
  annotations:
    strimzi.io/ca-cert-generation: "1"
  labels:
    strimzi.io/cluster: my-cluster
    strimzi.io/kind: Kafka
  name: my-cluster-cluster-ca-cert
  #...
type: Opaque
```
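To derive the ca-<date>.crt key name from the current certificate rather than typing it, the expiry date printed by openssl x509 -enddate can be reformatted. A sketch assuming bash and GNU date (its -d option); BSD and macOS date take different options. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: turn an OpenSSL notAfter date into the ca-<date>.crt key name
# (format YEAR-MONTH-DAYTHOUR-MINUTE-SECONDZ, in UTC). Requires GNU date.
expiry_key_name() {
  local not_after="$1"   # for example: "Jan 26 17:32:00 2023 GMT"
  date -u -d "$not_after" +'ca-%Y-%m-%dT%H-%M-%SZ.crt'
}

# Usage with the certificate that is being replaced (file name is a placeholder):
#   expiry_key_name "$(openssl x509 -in current-ca.crt -noout -enddate | cut -d= -f2)"
```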

Update the Secret for the CA key used to sign your new CA certificate.

Edit the existing secret to add the new CA key and update the key generation annotation value.

oc edit secret <ca_key_name>

<ca_key_name> is the name of the Secret for the CA key, which is <kafka_cluster_name>-cluster-ca for the cluster CA key and <kafka_cluster_name>-clients-ca for the clients CA key.

The following example shows a secret for a cluster CA key that’s associated with a Kafka cluster named my-cluster.

Example secret configuration for a cluster CA key

```yaml
apiVersion: v1
kind: Secret
data:
  ca.key: SA1cKF1GFDzOIiPOIUQBHDNFGDFS...
metadata:
  annotations:
    strimzi.io/ca-key-generation: "0"
  labels:
    strimzi.io/cluster: my-cluster
    strimzi.io/kind: Kafka
  name: my-cluster-cluster-ca
  #...
type: Opaque
```

Encode the CA key into base64.

cat <path_to_new_key> | base64

Update the CA key.

Copy the base64-encoded CA key from the previous step as the value for the ca.key property under data.

Increase the value of the CA key generation annotation.

Update the strimzi.io/ca-key-generation annotation with a higher incremental value. For example, change strimzi.io/ca-key-generation=0 to strimzi.io/ca-key-generation=1. If the Secret is missing the annotation, it is treated as 0, so add the annotation with a value of 1.

When Streams for Apache Kafka generates certificates, the key generation annotation is automatically incremented by the Cluster Operator. For your own CA certificates together with a new CA key, set the annotation with a higher incremental value. The annotation needs a higher value than the one from the current secret so that the Cluster Operator can roll the pods and update the certificates and keys. The strimzi.io/ca-key-generation annotation has to be incremented on each CA certificate renewal.

Save the secret with the new CA key and key generation annotation value.

Example secret configuration updated with a new CA key

```yaml
apiVersion: v1
kind: Secret
data:
  ca.key: AB0cKF1GFDzOIiPOIUQWERZJQ0F...
metadata:
  annotations:
    strimzi.io/ca-key-generation: "1"
  labels:
    strimzi.io/cluster: my-cluster
    strimzi.io/kind: Kafka
  name: my-cluster-cluster-ca
  #...
type: Opaque
```

Resume from the pause.

To resume the Kafka custom resource reconciliation, set the pause-reconciliation annotation to false.

oc annotate --overwrite Kafka <name_of_custom_resource> strimzi.io/pause-reconciliation="false"

You can also do the same by removing the pause-reconciliation annotation.

oc annotate Kafka <name_of_custom_resource> strimzi.io/pause-reconciliation-

On the next reconciliation, the Cluster Operator performs a rolling update of ZooKeeper, Kafka, and other components to trust the new CA certificate. When the rolling update is complete, the Cluster Operator will start a new one to generate new server certificates signed by the new CA key.

If maintenance time windows are configured, the Cluster Operator will roll the pods at the first reconciliation within the next maintenance time window.

Wait until the rolling updates that move the cluster to the new CA certificate are complete.

Remove any outdated certificates from the secret configuration to ensure that the cluster no longer trusts them.

oc edit secret <ca_certificate_secret_name>

Example secret configuration with the old certificate removed

```yaml
apiVersion: v1
kind: Secret
data:
  ca.crt: GCa6LS3RTHeKFiFDGBOUDYFAZ0F...
metadata:
  annotations:
    strimzi.io/ca-cert-generation: "1"
  labels:
    strimzi.io/cluster: my-cluster
    strimzi.io/kind: Kafka
  name: my-cluster-cluster-ca-cert
  #...
type: Opaque
```

Start a manual rolling update of your cluster to pick up the changes made to the secret configuration.

See Section 29.2, “Starting rolling updates of Kafka and other operands using annotations”.
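The removal of outdated certificates can also be done without oc edit, by deleting every retained ca-<date>.crt key with a JSON patch. A sketch under assumptions: bash, an oc session, and that every key matching ca-*.crt is an old certificate you no longer want trusted. Run it only after the rolling updates have finished, and still start the manual rolling update afterwards. remove_outdated_ca_certs is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: drop retained ca-<date>.crt entries from a CA certificate Secret.
remove_outdated_ca_certs() {
  local secret="$1" key ops=""
  # One data key per line, listed with a Go template
  while IFS= read -r key; do
    case "$key" in
      ca-*.crt) ops="${ops:+$ops,}{\"op\":\"remove\",\"path\":\"/data/${key}\"}" ;;
    esac
  done < <(oc get secret "$secret" \
    -o go-template='{{range $k, $v := .data}}{{$k}}{{"\n"}}{{end}}')
  if [ -z "$ops" ]; then
    echo "no outdated certificates in $secret"
    return 0
  fi
  oc patch secret "$secret" --type=json -p "[${ops}]"
}
```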