
Chapter 12. Migrating virtual machines

If the current host of a virtual machine (VM) becomes unsuitable or cannot be used anymore, or if you want to redistribute the hosting workload, you can migrate the VM to another KVM host.

12.1. How migrating virtual machines works

You can migrate a running virtual machine (VM) without interrupting the workload, with only a brief downtime, by using a live migration. By default, the migrated VM is transient on the destination host, and also remains defined on the source host. The essential part of a live migration is transferring the state of the VM’s memory and of any attached virtualized devices to a destination host. For the VM to remain functional on the destination host, the VM’s disk images must remain available to it.

To migrate a shut-off VM, you must use an offline migration, which copies the VM’s configuration to the destination host. For details, see the following table.

| Migration type | Description | Use case | Storage requirements |
|---|---|---|---|
| Live migration | The VM continues to run on the source host machine while KVM is transferring the VM’s memory pages to the destination host. When the migration is nearly complete, KVM very briefly suspends the VM, and resumes it on the destination host. | Useful for VMs that require constant uptime. However, for VMs that modify memory pages faster than KVM can transfer them, such as VMs under heavy I/O load, the live migration might fail. (1) | The VM’s disk images must be accessible both to the source host and the destination host during the migration. (2) |
| Offline migration | Moves the VM’s configuration to the destination host. | Recommended for shut-off VMs and in situations where shutting down the VM does not disrupt your workloads. | The VM’s disk images do not have to be accessible to the source or destination host during migration, and can be copied or moved manually to the destination host instead. |

(1) For possible solutions, see: Additional virsh migrate options for live migrations

(2) To achieve this, use one of the following:

- Storage located on a shared network

- The --copy-storage-all parameter for the virsh migrate command, which copies disk image contents from the source to the destination over the network

- Storage area network (SAN) logical units (LUNs)

- Ceph storage clusters
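Which row of the table applies depends on whether the VM is running when you start the migration. As a minimal sketch, assuming Bash and the state strings printed by the virsh domstate command, a hypothetical choose_migration_flags function could map the state to the virsh migrate flags used later in this chapter. It deliberately refuses any state that the table does not cover, such as paused.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a VM state, as printed by `virsh domstate <VM>`,
# to the virsh migrate flags for the matching migration type.
choose_migration_flags() {
    local state="$1"
    case "$state" in
        running)
            # Running VM: live migration, keep the VM defined on the destination.
            echo "--live --persistent"
            ;;
        "shut off")
            # Shut-off VM: offline migration copies only the configuration.
            echo "--offline --persistent"
            ;;
        *)
            # Other states (paused, crashed, ...) are left to the administrator.
            echo "unsupported state: $state" >&2
            return 1
            ;;
    esac
}

# Example usage on the source host:
#   state=$(virsh domstate example-VM)
#   virsh migrate $(choose_migration_flags "$state") example-VM qemu+ssh://example-destination/system
```

The unquoted substitution in the usage line is intentional, so that the two flags are passed as separate arguments.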

For easier management of large-scale migrations, explore other Red Hat products, such as:

12.2. Benefits of migrating virtual machines

Migrating virtual machines (VMs) can be useful for:

- Load balancing: VMs can be moved to host machines with lower usage if their host becomes overloaded, or if another host is under-utilized.

- Hardware independence: When you need to upgrade, add, or remove hardware devices on the host machine, you can safely relocate VMs to other hosts. This means that VMs do not experience any downtime for hardware improvements.

- Energy saving: VMs can be redistributed to other hosts, and the unloaded host systems can thus be powered off to save energy and cut costs during low usage periods.

- Geographic migration: VMs can be moved to another physical location for lower latency or when required for other reasons.

12.3. Limitations for migrating virtual machines

Before migrating virtual machines (VMs) in RHEL 9, ensure you are aware of the migration’s limitations.

VMs that use certain features and configurations will not work correctly if migrated, or the migration will fail. Such features include:

- Device passthrough

- SR-IOV device assignment (with the exception of migrating a VM with an attached virtual function of a Mellanox networking device, which works correctly)

- Mediated devices, such as vGPUs (with the exception of migrating a VM with an attached NVIDIA vGPU, which works correctly)

In addition, the following limitations apply:

- A migration between hosts that use Non-Uniform Memory Access (NUMA) pinning works only if the hosts have similar topology. Even then, the migration might negatively affect the performance of running workloads.

- The source and destination hosts must use specific RHEL versions that are supported for VM migration. See Supported hosts for virtual machine migration.

The physical CPUs of the source host and the destination host must be identical, otherwise the migration might fail. Any differences between the hosts in the following CPU-related areas can cause problems with the migration:

- CPU model

  - Migrating between an Intel 64 host and an AMD64 host is unsupported, even though they share the x86-64 instruction set.

  - For steps to ensure that a VM will work correctly after migrating to a host with a different CPU model, see Verifying host CPU compatibility for virtual machine migration.

- Physical machine firmware versions and settings
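The Intel 64 versus AMD64 restriction can be checked before you start a migration. The following sketch, assuming x86_64 hosts and the vendor_id field of /proc/cpuinfo (GenuineIntel or AuthenticAMD), compares the vendor strings of two hosts with hypothetical helper functions. A matching vendor is only a coarse pre-check; it does not replace the procedure in Verifying host CPU compatibility for virtual machine migration.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check: compare the CPU vendor strings of two hosts.
# Intel 64 reports "GenuineIntel" and AMD64 reports "AuthenticAMD"
# in the vendor_id field of /proc/cpuinfo.

# Print the vendor_id of the local host (empty on architectures without it).
local_cpu_vendor() {
    awk -F': ' '/^vendor_id/ { print $2; exit }' /proc/cpuinfo
}

# Return 0 if both vendor strings are present and identical, 1 otherwise.
same_cpu_vendor() {
    local src="$1" dst="$2"
    if [ -z "$src" ] || [ -z "$dst" ]; then
        echo "vendor_id not available on one of the hosts" >&2
        return 1
    fi
    if [ "$src" != "$dst" ]; then
        echo "vendor mismatch: $src vs $dst (unsupported migration)" >&2
        return 1
    fi
    return 0
}

# Example usage, assuming SSH access to the destination host:
#   src=$(local_cpu_vendor)
#   dst=$(ssh example-destination "awk -F': ' '/^vendor_id/ {print \$2; exit}' /proc/cpuinfo")
#   same_cpu_vendor "$src" "$dst"
```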

12.4. Migrating a virtual machine by using the command line

If the current host of a virtual machine (VM) becomes unsuitable or cannot be used anymore, or if you want to redistribute the hosting workload, you can migrate the VM to another KVM host. You can perform a live migration or an offline migration. For differences between the two scenarios, see How migrating virtual machines works.

Prerequisites

- Hypervisor: The source host and the destination host both use the KVM hypervisor.

- Network connection: The source host and the destination host are able to reach each other over the network. Use the ping utility to verify this.

- Open ports: Ensure the following ports are open on the destination host.

  - Port 22 is needed for connecting to the destination host by using SSH.

  - Port 16514 is needed for connecting to the destination host by using TLS.

  - Port 16509 is needed for connecting to the destination host by using TCP.

  - Ports 49152-49215 are needed by QEMU for transferring the memory and disk migration data.

- Hosts: For the migration to be supportable by Red Hat, the source host and destination host must be using specific operating systems and machine types. To ensure this is the case, see Supported hosts for virtual machine migration.

- CPU: The VM must be compatible with the CPU features of the destination host. To ensure this is the case, see Verifying host CPU compatibility for virtual machine migration.

- Storage: The disk images of VMs that will be migrated are accessible to both the source host and the destination host. This is optional for offline migration, but required for migrating a running VM. To ensure storage accessibility for both hosts, one of the following must apply:

  - You are using storage area network (SAN) logical units (LUNs).

  - You are using a Ceph storage cluster.

  - You have created a disk image with the same format and size as the source VM disk and you will use the --copy-storage-all parameter when migrating the VM.

  - The disk image is located on a separate networked location. For instructions to set up such shared VM storage, see Sharing virtual machine disk images with other hosts.

- Network bandwidth: When migrating a running VM, your network bandwidth must be higher than the rate at which the VM generates dirty memory pages.

  To obtain the dirty page rate of your VM before you start the live migration, do the following:

  Monitor the rate of dirty page generation of the VM for a short period of time. In this example, the monitoring period is 30 seconds.

  virsh domdirtyrate-calc <example_VM> 30

  After the monitoring finishes, obtain its results:

  virsh domstats <example_VM> --dirtyrate

  For example, if the output reports dirtyrate.megabytes_per_second=2, the VM is generating 2 MB of dirty memory pages per second. Attempting to live-migrate such a VM on a network with a bandwidth of 2 MB/s or less prevents the live migration from progressing unless you pause the VM or lower its workload.

  To ensure that the live migration finishes successfully, Red Hat recommends that your network bandwidth is significantly greater than the VM’s dirty page generation rate.

  Note: The value of the calc_period option might differ based on the workload and dirty page rate. You can experiment with several calc_period values to determine the most suitable period that aligns with the dirty page rate in your environment.

- Bridge tap network specifics: When migrating an existing VM in a public bridge tap network, the source and destination hosts must be located on the same network. Otherwise, the VM network will not work after migration.

- Connection protocol: When performing a VM migration, the virsh client on the source host can use one of several protocols to connect to the libvirt daemon on the destination host. Examples in the following procedure use an SSH connection, but you can choose a different one.

  - If you want libvirt to use an SSH connection, ensure that the virtqemud socket is enabled and running on the destination host.

    systemctl enable --now virtqemud.socket

  - If you want libvirt to use a TLS connection, ensure that the virtproxyd-tls socket is enabled and running on the destination host.

    systemctl enable --now virtproxyd-tls.socket

  - If you want libvirt to use a TCP connection, ensure that the virtproxyd-tcp socket is enabled and running on the destination host.

    systemctl enable --now virtproxyd-tcp.socket
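The relationship between the chosen protocol, the socket to enable on the destination host, and the destination URI passed to virsh migrate can be summarized in a small lookup. This is a sketch with hypothetical function names; it assumes the system connection and that any protocol-specific setup beyond enabling the socket, such as TLS certificates, is already in place.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: for each connection protocol, print the libvirt
# socket unit to enable on the destination host and the destination URI.

socket_unit() {
    case "$1" in
        ssh) echo "virtqemud.socket" ;;
        tls) echo "virtproxyd-tls.socket" ;;
        tcp) echo "virtproxyd-tcp.socket" ;;
        *)   echo "unknown protocol: $1" >&2; return 1 ;;
    esac
}

dest_uri() {
    local proto="$1" host="$2"
    # Reuse socket_unit to reject unknown protocols; discard its output.
    socket_unit "$proto" >/dev/null || return 1
    echo "qemu+${proto}://${host}/system"
}

# Example usage:
#   systemctl enable --now "$(socket_unit tls)"    # on the destination host
#   virsh migrate --live --persistent example-VM "$(dest_uri tls example-destination)"
```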

Procedure

To migrate a VM from one host to another, use the virsh migrate command.

Offline migration

The following command migrates a shut-off example-VM VM from your local host to the system connection of the example-destination host by using an SSH tunnel.

virsh migrate --offline --persistent <example_VM> qemu+ssh://example-destination/system

Live migration

The following command migrates the example-VM VM from your local host to the system connection of the example-destination host by using an SSH tunnel. The VM keeps running during the migration.

virsh migrate --live --persistent <example_VM> qemu+ssh://example-destination/system

Wait for the migration to complete. The process might take some time depending on network bandwidth, system load, and the size of the VM. If the --verbose option is not used for virsh migrate, the CLI does not display any progress indicators except errors.

When the migration is in progress, you can use the virsh domjobinfo utility to display the migration statistics.

Multi-FD live migration

You can use multiple parallel connections to the destination host during the live migration. This is also known as multiple file descriptors (multi-FD) migration. With multi-FD migration, you can speed up the migration by utilizing all of the available network bandwidth for the migration process.

virsh migrate --live --persistent --parallel --parallel-connections 4 <example_VM> qemu+ssh://<example-destination>/system

This example uses 4 multi-FD channels to migrate the <example_VM> VM. It is recommended to use one channel for each 10 Gbps of available network bandwidth. The default value is 2 channels.

Live migration with an increased downtime limit

To improve the reliability of a live migration, you can set the maxdowntime parameter, which specifies the maximum amount of time, in milliseconds, the VM can be paused during live migration. Setting a larger downtime can help to ensure the migration completes successfully.

virsh migrate-setmaxdowntime <example_VM> <time_interval_in_milliseconds>

Live migration with passwordless SSH authentication

To avoid the need to enter the SSH password for the remote host during the migration, you can specify a private key file for the migration to use instead. This can be useful, for example, in automated migration scripts or for peer-to-peer migration.

virsh migrate --live --persistent <example_VM> qemu+ssh://<example-destination>/system?keyfile=<path_to_key>

Post-copy migration

If your VM has a large memory footprint, you can perform a post-copy migration, which transfers the source VM’s CPU state first and immediately starts the migrated VM on the destination host. The source VM’s memory pages are transferred after the migrated VM is already running on the destination host. Because of this, a post-copy migration can result in a smaller downtime of the migrated VM.

However, the running VM on the destination host might try to access memory pages that have not yet been transferred, which causes a page fault. If too many page faults occur during the migration, the performance of the migrated VM can be severely degraded.

Given the potential complications of a post-copy migration, it is recommended to use the following command that starts a standard live migration and switches to a post-copy migration if the live migration cannot be finished in a specified amount of time.

virsh migrate --live --persistent --postcopy --timeout <time_interval_in_seconds> --timeout-postcopy <example_VM> qemu+ssh://<example-destination>/system

Auto-converged live migration

If your VM is under a heavy memory workload, you can use the --auto-converge option. This option automatically slows down the execution speed of the VM’s CPU. As a consequence, this CPU throttling can help to slow down memory writes, which means the live migration might succeed even in VMs with a heavy memory workload.

However, the CPU throttling does not help to resolve workloads where memory writes are not directly related to CPU execution speed, and it can negatively impact the performance of the VM during a live migration.

virsh migrate --live --persistent --auto-converge <example_VM> qemu+ssh://<example-destination>/system
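The guideline of one multi-FD channel for each 10 Gbps of bandwidth is simple arithmetic: a 40 Gbps link suggests 4 channels. The sketch below, with hypothetical function names, computes that value from a whole number of Gbps and prints, rather than runs, the corresponding virsh migrate command. Rounding up (so that 25 Gbps yields 3 channels) and the minimum of one channel are assumptions, not documented behavior.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: turn the "one channel per 10 Gbps" guideline into a
# --parallel-connections value and print (not run) the migration command.

# $1: available bandwidth in whole Gbps. Rounds up; never returns less than 1.
parallel_connections() {
    local gbps="$1" n
    n=$(( (gbps + 9) / 10 ))
    if [ "$n" -lt 1 ]; then
        n=1
    fi
    echo "$n"
}

# $1: VM name, $2: destination host, $3: bandwidth in whole Gbps.
multifd_migrate_cmd() {
    echo "virsh migrate --live --persistent --parallel" \
         "--parallel-connections $(parallel_connections "$3")" \
         "$1 qemu+ssh://$2/system"
}

# Example usage: review the printed command, then run it.
#   multifd_migrate_cmd example-VM example-destination 40
```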

Verification

For offline migration:

On the destination host, list the available VMs to verify that the VM was migrated successfully.

virsh list --all
 Id   Name           State
----------------------------------
 -    example-VM-1   shut off

For live migration:

On the destination host, list the available VMs to verify the state of the destination VM:

virsh list --all
 Id   Name           State
----------------------------------
 10   example-VM-1   running

If the state of the VM is listed as running, it means that the migration is finished. However, if the live migration is still in progress, the state of the destination VM is listed as paused.

For post-copy migration:

On the source host, list the available VMs to verify the state of the source VM.

virsh list --all
 Id   Name           State
----------------------------------
 -    example-VM-1   shut off

On the destination host, list the available VMs to verify the state of the destination VM.

virsh list --all
 Id   Name           State
----------------------------------
 10   example-VM-1   running

If the state of the source VM is listed as shut off and the state of the destination VM is listed as running, it means that the migration is finished.
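The verification checks above compare the State column of virsh list --all on each host. As a sketch for scripted checks, assuming the column layout shown above, the following hypothetical helpers extract the state of one VM and apply the post-copy completion rule (source shut off, destination running).

```shell
#!/usr/bin/env bash
# Hypothetical helpers for scripting the verification steps.

# Read `virsh list --all` output on stdin and print the State column for the
# VM named in $1. The state can span two words, for example "shut off".
vm_state_from_list() {
    awk -v name="$1" '
        NR > 2 && $2 == name {
            state = $3
            for (i = 4; i <= NF; i++) state = state " " $i
            print state
            exit
        }'
}

# Post-copy rule: the migration is finished when the source VM is shut off
# and the destination VM is running.
postcopy_finished() {
    [ "$1" = "shut off" ] && [ "$2" = "running" ]
}

# Example usage from the source host:
#   src=$(virsh list --all | vm_state_from_list example-VM-1)
#   dst=$(virsh -c qemu+ssh://example-destination/system list --all | vm_state_from_list example-VM-1)
#   postcopy_finished "$src" "$dst" && echo "migration finished"
```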

12.5. Live migrating a virtual machine by using the web console

If you want to migrate a virtual machine (VM) that is performing tasks which require it to be constantly running, you can migrate that VM to another KVM host without shutting it down. This is also known as live migration. The following instructions explain how to do so by using the web console.

Prerequisites

- You have installed the RHEL 9 web console.

- You have enabled the cockpit service.

- Your user account is allowed to log in to the web console.

  For instructions, see Installing and enabling the web console.

- The web console VM plugin is installed on your system.

- Hypervisor: The source host and the destination host both use the KVM hypervisor.

- Hosts: The source and destination hosts are running.

- Open ports: Ensure the following ports are open on the destination host.

  - Port 22 is needed for connecting to the destination host by using SSH.

  - Port 16514 is needed for connecting to the destination host by using TLS.

  - Port 16509 is needed for connecting to the destination host by using TCP.

  - Ports 49152-49215 are needed by QEMU for transferring the memory and disk migration data.

- CPU: The VM must be compatible with the CPU features of the destination host. To ensure this is the case, see Verifying host CPU compatibility for virtual machine migration.

- Storage: The disk images of VMs that will be migrated are accessible to both the source host and the destination host. This is optional for offline migration, but required for migrating a running VM. To ensure storage accessibility for both hosts, one of the following must apply:

  - You are using storage area network (SAN) logical units (LUNs).

  - You are using a Ceph storage cluster.

  - You have created a disk image with the same format and size as the source VM disk and you will use the --copy-storage-all parameter when migrating the VM.

  - The disk image is located on a separate networked location. For instructions to set up such shared VM storage, see Sharing virtual machine disk images with other hosts.

- Network bandwidth: When migrating a running VM, your network bandwidth must be higher than the rate at which the VM generates dirty memory pages.

  To obtain the dirty page rate of your VM before you start the live migration, do the following on the command line:

  Monitor the rate of dirty page generation of the VM for a short period of time. In this example, the monitoring period is 30 seconds.

  virsh domdirtyrate-calc vm-name 30

  After the monitoring finishes, obtain its results:

  virsh domstats vm-name --dirtyrate

  For example, if the output reports dirtyrate.megabytes_per_second=2, the VM is generating 2 MB of dirty memory pages per second. Attempting to live-migrate such a VM on a network with a bandwidth of 2 MB/s or less prevents the live migration from progressing unless you pause the VM or lower its workload.

  To ensure that the live migration finishes successfully, Red Hat recommends that your network bandwidth is significantly greater than the VM’s dirty page generation rate.

  Note: The value of the calc_period option might differ based on the workload and dirty page rate. You can experiment with several calc_period values to determine the most suitable period that aligns with the dirty page rate in your environment.

- Bridge tap network specifics: When migrating an existing VM in a public bridge tap network, the source and destination hosts must be located on the same network. Otherwise, the VM network will not work after migration.
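The dirty page rate comparison described in the prerequisites can also be scripted. This sketch assumes that the results are read with virsh domstats <VM> --dirtyrate, which reports the rate in a dirtyrate.megabytes_per_second field, and that the bandwidth is expressed in MB/s. The function names are hypothetical, and the default margin of 2 in bandwidth_sufficient is an arbitrary illustration of "significantly greater", not a Red Hat recommendation.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the dirty page rate check.

# Read `virsh domstats <VM> --dirtyrate` output on stdin and print the value
# of dirtyrate.megabytes_per_second (nothing if the field is absent).
dirty_rate_mb_s() {
    sed -n 's/^[[:space:]]*dirtyrate\.megabytes_per_second=\([0-9]*\).*/\1/p' | head -n 1
}

# $1: dirty rate in MB/s, $2: bandwidth in MB/s, $3: safety margin (default 2).
# The default margin is an illustrative assumption, not a Red Hat figure.
bandwidth_sufficient() {
    local rate="$1" bandwidth="$2" margin="${3:-2}"
    if [ -z "$rate" ]; then
        return 1
    fi
    [ "$bandwidth" -gt $(( rate * margin )) ]
}

# Example usage (1250 MB/s corresponds to 10 Gbit/s):
#   virsh domdirtyrate-calc vm-name 30
#   sleep 30   # wait for the calculation period to finish
#   rate=$(virsh domstats vm-name --dirtyrate | dirty_rate_mb_s)
#   bandwidth_sufficient "$rate" 1250 || echo "live migration might not converge"
```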

Procedure

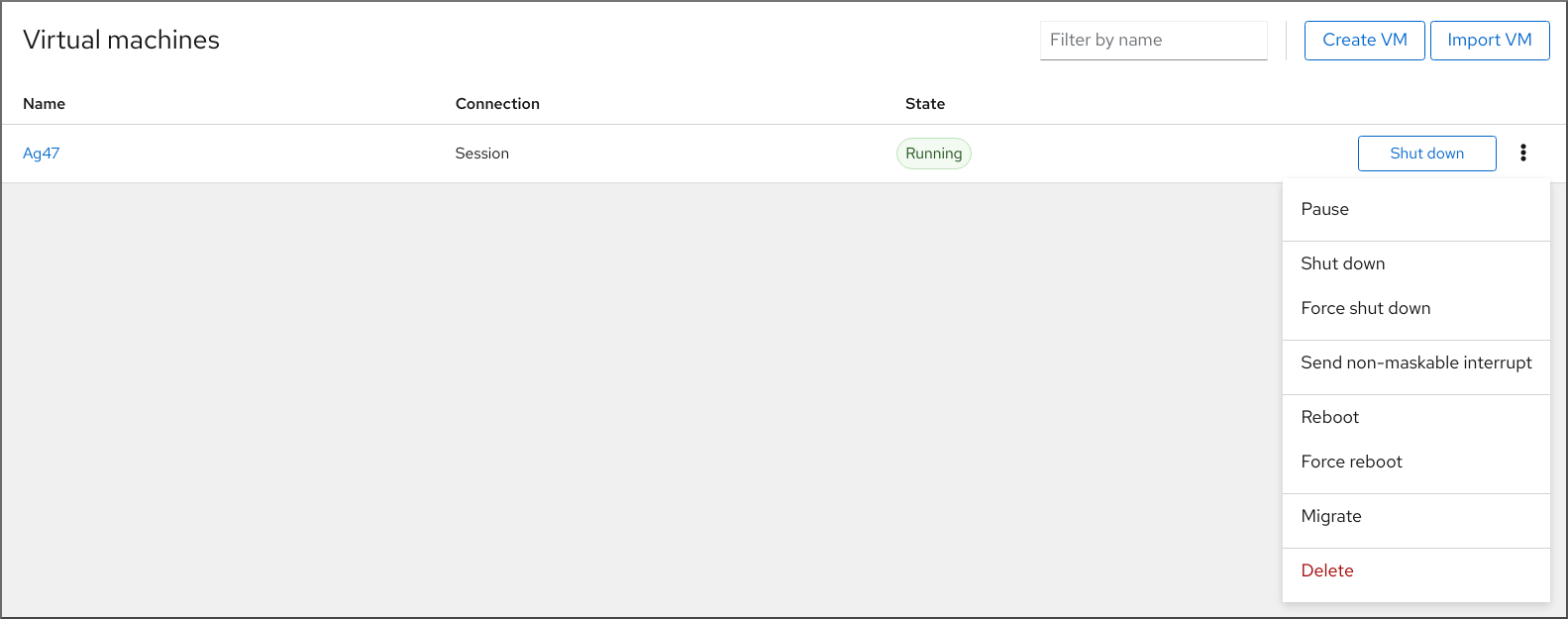

In the Virtual Machines interface of the web console, click the Menu button of the VM that you want to migrate.

A drop down menu appears with controls for various VM operations.

Click

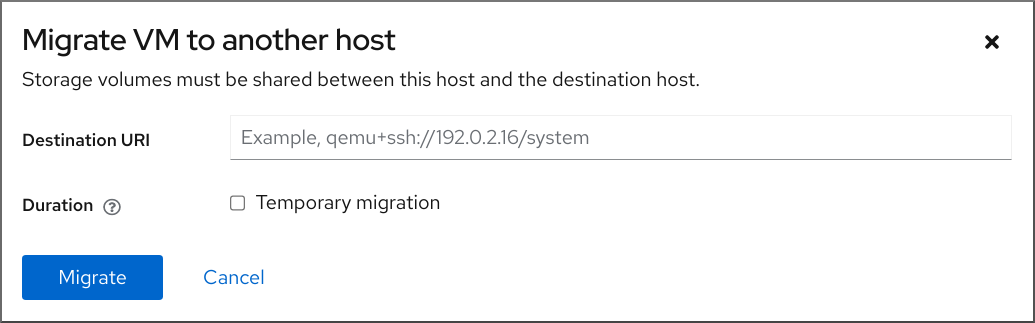

The Migrate VM to another host dialog appears.

- Enter the URI of the destination host.

Configure the duration of the migration:

- Permanent - Do not check the box if you want to migrate the VM permanently. Permanent migration completely removes the VM configuration from the source host.

- Temporary - Temporary migration migrates a copy of the VM to the destination host. This copy is deleted from the destination host when the VM is shut down. The original VM remains on the source host.

Click Migrate.

Your VM is migrated to the destination host.

Verification

To verify whether the VM has been successfully migrated and is working correctly:

- Confirm whether the VM appears in the list of VMs available on the destination host.

- Start the migrated VM and observe if it boots up.

12.6. Live migrating a virtual machine with an attached Mellanox virtual function

You can live migrate a virtual machine (VM) with an attached virtual function (VF) of a supported Mellanox networking device.

Red Hat implements the general functionality of VM live migration with an attached VF of a Mellanox networking device. However, the functionality depends on specific Mellanox device models and firmware versions.

Currently, the VF migration is supported only with a Mellanox CX-7 networking device.

The VF on the Mellanox CX-7 networking device uses a new mlx5_vfio_pci driver, which adds functionality that is necessary for the live migration, and libvirt binds the new driver to the VF automatically.

Red Hat directly supports Mellanox VF live migration only with the included mlx5_vfio_pci driver.

Limitations

Some virtualization features cannot be used when live migrating a VM with an attached virtual function:

- Calculating the dirty memory page generation rate of the VM.

  Currently, when migrating a VM with an attached Mellanox VF, live migration data and statistics provided by the virsh domjobinfo and virsh domdirtyrate-calc commands are inaccurate, because the calculations only count guest RAM without including the impact of the attached VF.

- Using a post-copy live migration.

- Using a virtual I/O Memory Management Unit (vIOMMU) device in the VM.

Additional limitations that are specific to the Mellanox CX-7 networking device:

- A CX-7 device with the same Parameter-Set Identification (PSID) and the same firmware version must be used on both the source and the destination hosts.

  You can check the PSID of your device with the following command:

  mstflint -d <device_pci_address> query | grep -i PSID
  PSID: MT_1090111019

- On one CX-7 physical function, you can use at most 4 VFs for live migration at the same time. For example, you can migrate one VM with 4 attached VFs, or 4 VMs with one VF attached to each VM.
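Because the PSID and the firmware version must match on both hosts, you can capture the mstflint query output on each host and compare the two fields. The helper names below are hypothetical, and the sketch assumes the query output uses "Label: value" lines such as PSID: MT_1090111019 and a FW Version line.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for comparing `mstflint -d <device_pci_address> query`
# output captured on the source and destination hosts.

# Read query output on stdin and print the value of the field named in $1,
# for example "PSID" or "FW Version". Surrounding blanks are trimmed.
mst_field() {
    awk -F':' -v key="$1" '
        {
            label = $1
            gsub(/^[ \t]+|[ \t]+$/, "", label)
            if (label == key) {
                value = $2
                gsub(/^[ \t]+|[ \t]+$/, "", value)
                print value
                exit
            }
        }'
}

# Return 0 if the PSID and FW Version fields are present and equal in both outputs.
cx7_hosts_match() {
    local src_out="$1" dst_out="$2" field s d
    for field in "PSID" "FW Version"; do
        s=$(printf '%s\n' "$src_out" | mst_field "$field")
        d=$(printf '%s\n' "$dst_out" | mst_field "$field")
        if [ -z "$s" ] || [ "$s" != "$d" ]; then
            echo "$field differs or is missing: '$s' vs '$d'" >&2
            return 1
        fi
    done
    return 0
}

# Example usage (replace the placeholder before running):
#   src=$(mstflint -d <device_pci_address> query)
#   dst=$(ssh example-destination mstflint -d <device_pci_address> query)
#   cx7_hosts_match "$src" "$dst"
```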

Prerequisites

- You have a Mellanox CX-7 networking device with a firmware version that is equal to or greater than 28.36.1010.

  Refer to Mellanox documentation for details about supported firmware versions and ensure you are using an up-to-date version of the firmware.

- The host uses the Intel 64, AMD64, or ARM 64 CPU architecture.

- The Mellanox firmware version on the source host must be the same as on the destination host.

- The mstflint package is installed on both the source and destination host:

  dnf install mstflint

- The Mellanox CX-7 networking device has VF_MIGRATION_MODE set to MIGRATION_ENABLED:

  mstconfig -d <device_pci_address> query | grep -i VF_migration
  VF_MIGRATION_MODE MIGRATION_ENABLED(2)

  You can set VF_MIGRATION_MODE to MIGRATION_ENABLED by using the following command:

  mstconfig -d <device_pci_address> set VF_MIGRATION_MODE=2

- The openvswitch package is installed on both the source and destination host:

  dnf install openvswitch

- All of the general SR-IOV devices prerequisites. For details, see Attaching SR-IOV networking devices to virtual machines.

- All of the general VM migration prerequisites. For details, see Migrating a virtual machine by using the command line.
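Two of these prerequisites are version and configuration checks that can be scripted. The following sketch, assuming GNU sort with version ordering (sort -V), tests a firmware version against the 28.36.1010 minimum and checks mstconfig query output for MIGRATION_ENABLED(2). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical prerequisite checks for the Mellanox CX-7 device.

MIN_CX7_FW="28.36.1010"   # minimum firmware version stated in the prerequisites

# Return 0 if version $1 is equal to or greater than version $2.
# Relies on GNU `sort -V` for dotted version ordering.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Read `mstconfig -d <device_pci_address> query` output on stdin and
# return 0 if VF_MIGRATION_MODE reports MIGRATION_ENABLED(2).
vf_migration_enabled() {
    grep -Eq '^[[:space:]]*VF_MIGRATION_MODE[[:space:]]+MIGRATION_ENABLED\(2\)'
}

# Example usage (replace the placeholder before running):
#   fw=$(mstflint -d <device_pci_address> query | awk -F': *' '/^FW Version/ {print $2}')
#   version_at_least "$fw" "$MIN_CX7_FW" || echo "firmware too old"
#   mstconfig -d <device_pci_address> query | vf_migration_enabled || echo "VF migration disabled"
```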

Procedure

1. On the source host, set the Mellanox networking device to the switchdev mode.

   devlink dev eswitch set pci/<device_pci_address> mode switchdev

2. On the source host, create a virtual function on the Mellanox device.

   echo 1 > /sys/bus/pci/devices/0000\:e1\:00.0/sriov_numvfs

   The /0000\:e1\:00.0/ part of the file path is based on the PCI address of the device. In the example it is: 0000:e1:00.0

3. On the source host, unbind the VF from its driver.

   virsh nodedev-detach <vf_pci_address> --driver pci-stub

   You can view the PCI address of the VF by using the following command:

4. On the source host, enable the migration function of the VF.

   devlink port function set pci/0000:e1:00.0/1 migratable enable

   In this example, pci/0000:e1:00.0/1 refers to the first VF on the Mellanox device with the given PCI address.

5. On the source host, configure Open vSwitch (OVS) for the migration of the VF. If the Mellanox device is in switchdev mode, it cannot transfer data over the network.

   a. Ensure the openvswitch service is running.

      systemctl start openvswitch

   b. Enable hardware offloading to improve networking performance.

      ovs-vsctl set Open_vSwitch . other_config:hw-offload=true

   c. Increase the maximum idle time to ensure network connections remain open during the migration.

      ovs-vsctl set Open_vSwitch . other_config:max-idle=300000

   d. Create a new bridge in the OVS instance.

      ovs-vsctl add-br <bridge_name>

   e. Restart the openvswitch service.

      systemctl restart openvswitch

   f. Add the physical Mellanox device to the OVS bridge.

      ovs-vsctl add-port <bridge_name> enp225s0np0

      In this example, <bridge_name> is the name of the bridge you created in a previous step, and enp225s0np0 is the network interface name of the Mellanox device.

   g. Add the VF of the Mellanox device to the OVS bridge.

      ovs-vsctl add-port <bridge_name> enp225s0npf0vf0

      In this example, <bridge_name> is the name of the bridge you created in step d, and enp225s0npf0vf0 is the network interface name of the VF.

6. Repeat steps 1-5 on the destination host.

7. On the source host, open a new file, such as mlx_vf.xml, and add the following XML configuration of the VF:

   This example configures a pass-through of the VF as a network interface for the VM. Ensure the MAC address is unique, and use the PCI address of the VF on the source host.

8. On the source host, attach the VF XML file to the VM.

   virsh attach-device <vm_name> mlx_vf.xml --live --config

   In this example, mlx_vf.xml is the name of the XML file with the VF configuration. Use the --live option to attach the device to a running VM.

9. On the source host, start the live migration of the running VM with the attached VF.

   virsh migrate --live --domain <vm_name> --desturi qemu+ssh://<destination_host_ip_address>/system

   For more details about performing a live migration, see Migrating a virtual machine by using the command line.
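The kernel exposes each VF of a physical function as a virtfn<N> symbolic link under the PF's sysfs directory, which is one way to find the VF PCI address used in the procedure above. The sketch below, with hypothetical function names, resolves that link and renders a libvirt interface definition of type hostdev from the address. The XML layout, including managed='yes', is an assumption for illustration and is not the configuration from the original example; verify it against the libvirt domain XML documentation before use. SYSFS_ROOT exists only so that the lookup can be tested against a scratch directory.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; names are illustrative, not part of any tool.
# SYSFS_ROOT defaults to /sys and is overridable only for testing.

# Print the PCI address of VF number $2 (0-based, default 0) that belongs to
# the physical function at PCI address $1, by resolving the virtfn<N> link.
vf_pci_address() {
    local pf="$1" idx="${2:-0}" root="${SYSFS_ROOT:-/sys}"
    local link="$root/bus/pci/devices/$pf/virtfn$idx"
    if [ ! -L "$link" ]; then
        echo "no such VF: $link" >&2
        return 1
    fi
    basename "$(readlink "$link")"
}

# Print an <interface type='hostdev'> definition for a VF.
# $1: VF PCI address in DDDD:BB:SS.F form, $2: a MAC address unique on the network.
vf_interface_xml() {
    local addr="$1" mac="$2" dom bus slot fn rest
    dom=${addr%%:*}          # domain, for example 0000
    rest=${addr#*:}
    bus=${rest%%:*}          # bus, for example e1
    rest=${rest#*:}
    slot=${rest%%.*}         # slot, for example 00
    fn=${rest#*.}            # function, for example 1
    cat <<EOF
<interface type='hostdev' managed='yes'>
  <mac address='$mac'/>
  <source>
    <address type='pci' domain='0x$dom' bus='0x$bus' slot='0x$slot' function='0x$fn'/>
  </source>
</interface>
EOF
}

# Example usage on the source host (the MAC address is a placeholder):
#   vf=$(vf_pci_address 0000:e1:00.0 0)
#   vf_interface_xml "$vf" 52:54:00:00:00:01 > mlx_vf.xml
```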

Verification

In the migrated VM, view the network interface name of the Mellanox VF.

In the migrated VM, check that the Mellanox VF works, for example:


12.7. Live migrating a virtual machine with an attached NVIDIA vGPU

If you use virtual GPUs (vGPUs) in your virtualization workloads, you can live migrate a running virtual machine (VM) with an attached vGPU to another KVM host. Currently, this is only possible with NVIDIA GPUs.

Prerequisites

- You have an NVIDIA GPU with an NVIDIA Virtual GPU Software Driver version that supports this functionality. Refer to the relevant NVIDIA vGPU documentation for more details.

You have a correctly configured NVIDIA vGPU assigned to a VM. For instructions, see: Setting up NVIDIA vGPU devices

Note: It is also possible to live migrate a VM with multiple vGPU devices attached.

- The host uses RHEL 9.4 or later as the operating system.

- The host uses x86_64 CPU architecture.

- All of the vGPU migration prerequisites that are documented by NVIDIA. Refer to the relevant NVIDIA vGPU documentation for more details.

- All of the general VM migration prerequisites. For details, see Migrating a virtual machine by using the command line

Limitations

- Certain NVIDIA GPU features can disable the migration. For more information, see the specific NVIDIA documentation for your graphics card.

- Some GPU workloads are not compatible with the downtime that happens during a migration. As a consequence, the GPU workloads might stop or crash. It is recommended to test if your workloads are compatible with the downtime before attempting a vGPU live migration.

Currently, some general virtualization features cannot be used when live migrating a VM with an attached vGPU:

- Calculating the dirty memory page generation rate of the VM.

  Currently, live migration data and statistics provided by the virsh domjobinfo and virsh domdirtyrate-calc commands are inaccurate when migrating a VM with an attached vGPU, because the calculations only count guest RAM without including vRAM from the vGPU.

- Using a post-copy live migration.

- Using a virtual I/O Memory Management Unit (vIOMMU) device in the VM.

Procedure

- For instructions on how to proceed with the live migration, see: Migrating a virtual machine by using the command line

No additional parameters for the migration command are required for the attached vGPU device.

12.8. Sharing virtual machine disk images with other hosts

To perform a live migration of a virtual machine (VM) between supported KVM hosts, you must also make the storage of the running VM available to both hosts, so that the VM can read from and write to the storage during the migration process.

One way to do this is to use shared VM storage. The following procedure provides instructions for sharing a locally stored VM image with the source host and the destination host by using the NFS protocol.

Prerequisites

- The VM intended for migration is shut down.

- Optional: A host system other than the source and destination hosts is available to host the storage, and both the source and the destination host can reach it over the network. This is the optimal solution for shared storage and is recommended by Red Hat.

- NFS file locking is not used, because it is not supported in KVM.

- The NFS protocol is installed and enabled on the source and destination hosts. See Deploying an NFS server.

- The virt_use_nfs SELinux boolean is set to on:

  # setsebool virt_use_nfs 1
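The getsebool command prints the state of a boolean in the form virt_use_nfs --> on. The following sketch, which uses a hypothetical helper name, checks that output before changing anything. It uses setsebool -P, which writes the value persistently so that it survives a reboot; without -P, setsebool changes only the current runtime value.

```shell
#!/usr/bin/env bash
# Sketch: check the virt_use_nfs SELinux boolean before setting it.

# sebool_is_on TEXT: succeed if TEXT (one line of getsebool output,
# for example "virt_use_nfs --> on") reports the boolean as on.
sebool_is_on() {
    case "$1" in
        *" --> on") return 0 ;;
        *)          return 1 ;;
    esac
}

# Usage on the source and destination hosts (as root):
#   sebool_is_on "$(getsebool virt_use_nfs)" || setsebool -P virt_use_nfs 1
```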

Procedure

1. Connect to the host that will provide shared storage. In this example, it is the example-shared-storage host:

   # ssh root@example-shared-storage
   root@example-shared-storage's password:
   Last login: Mon Sep 24 12:05:36 2019
   root~#

2. Create a directory on the example-shared-storage host that will hold the disk image and that will be shared with the migration hosts:

   # mkdir /var/lib/libvirt/shared-images

3. On the source host, copy the disk image of the VM to the newly created directory. The following example copies the disk image example-disk-1 of the VM to the /var/lib/libvirt/shared-images/ directory of the example-shared-storage host:

   # scp /var/lib/libvirt/images/example-disk-1.qcow2 root@example-shared-storage:/var/lib/libvirt/shared-images/example-disk-1.qcow2

4. On the host that you want to use for sharing the storage, add the sharing directory to the /etc/exports file. The following example line shares the /var/lib/libvirt/shared-images directory with the example-source-machine and example-destination-machine hosts:

   /var/lib/libvirt/shared-images example-source-machine(rw,no_root_squash) example-destination-machine(rw,no_root_squash)

5. Run the exportfs -a command for the changes in the /etc/exports file to take effect:

   # exportfs -a

6. On both the source and destination host, mount the shared directory in the /var/lib/libvirt/images directory:

   # mount example-shared-storage:/var/lib/libvirt/shared-images /var/lib/libvirt/images
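If you share the directory with more than two hosts, you can generate the /etc/exports entry instead of typing it. This is a sketch with a hypothetical function name; it applies the same rw,no_root_squash options to every host, as in the example procedure above, and only prints the line, so you decide where it goes.

```shell
#!/usr/bin/env bash
# Sketch: build one /etc/exports entry that shares DIR read-write with each HOST.

# exports_line DIR HOST...: print the entry on standard output.
exports_line() {
    local dir=$1; shift
    local line=$dir host
    for host in "$@"; do
        # Same options as the example entry: read-write, root not squashed.
        line+=" ${host}(rw,no_root_squash)"
    done
    printf '%s\n' "$line"
}

# Usage on the storage host (as root), then re-export:
#   exports_line /var/lib/libvirt/shared-images example-source-machine example-destination-machine >> /etc/exports
#   exportfs -a
```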

Verification

- Start the VM on the source host and observe whether it boots successfully.
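Before you start the VM, you can also confirm that /var/lib/libvirt/images on each host is actually an NFS mount and not the local directory underneath it. The following sketch (hypothetical function name) reads a mount table in the /proc/mounts format and accepts both the nfs and nfs4 file system types.

```shell
#!/usr/bin/env bash
# Sketch: verify that a directory is mounted over NFS.

# is_nfs_mount DIR [MOUNTS_FILE]: succeed if DIR is a mount point whose
# file system type is nfs or nfs4 in MOUNTS_FILE (default: /proc/mounts).
# Field 2 of each line is the mount point, field 3 the file system type.
is_nfs_mount() {
    local dir=$1 mounts=${2:-/proc/mounts}
    awk -v d="$dir" '$2 == d && ($3 == "nfs" || $3 == "nfs4") { found = 1 }
                     END { exit !found }' "$mounts"
}

# Usage on the source and destination hosts:
#   is_nfs_mount /var/lib/libvirt/images && echo "shared storage is mounted"
```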

12.9. Verifying host CPU compatibility for virtual machine migration

For migrated virtual machines (VMs) to work correctly on the destination host, the CPUs on the source and the destination hosts must be compatible. To ensure that this is the case, calculate a common CPU baseline before you begin the migration.

The instructions in this section use an example migration scenario with the following host CPUs:

- Source host: Intel Core i7-8650U

- Destination hosts: Intel Xeon CPU E5-2620 v2

Note that this procedure does not apply to 64-bit ARM systems.

Prerequisites

- Virtualization is installed and enabled on your system.

- You have administrator access to the source host and the destination host for the migration.

Procedure

1. On the source host, obtain its CPU features and paste them into a new XML file, such as domCaps-CPUs.xml:

   # virsh domcapabilities | xmllint --xpath "//cpu/mode[@name='host-model']" - > domCaps-CPUs.xml

2. In the XML file, replace the <mode> </mode> tags with <cpu> </cpu>.

3. Optional: Verify the content of the domCaps-CPUs.xml file.

4. On the destination host, use the same command to obtain its CPU features, this time without redirecting the output to a file:

   # virsh domcapabilities | xmllint --xpath "//cpu/mode[@name='host-model']" -

5. Add the obtained CPU features from the destination host to the domCaps-CPUs.xml file on the source host. Again, replace the <mode> </mode> tags with <cpu> </cpu> and save the file.

6. Optional: Verify that the XML file now contains the CPU features from both hosts.

7. Use the XML file to calculate the CPU feature baseline for the VM you intend to migrate:

   # virsh hypervisor-cpu-baseline domCaps-CPUs.xml

8. Open the XML configuration of the VM you intend to migrate, and replace the contents of the <cpu> section with the settings obtained in the previous step:

   # virsh edit <vm_name>

9. If the VM is running, shut down the VM and start it again:

   # virsh shutdown <vm_name>
   # virsh start <vm_name>
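The <mode> to <cpu> tag replacement described in the procedure above can be scripted with sed. This is a minimal sketch, assuming GNU sed (as shipped with RHEL) for in-place editing; the function name is hypothetical. The pattern requires a space or > directly after mode, so the nested <model> elements are left unchanged, and running it again after you append the destination host output is harmless because no <mode> tags remain from the first run.

```shell
#!/usr/bin/env bash
# Sketch: turn <mode ...> ... </mode> elements into <cpu> ... </cpu> in place.
# Assumes GNU sed (-i without a backup suffix).

# mode_to_cpu FILE: rewrite every opening <mode> tag (with or without
# attributes) as <cpu>, and every </mode> as </cpu>. <model> is not matched
# because the opening pattern needs a space or ">" right after "mode".
mode_to_cpu() {
    sed -E -i 's#<mode( [^>]*)?>#<cpu>#g; s#</mode>#</cpu>#g' "$1"
}

# Usage on the source host:
#   virsh domcapabilities | xmllint --xpath "//cpu/mode[@name='host-model']" - > domCaps-CPUs.xml
#   mode_to_cpu domCaps-CPUs.xml
#   (append the destination host output to the file, then run mode_to_cpu again)
#   virsh hypervisor-cpu-baseline domCaps-CPUs.xml
```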

12.10. Supported hosts for virtual machine migration

For the virtual machine (VM) migration to work properly and be supported by Red Hat, the source and destination hosts must run specific RHEL versions, and the VM must use a supported machine type. The following table shows supported VM migration paths.

| Migration method | Release type | Future version example | Support status |
|---|---|---|---|
| Forward | Minor release | 9.3 | On supported RHEL 9 systems: Supported for machine type q35. |
| Forward | Major release | 8.x | On supported RHEL 9 systems: Supported for machine type q35. |
| Forward | Major release | 9.7 | On supported RHEL 9 systems: Supported for machine type q35. |
| Backward | Minor release | 9.7 | On supported RHEL 9 systems: Supported for machine type q35. |
| Backward | Major release | 9.7 | On supported RHEL 9 systems: Supported for machine type q35. |
| Backward | Major release | 10.1 | On supported RHEL 9 systems: Supported for machine type q35. |

Support level is different for other virtualization solutions provided by Red Hat, including RHOSP and OpenShift Virtualization.
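The first two columns of the table depend only on the source and destination RHEL versions. The following hypothetical helper derives them from two MAJOR.MINOR version numbers. It is a sketch that assumes purely numeric versions, and it does not decide the support status, which still depends on the machine type and on the rest of the table.

```shell
#!/usr/bin/env bash
# Sketch: classify a migration path by RHEL version (not by support status).

# ver_part VERSION N: print component N (1 = major, 2 = minor); missing parts print 0.
ver_part() {
    local IFS=.
    local -a parts
    read -r -a parts <<< "$1"
    printf '%s\n' "${parts[$(( $2 - 1 ))]:-0}"
}

# migration_path SRC DST: print "<method> <release type>" for SRC -> DST.
migration_path() {
    local s1 s2 d1 d2
    s1=$(ver_part "$1" 1); s2=$(ver_part "$1" 2)
    d1=$(ver_part "$2" 1); d2=$(ver_part "$2" 2)
    if (( s1 != d1 )); then
        # Major versions differ: direction follows the major version.
        if (( d1 > s1 )); then echo "Forward Major release"; else echo "Backward Major release"; fi
    elif (( s2 != d2 )); then
        # Same major version: direction follows the minor version.
        if (( d2 > s2 )); then echo "Forward Minor release"; else echo "Backward Minor release"; fi
    else
        echo "Same release"
    fi
}

# Example:
#   migration_path 9.2 9.4
```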