Chapter 9. Connect an instance to the physical network

This chapter explains how to use provider networks to connect instances directly to an external network.

Overview of the OpenStack Networking topology:

OpenStack Networking (neutron) has two categories of services distributed across a number of node types.

- Neutron server - This service runs the OpenStack Networking API server, which provides the API for end-users and services to interact with OpenStack Networking. This server also integrates with the underlying database to store and retrieve tenant network, router, and load balancer details, among others.

- Neutron agents - These are the services that perform the network functions for OpenStack Networking:

  - neutron-dhcp-agent - manages DHCP IP addressing for tenant private networks.

  - neutron-l3-agent - performs layer 3 routing between tenant private networks, the external network, and others.

  - neutron-lbaas-agent - provisions the LBaaS routers created by tenants.

- Compute node - This node hosts the hypervisor that runs the virtual machines, also known as instances. A Compute node must be wired directly to the network in order to provide external connectivity for instances. This node is typically where the L2 agents run, such as neutron-openvswitch-agent.

Service placement:

The OpenStack Networking services can either run together on the same physical server, or on separate dedicated servers, which are named according to their roles:

- Controller node - The server that runs the API service.

- Network node - The server that runs the OpenStack Networking agents.

- Compute node - The hypervisor server that hosts the instances.

The steps in this chapter assume that your environment has deployed these three node types. If your deployment has both the Controller and Network node roles on the same physical node, then the steps from both sections must be performed on that server. This also applies to a High Availability (HA) environment, where all three nodes might be running the Controller node and Network node services with HA. As a result, sections applicable to Controller and Network nodes must be performed on all three nodes.

9.1. Using Flat Provider Networks

This procedure creates flat provider networks that can connect instances directly to external networks. You would do this if you have multiple physical networks (for example, physnet1, physnet2) and separate physical interfaces (eth0 -> physnet1, and eth1 -> physnet2), and you need to connect each Compute node and Network node to those external networks.

If you want to connect multiple VLAN-tagged interfaces (on a single NIC) to multiple provider networks, please refer to Section 9.2, “Using VLAN provider networks”.

Configure the Controller nodes:

1. Edit /etc/neutron/plugin.ini (which is symlinked to /etc/neutron/plugins/ml2/ml2_conf.ini), add flat to the existing list of type_drivers values, and set flat_networks to *:

[ml2]
type_drivers = vxlan,flat

[ml2_type_flat]
flat_networks = *

2. Create a flat external network and associate it with the configured physical_network. Creating it as a shared network will allow other users to connect their instances directly to it:

neutron net-create public01 --provider:network_type flat --provider:physical_network physnet1 --router:external=True --shared

3. Create a subnet within this external network using neutron subnet-create, or the OpenStack Dashboard. For example:

neutron subnet-create --name public_subnet --enable_dhcp=False --allocation_pool start=192.168.100.20,end=192.168.100.100 --gateway=192.168.100.1 public01 192.168.100.0/24

4. Restart the neutron-server service to apply this change:

systemctl restart neutron-server.service
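Before running the subnet-create command in step 3, you might sanity-check the addressing values. The Python sketch below is a hypothetical helper (check_subnet is not part of OpenStack) that uses the standard ipaddress module to confirm that the allocation pool and gateway from the example lie inside 192.168.100.0/24, that the pool is ordered, and that the gateway sits outside the pool. It checks only addressing consistency and does not contact OpenStack Networking.

```python
import ipaddress
from typing import List


def check_subnet(cidr: str, pool_start: str, pool_end: str, gateway: str) -> List[str]:
    """Return the problems found in the subnet parameters (empty list if none)."""
    net = ipaddress.ip_network(cidr)  # raises ValueError if host bits are set
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    gw = ipaddress.ip_address(gateway)
    problems = []
    for label, addr in (("pool start", start), ("pool end", end), ("gateway", gw)):
        if addr not in net:
            problems.append(f"{label} {addr} is outside {net}")
    if start > end:
        problems.append("pool start is greater than pool end")
    if start <= gw <= end:
        problems.append(f"gateway {gw} falls inside the allocation pool")
    if gw in (net.network_address, net.broadcast_address):
        problems.append(f"gateway {gw} is the network or broadcast address")
    return problems


# Values from the neutron subnet-create example in step 3.
issues = check_subnet("192.168.100.0/24", "192.168.100.20", "192.168.100.100", "192.168.100.1")
print(issues or "subnet parameters look consistent")
```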

Configure the Network node and Compute nodes:

These steps must be completed on the Network node and the Compute nodes. Completing them connects the nodes to the external network and allows instances to communicate directly with the external network.

1. Create the Open vSwitch bridge and port. This step creates the external network bridge (br-ex) and adds a corresponding port (eth1):

i. Edit /etc/sysconfig/network-scripts/ifcfg-eth1:

ii. Edit /etc/sysconfig/network-scripts/ifcfg-br-ex:

2. Restart the network service for the changes to take effect:

systemctl restart network.service

3. Configure the physical networks in /etc/neutron/plugins/ml2/openvswitch_agent.ini and map the bridge to the physical network:

For more information on configuring bridge_mappings, see Chapter 13, Configure Bridge Mappings.

bridge_mappings = physnet1:br-ex

4. Restart the neutron-openvswitch-agent service on the Network and Compute nodes for the changes to take effect:

systemctl restart neutron-openvswitch-agent
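The contents of the two ifcfg files in step 1 are not shown above. As a hedged sketch, the Python below renders a pair of files using the keys of the Open vSwitch integration for RHEL network-scripts (TYPE=OVSPort or OVSBridge, DEVICETYPE=ovs, OVS_BRIDGE). The helper names and the exact key set, including the ONBOOT, NM_CONTROLLED, and BOOTPROTO values, are assumptions for illustration; compare them with your platform documentation before writing anything to /etc/sysconfig/network-scripts.

```python
def render_ifcfg(settings: dict) -> str:
    """Render KEY=value lines in the format used by ifcfg-* files."""
    return "".join(f"{key}={value}\n" for key, value in settings.items())


def ovs_port_and_bridge(port: str = "eth1", bridge: str = "br-ex") -> dict:
    """Return {filename: contents} for an OVS bridge and its physical port.

    Assumed keys, following the openvswitch network-scripts conventions.
    """
    common = {"DEVICETYPE": "ovs", "ONBOOT": "yes", "NM_CONTROLLED": "no", "BOOTPROTO": "none"}
    # The physical interface becomes a port of the OVS bridge.
    port_cfg = {"DEVICE": port, "TYPE": "OVSPort", "OVS_BRIDGE": bridge, **common}
    # The bridge itself.
    bridge_cfg = {"DEVICE": bridge, "TYPE": "OVSBridge", **common}
    return {
        f"ifcfg-{port}": render_ifcfg(port_cfg),
        f"ifcfg-{bridge}": render_ifcfg(bridge_cfg),
    }


for name, text in ovs_port_and_bridge().items():
    print(f"# /etc/sysconfig/network-scripts/{name}")
    print(text)
```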

Configure the Network node:

1. Set external_network_bridge to an empty value in /etc/neutron/l3_agent.ini. This enables the use of external provider networks:

# Name of bridge used for external network traffic. This should be set to

# empty value for the linux bridge

external_network_bridge =

2. Restart neutron-l3-agent for the changes to take effect:

systemctl restart neutron-l3-agent.service
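To confirm step 1 without reading the whole file, a check like the Python sketch below can parse l3_agent.ini with the standard configparser module. The helper name is hypothetical, and it assumes the option lives in the [DEFAULT] section; it distinguishes an option that is absent (None) from one that is present but empty (an empty string).

```python
import configparser
from typing import Optional


def external_bridge_setting(ini_text: str) -> Optional[str]:
    """Return external_network_bridge from [DEFAULT], or None if the option is absent."""
    # interpolation=None: do not treat '%' in values as interpolation syntax.
    # strict=False: tolerate duplicate options instead of raising an error.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ini_text)
    return parser.defaults().get("external_network_bridge")


SAMPLE = """[DEFAULT]
# Name of bridge used for external network traffic. This should be set to
# empty value for the linux bridge
external_network_bridge =
"""

value = external_bridge_setting(SAMPLE)
if value == "":
    print("external_network_bridge is empty: provider external networks can be used")
else:
    print(f"external_network_bridge is {value!r}: set it to an empty value")
```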

If there are multiple flat provider networks, each of them should have a separate physical interface and bridge to connect it to the external network. Configure the ifcfg-* scripts appropriately, and use a comma-separated list of mappings when specifying them in bridge_mappings. For more information on configuring bridge_mappings, see Chapter 13, Configure Bridge Mappings.
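To illustrate the comma-separated format, the hypothetical Python helpers below build a bridge_mappings value from a dictionary and parse it back. The parser rejects a bridge mapped to more than one physical network, reflecting the requirement that each flat network has its own bridge. The names physnet2 and br-ex2 are made up for this example.

```python
def format_bridge_mappings(mappings: dict) -> str:
    """Build a bridge_mappings value such as 'physnet1:br-ex,physnet2:br-ex2'."""
    return ",".join(f"{physnet}:{bridge}" for physnet, bridge in mappings.items())


def parse_bridge_mappings(value: str) -> dict:
    """Parse a bridge_mappings value into {physical_network: bridge}.

    Raises ValueError for malformed entries, repeated physical networks,
    or a bridge that is shared by two physical networks.
    """
    result = {}
    for entry in filter(None, (item.strip() for item in value.split(","))):
        physnet, sep, bridge = entry.partition(":")
        physnet, bridge = physnet.strip(), bridge.strip()
        if not sep or not physnet or not bridge:
            raise ValueError(f"malformed mapping: {entry!r}")
        if physnet in result:
            raise ValueError(f"physical network {physnet!r} mapped twice")
        if bridge in result.values():
            raise ValueError(f"bridge {bridge!r} used by more than one physical network")
        result[physnet] = bridge
    return result


value = format_bridge_mappings({"physnet1": "br-ex", "physnet2": "br-ex2"})
print(f"bridge_mappings = {value}")
print(parse_bridge_mappings(value))
```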

Connect an instance to the external network:

With the network created, you can now connect an instance to it and test connectivity:

1. Create a new instance.

2. Use the Networking tab in the dashboard to add the new instance directly to the newly-created external network.

How does the packet flow work?

With flat provider networking configured, this section describes in detail how traffic flows to and from an instance.

9.1.1. The flow of outgoing traffic

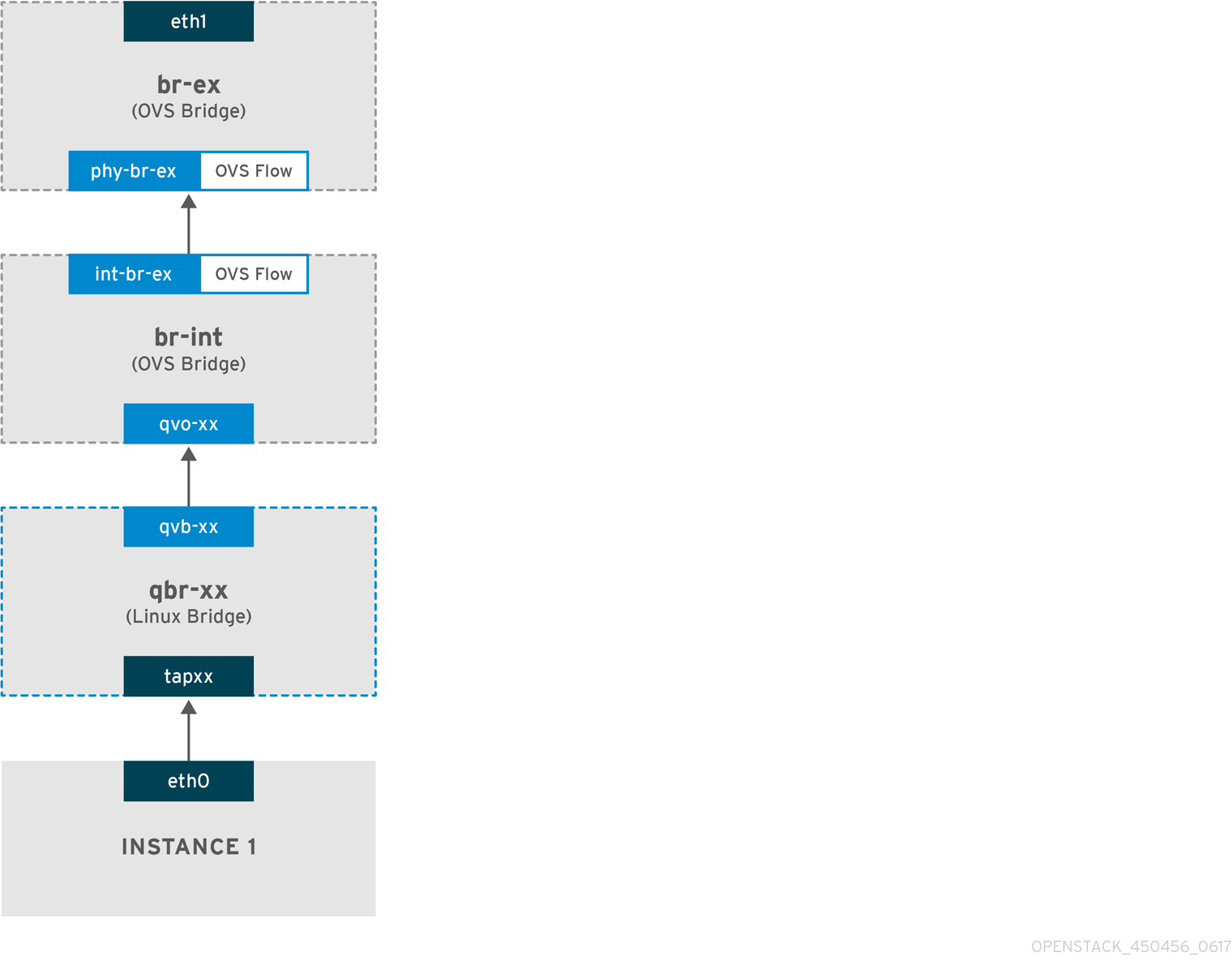

This section describes the packet flow for traffic leaving an instance and arriving directly at an external network. Once you configure br-ex, add the physical interface, and spawn an instance to a Compute node, your resulting interfaces and bridges will be similar to those in the diagram above (if you use the iptables_hybrid firewall driver).

1. Packets leaving the eth0 interface of the instance first arrive at the Linux bridge qbr-xx.

2. Bridge qbr-xx is connected to br-int using the veth pair qvb-xx <-> qvo-xx. The Linux bridge is needed because the inbound/outbound firewall rules defined by the security group are applied on it.

3. Interface qvbxx is connected to the qbr-xx Linux bridge, and qvoxx is connected to the br-int Open vSwitch (OVS) bridge.
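The xx suffix in these device names comes from the neutron port ID. The Python sketch below assumes the convention that the names are a three-letter prefix (tap, qbr, qvb, qvo) followed by the first 11 characters of the port UUID, which keeps each name at 14 characters, within the Linux interface-name limit. The port ID used is hypothetical; only its first 11 characters are taken from the bridge output in this section.

```python
import uuid

PREFIXES = ("tap", "qbr", "qvb", "qvo")  # instance tap, Linux bridge, veth pair ends
DEV_NAME_LEN = 14  # assumed neutron device-name length (Linux allows at most 15)


def hybrid_device_names(port_id: str) -> dict:
    """Derive the iptables_hybrid device names for a neutron port ID (assumed convention)."""
    uuid.UUID(port_id)  # raises ValueError if port_id is not a UUID
    return {prefix: prefix + port_id[: DEV_NAME_LEN - len(prefix)] for prefix in PREFIXES}


# Hypothetical port ID: only "269d4d73-e7" comes from the example output.
for prefix, name in hybrid_device_names("269d4d73-e7a1-4c5b-9f00-0123456789ab").items():
    print(prefix, name)
```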

The configuration of qbr-xx on the Linux bridge:

qbr269d4d73-e7  8000.061943266ebb  no  qvb269d4d73-e7
                                       tap269d4d73-e7

The configuration of qvoxx on br-int:

Port qvoxx is tagged with the internal VLAN tag associated with the flat provider network. In this example, the VLAN tag is 5. Once the packet reaches qvoxx, the VLAN tag is added to the packet header.

The packet is then moved to the br-ex OVS bridge using the patch-peer int-br-ex <-> phy-br-ex.

Example configuration of the patch-peer on br-int:

Example configuration of the patch-peer on br-ex:

When this packet reaches phy-br-ex on br-ex, an OVS flow inside br-ex strips the VLAN tag (5) and forwards it to the physical interface.

In the example below, the output shows the port number of phy-br-ex as 2.

The output below shows the flow that matches any packet arriving on phy-br-ex (in_port=2) with a VLAN tag of 5 (dl_vlan=5). The flow strips the VLAN tag and forwards the packet (actions=strip_vlan,NORMAL).

# ovs-ofctl dump-flows br-ex

NXST_FLOW reply (xid=0x4):

cookie=0x0, duration=4703.491s, table=0, n_packets=3620, n_bytes=333744, idle_age=0, priority=1 actions=NORMAL

cookie=0x0, duration=3890.038s, table=0, n_packets=13, n_bytes=1714, idle_age=3764, priority=4,in_port=2,dl_vlan=5 actions=strip_vlan,NORMAL

cookie=0x0, duration=4702.644s, table=0, n_packets=10650, n_bytes=447632, idle_age=0, priority=2,in_port=2 actions=drop

This packet is then forwarded to the physical interface. If the physical interface is another VLAN-tagged interface, then the interface will add the tag to the packet.
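To inspect output like this programmatically, the Python sketch below parses ovs-ofctl dump-flows lines into fields and an actions string, and then looks for the flow that strips a given VLAN tag arriving on a given port. It is a simplified, hypothetical parser for the comma-separated key=value layout shown above, and it assumes that actions= is the last field on each line; it is not a full implementation of the ovs-ofctl flow syntax.

```python
from typing import Optional

# Sample taken from the ovs-ofctl dump-flows br-ex output shown above.
DUMP = """NXST_FLOW reply (xid=0x4):
 cookie=0x0, duration=4703.491s, table=0, n_packets=3620, n_bytes=333744, idle_age=0, priority=1 actions=NORMAL
 cookie=0x0, duration=3890.038s, table=0, n_packets=13, n_bytes=1714, idle_age=3764, priority=4,in_port=2,dl_vlan=5 actions=strip_vlan,NORMAL
 cookie=0x0, duration=4702.644s, table=0, n_packets=10650, n_bytes=447632, idle_age=0, priority=2,in_port=2 actions=drop
"""


def parse_flow(line: str) -> dict:
    """Split one dump-flows line into key=value fields plus the raw actions string.

    Bare keywords without '=' (for example 'ip') are stored with the value True.
    """
    head, sep, actions = line.strip().partition(" actions=")
    if not sep:
        raise ValueError(f"no actions in flow: {line!r}")
    fields = {}
    for token in head.replace(" ", ",").split(","):
        if token:
            key, eq, value = token.partition("=")
            fields[key] = value if eq else True
    fields["actions"] = actions
    return fields


def find_strip_flow(dump: str, in_port: int, vlan: int) -> Optional[dict]:
    """Return the first flow matching in_port and dl_vlan whose actions include strip_vlan."""
    for line in dump.splitlines():
        if " actions=" not in line:
            continue  # skip the NXST_FLOW reply header and blank lines
        flow = parse_flow(line)
        if (flow.get("in_port") == str(in_port)
                and flow.get("dl_vlan") == str(vlan)
                and "strip_vlan" in flow["actions"].split(",")):
            return flow
    return None


flow = find_strip_flow(DUMP, in_port=2, vlan=5)
print(flow["priority"], flow["n_packets"], flow["actions"])
```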

9.1.2. The flow of incoming traffic

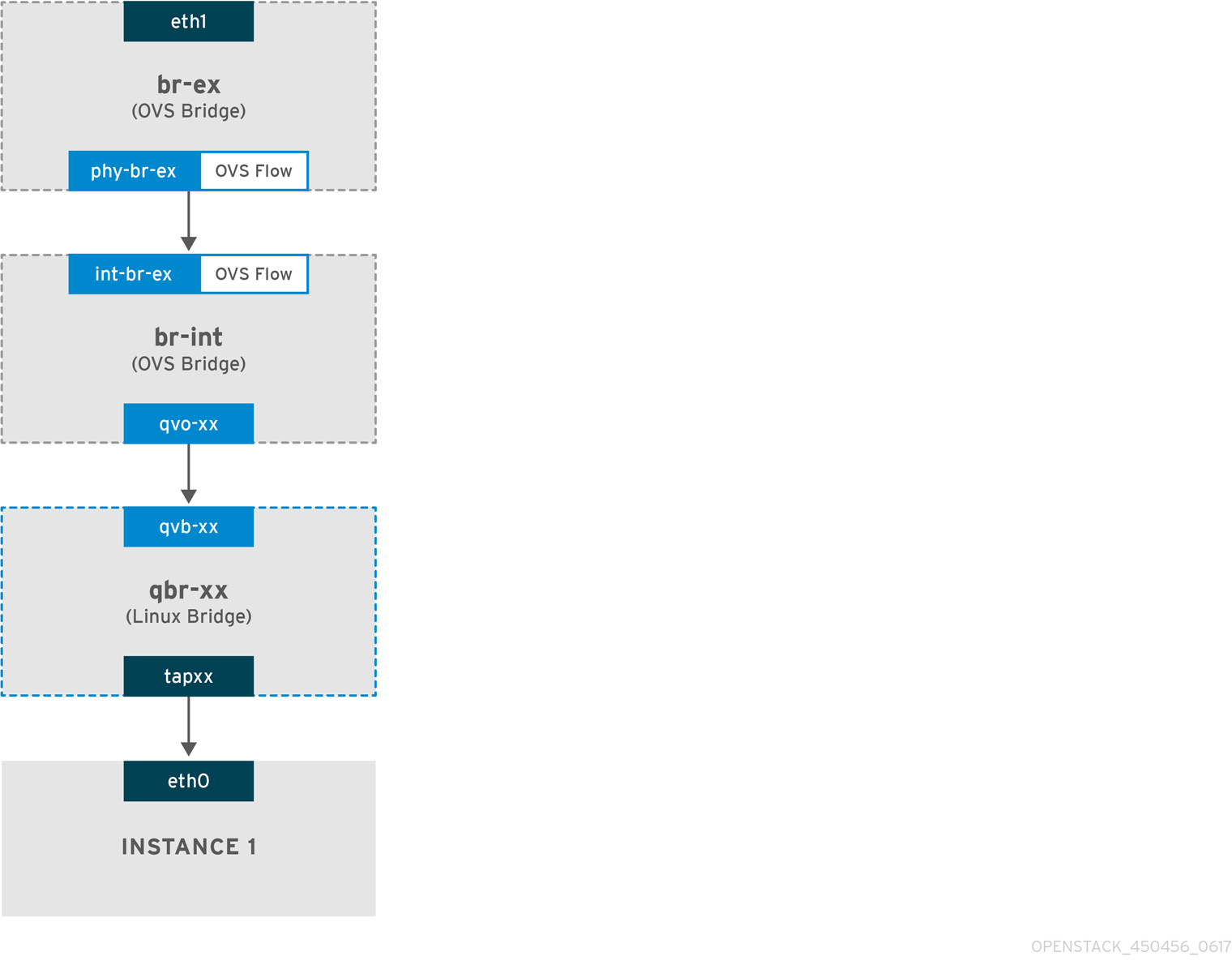

This section describes the flow of incoming traffic from the external network until it arrives at the instance’s interface.

1. Incoming traffic first arrives at eth1 on the physical node.

2. The packet is then passed to the br-ex bridge.

3. The packet then moves to br-int using the patch-peer phy-br-ex <--> int-br-ex.

In the example below, int-br-ex uses port number 15. See the entry containing 15(int-br-ex):

Observing the traffic flow on br-int

1. When the packet arrives at int-br-ex, an OVS flow rule within the br-int bridge amends the packet to add the internal VLAN tag 5. See the entry for actions=mod_vlan_vid:5:

2. The second rule manages packets that arrive on int-br-ex (in_port=15) with no VLAN tag (vlan_tci=0x0000): it adds VLAN tag 5 to the packet (actions=mod_vlan_vid:5,NORMAL) and forwards it on to qvoxxx.

3. qvoxxx accepts the packet, strips away the VLAN tag, and forwards it to qvbxx.

4. The packet then reaches the instance.
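The rule selection in these steps follows the OpenFlow principle that, among the flows whose match fields all fit a packet, the one with the highest priority is applied. The Python sketch below models that for a tiny, hypothetical br-int table built from the rules described above. The priority values and the drop and catch-all rules are assumptions for illustration, not captured output, and the model ignores multiple tables, masks, and everything else Open vSwitch actually does.

```python
from typing import List, Optional, Tuple

# Hypothetical flow table modelled on the br-int rules described above.
# vlan=None stands for "no VLAN tag" (vlan_tci=0x0000 in ovs-ofctl syntax).
BR_INT_FLOWS: List[Tuple[int, dict, str]] = [
    (1, {}, "NORMAL"),                                           # assumed catch-all
    (2, {"in_port": 15}, "drop"),                                # assumed: other traffic from int-br-ex
    (3, {"in_port": 15, "vlan": None}, "mod_vlan_vid:5,NORMAL"),  # untagged traffic from int-br-ex
]


def lookup(flows, packet: dict) -> Optional[str]:
    """Return the actions of the highest-priority flow whose match fits the packet."""
    best = None
    for priority, match, actions in flows:
        if all(packet.get(field) == value for field, value in match.items()):
            if best is None or priority > best[0]:
                best = (priority, actions)
    return best[1] if best else None


# An untagged packet arriving from int-br-ex gets internal VLAN 5.
print(lookup(BR_INT_FLOWS, {"in_port": 15, "vlan": None}))
```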

VLAN tag 5 is an example VLAN that was used on a test Compute node with a flat provider network; this value was assigned automatically by neutron-openvswitch-agent. This value may be different for your own flat provider network, and it can differ for the same network on two separate Compute nodes.

9.1.3. Troubleshooting

The output provided in the section above ("How does the packet flow work?") provides sufficient debugging information for troubleshooting a flat provider network, should anything go wrong. The steps below can further assist with the troubleshooting process.

1. Review bridge_mappings:

Verify that the physical network name used (for example, physnet1) is consistent with the contents of the bridge_mappings configuration. For example:

2. Review the network configuration:

Confirm that the network is created as external, and uses the flat type:

# neutron net-show provider-flat

...

| provider:network_type | flat |

| router:external | True |

...

3. Review the patch-peer:

Run ovs-vsctl show, and verify that br-int and br-ex are connected using the patch-peer int-br-ex <--> phy-br-ex.

This connection is created when the neutron-openvswitch-agent service is restarted, but only if bridge_mappings is correctly configured in /etc/neutron/plugins/ml2/openvswitch_agent.ini. Re-check the bridge_mappings setting if the connection is not created, even after restarting the service.

For more information on configuring bridge_mappings, see Chapter 13, Configure Bridge Mappings.

4. Review the network flows:

Run ovs-ofctl dump-flows br-ex and ovs-ofctl dump-flows br-int and review whether the flows strip the internal VLAN IDs for outgoing packets, and add VLAN IDs for incoming packets. This flow is first added when you spawn an instance to this network on a specific Compute node.

- If this flow is not created after spawning the instance, verify that the network is created as flat, is external, and that the physical_network name is correct. In addition, review the bridge_mappings settings.

- Finally, review the ifcfg-br-ex and ifcfg-ethx configuration. Make sure that ethX is definitely added as a port within br-ex, and that both of them have the UP flag in the output of ip a.

For example, the output below shows eth1 is a port in br-ex:

The example below demonstrates that eth1 is configured as an OVS port, and that the kernel transfers all packets from the interface to the OVS bridge br-ex. This can be observed in the entry master ovs-system.

# ip a

5: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP qlen 1000
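The network check in step 2 can also be scripted. The hypothetical Python helpers below parse the | field | value | rows printed by neutron net-show and report any expected field with a different value. SAMPLE mirrors the rows shown in step 2, plus a provider:physical_network row added for illustration. Parsing the human-readable table is fragile, so treat this as a sketch; a machine-readable output format, if your client offers one, is a sturdier input.

```python
def parse_show_table(output: str) -> dict:
    """Parse '| field | value |' rows of a CLI table into a dict of strings."""
    fields = {}
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip '+----+' borders, '...' and other text
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) == 2 and cells[0] != "Field":
            fields[cells[0]] = cells[1]
    return fields


def check_network(output: str, expected: dict) -> dict:
    """Return {field: (expected, actual)} for every field that does not match."""
    actual = parse_show_table(output)
    return {k: (v, actual.get(k)) for k, v in expected.items() if actual.get(k) != v}


SAMPLE = """
| provider:network_type     | flat     |
| provider:physical_network | physnet1 |
| router:external           | True     |
"""
# physnet1 is the example physical network name used in this chapter.
EXPECTED = {"provider:network_type": "flat", "router:external": "True",
            "provider:physical_network": "physnet1"}
print(check_network(SAMPLE, EXPECTED) or "network matches the expected flat settings")
```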

9.2. Using VLAN provider networks

This procedure creates VLAN provider networks that can connect instances directly to external networks. You would do this if you want to connect multiple VLAN-tagged interfaces (on a single NIC) to multiple provider networks. This example uses a physical network called physnet1, with a range of VLANs (171-172). The Network nodes and Compute nodes are connected to the physical network using a physical interface called eth1. The switch ports to which these interfaces are connected must be configured to trunk the required VLAN ranges.

The following procedures configure the VLAN provider networks using the example VLAN IDs and names given above.

Configure the Controller nodes:

1. Enable the vlan type driver by editing /etc/neutron/plugin.ini (symlinked to /etc/neutron/plugins/ml2/ml2_conf.ini) and adding vlan to the existing list of type_drivers values. For example:

[ml2]

type_drivers = vxlan,flat,vlan

2. Configure the network_vlan_ranges setting to reflect the physical network and VLAN ranges in use. For example:

[ml2_type_vlan]

network_vlan_ranges=physnet1:171:172

3. Restart the neutron-server service to apply the changes:

systemctl restart neutron-server

4. Create the external networks as vlan-type networks, and associate them with the configured physical_network. Create them as --shared networks to let other users connect instances directly. This example creates two networks: one for VLAN 171, and another for VLAN 172:

5. Create a number of subnets and configure them to use the external network, using either neutron subnet-create or the dashboard. Make sure that the external subnet details you have received from your network administrator are correctly associated with each VLAN. In this example, VLAN 171 uses subnet 10.65.217.0/24 and VLAN 172 uses 10.65.218.0/24:
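The commands for steps 4 and 5 are not reproduced above. As a hedged sketch, the Python below parses the network_vlan_ranges value from step 2 and prints neutron net-create and subnet-create commands built from the options used in the flat example, plus --provider:segmentation_id to select the VLAN. The network names provider-171 and provider-172 match those used later in this chapter; the subnet names are hypothetical, and the gateway, allocation pool, and DHCP settings are left at their defaults, so adapt the commands to the details from your network administrator. Rejecting a VLAN outside the configured range is a conservative check for this example, not necessarily a rule that neutron enforces for provider networks.

```python
import shlex


def parse_vlan_ranges(value: str) -> dict:
    """Parse network_vlan_ranges, e.g. 'physnet1:171:172' -> {'physnet1': [(171, 172)]}."""
    ranges = {}
    for entry in filter(None, (e.strip() for e in value.split(","))):
        parts = entry.split(":")
        if len(parts) not in (1, 3):
            raise ValueError(f"malformed entry: {entry!r}")
        physnet_ranges = ranges.setdefault(parts[0], [])
        if len(parts) == 3:
            low, high = int(parts[1]), int(parts[2])
            if not 1 <= low <= high <= 4094:
                raise ValueError(f"invalid VLAN range in {entry!r}")
            physnet_ranges.append((low, high))
    return ranges


def vlan_commands(vlan_ranges: str, physnet: str, subnets: dict) -> list:
    """Build neutron command lines (as argv lists) for a {vlan_id: cidr} mapping."""
    parsed = parse_vlan_ranges(vlan_ranges)
    if physnet not in parsed:
        raise ValueError(f"{physnet} is not listed in network_vlan_ranges")
    commands = []
    for vlan, cidr in sorted(subnets.items()):
        # Conservative local check for this example, not a documented neutron rule.
        if not any(low <= vlan <= high for low, high in parsed[physnet]):
            raise ValueError(f"VLAN {vlan} is outside the ranges for {physnet}")
        net = f"provider-{vlan}"
        commands.append(["neutron", "net-create", net,
                         "--provider:network_type", "vlan",
                         "--provider:physical_network", physnet,
                         "--provider:segmentation_id", str(vlan),
                         "--router:external=True", "--shared"])
        # Hypothetical subnet name; gateway, pool and DHCP are left at their defaults.
        commands.append(["neutron", "subnet-create", "--name", f"subnet-{net}", net, cidr])
    return commands


for argv in vlan_commands("physnet1:171:172", "physnet1",
                          {171: "10.65.217.0/24", 172: "10.65.218.0/24"}):
    print(shlex.join(argv))
```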

Configure the Network nodes and Compute nodes:

These steps must be performed on the Network node and Compute nodes. Completing them connects the nodes to the external network and permits instances to communicate directly with the external network.

1. Create an external network bridge (br-ex), and associate a port (eth1) with it:

- This example configures eth1 to use br-ex:

- This example configures the br-ex bridge:

2. Reboot the node, or restart the network service for the networking changes to take effect. For example:

systemctl restart network

3. Configure the physical networks in /etc/neutron/plugins/ml2/openvswitch_agent.ini and map bridges according to the physical network:

bridge_mappings = physnet1:br-ex

For more information on configuring bridge_mappings, see Chapter 13, Configure Bridge Mappings.

4. Restart the neutron-openvswitch-agent service on the network nodes and compute nodes for the changes to take effect:

systemctl restart neutron-openvswitch-agent
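Because the physical network name must match on the Controller and on each node, a quick consistency check can help. The hypothetical Python function below compares the physical networks named in network_vlan_ranges (from the Controller node) with those in bridge_mappings (on this node) and lists any that have no bridge. It uses simplified parsing and assumes you paste in both values by hand; a physical network that this node never needs to reach may legitimately be absent.

```python
def physnets_without_bridge(network_vlan_ranges: str, bridge_mappings: str) -> list:
    """Return physical networks listed in network_vlan_ranges but absent from bridge_mappings."""
    # 'physnet1:171:172' and 'physnet1:br-ex' both start with the physical network name.
    vlan_physnets = {e.split(":")[0].strip() for e in network_vlan_ranges.split(",") if e.strip()}
    mapped = {e.split(":")[0].strip() for e in bridge_mappings.split(",") if e.strip()}
    return sorted(vlan_physnets - mapped)


missing = physnets_without_bridge("physnet1:171:172", "physnet1:br-ex")
print("all physical networks are mapped" if not missing else f"no bridge for: {missing}")
```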

Configure the Network node:

1. Set external_network_bridge to an empty value in /etc/neutron/l3_agent.ini. This is required to use provider external networks, rather than a bridge-based external network, for which you would set external_network_bridge = br-ex:

# Name of bridge used for external network traffic. This should be set to

# empty value for the linux bridge

external_network_bridge =

2. Restart neutron-l3-agent for the changes to take effect.

systemctl restart neutron-l3-agent

3. Create a new instance and use the Networking tab in the dashboard to add the new instance directly to the newly-created external network.

How does the packet flow work?

With VLAN provider networking configured, this section describes in detail how traffic flows to and from an instance:

9.2.1. The flow of outgoing traffic

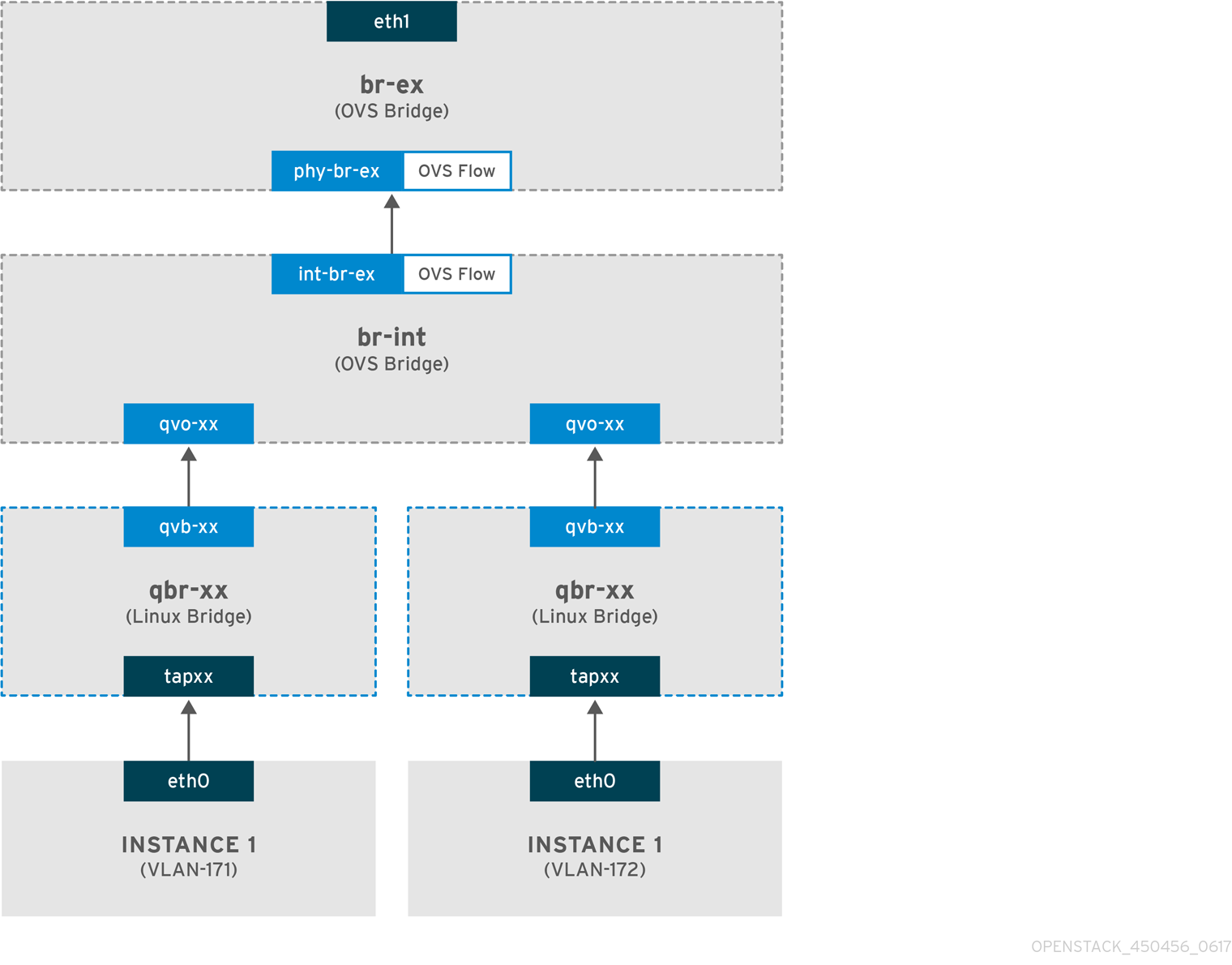

This section describes the packet flow for traffic leaving an instance and arriving directly at a VLAN provider external network. This example uses two instances attached to the two VLAN networks (171 and 172). Once you configure br-ex, add a physical interface to it, and spawn an instance to a Compute node, your resulting interfaces and bridges will be similar to those in the diagram above.

1. As illustrated above, packets leaving the instance’s eth0 first arrive at the linux bridge qbr-xx connected to the instance.

2. qbr-xx is connected to br-int using the veth pair qvbxx <-> qvoxxx.

3. qvbxx is connected to the linux bridge qbr-xx and qvoxx is connected to the Open vSwitch bridge br-int.

Below is the configuration of qbr-xx on the Linux bridge.

Since there are two instances, there are two Linux bridges:

The configuration of qvoxx on br-int:

- qvoxx is tagged with the internal VLAN tag associated with the VLAN provider network. In this example, the internal VLAN tag 2 is associated with the VLAN provider network provider-171 and VLAN tag 3 is associated with VLAN provider network provider-172. Once the packet reaches qvoxx, this VLAN tag is added to the packet header.

- The packet is then moved to the br-ex OVS bridge using the patch-peer int-br-ex <-> phy-br-ex. Example patch-peer on br-int:

Example configuration of the patch peer on br-ex:

- When this packet reaches phy-br-ex on br-ex, an OVS flow inside br-ex replaces the internal VLAN tag with the actual VLAN tag associated with the VLAN provider network.

The output of the following command shows that the port number of phy-br-ex is 4:

# ovs-ofctl show br-ex

4(phy-br-ex): addr:32:e7:a1:6b:90:3e

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

The flows in br-ex (as listed by ovs-ofctl dump-flows br-ex) match any packet that arrives on phy-br-ex (in_port=4) with VLAN tag 2 (dl_vlan=2): Open vSwitch replaces the VLAN tag with 171 (actions=mod_vlan_vid:171,NORMAL) and forwards the packet on. Likewise, for any packet that arrives on phy-br-ex (in_port=4) with VLAN tag 3 (dl_vlan=3), Open vSwitch replaces the VLAN tag with 172 (actions=mod_vlan_vid:172,NORMAL) and forwards the packet on. These rules are added automatically by neutron-openvswitch-agent.

- This packet is then forwarded to physical interface eth1.
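The port numbers used in these flows come from ovs-ofctl show. The Python sketch below (a hypothetical helper) extracts a mapping from port name to OpenFlow port number, so that in_port values in a flow dump can be matched to names such as phy-br-ex. It only recognizes numbered N(name): header lines like the one shown above, so the LOCAL port and the remaining details of the output are ignored.

```python
import re

# Matches port header lines such as " 4(phy-br-ex): addr:32:e7:a1:6b:90:3e".
PORT_LINE = re.compile(r"^\s*(\d+)\(([^)]+)\):")


def ofport_numbers(show_output: str) -> dict:
    """Map OVS port names to OpenFlow port numbers from 'ovs-ofctl show' output."""
    ports = {}
    for line in show_output.splitlines():
        match = PORT_LINE.match(line)
        if match:
            ports[match.group(2)] = int(match.group(1))
    return ports


SAMPLE = """ 4(phy-br-ex): addr:32:e7:a1:6b:90:3e
     config: 0
     state: 0
     speed: 0 Mbps now, 0 Mbps max
"""
print(ofport_numbers(SAMPLE))
```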

9.2.2. The flow of incoming traffic

- An incoming packet for the instance from the external network first reaches eth1, then arrives at br-ex.

- From br-ex, the packet is moved to br-int via the patch-peer phy-br-ex <-> int-br-ex.

The below command shows int-br-ex with port number 18:

# ovs-ofctl show br-int

18(int-br-ex): addr:fe:b7:cb:03:c5:c1

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

- When the packet arrives on int-br-ex, an OVS flow rule inside br-int adds internal VLAN tag 2 for provider-171 and VLAN tag 3 for provider-172 to the packet:

The second rule says that a packet arriving on int-br-ex (in_port=18) with VLAN tag 172 (dl_vlan=172) has its VLAN tag replaced with 3 (actions=mod_vlan_vid:3,NORMAL) and is forwarded. The third rule says that a packet arriving on int-br-ex (in_port=18) with VLAN tag 171 (dl_vlan=171) has its VLAN tag replaced with 2 (actions=mod_vlan_vid:2,NORMAL) and is forwarded.

- With the internal VLAN tag added to the packet, qvoxxx accepts it, strips the VLAN tag, and forwards it to qvbxx, after which the packet reaches the instance.
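For each VLAN provider network on a given node, the br-ex rules (internal to external VLAN) and the br-int rules (external to internal VLAN) should be inverse mappings. The Python sketch below extracts both mappings from flow strings and checks that they agree. The flow strings are reconstructed from the rules described in this section, with assumed priority values; they are not captured output.

```python
import re

# Flow fragments reconstructed from the rules described above (priorities assumed).
BR_EX_FLOWS = [
    "priority=4,in_port=4,dl_vlan=2 actions=mod_vlan_vid:171,NORMAL",
    "priority=4,in_port=4,dl_vlan=3 actions=mod_vlan_vid:172,NORMAL",
]
BR_INT_FLOWS = [
    "priority=3,in_port=18,dl_vlan=172 actions=mod_vlan_vid:3,NORMAL",
    "priority=3,in_port=18,dl_vlan=171 actions=mod_vlan_vid:2,NORMAL",
]

# Captures the matched VLAN and the VLAN that the actions rewrite it to.
VLAN_REWRITE = re.compile(r"dl_vlan=(\d+)\b.*actions=.*mod_vlan_vid:(\d+)")


def vlan_translation(flows) -> dict:
    """Return {matched VLAN: rewritten VLAN} for flows that rewrite the VLAN ID."""
    mapping = {}
    for flow in flows:
        match = VLAN_REWRITE.search(flow)
        if match:
            mapping[int(match.group(1))] = int(match.group(2))
    return mapping


def translation_mismatches(outgoing: dict, incoming: dict) -> list:
    """List internal VLANs whose outgoing and incoming translations disagree."""
    return sorted(internal for internal, external in outgoing.items()
                  if incoming.get(external) != internal)


out_map = vlan_translation(BR_EX_FLOWS)  # internal VLAN -> segmentation_id
in_map = vlan_translation(BR_INT_FLOWS)  # segmentation_id -> internal VLAN
print(out_map, in_map, translation_mismatches(out_map, in_map))
```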

Note that VLAN tags 2 and 3 are examples that were used on a test Compute node for the VLAN provider networks (provider-171 and provider-172). These values may be different for your VLAN provider network, and can also differ for the same network on two different Compute nodes.

9.2.3. Troubleshooting

Refer to the packet flow described in the section above when troubleshooting connectivity in a VLAN provider network. In addition, review the following configuration options:

1. Verify that the physical network name is used consistently. In this example, physnet1 is used both when creating the network and within the bridge_mappings configuration:

2. Confirm that the network was created as external, is type vlan, and uses the correct segmentation_id value:

3. Run ovs-vsctl show and verify that br-int and br-ex are connected using the patch-peer int-br-ex <-> phy-br-ex.

This connection is created while restarting neutron-openvswitch-agent, provided that bridge_mappings is correctly configured in /etc/neutron/plugins/ml2/openvswitch_agent.ini.

Re-check the bridge_mappings setting if the connection is not created, even after restarting the service.

4. To review the flow of outgoing packets, run ovs-ofctl dump-flows br-ex and ovs-ofctl dump-flows br-int, and verify that the flows map the internal VLAN IDs to the external VLAN ID (segmentation_id). For incoming packets, verify that the flows map the external VLAN ID to the internal VLAN ID.

This flow is added by the neutron OVS agent when you spawn an instance to this network for the first time. If this flow is not created after spawning the instance, make sure that the network is created as vlan, is external, and that the physical_network name is correct. In addition, re-check the bridge_mappings settings.

5. Finally, re-check the ifcfg-br-ex and ifcfg-ethx configuration. Make sure that ethX is added as a port inside br-ex, and that both of them have the UP flag in the output of the ip a command.

For example, the output below shows that eth1 is a port in br-ex.

The command below shows that eth1 has been added as a port, and that the kernel moves all packets from the interface to the OVS bridge br-ex. This is demonstrated by the entry master ovs-system.

# ip a

5: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP qlen 1000
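The UP and master ovs-system checks from step 5 can be expressed as a small parser for an interface header line of ip a. The hypothetical helpers below understand only the layout shown above (index, name, flags in angle brackets, then key/value pairs); where your iproute2 version supports it, ip -json addr is a sturdier source.

```python
import re

# Header line layout: "<index>: <name>[@<link>]: <FLAGS> key value key value ..."
HEADER = re.compile(r"^\d+:\s+([^:@\s]+)(?:@\S+)?:\s+<([^>]*)>\s*(.*)$")


def parse_ip_header(line: str) -> dict:
    """Parse an 'ip a' interface header line into name, flags and attributes."""
    match = HEADER.match(line.strip())
    if not match:
        raise ValueError(f"not an interface header line: {line!r}")
    name, flags, rest = match.groups()
    words = rest.split()
    # Assumes attributes come in key/value pairs, e.g. "mtu 1500 qdisc mq master ovs-system".
    attrs = dict(zip(words[0::2], words[1::2]))
    return {"name": name, "flags": set(flags.split(",")), "attrs": attrs}


def is_ovs_port_up(line: str) -> bool:
    """True if the interface has the UP flag and its master is ovs-system."""
    info = parse_ip_header(line)
    return "UP" in info["flags"] and info["attrs"].get("master") == "ovs-system"


LINE = ("5: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq "
        "master ovs-system state UP qlen 1000")
print(is_ovs_port_up(LINE))
```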

9.3. Enable Compute metadata access

Instances connected in this way are directly attached to the provider external networks, and have external routers configured as their default gateway; no OpenStack Networking (neutron) routers are used. This means that neutron routers cannot be used to proxy metadata requests from instances to the nova-metadata server, which may result in failures while running cloud-init. However, you can resolve this issue by configuring the DHCP agent to proxy metadata requests. You can enable this functionality in /etc/neutron/dhcp_agent.ini. For example:

enable_isolated_metadata = True

9.4. Floating IP addresses

Note that the same network can be used to allocate floating IP addresses to instances, even if they have been added to private networks at the same time. The addresses allocated as floating IPs from this network will be bound to the qrouter-xxx namespace on the Network node, and will perform DNAT and SNAT to the associated private IP address. In contrast, the IP addresses allocated for direct external network access will be bound directly inside the instance, and allow the instance to communicate directly with the external network.