
System Design Guide

Designing a RHEL 8 system

Abstract

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Part I. Design of installation

Chapter 1. System requirements and supported architectures

Red Hat Enterprise Linux 8 delivers a stable, secure, consistent foundation across hybrid cloud deployments with the tools needed to deliver workloads faster with less effort. You can deploy RHEL as a guest on supported hypervisors and cloud provider environments as well as on physical infrastructure, so your applications can take advantage of innovations in the leading hardware architecture platforms.

Review the guidelines provided for system, hardware, security, memory, and RAID before installing.

If you want to use your system as a virtualization host, review the necessary hardware requirements for virtualization.

Red Hat Enterprise Linux supports the following architectures:

- AMD and Intel 64-bit architectures

- The 64-bit ARM architecture

- IBM Power Systems, Little Endian

- 64-bit IBM Z architectures

1.1. Supported installation targets

An installation target is a storage device that stores Red Hat Enterprise Linux and boots the system. Red Hat Enterprise Linux supports the following installation targets for IBM Z, IBM Power, AMD64, Intel 64, and 64-bit ARM systems:

- Storage connected by a standard internal interface, such as DASD, SCSI, SATA, or SAS

- BIOS/firmware RAID devices on the Intel64, AMD64 and arm64 architectures

- NVDIMM devices in sector mode on the Intel64 and AMD64 architectures, supported by the nd_pmem driver.

- Storage connected via Fibre Channel Host Bus Adapters, such as DASDs (IBM Z architecture only) and SCSI LUNs, including multipath devices. Some might require vendor-provided drivers.

- Xen block devices on Intel processors in Xen virtual machines.

- VirtIO block devices on Intel processors in KVM virtual machines.

Red Hat does not support installation to USB drives or SD memory cards. For information about support for third-party virtualization technologies, see the Red Hat Hardware Compatibility List.

1.2. System specifications

The Red Hat Enterprise Linux installation program automatically detects and installs your system’s hardware, so you should not have to supply any specific system information. However, for certain Red Hat Enterprise Linux installation scenarios, it is recommended that you record system specifications for future reference. These scenarios include:

Installing RHEL with a customized partition layout

Record: The model numbers, sizes, types, and interfaces of the disks attached to the system. For example, Seagate ST3320613AS 320 GB on SATA0, Western Digital WD7500AAKS 750 GB on SATA1.

Installing RHEL as an additional operating system on an existing system

Record: Partitions used on the system. This information can include file system types, device node names, file system labels, and sizes, and allows you to identify specific partitions during the partitioning process. If one of the operating systems is a Unix operating system, Red Hat Enterprise Linux may report the device names differently. Additional information can be found by executing the equivalent of the mount command and the blkid command, and in the /etc/fstab file.

If multiple operating systems are installed, the Red Hat Enterprise Linux installation program attempts to automatically detect them, and to configure boot loader to boot them. You can manually configure additional operating systems if they are not detected automatically.
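The partition details mentioned above can be saved to a file before you start the installation. The following is a minimal sketch, assuming that a Linux system is already running on the machine. The function name record_layout and the output file name are illustrative and are not part of any Red Hat tool.

```shell
#!/bin/sh
# Hypothetical helper: save partition details to a file for later reference.
record_layout() {
    out="$1"
    {
        echo "== mount =="
        # Currently mounted file systems, with device node names and types.
        mount 2>/dev/null || echo "mount: not available"
        echo "== blkid =="
        # Labels, UUIDs, and file system types; usually needs root to probe devices.
        if command -v blkid >/dev/null 2>&1; then
            blkid 2>/dev/null || echo "blkid: no output (try running as root)"
        else
            echo "blkid: not installed"
        fi
        echo "== /etc/fstab =="
        if [ -r /etc/fstab ]; then
            cat /etc/fstab
        else
            echo "/etc/fstab: not readable"
        fi
    } > "$out"
}

# Example: record_layout "$HOME/storage-layout.txt"
```

Keep the resulting file on a different machine or on removable media, because the installation can overwrite the disk that holds it.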

Installing RHEL from an image on a local disk

Record: The disk and directory that holds the image.

Installing RHEL from a network location

If the network has to be configured manually, that is, DHCP is not used.

Record:

- IP address

- Netmask

- Gateway IP address

- Server IP addresses, if required

Contact your network administrator if you need assistance with networking requirements.

Installing RHEL on an iSCSI target

Record: The location of the iSCSI target. Depending on your network, you may need a CHAP user name and password, and a reverse CHAP user name and password.

Installing RHEL if the system is part of a domain

Verify that the domain name is supplied by the DHCP server. If it is not, enter the domain name during installation.

1.3. Disk and memory requirements

If several operating systems are installed, verify that the disk space allocated to them is separate from the disk space required by Red Hat Enterprise Linux. In some cases, you must dedicate specific partitions to Red Hat Enterprise Linux:

- For AMD64, Intel 64, and 64-bit ARM, at least two partitions (/ and swap) must be dedicated to RHEL.

- For IBM Power Systems servers, at least three partitions (/, swap, and a PReP boot partition) must be dedicated to RHEL.

To install Red Hat Enterprise Linux, you must also have a minimum of 10 GiB of space in either unpartitioned disk space or in partitions that can be deleted. For more information, see Partitioning reference.

| Installation type | Minimum RAM |
|---|---|
| Local media installation (USB, DVD) | |
| NFS network installation | |
| HTTP, HTTPS or FTP network installation | |

It is possible to complete the installation with less memory than the minimum requirements. The exact requirements depend on your environment and installation path. Test various configurations to determine the minimum required RAM for your environment. Installing Red Hat Enterprise Linux using a Kickstart file has the same minimum RAM requirements as a standard installation. However, additional RAM may be required if your Kickstart file includes commands that require additional memory, or write data to the RAM disk. For more information, see Automatically installing RHEL.

1.4. Graphics display resolution requirements

Your system must have the following minimum resolution to ensure a smooth and error-free installation of Red Hat Enterprise Linux.

| Product version | Resolution |
|---|---|
| Red Hat Enterprise Linux 8 | Minimum: 800 x 600. Recommended: 1024 x 768. |

1.5. UEFI Secure Boot and Beta release requirements

If you plan to install a Beta release of Red Hat Enterprise Linux on a system that has UEFI Secure Boot enabled, first disable the UEFI Secure Boot option and then begin the installation.

UEFI Secure Boot requires that the operating system kernel is signed with a recognized private key, which the system’s firmware verifies using the corresponding public key. For Red Hat Enterprise Linux Beta releases, the kernel is signed with a Red Hat Beta-specific private key, and the system does not recognize the corresponding public key by default. As a result, the system fails to boot even the installation media.

Chapter 2. Customizing the installation media

For details, see Composing a customized RHEL system image.

Chapter 3. Creating a bootable installation medium for RHEL

You can download the ISO file from the Customer Portal to prepare the bootable physical installation medium, such as a USB or DVD. Starting with RHEL 8, Red Hat no longer provides separate variants for Server and Workstation. Red Hat Enterprise Linux for x86_64 includes both Server and Workstation capabilities. The distinction between Server and Workstation is managed through the System Purpose Role during the installation or configuration process.

After downloading an ISO file from the Customer Portal, create a bootable physical installation medium, such as a USB drive or DVD, to continue the installation process.

In secure environments where USB drives are prohibited, consider using image builder to create and deploy reference images. This method ensures compliance with security policies while maintaining system integrity. For more details, see the Image builder documentation.

By default, the inst.stage2= boot option is used on the installation medium and is set to a specific label, for example, inst.stage2=hd:LABEL=RHEL8\x86_64. If you modify the default label of the file system containing the runtime image, or if you use a customized procedure to boot the installation system, verify that the label is set to the correct value.

3.1. Installation boot media options

There are several options available to boot the Red Hat Enterprise Linux installation program.

- Full installation DVD or USB flash drive

- Create a full installation DVD or USB flash drive using the DVD ISO image. The DVD or USB flash drive can be used as a boot device and as an installation source for installing software packages.

- Minimal installation DVD, CD, or USB flash drive

- Create a minimal installation CD, DVD, or USB flash drive using the Boot ISO image, which contains only the minimum files necessary to boot the system and start the installation program. If you are not using the Content Delivery Network (CDN) to download the required software packages, the Boot ISO image requires an installation source that contains the required software packages.

- PXE Server

- A preboot execution environment (PXE) server allows the installation program to boot over the network. After a system boot, you must complete the installation from a different installation source, such as a local disk or a network location.

- Image builder

- With image builder, you can create customized system and cloud images to install Red Hat Enterprise Linux in virtual and cloud environments.

3.2. Creating a bootable DVD

You can create a bootable installation DVD by using burning software and a DVD burner. The exact steps to produce a DVD from an ISO image file vary greatly, depending on the operating system and disc burning software installed. Consult your system’s burning software documentation for the exact steps to burn a DVD from an ISO image file.

You can create a bootable DVD using either the DVD ISO image (full install) or the Boot ISO image (minimal install). However, the DVD ISO image is larger than 4.7 GB, and as a result, it might not fit on a single or dual-layer DVD. Check the size of the DVD ISO image file before you proceed. When you use the DVD ISO image to create bootable installation media, use a USB flash drive. For environments where USB drives are prohibited, see the Image builder documentation.
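The size check can be scripted. The following is a minimal sketch, not an official tool: the function name fits_on_dvd is hypothetical, and the capacity of 4,700,000,000 bytes is an assumption that stands in for the nominal 4.7 GB of a single-layer DVD.

```shell
#!/bin/sh
# Hypothetical helper: report whether an image fits on a single-layer DVD.
# Assumption: 4,700,000,000 bytes is used as the nominal 4.7 GB capacity.
DVD_CAPACITY_BYTES=4700000000

fits_on_dvd() {
    image="$1"
    [ -f "$image" ] || { echo "no such file: $image" >&2; return 2; }
    # wc -c reports the file size in bytes; tr strips padding that some systems add.
    size=$(wc -c < "$image" | tr -d ' ')
    if [ "$size" -le "$DVD_CAPACITY_BYTES" ]; then
        echo "$image: $size bytes, fits on a 4.7 GB DVD"
    else
        echo "$image: $size bytes, too large for a 4.7 GB DVD"
        return 1
    fi
}

# Example: fits_on_dvd /home/testuser/Downloads/rhel-8-x86_64-boot.iso
```

A non-zero return value indicates that the image is missing or too large for the assumed capacity.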

3.3. Creating a bootable USB device on Linux

You can create a bootable USB device which you can then use to install Red Hat Enterprise Linux on other machines. This procedure overwrites the existing data on the USB drive without any warning. Back up any data or use an empty flash drive. A bootable USB drive cannot be used for storing data.

Prerequisites

- You have downloaded the full installation DVD ISO or minimal installation Boot ISO image from the Product Downloads page.

- You have a USB flash drive with enough capacity for the ISO image. The required size varies, but the recommended USB size is 8 GB.

Procedure

- Connect the USB flash drive to the system.

- Open a terminal window and display a log of recent events:

  $ dmesg|tail

  Messages resulting from the attached USB flash drive are displayed at the bottom of the log. Record the name of the connected device.

- Log in as a root user:

  $ su -

  Enter your root password when prompted.

- Find the device node assigned to the drive. In this example, the drive name is sdd.

- If the inserted USB device mounts automatically, unmount it before continuing with the next steps. For unmounting, use the umount command. For more information, see Unmounting a file system with umount.

- Write the ISO image directly to the USB device:

  # dd if=/image_directory/image.iso of=/dev/device

  - Replace /image_directory/image.iso with the full path to the ISO image file that you downloaded.

  - Replace device with the device name that you retrieved with the dmesg command.

  In this example, the full path to the ISO image is /home/testuser/Downloads/rhel-8-x86_64-boot.iso, and the device name is sdd:

  # dd if=/home/testuser/Downloads/rhel-8-x86_64-boot.iso of=/dev/sdd

  Partition names are usually device names with a numerical suffix. For example, sdd is a device name, and sdd1 is the name of a partition on the device sdd.

- Wait for the dd command to finish writing the image to the device. Run the sync command to synchronize cached writes to the device. The data transfer is complete when the # prompt appears. When you see the prompt, log out of the root account and unplug the USB drive. The USB drive is now ready to use as a boot device.
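The write and sync steps of this procedure can be wrapped in a small function. This is a sketch under stated assumptions rather than a supported tool: the name write_image is hypothetical, the bs=4M block size is an addition that is not part of the procedure, and the mount check relies on /proc/mounts being available.

```shell
#!/bin/sh
# Hypothetical helper wrapping the dd and sync steps. It overwrites the target.
write_image() {
    image="$1"    # full path to the ISO image
    target="$2"   # whole device such as /dev/sdd, not a partition such as /dev/sdd1

    [ -f "$image" ] || { echo "image not found: $image" >&2; return 1; }

    # Refuse to continue while the device or one of its partitions is mounted.
    if grep -qs "^$target" /proc/mounts; then
        echo "$target is mounted; unmount it with umount first" >&2
        return 1
    fi

    # bs=4M is an assumption, not part of the procedure; it only sets the transfer block size.
    dd if="$image" of="$target" bs=4M || return 1

    # Flush cached writes so that the device is safe to unplug.
    sync
}

# Example (run as root):
# write_image /home/testuser/Downloads/rhel-8-x86_64-boot.iso /dev/sdd
```

Double-check the device name before running the function, because dd does not ask for confirmation.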

3.4. Creating a bootable USB device on Windows

You can create a bootable USB device on a Windows system with various tools. You can use Fedora Media Writer, available for download at https://github.com/FedoraQt/MediaWriter/releases. Fedora Media Writer is a community product and is not supported by Red Hat. You can report any issues with the tool at https://github.com/FedoraQt/MediaWriter/issues.

Creating a bootable drive overwrites existing data on the USB drive without any warning. Back up any data or use an empty flash drive. A bootable USB drive cannot be used for storing data.

Prerequisites

- You have downloaded the full installation DVD ISO or minimal installation Boot ISO image from the Product Downloads page.

- You have a USB flash drive with enough capacity for the ISO image. The required size varies.

Procedure

- Download and install Fedora Media Writer from https://github.com/FedoraQt/MediaWriter/releases.

- Connect the USB flash drive to the system.

- Open Fedora Media Writer.

- From the main window, select the custom image option and choose the previously downloaded Red Hat Enterprise Linux ISO image.

- From the Write Custom Image window, select the drive that you want to use.

- Start the write operation. The boot media creation process starts. Do not unplug the drive until the operation completes. The operation might take several minutes, depending on the size of the ISO image and the write speed of the USB drive.

- When the operation completes, unmount the USB drive. The USB drive is now ready to be used as a boot device.

3.5. Creating a bootable USB device on macOS

You can create a bootable USB device which you can then use to install Red Hat Enterprise Linux on other machines. Creating a bootable USB drive overwrites any data previously stored on the USB drive without any warning. Back up any data or use an empty flash drive. A bootable USB drive cannot be used for storing data.

Prerequisites

- You have downloaded the full installation DVD ISO or minimal installation Boot ISO image from the Product Downloads page.

- You have a USB flash drive with enough capacity for the ISO image. The required size varies.

Procedure

- Connect the USB flash drive to the system.

- Identify the device path with the diskutil list command. The device path has the format of /dev/disknumber, where number is the number of the disk. The disks are numbered starting at zero (0). Typically, disk0 is the OS X recovery disk, and disk1 is the main OS X installation. In this example, the USB device is disk2.

- Identify your USB flash drive by comparing the NAME, TYPE and SIZE columns to your flash drive. For example, the NAME should be the title of the flash drive icon in the Finder tool. You can also compare these values to those in the information panel of the flash drive.

- Unmount the flash drive’s file system volumes:

  $ diskutil unmountDisk /dev/disknumber
  Unmount of all volumes on disknumber was successful

  When the command completes, the icon for the flash drive disappears from your desktop. If the icon does not disappear, you may have selected the wrong disk. Attempting to unmount the system disk accidentally returns a failed to unmount error.

- Write the ISO image to the flash drive. macOS provides both a block (/dev/disk*) and character device (/dev/rdisk*) file for each storage device. Writing an image to the /dev/rdisknumber character device is faster than writing to the /dev/disknumber block device. For example, to write the /Users/user_name/Downloads/rhel-8-x86_64-boot.iso file to the /dev/rdisk2 device, enter the following command:

  $ sudo dd if=/Users/user_name/Downloads/rhel-8-x86_64-boot.iso of=/dev/rdisk2 bs=512K status=progress

  - if= - Path to the installation image.

  - of= - The raw disk device (/dev/rdisknumber) representing the target disk.

  - bs=512K - Sets the block size to 512 KB for faster data transfer.

  - status=progress - Displays a progress indicator during the operation.

- Wait for the dd command to finish writing the image to the device. The data transfer is complete when the shell prompt reappears. When the prompt is displayed, unplug the USB drive. The USB drive is now ready to be used as a boot device.

Chapter 4. Booting the installation media

You can boot the Red Hat Enterprise Linux installation using a USB or DVD.

You can register RHEL using the Red Hat Content Delivery Network (CDN). CDN is a geographically distributed series of web servers. These servers provide, for example, packages and updates to RHEL hosts with a valid subscription.

During the installation, registering and installing RHEL from the CDN offers the following benefits:

- Utilizing the latest packages for an up-to-date system immediately after installation.

- Integrated support for connecting to Red Hat Insights and enabling System Purpose.

Starting with RHEL 9.6, the Defense Information Systems Agency (DISA) Security Technical Implementation Guide (STIG) and other security profiles do not automatically enable Federal Information Processing Standards (FIPS) mode at first boot. To remain FIPS compliant, you must manually enable FIPS mode before the installation begins, either by adding the fips=1 kernel boot option or by using a Kickstart configuration that explicitly enables FIPS. If FIPS is not enabled before installation, systems built using these security profiles might not be compliant, and users might unknowingly deploy non-compliant systems. To avoid compliance issues, ensure that FIPS is enabled during the boot phase, before launching the graphical or text-based installer.

Prerequisites

- You have created bootable installation media (USB or DVD).

Procedure

- Power off the system to which you are installing Red Hat Enterprise Linux.

- Disconnect any drives from the system.

- Power on the system.

- Insert the bootable installation media (USB, DVD, or CD).

- Power off the system but do not remove the boot media.

- Power on the system.

- You might need to press a specific key or combination of keys to boot from the media or configure the Basic Input/Output System (BIOS) of your system to boot from the media. For more information, see the documentation that came with your system.

- The Red Hat Enterprise Linux boot window opens and displays information about a variety of available boot options.

Use the arrow keys on your keyboard to select the boot option that you require, and press Enter to select the boot option. The Welcome to Red Hat Enterprise Linux window opens and you can install Red Hat Enterprise Linux using the graphical user interface.

The installation program automatically begins if no action is performed in the boot window within 60 seconds.

Optional: Edit the available boot options:

- UEFI-based systems: Press E to enter edit mode. Change the predefined command line to add or remove boot options. Press Enter to confirm your choice.

- BIOS-based systems: Press the Tab key on your keyboard to enter edit mode. Change the predefined command line to add or remove boot options. Press Enter to confirm your choice.

Chapter 5. Optional: Customizing boot options

When you are installing RHEL on x86_64 or ARM64 architectures, you can edit the boot options to customize the installation process based on your specific environment.

5.1. Boot options

You can append multiple options, separated by spaces, to the boot command line. Boot options specific to the installation program always start with the inst. prefix. The following types of boot options are available:

- Options with an equals "=" sign

  You must specify a value for boot options that use the = symbol. For example, the inst.vncpassword= option must contain a value, in this example, a password. The correct syntax for this example is inst.vncpassword=password.

- Options without an equals "=" sign

  These boot options do not accept any values or parameters. For example, the rd.live.check option forces the installation program to verify the installation media before starting the installation. If this boot option is present, the installation program performs the verification; if it is not present, the verification is skipped.

You can customize boot options for a particular menu entry in the following ways:

- On BIOS-based systems: Press the Tab key and add custom boot options to the command line. You can also access the boot: prompt by pressing the Esc key but no required boot options are preset. In this scenario, you must always specify the Linux option before using any other boot options. For more information, see Editing the boot: prompt in BIOS.

- On UEFI-based systems: Press the e key and add custom boot options to the command line. When ready, press Ctrl+X to boot the modified option. For more information, see Editing boot options for the UEFI-based systems.
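The difference between the two kinds of boot options can be illustrated with a short syntax check. This is only a sketch: check_boot_options is a hypothetical helper, not part of the installation program, and it checks only whether an option that ends in = is missing its value.

```shell
#!/bin/sh
# Hypothetical helper: flag options that end in "=" without a value.
check_boot_options() {
    status=0
    for opt in "$@"; do
        case "$opt" in
            *=)  echo "missing value: $opt"; status=1 ;;
            *=*) echo "option with value: $opt" ;;
            *)   echo "flag option: $opt" ;;
        esac
    done
    return "$status"
}

# Example: check_boot_options inst.vncpassword=password rd.live.check
```

The function returns a non-zero status if any option, such as inst.vncpassword=, is given without a value.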

5.2. Editing the boot: prompt in BIOS

When using the boot: prompt, the first option must always specify the installation program image file that you want to load. In most cases, you can specify the image using the linux keyword. You can specify additional options according to your requirements.

Prerequisites

- You have created bootable installation media (USB, CD or DVD).

- You have booted the installation from the media, and the installation boot menu is open.

Procedure

- With the boot menu open, press the Esc key on your keyboard.

- The boot: prompt is now accessible.

- Press the Tab key on your keyboard to display the help commands.

- Press the Enter key on your keyboard to start the installation with your options. To return from the boot: prompt to the boot menu, restart the system and boot from the installation media again.

5.3. Editing predefined boot options using the > prompt

On BIOS-based AMD64 and Intel 64 systems, you can use the > prompt to edit predefined boot options.

Prerequisites

- You have created bootable installation media (USB, CD or DVD).

- You have booted the installation from the media, and the installation boot menu is open.

Procedure

- From the boot menu, select an option and press the Tab key on your keyboard. The > prompt is accessible and displays the available options.

- Optional: To view a full set of options, select Test this media and install RHEL 8.

- Append the options that you require to the > prompt.

  For example, to enable the cryptographic module self-checks mandated by the Federal Information Processing Standard (FIPS) 140, add fips=1:

  >vmlinuz initrd=initrd.img inst.stage2=hd:LABEL=RHEL-9-5-0-BaseOS-x86_64 rd.live.check quiet fips=1

- Press Enter to start the installation.

- Press Esc to cancel editing and return to the boot menu.

5.5. Updating drivers during installation

You can update drivers during the Red Hat Enterprise Linux installation process. Updating drivers is completely optional. Do not perform a driver update unless it is necessary. Ensure you have been notified by Red Hat, your hardware vendor, or a trusted third-party vendor that a driver update is required during Red Hat Enterprise Linux installation.

5.5.1. Overview

Red Hat Enterprise Linux supports drivers for many hardware devices, but some newly released drivers might not be supported. Perform a driver update only if an unsupported driver prevents the installation from completing. Updating drivers during installation is typically only required to support a particular configuration, for example, installing drivers for a storage adapter card that provides access to your system’s storage devices.

Driver update disks may disable conflicting kernel drivers. In rare cases, unloading a kernel module may cause installation errors.

5.5.2. Types of driver update

Red Hat, your hardware vendor, or a trusted third party provides the driver update as an ISO image file. Once you receive the ISO image file, choose the type of driver update.

Types of driver update

- Automatic

  In this driver update method, a storage device (including a CD, DVD, or USB flash drive) labeled OEMDRV is physically connected to the system. If the OEMDRV storage device is present when the installation starts, it is treated as a driver update disk, and the installation program automatically loads its drivers.

- Assisted

  The installation program prompts you to locate a driver update. You can use any local storage device with a label other than OEMDRV. The inst.dd boot option is specified when starting the installation. If you use this option without any parameters, the installation program displays all of the storage devices connected to the system, and prompts you to select a device that contains a driver update.

- Manual

  Manually specify a path to a driver update image or an RPM package. You can use any local storage device with a label other than OEMDRV, or a network location accessible from the installation system. The inst.dd=location boot option is specified when starting the installation, where location is the path to a driver update disk or ISO image. When you specify this option, the installation program attempts to load any driver updates found at the specified location. With manual driver updates, you can specify local storage devices, or a network location (HTTP, HTTPS or FTP server). You can use both inst.dd=location and inst.dd simultaneously. In this scenario, the installation program attempts to load any available driver updates from the location and also prompts you to select a device that contains the driver update.

Initialize the network using the ip= option when loading a driver update from a network location.

Limitations

On UEFI systems with the Secure Boot technology enabled, all drivers must be signed with a valid certificate. Red Hat drivers are signed by one of Red Hat’s private keys and authenticated by its corresponding public key in the kernel. If you load additional, separate drivers, verify that they are signed.

5.5.3. Preparing a driver update

This procedure describes how to prepare a driver update on a CD or DVD.

Prerequisites

- You have received the driver update ISO image from Red Hat, your hardware vendor, or a trusted third-party vendor.

- You have burned the driver update ISO image to a CD or DVD.

If only a single ISO image file ending in .iso is available on the CD or DVD, the burn process has not been successful. See your system’s burning software documentation for instructions on how to burn ISO images to a CD or DVD.

Procedure

- Insert the driver update CD or DVD into your system’s CD/DVD drive, and browse it using the system’s file manager tool.

- Verify that a single file rhdd3 and a directory named rpms are available. rhdd3 is a signature file that contains the driver description, and the rpms directory contains the RPM packages with the actual drivers for various architectures.
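If the disc is mounted on a Linux system, the same verification can be done from a terminal. The following is a minimal sketch: the function name check_driver_disc and the example mount point are hypothetical, so substitute the path where your system mounts the disc.

```shell
#!/bin/sh
# Hypothetical helper: check a mounted driver update disc for the expected layout.
check_driver_disc() {
    mnt="$1"   # mount point of the CD or DVD; the path is site-specific
    if [ -f "$mnt/rhdd3" ] && [ -d "$mnt/rpms" ]; then
        echo "driver update disc looks valid"
    else
        echo "rhdd3 or rpms is missing; the ISO image might have been burned as a single file" >&2
        return 1
    fi
}

# Example (hypothetical mount point): check_driver_disc /run/media/user/disc
```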

5.5.4. Performing an automatic driver update

This procedure describes how to perform an automatic driver update during installation.

Prerequisites

- You have placed the driver update image on a standard disk partition with an OEMDRV label or burnt the OEMDRV driver update image to a CD or DVD. Advanced storage, such as RAID or LVM volumes, may not be accessible during the driver update process.

- You have connected a block device with an OEMDRV volume label to your system, or inserted the prepared CD or DVD into your system’s CD/DVD drive before starting the installation process.

Procedure

- When you complete the prerequisite steps, the drivers load automatically when the installation program starts and are installed during the system’s installation process.

5.5.5. Performing an assisted driver update

This procedure describes how to perform an assisted driver update during installation.

Prerequisites

- You have connected a block device without an OEMDRV volume label to your system and copied the driver disk image to this device, or you have prepared a driver update CD or DVD and inserted it into your system’s CD or DVD drive before starting the installation process.

If you burn an ISO image file to a CD or DVD but it does not have the OEMDRV volume label, you can use the inst.dd option with no arguments. The installation program provides an option to scan and select drivers from the CD or DVD. In this scenario, the installation program does not prompt you to select a driver update ISO image. Another scenario is to use the CD or DVD with the inst.dd=location boot option; this allows the installation program to automatically scan the CD or DVD for driver updates. For more information, see Performing a manual driver update.

Procedure

- From the boot menu window, press the Tab key on your keyboard to display the boot command line.

- Append the inst.dd boot option to the command line and press Enter to execute the boot process.

- From the menu, select a local disk partition or a CD or DVD device. The installation program scans for ISO files, or driver update RPM packages.

- Optional: Select the driver update ISO file.

  This step is not required if the selected device or partition contains driver update RPM packages rather than an ISO image file, for example, an optical drive containing a driver update CD or DVD.

- Select the required drivers.

  - Use the number keys on your keyboard to toggle the driver selection.

  - Press c to install the selected driver. The selected driver is loaded and the installation process starts.

5.5.6. Performing a manual driver update

This procedure describes how to perform a manual driver update during installation.

Prerequisites

- You have placed the driver update ISO image file on a USB flash drive or a web server and connected it to your computer.

Procedure

- From the boot menu window, press the Tab key on your keyboard to display the boot command line.

- Append the inst.dd=location boot option to the command line, where location is a path to the driver update. Typically, the image file is located on a web server, for example, http://server.example.com/dd.iso, or on a USB flash drive, for example, /dev/sdb1. It is also possible to specify an RPM package containing the driver update, for example http://server.example.com/dd.rpm.

- Press Enter to execute the boot process. The drivers available at the specified location are automatically loaded and the installation process starts.

5.5.7. Disabling a driver

This procedure describes how to disable a malfunctioning driver.

Prerequisites

- You have booted the installation program boot menu.

Procedure

- From the boot menu, press the Tab key on your keyboard to display the boot command line.

Append the modprobe.blacklist=driver_name boot option to the command line. Replace driver_name with the name of the driver or drivers you want to disable, for example:

modprobe.blacklist=ahci

Drivers disabled using the modprobe.blacklist= boot option remain disabled on the installed system and appear in the /etc/modprobe.d/anaconda-blacklist.conf file.

- Press Enter to execute the boot process.
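The boot option from this procedure can also be assembled programmatically, for example when generating PXE or boot menu entries. The following is a minimal sketch, not part of the installation program: the helper name blacklist_option is hypothetical, and it assumes that several drivers are passed as one comma-separated list.

```python
import re

# Assumption: driver (kernel module) names use letters, digits, "_" and "-".
_MODULE_NAME = re.compile(r"[A-Za-z0-9_-]+")


def blacklist_option(drivers):
    """Return a modprobe.blacklist= boot option for the given driver names."""
    names = [d.strip() for d in drivers]
    if not names:
        raise ValueError("at least one driver name is required")
    for name in names:
        if not _MODULE_NAME.fullmatch(name):
            raise ValueError(f"invalid driver name: {name!r}")
    # Assumption: multiple drivers are joined with commas in a single option.
    return "modprobe.blacklist=" + ",".join(names)


if __name__ == "__main__":
    print(blacklist_option(["ahci"]))
```

Validating the names before joining them keeps stray spaces or separators from silently changing the boot command line.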

Chapter 6. Customizing the system in the installer

During the customization phase of the installation, you must perform certain configuration tasks to enable the installation of Red Hat Enterprise Linux. These tasks include:

- Configuring the storage and assigning mount points.

- Selecting a base environment with software to be installed.

- Setting a password for the root user or creating a local user.

Optionally, you can further customize the system, for example, by configuring system settings and connecting the host to a network.

6.1. Setting the installer language

You can select the language to be used by the installation program before starting the installation.

Prerequisites

- You have created installation media.

- You have specified an installation source if you are using the Boot ISO image file.

- You have booted the installation.

Procedure

- After you select the Install Red Hat Enterprise Linux option from the boot menu, the Welcome to Red Hat Enterprise Linux window appears.

From the left-hand pane of the Welcome to Red Hat Enterprise Linux window, select a language. Alternatively, search for the preferred language by using the text box.

Note: A language is pre-selected by default. If network access is configured, that is, if you booted from a network server instead of local media, the pre-selected language is determined by the automatic location detection feature of the GeoIP module. If you use the inst.lang= option on the boot command line or in your PXE server configuration, then the language that you define with the boot option is selected.

- From the right-hand pane of the Welcome to Red Hat Enterprise Linux window, select a location specific to your region.

- Click to proceed to the graphical installations window.

If you are installing a pre-release version of Red Hat Enterprise Linux, a warning message is displayed about the pre-release status of the installation media.

- To continue with the installation, click , or

- To quit the installation and reboot the system, click .

6.2. Configuring the storage devices

You can install Red Hat Enterprise Linux on a large variety of storage devices. You can configure basic, locally accessible, storage devices in the Installation Destination window. Basic storage devices directly connected to the local system, such as disks and solid-state drives, are displayed in the Local Standard Disks section of the window. On 64-bit IBM Z, this section contains activated Direct Access Storage Devices (DASDs).

A known issue prevents DASDs configured as HyperPAV aliases from being automatically attached to the system after the installation is complete. These storage devices are available during the installation, but are not immediately accessible after you finish installing and reboot. To attach HyperPAV alias devices, add them manually to the /etc/dasd.conf configuration file of the system.

6.2.1. Configuring installation destination

You can use the Installation Destination window to configure the storage options, for example, the disks that you want to use as the installation target for your Red Hat Enterprise Linux installation. You must select at least one disk.

Prerequisites

- The Installation Summary window is open.

- You have backed up your data if you plan to use a disk that already contains data, for example, if you want to shrink an existing Microsoft Windows partition and install Red Hat Enterprise Linux as a second system, or if you are upgrading a previous release of Red Hat Enterprise Linux. Manipulating partitions always carries a risk. For example, if the process is interrupted or fails for any reason, data on the disk can be lost.

Procedure

From the Installation Summary window, click Installation Destination. Perform the following operations in the Installation Destination window that opens:

From the Local Standard Disks section, select the storage device that you require; a white check mark indicates your selection. Disks without a white check mark are not used during the installation process; they are ignored if you choose automatic partitioning, and they are not available in manual partitioning.

The Local Standard Disks section shows all locally available storage devices, for example, SATA, IDE and SCSI disks, USB flash and external disks. Any storage devices connected after the installation program has started are not detected. If you use a removable drive to install Red Hat Enterprise Linux, your system is unusable if you remove the device.

Optional: Click the Refresh link in the lower right-hand side of the window if you want to configure additional local storage devices to connect new disks. The Rescan Disks dialog box opens.

Click and wait until the scanning process completes.

All storage changes that you make during the installation are lost when you click Rescan Disks.

- Click to return to the Installation Destination window. All detected disks including any new ones are displayed under the Local Standard Disks section.

Optional: Click to add a specialized storage device.

The Storage Device Selection window opens and lists all storage devices that the installation program has access to.

Optional: Under Storage Configuration, select the Automatic radio button for automatic partitioning.

You can also configure custom partitioning. For more details, see Configuring manual partitioning.

- Optional: Select I would like to make additional space available to reclaim space from an existing partitioning layout. Use this option, for example, if a disk you want to use already contains a different operating system and you want to make this system’s partitions smaller to allow more room for Red Hat Enterprise Linux.

Optional: Select Encrypt my data to encrypt all partitions except the ones needed to boot the system (such as /boot) using Linux Unified Key Setup (LUKS). Encrypting your disk adds an extra layer of security.

Click . The Disk Encryption Passphrase dialog box opens.

- Type your passphrase in the Passphrase and Confirm fields.

Click to complete disk encryption.

Warning: If you lose the LUKS passphrase, any encrypted partitions and their data are completely inaccessible. There is no way to recover a lost passphrase. However, if you perform a Kickstart installation, you can save encryption passphrases and create backup encryption passphrases during the installation. For more information, see the Automatically installing RHEL document.

Optional: Click the Full disk summary and bootloader link in the lower left-hand side of the window to select which storage device contains the boot loader. For more information, see Configuring boot loader.

In most cases, it is sufficient to leave the boot loader in the default location. Some configurations, for example, systems that require chain loading from another boot loader, require the boot drive to be specified manually.

- Click .

Optional: The Reclaim Disk Space dialog box appears if you selected automatic partitioning and the I would like to make additional space available option, or if there is not enough free space on the selected disks to install Red Hat Enterprise Linux. It lists all configured disk devices and all partitions on those devices. The dialog box displays information about the minimal disk space the system needs for an installation with the currently selected package set and how much space you have reclaimed. To start the reclaiming process:

- Review the displayed list of available storage devices. The Reclaimable Space column shows how much space can be reclaimed from each entry.

- Select a disk or partition to reclaim space.

- Use the button to use free space on a partition while preserving the existing data.

- Use the button to delete that partition or all partitions on a selected disk including existing data.

- Use the button to delete all existing partitions on all disks including existing data and make this space available to install Red Hat Enterprise Linux.

Click to apply the changes and return to graphical installations.

No disk changes are made until you click on the Installation Summary window. The Reclaim Space dialog only marks partitions for resizing or deletion; no action is performed.

6.2.2. Special cases during installation destination configuration

Following are some special cases to consider when you are configuring installation destinations:

- Some BIOS types do not support booting from a RAID card. In these instances, the /boot partition must be created on a partition outside of the RAID array, such as on a separate disk. It is necessary to use an internal disk for partition creation with problematic RAID cards. A /boot partition is also necessary for software RAID setups. If you choose to partition your system automatically, you should manually edit your /boot partition.

- To configure the Red Hat Enterprise Linux boot loader to chain load from a different boot loader, you must specify the boot drive manually by clicking the Full disk summary and bootloader link from the Installation Destination window.

- When you install Red Hat Enterprise Linux on a system with both multipath and non-multipath storage devices, the automatic partitioning layout in the installation program creates volume groups that contain a mix of multipath and non-multipath devices. This defeats the purpose of multipath storage. Select either multipath or non-multipath devices on the Installation Destination window. Alternatively, proceed to manual partitioning.

6.2.3. Configuring boot loader

Red Hat Enterprise Linux uses GRand Unified Bootloader version 2 (GRUB2) as the boot loader for AMD64 and Intel 64, IBM Power Systems, and ARM. For 64-bit IBM Z, the zipl boot loader is used.

The boot loader is the first program that runs when the system starts and is responsible for loading and transferring control to an operating system. GRUB2 can boot any compatible operating system (including Microsoft Windows) and can also use chain loading to transfer control to other boot loaders for unsupported operating systems.

Installing GRUB2 may overwrite your existing boot loader.

If an operating system is already installed, the Red Hat Enterprise Linux installation program attempts to automatically detect and configure the boot loader to start the other operating system. If the boot loader is not detected, you can manually configure any additional operating systems after you finish the installation.

If you are installing a Red Hat Enterprise Linux system with more than one disk, you might want to manually specify the disk where you want to install the boot loader.

Procedure

From the Installation Destination window, click the Full disk summary and bootloader link. The Selected Disks dialog box opens.

The boot loader is installed on the device of your choice. On a UEFI system, the EFI system partition is created on the target device during guided partitioning.

- To change the boot device, select a device from the list and click . You can set only one device as the boot device.

- To disable a new boot loader installation, select the device currently marked for boot and click . This ensures GRUB2 is not installed on any device.

If you choose not to install a boot loader, you cannot boot the system directly and you must use another boot method, such as a standalone commercial boot loader application. Use this option only if you have another way to boot your system.

The boot loader may also require a special partition to be created, depending on if your system uses BIOS or UEFI firmware, or if the boot drive has a GUID Partition Table (GPT) or a Master Boot Record (MBR, also known as msdos) label. If you use automatic partitioning, the installation program creates the partition.

6.2.4. Storage device selection

The storage device selection window lists all storage devices that the installation program can access. Depending on your system and available hardware, some tabs might not be displayed. The devices are grouped under the following tabs:

- Multipath Devices

- Storage devices accessible through more than one path, such as through multiple SCSI controllers or Fiber Channel ports on the same system. The installation program only detects multipath storage devices with serial numbers that are 16 or 32 characters long.

- Other SAN Devices

- Devices available on a Storage Area Network (SAN).

- Firmware RAID

- Storage devices attached to a firmware RAID controller.

- NVDIMM Devices

- Under specific circumstances, Red Hat Enterprise Linux 8 can boot and run from Non-Volatile Dual In-line Memory Module (NVDIMM) devices in sector mode on the Intel 64 and AMD64 architectures.

- IBM Z Devices

- Storage devices, or Logical Units (LUNs), DASD, attached through the zSeries Linux FCP (Fiber Channel Protocol) driver.

6.2.5. Filtering storage devices

In the storage device selection window you can filter storage devices either by their World Wide Identifier (WWID) or by the port, target, or logical unit number (LUN).

Prerequisites

- The Installation Summary window is open.

Procedure

- From the Installation Summary window, click Installation Destination. The Installation Destination window opens, listing all available drives.

- Under the Specialized & Network Disks section, click . The storage devices selection window opens.

Click the Search by tab to search by port, target, LUN, or WWID.

Searching by WWID or LUN requires additional values in the corresponding input text fields.

- Select the option that you require from the Search drop-down menu.

- Click to start the search. Each device is presented on a separate row with a corresponding check box.

Select the check box to enable the device that you require during the installation process.

Later in the installation process you can choose to install Red Hat Enterprise Linux on any of the selected devices, and you can choose to mount any of the other selected devices as part of the installed system automatically. Selected devices are not automatically erased by the installation process and selecting a device does not put the data stored on the device at risk.

Note: You can add devices to the system after installation by modifying the /etc/fstab file.

- Click to return to the Installation Destination window.

Any storage devices that you do not select are hidden from the installation program entirely. To chain load the boot loader from a different boot loader, select all the devices present.

6.2.6. Using advanced storage options

To use an advanced storage device, you can configure an iSCSI (SCSI over TCP/IP) target or FCoE (Fibre Channel over Ethernet) SAN (Storage Area Network).

To use iSCSI storage devices for the installation, the installation program must be able to discover them as iSCSI targets and be able to create an iSCSI session to access them. Each of these steps might require a user name and password for Challenge Handshake Authentication Protocol (CHAP) authentication. Additionally, you can configure an iSCSI target to authenticate the iSCSI initiator on the system to which the target is attached (reverse CHAP), both for discovery and for the session. Used together, CHAP and reverse CHAP are called mutual CHAP or two-way CHAP. Mutual CHAP provides the greatest level of security for iSCSI connections, particularly if the user name and password are different for CHAP authentication and reverse CHAP authentication.

Repeat the iSCSI discovery and iSCSI login steps to add all required iSCSI storage. You cannot change the name of the iSCSI initiator after you attempt discovery for the first time. To change the iSCSI initiator name, you must restart the installation.

6.2.6.1. Discovering and starting an iSCSI session

The Red Hat Enterprise Linux installer can discover and log in to iSCSI disks in two ways:

- iSCSI Boot Firmware Table (iBFT)

- When the installer starts, it checks if the BIOS or add-on boot ROMs of the system support iBFT. It is a BIOS extension for systems that can boot from iSCSI. If the BIOS supports iBFT, the installer reads the iSCSI target information for the configured boot disk from the BIOS and logs in to this target, making it available as an installation target. To automatically connect to an iSCSI target, activate a network device for accessing the target. To do so, use the ip=ibft boot option. For more information, see Network boot options.

- Discover and add iSCSI targets manually

- You can discover and start an iSCSI session to identify available iSCSI targets (network storage devices) in the installer’s graphical user interface.

Prerequisites

- The Installation Summary window is open.

Procedure

- From the Installation Summary window, click Installation Destination. The Installation Destination window opens, listing all available drives.

- Under the Specialized & Network Disks section, click . The storage devices selection window opens.

Click . The Add iSCSI Storage Target window opens.

Important: You cannot place the /boot partition on iSCSI targets that you have manually added using this method. An iSCSI target containing a /boot partition must be configured for use with iBFT. However, in instances where the installed system is expected to boot from iSCSI with iBFT configuration provided by a method other than firmware iBFT, for example using iPXE, you can remove the /boot partition restriction using the inst.nonibftiscsiboot installer boot option.

- Enter the IP address of the iSCSI target in the Target IP Address field.

Type a name in the iSCSI Initiator Name field for the iSCSI initiator in iSCSI qualified name (IQN) format. A valid IQN entry contains the following information:

- The string iqn. (note the period).

- A date code that specifies the year and month in which your organization’s Internet domain or subdomain name was registered, represented as four digits for the year, a dash, and two digits for the month, followed by a period. For example, represent September 2010 as 2010-09.

- Your organization’s Internet domain or subdomain name, presented in reverse order with the top-level domain first. For example, represent the subdomain storage.example.com as com.example.storage.

- A colon followed by a string that uniquely identifies this particular iSCSI initiator within your domain or subdomain. For example, :diskarrays-sn-a8675309.

A complete IQN is as follows: iqn.2010-09.com.example.storage:diskarrays-sn-a8675309. The installation program prepopulates the iSCSI Initiator Name field with a name in this format to help you with the structure. For more information about IQNs, see 3.2.6. iSCSI Names in RFC 3720 - Internet Small Computer Systems Interface (iSCSI) available from tools.ietf.org and 1. iSCSI Names and Addresses in RFC 3721 - Internet Small Computer Systems Interface (iSCSI) Naming and Discovery available from tools.ietf.org.

Select the Discovery Authentication Type drop-down menu to specify the type of authentication to use for iSCSI discovery. The following options are available:

- No credentials

- CHAP pair

- CHAP pair and a reverse pair

Do one of the following:

- If you selected CHAP pair as the authentication type, enter the user name and password for the iSCSI target in the CHAP Username and CHAP Password fields.

- If you selected CHAP pair and a reverse pair as the authentication type, enter the user name and password for the iSCSI target in the CHAP Username and CHAP Password fields, and the user name and password for the iSCSI initiator in the Reverse CHAP Username and Reverse CHAP Password fields.

- Optional: Select the Bind targets to network interfaces check box.

Click .

The installation program attempts to discover an iSCSI target based on the information provided. If discovery succeeds, the Add iSCSI Storage Target window displays a list of all iSCSI nodes discovered on the target.

Select the check boxes for the node that you want to use for installation.

The Node login authentication type menu contains the same options as the Discovery Authentication Type menu. However, if you need credentials for discovery authentication, use the same credentials to log in to a discovered node.

- Click the additional Use the credentials from discovery drop-down menu. When you provide the proper credentials, the button becomes available.

- Click to initiate an iSCSI session.

While the installer uses iscsiadm to find and log in to iSCSI targets, iscsiadm automatically stores any information about these targets in the iscsiadm iSCSI database. The installer then copies this database to the installed system and marks any iSCSI targets that are not used for the root partition, so that the system automatically logs in to them when it starts. If the root partition is placed on an iSCSI target, initrd logs in to this target and the installer does not include this target in startup scripts, to avoid multiple attempts to log in to the same target.
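The IQN structure described in the procedure above (the iqn. prefix, a date code, the reversed domain name, and a colon-separated unique string) can be sketched as a small helper. This is an illustrative sketch only: the function name build_iqn and its validation rules are assumptions and are not taken from the installation program.

```python
import re

# Assumption: each domain label uses lower-case letters, digits, and hyphens.
_LABEL = re.compile(r"[a-z0-9]([a-z0-9-]*[a-z0-9])?")


def build_iqn(year, month, domain, unique_id):
    """Compose an IQN from a registration date, a domain, and a unique string."""
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    labels = domain.lower().split(".")
    if len(labels) < 2 or not all(_LABEL.fullmatch(label) for label in labels):
        raise ValueError(f"invalid domain name: {domain!r}")
    if not unique_id:
        raise ValueError("a unique identifier is required")
    # Reverse the domain so that the top-level domain comes first.
    reversed_domain = ".".join(reversed(labels))
    return f"iqn.{year:04d}-{month:02d}.{reversed_domain}:{unique_id}"


if __name__ == "__main__":
    print(build_iqn(2010, 9, "storage.example.com", "diskarrays-sn-a8675309"))
```

Building the name from its parts makes the two easy mistakes, an unpadded month and a domain left in its normal order, hard to make by accident.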

6.2.6.2. Configuring FCoE parameters

You can discover the FCoE (Fibre Channel over Ethernet) devices from the Installation Destination window by configuring the FCoE parameters accordingly.

Prerequisites

- The Installation Summary window is open.

Procedure

- From the Installation Summary window, click Installation Destination. The Installation Destination window opens, listing all available drives.

- Under the Specialized & Network Disks section, click . The storage devices selection window opens.

- Click . A dialog box opens for you to configure network interfaces for discovering FCoE storage devices.

- Select a network interface that is connected to an FCoE switch in the NIC drop-down menu.

- Click to scan the network for SAN devices.

Select the required check boxes:

- Use DCB: Data Center Bridging (DCB) is a set of enhancements to the Ethernet protocols designed to increase the efficiency of Ethernet connections in storage networks and clusters. Select the check box to enable or disable the installation program’s awareness of DCB. Enable this option only for network interfaces that require a host-based DCBX client. For configurations on interfaces that use a hardware DCBX client, disable the check box.

- Use auto vlan: Auto VLAN is enabled by default and indicates whether VLAN discovery should be performed. If this check box is enabled, then the FIP (FCoE Initiation Protocol) VLAN discovery protocol runs on the Ethernet interface when the link configuration has been validated. If they are not already configured, network interfaces for any discovered FCoE VLANs are automatically created and FCoE instances are created on the VLAN interfaces.

- Discovered FCoE devices are displayed under the Other SAN Devices tab in the Installation Destination window.

6.2.6.3. Configuring DASD storage devices

You can discover and configure the DASD storage devices from the Installation Destination window.

Prerequisites

- The Installation Summary window is open.

Procedure

- From the Installation Summary window, click Installation Destination. The Installation Destination window opens, listing all available drives.

- Under the Specialized & Network Disks section, click . The storage devices selection window opens.

- Click . The Add DASD Storage Target dialog box opens and prompts you to specify a device number, such as 0.0.0204, and attach additional DASDs that were not detected when the installation started.

- Type the device number of the DASD that you want to attach in the Device number field.

Click .

If a DASD with the specified device number is found and if it is not already attached, the dialog box closes and the newly-discovered drives appear in the list of drives. You can then select the check boxes for the required devices and click . The new DASDs are available for selection, marked as DASD device 0.0.xxxx in the Local Standard Disks section of the Installation Destination window.

If you entered an invalid device number, or if the DASD with the specified device number is already attached to the system, an error message appears in the dialog box, explaining the error and prompting you to try again with a different device number.

6.2.6.4. Configuring FCP devices

FCP devices enable 64-bit IBM Z to use SCSI devices rather than, or in addition to, Direct Access Storage Device (DASD) devices. FCP devices provide a switched fabric topology that enables 64-bit IBM Z systems to use SCSI LUNs as disk devices in addition to traditional DASD devices.

Prerequisites

- The Installation Summary window is open.

- For an FCP-only installation, you have removed the DASD= option from the CMS configuration file or the rd.dasd= option from the parameter file to indicate that no DASD is present.

Procedure

- From the Installation Summary window, click Installation Destination. The Installation Destination window opens, listing all available drives.

- Under the Specialized & Network Disks section, click . The storage devices selection window opens.

Click . The Add zFCP Storage Target dialog box opens, allowing you to add an FCP (Fibre Channel Protocol) storage device.

64-bit IBM Z requires that you enter any FCP device manually so that the installation program can activate FCP LUNs. You can enter FCP devices either in the graphical installation, or as a unique parameter entry in the parameter or CMS configuration file. The values that you enter must be unique to each site that you configure.

- Type the 4 digit hexadecimal device number in the Device number field.

When installing RHEL 8.6 or older releases, or if the zFCP device is not configured in NPIV mode, or when auto LUN scanning is disabled by the zfcp.allow_lun_scan=0 kernel module parameter, provide the following values:

- Type the 16 digit hexadecimal World Wide Port Number (WWPN) in the WWPN field.

- Type the 16 digit hexadecimal FCP LUN identifier in the LUN field.

- Click to connect to the FCP device.

The newly-added devices are displayed in the IBM Z tab of the Installation Destination window.

Use only lower-case letters in hex values. If you enter an incorrect value and click , the installation program displays a warning. You can edit the configuration information and retry the discovery attempt. For more information about these values, consult the hardware documentation and check with your system administrator.
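The value formats described in this procedure (a 4 digit device number, a 16 digit WWPN, a 16 digit FCP LUN, and lower-case hexadecimal letters) can be checked before you type them into the dialog box. The following is a rough sketch under stated assumptions: the function name check_fcp_values is hypothetical, and accepting an optional 0x prefix is an assumption rather than documented installer behavior.

```python
import re

# Assumption: an optional "0x" prefix is tolerated; hex letters are lower case.
_HEX4 = re.compile(r"(0x)?[0-9a-f]{4}")
_HEX16 = re.compile(r"(0x)?[0-9a-f]{16}")


def check_fcp_values(device_number, wwpn=None, lun=None):
    """Return a list of problems; an empty list means all values look valid."""
    problems = []
    if not _HEX4.fullmatch(device_number):
        problems.append("device number must be 4 lower-case hex digits")
    # WWPN and LUN are only needed in the cases listed in the procedure.
    if wwpn is not None and not _HEX16.fullmatch(wwpn):
        problems.append("WWPN must be 16 lower-case hex digits")
    if lun is not None and not _HEX16.fullmatch(lun):
        problems.append("LUN must be 16 lower-case hex digits")
    return problems


if __name__ == "__main__":
    # Example values are made up for illustration.
    print(check_fcp_values("fc00", "500507630300c562", "401040a000000000"))
```

Returning a list of problems instead of raising on the first one lets you report every malformed field at once.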

6.2.7. Installing to an NVDIMM device

Non-Volatile Dual In-line Memory Module (NVDIMM) devices combine the performance of RAM with disk-like data persistence when no power is supplied. Under specific circumstances, Red Hat Enterprise Linux 8 can boot and run from NVDIMM devices.

6.2.7.1. Criteria for using an NVDIMM device as an installation target

You can install Red Hat Enterprise Linux 8 to Non-Volatile Dual In-line Memory Module (NVDIMM) devices in sector mode on the Intel 64 and AMD64 architectures, supported by the nd_pmem driver.

Conditions for using an NVDIMM device as storage

To use an NVDIMM device as storage, the following conditions must be satisfied:

- The architecture of the system is Intel 64 or AMD64.

- The NVDIMM device is configured to sector mode. The installation program can reconfigure NVDIMM devices to this mode.

- The NVDIMM device must be supported by the nd_pmem driver.

Conditions for booting from an NVDIMM Device

Booting from an NVDIMM device is possible under the following conditions:

- All conditions for using the NVDIMM device as storage are satisfied.

- The system uses UEFI.

- The NVDIMM device must be supported by firmware available on the system, or by an UEFI driver. The UEFI driver may be loaded from an option ROM of the device itself.

- The NVDIMM device must be made available under a namespace.

To utilize the high performance of NVDIMM devices during booting, place the /boot and /boot/efi directories on the device. The Execute-in-place (XIP) feature of NVDIMM devices is not supported during booting and the kernel is loaded into conventional memory.

6.2.7.2. Configuring an NVDIMM device using the graphical installation mode

A Non-Volatile Dual In-line Memory Module (NVDIMM) device must be properly configured for use by Red Hat Enterprise Linux 8 using the graphical installation.

Reconfiguring an NVDIMM device destroys any data stored on the device.

Prerequisites

- An NVDIMM device is present on the system and satisfies all the other conditions for usage as an installation target.

- The installation has booted and the Installation Summary window is open.

Procedure

- From the Installation Summary window, click Installation Destination. The Installation Destination window opens, listing all available drives.

- Under the Specialized & Network Disks section, click . The storage devices selection window opens.

- Click the NVDIMM Devices tab.

To reconfigure a device, select it from the list.

If a device is not listed, it is not in sector mode.

- Click . A reconfiguration dialog opens.

Enter the sector size that you require and click .

The supported sector sizes are 512 and 4096 bytes.

- When reconfiguration completes click .

- Select the device check box.

Click to return to the Installation Destination window.

The NVDIMM device that you reconfigured is displayed in the Specialized & Network Disks section.

- Click to return to the Installation Summary window.

The NVDIMM device is now available for you to select as an installation target. Additionally, if the device meets the requirements for booting, you can set the device as a boot device.

6.3. Configuring the root user and creating local accounts

6.3.1. Configuring a root password

You must configure a root password to finish the installation process and to log in to the administrator (also known as superuser or root) account that is used for system administration tasks. These tasks include installing and updating software packages and changing system-wide configuration such as network and firewall settings, storage options, and adding or modifying users, groups and file permissions.

To gain root privileges to the installed systems, you can either use a root account or create a user account with administrative privileges (member of the wheel group). The root account is always created during the installation. Switch to the administrator account only when you need to perform a task that requires administrator access.

The root account has complete control over the system. If unauthorized personnel gain access to the account, they can access or delete users' personal files.

Procedure

- From the Installation Summary window, select User Settings > Root Password. The Root Password window opens.

Type your password in the Root Password field.

The requirements for creating a strong root password are:

- Must be at least eight characters long

- May contain numbers, letters (upper and lower case) and symbols

- Is case-sensitive

- Type the same password in the Confirm field.

Click to confirm your root password and return to the Installation Summary window.

If you proceed with a weak password, you must click twice.

6.3.2. Creating a user account

Create a user account to finish the installation. If you do not create a user account, you must log in to the system as root directly, which is not recommended.

Procedure

- On the Installation Summary window, select User Settings > User Creation. The Create User window opens.

- Type the user account name into the Full name field, for example: John Smith.

- Type the username into the User name field, for example: jsmith.

The User name is used to log in from a command line; if you install a graphical environment, then your graphical login manager uses the Full name.

- Select the Make this user administrator check box if the user requires administrative rights (the installation program adds the user to the wheel group).

An administrator user can use the sudo command to perform tasks that are only available to root using the user password, instead of the root password. This may be more convenient, but it can also cause a security risk.

- Select the Require a password to use this account check box.

If you give administrator privileges to a user, ensure the account is password protected. Never give a user administrator privileges without assigning a password to the account.

- Type a password into the Password field.

- Type the same password into the Confirm password field.

- Click Done to apply the changes and return to the Installation Summary window.

6.3.3. Editing advanced user settings

This procedure describes how to edit the default settings for the user account in the Advanced User Configuration dialog box.

Procedure

- On the Create User window, click Advanced.

- Edit the details in the Home directory field, if required. The field is populated by default with /home/username.

- In the User and Groups IDs section you can:

Select the Specify a user ID manually check box and use + or - to enter the required value.

The default value is 1000. User IDs (UIDs) 0-999 are reserved by the system so they cannot be assigned to a user.

Select the Specify a group ID manually check box and use + or - to enter the required value.

The default group name is the same as the user name, and the default Group ID (GID) is 1000. GIDs 0-999 are reserved by the system so they cannot be assigned to a user group.

Specify additional groups as a comma-separated list in the Group Membership field. Groups that do not already exist are created; you can specify custom GIDs for additional groups in parentheses. If you do not specify a custom GID for a new group, the new group receives a GID automatically.

The user account created always has one default group membership (the user’s default group with an ID set in the Specify a group ID manually field).

- Click Save Changes to apply the updates and return to the Create User window.
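The ID rules and the Group Membership format described above can be illustrated with a short Python sketch. It is not part of the installation program. The function names are hypothetical, and the exact input syntax (a group name optionally followed by a GID in parentheses, for example `developers (1500)`) is an assumption based on the description in this procedure.

```python
import re

# IDs 0-999 are reserved by the system, as stated in the procedure above.
RESERVED_MAX_ID = 999

# Assumed entry syntax: a group name, optionally followed by "(GID)".
_GROUP_ENTRY = re.compile(
    r"^\s*([A-Za-z_][A-Za-z0-9_-]*)\s*(?:\(\s*(\d+)\s*\))?\s*$"
)


def is_assignable_id(value):
    """Return True if a UID or GID lies outside the reserved 0-999 range."""
    return value > RESERVED_MAX_ID


def parse_group_membership(text):
    """Split a comma-separated group list into (name, gid) pairs.

    gid is None when no custom GID was given in parentheses, in which
    case the new group would receive a GID automatically.
    """
    groups = []
    for item in text.split(","):
        if not item.strip():
            continue  # ignore empty entries such as a trailing comma
        match = _GROUP_ENTRY.match(item)
        if match is None:
            raise ValueError(f"cannot parse group entry: {item!r}")
        name, gid = match.group(1), match.group(2)
        groups.append((name, int(gid) if gid is not None else None))
    return groups


if __name__ == "__main__":
    print(parse_group_membership("wheel, developers (1500)"))
    print(is_assignable_id(1000), is_assignable_id(999))
```

The sketch only parses and classifies values; it does not check whether a group already exists on the system.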

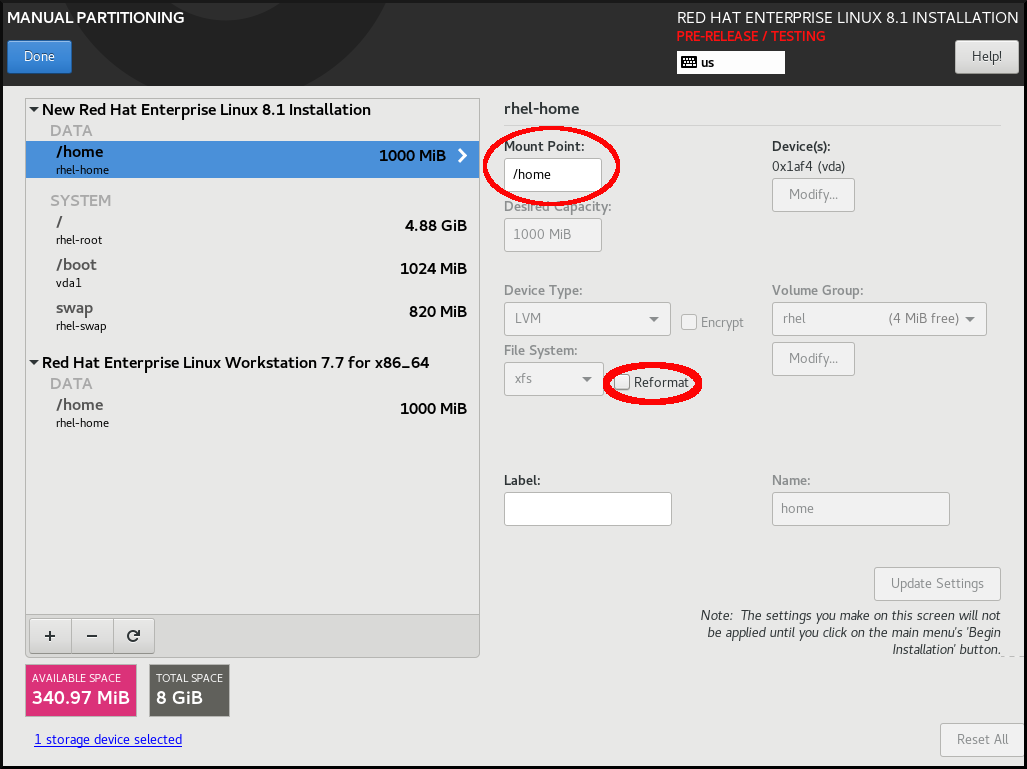

6.4. Configuring manual partitioning

You can use manual partitioning to configure your disk partitions and mount points and define the file system that Red Hat Enterprise Linux is installed on. Before installation, you should consider whether you want to use partitioned or unpartitioned disk devices. For more information about the advantages and disadvantages to using partitioning on LUNs, either directly or with LVM, see the Red Hat Knowledgebase solution advantages and disadvantages to using partitioning on LUNs.

You have different partitioning and storage options available, including Standard Partitions, LVM, and LVM thin provisioning. These options provide various benefits and configurations for managing your system’s storage effectively.

- Standard partition

-

A standard partition contains a file system or swap space. Standard partitions are most commonly used for

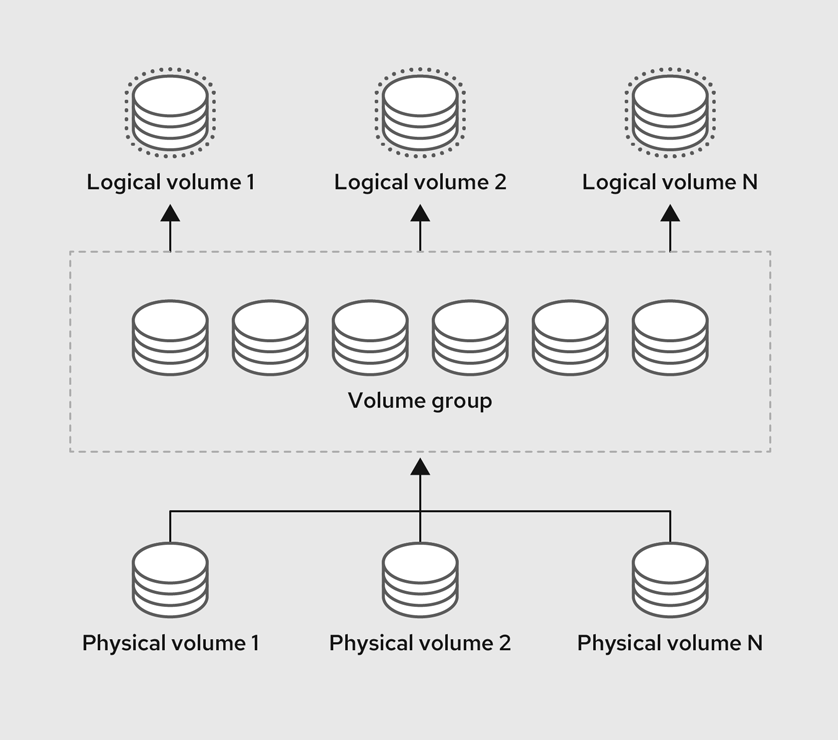

/bootand theBIOS BootandEFI System partitions. You can use the LVM logical volumes in most other uses. - LVM

-

Choosing

LVM(or Logical Volume Management) as the device type creates an LVM logical volume. LVM improves performance when using physical disks, and it allows for advanced setups such as using multiple physical disks for one mount point, and setting up software RAID for increased performance, reliability, or both. - LVM thin provisioning

- Using thin provisioning, you can manage a storage pool of free space, known as a thin pool, which can be allocated to an arbitrary number of devices when needed by applications. You can dynamically expand the pool when needed for cost-effective allocation of storage space.

An installation of Red Hat Enterprise Linux requires a minimum of one partition but uses at least the following partitions or volumes: /, /home, /boot, and swap. You can also create additional partitions and volumes as you require.

To prevent data loss it is recommended that you back up your data before proceeding. If you are upgrading or creating a dual-boot system, you should back up any data you want to keep on your storage devices.

6.4.1. Recommended partitioning scheme

Create separate file systems at the following mount points. However, if required, you can also create the file systems at /usr, /var, and /tmp mount points.

- /boot

- / (root)

- /home

- swap

- /boot/efi

- PReP

This partition scheme is recommended for bare metal deployments and it does not apply to virtual and cloud deployments.

/boot partition - recommended size at least 1 GiB

The partition mounted on /boot contains the operating system kernel, which allows your system to boot Red Hat Enterprise Linux 8, along with files used during the bootstrap process. Due to the limitations of most firmware, create a small partition to hold these. In most scenarios, a 1 GiB boot partition is adequate. Unlike other mount points, using an LVM volume for /boot is not possible; /boot must be located on a separate disk partition.

If you have a RAID card, be aware that some BIOS types do not support booting from the RAID card. In such a case, the /boot partition must be created on a partition outside of the RAID array, such as on a separate disk.

- Normally, the /boot partition is created automatically by the installation program. However, if the / (root) partition is larger than 2 TiB and (U)EFI is used for booting, you need to create a separate /boot partition that is smaller than 2 TiB to boot the machine successfully.

- Ensure the /boot partition is located within the first 2 TB of the disk during manual partitioning. Placing the /boot partition beyond the 2 TB boundary might result in a successful installation, but the system fails to boot because BIOS cannot read the /boot partition beyond this limit.
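The two /boot placement notes above can be expressed as simple checks. The following Python sketch is illustrative only and is not part of the installation program. The function names are hypothetical, and interpreting "2 TB" as the decimal value 2 × 10^12 bytes is an assumption chosen because it is the more conservative reading.

```python
TIB = 1024 ** 4   # binary tebibyte
TB = 10 ** 12     # decimal terabyte (assumed meaning of "2 TB" in the note)


def needs_separate_boot(root_size_bytes, uses_uefi):
    """A separate /boot is needed when / exceeds 2 TiB and (U)EFI boots the system."""
    return uses_uefi and root_size_bytes > 2 * TIB


def boot_within_first_2tb(start_bytes, size_bytes, limit_bytes=2 * TB):
    """Return True if the whole /boot partition ends before the 2 TB boundary."""
    return start_bytes >= 0 and start_bytes + size_bytes <= limit_bytes


if __name__ == "__main__":
    # A 3 TiB root file system on a UEFI machine needs its own /boot.
    print(needs_separate_boot(3 * TIB, uses_uefi=True))
    # A 1 GiB /boot starting at 1 MiB is well inside the limit.
    print(boot_within_first_2tb(1024 ** 2, 1024 ** 3))
```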

root - recommended size of 10 GiB

This is where "/", or the root directory, is located. The root directory is the top-level of the directory structure. By default, all files are written to this file system unless a different file system is mounted in the path being written to, for example, /boot or /home.

While a 5 GiB root file system allows you to install a minimal installation, it is recommended to allocate at least 10 GiB so that you can install as many package groups as you want.

Do not confuse the / directory with the /root directory. The /root directory is the home directory of the root user. The /root directory is sometimes referred to as slash root to distinguish it from the root directory.

/home - recommended size at least 1 GiB

To store user data separately from system data, create a dedicated file system for the /home directory. Base the file system size on the amount of data that is stored locally, number of users, and so on. You can upgrade or reinstall Red Hat Enterprise Linux 8 without erasing user data files. If you select automatic partitioning, it is recommended to have at least 55 GiB of disk space available for the installation, to ensure that the /home file system is created.

swap partition - recommended size at least 1 GiB

Swap file systems support virtual memory; data is written to a swap file system when there is not enough RAM to store the data your system is processing. Swap size is a function of system memory workload, not total system memory and therefore is not equal to the total system memory size. It is important to analyze what applications a system will be running and the load those applications will serve in order to determine the system memory workload. Application providers and developers can provide guidance.

When the system runs out of swap space, the kernel terminates processes as the system RAM memory is exhausted. Configuring too much swap space results in storage devices being allocated but idle and is a poor use of resources. Too much swap space can also hide memory leaks. The maximum size for a swap partition and other additional information can be found in the mkswap(8) manual page.

The following table provides the recommended size of a swap partition depending on the amount of RAM in your system and if you want sufficient memory for your system to hibernate. If you let the installation program partition your system automatically, the swap partition size is established using these guidelines. Automatic partitioning setup assumes hibernation is not in use. The maximum size of the swap partition is limited to 10 percent of the total size of the disk, and the installation program cannot create swap partitions more than 1 TiB. To set up enough swap space to allow for hibernation, or if you want to set the swap partition size to more than 10 percent of the system’s storage space, or more than 1 TiB, you must edit the partitioning layout manually.

| Amount of RAM in the system | Recommended swap space | Recommended swap space if allowing for hibernation |
|---|---|---|
| Less than 2 GiB | 2 times the amount of RAM | 3 times the amount of RAM |
| 2 GiB - 8 GiB | Equal to the amount of RAM | 2 times the amount of RAM |
| 8 GiB - 64 GiB | 4 GiB to 0.5 times the amount of RAM | 1.5 times the amount of RAM |
| More than 64 GiB | Workload dependent (at least 4 GiB) | Hibernation not recommended |
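The table above, together with the limits on automatic partitioning, can be read as a small lookup. The following Python sketch is one possible encoding and is not how the installation program is implemented. The function names are hypothetical, and assigning the border values (exactly 2 GiB, 8 GiB, and 64 GiB) to the lower row is an arbitrary assumption.

```python
def recommended_swap_gib(ram_gib, hibernation=False):
    """Return the recommended swap size as a (low, high) range in GiB.

    high is None when the table gives no upper bound. The function
    returns None where the table does not recommend hibernation.
    """
    if ram_gib < 2:
        size = (3 if hibernation else 2) * ram_gib
        return (size, size)
    if ram_gib <= 8:
        size = (2 if hibernation else 1) * ram_gib
        return (size, size)
    if ram_gib <= 64:
        if hibernation:
            return (1.5 * ram_gib, 1.5 * ram_gib)
        return (4, 0.5 * ram_gib)
    if hibernation:
        return None  # hibernation not recommended above 64 GiB of RAM
    return (4, None)  # workload dependent, at least 4 GiB


def automatic_swap_cap_gib(disk_gib):
    """Upper limit for swap under automatic partitioning.

    The limit is 10 percent of the disk size, and never more than 1 TiB.
    """
    return min(disk_gib / 10, 1024)


if __name__ == "__main__":
    print(recommended_swap_gib(16))                    # range for 16 GiB RAM
    print(recommended_swap_gib(16, hibernation=True))  # with hibernation
    print(automatic_swap_cap_gib(500))                 # cap on a 500 GiB disk
```

Returning a range rather than a single number keeps the "4 GiB to 0.5 times the amount of RAM" row intact and leaves the final choice to the administrator.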

/boot/efi partition - recommended size of 200 MiB

UEFI-based AMD64, Intel 64, and 64-bit ARM require a 200 MiB EFI system partition. The recommended minimum size is 200 MiB, the default size is 600 MiB, and the maximum size is 600 MiB. BIOS systems do not require an EFI system partition.

At the border between each range, for example, a system with 2 GiB, 8 GiB, or 64 GiB of system RAM, discretion can be exercised with regard to chosen swap space and hibernation support. If your system resources allow for it, increasing the swap space can lead to better performance.

Distributing swap space over multiple storage devices - particularly on systems with fast drives, controllers and interfaces - also improves swap space performance.

Many systems have more partitions and volumes than the minimum required. Choose partitions based on your particular system needs. If you are unsure about configuring partitions, accept the automatic default partition layout provided by the installation program.

Only assign storage capacity to those partitions you require immediately. You can allocate free space at any time, to meet needs as they occur.

PReP boot partition - recommended size of 4 to 8 MiB

When installing Red Hat Enterprise Linux on IBM Power System servers, the first partition of the disk should include a PReP boot partition. This contains the GRUB boot loader, which allows other IBM Power Systems servers to boot Red Hat Enterprise Linux.
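The size recommendations in this section can be collected into one table and checked against a planned layout. The following Python sketch is a hypothetical helper, not a Red Hat tool. The dictionary and function names are assumptions, and the 10 GiB root size is treated as a minimum, following the "at least 10 GiB" wording above.

```python
GIB = 1024  # MiB per GiB; all sizes below are in MiB

# (minimum, maximum) per mount point; None means no upper bound is stated.
RECOMMENDED_MIB = {
    "/boot": (1 * GIB, None),
    "/": (10 * GIB, None),
    "/home": (1 * GIB, None),
    "swap": (1 * GIB, None),
    "/boot/efi": (200, 600),
    "PReP": (4, 8),
}


def check_layout(layout_mib):
    """Return a list of messages for sizes outside the recommended ranges.

    layout_mib maps a mount point (or "swap" / "PReP") to a size in MiB.
    Entries without a recommendation in this section are ignored.
    """
    problems = []
    for mount, size in layout_mib.items():
        if mount not in RECOMMENDED_MIB:
            continue
        low, high = RECOMMENDED_MIB[mount]
        if size < low:
            problems.append(f"{mount}: {size} MiB is below the recommended {low} MiB")
        elif high is not None and size > high:
            problems.append(f"{mount}: {size} MiB is above the maximum {high} MiB")
    return problems


if __name__ == "__main__":
    plan = {"/boot": 512, "/": 20 * GIB, "/home": 50 * GIB,
            "swap": 4 * GIB, "/boot/efi": 600}
    for message in check_layout(plan):
        print(message)
```

The check reports deviations only. It does not decide which partitions a given architecture needs, for example /boot/efi on UEFI systems or PReP on IBM Power Systems servers.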

6.4.2. Supported hardware storage

It is important to understand how storage technologies are configured and how support for them may have changed between major versions of Red Hat Enterprise Linux.

Hardware RAID

Any RAID functions provided by the mainboard of your computer, or attached controller cards, need to be configured before you begin the installation process. Each active RAID array appears as one drive within Red Hat Enterprise Linux.

Software RAID

On systems with more than one disk, you can use the Red Hat Enterprise Linux installation program to operate several of the drives as a Linux software RAID array. With a software RAID array, RAID functions are controlled by the operating system rather than the dedicated hardware.

When a pre-existing RAID array’s member devices are all unpartitioned disks/drives, the installation program treats the array as a disk and there is no method to remove the array.

USB Disks

You can connect and configure external USB storage after installation. Most devices are recognized by the kernel, but some devices may not be recognized. If it is not a requirement to configure these disks during installation, disconnect them to avoid potential problems.

NVDIMM devices

To use a Non-Volatile Dual In-line Memory Module (NVDIMM) device as storage, the following conditions must be satisfied:

- The version of Red Hat Enterprise Linux is 7.6 or later.

- The architecture of the system is Intel 64 or AMD64.

- The device is configured to sector mode. Anaconda can reconfigure NVDIMM devices to this mode.