This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 4. Setting up a Router

4.1. Router Overview

4.1.1. About Routers

There are many ways to get traffic into the cluster. The most common approach is to use the OpenShift Container Platform router as the ingress point for external traffic destined for services in your OpenShift Container Platform installation.

OpenShift Container Platform provides and supports the following router plug-ins:

- The HAProxy template router is the default plug-in. It uses the openshift3/ose-haproxy-router image to run an HAProxy instance alongside the template router plug-in inside a container on OpenShift Container Platform. It currently supports HTTP(S) traffic and TLS-enabled traffic via SNI. The router’s container listens on the host network interface, unlike most containers that listen only on private IPs. The router proxies external requests for route names to the IPs of actual pods identified by the service associated with the route.

- The F5 router integrates with an existing F5 BIG-IP® system in your environment to synchronize routes. F5 BIG-IP® version 11.4 or newer is required in order to have the F5 iControl REST API.

The F5 router plug-in is available starting in OpenShift Container Platform 3.0.2.

4.1.2. Router Service Account

Before deploying an OpenShift Container Platform cluster, you must have a service account for the router. Starting in OpenShift Container Platform 3.1, a router service account is automatically created during a quick or advanced installation (previously, this required manual creation). This service account has permissions to a security context constraint (SCC) that allows it to specify host ports.

4.1.2.1. Permission to Access Labels

When namespace labels are used, for example in creating router shards, the service account for the router must have cluster-reader permission.

$ oc adm policy add-cluster-role-to-user \
    cluster-reader \
    system:serviceaccount:default:router

With a service account in place, you can proceed to installing a default HAProxy Router, a customized HAProxy Router or F5 Router.
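The user name passed to oc adm policy follows the pattern system:serviceaccount:<namespace>:<name>. As a small sketch, the helpers below build that string so the same grant can be repeated for a router service account in another namespace. The names sa_user and grant_cluster_reader are hypothetical and not part of oc:

```shell
#!/bin/bash
# Hypothetical helpers; only the oc command itself comes from the text above.

# Print the fully qualified user name of a service account.
sa_user() {
  local namespace=$1 name=$2
  printf 'system:serviceaccount:%s:%s\n' "$namespace" "$name"
}

# Grant cluster-reader to a service account (defaults: namespace "default", name "router").
grant_cluster_reader() {
  local namespace=${1:-default} name=${2:-router}
  oc adm policy add-cluster-role-to-user cluster-reader \
    "$(sa_user "$namespace" "$name")"
}
```

Calling grant_cluster_reader with no arguments issues the same command as the listing above.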

4.2. Using the Default HAProxy Router

4.2.1. Overview

The oc adm router command is provided with the administrator CLI to simplify the tasks of setting up routers in a new installation. If you followed the quick installation, then a default router was automatically created for you. The oc adm router command creates the service and deployment configuration objects. Use the --service-account option to specify the service account the router will use to contact the master.

The router service account can be created in advance or created by the oc adm router --service-account command.

Every form of communication between OpenShift Container Platform components is secured by TLS and uses various certificates and authentication methods. A default certificate in .pem format can be supplied with the --default-cert option; otherwise, one is created by the oc adm router command. When routes are created, the user can provide route certificates that the router will use when handling the route.

When deleting a router, ensure the deployment configuration, service, and secret are deleted as well.

Routers are deployed on specific nodes. This makes it easier for the cluster administrator and external network manager to coordinate which IP address will run a router and which traffic the router will handle. The routers are deployed on specific nodes by using node selectors.

Routers use host networking by default, and they directly attach to port 80 and 443 on all interfaces on a host. Restrict routers to hosts where ports 80/443 are available and not being consumed by another service, and set this using node selectors and the scheduler configuration. As an example, you can achieve this by dedicating infrastructure nodes to run services such as routers.

It is recommended to use a separate, distinct openshift-router service account with your router. This can be provided using the --service-account flag to the oc adm router command.

$ oc adm router --dry-run --service-account=router

Router pods created using oc adm router have default resource requests that a node must satisfy for the router pod to be deployed. To increase the reliability of infrastructure components, the default resource requests raise the QoS tier of the router pods above that of pods without resource requests. The default values represent the observed minimum resources required for a basic router to be deployed. You can edit them in the router's deployment configuration, and you may want to increase them based on the load of the router.

4.2.2. Creating a Router

The quick installation process automatically creates a default router. If the router does not exist, run the following to create a router:

$ oc adm router <router_name> --replicas=<number> --service-account=router

--replicas is usually 1 unless a high availability configuration is being created.

To find the host IP address of the router:

$ oc get po <router-pod> --template={{.status.hostIP}}

You can also use router shards to ensure that the router is filtered to specific namespaces or routes, or set any environment variables after router creation. In this case create a router for each shard.

4.2.3. Other Basic Router Commands

- Checking the Default Router

- The default router service account, named router, is automatically created during quick and advanced installations. To verify that this account already exists:

$ oc adm router --dry-run --service-account=router

- Viewing the Default Router

- To see what the default router would look like if created:

$ oc adm router --dry-run -o yaml --service-account=router

- Deploying the Router to a Labeled Node

- To deploy the router to any node(s) that match a specified node label:

$ oc adm router <router_name> --replicas=<number> --selector=<label> \
    --service-account=router

For example, if you want to create a router named router and have it placed on a node labeled with region=infra:

$ oc adm router router --replicas=1 --selector='region=infra' \
    --service-account=router

During advanced installation, the openshift_hosted_router_selector and openshift_registry_selector Ansible settings are set to region=infra by default. The default router and registry will only be automatically deployed if a node exists that matches the region=infra label.

For information on updating labels, see Updating Labels on Nodes.

Multiple instances are created on different hosts according to the scheduler policy.

- Using a Different Router Image

- To use a different router image and view the router configuration that would be used:

$ oc adm router <router_name> -o <format> --images=<image> \
    --service-account=router

For example:

$ oc adm router region-west -o yaml --images=myrepo/somerouter:mytag \
    --service-account=router

4.2.4. Filtering Routes to Specific Routers

Using the ROUTE_LABELS environment variable, you can filter routes so that they are used only by specific routers.

For example, if you have multiple routers, and 100 routes, you can attach labels to the routes so that a portion of them are handled by one router, whereas the rest are handled by another.

After creating a router, use the ROUTE_LABELS environment variable to tag the router:

$ oc env dc/<router_name> ROUTE_LABELS="key=value"

Add the label to the desired routes:

$ oc label route <route_name> key=value

To verify that the label has been attached to the route, check the route configuration:

$ oc describe route/<route_name>
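The steps above can be strung together. The following is a minimal sketch, assuming the caller supplies a router name, a label, and a list of route names in the current project. The helper name shard_routes is hypothetical, and it uses oc set env, as other sections of this chapter do:

```shell
#!/bin/bash
# shard_routes ROUTER LABEL ROUTE...
# Hypothetical helper: tag a router and label the routes it should serve.
shard_routes() {
  local router=$1 label=$2
  shift 2
  # Tell the router which label to select on.
  oc set env "dc/$router" ROUTE_LABELS="$label"
  # Attach the same label to every route the router should serve.
  local route
  for route in "$@"; do
    oc label route "$route" "$label"
  done
}
```

For example, shard_routes router-east geo=east route1 route2 tags the router named router-east and labels two routes for it.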

- Setting the Maximum Number of Concurrent Connections

- The router can handle a maximum number of 20000 connections by default. You can change that limit depending on your needs. Having too few connections prevents the health check from working, which causes unnecessary restarts. You need to configure the system to support the maximum number of connections. The limits shown in 'sysctl fs.nr_open' and 'sysctl fs.file-max' must be large enough. Otherwise, HAProxy will not start.

When the router is created, the --max-connections= option sets the desired limit:

$ oc adm router --max-connections=10000 ....

Edit the ROUTER_MAX_CONNECTIONS environment variable in the router’s deployment configuration to change the value. The router pods are restarted with the new value. If ROUTER_MAX_CONNECTIONS is not present, the default value of 20000 is used.
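Before raising the limit, it can help to compare the requested value with the kernel limits mentioned above. The following is a minimal sketch, assuming only that both fs.nr_open and fs.file-max must be at least as large as the requested connection count. HAProxy may need more file descriptors than that, so treat a passing check as necessary rather than sufficient. The helper name limits_allow is hypothetical:

```shell
#!/bin/bash
# limits_allow MAX_CONNECTIONS NR_OPEN FILE_MAX
# Succeeds only if both kernel limits are >= the requested connection count.
# (Simplifying assumption: one file descriptor per connection.)
limits_allow() {
  local want=$1 nr_open=$2 file_max=$3
  [ "$nr_open" -ge "$want" ] && [ "$file_max" -ge "$want" ]
}

# Example, run on the node that hosts the router:
#   limits_allow 20000 "$(sysctl -n fs.nr_open)" "$(sysctl -n fs.file-max)" \
#     && echo "limits look sufficient"
```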

4.2.5. Highly-Available Routers

You can set up a highly-available router on your OpenShift Container Platform cluster using IP failover. This setup has multiple replicas on different nodes so the failover software can switch to another replica if the current one fails.

4.2.6. Customizing the Router Service Ports

You can customize the service ports that a template router binds to by setting the environment variables ROUTER_SERVICE_HTTP_PORT and ROUTER_SERVICE_HTTPS_PORT. This can be done by creating a template router, then editing its deployment configuration.

The following example creates a router deployment with 0 replicas and customizes the router service HTTP and HTTPS ports, then scales it appropriately (to 1 replica).

$ oc adm router --replicas=0 --ports='10080:10080,10443:10443'
$ oc set env dc/router ROUTER_SERVICE_HTTP_PORT=10080 \
    ROUTER_SERVICE_HTTPS_PORT=10443
$ oc scale dc/router --replicas=1

The --ports option ensures exposed ports are appropriately set for routers that use the container networking mode --host-network=false.

If you do customize the template router service ports, you will also need to ensure that the nodes where the router pods run have those custom ports opened in the firewall (either via Ansible or iptables, or any other custom method that you use via firewall-cmd).

The following is an example using iptables to open the custom router service ports.

$ iptables -A INPUT -p tcp --dport 10080 -j ACCEPT
$ iptables -A INPUT -p tcp --dport 10443 -j ACCEPT

4.2.7. Working With Multiple Routers

An administrator can create multiple routers with the same definition to serve the same set of routes. Each router will be on a different node and will have a different IP address. The network administrator will need to get the desired traffic to each node.

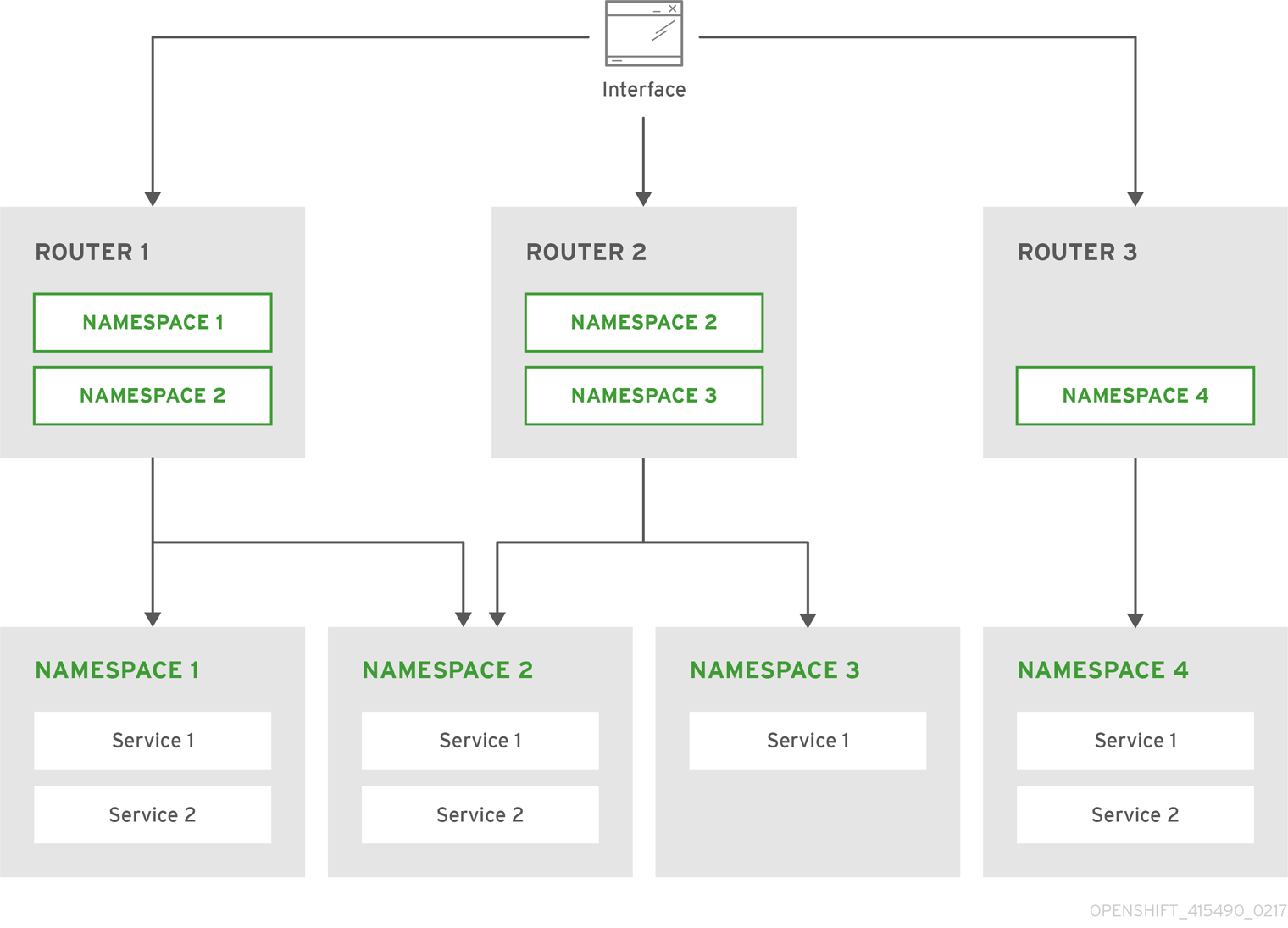

Multiple routers can be grouped to distribute routing load in the cluster and separate tenants to different routers or shards. Each router or shard in the group admits routes based on the selectors in the router. An administrator can create shards over the whole cluster using ROUTE_LABELS. A user can create shards over a namespace (project) by using NAMESPACE_LABELS.

4.2.8. Adding a Node Selector to a Deployment Configuration

Making specific routers deploy on specific nodes requires two steps:

Add a label to the desired node:

$ oc label node 10.254.254.28 "router=first"

Add a node selector to the router deployment configuration:

$ oc edit dc <deploymentConfigName>

Add the template.spec.nodeSelector field with a key and value corresponding to the label. The key and value are router and first, respectively, corresponding to the router=first label.
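As an alternative to editing the deployment configuration interactively, the same nodeSelector can be applied with oc patch. This is a sketch that assumes the router deployment configuration is named router unless another name is given. The helpers node_selector_patch and set_node_selector are hypothetical names:

```shell
#!/bin/bash
# Build a JSON patch that sets template.spec.nodeSelector to KEY=VALUE.
node_selector_patch() {
  local key=$1 value=$2
  printf '{"spec":{"template":{"spec":{"nodeSelector":{"%s":"%s"}}}}}\n' \
    "$key" "$value"
}

# set_node_selector KEY VALUE [DC_NAME]
# Apply the patch to a deployment configuration (default name: router).
set_node_selector() {
  local dc=${3:-router}
  oc patch "dc/$dc" -p "$(node_selector_patch "$1" "$2")"
}
```

For the label used above, the call would be set_node_selector router first.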

4.2.9. Using Router Shards

Router sharding uses NAMESPACE_LABELS and/or ROUTE_LABELS, which enable a router to select the namespaces and/or routes that it should admit. This enables you to partition routes amongst multiple router deployments, effectively distributing the set of routes.

By default, a router selects all routes from all projects (namespaces). Sharding adds labels to routes and each router shard selects routes with specific labels.

The router service account must have the cluster-reader permission set to allow access to labels in other namespaces.

Router Sharding and DNS

Because an external DNS server is needed to route requests to the desired shard, the administrator is responsible for making a separate DNS entry for each router in a project. A router will not forward unknown routes to another router.

For example:

- If Router A lives on host 192.168.0.5 and has routes with *.foo.com.

- And Router B lives on host 192.168.1.9 and has routes with *.example.com.

Separate DNS entries must resolve *.foo.com to the node hosting Router A and *.example.com to the node hosting Router B:

- *.foo.com IN A 192.168.0.5

- *.example.com IN A 192.168.1.9

Router Sharding Examples

This section describes router sharding using project (namespace) labels or project (namespace) names.

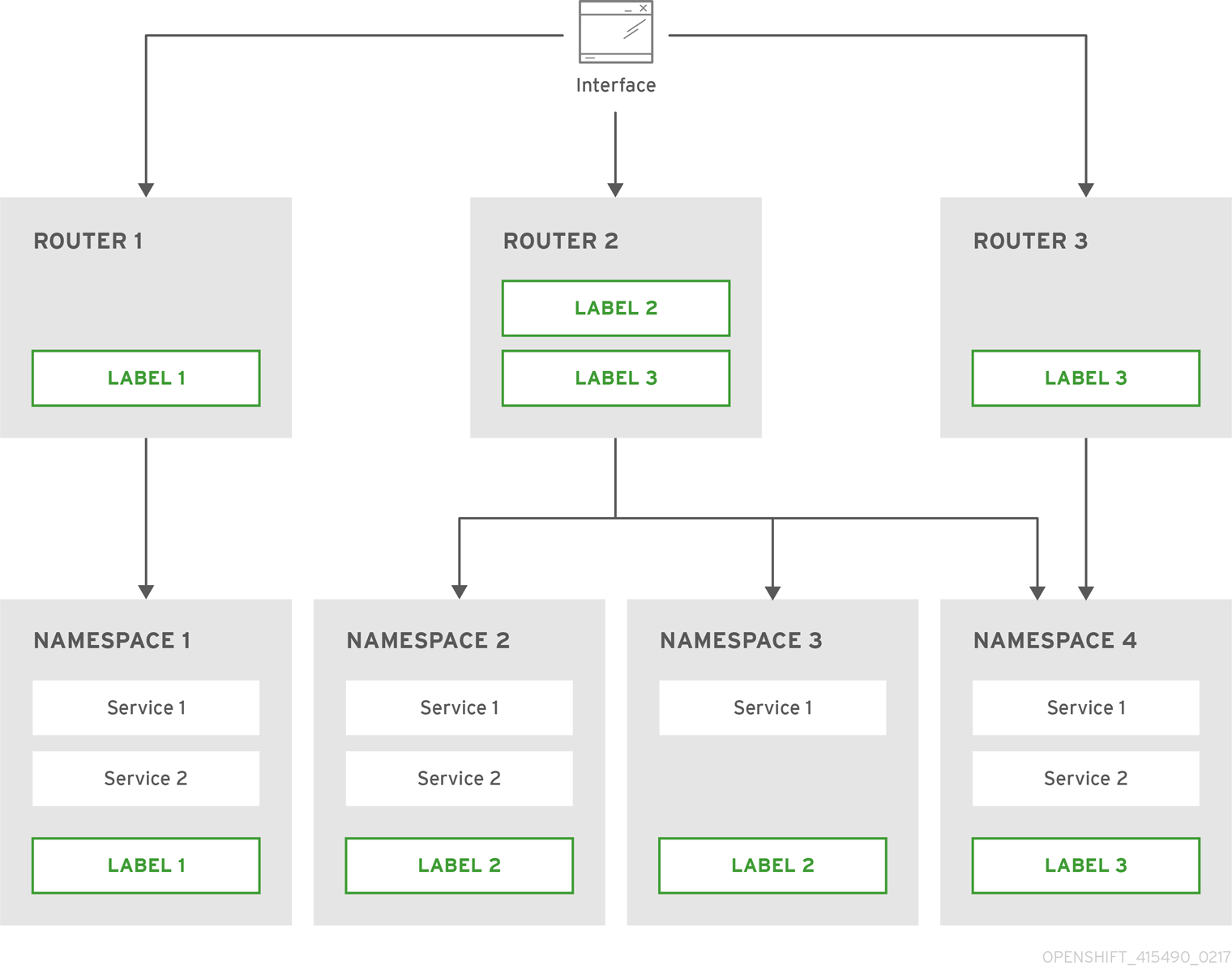

Figure 4.1. Router Sharding Based on Namespace Labels

Example: A router deployment finops-router is run with route selector NAMESPACE_LABELS="name in (finance, ops)" and a router deployment dev-router is run with route selector NAMESPACE_LABELS="name=dev".

If all routes are in the three namespaces finance, ops or dev, then this could effectively distribute your routes across two router deployments.

In the above scenario, sharding becomes a special case of partitioning with no overlapping sets. Routes are divided amongst multiple router shards.

The criteria for route selection govern how the routes are distributed. It is possible to have routes that overlap across multiple router deployments.

Example: In addition to the finops-router and dev-router in the example above, you also have devops-router, which is run with a route selector NAMESPACE_LABELS="name in (dev, ops)".

The routes in namespaces dev or ops now are serviced by two different router deployments. This becomes a case in which you have partitioned the routes with an overlapping set.

In addition, this enables you to create more complex routing rules, allowing the diversion of high priority traffic to the dedicated finops-router, but sending the lower priority ones to the devops-router.

NAMESPACE_LABELS allows filtering of the projects to service and selecting all the routes from those projects, but you may want to partition routes based on other criteria in the routes themselves. The ROUTE_LABELS selector allows you to slice-and-dice the routes themselves.

Example: A router deployment prod-router is run with route selector ROUTE_LABELS="mydeployment=prod" and a router deployment devtest-router is run with route selector ROUTE_LABELS="mydeployment in (dev, test)".

The example assumes you have all the routes you want to be serviced tagged with a label "mydeployment=<tag>".

Figure 4.2. Router Sharding Based on Namespace Names

4.2.9.1. Creating Router Shards

To implement router sharding, set labels on the routes in the pool and express the desired subset of those routes for the router to admit with a selection expression via the oc set env command.

First, ensure that the service account associated with the router has the cluster reader permission.

The rest of this section describes an extended example. Suppose there are 26 routes, named a through z, in the pool, with various labels:

Possible labels on routes in the pool

sla=high       geo=east    hw=modest    dept=finance
sla=medium     geo=west    hw=strong    dept=dev
sla=low                                 dept=ops

These labels express the concepts: service level agreement, geographical location, hardware requirements, and department. The routes in the pool can have at most one label from each column. Some routes may have other labels entirely, or none at all.

| Name(s) | SLA | Geo | HW | Dept | Other Labels |

|---|---|---|---|---|---|


Here is a convenience script mkshard that illustrates how oc adm router, oc set env, and oc scale work together to make a router shard.
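A minimal sketch of what such a script could look like, assuming it takes a shard ID and a ROUTE_LABELS selection expression and names the router router-shard-<id>. The replica count used when scaling up (1) is chosen here purely for illustration. It is written as a function so that it can be pasted into a shell; treat it as an illustration rather than the exact original script:

```shell
#!/bin/bash
# Usage: mkshard ID SELECTION-EXPRESSION
mkshard() {
  local id=$1 sel=$2
  local router="router-shard-$id"   # name of the new router
  local dc="dc/$router"             # its deployment configuration

  # Create the router with no running pods so it can be configured first.
  oc adm router "$router" --replicas=0 --service-account=router
  # Set the selection expression that decides which routes it admits.
  oc set env "$dc" ROUTE_LABELS="$sel"
  # Start the shard (the replica count is an assumption).
  oc scale "$dc" --replicas=1
}
```

For example, mkshard 1 'sla=high' would create router-shard-1 and have it admit only routes labeled sla=high.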

Running mkshard several times creates several routers:

| Router | Selection Expression | Routes |

|---|---|---|


4.2.9.2. Modifying Router Shards

Because a router shard is a construct based on labels, you can modify either the labels (via oc label) or the selection expression.

This section extends the example started in the Creating Router Shards section, demonstrating how to change the selection expression.

Here is a convenience script modshard that modifies an existing router to use a new selection expression:
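A minimal sketch consistent with the numbered notes below, assuming the first argument is the shard ID and every remaining argument is a NAME=expression pair passed straight to oc set env. The replica count used when scaling back up (1) is an assumption, and the exact original script may differ:

```shell
#!/bin/bash
# Usage: modshard ID NAME=expression...
modshard() {
  local id=$1
  shift
  local router="router-shard-$id"   # (1) the router being modified
  local dc="dc/$router"             # (2) its deployment configuration

  oc scale "$dc" --replicas=0       # (3) scale it down
  oc set env "$dc" "$@"             # (4) set the new selection expression(s)
  oc scale "$dc" --replicas=1       # (5) scale it back up
}
```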

- 1

- The modified router has name router-shard-<id>.

- 2

- The deployment configuration where the modifications occur.

- 3

- Scale it down.

- 4

- Set the new selection expression using oc set env. Unlike mkshard from the Creating Router Shards section, the selection expression specified as the non-ID arguments to modshard must include the environment variable name as well as its value.

- 5

- Scale it back up.

In modshard, the oc scale commands are not necessary if the deployment strategy for router-shard-<id> is Rolling.

For example, to expand the department for router-shard-3 to include ops as well as dev:

$ modshard 3 ROUTE_LABELS='dept in (dev, ops)'

The result is that router-shard-3 now selects routes g — s (the combined sets of g — k and l — s).

This example takes into account that there are only three departments in this example scenario, and specifies a department to leave out of the shard, thus achieving the same result as the preceding example:

$ modshard 3 ROUTE_LABELS='dept != finance'

This example specifies three comma-separated qualities, and results in only route b being selected:

$ modshard 3 ROUTE_LABELS='hw=strong,type=dynamic,geo=west'

Similarly to ROUTE_LABELS, which involves a route’s labels, you can select routes based on the labels of the route’s namespace, with the NAMESPACE_LABELS environment variable. This example modifies router-shard-3 to serve routes whose namespace has the label frequency=weekly:

$ modshard 3 NAMESPACE_LABELS='frequency=weekly'

The last example combines ROUTE_LABELS and NAMESPACE_LABELS to select routes with label sla=low and whose namespace has the label frequency=weekly:

$ modshard 3 \
    NAMESPACE_LABELS='frequency=weekly' \
    ROUTE_LABELS='sla=low'

4.2.9.3. Using Namespace Router Shards

The routes for a project can be handled by a selected router by using NAMESPACE_LABELS. The router is given a selector for a NAMESPACE_LABELS label and the project that wants to use the router applies the NAMESPACE_LABELS label to its namespace.

First, ensure that the service account associated with the router has the cluster reader permission. This permits the router to read the labels that are applied to the namespaces.

Now create and label the router:

$ oc adm router ... --service-account=router
$ oc set env dc/router NAMESPACE_LABELS="router=r1"

Because the router has a selector for a namespace, the router will handle routes for that namespace. So, for example:

$ oc label namespace default "router=r1"

Now create routes in the default namespace, and the route is available in the default router:

$ oc create -f route1.yaml

Now create a new project (namespace) and create a route, route2.

$ oc new-project p1
$ oc create -f route2.yaml

And notice the route is not available in your router. Now label namespace p1 with "router=r1"

$ oc label namespace p1 "router=r1"

Which makes the route available to the router.

Note that removing the label from the namespace does not take effect immediately, because the router does not see the update. To see the effect of removing the label, redeploy the router or start a new router pod:

$ oc scale dc/router --replicas=0 && oc scale dc/router --replicas=1

4.2.10. Finding the Host Name of the Router

When exposing a service, a user can use the same route from the DNS name that external users use to access the application. The network administrator of the external network must make sure the host name resolves to the name of a router that has admitted the route. The user can set up their DNS with a CNAME that points to this host name. However, the user may not know the host name of the router. When it is not known, the cluster administrator can provide it.

The cluster administrator can use the --router-canonical-hostname option with the router’s canonical host name when creating the router. For example:

# oc adm router myrouter --router-canonical-hostname="rtr.example.com"

This creates the ROUTER_CANONICAL_HOSTNAME environment variable in the router’s deployment configuration containing the host name of the router.

For routers that already exist, the cluster administrator can edit the router’s deployment configuration and add the ROUTER_CANONICAL_HOSTNAME environment variable:
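One way to do this without opening an editor is oc set env, shown here as a sketch. The router name myrouter and the host name rtr.example.com are reused from the example above, and set_canonical_hostname is a hypothetical wrapper name:

```shell
#!/bin/bash
# set_canonical_hostname ROUTER HOSTNAME
# Adds or updates ROUTER_CANONICAL_HOSTNAME in the router's deployment configuration.
set_canonical_hostname() {
  local router=$1 hostname=$2
  oc set env "dc/$router" ROUTER_CANONICAL_HOSTNAME="$hostname"
}

# Example:
#   set_canonical_hostname myrouter rtr.example.com
```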

The ROUTER_CANONICAL_HOSTNAME value is displayed in the route status for all routers that have admitted the route. The route status is refreshed every time the router is reloaded.

When a user creates a route, all of the active routers evaluate the route and, if conditions are met, admit it. When a router that defines the ROUTER_CANONICAL_HOSTNAME environment variable admits the route, the router places the value in the routerCanonicalHostname field in the route status. The user can examine the route status to determine which, if any, routers have admitted the route, select a router from the list, and find the host name of the router to pass along to the network administrator.

oc describe includes the host name when available:

$ oc describe route/hello-route3

...

Requested Host: hello.in.mycloud.com exposed on router myroute (host rtr.example.com) 12 minutes ago

Using the above information, the user can ask the DNS administrator to set up a CNAME from the route’s host, hello.in.mycloud.com, to the router’s canonical hostname, rtr.example.com. This results in any traffic to hello.in.mycloud.com reaching the user’s application.

4.2.11. Customizing the Default Routing Subdomain

You can customize the suffix used as the default routing subdomain for your environment by modifying the master configuration file (the /etc/origin/master/master-config.yaml file by default). Routes that do not specify a host name would have one generated using this default routing subdomain.
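Judging from the example later in this section, the generated host name has the form <route-name>-<namespace>.<subdomain>. As a sketch of that pattern only, with default_route_host being a hypothetical helper and not something the master exposes:

```shell
#!/bin/bash
# default_route_host ROUTE_NAME NAMESPACE SUBDOMAIN
# Prints the host name that would be generated for a route without a host.
default_route_host() {
  printf '%s-%s.%s\n' "$1" "$2" "$3"
}

# Example:
#   default_route_host no-route-hostname mynamespace v3.openshift.test
```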

The following example shows how you can set the configured suffix to v3.openshift.test:

routingConfig:
  subdomain: v3.openshift.test

This change requires a restart of the master if it is running.

With the OpenShift Container Platform master(s) running the above configuration, the generated host name for the example of a route named no-route-hostname without a host name added to a namespace mynamespace would be:

no-route-hostname-mynamespace.v3.openshift.test

4.2.12. Forcing Route Host Names to a Custom Routing Subdomain

If an administrator wants to restrict all routes to a specific routing subdomain, they can pass the --force-subdomain option to the oc adm router command. This forces the router to override any host names specified in a route and generate one based on the template provided to the --force-subdomain option.
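To make the template idea concrete, here is a sketch of how a template such as ${name}-${namespace}.apps.example.com would map a route to a host name, assuming plain textual substitution of the two placeholders. The helper expand_subdomain is hypothetical and only imitates the behavior for illustration; it is not the router's code:

```shell
#!/bin/bash
# expand_subdomain TEMPLATE ROUTE_NAME NAMESPACE
expand_subdomain() {
  local template=$1 name=$2 namespace=$3
  # Keep the placeholders in variables so they are matched literally.
  local name_ph='${name}' ns_ph='${namespace}'
  local host=${template//"$name_ph"/$name}
  host=${host//"$ns_ph"/$namespace}
  printf '%s\n' "$host"
}

# Example:
#   expand_subdomain '${name}-${namespace}.apps.example.com' hello mynamespace
```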

The following example runs a router, which overrides the route host names using a custom subdomain template ${name}-${namespace}.apps.example.com.

$ oc adm router --force-subdomain='${name}-${namespace}.apps.example.com'

4.2.13. Using Wildcard Certificates

A TLS-enabled route that does not include a certificate uses the router’s default certificate instead. In most cases, this certificate should be provided by a trusted certificate authority, but for convenience you can use the OpenShift Container Platform CA to create the certificate. For example:

$ CA=/etc/origin/master
$ oc adm ca create-server-cert --signer-cert=$CA/ca.crt \
      --signer-key=$CA/ca.key --signer-serial=$CA/ca.serial.txt \
      --hostnames='*.cloudapps.example.com' \
      --cert=cloudapps.crt --key=cloudapps.key

The oc adm ca create-server-cert command generates a certificate that is valid for two years. This can be altered with the --expire-days option, but for security reasons, it is recommended to not make it greater than this value.

The router expects the certificate and key to be in PEM format in a single file:

$ cat cloudapps.crt cloudapps.key $CA/ca.crt > cloudapps.router.pem

From there you can use the --default-cert flag:

$ oc adm router --default-cert=cloudapps.router.pem --service-account=router

Browsers only consider wildcards valid for subdomains one level deep. So in this example, the certificate would be valid for a.cloudapps.example.com but not for a.b.cloudapps.example.com.
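The one-level rule can be checked mechanically. The sketch below mirrors the rule as stated here, where a leading *. stands for exactly one leftmost label. The helper wildcard_covers is hypothetical and is not a browser's actual matching code:

```shell
#!/bin/bash
# wildcard_covers PATTERN HOST
# Succeeds if HOST is exactly one label below the domain in PATTERN ("*.domain").
wildcard_covers() {
  local pattern=$1 host=$2
  local suffix=${pattern#\*.}   # domain part of the pattern, without "*."
  local label=${host%%.*}       # leftmost label of the host
  local rest=${host#*.}         # everything after the first dot
  [ -n "$label" ] && [ "$rest" = "$suffix" ]
}

# Example:
#   wildcard_covers '*.cloudapps.example.com' a.cloudapps.example.com && echo covered
```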

4.2.14. Manually Redeploy Certificates

To manually redeploy the router certificates:

Check to see if a secret containing the default router certificate was added to the router:

$ oc volumes dc/router
deploymentconfigs/router
  secret/router-certs as server-certificate
    mounted at /etc/pki/tls/private

If the certificate is added, skip the following step and overwrite the secret.

Make sure that you have a default certificate directory set for the following variable DEFAULT_CERTIFICATE_DIR:

$ oc env dc/router --list
DEFAULT_CERTIFICATE_DIR=/etc/pki/tls/private

If not, create the directory using the following command:

$ oc env dc/router DEFAULT_CERTIFICATE_DIR=/etc/pki/tls/private

Export the certificate to PEM format:

$ cat custom-router.key custom-router.crt custom-ca.crt > custom-router.crt

Overwrite or create a router certificate secret:

If the certificate secret was added to the router, overwrite the secret. If not, create a new secret.

To overwrite the secret, run the following command:

$ oc secrets new router-certs tls.crt=custom-router.crt tls.key=custom-router.key -o json --type='kubernetes.io/tls' --confirm | oc replace -f -

To create a new secret, run the following commands:

$ oc secrets new router-certs tls.crt=custom-router.crt tls.key=custom-router.key --type='kubernetes.io/tls' --confirm
$ oc volume dc/router --add --mount-path=/etc/pki/tls/private --secret-name='router-certs' --name router-certs

Deploy the router.

$ oc rollout latest dc/router

4.2.15. Using Secured Routes

Currently, password protected key files are not supported. HAProxy prompts for a password upon starting and does not have a way to automate this process. To remove a passphrase from a keyfile, you can run:

# openssl rsa -in <passwordProtectedKey.key> -out <new.key>

Here is an example of how to use a secure edge terminated route with TLS termination occurring on the router before traffic is proxied to the destination. The secure edge terminated route specifies the TLS certificate and key information. The TLS certificate is served by the router front end.

First, start up a router instance:

# oc adm router --replicas=1 --service-account=router

Next, create a private key, csr and certificate for our edge secured route. The instructions on how to do that would be specific to your certificate authority and provider. For a simple self-signed certificate for a domain named www.example.test, see the example shown below:
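As one possible illustration, the following sketch creates a key, CSR, and self-signed certificate with openssl. The file names example-test.key and example-test.crt match the names used in the route command in this section. The subject fields other than CN, the 2048-bit key size, and the 366-day lifetime are arbitrary assumptions, and make_self_signed is a hypothetical helper name:

```shell
#!/bin/bash
# make_self_signed HOSTNAME BASENAME
# Writes BASENAME.key, BASENAME.csr and BASENAME.crt in the current directory.
make_self_signed() {
  local host=$1 base=$2
  # Unencrypted RSA private key (password-protected keys are not supported).
  openssl genrsa -out "$base.key" 2048 || return 1
  # Certificate signing request for the host name (subject values are examples).
  openssl req -new -key "$base.key" -out "$base.csr" \
    -subj "/C=US/O=Example/CN=$host" || return 1
  # Sign the request with its own key to obtain a self-signed certificate.
  openssl x509 -req -days 366 -in "$base.csr" \
    -signkey "$base.key" -out "$base.crt"
}

# Example:
#   make_self_signed www.example.test example-test
```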

Generate a route using the above certificate and key.

$ oc create route edge --service=my-service \
    --hostname=www.example.test \
    --key=example-test.key --cert=example-test.crt
route "my-service" created

Look at its definition.

Make sure your DNS entry for www.example.test points to your router instance(s) and the route to your domain should be available. The example below uses curl along with a local resolver to simulate the DNS lookup:

# routerip="4.1.1.1"  # replace with IP address of one of your router instances.
# curl -k --resolve www.example.test:443:$routerip https://www.example.test/

4.2.16. Using Wildcard Routes (for a Subdomain)

The HAProxy router has support for wildcard routes, which are enabled by setting the ROUTER_ALLOW_WILDCARD_ROUTES environment variable to true. Any routes with a wildcard policy of Subdomain that pass the router admission checks will be serviced by the HAProxy router. Then, the HAProxy router exposes the associated service (for the route) per the route’s wildcard policy.

To change a route’s wildcard policy, you must remove the route and recreate it with the updated wildcard policy. Editing only the route’s wildcard policy in a route’s .yaml file does not work.

$ oc adm router --replicas=0 ...
$ oc set env dc/router ROUTER_ALLOW_WILDCARD_ROUTES=true
$ oc scale dc/router --replicas=1

Learn how to configure the web console for wildcard routes.

Using a Secure Wildcard Edge Terminated Route

This example reflects TLS termination occurring on the router before traffic is proxied to the destination. Traffic sent to any hosts in the subdomain example.org (*.example.org) is proxied to the exposed service.

The secure edge terminated route specifies the TLS certificate and key information. The TLS certificate is served by the router front end for all hosts that match the subdomain (*.example.org).

Start up a router instance:

$ oc adm router --replicas=0 --service-account=router
$ oc set env dc/router ROUTER_ALLOW_WILDCARD_ROUTES=true
$ oc scale dc/router --replicas=1

Create a private key, certificate signing request (CSR), and certificate for the edge secured route.

The instructions on how to do this are specific to your certificate authority and provider. For a simple self-signed certificate for a domain named *.example.test, see this example:

Generate a wildcard route using the above certificate and key:

Ensure your DNS entry for *.example.test points to your router instance(s) and the route to your domain is available.

This example uses curl with a local resolver to simulate the DNS lookup:

# routerip="4.1.1.1"  # replace with IP address of one of your router instances.
# curl -k --resolve www.example.test:443:$routerip https://www.example.test/
# curl -k --resolve abc.example.test:443:$routerip https://abc.example.test/
# curl -k --resolve anyname.example.test:443:$routerip https://anyname.example.test/

For routers that allow wildcard routes (ROUTER_ALLOW_WILDCARD_ROUTES set to true), there are some caveats to the ownership of a subdomain associated with a wildcard route.

Prior to wildcard routes, ownership was based on the claims made for a host name with the namespace with the oldest route winning against any other claimants. For example, route r1 in namespace ns1 with a claim for one.example.test would win over another route r2 in namespace ns2 for the same host name one.example.test if route r1 was older than route r2.

In addition, routes in other namespaces were allowed to claim non-overlapping hostnames. For example, route rone in namespace ns1 could claim www.example.test and another route rtwo in namespace d2 could claim c3po.example.test.

This is still the case if there are no wildcard routes claiming that same subdomain (example.test in the above example).

However, a wildcard route needs to claim all of the host names within a subdomain (host names of the form *.example.test). A wildcard route’s claim is allowed or denied based on whether or not the oldest route for that subdomain (example.test) is in the same namespace as the wildcard route. The oldest route can be either a regular route or a wildcard route.

For example, if a route eldest already exists in the ns1 namespace and claims a host named owner.example.test, and a new wildcard route wildthing requesting routes in that subdomain (example.test) is added later, the claim by the wildcard route is allowed only if it is in the same namespace (ns1) as the owning route.

The following examples illustrate various scenarios in which claims for wildcard routes will succeed or fail.

In the example below, a router that allows wildcard routes will allow non-overlapping claims for hosts in the subdomain example.test as long as a wildcard route has not claimed a subdomain.

In the example below, a router that allows wildcard routes will not allow the claim for owner.example.test or aname.example.test to succeed since the owning namespace is ns1.

In the example below, a router that allows wildcard routes will allow the claim for *.example.test to succeed since the owning namespace is ns1 and the wildcard route belongs to that same namespace.

In the example below, a router that allows wildcard routes will not allow the claim for *.example.test to succeed since the owning namespace is ns1 and the wildcard route belongs to another namespace cyclone.

Similarly, once a namespace with a wildcard route claims a subdomain, only routes within that namespace can claim any hosts in that same subdomain.

In the example below, once a route in namespace ns1 with a wildcard route claims subdomain example.test, only routes in the namespace ns1 are allowed to claim any hosts in that same subdomain.

In the example below, different scenarios are shown, in which the owner routes are deleted and ownership is passed within and across namespaces. While a route claiming host eldest.example.test in the namespace ns1 exists, wildcard routes in that namespace can claim subdomain example.test. When the route for host eldest.example.test is deleted, the next oldest route senior.example.test becomes the oldest route and does not affect any other routes. Once the route for host senior.example.test is deleted, the next oldest route junior.example.test becomes the oldest route and blocks the wildcard route claimant.
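The ownership rule can be summarized as a small decision function. The following is a sketch of the rule as described in this section, not the router's implementation; the function names are hypothetical, and the "no existing route" case is assumed to be allowed because the wildcard route is then itself the oldest route.

```shell
# Sketch: model of the wildcard subdomain ownership rule.

# subdomain_of HOST: drop the first DNS label
# (www.example.test and *.example.test both give example.test).
subdomain_of() {
  printf '%s\n' "${1#*.}"
}

# wildcard_claim_allowed OWNER_NS CLAIMANT_NS
# OWNER_NS is the namespace of the oldest route in the subdomain,
# or an empty string when no route claims a host in it yet.
wildcard_claim_allowed() {
  owner_ns="$1"
  claimant_ns="$2"
  [ -z "$owner_ns" ] || [ "$owner_ns" = "$claimant_ns" ]
}

# Example: the oldest route for example.test lives in ns1.
# wildcard_claim_allowed ns1 ns1      -> succeeds (claim allowed)
# wildcard_claim_allowed ns1 cyclone  -> fails (claim denied)
```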

4.2.17. Using the Container Network Stack

The OpenShift Container Platform router runs inside a container and the default behavior is to use the network stack of the host (i.e., the node where the router container runs). This default behavior benefits performance because network traffic from remote clients does not need to take multiple hops through user space to reach the target service and container.

Additionally, this default behavior enables the router to get the actual source IP address of the remote connection rather than getting the node’s IP address. This is useful for defining ingress rules based on the originating IP, supporting sticky sessions, and monitoring traffic, among other uses.

This host network behavior is controlled by the --host-network router command line option, and the default behaviour is the equivalent of using --host-network=true. If you wish to run the router with the container network stack, use the --host-network=false option when creating the router. For example:

$ oc adm router --service-account=router --host-network=false

Internally, this means the router container must publish the 80 and 443 ports in order for the external network to communicate with the router.

Running with the container network stack means that the router sees the source IP address of a connection to be the NATed IP address of the node, rather than the actual remote IP address.

On OpenShift Container Platform clusters using multi-tenant network isolation, routers on a non-default namespace with the --host-network=false option will load all routes in the cluster, but routes across the namespaces will not be reachable due to network isolation. With the --host-network=true option, the router bypasses the container network and can access any pod in the cluster. If isolation is needed in this case, then do not add routes across the namespaces.

4.2.18. Exposing Router Metrics

Using the --metrics-image and --expose-metrics options, you can configure the OpenShift Container Platform router to run a sidecar container that exposes or publishes router metrics for consumption by external metrics collection and aggregation systems (e.g. Prometheus, statsd).

Depending on your router implementation, the image is appropriately set up and the metrics sidecar container is started when the router is deployed. For example, the HAProxy-based router implementation defaults to using the prom/haproxy-exporter image to run as a sidecar container, which can then be used as a metrics datasource by the Prometheus server.

The --metrics-image option overrides the defaults for HAProxy-based router implementations and, in the case of custom implementations, specifies the image to use for a custom metrics exporter or publisher.

Grab the HAProxy Prometheus exporter image from the Docker registry:

$ sudo docker pull prom/haproxy-exporter

Create the OpenShift Container Platform router:

$ oc adm router --service-account=router --expose-metrics

Or, optionally, use the --metrics-image option to override the HAProxy defaults:

$ oc adm router --service-account=router --expose-metrics \
    --metrics-image=prom/haproxy-exporter

Once the haproxy-exporter containers (and your HAProxy router) have started, point Prometheus to the sidecar container on port 9101 on the node where the haproxy-exporter container is running:
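As one way to point Prometheus at the sidecar, the sketch below prints a minimal scrape configuration for a given node. It assumes a Prometheus version that supports static_configs; the function name and the job name haproxy are arbitrary choices, and only port 9101 comes from the text above.

```shell
# Sketch: print a minimal Prometheus scrape configuration for the exporter.
# Usage: haproxy_scrape_config <node-ip-or-hostname> [port]
haproxy_scrape_config() {
  node="$1"
  port="${2:-9101}"   # the sidecar port named above
  cat <<EOF
scrape_configs:
  - job_name: 'haproxy'
    static_configs:
      - targets: ['${node}:${port}']
EOF
}

# Example: haproxy_scrape_config 10.0.0.5 > prometheus.yml
```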

4.2.19. Preventing Connection Failures During Restarts

If you connect to the router while the proxy is reloading, there is a small chance that your connection will end up in the wrong network queue and be dropped. The issue is being addressed. In the meantime, it is possible to work around the problem by installing iptables rules to prevent connections during the reload window. However, doing so means that the router needs to run with elevated privilege so that it can manipulate iptables on the host. It also means that connections that happen during the reload are temporarily ignored and must retransmit their connection start, lengthening the time it takes to connect, but preventing connection failure.

To prevent this, configure the router to use iptables by changing the service account, and setting an environment variable on the router.

Use a Privileged SCC

When creating the router, allow it to use the privileged SCC. This gives the router user the ability to create containers with root privileges on the nodes:

$ oc adm policy add-scc-to-user privileged -z router

Patch the Router Deployment Configuration to Create a Privileged Container

You can now create privileged containers. Next, configure the router deployment configuration to use the privilege so that the router can set the iptables rules it needs. This patch changes the router deployment configuration so that the container that is created runs as privileged (and therefore gets correct capabilities) and runs as root:

$ oc patch dc router -p '{"spec":{"template":{"spec":{"containers":[{"name":"router","securityContext":{"privileged":true}}],"securityContext":{"runAsUser": 0}}}}}'

Configure the Router to Use iptables

Set the option on the router deployment configuration:

$ oc set env dc/router -c router DROP_SYN_DURING_RESTART=true

If you used a non-default name for the router, you must change dc/router accordingly.
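For a router with a non-default name, the three steps can be wrapped in one function that takes the deployment configuration name. This is a sketch: the function name is hypothetical, and it assumes that the container inside the deployment configuration is still named router and that the service account is passed separately (defaulting to router).

```shell
# Sketch: apply the three steps above to a named router.
# Usage: enable_syn_drop [dc-name] [service-account]
enable_syn_drop() {
  name="${1:-router}"
  sa="${2:-router}"
  # 1. Let the service account use the privileged SCC.
  oc adm policy add-scc-to-user privileged -z "$sa" &&
  # 2. Run the router container privileged and as root.
  oc patch dc "$name" -p '{"spec":{"template":{"spec":{"containers":[{"name":"router","securityContext":{"privileged":true}}],"securityContext":{"runAsUser": 0}}}}}' &&
  # 3. Tell the router to drop SYN packets during reloads.
  oc set env "dc/${name}" -c router DROP_SYN_DURING_RESTART=true
}

# Example: enable_syn_drop edge-router
```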

4.2.20. ARP Cache Tuning for Large-scale Clusters

In OpenShift Container Platform clusters with large numbers of routes (greater than the value of net.ipv4.neigh.default.gc_thresh3, which is 1024 by default), you must increase the default values of sysctl variables to allow more entries in the ARP cache.

The kernel messages would be similar to the following:

[ 1738.811139] net_ratelimit: 1045 callbacks suppressed

[ 1743.823136] net_ratelimit: 293 callbacks suppressed

When this issue occurs, the oc commands might start to fail with the following error:

Unable to connect to the server: dial tcp: lookup <hostname> on <ip>:<port>: write udp <ip>:<port>-><ip>:<port>: write: invalid argument

To verify the actual amount of ARP entries for IPv4, run the following:

# ip -4 neigh show nud all | wc -l

If the number begins to approach the net.ipv4.neigh.default.gc_thresh3 threshold, increase the values. Run the following on the nodes running the router pods. The following sysctl values are suggested for clusters with large numbers of routes:

net.ipv4.neigh.default.gc_thresh1 = 8192
net.ipv4.neigh.default.gc_thresh2 = 32768
net.ipv4.neigh.default.gc_thresh3 = 65536

To make these settings permanent across reboots, create a custom tuned profile.
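The comparison between the current ARP entry count and the gc_thresh3 threshold can be scripted. In the sketch below the function name and the 80 percent default are assumptions chosen for illustration; what counts as "approaching" the threshold is left to the administrator.

```shell
# Sketch: decide whether the ARP cache is close to its hard limit.
# Usage: arp_cache_near_limit <entry-count> <gc_thresh3> [percent]
# Succeeds when entry-count is at or above percent% of gc_thresh3.
arp_cache_near_limit() {
  count="$1"
  threshold="$2"
  percent="${3:-80}"   # assumed warning level
  [ $(( count * 100 )) -ge $(( threshold * percent )) ]
}

# Example, run on a node hosting router pods:
#   count=$(ip -4 neigh show nud all | wc -l)
#   limit=$(sysctl -n net.ipv4.neigh.default.gc_thresh3)
#   arp_cache_near_limit "$count" "$limit" && echo "increase the gc_thresh values"
```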

4.2.21. Protecting Against DDoS Attacks

Add timeout http-request to the default HAProxy router image to protect the deployment against distributed denial-of-service (DDoS) attacks (for example, slowloris):

timeout http-request is set to 5 seconds. HAProxy gives a client 5 seconds to send its whole HTTP request. Otherwise, HAProxy shuts the connection with an error.

Also, when the environment variable ROUTER_SLOWLORIS_TIMEOUT is set, it limits the amount of time a client has to send the whole HTTP request. Otherwise, HAProxy will shut down the connection.

Setting the environment variable allows information to be captured as part of the router’s deployment configuration and does not require manual modification of the template, whereas manually adding the HAProxy setting requires you to rebuild the router pod and maintain your router template file.

Using annotations implements basic DDoS protections in the HAProxy template router, including the ability to limit the:

- number of concurrent TCP connections

- rate at which a client can request TCP connections

- rate at which HTTP requests can be made

These are enabled on a per route basis because applications can have extremely different traffic patterns.

| Setting | Description |
|---|---|
| | Enables the settings to be configured (when set to true, for example). |
| | The number of concurrent TCP connections that can be made by the same IP address on this route. |
| | The number of TCP connections that can be opened by a client IP. |
| | The number of HTTP requests that a client IP can make in a 3-second period. |

4.3. Deploying a Customized HAProxy Router

4.3.1. Overview

The default HAProxy router is intended to satisfy the needs of most users. However, it does not expose all of the capability of HAProxy. Therefore, users may need to modify the router for their own needs.

You may need to implement new features within the application back-ends, or modify the current operation. The router plug-in provides all the facilities necessary to make this customization.

The router pod uses a template file to create the needed HAProxy configuration file. The template file is a golang template. When processing the template, the router has access to OpenShift Container Platform information, including the router’s deployment configuration, the set of admitted routes, and some helper functions.

When the router pod starts, and every time it reloads, it creates an HAProxy configuration file, and then it starts HAProxy. The HAProxy configuration manual describes all of the features of HAProxy and how to construct a valid configuration file.

A configMap can be used to add the new template to the router pod. With this approach, the router deployment configuration is modified to mount the configMap as a volume in the router pod. The TEMPLATE_FILE environment variable is set to the full path name of the template file in the router pod.

Alternatively, you can build a custom router image and use it when deploying some or all of your routers. There is no need for all routers to run the same image. To do this, modify the haproxy-template.config file, and rebuild the router image. The new image is pushed to the cluster’s Docker repository, and the router’s deployment configuration image: field is updated with the new name. When the cluster is updated, the image needs to be rebuilt and pushed.

In either case, the router pod starts with the template file.

4.3.2. Obtaining the Router Configuration Template

The HAProxy template file is fairly large and complex. For some changes, it may be easier to modify the existing template rather than writing a complete replacement. You can obtain a haproxy-config.template file from a running router by running this on master, referencing the router pod:

# oc get po
NAME             READY     STATUS    RESTARTS   AGE
router-2-40fc3   1/1       Running   0          11d
# oc rsh router-2-40fc3 cat haproxy-config.template > haproxy-config.template
# oc rsh router-2-40fc3 cat haproxy.config > haproxy.config

Alternatively, you can log onto the node that is running the router:

# docker run --rm --interactive=true --tty --entrypoint=cat \
    registry.access.redhat.com/openshift3/ose-haproxy-router:v3.5 haproxy-config.template

The image name is from docker images.

Save this content to a file for use as the basis of your customized template. The saved haproxy.config shows what is actually running.

4.3.3. Modifying the Router Configuration Template

4.3.3.1. Background

The template is based on the golang template. It can reference any of the environment variables in the router’s deployment configuration, any configuration information that is described below, and router provided helper functions.

The structure of the template file mirrors the resulting HAProxy configuration file. As the template is processed, anything not surrounded by {{ something }} is directly copied to the configuration file. Passages that are surrounded by {{ something }} are evaluated. The resulting text, if any, is copied to the configuration file.

4.3.3.2. Go Template Actions

The define action names the file that will contain the processed template.

{{define "/var/lib/haproxy/conf/haproxy.config"}}pipeline{{end}}

| Function | Meaning |
|---|---|
| | Returns the list of valid endpoints. |
| env | Tries to get the named environment variable from the pod. Returns the second argument if the variable cannot be read or is empty. |
| matchPattern | The first argument is a string that contains the regular expression, the second argument is the variable to test. Returns a Boolean value indicating whether the regular expression provided as the first argument matches the string provided as the second argument. |
| | Determines if a given variable is an integer. |
| matchValues | Compares a given string to a list of allowed strings. |
| | Generates a regular expression matching the subdomain for hosts (and paths) with a wildcard policy. |
| | Generates a regular expression matching the route hosts (and paths). The first argument is the host name, the second is the path, and the third is a wildcard Boolean. |
| | Generates host name to use for serving/matching certificates. First argument is the host name and the second is the wildcard Boolean. |

These functions are provided by the HAProxy template router plug-in.

4.3.3.3. Router Provided Information

This section reviews the OpenShift Container Platform information that the router makes available to the template. The router configuration parameters are the set of data that the HAProxy router plug-in is given. The fields are accessed by (dot) .Fieldname.

The tables below the Router Configuration Parameters expand on the definitions of the various fields. In particular, .State has the set of admitted routes.

| Field | Type | Description |
|---|---|---|
| | string | The directory that files will be written to, defaults to /var/lib/containers/router |
| State | | The routes. |
| | | The service lookup. |
| | string | Full path name to the default certificate in pem format. |
| PeerEndpoints | | Peers. |
| | string | User name to expose stats with (if the template supports it). |
| | string | Password to expose stats with (if the template supports it). |
| | int | Port to expose stats with (if the template supports it). |
| | bool | Whether the router should bind the default ports. |

| Field | Type | Description |
|---|---|---|
| | string | The user-specified name of the route. |
| | string | The namespace of the route. |
| | string | The host name. |
| | string | Optional path. |
| | | The termination policy for this back-end; drives the mapping files and router configuration. |
| | | Certificates used for securing this back-end. Keyed by the certificate ID. |
| | | Indicates the status of configuration that needs to be persisted. |
| | string | Indicates the port the user wants to expose. If empty, a port will be selected for the service. |
| | | Indicates desired behavior for insecure connections to an edge-terminated route. |
| | string | Hash of the route + namespace name used to obscure the cookie ID. |
| | bool | Indicates this service unit needing wildcard support. |
| Annotations | | Annotations attached to this route. |
| | | Collection of services that support this route, keyed by service name and valued on the weight attached to it with respect to other entries in the map. |
| | int | Count of the |

The ServiceAliasConfig is a route for a service. Uniquely identified by host + path. The default template iterates over routes using {{range $cfgIdx, $cfg := .State }}. Within such a {{range}} block, the template can refer to any field of the current ServiceAliasConfig using $cfg.Field.

| Field | Type | Description |
|---|---|---|
| | string | Name corresponds to a service name + namespace. Uniquely identifies the ServiceUnit. |
| | | Endpoints that back the service. This translates into a final back-end implementation for routers. |

ServiceUnit is an encapsulation of a service, the endpoints that back that service, and the routes that point to the service. This is the data that drives the creation of the router configuration files

| Field | Type |
|---|---|
| | string |
| | string |
| | string |
| | string |
| | string |
| | string |
| | bool |

Endpoint is an internal representation of a Kubernetes endpoint.

| Field | Type | Description |
|---|---|---|
| | string | Represents a public/private key pair. It is identified by an ID, which will become the file name. A CA certificate will not have a PrivateKey set. |
| | string | Indicates that the necessary files for this configuration have been persisted to disk. Valid values: "saved", "". |

| Field | Type | Description |
|---|---|---|
| ID | string | |
| Contents | string | The certificate. |
| PrivateKey | string | The private key. |

| Field | Type | Description |
|---|---|---|
| TLSTerminationType | string | Dictates where the secure communication will stop. |
| InsecureEdgeTerminationPolicyType | string | Indicates the desired behavior for insecure connections to a route. While each router may make its own decisions on which ports to expose, this is normally port 80. |

TLSTerminationType and InsecureEdgeTerminationPolicyType dictate where the secure communication will stop.

| Constant | Value | Meaning |
|---|---|---|
| | | Terminate encryption at the edge router. |
| | | Terminate encryption at the destination, the destination is responsible for decrypting traffic. |
| | | Terminate encryption at the edge router and re-encrypt it with a new certificate supplied by the destination. |

| Type | Meaning |
|---|---|
| | Traffic is sent to the server on the insecure port (default). |
| | No traffic is allowed on the insecure port. |
| | Clients are redirected to the secure port. |

None ("") is the same as Disable.

4.3.3.4. Annotations

Each route can have annotations attached. Each annotation is just a name and a value.

The name can be anything that does not conflict with existing Annotations. The value is any string. The string can have multiple tokens separated by a space. For example, aa bb cc. The template uses {{index}} to extract the value of an annotation. For example:

{{$balanceAlgo := index $cfg.Annotations "haproxy.router.openshift.io/balance"}}

This is an example of how this could be used for mutual client authorization.

Then, you can handle the white-listed CNs with this command.

$ oc annotate route <route-name> --overwrite whiteListCertCommonName="CN1 CN2 CN3"

See Route-specific Annotations for more information.

4.3.3.5. Environment Variables

The template can use any environment variables that exist in the router pod. The environment variables can be set in the deployment configuration. New environment variables can be added.

They are referenced by the env function:

{{env "ROUTER_MAX_CONNECTIONS" "20000"}}

The first string is the variable, and the second string is the default when the variable is missing or nil. When ROUTER_MAX_CONNECTIONS is not set or is nil, 20000 is used. Environment variables are a map where the key is the environment variable name and the content is the value of the variable.

See Route-specific Environment variables for more information.

4.3.3.6. Example Usage

Here is a simple template based on the HAProxy template file.

Start with a comment:

{{/*
    Here is a small example of how to work with templates
    taken from the HAProxy template file.
*/}}

The template can create any number of output files. Use a define construct to create an output file. The file name is specified as an argument to define, and everything inside the define block up to the matching end is written as the contents of that file.

{{ define "/var/lib/haproxy/conf/haproxy.config" }}
global
{{ end }}

The above will copy global to the /var/lib/haproxy/conf/haproxy.config file, and then close the file.

Set up logging based on environment variables.

{{ with (env "ROUTER_SYSLOG_ADDRESS" "") }}
  log {{.}} {{env "ROUTER_LOG_FACILITY" "local1"}} {{env "ROUTER_LOG_LEVEL" "warning"}}
{{ end }}

The env function extracts the value for the environment variable. If the environment variable is not defined or nil, the second argument is returned.

The with construct sets the value of "." (dot) within the with block to whatever value is provided as an argument to with. The with action tests dot for an empty value (nil or an empty string). If it is not empty, the clause is processed up to the end. In the above, assume ROUTER_SYSLOG_ADDRESS contains /var/log/msg, ROUTER_LOG_FACILITY is not defined, and ROUTER_LOG_LEVEL contains info. The following will be copied to the output file:

log /var/log/msg local1 info
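To reason about which log line a given set of environment variables produces, the same logic can be mimicked in a shell function. This is only an analog for experimentation, with a hypothetical function name; it is not how the router evaluates the template.

```shell
# Sketch: shell analog of the "with" + "env" template fragment.
render_log_line() {
  # Like {{ with (env "ROUTER_SYSLOG_ADDRESS" "") }}: emit nothing when empty.
  if [ -n "${ROUTER_SYSLOG_ADDRESS:-}" ]; then
    # ${VAR:-default} mirrors env: the default is used when the
    # variable is unset or empty.
    printf 'log %s %s %s\n' "$ROUTER_SYSLOG_ADDRESS" \
      "${ROUTER_LOG_FACILITY:-local1}" "${ROUTER_LOG_LEVEL:-warning}"
  fi
}

# Example:
#   ROUTER_SYSLOG_ADDRESS=/var/log/msg
#   ROUTER_LOG_LEVEL=info
#   render_log_line
```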

Each admitted route ends up generating lines in the configuration file. Use range to go through the admitted routes:

{{ range $cfgIdx, $cfg := .State }}
backend be_http_{{$cfgIdx}}
{{end}}

.State is a map of ServiceAliasConfig, where the key is the route name. range steps through the map and, for each pass, it sets $cfgIdx with the key, and sets $cfg to point to the ServiceAliasConfig that describes the route. If there are two routes named myroute and hisroute, the above will copy the following to the output file:

backend be_http_myroute
backend be_http_hisroute

Route Annotations, $cfg.Annotations, is also a map with the annotation name as the key and the content string as the value. The route can have as many annotations as desired and the use is defined by the template author. The user codes the annotation into the route and the template author customizes the HAProxy template to handle the annotation.

The common usage is to index the annotation to get the value.

{{$balanceAlgo := index $cfg.Annotations "haproxy.router.openshift.io/balance"}}

The index extracts the value for the given annotation, if any. Therefore, $balanceAlgo will contain the string associated with the annotation or nil. As above, you can test for a non-nil string and act on it with the with construct.

{{ with $balanceAlgo }}
  balance {{ $balanceAlgo }}
{{ end }}

Here, when $balanceAlgo is not nil, balance followed by the value of $balanceAlgo is copied to the output file.

In a second example, you want to set a server timeout based on a timeout value set in an annotation.

{{$value := index $cfg.Annotations "haproxy.router.openshift.io/timeout"}}

The $value can now be evaluated to make sure it contains a properly constructed string. The matchPattern function accepts a regular expression and returns true if the argument satisfies the expression.

matchPattern "[1-9][0-9]*(us|ms|s|m|h|d)?" $value

This would accept 5000ms but not 7y. The results can be used in a test.

{{if (matchPattern "[1-9][0-9]*(us|ms|s|m|h|d)?" $value) }}
  timeout server {{$value}}
{{ end }}
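Before putting a timeout pattern into the template, it can be tried against sample values from a shell. The sketch below uses grep -E with the same expression; the function name is hypothetical, and the explicit ^ and $ anchors are an assumption that the whole value must match, which is what rejecting 7y requires.

```shell
# Sketch: check a timeout value against the pattern used above.
# Usage: valid_timeout <value>
valid_timeout() {
  # Anchored so that the entire value has to match the pattern.
  printf '%s\n' "$1" | grep -Eq '^[1-9][0-9]*(us|ms|s|m|h|d)?$'
}

# Example:
#   valid_timeout 5000ms && echo accepted
#   valid_timeout 7y     || echo rejected
```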

It can also be used to match tokens:

matchPattern "roundrobin|leastconn|source" $balanceAlgo

Alternatively matchValues can be used to match tokens:

matchValues $balanceAlgo "roundrobin" "leastconn" "source"

4.3.4. Using a ConfigMap to Replace the Router Configuration Template

You can use a ConfigMap to customize the router instance without rebuilding the router image. You can modify the haproxy-config.template, reload-haproxy, and other scripts, and you can create and modify router environment variables.

- Copy the haproxy-config.template that you want to modify as described above. Modify it as desired.

- Create a ConfigMap:

$ oc create configmap customrouter --from-file=haproxy-config.template

The customrouter ConfigMap now contains a copy of the modified haproxy-config.template file.

- Modify the router deployment configuration to mount the ConfigMap as a file and point the TEMPLATE_FILE environment variable to it. This can be done via oc set env and oc volume commands, or alternatively by editing the router deployment configuration.

- Using oc commands

- Editing the Router Deployment Configuration

Use oc edit dc router to edit the router deployment configuration with a text editor. Save the changes and exit the editor. This restarts the router.
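For the oc commands variant of the last step, a minimal sketch might look like the following. The volume name config-volume and the mount path /var/lib/haproxy/conf/custom are assumptions chosen for illustration, and the sketch assumes an oc client whose oc volume command accepts the --configmap-name option.

```shell
#!/usr/bin/env bash
# Sketch: mount the customrouter ConfigMap into the router pods and point
# TEMPLATE_FILE at the mounted file.
# Assumptions (not taken from the text above): the volume name
# "config-volume" and the mount path /var/lib/haproxy/conf/custom.
use_custom_template() {
  local mount_path=/var/lib/haproxy/conf/custom

  # Add (or overwrite) a ConfigMap-backed volume on the router deployment.
  oc volume dc/router --add --overwrite \
    --name=config-volume \
    --type=configmap \
    --mount-path="$mount_path" \
    --configmap-name=customrouter || return 1

  # Tell the router to load the template from the mounted directory.
  oc set env dc/router \
    TEMPLATE_FILE="$mount_path/haproxy-config.template"
}
```

Calling use_custom_template while logged in to the project that holds the router changes the deployment configuration, which triggers a new router deployment.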

4.3.5. Using Stick Tables

The following example customization can be used in a highly-available routing setup to use stick-tables that synchronize between peers.

Adding a Peer Section

To synchronize stick-tables among peers, you must define a peers section in your HAProxy configuration. This section determines how HAProxy identifies and connects to peers. The plug-in provides data to the template under the .PeerEndpoints variable so that you can easily identify members of the router service. You may add a peer section to the haproxy-config.template file inside the router image by adding:

Changing the Reload Script

When using stick-tables, you have the option of telling HAProxy what it should consider the name of the local host in the peer section. When creating endpoints, the plug-in attempts to set the TargetName to the value of the endpoint’s TargetRef.Name. If TargetRef is not set, it will set the TargetName to the IP address. The TargetRef.Name corresponds with the Kubernetes host name, therefore you can add the -L option to the reload-haproxy script to identify the local host in the peer section.

The name passed with the -L option must match an endpoint target name that is used in the peer section.
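The -L option described above could be added to a reload script along the following lines. This is a sketch under stated assumptions, not the shipped reload-haproxy script: the function name, the argument order, and the use of the pod host name as the default peer name are all illustrative.

```shell
#!/usr/bin/env bash
# Sketch of a reload step that passes the local peer name to HAProxy.
# Assumptions: the function and variable names are illustrative, and the
# host name is used as the peer name when none is given.
reload_haproxy_with_peer() {
  local config_file=$1 pid_file=$2
  local peer_name=${3:-$HOSTNAME}   # must match a peer name in the peers section
  local old_pids=""

  if [ -f "$pid_file" ]; then
    old_pids=$(cat "$pid_file")
  fi

  if [ -n "$old_pids" ]; then
    # -sf asks the old processes to finish serving and then exit.
    # $old_pids is left unquoted on purpose: it may hold several PIDs.
    haproxy -f "$config_file" -p "$pid_file" -L "$peer_name" -sf $old_pids
  else
    haproxy -f "$config_file" -p "$pid_file" -L "$peer_name"
  fi
}
```

The only change relative to a plain reload is the -L argument, so the same idea applies to whatever reload command your script already runs.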

Modifying Back Ends

Finally, to use the stick-tables within back ends, you can modify the HAProxy configuration to use the stick-tables and peer set. The following is an example of changing the existing back end for TCP connections to use stick-tables:

After this modification, you can rebuild your router.

4.3.6. Rebuilding Your Router

In order to rebuild the router, you need copies of several files that are present on a running router. Make a work directory and copy the files from the router:

You can edit or replace any of these files. However, conf/haproxy-config.template and reload-haproxy are the most likely to be modified.

After updating the files:

# docker build -t openshift/origin-haproxy-router-myversion .

# docker tag openshift/origin-haproxy-router-myversion 172.30.243.98:5000/openshift/haproxy-router-myversion

# docker push 172.30.243.98:5000/openshift/haproxy-router-myversion

To use the new router, edit the router deployment configuration either by changing the image: string or by adding the --images=<repo>/<image>:<tag> flag to the oc adm router command.

When debugging the changes, it is helpful to set imagePullPolicy: Always in the deployment configuration to force an image pull on each pod creation. When debugging is complete, you can change it back to imagePullPolicy: IfNotPresent to avoid the pull on each pod start.

4.4. Configuring the HAProxy Router to Use the PROXY Protocol

4.4.1. Overview

By default, the HAProxy router expects incoming connections to unsecure, edge, and re-encrypt routes to use HTTP. However, you can configure the router to expect incoming requests by using the PROXY protocol instead. This topic describes how to configure the HAProxy router and an external load balancer to use the PROXY protocol.

4.4.2. Why Use the PROXY Protocol?

When an intermediary service such as a proxy server or load balancer forwards an HTTP request, it appends the source address of the connection to the request’s "Forwarded" header in order to provide this information to subsequent intermediaries and to the back-end service to which the request is ultimately forwarded. However, if the connection is encrypted, intermediaries cannot modify the "Forwarded" header. In this case, the HTTP header will not accurately communicate the original source address when the request is forwarded.

To solve this problem, some load balancers encapsulate HTTP requests using the PROXY protocol as an alternative to simply forwarding HTTP. Encapsulation enables the load balancer to add information to the request without modifying the forwarded request itself. In particular, this means that the load balancer can communicate the source address even when forwarding an encrypted connection.

The HAProxy router can be configured to accept the PROXY protocol and decapsulate the HTTP request. Because the router terminates encryption for edge and re-encrypt routes, the router can then update the "Forwarded" HTTP header (and related HTTP headers) in the request, appending any source address that is communicated using the PROXY protocol.

The PROXY protocol and HTTP are incompatible and cannot be mixed. If you use a load balancer in front of the router, both must use either the PROXY protocol or HTTP. Configuring one to use one protocol and the other to use the other protocol will cause routing to fail.
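To make the encapsulation concrete, the following sketch prints the kind of header a load balancer sends ahead of the forwarded bytes. It assumes version 1 of the PROXY protocol (the human-readable form) over IPv4; proxy_v1_header is a hypothetical helper, and the addresses and ports are illustrative values only.

```shell
#!/usr/bin/env bash
# Sketch: print a version 1 PROXY protocol header for an IPv4 connection.
# The header is a single text line terminated by CRLF; the forwarded
# (possibly encrypted) stream follows it unchanged.
# Arguments: source IP, destination IP, source port, destination port.
proxy_v1_header() {
  printf 'PROXY TCP4 %s %s %s %s\r\n' "$1" "$2" "$3" "$4"
}

# Example with documentation-range addresses (illustrative values only).
proxy_v1_header 192.0.2.10 203.0.113.5 51234 80
```

Because the source address travels in this header and not in an HTTP header, the receiver must expect it on every connection, which is why both sides have to agree on the protocol.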

4.4.3. Using the PROXY Protocol

By default, the HAProxy router does not use the PROXY protocol. The router can be configured using the ROUTER_USE_PROXY_PROTOCOL environment variable to expect the PROXY protocol for incoming connections:

Enable the PROXY Protocol

$ oc env dc/router ROUTER_USE_PROXY_PROTOCOL=true

Set the variable to any value other than true or TRUE to disable the PROXY protocol:

Disable the PROXY Protocol

$ oc env dc/router ROUTER_USE_PROXY_PROTOCOL=false

If you enable the PROXY protocol in the router, you must configure your load balancer in front of the router to use the PROXY protocol as well. Following is an example of configuring Amazon’s Elastic Load Balancer (ELB) service to use the PROXY protocol. This example assumes that ELB is forwarding ports 80 (HTTP), 443 (HTTPS), and 5000 (for the image registry) to the router running on one or more EC2 instances.

Configure Amazon ELB to Use the PROXY Protocol

1. To simplify subsequent steps, first set some shell variables:

$ lb='infra-lb'
$ instances=( 'i-079b4096c654f563c' )
$ secgroups=( 'sg-e1760186' )
$ subnets=( 'subnet-cf57c596' )

2. Next, create the ELB with the appropriate listeners, security groups, and subnets.

Note: You must configure all listeners to use the TCP protocol, not the HTTP protocol.

3. Register your router instance or instances with the ELB:

4. Configure the ELB’s health check:

5. Finally, create a load-balancer policy with the ProxyProtocol attribute enabled, and configure it on the ELB’s TCP ports 80 and 443:
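The policy step can be sketched with the AWS CLI as follows. This is an illustration under assumptions, not a verbatim procedure: the function name and the policy name "<lb>-ProxyProtocol-policy" are hypothetical, and the sketch targets a Classic ELB whose instance ports are 80 and 443.

```shell
#!/usr/bin/env bash
# Sketch: create a policy with the ProxyProtocol attribute enabled and
# attach it to the instance ports 80 and 443 of a Classic ELB.
# Assumption: the policy name "<lb>-ProxyProtocol-policy" is illustrative.
enable_proxy_protocol() {
  local lb=$1
  local policy="${lb}-ProxyProtocol-policy"
  local port

  aws elb create-load-balancer-policy \
    --load-balancer-name "$lb" \
    --policy-name "$policy" \
    --policy-type-name ProxyProtocolPolicyType \
    --policy-attributes AttributeName=ProxyProtocol,AttributeValue=true || return 1

  # The policy applies per back-end (instance) port, so attach it to each one.
  for port in 80 443; do
    aws elb set-load-balancer-policies-for-backend-server \
      --load-balancer-name "$lb" \
      --instance-port "$port" \
      --policy-names "$policy" || return 1
  done
}
```

With the shell variables set earlier, the function would be called as enable_proxy_protocol "$lb".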

Verify the Configuration

You can examine the load balancer as follows to verify that the configuration is correct:

Alternatively, if you already have an ELB configured, but it is not configured to use the PROXY protocol, you will need to change the existing listener for TCP port 80 to use the TCP protocol instead of HTTP (TCP port 443 should already be using the TCP protocol):

$ aws elb delete-load-balancer-listeners --load-balancer-name "$lb" \
    --load-balancer-ports 80
$ aws elb create-load-balancer-listeners --load-balancer-name "$lb" \
    --listeners 'Protocol=TCP,LoadBalancerPort=80,InstanceProtocol=TCP,InstancePort=80'

Verify the Protocol Updates

Verify that the protocol has been updated as follows:

All listeners, including the listener for TCP port 80, should be using the TCP protocol.

Then, create a load-balancer policy and add it to the ELB as described in Step 5 above.

4.5. Using the F5 Router Plug-in

4.5.1. Overview

The F5 router plug-in is available starting in OpenShift Container Platform 3.0.2.

The F5 router plug-in is provided as a container image and run as a pod, just like the default HAProxy router.

Support relationships between F5 and Red Hat provide a full scope of support for F5 integration. F5 provides support for the F5 BIG-IP® product. F5 and Red Hat jointly support the integration with Red Hat OpenShift. Red Hat helps with bug fixes and feature enhancements, but all of them are communicated to F5 Networks, where they are managed as part of F5's development cycles.

4.5.2. Prerequisites

When deploying the F5 router plug-in, ensure you meet the following requirements:

An F5 host IP with:

- Credentials for API access

- SSH access via a private key

- An F5 user with Advanced Shell access

A virtual server for HTTP routes:

- HTTP profile must be http.

A virtual server for HTTPS routes:

- HTTP profile must be http

- SSL Profile (client) must be clientssl

- SSL Profile (server) must be serverssl

For edge integration (not recommended):

- A working ramp node

- A working tunnel to the ramp node

For native integration:

- A host-internal IP capable of communicating with all nodes on port 4789/UDP

- The sdn-services add-on license installed on the F5 host.

OpenShift Container Platform supports only the following F5 BIG-IP® versions:

- 11.x

- 12.x

The following features are not supported with F5 BIG-IP®:

- Wildcard routes together with re-encrypt routes - you must supply a certificate and a key in the route. If you provide a certificate, a key, and a certificate authority (CA), the CA is never used.

- A pool is created for all services, even for the ones with no associated route.

- Idling applications

- Unencrypted HTTP traffic in redirect mode, with edge TLS termination. (insecureEdgeTerminationPolicy: Redirect)

- Sharding, that is, having multiple vservers on the F5.

- SSL cipher (ROUTER_CIPHERS=modern/old)

- Customizing the endpoint health checks for time-intervals and the type of checks.

- Serving F5 metrics by using a metrics server.

- Specifying a different target port (PreferPort/TargetPort) rather than the ones specified in the service.

- Customizing the source IP whitelists, that is, allowing traffic for a route only from specific IP addresses.

- Customizing timeout values, such as max connect time, or tcp FIN timeout.

- HA mode for the F5 BIG-IP®.

4.5.2.1. Configuring the Virtual Servers

As a prerequisite to working with the openshift-F5 integrated router, two virtual servers (one virtual server each for HTTP and HTTPS profiles, respectively) need to be set up in the F5 BIG-IP® appliance.

To set up a virtual server in the F5 BIG-IP® appliance, follow the instructions from F5.

While creating the virtual server, ensure the following settings are in place:

- For the HTTP server, set the ServicePort to 'http'/80.

-