Builds

Performing and interacting with builds in OpenShift Container Platform

Chapter 1. Understanding image builds

1.1. Builds

A build is the process of transforming input parameters into a resulting object. Most often, the process is used to transform input parameters or source code into a runnable image. A BuildConfig object is the definition of the entire build process.

OpenShift Container Platform uses Kubernetes by creating containers from build images and pushing them to a container image registry.

Build objects share common characteristics including inputs for a build, the requirement to complete a build process, logging the build process, publishing resources from successful builds, and publishing the final status of the build. Builds take advantage of resource restrictions, specifying limitations on resources such as CPU usage, memory usage, and build or pod execution time.

The OpenShift Container Platform build system provides extensible support for build strategies that are based on selectable types specified in the build API. There are three primary build strategies available:

- Docker build

- Source-to-Image (S2I) build

- Custom build

By default, Docker builds and S2I builds are supported.

The resulting object of a build depends on the builder used to create it. For Docker and S2I builds, the resulting objects are runnable images. For Custom builds, the resulting objects are whatever the builder image author has specified.

Additionally, the Pipeline build strategy can be used to implement sophisticated workflows:

- Continuous integration

- Continuous deployment

1.1.1. Docker build

The Docker build strategy invokes the docker build command, and it expects a repository with a Dockerfile and all required artifacts in it to produce a runnable image.

1.1.2. Source-to-Image (S2I) build

Source-to-Image (S2I) is a tool for building reproducible, Docker-formatted container images. It produces ready-to-run images by injecting application source into a container image and assembling a new image. The new image incorporates the base image (the builder) and built source and is ready to use with the buildah run command. S2I supports incremental builds, which re-use previously downloaded dependencies, previously built artifacts, etc.

The advantages of S2I include the following:

| Image flexibility | S2I scripts can be written to inject application code into almost any existing Docker-formatted container image, taking advantage of the existing ecosystem. Note that, currently, S2I relies on |

| Speed | With S2I, the assemble process can perform a large number of complex operations without creating a new layer at each step, resulting in a fast process. In addition, S2I scripts can be written to re-use artifacts stored in a previous version of the application image, rather than having to download or build them each time the build is run. |

| Patchability | S2I allows you to rebuild the application consistently if an underlying image needs a patch due to a security issue. |

| Operational efficiency | By restricting build operations instead of allowing arbitrary actions, as a Dockerfile would allow, the PaaS operator can avoid accidental or intentional abuses of the build system. |

| Operational security | Building an arbitrary Dockerfile exposes the host system to root privilege escalation. This can be exploited by a malicious user because the entire Docker build process is run as a user with Docker privileges. S2I restricts the operations performed as a root user and can run the scripts as a non-root user. |

| User efficiency | S2I prevents developers from performing arbitrary |

| Ecosystem | S2I encourages a shared ecosystem of images where you can leverage best practices for your applications. |

| Reproducibility | Produced images can include all inputs including specific versions of build tools and dependencies. This ensures that the image can be reproduced precisely. |

1.1.3. Custom build

The Custom build strategy allows developers to define a specific builder image responsible for the entire build process. Using your own builder image allows you to customize your build process.

A Custom builder image is a plain Docker-formatted container image embedded with build process logic, for example for building RPMs or base images.

Custom builds run with a very high level of privilege and are not available to users by default. Only users who can be trusted with cluster administration permissions should be granted access to run custom builds.

1.1.4. Pipeline build

The Pipeline build strategy is deprecated in OpenShift Container Platform 4. Equivalent and improved functionality is present in the OpenShift Pipelines based on Tekton.

Jenkins images on OpenShift are fully supported, and users should follow the Jenkins user documentation for defining their Jenkinsfile in a job or storing it in a Source Control Management system.

The Pipeline build strategy allows developers to define a Jenkins pipeline for execution by the Jenkins pipeline plug-in. The build can be started, monitored, and managed by OpenShift Container Platform in the same way as any other build type.

Pipeline workflows are defined in a Jenkinsfile, either embedded directly in the build configuration, or supplied in a Git repository and referenced by the build configuration.

Chapter 2. Understanding build configurations

The following sections define the concepts of a build and a build configuration (BuildConfig), and outline the primary build strategies available.

2.1. BuildConfigs

A build configuration describes a single build definition and a set of triggers for when a new build is created. Build configurations are defined by a BuildConfig, which is a REST object that can be used in a POST to the API server to create a new instance.

A build configuration, or BuildConfig, is characterized by a build strategy and one or more sources. The strategy determines the process, while the sources provide its input.

Depending on how you choose to create your application using OpenShift Container Platform, a BuildConfig is typically generated automatically for you if you use the web console or CLI, and it can be edited at any time. Understanding the parts that make up a BuildConfig and their available options can help if you choose to manually change your configuration later.

The following example BuildConfig results in a new build every time a container image tag or the source code changes:

BuildConfig object definition

- 1: This specification creates a new BuildConfig named ruby-sample-build.

- 2: The runPolicy field controls whether builds created from this build configuration can be run simultaneously. The default value is Serial, which means new builds run sequentially, not simultaneously.

- 3: You can specify a list of triggers, which cause a new build to be created.

- 4: The source section defines the source of the build. The source type determines the primary source of input, and can be either Git, to point to a code repository location, Dockerfile, to build from an inline Dockerfile, or Binary, to accept binary payloads. It is possible to have multiple sources at once. Refer to the documentation for each source type for details.

- 5: The strategy section describes the build strategy used to execute the build. You can specify a Source, Docker, or Custom strategy here. This example uses the ruby-20-centos7 container image that Source-To-Image uses for the application build.

- 6: After the container image is successfully built, it is pushed into the repository described in the output section.

- 7: The postCommit section defines an optional build hook.
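The object definition that these numbered notes describe is not present in this copy. The following is a hedged reconstruction, not the original listing: it writes a manifest to a local file and marks each note number in a comment. Only the names ruby-sample-build, Serial, and ruby-20-centos7 come from the text above; the repository URL, webhook secret, output tag, and hook script are assumed placeholders.

```shell
# Write a sample BuildConfig manifest to a local file.
cat > ruby-sample-build.yaml <<'EOF'
kind: BuildConfig
apiVersion: build.openshift.io/v1
metadata:
  name: ruby-sample-build              # (1)
spec:
  runPolicy: Serial                    # (2)
  triggers:                            # (3)
    - type: GitHub
      github:
        secret: secret101              # assumed placeholder value
    - type: ImageChange
  source:                              # (4)
    git:
      uri: https://github.com/openshift/ruby-hello-world   # assumed repository
  strategy:                            # (5)
    sourceStrategy:
      from:
        kind: ImageStreamTag
        name: ruby-20-centos7:latest
  output:                              # (6)
    to:
      kind: ImageStreamTag
      name: origin-ruby-sample:latest  # assumed output tag
  postCommit:                          # (7)
    script: bundle exec rake test      # assumed hook command
EOF
```

On a cluster, a file like this could then be submitted with oc create -f ruby-sample-build.yaml.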

Chapter 3. Creating build inputs

Use the following sections for an overview of build inputs, instructions on how to use inputs to provide source content for builds to operate on, and how to use build environments and create secrets.

3.1. Build inputs

A build input provides source content for builds to operate on. You can use the following build inputs to provide sources in OpenShift Container Platform, listed in order of precedence:

- Inline Dockerfile definitions

- Content extracted from existing images

- Git repositories

- Binary (Local) inputs

- Input secrets

- External artifacts

You can combine multiple inputs in a single build. However, as the inline Dockerfile takes precedence, it can overwrite any other file named Dockerfile provided by another input. Binary (local) input and Git repositories are mutually exclusive inputs.

You can use input secrets when you do not want certain resources or credentials used during a build to be available in the final application image produced by the build, or want to consume a value that is defined in a Secret resource. External artifacts can be used to pull in additional files that are not available as one of the other build input types.

When you run a build:

- A working directory is constructed and all input content is placed in the working directory. For example, the input Git repository is cloned into the working directory, and files specified from input images are copied into the working directory using the target path.

- The build process changes directories into the contextDir, if one is defined.

- The inline Dockerfile, if any, is written to the current directory.

- The content from the current directory is provided to the build process for reference by the Dockerfile, custom builder logic, or assemble script. This means any input content that resides outside the contextDir will be ignored by the build.

The following example of a source definition includes multiple input types and an explanation of how they are combined. For more details on how each input type is defined, see the specific sections for each input type.

- 1: The repository to be cloned into the working directory for the build.

- 2: /usr/lib/somefile.jar from myinputimage will be stored in <workingdir>/app/dir/injected/dir.

- 3: The working directory for the build will become <original_workingdir>/app/dir.

- 4: A Dockerfile with this content will be created in <original_workingdir>/app/dir, overwriting any existing file with that name.
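The source definition these notes refer to is missing from this copy. As a sketch only, a definition consistent with the four notes might look like the following. The paths app/dir, app/dir/injected/dir, and /usr/lib/somefile.jar and the image name myinputimage come from the notes; the repository URL, namespace, and Dockerfile content are assumptions.

```shell
# Write a source fragment that combines Git, image, and inline Dockerfile inputs.
cat > multi-input-source.yaml <<'EOF'
source:
  git:
    uri: https://github.com/openshift/ruby-hello-world.git   # (1) assumed repository
  images:
    - from:
        kind: ImageStreamTag
        name: myinputimage:latest
        namespace: mynamespace                   # assumed namespace
      paths:
        - destinationDir: app/dir/injected/dir   # (2)
          sourcePath: /usr/lib/somefile.jar
  contextDir: app/dir                            # (3)
  dockerfile: "FROM centos:7\nRUN yum install -y httpd"   # (4) assumed content
EOF
```

Because destinationDir is relative to the working directory rather than to contextDir, the injected file stays visible to the build only when its destination sits under app/dir, as it does here.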

3.2. Dockerfile source

When you supply a dockerfile value, the content of this field is written to disk as a file named Dockerfile. This is done after other input sources are processed, so if the input source repository contains a Dockerfile in the root directory, it will be overwritten with this content.

The source definition is part of the spec section in the BuildConfig:

source:
  dockerfile: "FROM centos:7\nRUN yum install -y httpd"

The dockerfile field contains an inline Dockerfile that will be built.

Additional resources

- The typical use for this field is to provide a Dockerfile to a Docker strategy build.

3.3. Image source

You can add additional files to the build process with images. Input images are referenced in the same way the From and To image targets are defined. This means both container images and imagestreamtags can be referenced. In conjunction with the image, you must provide one or more path pairs to indicate the path of the files or directories to copy from the image and the destination to place them in the build context.

The source path can be any absolute path within the image specified. The destination must be a relative directory path. At build time, the image will be loaded and the indicated files and directories will be copied into the context directory of the build process. This is the same directory into which the source repository content (if any) is cloned. If the source path ends in /. then the content of the directory will be copied, but the directory itself will not be created at the destination.

Image inputs are specified in the source definition of the BuildConfig:

- 1: An array of one or more input images and files.

- 2: A reference to the image containing the files to be copied.

- 3: An array of source/destination paths.

- 4: The directory relative to the build root where the build process can access the file.

- 5: The location of the file to be copied out of the referenced image.

- 6: An optional secret provided if credentials are needed to access the input image.
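The listing that these six notes annotate is missing here. The following sketch shows one source definition that matches them; it is an illustration under assumptions, not the original example. All image names, namespaces, paths, the repository URL, and the secret name mysecret are placeholders.

```shell
# Write a source fragment with two input images, one of which needs a pull secret.
cat > image-source.yaml <<'EOF'
source:
  git:
    uri: https://github.com/openshift/ruby-hello-world.git   # assumed repository
  images:                                   # (1)
    - from:                                 # (2)
        kind: ImageStreamTag
        name: myinputimage:latest
        namespace: mynamespace
      paths:                                # (3)
        - destinationDir: injected/dir      # (4)
          sourcePath: /usr/lib/somefile.jar # (5)
    - from:
        kind: ImageStreamTag
        name: myotherinputimage:latest
        namespace: myothernamespace
      pullSecret:                           # (6)
        name: mysecret
      paths:
        - destinationDir: injected/dir
          sourcePath: /usr/lib/somefile.jar
EOF
```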

Optionally, if an input image requires a pull secret, you can link the pull secret to the service account used by the build. By default, builds use the builder service account. The pull secret is automatically added to the build if the secret contains a credential that matches the repository hosting the input image.

$ oc secrets link builder dockerhub

This feature is not supported for builds using the Custom Strategy.

3.4. Git source

When specified, source code is fetched from the supplied location.

If you supply an inline Dockerfile, it overwrites the Dockerfile (if any) in the contextDir of the Git repository.

The source definition is part of the spec section in the BuildConfig:

- 1: The git field contains the URI to the remote Git repository of the source code. Optionally, specify the ref field to check out a specific Git reference. A valid ref can be a SHA1, a tag, or a branch name.

- 2: The contextDir field allows you to override the default location inside the source code repository where the build looks for the application source code. If your application exists inside a sub-directory, you can override the default location (the root folder) using this field.

- 3: If the optional dockerfile field is provided, it should be a string containing a Dockerfile that overwrites any Dockerfile that may exist in the source repository.
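The Git source definition described by these notes did not survive in this copy. A minimal sketch that fits the three notes is shown below; the repository URL, the ref, the context directory, and the Dockerfile content are all assumed values.

```shell
# Write a Git source fragment with a ref, a context directory, and an inline Dockerfile.
cat > git-source.yaml <<'EOF'
source:
  git:                                   # (1)
    uri: https://github.com/openshift/ruby-hello-world   # assumed repository
    ref: master                          # assumed branch name
  contextDir: app/dir                    # (2)
  dockerfile: "FROM openshift/ruby-22-centos7\nUSER example"   # (3) assumed content
EOF
```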

If the ref field denotes a pull request, the system uses a git fetch operation and then checks out FETCH_HEAD.

When no ref value is provided, OpenShift Container Platform performs a shallow clone (--depth=1). In this case, only the files associated with the most recent commit on the default branch (typically master) are downloaded. This results in repositories downloading faster, but without the full commit history. To perform a full git clone of the default branch of a specified repository, set ref to the name of the default branch (for example master).

Git clone operations that go through a proxy that is performing man in the middle (MITM) TLS hijacking or reencrypting of the proxied connection will not work.

3.4.1. Using a proxy

If your Git repository can only be accessed using a proxy, you can define the proxy to use in the source section of the BuildConfig. You can configure both an HTTP and HTTPS proxy to use. Both fields are optional. Domains for which no proxying should be performed can also be specified in the NoProxy field.

Your source URI must use the HTTP or HTTPS protocol for this to work.
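As an illustration of the proxy settings described above, the following sketch writes a source fragment that sets both proxies and an exclusion list. The field names httpProxy, httpsProxy, and noProxy are the Git source proxy fields of the build API; the proxy hosts, domains, and repository URL are hypothetical.

```shell
# Write a Git source fragment that routes clone traffic through a proxy.
cat > git-proxy-source.yaml <<'EOF'
source:
  git:
    uri: https://github.com/openshift/ruby-hello-world   # assumed repository
    httpProxy: http://proxy.example.com                   # hypothetical proxy
    httpsProxy: https://proxy.example.com                 # hypothetical proxy
    noProxy: somedomain.com, otherdomain.com              # hypothetical domains
EOF
```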

For Pipeline strategy builds, given the current restrictions with the Git plug-in for Jenkins, any Git operations through the Git plug-in will not leverage the HTTP or HTTPS proxy defined in the BuildConfig. The Git plug-in uses only the proxy configured in the Jenkins UI at the Plugin Manager panel. This proxy is then used for all Git interactions within Jenkins, across all jobs.

Additional resources

- You can find instructions on how to configure proxies through the Jenkins UI at JenkinsBehindProxy.

3.4.2. Source Clone Secrets

Builder pods require access to any Git repositories defined as source for a build. Source clone secrets provide the builder pod with access it would not normally have, such as access to private repositories or repositories with self-signed or untrusted SSL certificates.

The following source clone secret configurations are supported.

- .gitconfig File

- Basic Authentication

- SSH Key Authentication

- Trusted Certificate Authorities

You can also use combinations of these configurations to meet your specific needs.

3.4.2.1. Automatically adding a source clone secret to a build configuration

When a BuildConfig is created, OpenShift Container Platform can automatically populate its source clone secret reference. This behavior allows the resulting Builds to automatically use the credentials stored in the referenced Secret to authenticate to a remote Git repository, without requiring further configuration.

To use this functionality, a Secret containing the Git repository credentials must exist in the namespace in which the BuildConfig is later created. This Secret must additionally include one or more annotations prefixed with build.openshift.io/source-secret-match-uri-. The value of each of these annotations is a URI pattern, defined as follows. When a BuildConfig is created without a source clone secret reference and its Git source URI matches a URI pattern in a Secret annotation, OpenShift Container Platform will automatically insert a reference to that Secret in the BuildConfig.

Prerequisites

A URI pattern must consist of:

- a valid scheme (*://, git://, http://, https:// or ssh://).

- a host (* or a valid hostname or IP address optionally preceded by *.).

- a path (/* or / followed by any characters optionally including * characters).

In all of the above, a * character is interpreted as a wildcard.
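To make the wildcard rule concrete, here is a rough shell approximation of the matching, not the platform's actual implementation. The function name uri_matches is hypothetical. It treats * as a shell glob and drops any user name from the Git URI before comparing, on the assumption that this mirrors the rule in this section that patterns carry no user name. Other glob characters such as ? and [ are not escaped, so this is suitable only for simple URIs.

```shell
# uri_matches PATTERN URI
# Returns 0 when URI matches PATTERN, with * acting as a wildcard.
uri_matches() {
  # Remove a "user@" or "user:password@" component that follows the scheme.
  stripped=$(printf '%s\n' "$2" | sed -E 's#^([A-Za-z][A-Za-z0-9+.-]*://)[^/@]*@#\1#')
  case "$stripped" in
    $1) return 0 ;;   # unquoted, so the pattern is applied as a glob
    *)  return 1 ;;
  esac
}

# Example check against a pattern that covers every repository on one host.
if uri_matches 'ssh://bitbucket.atlassian.com:7999/*' \
               'ssh://git@bitbucket.atlassian.com:7999/ATLASSIAN/jira.git'; then
  echo "pattern matches"
else
  echo "pattern does not match"
fi
```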

URI patterns must match Git source URIs which are conformant to RFC3986. Do not include a username (or password) component in a URI pattern.

For example, if you use ssh://git@bitbucket.atlassian.com:7999/ATLASSIAN/jira.git for a git repository URL, the source secret must be specified as ssh://bitbucket.atlassian.com:7999/* (and not ssh://git@bitbucket.atlassian.com:7999/*).

$ oc annotate secret mysecret \
    'build.openshift.io/source-secret-match-uri-1=ssh://bitbucket.atlassian.com:7999/*'

Procedure

If multiple Secrets match the Git URI of a particular BuildConfig, OpenShift Container Platform will select the secret with the longest match. This allows for basic overriding, as in the following example.

The following fragment shows two partial source clone secrets, the first matching any server in the domain mycorp.com accessed by HTTPS, and the second overriding access to servers mydev1.mycorp.com and mydev2.mycorp.com:
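The fragment itself is not present in this copy. The sketch below shows what two such partial secrets could look like. The annotation prefix and the host names come from the text above; the secret names are assumptions, and the credential data is deliberately left out.

```shell
# Write two partial Secret manifests whose annotations overlap.
cat > source-clone-secrets.yaml <<'EOF'
kind: Secret
apiVersion: v1
metadata:
  name: matches-all-corporate-servers-https-only   # assumed name
  annotations:
    build.openshift.io/source-secret-match-uri-1: https://*.mycorp.com/*
# credential data omitted
---
kind: Secret
apiVersion: v1
metadata:
  name: override-for-my-dev-servers-https-only     # assumed name
  annotations:
    build.openshift.io/source-secret-match-uri-1: https://mydev1.mycorp.com/*
    build.openshift.io/source-secret-match-uri-2: https://mydev2.mycorp.com/*
# credential data omitted
EOF
```

A BuildConfig whose Git URI points at mydev1.mycorp.com matches both secrets, and the second one is chosen because its pattern is the longer match.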

- Add a build.openshift.io/source-secret-match-uri- annotation to a pre-existing secret using:

$ oc annotate secret mysecret \
    'build.openshift.io/source-secret-match-uri-1=https://*.mycorp.com/*'

3.4.2.2. Manually adding a source clone secret

Source clone secrets can be added manually to a build configuration by adding a sourceSecret field to the source section inside the BuildConfig and setting it to the name of the secret that you created (basicsecret, in this example).
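The example that this paragraph refers to is missing here. A minimal sketch of a build configuration that carries a sourceSecret reference follows. The secret name basicsecret and the build configuration name sample-build appear in this section; the repository URL, builder image, and output tag are assumed placeholders.

```shell
# Write a BuildConfig manifest that references a source clone secret.
cat > sample-build.yaml <<'EOF'
kind: BuildConfig
apiVersion: build.openshift.io/v1
metadata:
  name: sample-build
spec:
  source:
    git:
      uri: https://github.com/user/app.git   # assumed private repository
    sourceSecret:
      name: basicsecret                      # secret used for the git clone
  strategy:
    sourceStrategy:
      from:
        kind: ImageStreamTag
        name: python-33-centos7:latest       # assumed builder image
  output:
    to:
      kind: ImageStreamTag
      name: sample-image:latest              # assumed output tag
EOF
```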

Procedure

You can also use the oc set build-secret command to set the source clone secret on an existing build configuration.

- To set the source clone secret on an existing build configuration, run:

$ oc set build-secret --source bc/sample-build basicsecret

Additional resources

- Defining Secrets in the BuildConfig provides more information on this topic.

3.4.2.3. Creating a secret from a .gitconfig file

If the cloning of your application is dependent on a .gitconfig file, then you can create a secret that contains it. Add it to the builder service account and then your BuildConfig.

Procedure

- To create a secret from a .gitconfig file:

$ oc create secret generic <secret_name> --from-file=<path/to/.gitconfig>

SSL verification can be turned off if sslVerify=false is set for the http section in your .gitconfig file:

[http]
        sslVerify=false

3.4.2.4. Creating a secret from a .gitconfig file for secured Git

If your Git server is secured with two-way SSL and user name with password, you must add the certificate files to your source build and add references to the certificate files in the .gitconfig file.

Prerequisites

- Git credentials

Procedure

Add the certificate files to your source build and add references to the certificate files in the .gitconfig file.

- Add the client.crt, cacert.crt, and client.key files to the /var/run/secrets/openshift.io/source/ folder in the application source code.

- In the .gitconfig file for the server, add an [http] section that references these certificate files.

- Create the secret.
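The [http] section for the .gitconfig file is not shown in this copy. As a sketch, it could reference the three certificate files through the standard Git options http.sslCert, http.sslKey, and http.sslCAInfo. The local file name gitconfig-secured is hypothetical; the paths assume the files were placed in the folder named in the first step.

```shell
# Write a .gitconfig fragment that points Git at the client certificate, key, and CA.
cat > gitconfig-secured <<'EOF'
[http]
    sslCert = /var/run/secrets/openshift.io/source/client.crt
    sslKey = /var/run/secrets/openshift.io/source/client.key
    sslCAInfo = /var/run/secrets/openshift.io/source/cacert.crt
EOF
```

The secret in the final step is then created from this file together with the three certificate files and the user name and password.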

To avoid having to enter your password again, be sure to specify the S2I image in your builds. However, if you cannot clone the repository, you still must specify your user name and password to promote the build.

Additional resources

- /var/run/secrets/openshift.io/source/ folder in the application source code.

3.4.2.5. Creating a secret from source code basic authentication

Basic authentication requires either a combination of --username and --password, or a token to authenticate against the SCM server.

Prerequisites

- User name and password to access the private repository.

Procedure

- Create the secret first before using the user name and password to access the private repository:

$ oc create secret generic <secret_name> \
    --from-literal=username=<user_name> \
    --from-literal=password=<password> \
    --type=kubernetes.io/basic-auth

- Create a basic authentication secret with a token:

$ oc create secret generic <secret_name> \
    --from-literal=password=<token> \
    --type=kubernetes.io/basic-auth

3.4.2.6. Creating a secret from source code SSH key authentication

SSH key based authentication requires a private SSH key.

The repository keys are usually located in the $HOME/.ssh/ directory, and are named id_dsa.pub, id_ecdsa.pub, id_ed25519.pub, or id_rsa.pub by default.

Procedure

- Generate SSH key credentials:

$ ssh-keygen -t ed25519 -C "your_email@example.com"

Note: Creating a passphrase for the SSH key prevents OpenShift Container Platform from building. When prompted for a passphrase, leave it blank.

Two files are created: the public key and a corresponding private key (one of id_dsa, id_ecdsa, id_ed25519, or id_rsa). With both of these in place, consult your source control management (SCM) system’s manual on how to upload the public key. The private key is used to access your private repository.

- Before using the SSH key to access the private repository, create the secret:

$ oc create secret generic <secret_name> \
    --from-file=ssh-privatekey=<path/to/ssh/private/key> \
    --type=kubernetes.io/ssh-auth

3.4.2.7. Creating a secret from source code trusted certificate authorities

The set of TLS certificate authorities (CA) that is trusted during a git clone operation is built into the OpenShift Container Platform infrastructure images. If your Git server uses a self-signed certificate or one signed by an authority not trusted by the image, you can create a secret that contains the certificate or disable TLS verification.

If you create a secret for the CA certificate, OpenShift Container Platform uses it to access your Git server during the git clone operation. Using this method is significantly more secure than disabling Git’s SSL verification, which accepts any TLS certificate that is presented.

Procedure

Create a secret with a CA certificate file.

- If your CA uses Intermediate Certificate Authorities, combine the certificates for all CAs in a ca.crt file. Run the following command:

$ cat intermediateCA.crt intermediateCA.crt rootCA.crt > ca.crt

- Create the secret:

$ oc create secret generic mycert --from-file=ca.crt=</path/to/file>

You must use the key name ca.crt.

3.4.2.8. Source secret combinations

You can combine the different methods for creating source clone secrets for your specific needs.

3.4.2.8.1. Creating a SSH-based authentication secret with a .gitconfig file

You can combine the different methods for creating source clone secrets for your specific needs, such as a SSH-based authentication secret with a .gitconfig file.

Prerequisites

- SSH authentication

- .gitconfig file

Procedure

- Create an SSH-based authentication secret with a .gitconfig file:

$ oc create secret generic <secret_name> \
    --from-file=ssh-privatekey=<path/to/ssh/private/key> \
    --from-file=<path/to/.gitconfig> \
    --type=kubernetes.io/ssh-auth

3.4.2.8.2. Creating a secret that combines a .gitconfig file and CA certificate

You can combine the different methods for creating source clone secrets for your specific needs, such as a secret that combines a .gitconfig file and CA certificate.

Prerequisites

- .gitconfig file

- CA certificate

Procedure

- Create a secret that combines a .gitconfig file and CA certificate:

$ oc create secret generic <secret_name> \
    --from-file=ca.crt=<path/to/certificate> \
    --from-file=<path/to/.gitconfig>

3.4.2.8.3. Creating a basic authentication secret with a CA certificate

You can combine the different methods for creating source clone secrets for your specific needs, such as a secret that combines a basic authentication and CA certificate.

Prerequisites

- Basic authentication credentials

- CA certificate

Procedure

- Create a basic authentication secret with a CA certificate:

$ oc create secret generic <secret_name> \
    --from-literal=username=<user_name> \
    --from-literal=password=<password> \
    --from-file=ca.crt=</path/to/file> \
    --type=kubernetes.io/basic-auth

3.4.2.8.4. Creating a basic authentication secret with a .gitconfig file

You can combine the different methods for creating source clone secrets for your specific needs, such as a secret that combines a basic authentication and .gitconfig file.

Prerequisites

- Basic authentication credentials

- .gitconfig file

Procedure

- Create a basic authentication secret with a .gitconfig file:

$ oc create secret generic <secret_name> \
    --from-literal=username=<user_name> \
    --from-literal=password=<password> \
    --from-file=</path/to/.gitconfig> \
    --type=kubernetes.io/basic-auth

3.4.2.8.5. Creating a basic authentication secret with a .gitconfig file and CA certificate

You can combine the different methods for creating source clone secrets for your specific needs, such as a secret that combines a basic authentication, .gitconfig file, and CA certificate.

Prerequisites

- Basic authentication credentials

- .gitconfig file

- CA certificate

Procedure

- Create a basic authentication secret with a .gitconfig file and CA certificate.
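The command for this step is missing in this copy. The following sketch combines the options used in the preceding combinations and should be read as an assumption about the original, not a quotation of it. The variable values are hypothetical, and the CA certificate is stored under the key name ca.crt, which the trusted certificate authorities section states is required.

```shell
# Hypothetical values; replace them with your own.
SECRET_NAME="git-basic-auth-full"
GIT_USER="builder"
GIT_PASSWORD="changeme"
GITCONFIG_PATH="$HOME/.gitconfig"
CA_CERT_PATH="./ca.crt"

# Run only where the oc client is installed and logged in to a cluster.
if command -v oc >/dev/null 2>&1; then
  oc create secret generic "$SECRET_NAME" \
    --from-literal=username="$GIT_USER" \
    --from-literal=password="$GIT_PASSWORD" \
    --from-file="$GITCONFIG_PATH" \
    --from-file=ca.crt="$CA_CERT_PATH" \
    --type=kubernetes.io/basic-auth
else
  echo "oc client not found; skipping secret creation" >&2
fi
```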

3.5. Binary (local) source

Streaming content from a local file system to the builder is called a Binary type build. The corresponding value of BuildConfig.spec.source.type is Binary for such builds.

This source type is unique in that it is leveraged solely based on your use of the oc start-build.

Binary type builds require content to be streamed from the local file system, so automatically triggering a binary type build (e.g. via an image change trigger) is not possible, because the binary files cannot be provided. Similarly, you cannot launch binary type builds from the web console.

To utilize binary builds, invoke oc start-build with one of these options:

- --from-file: The contents of the file you specify are sent as a binary stream to the builder. You can also specify a URL to a file. Then, the builder stores the data in a file with the same name at the top of the build context.

- --from-dir and --from-repo: The contents are archived and sent as a binary stream to the builder. Then, the builder extracts the contents of the archive within the build context directory. With --from-dir, you can also specify a URL to an archive, which will be extracted.

- --from-archive: The archive you specify is sent to the builder, where it is extracted within the build context directory. This option behaves the same as --from-dir; an archive is created on your host first, whenever the argument to these options is a directory.

In each of the previously listed cases:

- If your BuildConfig already has a Binary source type defined, it will effectively be ignored and replaced by what the client sends.

- If your BuildConfig has a Git source type defined, it is dynamically disabled, since Binary and Git are mutually exclusive, and the data in the binary stream provided to the builder takes precedence.

Instead of a file name, you can pass a URL with the HTTP or HTTPS scheme to --from-file and --from-archive. When using --from-file with a URL, the name of the file in the builder image is determined by the Content-Disposition header sent by the web server, or the last component of the URL path if the header is not present. No form of authentication is supported, and it is not possible to use a custom TLS certificate or disable certificate validation.

When using oc new-build --binary=true, the command ensures that the restrictions associated with binary builds are enforced. The resulting BuildConfig will have a source type of Binary, meaning that the only valid way to run a build for this BuildConfig is to use oc start-build with one of the --from options to provide the requisite binary data.

The dockerfile and contextDir source options have special meaning with binary builds.

dockerfile can be used with any binary build source. If dockerfile is used and the binary stream is an archive, its contents serve as a replacement Dockerfile to any Dockerfile in the archive. If dockerfile is used with the --from-file argument, and the file argument is named dockerfile, the value from dockerfile replaces the value from the binary stream.

In the case of the binary stream encapsulating extracted archive content, the value of the contextDir field is interpreted as a subdirectory within the archive, and, if valid, the builder changes into that subdirectory before executing the build.

3.6. Input secrets and config maps

In some scenarios, build operations require credentials or other configuration data to access dependent resources, but it is undesirable for that information to be placed in source control. You can define input secrets and input config maps for this purpose.

For example, when building a Java application with Maven, you can set up a private mirror of Maven Central or JCenter that is accessed by private keys. In order to download libraries from that private mirror, you have to supply the following:

- A settings.xml file configured with the mirror’s URL and connection settings.

- A private key referenced in the settings file, such as ~/.ssh/id_rsa.

For security reasons, you do not want to expose your credentials in the application image.

This example describes a Java application, but you can use the same approach for adding SSL certificates into the /etc/ssl/certs directory, API keys or tokens, license files, and more.

3.6.1. Adding input secrets and config maps


Procedure

To add an input secret and/or config map to an existing BuildConfig object:

Create the ConfigMap object, if it does not exist:

$ oc create configmap settings-mvn \
    --from-file=settings.xml=<path/to/settings.xml>

This creates a new config map named settings-mvn, which contains the plain text content of the settings.xml file.

Create the Secret object, if it does not exist:

$ oc create secret generic secret-mvn \
    --from-file=id_rsa=<path/to/.ssh/id_rsa>

This creates a new secret named secret-mvn, which contains the base64 encoded content of the id_rsa private key.

Add the config map and secret to the source section in the existing BuildConfig object.
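A minimal sketch of a source section that references both objects is shown here, assuming the configMaps and secrets arrays of the BuildConfig source definition. The Git URI and context directory are borrowed from the oc new-build example in this section and are illustrative only.

```yaml
source:
  git:
    uri: https://github.com/wildfly/quickstart.git
  contextDir: helloworld
  # Input config maps are copied into the build's source directory.
  configMaps:
    - configMap:
        name: settings-mvn
  # Input secrets are copied the same way.
  secrets:
    - secret:
        name: secret-mvn
```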

To include the secret and config map in a new BuildConfig object, run the following command:

$ oc new-build \
    openshift/wildfly-101-centos7~https://github.com/wildfly/quickstart.git \
    --context-dir helloworld --build-secret "secret-mvn" \
    --build-config-map "settings-mvn"

During the build, the settings.xml and id_rsa files are copied into the directory where the source code is located. In OpenShift Container Platform S2I builder images, this is the image working directory, which is set using the WORKDIR instruction in the Dockerfile. If you want to specify another directory, add a destinationDir to the definition:
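The following fragment sketches how destinationDir might be added to each input, assuming the same settings-mvn config map and secret-mvn secret as above. The .m2 and .ssh directories match the destinations used in the oc new-build example in this section.

```yaml
source:
  git:
    uri: https://github.com/wildfly/quickstart.git
  contextDir: helloworld
  configMaps:
    - configMap:
        name: settings-mvn
      # Relative to the directory where the source code is located.
      destinationDir: ".m2"
  secrets:
    - secret:
        name: secret-mvn
      destinationDir: ".ssh"
```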

You can also specify the destination directory when creating a new BuildConfig object:

$ oc new-build \
    openshift/wildfly-101-centos7~https://github.com/wildfly/quickstart.git \
    --context-dir helloworld --build-secret "secret-mvn:.ssh" \
    --build-config-map "settings-mvn:.m2"

In both cases, the settings.xml file is added to the ./.m2 directory of the build environment, and the id_rsa key is added to the ./.ssh directory.

3.6.2. Source-to-image strategy

When using a Source strategy, all defined input secrets are copied to their respective destinationDir. If you left destinationDir empty, then the secrets are placed in the working directory of the builder image.

The same rule is used when a destinationDir is a relative path; the secrets are placed in the paths that are relative to the image’s working directory. The final directory in the destinationDir path is created if it does not exist in the builder image. All preceding directories in the destinationDir must exist, or an error will occur.

Input secrets are added as world-writable (have 0666 permissions) and will be truncated to size zero after executing the assemble script. This means that the secret files will exist in the resulting image, but they will be empty for security reasons.

Input ConfigMaps are not truncated after the assemble script completes.

3.6.3. Docker strategy

When using a Docker strategy, you can add all defined input secrets into your container image using the ADD and COPY instructions in your Dockerfile.

If you do not specify the destinationDir for a secret, then the files will be copied into the same directory in which the Dockerfile is located. If you specify a relative path as destinationDir, then the secrets will be copied into that directory, relative to your Dockerfile location. This makes the secret files available to the Docker build operation as part of the context directory used during the build.

Example of a Dockerfile referencing secret and ConfigMap data

Users should normally remove their input secrets from the final application image so that the secrets are not present in the container running from that image. However, the secrets will still exist in the image itself in the layer where they were added. This removal should be part of the Dockerfile itself.

3.6.4. Custom strategy

When using a Custom strategy, all the defined input secrets and ConfigMaps are available inside the builder container in the /var/run/secrets/openshift.io/build directory. The custom build image is responsible for using these secrets and ConfigMaps appropriately. The Custom strategy also allows secrets to be defined as described in Custom Strategy Options.

There is no technical difference between existing strategy secrets and the input secrets. However, your builder image might distinguish between them and use them differently, based on your build use case.

The input secrets are always mounted into the /var/run/secrets/openshift.io/build directory. Alternatively, your builder can parse the $BUILD environment variable, which includes the full build object.

3.7. External artifacts

It is not recommended to store binary files in a source repository. Therefore, you may find it necessary to define a build which pulls additional files (such as Java .jar dependencies) during the build process. How this is done depends on the build strategy you are using.

For a Source build strategy, you must put appropriate shell commands into the assemble script:

.s2i/bin/assemble File

#!/bin/sh
APP_VERSION=1.0
wget http://repository.example.com/app/app-$APP_VERSION.jar -O app.jar

.s2i/bin/run File

#!/bin/sh
exec java -jar app.jar

For a Docker build strategy, you must modify the Dockerfile and invoke shell commands with the RUN instruction:

Excerpt of Dockerfile

In practice, you may want to use an environment variable for the file location so that the specific file to be downloaded can be customized using an environment variable defined on the BuildConfig, rather than updating the Dockerfile or assemble script.

You can choose between different methods of defining environment variables:

- Using the .s2i/environment file (only for a Source build strategy)

- Setting in BuildConfig

- Providing explicitly using oc start-build --env (only for builds that are triggered manually)

3.8. Using Docker credentials for private registries

You can supply builds with a .docker/config.json file with valid credentials for private container registries. This allows you to push the output image into a private container image registry or pull a builder image from the private container image registry that requires authentication.

For the OpenShift Container Platform container image registry, this is not required because secrets are generated automatically for you by OpenShift Container Platform.

The .docker/config.json file is found in your home directory by default and has the following format:

auths:
  https://index.docker.io/v1/:
    auth: "YWRfbGzhcGU6R2labnRib21ifTE="
    email: "user@example.com"

You can define multiple container image registry entries in this file. Alternatively, you can also add authentication entries to this file by running the docker login command. The file will be created if it does not exist.

Kubernetes provides Secret objects, which can be used to store configuration and passwords.

Prerequisites

- .docker/config.json file

Procedure

Create the secret from your local .docker/config.json file:

$ oc create secret generic dockerhub \
    --from-file=.dockerconfigjson=<path/to/.docker/config.json> \
    --type=kubernetes.io/dockerconfigjson

This generates a JSON specification of the secret named dockerhub and creates the object.

Add a pushSecret field into the output section of the BuildConfig and set it to the name of the secret that you created, which in the above example is dockerhub.

You can use the oc set build-secret command to set the push secret on the build configuration:

$ oc set build-secret --push bc/sample-build dockerhub

You can also link the push secret to the service account used by the build instead of specifying the pushSecret field. By default, builds use the builder service account. The push secret is automatically added to the build if the secret contains a credential that matches the repository hosting the build’s output image.

$ oc secrets link builder dockerhub

Pull the builder container image from a private container image registry by specifying the pullSecret field, which is part of the build strategy definition.

You can use the oc set build-secret command to set the pull secret on the build configuration:

$ oc set build-secret --pull bc/sample-build dockerhub

Note: This example uses pullSecret in a Source build, but it is also applicable in Docker and Custom builds.

You can also link the pull secret to the service account used by the build instead of specifying the pullSecret field. By default, builds use the builder service account. The pull secret is automatically added to the build if the secret contains a credential that matches the repository hosting the build’s input image.

$ oc secrets link builder dockerhub

Note: You must specify a from image in the BuildConfig spec to take advantage of this feature. Docker strategy builds generated by oc new-build or oc new-app may not do this in some situations.
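To illustrate where the pushSecret and pullSecret fields from this procedure sit, here is a hedged sketch of a BuildConfig spec that uses the dockerhub secret for both. The registry host and image names are hypothetical placeholders, and a Source strategy is assumed.

```yaml
spec:
  output:
    to:
      kind: "DockerImage"
      # Hypothetical private registry and repository.
      name: "registry.example.com/org/private-image:latest"
    # Credentials used when pushing the output image.
    pushSecret:
      name: "dockerhub"
  strategy:
    sourceStrategy:
      from:
        kind: "DockerImage"
        # Hypothetical private builder image.
        name: "registry.example.com/org/private-builder:latest"
      # Credentials used when pulling the builder image.
      pullSecret:
        name: "dockerhub"
```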

3.9. Build environments

As with pod environment variables, build environment variables can be defined in terms of references to other resources or variables using the Downward API. There are some exceptions, which are noted.

You can also manage environment variables defined in the BuildConfig with the oc set env command.

Referencing container resources using valueFrom in build environment variables is not supported as the references are resolved before the container is created.

3.9.1. Using build fields as environment variables

You can inject information about the build object by setting the fieldPath environment variable source to the JsonPath of the field from which you are interested in obtaining the value.

Jenkins Pipeline strategy does not support valueFrom syntax for environment variables.

Procedure

Set the fieldPath environment variable source to the JsonPath of the field from which you are interested in obtaining the value:

env:
  - name: FIELDREF_ENV
    valueFrom:
      fieldRef:
        fieldPath: metadata.name

3.9.2. Using secrets as environment variables

You can make key values from Secrets available as environment variables using the valueFrom syntax.

Procedure

To use a secret as an environment variable, set the valueFrom syntax on the variable, with a secretKeyRef that names the secret and the key to read.
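A minimal sketch of this syntax in a BuildConfig follows, assuming a Source strategy. The names secret-example-bc, MYVAL, mysecret, and myval are hypothetical and stand in for your own build configuration, variable, secret, and key.

```yaml
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: secret-example-bc     # hypothetical name
spec:
  strategy:
    sourceStrategy:
      env:
        - name: MYVAL         # variable visible to the build
          valueFrom:
            secretKeyRef:
              name: mysecret  # hypothetical Secret in the same namespace
              key: myval      # key within that Secret
```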

3.10. What is a secret?

The Secret object type provides a mechanism to hold sensitive information such as passwords, OpenShift Container Platform client configuration files, dockercfg files, private source repository credentials, and so on. Secrets decouple sensitive content from the pods. You can mount secrets into containers using a volume plug-in or the system can use secrets to perform actions on behalf of a pod.

YAML Secret Object Definition

- (1) Indicates the structure of the secret’s key names and values.

- (2) The allowable format for the keys in the data field must meet the guidelines in the DNS_SUBDOMAIN value in the Kubernetes identifiers glossary.

- (3) The value associated with keys in the data map must be base64 encoded.

- (4) Entries in the stringData map are converted to base64 and the entry will then be moved to the data map automatically. This field is write-only; the value will only be returned via the data field.

- (5) The value associated with keys in the stringData map is made up of plain text strings.
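As an illustration of the fields the numbered notes describe, a Secret definition might look like the sketch below. The object name, namespace, key names, and values are hypothetical; the comments map each field to the corresponding note.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: test-secret            # hypothetical name
  namespace: my-namespace      # hypothetical namespace
type: Opaque                   # (1) structure of key names and values
data:                          # (2) keys must be valid DNS_SUBDOMAIN names
  username: YWRtaW4=           # (3) base64 of "admin"
  password: cGFzc3dvcmQ=       # (3) base64 of "password"
stringData:                    # (4) converted to base64 and moved into data
  hostname: myapp.mydomain.com # (5) plain text value
```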

3.10.1. Properties of secrets

Key properties include:

- Secret data can be referenced independently from its definition.

- Secret data volumes are backed by temporary file-storage facilities (tmpfs) and never come to rest on a node.

- Secret data can be shared within a namespace.

3.10.2. Types of Secrets

The value in the type field indicates the structure of the secret’s key names and values. The type can be used to enforce the presence of user names and keys in the secret object. If you do not want validation, use the opaque type, which is the default.

Specify one of the following types to trigger minimal server-side validation to ensure the presence of specific key names in the secret data:

- kubernetes.io/service-account-token. Uses a service account token.

- kubernetes.io/dockercfg. Uses the .dockercfg file for required Docker credentials.

- kubernetes.io/dockerconfigjson. Uses the .docker/config.json file for required Docker credentials.

- kubernetes.io/basic-auth. Use with Basic Authentication.

- kubernetes.io/ssh-auth. Use with SSH Key Authentication.

- kubernetes.io/tls. Use with TLS certificate authorities.

Specify type: Opaque if you do not want validation, which means the secret does not claim to conform to any convention for key names or values. An opaque secret allows for unstructured key:value pairs that can contain arbitrary values.

You can specify other arbitrary types, such as example.com/my-secret-type. These types are not enforced server-side, but indicate that the creator of the secret intended to conform to the key/value requirements of that type.

3.10.3. Updates to secrets

When you modify the value of a secret, the value (used by an already running pod) will not dynamically change. To change a secret, you must delete the original pod and create a new pod (perhaps with an identical PodSpec).

Updating a secret follows the same workflow as deploying a new container image. You can use the kubectl rolling-update command.

The resourceVersion value in a secret is not specified when it is referenced. Therefore, if a secret is updated at the same time as pods are starting, the version of the secret that is used for the pod is not defined.

Currently, it is not possible to check the resource version of a secret object that was used when a pod was created. It is planned that pods will report this information, so that a controller could restart ones using an old resourceVersion. In the interim, do not update the data of existing secrets, but create new ones with distinct names.

3.10.4. Creating secrets

You must create a secret before creating the pods that depend on that secret.

When creating secrets:

- Create a secret object with secret data.

- Update the pod’s service account to allow the reference to the secret.

- Create a pod, which consumes the secret as an environment variable or as a file (using a secret volume).

Procedure

Use the create command to create a secret object from a JSON or YAML file:

$ oc create -f <filename>

For example, you can create a secret from your local .docker/config.json file:

$ oc create secret generic dockerhub \
    --from-file=.dockerconfigjson=<path/to/.docker/config.json> \
    --type=kubernetes.io/dockerconfigjson

This command generates a JSON specification of the secret named dockerhub and creates the object.

YAML Opaque Secret Object Definition

- (1) Specifies an opaque secret.

Docker Configuration JSON File Secret Object Definition
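The two definitions named above could be sketched as follows. The object names, the namespace, and the credential value are hypothetical, and the .dockerconfigjson value is left as a placeholder for the base64 encoding of your own .docker/config.json file.

```yaml
# Opaque secret
apiVersion: v1
kind: Secret
metadata:
  name: mysecret               # hypothetical name
type: Opaque                   # (1) specifies an opaque secret
data:
  username: YWRtaW4=           # base64 of "admin"
---
# Docker configuration JSON file secret
apiVersion: v1
kind: Secret
metadata:
  name: dockerhub
  namespace: my-namespace      # hypothetical namespace
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: <base64-encoded .docker/config.json>
```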

3.10.4.1. Using secrets

After creating secrets, you can create a pod to reference your secret, get logs, and delete the pod.

Procedure

Create the pod to reference your secret:

$ oc create -f <your_yaml_file>.yaml

Get the logs:

$ oc logs secret-example-pod

Delete the pod:

$ oc delete pod secret-example-pod

Additional resources

Example YAML files with secret data:

- YAML Secret That Will Create Four Files

- YAML of a Pod Populating Files in a Volume with Secret Data

- YAML of a Pod Populating Environment Variables with Secret Data

- YAML of a Build Config Populating Environment Variables with Secret Data
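As one hedged illustration of the first two items, the sketch below defines a secret with four keys and a pod that mounts it as a volume, so that each key becomes a file. The pod name secret-example-pod matches the commands in the procedure above; the secret name, key names, values, and busybox image are assumptions made for this example.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: test-secret
data:
  username: YWRtaW4=              # file "username", base64 of "admin"
  password: cGFzc3dvcmQ=          # file "password", base64 of "password"
stringData:
  hostname: myapp.mydomain.com    # file "hostname", plain text
  secret.properties: |-           # file "secret.properties", multi-line text
    property1=valueA
    property2=valueB
---
apiVersion: v1
kind: Pod
metadata:
  name: secret-example-pod
spec:
  containers:
    - name: secret-test-container
      image: busybox
      # Print every file that the secret volume provides.
      command: ["/bin/sh", "-c", "cat /etc/secret-volume/*"]
      volumeMounts:
        - name: secret-volume
          mountPath: /etc/secret-volume
          readOnly: true
  volumes:
    - name: secret-volume
      secret:
        secretName: test-secret
  restartPolicy: Never
```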

3.11. Service serving certificate secrets

Service serving certificate secrets are intended to support complex middleware applications that need out-of-the-box certificates. These certificates have the same settings as the server certificates generated by the administrator tooling for nodes and masters.

Procedure

To secure communication to your service, have the cluster generate a signed serving certificate/key pair into a secret in your namespace.

Set the service.alpha.openshift.io/serving-cert-secret-name annotation on your service with the value set to the name you want to use for your secret.

Then, your PodSpec can mount that secret. When it is available, your pod will run. The certificate will be good for the internal service DNS name, <service.name>.<service.namespace>.svc.

The certificate and key are in PEM format, stored in tls.crt and tls.key respectively. The certificate/key pair is automatically replaced when it gets close to expiration. View the expiration date in the service.alpha.openshift.io/expiry annotation on the secret, which is in RFC3339 format.
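A minimal sketch of a service carrying this annotation is shown below. The service name, secret name, selector, and port are hypothetical; only the annotation key is taken from the text above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service                 # hypothetical service name
  annotations:
    # The cluster generates a certificate/key pair into a secret with this name.
    service.alpha.openshift.io/serving-cert-secret-name: my-service-tls
spec:
  selector:
    app: my-app                    # hypothetical pod label
  ports:
    - name: https
      port: 8443
      targetPort: 8443
```

A pod selected by this service could then mount the my-service-tls secret as a volume and read tls.crt and tls.key from the mount path.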

In most cases, the service DNS name <service.name>.<service.namespace>.svc is not externally routable. The primary use of <service.name>.<service.namespace>.svc is for intracluster or intraservice communication, and with re-encrypt routes.

Other pods can trust cluster-created certificates (which are only signed for internal DNS names), by using the CA bundle in the /var/run/secrets/kubernetes.io/serviceaccount/service-ca.crt file that is automatically mounted in their pod.

The signature algorithm for this feature is x509.SHA256WithRSA. To manually rotate, delete the generated secret. A new certificate is created.

3.12. Secrets restrictions

To use a secret, a pod needs to reference the secret. A secret can be used with a pod in three ways:

- To populate environment variables for containers.

- As files in a volume mounted on one or more of its containers.

- By kubelet when pulling images for the pod.

Volume type secrets write data into the container as a file using the volume mechanism. imagePullSecrets use service accounts for the automatic injection of the secret into all pods in a namespace.

When a template contains a secret definition, the only way for the template to use the provided secret is to ensure that the secret volume sources are validated and that the specified object reference actually points to an object of type Secret. Therefore, a secret needs to be created before any pods that depend on it. The most effective way to ensure this is to have it get injected automatically through the use of a service account.

Secret API objects reside in a namespace. They can only be referenced by pods in that same namespace.

Individual secrets are limited to 1MB in size. This is to discourage the creation of large secrets that would exhaust apiserver and kubelet memory. However, creation of a number of smaller secrets could also exhaust memory.

Chapter 4. Managing build output

Use the following sections for an overview of and instructions for managing build output.

4.1. Build output

Builds that use the Docker or Source-to-Image (S2I) strategy result in the creation of a new container image. The image is then pushed to the container image registry specified in the output section of the Build specification.

If the output kind is ImageStreamTag, then the image will be pushed to the integrated OpenShift Container Platform registry and tagged in the specified imagestream. If the output is of type DockerImage, then the name of the output reference will be used as a Docker push specification. The specification may contain a registry or will default to DockerHub if no registry is specified. If the output section of the build specification is empty, then the image will not be pushed at the end of the build.

Output to an ImageStreamTag

spec:
  output:
    to:
      kind: "ImageStreamTag"
      name: "sample-image:latest"

Output to a Docker Push Specification

spec:
  output:
    to:
      kind: "DockerImage"
      name: "my-registry.mycompany.com:5000/myimages/myimage:tag"

4.2. Output image environment variables

Docker and Source-to-Image (S2I) strategy builds set the following environment variables on output images:

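| Variable | Description |
|---|---|
| OPENSHIFT_BUILD_NAME | Name of the build |
| OPENSHIFT_BUILD_NAMESPACE | Namespace of the build |
| OPENSHIFT_BUILD_SOURCE | The source URL of the build |
| OPENSHIFT_BUILD_REFERENCE | The Git reference used in the build |
| OPENSHIFT_BUILD_COMMIT | Source commit used in the build |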

Additionally, any user-defined environment variable, for example those configured with S2I or Docker strategy options, will also be part of the output image environment variable list.

4.3. Output image labels

Docker and Source-to-Image (S2I) builds set the following labels on output images:

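| Label | Description |
|---|---|
| io.openshift.build.commit.author | Author of the source commit used in the build |
| io.openshift.build.commit.date | Date of the source commit used in the build |
| io.openshift.build.commit.id | Hash of the source commit used in the build |
| io.openshift.build.commit.message | Message of the source commit used in the build |
| io.openshift.build.commit.ref | Branch or reference specified in the source |
| io.openshift.build.source-location | Source URL for the build |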

You can also use the BuildConfig.spec.output.imageLabels field to specify a list of custom labels that will be applied to each image built from the BuildConfig.

Custom Labels to be Applied to Built Images
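A short sketch of the imageLabels field is given here, assuming an ImageStreamTag output. The image name and the label names and values are hypothetical examples.

```yaml
spec:
  output:
    to:
      kind: "ImageStreamTag"
      name: "my-image:latest"      # hypothetical output image
    # Each entry becomes a label on every image built from this BuildConfig.
    imageLabels:
      - name: "vendor"
        value: "MyCompany"
      - name: "authoritative-source-url"
        value: "registry.mycompany.com"
```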

Chapter 5. Using build strategies

The following sections define the primary supported build strategies, and how to use them.

5.1. Docker build

The Docker build strategy invokes the docker build command, and it expects a repository with a Dockerfile and all required artifacts in it to produce a runnable image.

5.1.1. Replacing Dockerfile FROM image

You can replace the FROM instruction of the Dockerfile with the from of the BuildConfig. If the Dockerfile uses multi-stage builds, the image in the last FROM instruction will be replaced.

Procedure

To replace the FROM instruction of the Dockerfile with the from of the BuildConfig:

strategy:
  dockerStrategy:
    from:
      kind: "ImageStreamTag"
      name: "debian:latest"

5.1.2. Using Dockerfile path

By default, Docker builds use a Dockerfile (named Dockerfile) located at the root of the context specified in the BuildConfig.spec.source.contextDir field.

The dockerfilePath field allows the build to use a different path to locate your Dockerfile, relative to the BuildConfig.spec.source.contextDir field. It can be a different file name than the default Dockerfile (for example, MyDockerfile), or a path to a Dockerfile in a subdirectory (for example, dockerfiles/app1/Dockerfile).

Procedure

To use the dockerfilePath field for the build to use a different path to locate your Dockerfile, set:

strategy:
  dockerStrategy:
    dockerfilePath: dockerfiles/app1/Dockerfile

5.1.3. Using Docker environment variables

To make environment variables available to the Docker build process and resulting image, you can add environment variables to the dockerStrategy definition of the BuildConfig.

The environment variables defined there are inserted as a single ENV Dockerfile instruction right after the FROM instruction, so that it can be referenced later on within the Dockerfile.

Procedure

The variables are defined during build and stay in the output image, therefore they will be present in any container that runs that image as well.

For example, defining a custom HTTP proxy to be used during build and runtime:

dockerStrategy:
...
  env:
    - name: "HTTP_PROXY"
      value: "http://myproxy.net:5187/"

Cluster administrators can also configure global build settings using Ansible.

You can also manage environment variables defined in the BuildConfig with the oc set env command.

5.1.4. Adding Docker build arguments

You can set Docker build arguments using the BuildArgs array. The build arguments will be passed to Docker when a build is started.

Procedure

To set Docker build arguments, add entries to the BuildArgs array, which is located in the dockerStrategy definition of the BuildConfig. For example:

dockerStrategy:
...
  buildArgs:
    - name: "foo"
      value: "bar"

5.2. Source-to-Image (S2I) build

Source-to-Image (S2I) is a tool for building reproducible, Docker-formatted container images. It produces ready-to-run images by injecting application source into a container image and assembling a new image. The new image incorporates the base image (the builder) and built source and is ready to use with the buildah run command. S2I supports incremental builds, which re-use previously downloaded dependencies, previously built artifacts, etc.

The advantages of S2I include the following:

| Image flexibility | S2I scripts can be written to inject application code into almost any existing Docker-formatted container image, taking advantage of the existing ecosystem. Note that, currently, S2I relies on |

| Speed | With S2I, the assemble process can perform a large number of complex operations without creating a new layer at each step, resulting in a fast process. In addition, S2I scripts can be written to re-use artifacts stored in a previous version of the application image, rather than having to download or build them each time the build is run. |

| Patchability | S2I allows you to rebuild the application consistently if an underlying image needs a patch due to a security issue. |

| Operational efficiency | By restricting build operations instead of allowing arbitrary actions, as a Dockerfile would allow, the PaaS operator can avoid accidental or intentional abuses of the build system. |

| Operational security | Building an arbitrary Dockerfile exposes the host system to root privilege escalation. This can be exploited by a malicious user because the entire Docker build process is run as a user with Docker privileges. S2I restricts the operations performed as a root user and can run the scripts as a non-root user. |

| User efficiency | S2I prevents developers from performing arbitrary |

| Ecosystem | S2I encourages a shared ecosystem of images where you can leverage best practices for your applications. |

| Reproducibility | Produced images can include all inputs including specific versions of build tools and dependencies. This ensures that the image can be reproduced precisely. |

5.2.1. Performing Source-to-Image (S2I) incremental builds

S2I can perform incremental builds, which means it reuses artifacts from previously-built images.

Procedure

To create an incremental build, create a BuildConfig with the following modification to the strategy definition:

- (1) Specify an image that supports incremental builds. Consult the documentation of the builder image to determine if it supports this behavior.

- (2) This flag controls whether an incremental build is attempted. If the builder image does not support incremental builds, the build will still succeed, but you will get a log message stating the incremental build was not successful because of a missing save-artifacts script.
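The strategy modification that the two notes describe might look like the following sketch. The image stream tag name is hypothetical; the comments map each field to the corresponding note.

```yaml
strategy:
  sourceStrategy:
    from:
      kind: "ImageStreamTag"
      name: "incremental-image:latest"   # (1) builder image that supports incremental builds
    incremental: true                    # (2) attempt an incremental build
```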

Additional resources

- See S2I Requirements for information on how to create a builder image supporting incremental builds.

5.2.2. Overriding Source-to-Image (S2I) builder image scripts

You can override the assemble, run, and save-artifacts S2I scripts provided by the builder image.

Procedure

To override the assemble, run, and save-artifacts S2I scripts provided by the builder image, either:

- Provide an assemble, run, or save-artifacts script in the .s2i/bin directory of your application source repository, or

- Provide a URL of a directory containing the scripts as part of the strategy definition. For example:

- (1) This path will have run, assemble, and save-artifacts appended to it. If any or all scripts are found they will be used in place of the same named script(s) provided in the image.
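As a hedged sketch of the second option, the scripts field of the Source strategy can point at a directory URL. The builder image name and the URL below are hypothetical placeholders.

```yaml
strategy:
  sourceStrategy:
    from:
      kind: "ImageStreamTag"
      name: "builder-image:latest"                    # hypothetical builder image
    # (1) run, assemble, and save-artifacts are appended to this URL.
    scripts: "http://scripts.example.com/scripts_directory"
```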

Files located at the scripts URL take precedence over files located in .s2i/bin of the source repository.

5.2.3. Source-to-Image (S2I) environment variables

There are two ways to make environment variables available to the source build process and resulting image: environment files and BuildConfig environment values. Variables provided will be present during the build process and in the output image.

5.2.3.1. Using Source-to-Image (S2I) environment files

Source build enables you to set environment values (one per line) inside your application, by specifying them in a .s2i/environment file in the source repository. The environment variables specified in this file are present during the build process and in the output image.

If you provide a .s2i/environment file in your source repository, S2I reads this file during the build. This allows customization of the build behavior as the assemble script may use these variables.

Procedure

For example, to disable assets compilation for your Rails application during the build:

- Add DISABLE_ASSET_COMPILATION=true to the .s2i/environment file.

In addition to builds, the specified environment variables are also available in the running application itself. For example, to cause the Rails application to start in development mode instead of production:

-

Add

RAILS_ENV=developmentto the .s2i/environment file.
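The two settings above can be written to the file in one step. This is a minimal sketch; the function name and the repository path passed to it are hypothetical.

```shell
#!/bin/sh
# Write a .s2i/environment file (one NAME=value pair per line) into the
# given application source directory. The directory argument is illustrative.
write_s2i_environment() {
    repo_dir=$1
    mkdir -p "$repo_dir/.s2i"
    cat > "$repo_dir/.s2i/environment" <<'EOF'
DISABLE_ASSET_COMPILATION=true
RAILS_ENV=development
EOF
}
```

Calling `write_s2i_environment .` from the root of the source repository creates the file, which must then be committed so that the build can read it.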

Additional resources

- The complete list of supported environment variables is available in the using images section for each image.

5.2.3.2. Using Source-to-Image (S2I) BuildConfig environment

You can add environment variables to the sourceStrategy definition of the BuildConfig. The environment variables defined there are visible during the assemble script execution and will be defined in the output image, making them also available to the run script and application code.

Procedure

For example, to disable assets compilation for your Rails application:

    sourceStrategy:
    ...
      env:
        - name: "DISABLE_ASSET_COMPILATION"
          value: "true"

Additional resources

- The Build environment section provides more advanced instructions.

- You can also manage environment variables defined in the BuildConfig with the oc set env command.

5.2.4. Ignoring Source-to-Image (S2I) source files

Source-to-Image (S2I) supports a .s2iignore file, which contains a list of file patterns that should be ignored. Files in the build working directory, as provided by the various input sources, that match a pattern found in the .s2iignore file are not made available to the assemble script.

For more details on the format of the .s2iignore file, see the source-to-image documentation.
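As a rough illustration, the following sketch writes a .s2iignore file with a few patterns. It assumes glob-style patterns, one per line; the patterns, the function name, and the repository path are hypothetical, and the exact matching rules are those described in the source-to-image documentation.

```shell
#!/bin/sh
# Write a .s2iignore file at the root of the given source directory.
# The patterns are examples only: Markdown files, a docs directory,
# and a scratch directory that the assemble script does not need.
write_s2iignore() {
    repo_dir=$1
    cat > "$repo_dir/.s2iignore" <<'EOF'
*.md
docs/*
tmp/*
EOF
}
```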

5.2.5. Creating images from source code with s2i

Source-to-Image (S2I) is a framework that makes it easy to write images that take application source code as an input and produce a new image that runs the assembled application as output.

The main advantage of using S2I for building reproducible container images is the ease of use for developers. As a builder image author, you must understand two basic concepts in order for your images to provide the best possible S2I performance: the build process and S2I scripts.

5.2.5.1. Understanding the S2I build process

The build process consists of the following three fundamental elements, which are combined into a final container image:

- sources

- S2I scripts

- builder image

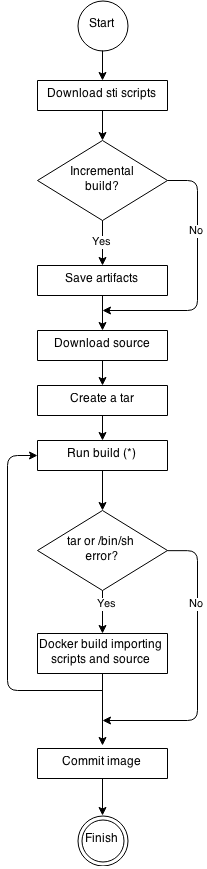

During the build process, S2I must place sources and scripts inside the builder image. To do so, S2I creates a tar file that contains the sources and scripts, then streams that file into the builder image. Before executing the assemble script, S2I un-tars that file and places its contents into the location specified by the io.openshift.s2i.destination label from the builder image, with the default location being the /tmp directory.
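The streaming step can be pictured with a local pipe. This sketch only emulates the idea on one machine: S2I itself streams the archive into the builder container, and the function name and directory arguments here are hypothetical.

```shell
#!/bin/sh
# Pack a directory as a tar stream and unpack it at a destination,
# the same pack, stream, unpack sequence described above.
stream_into() {
    src_dir=$1
    dest_dir=$2
    mkdir -p "$dest_dir"
    # Left side writes the archive to stdout; right side reads it from stdin.
    tar -C "$src_dir" -cf - . | tar -C "$dest_dir" -xf -
}
```

For example, `stream_into ./upload /tmp` would mirror the default destination when the builder image does not set the io.openshift.s2i.destination label.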

For this process to happen, your image must supply the tar archiving utility (the tar command available in $PATH) and the command line interpreter (the /bin/sh command); this allows your image to use the fastest possible build path. If the tar or /bin/sh command is not available, the s2i build process is forced to automatically perform an additional container build to put both the sources and the scripts inside the image, and only then run the usual build.
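A builder image author might verify both requirements with a check like the following, run inside the image. This is a sketch; the function name is hypothetical and the check is not part of S2I itself.

```shell
#!/bin/sh
# Report whether the tools needed for the fast build path are present.
# Returns 0 when both tar (in PATH) and /bin/sh are available.
check_fast_path_tools() {
    missing=0
    if ! command -v tar >/dev/null 2>&1; then
        echo "tar not found in PATH"
        missing=1
    fi
    if [ ! -x /bin/sh ]; then
        echo "/bin/sh not found"
        missing=1
    fi
    return "$missing"
}
```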

See the following diagram for the basic S2I build workflow:

Figure 5.1. Build Workflow

The run build step is responsible for un-tarring the sources, scripts, and artifacts (if any exist) and invoking the assemble script. If this is the second run (after catching a tar or /bin/sh not found error), it is responsible only for invoking the assemble script, because both the scripts and the sources are already in place.

5.2.5.2. Writing S2I scripts

You can write S2I scripts in any programming language, as long as the scripts are executable inside the builder image. S2I supports multiple options for providing the assemble, run, and save-artifacts scripts. All of these locations are checked on each build in the following order:

- A script specified in the BuildConfig

- A script found in the application source .s2i/bin directory

- A script found at the default image URL (io.openshift.s2i.scripts-url label)
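The lookup order above amounts to a first-match search. The following sketch models it with local directories standing in for the three locations; the function name and the directory arguments are hypothetical, and real builds resolve URLs rather than local paths.

```shell
#!/bin/sh
# Print the first executable copy of a script found in the given
# directories, searched in the order they are passed.
find_script() {
    name=$1
    shift
    for dir in "$@"; do
        if [ -n "$dir" ] && [ -x "$dir/$name" ]; then
            echo "$dir/$name"
            return 0
        fi
    done
    return 1   # the script was not found in any location
}
```

A call such as `find_script assemble "$buildconfig_scripts" "$source_dir/.s2i/bin" "$image_scripts"` returns the BuildConfig copy when present, then the source repository copy, and only then the copy shipped in the image.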

Both the io.openshift.s2i.scripts-url label specified in the image and the script specified in a BuildConfig can take one of the following forms:

- image:///path_to_scripts_dir - absolute path inside the image to a directory where the S2I scripts are located

- file:///path_to_scripts_dir - relative or absolute path to a directory on the host where the S2I scripts are located

- http(s)://path_to_scripts_dir - URL to a directory where the S2I scripts are located

| Script | Description |
|---|---|
| assemble (required) | The assemble script builds the application artifacts from a source and places them into appropriate directories inside the image. |
| run (required) | The run script executes your application. |
| save-artifacts (optional) | The save-artifacts script gathers all dependencies that can speed up the build processes that follow. These dependencies are gathered into a tar file and streamed to the standard output. |
| usage (optional) | The usage script allows you to inform the user how to properly use your image. |
| test/run (optional) | The test/run script allows you to create a simple process to check if the image is working correctly. Note: The suggested location to put the test application built by your test/run script is the test/test-app directory in your image repository. See the S2I documentation for more information. |

5.2.5.2.1. Example S2I Scripts

The following example S2I scripts are written in Bash. Each example assumes its tar contents are unpacked into the /tmp/s2i directory.

Example 5.1. assemble script:
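A minimal sketch of an assemble script, assuming the sources are unpacked under /tmp/s2i/src and any restored artifacts under /tmp/s2i/artifacts. The S2I_DIR and APP_HOME variables and the make targets are illustrative assumptions, not names defined by S2I.

```shell
#!/bin/bash
# S2I_DIR and APP_HOME are hypothetical overrides so the steps can be
# tried outside a real build; the defaults follow the text above.
S2I_DIR="${S2I_DIR:-/tmp/s2i}"
APP_HOME="${APP_HOME:-$HOME}"

assemble() {
    # Restore artifacts saved by a previous build, if any were injected.
    if [ -d "$S2I_DIR/artifacts" ] && [ -n "$(ls -A "$S2I_DIR/artifacts" 2>/dev/null)" ]; then
        cp -R "$S2I_DIR/artifacts/." "$APP_HOME/"
    fi

    # Place the application source in its final location.
    mkdir -p "$APP_HOME/src"
    cp -R "$S2I_DIR/src/." "$APP_HOME/src/"

    # Build and install the application. Using make here is an assumption
    # about the application; substitute the real build commands.
    if [ -f "$APP_HOME/src/Makefile" ]; then
        (cd "$APP_HOME/src" && make all && make install)
    fi
}
```

In an actual script, the last line would call `assemble`.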

Example 5.2. run script:

    #!/bin/bash

    # run the application
    /opt/application/run.sh

Example 5.3. save-artifacts script:
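A minimal sketch of a save-artifacts script, assuming the dependencies to keep live in a deps directory under the application home. The directory name, the APP_HOME variable, and the function name are hypothetical.

```shell
#!/bin/bash
# APP_HOME is a hypothetical override; it defaults to the home directory.
APP_HOME="${APP_HOME:-$HOME}"

save_artifacts() {
    # Stream the dependency directory as a tar archive on standard output.
    # Nothing else may be written to stdout, or the stream is corrupted.
    if [ -d "$APP_HOME/deps" ]; then
        tar -C "$APP_HOME" -cf - deps
    fi
}
```

In an actual script, the last line would call `save_artifacts`.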

Example 5.4. usage script:
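A minimal sketch of a usage script. The wording of the message is illustrative; a real builder image would describe its own options.

```shell
#!/bin/bash
# Inform the user how to use the image.
usage() {
    cat <<EOF
This is an S2I builder image. To use it, install S2I:
https://github.com/openshift/source-to-image
EOF
}

usage
```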

5.3. Custom build

The Custom build strategy allows developers to define a specific builder image responsible for the entire build process. Using your own builder image allows you to customize your build process.

A Custom builder image is a plain Docker-formatted container image embedded with build process logic, for example for building RPMs or base images.

Custom builds run with a very high level of privilege and are not available to users by default. Only users who can be trusted with cluster administration permissions should be granted access to run custom builds.

5.3.1. Using FROM image for custom builds

You can use the customStrategy.from section to indicate the image to use for the custom build.

Procedure

To set the customStrategy.from section:

    strategy:
      customStrategy:
        from:
          kind: "DockerImage"
          name: "openshift/sti-image-builder"

5.3.2. Using secrets in custom builds

In addition to secrets for source and images that can be added to all build types, custom strategies allow adding an arbitrary list of secrets to the builder pod.

Procedure

To mount each secret at a specific location:
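As a minimal sketch, the following shell functions print a customStrategy fragment in which each entry pairs a secret with the path where it is mounted in the builder pod. The secret names and mount paths are placeholders, and the function names are hypothetical.

```shell
#!/bin/sh
# Print one entry of the secrets list: $1 is the secret name,
# $2 is the path where it is mounted in the builder pod.
secret_entry() {
    cat <<EOF
      - secretSource:
          name: "$1"
        mountPath: "$2"
EOF
}

# Print a customStrategy fragment that mounts two placeholder secrets.
custom_secrets_fragment() {
    cat <<EOF
strategy:
  customStrategy:
    secrets:
EOF
    secret_entry "secret1" "/tmp/secret1"
    secret_entry "secret2" "/tmp/secret2"
}

custom_secrets_fragment
```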

5.3.3. Using environment variables for custom builds

To make environment variables available to the Custom build process, you can add environment variables to the customStrategy definition of the BuildConfig.

The environment variables defined there are passed to the pod that runs the custom build.

Procedure

To define a custom HTTP proxy to be used during build:

    customStrategy:
    ...
      env:
        - name: "HTTP_PROXY"
          value: "http://myproxy.net:5187/"

Cluster administrators can also configure global build settings using Ansible.

You can also manage environment variables defined in the BuildConfig with the oc set env command.

5.3.4. Using custom builder images

By allowing you to define a specific builder image responsible for the entire build process, OpenShift Container Platform’s Custom build strategy was designed to fill a gap created with the increased popularity of creating container images. When there is a requirement for a build to still produce individual artifacts (packages, JARs, WARs, installable ZIPs, and base images, for example), a Custom builder image using the Custom build strategy is the perfect match to fill that gap.

A Custom builder image is a plain container image embedded with build process logic, for example for building RPMs or base container images.

Additionally, the Custom builder allows implementing any extended build process, for example a CI/CD flow that runs unit or integration tests.

To fully leverage the benefits of the Custom build strategy, you must understand how to create a Custom builder image that will be capable of building desired objects.

5.3.4.1. Custom builder image

Upon invocation, a custom builder image will receive the following environment variables with the information needed to proceed with the build:

| Variable Name | Description |
|---|---|
| BUILD | The entire serialized JSON of the Build object definition. |
| SOURCE_REPOSITORY | The URL of a Git repository with source to be built. |
| SOURCE_URI | Uses the same value as SOURCE_REPOSITORY. |
| SOURCE_CONTEXT_DIR | Specifies the subdirectory of the Git repository to be used when building. Only present if defined. |
| SOURCE_REF | The Git reference to be built. |
| ORIGIN_VERSION | The version of the OpenShift Container Platform master that created this build object. |
| OUTPUT_REGISTRY | The container image registry to push the image to. |
| OUTPUT_IMAGE | The container image tag name for the image being built. |
| PUSH_DOCKERCFG_PATH | The path to the container registry credentials for running a push operation. |

5.3.4.2. Custom builder workflow

Although custom builder image authors have great flexibility in defining the build process, your builder image must still follow these required steps to run a build seamlessly inside OpenShift Container Platform:

- The Build object definition contains all the necessary information about input parameters for the build.

- Run the build process.

- If your build produces an image, push it to the build’s output location if it is defined. Other output locations can be passed with environment variables.
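The steps above can be sketched as the skeleton of a builder entrypoint. This sketch assumes the build pod provides the SOURCE_REPOSITORY, SOURCE_REF, SOURCE_CONTEXT_DIR, OUTPUT_REGISTRY, and OUTPUT_IMAGE environment variables; the function names are hypothetical, and the actual clone, build, and push commands are left out because they depend on the tooling in your builder image.

```shell
#!/bin/sh
# Compute the push target from the output variables. Fails when no
# output location is defined, in which case nothing should be pushed.
output_tag() {
    if [ -z "${OUTPUT_REGISTRY:-}" ] || [ -z "${OUTPUT_IMAGE:-}" ]; then
        echo "no output location defined" >&2
        return 1
    fi
    echo "${OUTPUT_REGISTRY}/${OUTPUT_IMAGE}"
}

# Summarize what the builder would do with its inputs.
describe_build() {
    echo "source: ${SOURCE_REPOSITORY:-<none>} ref: ${SOURCE_REF:-<default>}"
    echo "context dir: ${SOURCE_CONTEXT_DIR:-.}"
    if tag=$(output_tag 2>/dev/null); then
        echo "push to: $tag"
    else
        echo "push: skipped"
    fi
}
```

A real entrypoint would replace the echo lines with the commands that fetch the source, run the build, and push the resulting image to the computed tag.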

5.4. Pipeline build

The Pipeline build strategy is deprecated in OpenShift Container Platform 4. Equivalent and improved functionality is present in the OpenShift Pipelines based on Tekton.

Jenkins images on OpenShift are fully supported and users should follow Jenkins user documentation for defining their Jenkinsfile in a job or store it in a Source Control Management system.

The Pipeline build strategy allows developers to define a Jenkins pipeline for execution by the Jenkins pipeline plug-in. The build can be started, monitored, and managed by OpenShift Container Platform in the same way as any other build type.

Pipeline workflows are defined in a Jenkinsfile, either embedded directly in the build configuration, or supplied in a Git repository and referenced by the build configuration.

5.4.1. Understanding OpenShift Container Platform pipelines

Pipelines give you control over building, deploying, and promoting your applications on OpenShift Container Platform. Using a combination of the Jenkins Pipeline Build Strategy, Jenkinsfiles, and the OpenShift Container Platform Domain Specific Language (DSL) (provided by the Jenkins Client Plug-in), you can create advanced build, test, deploy, and promote pipelines for any scenario.

OpenShift Container Platform Jenkins Sync Plugin