This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Pipelines

Configuring and using Pipelines in OpenShift Container Platform

Abstract

Chapter 1. Understanding OpenShift Pipelines

OpenShift Pipelines is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview/.

Red Hat OpenShift Pipelines is a cloud-native, continuous integration and continuous delivery (CI/CD) solution based on Kubernetes resources. It uses Tekton building blocks to automate deployments across multiple platforms by abstracting away the underlying implementation details. Tekton introduces a number of standard Custom Resource Definitions (CRDs) for defining CI/CD pipelines that are portable across Kubernetes distributions.

1.1. Key features

- Red Hat OpenShift Pipelines is a serverless CI/CD system that runs Pipelines with all the required dependencies in isolated containers.

- Red Hat OpenShift Pipelines is designed for decentralized teams that work on microservice-based architecture.

- Red Hat OpenShift Pipelines uses standard CI/CD pipeline definitions that are easy to extend and integrate with the existing Kubernetes tools, enabling you to scale on demand.

- You can use Red Hat OpenShift Pipelines to build images with Kubernetes tools such as Source-to-Image (S2I), Buildah, Buildpacks, and Kaniko that are portable across any Kubernetes platform.

- You can use the OpenShift Container Platform Developer Console to create Tekton resources, view logs of Pipeline runs, and manage pipelines in your OpenShift Container Platform namespaces.

1.2. Red Hat OpenShift Pipelines concepts

Red Hat OpenShift Pipelines provides a set of standard Custom Resource Definitions (CRDs) that act as the building blocks from which you can assemble a CI/CD pipeline for your application.

- Task

- A Task is the smallest configurable unit in a Pipeline. It is essentially a function of inputs and outputs that form the Pipeline build. It can run individually or as a part of a Pipeline. A Pipeline includes one or more Tasks, where each Task consists of one or more Steps. Steps are a series of commands that are sequentially executed by the Task.

- Pipeline

- A Pipeline consists of a series of Tasks that are executed to construct complex workflows that automate the build, deployment, and delivery of applications. It is a collection of PipelineResources, parameters, and one or more Tasks. A Pipeline interacts with the outside world by using PipelineResources, which are added to Tasks as inputs and outputs.

- PipelineRun

- A PipelineRun is the running instance of a Pipeline. A PipelineRun initiates a Pipeline and manages the creation of a TaskRun for each Task being executed in the Pipeline.

- TaskRun

- A TaskRun is automatically created by a PipelineRun for each Task in a Pipeline. It is the result of running an instance of a Task in a Pipeline. It can also be manually created if a Task runs outside of a Pipeline.

- Workspace

- A Workspace is a storage volume that a Task requires at runtime to receive input or provide output. A Task or Pipeline declares the Workspace, and a TaskRun or PipelineRun provides the actual location of the storage volume, which mounts on the declared Workspace. This makes the Task flexible, reusable, and allows the Workspaces to be shared across multiple Tasks.

- Trigger

- A Trigger captures an external event, such as a Git pull request, and processes the event payload to extract key pieces of information. This extracted information is then mapped to a set of predefined parameters, which trigger a series of tasks that may involve creation and deployment of Kubernetes resources. You can use Triggers along with Pipelines to create full-fledged CI/CD systems where the execution is defined entirely through Kubernetes resources.

- Condition

- A Condition refers to a validation or check, which is executed before a Task is run in your Pipeline. Conditions are like if statements which perform logical tests, with a return value of True or False. A Task is executed if all Conditions return True, but if any of the Conditions fail, the Task and all subsequent Tasks are skipped. You can use Conditions in your Pipeline to create complex workflows covering multiple scenarios.

1.3. Detailed OpenShift Pipeline Concepts

This guide provides a detailed view of the various Pipeline concepts.

1.3.1. Tasks

Tasks are the building blocks of a Pipeline and consist of sequentially executed Steps. Tasks are reusable and can be used in multiple Pipelines.

Steps are a series of commands that achieve a specific goal, such as building an image. Every Task runs as a pod and each Step runs in its own container within the same pod. Because Steps run within the same pod, they have access to the same volumes for caching files, ConfigMaps, and Secrets.

The following example shows the apply-manifests Task.

This Task starts the pod and runs a container inside that pod using the maven:3.6.0-jdk-8-slim image to run the specified commands. It receives an input directory called workspace-git that contains the source code of the application.

The Task only declares the placeholder for the Git repository; it does not specify which Git repository to use. This allows Tasks to be reusable for multiple Pipelines and purposes.
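The example manifest itself is not reproduced in this text. As a rough illustration only, a Task matching the description above could be sketched as follows. The Workspace is assumed to be named workspace-git, and the Step name and command are hypothetical placeholders rather than the contents of the real apply-manifests Task.

```yaml
# Sketch only: values are assumptions drawn from the surrounding description.
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: apply-manifests
spec:
  workspaces:
    # Placeholder for the source code; no Git repository is named here.
    - name: workspace-git
  steps:
    - name: build                      # hypothetical Step name
      image: maven:3.6.0-jdk-8-slim    # image named in the description above
      workingDir: $(workspaces.workspace-git.path)
      command: ["mvn"]                 # hypothetical command
      args: ["--version"]
```

Because the Task names only a Workspace, the TaskRun or PipelineRun that runs it decides which storage, and therefore which repository checkout, is mounted there.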

Red Hat OpenShift Pipelines 1.3 and earlier versions in Technology Preview (TP) allowed users to create a task without verifying their Security Context Constraints (SCC). Thus, any authenticated user could create a task using a container running with a privileged SCC.

To avoid such security issues in the production scenario, do not use Pipelines versions that are in TP. Instead, consider upgrading the Operator to generally available versions such as Pipelines 1.4 or later.

1.3.2. TaskRun

A TaskRun instantiates a Task for execution with specific inputs, outputs, and execution parameters on a cluster. It can be invoked on its own or as part of a PipelineRun.

A Task consists of one or more Steps that execute container images, and each container image performs a specific piece of build work. A TaskRun executes the Steps in a Task in the specified order, until all Steps execute successfully or a failure occurs.

The following example shows a TaskRun that runs the apply-manifests Task with the relevant input parameters:

- 1

- TaskRun API version v1beta1.

- 2

- Specifies the type of Kubernetes object. In this example, TaskRun.

- 3

- Unique name to identify this TaskRun.

- 4

- Definition of the TaskRun. For this TaskRun, the Task and the required workspace are specified.

- 5

- Name of the Task reference used for this TaskRun. This TaskRun executes the apply-manifests Task.

- 6

- Workspace used by the TaskRun.
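The callouts above can be read against a manifest along the following lines. This is a sketch, not the original example: the TaskRun name, the Workspace name source, and the claim name source-pvc are assumptions, and the numbered comments correspond to the callout numbers.

```yaml
apiVersion: tekton.dev/v1beta1        # 1
kind: TaskRun                         # 2
metadata:
  name: apply-manifests-taskrun       # 3 (assumed name)
spec:                                 # 4
  serviceAccountName: pipeline
  taskRef:                            # 5
    kind: Task
    name: apply-manifests
  workspaces:                         # 6
    - name: source                    # assumed Workspace name
      persistentVolumeClaim:
        claimName: source-pvc         # hypothetical existing claim
```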

1.3.3. Pipelines

A Pipeline is a collection of Task resources arranged in a specific order of execution. You can define a CI/CD workflow for your application using pipelines containing one or more tasks.

A Pipeline resource definition consists of a number of fields or attributes, which together enable the pipeline to accomplish a specific goal. Each Pipeline resource definition must contain at least one Task resource, which ingests specific inputs and produces specific outputs. The pipeline definition can also optionally include Conditions, Workspaces, Parameters, or Resources depending on the application requirements.

The following example shows the build-and-deploy pipeline, which builds an application image from a Git repository using the buildah ClusterTask resource:

- 1

- Pipeline API version v1beta1.

- 2

- Specifies the type of Kubernetes object. In this example, Pipeline.

- 3

- Unique name of this Pipeline.

- 4

- Specifies the definition and structure of the Pipeline.

- 5

- Workspaces used across all the Tasks in the Pipeline.

- 6

- Parameters used across all the Tasks in the Pipeline.

- 7

- Specifies the list of Tasks used in the Pipeline.

- 8

- Task build-image, which uses the buildah ClusterTask to build application images from a given Git repository.

- 9

- Task apply-manifests, which uses a user-defined Task with the same name.

- 10

- Specifies the sequence in which Tasks are run in a Pipeline. In this example, the apply-manifests Task is run only after the build-image Task is completed.
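A shortened sketch of such a Pipeline, with comments numbered to match the callouts, might look like the following. The parameter list and the buildah parameter name IMAGE are assumptions taken from the commands used later in this guide; a complete pipeline would normally also contain tasks that clone the repository and update the deployment.

```yaml
apiVersion: tekton.dev/v1beta1          # 1
kind: Pipeline                          # 2
metadata:
  name: build-and-deploy                # 3
spec:                                   # 4
  workspaces:                           # 5
    - name: shared-workspace
  params:                               # 6
    - name: deployment-name
      type: string
    - name: git-url
      type: string
    - name: IMAGE
      type: string
  tasks:                                # 7
    - name: build-image                 # 8
      taskRef:
        name: buildah
        kind: ClusterTask
      params:
        - name: IMAGE
          value: $(params.IMAGE)
      workspaces:
        - name: source
          workspace: shared-workspace
    - name: apply-manifests             # 9
      taskRef:
        name: apply-manifests
      workspaces:
        - name: source
          workspace: shared-workspace
      runAfter:                         # 10
        - build-image
```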

1.3.4. PipelineRun

A PipelineRun instantiates a Pipeline for execution with specific inputs, outputs, and execution parameters on a cluster. A corresponding TaskRun is created for each Task automatically in the PipelineRun.

All the Tasks in the Pipeline are executed in the defined sequence until all Tasks are successful or a Task fails. The status field tracks and stores the progress of each TaskRun in the PipelineRun for monitoring and auditing purposes.

The following example shows a PipelineRun to run the build-and-deploy Pipeline with relevant resources and parameters:

- 1

- PipelineRun API version v1beta1.

- 2

- Specifies the type of Kubernetes object. In this example, PipelineRun.

- 3

- Unique name to identify this PipelineRun.

- 4

- Name of the Pipeline to be run. In this example, build-and-deploy.

- 5

- Specifies the list of parameters required to run the Pipeline.

- 6

- Workspace used by the PipelineRun.
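As an illustration of these callouts, a PipelineRun could be sketched as below. The parameter values are borrowed from the tkn pipeline start command shown later in this guide; the binding to an existing claim named source-pvc is an assumption made to keep the sketch short.

```yaml
apiVersion: tekton.dev/v1beta1              # 1
kind: PipelineRun                           # 2
metadata:
  name: build-deploy-api-pipelinerun        # 3
spec:
  pipelineRef:
    name: build-and-deploy                  # 4
  params:                                   # 5
    - name: deployment-name
      value: vote-api
    - name: git-url
      value: http://github.com/openshift-pipelines/vote-api.git
    - name: IMAGE
      value: image-registry.openshift-image-registry.svc:5000/pipelines-tutorial/vote-api
  workspaces:                               # 6
    - name: shared-workspace
      persistentVolumeClaim:
        claimName: source-pvc               # hypothetical existing claim
```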

1.3.5. Workspaces

It is recommended that you use Workspaces instead of PipelineResources in OpenShift Pipelines, as PipelineResources are difficult to debug, limited in scope, and make Tasks less reusable.

Workspaces declare shared storage volumes that a Task in a Pipeline needs at runtime. Instead of specifying the actual location of the volumes, Workspaces enable you to declare the filesystem or parts of the filesystem that would be required at runtime. You must provide the specific location details of the volume that is mounted into that Workspace in a TaskRun or a PipelineRun. This separation of volume declaration from runtime storage volumes makes the Tasks reusable, flexible, and independent of the user environment.

With Workspaces, you can:

- Store Task inputs and outputs

- Share data among Tasks

- Use them as a mount point for credentials held in Secrets

- Use them as a mount point for configurations held in ConfigMaps

- Use them as a mount point for common tools shared by an organization

- Create a cache of build artifacts that speeds up jobs

You can specify Workspaces in the TaskRun or PipelineRun using:

- A read-only ConfigMap or Secret

- An existing PersistentVolumeClaim shared with other Tasks

- A PersistentVolumeClaim from a provided VolumeClaimTemplate

- An emptyDir that is discarded when the TaskRun completes
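The binding types listed above can be compared side by side in a single TaskRun. This is a sketch under stated assumptions: the Task example-task and every Workspace, ConfigMap, Secret, and claim name are hypothetical, and the storage size is arbitrary.

```yaml
apiVersion: tekton.dev/v1beta1
kind: TaskRun
metadata:
  name: workspace-binding-example     # hypothetical
spec:
  taskRef:
    name: example-task                # hypothetical Task that declares these Workspaces
  workspaces:
    - name: config                    # read-only ConfigMap
      configMap:
        name: example-config
    - name: credentials               # read-only Secret
      secret:
        secretName: example-secret
    - name: shared                    # existing PersistentVolumeClaim
      persistentVolumeClaim:
        claimName: example-pvc
    - name: generated                 # claim created from a template for this run
      volumeClaimTemplate:
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
    - name: scratch                   # discarded when the TaskRun completes
      emptyDir: {}
```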

The following example shows a code snippet of the build-and-deploy Pipeline, which declares a shared-workspace Workspace for the build-image and apply-manifests Tasks as defined in the Pipeline.

- 1

- List of Workspaces shared between the Tasks defined in the Pipeline. A Pipeline can define as many Workspaces as required. In this example, only one Workspace named shared-workspace is declared.

- 2

- Definition of Tasks used in the Pipeline. This snippet defines two Tasks, build-image and apply-manifests, which share a common Workspace.

- 3

- List of Workspaces used in the build-image Task. A Task definition can include as many Workspaces as it requires. However, it is recommended that a Task uses at most one writable Workspace.

- 4

- Name that uniquely identifies the Workspace used in the Task. This Task uses one Workspace named source.

- 5

- Name of the Pipeline Workspace used by the Task. Note that the Workspace source in turn uses the Pipeline Workspace named shared-workspace.

- 6

- List of Workspaces used in the apply-manifests Task. Note that this Task shares the source Workspace with the build-image Task.
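The Workspace wiring described by these callouts can be sketched as the following fragment of a Pipeline spec. Only the Workspace-related fields are shown, and the numbered comments map to the callouts; the rest of the manifest is assumed.

```yaml
spec:
  workspaces:                             # 1
    - name: shared-workspace
  tasks:                                  # 2
    - name: build-image
      taskRef:
        name: buildah
        kind: ClusterTask
      workspaces:                         # 3
        - name: source                    # 4
          workspace: shared-workspace     # 5
    - name: apply-manifests
      taskRef:
        name: apply-manifests
      workspaces:                         # 6
        - name: source
          workspace: shared-workspace
      runAfter:
        - build-image
```

Both Tasks mount the same Pipeline Workspace under their own Workspace name source, which is how files written by build-image become visible to apply-manifests.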

Workspaces help tasks share data, and allow you to specify one or more volumes that each task in the pipeline requires during execution. You can create a persistent volume claim or provide a volume claim template that creates a persistent volume claim for you.

The following code snippet of the build-deploy-api-pipelinerun PipelineRun uses a volume claim template to create a persistent volume claim for defining the storage volume for the shared-workspace Workspace used in the build-and-deploy Pipeline.

- 1

- Specifies the list of Pipeline Workspaces for which volume binding will be provided in the PipelineRun.

- 2

- The name of the Workspace in the Pipeline for which the volume is being provided.

- 3

- Specifies a volume claim template that creates a persistent volume claim to define the storage volume for the workspace.
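A fragment along these lines would match the three callouts. It is a sketch: the access mode and the 500Mi request are assumed values, not requirements stated in this guide.

```yaml
spec:
  workspaces:                        # 1
    - name: shared-workspace         # 2
      volumeClaimTemplate:           # 3
        spec:
          accessModes:
            - ReadWriteOnce          # assumed access mode
          resources:
            requests:
              storage: 500Mi         # assumed size
```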

1.3.6. Triggers

Use Triggers in conjunction with pipelines to create a full-fledged CI/CD system where Kubernetes resources define the entire CI/CD execution. Triggers capture the external events and process them to extract key pieces of information. Mapping this event data to a set of predefined parameters triggers a series of tasks that can then create and deploy Kubernetes resources and instantiate the pipeline.

For example, you define a CI/CD workflow using Red Hat OpenShift Pipelines for your application. The pipeline must start for any new changes to take effect in the application repository. Triggers automate this process by capturing and processing any change event and by triggering a pipeline run that deploys the new image with the latest changes.

Triggers consist of the following main resources that work together to form a reusable, decoupled, and self-sustaining CI/CD system:

- The TriggerBinding resource validates events, extracts the fields from an event payload, and stores them as parameters.

- The TriggerTemplate resource acts as a standard for the way resources must be created. It specifies the way parameterized data from the TriggerBinding resource should be used. A trigger template receives input from the trigger binding, and then performs a series of actions that results in creation of new pipeline resources, and initiation of a new pipeline run.

- The EventListener resource provides an endpoint, or an event sink, that listens for incoming HTTP-based events with a JSON payload. It extracts event parameters from each TriggerBinding resource, and then processes this data to create Kubernetes resources as specified by the corresponding TriggerTemplate resource. The EventListener resource also performs lightweight event processing or basic filtering on the payload using event interceptors, which identify the type of payload and optionally modify it. Currently, pipeline triggers support four types of interceptors: Webhook Interceptors, GitHub Interceptors, GitLab Interceptors, and Common Expression Language (CEL) Interceptors.

- The Trigger resource connects the TriggerBinding and TriggerTemplate resources, and this Trigger resource is referenced in the EventListener specification.

The following example shows a code snippet of the TriggerBinding resource, which extracts the Git repository information from the received event payload:

- 1

- The API version of the TriggerBinding resource. In this example, v1alpha1.

- 2

- Specifies the type of Kubernetes object. In this example, TriggerBinding.

- 3

- Unique name to identify the TriggerBinding resource.

- 4

- List of parameters which will be extracted from the received event payload and passed to the TriggerTemplate resource. In this example, the Git repository URL, name, and revision are extracted from the body of the event payload.
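A TriggerBinding matching these callouts could be sketched as follows. The resource name vote-app, the parameter names, and the payload paths (which assume a GitHub push event body) are illustrative assumptions.

```yaml
apiVersion: triggers.tekton.dev/v1alpha1    # 1
kind: TriggerBinding                        # 2
metadata:
  name: vote-app                            # 3 (assumed name)
spec:
  params:                                   # 4
    - name: git-repo-url
      value: $(body.repository.url)
    - name: git-repo-name
      value: $(body.repository.name)
    - name: git-revision
      value: $(body.head_commit.id)
```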

The following example shows a code snippet of a TriggerTemplate resource, which creates a pipeline run using the Git repository information received from the TriggerBinding resource you just created:

- 1

- The API version of the TriggerTemplate resource. In this example, v1alpha1.

- 2

- Specifies the type of Kubernetes object. In this example, TriggerTemplate.

- 3

- Unique name to identify the TriggerTemplate resource.

- 4

- Parameters supplied by the TriggerBinding or EventListener resources.

- 5

- List of templates that specify the way resources must be created using the parameters received through the TriggerBinding or EventListener resources.
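The following sketch shows one way a TriggerTemplate with these fields might be laid out. The resource name vote-app, the parameter names, the default revision, and the claim size are assumptions; the $(tt.params.<name>) substitution syntax is the form used by recent v1alpha1 Triggers releases, and older releases may expect a different prefix.

```yaml
apiVersion: triggers.tekton.dev/v1alpha1    # 1
kind: TriggerTemplate                       # 2
metadata:
  name: vote-app                            # 3 (assumed name)
spec:
  params:                                   # 4
    - name: git-repo-url
      description: The Git repository URL
    - name: git-revision
      description: The Git revision
      default: master                       # assumed default
    - name: git-repo-name
      description: The name of the deployment to be created or patched
  resourcetemplates:                        # 5
    - apiVersion: tekton.dev/v1beta1
      kind: PipelineRun
      metadata:
        generateName: build-deploy-$(tt.params.git-repo-name)-
      spec:
        serviceAccountName: pipeline
        pipelineRef:
          name: build-and-deploy
        params:
          - name: deployment-name
            value: $(tt.params.git-repo-name)
          - name: git-url
            value: $(tt.params.git-repo-url)
          - name: IMAGE
            value: image-registry.openshift-image-registry.svc:5000/pipelines-tutorial/$(tt.params.git-repo-name)
        workspaces:
          - name: shared-workspace
            volumeClaimTemplate:
              spec:
                accessModes:
                  - ReadWriteOnce
                resources:
                  requests:
                    storage: 500Mi
```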

The following example shows a code snippet of a Trigger resource, named vote-trigger, that connects the TriggerBinding and TriggerTemplate resources.

- 1

- The API version of the Trigger resource. In this example, v1alpha1.

- 2

- Specifies the type of Kubernetes object. In this example, Trigger.

- 3

- Unique name to identify the Trigger resource.

- 4

- Service account name to be used.

- 5

- Name of the TriggerBinding resource to be connected to the TriggerTemplate resource.

- 6

- Name of the TriggerTemplate resource to be connected to the TriggerBinding resource.
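A Trigger of this shape might be written as in the sketch below. The binding and template name vote-app is an assumption, and the template reference is written with the name field as assumed for the v1alpha1 API of this period; other Triggers releases may use a different field for the template reference.

```yaml
apiVersion: triggers.tekton.dev/v1alpha1    # 1
kind: Trigger                               # 2
metadata:
  name: vote-trigger                        # 3
spec:
  serviceAccountName: pipeline              # 4
  bindings:
    - ref: vote-app                         # 5 (assumed TriggerBinding name)
  template:
    name: vote-app                          # 6 (assumed TriggerTemplate name)
```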

The following example shows an EventListener resource, which references the Trigger resource named vote-trigger.

- 1

- The API version of the EventListener resource. In this example, v1alpha1.

- 2

- Specifies the type of Kubernetes object. In this example, EventListener.

- 3

- Unique name to identify the EventListener resource.

- 4

- Service account name to be used.

- 5

- Name of the Trigger resource referenced by the EventListener resource.
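An EventListener that references the vote-trigger Trigger could be sketched as follows. The listener name vote-app is an assumption; the service account pipeline is the one the Operator is described as creating elsewhere in this guide.

```yaml
apiVersion: triggers.tekton.dev/v1alpha1    # 1
kind: EventListener                         # 2
metadata:
  name: vote-app                            # 3 (assumed name)
spec:
  serviceAccountName: pipeline              # 4
  triggers:
    - triggerRef: vote-trigger              # 5
```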

Chapter 2. Installing OpenShift Pipelines

This guide walks cluster administrators through the process of installing the Red Hat OpenShift Pipelines Operator to an OpenShift Container Platform cluster.

The Red Hat OpenShift Pipelines Operator is supported for installation in a restricted network environment. For more information, see Using Operator Lifecycle Manager on restricted networks.

Prerequisites

- You have access to an OpenShift Container Platform cluster using an account with cluster-admin permissions.

- You have installed oc CLI.

- You have installed OpenShift Pipelines (tkn) CLI on your local system.

2.1. Installing the Red Hat OpenShift Pipelines Operator in web console

You can install Red Hat OpenShift Pipelines using the Operator listed in the OpenShift Container Platform OperatorHub. When you install the Red Hat OpenShift Pipelines Operator, the Custom Resources (CRs) required for the Pipelines configuration are automatically installed along with the Operator.

Procedure

- In the Administrator perspective of the web console, navigate to Operators → OperatorHub.

- Use the Filter by keyword box to search for Red Hat OpenShift Pipelines Operator in the catalog. Click the OpenShift Pipelines Operator tile.

Note: Ensure that you do not select the Community version of the OpenShift Pipelines Operator.

- Read the brief description about the Operator on the Red Hat OpenShift Pipelines Operator page. Click Install.

- On the Install Operator page:

  - Select All namespaces on the cluster (default) for the Installation Mode. This mode installs the Operator in the default openshift-operators namespace, which enables the Operator to watch and be made available to all namespaces in the cluster.

  - Select Automatic for the Approval Strategy. This ensures that the future upgrades to the Operator are handled automatically by the Operator Lifecycle Manager (OLM). If you select the Manual approval strategy, OLM creates an update request. As a cluster administrator, you must then manually approve the OLM update request to update the Operator to the new version.

  - Select an Update Channel.

    - The ocp-<4.x> channel enables installation of the latest stable release of the Red Hat OpenShift Pipelines Operator.

    - The preview channel enables installation of the latest preview version of the Red Hat OpenShift Pipelines Operator, which may contain features that are not yet available from the 4.x update channel.

- Click Install. You will see the Operator listed on the Installed Operators page.

Note: The Operator is installed automatically into the openshift-operators namespace.

- Verify that the Status is set to Succeeded Up to date to confirm successful installation of Red Hat OpenShift Pipelines Operator.

2.2. Installing the OpenShift Pipelines Operator using the CLI

You can install Red Hat OpenShift Pipelines Operator from the OperatorHub using the CLI.

Procedure

- Create a Subscription object YAML file, for example, sub.yaml, to subscribe a namespace to the Red Hat OpenShift Pipelines Operator.

- Create the Subscription object:

$ oc apply -f sub.yaml

The Red Hat OpenShift Pipelines Operator is now installed in the default target namespace openshift-operators.
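For reference, a sub.yaml for the procedure above might look like the following sketch. The package name, catalog source, and source namespace are the values commonly used for this Operator and should be treated as assumptions to verify against your cluster; <channel_name> is a placeholder for one of the update channels described in the web console procedure.

```yaml
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: openshift-pipelines-operator          # assumed Subscription name
  namespace: openshift-operators              # default target namespace
spec:
  channel: <channel_name>                     # placeholder, for example a stable or preview channel
  name: openshift-pipelines-operator-rh       # assumed package name of the Operator
  source: redhat-operators                    # assumed CatalogSource that provides the Operator
  sourceNamespace: openshift-marketplace      # assumed namespace of the CatalogSource
```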

Chapter 3. Uninstalling OpenShift Pipelines

Uninstalling the Red Hat OpenShift Pipelines Operator is a two-step process:

- Delete the Custom Resources (CRs) that were added by default when you installed the Red Hat OpenShift Pipelines Operator.

- Uninstall the Red Hat OpenShift Pipelines Operator.

Uninstalling only the Operator will not remove the Red Hat OpenShift Pipelines components created by default when the Operator is installed.

3.1. Deleting the Red Hat OpenShift Pipelines components and Custom Resources

Delete the Custom Resources (CRs) created by default during installation of the Red Hat OpenShift Pipelines Operator.

Procedure

- In the Administrator perspective of the web console, navigate to Administration → Custom Resource Definition.

- Type config.operator.tekton.dev in the Filter by name box to search for the Red Hat OpenShift Pipelines Operator CRs.

- Click CRD Config to see the Custom Resource Definition Details page.

- Click the Actions drop-down menu and select Delete Custom Resource Definition.

Note: Deleting the CRs will delete the Red Hat OpenShift Pipelines components, and all the Tasks and Pipelines on the cluster will be lost.

- Click Delete to confirm the deletion of the CRs.

3.2. Uninstalling the Red Hat OpenShift Pipelines Operator

Procedure

- From the Operators → OperatorHub page, use the Filter by keyword box to search for Red Hat OpenShift Pipelines Operator.

- Click the OpenShift Pipelines Operator tile. The Operator tile indicates it is installed.

- In the OpenShift Pipelines Operator descriptor page, click Uninstall.

Chapter 4. Creating CI/CD solutions for applications using OpenShift Pipelines

With Red Hat OpenShift Pipelines, you can create a customized CI/CD solution to build, test, and deploy your application.

To create a full-fledged, self-serving CI/CD pipeline for an application, you must perform the following tasks:

- Create custom tasks, or install existing reusable tasks.

- Create and define the delivery pipeline for your application.

- Provide a storage volume or filesystem that is attached to a workspace for the pipeline execution using one of the following approaches:

  - Specify a volume claim template that creates a persistent volume claim

  - Specify a persistent volume claim

- Create a PipelineRun object to instantiate and invoke the pipeline.

- Add triggers to capture events in the source repository.

This section uses the pipelines-tutorial example to demonstrate the preceding tasks. The example uses a simple application which consists of:

- A front-end interface, vote-ui, with the source code in the ui-repo Git repository.

- A back-end interface, vote-api, with the source code in the api-repo Git repository.

- The apply-manifests and update-deployment tasks in the pipelines-tutorial Git repository.

4.1. Prerequisites

- You have access to an OpenShift Container Platform cluster.

- You have installed OpenShift Pipelines using the Red Hat OpenShift Pipelines Operator listed in the OpenShift OperatorHub. Once installed, it is applicable to the entire cluster.

- You have installed OpenShift Pipelines CLI.

- You have forked the front-end ui-repo and back-end api-repo Git repositories using your GitHub ID, and have Administrator access to these repositories.

- Optional: You have cloned the pipelines-tutorial Git repository.

4.2. Creating a project and checking your Pipeline ServiceAccount

Procedure

- Log in to your OpenShift Container Platform cluster:

$ oc login -u <login> -p <password> https://openshift.example.com:6443

- Create a project for the sample application. For this example workflow, create the pipelines-tutorial project:

$ oc new-project pipelines-tutorial

Note: If you create a project with a different name, be sure to update the resource URLs used in the example with your project name.

- View the pipeline ServiceAccount:

Red Hat OpenShift Pipelines Operator adds and configures a ServiceAccount named pipeline that has sufficient permissions to build and push an image. This ServiceAccount is used by PipelineRun.

$ oc get serviceaccount pipeline

4.3. Creating Pipeline Tasks

Procedure

- Install the apply-manifests and update-deployment Task resources from the pipelines-tutorial repository, which contains a list of reusable tasks for pipelines:

$ oc create -f https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/01_pipeline/01_apply_manifest_task.yaml
$ oc create -f https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/01_pipeline/02_update_deployment_task.yaml

- Use the tkn task list command to list the tasks you created:

$ tkn task list

The output verifies that the apply-manifests and update-deployment Task resources were created:

NAME                DESCRIPTION   AGE
apply-manifests                   1 minute ago
update-deployment                 48 seconds ago

- Use the tkn clustertasks list command to list the Operator-installed additional ClusterTask resources, for example, buildah and s2i-python-3:

Note: You must use a privileged pod container to run the buildah ClusterTask resource because it requires a privileged security context. To learn more about security context constraints (SCC) for pods, see the Additional resources section.

$ tkn clustertasks list

The output lists the Operator-installed ClusterTask resources:

NAME        DESCRIPTION   AGE
buildah                   1 day ago
git-clone                 1 day ago
s2i-php                   1 day ago
tkn                       1 day ago

4.4. Assembling a Pipeline

A Pipeline represents a CI/CD flow and is defined by the Tasks to be executed. It is designed to be generic and reusable in multiple applications and environments.

A Pipeline specifies how the Tasks interact with each other and their order of execution using the from and runAfter parameters. It uses the workspaces field to specify one or more volumes that each Task in the Pipeline requires during execution.

In this section, you will create a Pipeline that takes the source code of the application from GitHub and then builds and deploys it on OpenShift Container Platform.

The Pipeline performs the following tasks for the back-end application vote-api and front-end application vote-ui:

- Clones the source code of the application from the Git repository by referring to the git-url and git-revision parameters.

- Builds the container image using the buildah ClusterTask.

- Pushes the image to the internal image registry by referring to the image parameter.

- Deploys the new image on OpenShift Container Platform by using the apply-manifests and update-deployment Tasks.

Procedure

- Copy the contents of the following sample Pipeline YAML file and save it:

The Pipeline definition abstracts away the specifics of the Git source repository and image registries. These details are added as params when a Pipeline is triggered and executed.

- Create the Pipeline:

$ oc create -f <pipeline-yaml-file-name.yaml>

Alternatively, you can also execute the YAML file directly from the Git repository:

$ oc create -f https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/01_pipeline/04_pipeline.yaml

- Use the tkn pipeline list command to verify that the Pipeline is added to the application:

$ tkn pipeline list

The output verifies that the build-and-deploy Pipeline was created:

NAME               AGE            LAST RUN   STARTED   DURATION   STATUS
build-and-deploy   1 minute ago   ---        ---       ---        ---

4.5. Running a Pipeline

A PipelineRun resource starts a pipeline and ties it to the Git and image resources that should be used for the specific invocation. It automatically creates and starts the TaskRun resources for each task in the pipeline.

Procedure

Start the pipeline for the back-end application:

tkn pipeline start build-and-deploy \ -w name=shared-workspace,volumeClaimTemplateFile=https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/01_pipeline/03_persistent_volume_claim.yaml \ -p deployment-name=vote-api \ -p git-url=http://github.com/openshift-pipelines/vote-api.git \ -p IMAGE=image-registry.openshift-image-registry.svc:5000/pipelines-tutorial/vote-api \$ tkn pipeline start build-and-deploy \ -w name=shared-workspace,volumeClaimTemplateFile=https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/01_pipeline/03_persistent_volume_claim.yaml \ -p deployment-name=vote-api \ -p git-url=http://github.com/openshift-pipelines/vote-api.git \ -p IMAGE=image-registry.openshift-image-registry.svc:5000/pipelines-tutorial/vote-api \Copy to Clipboard Copied! Toggle word wrap Toggle overflow The previous command uses a volume claim template, which creates a persistent volume claim for the pipeline execution.

To track the progress of the pipeline run, enter the following command::

tkn pipelinerun logs <pipelinerun_id> -f

$ tkn pipelinerun logs <pipelinerun_id> -fCopy to Clipboard Copied! Toggle word wrap Toggle overflow The <pipelinerun_id> in the above command is the ID for the

PipelineRunthat was returned in the output of the previous command.Start the Pipeline for the front-end application:

$ tkn pipeline start build-and-deploy \
    -w name=shared-workspace,volumeClaimTemplateFile=https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/01_pipeline/03_persistent_volume_claim.yaml \
    -p deployment-name=vote-ui \
    -p git-url=http://github.com/openshift-pipelines/vote-ui.git \
    -p IMAGE=image-registry.openshift-image-registry.svc:5000/pipelines-tutorial/vote-ui

To track the progress of the pipeline run, enter the following command:

$ tkn pipelinerun logs <pipelinerun_id> -f

The <pipelinerun_id> in the above command is the ID for the PipelineRun that was returned in the output of the previous command.

After a few minutes, use the tkn pipelinerun list command to verify that the Pipeline ran successfully by listing all the PipelineRuns:

$ tkn pipelinerun list

The output lists the PipelineRuns:

NAME                         STARTED      DURATION     STATUS
build-and-deploy-run-xy7rw   1 hour ago   2 minutes    Succeeded
build-and-deploy-run-z2rz8   1 hour ago   19 minutes   Succeeded

Get the application route:

$ oc get route vote-ui --template='http://{{.spec.host}}'

Note the output of the previous command. You can access the application using this route.

To rerun the last pipeline run, using the pipeline resources and service account of the previous pipeline, run:

$ tkn pipeline start build-and-deploy --last
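The verification step in this procedure relies on reading the STATUS column of the tkn pipelinerun list output by eye. As a minimal sketch, assuming the tabular layout shown in the sample output (run name in the first column, status in the last column, one header row), a small filter can print only the runs that did not succeed. The function name unsuccessful_runs and the canned sample data are hypothetical and are not part of tkn.

```shell
#!/bin/sh
# Hypothetical helper: reads `tkn pipelinerun list` output on stdin and
# prints the name of every run whose last column is not "Succeeded".
# Assumes the first line is the header row, as in the sample output.
unsuccessful_runs() {
  awk 'NR > 1 && NF > 0 && $NF != "Succeeded" { print $1 }'
}

# Canned output for illustration; the second run is altered to "Failed".
sample='NAME                         STARTED      DURATION     STATUS
build-and-deploy-run-xy7rw   1 hour ago   2 minutes    Succeeded
build-and-deploy-run-z2rz8   1 hour ago   19 minutes   Failed'

printf '%s\n' "$sample" | unsuccessful_runs
```

On a cluster, the same filter could be fed from the real command, for example tkn pipelinerun list | unsuccessful_runs. Empty output would then mean that every listed run reports Succeeded.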

4.6. Adding Triggers to a Pipeline

Triggers enable pipelines to respond to external GitHub events, such as push events and pull requests. After you assemble and start a Pipeline for the application, add the TriggerBinding, TriggerTemplate, Trigger, and EventListener resources to capture the GitHub events.

Procedure

Copy the content of the following sample TriggerBinding YAML file and save it:

Create the TriggerBinding resource:

$ oc create -f <triggerbinding-yaml-file-name.yaml>

Alternatively, you can create the TriggerBinding resource directly from the pipelines-tutorial Git repository:

$ oc create -f https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/03_triggers/01_binding.yaml

Copy the content of the following sample TriggerTemplate YAML file and save it:

The template specifies a volume claim template to create a persistent volume claim for defining the storage volume for the workspace. Therefore, you do not need to create a persistent volume claim to provide data storage.

Create the TriggerTemplate resource:

$ oc create -f <triggertemplate-yaml-file-name.yaml>

Alternatively, you can create the TriggerTemplate resource directly from the pipelines-tutorial Git repository:

$ oc create -f https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/03_triggers/02_template.yaml

Copy the contents of the following sample Trigger YAML file and save it:

Create the Trigger resource:

$ oc create -f <trigger-yaml-file-name.yaml>

Alternatively, you can create the Trigger resource directly from the pipelines-tutorial Git repository:

$ oc create -f https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/03_triggers/03_trigger.yaml

Copy the contents of the following sample EventListener YAML file and save it:

Alternatively, if you have not defined a trigger custom resource, add the binding and template spec to the EventListener YAML file, instead of referring to the name of the trigger:

Create the EventListener resource:

$ oc create -f <eventlistener-yaml-file-name.yaml>

Alternatively, you can create the EventListener resource directly from the pipelines-tutorial Git repository:

$ oc create -f https://raw.githubusercontent.com/openshift/pipelines-tutorial/release-tech-preview-3/03_triggers/04_event_listener.yaml

Expose the EventListener service as an OpenShift Container Platform route to make it publicly accessible:

$ oc expose svc el-vote-app

4.7. Creating Webhooks

Webhooks are HTTP POST messages that are received by the EventListeners whenever a configured event occurs in your repository. The event payload is then mapped to TriggerBindings, and processed by TriggerTemplates. The TriggerTemplates eventually start one or more PipelineRuns, leading to the creation and deployment of Kubernetes resources.

In this section, you will configure a Webhook URL on your forked Git repositories vote-ui and vote-api. This URL points to the publicly accessible EventListener service route.

Adding Webhooks requires administrative privileges to the repository. If you do not have administrative access to your repository, contact your system administrator for adding Webhooks.

Procedure

1. Get the Webhook URL:

   $ echo "URL: $(oc get route el-vote-app --template='http://{{.spec.host}}')"

   Note the URL obtained in the output.

2. Configure Webhooks manually on the front-end repository:

   - Open the front-end Git repository vote-ui in your browser.

   - Click Settings → Webhooks → Add Webhook.

   - On the Webhooks/Add Webhook page:

     - Enter the Webhook URL from step 1 in the Payload URL field.

     - Select application/json for the Content type.

     - Specify the secret in the Secret field.

     - Ensure that Just the push event is selected.

     - Select Active.

     - Click Add Webhook.

3. Repeat step 2 for the back-end repository vote-api.
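Before relying on GitHub to deliver events, it can help to send a test request to the EventListener route by hand. The following is a minimal sketch, not the tutorial's method: the helper names build_push_payload and send_test_event are hypothetical, and the JSON fields (repository.url, repository.name, head_commit.id) are assumptions about what a TriggerBinding might read from a push event. Adjust them to match your own TriggerBinding.

```shell
#!/bin/sh
# Hypothetical helper: builds a minimal JSON body shaped like a GitHub push event.
# The fields included here (repository.url, repository.name, head_commit.id)
# are assumptions; match them to whatever your TriggerBinding actually reads.
build_push_payload() {
  repo_url=$1
  repo_name=$2
  revision=$3
  printf '{"repository":{"url":"%s","name":"%s"},"head_commit":{"id":"%s"}}\n' \
    "$repo_url" "$repo_name" "$revision"
}

# Hypothetical helper: POSTs the payload to the EventListener route.
# Needs curl and network access to the route, so it is only defined here, not called.
send_test_event() {
  webhook_url=$1
  payload=$2
  curl -sS -X POST \
    -H 'Content-Type: application/json' \
    -H 'X-GitHub-Event: push' \
    -d "$payload" \
    "$webhook_url"
}

# Print a sample payload; the repository URL and commit ID are placeholders.
build_push_payload "https://github.com/example/vote-ui" "vote-ui" "0000000"
```

On a cluster, the payload could be passed to send_test_event together with the Webhook URL obtained in the first step of the procedure. If the Trigger validates the Webhook secret, an unsigned request such as this one is expected to be rejected, which still confirms that the route is reachable.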

4.8. Triggering a pipeline run

Whenever a push event occurs in the Git repository, the configured Webhook sends an event payload to the publicly exposed EventListener service route. The EventListener service of the application processes the payload, and passes it to the relevant TriggerBinding and TriggerTemplate resource pairs. The TriggerBinding resource extracts the parameters and the TriggerTemplate resource uses these parameters and specifies the way the resources must be created. This may rebuild and redeploy the application.

In this section, you push an empty commit to the front-end vote-ui repository, which then triggers the pipeline run.

Procedure

From the terminal, clone your forked Git repository vote-ui:

$ git clone git@github.com:<your GitHub ID>/vote-ui.git -b release-tech-preview-3

Push an empty commit:

$ git commit -m "empty-commit" --allow-empty && git push origin release-tech-preview-3

Check if the pipeline run was triggered:

$ tkn pipelinerun list

Notice that a new pipeline run was initiated.

Chapter 5. Working with Red Hat OpenShift Pipelines using the Developer perspective

You can use the Developer perspective of the OpenShift Container Platform web console to create CI/CD Pipelines for your software delivery process.

In the Developer perspective:

- Use the Add → Pipeline → Pipeline Builder option to create customized Pipelines for your application.

- Use the Add → From Git option to create Pipelines using operator-installed Pipeline templates and resources while creating an application on OpenShift Container Platform.

After you create the Pipelines for your application, you can view and visually interact with the deployed Pipelines in the Pipelines view. You can also use the Topology view to interact with the Pipelines created using the From Git option. You need to apply custom labels to a Pipeline created using the Pipeline Builder to see it in the Topology view.

Prerequisites

- You have access to an OpenShift Container Platform cluster and have switched to the Developer perspective.

- You have the OpenShift Pipelines Operator installed in your cluster.

- You are a cluster administrator or a user with create and edit permissions.

- You have created a project.

5.1. Constructing Pipelines using the Pipeline Builder

In the Developer perspective of the console, you can use the Add → Pipeline → Pipeline Builder option to:

- Construct a Pipeline flow using existing Tasks and ClusterTasks. When you install the OpenShift Pipelines Operator, it adds reusable Pipeline ClusterTasks to your cluster.

- Specify the type of resources required for the Pipeline Run, and if required, add additional parameters to the Pipeline.

- Reference these Pipeline resources in each of the Tasks in the Pipeline as input and output resources.

- The parameters for a Task are prepopulated based on the specifications of the Task. If required, reference any additional parameters added to the Pipeline in the Task.

Procedure

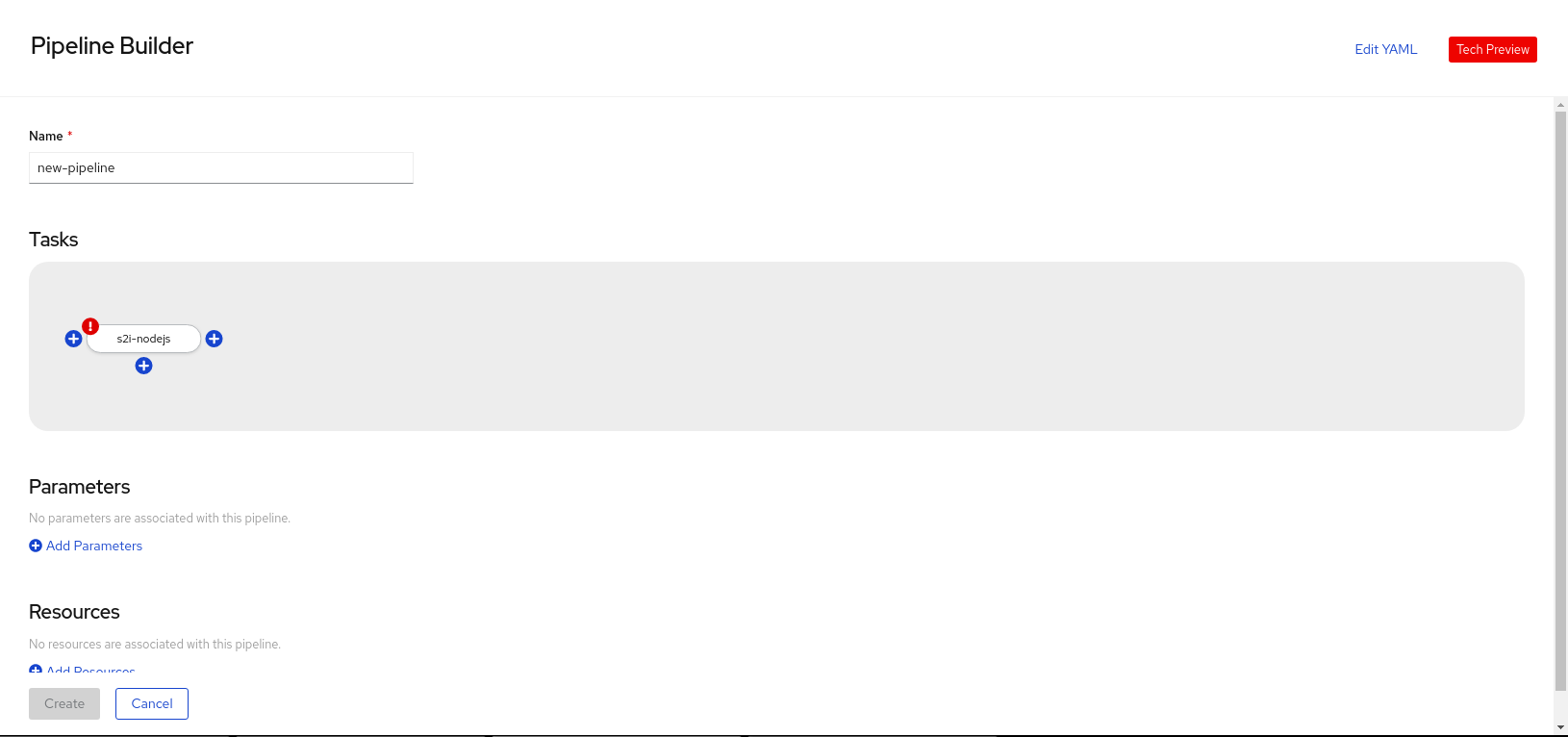

- In the Add view of the Developer perspective, click the Pipeline tile to see the Pipeline Builder page.

- Enter a unique name for the Pipeline.

Select a Task from the Select task list to add a Task to the Pipeline. This example uses the s2i-nodejs Task.

- To add sequential Tasks to the Pipeline, click the plus icon to the right or left of the Task, and from the Select task list, select the Task you want to add to the Pipeline. For this example, use the plus icon to the right of the s2i-nodejs Task to add an openshift-client Task.

To add a parallel Task to the existing Task, click the plus icon displayed below the Task, and from the Select Task list, select the parallel Task you want to add to the Pipeline.

Figure 5.1. Pipeline Builder

Click Add Resources to specify the name and type of resources that the Pipeline Run will use. These resources are then used by the Tasks in the Pipeline as inputs and outputs. For this example:

  - Add an input resource. In the Name field, enter Source, and from the Resource Type drop-down list, select Git.

  - Add an output resource. In the Name field, enter Img, and from the Resource Type drop-down list, select Image.

- The Parameters for a Task are prepopulated based on the specifications of the Task. If required, use the Add Parameters link to add additional parameters.

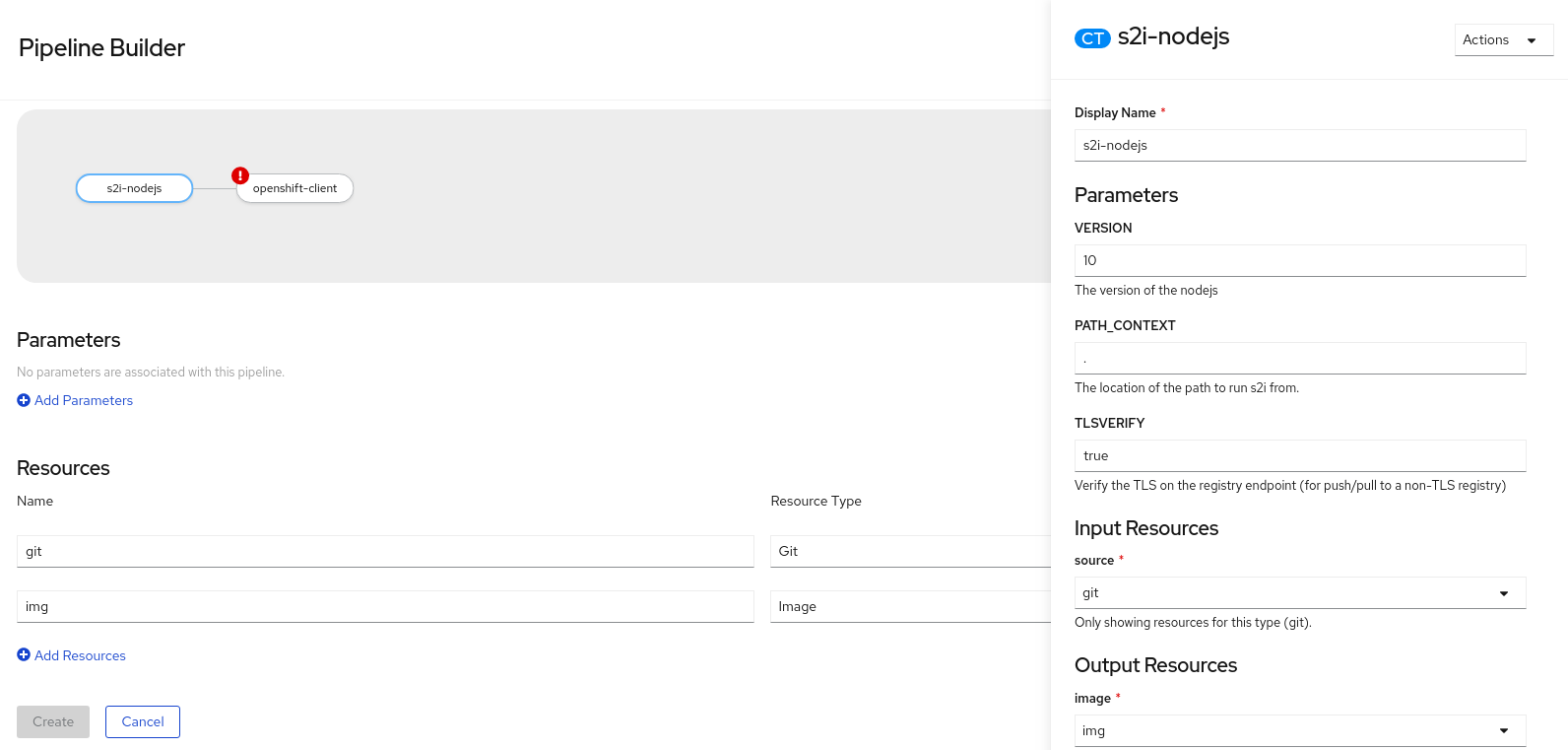

A Missing Resources warning is displayed on a Task if the resources for the Task are not specified. Click the s2i-nodejs Task to see the side panel with details for the Task.

Figure 5.2. Tasks details in Pipelines Builder

In the Task side panel, specify the resources and parameters for it:

- In the Input Resources → Source section, the Select Resources drop-down list displays the resources that you added to the Pipeline. For this example, select Source.

- In the Output Resources → Image section, click the Select Resources list, and select Img.

- If required, in the Parameters section, add more parameters to the default ones, by using the $(params.<param-name>) syntax.

- Similarly, add an input resource for the openshift-client Task.

- Click Create to create the Pipeline. You are redirected to the Pipeline Details page that displays the details of the created Pipeline.

- Click the Actions drop-down menu, and then click Start to start the Pipeline.

Optionally, you can also use the Edit YAML link, on the upper right of the Pipeline Builder page, to directly modify a Pipeline YAML file in the console. You can also use the operator-installed, reusable snippets and samples to create detailed Pipelines.

5.2. Creating applications with OpenShift Pipelines

To create Pipelines along with applications, use the From Git option in the Add view of the Developer perspective. For more information, see Creating applications using the Developer perspective.

5.3. Interacting with Pipelines using the Developer perspective

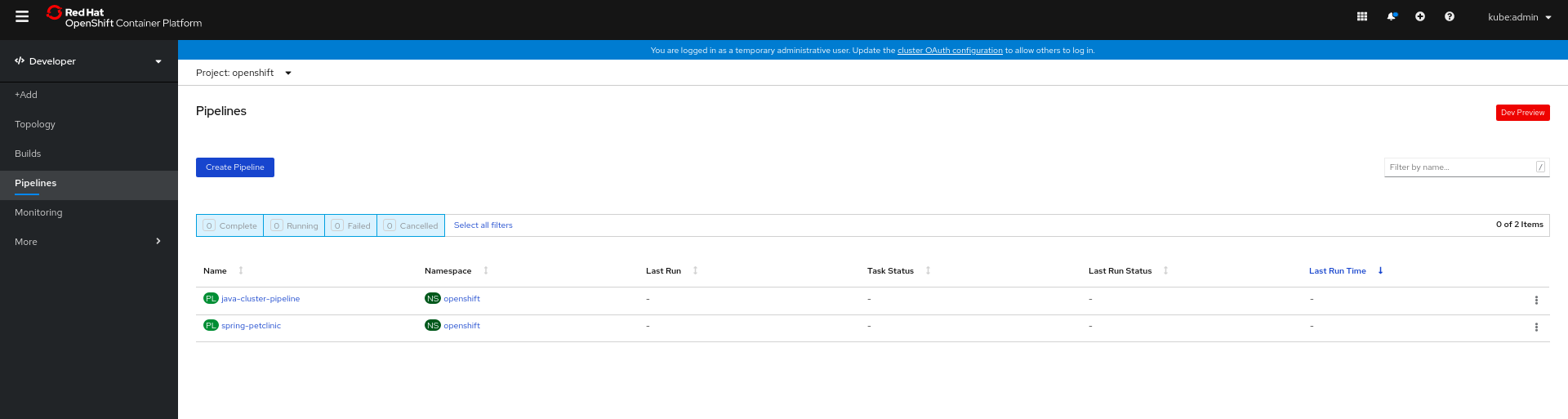

The Pipelines view in the Developer perspective lists all the pipelines in a project along with the following details:

- The namespace in which the pipeline was created

- The last pipeline run

- The status of the tasks in the pipeline run

- The status of the pipeline run

- The creation time of the last pipeline run

Procedure

In the Pipelines view of the Developer perspective, select a project from the Project drop-down list to see the Pipelines in that project.

Figure 5.3. Pipelines view in the Developer perspective

- Click the required Pipeline to see the Pipeline Details page. This page provides a visual representation of all the serial and parallel Tasks in the Pipeline. The Tasks are also listed at the lower right of the page. You can click the listed Tasks to view Task details.

Optionally, in the Pipeline Details page:

- Click the YAML tab to edit the YAML file for the Pipeline.

Click the Pipeline Runs tab to see the completed, running, or failed runs for the pipeline.

Note: The Details section of the Pipeline Run Details page displays a Log Snippet of the failed pipeline run. The Log Snippet provides a general error message and a snippet of the log. A link to the Logs section provides quick access to the details about the failed run. The Log Snippet is also displayed in the Details section of the Task Run Details page.

You can use the Options menu to stop a running pipeline, to rerun a pipeline using the same parameters and resources as that of the previous pipeline execution, or to delete a pipeline run.

- Click the Parameters tab to see the parameters defined in the pipeline. You can also add or edit additional parameters as required.

- Click the Resources tab to see the resources defined in the pipeline. You can also add or edit additional resources as required.

5.4. Starting Pipelines

After you create a Pipeline, you need to start it to execute the included Tasks in the defined sequence. You can start a Pipeline Run from the Pipelines view, Pipeline Details page, or the Topology view.

Procedure

To start a Pipeline using the Pipelines view:

- In the Pipelines view of the Developer perspective, click the Options menu adjoining a Pipeline, and select Start.

The Start Pipeline dialog box displays the Git Resources and the Image Resources based on the Pipeline definition.

Note: For Pipelines created using the From Git option, the Start Pipeline dialog box also displays an APP_NAME field in the Parameters section, and all the fields in the dialog box are prepopulated by the Pipeline templates.

- If you have resources in your namespace, the Git Resources and the Image Resources fields are prepopulated with those resources. If required, use the drop-downs to select or create the required resources and customize the Pipeline Run instance.

Optional: Modify the Advanced Options to add credentials to authenticate the specified private Git server or Docker registry.

- Under Advanced Options, click Show Credentials Options and select Add Secret.

In the Create Source Secret section, specify the following:

- A unique Secret Name for the secret.

- In the Designated provider to be authenticated section, specify the provider to be authenticated in the Access to field, and the base Server URL.

Select the Authentication Type and provide the credentials:

- For the Authentication Type Image Registry Credentials, specify the Registry Server Address that you want to authenticate, and provide your credentials in the Username, Password, and Email fields.

  Select Add Credentials if you want to specify an additional Registry Server Address.

- For the Authentication Type Basic Authentication, specify the values for the UserName and Password or Token fields.

- For the Authentication Type SSH Keys, specify the value for the SSH Private Key field.

- Select the check mark to add the secret.

You can add multiple secrets based upon the number of resources in your Pipeline.

- Click Start to start the PipelineRun.

The Pipeline Run Details page displays the Pipeline being executed. After the Pipeline starts, the Tasks and Steps within each Task are executed. You can:

- Hover over the Tasks to see the time taken for the execution of each Step.

- Click on a Task to see logs for each of the Steps in the Task.

Click the Logs tab to see the logs according to the execution sequence of the Tasks and use the Download button to download the logs to a text file.

Figure 5.4. Pipeline run

For Pipelines created using the From Git option, you can use the Topology view to interact with Pipelines after you start them:

Note: To see Pipelines created using the Pipeline Builder in the Topology view, customize the Pipeline labels to link the Pipeline with the application workload.

- On the left navigation panel, click Topology, and click on the application to see the Pipeline Runs listed in the side panel.

In the Pipeline Runs section, click Start Last Run to start a new Pipeline Run with the same parameters and resources as the previous ones. This option is disabled if a Pipeline Run has not been initiated.

Figure 5.5. Pipelines on the Topology view

In the Topology page, hover to the left of the application to see the status of the pipeline run for the application.

Note: The side panel of the application node in the Topology page displays a Log Snippet when a pipeline run fails on a specific task run. You can view the Log Snippet in the Pipeline Runs section, under the Resources tab. The Log Snippet provides a general error message and a snippet of the log. A link to the Logs section provides quick access to the details about the failed run.

5.5. Editing Pipelines

You can edit the Pipelines in your cluster using the Developer perspective of the web console:

Procedure

- In the Pipelines view of the Developer perspective, select the Pipeline you want to edit to see the details of the Pipeline. In the Pipeline Details page, click Actions and select Edit Pipeline.

In the Pipeline Builder page:

- You can add additional Tasks, parameters, or resources to the Pipeline.

- You can click the Task you want to modify to see the Task details in the side panel and modify the required Task details, such as the display name, parameters and resources.

- Alternatively, to delete the Task, click the Task, and in the side panel, click Actions and select Remove Task.

- Click Save to save the modified Pipeline.

5.6. Deleting Pipelines

You can delete the Pipelines in your cluster using the Developer perspective of the web console.

Procedure

- In the Pipelines view of the Developer perspective, click the Options menu adjoining a Pipeline, and select Delete Pipeline.

- In the Delete Pipeline confirmation prompt, click Delete to confirm the deletion.

Chapter 6. Red Hat OpenShift Pipelines release notes

Red Hat OpenShift Pipelines is a cloud-native CI/CD experience based on the Tekton project which provides:

- Standard Kubernetes-native pipeline definitions (CRDs).

- Serverless pipelines with no CI server management overhead.

- Extensibility to build images using any Kubernetes tool, such as S2I, Buildah, JIB, and Kaniko.

- Portability across any Kubernetes distribution.

- Powerful CLI for interacting with pipelines.

- Integrated user experience with the Developer perspective of the OpenShift Container Platform web console.

For an overview of Red Hat OpenShift Pipelines, see Understanding OpenShift Pipelines.

6.1. Getting support

If you experience difficulty with a procedure described in this documentation, visit the Red Hat Customer Portal to learn more about Red Hat Technology Preview features support scope.

For questions and feedback, you can send an email to the product team at pipelines-interest@redhat.com.

6.2. Release notes for Red Hat OpenShift Pipelines Technology Preview 1.2

6.2.1. New features

Red Hat OpenShift Pipelines Technology Preview (TP) 1.2 is now available on OpenShift Container Platform 4.6. Red Hat OpenShift Pipelines TP 1.2 is updated to support:

- Tekton Pipelines 0.16.3

- Tekton tkn CLI 0.13.1

- Tekton Triggers 0.8.1

- ClusterTasks based on Tekton Catalog 0.16

- IBM Power Systems on OpenShift Container Platform 4.6

- IBM Z and LinuxONE on OpenShift Container Platform 4.6

In addition to the fixes and stability improvements, here is a highlight of what’s new in OpenShift Pipelines 1.2.

6.2.1.1. Pipelines

- This release of Red Hat OpenShift Pipelines adds support for a disconnected installation.

  Note: Installations in restricted environments are currently not supported on IBM Power Systems, IBM Z, and LinuxONE.

- You can now use the when field, instead of conditions, to run a task only when certain criteria are met. The key components of WhenExpressions are Input, Operator, and Values. If all the WhenExpressions evaluate to True, then the task is run. If any of the WhenExpressions evaluate to False, the task is skipped.

- Step statuses are now updated if a task run is canceled or times out.

- Support for Git Large File Storage (LFS) is now available to build the base image used by git-init.

- You can now use the taskSpec field to specify metadata, such as labels and annotations, when a task is embedded in a pipeline.

- Cloud events are now supported by pipeline runs. Retries with backoff are now enabled for cloud events sent by the cloud event pipeline resource.

- You can now set a default Workspace configuration for any workspace that a Task resource declares, but that a TaskRun resource does not explicitly provide.

- Support is available for namespace variable interpolation for the PipelineRun namespace and TaskRun namespace.

- Validation for TaskRun objects is now added to check that not more than one persistent volume claim workspace is used when a TaskRun resource is associated with an Affinity Assistant. If more than one persistent volume claim workspace is used, the task run fails with a TaskRunValidationFailed condition. Note that by default, the Affinity Assistant is disabled in Red Hat OpenShift Pipelines, so you will need to enable the assistant to use it.

6.2.1.2. Pipelines CLI

- The tkn task describe, tkn taskrun describe, tkn clustertask describe, tkn pipeline describe, and tkn pipelinerun describe commands now:

  - Automatically select the Task, TaskRun, ClusterTask, Pipeline, and PipelineRun resource, respectively, if only one of them is present.

  - Display the results of the Task, TaskRun, ClusterTask, Pipeline, and PipelineRun resource in their outputs, respectively.

  - Display workspaces declared in the Task, TaskRun, ClusterTask, Pipeline, and PipelineRun resource in their outputs, respectively.

- You can now use the --prefix-name option with the tkn clustertask start command to specify a prefix for the name of a task run.

- Interactive mode support has now been provided to the tkn clustertask start command.

- You can now specify PodTemplate properties supported by pipelines using local or remote file definitions for TaskRun and PipelineRun objects.

- You can now use the --use-param-defaults option with the tkn clustertask start command to use the default values set in the ClusterTask configuration and create the task run.

- The --use-param-defaults flag for the tkn pipeline start command now prompts the interactive mode if the default values have not been specified for some of the parameters.

6.2.1.3. Triggers

- The Common Expression Language (CEL) function named parseYAML has been added to parse a YAML string into a map of strings.

- Error messages for parsing CEL expressions have been improved to make them more granular while evaluating expressions and when parsing the hook body for creating the evaluation environment.

- Support is now available for marshaling boolean values and maps if they are used as the values of expressions in a CEL overlay mechanism.

- The following fields have been added to the EventListener object:

  - The replicas field enables the event listener to run more than one pod by specifying the number of replicas in the YAML file.

  - The NodeSelector field enables the EventListener object to schedule the event listener pod to a specific node.

- Webhook interceptors can now parse the EventListener-Request-URL header to extract parameters from the original request URL being handled by the event listener.

- Annotations from the event listener can now be propagated to the deployment, services, and other pods. Note that custom annotations on services or deployment are overwritten, and hence, must be added to the event listener annotations so that they are propagated.

- Proper validation for replicas in the EventListener specification is now available for cases when a user specifies the spec.replicas values as negative or zero.

- You can now specify the TriggerCRD object inside the EventListener spec as a reference using the TriggerRef field to create the TriggerCRD object separately and then bind it inside the EventListener spec.

- Validation and defaults for the TriggerCRD object are now available.

6.2.2. Deprecated features

- The $(params) parameters are now removed and replaced by $(tt.params) to avoid confusion between the resourcetemplate and triggertemplate parameters.

- The ServiceAccount reference of the optional EventListenerTrigger-based authentication level has changed from an object reference to a ServiceAccountName string. This ensures that the ServiceAccount reference is in the same namespace as the EventListenerTrigger object.

- The Conditions custom resource definition (CRD) is now deprecated; use the WhenExpressions CRD instead.

- The PipelineRun.Spec.ServiceAccountNames object is being deprecated and replaced by the PipelineRun.Spec.TaskRunSpec[].ServiceAccountName object.
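The move from $(params) to $(tt.params) affects existing TriggerTemplate definitions. As a rough sketch, assuming the references are written in the form $(params.<name>), a sed filter can rewrite them in bulk. The function name migrate_tt_params is hypothetical, and the sample line is illustrative only.

```shell
#!/bin/sh
# Hypothetical helper: rewrites $(params.<name>) references to $(tt.params.<name>).
# Reads YAML on stdin and writes the result to stdout. Apply it only to
# TriggerTemplate definitions; Pipeline and Task files legitimately use $(params.<name>).
migrate_tt_params() {
  sed 's/\$(params\./$(tt.params./g'
}

# Illustrative input line, as it might appear in a TriggerTemplate.
printf '%s\n' 'value: $(params.git-revision)' | migrate_tt_params
```

The filter leaves references that already use $(tt.params.<name>) unchanged, so running it twice on the same file should be harmless. Review the result before applying it, because a TriggerTemplate can embed resources whose own $(params.<name>) references must stay as they are.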

6.2.3. Known issues

- This release of Red Hat OpenShift Pipelines adds support for a disconnected installation. However, some images used by the cluster tasks must be mirrored for them to work in disconnected clusters.

- Pipelines in the openshift namespace are not deleted after you uninstall the Red Hat OpenShift Pipelines Operator. Use the oc delete pipelines -n openshift --all command to delete the pipelines.

- Uninstalling the Red Hat OpenShift Pipelines Operator does not remove the event listeners.

  As a workaround, to remove the EventListener and Pod CRDs:

  Edit the EventListener object with the foregroundDeletion finalizers:

  $ oc patch el/<eventlistener_name> -p '{"metadata":{"finalizers":["foregroundDeletion"]}}' --type=merge

  For example:

  $ oc patch el/github-listener-interceptor -p '{"metadata":{"finalizers":["foregroundDeletion"]}}' --type=merge

  Delete the EventListener CRD:

  $ oc patch crd/eventlisteners.triggers.tekton.dev -p '{"metadata":{"finalizers":[]}}' --type=merge

- When you run a multi-arch container image task without command specification on an IBM Power Systems (ppc64le) or IBM Z (s390x) cluster, the TaskRun resource fails with the following error:

  Error executing command: fork/exec /bin/bash: exec format error

  As a workaround, use an architecture-specific container image or specify the sha256 digest to point to the correct architecture. To get the sha256 digest, enter:

  $ skopeo inspect --raw <image_name> | jq '.manifests[] | select(.platform.architecture == "<architecture>") | .digest'
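The skopeo command above prints a digest, but the workaround also requires turning that digest into an image reference that a Task step can use. The following sketch shows one way to assemble a digest-pinned reference of the form <name>@sha256:<hex>. The helper name pin_image, the registry name, and the shortened digest are hypothetical placeholders.

```shell
#!/bin/sh
# Hypothetical helper: combines an image name and a sha256 digest into a
# digest-pinned reference of the form <name>@sha256:<hex>.
# Strips the surrounding double quotes that jq prints by default, and drops
# a trailing :tag from the image name (a registry port such as :5000 is kept).
pin_image() {
  image=$1
  digest=$(printf '%s' "$2" | tr -d '"')
  name=$(printf '%s' "$image" | sed 's|:[^:/]*$||')
  printf '%s@%s\n' "$name" "$digest"
}

# Placeholder image name and digest, for illustration only.
pin_image "registry.example.com:5000/tools/builder:latest" '"sha256:0123abcd"'
```

The resulting reference can then be used as the image value of the affected step, so that the container runtime pulls the manifest for the intended architecture. Passing the -r option to jq prints the digest without quotes, in which case the quote-stripping step has nothing to remove.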

6.2.4. Fixed issues

- A simple syntax validation to check the CEL filter, overlays in the Webhook validator, and the expressions in the interceptor has now been added.

- Triggers no longer overwrite annotations set on the underlying deployment and service objects.

- Previously, an event listener would stop accepting events. This fix adds an idle timeout of 120 seconds for the EventListener sink to resolve this issue.

- Previously, canceling a pipeline run with a Failed(Canceled) state gave a success message. This has been fixed to display an error instead.

- The tkn eventlistener list command now provides the status of the listed event listeners, thus enabling you to easily identify the available ones.

- Consistent error messages are now displayed for the triggers list and triggers describe commands when triggers are not installed or when a resource cannot be found.

- Previously, a large number of idle connections would build up during cloud event delivery. The DisableKeepAlives: true parameter was added to the cloudeventclient config to fix this issue. Thus, a new connection is set up for every cloud event.

- Previously, the creds-init code would write empty files to the disk even if credentials of a given type were not provided. This fix modifies the creds-init code to write files for only those credentials that have actually been mounted from correctly annotated secrets.

6.3. Release notes for Red Hat OpenShift Pipelines Technology Preview 1.1

6.3.1. New features

Red Hat OpenShift Pipelines Technology Preview (TP) 1.1 is now available on OpenShift Container Platform 4.6. Red Hat OpenShift Pipelines TP 1.1 is updated to support:

- Tekton Pipelines 0.14.3

- Tekton tkn CLI 0.11.0

- Tekton Triggers 0.6.1

- ClusterTasks based on Tekton Catalog 0.14

In addition to the fixes and stability improvements, here is a highlight of what’s new in OpenShift Pipelines 1.1.

6.3.1.1. Pipelines

- Workspaces can now be used instead of PipelineResources. It is recommended that you use Workspaces in OpenShift Pipelines, as PipelineResources are difficult to debug, limited in scope, and make Tasks less reusable. For more details on Workspaces, see Understanding OpenShift Pipelines.

Workspace support for VolumeClaimTemplates has been added:

- The VolumeClaimTemplate for a PipelineRun and TaskRun can now be added as a volume source for Workspaces. The tekton-controller then creates a PersistentVolumeClaim (PVC) using the template that is seen as a PVC for all TaskRuns in the Pipeline. Thus you do not need to define the PVC configuration every time it binds a workspace that spans multiple tasks.

- Support to find the name of the PersistentVolumeClaim when a VolumeClaimTemplate is used as a volume source is now available using variable substitution.
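The two points above can be sketched as follows. This is a minimal illustration, not a tested configuration: the resource names (build-pipeline, shared-data, show-claim), the storage size, and the container image are hypothetical, and the $(workspaces.<name>.claim) form is assumed from the upstream Tekton variable substitution syntax.

```yaml
# PipelineRun that binds a workspace to a volumeClaimTemplate.
# The controller is expected to create a PVC from this template for the run.
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: build-run-          # hypothetical name
spec:
  pipelineRef:
    name: build-pipeline            # hypothetical Pipeline
  workspaces:
    - name: shared-data             # hypothetical workspace name
      volumeClaimTemplate:
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi          # illustrative size
---
# Task that reads the name of the PVC backing its workspace
# through variable substitution (assumed upstream syntax).
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: show-claim                  # hypothetical name
spec:
  workspaces:
    - name: source
  steps:
    - name: print-claim
      image: registry.access.redhat.com/ubi8/ubi-minimal
      script: |
        echo "PVC backing this workspace: $(workspaces.source.claim)"
```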

Support for improving audits:

- The PipelineRun.Status field now contains the status of every TaskRun in the Pipeline and the Pipeline specification used to instantiate a PipelineRun to monitor the progress of the PipelineRun.

- Pipeline results have been added to the pipeline specification and PipelineRun status.

- The TaskRun.Status field now contains the exact Task specification used to instantiate the TaskRun.

- Support to apply the default parameter to Conditions.

- A TaskRun created by referencing a ClusterTask now adds the tekton.dev/clusterTask label instead of the tekton.dev/task label.

- The kubeconfigwriter now adds the ClientKeyData and the ClientCertificateData configurations in the Resource structure to enable replacement of the pipeline resource type cluster with the kubeconfig-creator Task.

- The names of the feature-flags and the config-defaults ConfigMaps are now customizable.

- Support for HostNetwork in the PodTemplate used by TaskRun is now available.

- An Affinity Assistant is now available to support node affinity in TaskRuns that share a workspace volume. By default, this is disabled on OpenShift Pipelines.

- The PodTemplate has been updated to specify imagePullSecrets to identify secrets that the container runtime should use to authorize container image pulls when starting a pod.

- Support for emitting warning events from the TaskRun controller if the controller fails to update the TaskRun.

- Standard or recommended k8s labels have been added to all resources to identify resources belonging to an application or component.

- The Entrypoint process is now notified for signals and these signals are then propagated using a dedicated PID Group of the Entrypoint process.

- The PodTemplate can now be set on a Task level at runtime using TaskRunSpecs.

Support for emitting Kubernetes events:

- The controller now emits events for additional TaskRun lifecycle events: taskrun started and taskrun running.

- The PipelineRun controller now emits an event every time a Pipeline starts.

- In addition to the default Kubernetes events, support for CloudEvents for TaskRuns is now available. The controller can be configured to send any TaskRun events, such as create, started, and failed, as cloud events.

- Support for using the $context.<task|taskRun|pipeline|pipelineRun>.name variable to reference the appropriate name when in PipelineRuns and TaskRuns.

- Validation for PipelineRun parameters is now available to ensure that all the parameters required by the Pipeline are provided by the PipelineRun. This also allows PipelineRuns to provide extra parameters in addition to the required parameters.

- You can now specify Tasks within a Pipeline that will always execute before the pipeline exits, either after finishing all tasks successfully or after a Task in the Pipeline failed, using the finally field in the Pipeline YAML file.

- The git-clone ClusterTask is now available.
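As an illustration of the finally field described in this list, a Pipeline might look like the following sketch. The Pipeline and Task names are hypothetical; only the placement of the finally section alongside tasks is the point here.

```yaml
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: build-and-cleanup           # hypothetical name
spec:
  tasks:
    - name: build
      taskRef:
        name: build-task            # hypothetical Task
  # Tasks listed under finally run before the Pipeline exits,
  # whether the tasks above succeeded or one of them failed.
  finally:
    - name: cleanup
      taskRef:
        name: cleanup-task          # hypothetical Task
```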

6.3.1.2. Pipelines CLI

- Support for embedded Trigger binding is now available to the tkn eventlistener describe command.

- Support to recommend subcommands and make suggestions if an incorrect subcommand is used.

- The tkn task describe command now auto selects the task if only one task is present in the Pipeline.

- You can now start a Task using default parameter values by specifying the --use-param-defaults flag in the tkn task start command.

- You can now specify a volumeClaimTemplate for PipelineRuns or TaskRuns using the --workspace option with the tkn pipeline start or tkn task start commands.

- The tkn pipelinerun logs command now displays logs for the final tasks listed in the finally section.

- Interactive mode support has now been provided to the tkn task start command and the describe subcommand for the following tkn resources: pipeline, pipelinerun, task, taskrun, clustertask, and pipelineresource.

- The tkn version command now displays the version of the Triggers installed in the cluster.

- The tkn pipeline describe command now displays parameter values and timeouts specified for Tasks used in the Pipeline.

- Support added for the --last option for the tkn pipelinerun describe and the tkn taskrun describe commands to describe the most recent PipelineRun or TaskRun, respectively.

- The tkn pipeline describe command now displays the conditions applicable to the Tasks in the Pipeline.

- You can now use the --no-headers and --all-namespaces flags with the tkn resource list command.

6.3.1.3. Triggers

The following Common Expression Language (CEL) functions are now available:

- parseURL to parse and extract portions of a URL

- parseJSON to parse JSON value types embedded in a string in the payload field of the deployment webhook

- A new interceptor for webhooks from Bitbucket has been added.

- EventListeners now display the Address URL and the Available status as additional fields when listed with the kubectl get command.

- TriggerTemplate params now use the $(tt.params.<paramName>) syntax instead of $(params.<paramName>) to reduce the confusion between TriggerTemplate and ResourceTemplates params.

- You can now add tolerations in the EventListener CRD to ensure that EventListeners are deployed with the same configuration even if all nodes are tainted due to security or management issues.

- You can now add a Readiness Probe for EventListener Deployment at URL/live.

- Support for embedding TriggerBinding specifications in EventListener Triggers.

- Trigger resources are now annotated with the recommended app.kubernetes.io labels.
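The $(tt.params.<paramName>) syntax mentioned in this list can be illustrated with a small TriggerTemplate. This is a sketch under assumptions: the template, parameter, and Pipeline names are hypothetical, and the API versions shown are the ones commonly used with these Tekton releases rather than something stated in these notes.

```yaml
apiVersion: triggers.tekton.dev/v1alpha1
kind: TriggerTemplate
metadata:
  name: example-template            # hypothetical name
spec:
  params:
    - name: git-revision            # hypothetical TriggerTemplate param
      description: The Git revision to build
  resourcetemplates:
    - apiVersion: tekton.dev/v1beta1
      kind: PipelineRun
      metadata:
        generateName: example-run-
      spec:
        pipelineRef:
          name: example-pipeline    # hypothetical Pipeline
        params:
          - name: revision
            # TriggerTemplate params are referenced with the tt. prefix,
            # which keeps them distinct from Pipeline params.
            value: $(tt.params.git-revision)
```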

6.3.2. Deprecated features

The following items are deprecated in this release:

- The --namespace or -n flags for all cluster-wide commands, including the clustertask and clustertriggerbinding commands, are deprecated. They will be removed in a future release.

- The name field in triggers.bindings within an EventListener has been deprecated in favor of the ref field and will be removed in a future release.

- Variable interpolation in TriggerTemplates using $(params) has been deprecated in favor of using $(tt.params) to reduce confusion with the Pipeline variable interpolation syntax. The $(params.<paramName>) syntax will be removed in a future release.

- The tekton.dev/task label is deprecated on ClusterTasks.

- The TaskRun.Status.ResourceResults.ResourceRef field is deprecated and will be removed.

- The tkn pipeline create, tkn task create, and tkn resource create -f subcommands have been removed.

- Namespace validation has been removed from tkn commands.

- The default timeout of 1h and the -t flag for the tkn ct start command have been removed.

- The s2i ClusterTask has been deprecated.

6.3.3. Known issues

- Conditions do not support Workspaces.

- The --workspace option and the interactive mode are not supported for the tkn clustertask start command.

- Support of backward compatibility for $(params.<paramName>) forces you to use TriggerTemplates with pipeline-specific params, as the Triggers webhook is unable to differentiate Trigger params from Pipeline params.

- Pipeline metrics report incorrect values when you run a promQL query for tekton_taskrun_count and tekton_taskrun_duration_seconds_count.

- PipelineRuns and TaskRuns continue to be in the Running and Running(Pending) states respectively, even when a nonexistent PVC name is given to a Workspace.

6.3.4. Fixed issues

- Previously, the tkn task delete <name> --trs command would delete both the Task and ClusterTask if the name of the Task and ClusterTask were the same. With this fix, the command deletes only the TaskRuns that are created by the Task <name>.

- Previously, the tkn pr delete -p <name> --keep 2 command would disregard the -p flag when used with the --keep flag and would delete all the PipelineRuns except the latest two. With this fix, the command deletes only the PipelineRuns that are created by the Pipeline <name>, except for the latest two.

- The tkn triggertemplate describe output now displays ResourceTemplates in a table format instead of YAML format.

- Previously, the buildah ClusterTask failed when a new user was added to a container. With this fix, the issue has been resolved.

6.4. Release notes for Red Hat OpenShift Pipelines Technology Preview 1.0

6.4.1. New features

Red Hat OpenShift Pipelines Technology Preview (TP) 1.0 is now available on OpenShift Container Platform 4.6. Red Hat OpenShift Pipelines TP 1.0 is updated to support:

- Tekton Pipelines 0.11.3

- Tekton tkn CLI 0.9.0

- Tekton Triggers 0.4.0

- ClusterTasks based on Tekton Catalog 0.11

In addition to the fixes and stability improvements, here is a highlight of what’s new in OpenShift Pipelines 1.0.

6.4.1.1. Pipelines

- Support for v1beta1 API Version.

- Support for an improved LimitRange. Previously, LimitRange was specified exclusively for the TaskRun and the PipelineRun. Now there is no need to explicitly specify the LimitRange. The minimum LimitRange across the namespace is used.

- Support for sharing data between Tasks using TaskResults and TaskParams.

- Pipelines can now be configured to not overwrite the HOME environment variable and workingDir of Steps.

- Similar to Task Steps, sidecars now support script mode.

- You can now specify a different scheduler name in TaskRun podTemplate.

- Support for variable substitution using Star Array Notation.

- Tekton Controller can now be configured to monitor an individual namespace.

- A new description field is now added to the specification of Pipeline, Task, ClusterTask, Resource, and Condition.

- Addition of proxy parameters to Git PipelineResources.
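To illustrate the item above about sharing data between Tasks using TaskResults and TaskParams, the following sketch shows one Task writing a result and a Pipeline passing it to another Task as a parameter. All names, the image, and the written value are hypothetical, and the consume-digest Task is assumed to exist and to declare a digest parameter.

```yaml
# Task that writes a value to a result file.
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: produce-digest              # hypothetical name
spec:
  results:
    - name: digest
      description: Value to hand to a later Task
  steps:
    - name: write-digest
      image: registry.access.redhat.com/ubi8/ubi-minimal
      script: |
        echo -n "abc123" > $(results.digest.path)
---
# Pipeline that feeds the result into another Task's parameter.
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: share-data                  # hypothetical name
spec:
  tasks:
    - name: produce
      taskRef:
        name: produce-digest
    - name: consume
      taskRef:
        name: consume-digest        # hypothetical Task with a "digest" param
      params:
        - name: digest
          value: $(tasks.produce.results.digest)
```

Referencing the result in the second Task's params also orders the two Tasks, so an explicit runAfter entry should not be needed in this sketch.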

6.4.1.2. Pipelines CLI

- The describe subcommand is now added for the following tkn resources: eventlistener, condition, triggertemplate, clustertask, and triggerbinding.

- Support added for v1beta1 to the following commands along with backward compatibility for v1alpha1: clustertask, task, pipeline, pipelinerun, and taskrun.

The following commands can now list output from all namespaces using the --all-namespaces flag option:

- tkn task list

- tkn pipeline list

- tkn taskrun list

- tkn pipelinerun list

The output of these commands is also enhanced to display information without headers using the --no-headers flag option.

- You can now start a Pipeline using default parameter values by specifying the --use-param-defaults flag in the tkn pipelines start command.

- Support for Workspace is now added to the tkn pipeline start and tkn task start commands.

- A new clustertriggerbinding command is now added with the following subcommands: describe, delete, and list.

- You can now directly start a pipeline run using a local or remote yaml file.

- The