Install ROSA Classic clusters

Installing, accessing, and deleting Red Hat OpenShift Service on AWS (ROSA) clusters.

Abstract

Chapter 1. Creating a ROSA cluster with STS using the default options

If you are looking for a quickstart guide for ROSA, see Red Hat OpenShift Service on AWS quickstart guide.

Create a Red Hat OpenShift Service on AWS (ROSA) cluster quickly by using the default options and automatic AWS Identity and Access Management (IAM) resource creation. You can deploy your cluster by using Red Hat OpenShift Cluster Manager or the ROSA CLI (rosa).

The procedures in this document use the auto modes in the ROSA CLI (rosa) and OpenShift Cluster Manager to immediately create the required IAM resources using the current AWS account. The required resources include the account-wide IAM roles and policies, cluster-specific Operator roles and policies, and OpenID Connect (OIDC) identity provider.

Alternatively, you can use manual mode, which outputs the aws commands needed to create the IAM resources instead of deploying them automatically. For steps to deploy a ROSA cluster by using manual mode or with customizations, see Creating a cluster using customizations.

Next steps

- Ensure that you have completed the AWS prerequisites.

ROSA CLI 1.2.7 introduces changes to the OIDC provider endpoint URL format for new clusters. Red Hat OpenShift Service on AWS cluster OIDC provider URLs are no longer regional. The AWS CloudFront implementation provides improved access speed and resiliency and reduces latency.

Because this change is only available to new clusters created by using ROSA CLI 1.2.7 or later, existing OIDC-provider configurations do not have any supported migration paths.

1.1. Overview of the default cluster specifications

You can quickly create a Red Hat OpenShift Service on AWS (ROSA) cluster with the Security Token Service (STS) by using the default installation options. The following summary describes the default cluster specifications.

| Component | Default specifications |
|---|---|
| Accounts and roles | |
| Cluster settings | |
| Encryption | |
| Control plane node configuration | |
| Infrastructure node configuration | |
| Compute node machine pool | |
| Networking configuration | |
| Classless Inter-Domain Routing (CIDR) ranges | |
| Cluster roles and policies | |
| Cluster update strategy | |

1.2. Understanding AWS account association

Before you can use Red Hat OpenShift Cluster Manager on the Red Hat Hybrid Cloud Console to create Red Hat OpenShift Service on AWS (ROSA) clusters that use the AWS Security Token Service (STS), you must associate your AWS account with your Red Hat organization. You can associate your account by creating and linking the following IAM roles.

- OpenShift Cluster Manager role

Create an OpenShift Cluster Manager IAM role and link it to your Red Hat organization.

You can apply basic or administrative permissions to the OpenShift Cluster Manager role. The basic permissions enable cluster maintenance using OpenShift Cluster Manager. The administrative permissions enable automatic deployment of the cluster-specific Operator roles and the OpenID Connect (OIDC) provider using OpenShift Cluster Manager.

You can use the administrative permissions with the OpenShift Cluster Manager role to deploy a cluster quickly.

- User role

Create a user IAM role and link it to your Red Hat user account. The Red Hat user account must exist in the Red Hat organization that is linked to your OpenShift Cluster Manager role.

The user role is used by Red Hat to verify your AWS identity when you use the OpenShift Cluster Manager Hybrid Cloud Console to install a cluster and the required STS resources.

Additional resources

- For detailed steps to create and link the OpenShift Cluster Manager and user IAM roles, see Associating your AWS account with your Red Hat organization.

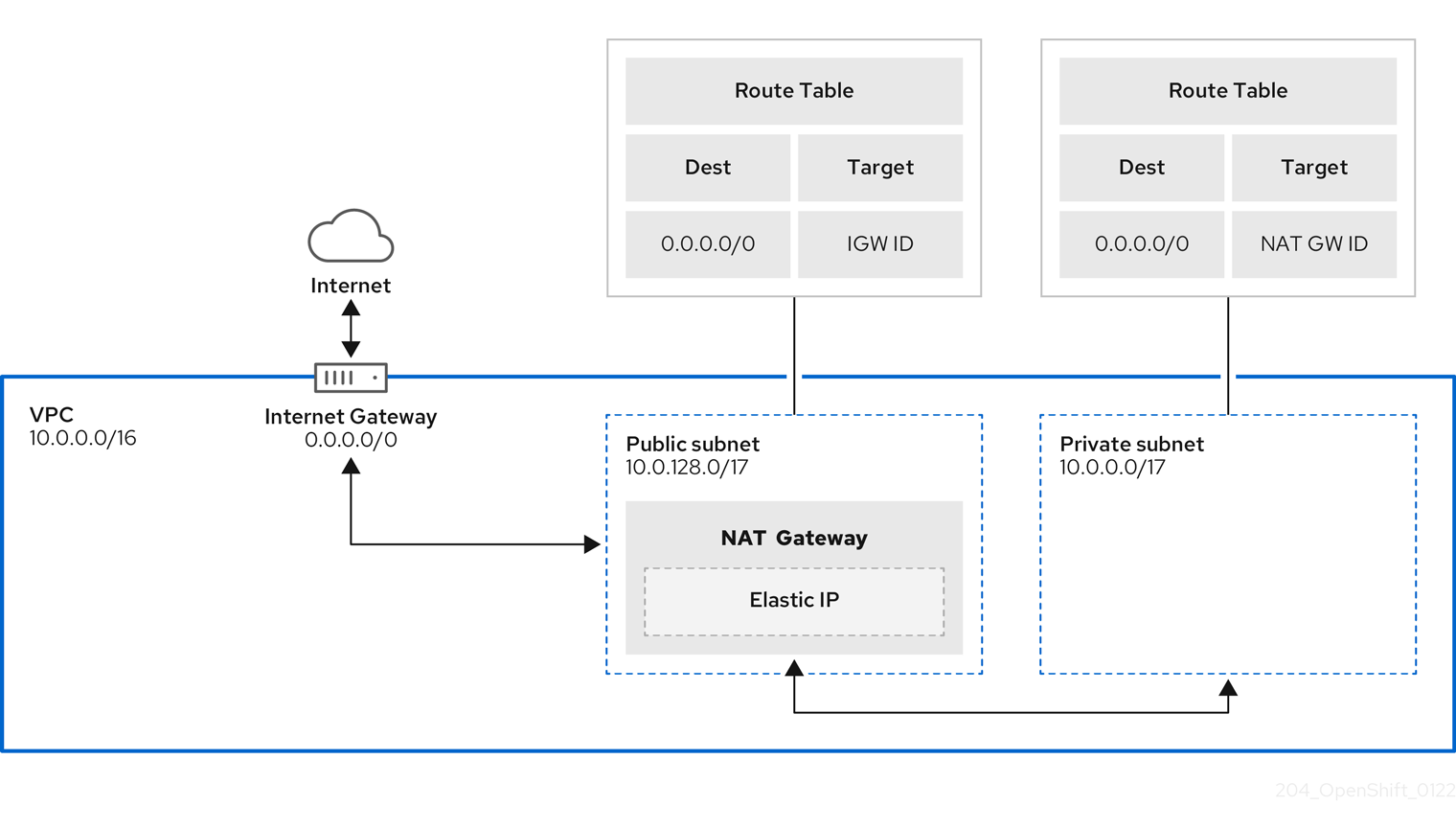

1.3. Amazon VPC Requirements for non-PrivateLink ROSA clusters

To create an Amazon VPC, you must have the following:

- An internet gateway

- A NAT gateway

- Private and public subnets that have internet connectivity provided to install required components

You must have at least one private and one public subnet for Single-AZ clusters, and at least three private and three public subnets for Multi-AZ clusters.
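
As a rough illustration of the subnet rule above, the following shell sketch checks whether a planned VPC layout has enough private and public subnets. The function names and the single-az and multi-az labels are hypothetical helpers introduced for this example; they are not part of the ROSA or AWS CLI.

```shell
# Hypothetical helper: minimum number of private (and of public) subnets
# for each availability-zone layout described above.
min_subnets() {
  case "$1" in
    single-az) echo 1 ;;
    multi-az)  echo 3 ;;
    *) echo "unknown layout: $1" >&2; return 2 ;;
  esac
}

# Hypothetical helper: succeed only if both subnet counts meet the minimum.
# Usage: check_subnets <single-az|multi-az> <private_count> <public_count>
check_subnets() {
  required=$(min_subnets "$1") || return 2
  [ "$2" -ge "$required" ] && [ "$3" -ge "$required" ]
}
```

For example, `check_subnets multi-az 3 2` fails because only two public subnets are planned, while `check_subnets single-az 1 1` succeeds.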

Additional resources

- For more information about the default components required for an AWS cluster, see Default VPCs in the AWS documentation.

- For instructions on creating a VPC in the AWS console, see Create a VPC in the AWS documentation.

1.4. Creating a cluster quickly using OpenShift Cluster Manager

When using Red Hat OpenShift Cluster Manager to create a Red Hat OpenShift Service on AWS (ROSA) cluster that uses the AWS Security Token Service (STS), you can select the default options to create the cluster quickly.

Before you can use OpenShift Cluster Manager to deploy ROSA with STS clusters, you must associate your AWS account with your Red Hat organization and create the required account-wide STS roles and policies.

1.4.1. Associating your AWS account with your Red Hat organization

Before using Red Hat OpenShift Cluster Manager on the Red Hat Hybrid Cloud Console to create Red Hat OpenShift Service on AWS (ROSA) clusters that use the AWS Security Token Service (STS), create an OpenShift Cluster Manager IAM role and link it to your Red Hat organization. Then, create a user IAM role and link it to your Red Hat user account in the same Red Hat organization.

Prerequisites

- You have completed the AWS prerequisites for ROSA with STS.

- You have available AWS service quotas.

- You have enabled the ROSA service in the AWS Console.

- You have installed and configured the latest ROSA CLI (rosa) on your installation host.

Note: To successfully install ROSA clusters, use the latest version of the ROSA CLI.

- You have logged in to your Red Hat account by using the ROSA CLI.

- You have organization administrator privileges in your Red Hat organization.

Procedure

Create an OpenShift Cluster Manager role and link it to your Red Hat organization:

Note: To enable automatic deployment of the cluster-specific Operator roles and the OpenID Connect (OIDC) provider using the OpenShift Cluster Manager Hybrid Cloud Console, you must apply the administrative privileges to the role by choosing the Admin OCM role command in the Accounts and roles step of creating a ROSA cluster. For more information about the basic and administrative privileges for the OpenShift Cluster Manager role, see Understanding AWS account association.

Note: If you choose the Basic OCM role command in the Accounts and roles step of creating a ROSA cluster in the OpenShift Cluster Manager Hybrid Cloud Console, you must deploy a ROSA cluster using manual mode. You will be prompted to configure the cluster-specific Operator roles and the OpenID Connect (OIDC) provider in a later step.

$ rosa create ocm-role

Select the default values at the prompts to quickly create and link the role.

Create a user role and link it to your Red Hat user account:

$ rosa create user-role

Select the default values at the prompts to quickly create and link the role.

Note: The Red Hat user account must exist in the Red Hat organization that is linked to your OpenShift Cluster Manager role.

1.4.2. Creating the account-wide STS roles and policies

Before using the Red Hat OpenShift Cluster Manager Hybrid Cloud Console to create Red Hat OpenShift Service on AWS (ROSA) clusters that use the AWS Security Token Service (STS), create the required account-wide STS roles and policies, including the Operator policies.

Prerequisites

- You have completed the AWS prerequisites for ROSA with STS.

- You have available AWS service quotas.

- You have enabled the ROSA service in the AWS Console.

- You have installed and configured the latest ROSA CLI (rosa) on your installation host. Run the rosa version command to see your currently installed version of the ROSA CLI. If a newer version is available, the CLI provides a link to download this upgrade.

- You have logged in to your Red Hat account by using the ROSA CLI.

Procedure

Check your AWS account for existing roles and policies:

$ rosa list account-roles

If they do not exist in your AWS account, create the required account-wide STS roles and policies:

$ rosa create account-roles

Select the default values at the prompts to quickly create the roles and policies.

1.4.3. Creating an OpenID Connect configuration

When using a Red Hat OpenShift Service on AWS cluster, you can create the OpenID Connect (OIDC) configuration prior to creating your cluster. This configuration is registered to be used with OpenShift Cluster Manager.

Prerequisites

- You have installed and configured the latest Red Hat OpenShift Service on AWS (ROSA) CLI, rosa, on your installation host.

Procedure

To create your OIDC configuration alongside the AWS resources, run the following command:

$ rosa create oidc-config --mode=auto --yes

This command returns the following information.

Example output

? Would you like to create a Managed (Red Hat hosted) OIDC Configuration Yes
I: Setting up managed OIDC configuration
I: To create Operator Roles for this OIDC Configuration, run the following command and remember to replace <user-defined> with a prefix of your choice:
    rosa create operator-roles --prefix <user-defined> --oidc-config-id 13cdr6b
If you are going to create a Hosted Control Plane cluster please include '--hosted-cp'
I: Creating OIDC provider using 'arn:aws:iam::4540112244:user/userName'
? Create the OIDC provider? Yes
I: Created OIDC provider with ARN 'arn:aws:iam::4540112244:oidc-provider/dvbwgdztaeq9o.cloudfront.net/13cdr6b'

When creating your cluster, you must supply the OIDC config ID. The CLI output provides this value for --mode auto; otherwise, you must determine these values based on the aws CLI output for --mode manual.

Optional: You can save the OIDC configuration ID as a variable to use later. Run the following command to save the variable:

$ export OIDC_ID=<oidc_config_id>

In the example output above, the OIDC configuration ID is 13cdr6b.

View the value of the variable by running the following command:

$ echo $OIDC_ID

Example output

13cdr6b
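
Instead of copying the ID by hand, you could extract it from the command output. The following is a minimal sketch that assumes the output matches the example shown above, where the ID follows --oidc-config-id in the suggested command. The extract_oidc_id function and the sample_output variable are hypothetical names used only for this illustration.

```shell
# Hypothetical helper: read `rosa create oidc-config` output on stdin and
# print the ID that follows "--oidc-config-id" in the suggested command.
# Assumes the output format matches the example shown in this section.
extract_oidc_id() {
  sed -n 's/.*--oidc-config-id \([A-Za-z0-9]*\).*/\1/p' | head -n 1
}

# Captured sample output; a real run would pipe the rosa command instead.
sample_output="I: Setting up managed OIDC configuration
    rosa create operator-roles --prefix <user-defined> --oidc-config-id 13cdr6b
I: Created OIDC provider with ARN 'arn:aws:iam::4540112244:oidc-provider/dvbwgdztaeq9o.cloudfront.net/13cdr6b'"

OIDC_ID=$(printf '%s\n' "$sample_output" | extract_oidc_id)
export OIDC_ID
echo "$OIDC_ID"   # prints 13cdr6b
```

If the CLI output format differs in your version, the pattern does not match and OIDC_ID is left empty, so check the value before using it.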

Verification

You can list the possible OIDC configurations available for your clusters that are associated with your user organization. Run the following command:

$ rosa list oidc-config

Example output

ID                                MANAGED  ISSUER URL                                                             SECRET ARN
2330dbs0n8m3chkkr25gkkcd8pnj3lk2  true     https://dvbwgdztaeq9o.cloudfront.net/2330dbs0n8m3chkkr25gkkcd8pnj3lk2
233hvnrjoqu14jltk6lhbhf2tj11f8un  false    https://oidc-r7u1.s3.us-east-1.amazonaws.com                           aws:secretsmanager:us-east-1:242819244:secret:rosa-private-key-oidc-r7u1-tM3MDN
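
To pick out only the managed (Red Hat hosted) configurations from this listing, a small filter can help. This sketch assumes whitespace-separated columns with a single header row, as in the example output; managed_oidc_ids and sample_list are hypothetical names.

```shell
# Hypothetical helper: read `rosa list oidc-config` output on stdin and print
# the IDs of configurations whose MANAGED column is "true".
# Assumes a header row followed by whitespace-separated columns.
managed_oidc_ids() {
  awk 'NR > 1 && $2 == "true" { print $1 }'
}

# Sample listing copied from the example output above.
sample_list="ID  MANAGED  ISSUER URL  SECRET ARN
2330dbs0n8m3chkkr25gkkcd8pnj3lk2  true  https://dvbwgdztaeq9o.cloudfront.net/2330dbs0n8m3chkkr25gkkcd8pnj3lk2
233hvnrjoqu14jltk6lhbhf2tj11f8un  false  https://oidc-r7u1.s3.us-east-1.amazonaws.com  aws:secretsmanager:us-east-1:242819244:secret:rosa-private-key-oidc-r7u1-tM3MDN"

printf '%s\n' "$sample_list" | managed_oidc_ids
# prints 2330dbs0n8m3chkkr25gkkcd8pnj3lk2
```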

1.4.4. Creating a cluster with the default options using OpenShift Cluster Manager

When using Red Hat OpenShift Cluster Manager on the Red Hat Hybrid Cloud Console to create a Red Hat OpenShift Service on AWS (ROSA) cluster that uses the AWS Security Token Service (STS), you can select the default options to create the cluster quickly. You can also use the admin OpenShift Cluster Manager IAM role to enable automatic deployment of the cluster-specific Operator roles and the OpenID Connect (OIDC) provider.

Prerequisites

- You have completed the AWS prerequisites for ROSA with STS.

- You have available AWS service quotas.

- You have enabled the ROSA service in the AWS Console.

- You have installed and configured the latest ROSA CLI (rosa) on your installation host. Run the rosa version command to see your currently installed version of the ROSA CLI. If a newer version is available, the CLI provides a link to download this upgrade.

- You have verified that the AWS Elastic Load Balancing (ELB) service role exists in your AWS account.

- You have associated your AWS account with your Red Hat organization. When you associated your account, you applied the administrative permissions to the OpenShift Cluster Manager role. For detailed steps, see Associating your AWS account with your Red Hat organization.

- You have created the required account-wide STS roles and policies. For detailed steps, see Creating the account-wide STS roles and policies.

Procedure

- Navigate to OpenShift Cluster Manager and select Create cluster.

- On the Create an OpenShift cluster page, select Create cluster in the Red Hat OpenShift Service on AWS (ROSA) row.

- Verify that your AWS account ID is listed in the Associated AWS accounts drop-down menu and that the installer, support, worker, and control plane account role Amazon Resource Names (ARNs) are listed on the Accounts and roles page.

Note: If your AWS account ID is not listed, check that you have successfully associated your AWS account with your Red Hat organization. If your account role ARNs are not listed, check that the required account-wide STS roles exist in your AWS account.

- Click Next.

- On the Cluster details page, provide a name for your cluster in the Cluster name field. Leave the default values in the remaining fields and click Next.

Note: Cluster creation generates a domain prefix as a subdomain for your provisioned cluster on openshiftapps.com. If the cluster name is less than or equal to 15 characters, that name is used for the domain prefix. If the cluster name is longer than 15 characters, the domain prefix is randomly generated as a 15-character string. To customize the subdomain, select the Create custom domain prefix checkbox, and enter your domain prefix name in the Domain prefix field.

- To deploy a cluster quickly, leave the default options in the Cluster settings, Networking, Cluster roles and policies, and Cluster updates pages and click Next on each page.

- On the Review your ROSA cluster page, review the summary of your selections and click Create cluster to start the installation.

- Optional: On the Overview tab, you can enable the delete protection feature by selecting Enable, which is located directly under Delete Protection: Disabled. This will prevent your cluster from being deleted. To disable delete protection, select Disable. By default, clusters are created with the delete protection feature disabled.

Verification

You can check the progress of the installation in the Overview page for your cluster. You can view the installation logs on the same page. Your cluster is ready when the Status in the Details section of the page is listed as Ready.

Note: If the installation fails or the cluster State does not change to Ready after about 40 minutes, check the installation troubleshooting documentation for details. For more information, see Troubleshooting installations. For steps to contact Red Hat Support for assistance, see Getting support for Red Hat OpenShift Service on AWS.
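
The 15-character rule for the domain prefix, described in the note in the preceding procedure, can be checked before you pick a cluster name. The sketch below only mirrors that rule for the case where no custom domain prefix is set; domain_prefix_source is a hypothetical helper, not a ROSA command.

```shell
# Hypothetical helper: report where the domain prefix comes from for a given
# cluster name, following the 15-character rule described in this section.
domain_prefix_source() {
  name=$1
  if [ "${#name}" -le 15 ]; then
    echo "cluster-name"   # the cluster name itself is used as the prefix
  else
    echo "generated"      # a random 15-character prefix is generated
  fi
}

domain_prefix_source "my-rosa-cluster"          # 15 characters: prints cluster-name
domain_prefix_source "my-long-rosa-cluster-01"  # 23 characters: prints generated
```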

1.5. Creating a cluster quickly using the CLI

When using the Red Hat OpenShift Service on AWS (ROSA) CLI, rosa, to create a cluster that uses the AWS Security Token Service (STS), you can select the default options to create the cluster quickly.

Prerequisites

- You have completed the AWS prerequisites for ROSA with STS.

- You have available AWS service quotas.

- You have enabled the ROSA service in the AWS Console.

- You have installed and configured the latest ROSA CLI (rosa) on your installation host. Run the rosa version command to see your currently installed version of the ROSA CLI. If a newer version is available, the CLI provides a link to download this upgrade.

- You have logged in to your Red Hat account by using the ROSA CLI.

- You have verified that the AWS Elastic Load Balancing (ELB) service role exists in your AWS account.

Procedure

Create the required account-wide roles and policies, including the Operator policies:

$ rosa create account-roles --mode auto

Note: When using auto mode, you can optionally specify the -y argument to bypass the interactive prompts and automatically confirm operations.

Create a cluster with STS using the defaults. When you use the defaults, the latest stable OpenShift version is installed:

$ rosa create cluster --cluster-name <cluster_name> --sts --mode auto

Note: If your cluster name is longer than 15 characters, it will contain an autogenerated domain prefix as a subdomain for your provisioned cluster on *.openshiftapps.com.

To customize the subdomain, use the --domain-prefix flag. The domain prefix cannot be longer than 15 characters, must be unique, and cannot be changed after cluster creation.

Check the status of your cluster:

$ rosa describe cluster --cluster <cluster_name|cluster_id>

The following State field changes are listed in the output as the cluster installation progresses:

- waiting (Waiting for OIDC configuration)

- pending (Preparing account)

- installing (DNS setup in progress)

- installing

- ready

Note: If the installation fails or the State field does not change to ready after about 40 minutes, check the installation troubleshooting documentation for details. For more information, see Troubleshooting installations. For steps to contact Red Hat Support for assistance, see Getting support for Red Hat OpenShift Service on AWS.

Track the progress of the cluster creation by watching the OpenShift installer logs:

$ rosa logs install --cluster <cluster_name|cluster_id> --watch

Specify the --watch flag to watch for new log messages as the installation progresses. This argument is optional.
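
The status check in this procedure can be scripted as a polling loop. The following is a sketch under assumptions: it presumes that the rosa describe cluster output contains a line beginning with "State:" followed by the state name, which you should confirm against your CLI version. The cluster_state and wait_for_ready functions are hypothetical helpers.

```shell
# Hypothetical helper: read `rosa describe cluster` output on stdin and print
# the first word of the State field (waiting, pending, installing, ready).
# Assumes the field appears on a line whose first token is "State:".
cluster_state() {
  awk '$1 == "State:" { print $2; exit }'
}

# Hypothetical helper: poll once a minute until the cluster reports ready or
# the time budget in minutes (default 40, per the note above) runs out.
# Usage: wait_for_ready <cluster_name|cluster_id> [minutes]
wait_for_ready() {
  cluster=$1
  minutes=${2:-40}
  while [ "$minutes" -gt 0 ]; do
    state=$(rosa describe cluster --cluster "$cluster" | cluster_state)
    if [ "$state" = "ready" ]; then
      return 0
    fi
    sleep 60
    minutes=$((minutes - 1))
  done
  return 1
}
```

A call such as `wait_for_ready <cluster_name> || echo "not ready yet"` returns a non-zero status once the budget is exhausted, at which point the troubleshooting documentation is the next stop.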

1.6. Next steps

1.7. Additional resources

- For steps to deploy a ROSA cluster using manual mode, see Creating a cluster using customizations.

- For more information about the AWS Identity and Access Management (IAM) resources required to deploy Red Hat OpenShift Service on AWS with STS, see About IAM resources for clusters that use STS.

- For details about optionally setting an Operator role name prefix, see About custom Operator IAM role prefixes.

- For information about the prerequisites to installing ROSA with STS, see AWS prerequisites for ROSA with STS.

- For details about using the auto and manual modes to create the required STS resources, see Understanding the auto and manual deployment modes.

- For more information about using OpenID Connect (OIDC) identity providers in AWS IAM, see Creating OpenID Connect (OIDC) identity providers in the AWS documentation.

- For more information about troubleshooting ROSA cluster installations, see Troubleshooting installations.

- For steps to contact Red Hat Support for assistance, see Getting support for Red Hat OpenShift Service on AWS.

Chapter 2. Creating a ROSA cluster with STS using customizations

Create a Red Hat OpenShift Service on AWS (ROSA) cluster with the AWS Security Token Service (STS) using customizations. You can deploy your cluster by using Red Hat OpenShift Cluster Manager or the ROSA CLI (rosa).

With the procedures in this document, you can also choose between the auto and manual modes when creating the required AWS Identity and Access Management (IAM) resources.

2.1. Understanding the auto and manual deployment modes

When installing a Red Hat OpenShift Service on AWS (ROSA) cluster that uses the AWS Security Token Service (STS), you can choose between the auto and manual modes to create the required AWS Identity and Access Management (IAM) resources.

- auto mode

With this mode, the ROSA CLI (rosa) immediately creates the required IAM roles and policies, and an OpenID Connect (OIDC) provider in your AWS account.

- manual mode

With this mode, rosa outputs the aws commands needed to create the IAM resources. The corresponding policy JSON files are also saved to the current directory. By using manual mode, you can review the generated aws commands before running them manually. manual mode also enables you to pass the commands to another administrator or group in your organization so that they can create the resources.

If you opt to use manual mode, the cluster installation waits until you create the cluster-specific Operator roles and OIDC provider manually. After you create the resources, the installation proceeds. For more information, see Creating the Operator roles and OIDC provider using OpenShift Cluster Manager.

For more information about the AWS IAM resources required to install ROSA with STS, see About IAM resources for clusters that use STS.

2.1.1. Creating the Operator roles and OIDC provider using OpenShift Cluster Manager

If you use Red Hat OpenShift Cluster Manager to install your cluster and opt to create the required AWS IAM Operator roles and the OIDC provider using manual mode, you are prompted to select one of the following methods to install the resources. The options are provided to enable you to choose a resource creation method that suits the needs of your organization:

- AWS CLI (aws)

With this method, you can download and extract an archive file that contains the aws commands and policy files required to create the IAM resources. Run the provided CLI commands from the directory that contains the policy files to create the Operator roles and the OIDC provider.

- The Red Hat OpenShift Service on AWS (ROSA) CLI, rosa

You can run the commands provided by this method to create the Operator roles and the OIDC provider for your cluster using rosa.

If you use auto mode, OpenShift Cluster Manager creates the Operator roles and the OIDC provider automatically, using the permissions provided through the OpenShift Cluster Manager IAM role. To use this feature, you must apply admin privileges to the role.

2.2. Understanding AWS account association

Before you can use Red Hat OpenShift Cluster Manager on the Red Hat Hybrid Cloud Console to create Red Hat OpenShift Service on AWS (ROSA) clusters that use the AWS Security Token Service (STS), you must associate your AWS account with your Red Hat organization. You can associate your account by creating and linking the following IAM roles.

- OpenShift Cluster Manager role

Create an OpenShift Cluster Manager IAM role and link it to your Red Hat organization.

You can apply basic or administrative permissions to the OpenShift Cluster Manager role. The basic permissions enable cluster maintenance using OpenShift Cluster Manager. The administrative permissions enable automatic deployment of the cluster-specific Operator roles and the OpenID Connect (OIDC) provider using OpenShift Cluster Manager.

You can use the administrative permissions with the OpenShift Cluster Manager role to deploy a cluster quickly.

- User role

Create a user IAM role and link it to your Red Hat user account. The Red Hat user account must exist in the Red Hat organization that is linked to your OpenShift Cluster Manager role.

The user role is used by Red Hat to verify your AWS identity when you use the OpenShift Cluster Manager Hybrid Cloud Console to install a cluster and the required STS resources.

Additional resources

- For detailed steps to create and link the OpenShift Cluster Manager and user IAM roles, see Creating a cluster with customizations by using OpenShift Cluster Manager.

2.3. ARN path customization for IAM roles and policies

When you create the AWS IAM roles and policies required for Red Hat OpenShift Service on AWS (ROSA) clusters that use the AWS Security Token Service (STS), you can specify custom Amazon Resource Name (ARN) paths. This enables you to use role and policy ARN paths that meet the security requirements of your organization.

You can specify custom ARN paths when you create your OCM role, user role, and account-wide roles and policies.

If you define a custom ARN path when you create a set of account-wide roles and policies, the same path is applied to all of the roles and policies in the set. The following example shows the ARNs for a set of account-wide roles and policies. In the example, the ARNs use the custom path /test/path/dev/ and the custom role prefix test-env:

- arn:aws:iam::<account_id>:role/test/path/dev/test-env-Worker-Role

- arn:aws:iam::<account_id>:role/test/path/dev/test-env-Support-Role

- arn:aws:iam::<account_id>:role/test/path/dev/test-env-Installer-Role

- arn:aws:iam::<account_id>:role/test/path/dev/test-env-ControlPlane-Role

- arn:aws:iam::<account_id>:policy/test/path/dev/test-env-Worker-Role-Policy

- arn:aws:iam::<account_id>:policy/test/path/dev/test-env-Support-Role-Policy

- arn:aws:iam::<account_id>:policy/test/path/dev/test-env-Installer-Role-Policy

- arn:aws:iam::<account_id>:policy/test/path/dev/test-env-ControlPlane-Role-Policy

When you create the cluster-specific Operator roles, the ARN path for the relevant account-wide installer role is automatically detected and applied to the Operator roles.
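
To see how the custom path and role prefix combine into the ARNs listed above, consider the following sketch. It builds a role ARN from its parts and rejects paths that break the rule stated for the OCM role path in this document (alphanumeric characters only, starting and ending with /). Applying that rule to account-wide roles is an assumption, and both function names are hypothetical.

```shell
# Hypothetical helper: validate a custom ARN path. The path must contain
# alphanumeric characters only and start and end with "/".
valid_arn_path() {
  case "$1" in
    *[!A-Za-z0-9/]*) return 1 ;;  # character other than a letter, digit, or "/"
    *//*)            return 1 ;;  # empty path segment
    /*/)             return 0 ;;  # starts and ends with "/"
    *)               return 1 ;;
  esac
}

# Hypothetical helper: build an account-wide role ARN from its parts.
# Usage: account_role_arn <account_id> <path> <prefix> <role>
account_role_arn() {
  valid_arn_path "$2" || { echo "invalid ARN path: $2" >&2; return 1; }
  printf 'arn:aws:iam::%s:role%s%s-%s-Role\n' "$1" "$2" "$3" "$4"
}

account_role_arn 123456789012 /test/path/dev/ test-env Worker
# prints arn:aws:iam::123456789012:role/test/path/dev/test-env-Worker-Role
```

The account ID 123456789012 is a placeholder. The result matches the pattern of the first ARN in the list above.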

For more information about ARN paths, see Amazon Resource Names (ARNs) in the AWS documentation.

Additional resources

- For the steps to specify custom ARN paths for IAM resources when you create Red Hat OpenShift Service on AWS clusters, see Creating a cluster using customizations.

2.4. Support considerations for ROSA clusters with STS

The supported way of creating a Red Hat OpenShift Service on AWS (ROSA) cluster that uses the AWS Security Token Service (STS) is by using the steps described in this product documentation.

You can use manual mode with the ROSA CLI (rosa) to generate the AWS Identity and Access Management (IAM) policy files and aws commands that are required to install the STS resources.

The files and aws commands are generated for review purposes only and must not be modified in any way. Red Hat cannot provide support for ROSA clusters that have been deployed by using modified versions of the policy files or aws commands.

2.5. Amazon VPC Requirements for non-PrivateLink ROSA clusters

To create an Amazon VPC, you must have the following:

- An internet gateway

- A NAT gateway

- Private and public subnets that have internet connectivity provided to install required components

You must have at least one private and one public subnet for Single-AZ clusters, and at least three private and three public subnets for Multi-AZ clusters.

Additional resources

- For more information about the default components required for an AWS cluster, see Default VPCs in the AWS documentation.

- For instructions on creating a VPC in the AWS console, see Create a VPC in the AWS documentation.

2.6. Creating an OpenID Connect configuration

When using a Red Hat OpenShift Service on AWS cluster, you can create the OpenID Connect (OIDC) configuration prior to creating your cluster. This configuration is registered to be used with OpenShift Cluster Manager.

Prerequisites

- You have installed and configured the latest Red Hat OpenShift Service on AWS (ROSA) CLI, rosa, on your installation host.

Procedure

To create your OIDC configuration alongside the AWS resources, run the following command:

$ rosa create oidc-config --mode=auto --yes

This command returns the following information.

Example output

? Would you like to create a Managed (Red Hat hosted) OIDC Configuration Yes
I: Setting up managed OIDC configuration
I: To create Operator Roles for this OIDC Configuration, run the following command and remember to replace <user-defined> with a prefix of your choice:
    rosa create operator-roles --prefix <user-defined> --oidc-config-id 13cdr6b
If you are going to create a Hosted Control Plane cluster please include '--hosted-cp'
I: Creating OIDC provider using 'arn:aws:iam::4540112244:user/userName'
? Create the OIDC provider? Yes
I: Created OIDC provider with ARN 'arn:aws:iam::4540112244:oidc-provider/dvbwgdztaeq9o.cloudfront.net/13cdr6b'

When creating your cluster, you must supply the OIDC config ID. The CLI output provides this value for --mode auto; otherwise, you must determine these values based on the aws CLI output for --mode manual.

Optional: You can save the OIDC configuration ID as a variable to use later. Run the following command to save the variable:

$ export OIDC_ID=<oidc_config_id>

In the example output above, the OIDC configuration ID is 13cdr6b.

View the value of the variable by running the following command:

$ echo $OIDC_ID

Example output

13cdr6b

Verification

You can list the possible OIDC configurations available for your clusters that are associated with your user organization. Run the following command:

$ rosa list oidc-config

Example output

ID                                MANAGED  ISSUER URL                                                             SECRET ARN
2330dbs0n8m3chkkr25gkkcd8pnj3lk2  true     https://dvbwgdztaeq9o.cloudfront.net/2330dbs0n8m3chkkr25gkkcd8pnj3lk2
233hvnrjoqu14jltk6lhbhf2tj11f8un  false    https://oidc-r7u1.s3.us-east-1.amazonaws.com                           aws:secretsmanager:us-east-1:242819244:secret:rosa-private-key-oidc-r7u1-tM3MDN

2.7. Creating a cluster using customizations

Deploy a Red Hat OpenShift Service on AWS (ROSA) cluster that uses the AWS Security Token Service (STS) with a configuration that suits the needs of your environment. You can deploy your cluster with customizations by using Red Hat OpenShift Cluster Manager or the ROSA CLI (rosa).

2.7.1. Creating a cluster with customizations by using OpenShift Cluster Manager

When you create a Red Hat OpenShift Service on AWS (ROSA) cluster that uses the AWS Security Token Service (STS), you can customize your installation interactively by using Red Hat OpenShift Cluster Manager.

Only public and AWS PrivateLink clusters are supported with STS. Regular private clusters (non-PrivateLink) are not available for use with STS.

Prerequisites

- You have completed the AWS prerequisites for ROSA with STS.

- You have available AWS service quotas.

- You have enabled the ROSA service in the AWS Console.

- You have installed and configured the latest ROSA CLI (rosa) on your installation host. Run the rosa version command to see your currently installed version of the ROSA CLI. If a newer version is available, the CLI provides a link to download this upgrade.

- You have verified that the AWS Elastic Load Balancing (ELB) service role exists in your AWS account.

- If you are configuring a cluster-wide proxy, you have verified that the proxy is accessible from the VPC that the cluster is being installed into. The proxy must also be accessible from the private subnets of the VPC.

Procedure

- Navigate to OpenShift Cluster Manager and select Create cluster.

- On the Create an OpenShift cluster page, select Create cluster in the Red Hat OpenShift Service on AWS (ROSA) row.

- If an AWS account is automatically detected, the account ID is listed in the Associated AWS accounts drop-down menu. If no AWS accounts are automatically detected, click Select an account → Associate AWS account and follow these steps:

- On the Authenticate page, click the copy button next to the rosa login command. The command includes your OpenShift Cluster Manager API login token.

Note: You can also load your API token on the OpenShift Cluster Manager API Token page on OpenShift Cluster Manager.

- Run the copied command in the CLI to log in to your ROSA account.

$ rosa login --token=<api_login_token>

Replace <api_login_token> with the token that is provided in the copied command.

Example output

I: Logged in as '<username>' on 'https://api.openshift.com'

- On the Authenticate page in OpenShift Cluster Manager, click Next.

On the OCM role page, click the copy button next to the Basic OCM role or the Admin OCM role commands.

The basic role enables OpenShift Cluster Manager to detect the AWS IAM roles and policies required by ROSA. The admin role also enables the detection of the roles and policies. In addition, the admin role enables automatic deployment of the cluster-specific Operator roles and the OpenID Connect (OIDC) provider by using OpenShift Cluster Manager.

Run the copied command in the CLI and follow the prompts to create the OpenShift Cluster Manager IAM role. The following example creates a basic OpenShift Cluster Manager IAM role using the default options:

$ rosa create ocm-role

Example output

I: Creating ocm role ? Role prefix: ManagedOpenShift 1 ? Enable admin capabilities for the OCM role (optional): No 2 ? Permissions boundary ARN (optional): 3 ? Role Path (optional): 4 ? Role creation mode: auto 5 I: Creating role using 'arn:aws:iam::<aws_account_id>:user/<aws_username>' ? Create the 'ManagedOpenShift-OCM-Role-<red_hat_organization_external_id>' role? Yes I: Created role 'ManagedOpenShift-OCM-Role-<red_hat_organization_external_id>' with ARN 'arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-OCM-Role-<red_hat_organization_external_id>' I: Linking OCM role ? OCM Role ARN: arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-OCM-Role-<red_hat_organization_external_id> ? Link the 'arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-OCM-Role-<red_hat_organization_external_id>' role with organization '<red_hat_organization_id>'? Yes 6 I: Successfully linked role-arn 'arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-OCM-Role-<red_hat_organization_external_id>' with organization account '<red_hat_organization_id>'

- 1

- Specify the prefix to include in the OCM IAM role name. The default is ManagedOpenShift. You can create only one OCM role per AWS account for your Red Hat organization.

- 2

- Enable the admin OpenShift Cluster Manager IAM role, which is equivalent to specifying the --admin argument. The admin role is required if you want to use Auto mode to automatically provision the cluster-specific Operator roles and the OIDC provider by using OpenShift Cluster Manager.

- 3

- Optional: Specify a permissions boundary Amazon Resource Name (ARN) for the role. For more information, see Permissions boundaries for IAM entities in the AWS documentation.

- 4

- Specify a custom ARN path for your OCM role. The path must contain alphanumeric characters only and start and end with /, for example /test/path/dev/. For more information, see ARN path customization for IAM roles and policies.

- 5

- Select the role creation mode. You can use auto mode to automatically create the OpenShift Cluster Manager IAM role and link it to your Red Hat organization account. In manual mode, the ROSA CLI generates the aws commands needed to create and link the role. In manual mode, the corresponding policy JSON files are also saved to the current directory, so you can review the details before running the aws commands manually.

- 6

- Link the OpenShift Cluster Manager IAM role to your Red Hat organization account.

If you opted not to link the OpenShift Cluster Manager IAM role to your Red Hat organization account in the preceding command, copy the rosa link command from the OpenShift Cluster Manager OCM role page and run it:

$ rosa link ocm-role <arn> 1

- 1

- Replace <arn> with the ARN of the OpenShift Cluster Manager IAM role that is included in the output of the preceding command.

- Select Next on the OpenShift Cluster Manager OCM role page.

On the User role page, click the copy button for the User role command and run the command in the CLI. Red Hat uses the user role to verify your AWS identity when you install a cluster and the required resources with OpenShift Cluster Manager.

Follow the prompts to create the user role:

$ rosa create user-role

Example output

I: Creating User role
? Role prefix: ManagedOpenShift 1
? Permissions boundary ARN (optional): 2
? Role Path (optional): [? for help] 3
? Role creation mode: auto 4
I: Creating ocm user role using 'arn:aws:iam::<aws_account_id>:user/<aws_username>'
? Create the 'ManagedOpenShift-User-<red_hat_username>-Role' role? Yes
I: Created role 'ManagedOpenShift-User-<red_hat_username>-Role' with ARN 'arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-User-<red_hat_username>-Role'
I: Linking User role
? User Role ARN: arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-User-<red_hat_username>-Role
? Link the 'arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-User-<red_hat_username>-Role' role with account '<red_hat_user_account_id>'? Yes 5
I: Successfully linked role ARN 'arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-User-<red_hat_username>-Role' with account '<red_hat_user_account_id>'

- 1

- Specify the prefix to include in the user role name. The default is ManagedOpenShift.

- 2

- Optional: Specify a permissions boundary Amazon Resource Name (ARN) for the role. For more information, see Permissions boundaries for IAM entities in the AWS documentation.

- 3

- Specify a custom ARN path for your user role. The path must contain alphanumeric characters only and start and end with /, for example /test/path/dev/. For more information, see ARN path customization for IAM roles and policies.

- 4

- Select the role creation mode. You can use auto mode to automatically create the user role and link it to your OpenShift Cluster Manager user account. In manual mode, the ROSA CLI generates the aws commands needed to create and link the role. In manual mode, the corresponding policy JSON files are also saved to the current directory, so you can review the details before running the aws commands manually.

- 5

- Link the user role to your OpenShift Cluster Manager user account.

If you opted not to link the user role to your OpenShift Cluster Manager user account in the preceding command, copy the rosa link command from the OpenShift Cluster Manager User role page and run it:

$ rosa link user-role <arn> 1

- 1

- Replace <arn> with the ARN of the user role that is included in the output of the preceding command.

- On the OpenShift Cluster Manager User role page, click Ok.

- Verify that the AWS account ID is listed in the Associated AWS accounts drop-down menu on the Accounts and roles page.

If the required account roles do not exist, a notification is provided stating that Some account roles ARNs were not detected. You can create the AWS account-wide roles and policies, including the Operator policies, by clicking the copy button next to the rosa create account-roles command and running the command in the CLI:

$ rosa create account-roles

Example output

I: Logged in as '<red_hat_username>' on 'https://api.openshift.com'
I: Validating AWS credentials...
I: AWS credentials are valid!
I: Validating AWS quota...
I: AWS quota ok. If cluster installation fails, validate actual AWS resource usage against https://docs.openshift.com/rosa/rosa_getting_started/rosa-required-aws-service-quotas.html
I: Verifying whether OpenShift command-line tool is available...
I: Current OpenShift Client Version: 4.0
I: Creating account roles
? Role prefix: ManagedOpenShift 1
? Permissions boundary ARN (optional): 2
? Path (optional): [? for help] 3
? Role creation mode: auto 4
I: Creating roles using 'arn:aws:iam::<aws_account_number>:user/<aws_username>'
? Create the 'ManagedOpenShift-Installer-Role' role? Yes 5
I: Created role 'ManagedOpenShift-Installer-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-Installer-Role'
? Create the 'ManagedOpenShift-ControlPlane-Role' role? Yes 6
I: Created role 'ManagedOpenShift-ControlPlane-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-ControlPlane-Role'
? Create the 'ManagedOpenShift-Worker-Role' role? Yes 7
I: Created role 'ManagedOpenShift-Worker-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-Worker-Role'
? Create the 'ManagedOpenShift-Support-Role' role? Yes 8
I: Created role 'ManagedOpenShift-Support-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-Support-Role'
I: To create a cluster with these roles, run the following command:
rosa create cluster --sts

- 1

- Specify the prefix to include in the account-wide IAM role names. The default is ManagedOpenShift.

Important: You must specify an account-wide role prefix that is unique across your AWS account, even if you use a custom ARN path for your account roles.

- 2

- Optional: Specify a permissions boundary Amazon Resource Name (ARN) for the role. For more information, see Permissions boundaries for IAM entities in the AWS documentation.

- 3

- Specify a custom ARN path for your account-wide roles. The path must contain alphanumeric characters only and start and end with /, for example /test/path/dev/. For more information, see ARN path customization for IAM roles and policies.

- 4

- Select the role creation mode. You can use auto mode to automatically create the account-wide roles and policies. In manual mode, the ROSA CLI generates the aws commands needed to create the roles and policies. In manual mode, the corresponding policy JSON files are also saved to the current directory, so you can review the details before running the aws commands manually.

- 5 6 7 8

- Creates the account-wide installer, control plane, worker, and support roles and corresponding IAM policies. For more information, see Account-wide IAM role and policy reference.

Note: In this step, the ROSA CLI also automatically creates the account-wide Operator IAM policies that are used by the cluster-specific Operator policies to permit the ROSA cluster Operators to carry out core OpenShift functionality. For more information, see Account-wide IAM role and policy reference.
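The custom ARN path rule quoted in the callouts (alphanumeric characters only, starting and ending with /) can be checked before you answer the Path prompt. The following is a minimal sketch of that rule as stated in this document; the function name is hypothetical, the reading that each segment between slashes must be non-empty is an assumption, and this is not the ROSA CLI's own validation logic.

```python
import re

# Assumption: every segment between slashes must be non-empty and alphanumeric.
# This mirrors the rule as worded in the text, not the ROSA CLI implementation.
_ARN_PATH_RE = re.compile(r"/(?:[A-Za-z0-9]+/)+")


def is_valid_arn_path(path: str) -> bool:
    """Return True if the path starts and ends with "/" and has alphanumeric segments."""
    return _ARN_PATH_RE.fullmatch(path) is not None


# Print the verdict for the documented example and a few malformed paths.
for candidate in ("/test/path/dev/", "test/path/dev/", "/test/path/dev", "/test-path/"):
    print(candidate, is_valid_arn_path(candidate))
```

Only the first candidate, the example path from this document, passes the check.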

On the Accounts and roles page, click Refresh ARNs and verify that the installer, support, worker, and control plane account role ARNs are listed.

If you have more than one set of account roles in your AWS account for your cluster version, a drop-down list of Installer role ARNs is provided. Select the ARN for the installer role that you want to use with your cluster. The cluster uses the account-wide roles and policies that relate to the selected installer role.

Click Next.

Note: If the Accounts and roles page was refreshed, you might need to select the checkbox again to acknowledge that you have read and completed all of the prerequisites.

On the Cluster details page, provide a name for your cluster and specify the cluster details:

- Add a Cluster name.

Optional: Cluster creation generates a domain prefix as a subdomain for your provisioned cluster on openshiftapps.com. If the cluster name is 15 characters or fewer, that name is used for the domain prefix. If the cluster name is longer than 15 characters, the domain prefix is randomly generated as a 15-character string.

To customize the subdomain, select the Create custom domain prefix checkbox, and enter your domain prefix name in the Domain prefix field. The domain prefix cannot be longer than 15 characters, must be unique within your organization, and cannot be changed after cluster creation.

- Select a cluster version from the Version drop-down menu.

- Select a cloud provider region from the Region drop-down menu.

- Select a Single zone or Multi-zone configuration.

- Leave Enable user workload monitoring selected to monitor your own projects in isolation from Red Hat Site Reliability Engineer (SRE) platform metrics. This option is enabled by default.
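The default domain prefix rule described above (reuse the cluster name when it has 15 characters or fewer, otherwise generate a random 15-character string) can be sketched as follows. The helper name is hypothetical, and the alphabet used for the random string is an assumption, because this document does not say which characters the service uses.

```python
import secrets
import string

MAX_PREFIX_LEN = 15  # limit stated in the text


def default_domain_prefix(cluster_name: str) -> str:
    """Hypothetical helper that mimics the documented default domain prefix rule."""
    if len(cluster_name) <= MAX_PREFIX_LEN:
        return cluster_name
    # Assumption: lowercase letters and digits; the real alphabet is not documented here.
    alphabet = string.ascii_lowercase + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(MAX_PREFIX_LEN))


print(default_domain_prefix("mycluster"))
print(len(default_domain_prefix("my-very-long-cluster-name")))
```

A short name such as mycluster is returned unchanged, while a longer name yields a generated prefix of exactly 15 characters.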

Optional: Expand Advanced Encryption to make changes to encryption settings.

Accept the default setting Use default KMS Keys to use your default AWS KMS key, or select Use Custom KMS keys to use a custom KMS key.

- With Use Custom KMS keys selected, enter the Amazon Resource Name (ARN) of your custom AWS Key Management Service (KMS) key in the Key ARN field. The key is used to encrypt all control plane, infrastructure, and worker node root volumes, and the persistent volumes in your cluster.

Optional: To create a customer managed KMS key, follow the procedure for Creating symmetric encryption KMS keys.

Important: The EBS Operator role is required in addition to the account roles to successfully create your cluster.

This role must have the ManagedOpenShift-openshift-cluster-csi-drivers-ebs-cloud-credentials policy attached, an IAM policy required by ROSA to manage back-end storage through the Container Storage Interface (CSI).

For more information about the policies and permissions that the cluster Operators require, see Methods of account-wide role creation.

Example EBS Operator role

"arn:aws:iam::<aws_account_id>:role/<cluster_name>-xxxx-openshift-cluster-csi-drivers-ebs-cloud-credent"

After you create your Operator roles, you must edit the Key Policy in the Key Management Service (KMS) page of the AWS Console to add the roles.

Optional: Select Enable FIPS cryptography if you require your cluster to be FIPS validated.

Note: If Enable FIPS cryptography is selected, Enable additional etcd encryption is enabled by default and cannot be disabled. You can select Enable additional etcd encryption without selecting Enable FIPS cryptography.

Optional: Select Enable additional etcd encryption if you require etcd key value encryption. With this option, the etcd key values are encrypted, but the keys are not. This option is in addition to the control plane storage encryption that encrypts the etcd volumes in Red Hat OpenShift Service on AWS clusters by default.

Note: Enabling etcd encryption for the key values in etcd incurs a performance overhead of approximately 20%. The overhead is a result of introducing this second layer of encryption, in addition to the default control plane storage encryption that encrypts the etcd volumes. Consider enabling etcd encryption only if you specifically require it for your use case.

- Click Next.

On the Default machine pool page, select a Compute node instance type.

Note: After your cluster is created, you can change the number of compute nodes in your cluster, but you cannot change the compute node instance type in the default machine pool. The number and types of nodes available to you depend on whether you use single or multiple availability zones. They also depend on what is enabled and available in your AWS account and the selected region.

Optional: Configure autoscaling for the default machine pool:

- Select Enable autoscaling to automatically scale the number of machines in your default machine pool to meet the deployment needs.

Set the minimum and maximum node count limits for autoscaling. The cluster autoscaler does not reduce or increase the default machine pool node count beyond the limits that you specify.

- If you deployed your cluster using a single availability zone, set the Minimum node count and Maximum node count. This defines the minimum and maximum compute node limits in the availability zone.

- If you deployed your cluster using multiple availability zones, set the Minimum nodes per zone and Maximum nodes per zone. This defines the minimum and maximum compute node limits per zone.

Note: Alternatively, you can set your autoscaling preferences for the default machine pool after the machine pool is created.
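Because the multi-zone limits are set per zone, the effective limits for the whole default machine pool are the per-zone values multiplied by the number of zones. The following sketch shows that arithmetic. The class and function names are hypothetical, and the zone count is a parameter you supply; the value of three in the example is only illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutoscalingLimits:
    min_nodes: int
    max_nodes: int


def pool_wide_limits(min_per_zone: int, max_per_zone: int, zones: int = 1) -> AutoscalingLimits:
    """Scale per-zone autoscaling limits to the whole machine pool."""
    if zones < 1:
        raise ValueError("zones must be at least 1")
    if min_per_zone < 0 or max_per_zone < min_per_zone:
        raise ValueError("limits must satisfy 0 <= minimum <= maximum")
    return AutoscalingLimits(min_per_zone * zones, max_per_zone * zones)


# Illustrative values only: 1 to 4 nodes per zone across three zones.
print(pool_wide_limits(1, 4, zones=3))
```

For a single availability zone, leave zones at 1 so that the limits are returned unchanged.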

If you did not enable autoscaling, select a compute node count for your default machine pool:

- If you deployed your cluster using a single availability zone, select a Compute node count from the drop-down menu. This defines the number of compute nodes to provision to the machine pool for the zone.

- If you deployed your cluster using multiple availability zones, select a Compute node count (per zone) from the drop-down menu. This defines the number of compute nodes to provision to the machine pool per zone.

Optional: Select an EC2 Instance Metadata Service (IMDS) configuration, either optional (default) or required, to enforce use of IMDSv2. For more information regarding IMDS, see Instance metadata and user data in the AWS documentation.

Important: The Instance Metadata Service settings cannot be changed after your cluster is created.

- Optional: Expand Edit node labels to add labels to your nodes. Click Add label to add more node labels and select Next.

In the Cluster privacy section of the Network configuration page, select Public or Private to use either public or private API endpoints and application routes for your cluster.

Important: The API endpoint cannot be changed between public and private after your cluster is created.

- Public API endpoint

- Select Public if you do not want to restrict access to your cluster. You can access the Kubernetes API endpoint and application routes from the internet.

- Private API endpoint

Select Private if you want to restrict network access to your cluster. The Kubernetes API endpoint and application routes are accessible from direct private connections only.

Important: If you are using private API endpoints, you cannot access your cluster until you update the network settings in your cloud provider account.

Optional: If you opted to use public API endpoints, by default a new VPC is created for your cluster. If you want to install your cluster in an existing VPC instead, select Install into an existing VPC.

Warning: You cannot install a ROSA cluster into an existing VPC that was created by the OpenShift installer. These VPCs are created during the cluster deployment process and must only be associated with a single cluster to ensure that cluster provisioning and deletion operations work correctly.

To verify whether a VPC was created by the OpenShift installer, check for the owned value on the kubernetes.io/cluster/<infra-id> tag. For example, when viewing the tags for the VPC named mycluster-12abc-34def, the kubernetes.io/cluster/mycluster-12abc-34def tag has a value of owned. Therefore, the VPC was created by the installer and must not be modified by the administrator.

Note: If you opted to use private API endpoints, you must use an existing VPC and PrivateLink, and the Install into an existing VPC and Use a PrivateLink options are automatically selected. With these options, the Red Hat Site Reliability Engineering (SRE) team can connect to the cluster to assist with support by using only AWS PrivateLink endpoints.
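The tag check described in the warning can be automated once you have the tags of a VPC. The sketch below assumes the tags are a list of Key and Value pairs, which is the shape the AWS EC2 API uses for resource tags. The function name is hypothetical, and fetching the tags (for example with the AWS CLI or an SDK) is left out.

```python
from typing import Iterable, Mapping

CLUSTER_TAG_PREFIX = "kubernetes.io/cluster/"


def created_by_installer(tags: Iterable[Mapping[str, str]]) -> bool:
    """Return True if any kubernetes.io/cluster/<infra-id> tag has the value "owned"."""
    return any(
        tag.get("Key", "").startswith(CLUSTER_TAG_PREFIX) and tag.get("Value") == "owned"
        for tag in tags
    )


# Tags modeled on the example VPC named in the text.
example_tags = [
    {"Key": "Name", "Value": "mycluster-12abc-34def"},
    {"Key": "kubernetes.io/cluster/mycluster-12abc-34def", "Value": "owned"},
]
print(created_by_installer(example_tags))
```

A result of True means the VPC was created by the installer and is not a valid target for Install into an existing VPC.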

- Optional: If you are installing your cluster into an existing VPC, select Configure a cluster-wide proxy to enable an HTTP or HTTPS proxy to deny direct access to the internet from your cluster.

- Click Next.

If you opted to install the cluster in an existing AWS VPC, provide your Virtual Private Cloud (VPC) subnet settings.

Note: You must ensure that your VPC is configured with a public and a private subnet for each availability zone that you want the cluster installed into. If you opted to use PrivateLink, only private subnets are required.

Optional: Expand Additional security groups and select additional custom security groups to apply to nodes in the machine pools created by default. You must have already created the security groups and associated them with the VPC you selected for this cluster. You cannot add or edit security groups for the default machine pools after you create the cluster.

By default, the security groups you specify will be added for all node types. Uncheck the Apply the same security groups to all node types (control plane, infrastructure and worker) checkbox to select different security groups for each node type.

For more information, see the requirements for Security groups under Additional resources.

If you opted to configure a cluster-wide proxy, provide your proxy configuration details on the Cluster-wide proxy page:

Enter a value in at least one of the following fields:

- Specify a valid HTTP proxy URL.

- Specify a valid HTTPS proxy URL.

- In the Additional trust bundle field, provide a PEM encoded X.509 certificate bundle. The bundle is added to the trusted certificate store for the cluster nodes. An additional trust bundle file is required if you use a TLS-inspecting proxy unless the identity certificate for the proxy is signed by an authority from the Red Hat Enterprise Linux CoreOS (RHCOS) trust bundle. This requirement applies regardless of whether the proxy is transparent or requires explicit configuration using the http-proxy and https-proxy arguments.

Click Next.

For more information about configuring a proxy with Red Hat OpenShift Service on AWS, see Configuring a cluster-wide proxy.
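The rule that at least one of the proxy fields must be filled in can be checked before you reach the Cluster-wide proxy page. The following is a rough pre-flight sketch. The function names are hypothetical, accepting both http and https schemes for either URL field is an assumption, and the PEM check only looks for a certificate header rather than parsing the bundle.

```python
from typing import List, Optional
from urllib.parse import urlsplit


def _looks_like_proxy_url(value: str) -> bool:
    # Assumption: a usable proxy URL has an http or https scheme and a host name.
    parts = urlsplit(value)
    return parts.scheme in ("http", "https") and bool(parts.hostname)


def check_proxy_fields(
    http_proxy: Optional[str] = None,
    https_proxy: Optional[str] = None,
    trust_bundle: Optional[str] = None,
) -> List[str]:
    """Return a list of problems; an empty list means the fields look plausible."""
    problems = []
    if not any((http_proxy, https_proxy, trust_bundle)):
        problems.append("Enter a value in at least one field.")
    for label, value in (("HTTP proxy URL", http_proxy), ("HTTPS proxy URL", https_proxy)):
        if value and not _looks_like_proxy_url(value):
            problems.append(f"{label} is not a valid URL: {value!r}")
    if trust_bundle and "-----BEGIN CERTIFICATE-----" not in trust_bundle:
        problems.append("Additional trust bundle does not contain a PEM certificate.")
    return problems


# The host name and port are placeholders.
print(check_proxy_fields(http_proxy="http://proxy.example.com:3128"))
```

An empty list from this check does not guarantee that the proxy is reachable from the cluster; it only catches empty or malformed input.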

In the CIDR ranges dialog, configure custom classless inter-domain routing (CIDR) ranges or use the defaults that are provided and click Next.

Note: If you are installing into a VPC, the Machine CIDR range must match the VPC subnets.

Important: CIDR configurations cannot be changed later. Confirm your selections with your network administrator before proceeding.
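Because CIDR settings cannot be changed later, it can help to check the Machine CIDR against your VPC subnets before you proceed. The sketch below interprets "must match" as "every VPC subnet falls inside the Machine CIDR", which is an assumption about the requirement. The function name and the example ranges are illustrative.

```python
import ipaddress
from typing import Iterable, List


def subnets_outside_machine_cidr(machine_cidr: str, subnet_cidrs: Iterable[str]) -> List[str]:
    """Return the subnets that are not contained in the Machine CIDR range."""
    machine_net = ipaddress.ip_network(machine_cidr)
    outside = []
    for cidr in subnet_cidrs:
        subnet = ipaddress.ip_network(cidr)
        # Compare IP versions first: subnet_of() raises TypeError on a version mismatch.
        if subnet.version != machine_net.version or not subnet.subnet_of(machine_net):
            outside.append(cidr)
    return outside


# Illustrative ranges only; substitute your own Machine CIDR and subnet CIDRs.
print(subnets_outside_machine_cidr("10.0.0.0/16", ["10.0.0.0/24", "192.168.0.0/24"]))
```

Any subnet returned by the function lies outside the Machine CIDR and should be reviewed with your network administrator.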

Under the Cluster roles and policies page, select your preferred cluster-specific Operator IAM role and OIDC provider creation mode.

With Manual mode, you can use either the rosa CLI commands or the aws CLI commands to generate the required Operator roles and OIDC provider for your cluster. Manual mode enables you to review the details before using your preferred option to create the IAM resources manually and complete your cluster installation.

Alternatively, you can use Auto mode to automatically create the Operator roles and OIDC provider. To enable Auto mode, the OpenShift Cluster Manager IAM role must have administrator capabilities.

Note: If you specified custom ARN paths when you created the associated account-wide roles, the custom path is automatically detected and applied to the Operator roles. The custom ARN path is applied when the Operator roles are created by using either Manual or Auto mode.

Optional: Specify a Custom operator roles prefix for your cluster-specific Operator IAM roles.

Note: By default, the cluster-specific Operator role names are prefixed with the cluster name and a random 4-digit hash. You can optionally specify a custom prefix to replace <cluster_name>-<hash> in the role names. The prefix is applied when you create the cluster-specific Operator IAM roles. For information about the prefix, see About custom Operator IAM role prefixes.

- Select Next.

On the Cluster update strategy page, configure your update preferences:

Choose a cluster update method:

- Select Individual updates if you want to schedule each update individually. This is the default option.

Select Recurring updates to update your cluster on your preferred day and start time, when updates are available.

Important: Even when you opt for recurring updates, you must update the account-wide and cluster-specific IAM resources before you upgrade your cluster between minor releases.

Note: You can review the end-of-life dates in the update life cycle documentation for Red Hat OpenShift Service on AWS. For more information, see Red Hat OpenShift Service on AWS update life cycle.

- If you opted for recurring updates, select a preferred day of the week and upgrade start time in UTC from the drop-down menus.

- Optional: You can set a grace period for node draining during cluster upgrades. A 1-hour grace period is set by default.

Click Next.

Note: If there are critical security concerns that significantly impact the security or stability of a cluster, Red Hat Site Reliability Engineering (SRE) might schedule automatic updates to the latest z-stream version that is not impacted. The updates are applied within 48 hours after customer notifications are provided. For a description of the critical impact security rating, see Understanding Red Hat security ratings.

- Review the summary of your selections and click Create cluster to start the cluster installation.

If you opted to use Manual mode, create the cluster-specific Operator roles and OIDC provider manually to continue the installation:

In the Action required to continue installation dialog, select either the AWS CLI or the ROSA CLI tab and manually create the resources:

If you opted to use the AWS CLI method, click Download .zip, save the file, and then extract the AWS CLI command and policy files. Then, run the provided aws commands in the CLI.

Note: You must run the aws commands in the directory that contains the policy files.

If you opted to use the ROSA CLI method, click the copy button next to the rosa create commands and run them in the CLI.

Note: If you specified custom ARN paths when you created the associated account-wide roles, the custom path is automatically detected and applied to the Operator roles when you create them by using these manual methods.

- In the Action required to continue installation dialog, click x to return to the Overview page for your cluster.

- Verify that the cluster Status in the Details section of the Overview page for your cluster has changed from Waiting to Installing. There might be a short delay of approximately two minutes before the status changes.

Note: If you opted to use Auto mode, OpenShift Cluster Manager creates the Operator roles and the OIDC provider automatically.

Important: The EBS Operator role is required in addition to the account roles to successfully create your cluster.

This role must have the ManagedOpenShift-openshift-cluster-csi-drivers-ebs-cloud-credentials policy attached, an IAM policy required by ROSA to manage back-end storage through the Container Storage Interface (CSI).

For more information about the policies and permissions that the cluster Operators require, see Methods of account-wide role creation.

Example EBS Operator role

"arn:aws:iam::<aws_account_id>:role/<cluster_name>-xxxx-openshift-cluster-csi-drivers-ebs-cloud-credent"

After you create your Operator roles, you must edit the Key Policy in the Key Management Service (KMS) page of the AWS Console to add the roles.

Verification

You can monitor the progress of the installation in the Overview page for your cluster. You can view the installation logs on the same page. Your cluster is ready when the Status in the Details section of the page is listed as Ready.

Note: If the installation fails or the cluster Status does not change to Ready after about 40 minutes, check the installation troubleshooting documentation for details. For more information, see Troubleshooting installations. For steps to contact Red Hat Support for assistance, see Getting support for Red Hat OpenShift Service on AWS.

Additional resources

- create cluster in Managing objects with the ROSA CLI

- Methods of account-wide role creation

2.7.2. Creating a cluster with customizations using the CLI

When you create a Red Hat OpenShift Service on AWS (ROSA) cluster that uses the AWS Security Token Service (STS), you can customize your installation interactively.

When you run the rosa create cluster --interactive command at cluster creation time, you are presented with a series of interactive prompts that enable you to customize your deployment. For more information, see Interactive cluster creation mode reference.

After a cluster installation using the interactive mode completes, a single command is provided in the output that enables you to deploy further clusters using the same custom configuration.

Only public and AWS PrivateLink clusters are supported with STS. Regular private clusters (non-PrivateLink) are not available for use with STS.

Prerequisites

- You have completed the AWS prerequisites for ROSA with STS.

- You have available AWS service quotas.

- You have enabled the ROSA service in the AWS Console.

- You have installed and configured the latest ROSA CLI, rosa, on your installation host. Run rosa version to see your currently installed version of the ROSA CLI. If a newer version is available, the CLI provides a link to download this upgrade.

- If you want to use a customer managed AWS Key Management Service (KMS) key for encryption, you must create a symmetric KMS key. You must provide the Amazon Resource Name (ARN) when creating your cluster. To create a customer managed KMS key, follow the procedure for Creating symmetric encryption KMS keys.

Important: The EBS Operator role is required in addition to the account roles to successfully create your cluster.

This role must have the ManagedOpenShift-openshift-cluster-csi-drivers-ebs-cloud-credentials policy attached, an IAM policy required by ROSA to manage back-end storage through the Container Storage Interface (CSI).

For more information about the policies and permissions that the cluster Operators require, see Methods of account-wide role creation.

Example EBS Operator role

"arn:aws:iam::<aws_account_id>:role/<cluster_name>-xxxx-openshift-cluster-csi-drivers-ebs-cloud-credent"

After you create your Operator roles, you must edit the Key Policy in the Key Management Service (KMS) page of the AWS Console to add the roles.

Procedure

Create the required account-wide roles and policies, including the Operator policies:

Generate the IAM policy JSON files in the current working directory and output the aws CLI commands for review:

$ rosa create account-roles --interactive \ 1
    --mode manual 2

- 1

- interactive mode enables you to specify configuration options at the interactive prompts. For more information, see Interactive cluster creation mode reference.

- 2

- manual mode generates the aws CLI commands and JSON files needed to create the account-wide roles and policies. After review, you must run the commands manually to create the resources.

Example output

I: Logged in as '<red_hat_username>' on 'https://api.openshift.com'
I: Validating AWS credentials...
I: AWS credentials are valid!
I: Validating AWS quota...
I: AWS quota ok. If cluster installation fails, validate actual AWS resource usage against https://docs.openshift.com/rosa/rosa_getting_started/rosa-required-aws-service-quotas.html
I: Verifying whether OpenShift command-line tool is available...
I: Current OpenShift Client Version: 4.0
I: Creating account roles
? Role prefix: ManagedOpenShift 1
? Permissions boundary ARN (optional): 2
? Path (optional): [? for help] 3
? Role creation mode: auto 4
I: Creating roles using 'arn:aws:iam::<aws_account_number>:user/<aws_username>'
? Create the 'ManagedOpenShift-Installer-Role' role? Yes 5
I: Created role 'ManagedOpenShift-Installer-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-Installer-Role'
? Create the 'ManagedOpenShift-ControlPlane-Role' role? Yes 6
I: Created role 'ManagedOpenShift-ControlPlane-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-ControlPlane-Role'
? Create the 'ManagedOpenShift-Worker-Role' role? Yes 7
I: Created role 'ManagedOpenShift-Worker-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-Worker-Role'
? Create the 'ManagedOpenShift-Support-Role' role? Yes 8
I: Created role 'ManagedOpenShift-Support-Role' with ARN 'arn:aws:iam::<aws_account_number>:role/ManagedOpenShift-Support-Role'
I: To create a cluster with these roles, run the following command:
rosa create cluster --sts

- 1

- Specify the prefix to include in the account-wide IAM role names. The default is ManagedOpenShift.

Important: You must specify an account-wide role prefix that is unique across your AWS account, even if you use a custom ARN path for your account roles.

- 2

- Optional: Specify a permissions boundary Amazon Resource Name (ARN) for the role. For more information, see Permissions boundaries for IAM entities in the AWS documentation.

- 3

- Specify a custom ARN path for your account-wide roles. The path must contain alphanumeric characters only and start and end with /, for example /test/path/dev/. For more information, see ARN path customization for IAM roles and policies.

- 4

- Select the role creation mode. You can use auto mode to automatically create the account-wide roles and policies. In manual mode, the rosa CLI generates the aws commands needed to create the roles and policies. In manual mode, the corresponding policy JSON files are also saved to the current directory, so you can review the details before running the aws commands manually.

- 5 6 7 8

- Creates the account-wide installer, control plane, worker, and support roles and corresponding IAM policies. For more information, see Account-wide IAM role and policy reference.

Note: In this step, the ROSA CLI also automatically creates the account-wide Operator IAM policies that are used by the cluster-specific Operator policies to permit the ROSA cluster Operators to run core OpenShift functionality. For more information, see Account-wide IAM role and policy reference.

- After review, run the aws commands manually to create the roles and policies. Alternatively, you can run the preceding command using --mode auto to run the aws commands immediately.

Optional: If you are using your own AWS KMS key to encrypt the control plane, infrastructure, worker node root volumes, and persistent volumes (PVs), add the ARN for the account-wide installer role to your KMS key policy.

Important: Only persistent volumes (PVs) created from the default storage class are encrypted with this specific key.

PVs created by using any other storage class are still encrypted, but the PVs are not encrypted with this key unless the storage class is specifically configured to use this key.

Save the key policy for your KMS key to a file on your local machine. The following example saves the output to kms-key-policy.json in the current working directory:

$ aws kms get-key-policy --key-id <key_id_or_arn> --policy-name default --output text > kms-key-policy.json 1

- 1

- Replace <key_id_or_arn> with the ID or ARN of your KMS key.

Add the ARN for the account-wide installer role that you created in the preceding step to the Statement.Principal.AWS section in the file. In the following example, the ARN for the default ManagedOpenShift-Installer-Role role is added:

{
  "Version": "2012-10-17",
  "Id": "key-rosa-policy-1",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<aws_account_id>:root"
      },
      "Action": "kms:*",
      "Resource": "*"
    },
    {
      "Sid": "Allow ROSA use of the key",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Support-Role", 1
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Installer-Role",
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Worker-Role",
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-ControlPlane-Role",
          "arn:aws:iam::<aws_account_id>:role/<cluster_name>-xxxx-openshift-cluster-csi-drivers-ebs-cloud-credent" 2
        ]
      },
      "Action": [
        "kms:Encrypt",
        "kms:Decrypt",
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:DescribeKey"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow attachment of persistent resources",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Support-Role", 3
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Installer-Role",
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Worker-Role",
          "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-ControlPlane-Role",
          "arn:aws:iam::<aws_account_id>:role/<cluster_name>-xxxx-openshift-cluster-csi-drivers-ebs-cloud-credent" 4
        ]
      },
      "Action": [
        "kms:CreateGrant",
        "kms:ListGrants",
        "kms:RevokeGrant"
      ],
      "Resource": "*",
      "Condition": {
        "Bool": {
          "kms:GrantIsForAWSResource": "true"
        }
      }
    }
  ]
}

- 1 3

- You must specify the ARN for the account-wide role that will be used when you create the ROSA cluster. The ARNs listed in the section must be comma-separated.

- 2 4

- You must specify the ARN for the operator role that will be used when you create the ROSA cluster. The ARNs listed in the section must be comma-separated.

Apply the changes to your KMS key policy:

    $ aws kms put-key-policy --key-id <key_id_or_arn> \
        --policy file://kms-key-policy.json \
        --policy-name default

You can reference the ARN of your KMS key when you create the cluster in the next step.
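The manual edit of kms-key-policy.json described above can also be scripted. The following is a minimal sketch, not part of the ROSA or AWS tooling: the function names add_role_to_statement and update_policy_file are hypothetical, and the sketch assumes that the saved policy has the same shape as the preceding example, with statements identified by the Sid values "Allow ROSA use of the key" and "Allow attachment of persistent resources".

```python
import copy
import json


def add_role_to_statement(policy, sid, role_arn):
    """Return a copy of `policy` with `role_arn` added to Principal.AWS
    of the statement whose Sid equals `sid` (hypothetical helper)."""
    updated = copy.deepcopy(policy)  # leave the caller's policy untouched
    for statement in updated["Statement"]:
        if statement.get("Sid") != sid:
            continue
        principals = statement["Principal"]["AWS"]
        # A key policy can hold a single ARN string or a list of ARNs.
        if isinstance(principals, str):
            principals = [principals]
        # Avoid duplicate entries if the ARN is already present.
        if role_arn not in principals:
            principals.append(role_arn)
        statement["Principal"]["AWS"] = principals
        return updated
    raise KeyError(f"no statement with Sid {sid!r} in the key policy")


def update_policy_file(path, role_arn, sids):
    """Add `role_arn` to every statement named in `sids` and rewrite the file."""
    with open(path) as f:
        policy = json.load(f)
    for sid in sids:
        policy = add_role_to_statement(policy, sid, role_arn)
    with open(path, "w") as f:
        json.dump(policy, f, indent=2)
```

For example, calling update_policy_file("kms-key-policy.json", "arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Installer-Role", ["Allow ROSA use of the key", "Allow attachment of persistent resources"]) would update both statements in the saved file, after which you can apply the file with the aws kms put-key-policy command. Review the resulting file before you apply it, because the sketch does not validate the policy.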

Create a cluster with STS using custom installation options. You can use the --interactive mode to interactively specify custom settings:

Warning

You cannot install a ROSA cluster into an existing VPC that was created by the OpenShift installer. These VPCs are created during the cluster deployment process and must only be associated with a single cluster to ensure that cluster provisioning and deletion operations work correctly.

To verify whether a VPC was created by the OpenShift installer, check for the owned value on the kubernetes.io/cluster/<infra-id> tag. For example, when viewing the tags for the VPC named mycluster-12abc-34def, the kubernetes.io/cluster/mycluster-12abc-34def tag has a value of owned. Therefore, the VPC was created by the installer and must not be modified by the administrator.

$ rosa create cluster --interactive --sts

Example output

    I: Interactive mode enabled.
    Any optional fields can be left empty and a default will be selected.
    ? Cluster name: <cluster_name>
    ? Domain prefix: <domain_prefix> 1
    ? Deploy cluster with Hosted Control Plane (optional): No
    ? Create cluster admin user: Yes 2
    ? Create custom password for cluster admin: No 3
    I: cluster admin user is cluster-admin
    I: cluster admin password is password
    ? OpenShift version: <openshift_version> 4
    ? Configure the use of IMDSv2 for ec2 instances optional/required (optional): 5
    I: Using arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Installer-Role for the Installer role 6
    I: Using arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-ControlPlane-Role for the ControlPlane role
    I: Using arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Worker-Role for the Worker role
    I: Using arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Support-Role for the Support role
    ? External ID (optional): 7
    ? Operator roles prefix: <cluster_name>-<random_string> 8
    ? Deploy cluster using pre registered OIDC Configuration ID:
    ? Tags (optional) 9
    ? Multiple availability zones (optional): No 10
    ? AWS region: us-east-1
    ? PrivateLink cluster (optional): No
    ? Machine CIDR: 10.0.0.0/16
    ? Service CIDR: 172.30.0.0/16
    ? Pod CIDR: 10.128.0.0/14
    ? Install into an existing VPC (optional): Yes 11
    ? Subnet IDs (optional):
    ? Select availability zones (optional): No
    ? Enable Customer Managed key (optional): No 12
    ? Compute nodes instance type (optional):
    ? Enable autoscaling (optional): No
    ? Compute nodes: 2
    ? Worker machine pool labels (optional):
    ? Host prefix: 23
    ? Additional Security Group IDs (optional): 13
    ? > [*] sg-0e375ff0ec4a6cfa2 ('sg-1')
    ? > [ ] sg-0e525ef0ec4b2ada7 ('sg-2')
    ? Enable FIPS support: No 14
    ? Encrypt etcd data: No 15
    ? Disable Workload monitoring (optional): No
    I: Creating cluster '<cluster_name>'
    I: To create this cluster again in the future, you can run:
       rosa create cluster --cluster-name <cluster_name> --role-arn arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Installer-Role --support-role-arn arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Support-Role --master-iam-role arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-ControlPlane-Role --worker-iam-role arn:aws:iam::<aws_account_id>:role/ManagedOpenShift-Worker-Role --operator-roles-prefix <cluster_name>-<random_string> --region us-east-1 --version 4.17.0 --additional-compute-security-group-ids sg-0e375ff0ec4a6cfa2 --additional-infra-security-group-ids sg-0e375ff0ec4a6cfa2 --additional-control-plane-security-group-ids sg-0e375ff0ec4a6cfa2 --replicas 2 --machine-cidr 10.0.0.0/16 --service-cidr 172.30.0.0/16 --pod-cidr 10.128.0.0/14 --host-prefix 23 16
    I: To view a list of clusters and their status, run 'rosa list clusters'
    I: Cluster '<cluster_name>' has been created.
    I: Once the cluster is installed you will need to add an Identity Provider before you can login into the cluster. See 'rosa create idp --help' for more information.
    ...

- 1

- Optional. When creating your cluster, you can customize the subdomain for your cluster on *.openshiftapps.com using the --domain-prefix flag. The value for this flag must be unique within your organization, cannot be longer than 15 characters, and cannot be changed after cluster creation. If the flag is not supplied, an autogenerated value is created that depends on the length of the cluster name. If the cluster name is 15 characters or fewer, that name is used for the domain prefix. If the cluster name is longer than 15 characters, the domain prefix is a randomly generated 15-character string.

- 2

- When creating your cluster, you can create a local administrator user (cluster-admin) for your cluster. This automatically configures an htpasswd identity provider for the cluster-admin user.

- 3

- You can create a custom password for the cluster-admin user, or have the system generate a password. If you do not create a custom password, the generated password is displayed in the command line output. If you specify a custom password, the password must be at least 14 characters (ASCII-standard) without any whitespace. When defined, the password is hashed and transported securely.

- 4

- When creating the cluster, the listed OpenShift version options include the major, minor, and patch versions, for example 4.17.0.

- 5

- Optional: Specify optional to configure all EC2 instances to use both v1 and v2 endpoints of EC2 Instance Metadata Service (IMDS). This is the default value. Specify required to configure all EC2 instances to use IMDSv2 only.

Important

The Instance Metadata Service settings cannot be changed after your cluster is created.

- 6

- If you have more than one set of account roles for your cluster version in your Amazon Web Services (AWS) account, an interactive list of options is provided.

- 7

- Optional: Specify a unique identifier that is passed by Red Hat OpenShift Service on AWS and the OpenShift installer when an account role is assumed. This option is only required for custom account roles that expect an external ID.

- 8

- By default, the cluster-specific Operator role names are prefixed with the cluster name and a random 4-digit hash. You can optionally specify a custom prefix to replace <cluster_name>-<hash> in the role names. The prefix is applied when you create the cluster-specific Operator IAM roles. For information about the prefix, see About custom Operator IAM role prefixes.

Note

If you specified custom ARN paths when you created the associated account-wide roles, the custom path is automatically detected. The custom path is applied to the cluster-specific Operator roles when you create them in a later step.

- 9

- Optional: Specify a tag that is used on all resources created by Red Hat OpenShift Service on AWS in AWS. Tags can help you manage, identify, organize, search for, and filter resources within AWS. Tags are comma separated, for example: key value, data input.

Important

Red Hat OpenShift Service on AWS supports adding custom tags to Red Hat OpenShift resources only during cluster creation. Once added, the tags cannot be removed or edited. Tags that are added by Red Hat are required for clusters to stay in compliance with Red Hat production service level agreements (SLAs). These tags must not be removed.

Red Hat OpenShift Service on AWS does not support adding additional tags outside of ROSA cluster-managed resources. These tags can be lost when AWS resources are managed by the ROSA cluster. In these cases, you might need custom solutions or tools to reconcile the tags and keep them intact.

- 10

- Optional: Multiple availability zones are recommended for production workloads. The default is a single availability zone.

- 11

- Optional: You can create a cluster in an existing VPC, or ROSA can create a new VPC to use.

Warning

You cannot install a ROSA cluster into an existing VPC that was created by the OpenShift installer. These VPCs are created during the cluster deployment process and must only be associated with a single cluster to ensure that cluster provisioning and deletion operations work correctly.

To verify whether a VPC was created by the OpenShift installer, check for the owned value on the kubernetes.io/cluster/<infra-id> tag. For example, when viewing the tags for the VPC named mycluster-12abc-34def, the kubernetes.io/cluster/mycluster-12abc-34def tag has a value of owned. Therefore, the VPC was created by the installer and must not be modified by the administrator.

- 12

- Optional: Enable this option if you are using your own AWS KMS key to encrypt the control plane, infrastructure, worker node root volumes, and PVs. Specify the ARN for the KMS key whose key policy you updated with the account-wide role ARN in the preceding step.

Important

Only persistent volumes (PVs) created from the default storage class are encrypted with this specific key.

PVs created by using any other storage class are still encrypted, but the PVs are not encrypted with this key unless the storage class is specifically configured to use this key.

- 13

- Optional: You can select additional custom security groups to use in your cluster. You must have already created the security groups and associated them with the VPC you selected for this cluster. You cannot add or edit security groups for the default machine pools after you create the machine pool. For more information, see the requirements for Security groups under Additional resources.

- 14

- Optional: Enable this option if you require your cluster to be FIPS validated. Selecting this option means the encrypt etcd data option is enabled by default and cannot be disabled. You can encrypt etcd data without enabling FIPS support.

- 15

- Optional: Enable this option only if your use case requires etcd key value encryption in addition to the control plane storage encryption that encrypts the etcd volumes by default. With this option, the etcd key values are encrypted but not the keys.

Important