6.5. Host Tasks

6.5.1. Adding a Satellite Host Provider Host

Procedure 6.1. Adding a Satellite Host Provider Host

- Click the Hosts resource tab to list the hosts in the results list.

- Click New to open the New Host window.

- Use the drop-down menu to select the Host Cluster for the new host.

- Select the Foreman/Satellite check box to display the options for adding a Satellite host provider host and select the provider from which the host is to be added.

- Select either Discovered Hosts or Provisioned Hosts.

- Discovered Hosts (default option): Select the host, host group, and compute resources from the drop-down lists.

- Provisioned Hosts: Select a host from the Providers Hosts drop-down list.

Any details regarding the host that can be retrieved from the external provider are automatically set, and can be edited as desired.

- Enter the Name, Address, and SSH Port (Provisioned Hosts only) of the new host.

- Select an authentication method to use with the host.

- Enter the root user's password to use password authentication.

- Copy the key displayed in the SSH PublicKey field to /root/.ssh/authorized_keys on the host to use public key authentication (Provisioned Hosts only).

- You have now completed the mandatory steps to add a Red Hat Enterprise Linux host. Click the drop-down button to show the advanced host settings.

- Optionally disable automatic firewall configuration.

- Optionally add a host SSH fingerprint to increase security. You can add it manually, or fetch it automatically.

- You can configure the Power Management, SPM, Console, and Network Provider using the applicable tabs now; however, as these are not fundamental to adding a Red Hat Enterprise Linux host, they are not covered in this procedure.

- Click OK to add the host and close the window.

The new host is displayed in the results list with a status of Installing, and you can view the progress of the installation in the details pane. After installation is complete, the status updates to Reboot. The host must be activated for the status to change to Up.
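For public key authentication, the key from the SSH PublicKey field must end up in the root user's authorized_keys file on the host, which is the file OpenSSH reads by default. The SSH Fingerprint option is also easier to trust if you can compute the host's fingerprint yourself. The following POSIX shell sketch is illustrative only. The function names install_engine_key and md5_fingerprint and the file name engine.pub are hypothetical. It assumes a Linux host with GNU coreutils, and it assumes the fingerprint is compared in the legacy MD5 colon-separated form. On OpenSSH 6.8 and later, `ssh-keygen -l -E md5 -f FILE` prints the same form.

```shell
# install_engine_key KEY_FILE [SSH_DIR]
# Hypothetical helper: appends the first line of KEY_FILE (the key copied
# from the SSH PublicKey field) to SSH_DIR/authorized_keys exactly once.
# SSH_DIR defaults to /root/.ssh.
install_engine_key() (
    key_file=$1
    ssh_dir=${2:-/root/.ssh}
    auth_file=$ssh_dir/authorized_keys

    key=$(head -n 1 "$key_file") || exit 1
    if [ -z "$key" ]; then
        echo "no key found in $key_file" >&2
        exit 1
    fi

    # sshd's StrictModes check rejects group- or world-writable key
    # files and directories, so set restrictive modes.
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || exit 1
    touch "$auth_file" && chmod 600 "$auth_file" || exit 1

    # Append only if this exact line is not already present.
    if ! grep -qxF -- "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi
    # On SELinux-enforcing hosts, a newly created directory may also
    # need `restorecon -R "$ssh_dir"` (not run here).
)

# md5_fingerprint PUBKEY_FILE
# Prints the legacy MD5 fingerprint (aa:bb:...:ff) of a public key file:
# the MD5 digest of the base64-decoded key blob in the second field.
md5_fingerprint() {
    awk '{ print $2; exit }' "$1" |
        base64 -d |
        md5sum |
        cut -d ' ' -f 1 |
        sed 's/../&:/g; s/:$//'
}

# Example usage on the host (paths are assumptions):
#   install_engine_key /tmp/engine.pub
#   md5_fingerprint /etc/ssh/ssh_host_rsa_key.pub
```

Running install_engine_key twice leaves a single copy of the key. This keeps the step safe to repeat if the host is added again.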

6.5.2. Configuring Satellite Errata Management for a Host


Procedure 6.2. Configuring Satellite Errata Management for a Host

- Add the Satellite server as an external provider. See Section 11.2.2, “Adding a Red Hat Satellite Instance for Host Provisioning” for more information.

- Associate the required host with the Satellite server.

Note

The host must be registered to the Satellite server and have the katello-agent package installed. For more information on how to configure a host registration, see Configuring a Host for Registration in the Red Hat Satellite User Guide. For more information on how to register a host and install the katello-agent package, see Registration in the Red Hat Satellite User Guide.

- In the Hosts tab, select the host in the results list.

- Click Edit to open the Edit Host window.

- Select the Use Foreman/Satellite check box.

- Select the required Satellite server from the drop-down list.

- Click OK.

6.5.3. Explanation of Settings and Controls in the New Host and Edit Host Windows

6.5.3.1. Host General Settings Explained

| Field Name | Description |
|---|---|
| Data Center | The data center to which the host belongs. Red Hat Enterprise Virtualization Hypervisor hosts cannot be added to Gluster-enabled clusters. |
| Host Cluster | The cluster to which the host belongs. |
| Use Foreman/Satellite | Select or clear this check box to view or hide options for adding hosts provided by Satellite host providers. The following options are also available: Discovered Hosts and Provisioned Hosts. |
| Name | The name of the host. This text field has a 40-character limit and must be a unique name with any combination of uppercase and lowercase letters, numbers, hyphens, and underscores. |
| Comment | A field for adding plain text, human-readable comments regarding the host. |
| Address | The IP address or resolvable host name of the host. |
| Password | The password of the host's root user. This can only be given when you add the host; it cannot be edited afterwards. |
| SSH PublicKey | Copy the contents of the text box to the /root/.ssh/authorized_keys file on the host to use the Manager's SSH key instead of a password to authenticate with the host. |
| Automatically configure host firewall | When adding a new host, the Manager can open the required ports on the host's firewall. This is enabled by default. This is an Advanced Parameter. |
| Use JSON protocol | This is enabled by default. This is an Advanced Parameter. |
| SSH Fingerprint | You can fetch the host's SSH fingerprint and compare it with the fingerprint you expect the host to return, ensuring that they match. This is an Advanced Parameter. |

6.5.3.2. Host Power Management Settings Explained

| Field Name | Description |
|---|---|
| Enable Power Management | Enables power management on the host. Select this check box to enable the rest of the fields in the Power Management tab. |
| Kdump integration | Prevents the host from fencing while performing a kernel crash dump, so that the crash dump is not interrupted. From Red Hat Enterprise Linux 6.6 and 7.1 onwards, Kdump is available by default. If kdump is available on the host but its configuration is not valid (the kdump service cannot be started), enabling Kdump integration will cause the host (re)installation to fail. If this is the case, see Section 6.6.4, “fence_kdump Advanced Configuration”. |
| Disable policy control of power management | Power management is controlled by the Scheduling Policy of the host's cluster. If power management is enabled and the defined low utilization value is reached, the Manager powers down the host machine, and restarts it when load balancing requires it or when there are not enough free hosts in the cluster. Select this check box to disable policy control. |
| Agents by Sequential Order | Lists the host's fence agents. Fence agents can be sequential, concurrent, or a mix of both. Fence agents are sequential by default. Use the up and down buttons to change the sequence in which the fence agents are used. To make two fence agents concurrent, select one fence agent from the Concurrent with drop-down list next to the other fence agent. Additional fence agents can be added to the group of concurrent fence agents by selecting the group from the Concurrent with drop-down list next to the additional fence agent. |
| Add Fence Agent | Click the plus (+) button to add a new fence agent. The Edit fence agent window opens. See the table below for more information on the fields in this window. |
| Power Management Proxy Preference | By default, the Manager searches for a fencing proxy within the same cluster as the host and, if no fencing proxy is found, within the same data center (dc). Use the up and down buttons to change the sequence in which these resources are used. This field is available under Advanced Parameters. |

| Field Name | Description |
|---|---|
| Address | The address to access your host's power management device: either a resolvable host name or an IP address. |
| User Name | User account with which to access the power management device. You can set up a user on the device, or use the default user. |
| Password | Password for the user accessing the power management device. |
| Type | The type of power management device in your host. Select it from the Type drop-down list. |
| SSH Port | The port number used by the power management device to communicate with the host. |
| Slot | The number used to identify the blade of the power management device. |
| Service Profile | The service profile name used to identify the blade of the power management device. This field appears instead of Slot when the device type is cisco_ucs. |
| Options | Power management device specific options. Enter these as 'key=value'. See the documentation of your host's power management device for the options available. For Red Hat Enterprise Linux 7 hosts, if you are using cisco_ucs as the power management device, you also need to append ssl_insecure=1 to the Options field. |
| Secure | Select this check box to allow the power management device to connect securely to the host. This can be done via ssh, ssl, or other authentication protocols depending on the power management agent. |
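Procedure 6.3 enters the Options field as a comma-separated list of key=value entries, and cisco_ucs devices on Red Hat Enterprise Linux 7 hosts also need ssl_insecure=1. The function below is a hypothetical pre-flight check you could run on an options string before pasting it into the field. It trims spaces, rejects entries that are not in key=value form, and appends ssl_insecure=1 for the cisco_ucs type when that entry is missing. The helper name and the example option names (lanplus, power_wait, port) are illustrative assumptions. This is a sketch, not a reproduction of how the Manager itself parses the field.

```shell
# normalize_pm_options OPTIONS TYPE
# Hypothetical helper: validates a comma-separated list of key=value
# entries and trims surrounding spaces. For TYPE cisco_ucs, it appends
# ssl_insecure=1 unless an ssl_insecure entry is already present.
# Prints the normalized list; exits non-zero on a malformed entry.
normalize_pm_options() (
    opts=$1
    type=$2
    out=

    set -f          # no globbing while splitting on commas
    IFS=,
    for entry in $opts; do
        # Trim leading and trailing spaces.
        entry=$(printf '%s' "$entry" | sed 's/^ *//; s/ *$//')
        case $entry in
            =*)  echo "missing key: '$entry'" >&2; exit 1 ;;
            *=*) ;;  # looks like key=value
            *)   echo "not key=value: '$entry'" >&2; exit 1 ;;
        esac
        out=${out:+$out,}$entry
    done

    if [ "$type" = cisco_ucs ]; then
        case ",$out," in
            *,ssl_insecure=*) ;;  # keep an explicit user value
            *) out=${out:+$out,}ssl_insecure=1 ;;
        esac
    fi
    printf '%s\n' "$out"
)

# Examples:
#   normalize_pm_options 'lanplus=1, power_wait=4' ipmilan
#   normalize_pm_options 'port=443' cisco_ucs
```

The function body runs in a subshell, so the changes to IFS and `set -f` do not leak into the calling shell.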

6.5.3.3. SPM Priority Settings Explained

| Field Name | Description |
|---|---|
| SPM Priority | Defines the likelihood that the host will be given the role of Storage Pool Manager (SPM). The options are Low, Normal, and High priority. Low priority means that there is a reduced likelihood of the host being assigned the role of SPM, and High priority means there is an increased likelihood. The default setting is Normal. |

6.5.3.4. Host Console Settings Explained

| Field Name | Description |
|---|---|
| Override display address | Select this check box to override the display addresses of the host. This feature is useful when the hosts are defined by internal IP addresses and are behind a NAT firewall. When a user connects to a virtual machine from outside of the internal network, a public IP address or FQDN (which is resolved in the external network to the public IP address) is returned instead of the private address of the host on which the virtual machine is running. |
| Display address | The display address specified here will be used for all virtual machines running on this host. The address must be a fully qualified domain name or an IP address. |

6.5.4. Configuring Host Power Management Settings

Important

Place the host in maintenance mode before configuring power management settings. Otherwise, all running virtual machines on that host will be stopped ungracefully upon restarting the host, which can cause disruptions in production environments. A warning dialog will appear if you have not correctly set your host to maintenance mode.

Procedure 6.3. Configuring Power Management Settings

- In the Hosts tab, select the host in the results list.

- Click Edit to open the Edit Host window.

- Click the Power Management tab to display the Power Management settings.

- Select the Enable Power Management check box to enable the fields.

- Select the Kdump integration check box to prevent the host from fencing while performing a kernel crash dump.

Important

When you enable Kdump integration on an existing host, the host must be reinstalled for kdump to be configured. See Section 6.5.10, “Reinstalling Virtualization Hosts”.

- Optionally, select the Disable policy control of power management check box if you do not want your host's power management to be controlled by the Scheduling Policy of the host's cluster.

- Click the plus (+) button to add a new power management device. The Edit fence agent window opens.

- Enter the Address, User Name, and Password of the power management device into the appropriate fields.

- Select the power management device Type from the drop-down list.

- Enter the SSH Port number used by the power management device to communicate with the host.

- Enter the Slot number used to identify the blade of the power management device.

- Enter the Options for the power management device. Use a comma-separated list of 'key=value' entries.

- Select the Secure check box to enable the power management device to connect securely to the host.

- Click Test to ensure the settings are correct. The message Test Succeeded, Host Status is: on is displayed upon successful verification.

- Click OK to close the Edit fence agent window.

- In the Power Management tab, optionally expand the Advanced Parameters and use the up and down buttons to specify the order in which the Manager will search the host's cluster and data center (dc) for a fencing proxy.

- Click OK.

6.5.5. Configuring Host Storage Pool Manager Settings

Procedure 6.4. Configuring SPM settings

- Click the Hosts resource tab, and select a host from the results list.

- Click Edit to open the Edit Host window.

- Click the SPM tab to display the SPM Priority settings.

- Use the radio buttons to select the appropriate SPM priority for the host.

- Click OK to save the settings and close the window.

6.5.6. Editing a Resource

Procedure 6.5. Editing a Resource

- Use the resource tabs, tree mode, or the search function to find and select the resource in the results list.

- Click Edit to open the Edit window.

- Change the necessary properties and click OK.

6.5.7. Moving a Host to Maintenance Mode

Procedure 6.6. Placing a Host into Maintenance Mode

- Click the Hosts resource tab, and select the desired host.

- Click Maintenance to open the Maintenance Host(s) confirmation window.

- Optionally, enter a Reason for moving the host into maintenance mode in the Maintenance Host(s) confirmation window. This allows you to provide an explanation for the maintenance, which will appear in the logs and when the host is activated again.

Note

The host maintenance Reason field will only appear if it has been enabled in the cluster settings. See Section 4.2.2.1, “General Cluster Settings Explained” for more information.

- Click OK to initiate maintenance mode.

The status of the host changes to Preparing for Maintenance, and finally to Maintenance when the operation completes successfully. VDSM does not stop while the host is in maintenance mode.


6.5.8. Activating a Host from Maintenance Mode

Procedure 6.7. Activating a Host from Maintenance Mode

- Click the Hosts resource tab and select the host.

- Click Activate.

The status of the host changes to Unassigned, and finally to Up when the operation is complete. Virtual machines can now run on the host. Virtual machines that were migrated off the host when it was placed into maintenance mode are not automatically migrated back to the host when it is activated, but can be migrated manually. If the host was the Storage Pool Manager (SPM) before being placed into maintenance mode, the SPM role does not return automatically when the host is activated.

6.5.9. Removing a Host

Procedure 6.8. Removing a host

- In the Administration Portal, click the Hosts resource tab and select the host in the results list.

- Place the host into maintenance mode.

- Click Remove to open the Remove Host(s) confirmation window.

- Select the Force Remove check box if the host is part of a Red Hat Gluster Storage cluster and has volume bricks on it, or if the host is non-responsive.

- Click OK.

6.5.10. Reinstalling Virtualization Hosts


Procedure 6.9. Reinstalling Red Hat Enterprise Virtualization Hypervisors and Red Hat Enterprise Linux Hosts

- Use the Hosts resource tab, tree mode, or the search function to find and select the host in the results list.

- Click Maintenance. If migration is enabled at the cluster level, any virtual machines running on the host are migrated to other hosts. If the host is the SPM, this function is moved to another host. The status of the host changes as it enters maintenance mode.

- Click Reinstall to open the Install Host window.

- Click OK to reinstall the host.


6.5.11. Customizing Hosts with Tags

Procedure 6.10. Customizing hosts with tags

- Use the Hosts resource tab, tree mode, or the search function to find and select the host in the results list.

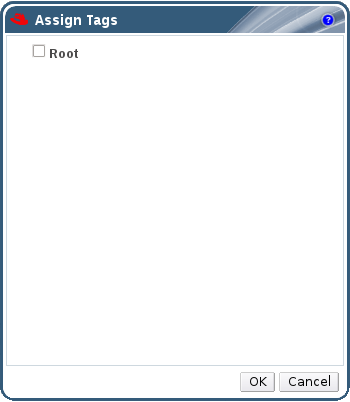

- Click Assign Tags to open the Assign Tags window.

Figure 6.1. Assign Tags Window

- The Assign Tags window lists all available tags. Select the check boxes of applicable tags.

- Click OK to assign the tags and close the window.

6.5.12. Viewing Host Errata

Procedure 6.11. Viewing Host Errata

- Click the Hosts resource tab, and select a host from the results list.

- Click the General tab in the details pane.

- Click the Errata sub-tab in the General tab.

6.5.13. Viewing the Health Status of a Host

- OK: No icon

- Info:

- Warning:

- Error:

- Failure:

In the REST API, a GET request on a host includes the external_status element, which contains the health status.

The health status can also be set through the REST API events collection. For more information, see Adding Events in the REST API Guide.
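The external_status element can be read with any HTTP client. The sketch below assumes several placeholders: a REST API entry point at https://ENGINE_FQDN/api, an admin@internal account, and the Manager's CA certificate saved locally as ca.pem. The exact XML shape of the element is also an assumption. For that reason the hypothetical helper accepts either a bare value or a value wrapped in a state child. The helper only parses XML from standard input, so it can be tried without a Manager.

```shell
# host_external_status
# Hypothetical helper: reads a host's XML representation on stdin and
# prints the value of its external_status element (for example ok,
# info, warning, error, or failure). Accepts both
#   <external_status>ok</external_status>
# and
#   <external_status><state>ok</state></external_status>
# Prints nothing if the element is absent.
host_external_status() {
    # Join all lines so the element can be matched even when it spans
    # several lines, then capture the first lowercase word inside it.
    tr -d '\n' |
        sed -n 's/.*<external_status>[[:space:]]*\(<state>\)\{0,1\}\([a-z]*\).*/\2/p'
}

# Example (ENGINE_FQDN, HOST_ID, PASSWORD and ca.pem are placeholders):
#   curl -s --cacert ca.pem -u 'admin@internal:PASSWORD' \
#        -H 'Accept: application/xml' \
#        "https://ENGINE_FQDN/api/hosts/HOST_ID" | host_external_status
```

A regular-expression extract like this is adequate for a quick check. For anything beyond that, a real XML parser is the safer choice.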

6.5.14. Viewing Host Devices

Procedure 6.12. Viewing Host Devices

- Use the Hosts resource tab, tree mode, or the search function to find and select a host from the results list.

- Click the Host Devices tab in the details pane.

6.5.15. Preparing Host and Guest Systems for GPU Passthrough

Both the host and the guest virtual machine require changes to their grub configuration files. Both machines also require a reboot for the changes to take effect.

Procedure 6.13. Preparing a Host for GPU Passthrough

- Log in to the host server and find the device vendor ID:product ID. In this example, the IDs are 10de:13ba and 10de:0fbc.

```
# lspci -nn
...
01:00.0 VGA compatible controller [0300]: NVIDIA Corporation GM107GL [Quadro K2200] [10de:13ba] (rev a2)
01:00.1 Audio device [0403]: NVIDIA Corporation Device [10de:0fbc] (rev a1)
...
```

- Edit the grub configuration file and append pci-stub.ids=xxxx:xxxx to the end of the GRUB_CMDLINE_LINUX line.

```
# vi /etc/default/grub
...
GRUB_CMDLINE_LINUX="nofb splash=quiet console=tty0 ... pci-stub.ids=10de:13ba,10de:0fbc"
...
```

Blacklist the corresponding drivers on the host. In this example, the nouveau driver for NVIDIA GPUs is blacklisted by a further addition to the GRUB_CMDLINE_LINUX line.

```
# vi /etc/default/grub
...
GRUB_CMDLINE_LINUX="nofb splash=quiet console=tty0 ... pci-stub.ids=10de:13ba,10de:0fbc rdblacklist=nouveau"
...
```

Save the grub configuration file.

- Refresh the grub.cfg file and reboot the server for these changes to take effect:

```
# grub2-mkconfig -o /boot/grub2/grub.cfg
# reboot
```

- Confirm the device is bound to the pci-stub driver with the lspci command.
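The host-side steps above can also be scripted. The following POSIX shell sketch is illustrative only, and the helper names pci_ids_for_slot, add_kernel_args, and bound_driver are hypothetical. It makes several assumptions:

- `lspci -nn` output arrives on standard input.
- Only an existing GRUB_CMDLINE_LINUX line is rewritten.
- The binding check relies on the standard sysfs layout /sys/bus/pci/devices/DOMAIN:BUS:DEVICE.FUNCTION/driver.

Try it on a copy of /etc/default/grub before touching the real file.

```shell
# pci_ids_for_slot BUS:DEVICE
# Reads `lspci -nn` output on stdin and prints the vendor:product IDs of
# every function in that slot, comma-separated. Takes the last [xxxx:xxxx]
# token on each matching line.
pci_ids_for_slot() {
    sed -n 's/^'"$1"'\..* \[\([0-9a-f]\{4\}:[0-9a-f]\{4\}\)].*/\1/p' |
        paste -s -d ',' -
}

# add_kernel_args FILE ARG...
# Appends each ARG to the GRUB_CMDLINE_LINUX="..." line of FILE unless the
# same word is already there. Other lines are left untouched.
add_kernel_args() (
    file=$1
    shift
    grep -q '^GRUB_CMDLINE_LINUX="' "$file" || {
        echo "no GRUB_CMDLINE_LINUX line in $file" >&2
        exit 1
    }
    current=$(sed -n 's/^GRUB_CMDLINE_LINUX="\(.*\)"$/\1/p' "$file")
    for arg in "$@"; do
        case " $current " in
            *" $arg "*) ;;                          # already present
            *) current=${current:+$current }$arg ;;
        esac
    done
    # Pass the new value through the environment so awk does not
    # interpret backslash escapes in it.
    NEW_CMDLINE=$current awk '
        /^GRUB_CMDLINE_LINUX="/ { print "GRUB_CMDLINE_LINUX=\"" ENVIRON["NEW_CMDLINE"] "\""; next }
        { print }
    ' "$file" > "$file.tmp" || exit 1
    # Copy back with cat to keep the original file's owner and mode.
    cat "$file.tmp" > "$file" && rm -f "$file.tmp"
)

# bound_driver DOMAIN:BUS:DEVICE.FUNCTION [SYSFS_ROOT]
# Prints the kernel driver bound to a PCI function, read from the sysfs
# "driver" symlink. Prints nothing if no driver is bound.
bound_driver() (
    link=${2:-/sys}/bus/pci/devices/$1/driver
    [ -L "$link" ] || exit 0
    target=$(readlink "$link") || exit 1
    basename "$target"
)

# Example on the host, using the slot from the lspci example above:
#   lspci -nn | pci_ids_for_slot 01:00
#   cp /etc/default/grub /root/grub.test
#   add_kernel_args /root/grub.test pci-stub.ids=10de:13ba,10de:0fbc rdblacklist=nouveau
#   bound_driver 0000:01:00.0     # expected to print pci-stub after the reboot
```

Because add_kernel_args skips words that are already present, you can rerun it without duplicating arguments on the kernel command line.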

Procedure 6.14. Preparing a Guest Virtual Machine for GPU Passthrough

- For Linux

- Only proprietary GPU drivers are supported. Blacklist the corresponding open source driver in the grub configuration file. For example:

```
# vi /etc/default/grub
...
GRUB_CMDLINE_LINUX="nofb splash=quiet console=tty0 ... rdblacklist=nouveau"
...
```

- Locate the GPU BusID. In this example, the BusID is 00:09.0.

```
# lspci | grep VGA
00:09.0 VGA compatible controller: NVIDIA Corporation GK106GL [Quadro K4000] (rev a1)
```

- Edit the /etc/X11/xorg.conf file and append the following content:

- Restart the virtual machine.

- For Windows

- Download and install the corresponding drivers for the device. For example, for Nvidia drivers, go to NVIDIA Driver Downloads.

- Restart the virtual machine.
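lspci prints the bus, device, and function numbers in hexadecimal. The BusID entry in xorg.conf is written as PCI:bus:device:function in decimal, so the two forms only look identical for small numbers such as 00:09.0. The sketch below converts the address and prints a minimal Device section. The helper names, the Identifier value Device0, and the default driver name nvidia (NVIDIA's proprietary X driver) are assumptions to adapt. This is not the exact xorg.conf content this guide intended for the Linux guest.

```shell
# to_xorg_busid [DOMAIN:]BUS:DEVICE.FUNCTION
# Converts an lspci address (hexadecimal) into an xorg.conf BusID
# (decimal), e.g. 00:09.0 becomes PCI:0:9:0. A PCI domain prefix is
# dropped, so this assumes domain 0.
to_xorg_busid() (
    addr=$1
    case $addr in
        *:*:*) addr=${addr#*:} ;;   # strip a "0000:" style domain prefix
    esac
    bus=${addr%%:*}
    rest=${addr#*:}
    dev=${rest%%.*}
    fn=${rest#*.}
    # The 0x prefix makes printf read each field as hexadecimal.
    printf 'PCI:%d:%d:%d\n' "0x$bus" "0x$dev" "0x$fn"
)

# xorg_device_section ADDRESS [DRIVER]
# Prints a minimal Device section for the GPU at ADDRESS.
# DRIVER defaults to "nvidia" (an assumption for NVIDIA's proprietary driver).
xorg_device_section() (
    busid=$(to_xorg_busid "$1") || exit 1
    printf 'Section "Device"\n'
    printf '    Identifier "Device0"\n'
    printf '    Driver     "%s"\n' "${2:-nvidia}"
    printf '    BusID      "%s"\n' "$busid"
    printf 'EndSection\n'
)

# Example inside the guest:
#   xorg_device_section "$(lspci | awk '/VGA/ { print $1; exit }')" >> /etc/X11/xorg.conf
```

For the guest in the example above, 00:09.0 converts to PCI:0:9:0. An address such as 0a:1f.1 would become PCI:10:31:1.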