Questo contenuto non è disponibile nella lingua selezionata.

Chapter 2. Working with pods

2.1. Using pods

A pod is one or more containers deployed together on one host, and the smallest compute unit that can be defined, deployed, and managed.

2.1.1. Understanding pods

Pods are the rough equivalent of a machine instance (physical or virtual) to a Container. Each pod is allocated its own internal IP address, therefore owning its entire port space, and containers within pods can share their local storage and networking.

Pods have a lifecycle; they are defined, then they are assigned to run on a node, then they run until their container(s) exit or they are removed for some other reason. Pods, depending on policy and exit code, might be removed after exiting, or can be retained to enable access to the logs of their containers.

OpenShift Container Platform treats pods as largely immutable; changes cannot be made to a pod definition while it is running. OpenShift Container Platform implements changes by terminating an existing pod and recreating it with modified configuration, base image(s), or both. Pods are also treated as expendable, and do not maintain state when recreated. Therefore pods should usually be managed by higher-level controllers, rather than directly by users.

For the maximum number of pods per OpenShift Container Platform node host, see the Cluster Limits.

Bare pods that are not managed by a replication controller will be not rescheduled upon node disruption.

2.1.2. Example pod configurations

OpenShift Container Platform leverages the Kubernetes concept of a pod, which is one or more containers deployed together on one host, and the smallest compute unit that can be defined, deployed, and managed.

The following is an example definition of a pod. It demonstrates many features of pods, most of which are discussed in other topics and thus only briefly mentioned here:

Pod object definition (YAML)

- 1

- Pods can be "tagged" with one or more labels, which can then be used to select and manage groups of pods in a single operation. The labels are stored in key/value format in the

metadatahash. - 2

- The pod restart policy with possible values

Always,OnFailure, andNever. The default value isAlways. - 3

- OpenShift Container Platform defines a security context for containers which specifies whether they are allowed to run as privileged containers, run as a user of their choice, and more. The default context is very restrictive but administrators can modify this as needed.

- 4

containersspecifies an array of one or more container definitions.- 5

- The container specifies where external storage volumes are mounted within the container.

- 6

- Specify the volumes to provide for the pod. Volumes mount at the specified path. Do not mount to the container root,

/, or any path that is the same in the host and the container. This can corrupt your host system if the container is sufficiently privileged, such as the host/dev/ptsfiles. It is safe to mount the host by using/host. - 7

- Each container in the pod is instantiated from its own container image.

- 8

- The pod defines storage volumes that are available to its container(s) to use.

If you attach persistent volumes that have high file counts to pods, those pods can fail or can take a long time to start. For more information, see When using Persistent Volumes with high file counts in OpenShift, why do pods fail to start or take an excessive amount of time to achieve "Ready" state?.

This pod definition does not include attributes that are filled by OpenShift Container Platform automatically after the pod is created and its lifecycle begins. The Kubernetes pod documentation has details about the functionality and purpose of pods.

2.1.3. Understanding resource requests and limits

You can specify CPU and memory requests and limits for pods by using a pod spec, as shown in "Example pod configurations", or the specification for the controlling object of the pod.

CPU and memory requests specify the minimum amount of a resource that a pod needs to run, helping OpenShift Container Platform to schedule pods on nodes with sufficient resources.

CPU and memory limits define the maximum amount of a resource that a pod can consume, preventing the pod from consuming excessive resources and potentially impacting other pods on the same node.

CPU and memory requests and limits are processed by using the following principles:

CPU limits are enforced by using CPU throttling. When a container approaches its CPU limit, the kernel restricts access to the CPU specified as the container’s limit. As such, a CPU limit is a hard limit that the kernel enforces. OpenShift Container Platform can allow a container to exceed its CPU limit for extended periods of time. However, container runtimes do not terminate pods or containers for excessive CPU usage.

CPU limits and requests are measured in CPU units. One CPU unit is equivalent to 1 physical CPU core or 1 virtual core, depending on whether the node is a physical host or a virtual machine running inside a physical machine. Fractional requests are allowed. For example, when you define a container with a CPU request of

0.5, you are requesting half as much CPU time than if you asked for1.0CPU. For CPU units,0.1is equivalent to the100m, which can be read as one hundred millicpu or one hundred millicores. A CPU resource is always an absolute amount of resource, and is never a relative amount.NoteBy default, the smallest amount of CPU that can be allocated to a pod is 10 mCPU. You can request resource limits lower than 10 mCPU in a pod spec. However, the pod would still be allocated 10 mCPU.

Memory limits are enforced by the kernel by using out of memory (OOM) kills. When a container uses more than its memory limit, the kernel can terminate that container. However, terminations happen only when the kernel detects memory pressure. As such, a container that over allocates memory might not be immediately killed. This means memory limits are enforced reactively. A container can use more memory than its memory limit. If it does, the container can get killed.

You can express memory as a plain integer or as a fixed-point number by using one of these quantity suffixes:

E,P,T,G,M, ork. You can also use the power-of-two equivalents:Ei,Pi,Ti,Gi,Mi, orKi.

If the node where a pod is running has enough of a resource available, it is possible for a container to use more CPU or memory resources than it requested. However, the container cannot exceed the corresponding limit. For example, if you set a container memory request of 256 MiB, and that container is in a pod scheduled to a node with 8GiB of memory and no other pods, the container can try to use more memory than the requested 256 MiB.

This behavior does not apply to CPU and memory limits. These limits are applied by the kubelet and the container runtime, and are enforced by the kernel. On Linux nodes, the kernel enforces limits by using cgroups.

For Linux workloads, you can specify huge page resources. Huge pages are a Linux-specific feature where the node kernel allocates blocks of memory that are much larger than the default page size. For example, on a system where the default page size is 4KiB, you could specify a higher limit. For more information on huge pages, see "Huge pages".

2.2. Viewing pods

As an administrator, you can view cluster pods, check their health, and evaluate the overall health of the cluster. You can also view a list of pods associated with a specific project or view usage statistics about pods. Regularly viewing pods can help you detect problems early, track resource usage, and ensure cluster stability.

2.2.1. Viewing pods in a project

You can display pod usage statistics, such as CPU, memory, and storage consumption, to monitor container runtime environments and ensure efficient resource use.

Procedure

Change to the project by entering the following command:

oc project <project_name>

$ oc project <project_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Obtain a list of pods by entering the following command:

oc get pods

$ oc get podsCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME READY STATUS RESTARTS AGE console-698d866b78-bnshf 1/1 Running 2 165m console-698d866b78-m87pm 1/1 Running 2 165m

NAME READY STATUS RESTARTS AGE console-698d866b78-bnshf 1/1 Running 2 165m console-698d866b78-m87pm 1/1 Running 2 165mCopy to Clipboard Copied! Toggle word wrap Toggle overflow Optional: Add the

-o wideflags to view the pod IP address and the node where the pod is located. For example:oc get pods -o wide

$ oc get pods -o wideCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE console-698d866b78-bnshf 1/1 Running 2 166m 10.128.0.24 ip-10-0-152-71.ec2.internal <none> console-698d866b78-m87pm 1/1 Running 2 166m 10.129.0.23 ip-10-0-173-237.ec2.internal <none>

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE console-698d866b78-bnshf 1/1 Running 2 166m 10.128.0.24 ip-10-0-152-71.ec2.internal <none> console-698d866b78-m87pm 1/1 Running 2 166m 10.129.0.23 ip-10-0-173-237.ec2.internal <none>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.2.2. Describing a pod

To troubleshoot pod issues and view detailed information about a pod in OpenShift Container Platform, you can describe a pod using the oc describe pod command. The Events section in the output provides detailed information about the pod and the containers inside of it.

Procedure

Describe a pod by running the following command:

oc describe pod -n <namespace> busybox-1

$ oc describe pod -n <namespace> busybox-1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.2.3. Viewing pod usage statistics

You can display usage statistics about pods, which provide the runtime environments for containers. These usage statistics include CPU, memory, and storage consumption.

Prerequisites

-

You must have

cluster-readerpermission to view the usage statistics. - Metrics must be installed to view the usage statistics.

Procedure

View the usage statistics by entering the following command:

oc adm top pods -n <namespace>

$ oc adm top pods -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME CPU(cores) MEMORY(bytes) console-7f58c69899-q8c8k 0m 22Mi console-7f58c69899-xhbgg 0m 25Mi downloads-594fcccf94-bcxk8 3m 18Mi downloads-594fcccf94-kv4p6 2m 15Mi

NAME CPU(cores) MEMORY(bytes) console-7f58c69899-q8c8k 0m 22Mi console-7f58c69899-xhbgg 0m 25Mi downloads-594fcccf94-bcxk8 3m 18Mi downloads-594fcccf94-kv4p6 2m 15MiCopy to Clipboard Copied! Toggle word wrap Toggle overflow Optional: Add the

--selector=''label to view usage statistics for pods with labels. Note that you must choose the label query to filter on, such as=,==, or!=. For example:oc adm top pod --selector='<pod_name>'

$ oc adm top pod --selector='<pod_name>'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.2.4. Viewing resource logs

You can view logs for resources in the OpenShift CLI (oc) or web console. Logs display from the end (or tail) by default. Viewing logs for resources can help you troubleshoot issues and monitor resource behavior.

2.2.4.1. Viewing resource logs by using the web console

Use the following procedure to view resource logs by using the OpenShift Container Platform web console.

Procedure

In the OpenShift Container Platform console, navigate to Workloads

Pods or navigate to the pod through the resource you want to investigate. NoteSome resources, such as builds, do not have pods to query directly. In such instances, you can locate the Logs link on the Details page for the resource.

- Select a project from the drop-down menu.

- Click the name of the pod you want to investigate.

- Click Logs.

2.2.4.2. Viewing resource logs by using the CLI

Use the following procedure to view resource logs by using the command-line interface (CLI).

Prerequisites

-

Access to the OpenShift CLI (

oc).

Procedure

View the log for a specific pod by entering the following command:

oc logs -f <pod_name> -c <container_name>

$ oc logs -f <pod_name> -c <container_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow where:

-f- Optional: Specifies that the output follows what is being written into the logs.

<pod_name>- Specifies the name of the pod.

<container_name>- Optional: Specifies the name of a container. When a pod has more than one container, you must specify the container name.

For example:

oc logs -f ruby-57f7f4855b-znl92 -c ruby

$ oc logs -f ruby-57f7f4855b-znl92 -c rubyCopy to Clipboard Copied! Toggle word wrap Toggle overflow View the log for a specific resource by entering the following command:

oc logs <object_type>/<resource_name>

$ oc logs <object_type>/<resource_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow For example:

oc logs deployment/ruby

$ oc logs deployment/rubyCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3. Configuring an OpenShift Container Platform cluster for pods

As an administrator, you can create and maintain an efficient cluster for pods.

By keeping your cluster efficient, you can provide a better environment for your developers using such tools as what a pod does when it exits, ensuring that the required number of pods is always running, when to restart pods designed to run only once, limit the bandwidth available to pods, and how to keep pods running during disruptions.

2.3.1. Configuring how pods behave after restart

A pod restart policy determines how OpenShift Container Platform responds when Containers in that pod exit. The policy applies to all Containers in that pod.

The possible values are:

-

Always- Tries restarting a successfully exited Container on the pod continuously, with an exponential back-off delay (10s, 20s, 40s) capped at 5 minutes. The default isAlways. -

OnFailure- Tries restarting a failed Container on the pod with an exponential back-off delay (10s, 20s, 40s) capped at 5 minutes. -

Never- Does not try to restart exited or failed Containers on the pod. Pods immediately fail and exit.

After the pod is bound to a node, the pod will never be bound to another node. This means that a controller is necessary in order for a pod to survive node failure:

| Condition | Controller Type | Restart Policy |

|---|---|---|

| Pods that are expected to terminate (such as batch computations) | Job |

|

| Pods that are expected to not terminate (such as web servers) | Replication controller |

|

| Pods that must run one-per-machine | Daemon set | Any |

If a Container on a pod fails and the restart policy is set to OnFailure, the pod stays on the node and the Container is restarted. If you do not want the Container to restart, use a restart policy of Never.

If an entire pod fails, OpenShift Container Platform starts a new pod. Developers must address the possibility that applications might be restarted in a new pod. In particular, applications must handle temporary files, locks, incomplete output, and so forth caused by previous runs.

Kubernetes architecture expects reliable endpoints from cloud providers. When a cloud provider is down, the kubelet prevents OpenShift Container Platform from restarting.

If the underlying cloud provider endpoints are not reliable, do not install a cluster using cloud provider integration. Install the cluster as if it was in a no-cloud environment. It is not recommended to toggle cloud provider integration on or off in an installed cluster.

For details on how OpenShift Container Platform uses restart policy with failed Containers, see the Example States in the Kubernetes documentation.

2.3.2. Limiting the bandwidth available to pods

You can apply quality-of-service traffic shaping to a pod and effectively limit its available bandwidth. Egress traffic (from the pod) is handled by policing, which simply drops packets in excess of the configured rate. Ingress traffic (to the pod) is handled by shaping queued packets to effectively handle data. The limits you place on a pod do not affect the bandwidth of other pods.

Procedure

To limit the bandwidth on a pod:

Write an object definition JSON file, and specify the data traffic speed using

kubernetes.io/ingress-bandwidthandkubernetes.io/egress-bandwidthannotations. For example, to limit both pod egress and ingress bandwidth to 10M/s:Limited

Podobject definitionCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create the pod using the object definition:

oc create -f <file_or_dir_path>

$ oc create -f <file_or_dir_path>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3.3. Understanding how to use pod disruption budgets to specify the number of pods that must be up

A pod disruption budget allows the specification of safety constraints on pods during operations, such as draining a node for maintenance.

PodDisruptionBudget is an API object that specifies the minimum number or percentage of replicas that must be up at a time. Setting these in projects can be helpful during node maintenance (such as scaling a cluster down or a cluster upgrade) and is only honored on voluntary evictions (not on node failures).

A PodDisruptionBudget object’s configuration consists of the following key parts:

- A label selector, which is a label query over a set of pods.

An availability level, which specifies the minimum number of pods that must be available simultaneously, either:

-

minAvailableis the number of pods must always be available, even during a disruption. -

maxUnavailableis the number of pods can be unavailable during a disruption.

-

Available refers to the number of pods that has condition Ready=True. Ready=True refers to the pod that is able to serve requests and should be added to the load balancing pools of all matching services.

A maxUnavailable of 0% or 0 or a minAvailable of 100% or equal to the number of replicas is permitted but can block nodes from being drained.

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

You can check for pod disruption budgets across all projects with the following:

oc get poddisruptionbudget --all-namespaces

$ oc get poddisruptionbudget --all-namespacesThe following example contains some values that are specific to OpenShift Container Platform on AWS.

Example output

The PodDisruptionBudget is considered healthy when there are at least minAvailable pods running in the system. Every pod above that limit can be evicted.

Depending on your pod priority and preemption settings, lower-priority pods might be removed despite their pod disruption budget requirements.

2.3.3.1. Specifying the number of pods that must be up with pod disruption budgets

You can use a PodDisruptionBudget object to specify the minimum number or percentage of replicas that must be up at a time.

Procedure

To configure a pod disruption budget:

Create a YAML file with the an object definition similar to the following:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

PodDisruptionBudgetis part of thepolicy/v1API group.- 2

- The minimum number of pods that must be available simultaneously. This can be either an integer or a string specifying a percentage, for example,

20%. - 3

- A label query over a set of resources. The result of

matchLabelsandmatchExpressionsare logically conjoined. Leave this parameter blank, for exampleselector {}, to select all pods in the project.

Or:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

PodDisruptionBudgetis part of thepolicy/v1API group.- 2

- The maximum number of pods that can be unavailable simultaneously. This can be either an integer or a string specifying a percentage, for example,

20%. - 3

- A label query over a set of resources. The result of

matchLabelsandmatchExpressionsare logically conjoined. Leave this parameter blank, for exampleselector {}, to select all pods in the project.

Run the following command to add the object to project:

oc create -f </path/to/file> -n <project_name>

$ oc create -f </path/to/file> -n <project_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3.3.2. Specifying the eviction policy for unhealthy pods

When you use pod disruption budgets (PDBs) to specify how many pods must be available simultaneously, you can also define the criteria for how unhealthy pods are considered for eviction.

You can choose one of the following policies:

- IfHealthyBudget

- Running pods that are not yet healthy can be evicted only if the guarded application is not disrupted.

- AlwaysAllow

Running pods that are not yet healthy can be evicted regardless of whether the criteria in the pod disruption budget is met. This policy can help evict malfunctioning applications, such as ones with pods stuck in the

CrashLoopBackOffstate or failing to report theReadystatus.NoteIt is recommended to set the

unhealthyPodEvictionPolicyfield toAlwaysAllowin thePodDisruptionBudgetobject to support the eviction of misbehaving applications during a node drain. The default behavior is to wait for the application pods to become healthy before the drain can proceed.

Procedure

Create a YAML file that defines a

PodDisruptionBudgetobject and specify the unhealthy pod eviction policy:Example

pod-disruption-budget.yamlfileCopy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Choose either

IfHealthyBudgetorAlwaysAllowas the unhealthy pod eviction policy. The default isIfHealthyBudgetwhen theunhealthyPodEvictionPolicyfield is empty.

Create the

PodDisruptionBudgetobject by running the following command:oc create -f pod-disruption-budget.yaml

$ oc create -f pod-disruption-budget.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

With a PDB that has the AlwaysAllow unhealthy pod eviction policy set, you can now drain nodes and evict the pods for a malfunctioning application guarded by this PDB.

2.3.4. Preventing pod removal using critical pods

There are a number of core components that are critical to a fully functional cluster, but, run on a regular cluster node rather than the master. A cluster might stop working properly if a critical add-on is evicted.

Pods marked as critical are not allowed to be evicted.

Procedure

To make a pod critical:

Create a

Podspec or edit existing pods to include thesystem-cluster-criticalpriority class:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Default priority class for pods that should never be evicted from a node.

Alternatively, you can specify

system-node-criticalfor pods that are important to the cluster but can be removed if necessary.Create the pod:

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3.5. Reducing pod timeouts when using persistent volumes with high file counts

If a storage volume contains many files (~1,000,000 or greater), you might experience pod timeouts.

This can occur because, when volumes are mounted, OpenShift Container Platform recursively changes the ownership and permissions of the contents of each volume in order to match the fsGroup specified in a pod’s securityContext. For large volumes, checking and changing the ownership and permissions can be time consuming, resulting in a very slow pod startup.

You can reduce this delay by applying one of the following workarounds:

- Use a security context constraint (SCC) to skip the SELinux relabeling for a volume.

-

Use the

fsGroupChangePolicyfield inside an SCC to control the way that OpenShift Container Platform checks and manages ownership and permissions for a volume. - Use the Cluster Resource Override Operator to automatically apply an SCC to skip the SELinux relabeling.

- Use a runtime class to skip the SELinux relabeling for a volume.

For information, see When using Persistent Volumes with high file counts in OpenShift, why do pods fail to start or take an excessive amount of time to achieve "Ready" state?.

2.4. Automatically scaling pods with the horizontal pod autoscaler

As a developer, you can use a horizontal pod autoscaler (HPA) to specify how OpenShift Container Platform should automatically increase or decrease the scale of a replication controller or deployment configuration, based on metrics collected from the pods that belong to that replication controller or deployment configuration. You can create an HPA for any deployment, deployment config, replica set, replication controller, or stateful set.

For information on scaling pods based on custom metrics, see Automatically scaling pods based on custom metrics.

It is recommended to use a Deployment object or ReplicaSet object unless you need a specific feature or behavior provided by other objects. For more information on these objects, see Understanding deployments.

2.4.1. Understanding horizontal pod autoscalers

You can create a horizontal pod autoscaler to specify the minimum and maximum number of pods you want to run, and the CPU usage or memory usage your pods should target.

After you create a horizontal pod autoscaler, OpenShift Container Platform begins to query the CPU, memory, or both resource metrics on the pods. When these metrics are available, the horizontal pod autoscaler computes the ratio of the current metric use with the intended metric use, and scales up or down as needed. The query and scaling occurs at a regular interval, but can take one to two minutes before metrics become available.

For replication controllers, this scaling corresponds directly to the replicas of the replication controller. For deployment, scaling corresponds directly to the replica count of the deployment. Note that autoscaling applies only to the latest deployment in the Complete phase.

OpenShift Container Platform automatically accounts for resources and prevents unnecessary autoscaling during resource spikes, such as during start up. Pods in the unready state have 0 CPU usage when scaling up and the autoscaler ignores the pods when scaling down. Pods without known metrics have 0% CPU usage when scaling up and 100% CPU when scaling down. This allows for more stability during the HPA decision. To use this feature, you must configure readiness checks to determine if a new pod is ready for use.

To use horizontal pod autoscalers, your cluster administrator must have properly configured cluster metrics.

The following metrics are supported by horizontal pod autoscalers:

| Metric | Description | API version |

|---|---|---|

| CPU utilization | Number of CPU cores used. You can use this to calculate a percentage of the pod’s requested CPU. |

|

| Memory utilization | Amount of memory used. You can use this to calculate a percentage of the pod’s requested memory. |

|

For memory-based autoscaling, memory usage must increase and decrease proportionally to the replica count. On average:

- An increase in replica count must lead to an overall decrease in memory (working set) usage per-pod.

- A decrease in replica count must lead to an overall increase in per-pod memory usage.

Use the OpenShift Container Platform web console to check the memory behavior of your application and ensure that your application meets these requirements before using memory-based autoscaling.

The following example shows autoscaling for the hello-node Deployment object. The initial deployment requires 3 pods. The HPA object increases the minimum to 5. If CPU usage on the pods reaches 75%, the pods increase to 7:

oc autoscale deployment/hello-node --min=5 --max=7 --cpu-percent=75

$ oc autoscale deployment/hello-node --min=5 --max=7 --cpu-percent=75Example output

horizontalpodautoscaler.autoscaling/hello-node autoscaled

horizontalpodautoscaler.autoscaling/hello-node autoscaledSample YAML to create an HPA for the hello-node deployment object with minReplicas set to 3

After you create the HPA, you can view the new state of the deployment by running the following command:

oc get deployment hello-node

$ oc get deployment hello-nodeThere are now 5 pods in the deployment:

Example output

NAME REVISION DESIRED CURRENT TRIGGERED BY hello-node 1 5 5 config

NAME REVISION DESIRED CURRENT TRIGGERED BY

hello-node 1 5 5 config2.4.2. How does the HPA work?

The horizontal pod autoscaler (HPA) extends the concept of pod auto-scaling. The HPA lets you create and manage a group of load-balanced nodes. The HPA automatically increases or decreases the number of pods when a given CPU or memory threshold is crossed.

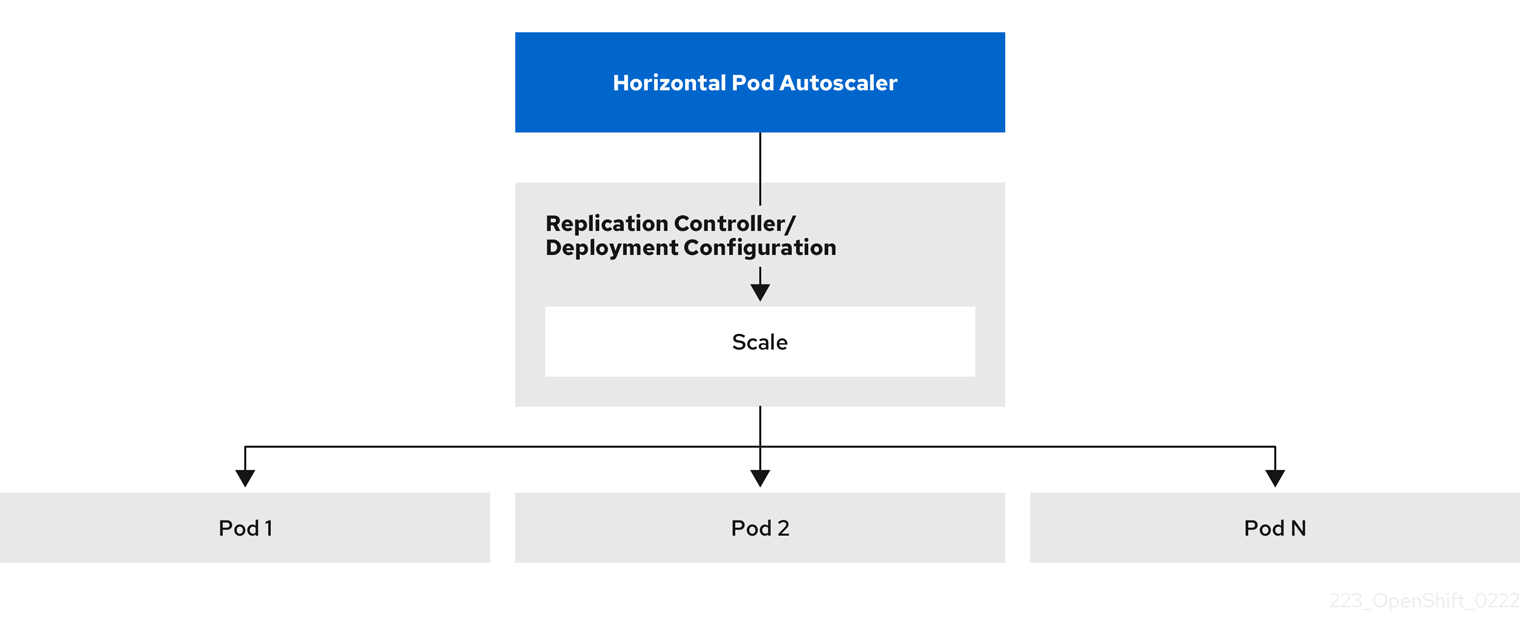

Figure 2.1. High level workflow of the HPA

The HPA is an API resource in the Kubernetes autoscaling API group. The autoscaler works as a control loop with a default of 15 seconds for the sync period. During this period, the controller manager queries the CPU, memory utilization, or both, against what is defined in the YAML file for the HPA. The controller manager obtains the utilization metrics from the resource metrics API for per-pod resource metrics like CPU or memory, for each pod that is targeted by the HPA.

If a utilization value target is set, the controller calculates the utilization value as a percentage of the equivalent resource request on the containers in each pod. The controller then takes the average of utilization across all targeted pods and produces a ratio that is used to scale the number of desired replicas. The HPA is configured to fetch metrics from metrics.k8s.io, which is provided by the metrics server. Because of the dynamic nature of metrics evaluation, the number of replicas can fluctuate during scaling for a group of replicas.

To implement the HPA, all targeted pods must have a resource request set on their containers.

2.4.3. About requests and limits

The scheduler uses the resource request that you specify for containers in a pod, to decide which node to place the pod on. The kubelet enforces the resource limit that you specify for a container to ensure that the container is not allowed to use more than the specified limit. The kubelet also reserves the request amount of that system resource specifically for that container to use.

How to use resource metrics?

In the pod specifications, you must specify the resource requests, such as CPU and memory. The HPA uses this specification to determine the resource utilization and then scales the target up or down.

For example, the HPA object uses the following metric source:

In this example, the HPA keeps the average utilization of the pods in the scaling target at 60%. Utilization is the ratio between the current resource usage to the requested resource of the pod.

2.4.4. Best practices

For optimal performance, configure resource requests for all pods. To prevent frequent replica fluctuations, configure the cooldown period.

- All pods must have resource requests configured

- The HPA makes a scaling decision based on the observed CPU or memory usage values of pods in an OpenShift Container Platform cluster. Utilization values are calculated as a percentage of the resource requests of each pod. Missing resource request values can affect the optimal performance of the HPA.

For more information, see "Understanding resource requests and limits".

- Configure the cool down period

-

During horizontal pod autoscaling, there might be a rapid scaling of events without a time gap. Configure the cool down period to prevent frequent replica fluctuations. You can specify a cool down period by configuring the

stabilizationWindowSecondsfield. The stabilization window is used to restrict the fluctuation of replicas count when the metrics used for scaling keep fluctuating. The autoscaling algorithm uses this window to infer a previous required state and avoid unwanted changes to workload scale.

For example, a stabilization window is specified for the scaleDown field:

behavior:

scaleDown:

stabilizationWindowSeconds: 300

behavior:

scaleDown:

stabilizationWindowSeconds: 300In the previous example, all intended states for the past 5 minutes are considered. This approximates a rolling maximum, and avoids having the scaling algorithm often remove pods only to trigger recreating an equal pod just moments later.

For more information, see "Scaling policies".

2.4.4.1. Scaling policies

Use the autoscaling/v2 API to add scaling policies to a horizontal pod autoscaler. A scaling policy controls how the OpenShift Container Platform horizontal pod autoscaler (HPA) scales pods. Use scaling policies to restrict the rate that HPAs scale pods up or down by setting a specific number or specific percentage to scale in a specified period of time. You can also define a stabilization window, which uses previously computed required states to control scaling if the metrics are fluctuating. You can create multiple policies for the same scaling direction, and determine the policy to use, based on the amount of change. You can also restrict the scaling by timed iterations. The HPA scales pods during an iteration, then performs scaling, as needed, in further iterations.

Sample HPA object with a scaling policy

- 1

- Specifies the direction for the scaling policy, either

scaleDownorscaleUp. This example creates a policy for scaling down. - 2

- Defines the scaling policy.

- 3

- Determines if the policy scales by a specific number of pods or a percentage of pods during each iteration. The default value is

pods. - 4

- Limits the amount of scaling, either the number of pods or percentage of pods, during each iteration. There is no default value for scaling down by number of pods.

- 5

- Determines the length of a scaling iteration. The default value is

15seconds. - 6

- The default value for scaling down by percentage is 100%.

- 7

- Determines the policy to use first, if multiple policies are defined. Specify

Maxto use the policy that allows the highest amount of change,Minto use the policy that allows the lowest amount of change, orDisabledto prevent the HPA from scaling in that policy direction. The default value isMax. - 8

- Determines the time period the HPA reviews the required states. The default value is

0. - 9

- This example creates a policy for scaling up.

- 10

- Limits the amount of scaling up by the number of pods. The default value for scaling up the number of pods is 4%.

- 11

- Limits the amount of scaling up by the percentage of pods. The default value for scaling up by percentage is 100%.

Example policy for scaling down

In this example, when the number of pods is greater than 40, the percent-based policy is used for scaling down, as that policy results in a larger change, as required by the selectPolicy.

If there are 80 pod replicas, in the first iteration the HPA reduces the pods by 8, which is 10% of the 80 pods (based on the type: Percent and value: 10 parameters), over one minute (periodSeconds: 60). For the next iteration, the number of pods is 72. The HPA calculates that 10% of the remaining pods is 7.2, which it rounds up to 8 and scales down 8 pods. On each subsequent iteration, the number of pods to be scaled is re-calculated based on the number of remaining pods. When the number of pods falls to less than 40, the pods-based policy is applied, because the pod-based number is greater than the percent-based number. The HPA reduces 4 pods at a time (type: Pods and value: 4), over 30 seconds (periodSeconds: 30), until there are 20 replicas remaining (minReplicas).

The selectPolicy: Disabled parameter prevents the HPA from scaling up the pods. You can manually scale up by adjusting the number of replicas in the replica set or deployment set, if needed.

If set, you can view the scaling policy by using the oc edit command:

oc edit hpa hpa-resource-metrics-memory

$ oc edit hpa hpa-resource-metrics-memoryExample output

2.4.5. Creating a horizontal pod autoscaler by using the web console

From the web console, you can create a horizontal pod autoscaler (HPA) that specifies the minimum and maximum number of pods you want to run on a Deployment or DeploymentConfig object. You can also define the amount of CPU or memory usage that your pods should target.

An HPA cannot be added to deployments that are part of an Operator-backed service, Knative service, or Helm chart.

Procedure

To create an HPA in the web console:

- In the Topology view, click the node to reveal the side pane.

From the Actions drop-down list, select Add HorizontalPodAutoscaler to open the Add HorizontalPodAutoscaler form.

Figure 2.2. Add HorizontalPodAutoscaler

From the Add HorizontalPodAutoscaler form, define the name, minimum and maximum pod limits, the CPU and memory usage, and click Save.

NoteIf any of the values for CPU and memory usage are missing, a warning is displayed.

2.4.5.1. Editing a horizontal pod autoscaler by using the web console

From the web console, you can modify a horizontal pod autoscaler (HPA) that specifies the minimum and maximum number of pods you want to run on a Deployment or DeploymentConfig object. You can also define the amount of CPU or memory usage that your pods should target.

Procedure

- In the Topology view, click the node to reveal the side pane.

- From the Actions drop-down list, select Edit HorizontalPodAutoscaler to open the Edit Horizontal Pod Autoscaler form.

- From the Edit Horizontal Pod Autoscaler form, edit the minimum and maximum pod limits and the CPU and memory usage, and click Save.

While creating or editing the horizontal pod autoscaler in the web console, you can switch from Form view to YAML view.

2.4.5.2. Removing a horizontal pod autoscaler by using the web console

You can remove a horizontal pod autoscaler (HPA) in the web console.

Procedure

- In the Topology view, click the node to reveal the side panel.

- From the Actions drop-down list, select Remove HorizontalPodAutoscaler.

- In the confirmation window, click Remove to remove the HPA.

2.4.6. Creating a horizontal pod autoscaler by using the CLI

Using the OpenShift Container Platform CLI, you can create a horizontal pod autoscaler (HPA) to automatically scale an existing Deployment, DeploymentConfig, ReplicaSet, ReplicationController, or StatefulSet object. The HPA scales the pods associated with that object to maintain the CPU or memory resources that you specify.

You can autoscale based on CPU or memory use by specifying a percentage of resource usage or a specific value, as described in the following sections.

The HPA increases and decreases the number of replicas between the minimum and maximum numbers to maintain the specified resource use across all pods.

2.4.6.1. Creating a horizontal pod autoscaler for a percent of CPU use

Using the OpenShift Container Platform CLI, you can create a horizontal pod autoscaler (HPA) to automatically scale an existing object based on percent of CPU use. The HPA scales the pods associated with that object to maintain the CPU use that you specify.

When autoscaling for a percent of CPU use, you can use the oc autoscale command to specify the minimum and maximum number of pods that you want to run at any given time and the average CPU use your pods should target. If you do not specify a minimum, the pods are given default values from the OpenShift Container Platform server.

Use a Deployment object or ReplicaSet object unless you need a specific feature or behavior provided by other objects.

Prerequisites

To use horizontal pod autoscalers, your cluster administrator must have properly configured cluster metrics. You can use the oc describe PodMetrics <pod-name> command to determine if metrics are configured. If metrics are configured, the output appears similar to the following, with Cpu and Memory displayed under Usage.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internalExample output

Procedure

Create a

HorizontalPodAutoscalerobject for an existing object:oc autoscale <object_type>/<name> \ --min <number> \ --max <number> \ --cpu-percent=<percent>

$ oc autoscale <object_type>/<name> \1 --min <number> \2 --max <number> \3 --cpu-percent=<percent>4 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Specify the type and name of the object to autoscale. The object must exist and be a

Deployment,DeploymentConfig/dc,ReplicaSet/rs,ReplicationController/rc, orStatefulSet. - 2

- Optional: Specify the minimum number of replicas when scaling down.

- 3

- Specify the maximum number of replicas when scaling up.

- 4

- Specify the target average CPU use over all the pods, represented as a percent of requested CPU. If not specified or negative, a default autoscaling policy is used.

For example, the following command shows autoscaling for the

hello-nodedeployment object. The initial deployment requires 3 pods. The HPA object increases the minimum to 5. If CPU usage on the pods reaches 75%, the pods will increase to 7:oc autoscale deployment/hello-node --min=5 --max=7 --cpu-percent=75

$ oc autoscale deployment/hello-node --min=5 --max=7 --cpu-percent=75Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the horizontal pod autoscaler:

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

Ensure that the horizontal pod autoscaler was created:

oc get hpa cpu-autoscale

$ oc get hpa cpu-autoscaleCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE cpu-autoscale Deployment/example 173m/500m 1 10 1 20m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE cpu-autoscale Deployment/example 173m/500m 1 10 1 20mCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4.6.2. Creating a horizontal pod autoscaler for a specific CPU value

Using the OpenShift Container Platform CLI, you can create a horizontal pod autoscaler (HPA) to automatically scale an existing object based on a specific CPU value by creating a HorizontalPodAutoscaler object with the target CPU and pod limits. The HPA scales the pods associated with that object to maintain the CPU use that you specify.

Use a Deployment object or ReplicaSet object unless you need a specific feature or behavior provided by other objects.

Prerequisites

To use horizontal pod autoscalers, your cluster administrator must have properly configured cluster metrics. You can use the oc describe PodMetrics <pod-name> command to determine if metrics are configured. If metrics are configured, the output appears similar to the following, with Cpu and Memory displayed under Usage.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internalExample output

Procedure

Create a YAML file similar to the following for an existing object:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Use the

autoscaling/v2API. - 2

- Specify a name for this horizontal pod autoscaler object.

- 3

- Specify the API version of the object to scale:

-

For a

Deployment,ReplicaSet,Statefulsetobject, useapps/v1. -

For a

ReplicationController, usev1. -

For a

DeploymentConfig, useapps.openshift.io/v1.

-

For a

- 4

- Specify the type of object. The object must be a

Deployment,DeploymentConfig/dc,ReplicaSet/rs,ReplicationController/rc, orStatefulSet. - 5

- Specify the name of the object to scale. The object must exist.

- 6

- Specify the minimum number of replicas when scaling down.

- 7

- Specify the maximum number of replicas when scaling up.

- 8

- Use the

metricsparameter for memory use. - 9

- Specify

cpufor CPU usage. - 10

- Set to

AverageValue. - 11

- Set to

averageValuewith the targeted CPU value.

Create the horizontal pod autoscaler:

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

Check that the horizontal pod autoscaler was created:

oc get hpa cpu-autoscale

$ oc get hpa cpu-autoscaleCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE cpu-autoscale Deployment/example 173m/500m 1 10 1 20m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE cpu-autoscale Deployment/example 173m/500m 1 10 1 20mCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4.6.3. Creating a horizontal pod autoscaler object for a percent of memory use

Using the OpenShift Container Platform CLI, you can create a horizontal pod autoscaler (HPA) to automatically scale an existing object based on a percent of memory use. The HPA scales the pods associated with that object to maintain the memory use that you specify.

Use a Deployment object or ReplicaSet object unless you need a specific feature or behavior provided by other objects.

You can specify the minimum and maximum number of pods and the average memory use that your pods should target. If you do not specify a minimum, the pods are given default values from the OpenShift Container Platform server.

Prerequisites

To use horizontal pod autoscalers, your cluster administrator must have properly configured cluster metrics. You can use the oc describe PodMetrics <pod-name> command to determine if metrics are configured. If metrics are configured, the output appears similar to the following, with Cpu and Memory displayed under Usage.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internalExample output

Procedure

Create a

HorizontalPodAutoscalerobject similar to the following for an existing object:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Use the

autoscaling/v2API. - 2

- Specify a name for this horizontal pod autoscaler object.

- 3

- Specify the API version of the object to scale:

-

For a ReplicationController, use

v1. -

For a DeploymentConfig, use

apps.openshift.io/v1. -

For a Deployment, ReplicaSet, Statefulset object, use

apps/v1.

-

For a ReplicationController, use

- 4

- Specify the type of object. The object must be a

Deployment,DeploymentConfig,ReplicaSet,ReplicationController, orStatefulSet. - 5

- Specify the name of the object to scale. The object must exist.

- 6

- Specify the minimum number of replicas when scaling down.

- 7

- Specify the maximum number of replicas when scaling up.

- 8

- Use the

metricsparameter for memory usage. - 9

- Specify

memoryfor memory usage. - 10

- Set to

Utilization. - 11

- Specify

averageUtilizationand a target average memory usage over all the pods, represented as a percent of requested memory. The target pods must have memory requests configured. - 12

- Optional: Specify a scaling policy to control the rate of scaling up or down.

Create the horizontal pod autoscaler by using a command similar to the following:

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:

oc create -f hpa.yaml

$ oc create -f hpa.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

horizontalpodautoscaler.autoscaling/hpa-resource-metrics-memory created

horizontalpodautoscaler.autoscaling/hpa-resource-metrics-memory createdCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

Check that the horizontal pod autoscaler was created by using a command similar to the following:

oc get hpa hpa-resource-metrics-memory

$ oc get hpa hpa-resource-metrics-memoryCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE hpa-resource-metrics-memory Deployment/example 2441216/500Mi 1 10 1 20m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE hpa-resource-metrics-memory Deployment/example 2441216/500Mi 1 10 1 20mCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the details of the horizontal pod autoscaler by using a command similar to the following:

oc describe hpa hpa-resource-metrics-memory

$ oc describe hpa hpa-resource-metrics-memoryCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4.6.4. Creating a horizontal pod autoscaler object for specific memory use

Using the OpenShift Container Platform CLI, you can create a horizontal pod autoscaler (HPA) to automatically scale an existing object. The HPA scales the pods associated with that object to maintain the average memory use that you specify.

Use a Deployment object or ReplicaSet object unless you need a specific feature or behavior provided by other objects.

You can specify the minimum and maximum number of pods and the average memory use that your pods should target. If you do not specify a minimum, the pods are given default values from the OpenShift Container Platform server.

Prerequisites

To use horizontal pod autoscalers, your cluster administrator must have properly configured cluster metrics. You can use the oc describe PodMetrics <pod-name> command to determine if metrics are configured. If metrics are configured, the output appears similar to the following, with Cpu and Memory displayed under Usage.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internalExample output

Procedure

Create a

HorizontalPodAutoscalerobject similar to the following for an existing object:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Use the

autoscaling/v2API. - 2

- Specify a name for this horizontal pod autoscaler object.

- 3

- Specify the API version of the object to scale:

-

For a

Deployment,ReplicaSet, orStatefulsetobject, useapps/v1. -

For a

ReplicationController, usev1. -

For a

DeploymentConfig, useapps.openshift.io/v1.

-

For a

- 4

- Specify the type of object. The object must be a

Deployment,DeploymentConfig,ReplicaSet,ReplicationController, orStatefulSet. - 5

- Specify the name of the object to scale. The object must exist.

- 6

- Specify the minimum number of replicas when scaling down.

- 7

- Specify the maximum number of replicas when scaling up.

- 8

- Use the

metricsparameter for memory usage. - 9

- Specify

memoryfor memory usage. - 10

- Set the type to

AverageValue. - 11

- Specify

averageValueand a specific memory value. - 12

- Optional: Specify a scaling policy to control the rate of scaling up or down.

Create the horizontal pod autoscaler by using a command similar to the following:

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:

oc create -f hpa.yaml

$ oc create -f hpa.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

horizontalpodautoscaler.autoscaling/hpa-resource-metrics-memory created

horizontalpodautoscaler.autoscaling/hpa-resource-metrics-memory createdCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

Check that the horizontal pod autoscaler was created by using a command similar to the following:

oc get hpa hpa-resource-metrics-memory

$ oc get hpa hpa-resource-metrics-memoryCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE hpa-resource-metrics-memory Deployment/example 2441216/500Mi 1 10 1 20m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE hpa-resource-metrics-memory Deployment/example 2441216/500Mi 1 10 1 20mCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the details of the horizontal pod autoscaler by using a command similar to the following:

oc describe hpa hpa-resource-metrics-memory

$ oc describe hpa hpa-resource-metrics-memoryCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4.7. Understanding horizontal pod autoscaler status conditions by using the CLI

You can use the status conditions set to determine whether or not the horizontal pod autoscaler (HPA) is able to scale and whether or not it is currently restricted in any way.

The HPA status conditions are available with the v2 version of the autoscaling API.

The HPA responds with the following status conditions:

The

AbleToScalecondition indicates whether HPA is able to fetch and update metrics, as well as whether any backoff-related conditions could prevent scaling.-

A

Truecondition indicates scaling is allowed. -

A

Falsecondition indicates scaling is not allowed for the reason specified.

-

A

The

ScalingActivecondition indicates whether the HPA is enabled (for example, the replica count of the target is not zero) and is able to calculate desired metrics.-

A

Truecondition indicates metrics is working properly. -

A

Falsecondition generally indicates a problem with fetching metrics.

-

A

The

ScalingLimitedcondition indicates that the desired scale was capped by the maximum or minimum of the horizontal pod autoscaler.-

A

Truecondition indicates that you need to raise or lower the minimum or maximum replica count in order to scale. A

Falsecondition indicates that the requested scaling is allowed.oc describe hpa cm-test

$ oc describe hpa cm-testCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- The horizontal pod autoscaler status messages.

-

A

The following is an example of a pod that is unable to scale:

Example output

The following is an example of a pod that could not obtain the needed metrics for scaling:

Example output

Conditions: Type Status Reason Message ---- ------ ------ ------- AbleToScale True SucceededGetScale the HPA controller was able to get the target's current scale ScalingActive False FailedGetResourceMetric the HPA was unable to compute the replica count: failed to get cpu utilization: unable to get metrics for resource cpu: no metrics returned from resource metrics API

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True SucceededGetScale the HPA controller was able to get the target's current scale

ScalingActive False FailedGetResourceMetric the HPA was unable to compute the replica count: failed to get cpu utilization: unable to get metrics for resource cpu: no metrics returned from resource metrics APIThe following is an example of a pod where the requested autoscaling was less than the required minimums:

Example output

2.4.7.1. Viewing horizontal pod autoscaler status conditions by using the CLI

You can view the status conditions set on a pod by the horizontal pod autoscaler (HPA).

The horizontal pod autoscaler status conditions are available with the v2 version of the autoscaling API.

Prerequisites

To use horizontal pod autoscalers, your cluster administrator must have properly configured cluster metrics. You can use the oc describe PodMetrics <pod-name> command to determine if metrics are configured. If metrics are configured, the output appears similar to the following, with Cpu and Memory displayed under Usage.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internalExample output

Procedure

To view the status conditions on a pod, use the following command with the name of the pod:

oc describe hpa <pod-name>

$ oc describe hpa <pod-name>For example:

oc describe hpa cm-test

$ oc describe hpa cm-test

The conditions appear in the Conditions field in the output.

Example output

2.5. Automatically adjust pod resource levels with the vertical pod autoscaler

The OpenShift Container Platform Vertical Pod Autoscaler Operator (VPA) automatically reviews the historic and current CPU and memory resources for containers in pods. The VPA can update the resource limits and requests based on the usage values it learns. By using individual custom resources (CR), the VPA updates all the pods in a project associated with any built-in workload objects. This includes the following list of object types:

-

Deployment -

DeploymentConfig -

StatefulSet -

Job -

DaemonSet -

ReplicaSet -

ReplicationController

The VPA can also update certain custom resource object that manage pods. For more information, see Example custom resources for the Vertical Pod Autoscaler.

The VPA helps you to understand the optimal CPU and memory usage for your pods and can automatically maintain pod resources through the pod lifecycle.

2.5.1. About the Vertical Pod Autoscaler Operator

The Vertical Pod Autoscaler Operator (VPA) is implemented as an API resource and a custom resource (CR). The CR determines the actions for the VPA to take with the pods associated with a specific workload object, such as a daemon set, replication controller, and so forth, in a project.

The VPA consists of three components, each of which has its own pod in the VPA namespace:

- Recommender

- The VPA recommender monitors the current and past resource consumption. Based on this data, the VPA recommender determines the optimal CPU and memory resources for the pods in the associated workload object.

- Updater

- The VPA updater checks if the pods in the associated workload object have the correct resources. If the resources are correct, the updater takes no action. If the resources are not correct, the updater kills the pod so that pods' controllers can re-create them with the updated requests.

- Admission controller

- The VPA admission controller sets the correct resource requests on each new pod in the associated workload object. This applies whether the pod is new or the controller re-created the pod due to the VPA updater actions.

You can use the default recommender or use your own alternative recommender to autoscale based on your own algorithms.

The default recommender automatically computes historic and current CPU and memory usage for the containers in those pods. The default recommender uses this data to determine optimized resource limits and requests to ensure that these pods are operating efficiently at all times. For example, the default recommender suggests reduced resources for pods that are requesting more resources than they are using and increased resources for pods that are not requesting enough.

The VPA then automatically deletes any pods that are out of alignment with these recommendations one at a time, so that your applications can continue to serve requests with no downtime. The workload objects then redeploy the pods with the original resource limits and requests. The VPA uses a mutating admission webhook to update the pods with optimized resource limits and requests before admitting the pods to a node. If you do not want the VPA to delete pods, you can view the VPA resource limits and requests and manually update the pods as needed.

By default, workload objects must specify a minimum of two replicas for the VPA to automatically delete their pods. Workload objects that specify fewer replicas than this minimum are not deleted. If you manually delete these pods, when the workload object redeploys the pods, the VPA updates the new pods with its recommendations. You can change this minimum by modifying the VerticalPodAutoscalerController object as shown in Changing the VPA minimum value.

For example, if you have a pod that uses 50% of the CPU but only requests 10%, the VPA determines that the pod is consuming more CPU than requested and deletes the pod. The workload object, such as replica set, restarts the pods and the VPA updates the new pod with its recommended resources.

For developers, you can use the VPA to help ensure that your pods active during periods of high demand by scheduling pods onto nodes that have appropriate resources for each pod.

Administrators can use the VPA to better use cluster resources, such as preventing pods from reserving more CPU resources than needed. The VPA monitors the resources that workloads are actually using and adjusts the resource requirements so capacity is available to other workloads. The VPA also maintains the ratios between limits and requests specified in the initial container configuration.

If you stop running the VPA or delete a specific VPA CR in your cluster, the resource requests for the pods already modified by the VPA do not change. However, any new pods get the resources defined in the workload object, not the previous recommendations made by the VPA.

2.5.2. Installing the Vertical Pod Autoscaler Operator

You can use the OpenShift Container Platform web console to install the Vertical Pod Autoscaler Operator (VPA).

Procedure

-

In the OpenShift Container Platform web console, click Ecosystem

Software Catalog. - Choose VerticalPodAutoscaler from the list of available Operators, and click Install.

-

On the Install Operator page, ensure that the Operator recommended namespace option is selected. This installs the Operator in the mandatory

openshift-vertical-pod-autoscalernamespace, which is automatically created if it does not exist. - Click Install.

Verification

Verify the installation by listing the VPA components:

-

Navigate to Workloads

Pods. -

Select the

openshift-vertical-pod-autoscalerproject from the drop-down menu and verify that there are four pods running. -

Navigate to Workloads

Deployments to verify that there are four deployments running.

-

Navigate to Workloads

Optional: Verify the installation in the OpenShift Container Platform CLI using the following command:

oc get all -n openshift-vertical-pod-autoscaler

$ oc get all -n openshift-vertical-pod-autoscalerCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output shows four pods and four deployments:

Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.5.3. Moving the Vertical Pod Autoscaler Operator components

The Vertical Pod Autoscaler Operator (VPA) and each component has its own pod in the VPA namespace on the control plane nodes. You can move the VPA Operator and component pods to infrastructure or worker nodes by adding a node selector to the VPA subscription and the VerticalPodAutoscalerController CR.

You can create and use infrastructure nodes to host only infrastructure components. For example, the default router, the integrated container image registry, and the components for cluster metrics and monitoring. These infrastructure nodes are not counted toward the total number of subscriptions that are required to run the environment. For more information, see Creating infrastructure machine sets.

You can move the components to the same node or separate nodes as appropriate for your organization.

The following example shows the default deployment of the VPA pods to the control plane nodes.

Example output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES vertical-pod-autoscaler-operator-6c75fcc9cd-5pb6z 1/1 Running 0 7m59s 10.128.2.24 c416-tfsbj-master-1 <none> <none> vpa-admission-plugin-default-6cb78d6f8b-rpcrj 1/1 Running 0 5m37s 10.129.2.22 c416-tfsbj-master-1 <none> <none> vpa-recommender-default-66846bd94c-dsmpp 1/1 Running 0 5m37s 10.129.2.20 c416-tfsbj-master-0 <none> <none> vpa-updater-default-db8b58df-2nkvf 1/1 Running 0 5m37s 10.129.2.21 c416-tfsbj-master-1 <none> <none>

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

vertical-pod-autoscaler-operator-6c75fcc9cd-5pb6z 1/1 Running 0 7m59s 10.128.2.24 c416-tfsbj-master-1 <none> <none>

vpa-admission-plugin-default-6cb78d6f8b-rpcrj 1/1 Running 0 5m37s 10.129.2.22 c416-tfsbj-master-1 <none> <none>

vpa-recommender-default-66846bd94c-dsmpp 1/1 Running 0 5m37s 10.129.2.20 c416-tfsbj-master-0 <none> <none>

vpa-updater-default-db8b58df-2nkvf 1/1 Running 0 5m37s 10.129.2.21 c416-tfsbj-master-1 <none> <none>Procedure

Move the VPA Operator pod by adding a node selector to the

Subscriptioncustom resource (CR) for the VPA Operator:Edit the CR:

oc edit Subscription vertical-pod-autoscaler -n openshift-vertical-pod-autoscaler

$ oc edit Subscription vertical-pod-autoscaler -n openshift-vertical-pod-autoscalerCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add a node selector to match the node role label on the node where you want to install the VPA Operator pod:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteIf the infra node uses taints, you need to add a toleration to the

SubscriptionCR.For example:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Specifies a toleration for a taint on the node where you want to move the VPA Operator pod.

Move each VPA component by adding node selectors to the

VerticalPodAutoscalercustom resource (CR):Edit the CR:

oc edit VerticalPodAutoscalerController default -n openshift-vertical-pod-autoscaler

$ oc edit VerticalPodAutoscalerController default -n openshift-vertical-pod-autoscalerCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add node selectors to match the node role label on the node where you want to install the VPA components:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteIf a target node uses taints, you need to add a toleration to the

VerticalPodAutoscalerControllerCR.For example:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Specifies a toleration for the admission controller pod for a taint on the node where you want to install the pod.

- 2

- Specifies a toleration for the recommender pod for a taint on the node where you want to install the pod.

- 3

- Specifies a toleration for the updater pod for a taint on the node where you want to install the pod.

Verification

You can verify the pods have moved by using the following command:

oc get pods -n openshift-vertical-pod-autoscaler -o wide

$ oc get pods -n openshift-vertical-pod-autoscaler -o wideCopy to Clipboard Copied! Toggle word wrap Toggle overflow The pods are no longer deployed to the control plane nodes. In the following example output, the node is now an infra node, not a control plane node.

Example output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES vertical-pod-autoscaler-operator-6c75fcc9cd-5pb6z 1/1 Running 0 7m59s 10.128.2.24 c416-tfsbj-infra-eastus3-2bndt <none> <none> vpa-admission-plugin-default-6cb78d6f8b-rpcrj 1/1 Running 0 5m37s 10.129.2.22 c416-tfsbj-infra-eastus1-lrgj8 <none> <none> vpa-recommender-default-66846bd94c-dsmpp 1/1 Running 0 5m37s 10.129.2.20 c416-tfsbj-infra-eastus1-lrgj8 <none> <none> vpa-updater-default-db8b58df-2nkvf 1/1 Running 0 5m37s 10.129.2.21 c416-tfsbj-infra-eastus1-lrgj8 <none> <none>

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES vertical-pod-autoscaler-operator-6c75fcc9cd-5pb6z 1/1 Running 0 7m59s 10.128.2.24 c416-tfsbj-infra-eastus3-2bndt <none> <none> vpa-admission-plugin-default-6cb78d6f8b-rpcrj 1/1 Running 0 5m37s 10.129.2.22 c416-tfsbj-infra-eastus1-lrgj8 <none> <none> vpa-recommender-default-66846bd94c-dsmpp 1/1 Running 0 5m37s 10.129.2.20 c416-tfsbj-infra-eastus1-lrgj8 <none> <none> vpa-updater-default-db8b58df-2nkvf 1/1 Running 0 5m37s 10.129.2.21 c416-tfsbj-infra-eastus1-lrgj8 <none> <none>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.5.4. About using the Vertical Pod Autoscaler Operator

To use the Vertical Pod Autoscaler Operator (VPA), you create a VPA custom resource (CR) for a workload object in your cluster. The VPA learns and applies the optimal CPU and memory resources for the pods associated with that workload object. You can use a VPA with a deployment, stateful set, job, daemon set, replica set, or replication controller workload object. The VPA CR must be in the same project as the pods that you want to check.

You use the VPA CR to associate a workload object and specify the mode that the VPA operates in:

-

The

AutoandRecreatemodes automatically apply the VPA CPU and memory recommendations throughout the pod lifetime. The VPA deletes any pods in the project that are out of alignment with its recommendations. When redeployed by the workload object, the VPA updates the new pods with its recommendations. -

The

Initialmode automatically applies VPA recommendations only at pod creation. -

The

Offmode only provides recommended resource limits and requests. You can then manually apply the recommendations. TheOffmode does not update pods.

You can also use the CR to opt-out certain containers from VPA evaluation and updates.

For example, a pod has the following limits and requests:

After creating a VPA that is set to Auto, the VPA learns the resource usage and deletes the pod. When redeployed, the pod uses the new resource limits and requests:

You can view the VPA recommendations by using the following command:

oc get vpa <vpa-name> --output yaml

$ oc get vpa <vpa-name> --output yamlAfter a few minutes, the output shows the recommendations for CPU and memory requests, similar to the following:

Example output

The output shows the recommended resources, target, the minimum recommended resources, lowerBound, the highest recommended resources, upperBound, and the most recent resource recommendations, uncappedTarget.

The VPA uses the lowerBound and upperBound values to determine if a pod needs updating. If a pod has resource requests less than the lowerBound values or more than the upperBound values, the VPA terminates and recreates the pod with the target values.

2.5.4.1. Changing the VPA minimum value

By default, workload objects must specify a minimum of two replicas in order for the VPA to automatically delete and update their pods. As a result, workload objects that specify fewer than two replicas are not automatically acted upon by the VPA. The VPA does update new pods from these workload objects if a process external to the VPA restarts the pods. You can change this cluster-wide minimum value by modifying the minReplicas parameter in the VerticalPodAutoscalerController custom resource (CR).

For example, if you set minReplicas to 3, the VPA does not delete and update pods for workload objects that specify fewer than three replicas.

If you set minReplicas to 1, the VPA can delete the only pod for a workload object that specifies only one replica. Use this setting with one-replica objects only if your workload can tolerate downtime whenever the VPA deletes a pod to adjust its resources. To avoid unwanted downtime with one-replica objects, configure the VPA CRs with the podUpdatePolicy set to Initial, which automatically updates the pod only when a process external to the VPA restarts, or Off, which you can use to update the pod manually at an appropriate time for your application.

Example VerticalPodAutoscalerController object

- 1

- Specify the minimum number of replicas in a workload object for the VPA to act on. Any objects with replicas fewer than the minimum are not automatically deleted by the VPA.

2.5.4.2. Automatically applying VPA recommendations

To use the VPA to automatically update pods, create a VPA CR for a specific workload object with updateMode set to Auto or Recreate.

When the pods are created for the workload object, the VPA constantly monitors the containers to analyze their CPU and memory needs. The VPA deletes any pods that do not meet the VPA recommendations for CPU and memory. When redeployed, the pods use the new resource limits and requests based on the VPA recommendations, honoring any pod disruption budget set for your applications. The recommendations are added to the status field of the VPA CR for reference.

By default, workload objects must specify a minimum of two replicas in order for the VPA to automatically delete their pods. Workload objects that specify fewer replicas than this minimum are not deleted. If you manually delete these pods, when the workload object redeploys the pods, the VPA does update the new pods with its recommendations. You can change this minimum by modifying the VerticalPodAutoscalerController object as shown in Changing the VPA minimum value.

Example VPA CR for the Auto mode

- 1

- The type of workload object you want this VPA CR to manage.

- 2

- The name of the workload object you want this VPA CR to manage.

- 3

- Set the mode to

AutoorRecreate:-

Auto. The VPA assigns resource requests on pod creation and updates the existing pods by terminating them when the requested resources differ significantly from the new recommendation. -

Recreate. The VPA assigns resource requests on pod creation and updates the existing pods by terminating them when the requested resources differ significantly from the new recommendation. Use this mode rarely, only if you need to ensure that when the resource request changes the pods restart.

-

Before a VPA can determine recommendations for resources and apply the recommended resources to new pods, operating pods must exist and be running in the project.

If a workload’s resource usage, such as CPU and memory, is consistent, the VPA can determine recommendations for resources in a few minutes. If a workload’s resource usage is inconsistent, the VPA must collect metrics at various resource usage intervals for the VPA to make an accurate recommendation.

2.5.4.3. Automatically applying VPA recommendations on pod creation

To use the VPA to apply the recommended resources only when a pod is first deployed, create a VPA CR for a specific workload object with updateMode set to Initial.

Then, manually delete any pods associated with the workload object that you want to use the VPA recommendations. In the Initial mode, the VPA does not delete pods and does not update the pods as it learns new resource recommendations.

Example VPA CR for the Initial mode

Before a VPA can determine recommended resources and apply the recommendations to new pods, operating pods must exist and be running in the project.

To obtain the most accurate recommendations from the VPA, wait at least 8 days for the pods to run and for the VPA to stabilize.

2.5.4.4. Manually applying VPA recommendations

To use the VPA to only determine the recommended CPU and memory values, create a VPA CR for a specific workload object with updateMode set to Off.

When the pods are created for that workload object, the VPA analyzes the CPU and memory needs of the containers and records those recommendations in the status field of the VPA CR. The VPA does not update the pods as it determines new resource recommendations.

Example VPA CR for the Off mode

You can view the recommendations by using the following command.

oc get vpa <vpa-name> --output yaml

$ oc get vpa <vpa-name> --output yamlWith the recommendations, you can edit the workload object to add CPU and memory requests, then delete and redeploy the pods by using the recommended resources.

Before a VPA can determine recommended resources and apply the recommendations to new pods, operating pods must exist and be running in the project.

To obtain the most accurate recommendations from the VPA, wait at least 8 days for the pods to run and for the VPA to stabilize.

2.5.4.5. Exempting containers from applying VPA recommendations

If your workload object has multiple containers and you do not want the VPA to evaluate and act on all of the containers, create a VPA CR for a specific workload object and add a resourcePolicy to opt-out specific containers.

When the VPA updates the pods with recommended resources, any containers with a resourcePolicy are not updated and the VPA does not present recommendations for those containers in the pod.

- 1

- The type of workload object you want this VPA CR to manage.

- 2

- The name of the workload object you want this VPA CR to manage.

- 3

- Set the mode to