
Tutorials

Red Hat OpenShift Service on AWS tutorials

Abstract

Chapter 1. Tutorials overview

Use the step-by-step tutorials from Red Hat experts to get the most out of your Managed OpenShift cluster.

This content is authored by Red Hat experts but has not yet been tested on every supported configuration.

Chapter 2. Tutorial: Red Hat OpenShift Service on AWS activation and account linking

This tutorial describes the process for activating Red Hat OpenShift Service on AWS and linking it to an AWS account before you deploy your first cluster.

If you have received a private offer for the product, follow the instructions provided with the private offer before following this tutorial. A private offer is designed either to replace an active subscription when the product is already activated, or for first-time activations.

2.1. Prerequisites

- Log in to the Red Hat account that you want to associate with the AWS account that will activate the Red Hat OpenShift Service on AWS product subscription.

- The AWS account used for service billing can only be associated with a single Red Hat account. Typically, an AWS payer account is used to subscribe to Red Hat OpenShift Service on AWS and for account linking and billing.

- All team members belonging to the same Red Hat organization can use the linked AWS account for service billing while creating Red Hat OpenShift Service on AWS clusters.

2.2. Subscription enablement and AWS account setup

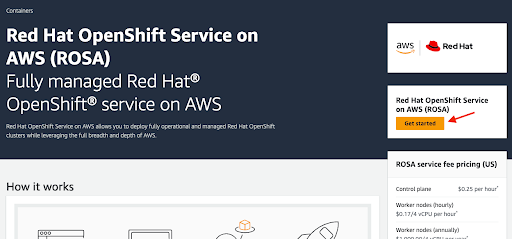

Activate the Red Hat OpenShift Service on AWS product at the AWS console page by clicking the Get started button:

Figure 2.1. Get started

If you have activated Red Hat OpenShift Service on AWS before but did not complete the process, you can click the button and complete the account linking as described in the following steps.

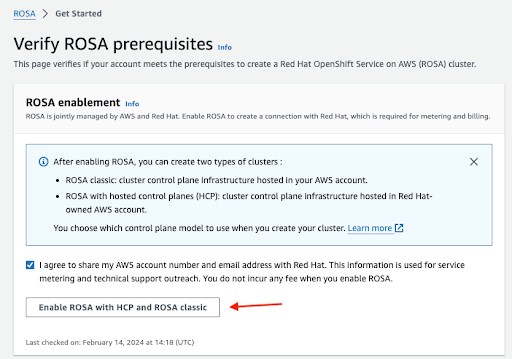

Confirm that you want your contact information to be shared with Red Hat and enable the service:

Figure 2.2. Enable Red Hat OpenShift Service on AWS

- Enabling the service in this step does not incur any charges. The connection is made for billing and metering, which take place only after you deploy your first cluster. This step could take a few minutes.

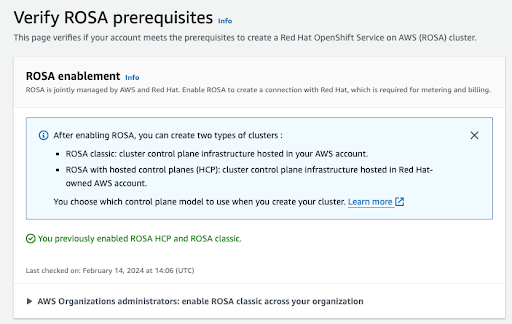

After the process is completed, you will see a confirmation:

Figure 2.3. Red Hat OpenShift Service on AWS enablement confirmation

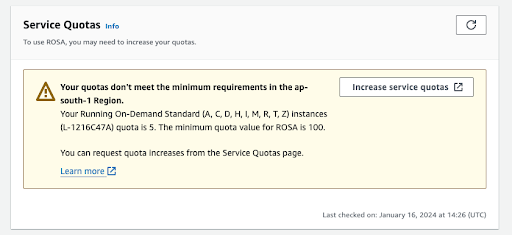

Other sections on this verification page show the status of additional prerequisites. If any of these prerequisites are not met, a corresponding message is shown. Here is an example of insufficient quotas in the selected region:

Figure 2.4. Service quotas

- Click the Increase service quotas button or use the Learn more link to get more information about how to manage service quotas. In the case of insufficient quotas, note that quotas are region-specific. You can use the region switcher in the upper right corner of the web console to re-run the quota check for any region you are interested in, and then submit service quota increase requests as needed.

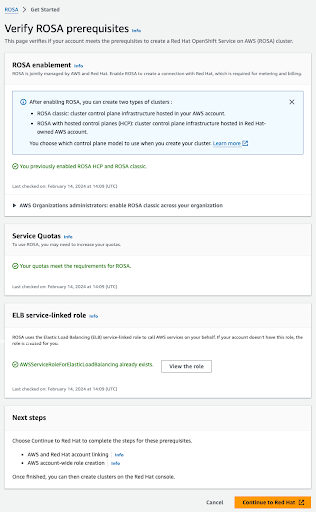

If all the prerequisites are met, the page will look like this:

Figure 2.5. Verify Red Hat OpenShift Service on AWS prerequisites

The ELB service-linked role is created for you automatically. You can click any of the small blue Info links to get contextual help and resources.

2.3. AWS and Red Hat account and subscription linking

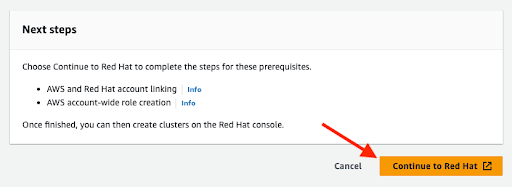

Click the orange Continue to Red Hat button to proceed with account linking:

Figure 2.6. Continue to Red Hat

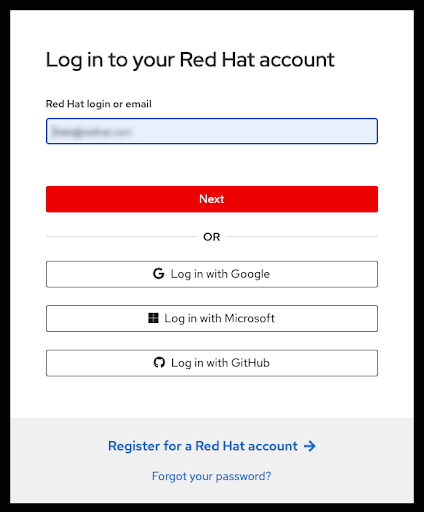

If you are not already logged in to your Red Hat account in your current browser’s session, you will be asked to log in to your account:

Note: Your AWS account must be linked to a single Red Hat organization.

Figure 2.7. Log in to your Red Hat account

- You can also register for a new Red Hat account or reset your password on this page.

- Log in to the Red Hat account that you want to associate with the AWS account that has activated the Red Hat OpenShift Service on AWS product subscription.

- The AWS account used for service billing can only be associated with a single Red Hat account. Typically, an AWS payer account is used to subscribe to Red Hat OpenShift Service on AWS and for account linking and billing.

- All team members belonging to the same Red Hat organization can use the linked AWS account for service billing while creating Red Hat OpenShift Service on AWS clusters.

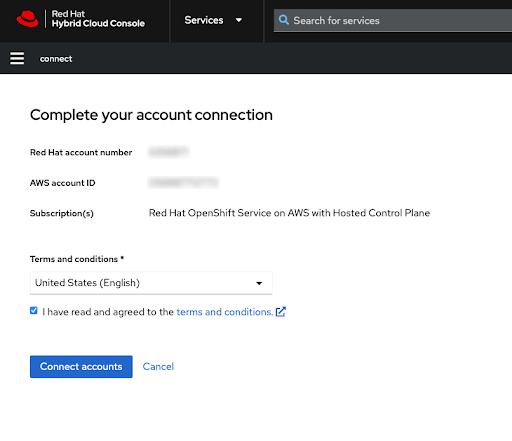

Complete the Red Hat account linking after reviewing the terms and conditions:

Note: This step is available only if the AWS account was not linked to any Red Hat account before.

This step is skipped if the AWS account is already linked to the logged-in user's Red Hat account.

If the AWS account is linked to a different Red Hat account, an error will be displayed. See Correcting Billing Account Information for HCP clusters for troubleshooting.

Figure 2.8. Complete your account connection

Both the Red Hat and AWS account numbers are shown on this screen.

Click the Connect accounts button if you agree with the service terms.

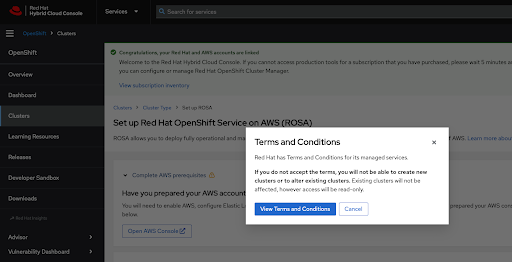

If this is the first time you are using the Red Hat Hybrid Cloud Console, you will be asked to agree to the general managed services terms and conditions before being able to create the first cluster:

Figure 2.9. Terms and conditions

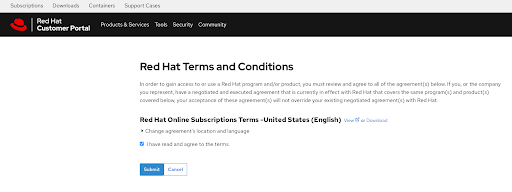

Additional terms that need to be reviewed and accepted are shown after clicking the View Terms and Conditions button:

Figure 2.10. Red Hat terms and conditions

When prompted, submit your agreement after you have reviewed any additional terms.

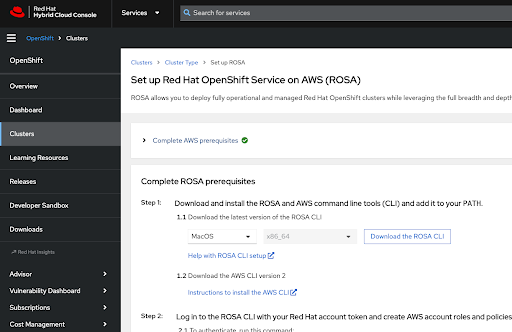

The Hybrid Cloud Console provides a confirmation that AWS account setup was completed and lists the prerequisites for cluster deployment:

Figure 2.11. Complete Red Hat OpenShift Service on AWS prerequisites

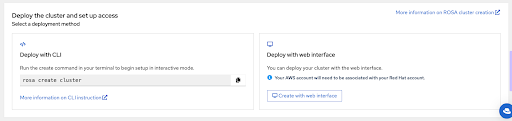

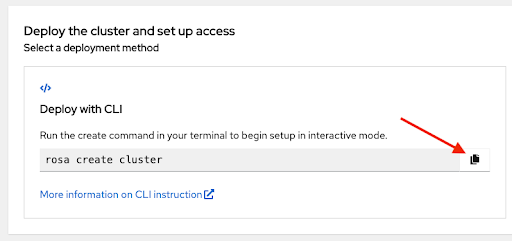

The last section of this page shows cluster deployment options, either using the rosa CLI or through the web console:

Figure 2.12. Deploy the cluster and set up access

2.4. Selecting the AWS billing account for Red Hat OpenShift Service on AWS during cluster deployment using the CLI

Make sure that you have the most recent ROSA command-line interface (CLI) and AWS CLI installed and have completed the Red Hat OpenShift Service on AWS prerequisites covered in the previous section. See Help with ROSA CLI setup and Instructions to install the AWS CLI for more information.

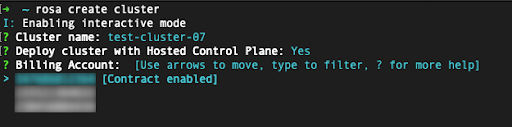

Initiate the cluster deployment using the rosa create cluster command. You can click the copy button on the Set up Red Hat OpenShift Service on AWS (ROSA) console page and paste the command in your terminal. This launches the cluster creation process in interactive mode:

Figure 2.13. Deploy the cluster and set up access

- To use a custom AWS profile, that is, one of the non-default profiles specified in your ~/.aws/credentials file, add the --profile <profile_name> option to the rosa create cluster command, for example rosa create cluster --profile stage. If no AWS CLI profile is specified with this option, the default AWS CLI profile determines the AWS infrastructure account into which the cluster is deployed. The billing AWS account is selected in one of the following steps.

When deploying a Red Hat OpenShift Service on AWS cluster, the billing AWS account needs to be specified:

Figure 2.14. Specify the Billing Account

- Only AWS accounts that are linked to the logged-in user's Red Hat account are shown.

- The specified AWS account is charged for using the Red Hat OpenShift Service on AWS service.

An indicator shows if the Red Hat OpenShift Service on AWS contract is enabled or not enabled for a given AWS billing account.

- If you select an AWS billing account that shows the Contract enabled label, on-demand consumption rates are charged only after the capacity of your pre-paid contract is consumed.

- AWS accounts without the Contract enabled label are charged the applicable on-demand consumption rates.
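The billing rule in the two bullets above can be expressed as a small calculation. The following is a hypothetical illustration only, not Red Hat or AWS metering logic: "units" stands for whatever the contract counts, and the actual rates and metering are described on the Red Hat OpenShift Service on AWS Pricing page. An account without the Contract enabled label behaves like a contract with zero units.

```shell
#!/usr/bin/env bash
# Hypothetical illustration: how many consumed units fall under on-demand
# rates once the capacity of a pre-paid contract is used up.
# Arguments: <consumed_units> <contract_units> (non-negative integers).
on_demand_units() {
  local consumed="$1" contract="$2"
  if [ "$consumed" -gt "$contract" ]; then
    # Only usage beyond the pre-paid capacity is billed at on-demand rates.
    echo $(( consumed - contract ))
  else
    # Usage still fits inside the pre-paid contract.
    echo 0
  fi
}

# Example: a 100-unit contract with 120 units consumed prints 20.
on_demand_units 120 100
```

For an account without a contract, on_demand_units 50 0 prints 50, so all usage is charged at on-demand rates.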

Additional resources

- The detailed cluster deployment steps are beyond the scope of this tutorial. See Creating Red Hat OpenShift Service on AWS clusters using the default options for more details about how to complete the Red Hat OpenShift Service on AWS cluster deployment using the CLI.

2.5. Selecting the AWS billing account for Red Hat OpenShift Service on AWS during cluster deployment using the web console

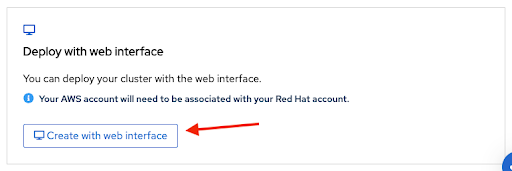

A cluster can be created using the web console by selecting the second option in the bottom section of the introductory Set up Red Hat OpenShift Service on AWS page:

Figure 2.15. Deploy with web interface

NoteComplete the prerequisites before starting the web console deployment process.

The

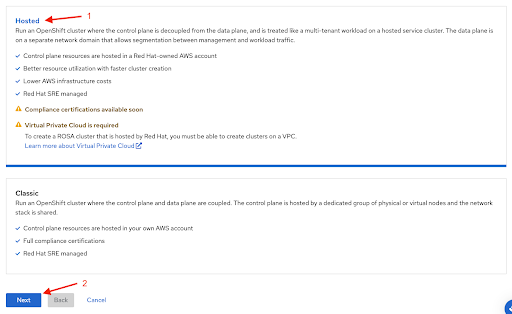

rosaCLI is required for certain tasks, such as creating the account roles. If you are deploying Red Hat OpenShift Service on AWS for the first time, follow this the CLI steps until running therosa whoamicommand, before starting the web console deployment steps.The first step when creating a Red Hat OpenShift Service on AWS cluster using the web console is the control plane selection. Make sure the Hosted option is selected before clicking the Next button:

Figure 2.16. Select hosted option

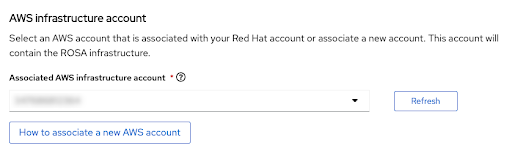

The next step, Accounts and roles, allows you to specify the infrastructure AWS account into which the Red Hat OpenShift Service on AWS cluster is deployed and where the resources are consumed and managed:

Figure 2.17. AWS infrastructure account

- If you do not see the account into which you want to deploy the Red Hat OpenShift Service on AWS cluster, click How to associate a new AWS account for detailed information on how to create or link account roles for this association.

- The rosa CLI is used for this.

- If you are using multiple AWS accounts and have their profiles configured for the AWS CLI, you can use the --profile selector to specify the AWS profile when working with the rosa CLI commands.

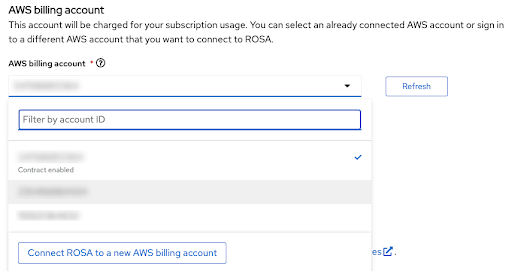

The billing AWS account is selected in the immediately following section:

Figure 2.18. AWS billing account

- Only AWS accounts that are linked to the logged-in user's Red Hat account are shown.

- The specified AWS account is charged for using the Red Hat OpenShift Service on AWS service.

An indicator shows if the Red Hat OpenShift Service on AWS contract is enabled or not enabled for a given AWS billing account.

- If you select an AWS billing account that shows the Contract enabled label, on-demand consumption rates are charged only after the capacity of your pre-paid contract is consumed.

- AWS accounts without the Contract enabled label are charged the applicable on-demand consumption rates.

The steps after the billing AWS account selection are beyond the scope of this tutorial.

Additional resources

- For information on using the CLI to create a cluster, see Creating a Red Hat OpenShift Service on AWS cluster using the CLI.

- See this learning path for more details on how to complete cluster deployment using the web console.

Chapter 3. Tutorial: Red Hat OpenShift Service on AWS private offer acceptance and sharing

This guide describes how to accept a private offer for Red Hat OpenShift Service on AWS and how to ensure that all team members can use the private offer for the clusters they provision.

Red Hat OpenShift Service on AWS costs are composed of the AWS infrastructure costs and the Red Hat OpenShift Service on AWS service costs. AWS infrastructure costs, such as the EC2 instances that are running the needed workloads, are charged to the AWS account where the infrastructure is deployed. Red Hat OpenShift Service on AWS service costs are charged to the AWS account specified as the "AWS billing account" when deploying a cluster.

The cost components can be billed to different AWS accounts. A detailed description of how the Red Hat OpenShift Service on AWS service costs and AWS infrastructure costs are calculated can be found on the Red Hat OpenShift Service on AWS Pricing page.

3.1. Accepting a private offer

When you get a private offer for Red Hat OpenShift Service on AWS, you are provided with a unique URL that is accessible only by a specific AWS account ID that was specified by the seller.

Note: Verify that you are logged in using the AWS account that was specified as the buyer. Attempting to access the offer using another AWS account produces a "page not found" error message, as shown in Figure 3.11 in the troubleshooting section below.

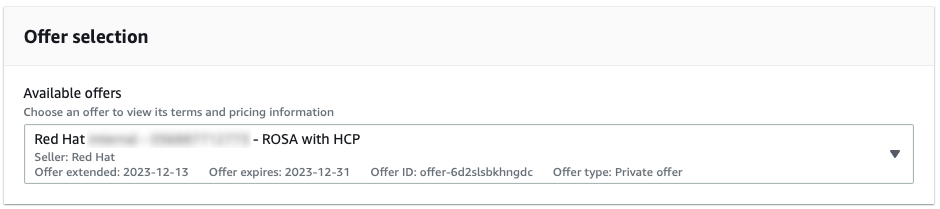

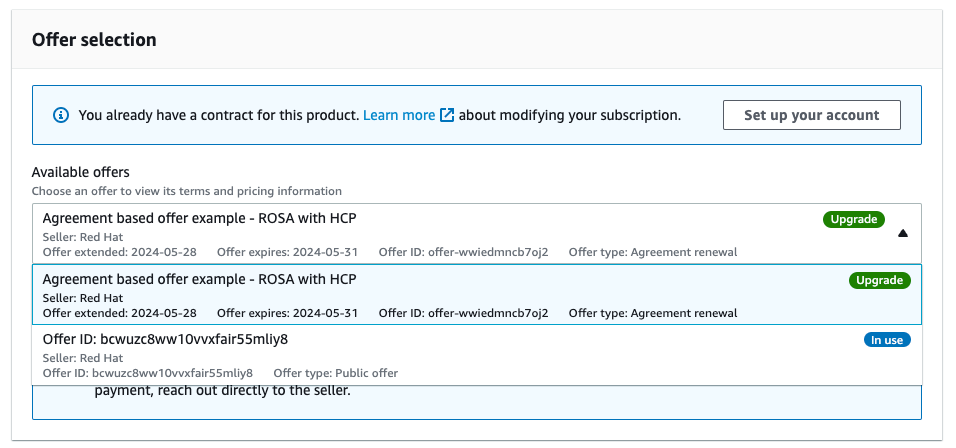

Figure 3.1 shows the offer selection drop-down menu with a regular private offer preselected. This type of offer can be accepted only if Red Hat OpenShift Service on AWS was not previously activated using the public offer or another private offer.

Figure 3.1. Regular private offer

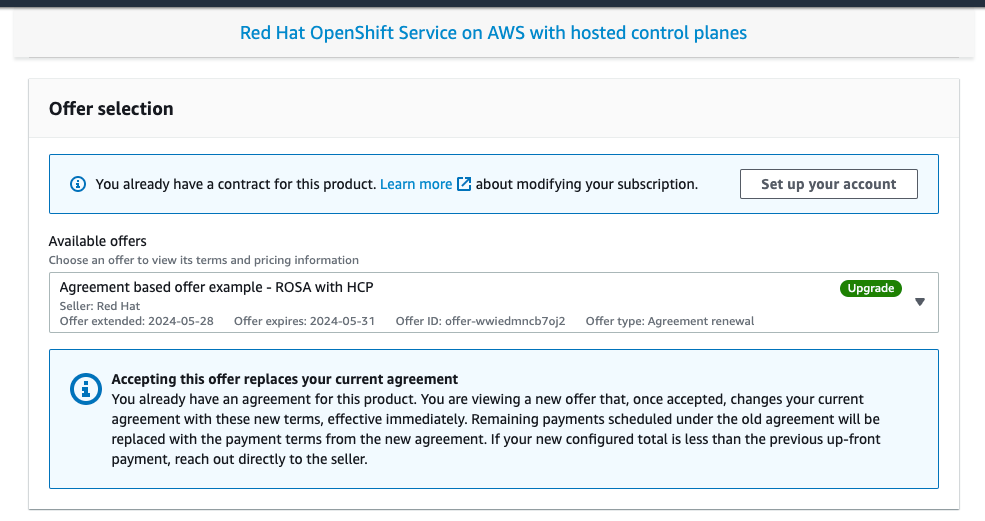

Figure 3.2 shows a private offer that was created for an AWS account that previously activated Red Hat OpenShift Service on AWS using the public offer. The screen shows the product name and the selected private offer labeled "Upgrade", which replaces the currently running contract for Red Hat OpenShift Service on AWS.

Figure 3.2. Private offer selection screen

The drop-down menu allows you to select between multiple offers, if available. In Figure 3.3, the previously activated public offer is shown together with the newly provided agreement-based offer labeled "Upgrade".

Figure 3.3. Private offer selection dropdown

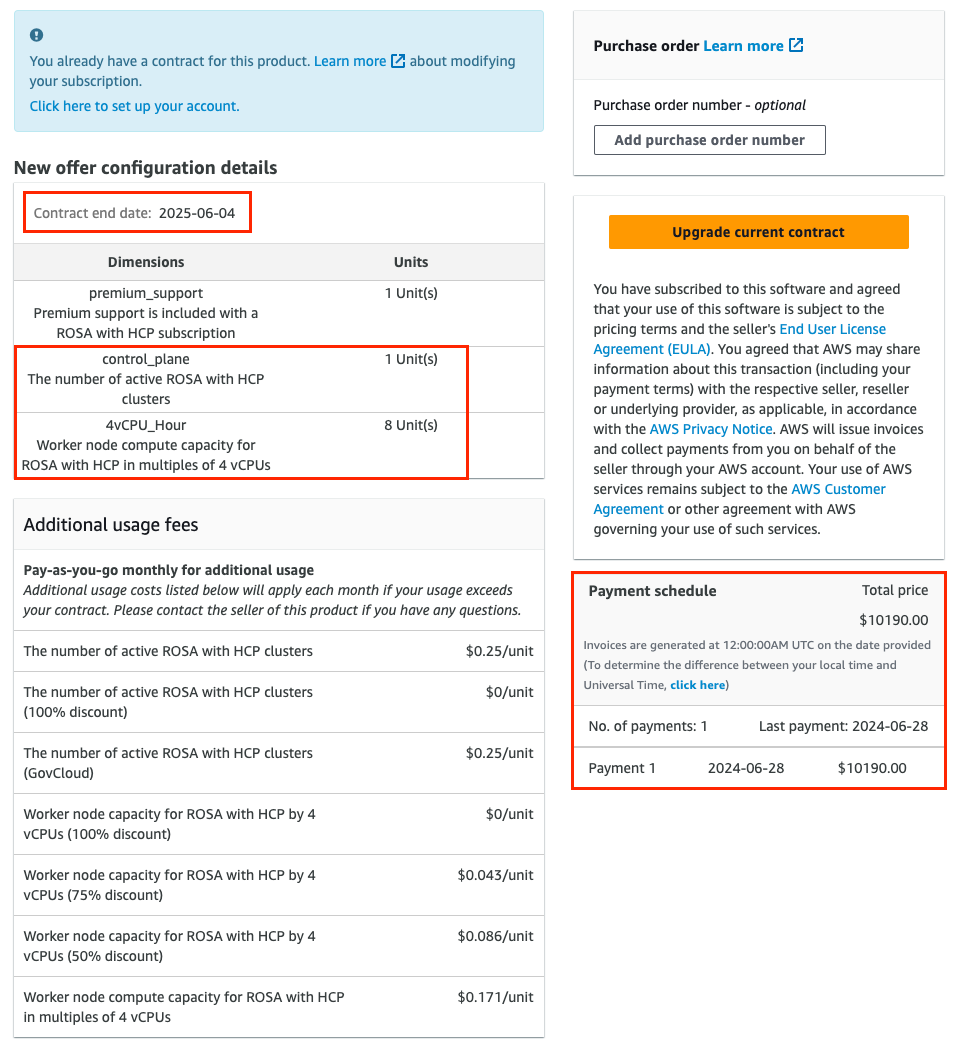

Verify that your offer configuration is selected. Figure 3.4 shows the bottom part of the offer page with the offer details.

Note: The offer details include the contract end date, the number of units included with the offer, and the payment schedule. In this example, 1 cluster and up to 3 nodes utilizing 4 vCPUs are included.

Figure 3.4. Private offer details

Optional: you can add your own purchase order (PO) number to the subscription that is being purchased, so it is included on your subsequent AWS invoices. Also, check the "Additional usage fees" that are charged for any usage above the scope of the "New offer configuration details".

Note: Private offers have several available configurations.

- The private offer you are accepting might be set up with a fixed future start date.

- If you do not have another active Red Hat OpenShift Service on AWS subscription (a public offer or an older private offer entitlement) at the time of accepting the private offer, accept the private offer itself and continue with the account linking and cluster deployment steps after the specified service start date.

You must have an active Red Hat OpenShift Service on AWS entitlement to complete these steps. Service start dates are always reported in the UTC time zone.

Create or upgrade your contract.

For private offers accepted by an AWS account that does not have Red Hat OpenShift Service on AWS activated yet and is creating the first contract for this service, click the Create contract button.

Figure 3.5. Create contract button

For agreement-based offers, click the Upgrade current contract button shown in Figures 3.4 and 3.6.

Figure 3.6. Upgrade contract button

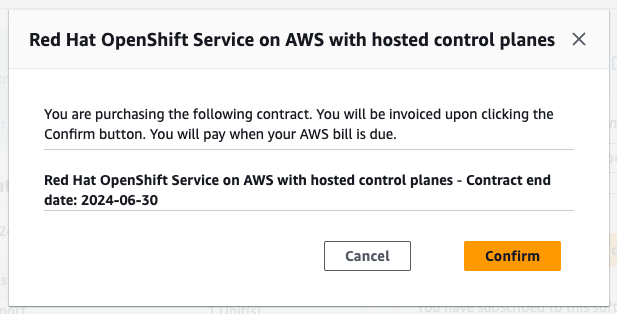

Click Confirm.

Figure 3.7. Private offer acceptance confirmation window

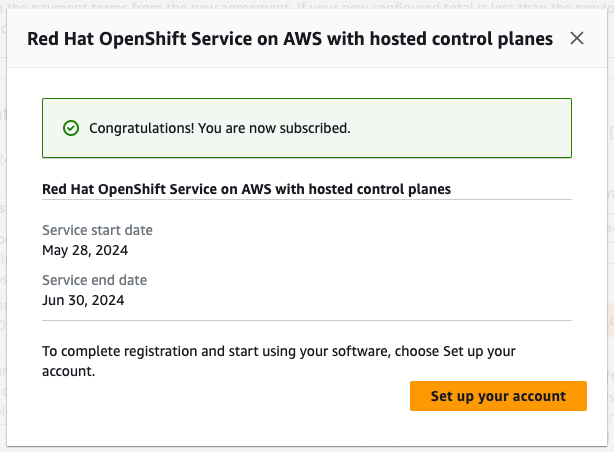

If the accepted private offer service start date is set to be immediately following the offer acceptance, click the Set up your account button in the confirmation modal window.

Figure 3.8. Subscription confirmation

If the accepted private offer has a future start date specified, return to the private offer page after the service start date, and click the Set up your account button to proceed with the Red Hat and AWS account linking.

Note: With no active agreement, the account linking described below is not triggered; the "Account setup" process can be done only after the "Service start date". Service start dates are always in the UTC time zone.

3.2. Sharing a private offer

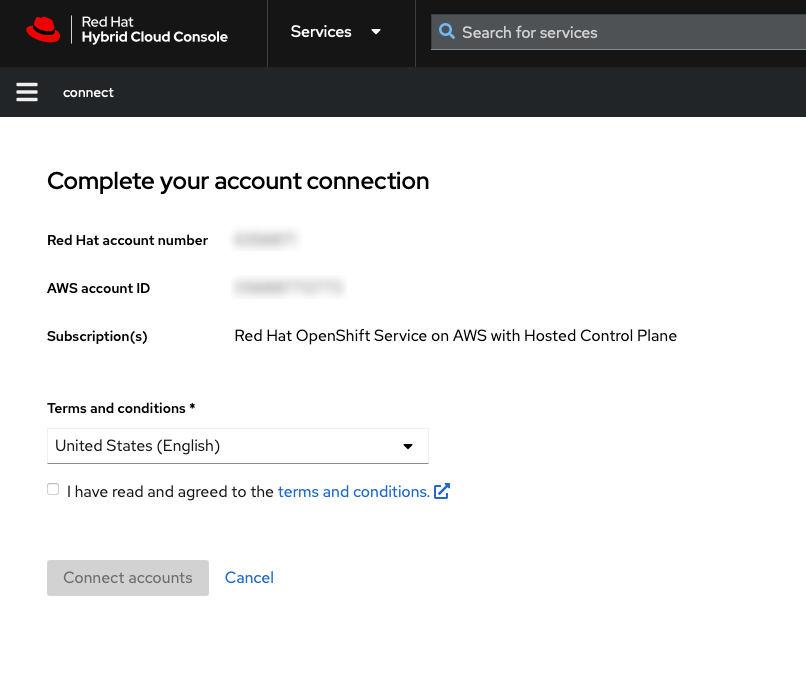

Clicking the Set up your account button in the previous step takes you to the AWS and Red Hat account linking step. At this time, you are already logged in with the AWS account that accepted the offer. If you are not logged in with a Red Hat account, you will be prompted to do so.

The Red Hat OpenShift Service on AWS entitlement is shared with other team members through your Red Hat organization account. All existing users in the same Red Hat organization can select the billing AWS account that accepted the private offer by following the steps described above. When logged in as the Red Hat organization administrator, you can manage users in your Red Hat organization and invite or create new users.

Note: A Red Hat OpenShift Service on AWS private offer cannot be shared with AWS linked accounts through the AWS License Manager.

- Add any users who you want to be able to deploy Red Hat OpenShift Service on AWS clusters. Check this user management FAQ for more details about Red Hat account user management tasks.

- Verify that the already logged in Red Hat account includes all users that are meant to be Red Hat OpenShift Service on AWS cluster deployers benefiting from the accepted private offer.

Verify that the Red Hat account number and the AWS account ID are the desired accounts that are to be linked. This linking is unique and a Red Hat account can be connected only with a single AWS (billing) account.

Figure 3.9. AWS and Red Hat accounts connection

If you want to link the AWS account with a Red Hat account other than the one shown on this page in Figure 3.9, log out from the Red Hat Hybrid Cloud Console before connecting the accounts, and repeat the account setup step by returning to the already accepted private offer URL.

An AWS account can be connected with a single Red Hat account only. Once Red Hat and AWS accounts are connected, this cannot be changed by the user. If a change is needed, the user must create a support ticket.

- Agree to the terms and conditions and then click Connect accounts.

3.3. AWS billing account selection

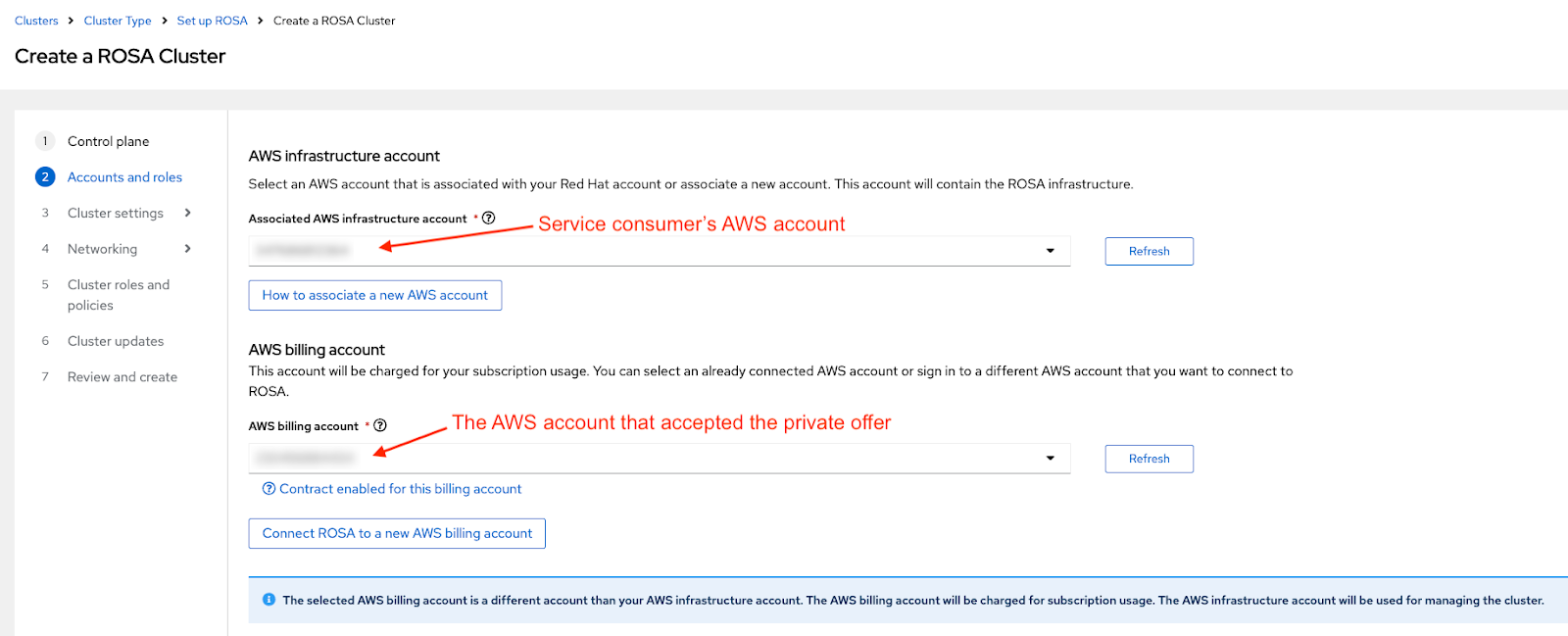

- When deploying Red Hat OpenShift Service on AWS clusters, verify that end users select the AWS billing account that accepted the private offer.

When using the web interface for deploying Red Hat OpenShift Service on AWS, the "Associated AWS infrastructure account" is typically set to the AWS account ID used by the administrator of the cluster that is being created.

- This can be the same AWS account as the billing AWS account.

AWS resources are deployed into this account, and all billing associated with those resources is processed accordingly.

Figure 3.10. Infrastructure and billing AWS account selection during Red Hat OpenShift Service on AWS cluster deployment

- The AWS billing account drop-down in Figure 3.10 should be set to the AWS account that accepted the private offer, provided that the purchased quota is intended to be used by the cluster being created. If different AWS accounts are selected in the infrastructure and billing "roles", the blue informative note visible in Figure 3.10 is shown.

3.4. Troubleshooting

This section covers the most frequent issues associated with private offer acceptance and Red Hat account linking.

3.4.1. Accessing a private offer using a different AWS account

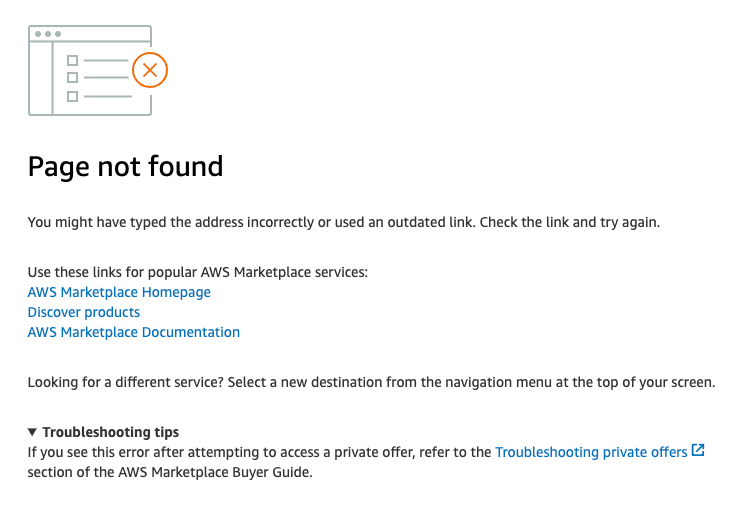

If you try to access the private offer while logged in under an AWS account ID that is not defined in the offer and you see the message shown in Figure 3.11, verify that you are logged in as the desired AWS billing account.

Figure 3.11. HTTP 404 error when using the private offer URL

- Contact the seller if you need the private offer to be extended to another AWS account.

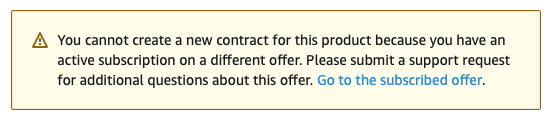

3.4.2. The private offer cannot be accepted because of active subscription

If you try to access a private offer that was created for a first-time Red Hat OpenShift Service on AWS activation while you already have Red Hat OpenShift Service on AWS activated using another public or private offer, and you see the following notice, contact the seller who provided you with the offer.

The seller can provide you with a new offer that will seamlessly replace your current agreement, without a need to cancel your previous subscription.

Figure 3.12. Existing subscription preventing private offer acceptance

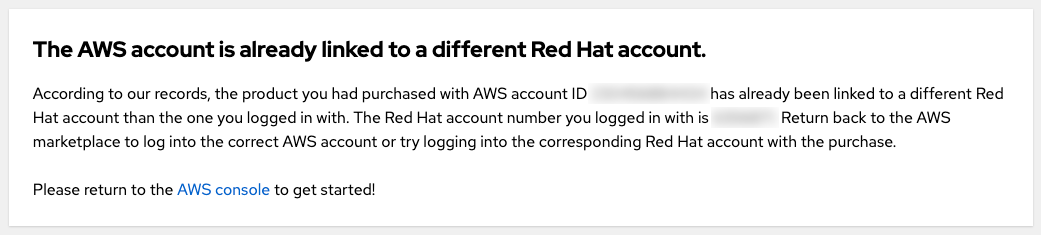

3.4.3. The AWS account is already linked to a different Red Hat account

If you see the error message "AWS account is already linked to a different Red Hat account" when you try to connect the AWS account that accepted the private offer with the currently logged-in Red Hat user, the AWS account is already connected to another Red Hat user.

Figure 3.13. AWS account is already linked to a different Red Hat account

You can either log in using another Red Hat account or another AWS account.

- However, since this guide pertains to private offers, the assumption is that you are logged in with the AWS account that was specified as the buyer and already accepted the private offer so it is intended to be used as the billing account. Logging in as another AWS account is not expected after a private offer was accepted.

- You can still log in with another Red Hat user who is already connected to the AWS account that accepted the private offer. Other Red Hat users belonging to the same Red Hat organization can use the linked AWS account as the AWS billing account for Red Hat OpenShift Service on AWS when creating clusters, as seen in Figure 3.10.

- If you believe that the existing account linking might not be correct, see the "My team members belong to different Red Hat organizations" question below for tips on how you can proceed.

3.4.4. My team members belong to different Red Hat organizations

- An AWS account can be connected to a single Red Hat account only. Any user that wants to create a cluster and benefit from the private offer granted to this AWS account needs to be in the same Red Hat account. This can be achieved by inviting the user to the same Red Hat account and creating a new Red Hat user.

3.4.5. Incorrect AWS billing account was selected when creating a cluster

- If the user selected an incorrect AWS billing account, the fastest way to fix this is to delete the cluster and create a new one, while selecting the correct AWS billing account.

- If this is a production cluster that cannot be easily deleted, please contact Red Hat support to change the billing account for an existing cluster. Expect some turnaround time for this to be resolved.

Chapter 4. Tutorial: Deploying Red Hat OpenShift Service on AWS with a Custom DNS Resolver

A custom DHCP option set enables you to customize your VPC with your own DNS server, domain name, and more. Red Hat OpenShift Service on AWS clusters support using custom DHCP option sets. By default, Red Hat OpenShift Service on AWS clusters require setting the "domain name servers" option to AmazonProvidedDNS to ensure successful cluster creation and operation. Customers who want to use custom DNS servers for DNS resolution must do additional configuration to ensure successful Red Hat OpenShift Service on AWS cluster creation and operation.

In this tutorial, we will configure our DNS server to forward DNS lookups for specific DNS zones (further detailed below) to an Amazon Route 53 Inbound Resolver.

This tutorial uses the open-source BIND DNS server (named) to demonstrate the configuration necessary to forward DNS lookups to an Amazon Route 53 Inbound Resolver located in the VPC you plan to deploy a Red Hat OpenShift Service on AWS cluster into. Refer to the documentation of your preferred DNS server for how to configure zone forwarding.

4.1. Prerequisites

- ROSA CLI (rosa)

- AWS CLI (aws)

- A manually created AWS VPC

- A DHCP option set configured to point to a custom DNS server and set as the default for your VPC

4.2. Setting up your environment

Configure the following environment variables:

$ export VPC_ID=<vpc_ID>
$ export REGION=<region>
$ export VPC_CIDR=<vpc_CIDR>

Replace <vpc_ID>, <region>, and <vpc_CIDR> with the ID, AWS Region, and CIDR range of your VPC.

Ensure all fields output correctly before moving to the next section:

$ echo "VPC ID: ${VPC_ID}, VPC CIDR Range: ${VPC_CIDR}, Region: ${REGION}"
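The echo check above relies on reading the output by eye. A minimal sketch of the same check as a shell function, assuming bash (it uses indirect expansion). It only checks that the values are non-empty, that VPC_CIDR contains a slash, and that no <placeholder> was left in place; it does not query AWS.

```shell
#!/usr/bin/env bash
# Sanity-check the variables exported above before running any aws commands.
# Prints one line per problem to stderr and returns non-zero on any problem.
check_rosa_vpc_env() {
  local status=0 name
  for name in VPC_ID REGION VPC_CIDR; do
    # ${!name} is bash indirect expansion: the value of the variable named $name.
    if [ -z "${!name:-}" ]; then
      echo "missing: ${name}" >&2
      status=1
    fi
  done
  # A CIDR block looks like a.b.c.d/nn; this is a shape check, not full validation.
  case "${VPC_CIDR:-}" in
    */*) ;;
    *) echo "VPC_CIDR does not look like a CIDR block: '${VPC_CIDR:-}'" >&2
       status=1 ;;
  esac
  # Unreplaced placeholders such as <vpc_ID> are a common copy-paste mistake.
  case "${VPC_ID:-}${REGION:-}${VPC_CIDR:-}" in
    *'<'*|*'>'*) echo "a value still contains a <placeholder>" >&2
                 status=1 ;;
  esac
  return "$status"
}
```

For example, run check_rosa_vpc_env && echo "environment OK" before continuing.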

4.3. Create an Amazon Route 53 Inbound Resolver

Use the following procedure to deploy an Amazon Route 53 Inbound Resolver in the VPC we plan to deploy the cluster into.

In this example, we deploy the Amazon Route 53 Inbound Resolver into the same VPC the cluster will use. If you want to deploy it into a separate VPC, you must manually associate the private hosted zone(s) detailed below once cluster creation is started. You cannot associate the zone before the cluster creation process begins. Failure to associate the private hosted zone during the cluster creation process will result in cluster creation failures.

Create a security group and allow access to ports 53/tcp and 53/udp from the VPC:

$ SG_ID=$(aws ec2 create-security-group --group-name rosa-inbound-resolver --description "Security group for ROSA inbound resolver" --vpc-id ${VPC_ID} --region ${REGION} --query 'GroupId' --output text)
$ aws ec2 authorize-security-group-ingress --group-id ${SG_ID} --protocol tcp --port 53 --cidr ${VPC_CIDR} --region ${REGION}
$ aws ec2 authorize-security-group-ingress --group-id ${SG_ID} --protocol udp --port 53 --cidr ${VPC_CIDR} --region ${REGION}

Create an Amazon Route 53 Inbound Resolver in your VPC:
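A minimal sketch of this step, assuming bash, the SG_ID variable from the previous step, and that the endpoint should get one dynamically allocated IP address in every subnet of the VPC. The endpoint name rosa-inbound-resolver and the creator request ID format are arbitrary choices for illustration.

```shell
#!/usr/bin/env bash
# Sketch: create an inbound Route 53 Resolver endpoint with one dynamically
# allocated IP address in every subnet of ${VPC_ID}.
# Assumes VPC_ID, REGION and SG_ID are set as in the previous steps.

# Print one --ip-addresses entry ("SubnetId=<id>") per subnet ID argument.
build_ip_address_args() {
  local subnet
  for subnet in "$@"; do
    printf 'SubnetId=%s\n' "$subnet"
  done
}

create_inbound_resolver() {
  local subnets
  # All subnet IDs in the VPC, separated by whitespace.
  subnets=$(aws ec2 describe-subnets \
    --filters "Name=vpc-id,Values=${VPC_ID}" \
    --query 'Subnets[*].SubnetId' \
    --region "${REGION}" --output text) || return 1

  # Unquoted expansions are intentional: one argument per subnet entry.
  # The endpoint name and creator request ID format are arbitrary choices.
  # shellcheck disable=SC2046,SC2086
  aws route53resolver create-resolver-endpoint \
    --name rosa-inbound-resolver \
    --creator-request-id "rosa-inbound-resolver-$(date +%s)" \
    --security-group-ids "${SG_ID}" \
    --direction INBOUND \
    --ip-addresses $(build_ip_address_args ${subnets}) \
    --region "${REGION}" \
    --query 'ResolverEndpoint.Id' --output text
}
```

Run it as RESOLVER_ID=$(create_inbound_resolver) so that the endpoint ID is available to the later list-resolver-endpoint-ip-addresses command. Route 53 Resolver expects at least two IP addresses per endpoint, so this sketch assumes the VPC has at least two subnets, ideally in different Availability Zones.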

Note: The above command attaches Amazon Route 53 Inbound Resolver endpoints to all subnets in the provided VPC using dynamically allocated IP addresses. If you prefer to manually specify the subnets and/or IP addresses, run the following command instead:

Replace <subnet_ID> with the subnet IDs and <endpoint_IP> with the static IP addresses you want inbound resolver endpoints added to.
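For the static variant, each subnet and IP pair is passed as a SubnetId=<subnet_ID>,Ip=<endpoint_IP> entry of the --ip-addresses option. A small helper can build those entries; it assumes bash and a made-up <subnet_ID>:<endpoint_IP> argument format chosen for this sketch.

```shell
#!/usr/bin/env bash
# Sketch: build --ip-addresses entries for static endpoint IPs.
# Each argument is "<subnet_ID>:<endpoint_IP>" (a format invented for this
# helper); the output is one "SubnetId=<subnet_ID>,Ip=<endpoint_IP>" per line.
build_static_ip_args() {
  local pair subnet ip
  for pair in "$@"; do
    subnet=${pair%%:*}   # text before the first colon
    ip=${pair#*:}        # text after the first colon
    # Reject arguments without a colon or with an empty half.
    if [ -z "$subnet" ] || [ -z "$ip" ] || [ "$subnet" = "$pair" ]; then
      echo "expected <subnet_ID>:<endpoint_IP>, got '${pair}'" >&2
      return 1
    fi
    printf 'SubnetId=%s,Ip=%s\n' "$subnet" "$ip"
  done
}
```

For example, build_static_ip_args subnet-0aaa:10.0.1.10 subnet-0bbb:10.0.2.10 prints SubnetId=subnet-0aaa,Ip=10.0.1.10 and SubnetId=subnet-0bbb,Ip=10.0.2.10 on separate lines, which can be passed unquoted as $(build_static_ip_args <pairs>) after --ip-addresses in the create-resolver-endpoint command.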

Get the IP addresses of your inbound resolver endpoints to configure in your DNS server configuration:

$ aws route53resolver list-resolver-endpoint-ip-addresses \
    --resolver-endpoint-id ${RESOLVER_ID} \
    --region=${REGION} \
    --query 'IpAddresses[*].Ip'

Example output

[
    "10.0.45.253",
    "10.0.23.131",
    "10.0.148.159"
]
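Before copying these addresses into the DNS server configuration, it can help to capture them in a variable and sanity-check them. A minimal sketch, assuming bash; with --output text instead of the default JSON, the AWS CLI prints the IPs separated by tabs, which the shell then splits into separate arguments.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the inbound resolver endpoint IPs.
# Usage (assumes RESOLVER_ID and REGION are set):
#   RESOLVER_IPS=$(aws route53resolver list-resolver-endpoint-ip-addresses \
#     --resolver-endpoint-id "${RESOLVER_ID}" --region "${REGION}" \
#     --query 'IpAddresses[*].Ip' --output text)
#   check_resolver_ips ${RESOLVER_IPS}   # unquoted on purpose: one arg per IP
check_resolver_ips() {
  local ip
  # Resolver endpoints are expected to have at least two IP addresses.
  if [ "$#" -lt 2 ]; then
    echo "expected at least 2 resolver endpoint IPs, got $#" >&2
    return 1
  fi
  for ip in "$@"; do
    case "$ip" in
      ''|*[!0-9.]*|*.*.*.*.*) ;;  # empty, non-numeric, or more than three dots
      *.*.*.*) continue ;;         # exactly three dots: looks like IPv4
    esac
    echo "not an IPv4 address: '${ip}'" >&2
    return 1
  done
  echo "OK: $# resolver endpoint IPs"
}
```

The shape check is deliberately rough; it catches empty output and copy-paste mistakes rather than fully validating IPv4 syntax.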

4.4. Configure your DNS server

Use the following procedure to configure your DNS server to forward the necessary private hosted zones to your Amazon Route 53 Inbound Resolver.

4.4.1. Red Hat OpenShift Service on AWS

Red Hat OpenShift Service on AWS clusters require you to configure DNS forwarding for two private hosted zones:

- <cluster-name>.hypershift.local

- rosa.<domain-prefix>.<unique-ID>.p3.openshiftapps.com

These Amazon Route 53 private hosted zones are created during cluster creation. The cluster-name and domain-prefix are customer-specified values, but the unique-ID is randomly generated during cluster creation and cannot be preselected. As such, you must wait for the cluster creation process to begin before configuring forwarding for the p3.openshiftapps.com private hosted zone.

Before the cluster is created, configure your DNS server to forward all DNS requests for <cluster-name>.hypershift.local to your Amazon Route 53 Inbound Resolver endpoints. For BIND DNS servers, edit your /etc/named.conf file in your favorite text editor and add a new zone using the below example:
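A minimal sketch that prints such a BIND zone statement for a given zone name and the resolver endpoint IPs, assuming bash. The forward only setting (answer this zone only through the forwarders, with no fallback to normal recursion) is a reasonable choice here but an assumption, as is the CLUSTER_NAME variable used in the usage note.

```shell
#!/usr/bin/env bash
# Sketch: print a BIND forward zone statement.
# Arguments: <zone_name> <resolver_ip> [<resolver_ip> ...]
bind_forward_zone() {
  local zone="$1" ip
  shift
  if [ "$#" -eq 0 ]; then
    echo "usage: bind_forward_zone <zone_name> <resolver_ip>..." >&2
    return 1
  fi
  printf 'zone "%s" {\n' "$zone"
  printf '    type forward;\n'
  printf '    forward only;\n'      # do not fall back to normal recursion
  printf '    forwarders {'
  for ip in "$@"; do
    printf ' %s;' "$ip"             # each forwarder ends with a semicolon
  done
  printf ' };\n'
  printf '};\n'
}
```

For example, bind_forward_zone "${CLUSTER_NAME}.hypershift.local" ${RESOLVER_IPS} | sudo tee -a /etc/named.conf appends the statement, after which sudo named-checkconf validates the file and sudo rndc reload loads it.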

Create your cluster.

Once your cluster has begun the creation process, locate the newly created private hosted zone:

$ aws route53 list-hosted-zones-by-vpc \
    --vpc-id ${VPC_ID} \
    --vpc-region ${REGION} \
    --query 'HostedZoneSummaries[*].Name' \
    --output table

Note: It may take a few minutes for the cluster creation process to create the private hosted zones in Route 53. If you do not see a p3.openshiftapps.com domain, wait a few minutes and run the command again.

Once you know the unique ID of the cluster domain, configure your DNS server to forward all DNS requests for rosa.<domain-prefix>.<unique-ID>.p3.openshiftapps.com to your Amazon Route 53 Inbound Resolver endpoints. For BIND DNS servers, edit your /etc/named.conf file in your favorite text editor and add a new zone using the below example:

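Because the unique ID is generated during cluster creation, finding the p3.openshiftapps.com zone name can be scripted instead of rerunning the list command by hand. A minimal sketch, assuming bash, the VPC_ID and REGION variables from earlier, and a polling interval of 60 seconds chosen arbitrarily.

```shell
#!/usr/bin/env bash
# Sketch: extract the rosa.<domain-prefix>.<unique-ID>.p3.openshiftapps.com
# zone name from hosted zone names read on stdin (whitespace-separated, as
# printed by --output text). Route 53 returns zone names with a trailing dot,
# which is stripped. Returns non-zero if no such zone is present yet.
find_p3_zone() {
  local zone
  zone=$(tr -s ' \t' '\n\n' | sed 's/\.$//' \
    | grep -E '\.p3\.openshiftapps\.com$' | head -n 1)
  [ -n "$zone" ] && printf '%s\n' "$zone"
}

# Poll Route 53 until the cluster creation process has created the zone.
# Usage: P3_ZONE=$(wait_for_p3_zone)
wait_for_p3_zone() {
  local zone
  until zone=$(aws route53 list-hosted-zones-by-vpc \
      --vpc-id "${VPC_ID}" --vpc-region "${REGION}" \
      --query 'HostedZoneSummaries[*].Name' --output text | find_p3_zone); do
    echo "p3.openshiftapps.com zone not found yet; retrying in 60 seconds" >&2
    sleep 60
  done
  printf '%s\n' "$zone"
}
```

Once P3_ZONE is set, the forward zone for it is added in the same way as the <cluster-name>.hypershift.local zone.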

Chapter 5. Tutorial: Using AWS WAF and Amazon CloudFront to protect Red Hat OpenShift Service on AWS workloads

AWS WAF is a web application firewall that lets you monitor the HTTP and HTTPS requests that are forwarded to your protected web application resources.

You can use Amazon CloudFront to add a Web Application Firewall (WAF) to your Red Hat OpenShift Service on AWS workloads. Using an external solution protects Red Hat OpenShift Service on AWS resources from experiencing denial of service due to handling the WAF.

WAFv1 (WAF Classic) is no longer supported. Use WAFv2.

5.1. Prerequisites

- A Red Hat OpenShift Service on AWS cluster.

- You have access to the OpenShift CLI (oc).

- You have access to the AWS CLI (aws).

5.1.1. Environment setup

Prepare the environment variables:

Replace the domain value with the custom domain you want to use for the IngressController.

Note: The "Cluster" output from the previous command might be the name of your cluster, the internal ID of your cluster, or the cluster's domain prefix. If you prefer to use another identifier, you can manually set this value by running the following command:

$ export CLUSTER=my-custom-value

5.2. Setting up the secondary ingress controller

You must configure a secondary ingress controller to separate your external WAF-protected traffic from your standard (default) cluster ingress controller.

Prerequisites

A publicly trusted SAN or wildcard certificate for your custom domain, such as CN=*.apps.example.com

Important: Amazon CloudFront uses HTTPS to communicate with your cluster's secondary ingress controller. As explained in the Amazon CloudFront documentation, you cannot use a self-signed certificate for HTTPS communication between CloudFront and your cluster. Amazon CloudFront verifies that the certificate was issued by a trusted certificate authority.

Procedure

Create a new TLS secret from a private key and a public certificate, where fullchain.pem is your full wildcard certificate chain (including any intermediaries) and privkey.pem is your wildcard certificate’s private key.

Example

$ oc -n openshift-ingress create secret tls waf-tls --cert=fullchain.pem --key=privkey.pem

Create a new IngressController resource in a file named waf-ingress-controller.yaml:
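A minimal sketch of waf-ingress-controller.yaml follows. It assumes the controller is named cloudfront-waf (which yields the router-cloudfront-waf service checked later), serves ${DOMAIN}, uses the waf-tls secret, publishes an external NLB, and admits only routes labeled route=waf. DNS management is set to Unmanaged on the assumption that your wildcard record points at CloudFront rather than at the NLB; dnsManagementPolicy needs a recent OpenShift release, so drop it if your cluster rejects the field. The helper function name is hypothetical.

```shell
# Sketch: print a secondary IngressController manifest for the WAF-protected domain.
render_waf_ingress_controller() {
  cat <<EOF
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: cloudfront-waf
  namespace: openshift-ingress-operator
spec:
  domain: ${DOMAIN}
  defaultCertificate:
    name: waf-tls
  endpointPublishingStrategy:
    type: LoadBalancerService
    loadBalancer:
      scope: External
      dnsManagementPolicy: Unmanaged
      providerParameters:
        type: AWS
        aws:
          type: NLB
  routeSelector:
    matchLabels:
      route: waf
EOF
}

# Usage: render_waf_ingress_controller > waf-ingress-controller.yaml
```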

Apply the IngressController:

Example

$ oc apply -f waf-ingress-controller.yaml

Verify that your IngressController has successfully created an external load balancer:

$ oc -n openshift-ingress get service/router-cloudfront-waf

Example output

NAME                    TYPE           CLUSTER-IP      EXTERNAL-IP                                                                     PORT(S)                      AGE
router-cloudfront-waf   LoadBalancer   172.30.16.141   a68a838a7f26440bf8647809b61c4bc8-4225395f488830bd.elb.us-east-1.amazonaws.com   80:30606/TCP,443:31065/TCP   2m19s

5.2.1. Configure the AWS WAF

The AWS WAF service is a web application firewall that lets you monitor, protect, and control the HTTP and HTTPS requests that are forwarded to your protected web application resources, such as applications running on Red Hat OpenShift Service on AWS.

Create an AWS WAF rules file to apply to your web ACL:
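One possible rules file, sketched under the assumption that it is stored at ${SCRATCH}/waf-rules.json, references the AWS managed rule groups AWSManagedRulesCommonRuleSet and AWSManagedRulesSQLiRuleSet. The rule and metric names are illustrative and the helper function is hypothetical. Setting OverrideAction to None keeps each managed group's own block decisions.

```shell
# Sketch: print a WAFv2 rules array that attaches two AWS managed rule groups.
render_waf_rules() {
  cat <<'EOF'
[
  {
    "Name": "AWS-AWSManagedRulesCommonRuleSet",
    "Priority": 0,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesCommonRuleSet"
      }
    },
    "OverrideAction": { "None": {} },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesCommonRuleSet"
    }
  },
  {
    "Name": "AWS-AWSManagedRulesSQLiRuleSet",
    "Priority": 1,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesSQLiRuleSet"
      }
    },
    "OverrideAction": { "None": {} },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesSQLiRuleSet"
    }
  }
]
EOF
}

# Usage: render_waf_rules > "${SCRATCH}/waf-rules.json"
```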

This rules file enables the Core (Common) and SQL database AWS managed rule groups.

Create an AWS WAF web ACL that uses the rules you specified above:
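A sketch of the web ACL creation with the AWS CLI, assuming CLUSTER and SCRATCH are set and the rules file is at ${SCRATCH}/waf-rules.json. The ACL is named cloudfront-waf so that it matches the web ACL selected in the CloudFront distribution settings. AWS requires CLOUDFRONT-scoped WAFv2 resources to be managed in us-east-1, regardless of where the cluster runs. The function name and metric name are hypothetical.

```shell
# Sketch: create a CloudFront-scoped WAFv2 web ACL and print its ARN.
create_cloudfront_web_acl() {
  aws wafv2 create-web-acl \
    --name cloudfront-waf \
    --scope CLOUDFRONT \
    --region us-east-1 \
    --default-action Allow={} \
    --visibility-config "SampledRequestsEnabled=true,CloudWatchMetricsEnabled=true,MetricName=${CLUSTER}-waf-metrics" \
    --rules "file://${SCRATCH}/waf-rules.json" \
    --query 'Summary.ARN' \
    --output text
}

# Usage: create_cloudfront_web_acl
```

The default action allows traffic, so only requests matched by the managed rule groups are blocked.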


5.3. Configure Amazon CloudFront

Retrieve the newly created custom ingress controller’s NLB hostname:

$ NLB=$(oc -n openshift-ingress get service router-cloudfront-waf \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

Import your certificate into AWS Certificate Manager (ACM), where cert.pem is your wildcard certificate, fullchain.pem is your wildcard certificate’s chain, and privkey.pem is your wildcard certificate’s private key.

Note: Regardless of the region your cluster is deployed in, you must import this certificate to us-east-1, because Amazon CloudFront is a global AWS service.

Example

$ aws acm import-certificate --certificate file://cert.pem \
    --certificate-chain file://fullchain.pem \
    --private-key file://privkey.pem \
    --region us-east-1

Log in to the AWS console to create a CloudFront distribution.

Configure the CloudFront distribution by using the following information:

Note: If an option is not specified in the following table, leave it at its default value (which might be blank).

| Option | Value |
|---|---|
| Origin domain | Output from the previous command [1] |
| Name | rosa-waf-ingress [2] |
| Viewer protocol policy | Redirect HTTP to HTTPS |
| Allowed HTTP methods | GET, HEAD, OPTIONS, PUT, POST, PATCH, DELETE |
| Cache policy | CachingDisabled |
| Origin request policy | AllViewer |
| Web Application Firewall (WAF) | Enable security protections |
| Use existing WAF configuration | true |
| Choose a web ACL | cloudfront-waf |
| Alternate domain name (CNAME) | *.apps.example.com [3] |
| Custom SSL certificate | Select the certificate you imported from the step above [4] |

- [1] Run echo ${NLB} to get the origin domain.
- [2] If you have multiple clusters, ensure the origin name is unique.
- [3] This should match the wildcard domain you used to create the custom ingress controller.
- [4] This should match the alternate domain name entered above.

Retrieve the Amazon CloudFront Distribution endpoint:

$ aws cloudfront list-distributions --query "DistributionList.Items[?Origins.Items[?DomainName=='${NLB}']].DomainName" --output text

Update the DNS of your custom wildcard domain with a CNAME to the Amazon CloudFront distribution endpoint from the step above.

Example

*.apps.example.com CNAME d1b2c3d4e5f6g7.cloudfront.net

5.4. Deploy a sample application

Create a new project for your sample application by running the following command:

$ oc new-project hello-world

Deploy a hello world application:

$ oc -n hello-world new-app --image=docker.io/openshift/hello-openshift

Create a route for the application specifying your custom domain name:

Example

$ oc -n hello-world create route edge --service=hello-openshift hello-openshift-tls \
    --hostname hello-openshift.${DOMAIN}

Label the route to admit it to your custom ingress controller:

$ oc -n hello-world label route.route.openshift.io/hello-openshift-tls route=waf

5.5. Test the WAF

Test that the app is accessible behind Amazon CloudFront:

Example

$ curl "https://hello-openshift.${DOMAIN}"

Example output

Hello OpenShift!

Test that the WAF denies a bad request:

Example

$ curl -X POST "https://hello-openshift.${DOMAIN}" \
    -F "user='<script><alert>Hello></alert></script>'"

The expected result is a 403 ERROR, which means the AWS WAF is protecting your application.

Chapter 6. Tutorial: Using AWS WAF and AWS ALBs to protect Red Hat OpenShift Service on AWS workloads

AWS WAF is a web application firewall that lets you monitor the HTTP and HTTPS requests that are forwarded to your protected web application resources.

You can use an AWS Application Load Balancer (ALB) to add a web application firewall (WAF) to your Red Hat OpenShift Service on AWS workloads. Using an external solution keeps WAF processing off the cluster, which protects Red Hat OpenShift Service on AWS resources from denial of service caused by handling the WAF.

Use the more flexible CloudFront method unless you specifically need an ALB-based solution.

6.1. Prerequisites

- A multiple availability zone (AZ) Red Hat OpenShift Service on AWS cluster.

  Note: AWS ALBs require at least two public subnets across AZs, per the AWS documentation. For this reason, only multiple AZ Red Hat OpenShift Service on AWS clusters can be used with ALBs.

- You have access to the OpenShift CLI (oc).
- You have access to the AWS CLI (aws).

6.1.1. Environment setup

Prepare the environment variables:
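The original variable block is not reproduced here. A sketch, assuming the names used by later commands (CLUSTER, REGION, SCRATCH) plus OIDC_ENDPOINT and AWS_ACCOUNT_ID, which an IAM trust policy for the Operator's role would need. Values already set in your shell are kept, and the function name is hypothetical.

```shell
# Sketch: environment for the ALB + WAF tutorial.
setup_alb_waf_env() {
  if [ -z "${CLUSTER:-}" ]; then
    CLUSTER=$(oc get infrastructure cluster -o jsonpath='{.status.infrastructureName}')
  fi
  if [ -z "${REGION:-}" ]; then
    REGION=$(oc get infrastructure cluster -o jsonpath='{.status.platformStatus.aws.region}')
  fi
  if [ -z "${OIDC_ENDPOINT:-}" ]; then
    # Service account issuer URL with the https:// prefix removed
    OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster \
      -o jsonpath='{.spec.serviceAccountIssuer}' | sed 's|^https://||')
  fi
  if [ -z "${AWS_ACCOUNT_ID:-}" ]; then
    AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
  fi
  export CLUSTER REGION OIDC_ENDPOINT AWS_ACCOUNT_ID
  export AWS_PAGER=""
  export SCRATCH="/tmp/${CLUSTER}/alb-waf"
  mkdir -p "${SCRATCH}"
  echo "Cluster: ${CLUSTER}, Region: ${REGION}, OIDC: ${OIDC_ENDPOINT}, Account: ${AWS_ACCOUNT_ID}"
}

# Run it in your current shell: setup_alb_waf_env
```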


6.1.2. AWS VPC and subnets

This section only applies to clusters that were deployed into existing VPCs. If you did not deploy your cluster into an existing VPC, skip this section and proceed to the next section.

Set the following variables to the correct values for your Red Hat OpenShift Service on AWS deployment:

$ export VPC_ID=<vpc-id>
$ export PUBLIC_SUBNET_IDS=(<space-separated-list-of-ids>)
$ export PRIVATE_SUBNET_IDS=(<space-separated-list-of-ids>)

- VPC_ID: Replace with the VPC ID of the cluster, for example: export VPC_ID=vpc-04c429b7dbc4680ba.
- PUBLIC_SUBNET_IDS: Replace with a space-separated list of the public subnet IDs of the cluster, making sure to preserve the (). For example: export PUBLIC_SUBNET_IDS=(subnet-056fd6861ad332ba2 subnet-08ce3b4ec753fe74c subnet-071aa28228664972f).
- PRIVATE_SUBNET_IDS: Replace with a space-separated list of the private subnet IDs of the cluster, making sure to preserve the (). For example: export PRIVATE_SUBNET_IDS=(subnet-0b933d72a8d72c36a subnet-0817eb72070f1d3c2 subnet-0806e64159b66665a).

Add a tag to your cluster’s VPC with the cluster identifier:

$ aws ec2 create-tags --resources ${VPC_ID} \
    --tags Key=kubernetes.io/cluster/${CLUSTER},Value=shared --region ${REGION}

Add a tag to your public subnets. Expanding the array as "${PUBLIC_SUBNET_IDS[@]}" passes every subnet ID; ${PUBLIC_SUBNET_IDS} alone expands to only the first ID in bash:

$ aws ec2 create-tags \
    --resources "${PUBLIC_SUBNET_IDS[@]}" \
    --tags Key=kubernetes.io/role/elb,Value='1' \
    Key=kubernetes.io/cluster/${CLUSTER},Value=shared \
    --region ${REGION}

Add a tag to your private subnets:

$ aws ec2 create-tags \
    --resources "${PRIVATE_SUBNET_IDS[@]}" \
    --tags Key=kubernetes.io/role/internal-elb,Value='1' \
    Key=kubernetes.io/cluster/${CLUSTER},Value=shared \
    --region ${REGION}

6.2. Deploy the AWS Load Balancer Operator

The AWS Load Balancer Operator is used to install, manage, and configure an instance of aws-load-balancer-controller in a Red Hat OpenShift Service on AWS cluster. To deploy ALBs in Red Hat OpenShift Service on AWS, you must first deploy the AWS Load Balancer Operator.

Create a new project to deploy the AWS Load Balancer Operator into by running the following command:

$ oc new-project aws-load-balancer-operator

Check whether an AWS IAM policy for the AWS Load Balancer Controller already exists, and create one if it does not:

Note: The policy is sourced from the upstream AWS Load Balancer Controller policy. The Operator requires it to function.

$ POLICY_ARN=$(aws iam list-policies --query \
    "Policies[?PolicyName=='aws-load-balancer-operator-policy'].{ARN:Arn}" \
    --output text)

Create an AWS IAM trust policy for AWS Load Balancer Operator:
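A sketch of ${SCRATCH}/trust-policy.json, the file the create-role command that follows reads. It assumes OIDC_ENDPOINT (the service account issuer without https://) and AWS_ACCOUNT_ID are set, and that the Operator and controller run under the service accounts aws-load-balancer-operator-controller-manager and aws-load-balancer-controller-cluster in the aws-load-balancer-operator namespace; verify these names on your cluster. The function name is hypothetical.

```shell
# Sketch: trust policy that lets the two service accounts assume the role via OIDC.
render_alb_trust_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_ENDPOINT}:sub": [
            "system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-operator-controller-manager",
            "system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-controller-cluster"
          ]
        }
      }
    }
  ]
}
EOF
}

# Usage: render_alb_trust_policy > "${SCRATCH}/trust-policy.json"
```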

Create an AWS IAM role for the AWS Load Balancer Operator:

$ ROLE_ARN=$(aws iam create-role --role-name "${CLUSTER}-alb-operator" \
    --assume-role-policy-document "file://${SCRATCH}/trust-policy.json" \
    --query Role.Arn --output text)

Attach the AWS Load Balancer Operator policy to the IAM role you created previously by running the following command:

$ aws iam attach-role-policy --role-name "${CLUSTER}-alb-operator" \
    --policy-arn ${POLICY_ARN}

Create a secret for the AWS Load Balancer Operator to assume your newly created AWS IAM role:
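A sketch of the credentials secret, assuming ROLE_ARN holds the role created above and the secret is named ${CLUSTER}-aws-load-balancer-operator (a hypothetical name that the controller instance must then reference). The web_identity_token_file path is the projected service account token path used on OpenShift STS clusters.

```shell
# Sketch: Secret with an AWS shared-config profile that assumes ROLE_ARN via web identity.
render_alb_credentials_secret() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${CLUSTER}-aws-load-balancer-operator
  namespace: aws-load-balancer-operator
stringData:
  credentials: |
    [default]
    role_arn = ${ROLE_ARN}
    web_identity_token_file = /var/run/secrets/openshift/serviceaccount/token
EOF
}

# Usage: render_alb_credentials_secret | oc apply -f -
```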

Install the AWS Load Balancer Operator:
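A sketch of the OperatorGroup and Subscription, assuming the aws-load-balancer-operator package from the redhat-operators catalog. The channel comes from the hypothetical ALB_OPERATOR_CHANNEL variable or, if that is unset, from the package's default channel. Passing ROLE_ARN through the ROLEARN environment variable follows the pattern documented for installing the Operator on STS clusters; confirm it against the documentation for your Operator version.

```shell
# Sketch: OperatorGroup + Subscription for the AWS Load Balancer Operator.
render_alb_operator_subscription() {
  channel="${ALB_OPERATOR_CHANNEL:-}"
  if [ -z "$channel" ]; then
    # Fall back to the default channel advertised by the catalog
    channel=$(oc get packagemanifest aws-load-balancer-operator -n openshift-marketplace \
      -o jsonpath='{.status.defaultChannel}')
  fi
  cat <<EOF
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
spec:
  upgradeStrategy: Default
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
spec:
  channel: ${channel}
  installPlanApproval: Automatic
  name: aws-load-balancer-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  config:
    env:
      - name: ROLEARN
        value: "${ROLE_ARN}"
EOF
}

# Usage: render_alb_operator_subscription | oc apply -f -
```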

Deploy an instance of the AWS Load Balancer Controller using the Operator:

Note: If you get an error here, the Operator has not finished installing yet. Wait a minute and try again.
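A sketch of the controller instance, assuming the Operator reconciles an AWSLoadBalancerController named cluster (consistent with the aws-load-balancer-controller-cluster pod in the next step) and that the credentials secret is named ${CLUSTER}-aws-load-balancer-operator. Enabling the AWSWAFv2 add-on is assumed to be needed for the wafv2-acl-arn annotation used later.

```shell
# Sketch: AWSLoadBalancerController instance with the WAFv2 add-on enabled.
render_alb_controller() {
  cat <<EOF
apiVersion: networking.olm.openshift.io/v1
kind: AWSLoadBalancerController
metadata:
  name: cluster
spec:
  credentials:
    name: ${CLUSTER}-aws-load-balancer-operator
  enabledAddons:
    - AWSWAFv2
EOF
}

# Usage: render_alb_controller | oc apply -f -
```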

Check that the Operator and controller pods are both running:

$ oc -n aws-load-balancer-operator get pods

You should see output similar to the following. If you do not, wait a moment and try again:

NAME                                                             READY   STATUS    RESTARTS   AGE
aws-load-balancer-controller-cluster-6ddf658785-pdp5d            1/1     Running   0          99s
aws-load-balancer-operator-controller-manager-577d9ffcb9-w6zqn   2/2     Running   0          2m4s

6.3. Deploy a sample application

Create a new project for your sample application:

$ oc new-project hello-world

Deploy a hello world application:

$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Convert the pre-created service resource to a NodePort service type:

$ oc -n hello-world patch service hello-openshift -p '{"spec":{"type":"NodePort"}}'

Deploy an AWS ALB using the AWS Load Balancer Operator:
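A sketch of an Ingress that the controller would turn into an internet-facing ALB, assuming the default alb IngressClass and the hello-openshift service listening on port 8080. The Ingress name hello-openshift-alb matches the name queried in the next step; the function name is hypothetical.

```shell
# Sketch: Ingress routed through the alb IngressClass to the NodePort service.
render_hello_alb_ingress() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-openshift-alb
  namespace: hello-world
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: hello-openshift
                port:
                  number: 8080
EOF
}

# Usage: render_hello_alb_ingress | oc apply -f -
```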

Curl the AWS ALB Ingress endpoint to verify that the hello world application is accessible:

Note: AWS ALB provisioning takes a few minutes. If you receive an error that says curl: (6) Could not resolve host, wait and try again.

$ INGRESS=$(oc -n hello-world get ingress hello-openshift-alb -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

6.3.1. Configure the AWS WAF

The AWS WAF service is a web application firewall that lets you monitor, protect, and control the HTTP and HTTPS requests that are forwarded to your protected web application resources, such as applications running on Red Hat OpenShift Service on AWS.

Create an AWS WAF rules file to apply to your web ACL:

This rules file enables the Core (Common) and SQL database AWS managed rule groups.

Create an AWS WAF web ACL that uses the rules you specified above:
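A sketch of a regional web ACL, the scope AWS uses for ALBs, assuming CLUSTER, REGION, and SCRATCH are set and the rules file is at ${SCRATCH}/waf-rules.json. The ACL name ${CLUSTER}-waf and the metric name are hypothetical. The function prints the ACL's ARN, which the annotation step expects in WAF_ARN.

```shell
# Sketch: create a REGIONAL WAFv2 web ACL in the cluster's region and print its ARN.
create_alb_web_acl() {
  aws wafv2 create-web-acl \
    --name "${CLUSTER}-waf" \
    --scope REGIONAL \
    --region "${REGION}" \
    --default-action Allow={} \
    --visibility-config "SampledRequestsEnabled=true,CloudWatchMetricsEnabled=true,MetricName=${CLUSTER}-waf-metrics" \
    --rules "file://${SCRATCH}/waf-rules.json" \
    --query 'Summary.ARN' \
    --output text
}

# Usage: WAF_ARN=$(create_alb_web_acl)
```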

Annotate the Ingress resource with the AWS WAF web ACL ARN:

$ oc annotate -n hello-world ingress.networking.k8s.io/hello-openshift-alb \
    alb.ingress.kubernetes.io/wafv2-acl-arn=${WAF_ARN}

Wait 10 seconds for the rules to propagate, and then test that the app still works:

$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

Test that the WAF denies a bad request:

$ curl -X POST "http://${INGRESS}" \
    -F "user='<script><alert>Hello></alert></script>'"

Note: Activation of the AWS WAF integration can sometimes take several minutes. If you do not receive a 403 Forbidden error, wait a few seconds and try again.

The expected result is a 403 Forbidden error, which means the AWS WAF is protecting your application.

Chapter 7. Tutorial: Deploying OpenShift API for Data Protection on a Red Hat OpenShift Service on AWS cluster

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Prerequisites

Environment

Prepare the environment variables:

Note: Change the cluster name to match your Red Hat OpenShift Service on AWS cluster, and ensure that you are logged in to the cluster as an administrator. Verify that all fields are output correctly before you continue.
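A sketch of the environment setup, assuming CLUSTER_NAME, REGION, and ROLE_NAME as used by later commands, plus SCRATCH, OIDC_ENDPOINT, and AWS_ACCOUNT_ID as helpers for the IAM steps. The default cluster name, the ROLE_NAME pattern, and the function name are hypothetical; values already set in your shell are kept.

```shell
# Sketch: environment for the OADP tutorial.
setup_oadp_env() {
  export CLUSTER_NAME="${CLUSTER_NAME:-my-cluster}"   # change to your cluster name
  if [ -z "${REGION:-}" ]; then
    REGION=$(oc get infrastructure cluster -o jsonpath='{.status.platformStatus.aws.region}')
  fi
  if [ -z "${OIDC_ENDPOINT:-}" ]; then
    OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster \
      -o jsonpath='{.spec.serviceAccountIssuer}' | sed 's|^https://||')
  fi
  if [ -z "${AWS_ACCOUNT_ID:-}" ]; then
    AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
  fi
  export REGION OIDC_ENDPOINT AWS_ACCOUNT_ID
  export ROLE_NAME="${CLUSTER_NAME}-openshift-oadp-aws-cloud-credentials"
  export AWS_PAGER=""
  export SCRATCH="/tmp/${CLUSTER_NAME}/oadp"
  mkdir -p "${SCRATCH}"
  echo "Cluster: ${CLUSTER_NAME}, Region: ${REGION}, OIDC: ${OIDC_ENDPOINT}, Account: ${AWS_ACCOUNT_ID}"
}

# Run it in your current shell: setup_oadp_env
```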


7.1. Prepare AWS Account

Create an IAM policy that allows S3 access:
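A sketch of an S3 and EBS snapshot policy for OADP, assuming the document is written to ${SCRATCH}/policy.json. The policy name ${CLUSTER_NAME}-oadp-s3 and the function names are hypothetical. The action list covers bucket management, object access, and EBS snapshots; "Resource": "*" is broad and can be narrowed where your security policy requires it.

```shell
# Sketch: print the IAM policy document for OADP.
render_oadp_s3_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:CreateBucket", "s3:DeleteBucket",
        "s3:PutBucketTagging", "s3:GetBucketTagging",
        "s3:PutEncryptionConfiguration", "s3:GetEncryptionConfiguration",
        "s3:PutLifecycleConfiguration", "s3:GetLifecycleConfiguration",
        "s3:GetBucketLocation", "s3:ListBucket",
        "s3:GetObject", "s3:PutObject", "s3:DeleteObject",
        "s3:ListBucketMultipartUploads", "s3:AbortMultipartUpload",
        "s3:ListMultipartUploadParts",
        "ec2:DescribeSnapshots", "ec2:DescribeVolumes",
        "ec2:DescribeVolumeAttribute", "ec2:DescribeVolumesModifications",
        "ec2:DescribeVolumeStatus", "ec2:CreateTags", "ec2:CreateVolume",
        "ec2:CreateSnapshot", "ec2:DeleteSnapshot"
      ],
      "Resource": "*"
    }
  ]
}
EOF
}

# Sketch: write the document and create the policy, printing its ARN.
create_oadp_policy() {
  render_oadp_s3_policy > "${SCRATCH}/policy.json"
  aws iam create-policy --policy-name "${CLUSTER_NAME}-oadp-s3" \
    --policy-document "file://${SCRATCH}/policy.json" \
    --query Policy.Arn --output text
}

# Usage: POLICY_ARN=$(create_oadp_policy)
```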

Create an IAM role trust policy for the cluster:
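A sketch of the trust policy and role creation, assuming OIDC_ENDPOINT, AWS_ACCOUNT_ID, ROLE_NAME, and SCRATCH are set, and that OADP runs under the openshift-adp-controller-manager and velero service accounts in the openshift-adp namespace. The function names are hypothetical; the second function prints the new role's ARN.

```shell
# Sketch: trust policy allowing the OADP service accounts to assume the role via OIDC.
render_oadp_trust_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_ENDPOINT}:sub": [
            "system:serviceaccount:openshift-adp:openshift-adp-controller-manager",
            "system:serviceaccount:openshift-adp:velero"
          ]
        }
      }
    }
  ]
}
EOF
}

# Sketch: write the trust policy and create the role named ROLE_NAME.
create_oadp_role() {
  render_oadp_trust_policy > "${SCRATCH}/trust-policy.json"
  aws iam create-role --role-name "${ROLE_NAME}" \
    --assume-role-policy-document "file://${SCRATCH}/trust-policy.json" \
    --query Role.Arn --output text
}

# Usage: ROLE_ARN=$(create_oadp_role)
```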

Attach the IAM policy to the IAM role:

$ aws iam attach-role-policy --role-name "${ROLE_NAME}" \
    --policy-arn ${POLICY_ARN}

7.2. Deploy OADP on the cluster

Create a namespace for OADP:

$ oc create namespace openshift-adp

Create a credentials secret:
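A sketch of the credentials file behind the secret, assuming ROLE_ARN holds the role ARN and the secret is named cloud-credentials with a credentials key (the names a Data Protection Application would reference). The <aws_region> placeholder is left for you to replace, and the function name is hypothetical.

```shell
# Sketch: AWS shared-config profile that assumes ROLE_ARN with the pod's projected token.
render_oadp_credentials() {
  cat <<EOF
[default]
role_arn = ${ROLE_ARN}
web_identity_token_file = /var/run/secrets/openshift/serviceaccount/token
region = <aws_region>
EOF
}

# Usage:
#   render_oadp_credentials > "${SCRATCH}/credentials"   # then replace <aws_region>
#   oc -n openshift-adp create secret generic cloud-credentials \
#     --from-file="${SCRATCH}/credentials"
```

Because --from-file uses the file's base name as the key, the secret ends up with a single key named credentials.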

In the credentials, replace <aws_region> with the AWS region to use for the Security Token Service (STS) endpoint.

Deploy the OADP Operator:
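A sketch of the OperatorGroup and Subscription, assuming the redhat-oadp-operator package from the redhat-operators catalog. The Subscription is named oadp-operator to match the cleanup step. The channel comes from the hypothetical OADP_CHANNEL variable or, if that is unset, from the package's default channel.

```shell
# Sketch: OperatorGroup + Subscription for the OADP Operator in openshift-adp.
render_oadp_subscription() {
  channel="${OADP_CHANNEL:-}"
  if [ -z "$channel" ]; then
    # Fall back to the default channel advertised by the catalog
    channel=$(oc get packagemanifest redhat-oadp-operator -n openshift-marketplace \
      -o jsonpath='{.status.defaultChannel}')
  fi
  cat <<EOF
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: openshift-adp
  namespace: openshift-adp
spec:
  targetNamespaces:
    - openshift-adp
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: oadp-operator
  namespace: openshift-adp
spec:
  channel: ${channel}
  installPlanApproval: Automatic
  name: redhat-oadp-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF
}

# Usage: render_oadp_subscription | oc apply -f -
```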

Note: There is currently an issue with version 1.1 of the Operator with backups that have a PartiallyFailed status. This does not seem to affect the backup and restore process, but be aware of it.

Wait for the Operator to be ready:

$ watch oc -n openshift-adp get pods

Example output

NAME                                                READY   STATUS    RESTARTS   AGE
openshift-adp-controller-manager-546684844f-qqjhn   1/1     Running   0          22s

Create Cloud Storage:
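A sketch of a CloudStorage resource that asks OADP to create the S3 bucket, assuming the bucket and the resource are both named ${CLUSTER_NAME}-oadp (the names the cleanup steps delete) and that the cloud-credentials secret holds the credentials key. Field names follow the oadp.openshift.io/v1alpha1 CloudStorage API as understood here; verify them against your OADP version.

```shell
# Sketch: CloudStorage request for an S3 bucket in REGION.
render_oadp_cloudstorage() {
  cat <<EOF
apiVersion: oadp.openshift.io/v1alpha1
kind: CloudStorage
metadata:
  name: ${CLUSTER_NAME}-oadp
  namespace: openshift-adp
spec:
  name: ${CLUSTER_NAME}-oadp
  provider: aws
  region: ${REGION}
  enableSharedConfig: true
  creationSecret:
    name: cloud-credentials
    key: credentials
EOF
}

# Usage: render_oadp_cloudstorage | oc apply -f -
```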

Check which storage class your application’s persistent volume claims use:

$ oc get pvc -n <namespace>

Replace <namespace> with your application’s namespace.

Example output

NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
applog   Bound    pvc-351791ae-b6ab-4e8b-88a4-30f73caf5ef8   1Gi        RWO            gp3-csi        4d19h
mysql    Bound    pvc-16b8e009-a20a-4379-accc-bc81fedd0621   1Gi        RWO            gp3-csi        4d19h

$ oc get storageclass

Example output

NAME                PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
gp2                 kubernetes.io/aws-ebs   Delete          WaitForFirstConsumer   true                   4d21h
gp2-csi             ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h
gp3                 ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h
gp3-csi (default)   ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h

Any of gp3-csi, gp2-csi, gp3, or gp2 works. If all of the applications being backed up use persistent volumes (PVs) with CSI, include the CSI plugin in the OADP Data Protection Application (DPA) configuration.

CSI only: Deploy a Data Protection Application:
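A sketch of a CSI-enabled Data Protection Application named ${CLUSTER_NAME}-dpa (the name used during cleanup), assuming the CloudStorage resource and cloud-credentials secret from the earlier steps. It sets backupImages, dataMover, and restic to false, as the STS notes in this section require; these field names match OADP 1.1 and 1.2 and may differ in later releases.

```shell
# Sketch: DPA using the CloudStorage bucket and the openshift, aws, and csi Velero plugins.
render_oadp_dpa_csi() {
  cat <<EOF
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: ${CLUSTER_NAME}-dpa
  namespace: openshift-adp
spec:
  backupImages: false
  features:
    dataMover:
      enable: false
  backupLocations:
    - bucket:
        cloudStorageRef:
          name: ${CLUSTER_NAME}-oadp
        credential:
          name: cloud-credentials
          key: credentials
        default: true
        config:
          region: ${REGION}
  configuration:
    velero:
      defaultPlugins:
        - openshift
        - aws
        - csi
    restic:
      enable: false
EOF
}

# Usage: render_oadp_dpa_csi | oc apply -f -
```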

Note: If you run this command for CSI volumes, you can skip the next step.

Non-CSI volumes: Deploy a Data Protection Application:


- In OADP 1.1.x Red Hat OpenShift Service on AWS STS environments, the container image backup and restore (spec.backupImages) value must be set to false because it is not supported.
- The Restic feature (restic.enable=false) is disabled and not supported in Red Hat OpenShift Service on AWS STS environments.
- The DataMover feature (dataMover.enable=false) is disabled and not supported in Red Hat OpenShift Service on AWS STS environments.

7.3. Perform a backup

The following sample hello-world application has no attached persistent volumes. Either DPA configuration will work.

Create a workload to back up:

$ oc create namespace hello-world
$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Expose the route:

$ oc expose service/hello-openshift -n hello-world

Check that the application is working:

$ curl `oc get route/hello-openshift -n hello-world -o jsonpath='{.spec.host}'`

Example output

Hello OpenShift!

Back up the workload:
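A sketch of a Velero Backup that captures the hello-world namespace, assuming it is created in openshift-adp, where the following status check looks for it. No storageLocation is set, so Velero uses the default backup location; the ttl of 720h keeps the backup for 30 days. The function name is hypothetical.

```shell
# Sketch: Velero Backup of the hello-world namespace.
render_hello_backup() {
  cat <<'EOF'
apiVersion: velero.io/v1
kind: Backup
metadata:
  name: hello-world
  namespace: openshift-adp
spec:
  includedNamespaces:
    - hello-world
  ttl: 720h0m0s
EOF
}

# Usage: render_hello_backup | oc apply -f -
```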

Wait until the backup is done:

$ watch "oc -n openshift-adp get backup hello-world -o json | jq .status"

Delete the demo workload:

$ oc delete ns hello-world

Restore from the backup:
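A sketch of the matching Restore, assuming the backup is named hello-world and lives in the openshift-adp namespace. The function name is hypothetical.

```shell
# Sketch: Velero Restore from the hello-world backup.
render_hello_restore() {
  cat <<'EOF'
apiVersion: velero.io/v1
kind: Restore
metadata:
  name: hello-world
  namespace: openshift-adp
spec:
  backupName: hello-world
EOF
}

# Usage: render_hello_restore | oc apply -f -
```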

Wait for the restore to finish:

$ watch "oc -n openshift-adp get restore hello-world -o json | jq .status"

Check that the workload is restored:

$ oc -n hello-world get pods

Example output

NAME                              READY   STATUS    RESTARTS   AGE
hello-openshift-9f885f7c6-kdjpj   1/1     Running   0          90s

$ curl `oc get route/hello-openshift -n hello-world -o jsonpath='{.spec.host}'`

Example output

Hello OpenShift!

- For troubleshooting tips, refer to the OADP team’s troubleshooting documentation.
- Additional sample applications can be found in the OADP team’s sample applications directory.

7.4. Cleanup

Delete the workload:

$ oc delete ns hello-world

Remove the backup and restore resources from the cluster if they are no longer required:

$ oc -n openshift-adp delete backups.velero.io hello-world
$ oc -n openshift-adp delete restores.velero.io hello-world

Alternatively, to delete the backup and restore together with their remote objects in S3, use the velero CLI:

$ velero backup delete hello-world -n openshift-adp
$ velero restore delete hello-world -n openshift-adp

Delete the Data Protection Application:

$ oc -n openshift-adp delete dpa ${CLUSTER_NAME}-dpa

Delete the Cloud Storage:

$ oc -n openshift-adp delete cloudstorage ${CLUSTER_NAME}-oadp

Warning: If this command hangs, you might need to delete the finalizer:

$ oc -n openshift-adp patch cloudstorage ${CLUSTER_NAME}-oadp -p '{"metadata":{"finalizers":null}}' --type=merge

Remove the Operator if it is no longer required:

$ oc -n openshift-adp delete subscription oadp-operator

Remove the namespace for the Operator:

$ oc delete ns openshift-adp

Remove the custom resource definitions from the cluster if you no longer need them:

$ for CRD in `oc get crds | grep velero | awk '{print $1}'`; do oc delete crd $CRD; done
$ for CRD in `oc get crds | grep -i oadp | awk '{print $1}'`; do oc delete crd $CRD; done

Delete the AWS S3 bucket:

$ aws s3 rm s3://${CLUSTER_NAME}-oadp --recursive
$ aws s3api delete-bucket --bucket ${CLUSTER_NAME}-oadp

Detach the policy from the role:

$ aws iam detach-role-policy --role-name "${ROLE_NAME}" \
    --policy-arn "${POLICY_ARN}"

Delete the role:

$ aws iam delete-role --role-name "${ROLE_NAME}"

Chapter 8. Tutorial: AWS Load Balancer Operator on Red Hat OpenShift Service on AWS

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Load balancers created by the AWS Load Balancer Operator cannot be used for OpenShift routes. Use them only for individual services or ingress resources that do not need the full layer 7 capabilities of an OpenShift route.

The AWS Load Balancer Controller manages AWS Elastic Load Balancers for a Red Hat OpenShift Service on AWS cluster. The controller provisions AWS Application Load Balancers (ALB) when you create Kubernetes Ingress resources and AWS Network Load Balancers (NLB) when implementing Kubernetes Service resources with a type of LoadBalancer.

Compared with the default AWS in-tree load balancer provider, this controller supports advanced annotations for both ALBs and NLBs. Some advanced use cases are:

- Using native Kubernetes Ingress objects with ALBs
- Integrating ALBs with the AWS Web Application Firewall (WAF) service

  Note: WAFv1 (WAF Classic) is no longer supported. Use WAFv2.

- Specifying custom NLB source IP ranges
- Specifying custom NLB internal IP addresses

The AWS Load Balancer Operator is used to install, manage, and configure an instance of aws-load-balancer-controller in a Red Hat OpenShift Service on AWS cluster.

8.1. Prerequisites

AWS ALBs require a multi-AZ cluster, as well as three public subnets split across three AZs in the same VPC as the cluster. This makes ALBs unsuitable for many PrivateLink clusters. AWS NLBs do not have this restriction.

- A multi-AZ Red Hat OpenShift Service on AWS cluster
- A BYO VPC cluster
- The AWS CLI (aws)
- The OpenShift CLI (oc)

8.1.1. Environment

Prepare the environment variables:


8.1.2. AWS VPC and subnets

This section only applies to clusters that were deployed into existing VPCs. If you did not deploy your cluster into an existing VPC, skip this section and proceed to the installation section.

Set the following variables to the correct values for your cluster deployment, where <public-subnets> and <private-subnets> are space-separated lists of subnet IDs:

$ export VPC_ID=<vpc-id>
$ export PUBLIC_SUBNET_IDS=<public-subnets>
$ export PRIVATE_SUBNET_IDS=<private-subnets>
$ export CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}")

Add a tag to your cluster’s VPC with the cluster name:

$ aws ec2 create-tags --resources ${VPC_ID} \
    --tags Key=kubernetes.io/cluster/${CLUSTER_NAME},Value=owned --region ${REGION}

Add a tag to your public subnets:

$ aws ec2 create-tags \
    --resources ${PUBLIC_SUBNET_IDS} \
    --tags Key=kubernetes.io/role/elb,Value='' \
    --region ${REGION}

Add a tag to your private subnets. Leave ${PRIVATE_SUBNET_IDS} unquoted, as for the public subnets, so that each subnet ID is passed as a separate argument:

$ aws ec2 create-tags \
    --resources ${PRIVATE_SUBNET_IDS} \
    --tags Key=kubernetes.io/role/internal-elb,Value='' \
    --region ${REGION}

8.2. Installation

Create an AWS IAM policy for the AWS Load Balancer Controller:

Note: The policy is sourced from the upstream AWS Load Balancer Controller policy, plus permission to create tags on subnets. The Operator requires it to function.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create an AWS IAM trust policy for AWS Load Balancer Operator:

Create an AWS IAM role for the AWS Load Balancer Operator:

Create a secret that the AWS Load Balancer Operator uses to assume the newly created AWS IAM role:

Install the AWS Load Balancer Operator:

Deploy an instance of the AWS Load Balancer Controller by using the Operator:

Note: If you get an error here, the Operator has not finished installing yet. Wait a minute and try again.
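If you prefer not to retry by hand, you can wrap the command in a small retry loop. The following is a minimal sketch of a hypothetical `retry` function (it is not part of `oc` or the Operator) that reruns a command until it succeeds or the attempts run out; the attempt count and delay in the usage line are arbitrary assumptions.

```shell
# Hypothetical helper: retry <attempts> <delay-seconds> <command> [args...]
# Reruns the command until it exits 0; returns 1 after <attempts> failures.
retry() {
  local attempts="$1" delay="$2" n=1
  shift 2
  while true; do
    if "$@"; then
      return 0
    fi
    if [ "$n" -ge "$attempts" ]; then
      echo "retry: '$*' failed after ${n} attempts" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Usage, assuming the controller manifest from this step is saved in a
# hypothetical file named aws-load-balancer-controller.yaml:
# retry 6 10 oc apply -f aws-load-balancer-controller.yaml
```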

Check that the Operator and controller pods are both running:

oc -n aws-load-balancer-operator get pods

You should see output similar to the following. If you do not, wait a moment and retry:

NAME                                                             READY   STATUS    RESTARTS   AGE
aws-load-balancer-controller-cluster-6ddf658785-pdp5d            1/1     Running   0          99s
aws-load-balancer-operator-controller-manager-577d9ffcb9-w6zqn   2/2     Running   0          2m4s

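To script this check, the sketch below reads `oc get pods` output from standard input and succeeds only when every listed pod is `Running` with all of its containers ready (for example `2/2`). The `all_pods_ready` helper is hypothetical and assumes the default column layout shown above (NAME, READY, STATUS, RESTARTS, AGE); it parses text rather than querying pod conditions.

```shell
# Hypothetical helper: reads `oc get pods` table output on stdin and exits 0
# only if at least one pod is listed and every pod is Running with
# READY equal to its total container count (for example 1/1 or 2/2).
all_pods_ready() {
  awk '
    BEGIN { pods = 0; bad = 0 }
    NR == 1 { next }              # skip the header row
    NF == 0 { next }              # ignore blank lines
    {
      pods++
      split($2, ready, "/")       # READY column, for example "2/2"
      if ($3 != "Running" || ready[1] != ready[2]) bad++
    }
    END { if (pods > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Usage:
# oc -n aws-load-balancer-operator get pods | all_pods_ready && echo "pods are ready"
```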

8.3. Validating the deployment

Create a new project:

oc new-project hello-world

Deploy a hello world application:

oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Configure a NodePort service for the AWS ALB to connect to:

Deploy an AWS ALB by using the AWS Load Balancer Operator:

Use curl to query the AWS ALB Ingress endpoint and verify that the hello world application is accessible:

Note: AWS ALB provisioning takes a few minutes. If you receive the error curl: (6) Could not resolve host, wait and try again.

INGRESS=$(oc -n hello-world get ingress hello-openshift-alb \
  -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
curl "http://${INGRESS}"

Example output

Hello OpenShift!

Deploy an AWS NLB for your hello world application:

Test the AWS NLB endpoint:

Note: NLB provisioning takes a few minutes. If you receive the error curl: (6) Could not resolve host, wait and try again.

NLB=$(oc -n hello-world get service hello-openshift-nlb \
  -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
curl "http://${NLB}"

Example output

Hello OpenShift!

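Instead of rerunning `curl` by hand while a load balancer is provisioned, you can poll until its hostname appears in the resource status. The following minimal sketch defines a hypothetical `wait_for_lb_hostname` helper that reuses the `jsonpath` expression from the steps above. The default polling interval and attempt limit are arbitrary assumptions, and DNS resolution of the new hostname can still lag slightly behind the status field.

```shell
# Hypothetical helper: wait_for_lb_hostname <namespace> <kind> <name> [attempts] [delay]
# Polls the resource until .status.loadBalancer.ingress[0].hostname is set,
# then prints the hostname. Returns 1 if it never appears.
wait_for_lb_hostname() {
  local ns="$1" kind="$2" name="$3" attempts="${4:-30}" delay="${5:-10}" i host
  for i in $(seq 1 "$attempts"); do
    host=$(oc -n "$ns" get "$kind" "$name" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' 2>/dev/null)
    if [ -n "$host" ]; then
      printf '%s\n' "$host"
      return 0
    fi
    sleep "$delay"
  done
  echo "wait_for_lb_hostname: no hostname for ${kind}/${name} in ${ns}" >&2
  return 1
}

# Usage:
# INGRESS=$(wait_for_lb_hostname hello-world ingress hello-openshift-alb) && curl "http://${INGRESS}"
# NLB=$(wait_for_lb_hostname hello-world service hello-openshift-nlb) && curl "http://${NLB}"
```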

8.4. Cleaning up

Delete the hello world application namespace (and all the resources in the namespace):

oc delete project hello-world

Delete the AWS Load Balancer Operator and the AWS IAM roles:

Delete the AWS IAM policy:

aws iam delete-policy --policy-arn $POLICY_ARN


Chapter 9. Tutorial: Configuring Microsoft Entra ID (formerly Azure Active Directory) as an identity provider

You can configure Microsoft Entra ID (formerly Azure Active Directory) as the cluster identity provider in Red Hat OpenShift Service on AWS.

This tutorial guides you through the following tasks:

- Register a new application in Entra ID for authentication.

- Configure the application registration in Entra ID to include optional and group claims in tokens.

- Configure the Red Hat OpenShift Service on AWS cluster to use Entra ID as the identity provider.

- Grant additional permissions to individual groups.

9.1. Prerequisites

- You created a set of security groups and assigned users by following the Microsoft documentation.

9.2. Registering a new application in Entra ID for authentication

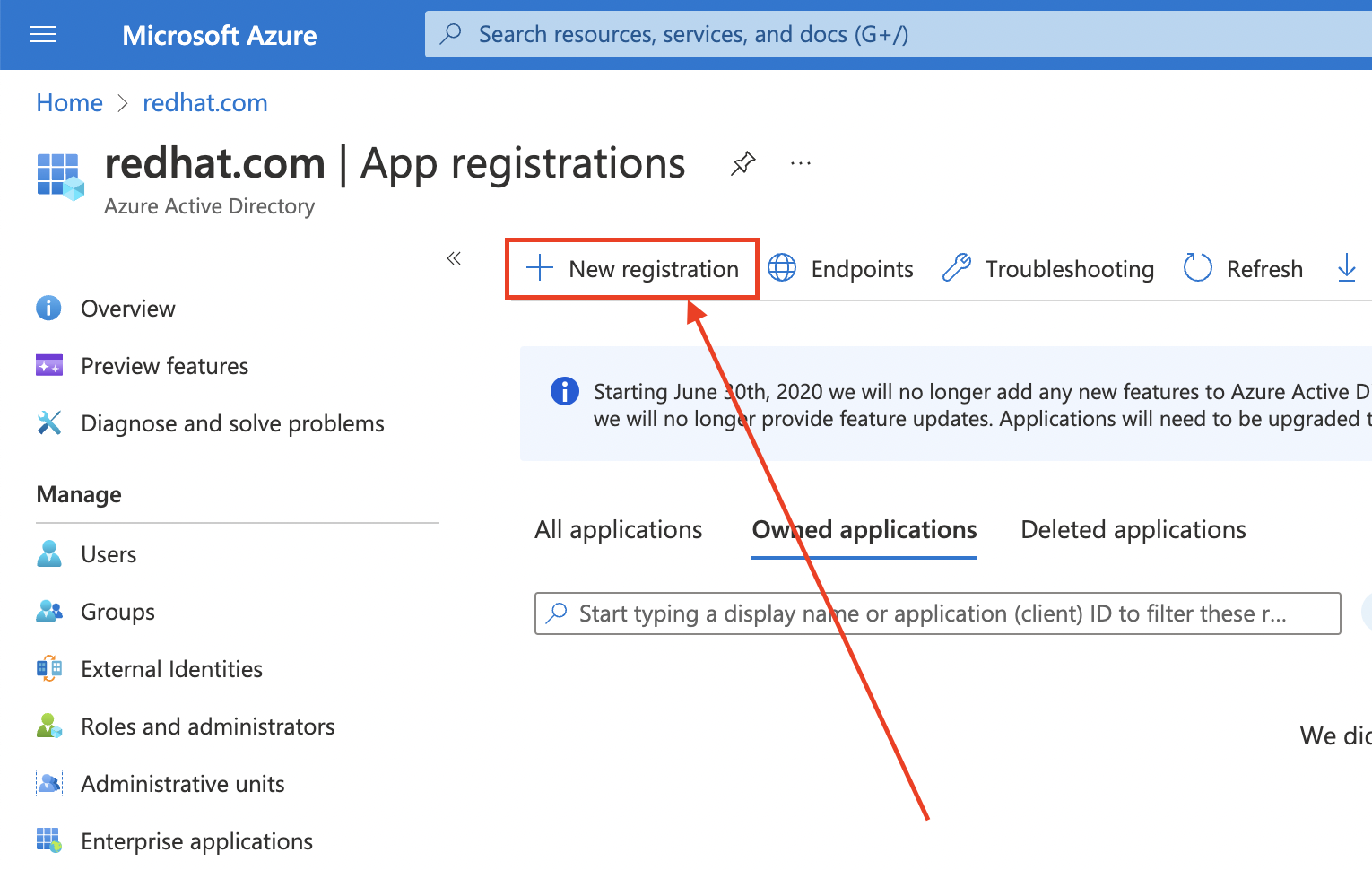

To register your application in Entra ID, first create the OAuth callback URL, then register your application.

Procedure

Create the cluster’s OAuth callback URL by changing the specified variables and running the following command:

Note: Save this callback URL; you need it later in the process.

domain=$(rosa describe cluster -c <cluster_name> | grep "DNS" | grep -oE '\S+.openshiftapps.com')
echo "OAuth callback URL: https://oauth.${domain}/oauth2callback/AAD"

The "AAD" directory at the end of the OAuth callback URL must match the OAuth identity provider name that you set up later in this process.
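Because the callback URL follows a fixed pattern, you can build it from the cluster domain and the identity provider name in one step. The sketch below is a hypothetical `oauth_callback_url` helper. It assumes, as the command above does, that `rosa describe cluster` prints the cluster domain on a line containing `DNS`, and it takes the identity provider name as a parameter so that you can keep it in sync with the name you choose later.

```shell
# Hypothetical helper: oauth_callback_url <idp-name>
# Reads `rosa describe cluster` output on stdin, extracts the first
# *.openshiftapps.com domain from the DNS line, and prints the callback URL.
oauth_callback_url() {
  local idp="$1" domain
  domain=$(grep "DNS" | grep -oE '[^[:space:]]+\.openshiftapps\.com' | head -n 1)
  if [ -z "$domain" ]; then
    echo "oauth_callback_url: no openshiftapps.com domain found" >&2
    return 1
  fi
  printf 'https://oauth.%s/oauth2callback/%s\n' "$domain" "$idp"
}

# Usage:
# rosa describe cluster -c <cluster_name> | oauth_callback_url AAD
```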

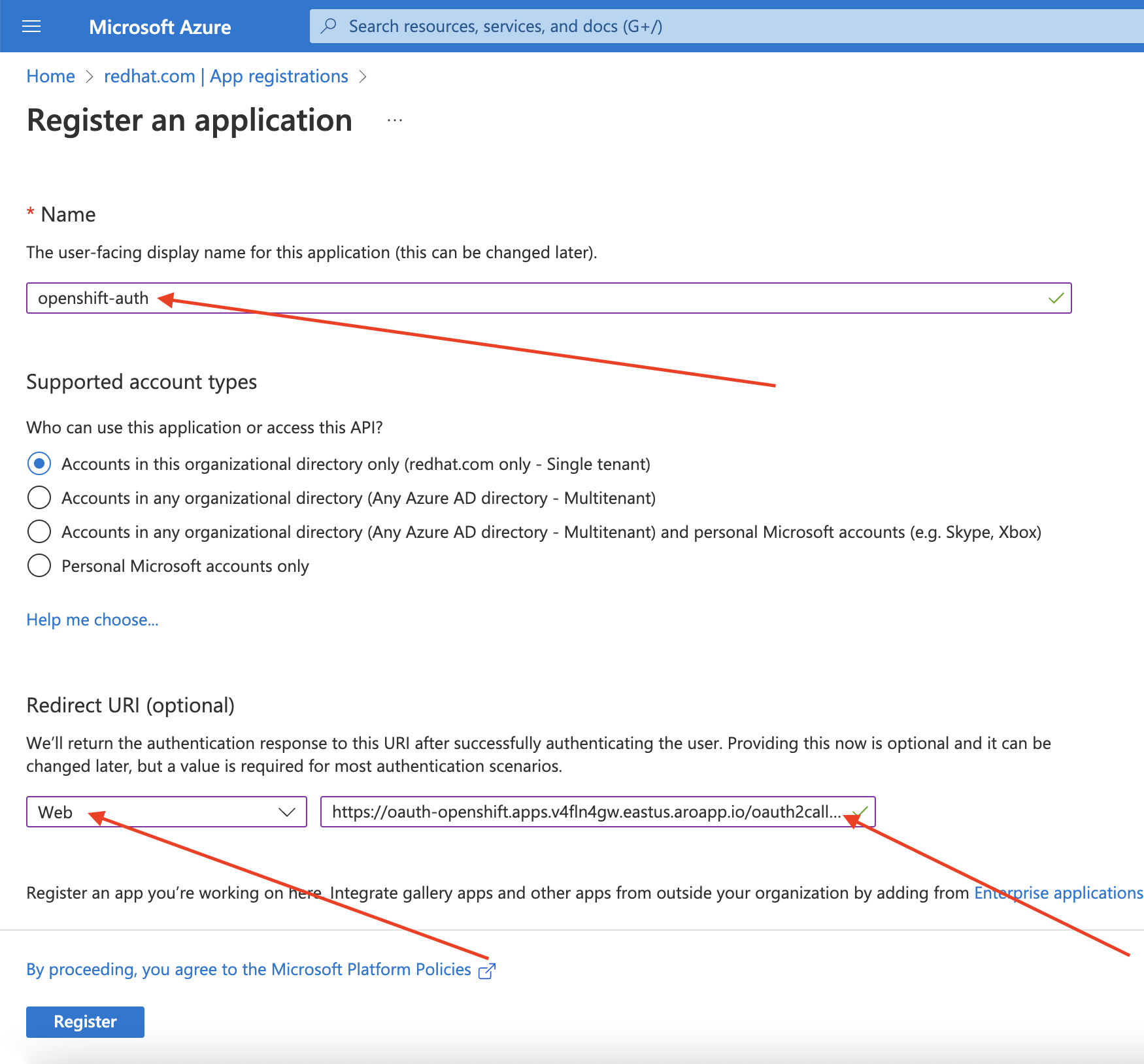

Create the Entra ID application by logging in to the Azure portal and selecting the App registrations blade. Then, select New registration to create a new application.

- Name the application, for example openshift-auth.
- Select Web from the Redirect URI dropdown and enter the value of the OAuth callback URL you retrieved in the previous step.

After providing the required information, click Register to create the application.

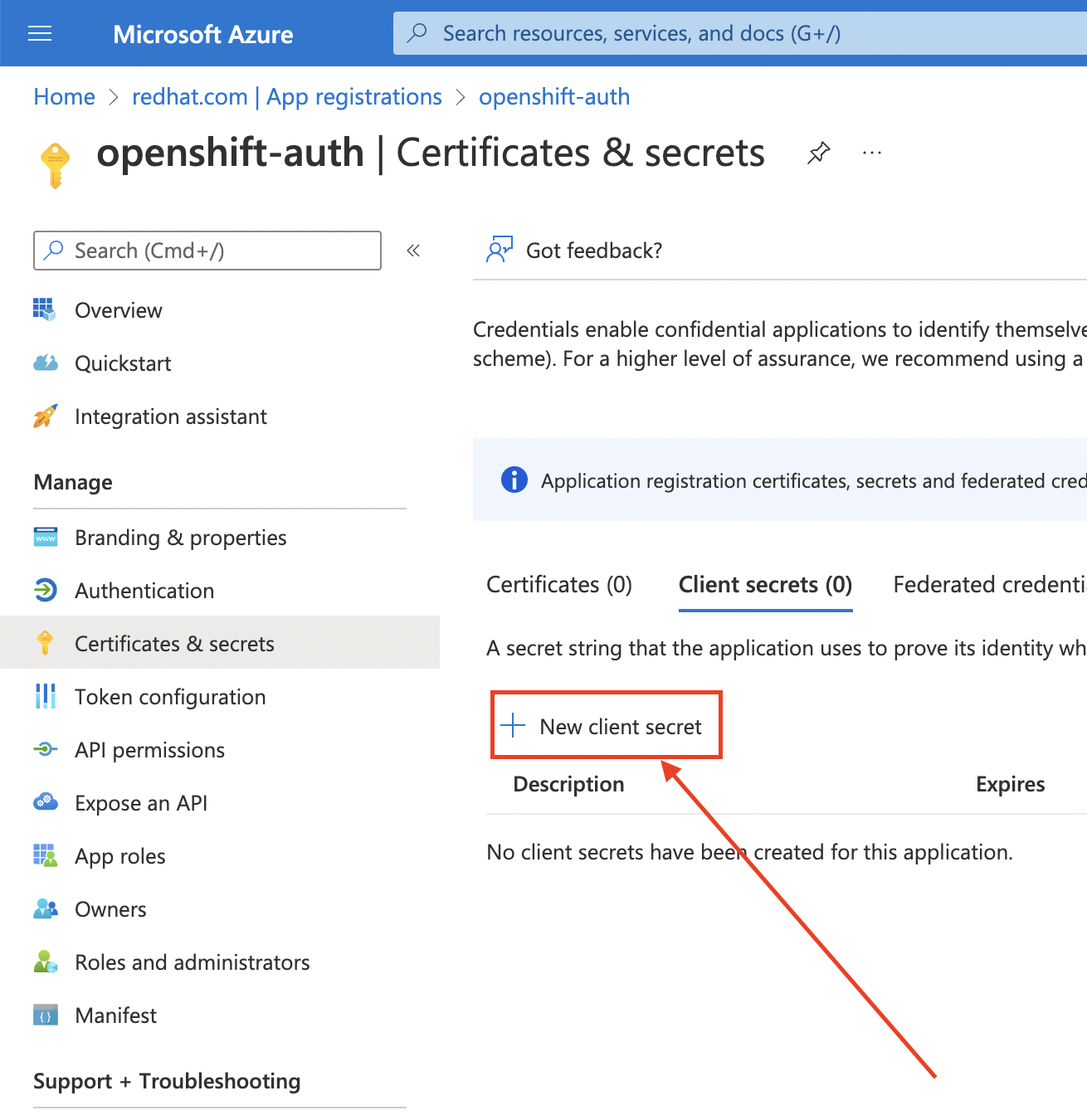

Select the Certificates & secrets sub-blade and select New client secret.

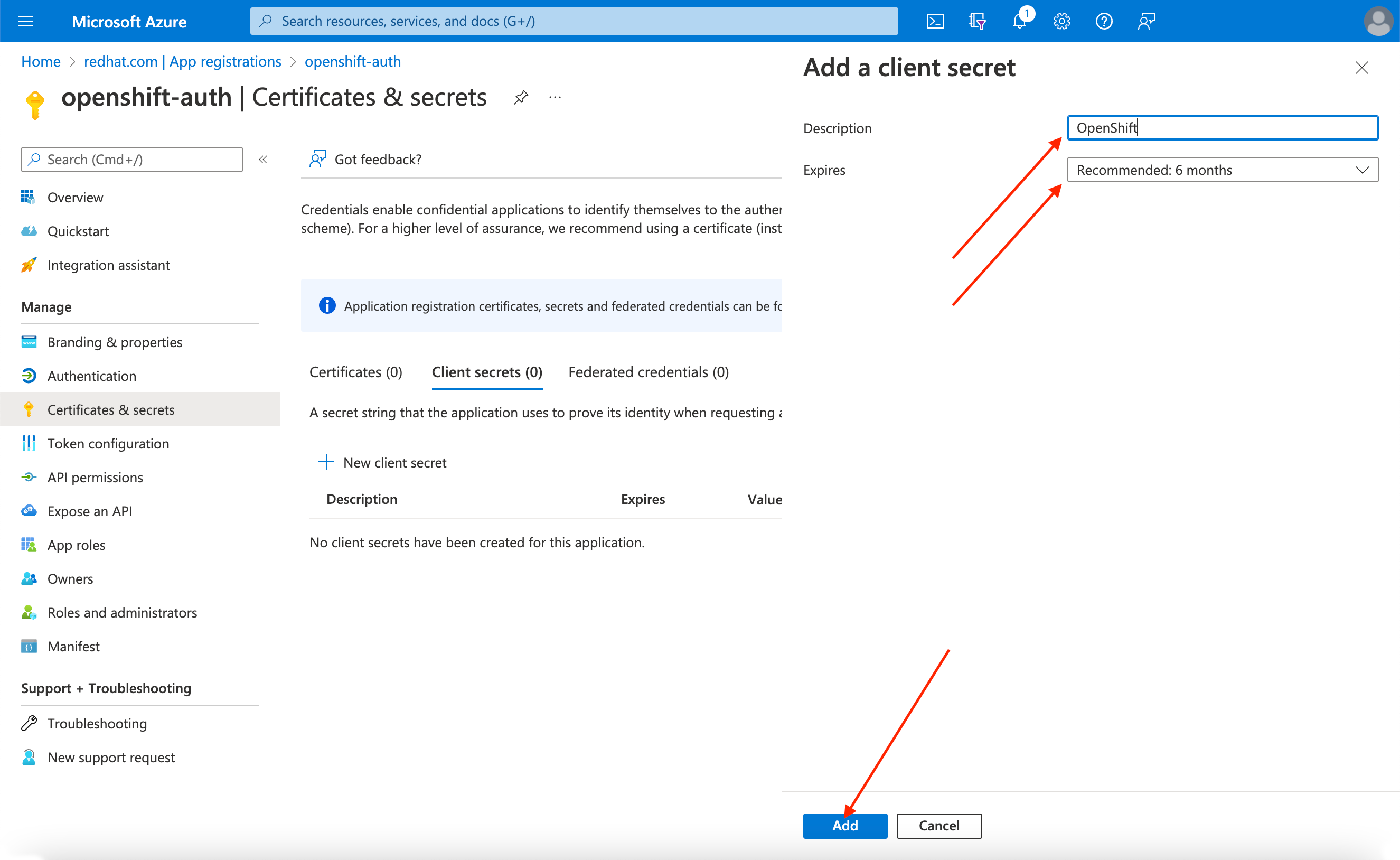

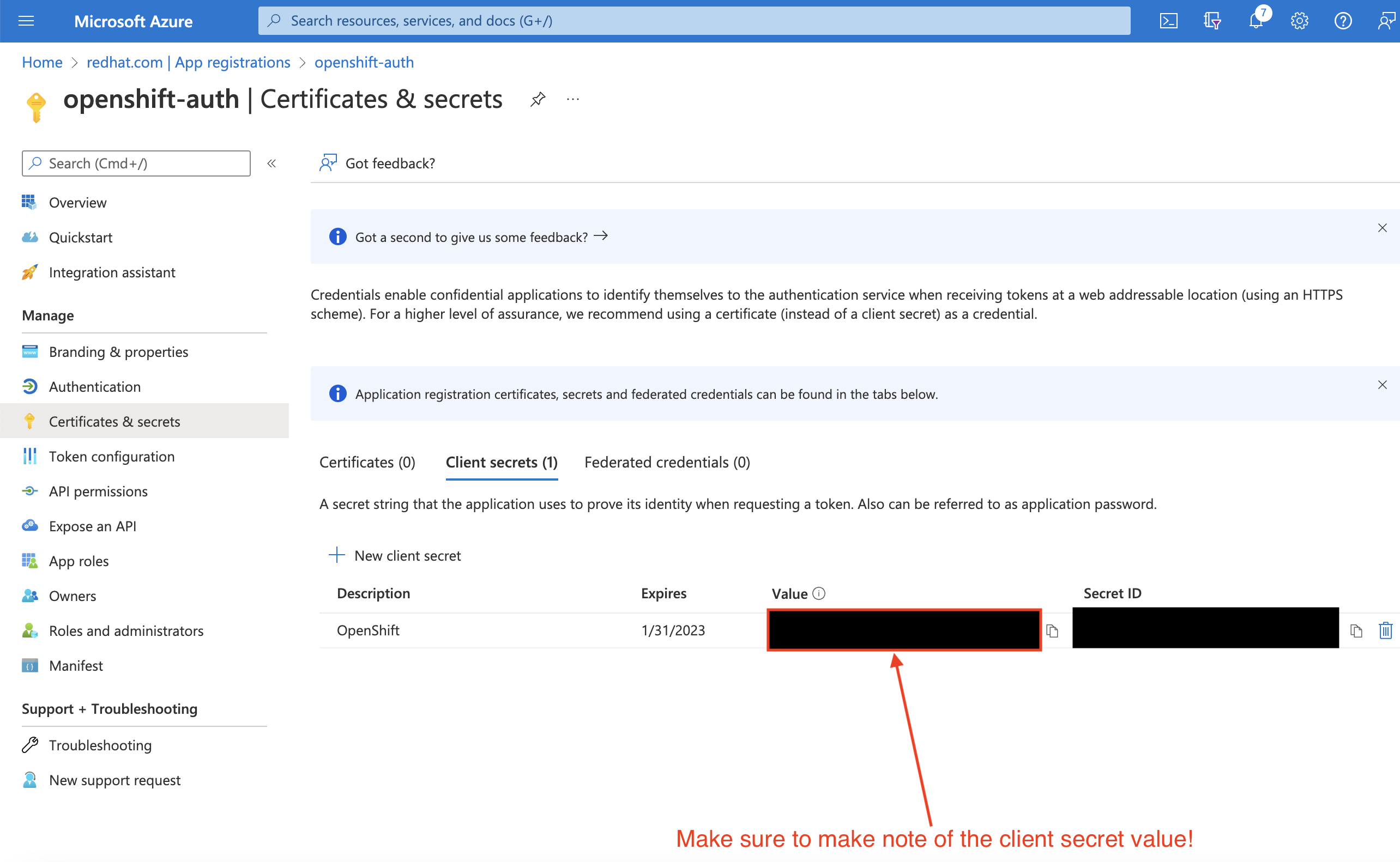

Complete the requested details and store the generated client secret value. This secret is required later in this process.

Important: After initial setup, you cannot see the client secret. If you did not record the client secret, you must generate a new one.

Select the Overview sub-blade and note the Application (client) ID and Directory (tenant) ID. You will need these values in a later step.

9.3. Configuring the application registration in Entra ID to include optional and group claims

So that Red Hat OpenShift Service on AWS has enough information to create the user’s account, you must configure Entra ID to provide two optional claims: email and preferred_username. For more information about optional claims in Entra ID, see the Microsoft documentation.

In addition to individual user authentication, Red Hat OpenShift Service on AWS provides group claim functionality. This functionality allows an OpenID Connect (OIDC) identity provider, such as Entra ID, to offer a user’s group membership for use within Red Hat OpenShift Service on AWS.

9.3.1. Configuring optional claims

You can configure the optional claims in Entra ID.

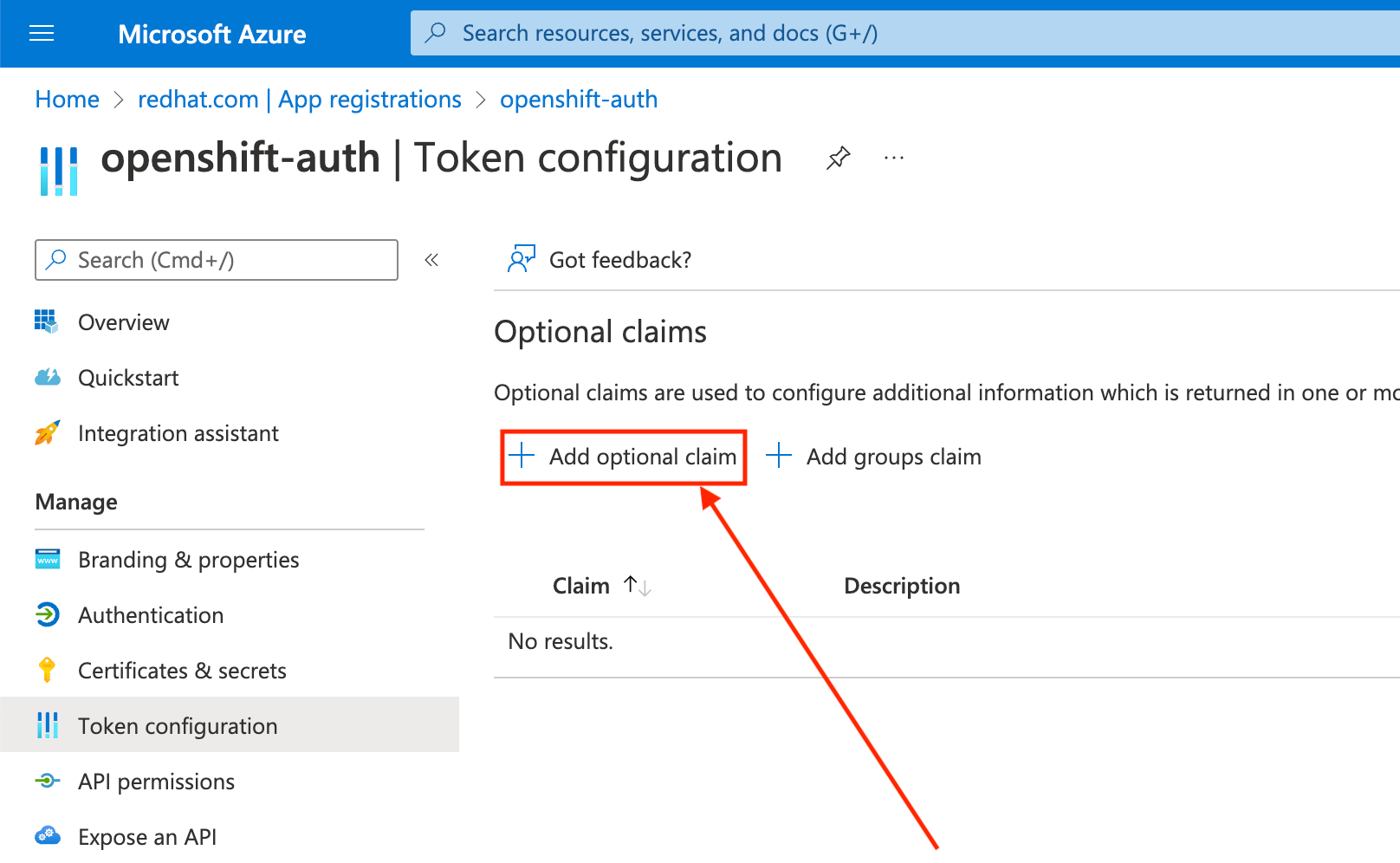

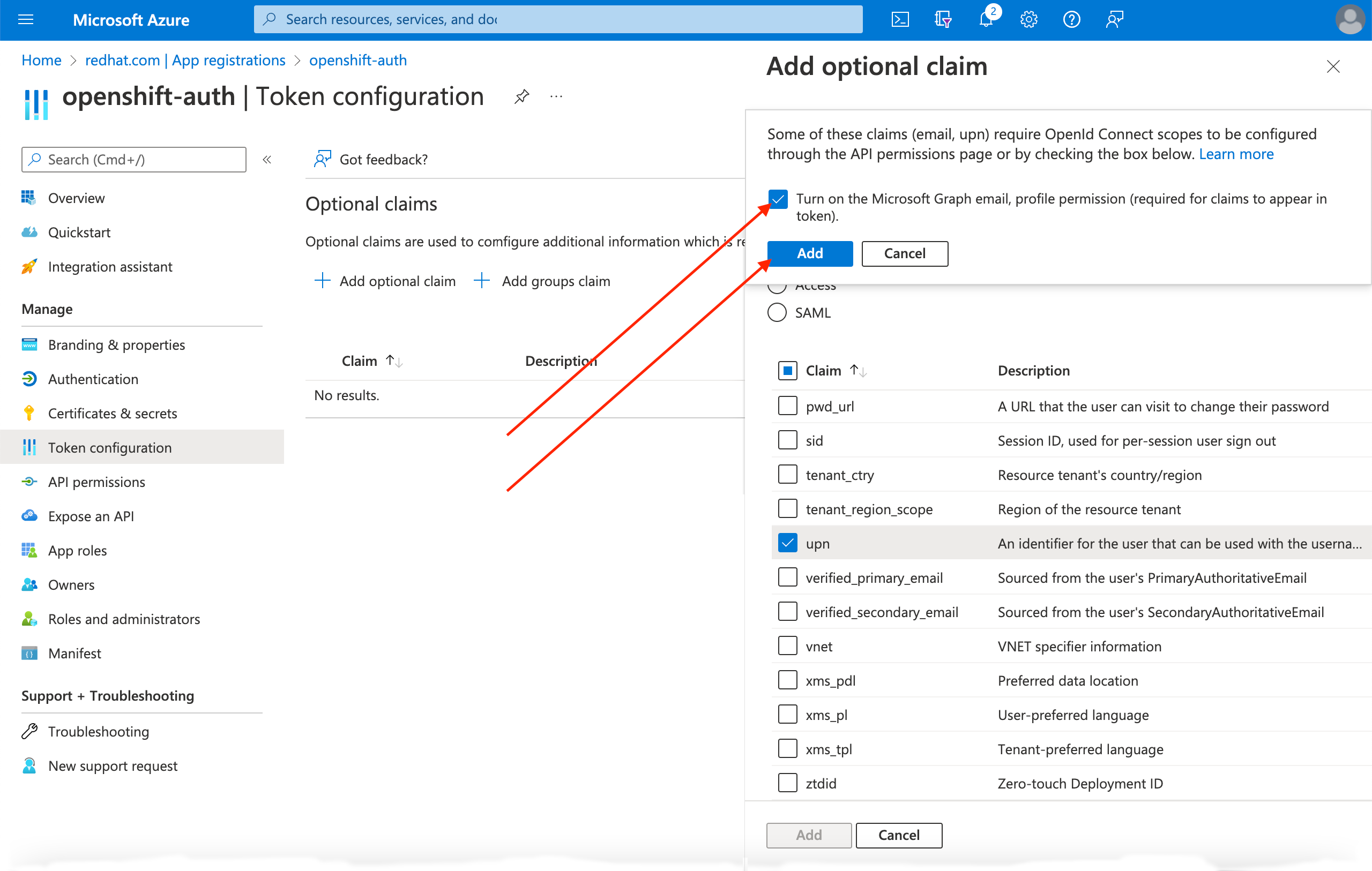

Click the Token configuration sub-blade and select the Add optional claim button.

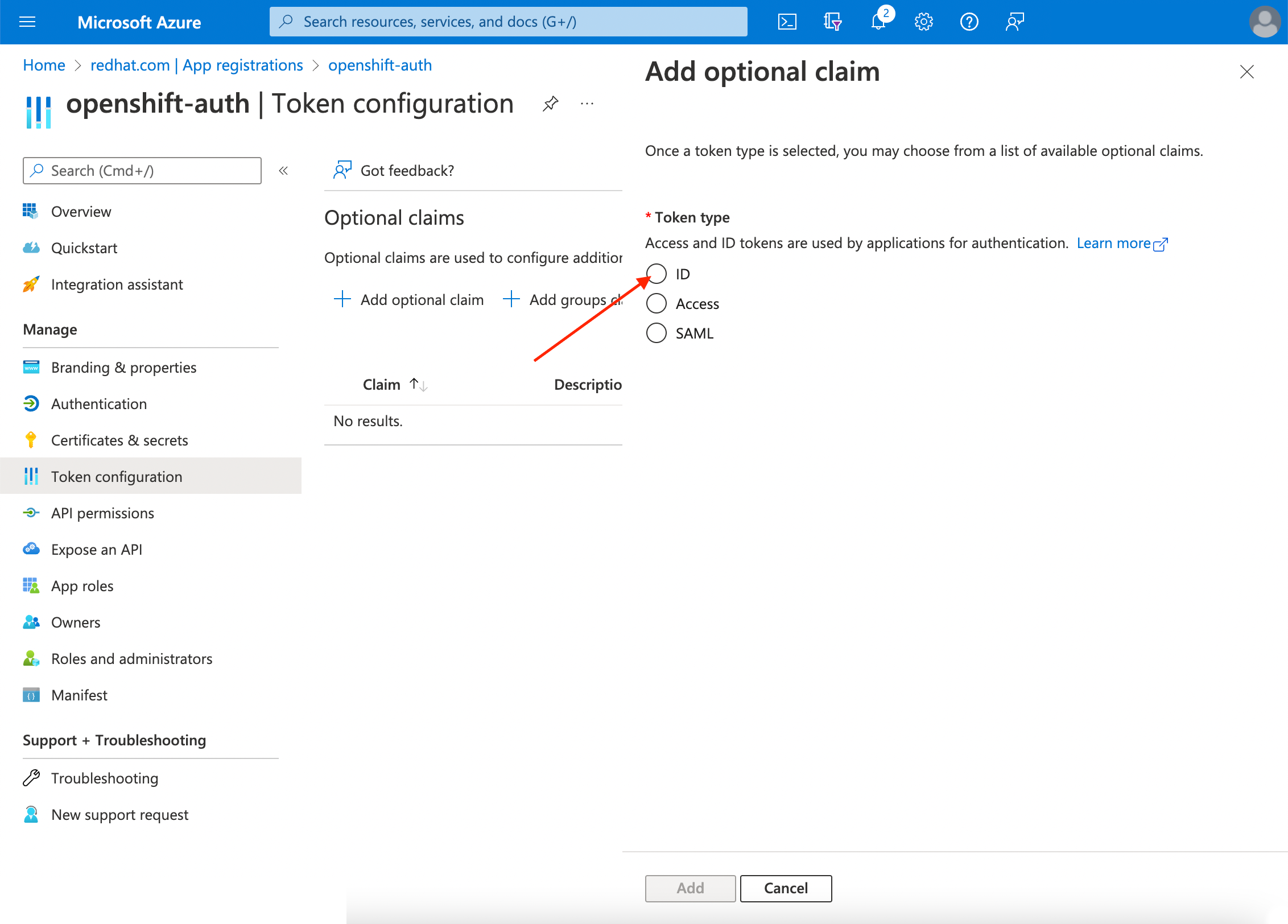

Select the ID radio button.

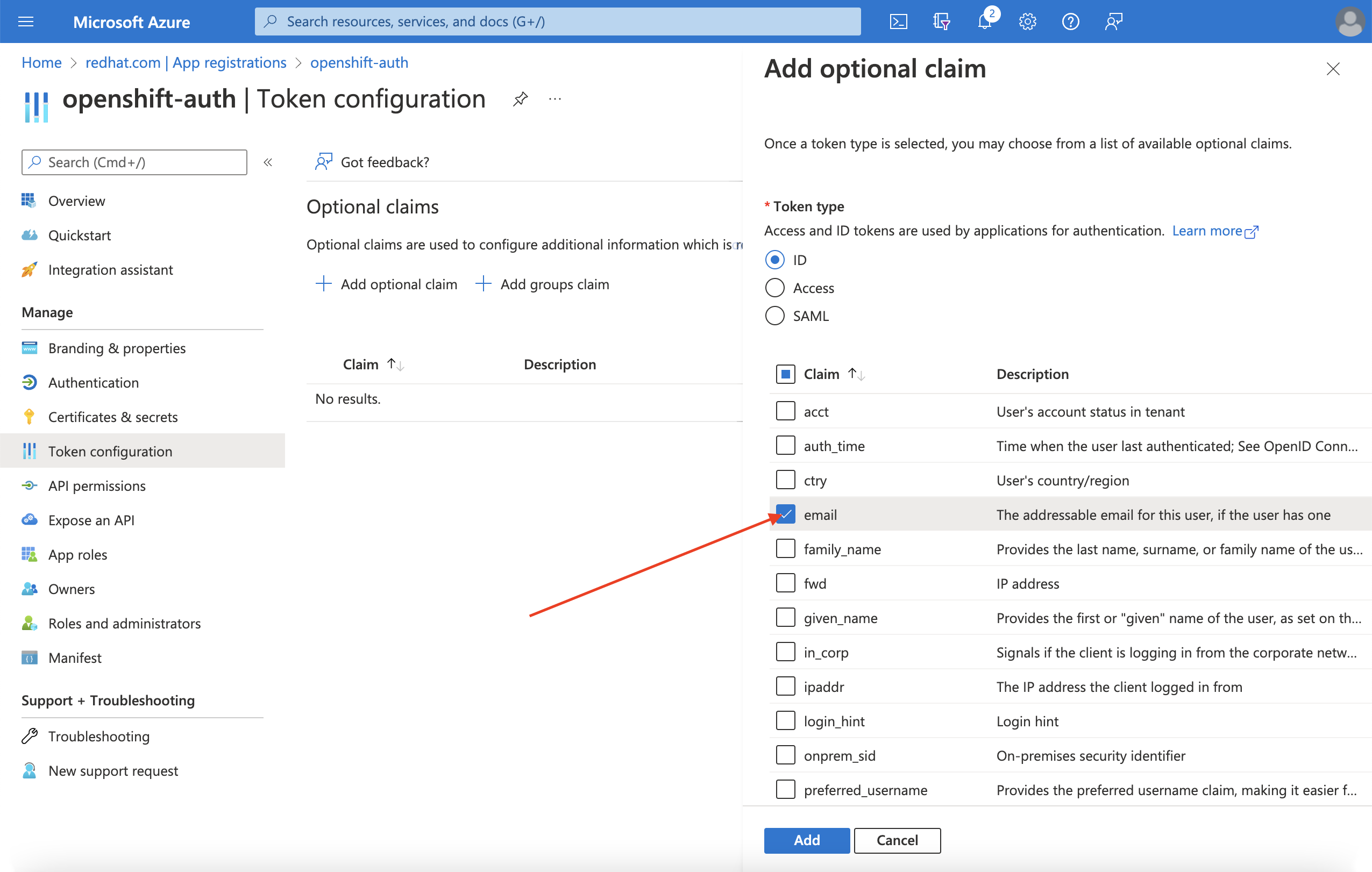

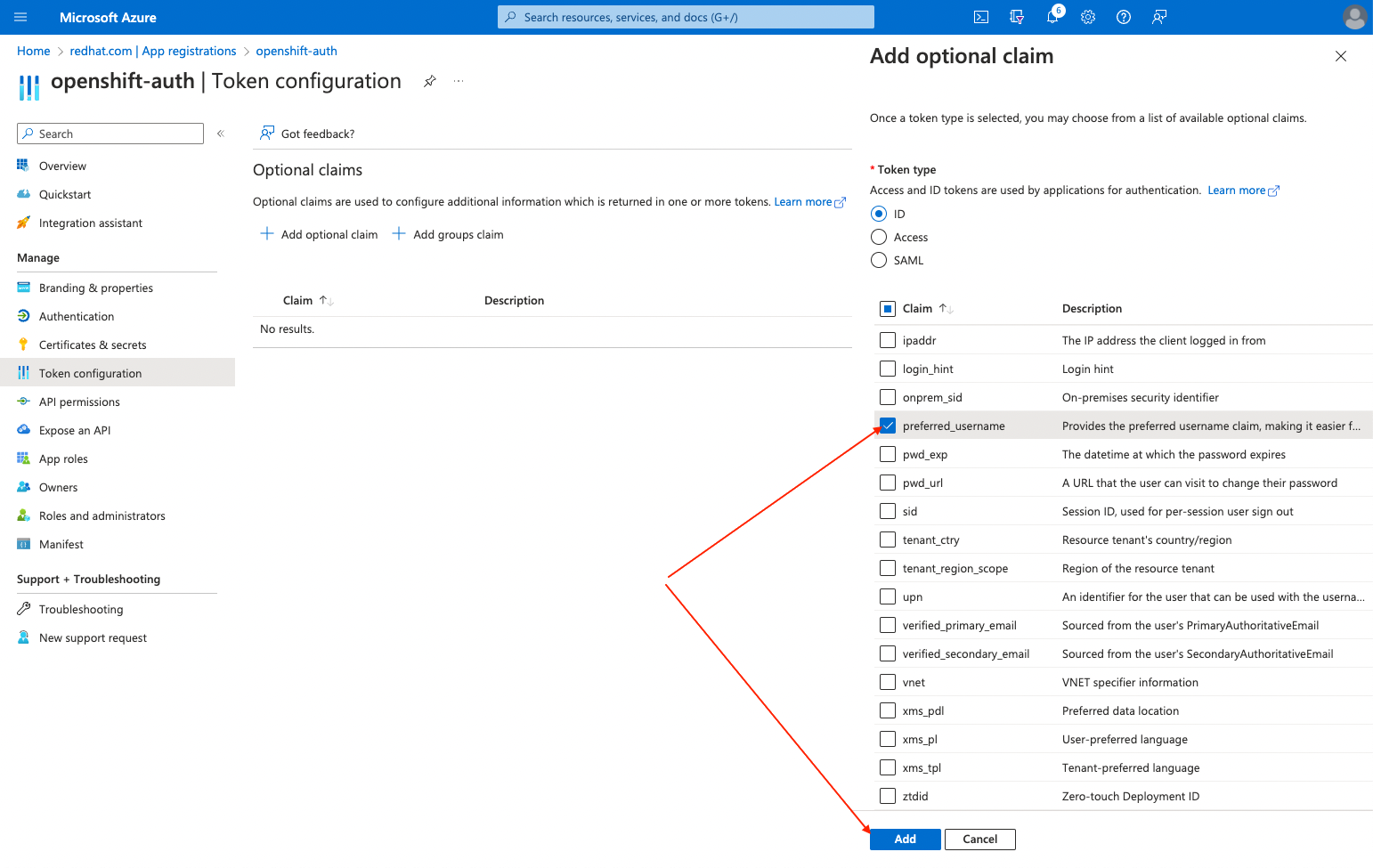

Select the email claim checkbox.

Select the preferred_username claim checkbox. Then, click Add to configure the email and preferred_username claims for your Entra ID application.

A dialog box appears at the top of the page. Follow the prompt to enable the necessary Microsoft Graph permissions.

9.3.2. Configuring group claims (optional)

Configure Entra ID to offer a groups claim.

Procedure

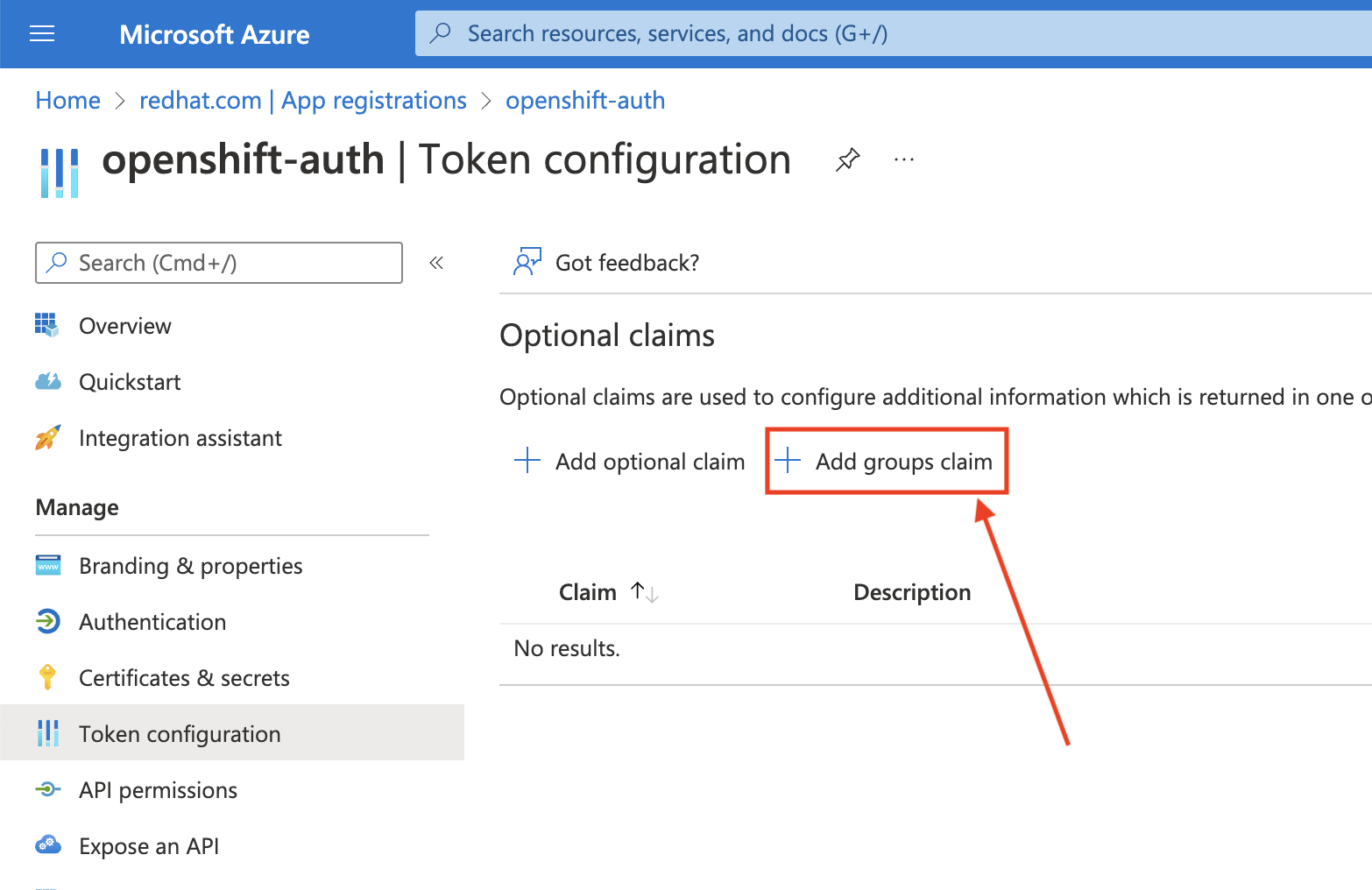

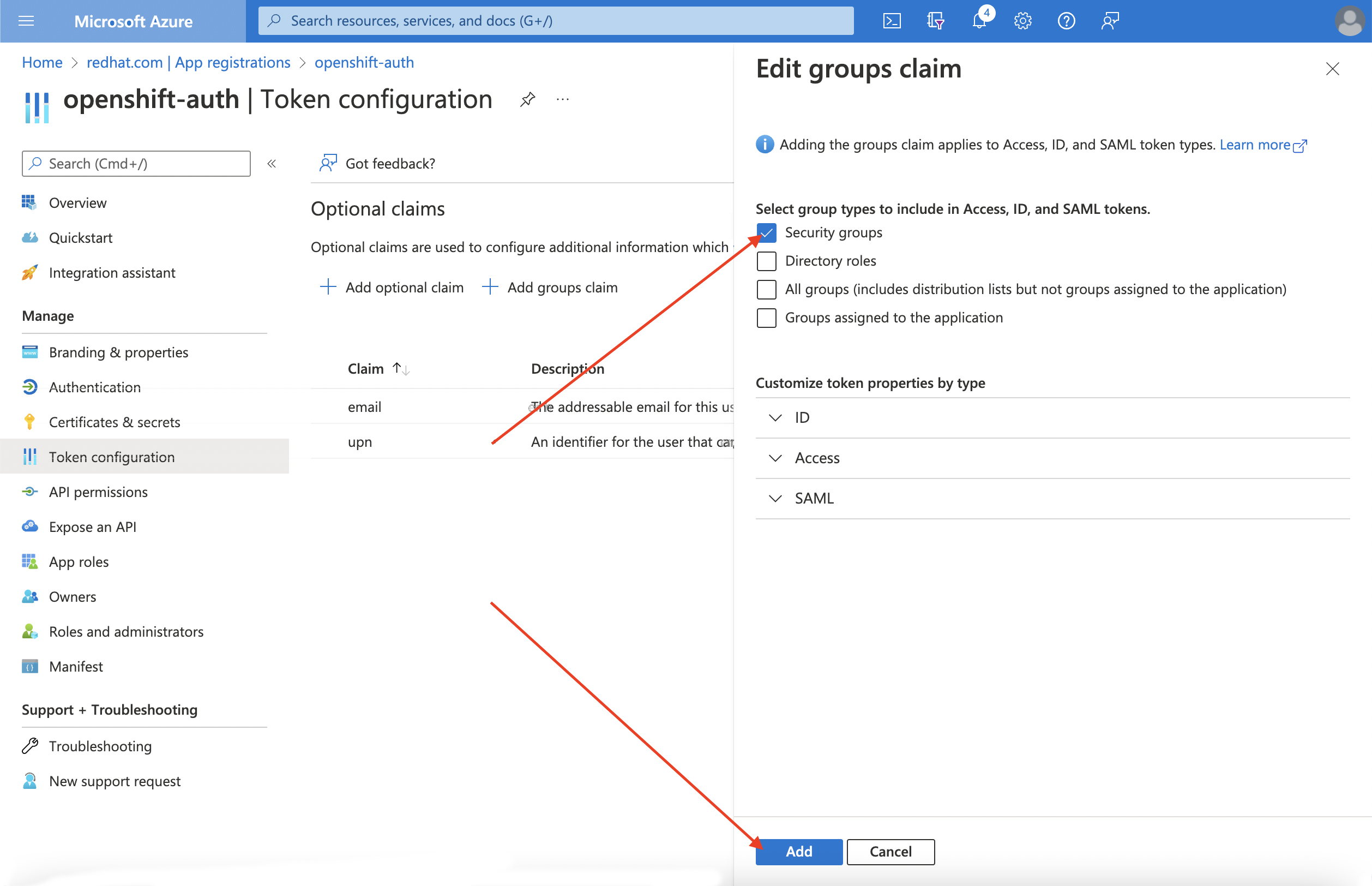

From the Token configuration sub-blade, click Add groups claim.

To configure group claims for your Entra ID application, select Security groups and then click Add.

Note: In this example, the group claim includes all of the security groups that a user is a member of. In a production environment, ensure that the group claim includes only groups that apply to Red Hat OpenShift Service on AWS.

9.4. Configuring the Red Hat OpenShift Service on AWS cluster to use Entra ID as the identity provider

You must configure Red Hat OpenShift Service on AWS to use Entra ID as its identity provider.

Although Red Hat OpenShift Service on AWS lets you configure identity providers by using OpenShift Cluster Manager, this tutorial uses the ROSA CLI to configure the cluster’s OAuth provider to use Entra ID as its identity provider. Before configuring the identity provider, set the necessary variables for the identity provider configuration.

Procedure

Create the variables by running the following command:

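CLUSTER_NAME=example-cluster
IDP_NAME=AAD
APP_ID=yyyyyyyy-yyyy-yyyy-yyyy-yyyyyyyyyyyy
CLIENT_SECRET=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
TENANT_ID=zzzzzzzz-zzzz-zzzz-zzzz-zzzzzzzzzzzz

Replace the example values as follows: CLUSTER_NAME is the name of your cluster; IDP_NAME is the identity provider name, which must match the name at the end of the OAuth callback URL (AAD in this example); APP_ID is the Application (client) ID; CLIENT_SECRET is the client secret value; and TENANT_ID is the Directory (tenant) ID.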
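Entra ID application (client) IDs and directory (tenant) IDs are GUIDs, so a quick format check can catch copy-and-paste mistakes before you create the identity provider. The following is a minimal sketch of a hypothetical `check_idp_vars` helper. It checks only that the variables are set and that `APP_ID` and `TENANT_ID` have the GUID layout; it does not validate the values against Entra ID, and it does not check the format of `CLIENT_SECRET`. The placeholder values shown above intentionally fail the check.

```shell
# Hypothetical helper: check_idp_vars
# Verifies that the identity provider variables are set and that APP_ID and
# TENANT_ID have the 8-4-4-4-12 hexadecimal GUID layout.
check_idp_vars() {
  local guid_re='^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
  local status=0 var value
  for var in CLUSTER_NAME IDP_NAME APP_ID CLIENT_SECRET TENANT_ID; do
    eval "value=\${$var:-}"   # read the variable whose name is stored in $var
    if [ -z "$value" ]; then
      echo "check_idp_vars: ${var} is not set" >&2
      status=1
    fi
  done
  for var in APP_ID TENANT_ID; do
    eval "value=\${$var:-}"
    if [ -n "$value" ] && ! printf '%s\n' "$value" | grep -Eq "$guid_re"; then
      echo "check_idp_vars: ${var} does not look like a GUID" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage:
# check_idp_vars && echo "identity provider variables look OK"
```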

Configure the cluster’s OAuth provider by running the following command. If you enabled group claims, ensure that you use the --group-claims groups argument.

If you enabled group claims, run the following command:

If you did not enable group claims, run the following command:


After a few minutes, the cluster authentication Operator reconciles your changes, and you can log in to the cluster by using Entra ID.

9.5. Granting additional permissions to individual users and groups

When you first log in, you might notice that you have very limited permissions. By default, Red Hat OpenShift Service on AWS only grants you the ability to create new projects, or namespaces, in the cluster. Other projects are restricted from view.

You must grant these additional abilities to individual users and groups.

9.5.1. Granting additional permissions to individual users

Red Hat OpenShift Service on AWS includes a significant number of preconfigured roles, including the cluster-admin role that grants full access and control over the cluster.

Procedure

Grant a user access to the cluster-admin role by running the following command:

rosa grant user cluster-admin \
  --user=<USERNAME> \
  --cluster=${CLUSTER_NAME}

Replace <USERNAME> with the Entra ID username that you want to have cluster admin permissions.

9.5.2. Granting additional permissions to individual groups

If you opted to enable group claims, the cluster OAuth provider automatically creates or updates the user’s group memberships by using the group ID. The cluster OAuth provider does not automatically create RoleBindings and ClusterRoleBindings for the groups that are created; you are responsible for creating those bindings by using your own processes.

To grant an automatically generated group access to the cluster-admin role, you must create a ClusterRoleBinding to the group ID.

Procedure

Create the ClusterRoleBinding by running the following command:

oc create clusterrolebinding cluster-admin-group \
  --clusterrole=cluster-admin \
  --group=<GROUP_ID>

Replace <GROUP_ID> with the Entra ID group ID that you want to have cluster admin permissions.

Now, any user in the specified group automatically receives cluster-admin access.

Chapter 10. Tutorial: Using AWS Secrets Manager CSI on Red Hat OpenShift Service on AWS with STS

The AWS Secrets and Configuration Provider (ASCP) provides a way to expose AWS Secrets Manager secrets as Kubernetes storage volumes. With the ASCP, you can store and manage your secrets in Secrets Manager and then retrieve them through your workloads running on Red Hat OpenShift Service on AWS.

10.1. Prerequisites

Ensure that you have the following resources and tools before starting this process:

- A Red Hat OpenShift Service on AWS cluster deployed with STS

- Helm 3

- aws CLI
- oc CLI
- jq CLI

10.1.1. Additional environment requirements

Log in to your Red Hat OpenShift Service on AWS cluster by running the following command:

oc login --token=<your-token> --server=<your-server-url>

You can find your login token by opening your cluster’s web console from Red Hat OpenShift Cluster Manager and selecting Copy login command.

Validate that your cluster has STS by running the following command:

oc get authentication.config.openshift.io cluster -o json \
  | jq .spec.serviceAccountIssuer

Example output

"https://xxxxx.cloudfront.net/xxxxx"

If your output is different, do not proceed. See the Red Hat documentation on creating an STS cluster before continuing this process.
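To make this check scriptable, the sketch below defines a hypothetical `has_sts_issuer` helper that inspects the value printed by the `jq` command above and succeeds only when it is an `https://` URL, as in the example output. It assumes that a cluster without STS prints an empty string or `null` for this field; treat a failure as a signal to stop and investigate rather than as a definitive diagnosis.

```shell
# Hypothetical helper: has_sts_issuer <jq-output>
# Succeeds if the value (quoted by jq or unquoted) starts with https://
# followed by at least one more character.
has_sts_issuer() {
  case "$1" in
    '"https://'?*|'https://'?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
# issuer=$(oc get authentication.config.openshift.io cluster -o json | jq .spec.serviceAccountIssuer)
# has_sts_issuer "$issuer" || echo "This cluster does not appear to use STS; do not proceed."
```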

Set the