
Chapter 1. Monitoring overview

1.1. About Red Hat OpenShift Service on AWS monitoring

In Red Hat OpenShift Service on AWS, you can monitor your own projects in isolation from Red Hat Site Reliability Engineering (SRE) platform metrics, without the need for an additional monitoring solution.

The Red Hat OpenShift Service on AWS (ROSA) monitoring stack is based on the Prometheus open source project and its wider ecosystem.

1.2. Understanding the monitoring stack

The monitoring stack includes the following components:

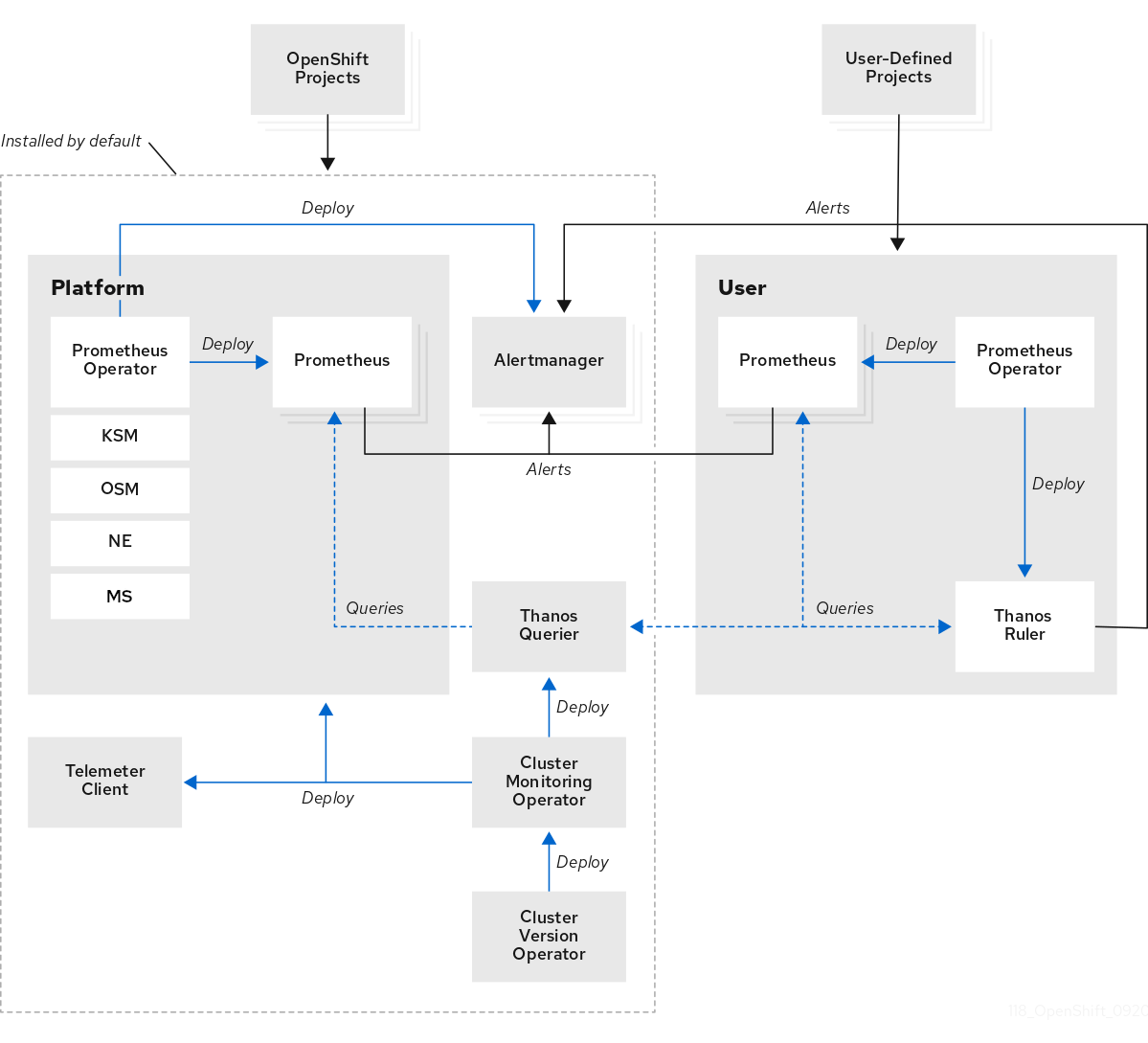

Default platform monitoring components. A set of platform monitoring components are installed in the

openshift-monitoringproject by default during a Red Hat OpenShift Service on AWS installation. Red Hat Site Reliability Engineers (SRE) use these components to monitor core cluster components including Kubernetes services. This includes critical metrics, such as CPU and memory, collected from all of the workloads in every namespace.These components are illustrated in the Installed by default section in the following diagram.

-

Components for monitoring user-defined projects. A set of user-defined project monitoring components are installed in the

openshift-user-workload-monitoringproject by default during a Red Hat OpenShift Service on AWS installation. You can use these components to monitor services and pods in user-defined projects. These components are illustrated in the User section in the following diagram.

1.2.1. Default monitoring targets

The following are examples of targets monitored by Red Hat Site Reliability Engineers (SRE) in your Red Hat OpenShift Service on AWS cluster:

- CoreDNS

- etcd

- HAProxy

- Image registry

- Kubelets

- Kubernetes API server

- Kubernetes controller manager

- Kubernetes scheduler

The exact list of targets can vary depending on your cluster capabilities and installed components.

1.2.2. Components for monitoring user-defined projects

Red Hat OpenShift Service on AWS includes an optional enhancement to the monitoring stack that enables you to monitor services and pods in user-defined projects. This feature includes the following components:

| Component | Description |
|---|---|
| Prometheus Operator | The Prometheus Operator (PO) in the `openshift-user-workload-monitoring` project creates, configures, and manages Prometheus and Thanos Ruler instances in the same project. |
| Prometheus | Prometheus is the monitoring system through which monitoring is provided for user-defined projects. Prometheus sends alerts to Alertmanager for processing. |
| Thanos Ruler | The Thanos Ruler is a rule evaluation engine for Prometheus that is deployed as a separate process. In Red Hat OpenShift Service on AWS, Thanos Ruler provides rule and alerting evaluation for the monitoring of user-defined projects. |
| Alertmanager | The Alertmanager service handles alerts received from Prometheus and Thanos Ruler. Alertmanager is also responsible for sending user-defined alerts to external notification systems. Deploying this service is optional. |

All of these components are monitored by the stack and are automatically updated when Red Hat OpenShift Service on AWS is updated.

1.2.3. Monitoring targets for user-defined projects

Monitoring is enabled by default for Red Hat OpenShift Service on AWS user-defined projects. You can monitor:

- Metrics provided through service endpoints in user-defined projects.

- Pods running in user-defined projects.
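As an illustration of what "metrics provided through service endpoints" can look like, the following minimal Python sketch renders a counter in the Prometheus text exposition format, which a service would typically serve over HTTP for Prometheus to scrape. The metric name `http_requests_total`, its labels, and the helper `render_metrics` are hypothetical and chosen only for this example. A real application would more likely use a Prometheus client library than format the text by hand.

```python
def render_metrics(name, help_text, samples):
    """Render one counter metric in the Prometheus text exposition format.

    `samples` maps a tuple of (label, value) pairs to a numeric sample value.
    Label values are not escaped here, so this sketch assumes they contain
    no quotes, backslashes, or newlines.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in sorted(samples.items()):
        label_str = ",".join(f'{k}="{v}"' for k, v in labels)
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical request counts keyed by their label sets.
samples = {
    (("method", "GET"), ("code", "200")): 1027,
    (("method", "POST"), ("code", "500")): 3,
}
metrics_text = render_metrics("http_requests_total", "Total HTTP requests.", samples)
print(metrics_text)
```

Each sample line pairs a metric name and label set with a value. Prometheus turns every distinct label set into its own time series when it scrapes the endpoint.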

1.2.4. The monitoring stack in high-availability clusters

By default, in multi-node clusters, the following components run in high-availability (HA) mode to prevent data loss and service interruption:

- Prometheus

- Alertmanager

- Thanos Ruler

Each of these components is replicated across two pods that run on separate nodes. This means that the monitoring stack can tolerate the loss of one pod.

- Prometheus in HA mode

- Both replicas independently scrape the same targets and evaluate the same rules.

- The replicas do not communicate with each other. Therefore, data might differ between the pods.

- Alertmanager in HA mode

- The two replicas synchronize notification and silence states with each other. This ensures that each notification is sent at least once.

- If the replicas fail to communicate or if there is an issue on the receiving side, notifications are still sent, but they might be duplicated.

Prometheus, Alertmanager, and Thanos Ruler are stateful components. To ensure high availability, you must configure them with persistent storage.
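Because Alertmanager in HA mode delivers each notification at least once, a receiver might see the same notification twice. The following Python sketch shows one way a receiver could tolerate duplicates by remembering a key for each notification it has already handled. The alert fields used here (`fingerprint`, `status`, `startsAt`) are modeled on the general shape of an Alertmanager webhook alert but should be treated as assumptions, and the `NotificationDeduplicator` class is hypothetical.

```python
class NotificationDeduplicator:
    """Drop notifications that were already handled (hypothetical helper)."""

    def __init__(self):
        self._seen = set()

    def handle(self, alert):
        # Assumed identity of a notification: which alert, which state, since when.
        key = (alert["fingerprint"], alert["status"], alert["startsAt"])
        if key in self._seen:
            return False  # duplicate, for example sent by both replicas
        self._seen.add(key)
        return True  # first delivery, so the receiver should act on it


dedup = NotificationDeduplicator()
alert = {"fingerprint": "a1b2c3", "status": "firing", "startsAt": "2024-01-01T00:00:00Z"}

# The same firing alert arrives twice, then a resolved notification follows.
results = [dedup.handle(alert), dedup.handle(dict(alert))]
resolved = dedup.handle({**alert, "status": "resolved"})
print(results, resolved)
```

This sketch keeps its state in memory and never expires keys, so it would also suppress legitimate repeat notifications for an alert that is still firing. A real receiver would need an expiry policy that fits its notification settings.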

1.3. Glossary of common terms for Red Hat OpenShift Service on AWS monitoring

This glossary defines common terms that are used in Red Hat OpenShift Service on AWS monitoring.

- Alertmanager

- Alertmanager handles alerts received from Prometheus. Alertmanager is also responsible for sending the alerts to external notification systems.

- Alerting rules

- Alerting rules contain a set of conditions that outline a particular state within a cluster. Alerts are triggered when those conditions are true. An alerting rule can be assigned a severity that defines how the alerts are routed.

- Cluster Monitoring Operator

- The Cluster Monitoring Operator (CMO) is a central component of the monitoring stack. It deploys and manages Prometheus instances, the Thanos Querier, the Telemeter Client, and metrics targets to ensure that they are up to date. The CMO is deployed by the Cluster Version Operator (CVO).

- Cluster Version Operator

- The Cluster Version Operator (CVO) manages the lifecycle of cluster Operators, many of which are installed in Red Hat OpenShift Service on AWS by default.

- config map

- A config map provides a way to inject configuration data into pods. You can reference the data stored in a config map in a volume of type `ConfigMap`. Applications running in a pod can use this data.

- Container

- A container is a lightweight and executable image that includes software and all its dependencies. Containers virtualize the operating system. As a result, you can run containers anywhere from a data center to a public or private cloud as well as a developer’s laptop.

- custom resource (CR)

- A CR is an extension of the Kubernetes API. You can create custom resources.

- etcd

- etcd is the key-value store for Red Hat OpenShift Service on AWS, which stores the state of all resource objects.

- Fluentd

- Fluentd is a log collector that resides on each Red Hat OpenShift Service on AWS node. It gathers application, infrastructure, and audit logs and forwards them to different outputs.

Note: Fluentd is deprecated and is planned to be removed in a future release. Red Hat provides bug fixes and support for this feature during the current release lifecycle, but this feature no longer receives enhancements. As an alternative to Fluentd, you can use Vector.

- Kubelets

- Kubelets run on nodes and read the container manifests. They ensure that the defined containers have started and are running.

- Kubernetes API server

- Kubernetes API server validates and configures data for the API objects.

- Kubernetes controller manager

- Kubernetes controller manager governs the state of the cluster.

- Kubernetes scheduler

- Kubernetes scheduler allocates pods to nodes.

- labels

- Labels are key-value pairs that you can use to organize and select subsets of objects such as a pod.

- Metrics Server

- The Metrics Server monitoring component collects resource metrics and exposes them in the `metrics.k8s.io` Metrics API service for use by other tools and APIs, which frees the core platform Prometheus stack from handling this functionality.

- node

- A worker machine in the Red Hat OpenShift Service on AWS cluster. A node is either a virtual machine (VM) or a physical machine.

- Operator

- The preferred method of packaging, deploying, and managing a Kubernetes application in a Red Hat OpenShift Service on AWS cluster. An Operator takes human operational knowledge and encodes it into software that is packaged and shared with customers.

- Operator Lifecycle Manager (OLM)

- OLM helps you install, update, and manage the lifecycle of Kubernetes native applications. OLM is an open source toolkit designed to manage Operators in an effective, automated, and scalable way.

- Persistent storage

- Stores the data even after the device is shut down. Kubernetes uses persistent volumes to store the application data.

- Persistent volume claim (PVC)

- You can use a PVC to mount a PersistentVolume into a Pod. You can access the storage without knowing the details of the cloud environment.

- pod

- The pod is the smallest logical unit in Kubernetes. A pod consists of one or more containers that run on a worker node.

- Prometheus

- Prometheus is the monitoring system on which the Red Hat OpenShift Service on AWS monitoring stack is based. Prometheus is a time-series database and a rule evaluation engine for metrics. Prometheus sends alerts to Alertmanager for processing.

- Prometheus Operator

- The Prometheus Operator (PO) in the `openshift-monitoring` project creates, configures, and manages platform Prometheus and Alertmanager instances. It also automatically generates monitoring target configurations based on Kubernetes label queries.

- Silences

- A silence can be applied to an alert to prevent notifications from being sent when the conditions for an alert are true. You can mute an alert after the initial notification, while you work on resolving the underlying issue.

- storage

- Red Hat OpenShift Service on AWS supports many types of storage on AWS. You can manage container storage for persistent and non-persistent data in a Red Hat OpenShift Service on AWS cluster.

- Thanos Ruler

- The Thanos Ruler is a rule evaluation engine for Prometheus that is deployed as a separate process. In Red Hat OpenShift Service on AWS, Thanos Ruler provides rule and alerting evaluation for the monitoring of user-defined projects.

- Vector

- Vector is a log collector that deploys to each Red Hat OpenShift Service on AWS node. It collects log data from each node, transforms the data, and forwards it to configured outputs.

- web console

- A user interface (UI) to manage Red Hat OpenShift Service on AWS.
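To make a few of the glossary terms above concrete (labels, alerting rules, and silences), the following Python sketch models how they relate to each other. It is a simplified illustration under stated assumptions, not how Prometheus or Alertmanager is implemented: real alerting rules are written as PromQL expressions and real silences support richer matchers, whereas this sketch uses a plain threshold and equality-only matching. The names `AlertingRule`, `evaluate`, `matches`, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


def matches(selector, labels):
    """Equality-based selection: every selector pair must be present in labels."""
    return all(labels.get(k) == v for k, v in selector.items())


@dataclass
class AlertingRule:
    name: str
    threshold: float  # condition: the rule is true when a value exceeds this
    severity: str     # attached to each alert so it can be used for routing


def evaluate(rule, series):
    """Return one alert (a label dict) per series whose value breaks the rule."""
    return [
        {**labels, "alertname": rule.name, "severity": rule.severity}
        for labels, value in series
        if value > rule.threshold
    ]


# Hypothetical CPU usage ratios, each identified by its labels.
series = [
    ({"namespace": "shop", "pod": "web-1"}, 0.95),
    ({"namespace": "shop", "pod": "web-2"}, 0.40),
    ({"namespace": "batch", "pod": "job-7"}, 0.99),
]
rule = AlertingRule(name="HighCpu", threshold=0.9, severity="warning")
alerts = evaluate(rule, series)

# A silence mutes notifications for matching alerts while the issue is worked on.
silence = {"alertname": "HighCpu", "namespace": "batch"}
to_notify = [a for a in alerts if not matches(silence, a)]
print([a["pod"] for a in to_notify])
```

In this sketch two series break the rule, but the silence matches the alert from the `batch` namespace, so only the alert for `web-1` would produce a notification.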