
Chapter 8. Managing Snapshots

The Red Hat Gluster Storage Snapshot feature enables you to create point-in-time copies of Red Hat Gluster Storage volumes, which you can use to protect data. Users can directly access these read-only Snapshot copies to recover from accidental deletion, corruption, or modification of data.

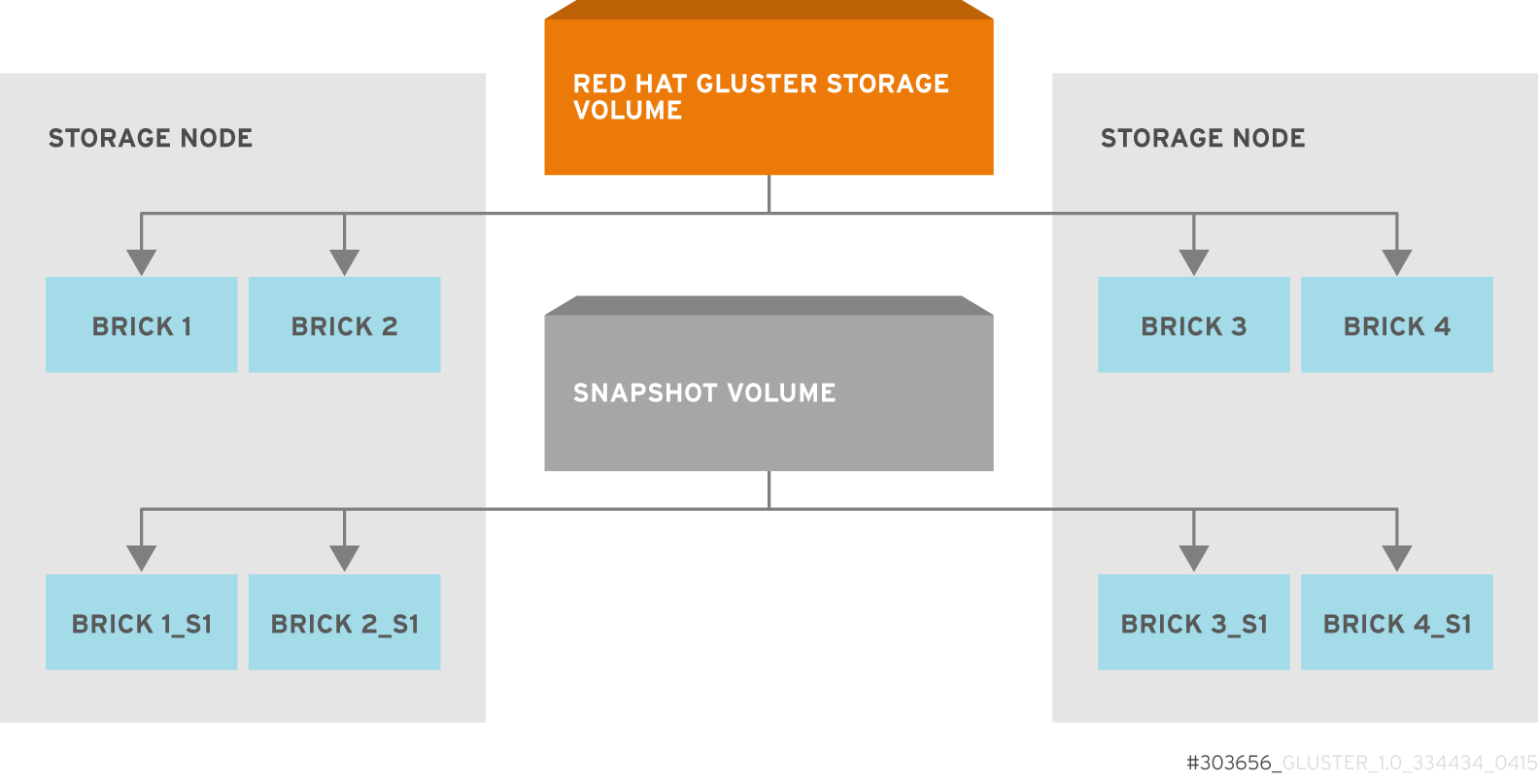

Figure 8.1. Snapshot Architecture

In the Snapshot Architecture diagram, a Red Hat Gluster Storage volume consists of multiple bricks (Brick1, Brick2, and so on) spread across one or more nodes, and each brick is made up of an independent thin Logical Volume (LV). When a snapshot of a volume is taken, a snapshot of each brick's LV is taken, which creates another brick. Brick1_s1 is an identical image of Brick1. Similarly, an identical image of each brick is created, and these newly created bricks together form a snapshot volume.
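The following dry-run sketch makes the brick-to-snapshot mapping in the diagram concrete by printing the LVM thin-snapshot command that would produce each "_s1" brick on one node. It is only an illustration, not a procedure to follow: Red Hat Gluster Storage creates the per-brick snapshots itself when you take a volume snapshot, and the function name, the volume group "vg_bricks", and the LV names are hypothetical.

```shell
# Dry-run illustration only: print (do not run) one LVM thin-snapshot
# command per brick LV. A snapshot of a thin LV needs no --size argument.
# The function name, the "_s1" suffix, and the VG/LV names are hypothetical.
print_brick_snapshots() {
    local vg=$1 brick_lv
    shift
    for brick_lv in "$@"; do
        echo "lvcreate --snapshot --name ${brick_lv}_s1 ${vg}/${brick_lv}"
    done
}

# One node holding two bricks in a hypothetical volume group "vg_bricks".
print_brick_snapshots vg_bricks brick1 brick2
```

On a real deployment the bricks usually live on different nodes, so each node would only handle the snapshots of its own bricks.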

Some features of snapshots are:

- Crash Consistency

A crash-consistent snapshot is captured at a particular point in time. When a crash-consistent snapshot is restored, the data is identical to what it was when the snapshot was taken.

Note

Currently, application-level consistency is not supported.

- Online Snapshot

Snapshots are taken online, so the file system and its associated data remain available to clients even while the snapshot is being taken.

- Barrier

To guarantee crash consistency, some file operations are blocked during a snapshot operation.

These file operations remain blocked until the snapshot is complete; all other file operations pass through. The barrier has a default time-out of 2 minutes: if the snapshot is not complete within that time, the blocked file operations are unbarriered. If the barrier is released before the snapshot is complete, the snapshot operation fails. This ensures that the snapshot is in a consistent state.
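The barrier rule can be summarized as a small decision model. The sketch below is a hypothetical illustration of that rule only; it does not interact with Gluster, the function name is invented, and treating a snapshot that takes exactly the time-out as a failure is an assumption about the boundary case.

```shell
# Hypothetical model of the barrier rule; it does not interact with Gluster.
# $1 = seconds the snapshot needs, $2 = barrier time-out in seconds
# (defaults to the documented 2 minutes, that is, 120 seconds).
barrier_outcome() {
    local snapshot_secs=$1
    local timeout_secs=${2:-120}
    if [ "$snapshot_secs" -lt "$timeout_secs" ]; then
        # Snapshot completed while the selected operations were still blocked.
        echo "snapshot succeeded"
    else
        # Time-out expired first: blocked operations are unbarriered and the
        # snapshot fails rather than produce an inconsistent copy.
        echo "snapshot failed"
    fi
}

barrier_outcome 45        # prints: snapshot succeeded
barrier_outcome 150       # prints: snapshot failed
barrier_outcome 150 300   # hypothetical longer time-out; prints: snapshot succeeded
```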

Note

Taking a snapshot of a Red Hat Gluster Storage volume that hosts virtual machine images is not recommended. A hypervisor-assisted snapshot of the virtual machine is more suitable for this use case.

8.1. Prerequisites


Before using this feature, ensure that the following prerequisites are met:

- Snapshots are based on thinly provisioned LVM. Ensure that the volume is based on LVM2. Red Hat Gluster Storage is supported on Red Hat Enterprise Linux 6.7 and later, Red Hat Enterprise Linux 7.1 and later, and Red Hat Enterprise Linux 8.2 and later. All of these versions of Red Hat Enterprise Linux use LVM2 by default. For more information, see https://access.redhat.com/site/documentation/en-US/Red_Hat_Enterprise_Linux/6/html/Logical_Volume_Manager_Administration/thinprovisioned_volumes.html

Important

Red Hat Gluster Storage is not supported on Red Hat Enterprise Linux 6 (RHEL 6) from 3.5 Batch Update 1 onwards. See the Version Details table in the section Red Hat Gluster Storage Software Components and Versions of the Installation Guide.

- Each brick must be an independent thinly provisioned logical volume (LV).

- All bricks must be online for snapshot creation.

- The logical volume which contains the brick must not contain any data other than the brick.

- Linear LVM and thin LV are supported with Red Hat Gluster Storage 3.4 and later. For more information, see https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html-single/logical_volume_manager_administration/index#LVM_components
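One way to check the thin-provisioning prerequisite on a node is to inspect the LVM lv_attr field of each brick LV, whose first character is "V" for a thin volume. The sketch below assumes the standard LVM tools are installed; the helper name and the dummyvg/dummylv names in the usage comment are hypothetical.

```shell
# Succeeds if an LVM lv_attr string describes a thin volume. The first
# lv_attr character is the volume type: "V" is a thin volume, "t" a thin
# pool, and "-" a plain (for example, linear) LV.
is_thin_lv_attr() {
    case "$1" in
        V*) return 0 ;;
        *)  return 1 ;;
    esac
}

# Hypothetical usage on a node (needs the LVM tools, usually as root):
#   attr=$(lvs --noheadings -o lv_attr dummyvg/dummylv | tr -d ' ')
#   is_thin_lv_attr "$attr" && echo "dummylv is thinly provisioned"
```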

Recommended Setup

In addition to the recommendations below, make sure to read Chapter 19, Tuning for Performance, for enhancing snapshot performance. The recommended setup for using snapshots is as follows:

- For each volume brick, create a dedicated thin pool that contains the brick of the volume and its (thin) brick snapshots. With the current thin-provisioning (thin-p) design, avoid placing the bricks of different Red Hat Gluster Storage volumes in the same thin pool, as this reduces the performance of snapshot operations, such as snapshot delete, on other unrelated volumes.

- The recommended thin pool chunk size is 256KB. There might be exceptions in cases where detailed information about the customer's workload is available.

- The recommended pool metadata size is 0.1% of the thin pool size for a chunk size of 256KB or larger. In special cases where a chunk size smaller than 256KB is recommended, use a pool metadata size of 0.5% of the thin pool size.
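The metadata sizing rule above is simple arithmetic. The hypothetical helper below applies it to a pool size in MiB, rounds up to a whole MiB, and caps the result at the 16 G maximum that the example below uses; the function name and the choice of MiB units are assumptions made for illustration.

```shell
# Hypothetical helper: recommended thin-pool metadata size in MiB.
#   $1 = thin pool size in MiB
#   $2 = chunk size in KiB
recommended_metadata_mib() {
    local pool_mib=$1 chunk_kib=$2 meta_mib
    if [ "$chunk_kib" -ge 256 ]; then
        # 0.1% of the pool, rounded up to a whole MiB.
        meta_mib=$(( (pool_mib + 999) / 1000 ))
    else
        # 0.5% of the pool for the special small-chunk cases, rounded up.
        meta_mib=$(( (pool_mib * 5 + 999) / 1000 ))
    fi
    # Do not exceed the 16 GiB (16384 MiB) maximum metadata size.
    if [ "$meta_mib" -gt 16384 ]; then
        meta_mib=16384
    fi
    echo "$meta_mib"
}

recommended_metadata_mib 1048576 256   # 1 TiB pool, 256 KiB chunks; prints 1049
```

For a 1 TiB pool with 256 KiB chunks the percentage rule therefore gives roughly 1 GiB of metadata; the example below instead allocates the 16 G maximum.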

For Example

To create a brick from the device /dev/sda1:

- Create a physical volume (PV) by using the pvcreate command.

pvcreate /dev/sda1

Use the correct dataalignment option based on your device. For more information, see Section 19.2, “Brick Configuration”.

- Create a Volume Group (VG) from the PV using the following command:

vgcreate dummyvg /dev/sda1

- Create a thin pool using the following command:

lvcreate --size 1T --thin dummyvg/dummypool --chunksize 256k --poolmetadatasize 16G --zero n

A thin pool of size 1 TB is created, using a chunk size of 256 KB. The maximum pool metadata size of 16 G is used.

- Create a thinly provisioned volume from the previously created pool using the following command:

lvcreate --virtualsize 1G --thin dummyvg/dummypool --name dummylv

- Create an XFS file system on this thin LV, using the recommended options. For example:

mkfs.xfs -f -i size=512 -n size=8192 /dev/dummyvg/dummylv

- Mount this logical volume and use the mount path as the brick.

mount /dev/dummyvg/dummylv /mnt/brick1
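The steps above can be collected into one function, sketched below under stated assumptions. The function and helper names and the DRY_RUN switch are hypothetical; the commands and sizes are the ones from the example, and the extra mkdir step is an assumption added because mount fails if the mount point does not exist.

```shell
# Sketch of the brick-creation steps as one function. The function and helper
# names and the DRY_RUN switch are hypothetical. With DRY_RUN=1 (the default)
# each command is only printed; DRY_RUN=0 runs them, which needs root and
# destroys any data on the device.
run_cmd() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_thin_brick() {
    local dev=$1 vg=$2 pool=$3 lv=$4 mnt=$5
    # Add a --dataalignment value suited to your device (Section 19.2).
    run_cmd pvcreate "$dev" || return
    run_cmd vgcreate "$vg" "$dev" || return
    # 1T thin pool, 256k chunks, 16G metadata, new blocks not zeroed.
    run_cmd lvcreate --size 1T --thin "$vg/$pool" --chunksize 256k \
        --poolmetadatasize 16G --zero n || return
    run_cmd lvcreate --virtualsize 1G --thin "$vg/$pool" --name "$lv" || return
    run_cmd mkfs.xfs -f -i size=512 -n size=8192 "/dev/$vg/$lv" || return
    # Assumption: the mount point might not exist yet, so create it first.
    run_cmd mkdir -p "$mnt" || return
    run_cmd mount "/dev/$vg/$lv" "$mnt"
}

DRY_RUN=1 create_thin_brick /dev/sda1 dummyvg dummypool dummylv /mnt/brick1
```

Reviewing the printed commands before switching to DRY_RUN=0 is a cheap safeguard, because pvcreate and mkfs.xfs -f overwrite whatever is on the target device.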