5.5. Creating Replicated Volumes

A replicated volume creates copies of files across multiple bricks in the volume. Use replicated volumes in environments where high availability and high reliability are critical.

Use gluster volume create to create different types of volumes, and gluster volume info to verify successful volume creation.

Prerequisites

- A trusted storage pool has been created, as described in Section 4.1, “Adding Servers to the Trusted Storage Pool”.

- Understand how to start and stop volumes, as described in Section 5.10, “Starting Volumes”.

Warning

Red Hat no longer recommends the use of two-way replication without arbiter bricks. Two-way replication without arbiter bricks is deprecated as of Red Hat Gluster Storage 3.4 and is no longer supported. This change affects both replicated and distributed-replicated volumes that do not use arbiter bricks.

Two-way replication without arbiter bricks is being deprecated because it does not provide adequate protection from split-brain conditions. Even in distributed-replicated configurations, two-way replication cannot ensure that the correct copy of a conflicting file is selected without the use of a tie-breaking node.

While a dummy node can be used as an interim solution for this problem, Red Hat strongly recommends that all volumes that currently use two-way replication without arbiter bricks are migrated to use either arbitrated replication or three-way replication.

Instructions for migrating a two-way replicated volume without arbiter bricks to an arbitrated replicated volume are available in Section 5.7.5, “Converting to an arbitrated volume”. Information about three-way replication is available in Section 5.5.1, “Creating Three-way Replicated Volumes” and Section 5.6.1, “Creating Three-way Distributed Replicated Volumes”.

5.5.1. Creating Three-way Replicated Volumes

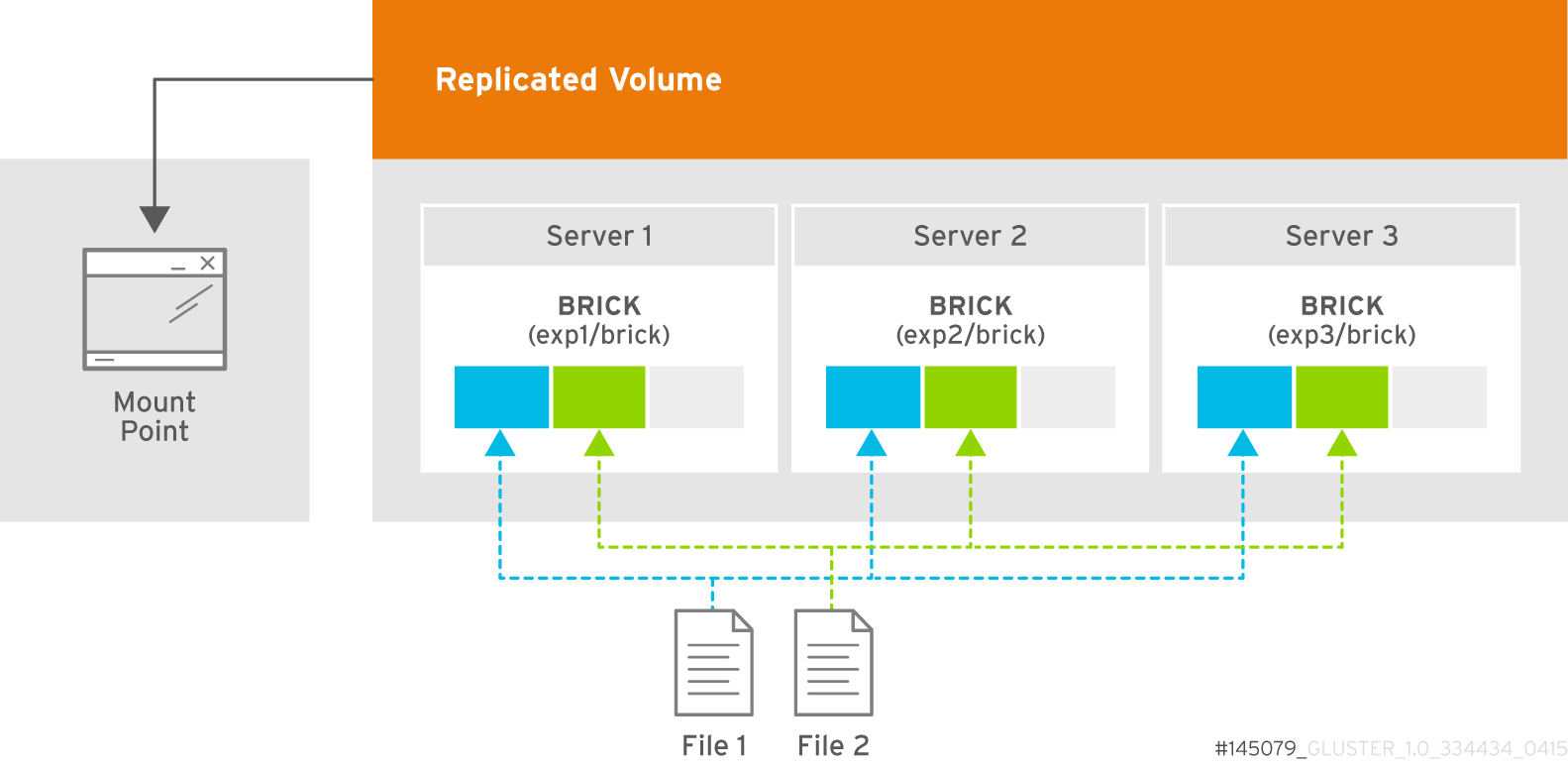

A three-way replicated volume creates three copies of files across multiple bricks in the volume. The number of bricks must be equal to the replica count for a replicated volume. To protect against server and disk failures, it is recommended that the bricks of the volume are on different servers.
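The two rules above (brick count equals replica count, and bricks on different servers) can be checked before a volume is created. The following is a minimal sketch, not part of the gluster CLI: the function name check_replica_bricks is hypothetical, and it assumes every brick is written as HOST:/path.

```shell
#!/bin/sh
# Hypothetical helper (not a gluster command): validates a brick list for a
# pure replicated volume before `gluster volume create` is run.
# Usage: check_replica_bricks REPLICA_COUNT HOST:/path [HOST:/path ...]
check_replica_bricks() {
    replica=$1
    shift
    # For a pure replicated volume, brick count must equal the replica count.
    if [ "$#" -ne "$replica" ]; then
        echo "error: expected $replica bricks, got $#"
        return 1
    fi
    # Take the HOST part of each HOST:/path brick and look for repeated hosts.
    dupes=$(for brick in "$@"; do echo "${brick%%:*}"; done | sort | uniq -d)
    if [ -n "$dupes" ]; then
        # Placing bricks on one server is discouraged, so warn instead of failing hard.
        echo "warning: more than one brick on: $(echo $dupes)"
        return 2
    fi
    echo "ok"
}

check_replica_bricks 3 server1:/rhgs/brick1 server2:/rhgs/brick2 server3:/rhgs/brick3
```

The check only compares host names as typed, so two different names that resolve to the same machine would not be detected.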

Synchronous three-way replication is now fully supported in Red Hat Gluster Storage. It is recommended that three-way replicated volumes use JBOD, but use of hardware RAID with three-way replicated volumes is also supported.

Figure 5.2. Illustration of a Three-way Replicated Volume

Creating three-way replicated volumes

- Run the gluster volume create command to create the replicated volume.

  The syntax is:

  # gluster volume create NEW-VOLNAME [replica COUNT] [transport tcp | rdma (Deprecated) | tcp,rdma] NEW-BRICK...

  The default value for transport is tcp. Other options can be passed such as auth.allow or auth.reject. See Section 11.1, “Configuring Volume Options” for a full list of parameters.

  Example 5.3. Replicated Volume with Three Storage Servers

  The order in which bricks are specified determines how bricks are replicated with each other. For example, every n bricks, where n is the replica count (3 in this example), form a replica set. This is illustrated in Figure 5.2, “Illustration of a Three-way Replicated Volume”.

  # gluster v create glustervol replica 3 transport tcp server1:/rhgs/brick1 server2:/rhgs/brick2 server3:/rhgs/brick3
  volume create: glustervol: success: please start the volume to access data

- Run gluster volume start VOLNAME to start the volume.

  # gluster v start glustervol
  volume start: glustervol: success

- Run the gluster volume info command to optionally display the volume information.
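Because brick order decides which bricks replicate each other, it can help to preview the grouping before running the create command. The sketch below is illustrative only: show_replica_sets is a hypothetical helper that applies the "every n bricks form a replica set" rule to a brick list and never contacts a cluster.

```shell
#!/bin/sh
# Illustrative only: prints which bricks would form each replica set,
# given the replica count and the bricks in command-line order.
# Usage: show_replica_sets REPLICA_COUNT BRICK...
show_replica_sets() {
    replica=$1
    shift
    set_no=1   # number of the replica set currently being filled
    in_set=0   # bricks already assigned to the current set
    line=""
    for brick in "$@"; do
        line="$line $brick"
        in_set=$((in_set + 1))
        if [ "$in_set" -eq "$replica" ]; then
            echo "replica set $set_no:$line"
            set_no=$((set_no + 1))
            in_set=0
            line=""
        fi
    done
    # Leftover bricks mean the brick count is not a multiple of the replica count.
    if [ "$in_set" -ne 0 ]; then
        echo "incomplete set:$line"
        return 1
    fi
}

show_replica_sets 3 server1:/rhgs/brick1 server2:/rhgs/brick2 server3:/rhgs/brick3
```

With exactly three bricks and a replica count of 3, a single replica set is printed. Passing six bricks would print two sets, which corresponds to a distributed replicated layout rather than the pure replicated volume described here.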

Important

By default, client-side quorum is enabled on three-way replicated volumes to minimize split-brain scenarios. For more information on client-side quorum, see Section 11.15.1.2, “Configuring Client-Side Quorum”.

5.5.2. Creating Sharded Replicated Volumes

Sharding breaks files into smaller pieces so that they can be distributed across the bricks that comprise a volume. This is enabled on a per-volume basis.

When sharding is enabled, files written to a volume are divided into pieces. The size of the pieces depends on the value of the volume's features.shard-block-size parameter. The first piece is written to a brick and given a GFID like a normal file. Subsequent pieces are distributed evenly between bricks in the volume (sharded bricks are distributed by default), but they are written to that brick's .shard directory, and are named with the GFID and a number indicating the order of the pieces. For example, if a file is split into four pieces, the first piece is named GFID and stored normally. The other three pieces are named GFID.1, GFID.2, and GFID.3 respectively. They are placed in the .shard directory and distributed evenly between the various bricks in the volume.
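The naming scheme can be worked through with simple arithmetic. The sketch below is a simplified model, not gluster code: list_shard_names is a hypothetical function, sizes are given in whole megabytes, and the piece count is assumed to be the file size divided by the shard block size, rounded up.

```shell
#!/bin/sh
# Illustrative arithmetic only; it does not touch a real volume.
# Usage: list_shard_names GFID FILE_SIZE_MB SHARD_BLOCK_SIZE_MB
list_shard_names() {
    gfid=$1
    size_mb=$2
    block_mb=$3
    # Number of pieces = ceiling(size / block size), with at least one piece.
    pieces=$(( (size_mb + block_mb - 1) / block_mb ))
    if [ "$pieces" -lt 1 ]; then
        pieces=1
    fi
    # The first piece is named with the plain GFID and stored normally.
    echo "$gfid"
    # The remaining pieces go under .shard and are numbered from 1.
    i=1
    while [ "$i" -lt "$pieces" ]; do
        echo ".shard/$gfid.$i"
        i=$((i + 1))
    done
}

# A 2048 MB file with a 512 MB block size splits into four pieces.
list_shard_names GFID 2048 512
```

For the sample call, the function prints GFID followed by .shard/GFID.1, .shard/GFID.2, and .shard/GFID.3, which matches the four-piece example in the text.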

Because sharding distributes files across the bricks in a volume, it lets you store files with a larger aggregate size than any individual brick in the volume. Because the file pieces are smaller, heal operations are faster, and geo-replicated deployments can sync the small pieces of a file that have changed, rather than syncing the entire aggregate file.

Sharding also lets you increase volume capacity by adding bricks to a volume in an ad-hoc fashion.

5.5.2.1. Supported use cases

Sharding has one supported use case: in the context of providing Red Hat Gluster Storage as a storage domain for Red Hat Enterprise Virtualization, to provide storage for live virtual machine images. Note that sharding is also a requirement for this use case, as it provides significant performance improvements over previous implementations.

Important

Quotas are not compatible with sharding.

Important

Sharding is supported in new deployments only, as there is currently no upgrade path for this feature.

Example 5.4. Three-way replicated sharded volume

- Set up a three-way replicated volume, as described in the Red Hat Gluster Storage Administration Guide: https://access.redhat.com/documentation/en-US/red_hat_gluster_storage/3.5/html/Administration_Guide/sect-Creating_Replicated_Volumes.html#Creating_Three-way_Replicated_Volumes.

- Before you start your volume, enable sharding on the volume.

  # gluster volume set test-volume features.shard enable

- Start the volume and ensure it is working as expected.

  # gluster volume start test-volume
  # gluster volume info test-volume

5.5.2.2. Configuration Options

Sharding is enabled and configured at the volume level. The configuration options are as follows.

- features.shard - Enables or disables sharding on a specified volume. Valid values are enable and disable. The default value is disable.

  # gluster volume set volname features.shard enable

  Note that this only affects files created after this command is run; files created before this command is run retain their old behaviour.

- features.shard-block-size - Specifies the maximum size of the file pieces when sharding is enabled. The supported value for this parameter is 512MB.

  # gluster volume set volname features.shard-block-size 32MB

  Note that this only affects files created after this command is run; files created before this command is run retain their old behaviour.
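After setting these options, one way to confirm them is to read them back from the gluster volume info output. The following sketch assumes that reconfigured options appear there as "key: value" lines; get_volume_option is a hypothetical helper, and the sample text is made up for illustration rather than captured from a real cluster.

```shell
#!/bin/sh
# Hypothetical helper: reads `gluster volume info VOLNAME` output on stdin
# and prints the value of one option, assuming "key: value" lines.
# Usage: gluster volume info volname | get_volume_option features.shard
get_volume_option() {
    key=$1
    # Split each line on ": " and print the value when the key matches exactly.
    awk -F': ' -v k="$key" '$1 == k { print $2 }'
}

# Made-up sample of the relevant part of the output (an assumption).
sample='Options Reconfigured:
features.shard: enable
features.shard-block-size: 512MB'

printf '%s\n' "$sample" | get_volume_option features.shard
printf '%s\n' "$sample" | get_volume_option features.shard-block-size
```

An exact key match is used so that features.shard does not also match features.shard-block-size. If an option has never been set, nothing is printed.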

5.5.2.3. Finding the pieces of a sharded file

When you enable sharding, you might want to check that it is working correctly, or see how a particular file has been sharded across your volume.

To find the pieces of a file, you need to know that file's GFID. To obtain a file's GFID, run:

# getfattr -d -m. -e hex path_to_file

Once you have the GFID, you can run the following command on your bricks to see how this file has been distributed:

# ls /rhgs/*/.shard -lh | grep GFID
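The getfattr command prints the GFID as a hexadecimal string (trusted.gfid=0x...), so it usually needs reformatting before it can be used as the grep pattern. The sketch below assumes that entries under .shard use the hyphenated UUID form of the GFID; gfid_from_getfattr is a hypothetical helper and the sample value is made up.

```shell
#!/bin/sh
# Hypothetical helper: reads `getfattr -d -m. -e hex` output on stdin and
# prints the GFID in hyphenated UUID form (8-4-4-4-12 hex digits).
gfid_from_getfattr() {
    # First sed: keep only the trusted.gfid line, dropping the key and 0x prefix.
    # Second sed: insert hyphens after 8, 12, 16 and 20 hex digits.
    sed -n 's/^trusted\.gfid=0x//p' |
        sed 's/^\(.\{8\}\)\(.\{4\}\)\(.\{4\}\)\(.\{4\}\)\(.\{12\}\)$/\1-\2-\3-\4-\5/'
}

# Made-up sample output; a real GFID will differ.
sample='# file: path_to_file
trusted.gfid=0x0123456789abcdef0123456789abcdef'

printf '%s\n' "$sample" | gfid_from_getfattr
```

In practice the helper would be fed from the real command, for example getfattr -d -m. -e hex path_to_file | gfid_from_getfattr, and the printed value would then replace GFID in the ls command above.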