
Chapter 6. Service Catalog Components

6.1. Service Catalog

6.1.1. Overview

When developing microservices-based applications to run on cloud native platforms, there are many ways to provision different resources and share their coordinates, credentials, and configuration, depending on the service provider and the platform.

To give developers a more seamless experience, OpenShift Container Platform includes a service catalog, an implementation of the Open Service Broker API (OSB API) for Kubernetes. This allows users to connect any of their applications deployed in OpenShift Container Platform to a wide variety of service brokers.

The service catalog allows cluster administrators to integrate multiple platforms using a single API specification. The OpenShift Container Platform web console displays the cluster service classes offered by service brokers in the service catalog, allowing users to discover and instantiate those services for use with their applications.

As a result, service users benefit from ease and consistency of use across different types of services from different providers, while service providers benefit from having one integration point that gives them access to multiple platforms.

6.1.2. Design

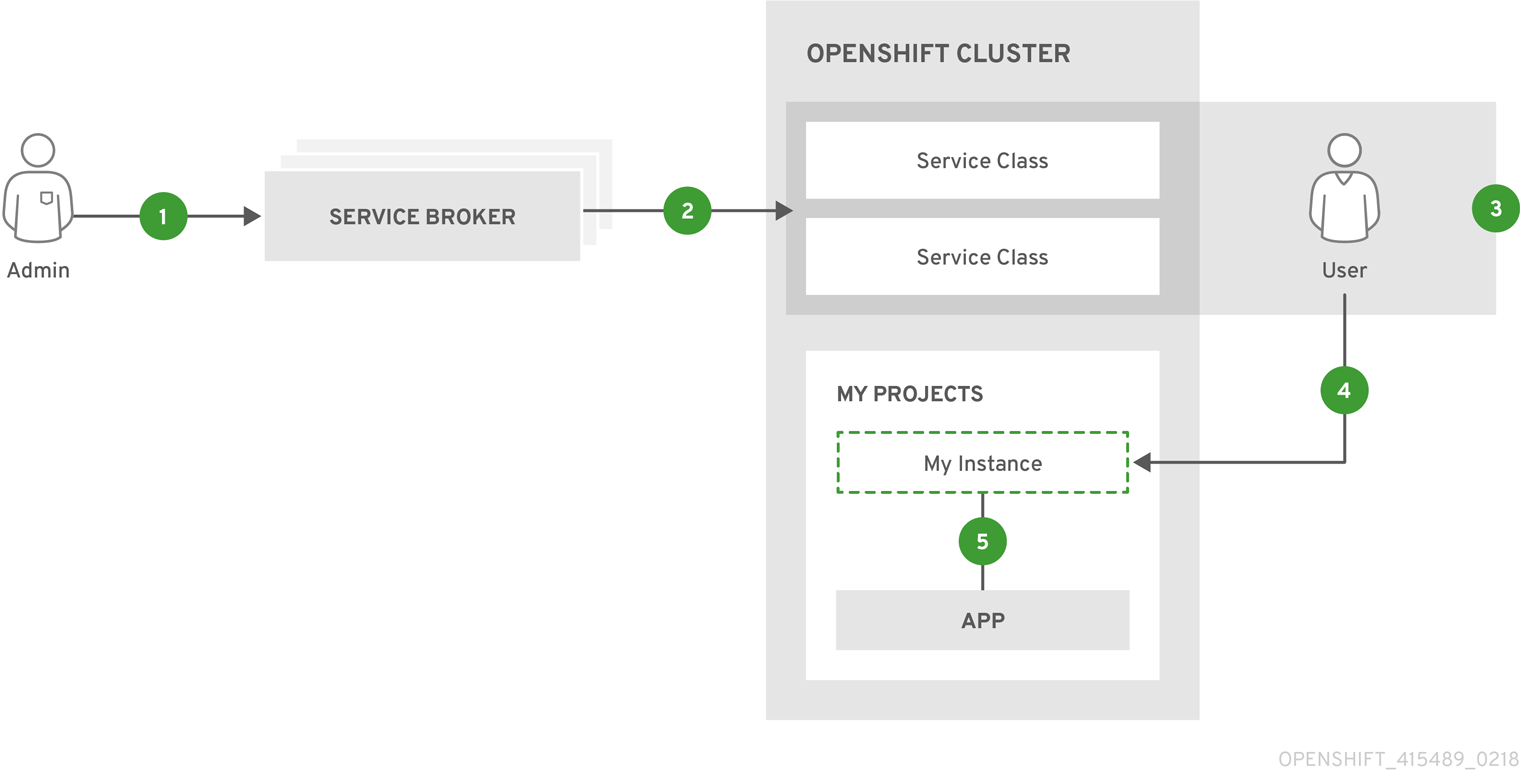

New terms in the following are defined further in Concepts and Terminology.

The design of the service catalog follows this basic workflow:

- A cluster administrator registers one or more cluster service brokers with their OpenShift Container Platform cluster. This can be done automatically during installation for some default-provided service brokers or manually.

- Each service broker specifies a set of cluster service classes and variations of those services (service plans) to OpenShift Container Platform that should be made available to users.

- Using the OpenShift Container Platform web console or CLI, users discover the services that are available. For example, a cluster service class may be available that is a database-as-a-service called BestDataBase.

- A user chooses a cluster service class and requests a new instance of their own. For example, a service instance may be a BestDataBase instance named my_db.

- A user links, or binds, their service instance to a set of pods (their application). For example, the my_db service instance may be bound to the user’s application called my_app.

When a user makes a request to provision or deprovision a resource, the request is made to the service catalog, which then sends a request to the appropriate cluster service broker. With some services, some operations such as provision, deprovision, and update are expected to take some time to fulfill. If the cluster service broker is unavailable, the service catalog will continue to retry the operation.
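Because provisioning can take some time, a script that creates an instance usually has to wait before it binds to that instance. The following is a minimal sketch under stated assumptions, not a product feature: the helper name wait_for_instance, the default attempt count, and the polling interval are all hypothetical, and it assumes that svcat get instance accepts -n and prints rows whose first column is the instance name and whose last column is the status.

```shell
#!/bin/sh
# Sketch only: wait_for_instance is a hypothetical helper, not an svcat feature.
# Assumes `svcat get instance -n <ns>` prints rows whose first column is the
# instance name and whose last column is the status.

wait_for_instance() {
    name=$1
    namespace=$2
    attempts=${3:-30}      # how many times to poll before giving up
    interval=${4:-10}      # seconds to sleep between polls

    i=0
    while [ "$i" -lt "$attempts" ]; do
        # Print the status column of the row that matches the instance name.
        status=$(svcat get instance -n "$namespace" 2>/dev/null |
            awk -v n="$name" '$1 == n { print $NF }')
        if [ "$status" = "Ready" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$interval"
    done
    echo "instance $name in $namespace not Ready after $attempts polls" >&2
    return 1
}
```

A caller might run wait_for_instance postgresql-instance szh-project after provisioning and only create the binding if the function returns success.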

This infrastructure allows a loose coupling between applications running in OpenShift Container Platform and the services they use. This allows the application that uses those services to focus on its own business logic while leaving the management of these services to the provider.

6.1.2.1. Deleting Resources

When a user is done with a service (or perhaps no longer wishes to be billed), the service instance can be deleted. In order to delete the service instance, the service bindings must be removed first. Deleting the service bindings is known as unbinding. Part of the deletion process includes deleting the secret that references the service binding being deleted.

Once all the service bindings are removed, the service instance may be deleted. Deleting the service instance is known as deprovisioning.

If a project or namespace containing service bindings and service instances is deleted, the service catalog must first request the cluster service broker to delete the associated instances and bindings. This is expected to delay the actual deletion of the project or namespace since the service catalog must communicate with cluster service brokers and wait for them to perform their deprovisioning work. In normal circumstances, this may take several minutes or longer depending on the service.

If you delete a service binding used by a deployment, you must also remove any references to the binding secret from the deployment. Otherwise, the next rollout will fail.

6.1.3. Concepts and Terminology

- Cluster Service Broker

A cluster service broker is a server that conforms to the OSB API specification and manages a set of one or more services. The software could be hosted within your own OpenShift Container Platform cluster or elsewhere.

Cluster administrators can create ClusterServiceBroker API resources representing cluster service brokers and register them with their OpenShift Container Platform cluster. This allows cluster administrators to make new types of managed services using that cluster service broker available within their cluster.

A ClusterServiceBroker resource specifies connection details for a cluster service broker and the set of services (and variations of those services) to OpenShift Container Platform that should then be made available to users. Of special note is the authInfo section, which contains the data used to authenticate with the cluster service broker.

Example ClusterServiceBroker Resource

- Cluster Service Class

Also synonymous with "service" in the context of the service catalog, a cluster service class is a type of managed service offered by a particular cluster service broker. Each time a new cluster service broker resource is added to the cluster, the service catalog controller connects to the corresponding cluster service broker to obtain a list of service offerings. A new ClusterServiceClass resource is automatically created for each.

Note: OpenShift Container Platform also has a core concept called services, which are separate Kubernetes resources related to internal load balancing. These resources are not to be confused with how the term is used in the context of the service catalog and OSB API.

Example ClusterServiceClass Resource

- Cluster Service Plan

A cluster service plan represents tiers of a cluster service class. For example, a cluster service class may expose a set of plans that offer varying degrees of quality-of-service (QoS), each with a different cost associated with it.

- Service Instance

A service instance is a provisioned instance of a cluster service class. When a user wants to use the capability provided by a service class, they can create a new service instance.

When a new ServiceInstance resource is created, the service catalog controller connects to the appropriate cluster service broker and instructs it to provision the service instance.

Example ServiceInstance Resource

- Application

The term application refers to the OpenShift Container Platform deployment artifacts, for example pods running in a user’s project, that will use a service instance.

- Credentials

Credentials are information needed by an application to communicate with a service instance.

- Service Binding

A service binding is a link between a service instance and an application. These are created by cluster users who wish for their applications to reference and use a service instance.

Upon creation, the service catalog controller creates a Kubernetes secret containing connection details and credentials for the service instance. Such secrets can be mounted into pods as usual.

Example ServiceBinding Resource

Users should not use the web console to change the prefix of environment variables for instantiated instances, as it can cause application routes to become inaccessible.

- Parameters

A parameter is a special field available to pass additional data to the cluster service broker when using either service bindings or service instances. The only formatting requirement is for the parameters to be valid YAML (or JSON). In the above example, a security level parameter is passed to the cluster service broker in the service binding request. For parameters that need more security, place them in a secret and reference them using parametersFrom.

Example Service Binding Resource Referencing a Secret
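As a rough illustration of a binding that takes its parameters from a secret, the sketch below prints a manifest from a small shell helper. The helper name print_binding_manifest and all argument values are hypothetical, and the field layout is an assumption based on the servicecatalog.k8s.io/v1beta1 API, so check it against your cluster before relying on it.

```shell
#!/bin/sh
# Sketch only: print_binding_manifest is a hypothetical helper. The field
# layout is an assumption based on the servicecatalog.k8s.io/v1beta1 API;
# confirm it against your cluster before relying on it.

print_binding_manifest() {
    binding=$1     # name of the ServiceBinding to create
    instance=$2    # name of the existing ServiceInstance
    secret=$3      # secret that holds the sensitive parameters
    key=$4         # key in that secret (assumed to hold a JSON document)

    cat <<EOF
apiVersion: servicecatalog.k8s.io/v1beta1
kind: ServiceBinding
metadata:
  name: $binding
spec:
  instanceRef:
    name: $instance
  parametersFrom:
    - secretKeyRef:
        name: $secret
        key: $key
EOF
}
```

The output could be piped to oc create -f - in the project that contains the service instance. Keeping the sensitive values in a secret means they never appear in the binding resource itself.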

6.1.4. Provided Cluster Service Brokers

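OpenShift Container Platform provides the following cluster service brokers for use with the service catalog, each described later in this chapter:

- Template Service Broker

- OpenShift Ansible Broker

- AWS Service Broker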

6.2. Service catalog command-line interface (CLI)

6.2.1. Overview

The basic workflow for interacting with the service catalog is:

- The cluster administrator installs and registers a broker server to make its services available.

- Users consume those services by instantiating them in an OpenShift project and linking those service instances to their pods.

The Service Catalog command-line interface (CLI) utility, svcat, is available to handle these user-related tasks. While oc commands can perform the same tasks, svcat makes it easier to interact with Service Catalog resources. svcat communicates with the Service Catalog API by using the aggregated API endpoint on an OpenShift cluster.

6.2.2. Installing svcat

You can install svcat as an RPM by using Red Hat Subscription Management (RHSM) if you have an active OpenShift Enterprise subscription on your Red Hat account:

# yum install atomic-enterprise-service-catalog-svcat

6.2.2.1. Considerations for cloud providers

Google Compute Engine

For Google Cloud Platform, run the following command to set up firewall rules that allow incoming traffic:

$ gcloud compute firewall-rules create allow-service-catalog-secure --allow tcp:30443 --description "Allow incoming traffic on 30443 port."

6.2.3. Using svcat

This section includes common commands to handle the user-related tasks listed in the service catalog workflow. Use the svcat --help command to get more information and view other available command-line options. The sample output in this section assumes that the Ansible Service Broker is already installed on the cluster.

6.2.3.1. Get broker details

You can view a list of available brokers, sync the broker catalog, and get details about brokers deployed in the service catalog.

6.2.3.1.1. Find brokers

To view all the brokers installed on the cluster:

$ svcat get brokers

Example Output

NAME URL STATUS

+-------------------------+-------------------------------------------------------------------------------------------+--------+

ansible-service-broker https://asb.openshift-ansible-service-broker.svc:1338/ansible-service-broker Ready

template-service-broker https://apiserver.openshift-template-service-broker.svc:443/brokers/template.openshift.io Ready

6.2.3.1.2. Sync broker catalog

To refresh the catalog metadata from the broker:

$ svcat sync broker ansible-service-broker

Example Output

Synchronization requested for broker: ansible-service-broker

6.2.3.1.3. View broker details

To view the details of the broker:

$ svcat describe broker ansible-service-broker

Example Output

Name: ansible-service-broker

URL: https://openshift-automation-service-broker.openshift-automation-service-broker.svc:1338/openshift-automation-service-broker/

Status: Ready - Successfully fetched catalog entries from broker @ 2018-06-07 00:32:59 +0000 UTC

6.2.3.2. View service classes and service plans

When you create a ClusterServiceBroker resource, the service catalog controller queries the broker server to find all the services it offers and creates a service class (ClusterServiceClass) for each of those services. It also creates service plans (ClusterServicePlan) for each of the broker’s services.

6.2.3.2.1. View service classes

To view the available ClusterServiceClass resources:

$ svcat get classes

To view details of a service class:

$ svcat describe class rh-postgresql-apb

6.2.3.2.2. View service plans

To view the ClusterServicePlan resources available in the cluster:

$ svcat get plans

To view details of a plan:

$ svcat describe plan rh-postgresql-apb/dev

6.2.3.3. Provision services

Provisioning means to make the service available for consumption. To provision a service, you need to create a service instance and then bind to it.

6.2.3.3.1. Create ServiceInstance

Service instances must be created inside an OpenShift namespace.

Create a new project:

$ oc new-project <project-name>

Replace <project-name> with the name of your project.

Create the service instance using the command:

$ svcat provision postgresql-instance --class rh-postgresql-apb --plan dev --params-json '{"postgresql_database":"admin","postgresql_password":"admin","postgresql_user":"admin","postgresql_version":"9.6"}' -n szh-project

6.2.3.3.1.1. View service instance details

To view service instance details:

$ svcat get instance

Example Output

NAME NAMESPACE CLASS PLAN STATUS

+---------------------+-------------+-------------------+------+--------+

postgresql-instance szh-project rh-postgresql-apb dev Ready

6.2.3.3.2. Create ServiceBinding

When you create a ServiceBinding resource:

- The service catalog controller communicates with the broker server to initiate the binding.

- The broker server creates credentials and issues them to the service catalog controller.

- The service catalog controller adds those credentials as secrets to the project.

Create the service binding using the command:

$ svcat bind postgresql-instance --name mediawiki-postgresql-binding

6.2.3.3.2.1. View service binding details

To view service binding details:

$ svcat get bindings

Example Output

NAME NAMESPACE INSTANCE STATUS

+------------------------------+-------------+---------------------+--------+

mediawiki-postgresql-binding szh-project postgresql-instance Ready

Verify the instance details after binding the service:

$ svcat describe instance postgresql-instance
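Once the binding is Ready, the credentials live in a secret in the project. The following sketch shows one way to read a single value from it. The helper name binding_secret_value is hypothetical, it assumes the secret has the same name as the binding, and the key name used in the usage note is only a placeholder because key names depend on the broker.

```shell
#!/bin/sh
# Sketch only: binding_secret_value is a hypothetical helper. It assumes the
# secret created for a binding has the same name as the binding, and that the
# requested key exists; key names depend on the broker.

binding_secret_value() {
    namespace=$1
    secret=$2
    key=$3     # must not contain dots, which jsonpath would treat as separators
    # Secret data is base64-encoded, so decode it before printing.
    oc get secret "$secret" -n "$namespace" -o "jsonpath={.data.$key}" |
        base64 --decode
}
```

For example, binding_secret_value szh-project mediawiki-postgresql-binding DB_USER would print the decoded value of a key called DB_USER, if the broker happens to publish one under that name.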

6.2.4. Deleting resources

To delete service catalog related resources, you need to unbind service bindings and deprovision the service instances.

6.2.4.1. Deleting service bindings

To delete all service bindings associated with a service instance:

$ svcat unbind -n <project-name> <instance-name>

For example:

$ svcat unbind -n szh-project postgresql-instance

Example Output

deleted mediawiki-postgresql-binding

Verify that all service bindings are deleted:

$ svcat get bindings

Example Output

NAME NAMESPACE INSTANCE STATUS

+------+-----------+----------+--------+

Note: Running svcat unbind with an instance name deletes all service bindings for that instance. To delete an individual binding, run the command svcat unbind -n <project-name> --name <binding-name>. For example, svcat unbind -n szh-project --name mediawiki-postgresql-binding.

Verify that the associated secret is deleted:

$ oc get secret -n szh-project

6.2.4.2. Deleting service instances

To deprovision the service instance:

$ svcat deprovision postgresql-instance

Example Output

deleted postgresql-instance

Verify the instance is deleted:

$ svcat get instance

Example Output

NAME NAMESPACE CLASS PLAN STATUS

+------+-----------+-------+------+--------+
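The required order, unbind first and deprovision second, can be captured in a small wrapper. This is a sketch under stated assumptions rather than a recommended tool: teardown_instance is a hypothetical name, and it assumes svcat deprovision accepts -n in the same way svcat unbind does.

```shell
#!/bin/sh
# Sketch only: teardown_instance is a hypothetical wrapper around the two
# svcat commands shown above. It assumes deprovision accepts -n like unbind.

teardown_instance() {
    namespace=$1
    instance=$2

    # Bindings must go first; stop if unbinding fails so the instance is not
    # deprovisioned while bindings still exist.
    svcat unbind -n "$namespace" "$instance" || return 1

    # With all bindings gone, the instance itself can be deprovisioned.
    svcat deprovision -n "$namespace" "$instance"
}
```

Calling teardown_instance szh-project postgresql-instance runs both steps in sequence. The broker may still be processing the unbind when the first command returns, so a real script might need to wait until svcat get bindings shows no bindings before deprovisioning.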

6.2.4.3. Deleting service brokers

To remove broker services for the service catalog, delete the ClusterServiceBroker resource:

$ oc delete clusterservicebrokers template-service-broker

Example Output

clusterservicebroker "template-service-broker" deleted

To view all the brokers installed on the cluster:

$ svcat get brokers

Example Output

NAME URL STATUS

+-------------------------+-------------------------------------------------------------------------------------------+--------+

ansible-service-broker https://asb.openshift-ansible-service-broker.svc:1338/ansible-service-broker Ready

View the ClusterServiceClass resources for the broker to verify that the broker is removed:

$ svcat get classes

Example Output

NAME DESCRIPTION

+------+-------------+

6.3. Template Service Broker

The template service broker (TSB) gives the service catalog visibility into the default Instant App and Quickstart templates that have shipped with OpenShift Container Platform since its initial release. The TSB can also make available as a service anything for which an OpenShift Container Platform template has been written, whether provided by Red Hat, a cluster administrator or user, or a third-party vendor.

By default, the TSB shows the objects that are globally available from the openshift project. It can also be configured to watch any other project that a cluster administrator chooses.

6.4. OpenShift Ansible Broker

6.4.1. Overview

The OpenShift Ansible broker (OAB) is an implementation of the Open Service Broker (OSB) API that manages applications defined by Ansible playbook bundles (APBs). APBs provide a new method for defining and distributing container applications in OpenShift Container Platform, consisting of a bundle of Ansible playbooks built into a container image with an Ansible runtime. APBs leverage Ansible to create a standard mechanism for automating complex deployments.

The design of the OAB follows this basic workflow:

- A user requests a list of available applications from the service catalog using the OpenShift Container Platform web console.

- The service catalog asks the OAB for the available applications.

- The OAB communicates with a defined container image registry to learn which APBs are available.

- The user issues a request to provision a specific APB.

- The provision request makes its way to the OAB, which fulfills the user’s request by invoking the provision method on the APB.

6.4.2. Ansible Playbook Bundles

An Ansible playbook bundle (APB) is a lightweight application definition that allows you to leverage existing investment in Ansible roles and playbooks.

APBs use a simple directory with named playbooks to perform OSB API actions, such as provision and bind. Metadata defined in the apb.yml spec file contains a list of required and optional parameters for use during deployment.

See the APB Development Guide for details on the overall design and how APBs are written.

6.5. AWS Service Broker

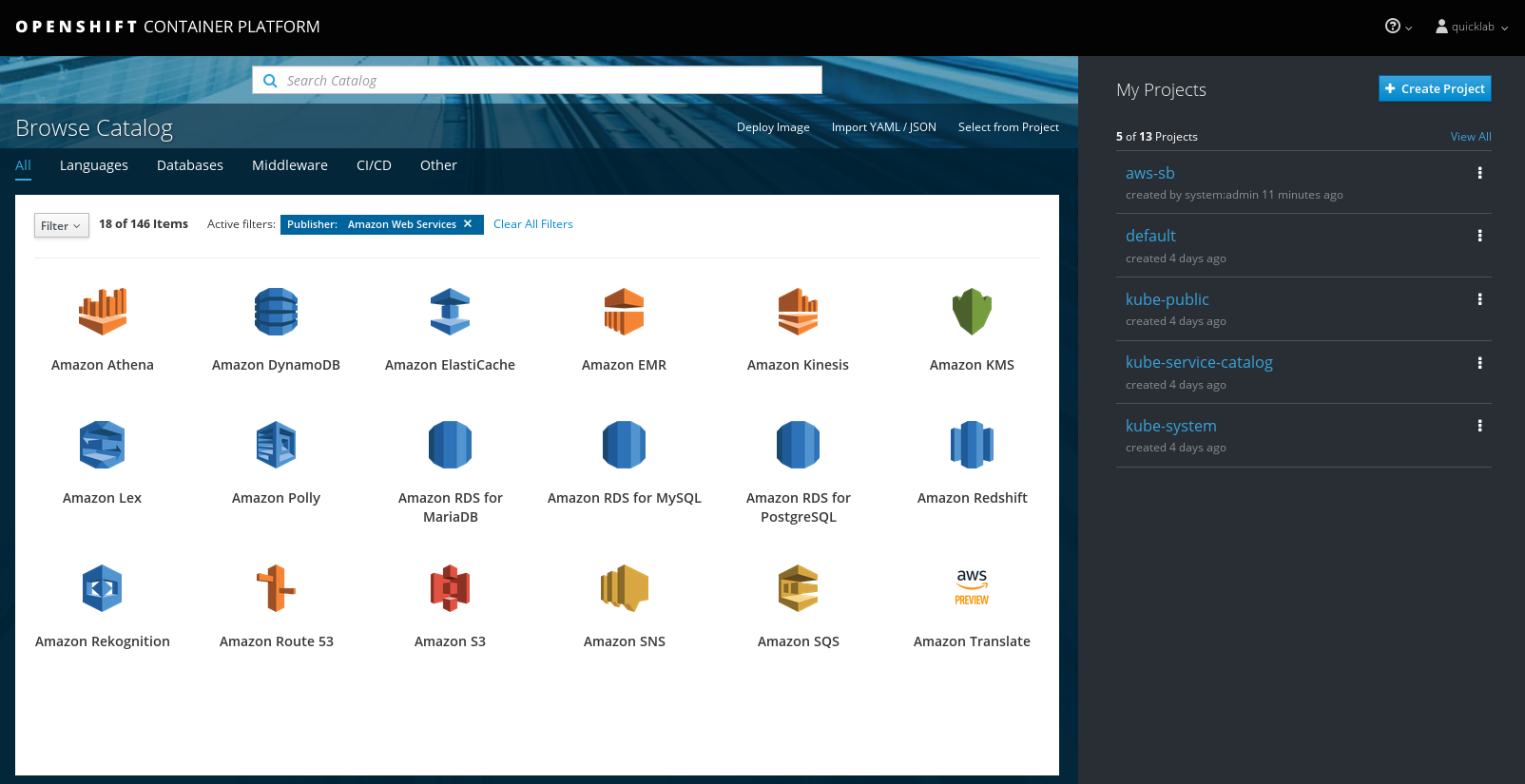

The AWS Service Broker provides access to Amazon Web Services (AWS) through the OpenShift Container Platform service catalog. AWS services and components can be configured and viewed in both the OpenShift Container Platform web console and the AWS dashboards.

Figure 6.1. Example AWS services in the OpenShift Container Platform service catalog

For information on installing the AWS Service Broker, see the AWS Service Broker Documentation in the Amazon Web Services - Labs docs repository.

The AWS Service Broker is supported and qualified on OpenShift Container Platform. It is offered directly by Amazon as a download. As a result, many components of this service broker solution are supported directly by Amazon, for the most recent two versions and with a lag of two months after a new OpenShift Container Platform release. Red Hat provides support for the installation and troubleshooting of the OpenShift cluster and for service catalog issues.