
Chapter 6. Working with nodes

6.1. Viewing and listing the nodes in your Red Hat OpenShift Service on AWS cluster

You can list all the nodes in your cluster to obtain information such as status, age, memory usage, and details about the nodes.

When you perform node management operations, the CLI interacts with node objects that are representations of actual node hosts. The master uses the information from node objects to validate nodes with health checks.

Worker nodes are not guaranteed longevity, and may be replaced at any time as part of the normal operation and management of OpenShift. For more details about the node lifecycle, refer to additional resources.

6.1.1. About listing all the nodes in a cluster

You can get detailed information on the nodes in the cluster.

The following command lists all nodes:

$ oc get nodes

The following example is a cluster with healthy nodes:

$ oc get nodes

Example output

NAME                 STATUS   ROLES    AGE   VERSION
master.example.com   Ready    master   7h    v1.34.2
node1.example.com    Ready    worker   7h    v1.34.2
node2.example.com    Ready    worker   7h    v1.34.2

The following example is a cluster with one unhealthy node:

$ oc get nodes

Example output

NAME                 STATUS                        ROLES    AGE   VERSION
master.example.com   Ready                         master   7h    v1.34.2
node1.example.com    NotReady,SchedulingDisabled   worker   7h    v1.34.2
node2.example.com    Ready                         worker   7h    v1.34.2

The conditions that trigger a NotReady status are shown later in this section.

The -o wide option provides additional information on nodes.

$ oc get nodes -o wide

Example output

NAME                 STATUS   ROLES    AGE    VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                                                       KERNEL-VERSION                 CONTAINER-RUNTIME
master.example.com   Ready    master   171m   v1.34.2   10.0.129.108   <none>        Red Hat Enterprise Linux CoreOS 48.83.202103210901-0 (Ootpa)   4.21.0-240.15.1.el8_3.x86_64   cri-o://1.34.2-30.rhaos4.10.gitf2f339d.el8-dev
node1.example.com    Ready    worker   72m    v1.34.2   10.0.129.222   <none>        Red Hat Enterprise Linux CoreOS 48.83.202103210901-0 (Ootpa)   4.21.0-240.15.1.el8_3.x86_64   cri-o://1.34.2-30.rhaos4.10.gitf2f339d.el8-dev
node2.example.com    Ready    worker   164m   v1.34.2   10.0.142.150   <none>        Red Hat Enterprise Linux CoreOS 48.83.202103210901-0 (Ootpa)   4.21.0-240.15.1.el8_3.x86_64   cri-o://1.34.2-30.rhaos4.10.gitf2f339d.el8-dev

The following command lists information about a single node:

$ oc get node <node>

For example:

$ oc get node node1.example.com

Example output

NAME                STATUS   ROLES    AGE   VERSION
node1.example.com   Ready    worker   7h    v1.34.2

The following command provides more detailed information about a specific node, including the reason for the current condition:

$ oc describe node <node>

For example:

$ oc describe node node1.example.com

Note: The following example contains some values that are specific to Red Hat OpenShift Service on AWS.

Example output

1. The name of the node.

2. The role of the node, either master or worker.

3. The labels applied to the node.

4. The annotations applied to the node.

5. The taints applied to the node.

6. The node conditions and status. The conditions stanza lists the Ready, PIDPressure, MemoryPressure, DiskPressure, and OutOfDisk status. These conditions are described later in this section.

7. The IP address and hostname of the node.

8. The pod resources and allocatable resources.

9. Information about the node host.

10. The pods on the node.

11. The events reported by the node.

Among the information shown for nodes, the following node conditions appear in the output of the commands shown in this section:

| Condition | Description |
|---|---|
| SchedulingDisabled | Pods cannot be scheduled for placement on the node. |
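As a rough illustration of working with the STATUS column, the following shell sketch prints the names of nodes whose status is anything other than Ready. The function name not_ready_nodes and the embedded sample text are assumptions made for this example; on a live cluster you would pipe the output of oc get nodes into the function instead.

```shell
# not_ready_nodes: read `oc get nodes` output on stdin and print the name of
# every node whose STATUS column is anything other than exactly "Ready".
# (Hypothetical helper, not part of the oc CLI.)
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input taken from the unhealthy-node example earlier in this section.
sample_output='NAME STATUS ROLES AGE VERSION
master.example.com Ready master 7h v1.34.2
node1.example.com NotReady,SchedulingDisabled worker 7h v1.34.2
node2.example.com Ready worker 7h v1.34.2'

# On a cluster, this would be: oc get nodes | not_ready_nodes
printf '%s\n' "$sample_output" | not_ready_nodes
```

Because the comparison is against the exact string Ready, a cordoned node that reports Ready,SchedulingDisabled is also listed.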

6.1.2. Listing pods on a node in your cluster

You can list all the pods on a specific node.

Procedure

To list all or selected pods on selected nodes:

$ oc get pod --selector=<nodeSelector>

For example:

$ oc get pod --selector=kubernetes.io/os

Or:

$ oc get pod -l=<nodeSelector>

For example:

$ oc get pod -l kubernetes.io/os=linux

To list all pods on a specific node, including terminated pods:

$ oc get pod --all-namespaces --field-selector=spec.nodeName=<nodename>

6.1.3. Viewing memory and CPU usage statistics on your nodes

You can display usage statistics about nodes, which provide the runtime environments for containers. These usage statistics include CPU, memory, and storage consumption.

Prerequisites

- You must have cluster-reader permission to view the usage statistics.

- Metrics must be installed to view the usage statistics.

Procedure

To view the usage statistics:

$ oc adm top nodes

Example output

To view the usage statistics for nodes with labels:

$ oc adm top node --selector=''

You must choose the selector (label query) to filter on. The selector supports the =, ==, and != operators.

Additional resources

6.2. Working with nodes

As an administrator, you can perform several tasks to make your clusters more efficient. You can use the oc adm command to cordon, uncordon, and drain a specific node.

Cordoning and draining are only allowed on worker nodes that are part of Red Hat OpenShift Cluster Manager machine pools.

6.2.1. Understanding how to evacuate pods on nodes

Evacuating pods allows you to migrate all or selected pods from a given node or nodes.

You can only evacuate pods backed by a replication controller. The replication controller creates new pods on other nodes and removes the existing pods from the specified node(s).

Bare pods, meaning those not backed by a replication controller, are unaffected by default. You can evacuate a subset of pods by specifying a pod-selector. Pod selectors are based on labels, so all the pods with the specified label will be evacuated.

Procedure

Mark the nodes unschedulable before performing the pod evacuation.

Mark the node as unschedulable:

$ oc adm cordon <node1>

Example output

node/<node1> cordoned

Check that the node status is Ready,SchedulingDisabled:

$ oc get node <node1>

Example output

NAME      STATUS                     ROLES    AGE   VERSION
<node1>   Ready,SchedulingDisabled   worker   1d    v1.34.2

Evacuate the pods using one of the following methods:

Evacuate all or selected pods on one or more nodes:

$ oc adm drain <node1> <node2> [--pod-selector=<pod_selector>]

Force the deletion of bare pods using the --force option. When set to true, deletion continues even if there are pods not managed by a replication controller, replica set, job, daemon set, or stateful set:

$ oc adm drain <node1> <node2> --force=true

To set a period of time in seconds for each pod to terminate gracefully, use --grace-period. If negative, the default value specified in the pod will be used:

$ oc adm drain <node1> <node2> --grace-period=-1

Ignore pods managed by daemon sets using the --ignore-daemonsets flag set to true:

$ oc adm drain <node1> <node2> --ignore-daemonsets=true

Set the length of time to wait before giving up using the --timeout flag. A value of 0 sets an infinite length of time:

$ oc adm drain <node1> <node2> --timeout=5s

Delete pods even if there are pods using emptyDir volumes by setting the --delete-emptydir-data flag to true. Local data is deleted when the node is drained:

$ oc adm drain <node1> <node2> --delete-emptydir-data=true

List objects that will be migrated without actually performing the evacuation, using the --dry-run option set to true:

$ oc adm drain <node1> <node2> --dry-run=true

Instead of specifying specific node names (for example, <node1> <node2>), you can use the --selector=<node_selector> option to evacuate pods on selected nodes.

Mark the node as schedulable when done:

$ oc adm uncordon <node1>
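The procedure above could be wrapped in a small script, sketched here under a few assumptions: the function names evacuate_node and restore_node are hypothetical, the choice of drain options and the 300-second timeout are illustrative rather than recommended values, and the OC variable exists only so that the commands can be printed instead of run.

```shell
# OC defaults to the real client. Set OC=echo to print each command
# instead of running it against a cluster.
OC="${OC:-oc}"

# evacuate_node NODE: mark the node unschedulable, then drain it.
# The drain flags are the ones described in this section; the timeout
# value is an arbitrary example.
evacuate_node() {
  "$OC" adm cordon "$1" || return 1
  "$OC" adm drain "$1" --ignore-daemonsets=true --delete-emptydir-data=true --timeout=300s
}

# restore_node NODE: mark the node schedulable again.
restore_node() {
  "$OC" adm uncordon "$1"
}

# Dry run with a placeholder node name: prints the three commands.
OC=echo
evacuate_node node1.example.com
restore_node node1.example.com
```

Stopping after a failed cordon keeps the script from draining a node that can still receive new pods.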

6.3. Using the Node Tuning Operator

Red Hat OpenShift Service on AWS supports the Node Tuning Operator to improve performance of your nodes on your clusters. The Node Tuning Operator in Red Hat OpenShift Service on AWS helps you manage node-level tuning by orchestrating the TuneD daemon. You can use this unified interface to apply custom tuning specifications and achieve low latency performance for high-performance applications.

Before creating a node tuning configuration, you must create a custom tuning specification.

The Node Tuning Operator helps you manage node-level tuning by orchestrating the TuneD daemon and achieves low latency performance by using the Performance Profile controller. The majority of high-performance applications require some level of kernel tuning. The Node Tuning Operator provides a unified management interface to users of node-level sysctls and more flexibility to add custom tuning specified by user needs.

The Operator manages the containerized TuneD daemon for Red Hat OpenShift Service on AWS as a Kubernetes daemon set. It ensures the custom tuning specification is passed to all containerized TuneD daemons running in the cluster in the format that the daemons understand. The daemons run on all nodes in the cluster, one per node.

Node-level settings applied by the containerized TuneD daemon are rolled back on an event that triggers a profile change or when the containerized TuneD daemon is terminated gracefully by receiving and handling a termination signal.

The Node Tuning Operator uses the Performance Profile controller to implement automatic tuning to achieve low latency performance for Red Hat OpenShift Service on AWS applications.

The cluster administrator configures a performance profile to define node-level settings such as the following:

- Updating the kernel to kernel-rt.

- Choosing CPUs for housekeeping.

- Choosing CPUs for running workloads.

The Node Tuning Operator is part of a standard Red Hat OpenShift Service on AWS installation in version 4.1 and later.

In earlier versions of Red Hat OpenShift Service on AWS, the Performance Addon Operator was used to implement automatic tuning to achieve low latency performance for OpenShift applications. In Red Hat OpenShift Service on AWS 4.11 and later, this functionality is part of the Node Tuning Operator.

6.3.1. Custom tuning specification

The custom resource (CR) for the Operator has two major sections. The first section, profile:, is a list of TuneD profiles and their names. The second, recommend:, defines the profile selection logic.

Multiple custom tuning specifications can co-exist as multiple CRs in the Operator’s namespace. The existence of new CRs or the deletion of old CRs is detected by the Operator. All existing custom tuning specifications are merged and appropriate objects for the containerized TuneD daemons are updated.

Management state

The Operator Management state is set by adjusting the default Tuned CR. By default, the Operator is in the Managed state and the spec.managementState field is not present in the default Tuned CR. Valid values for the Operator Management state are as follows:

- Managed: the Operator will update its operands as configuration resources are updated

- Unmanaged: the Operator will ignore changes to the configuration resources

- Removed: the Operator will remove its operands and resources the Operator provisioned

Profile data

The profile: section lists TuneD profiles and their names.

Recommended profiles

The profile selection logic is defined by the recommend: section of the CR. The recommend: section is a list of items that recommend profiles based on selection criteria.

recommend:
<recommend-item-1>
# ...
<recommend-item-n>

The individual items of the list:

1. Optional.

2. A dictionary of key/value MachineConfig labels. The keys must be unique.

3. If omitted, profile match is assumed unless a profile with a higher priority matches first or machineConfigLabels is set.

4. An optional list.

5. Profile ordering priority. Lower numbers mean higher priority (0 is the highest priority).

6. A TuneD profile to apply on a match. For example, tuned_profile_1.

7. Optional operand configuration.

8. Turn debugging on or off for the TuneD daemon. Options are true for on or false for off. The default is false.

9. Turn reapply_sysctl functionality on or off for the TuneD daemon. Options are true for on and false for off.

<match> is an optional list recursively defined as follows:

- label: <label_name>
  value: <label_value>
  type: <label_type>
  <match>

If <match> is not omitted, all nested <match> sections must also evaluate to true. Otherwise, false is assumed and the profile with the respective <match> section will not be applied or recommended. Therefore, the nesting (child <match> sections) works as logical AND operator. Conversely, if any item of the <match> list matches, the entire <match> list evaluates to true. Therefore, the list acts as logical OR operator.

If machineConfigLabels is defined, machine config pool based matching is turned on for the given recommend: list item. <mcLabels> specifies the labels for a machine config. The machine config is created automatically to apply host settings, such as kernel boot parameters, for the profile <tuned_profile_name>. This involves finding all machine config pools with machine config selector matching <mcLabels> and setting the profile <tuned_profile_name> on all nodes that are assigned the found machine config pools. To target nodes that have both master and worker roles, you must use the master role.

The list items match and machineConfigLabels are connected by the logical OR operator. The match item is evaluated first in a short-circuit manner. Therefore, if it evaluates to true, the machineConfigLabels item is not considered.

When using machine config pool based matching, it is advised to group nodes with the same hardware configuration into the same machine config pool. Not following this practice might result in TuneD operands calculating conflicting kernel parameters for two or more nodes sharing the same machine config pool.

Example: Node or pod label based matching

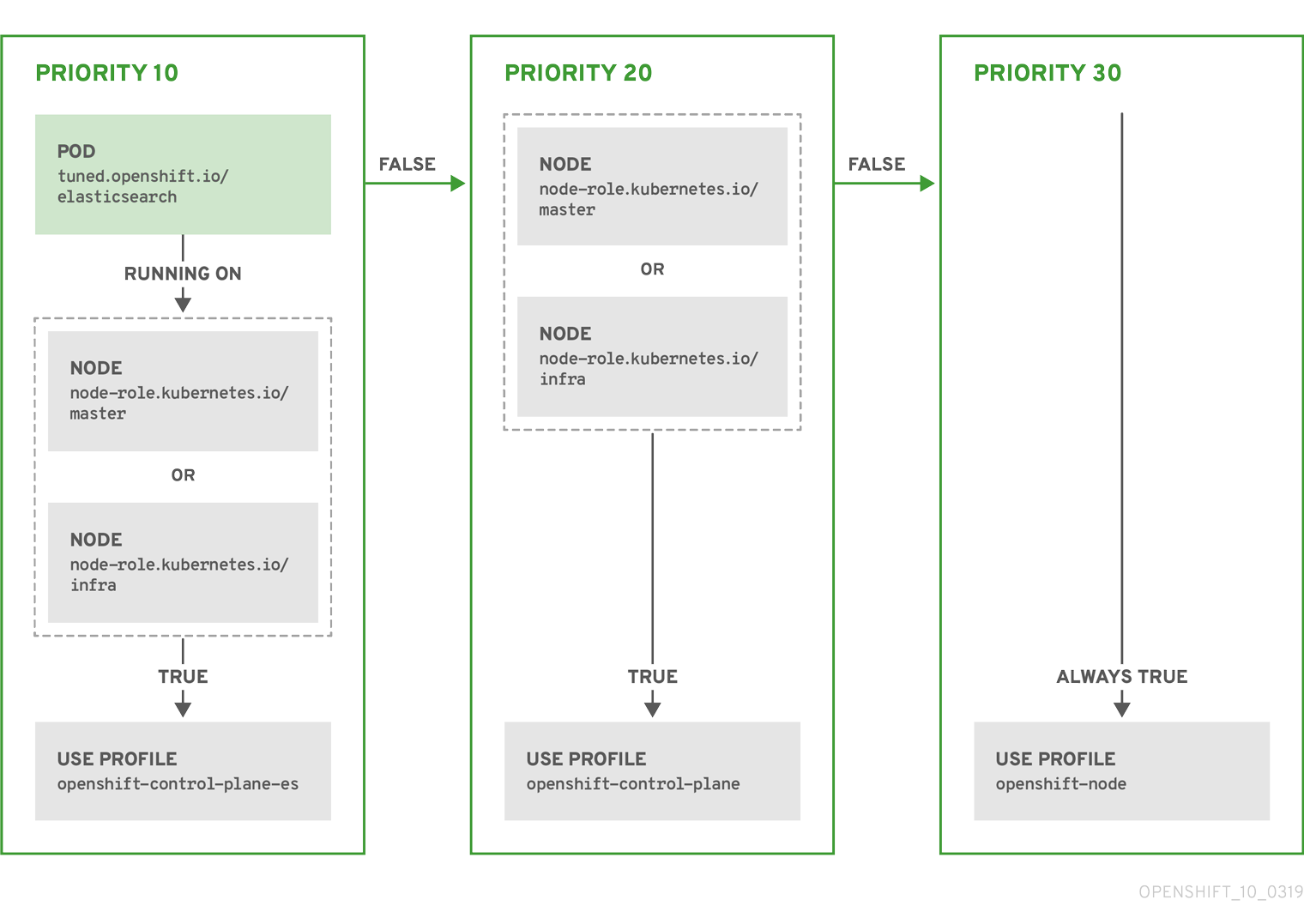

The CR above is translated for the containerized TuneD daemon into its recommend.conf file based on the profile priorities. The profile with the highest priority (10) is openshift-control-plane-es and, therefore, it is considered first. The containerized TuneD daemon running on a given node looks to see if there is a pod running on the same node with the tuned.openshift.io/elasticsearch label set. If not, the entire <match> section evaluates as false. If there is such a pod with the label, in order for the <match> section to evaluate to true, the node label also needs to be node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

If the labels for the profile with priority 10 matched, openshift-control-plane-es profile is applied and no other profile is considered. If the node/pod label combination did not match, the second highest priority profile (openshift-control-plane) is considered. This profile is applied if the containerized TuneD pod runs on a node with labels node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

Finally, the profile openshift-node has the lowest priority of 30. It lacks the <match> section and, therefore, will always match. It acts as a profile catch-all to set openshift-node profile, if no other profile with higher priority matches on a given node.
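The selection order described above can be approximated in a few lines of shell. This is only a sketch of the decision logic, not how the Operator or TuneD implements it; the has_label and recommend_profile functions and the space-separated label lists are assumptions made for illustration.

```shell
# has_label LABELS NAME: succeed if NAME appears in the space-separated
# LABELS list. (Hypothetical helper for this sketch.)
has_label() {
  case " $1 " in
    *" $2 "*) return 0 ;;
    *) return 1 ;;
  esac
}

# recommend_profile NODE_LABELS POD_LABELS: print the first profile, in
# priority order, whose match section evaluates to true.
recommend_profile() {
  node_labels="$1"
  pod_labels="$2"

  # Items of one match list act as a logical OR: master OR infra.
  control_or_infra=false
  if has_label "$node_labels" "node-role.kubernetes.io/master" ||
     has_label "$node_labels" "node-role.kubernetes.io/infra"; then
    control_or_infra=true
  fi

  # A nested match acts as a logical AND: pod label AND (master OR infra).
  if has_label "$pod_labels" "tuned.openshift.io/elasticsearch" &&
     [ "$control_or_infra" = true ]; then
    echo "openshift-control-plane-es"
  elif [ "$control_or_infra" = true ]; then
    echo "openshift-control-plane"
  else
    # No match section, so this profile always matches.
    echo "openshift-node"
  fi
}

recommend_profile "node-role.kubernetes.io/infra" "tuned.openshift.io/elasticsearch"
recommend_profile "node-role.kubernetes.io/master" ""
recommend_profile "node-role.kubernetes.io/worker" "tuned.openshift.io/elasticsearch"
```

The third call shows the AND behavior: the pod label is present, but the node is neither a master nor an infra node, so the catch-all profile is chosen.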

Example: Machine config pool based matching

To minimize node reboots, label the target nodes with a label the machine config pool’s node selector will match, then create the Tuned CR above and finally create the custom machine config pool itself.

Cloud provider-specific TuneD profiles

With this functionality, all Cloud provider-specific nodes can conveniently be assigned a TuneD profile specifically tailored to a given Cloud provider on a Red Hat OpenShift Service on AWS cluster. This can be accomplished without adding additional node labels or grouping nodes into machine config pools.

This functionality takes advantage of spec.providerID node object values in the form of <cloud-provider>://<cloud-provider-specific-id> and writes the file /var/lib/ocp-tuned/provider with the value <cloud-provider> in NTO operand containers. The content of this file is then used by TuneD to load provider-<cloud-provider> profile if such profile exists.

The openshift profile that both openshift-control-plane and openshift-node profiles inherit settings from is now updated to use this functionality through the use of conditional profile loading. Neither NTO nor TuneD currently include any Cloud provider-specific profiles. However, it is possible to create a custom profile provider-<cloud-provider> that will be applied to all Cloud provider-specific cluster nodes.

Example GCE Cloud provider profile

Due to profile inheritance, any setting specified in the provider-<cloud-provider> profile will be overwritten by the openshift profile and its child profiles.

6.3.2. Creating node tuning configurations

You can create tuning configurations by using the Red Hat OpenShift Service on AWS (ROSA) CLI, rosa.

Prerequisites

- You have downloaded the latest version of the ROSA CLI.

- You have a cluster on the latest version.

- You have a specification file configured for node tuning.

Procedure

Run the following command to create your tuning configuration:

$ rosa create tuning-config -c <cluster_id> --name <name_of_tuning> --spec-path <path_to_spec_file>

You must supply the path to the spec.json file or the command returns an error.

Example output

I: Tuning config 'sample-tuning' has been created on cluster 'cluster-example'.
I: To view all tuning configs, run 'rosa list tuning-configs -c cluster-example'
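Because the command fails without a specification file, a script might check for the file before calling the CLI. The following is a minimal sketch under stated assumptions: the create_tuning_config function and the ROSA variable are hypothetical, and the cluster and tuning names are the sample values from the output above.

```shell
# ROSA defaults to the real CLI. Set ROSA=echo to print the command
# instead of running it.
ROSA="${ROSA:-rosa}"

# create_tuning_config CLUSTER NAME SPEC_FILE
# Refuse to call the CLI when the spec file does not exist.
create_tuning_config() {
  if [ ! -f "$3" ]; then
    echo "spec file not found: $3" >&2
    return 1
  fi
  "$ROSA" create tuning-config -c "$1" --name "$2" --spec-path "$3"
}

# Dry run: an empty temporary file stands in for a real spec.json.
ROSA=echo
spec_file="$(mktemp)"
create_tuning_config cluster-example sample-tuning "$spec_file"
rm -f "$spec_file"
```

The check only confirms that the file exists; it does not validate the contents of the specification.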

Verification

You can verify the existing tuning configurations that are applied by your account with the following command:

$ rosa list tuning-configs -c <cluster_name> [-o json]

You can specify the type of output you want for the configuration list.

Without specifying the output type, you see the ID and name of the tuning configuration:

Example output without specifying output type

ID                                     NAME
20468b8e-edc7-11ed-b0e4-0a580a800298   sample-tuning

If you specify an output type, such as json, you receive the tuning configuration as JSON text:

Note: The following JSON output has hard line-returns for the sake of reading clarity. This JSON output is invalid unless you remove the newlines in the JSON strings.

Example output specifying JSON output

6.3.3. Modifying your node tuning configurations

You can view and update the node tuning configurations by using the Red Hat OpenShift Service on AWS (ROSA) CLI, rosa.

Prerequisites

- You have downloaded the latest version of the ROSA CLI.

- You have a cluster on the latest version.

- Your cluster has a node tuning configuration added to it.

Procedure

View the tuning configurations with the rosa describe command:

$ rosa describe tuning-config -c <cluster_id> \
    --name <name_of_tuning> \
    [-o json]

where:

<cluster_id>: Specifies the ID of the cluster that you own and to which you want to apply a node tuning configuration.

<name_of_tuning>: Specifies the name of your tuning configuration.

-o json: Specifies the output type. This parameter is optional. If you do not specify any outputs, you see only the ID and name of the tuning configuration.

Example output without specifying output type

Example output specifying JSON output

After verifying the tuning configuration, edit the existing configurations with the rosa edit command:

$ rosa edit tuning-config -c <cluster_id> --name <name_of_tuning> --spec-path <path_to_spec_file>

In this command, you use the spec.json file to edit your configurations.

Verification

Run the rosa describe command again to see that the changes you made to the spec.json file are updated in the tuning configurations:

$ rosa describe tuning-config -c <cluster_id> --name <name_of_tuning>

Example output

6.3.4. Deleting node tuning configurations

You can delete tuning configurations if you no longer need them by using the Red Hat OpenShift Service on AWS (ROSA) CLI, rosa.

You cannot delete a tuning configuration referenced in a machine pool. You must first remove the tuning configuration from all machine pools before you can delete it.

Prerequisites

- You have downloaded the latest version of the ROSA CLI.

- You have a cluster on the latest version.

- Your cluster has a node tuning configuration that you want to delete.

Procedure

To delete the tuning configurations, run the following command:

$ rosa delete tuning-config -c <cluster_id> <name_of_tuning>

The tuning configuration on the cluster is deleted.

Example output

? Are you sure you want to delete tuning config sample-tuning on cluster sample-cluster? Yes
I: Successfully deleted tuning config 'sample-tuning' from cluster 'sample-cluster'