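Backup and restore

Backing up and restoring your OpenShift Container Platform cluster

Abstract

Chapter 1. Backup and restore

1.1. Control plane backup and restore operations

As a cluster administrator, you might need to stop an OpenShift Container Platform cluster for a period and restart it later. Common reasons are maintenance work on the cluster or reducing resource costs. In OpenShift Container Platform, you can perform a graceful shutdown of a cluster so that you can easily restart it later.

You must back up etcd data before shutting down a cluster. etcd is the key-value store for OpenShift Container Platform, and it persists the state of all resource objects. An etcd backup plays a crucial role in disaster recovery. In OpenShift Container Platform, you can also replace an unhealthy etcd member.

When you want to get your cluster running again, restart the cluster gracefully.

A cluster's certificates expire one year after the installation date. You can shut down a cluster and expect it to restart gracefully while the certificates are still valid. Although the cluster automatically retrieves the expired control plane certificates, you must still approve the certificate signing requests (CSRs).

You might run into several situations where OpenShift Container Platform does not work as expected, such as:

- You have a cluster that is not functional after the restart because of unexpected conditions, such as node failure or network connectivity issues.

- You have deleted something critical in the cluster by mistake.

- You have lost the majority of your control plane hosts, leading to etcd quorum loss.

You can always recover from a disaster situation by restoring your cluster to its previous state with the saved etcd snapshots.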

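1.2. Application backup and restore operations

As a cluster administrator, you can back up and restore applications running on OpenShift Container Platform by using the OpenShift API for Data Protection (OADP).

OADP backs up and restores Kubernetes resources and internal images at the granularity of a namespace. It uses the version of Velero that matches the OADP version you install (see the table in Downloading the Velero CLI tool). OADP backs up and restores persistent volumes (PVs) by using snapshots or Restic. For details, see OADP features.

1.2.1. OADP requirements

OADP has the following requirements:

- You must be logged in as a user with the cluster-admin role.

- You must have object storage for storing backups, such as one of the following storage types: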

- OpenShift Data Foundation

- Amazon Web Services

- Microsoft Azure

- Google Cloud

- S3-compatible object storage

- IBM Cloud® Object Storage S3

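If you use CSI backup on OCP 4.11 and later, install OADP 1.1.x.

OADP 1.0.x does not support CSI backup on OCP 4.11 and later. OADP 1.0.x includes Velero 1.7.x and expects the API group snapshot.storage.k8s.io/v1beta1, which is not present on OCP 4.11 and later.

The CloudStorage API for S3 storage is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, so that customers can test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see the following link:

To back up PVs with snapshots, you must have cloud storage that has a native snapshot API or supports Container Storage Interface (CSI) snapshots, such as the following providers: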

- Amazon Web Services

- Microsoft Azure

- Google Cloud

- CSI snapshot-enabled cloud storage, such as Ceph RBD or Ceph FS

If you do not want to back up PVs by using snapshots, you can use Restic, which the OADP Operator installs by default.

1.2.2. Backing up and restoring applications

You back up applications by creating a Backup custom resource (CR). See Creating a Backup CR. You can configure the following backup options:

- Creating backup hooks to run commands before or after the backup operation

- Scheduling backups

- Backing up applications with File System Backup: Kopia or Restic
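The backup options above correspond to the Backup and Schedule custom resources. The following Python is a minimal sketch, not OADP tooling. It builds both manifests as dictionaries and prints them as one JSON List, which oc apply -f - accepts. The field names follow the upstream Velero velero.io/v1 API. The hello-world namespace, the backup and schedule names, the cron expression, and the use of dpa-sample-1 as the storage location are all assumptions for illustration.

```python
import json

# Sketch: Velero Backup and Schedule manifests (velero.io/v1) as plain dicts.
# Names, the application namespace, and the storage location are assumptions.
OADP_NAMESPACE = "openshift-adp"


def backup_spec(namespaces, storage_location, ttl="720h0m0s", fs_backup=False):
    """BackupSpec limited to the given application namespaces."""
    return {
        "includedNamespaces": list(namespaces),
        "storageLocation": storage_location,
        "ttl": ttl,
        # True backs up pod volumes with File System Backup (Kopia or Restic).
        "defaultVolumesToFsBackup": fs_backup,
    }


def backup_cr(name, namespaces, storage_location, **options):
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Backup",
        "metadata": {"name": name, "namespace": OADP_NAMESPACE},
        "spec": backup_spec(namespaces, storage_location, **options),
    }


def schedule_cr(name, cron, namespaces, storage_location, **options):
    """A Schedule runs the BackupSpec in spec.template on a cron expression."""
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Schedule",
        "metadata": {"name": name, "namespace": OADP_NAMESPACE},
        "spec": {
            "schedule": cron,
            "template": backup_spec(namespaces, storage_location, **options),
        },
    }


if __name__ == "__main__":
    # A v1 List lets "oc apply -f -" create both objects from one JSON document.
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            backup_cr("hello-world-backup", ["hello-world"], "dpa-sample-1"),
            schedule_cr("hello-world-nightly", "0 7 * * *", ["hello-world"],
                        "dpa-sample-1", fs_backup=True),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

For example, run python3 <file> | oc apply -f -. Before relying on the field set, check it against Creating a Backup CR for your OADP version.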

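You restore application backups by creating a Restore custom resource (CR). See Creating a Restore CR.

- You can configure restore hooks to run commands in init containers or in the application container during the restore operation.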
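A Restore CR with a restore hook can be sketched the same way. This hedged example assumes the upstream Velero velero.io/v1 Restore fields: backupName, restorePVs, and hooks.resources[].postHooks[].exec. The backup name, namespace, container name app, and echo command are hypothetical.

```python
import json

# Sketch: a Velero Restore manifest (velero.io/v1) with an optional exec
# restore hook. All names, the container, and the command are hypothetical.


def restore_cr(name, backup_name, namespaces=(), container=None, command=None):
    spec = {"backupName": backup_name, "restorePVs": True}
    if namespaces:
        spec["includedNamespaces"] = list(namespaces)
    if command:
        # postHooks run after the pod is restored. "exec" runs in an existing
        # application container; an "init" hook would add init containers instead.
        spec["hooks"] = {"resources": [{
            "name": f"{name}-post-restore",
            "includedNamespaces": list(namespaces),
            "postHooks": [{"exec": {
                "container": container,
                "command": list(command),
                "onError": "Fail",
                "waitTimeout": "2m",
                "execTimeout": "1m",
            }}],
        }]}
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Restore",
        "metadata": {"name": name, "namespace": "openshift-adp"},
        "spec": spec,
    }


if __name__ == "__main__":
    restore = restore_cr("hello-world-restore", "hello-world-backup",
                         ["hello-world"], "app", ["/bin/sh", "-c", "echo restored"])
    print(json.dumps(restore, indent=2))  # pipe into: oc apply -f -
```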

Chapter 2. Shutting down the cluster gracefully

This document describes the process to gracefully shut down your cluster. You might need to temporarily shut down your cluster for maintenance reasons, or to save on resource costs.

2.1. Prerequisites

Take an etcd backup before shutting down the cluster.

Important: Take an etcd backup before performing this procedure, so that you can restore the cluster if you encounter an issue when restarting it.

For example, the following conditions can cause the restarted cluster to malfunction:

- etcd data corruption during shutdown

- Node failure due to hardware

- Network connectivity issues

If your cluster fails to recover, follow the steps to restore to a previous cluster state.

2.2. Shutting down the cluster

You can shut down your cluster in a graceful manner so that it can be restarted at a later date.

You can shut down a cluster until one year after the installation date and expect it to restart gracefully. One year after the installation date, the cluster certificates expire. However, when the cluster restarts, you might need to manually approve the pending certificate signing requests (CSRs) to recover kubelet certificates.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

- You have taken an etcd backup.

Procedure

If you are shutting the cluster down for an extended period, determine the date on which certificates expire by running the following command:

$ oc -n openshift-kube-apiserver-operator get secret kube-apiserver-to-kubelet-signer -o jsonpath='{.metadata.annotations.auth\.openshift\.io/certificate-not-after}'

Example output

2022-08-05T14:37:50Z

To ensure that the cluster can restart gracefully, plan to restart it on or before the specified date. As the cluster restarts, you might need to manually approve the pending certificate signing requests (CSRs) to recover kubelet certificates.
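To plan against that date, the following minimal Python sketch parses the annotation value printed by the command above and checks a planned restart time against it. It assumes the value always uses the UTC "...Z" format shown in the example output.

```python
from datetime import datetime, timezone

# Sketch: compare a planned restart against the certificate-not-after
# annotation value (assumed format: YYYY-MM-DDTHH:MM:SSZ, UTC).


def parse_not_after(value):
    """Parse a value such as '2022-08-05T14:37:50Z' into an aware datetime."""
    parsed = datetime.strptime(value.strip(), "%Y-%m-%dT%H:%M:%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def restart_is_safe(not_after, planned_restart):
    """True if the planned restart is on or before the expiry time."""
    return planned_restart <= parse_not_after(not_after)


def days_remaining(not_after, now=None):
    """Whole days left until expiry, measured from now (UTC) by default."""
    now = now or datetime.now(timezone.utc)
    return (parse_not_after(not_after) - now).days
```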

Mark all the nodes in the cluster as unschedulable. You can do this from your cloud provider's web console, or by running the following loop:

$ for node in $(oc get nodes -o jsonpath='{.items[*].metadata.name}'); do echo ${node} ; oc adm cordon ${node} ; done

Evacuate the pods by using the following method:

$ for node in $(oc get nodes -l node-role.kubernetes.io/worker -o jsonpath='{.items[*].metadata.name}'); do echo ${node} ; oc adm drain ${node} --delete-emptydir-data --ignore-daemonsets=true --timeout=15s --force ; done

Shut down all of the nodes in the cluster. You can do this from your cloud provider's web console, or by running the following loop. Either method lets pods terminate gracefully, which reduces the chance of data corruption.

Note: Ensure that the control plane node with the API VIP assigned is the last node processed in the loop. Otherwise, the shutdown command fails.

$ for node in $(oc get nodes -o jsonpath='{.items[*].metadata.name}'); do oc debug node/${node} -- chroot /host shutdown -h 1; done

In this command, -h 1 sets how long, in minutes, this process lasts before the control plane nodes are shut down. For large-scale clusters with 10 nodes or more, set -h 10 or longer so that all the compute nodes have time to shut down first.
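The loop above processes nodes in the order that oc get nodes returns them, so you might need to reorder them to keep the API VIP node last. The following Python sketch assumes you already know which control plane node holds the API VIP. It builds the shutdown commands in that order and applies the -h sizing rule described above.

```python
# Sketch: order nodes so that the control plane node holding the API VIP is
# processed last, and size the shutdown -h delay (minutes) by node count.
# Identifying the VIP node is an input you must supply yourself.


def shutdown_delay_minutes(node_count):
    """1 minute by default; 10 for clusters with 10 or more nodes."""
    return 10 if node_count >= 10 else 1


def shutdown_order(nodes, vip_node):
    """Return the nodes in processing order with the API VIP node moved last."""
    if vip_node not in nodes:
        raise ValueError(f"{vip_node} is not in the node list")
    return [n for n in nodes if n != vip_node] + [vip_node]


def shutdown_commands(nodes, vip_node):
    delay = shutdown_delay_minutes(len(nodes))
    return [
        f"oc debug node/{n} -- chroot /host shutdown -h {delay}"
        for n in shutdown_order(nodes, vip_node)
    ]
```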

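Note: You do not need to drain control plane nodes of the standard pods that ship with OpenShift Container Platform before shutdown. Cluster administrators are responsible for ensuring a clean restart of their own workloads after the cluster is restarted. If you drained control plane nodes before shutdown because of custom workloads, you must mark the control plane nodes as schedulable after the restart before the cluster will be functional again.

Shut off any cluster dependencies that are no longer needed, such as external storage or an LDAP server. Consult your vendor's documentation before doing so.

Important: If you deployed your cluster on a cloud-provider platform, do not shut down, suspend, or delete the associated cloud resources. If you delete the cloud resources of a suspended virtual machine, OpenShift Container Platform might not restore successfully.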

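Chapter 3. Restarting the cluster gracefully

This document describes the process to restart your cluster after a graceful shutdown.

Although the cluster is expected to be functional after the restart, it might not recover because of unexpected conditions, for example:

- etcd data corruption during shutdown

- Node failure due to hardware

- Network connectivity issues

If your cluster fails to recover, follow the steps to restore to a previous cluster state.

3.1. Prerequisites

3.2. Restarting the cluster

You can restart your cluster after it has been shut down gracefully.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

- This procedure assumes that you gracefully shut down the cluster.

Procedure

Turn on the control plane nodes.

If you are using the admin.kubeconfig from the cluster installation and the API virtual IP address (VIP) is up, complete the following steps:

- Set the KUBECONFIG environment variable to the admin.kubeconfig path.

- For each control plane node in the cluster, run the following command:

$ oc adm uncordon <node>

If you do not have access to your admin.kubeconfig credentials, complete the following steps:

- Use SSH to connect to a control plane node.

- Copy the localhost-recovery.kubeconfig file to the /root directory.

- Use that file to run the following command for each control plane node in the cluster:

$ oc adm uncordon <node>

- Power on any cluster dependencies, such as external storage or an LDAP server.

Start all cluster machines.

Use the appropriate method for your cloud environment to start the machines, for example, from your cloud provider's web console.

Wait approximately 10 minutes before you check the status of the control plane nodes.

Verify that all control plane nodes are ready.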

$ oc get nodes -l node-role.kubernetes.io/master

The control plane nodes are ready if their status is Ready, as shown in the following output:

NAME                           STATUS   ROLES                  AGE   VERSION
ip-10-0-168-251.ec2.internal   Ready    control-plane,master   75m   v1.29.4
ip-10-0-170-223.ec2.internal   Ready    control-plane,master   75m   v1.29.4
ip-10-0-211-16.ec2.internal    Ready    control-plane,master   75m   v1.29.4

If the control plane nodes are not ready, check whether there are any pending certificate signing requests (CSRs) that must be approved.

Get the list of current CSRs:

$ oc get csr

Review the details of a CSR to verify that it is valid:

$ oc describe csr <csr_name>

<csr_name> is the name of a CSR from the list of current CSRs.

Approve each valid CSR:

$ oc adm certificate approve <csr_name>
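When many nodes come back at once, listing the pending CSRs by hand gets tedious. The following Python sketch reads the JSON output of oc get csr -o json and returns the names of CSRs that have neither an Approved nor a Denied condition. It only lists candidates. You still review each CSR with oc describe csr before approving it.

```python
import json
import subprocess

# Sketch: find pending CSRs in the output of "oc get csr -o json".
# "Pending" here means no Approved or Denied condition; the CSRs are not validated.


def pending_csr_names(csr_list):
    names = []
    for item in csr_list.get("items", []):
        conditions = (item.get("status") or {}).get("conditions") or []
        types = {c.get("type") for c in conditions}
        if not types & {"Approved", "Denied"}:
            names.append(item["metadata"]["name"])
    return names


def fetch_csrs():
    """Run oc (must be on PATH and logged in) and parse its JSON output."""
    result = subprocess.run(["oc", "get", "csr", "-o", "json"],
                            check=True, capture_output=True, text=True)
    return json.loads(result.stdout)
```

Usage: for name in pending_csr_names(fetch_csrs()): print(name). Review each printed name before passing it to oc adm certificate approve.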

After the control plane nodes are ready, verify that all worker nodes are ready.

$ oc get nodes -l node-role.kubernetes.io/worker

The worker nodes are ready if their status is Ready, as shown in the following output:

NAME                           STATUS   ROLES    AGE   VERSION
ip-10-0-179-95.ec2.internal    Ready    worker   64m   v1.29.4
ip-10-0-182-134.ec2.internal   Ready    worker   64m   v1.29.4
ip-10-0-250-100.ec2.internal   Ready    worker   64m   v1.29.4

If the worker nodes are not ready, check whether there are any pending certificate signing requests (CSRs) that must be approved.

Get the list of current CSRs:

$ oc get csr

Review the details of a CSR to verify that it is valid:

$ oc describe csr <csr_name>

<csr_name> is the name of a CSR from the list of current CSRs.

Approve each valid CSR:

$ oc adm certificate approve <csr_name>

After the control plane and compute nodes are ready, mark all the nodes in the cluster as schedulable by running the following command:

$ for node in $(oc get nodes -o jsonpath='{.items[*].metadata.name}'); do echo ${node} ; oc adm uncordon ${node} ; done

Verify that the cluster started properly.

Check that there are no degraded cluster Operators.

$ oc get clusteroperators

Check that no cluster Operator has its DEGRADED condition set to True.

Check that all nodes are in the Ready state:

$ oc get nodes

Check that the status for all nodes is Ready.

If the cluster did not start properly, you might need to restore your cluster by using an etcd backup.
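The two checks above can also be scripted. The following Python sketch assumes you pass it the JSON output of oc get clusteroperators -o json and oc get nodes -o json. It returns the Operators whose Degraded condition is True and the nodes whose Ready condition is not True.

```python
# Sketch: post-restart health checks on the JSON output of
# "oc get clusteroperators -o json" and "oc get nodes -o json".


def _condition_status(obj, condition_type):
    conditions = (obj.get("status") or {}).get("conditions") or []
    for condition in conditions:
        if condition.get("type") == condition_type:
            return condition.get("status")
    return None


def degraded_operators(co_list):
    """Names of cluster Operators with Degraded=True."""
    return [co["metadata"]["name"] for co in co_list.get("items", [])
            if _condition_status(co, "Degraded") == "True"]


def not_ready_nodes(node_list):
    """Names of nodes whose Ready condition is missing, False, or Unknown."""
    return [n["metadata"]["name"] for n in node_list.get("items", [])
            if _condition_status(n, "Ready") != "True"]
```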

Chapter 4. OADP Application backup and restore

4.1. Introduction to OpenShift API for Data Protection

The OpenShift API for Data Protection (OADP) product safeguards customer applications on OpenShift Container Platform. It offers comprehensive disaster recovery protection, covering OpenShift Container Platform applications, application-related cluster resources, persistent volumes, and internal images. OADP can also back up both containerized applications and virtual machines (VMs).

However, OADP does not serve as a disaster recovery solution for etcd or OpenShift Operators.

OADP support is provided for customer workload namespaces and cluster-scoped resources.

Full cluster backup and restore are not supported.

4.1.1. OpenShift API for Data Protection APIs

OpenShift API for Data Protection (OADP) provides APIs that enable multiple approaches to customizing backups and preventing the inclusion of unnecessary or inappropriate resources.

OADP provides the following APIs:

4.1.1.1. OpenShift API for Data Protection support

| Version | OCP version | General availability | Full support ends | Maintenance ends | Extended Update Support (EUS) | Extended Update Support Term 2 (EUS Term 2) |
|---|---|---|---|---|---|---|
| 1.4 |  | 10 July 2024 | Release of 1.5 | Release of 1.6 | 27 June 2026 (EUS on OCP 4.16) | 27 June 2027 (EUS Term 2 on OCP 4.16) |
| 1.3 |  | 29 November 2023 | 10 July 2024 | Release of 1.5 | 31 October 2025 (EUS on OCP 4.14) | 31 October 2026 (EUS Term 2 on OCP 4.14) |

4.1.1.1.1. Unsupported versions of the OADP Operator

| Version | General availability | Full support ended | Maintenance ended |
|---|---|---|---|
| 1.2 | 14 June 2023 | 29 November 2023 | 10 July 2024 |
| 1.1 | 1 September 2022 | 14 June 2023 | 29 November 2023 |
| 1.0 | 9 February 2022 | 1 September 2022 | 14 June 2023 |

For more details about EUS, see Extended Update Support.

For more details about EUS Term 2, see Extended Update Support Term 2.

4.2. OADP リリースノート

4.2.1. OADP 1.4 リリースノート

OpenShift API for Data Protection (OADP) のリリースノートでは、新機能と機能拡張、非推奨の機能、製品の推奨事項、既知の問題、および解決された問題を説明します。

OADP に関する追加情報は、OpenShift API for Data Protection (OADP) FAQ を参照してください。

4.2.1.1. OADP 1.4.7 リリースノート

OpenShift API for Data Protection (OADP) 1.4.7 は、コンテナーのヘルスグレードを更新するためにリリースされた Container Grade Only (CGO) リリースです。OADP 1.4.6 と比較して、製品自体のコードは変更されていません。

4.2.1.2. OADP 1.5.3 リリースノート

OpenShift API for Data Protection (OADP) 1.5.3 は、コンテナーのヘルスグレードを更新するためにリリースされた Container Grade Only (CGO) リリースです。OADP 1.5.2 と比較して、製品自体のコードは変更されていません。

4.2.1.3. OADP 1.4.5 リリースノート

OpenShift API for Data Protection (OADP) 1.4.5 リリースノートには、新機能と解決された問題が記載されています。

4.2.1.3.1. 新機能

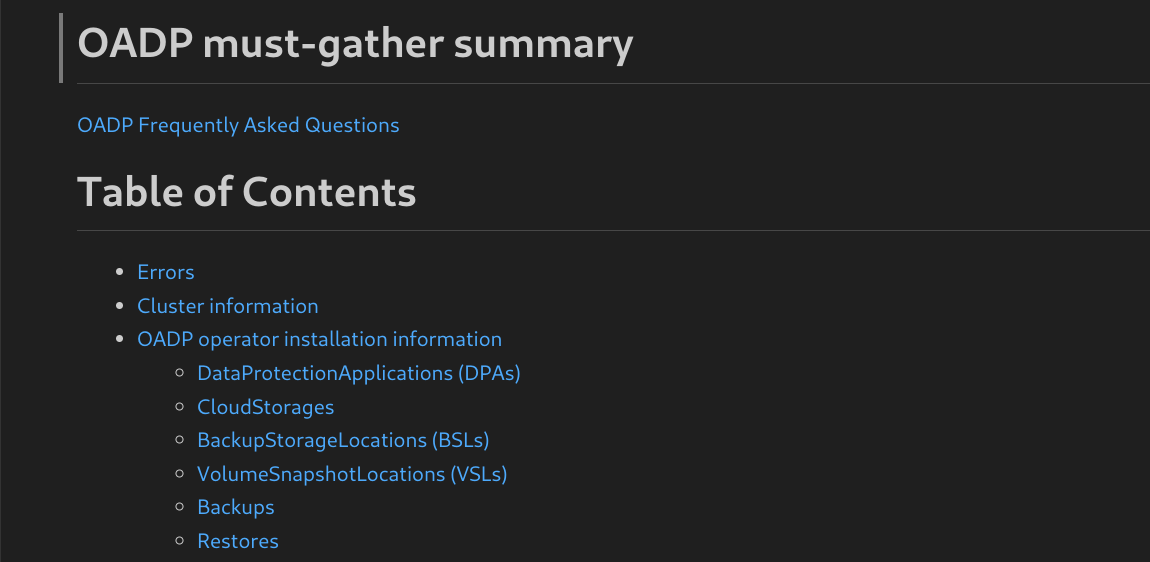

must-gather ツールを使用したログの収集が Markdown 概要で改善されました。

must-gather ツールを使用して、ログ、および OpenShift API for Data Protection (OADP) カスタムリソースに関する情報を収集できます。must-gather データはすべてのカスタマーケースに添付する必要があります。このツールは、must-gather ログクラスターディレクトリーにある収集された情報を含む Markdown 出力ファイルを生成します。(OADP-5904)

4.2.1.3.2. 解決された問題

- OADP 1.4.5 では、次の CVE が修正されています。

4.2.1.4. OADP 1.4.4 リリースノート

OpenShift API for Data Protection (OADP) 1.4.4 は、コンテナーのヘルスグレードを更新するためにリリースされた Container Grade Only (CGO) リリースです。OADP 1.4.3 と比較して、製品自体のコードは変更されていません。

4.2.1.4.1. 既知の問題

ステートフルアプリケーションの復元に関する問題

azurefile-csi ストレージクラスを使用するステートフルアプリケーションを復元すると、復元操作が Finalizing フェーズのままになります。(OADP-5508)

4.2.1.5. OADP 1.4.3 リリースノート

OpenShift API for Data Protection (OADP) 1.4.3 リリースノートには、次の新機能が記載されています。

4.2.1.5.1. 新機能

kubevirt velero プラグインバージョン 0.7.1 の注目すべき変更点

このリリースにより、kubevirt velero プラグインがバージョン 0.7.1 に更新されました。注目すべき改良点として、次のバグ修正と新機能が含まれます。

- 所有者の仮想マシンが除外されている場合に、仮想マシンインスタンス (VMI) がバックアップから無視されなくなりました。

- バックアップおよび復元操作中に、すべての追加オブジェクトがオブジェクトグラフに含まれるようになりました。

- オプションで生成されたラベルが、復元操作中に新しいファームウェアの汎用一意識別子 (UUID) に追加されるようになりました。

- 復元操作中に仮想マシン実行ストラテジーを切り替えることが可能になりました。

- ラベルごとに MAC アドレスをクリアできるようになりました。

- バックアップ操作中の復元固有のチェックがスキップされるようになりました。

-

VirtualMachineClusterInstancetypeおよびVirtualMachineClusterPreferenceカスタムリソース定義 (CRD) がサポートされるようになりました。

4.2.1.6. OADP 1.4.2 release notes

The OpenShift API for Data Protection (OADP) 1.4.2 release notes list new features, resolved issues and bugs, and known issues.

4.2.1.6.1. New features

Backing up different volumes in the same namespace by using the VolumePolicy feature is now possible

With this release, Velero provides resource policies for backing up different volumes in the same namespace by using the VolumePolicy feature. The supported VolumePolicy actions for backing up different volumes are skip, snapshot, and fs-backup. OADP-1071

File system backup and Data Mover can now use short-term credentials

File system backup and Data Mover can now use short-term credentials, such as AWS Security Token Service (STS) and Google Cloud WIF. With this support, backups complete successfully without any PartiallyFailed status. OADP-5095

4.2.1.6.2. Resolved issues

DPA now reports errors if the VSL contains an incorrect provider value

Previously, if the provider in a Volume Snapshot Location (VSL) spec was incorrect, the Data Protection Application (DPA) still reconciled successfully. With this update, the DPA reports errors and requests a valid provider value. OADP-5044

Data Mover restore is successful regardless of whether different OADP namespaces are used for backup and restore

Previously, when a backup was taken by using OADP installed in one namespace and restored by using OADP installed in a different namespace, the Data Mover restore failed. With this update, the Data Mover restore succeeds. OADP-5460

SSE-C backup works with the calculated MD5 of the secret key

Previously, backup failed with the following error:

Requests specifying Server Side Encryption with Customer provided keys must provide the client calculated MD5 of the secret key.

With this update, the missing base64 and MD5 hash for Server-Side Encryption with Customer-Provided Keys (SSE-C) are fixed. As a result, SSE-C backup works with the calculated MD5 of the secret key. In addition, incorrect error handling for the customerKey size is also fixed. OADP-5388

For a complete list of all issues resolved in this release, see the list of OADP 1.4.2 resolved issues in Jira.

4.2.1.6.3. Known issues

The nodeSelector spec is not supported for the Data Mover restore action

When a Data Protection Application (DPA) is created with the nodeSelector field set in the nodeAgent parameter, the Data Mover restore partially fails instead of completing the restore operation. OADP-5260

The S3 storage does not use the proxy environment when TLS skip verify is specified

In the image registry backup, the S3 storage does not use the proxy environment when the insecureSkipTLSVerify parameter is set to true. OADP-3143

Kopia does not delete artifacts after backup expiration

Even after you delete a backup, Kopia does not delete the volume artifacts from ${bucket_name}/kopia/$openshift-adp in the S3 location after the backup expires. For more information, see "About Kopia repository maintenance". OADP-5131

4.2.1.7. OADP 1.4.1 release notes

The OpenShift API for Data Protection (OADP) 1.4.1 release notes list new features, resolved issues and bugs, and known issues.

4.2.1.7.1. New features

New DPA fields to update client QPS and burst

You can now change the Velero server Kubernetes API queries per second (QPS) and burst values by using new Data Protection Application (DPA) fields. The new DPA fields are spec.configuration.velero.client-qps and spec.configuration.velero.client-burst, which both default to 100. OADP-4076

Enabling non-default algorithms with Kopia

With this update, you can configure the hash, encryption, and splitter algorithms in Kopia. Selecting non-default options lets you optimize performance for different backup workloads.

To configure these algorithms, set the env variable of the velero pod in the podConfig section of the DataProtectionApplication (DPA) configuration. If this variable is not set, or if an unsupported algorithm is chosen, Kopia defaults to its standard algorithms. OADP-4640
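Both 1.4.1 features are configured in the DPA. The following Python sketch builds a DPA spec fragment with the client-qps and client-burst fields named above. It can optionally add environment variables for Kopia under spec.configuration.velero.podConfig. The variable names KOPIA_HASHING_ALGORITHM, KOPIA_ENCRYPTION_ALGORITHM, and KOPIA_SPLITTER_ALGORITHM are an assumption to verify in the OADP documentation for your version. The algorithm names you pass must be ones your Kopia version supports.

```python
# Sketch: DPA spec fragment for the OADP 1.4.1 features above.
# client-qps / client-burst come from the release note; the KOPIA_* variable
# names are an assumption to verify against the OADP docs for your version.


def velero_tuning_fragment(qps=100, burst=100, hashing=None, encryption=None,
                           splitter=None):
    velero = {"client-qps": qps, "client-burst": burst}
    env = [
        {"name": name, "value": value}
        for name, value in (
            ("KOPIA_HASHING_ALGORITHM", hashing),
            ("KOPIA_ENCRYPTION_ALGORITHM", encryption),
            ("KOPIA_SPLITTER_ALGORITHM", splitter),
        )
        if value  # left unset: Kopia keeps its standard algorithms
    ]
    if env:
        velero["podConfig"] = {"env": env}
    return {"spec": {"configuration": {"velero": velero}}}
```

Merge the returned fragment into your existing DPA rather than applying it on its own. It does not contain the rest of the DPA spec.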

4.2.1.7.2. Resolved issues

Restoring a backup without pods is now successful

Previously, restoring a backup without pods, with the StorageClass VolumeBindingMode set to WaitForFirstConsumer, resulted in the PartiallyFailed status and the error fail to patch dynamic PV, err: context deadline exceeded. With this update, patching the dynamic PV is skipped, and the restore succeeds without any PartiallyFailed status. OADP-4231

PodVolumeBackup CR now displays the correct message

Previously, the PodVolumeBackup custom resource (CR) generated an incorrect message: get a podvolumebackup with status "InProgress" during the server starting, mark it as "Failed". With this update, the message is now:

found a podvolumebackup with status "InProgress" during the server starting, mark it as "Failed".

Overriding imagePullPolicy is now possible with DPA

Previously, OADP set the imagePullPolicy parameter to Always for all images. With this update, OADP checks whether each image contains a sha256 or sha512 digest. If it does, OADP sets imagePullPolicy to IfNotPresent; otherwise, imagePullPolicy is set to Always. You can now override this policy by using the new spec.containerImagePullPolicy DPA field. OADP-4172
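The pull-policy rule described above can be restated in a few lines of code. This Python sketch restates the documented behavior for illustration only and is not OADP's implementation. It assumes a digest-pinned image reference ends in @sha256:<hex> or @sha512:<hex>.

```python
import re

# Sketch of the documented rule: digest-pinned images get IfNotPresent,
# everything else gets Always, and a spec.containerImagePullPolicy value
# (passed here as "override") wins over both. Not OADP's actual code.
_DIGEST = re.compile(r"@sha(256|512):[0-9a-f]+$")


def image_pull_policy(image, override=None):
    if override:
        return override
    return "IfNotPresent" if _DIGEST.search(image) else "Always"
```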

OADP Velero can now retry updating the restore status if the initial update fails

Previously, OADP Velero failed to update the restored CR status, which left the status at InProgress indefinitely. Components that relied on the backup and restore CR status to determine completion also failed. With this update, the restore CR status correctly proceeds to the Completed or Failed status. OADP-3227

Restoring BuildConfig builds from a different cluster is successful without any errors

Previously, when you restored the BuildConfig build resource from a different cluster, the application generated an error on TLS verification to the internal image registry. The resulting error was failed to verify certificate: x509: certificate signed by unknown authority. With this update, restoring BuildConfig build resources to a different cluster proceeds successfully without the failed to verify certificate error. OADP-4692

Restoring an empty PVC is successful

Previously, downloading data failed while restoring an empty persistent volume claim (PVC), with the following error:

data path restore failed: Failed to run kopia restore: Unable to load snapshot : snapshot not found

With this update, the data download completes correctly when restoring an empty PVC, and the error message is not generated. OADP-3106

There is no Velero memory leak in CSI and DataMover plugins

Previously, using the CSI and DataMover plugins caused a Velero memory leak. When the backup ended, the Velero plugin instance was not deleted, and the leak consumed memory until the Velero pod reached an Out of Memory (OOM) condition. With this update, using the CSI and DataMover plugins no longer causes a Velero memory leak. OADP-4448

Post-hook operation does not start before the related PVs are released

Previously, because the Data Mover operation is asynchronous, a post-hook might be attempted before the Data Mover persistent volume claim (PVC) released the persistent volumes (PVs) of the related pods. This caused the backup to fail with a PartiallyFailed status. With this update, the post-hook operation does not start until the Data Mover PVC releases the related PVs, which eliminates the PartiallyFailed backup status. OADP-3140

Deploying a DPA works as expected in namespaces with more than 37 characters

Previously, when you installed the OADP Operator in a namespace with more than 37 characters to create a new DPA, labeling the "cloud-credentials" secret failed, and the DPA reported the following error:

The generated label name is too long.

With this update, creating a DPA no longer fails in namespaces whose names have more than 37 characters. OADP-3960

Restore is successfully completed by overriding the timeout error

Previously, in large-scale environments, the restore operation resulted in a Partiallyfailed status with the error fail to patch dynamic PV, err: context deadline exceeded. With this update, you can override this timeout error by using the resourceTimeout Velero server argument, so that the restore succeeds. OADP-4344

For a complete list of all issues resolved in this release, see the list of OADP 1.4.1 resolved issues in Jira.

4.2.1.7.3. Known issues

Cassandra application pods enter the CrashLoopBackoff status after restoring OADP

After an OADP restore, the Cassandra application pods might enter the CrashLoopBackoff status. To work around this problem, delete the StatefulSet pods that return the CrashLoopBackoff error state after the restore. The StatefulSet controller then recreates these pods, and they run normally. OADP-4407

Deployment referencing ImageStream is not restored properly, leading to corrupted pod and volume contents

During a File System Backup (FSB) restore operation, a Deployment resource that references an ImageStream is not restored properly. The restored pod that runs the FSB, and the postHook, are terminated prematurely.

During the restore operation, the OpenShift Container Platform controller updates the spec.template.spec.containers[0].image field in the Deployment resource with an updated ImageStreamTag hash. The update triggers the rollout of a new pod, which terminates the pod on which velero runs the FSB along with the post-hook. For more information about image stream triggers, see Triggering updates on image stream changes.

The workaround for this behavior is a two-step restore process:

Perform a restore that excludes the Deployment resources, for example:

$ velero restore create <RESTORE_NAME> \
  --from-backup <BACKUP_NAME> \
  --exclude-resources=deployment.apps

After the first restore is successful, perform a second restore that includes these resources, for example:

$ velero restore create <RESTORE_NAME> \
  --from-backup <BACKUP_NAME> \
  --include-resources=deployment.apps

4.2.1.8. OADP 1.4.0 release notes

The OpenShift API for Data Protection (OADP) 1.4.0 release notes list resolved issues and known issues.

4.2.1.8.1. Resolved issues

Restore works correctly in OpenShift Container Platform 4.16

Previously, when you restored a deleted application namespace in OpenShift Container Platform 4.16, the restore operation partially failed with the resource name may not be empty error. With this update, restore works as expected in OpenShift Container Platform 4.16. OADP-4075

Data Mover backups work properly in the OpenShift Container Platform 4.16 cluster

Previously, Velero used an earlier version of the SDK in which the Spec.SourceVolumeMode field did not exist. As a consequence, Data Mover backups failed in OpenShift Container Platform 4.16 clusters with version 4.2 of the external snapshotter. With this update, the external snapshotter is upgraded to version 7.0 or later. As a result, backups no longer fail in OpenShift Container Platform 4.16 clusters. OADP-3922

For a complete list of all issues resolved in this release, see the list of OADP 1.4.0 resolved issues in Jira.

4.2.1.8.2. Known issues

Backup fails when checksumAlgorithm is not set for MCG

When you back up any application with Noobaa as the backup location and the checksumAlgorithm configuration parameter is not set, the backup fails. To fix this problem, an empty value is added when you do not provide a value for checksumAlgorithm in the Backup Storage Location (BSL) configuration. The empty value is added only for BSLs created through the Data Protection Application (DPA) custom resource (CR). It is not added for BSLs created by any other method. OADP-4274
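If you prefer not to rely on the empty value being added for you, you can set checksumAlgorithm explicitly in the DPA. The following Python sketch builds one spec.backupLocations[] entry. The bucket, prefix, region, provider, and the cloud-credentials secret with key cloud are placeholders. Whether "" or CRC32 is correct depends on your storage provider.

```python
# Sketch: one DPA spec.backupLocations[] entry with checksumAlgorithm pinned.
# "" disables the checksum (needed by several S3 providers); "CRC32" is the
# velero-plugin-for-aws default. All other values are placeholders.


def backup_location(bucket, prefix, region, checksum_algorithm="",
                    provider="aws", secret_name="cloud-credentials"):
    return {
        "velero": {
            "provider": provider,
            "default": True,
            "credential": {"name": secret_name, "key": "cloud"},
            "objectStorage": {"bucket": bucket, "prefix": prefix},
            "config": {
                "region": region,
                "checksumAlgorithm": checksum_algorithm,
            },
        }
    }
```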

For a complete list of all known issues in this release, see the list of OADP 1.4.0 known issues in Jira.

4.2.1.8.3. Upgrade notes

Always upgrade to the next minor version. Never skip versions. To update to a later version, upgrade only one channel at a time. For example, to upgrade from OpenShift API for Data Protection (OADP) 1.1 to 1.3, upgrade first to 1.2, and then to 1.3.

4.2.1.8.3.1. Changes from OADP 1.3 to 1.4

The Velero server has been updated from version 1.12 to 1.14. Note that there are no changes in the Data Protection Application (DPA).

This update introduces the following changes:

- The velero-plugin-for-csi code is now included in the Velero code, so the plugin no longer requires an init container.

- Velero changed the client burst and QPS defaults from 30 and 20 to 100 and 100, respectively.

- The velero-plugin-for-aws plugin changed the default value of the spec.config.checksumAlgorithm field in BackupStorageLocation objects (BSLs) from "" (no checksum calculation) to the CRC32 algorithm. The checksum algorithm types are known to work only with AWS. Several S3 providers require md5sum to be disabled by setting the checksum algorithm to "". Confirm md5sum algorithm support and configuration with your storage provider.

  In OADP 1.4, the default value of this setting for BSLs created within the DPA is "". This default means that md5sum is not checked, which is consistent with OADP 1.3. For BSLs created within the DPA, update the setting by using the spec.backupLocations[].velero.config.checksumAlgorithm field in the DPA. If your BSLs are created outside the DPA, you can update the setting by using spec.config.checksumAlgorithm in the BSLs.

4.2.1.8.3.2. Backing up the DPA configuration

You must back up your current DataProtectionApplication (DPA) configuration.

Procedure

Save your current DPA configuration by running the following command:

Example command

$ oc get dpa -n openshift-adp -o yaml > dpa.orig.backup

4.2.1.8.3.3. Upgrading the OADP Operator

Use the following procedure when upgrading the OpenShift API for Data Protection (OADP) Operator.

Procedure

- Change your subscription channel for the OADP Operator from stable-1.3 to stable-1.4.

- Wait for the Operator and containers to update and restart.

4.2.1.8.4. Converting DPA to the new version

If you are upgrading from OADP 1.3 to 1.4, no Data Protection Application (DPA) changes are required.

4.2.1.8.5. Verifying the upgrade

Use the following procedure to verify the upgrade.

Procedure

Verify the installation by viewing the OpenShift API for Data Protection (OADP) resources with the following command:

$ oc get all -n openshift-adp

Verify that the DataProtectionApplication (DPA) is reconciled by running the following command:

$ oc get dpa dpa-sample -n openshift-adp -o jsonpath='{.status}'

Example output

{"conditions":[{"lastTransitionTime":"2023-10-27T01:23:57Z","message":"Reconcile complete","reason":"Complete","status":"True","type":"Reconciled"}]}

- Verify that the type is set to Reconciled.

Verify the backup storage location and confirm that the PHASE is Available by running the following command:

$ oc get backupstoragelocations.velero.io -n openshift-adp

Example output

NAME           PHASE       LAST VALIDATED   AGE     DEFAULT
dpa-sample-1   Available   1s               3d16h   true
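The checks above can be combined in a small script. The following Python sketch assumes it receives two inputs: the JSON printed by the jsonpath='{.status}' query, and the output of oc get backupstoragelocations.velero.io -n openshift-adp -o json.

```python
import json

# Sketch: evaluate the upgrade verification outputs shown above.


def dpa_reconciled(status_json):
    """True if the DPA status contains a Reconciled condition with status True."""
    status = json.loads(status_json)
    return any(c.get("type") == "Reconciled" and c.get("status") == "True"
               for c in status.get("conditions", []))


def unavailable_bsls(bsl_list):
    """Names of backup storage locations whose phase is not Available."""
    return [b["metadata"]["name"] for b in bsl_list.get("items", [])
            if (b.get("status") or {}).get("phase") != "Available"]
```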

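4.3. OADP performance

4.3.1. OADP recommended network settings

For a supported experience with OpenShift API for Data Protection (OADP), you need a stable and resilient network across OpenShift nodes, S3 storage, and supported cloud environments that meet the OpenShift network requirement recommendations.

Some deployments use remote S3 buckets located off-cluster with suboptimal data paths. For backup and restore operations to succeed in such deployments, your network settings should meet the following minimum requirements under these less optimal conditions:

- Bandwidth (network upload speed to object storage): 2 Mbps or more for small backups, and 10 - 100 Mbps for larger backups, depending on the data volume.

- Packet loss: 1%

- Packet corruption: 1%

- Latency: 100ms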
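The following Python sketch compares measured figures against these recommendations. It assumes that the packet loss, corruption, and latency values are upper limits. It also assumes that 10 Mbps, the low end of the 10 - 100 Mbps range, is the floor for large backups. How you measure the figures is out of scope.

```python
from dataclasses import dataclass

# Sketch: check measured network figures against the recommendations above.
# Assumptions: loss, corruption, and latency values are upper limits, and
# 10 Mbps is the bandwidth floor for large backups (2 Mbps for small ones).


@dataclass
class NetworkSample:
    upload_mbps: float       # upload speed to object storage
    packet_loss_pct: float
    corruption_pct: float
    latency_ms: float


def recommendation_gaps(sample, large_backup=False):
    """Return human-readable descriptions of every recommendation not met."""
    min_mbps = 10 if large_backup else 2
    gaps = []
    if sample.upload_mbps < min_mbps:
        gaps.append(f"bandwidth {sample.upload_mbps} Mbps < {min_mbps} Mbps")
    if sample.packet_loss_pct > 1:
        gaps.append(f"packet loss {sample.packet_loss_pct}% > 1%")
    if sample.corruption_pct > 1:
        gaps.append(f"packet corruption {sample.corruption_pct}% > 1%")
    if sample.latency_ms > 100:
        gaps.append(f"latency {sample.latency_ms} ms > 100 ms")
    return gaps
```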

Ensure that your OpenShift Container Platform network performs optimally and meets the OpenShift Container Platform network requirements.

Although Red Hat provides support for standard backup and restore failures, it does not provide support for failures caused by network settings that do not meet the recommended thresholds.

4.4. OADP features and plugins

OpenShift API for Data Protection (OADP) features provide options for backing up and restoring applications.

The default plugins enable Velero to integrate with certain cloud providers and to back up and restore OpenShift Container Platform resources.

4.4.1. OADP features

OpenShift API for Data Protection (OADP) supports the following features:

- Backup

You can use OADP to back up all applications on the OpenShift Platform, or you can filter the resources by type, namespace, or label.

OADP backs up Kubernetes objects and internal images by saving them as an archive file on object storage. OADP backs up persistent volumes (PVs) by creating snapshots with the native cloud snapshot API or with the Container Storage Interface (CSI). For cloud providers that do not support snapshots, OADP backs up resources and PV data with Restic.

Note: For backup and restore to succeed, you must exclude Operators from the backup of an application.

- Restore

You can restore resources and PVs from a backup. You can restore all objects in a backup, or filter the objects by namespace, PV, or label.

Note: For backup and restore to succeed, you must exclude Operators from the backup of an application.

- Schedule

You can schedule backups at specified intervals.

- Hooks

You can use hooks to run commands in a container on a pod, for example, fsfreeze to freeze a file system. You can configure a hook to run before or after a backup or restore. Restore hooks can run in an init container or in the application container.
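As an illustration of the fsfreeze use case, the following Python sketch builds the spec.hooks section of a Backup CR. The hooks freeze a file system before the backup and unfreeze it afterwards. The sketch assumes the upstream Velero backup hook fields: pre, post, exec, container, command, onError, and timeout. The namespace, container name, and mount path are hypothetical, and the container must provide /sbin/fsfreeze and have sufficient privileges to run it.

```python
# Sketch: spec.hooks for a Velero Backup that freezes a file system before
# the backup and unfreezes it afterwards. All names and paths are hypothetical.


def fsfreeze_hooks(namespace, container, mount_path, timeout="30s"):
    def exec_hook(flag):
        return {"exec": {
            "container": container,
            "command": ["/sbin/fsfreeze", flag, mount_path],
            "onError": "Fail",
            "timeout": timeout,
        }}

    return {"resources": [{
        "name": f"{namespace}-fsfreeze",
        "includedNamespaces": [namespace],
        "pre": [exec_hook("--freeze")],     # runs before the backup
        "post": [exec_hook("--unfreeze")],  # runs after the backup
    }]}
```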

4.4.2. OADP plugins

The OpenShift API for Data Protection (OADP) provides default Velero plugins that are integrated with storage providers to support backup and snapshot operations. You can create custom plugins based on the Velero plugins.

OADP also provides plugins for OpenShift Container Platform resource backups, OpenShift Virtualization resource backups, and Container Storage Interface (CSI) snapshots.

| OADP plugin | Function | Storage location |
|---|---|---|
| aws | Backs up and restores Kubernetes objects. | AWS S3 |
| aws | Backs up and restores volumes with snapshots. | AWS EBS |
| azure | Backs up and restores Kubernetes objects. | Microsoft Azure Blob storage |
| azure | Backs up and restores volumes with snapshots. | Microsoft Azure Managed Disks |
| gcp | Backs up and restores Kubernetes objects. | Google Cloud Storage |
| gcp | Backs up and restores volumes with snapshots. | Google Compute Engine Disks |
| openshift | Backs up and restores OpenShift Container Platform resources. [1] | Object store |
| kubevirt | Backs up and restores OpenShift Virtualization resources. [2] | Object store |
| csi | Backs up and restores volumes with CSI snapshots. [3] | Cloud storage that supports CSI snapshots |
|  | VolumeSnapshotMover relocates snapshots from the cluster into an object store, to be used during a restore process to recover stateful applications in situations such as cluster deletion. [4] | Object store |

[1] Mandatory.

[2] Virtual machine disks are backed up with CSI snapshots or Restic.

[3] The csi plugin uses the Kubernetes CSI snapshot API.

- OADP 1.1 or later uses snapshot.storage.k8s.io/v1.

- OADP 1.0 uses snapshot.storage.k8s.io/v1beta1.

[4] OADP 1.2 only.

4.4.3. About OADP Velero plugins

You can configure two types of plugins when you install Velero:

- Default cloud provider plugins

- Custom plugins

Both types of plugin are optional, but most users configure at least one cloud provider plugin.

4.4.3.1. Default Velero cloud provider plugins

You can install any of the following default Velero cloud provider plugins when you configure the oadp_v1alpha1_dpa.yaml file during deployment:

- aws (Amazon Web Services)

- gcp (Google Cloud)

- azure (Microsoft Azure)

- openshift (OpenShift Velero plugin)

- csi (Container Storage Interface)

- kubevirt (KubeVirt)

You specify the desired default plugins in the oadp_v1alpha1_dpa.yaml file during deployment.

Example file

The following .yaml file installs the openshift, aws, azure, and gcp plugins:
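The YAML of that example file is not reproduced here. As a hedged substitute, the following Python sketch builds an equivalent DataProtectionApplication manifest, as JSON, with the same four default plugins. The name dpa-sample is a placeholder. backupLocations and credentials are omitted, so the result is not a complete DPA on its own.

```python
import json

# Sketch: a DataProtectionApplication (oadp.openshift.io/v1alpha1) enabling
# default plugins. backupLocations and credentials are intentionally omitted.


def dpa_with_default_plugins(name, plugins, namespace="openshift-adp"):
    return {
        "apiVersion": "oadp.openshift.io/v1alpha1",
        "kind": "DataProtectionApplication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"configuration": {"velero": {"defaultPlugins": list(plugins)}}},
    }


if __name__ == "__main__":
    dpa = dpa_with_default_plugins("dpa-sample", ["openshift", "aws", "azure", "gcp"])
    print(json.dumps(dpa, indent=2))
```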

4.4.3.2. Custom Velero plugins

You can install a custom Velero plugin by specifying the plugin image and name when you configure the oadp_v1alpha1_dpa.yaml file during deployment.

You specify the desired custom plugins in the oadp_v1alpha1_dpa.yaml file during deployment.

Example file

The following .yaml file installs the default openshift, azure, and gcp plugins and a custom plugin named custom-plugin-example with the image quay.io/example-repo/custom-velero-plugin:
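The plugin section of that example can be sketched as follows. This Python builds only the spec.configuration.velero part, with customPlugins entries that carry a name and an image as described above. It is not a complete DPA.

```python
# Sketch: the spec.configuration.velero section with default plugins plus
# custom plugins, each custom plugin given as a (name, image) pair.


def velero_plugin_config(default_plugins, custom_plugins):
    return {
        "defaultPlugins": list(default_plugins),
        "customPlugins": [{"name": name, "image": image}
                          for name, image in custom_plugins],
    }


config = velero_plugin_config(
    ["openshift", "azure", "gcp"],
    [("custom-plugin-example", "quay.io/example-repo/custom-velero-plugin")],
)
```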

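4.4.3.3. Velero plugins returning "received EOF, stopping recv loop" message

Velero plugins are started as separate processes. When the Velero operation completes, whether successfully or not, the plugins exit. A received EOF, stopping recv loop message in the debug logs indicates that a plugin operation has completed. It does not mean that an error has occurred.

4.4.4. Supported architectures for OADP

OpenShift API for Data Protection (OADP) supports the following architectures: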

- AMD64

- ARM64

- PPC64le

- s390x

OADP 1.2.0 and later versions support the ARM64 architecture.

4.4.5. OADP support for IBM Power and IBM Z

OpenShift API for Data Protection (OADP) is platform neutral. The information that follows relates only to IBM Power® and IBM Z®.

- OADP 1.3.8 was tested successfully against OpenShift Container Platform 4.12, 4.13, 4.14, and 4.15 for both IBM Power® and IBM Z®. The sections that follow give testing and support information for OADP 1.3.8 in terms of backup locations for these systems.

- OADP 1.4.7 was tested successfully against OpenShift Container Platform 4.14, 4.15, 4.16, and 4.17 for both IBM Power® and IBM Z®. The sections that follow give testing and support information for OADP 1.4.7 in terms of backup locations for these systems.

4.4.5.1. OADP support for target backup locations using IBM Power

- IBM Power® running with OpenShift Container Platform 4.12, 4.13, 4.14, and 4.15, and OADP 1.3.8 was tested successfully against an AWS S3 backup location target. Although the test involved only an AWS S3 target, Red Hat supports running IBM Power® with OpenShift Container Platform 4.13, 4.14, and 4.15, and OADP 1.3.8 against all S3 backup location targets that are not AWS, as well.

- IBM Power® running with OpenShift Container Platform 4.14, 4.15, 4.16, and 4.17, and OADP 1.4.7 was tested successfully against an AWS S3 backup location target. Although the test involved only an AWS S3 target, Red Hat supports running IBM Power® with OpenShift Container Platform 4.14, 4.15, 4.16, and 4.17, and OADP 1.4.7 against all S3 backup location targets that are not AWS, as well.

4.4.5.2. OADP testing and support for target backup locations using IBM Z

- IBM Z® running with OpenShift Container Platform 4.12, 4.13, 4.14, and 4.15, and OADP 1.3.8 was tested successfully against an AWS S3 backup location target. Although the test involved only an AWS S3 target, Red Hat supports running IBM Z® with OpenShift Container Platform 4.13, 4.14, and 4.15, and OADP 1.3.8 against all S3 backup location targets that are not AWS, as well.

- IBM Z® running with OpenShift Container Platform 4.14, 4.15, 4.16, and 4.17, and OADP 1.4.7 was tested successfully against an AWS S3 backup location target. Although the test involved only an AWS S3 target, Red Hat supports running IBM Z® with OpenShift Container Platform 4.14, 4.15, 4.16, and 4.17, and OADP 1.4.7 against all S3 backup location targets that are not AWS, as well.

4.4.5.2.1. Known issue of OADP using IBM Power® and IBM Z® platforms

- Currently, there are backup method restrictions for single-node OpenShift clusters deployed on IBM Power® and IBM Z® platforms. Only NFS storage is currently compatible with single-node OpenShift clusters on these platforms. In addition, only File System Backup (FSB) methods, such as Kopia and Restic, are supported for backup and restore operations. There is currently no workaround for this issue.

4.4.6. OADP plugins known issues

The following section describes known issues in OpenShift API for Data Protection (OADP) plugins.

4.4.6.1. Velero plugin panics during imagestream backups due to a missing secret

When the backup and the Backup Storage Location (BSL) are managed outside the scope of the Data Protection Application (DPA), the OADP controller, meaning the DPA reconciliation, does not create the relevant oadp-<bsl_name>-<bsl_provider>-registry-secret.

When the backup is run, the OpenShift Velero plugin panics on the imagestream backup, with the following panic error:

024-02-27T10:46:50.028951744Z time="2024-02-27T10:46:50Z" level=error msg="Error backing up item" backup=openshift-adp/<backup name> error="error executing custom action (groupResource=imagestreams.image.openshift.io, namespace=<BSL Name>, name=postgres): rpc error: code = Aborted desc = plugin panicked: runtime error: index out of range with length 1, stack trace: goroutine 94…


4.4.6.1.1. Workaround to avoid the panic error

To avoid the Velero plugin panic error, perform the following steps:

Label the custom BSL with the relevant label:

$ oc label backupstoragelocations.velero.io <bsl_name> app.kubernetes.io/component=bsl

After the BSL is labeled, wait until the DPA reconciles.

Note: You can force the reconciliation by making any minor change to the DPA itself.

When the DPA reconciles, confirm that the relevant oadp-<bsl_name>-<bsl_provider>-registry-secret has been created and that the correct registry data has been populated into it:

$ oc -n openshift-adp get secret/oadp-<bsl_name>-<bsl_provider>-registry-secret -o json | jq -r '.data'

4.4.6.2. OpenShift ADP Controller segmentation fault

If you configure a DPA with both cloudstorage and restic enabled, the openshift-adp-controller-manager pod crashes and restarts indefinitely until the pod fails with a crash loop segmentation fault.

You can have either velero or cloudstorage defined, because they are mutually exclusive fields.

- If you have both velero and cloudstorage defined, the openshift-adp-controller-manager fails.

- If you have neither velero nor cloudstorage defined, the openshift-adp-controller-manager fails.

For more information about this issue, see OADP-1054.

4.4.6.2.1. OpenShift ADP Controller segmentation fault workaround

You must define either velero or cloudstorage when you configure a DPA. If you define both APIs in your DPA, the openshift-adp-controller-manager pod fails with a crash loop segmentation fault.
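As an illustration of the workaround, the following hypothetical DPA defines its backup location only through the velero field and does not also configure a CloudStorage-based location. The bucket, region, and secret names are placeholders or assumptions.

```yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: dpa-sample              # illustrative name
  namespace: openshift-adp
spec:
  configuration:
    velero:
      defaultPlugins:
      - openshift
      - aws
  backupLocations:
  # This entry uses only the velero field; it does not also reference a CloudStorage object.
  - velero:
      provider: aws
      default: true
      objectStorage:
        bucket: <bucket_name>
        prefix: velero
      credential:
        name: cloud-credentials   # assumed default secret name
        key: cloud
      config:
        region: <region>
```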

4.4.7. OADP and FIPS

Federal Information Processing Standards (FIPS) are a set of computer security standards developed by the United States federal government in line with the Federal Information Security Management Act (FISMA).

OpenShift API for Data Protection (OADP) has been tested and works on FIPS-enabled OpenShift Container Platform clusters.

4.5. OADP use cases

4.5.1. Backup using OpenShift API for Data Protection and Red Hat OpenShift Data Foundation (ODF)

Following is a use case for using OADP and ODF to back up an application.

4.5.1.1. Backing up an application using OADP and ODF

In this use case, you back up an application by using OADP and store the backup in an object storage provided by Red Hat OpenShift Data Foundation (ODF).

- You create an Object Bucket Claim (OBC) to configure the Backup Storage Location. You use ODF to configure an Amazon S3-compatible object storage bucket. ODF provides the MultiCloud Object Gateway (NooBaa MCG) and Ceph Object Gateway, also known as RADOS Gateway (RGW), object storage services. In this use case, you use NooBaa MCG as the Backup Storage Location.

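- You use the NooBaa MCG service with OADP by using the aws provider plugin.

- You configure the Data Protection Application (DPA) with the Backup Storage Location (BSL).

- You create a backup custom resource (CR) and specify the application namespace to back up.

- You create and verify the backup.

Prerequisites

- You installed the OADP Operator.

- You installed the ODF Operator.

- You have an application with a database running in a separate namespace.

Procedure

Create an OBC manifest file to request a NooBaa MCG bucket as shown in the following example: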
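A sketch of such an OBC manifest follows, using the test-obc and test-backup-bucket names described below. The storage class openshift-storage.noobaa.io is an assumption based on a default ODF installation; check the storage class name in your cluster. Note that generateBucketName is treated as a prefix, so the generated bucket name normally carries a random suffix.

```yaml
apiVersion: objectbucket.io/v1alpha1
kind: ObjectBucketClaim
metadata:
  name: test-obc                                  # Object Bucket Claim name
  namespace: openshift-adp
spec:
  storageClassName: openshift-storage.noobaa.io   # assumed NooBaa MCG storage class
  generateBucketName: test-backup-bucket          # prefix for the generated bucket name
```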

Where:

test-obc - Specifies the name of the Object Bucket Claim.

test-backup-bucket - Specifies the name of the bucket.

Create the OBC by running the following command:

$ oc create -f <obc_file_name>

Where:

<obc_file_name> - Specifies the file name of the Object Bucket Claim manifest.

When you create an OBC, ODF creates a secret and a config map with the same name as the Object Bucket Claim. The secret has the bucket credentials, and the config map has information to access the bucket. To get the bucket name and bucket host from the generated config map, run the following command:

$ oc extract --to=- cm/test-obc

test-obc is the name of the OBC.

To get the bucket credentials from the generated secret, run the following command:

$ oc extract --to=- secret/test-obc

Example output

# AWS_ACCESS_KEY_ID
ebYR....xLNMc
# AWS_SECRET_ACCESS_KEY
YXf...+NaCkdyC3QPym

Get the public URL for the S3 endpoint from the s3 route in the openshift-storage namespace by running the following command:

$ oc get route s3 -n openshift-storage

Create a cloud-credentials file with the object bucket credentials as shown in the following example:

[default]
aws_access_key_id=<AWS_ACCESS_KEY_ID>
aws_secret_access_key=<AWS_SECRET_ACCESS_KEY>

Create the cloud-credentials secret with the cloud-credentials file content by running the following command:

$ oc create secret generic \
  cloud-credentials \
  -n openshift-adp \
  --from-file cloud=cloud-credentials

Configure the Data Protection Application (DPA) as shown in the following example:
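A sketch of a DPA for this use case follows. It assumes the cloud-credentials secret created in the previous step, NooBaa MCG as an S3-compatible target through the aws plugin, and Kopia as the file system uploader; the resource name oadp-backup, the prefix oadp, and the region value noobaa are illustrative. Replace <s3_url> with the URL of the ODF S3 endpoint and <bucket_name> with the bucket name from the test-obc config map.

```yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: oadp-backup
  namespace: openshift-adp
spec:
  configuration:
    nodeAgent:
      enable: true
      uploaderType: kopia
    velero:
      defaultPlugins:
      - aws
      - openshift
      - csi
      defaultSnapshotMoveData: true   # move CSI snapshots to object storage with the Data Mover
  backupLocations:
  - velero:
      provider: aws
      default: true
      credential:
        name: cloud-credentials
        key: cloud
      objectStorage:
        bucket: <bucket_name>
        prefix: oadp
      config:
        profile: "default"
        region: noobaa
        s3Url: <s3_url>
        s3ForcePathStyle: "true"
        insecureSkipTLSVerify: "true"   # skips TLS verification; section 4.5.3 supplies a CA certificate instead
```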

Where:

defaultSnapshotMoveData - Set to true to use the OADP Data Mover to enable movement of Container Storage Interface (CSI) snapshots to a remote object storage.

s3Url - Specifies the S3 URL of the ODF storage.

<bucket_name> - Specifies the bucket name.

Create the DPA by running the following command:

$ oc apply -f <dpa_filename>

Verify that the DPA is created successfully by running the following command. When the DPA is created successfully, the type field of the status object is set to Reconciled.

$ oc get dpa -o yaml

Verify that the Backup Storage Location (BSL) is available by running the following command:

$ oc get backupstoragelocations.velero.io -n openshift-adp

Example output

NAME           PHASE       LAST VALIDATED   AGE   DEFAULT
dpa-sample-1   Available   3s               15s   true

Configure a backup CR as shown in the following example:
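A minimal Backup CR sketch follows, assuming the backup name test-backup that the verification step refers to:

```yaml
apiVersion: velero.io/v1
kind: Backup
metadata:
  name: test-backup
  namespace: openshift-adp
spec:
  includedNamespaces:
  - <application_namespace>   # namespace of the application to back up
```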

Where:

<application_namespace> - Specifies the namespace for backing up the application.

Create the backup CR by running the following command:

$ oc apply -f <backup_cr_filename>

Verification

Verify that the backup object is in the Completed phase by running the following command:

$ oc describe backup test-backup -n openshift-adp

4.5.2. OpenShift API for Data Protection (OADP) restore use case

Following is a use case for using OADP to restore a backup to a different namespace.

4.5.2.1. Restoring an application to a different namespace using OADP

Restore a backup of an application by using OADP to a new target namespace, test-restore-application. To restore a backup, you create a restore custom resource (CR) as shown in the following example. In the restore CR, the source namespace refers to the application namespace that you included in the backup. You then verify the restore by changing your project to the new restored namespace and verifying the resources.

Prerequisites

- You installed the OADP Operator.

- You have the backup of an application to be restored.

Procedure

Create a restore CR as shown in the following example:
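A sketch of the restore CR follows, using the test-restore, <backup_name>, and test-restore-application values described below. restorePVs: true is an assumption added so that persistent volume data is restored along with the resources.

```yaml
apiVersion: velero.io/v1
kind: Restore
metadata:
  name: test-restore
  namespace: openshift-adp
spec:
  backupName: <backup_name>
  restorePVs: true
  namespaceMapping:
    <application_namespace>: test-restore-application   # source namespace: target namespace
```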

Where:

test-restore - Specifies the name of the restore CR.

<backup_name> - Specifies the name of the backup.

<application_namespace> - Specifies the source application namespace that was included in the backup. namespaceMapping maps the source application namespace to the target application namespace. test-restore-application is the name of the target namespace where you want to restore the backup.

Apply the restore CR by running the following command:

$ oc apply -f <restore_cr_filename>

Verification

Verify that the restore is in the Completed phase by running the following command:

$ oc describe restores.velero.io <restore_name> -n openshift-adp

Change to the restored namespace test-restore-application by running the following command:

$ oc project test-restore-application

Verify the restored resources, such as persistent volume claim (pvc), service (svc), deployment, secret, and config map, by running the following command:

$ oc get pvc,svc,deployment,secret,configmap

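4.5.3. Including a self-signed CA certificate during backup

You can include a self-signed certificate authority (CA) certificate in the Data Protection Application (DPA) and then back up an application. You store the backup in a NooBaa bucket provided by Red Hat OpenShift Data Foundation (ODF).

4.5.3.1. Backing up an application and its self-signed CA certificate

The s3.openshift-storage.svc service, provided by ODF, uses a Transport Layer Security (TLS) protocol certificate that is signed with the self-signed service CA.

To prevent a certificate signed by unknown authority error, you must include a self-signed CA certificate in the Backup Storage Location (BSL) section of the DataProtectionApplication custom resource (CR). For this situation, you must complete the following tasks:

- Request a NooBaa bucket by creating an Object Bucket Claim (OBC).

- Extract the bucket details.

- Include a self-signed CA certificate in the DataProtectionApplication CR.

- Back up the application.

Prerequisites

- You installed the OADP Operator.

- You installed the ODF Operator.

- You have an application with a database running in a separate namespace.

Procedure

Create an OBC manifest to request a NooBaa bucket, setting the following fields: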

test-obc - Specifies the name of the Object Bucket Claim.

test-backup-bucket - Specifies the name of the bucket.

Create the OBC by running the following command:

$ oc create -f <obc_file_name>

When you create an OBC, ODF creates a secret and a ConfigMap with the same name as the Object Bucket Claim. The secret object has the bucket credentials, and the ConfigMap object has information to access the bucket. To get the bucket name and bucket host from the generated config map, run the following command:

$ oc extract --to=- cm/test-obc

test-obc is the name of the OBC.

To get the bucket credentials from the secret object, run the following command:

$ oc extract --to=- secret/test-obc

Example output

# AWS_ACCESS_KEY_ID
ebYR....xLNMc
# AWS_SECRET_ACCESS_KEY
YXf...+NaCkdyC3QPym

Create a cloud-credentials file with the object bucket credentials by using the following example configuration:

[default]
aws_access_key_id=<AWS_ACCESS_KEY_ID>
aws_secret_access_key=<AWS_SECRET_ACCESS_KEY>

Create the cloud-credentials secret with the cloud-credentials file content by running the following command:

$ oc create secret generic \
  cloud-credentials \
  -n openshift-adp \
  --from-file cloud=cloud-credentials

Extract the service CA certificate from the openshift-service-ca.crt config map by running the following command. Ensure that you encode the certificate in Base64 format and note the value to use in the next step.

$ oc get cm/openshift-service-ca.crt \
  -o jsonpath='{.data.service-ca\.crt}' | base64 -w0; echo

Example output

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0... ....gpwOHMwaG9CRmk5a3....FLS0tLS0K

Configure the DataProtectionApplication CR manifest file with the bucket name and CA certificate. Set the following fields in the manifest:

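insecureSkipTLSVerify - Specifies whether SSL/TLS security is enabled. If set to true, SSL/TLS security is disabled. If set to false, SSL/TLS security is enabled.

<bucket_name> - Specifies the name of the bucket extracted in an earlier step.

<ca_cert> - Specifies the Base64-encoded certificate from the previous step.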
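For reference, a DataProtectionApplication manifest that uses these fields might look like the following sketch. It assumes the cloud-credentials secret from the earlier step and the internal ODF service URL https://s3.openshift-storage.svc; the name dpa-sample, the prefix oadp, and the region noobaa are illustrative. The caCert field sits under objectStorage in the backup location, and insecureSkipTLSVerify stays "false" so that the certificate is actually verified.

```yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: dpa-sample
  namespace: openshift-adp
spec:
  configuration:
    nodeAgent:
      enable: true
      uploaderType: kopia
    velero:
      defaultPlugins:
      - aws
      - openshift
      - csi
      defaultSnapshotMoveData: true
  backupLocations:
  - velero:
      provider: aws
      default: true
      credential:
        name: cloud-credentials
        key: cloud
      objectStorage:
        bucket: <bucket_name>
        prefix: oadp
        caCert: <ca_cert>              # Base64-encoded service CA certificate
      config:
        profile: "default"
        region: noobaa
        s3Url: https://s3.openshift-storage.svc
        s3ForcePathStyle: "true"
        insecureSkipTLSVerify: "false" # keep TLS verification enabled
```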

Create the DataProtectionApplication CR by running the following command:

$ oc apply -f <dpa_filename>

Verify that the DataProtectionApplication CR is created successfully by running the following command:

$ oc get dpa -o yaml

Verify that the Backup Storage Location (BSL) is available by running the following command:

$ oc get backupstoragelocations.velero.io -n openshift-adp

Example output

NAME           PHASE       LAST VALIDATED   AGE   DEFAULT
dpa-sample-1   Available   3s               15s   true

Configure the Backup CR, setting the following field:

<application_namespace> - Specifies the namespace for backing up the application.

Create the Backup CR by running the following command:

$ oc apply -f <backup_cr_filename>

Verification

Verify that the Backup object is in the Completed phase by running the following command:

$ oc describe backup test-backup -n openshift-adp

4.5.4. Using the legacy-aws Velero plugin

If you are using an AWS S3-compatible Backup Storage Location, you might get a SignatureDoesNotMatch error while backing up your application. This error occurs because some Backup Storage Locations still use older versions of the S3 APIs, which are incompatible with the newer AWS SDK for Go V2. To resolve this issue, you can use the legacy-aws Velero plugin in the DataProtectionApplication custom resource (CR). The legacy-aws Velero plugin uses the older AWS SDK for Go V1, which is compatible with the legacy S3 APIs, so that backups run successfully.

4.5.4.1. Using the legacy-aws Velero plugin in the DataProtectionApplication CR

In the following use case, you configure the DataProtectionApplication CR with the legacy-aws Velero plugin and then back up an application.

Depending on the Backup Storage Location you choose, you can use either the legacy-aws or the aws plugin in your DataProtectionApplication CR. If you use both plugins in the DataProtectionApplication CR, the following error occurs: aws and legacy-aws can not be both specified in DPA spec.configuration.velero.defaultPlugins.

Prerequisites

- You have installed the OADP Operator.

- You have configured an AWS S3-compatible object storage as a backup location.

- You have an application with a database running in a separate namespace.

Procedure

Configure the DataProtectionApplication CR to use the legacy-aws Velero plugin. Set the following fields in the manifest:

legacy-aws - Specifies the use of the legacy-aws plugin.

<bucket_name> - Specifies the bucket name.
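The following sketch shows where legacy-aws goes in the DPA. It assumes that the backup location still uses provider: aws and the cloud-credentials secret; <region> and <s3_url> are placeholders for your S3-compatible endpoint, and the other values are illustrative.

```yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: oadp-backup
  namespace: openshift-adp
spec:
  configuration:
    nodeAgent:
      enable: true
      uploaderType: kopia
    velero:
      defaultPlugins:
      - legacy-aws      # do not list aws together with legacy-aws
      - openshift
      - csi
  backupLocations:
  - velero:
      provider: aws
      default: true
      credential:
        name: cloud-credentials
        key: cloud
      objectStorage:
        bucket: <bucket_name>
        prefix: oadp
      config:
        profile: "default"
        region: <region>
        s3Url: <s3_url>
        s3ForcePathStyle: "true"
```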

Create the DataProtectionApplication CR by running the following command:

$ oc apply -f <dpa_filename>

Verify that the DataProtectionApplication CR is created successfully by running the following command. When the CR is created successfully, the type field of the status object is set to Reconciled and the status field is set to "True".

$ oc get dpa -o yaml

Verify that the Backup Storage Location (BSL) is available by running the following command:

$ oc get backupstoragelocations.velero.io -n openshift-adp

You should see an output similar to the following example:

NAME           PHASE       LAST VALIDATED   AGE   DEFAULT
dpa-sample-1   Available   3s               15s   true

Configure the Backup CR, setting the following field:

<application_namespace> - Specifies the namespace for backing up the application.

Create the Backup CR by running the following command:

$ oc apply -f <backup_cr_filename>

Verification

Verify that the backup object is in the Completed phase by running the following command:

$ oc describe backups.velero.io test-backup -n openshift-adp

4.6. Installing OADP

4.6.1. About installing OADP

As a cluster administrator, you install the OpenShift API for Data Protection (OADP) by installing the OADP Operator. The OADP Operator installs Velero 1.14.

Starting from OADP 1.0.4, all OADP 1.0.z versions can only be used as a dependency of the Migration Toolkit for Containers Operator and are not available as a standalone Operator.

To back up Kubernetes resources and internal images, you must have object storage as a backup location, such as one of the following storage types:

- Amazon Web Services

- Microsoft Azure

- Google Cloud

- Multicloud Object Gateway

- IBM Cloud® Object Storage S3

- AWS S3 compatible object storage, such as Multicloud Object Gateway or MinIO

You can configure multiple Backup Storage Locations within the same namespace for each individual OADP deployment.

Unless specified otherwise, "NooBaa" refers to the open source project that provides lightweight object storage, while "Multicloud Object Gateway (MCG)" refers to the Red Hat distribution of NooBaa.

For more information on the MCG, see Accessing the Multicloud Object Gateway with your applications.

The CloudStorage API, which automates the creation of a bucket for object storage, is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

The CloudStorage API is a Technology Preview feature when you use a CloudStorage object and want OADP to use the CloudStorage API to automatically create an S3 bucket for use as a BackupStorageLocation.

The CloudStorage API supports manually creating a BackupStorageLocation object by specifying an existing S3 bucket. The CloudStorage API that creates an S3 bucket automatically is currently only enabled for AWS S3 storage.

You can back up persistent volumes (PVs) by using snapshots or a File System Backup (FSB).

To back up PVs with snapshots, you must have a cloud provider that supports either a native snapshot API or Container Storage Interface (CSI) snapshots, such as one of the following cloud providers:

- Amazon Web Services

- Microsoft Azure

- Google Cloud

- CSI snapshot-enabled cloud provider, such as OpenShift Data Foundation

If you want to use CSI backup on OCP 4.11 and later, install OADP 1.1.x.

OADP 1.0.x does not support CSI backup on OCP 4.11 and later. OADP 1.0.x includes Velero 1.7.x and expects the API group snapshot.storage.k8s.io/v1beta1, which is not present on OCP 4.11 and later.

If your cloud provider does not support snapshots or if your storage is NFS, you can back up applications on object storage by using File System Backup with Kopia or Restic. For details, see Backing up applications with File System Backup: Kopia or Restic.

You create a default Secret and then you install the Data Protection Application.

4.6.1.1. AWS S3 compatible backup storage providers

OADP works with many S3-compatible object storage providers. Several object storage providers are certified and tested with every release of OADP. Various S3 providers are known to work with OADP but are not specifically tested and certified. These providers are supported on a best-effort basis. Additionally, a few S3 object storage providers have known issues and limitations that are listed in this documentation.

Red Hat provides support for OADP on any S3-compatible storage, but support stops if the S3 endpoint is determined to be the root cause of an issue.

4.6.1.1.1. Certified backup storage providers

The following AWS S3 compatible object storage providers are fully supported by OADP through the AWS plugin for use as Backup Storage Locations:

- MinIO

- Multicloud Object Gateway (MCG)

- Amazon Web Services (AWS) S3

- IBM Cloud® Object Storage S3

- Ceph RADOS Gateway (Ceph Object Gateway)

- Red Hat Container Storage

- Red Hat OpenShift Data Foundation

- NetApp ONTAP S3 Object Storage

Google Cloud and Microsoft Azure have their own Velero object store plugins.

4.6.1.1.2. Unsupported backup storage providers

The following AWS S3 compatible object storage providers are known to work with Velero through the AWS plugin for use as Backup Storage Locations; however, they are unsupported and have not been tested by Red Hat:

- Oracle Cloud

- DigitalOcean

- NooBaa, unless installed using Multicloud Object Gateway (MCG)

- Tencent Cloud

- Quobyte

- Cloudian HyperStore

Unless specified otherwise, "NooBaa" refers to the open source project that provides lightweight object storage, while "Multicloud Object Gateway (MCG)" refers to the Red Hat distribution of NooBaa.

For more information on the MCG, see Accessing the Multicloud Object Gateway with your applications.

4.6.1.1.3. Backup storage providers with known limitations

The following AWS S3 compatible object storage providers are known to work with Velero through the AWS plugin, with a limited feature set:

- Swift - It works as a Backup Storage Location for backup storage, but is not compatible with Restic for file system-based volume backup and restore.

4.6.1.2. Configuring Multicloud Object Gateway (MCG) for disaster recovery on OpenShift Data Foundation

If you use cluster storage for your MCG bucket backupStorageLocation on OpenShift Data Foundation, configure MCG as an external object store.

Failure to configure MCG as an external object store might lead to backups not being available.

Unless specified otherwise, "NooBaa" refers to the open source project that provides lightweight object storage, while "Multicloud Object Gateway (MCG)" refers to the Red Hat distribution of NooBaa.

For more information on the MCG, see Accessing the Multicloud Object Gateway with your applications.

Procedure

- Configure MCG as an external object store as described in Adding storage resources for hybrid or Multicloud.

4.6.1.3. About OADP update channels

When you install an OADP Operator, you choose an update channel. This channel determines which upgrades to the OADP Operator and to Velero you receive. You can switch channels at any time.

The following update channels are available:

- The stable channel is now deprecated. The stable channel contains the patches (z-stream updates) of OADP ClusterServiceVersion for OADP.v1.1.z and older versions from OADP.v1.0.z.

- The stable-1.0 channel is deprecated and is not supported.

- The stable-1.1 channel is deprecated and is not supported.

- The stable-1.2 channel is deprecated and is not supported.

- The stable-1.3 channel contains OADP.v1.3.z, the most recent OADP 1.3 ClusterServiceVersion.

- The stable-1.4 channel contains OADP.v1.4.z, the most recent OADP 1.4 ClusterServiceVersion.
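If you install the Operator from the CLI instead of the web console, the channel is set in the Operator Lifecycle Manager Subscription. The following sketch assumes the package name redhat-oadp-operator, the redhat-operators catalog source, and an existing OperatorGroup in the openshift-adp namespace; verify these names against your catalog before use.

```yaml
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: redhat-oadp-operator
  namespace: openshift-adp
spec:
  channel: stable-1.4                   # update channel, for example stable-1.3 or stable-1.4
  name: redhat-oadp-operator            # assumed package name in the catalog
  source: redhat-operators              # assumed catalog source
  sourceNamespace: openshift-marketplace
```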

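For more information, see OpenShift Operator Life Cycles.

Which update channel is right for you?

- The stable channel is now deprecated. If you are already using the stable channel, you will continue to get updates from OADP.v1.1.z.

- Choose the stable-1.y update channel to install OADP 1.y and to continue receiving patches for it. If you choose this channel, you receive all z-stream patches for version 1.y.z.

When must you switch update channels?

- If you have OADP 1.y installed and want to receive patches only for that y-stream, you must switch from the stable update channel to the stable-1.y update channel. You then receive all z-stream patches for version 1.y.z.

- If you have OADP 1.0 installed, want to upgrade to OADP 1.1, and then receive patches only for OADP 1.1, you must switch from the stable-1.0 update channel to the stable-1.1 update channel. You then receive all z-stream patches for version 1.1.z.

- If you have OADP 1.y installed, with y greater than 0, and want to switch to OADP 1.0, you must uninstall your OADP Operator and then reinstall it by using the stable-1.0 update channel. You then receive all z-stream patches for version 1.0.z.

You cannot switch from OADP 1.y to OADP 1.0 by switching update channels. You must uninstall the Operator and then reinstall it.

4.6.1.4. Installation of OADP on multiple namespaces

You can install OpenShift API for Data Protection into multiple namespaces on the same cluster so that multiple project owners can manage their own OADP instance. This use case has been validated with File System Backup (FSB) and Container Storage Interface (CSI).

You install each instance of OADP as specified by the per-platform procedures contained in this document, with the following additional requirements:

- All deployments of OADP on the same cluster must be the same version, for example, 1.4.0. Installing different versions of OADP on the same cluster is not supported.

- Each individual deployment of OADP must have a unique set of credentials and a unique BackupStorageLocation configuration. You can also use multiple BackupStorageLocation configurations within the same namespace.

- By default, each OADP deployment has cluster-level access across namespaces. OpenShift Container Platform administrators need to carefully review potential impacts, such as not backing up and restoring to and from the same namespace concurrently.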

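4.6.1.5. OADP support for backup data immutability

Starting with OADP 1.4, you can store OADP backups in an AWS S3 bucket with versioning enabled. The versioning support is only for AWS S3 buckets and not for S3-compatible buckets.

See the following list for specific cloud provider limitations:

- The AWS S3 service supports backups because an S3 object lock applies only to versioned buckets. You can still update the object data for the new version. However, when backups are deleted, old versions of the objects are not deleted.

- OADP backups are not supported and might not work as expected when you enable immutability on Azure Storage Blob.

- Google Cloud storage policy only supports bucket-level immutability. Therefore, it is not feasible to implement immutability in the Google Cloud environment.

Depending on your storage provider, the immutability options are called differently:

- S3 object lock

- Object retention

- Bucket versioning

- Write Once Read Many (WORM) buckets

The primary reason for the absence of support for other S3-compatible object storage is that OADP initially saves the state of a backup as finalizing and then verifies whether any asynchronous operations are in progress.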

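4.6.1.6. Velero CPU and memory requirements based on collected data

The following recommendations are based on observations of performance made in the scale and performance lab. The backup and restore resources can be impacted by the type of plugin, the amount of resources required by that backup or restore, and the respective data contained in the persistent volumes (PVs) related to those resources.

4.6.1.6.1. CPU and memory requirement for configurations

| Configuration type | [1] Average usage | [2] Large usage | resourceTimeouts |
|---|---|---|---|
| CSI | Velero: CPU - Request 200m, Limit 1000m; Memory - Request 256Mi, Limit 1024Mi | Velero: CPU - Request 200m, Limit 2000m; Memory - Request 256Mi, Limit 2048Mi | N/A |
| Restic | [3] Restic: CPU - Request 1000m, Limit 2000m; Memory - Request 16Gi, Limit 32Gi | [4] Restic: CPU - Request 2000m, Limit 8000m; Memory - Request 16Gi, Limit 40Gi | 900m |
| [5] Data Mover | N/A | N/A | 10m - average usage; 60m - large usage |

- [1] Average usage - Use these settings for most usage situations.

- [2] Large usage - Use these settings for large usage situations, such as a large PV (500 GB usage), multiple namespaces (100+), or many pods within a single namespace (2000+ pods), and for optimal performance for backup and restore involving large datasets.

- [3] Restic resource usage corresponds to the amount and type of data. For example, many small files or large amounts of data can cause Restic to use large amounts of resources. The Velero documentation references 500m as a supplied default, but for most of the testing, a 200m request with a 1000m limit was suitable. As cited in the Velero documentation, exact CPU and memory usage depends on the scale of files and directories, in addition to environmental limitations.

- [4] Increasing the CPU significantly improves backup and restore times.

- [5] Data Mover - The Data Mover default resourceTimeout is 10m. Testing showed that restoring a large PV (500 GB usage) requires increasing the resourceTimeout to 60m.

The resource requirements listed throughout this guide are for average usage only. For large usage, adjust the settings as described in the table above.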
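As a sketch, the CSI average-usage values from the table could be applied to the Velero pod through the DPA podConfig.resourceAllocations field. The name dpa-sample is illustrative, and only the relevant fragment of the DPA is shown.

```yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: dpa-sample
  namespace: openshift-adp
spec:
  configuration:
    velero:
      podConfig:
        resourceAllocations:   # CSI, average usage row of the table
          requests:
            cpu: 200m
            memory: 256Mi
          limits:
            cpu: 1000m
            memory: 1024Mi
```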

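4.6.1.6.2. NodeAgent CPU for large usage

Testing shows that increasing NodeAgent CPU can significantly improve backup and restore times when using OpenShift API for Data Protection (OADP).

You can tune your OpenShift Container Platform environment based on your performance analysis and preference. Use CPU limits in the workloads when you use Kopia for file system backups.

If you do not use CPU limits on the pods, the pods can use excess CPU when it is available. If you specify CPU limits, the pods might be throttled if they exceed their limits. Therefore, the use of CPU limits on the pods is considered an anti-pattern.

Ensure that you are accurately specifying CPU requests so that pods can take advantage of excess CPU. Resource allocation is guaranteed based on CPU requests rather than CPU limits.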
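Following that guidance, a hypothetical nodeAgent configuration sets CPU and memory requests but no CPU limit. The values are borrowed from the Restic large-usage request row of the table above and are only a starting point; size them from your own measurements.

```yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: dpa-sample
  namespace: openshift-adp
spec:
  configuration:
    nodeAgent:
      enable: true
      uploaderType: kopia
      podConfig:
        resourceAllocations:
          requests:
            cpu: 2000m      # illustrative request
            memory: 16Gi
          # No limits are set here, so the node agent can use spare CPU when it is available.
```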

Testing showed that running Kopia with 20 cores and 32 Gi memory supported backup and restore operations of over 100 GB of data, multiple namespaces, or over 2000 pods in a single namespace. Testing detected no CPU limiting or memory saturation with these resource specifications.

In some environments, you might need to adjust Ceph MDS pod resources to avoid pod restarts, which occur when default settings cause resource saturation.

For more information about how to set the pod resource limit in Ceph MDS pods, see Changing the CPU and memory resources on the rook-ceph pods.

4.6.2. Installing the OADP Operator

You can install the OpenShift API for Data Protection (OADP) Operator on OpenShift Container Platform 4.16 by using Operator Lifecycle Manager (OLM).

The OADP Operator installs Velero 1.14.

4.6.2.1. Installing the OADP Operator

Use the following procedure to install the OADP Operator.

Prerequisites

- You must be logged in as a user with cluster-admin privileges.

Procedure

- In the OpenShift Container Platform web console, click Operators → OperatorHub.

- Use the Filter by keyword field to find the OADP Operator.

- Select the OADP Operator and click Install.

- Click Install to install the Operator in the openshift-adp project.

- Click Operators → Installed Operators to verify the installation.

4.6.2.2. OADP-Velero-OpenShift Container Platform version relationship

| OADP version | Velero version | OpenShift Container Platform version |
|---|---|---|
| 1.3.0 | 1.12 | 4.12-4.15 |
| 1.3.1 | 1.12 | 4.12-4.15 |
| 1.3.2 | 1.12 | 4.12-4.15 |
| 1.3.3 | 1.12 | 4.12-4.15 |
| 1.3.4 | 1.12 | 4.12-4.15 |
| 1.3.5 | 1.12 | 4.12-4.15 |
| 1.4.0 | 1.14 | 4.14-4.18 |
| 1.4.1 | 1.14 | 4.14-4.18 |
| 1.4.2 | 1.14 | 4.14-4.18 |
| 1.4.3 | 1.14 | 4.14-4.18 |

4.7. Configuring OADP with AWS S3 compatible storage

4.7.1. Configuring the OpenShift API for Data Protection with AWS S3 compatible storage

You install the OpenShift API for Data Protection (OADP) with Amazon Web Services (AWS) S3 compatible storage by installing the OADP Operator. The Operator installs Velero 1.14.

Starting from OADP 1.0.4, all OADP 1.0.z versions can only be used as a dependency of the Migration Toolkit for Containers Operator and are not available as a standalone Operator.

You configure AWS for Velero, create a default Secret, and then install the Data Protection Application. For more details, see Installing the OADP Operator.

To install the OADP Operator in a restricted network environment, you must first disable the default OperatorHub sources and mirror the Operator catalog. For details, see Using Operator Lifecycle Manager in disconnected environments.

4.7.1.1. About Amazon Simple Storage Service, Identity and Access Management, and GovCloud

Amazon Simple Storage Service (Amazon S3) is a storage solution of Amazon for the internet. As an authorized user, you can use this service to store and retrieve any amount of data whenever you want, from anywhere on the web.

You securely control access to Amazon S3 and other Amazon services by using the AWS Identity and Access Management (IAM) web service.

You can use IAM to manage permissions that control which AWS resources users can access. You use IAM both to authenticate, or verify that a user is who they claim to be, and to authorize, or grant permissions to use resources.

AWS GovCloud (US) is an Amazon storage solution developed to meet the stringent and specific data security requirements of the United States Federal Government. AWS GovCloud (US) works the same as Amazon S3 except for the following:

- You cannot copy the contents of an Amazon S3 bucket in the AWS GovCloud (US) regions directly to or from another AWS region.

- If you use Amazon S3 policies, use the AWS GovCloud (US) Amazon Resource Name (ARN) identifier to unambiguously specify a resource across all of AWS, such as in IAM policies, Amazon S3 bucket names, and API calls.

  - In AWS GovCloud (US) regions, ARNs have an identifier (arn:aws-us-gov) that is different from the one in other standard AWS regions. If you need to specify the US-West or US-East region, use one of the following:

    - For US-West, use us-gov-west-1.

    - For US-East, use us-gov-east-1.

  - For all other standard regions, ARNs begin with arn:aws.

- In AWS GovCloud (US) regions, use the endpoints listed in the AWS GovCloud (US-East) and AWS GovCloud (US-West) rows of the "Amazon S3 endpoints" table in Amazon Simple Storage Service endpoints and quotas. If you are processing export-controlled data, use one of the SSL/TLS endpoints. If you have FIPS requirements, use a FIPS 140-2 endpoint such as https://s3-fips.us-gov-west-1.amazonaws.com or https://s3-fips.us-gov-east-1.amazonaws.com.

- To find the other AWS-imposed restrictions, see How Amazon Simple Storage Service Differs for AWS GovCloud (US).

4.7.1.2. Amazon Web Services の設定

OpenShift API for Data Protection (OADP) 用に Amazon Web Services (AWS) を設定します。

前提条件

- AWS CLI がインストールされていること。

手順

BUCKET変数を設定します。BUCKET=<your_bucket>

$ BUCKET=<your_bucket>Copy to Clipboard Copied! Toggle word wrap Toggle overflow REGION変数を設定します。REGION=<your_region>

$ REGION=<your_region>Copy to Clipboard Copied! Toggle word wrap Toggle overflow AWS S3 バケットを作成します。

aws s3api create-bucket \ --bucket $BUCKET \ --region $REGION \ --create-bucket-configuration LocationConstraint=$REGION$ aws s3api create-bucket \ --bucket $BUCKET \ --region $REGION \ --create-bucket-configuration LocationConstraint=$REGION1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

us-east-1はLocationConstraintをサポートしていません。お住まいの地域がus-east-1の場合は、--create-bucket-configuration LocationConstraint=$REGIONを省略してください。

IAM ユーザーを作成します。

aws iam create-user --user-name velero

$ aws iam create-user --user-name velero1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Velero を使用して複数の S3 バケットを持つ複数のクラスターをバックアップする場合は、クラスターごとに一意のユーザー名を作成します。

velero-policy.jsonファイルを作成します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow ポリシーを添付して、

veleroユーザーに必要最小限の権限を付与します。aws iam put-user-policy \ --user-name velero \ --policy-name velero \ --policy-document file://velero-policy.json

Create an access key for the velero user:

$ aws iam create-access-key --user-name velero

Record the AccessKeyId and SecretAccessKey values from the command output. The secret access key is displayed only when the key is created.

Create a credentials-velero file:

$ cat << EOF > ./credentials-velero
[default]
aws_access_key_id=<AWS_ACCESS_KEY_ID>
aws_secret_access_key=<AWS_SECRET_ACCESS_KEY>
EOF

Before you install the Data Protection Application, use the credentials-velero file to create a Secret object for AWS.
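The procedure above attaches velero-policy.json but does not show its contents. The following sketch shows a minimal policy modeled on the example in the velero-plugin-for-aws README. It grants EC2 permissions for native volume snapshots and S3 permissions scoped to the backup bucket. Treat the action list as an assumption and check it against the documentation for your OADP version. The script reuses the BUCKET variable from the earlier step, and the my-oadp-bucket fallback is a placeholder.

```shell
#!/bin/sh
# Write a minimal IAM policy for the velero user (sketch; verify the actions).
# BUCKET comes from the earlier step; the fallback value is a placeholder.
BUCKET="${BUCKET:-my-oadp-bucket}"

# Unquoted EOF so that ${BUCKET} is expanded inside the JSON.
cat > velero-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeVolumes",
        "ec2:DescribeSnapshots",
        "ec2:CreateTags",
        "ec2:CreateVolume",
        "ec2:CreateSnapshot",
        "ec2:DeleteSnapshot"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:PutObject",
        "s3:AbortMultipartUpload",
        "s3:ListMultipartUploadParts"
      ],
      "Resource": ["arn:aws:s3:::${BUCKET}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::${BUCKET}"]
    }
  ]
}
EOF
```

In AWS GovCloud (US) regions, the bucket ARNs use the arn:aws-us-gov prefix instead of arn:aws, as described earlier in this section.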

4.7.1.3. About backup and snapshot locations and their secrets

You specify backup and snapshot locations and their secrets in the DataProtectionApplication custom resource (CR).

4.7.1.3.1. Backup locations

You can specify one of the following AWS S3-compatible object storage solutions as a backup location:

- Multicloud Object Gateway (MCG)

- Red Hat Container Storage

- Ceph RADOS Gateway, also known as Ceph Object Gateway

- Red Hat OpenShift Data Foundation

- MinIO

Velero backs up OpenShift Container Platform resources, Kubernetes objects, and internal images as an archive file on object storage.

4.7.1.3.2. Snapshot locations

If you use your cloud provider's native snapshot API to back up persistent volumes, you must specify the cloud provider as the snapshot location.

If you use Container Storage Interface (CSI) snapshots, you do not need to specify a snapshot location, because you create a VolumeSnapshotClass CR to register the CSI driver.

If you use File System Backup (FSB), you do not need to specify a snapshot location, because FSB backs up the file system on object storage.

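4.7.1.3.3. Secrets

If the backup and snapshot locations use the same credentials, or if you do not require a snapshot location, you create a default Secret.

If the backup and snapshot locations use different credentials, you create two secret objects:

- A custom Secret for the backup location, which you specify in the DataProtectionApplication CR.

- A default Secret for the snapshot location, which is not referenced in the DataProtectionApplication CR.

The Data Protection Application requires a default Secret. Otherwise, the installation fails.

If you do not want to specify backup or snapshot locations during the installation, you can create a default Secret with an empty credentials-velero file.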

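4.7.1.3.4. Creating a default Secret

You create a default Secret if your backup and snapshot locations use the same credentials or if you do not require a snapshot location.

The default name of the Secret is cloud-credentials.

The DataProtectionApplication custom resource (CR) requires a default Secret. Otherwise, the installation fails. If the name of the backup location Secret is not specified, the default name is used.

If you do not want to use the backup location credentials during the installation, you can create a Secret with the default name by using an empty credentials-velero file.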

Prerequisites

- If you have both object storage and cloud storage, they must use the same credentials.

- You must configure object storage for Velero.

Procedure

Create a credentials-velero file for the backup storage location in the appropriate format for your cloud provider. See the following example:

[default]
aws_access_key_id=<AWS_ACCESS_KEY_ID>
aws_secret_access_key=<AWS_SECRET_ACCESS_KEY>

Create a Secret custom resource (CR) with the default name:

$ oc create secret generic cloud-credentials -n openshift-adp --from-file cloud=credentials-velero

The Secret is referenced in the spec.backupLocations.credential block of the DataProtectionApplication CR when you install the Data Protection Application.
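If your credentials are available as environment variables, you can combine the two steps above in a script. The following is a minimal sketch. It assumes the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables. When either variable is unset, the script writes an empty credentials-velero file, which corresponds to creating the default Secret without backup location credentials. The run helper and the DRY_RUN switch are hypothetical, and by default the oc command is only printed.

```shell
#!/bin/sh
# Print commands instead of running them unless DRY_RUN is set to "".
run() {
  if [ -n "${DRY_RUN-1}" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Files created from here on are readable only by the current user.
umask 077
if [ -n "${AWS_ACCESS_KEY_ID:-}" ] && [ -n "${AWS_SECRET_ACCESS_KEY:-}" ]; then
  printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' \
    "$AWS_ACCESS_KEY_ID" "$AWS_SECRET_ACCESS_KEY" > credentials-velero
else
  # No credentials available: an empty file still yields the default Secret.
  : > credentials-velero
fi

run oc create secret generic cloud-credentials -n openshift-adp \
  --from-file cloud=credentials-velero
```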

4.7.1.3.5. Creating profiles for different credentials

If your backup and snapshot locations use different credentials, you create separate profiles in the credentials-velero file.

Then, you create a Secret object and specify the profiles in the DataProtectionApplication custom resource (CR).

Procedure

Create a credentials-velero file with separate profiles for the backup and snapshot locations.

Create a Secret object with the credentials-velero file:

$ oc create secret generic cloud-credentials -n openshift-adp --from-file cloud=credentials-velero

Add the profiles to the DataProtectionApplication CR.
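This procedure does not show the example credentials file or the DataProtectionApplication excerpt. The following sketch shows one way they could look. It assumes the profile names backupStorage and volumeSnapshot, but any names work as long as each config.profile value matches a section header in the file. The bucket, prefix, region, and key values are placeholders, and the output file name dpa-profiles.yaml is arbitrary. The script writes both files locally so that you can inspect them before use.

```shell
#!/bin/sh
# Credentials file with one profile per location (placeholder key values).
umask 077
cat > credentials-velero <<'EOF'
[backupStorage]
aws_access_key_id=<AWS_ACCESS_KEY_ID>
aws_secret_access_key=<AWS_SECRET_ACCESS_KEY>

[volumeSnapshot]
aws_access_key_id=<AWS_ACCESS_KEY_ID>
aws_secret_access_key=<AWS_SECRET_ACCESS_KEY>
EOF

# DPA excerpt: each location selects its profile through config.profile.
cat > dpa-profiles.yaml <<'EOF'
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: <dpa_name>
  namespace: openshift-adp
spec:
  backupLocations:
    - name: default
      velero:
        provider: aws
        default: true
        objectStorage:
          bucket: <bucket_name>
          prefix: <prefix>
        config:
          region: <backup_region>
          profile: "backupStorage"
        credential:
          key: cloud
          name: cloud-credentials
  snapshotLocations:
    - velero:
        provider: aws
        config:
          region: <snapshot_region>
          profile: "volumeSnapshot"
EOF
```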

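4.7.1.3.6. Configuring the backup storage location with AWS

You can configure the AWS backup storage location (BSL) as shown in the following example procedure.

Prerequisites

- You have created an object storage bucket by using AWS.

- You have installed the OADP Operator.

Procedure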

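Configure the BSL custom resource (CR) with values appropriate for your use case.

Backup Storage Location

- 1

- The name of the object store plugin. In this example, the plugin is aws. This field is required.

- 2

- The name of the bucket in which to store backups. This field is required.

- 3

- The prefix within the bucket in which to store backups. This field is optional.

- 4

- The credentials for the backup storage location. You can set custom credentials. If custom credentials are not set, the default credentials' secret is used.

- 5

- The key within the secret credentials' data.

- 6

- The name of the secret that contains the credentials.

- 7

- The AWS region where the bucket is located. Optional if s3ForcePathStyle is false.

- 8

- A Boolean flag that determines whether to use path-style addressing instead of virtual hosted bucket addressing. Set it to true if you use a storage service such as MinIO or NooBaa. This is an optional field. The default value is false.

- 9

- You can specify the AWS S3 URL explicitly here. This field is primarily for storage services such as MinIO or NooBaa. This is an optional field.

- 10

- This field is primarily used for storage services such as MinIO or NooBaa. This is an optional field.

- 11

- The name of the server-side encryption algorithm to use for uploading objects, for example, AES256. This is an optional field.

- 12

- Specify an AWS KMS key ID. You can format it either as an alias, such as alias/<KMS-key-alias-name> as shown in the example, or as the full ARN, to enable encryption of the backups stored in S3. Note that kmsKeyId cannot be used together with customerKeyEncryptionFile. This is an optional field.

- 13

- Specify the file that contains the SSE-C customer key to enable customer key encryption of the backups stored in S3. The file must contain a 32-byte string. The customerKeyEncryptionFile field points to a secret mounted within the velero container. Add the key-value pair customer-key: <your_b64_encoded_32byte_string> to the velero cloud-credentials secret. Note that the customerKeyEncryptionFile field cannot be used together with the kmsKeyId field. The default value is an empty string (""), which means that SSE-C is disabled. This is an optional field.

- 14

- The version of the signature algorithm used to create signed URLs. You use signed URLs to download backups or fetch logs. Valid values are 1 and 4. The default version is 4. This is an optional field.

- 15

- The name of the AWS profile in the credentials file. The default value is default. This is an optional field.

- 16

- Set the insecureSkipTLSVerify field to true if you do not want to verify the TLS certificate when connecting to the object store, for example, for self-signed certificates with MinIO. Setting it to true makes you susceptible to man-in-the-middle attacks, so this setting is not recommended for production workloads. The default value is false. This is an optional field.

- 17

- Set the enableSharedConfig field to true if you want to load the credentials file as a shared config file. The default value is false. This is an optional field.

- 18

- Specify the tags to annotate AWS S3 objects, as key-value pairs. The default value is an empty string (""). This is an optional field.

- 19

- Specify the checksum algorithm to use when uploading objects to S3. The supported values are CRC32, CRC32C, SHA1, and SHA256. If you set the field to an empty string (""), the checksum check is skipped. The default value is CRC32. This is an optional field.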
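The Backup Storage Location manifest that these callouts describe is not reproduced here. The following sketch comments each field with the callout number it most likely corresponds to. The values are placeholders. The apiVersion velero.io/v1 is Velero's own API group for BackupStorageLocation. The mapping of callouts 9 and 10 to s3Url and publicUrl is an assumption. Options that are service-specific or mutually exclusive (s3Url and publicUrl, kmsKeyId and customerKeyEncryptionFile) are left commented out. Check the exact field set against your OADP version.

```shell
#!/bin/sh
# Write a sketch BSL manifest to bsl.yaml for review (quoted EOF: no expansion).
cat > bsl.yaml <<'EOF'
apiVersion: velero.io/v1
kind: BackupStorageLocation
metadata:
  name: default
  namespace: openshift-adp
spec:
  provider: aws                          # 1
  objectStorage:
    bucket: <bucket_name>                # 2
    prefix: <bucket_prefix>              # 3
  credential:                            # 4
    key: cloud                           # 5
    name: cloud-credentials              # 6
  config:
    region: <bucket_region>              # 7
    s3ForcePathStyle: "false"            # 8
    # s3Url: <s3_url>                    # 9 (MinIO, NooBaa)
    # publicUrl: <public_s3_url>         # 10 (MinIO, NooBaa)
    serverSideEncryption: AES256         # 11
    # kmsKeyId: alias/<KMS-key-alias-name>                 # 12, not with 13
    # customerKeyEncryptionFile: /credentials/customer-key # 13, not with 12
    signatureVersion: "4"                # 14
    profile: default                     # 15
    insecureSkipTLSVerify: "false"       # 16
    enableSharedConfig: "false"          # 17
    tagging: ""                          # 18
    checksumAlgorithm: CRC32             # 19
EOF
```

Velero's AWS plugin reads config values as strings, which is why the Boolean-like values are quoted.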

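4.7.1.3.7. Creating an OADP SSE-C encryption key for additional data security

Amazon Web Services (AWS) S3 applies server-side encryption with Amazon S3 managed keys (SSE-S3) as the base level of encryption for every bucket in Amazon S3.

OpenShift API for Data Protection (OADP) encrypts data by using SSL/TLS, HTTPS, and the velero-repo-credentials secret when transferring the data from a cluster to storage. To protect backup data in case AWS credentials are lost or stolen, apply an additional layer of encryption.

The velero-plugin-for-aws plugin provides several additional encryption methods. Review its configuration options and consider implementing additional encryption.

You can store your own encryption keys by using server-side encryption with customer-provided keys (SSE-C). This feature provides additional security if your AWS credentials become exposed.

Store encryption keys securely. Without the encryption key, you cannot recover the encrypted data and backups.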

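Prerequisites

- To make OADP mount a secret that contains your SSE-C key into the Velero pod at /credentials, use the default AWS secret name, cloud-credentials, and leave at least one of the following fields empty:

  - dpa.spec.backupLocations[].velero.credential

  - dpa.spec.snapshotLocations[].velero.credential

  This is a workaround for a known issue: https://issues.redhat.com/browse/OADP-3971.

- The following procedure contains an example of a spec:backupLocations block that does not specify credentials. This example triggers the OADP secret mounting.

- If you need the backup location to use credentials with a name other than cloud-credentials, you must add a snapshot location that does not contain a credential name, as in the following example. Because the example does not contain a credential name, the snapshot location uses cloud-credentials as its secret for taking snapshots.

Example of a snapshot location in a DPA without credentials specified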
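The example itself is not reproduced in this text. The following sketch shows a DataProtectionApplication that uses a custom backup-location secret together with a snapshot location that has no credential block. Because the snapshot location has no credential, cloud-credentials is used for snapshots. All names in angle brackets are hypothetical placeholders, and the output file name is arbitrary.

```shell
#!/bin/sh
# Write the sketch DPA to a local file for review (quoted EOF: no expansion).
cat > dpa-snapshot-location.yaml <<'EOF'
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: <dpa_name>
  namespace: openshift-adp
spec:
  backupLocations:
    - velero:
        provider: aws
        default: true
        objectStorage:
          bucket: <bucket_name>
          prefix: <prefix>
        config:
          region: <region>
          profile: default
        credential:
          key: cloud
          name: <custom_secret_name>
  snapshotLocations:
    - velero:
        provider: aws
        config:
          region: <region>
          profile: default
        # No credential block here, so cloud-credentials is used for snapshots.
EOF
```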

Procedure

Create an SSE-C encryption key:

Generate a random number and save it as a file named sse.key by running the following command:

$ dd if=/dev/urandom bs=1 count=32 > sse.key

Create an OpenShift Container Platform secret:

If you are installing and configuring OADP for the first time, create the AWS credential and encryption key secret at the same time by running the following command:

$ oc create secret generic cloud-credentials --namespace openshift-adp --from-file cloud=<path>/openshift_aws_credentials,customer-key=<path>/sse.key

If you are updating an existing installation, edit the values of the cloud-credential secret block of the DataProtectionApplication CR manifest.

Edit the value of the customerKeyEncryptionFile attribute in the backupLocations block of the DataProtectionApplication CR manifest.

Warning: You must restart the Velero pod to properly remount the secret credentials on an existing installation.

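After the installation is complete, you can back up and restore OpenShift Container Platform resources. The data saved in AWS S3 storage is encrypted with the new key, and you cannot download it from the AWS S3 console or API without the additional encryption key.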
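Before you store sse.key in the secret, confirm that it is exactly 32 bytes long. SSE-C uses a 256-bit key, and callout 13 of the backup storage location requires a 32-byte string. The following sketch generates the key only if it is missing, checks its size, and prints its base64 form, which is how the value appears in the secret's data field. It also writes a DataProtectionApplication backupLocations excerpt for the customerKeyEncryptionFile step. The /credentials/customer-key path is an assumption, derived from the /credentials mount point and the customer-key secret key used above. The excerpt file name is arbitrary.

```shell
#!/bin/sh
set -e
# Generate a 32-byte (256-bit) SSE-C key only if one does not already exist.
[ -f sse.key ] || dd if=/dev/urandom bs=1 count=32 of=sse.key 2>/dev/null

# SSE-C requires exactly 32 bytes; stop if the file has any other size.
size=$(wc -c < sse.key | tr -d ' ')
if [ "$size" -ne 32 ]; then
  echo "sse.key is $size bytes, expected 32" >&2
  exit 1
fi

# Base64 form of the key (avoid printing it where logs are shared).
base64 < sse.key | tr -d '\n'; echo

# DPA excerpt pointing Velero at the key mounted from cloud-credentials.
cat > dpa-sse-c.yaml <<'EOF'
spec:
  backupLocations:
    - velero:
        config:
          customerKeyEncryptionFile: /credentials/customer-key
          profile: default
EOF
```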

Verification

To verify that you cannot download the encrypted files without the additional key, create a test file, upload it, and then try to download it.

Create a test file by running the following command:

$ echo "encrypt me please" > test.txt

Upload the test file.

Try to download the file. In either the Amazon web console or the terminal, run the following command:

$ s3cmd get s3://<bucket>/test.txt test.txt

The download fails because the file is encrypted with an additional key.

Download the file with the additional encryption key.

Read the file contents by running the following command:

$ cat downloaded.txt

Example output

encrypt me please
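The upload command and the download-with-key command for this verification are not reproduced above. One way to run them is with the AWS CLI s3api commands, which accept an SSE-C key through --sse-customer-algorithm and --sse-customer-key. The CLI derives the key's MD5 digest itself. This is a sketch. The bucket name is a placeholder, and the run helper prints the commands unless DRY_RUN is set to an empty string.

```shell
#!/bin/sh
# Print commands instead of running them unless DRY_RUN is set to "".
run() {
  if [ -n "${DRY_RUN-1}" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}
BUCKET="${BUCKET:-my-oadp-bucket}"   # placeholder bucket name

# Upload test.txt encrypted with the customer-provided key in sse.key.
upload_test_file() {
  run aws s3api put-object --bucket "$BUCKET" --key test.txt --body test.txt \
    --sse-customer-algorithm AES256 --sse-customer-key fileb://sse.key
}

# Download it again; S3 rejects the request unless the same key is supplied.
download_test_file() {
  run aws s3api get-object --bucket "$BUCKET" --key test.txt \
    --sse-customer-algorithm AES256 --sse-customer-key fileb://sse.key \
    downloaded.txt
}

upload_test_file
download_test_file
```

The fileb:// prefix makes the CLI read sse.key as raw bytes, which matches the binary key that dd generated.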

4.7.1.3.7.1. Downloading a file with an SSE-C encryption key for files backed up by Velero

When you verify an SSE-C encryption key, you can also download files that Velero backed up by supplying the additional encryption key.

Procedure

Download a file backed up by Velero with the additional encryption key.
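The command for this step is not reproduced above. The following sketch uses the same s3api options and assumes Velero's usual object layout of <prefix>/backups/<backup_name>/<backup_name>.tar.gz in the bucket. The bucket, prefix, and backup name are placeholders. The output file name velero_download.tar.gz is arbitrary. As before, commands are only printed unless DRY_RUN is set to an empty string.

```shell
#!/bin/sh
# Print commands instead of running them unless DRY_RUN is set to "".
run() {
  if [ -n "${DRY_RUN-1}" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}
BUCKET="${BUCKET:-my-oadp-bucket}"  # placeholder
PREFIX="${PREFIX-velero}"           # placeholder; set PREFIX= for no prefix
BACKUP="${BACKUP:-my-backup}"       # placeholder backup name

# Object key of the backup tarball, omitting the prefix when it is empty.
backup_key() {
  echo "${PREFIX:+$PREFIX/}backups/$BACKUP/$BACKUP.tar.gz"
}

run aws s3api get-object --bucket "$BUCKET" --key "$(backup_key)" \
  --sse-customer-algorithm AES256 --sse-customer-key fileb://sse.key \
  velero_download.tar.gz
```

After a real download, tar -tzf velero_download.tar.gz lists the backed-up resources in the archive.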

4.7.1.4. Configuring the Data Protection Application

You can configure the Data Protection Application by setting Velero resource allocations or by enabling self-signed CA certificates.

4.7.1.4.1. Setting Velero CPU and memory resource allocations

You set the CPU and memory resource allocations for the Velero pod by editing the DataProtectionApplication custom resource (CR) manifest.

Prerequisites

- You must have the OpenShift API for Data Protection (OADP) Operator installed.

Procedure

Edit the values in the spec.configuration.velero.podConfig.resourceAllocations block of the DataProtectionApplication CR manifest.

Note: Kopia is an option in OADP 1.3 and later releases. You can use Kopia for file system backups. For Data Mover operations that use the built-in Data Mover, Kopia is the only option.

Kopia is more resource intensive than Restic, so you might need to adjust the CPU and memory requirements accordingly.

Use the nodeSelector field to select which nodes can run the node agent. The nodeSelector field is the simplest recommended form of node selection constraint. Any label that you specify must match the labels on each node.

For more details, see Configuring node agents and node labels.
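This procedure does not show the DataProtectionApplication excerpt. The following sketch shows one possible version. The CPU and memory figures are placeholders to tune, not recommendations. The setting uploaderType: kopia selects Kopia for file system backups. The node-role.kubernetes.io/nodeAgent label under nodeSelector is a hypothetical example, and it must match a label that you have applied to the target nodes. The output file name is arbitrary.

```shell
#!/bin/sh
# Write a sketch DPA excerpt for Velero resources and node agent placement.
cat > dpa-resources.yaml <<'EOF'
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: <dpa_name>
  namespace: openshift-adp
spec:
  configuration:
    velero:
      podConfig:
        resourceAllocations:     # placeholder values; tune for your workload
          limits:
            cpu: "1"
            memory: 1024Mi
          requests:
            cpu: 200m
            memory: 256Mi
    nodeAgent:
      enable: true
      uploaderType: kopia        # Kopia may need more CPU/memory than Restic
      podConfig:
        nodeSelector:
          node-role.kubernetes.io/nodeAgent: ""   # hypothetical node label
EOF
```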

4.7.1.4.2. Enabling self-signed CA certificates