This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 1. Cluster monitoring

1.1. About cluster monitoring

OpenShift Container Platform includes a pre-configured, pre-installed, and self-updating monitoring stack that is based on the Prometheus open source project and its wider ecosystem. It monitors cluster components and includes a set of alerts that immediately notify the cluster administrator about any occurring problems, as well as a set of Grafana dashboards. The cluster monitoring stack is only supported for monitoring OpenShift Container Platform clusters.

To ensure compatibility with future OpenShift Container Platform updates, configuring only the specified monitoring stack options is supported.

1.1.1. Stack components and monitored targets

The monitoring stack includes these components:

| Component | Description |
|---|---|
| Cluster Monitoring Operator | The OpenShift Container Platform Cluster Monitoring Operator (CMO) is the central component of the stack. It controls the deployed monitoring components and resources and ensures that they are always up to date. |
| Prometheus Operator | The Prometheus Operator (PO) creates, configures, and manages Prometheus and Alertmanager instances. It also automatically generates monitoring target configurations based on familiar Kubernetes label queries. |
| Prometheus | Prometheus is the systems and service monitoring system on which the monitoring stack is based. |
| Prometheus Adapter | The Prometheus Adapter exposes the cluster resource metrics API for horizontal pod autoscaling. Resource metrics are CPU and memory utilization. |
| Alertmanager | The Alertmanager service handles alerts sent by Prometheus. |
| Grafana | The Grafana analytics platform provides dashboards for analyzing and visualizing the metrics. The Grafana instance that is provided with the monitoring stack, along with its dashboards, is read-only. |

All the components of the monitoring stack are monitored by the stack and are automatically updated when OpenShift Container Platform is updated.

In addition to the components of the stack itself, the monitoring stack monitors:

- CoreDNS

- Elasticsearch (if Logging is installed)

- Etcd

- Fluentd (if Logging is installed)

- HAProxy

- Image registry

- Kubelets

- Kubernetes apiserver

- Kubernetes controller manager

- Kubernetes scheduler

- Metering (if Metering is installed)

- OpenShift apiserver

- OpenShift controller manager

- Operator Lifecycle Manager (OLM)

- Telemeter client

Each OpenShift Container Platform component is responsible for its monitoring configuration. For problems with a component’s monitoring, open a bug in Bugzilla against that component, not against the general monitoring component.

Other OpenShift Container Platform framework components might be exposing metrics as well. For details, see their respective documentation.

1.2. Configuring the monitoring stack

Prior to OpenShift Container Platform 4, the Prometheus Cluster Monitoring stack was configured through the Ansible inventory file. For that purpose, the stack exposed a subset of its available configuration options as Ansible variables. You configured the stack before you installed OpenShift Container Platform.

In OpenShift Container Platform 4, Ansible is no longer the primary technology for installing OpenShift Container Platform. The installation program provides only a small number of configuration options before installation. Configuring most OpenShift framework components, including the Prometheus Cluster Monitoring stack, happens post-installation.

This section explains what configuration is supported, shows how to configure the monitoring stack, and demonstrates several common configuration scenarios.

Prerequisites

- The monitoring stack imposes additional resource requirements. Consult the computing resources recommendations in Scaling the Cluster Monitoring Operator and verify that you have sufficient resources.

1.2.1. Maintenance and support

The supported way of configuring OpenShift Container Platform Monitoring is to use the options described in this document. Do not use other configurations, as they are unsupported. Configuration paradigms might change across Prometheus releases, and such cases can only be handled gracefully if all configuration possibilities are controlled. If you use configurations other than those described in this section, your changes will disappear because the cluster-monitoring-operator reconciles any differences. By default and by design, the Operator reverts everything to the defined state.

Explicitly unsupported cases include:

- Creating additional ServiceMonitor objects in the openshift-* namespaces. This extends the targets the cluster monitoring Prometheus instance scrapes, which can cause collisions and load differences that cannot be accounted for. These factors might make the Prometheus setup unstable.

- Creating unexpected ConfigMap objects or PrometheusRule objects. This causes the cluster monitoring Prometheus instance to include additional alerting and recording rules.

- Modifying resources of the stack. The Prometheus Monitoring Stack ensures its resources are always in the state it expects them to be. If they are modified, the stack will reset them.

- Using resources of the stack for your purposes. The resources created by the Prometheus Cluster Monitoring stack are not meant to be used by any other resources, as there are no guarantees about their backward compatibility.

- Stopping the Cluster Monitoring Operator from reconciling the monitoring stack.

- Adding new alerting rules.

- Modifying the monitoring stack Grafana instance.

1.2.2. Creating cluster monitoring ConfigMap

To configure the Prometheus Cluster Monitoring stack, you must create the cluster monitoring ConfigMap.

Prerequisites

- An installed oc CLI tool

- Administrative privileges for the cluster

Procedure

- Check whether the cluster-monitoring-config ConfigMap object exists:

      $ oc -n openshift-monitoring get configmap cluster-monitoring-config

- If it does not exist, create it:

      $ oc -n openshift-monitoring create configmap cluster-monitoring-config

- Start editing the cluster-monitoring-config ConfigMap:

      $ oc -n openshift-monitoring edit configmap cluster-monitoring-config

- Create the data section if it does not exist yet.
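
As a rough sketch of the result, the edited ConfigMap would typically look like the following once the data section exists. The object name and namespace come from the commands above; representing the initially empty configuration as an empty config.yaml literal block is an assumption.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  # Component settings are added under this key in the later procedures.
  # Leaving the block empty here is an assumption about the starting state.
  config.yaml: |
```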

1.2.3. Configuring the cluster monitoring stack

You can configure the Prometheus Cluster Monitoring stack using ConfigMaps. ConfigMaps configure the Cluster Monitoring Operator, which in turn configures components of the stack.

Prerequisites

- Make sure you have the cluster-monitoring-config ConfigMap object with the data/config.yaml section.

Procedure

- Start editing the cluster-monitoring-config ConfigMap:

      $ oc -n openshift-monitoring edit configmap cluster-monitoring-config

- Put your configuration under data/config.yaml as the key-value pair <component_name>: <component_configuration>, substituting the component key and its configuration accordingly. For example, to configure a Persistent Volume Claim (PVC) for Prometheus, use the prometheusK8s key, which identifies the Prometheus component, and define its configuration in the lines nested under that key.

- Save the file to apply the changes. The pods affected by the new configuration are restarted automatically.
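
The procedure above can be sketched as the following ConfigMap, which configures a PVC for Prometheus. The prometheusK8s key and the volumeClaimTemplate field are named in this document; the storage class name and the requested size are hypothetical values chosen only for illustration.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    # prometheusK8s selects the Prometheus component.
    prometheusK8s:
      # Everything nested below is that component's configuration.
      volumeClaimTemplate:
        spec:
          storageClassName: example-storage-class  # hypothetical name
          resources:
            requests:
              storage: 40Gi  # illustrative size, adjust to your needs
```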

1.2.4. Configurable monitoring components

This table shows the monitoring components you can configure and the keys used to specify the components in the ConfigMap:

| Component | Key |
|---|---|
| Prometheus Operator | |
| Prometheus | prometheusK8s |
| Alertmanager | |
| kube-state-metrics | |
| Grafana | |
| Telemeter Client | |
| Prometheus Adapter | |

From this list, only Prometheus and Alertmanager have extensive configuration options. All other components usually provide only the nodeSelector field for being deployed on a specified node.
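
As a hedged sketch, the following config.yaml fragment shows how the component keys might appear side by side. Only prometheusK8s is confirmed elsewhere in this document. The other key names are assumptions based on the upstream Cluster Monitoring Operator configuration and should be verified against your cluster version before use.

```yaml
data:
  config.yaml: |
    prometheusOperator: {}      # Prometheus Operator (assumed key)
    prometheusK8s: {}           # Prometheus (key used in this document)
    alertmanagerMain: {}        # Alertmanager (assumed key)
    kubeStateMetrics: {}        # kube-state-metrics (assumed key)
    grafana: {}                 # Grafana (assumed key)
    telemeterClient: {}         # Telemeter Client (assumed key)
    k8sPrometheusAdapter: {}    # Prometheus Adapter (assumed key)
```

Each empty map is a placeholder for that component's settings, such as a nodeSelector.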

1.2.5. Moving monitoring components to different nodes

You can move any of the monitoring stack components to specific nodes.

Prerequisites

- Make sure you have the cluster-monitoring-config ConfigMap object with the data/config.yaml section.

Procedure

- Start editing the cluster-monitoring-config ConfigMap:

      $ oc -n openshift-monitoring edit configmap cluster-monitoring-config

- Specify the nodeSelector constraint for the component under data/config.yaml, using a map of <node key>: <node value> pairs that specifies the destination node. Often, only a single key-value pair is used. The component can only run on a node that has each of the specified key-value pairs as labels. The node can have additional labels as well. For example, to move a component to the node that is labeled foo: bar, specify foo: bar as its nodeSelector map.

- Save the file to apply the changes. The components affected by the new configuration are moved to new nodes automatically.
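
A minimal sketch of the nodeSelector step, assuming you want to move only Prometheus to the node labeled foo: bar. The prometheusK8s key is the one this document uses for Prometheus; other components would follow the same pattern under their own keys.

```yaml
data:
  config.yaml: |
    prometheusK8s:
      # The component runs only on nodes carrying every label listed here.
      nodeSelector:
        foo: bar
```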

Additional resources

- See the Kubernetes documentation for details on the nodeSelector constraint.

1.2.6. Configuring persistent storage

Running cluster monitoring with persistent storage means that your metrics are stored to a Persistent Volume and can survive a pod being restarted or recreated. This is ideal if you require your metrics or alerting data to be guarded from data loss. For production environments, it is highly recommended to configure persistent storage.

Prerequisites

- Dedicate sufficient persistent storage to ensure that the disk does not become full. How much storage you need depends on the number of pods. For information on system requirements for persistent storage, see Prometheus database storage requirements.

- Unless you enable dynamically-provisioned storage, make sure you have a Persistent Volume (PV) ready to be claimed by the Persistent Volume Claim (PVC), one PV for each replica. Since Prometheus has two replicas and Alertmanager has three replicas, you need five PVs to support the entire monitoring stack.

- Use the block type of storage.

1.2.6.1. Configuring a persistent volume claim

For Prometheus or Alertmanager to use a persistent volume (PV), you must first configure a persistent volume claim (PVC).

Prerequisites

- Make sure you have the necessary storage class configured.

- Make sure you have the cluster-monitoring-config ConfigMap object with the data/config.yaml section.

Procedure

- Edit the cluster-monitoring-config ConfigMap:

      $ oc -n openshift-monitoring edit configmap cluster-monitoring-config

- Put your PVC configuration for the component under data/config.yaml. See the Kubernetes documentation on PersistentVolumeClaims for information on how to specify volumeClaimTemplate. For example, you can configure a PVC that claims any configured OpenShift Container Platform block PV as persistent storage for Prometheus, and another one for Alertmanager.

- Save the file to apply the changes. The pods affected by the new configuration are restarted automatically and the new storage configuration is applied.
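
As an illustration of the PVC step for Alertmanager, the fragment below is a sketch under stated assumptions: the alertmanagerMain key is assumed from the upstream Cluster Monitoring Operator configuration, and the storage class name and size are hypothetical. A Prometheus PVC would use the same structure under prometheusK8s.

```yaml
data:
  config.yaml: |
    alertmanagerMain:  # assumed key for the Alertmanager component
      volumeClaimTemplate:
        spec:
          storageClassName: example-storage-class  # hypothetical name
          resources:
            requests:
              storage: 10Gi  # illustrative size
```

Because a claim is created per replica, a template like this one results in one PVC for each Alertmanager replica.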

1.2.6.2. Modifying retention time for Prometheus metrics data

By default, the Prometheus Cluster Monitoring stack configures the retention time for Prometheus data to be 15 days. You can modify the retention time to change how soon the data is deleted.

Prerequisites

- Make sure you have the cluster-monitoring-config ConfigMap object with the data/config.yaml section.

Procedure

- Start editing the cluster-monitoring-config ConfigMap:

      $ oc -n openshift-monitoring edit configmap cluster-monitoring-config

- Put your retention time configuration under data/config.yaml. Specify the time as a number directly followed by ms (milliseconds), s (seconds), m (minutes), h (hours), d (days), w (weeks), or y (years). For example, specify 24h to configure a retention time of 24 hours.

- Save the file to apply the changes. The pods affected by the new configuration are restarted automatically.
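
A short sketch of the 24-hour retention example. It assumes the setting is a retention field under the prometheusK8s key, which matches the Cluster Monitoring Operator configuration as commonly documented; verify the field name for your version.

```yaml
data:
  config.yaml: |
    prometheusK8s:
      # Number directly followed by a unit: ms, s, m, h, d, w, or y.
      retention: 24h
```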

1.2.7. Configuring Alertmanager

The Prometheus Alertmanager is a component that manages incoming alerts, including:

- Alert silencing

- Alert inhibition

- Alert aggregation

- Reliable deduplication of alerts

- Grouping alerts

- Sending grouped alerts as notifications through receivers such as email, PagerDuty, and HipChat

1.2.7.1. Alertmanager default configuration

The default configuration of the OpenShift Container Platform Monitoring Alertmanager cluster is this:

OpenShift Container Platform monitoring ships with the Watchdog alert that always triggers by default to ensure the availability of the monitoring infrastructure.

1.2.7.2. Applying custom Alertmanager configuration

You can overwrite the default Alertmanager configuration by editing the alertmanager-main secret inside the openshift-monitoring namespace.

Prerequisites

- An installed jq tool for processing JSON data

Procedure

- Print the currently active Alertmanager configuration into the file alertmanager.yaml:

      $ oc -n openshift-monitoring get secret alertmanager-main --template='{{ index .data "alertmanager.yaml" }}' |base64 -d > alertmanager.yaml

- Change the configuration in the file alertmanager.yaml to your new configuration. For example, you can configure PagerDuty for notifications so that alerts of critical severity fired by the example-app service are sent using the team-frontend-page receiver, which means that these alerts are paged to a chosen person.

- Apply the new configuration in the file:

      $ oc -n openshift-monitoring create secret generic alertmanager-main --from-file=alertmanager.yaml --dry-run -o=yaml | oc -n openshift-monitoring replace secret --filename=-
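
A hedged sketch of an alertmanager.yaml that routes critical alerts from the example-app service to PagerDuty. The service name, severity, and the team-frontend-page receiver come from the description above. The timer values, the default receiver, and the service_key placeholder are assumptions for illustration and must be replaced with your own settings.

```yaml
global:
  resolve_timeout: 5m        # illustrative value
route:
  group_wait: 30s            # illustrative value
  group_interval: 5m         # illustrative value
  repeat_interval: 12h       # illustrative value
  receiver: default          # fallback receiver (assumed name)
  routes:
  - match:
      service: example-app
    routes:
    # Only critical alerts from example-app are paged.
    - match:
        severity: critical
      receiver: team-frontend-page
receivers:
- name: default
- name: team-frontend-page
  pagerduty_configs:
  - service_key: "<your_service_key>"  # placeholder, obtain from PagerDuty
```

Alerts that match neither route fall through to the default receiver, which in this sketch sends no notifications.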

Additional resources

- See the PagerDuty official site for more information on PagerDuty.

- See the PagerDuty Prometheus Integration Guide to learn how to retrieve the service_key.

- See Alertmanager configuration for configuring alerting through different alert receivers.

1.2.7.3. Alerting rules

OpenShift Container Platform Cluster Monitoring by default ships with a set of pre-defined alerting rules.

Note that:

- The default alerting rules are used specifically for the OpenShift Container Platform cluster and nothing else. For example, you get alerts for a persistent volume in the cluster, but you do not get them for persistent volume in your custom namespace.

- Currently you cannot add custom alerting rules.

- Some alerting rules have identical names. This is intentional. They are sending alerts about the same event with different thresholds, with different severity, or both.

- With the inhibition rules, the lower severity is inhibited when the higher severity is firing.

1.2.7.4. Listing acting alerting rules

You can list the alerting rules that currently apply to the cluster.

Procedure

- Configure the necessary port forwarding:

      $ oc -n openshift-monitoring port-forward svc/prometheus-operated 9090

- Fetch the JSON object containing acting alerting rules and their properties.

Additional resources

- See also the Alertmanager documentation.

Next steps

- Manage cluster alerts.

- Learn about Telemetry and, if necessary, opt out of it.

1.3. Managing cluster alerts

OpenShift Container Platform 4 provides a Web interface to the Alertmanager, which enables you to manage alerts. This section demonstrates how to use the Alerting UI.

1.3.1. Contents of the Alerting UI

This section shows and explains the contents of the Alerting UI, a Web interface to the Alertmanager.

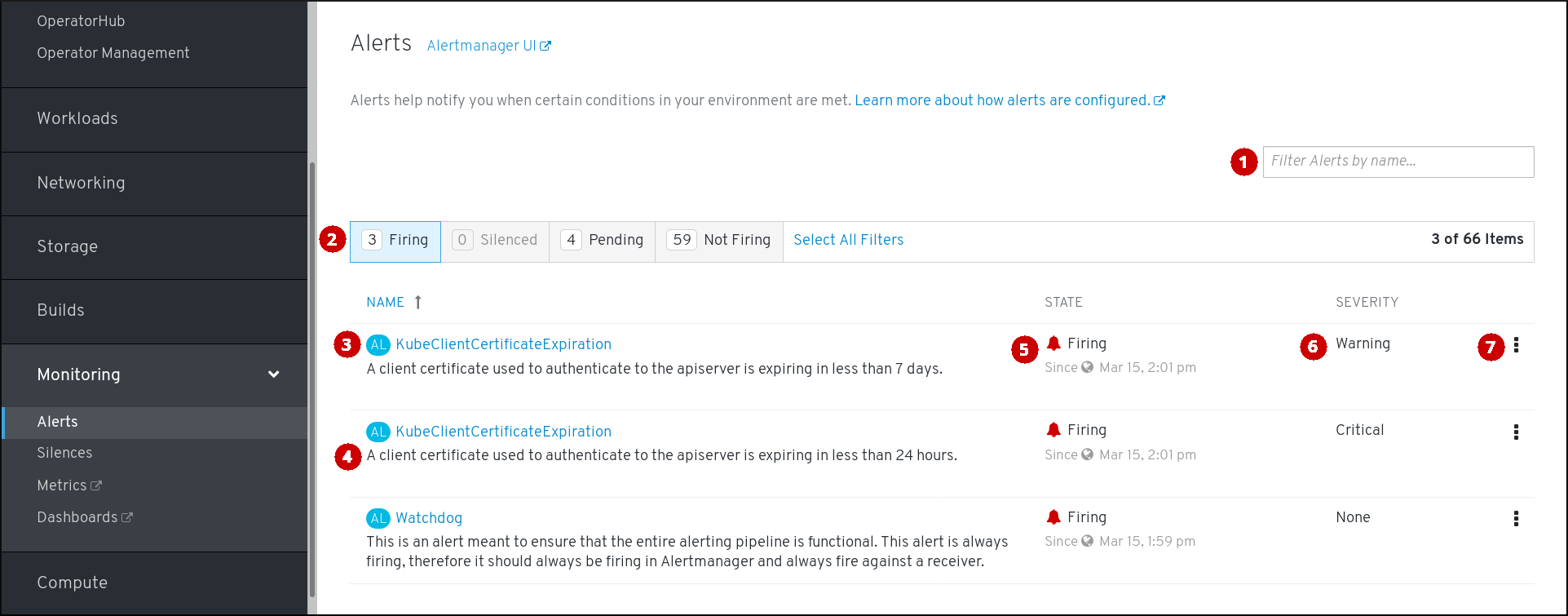

The two main pages of the Alerting UI are the Alerts and the Silences pages.

The Alerts page is located in Monitoring → Alerts of the OpenShift Container Platform web console. The page includes:

- Filtering alerts by their names.

- Filtering the alerts by their states. To fire, some alerts need a certain condition to be true for the duration of a timeout. If a condition of an alert is currently true, but the timeout has not been reached, such an alert is in the Pending state.

- Alert name.

- Description of an alert.

- Current state of the alert and when the alert went into this state.

- Value of the Severity label of the alert.

- Actions you can do with the alert.

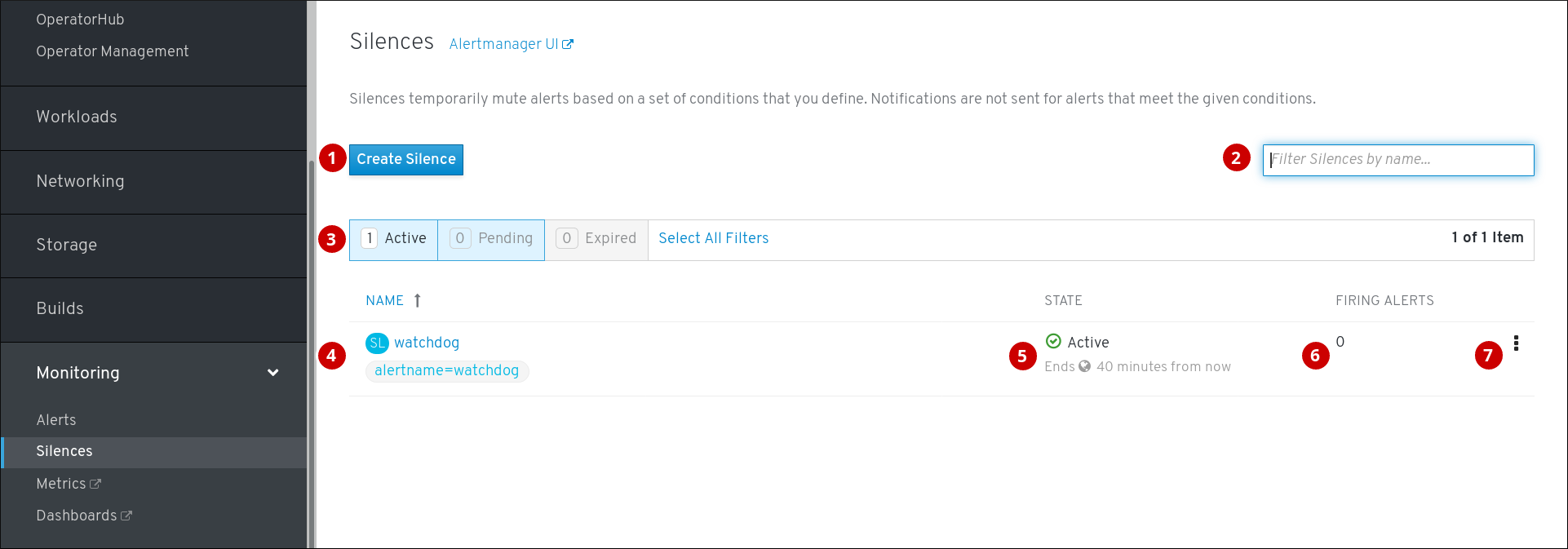

The Silences page is located in Monitoring → Silences of the OpenShift Container Platform web console. The page includes:

- Creating a silence for an alert.

- Filtering silences by their name.

- Filtering silences by their states. If a silence is pending, it is currently not active because it is scheduled to start at a later time. If a silence expired, it is no longer active because it has reached its end time.

- Description of a silence. It includes the specification of alerts that it matches.

- Current state of the silence. For active silences, it shows when it ends, and for pending silences, it shows when it starts.

- Number of alerts that are being silenced by the silence.

- Actions you can do with a silence.

Additionally, next to the title of each of these pages is a link to the old Alertmanager interface.

1.3.2. Getting information about alerts and alerting rules

You can find an alert and see information about it or its governing alerting rule.

Procedure

- Open the OpenShift Container Platform web console and navigate to Monitoring → Alerts.

- Optional: Filter the alerts by name using the Filter alerts by name field.

- Optional: Filter the alerts by state using one or more of the state buttons Firing, Silenced, Pending, Not firing.

- Optional: Sort the alerts by clicking one or more of the Name, State, and Severity column headers.

After you see the alert, you can see either details of the alert or details of its governing alerting rule.

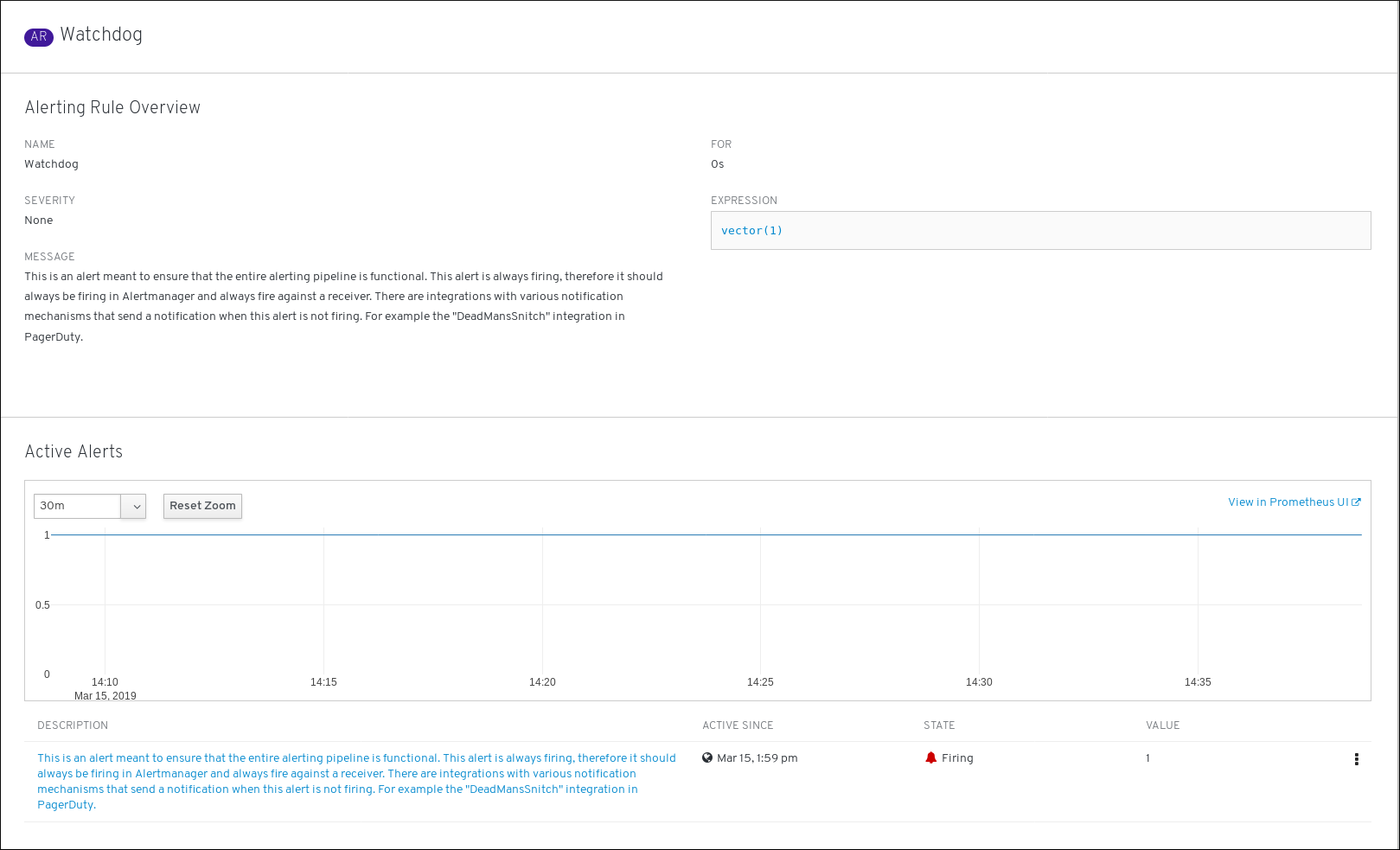

To see alert details, click on the name of the alert. This is the page with alert details:

The page shows a graph of the alert's time series. It also has information about the alert, including:

- A link to its governing alerting rule

- Description of the alert

To see alerting rule details, click the button in the last column and select View Alerting Rule. This is the page with alerting rule details:

The page has information about the alerting rule, including:

- Alerting rule name, severity, and description

- The expression that defines the condition for firing the alert

- The time for which the condition should be true for an alert to fire

- Graph for each alert governed by the alerting rule, showing the value with which the alert is firing

- Table of all alerts governed by the alerting rule

1.3.3. Silencing alerts

You can either silence a specific alert or silence alerts that match a specification that you define.

Procedure

To silence a set of alerts by creating an alert specification:

- Navigate to the Monitoring → Silences page of the OpenShift Container Platform web console.

- Click Create Silence.

- Populate the Create Silence form.

- To create the silence, click Create.

To silence a specific alert:

- Navigate to the Monitoring → Alerts page of the OpenShift Container Platform web console.

- For the alert that you want to silence, click the button in the last column and click Silence Alert. The Create Silence form appears with a prepopulated specification of the chosen alert.

- Optional: Modify the silence.

- To create the silence, click Create.

1.3.4. Getting information about silences

You can find a silence and view its details.

Procedure

- Open the OpenShift Container Platform web console and navigate to Monitoring → Silences.

- Optional: Filter the silences by name using the Filter Silences by name field.

- Optional: Filter the silences by state using one or more of the state buttons Active, Pending, Expired.

- Optional: Sort the silences by clicking one or more of the Name, State, and Firing alerts column headers.

After you see the silence, you can click its name to see the details, including:

- Alert specification

- State

- Start time

- End time

- Number and list of firing alerts

1.3.5. Editing silences

You can edit a silence, which will expire the existing silence and create a new silence with the changed configuration.

Procedure

- Navigate to the Monitoring → Silences screen.

- For the silence you want to modify, click the button in the last column and click Edit silence. Alternatively, you can click Actions → Edit Silence in the Silence Overview screen for a particular silence.

- In the Edit Silence screen, enter your changes and click the Save button. This will expire the existing silence and create one with the chosen configuration.

1.3.6. Expiring silences

You can expire a silence. Expiring a silence deactivates it forever.

Procedure

- Navigate to the Monitoring → Silences page.

- For the silence you want to expire, click the button in the last column and click Expire Silence. Alternatively, you can click the Actions → Expire Silence button in the Silence Overview page for a particular silence.

- Confirm by clicking Expire Silence. This expires the silence.

1.4. Accessing Prometheus, Alertmanager, and Grafana

To work with data gathered by the monitoring stack, you might want to use the Prometheus, Alertmanager, and Grafana interfaces. They are available by default.

1.4.1. Accessing Prometheus, Alerting UI, and Grafana using the Web console

You can access Prometheus, Alerting UI, and Grafana web UIs using a Web browser through the OpenShift Container Platform Web console.

The Alerting UI accessed in this procedure is the new interface for Alertmanager.

Prerequisites

- Authentication is performed against the OpenShift Container Platform identity and uses the same credentials or means of authentication as is used elsewhere in OpenShift Container Platform. You must use a role that has read access to all namespaces, such as the cluster-monitoring-view cluster role.

Procedure

- Navigate to the OpenShift Container Platform Web console and authenticate.

- To access Prometheus, navigate to "Monitoring" → "Metrics".

- To access the Alerting UI, navigate to "Monitoring" → "Alerts" or "Monitoring" → "Silences".

- To access Grafana, navigate to "Monitoring" → "Dashboards".

1.4.2. Accessing Prometheus, Alertmanager, and Grafana directly

You can access Prometheus, Alertmanager, and Grafana web UIs using the oc tool and a Web browser.

The Alertmanager UI accessed in this procedure is the old interface for Alertmanager.

Prerequisites

- Authentication is performed against the OpenShift Container Platform identity and uses the same credentials or means of authentication as is used elsewhere in OpenShift Container Platform. You must use a role that has read access to all namespaces, such as the cluster-monitoring-view cluster role.

Procedure

- Run:

      $ oc -n openshift-monitoring get routes
      NAME HOST/PORT ...
      alertmanager-main alertmanager-main-openshift-monitoring.apps.url.openshift.com ...
      grafana grafana-openshift-monitoring.apps.url.openshift.com ...
      prometheus-k8s prometheus-k8s-openshift-monitoring.apps.url.openshift.com ...

- Prepend https:// to the address. You cannot access the web UIs over an unencrypted connection. For example, this is the resulting URL for Alertmanager:

      https://alertmanager-main-openshift-monitoring.apps.url.openshift.com

- Navigate to the address using a Web browser and authenticate.

Additional resources

- For documentation on the new interface for Alertmanager, see Managing cluster alerts.