This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 4. Working with nodes

4.1. Viewing and listing the nodes in your OpenShift Container Platform cluster

You can list all the nodes in your cluster to obtain information such as status, age, memory usage, and details about the nodes.

When you perform node management operations, the CLI interacts with node objects that are representations of actual node hosts. The master uses the information from node objects to validate nodes with health checks.

4.1.1. About listing all the nodes in a cluster

You can get detailed information on the nodes in the cluster.

The following command lists all nodes:

$ oc get nodes

The -o wide option provides additional information on all nodes:

$ oc get nodes -o wide

The following command lists information about a single node:

$ oc get node <node>

The STATUS column in the output of these commands can show nodes with the following conditions:

Table 4.1. Node Conditions

| Condition | Description |
|---|---|
| Ready | The node reports its own readiness to the apiserver by returning True. |
| NotReady | One of the underlying components, such as the container runtime or network, is experiencing issues or is not yet configured. |
| SchedulingDisabled | Pods cannot be scheduled for placement on the node. |
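For scripting, the following Python sketch shows how the combined values in the STATUS column can be interpreted. It parses captured `oc get nodes` output, assuming the NAME STATUS ROLES AGE VERSION column layout, and reports nodes that are NotReady or have SchedulingDisabled set. The sample rows are made up, and the helper names are hypothetical, not part of oc.

```python
def summarize_node_status(output: str) -> dict[str, set[str]]:
    """Map each node name to the set of conditions in its STATUS column.

    Assumes whitespace-separated columns with NAME first and STATUS second,
    as in the default `oc get nodes` output.
    """
    rows = [line.split() for line in output.strip().splitlines() if line.strip()]
    result: dict[str, set[str]] = {}
    for fields in rows[1:]:  # skip the header row
        name, status = fields[0], fields[1]
        # STATUS can combine conditions, for example "Ready,SchedulingDisabled".
        result[name] = set(status.split(","))
    return result


def nodes_needing_attention(output: str) -> list[str]:
    """Return nodes that are NotReady or have scheduling disabled."""
    return sorted(
        name
        for name, conditions in summarize_node_status(output).items()
        if {"NotReady", "SchedulingDisabled"} & conditions
    )


sample = """
NAME    STATUS                        ROLES    AGE   VERSION
node1   Ready                         worker   1d    v1.13.4+b626c2fe1
node2   NotReady,SchedulingDisabled   worker   1d    v1.13.4+b626c2fe1
node3   Ready,SchedulingDisabled      master   1d    v1.13.4+b626c2fe1
"""
print(nodes_needing_attention(sample))  # ['node2', 'node3']
```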

The following command provides more detailed information about a specific node, including the reason for the current condition:

$ oc describe node <node>

The output includes the following information:

- The name of the node.
- The role of the node, either master or worker.
- The labels applied to the node.
- The annotations applied to the node.
- The taints applied to the node.
- Node conditions.
- The IP address and host name of the node.
- The pod resources and allocatable resources.
- Information about the node host.
- The pods on the node.
- The events reported by the node.

4.1.2. Listing pods on a node in your cluster

You can list all the pods on a specific node.

Procedure

To list all pods on one or more nodes:

$ oc describe node <node1> <node2>

For example:

$ oc describe node ip-10-0-128-218.ec2.internal

To list all pods on nodes selected by label:

$ oc describe node --selector=<node_selector>
$ oc describe node -l=<node_selector>

For example:

$ oc describe node --selector=beta.kubernetes.io/os
$ oc describe node -l node-role.kubernetes.io/worker

4.1.3. Viewing memory and CPU usage statistics on your nodes

You can display usage statistics about nodes, which provide the runtime environments for containers. These usage statistics include CPU, memory, and storage consumption.

Prerequisites

- You must have cluster-reader permission to view the usage statistics.
- Metrics must be installed to view the usage statistics.

Procedure

To view the usage statistics:

$ oc adm top nodes

To view the usage statistics for nodes with labels:

$ oc adm top node --selector=''

You must choose the selector (label query) to filter on. The selector supports the =, ==, and != operators.
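The selector semantics can be illustrated with a short Python sketch that evaluates an equality-based selector against a node's labels. It is a simplified model, not the oc implementation: it understands only comma-separated key=value, key==value, key!=value, and bare key terms (the bare form is what --selector=beta.kubernetes.io/os in the earlier example relies on), and the function name is hypothetical.

```python
def matches_selector(selector: str, labels: dict[str, str]) -> bool:
    """Evaluate a comma-separated, equality-based label selector.

    All terms must match (they are ANDed), and an empty selector matches
    every node. Supported terms: key=value, key==value, key!=value, and a
    bare key, which only requires the label to exist.
    """
    terms = [t.strip() for t in selector.split(",") if t.strip()]
    for term in terms:
        if "!=" in term:
            key, value = (s.strip() for s in term.split("!=", 1))
            # key!=value also matches nodes that do not have the key at all.
            if labels.get(key) == value:
                return False
        elif "=" in term:
            sep = "==" if "==" in term else "="
            key, value = (s.strip() for s in term.split(sep, 1))
            if labels.get(key) != value:
                return False
        elif term not in labels:
            return False
    return True


node = {"beta.kubernetes.io/os": "linux", "node-role.kubernetes.io/worker": ""}
print(matches_selector("beta.kubernetes.io/os", node))             # True
print(matches_selector("beta.kubernetes.io/os=linux", node))       # True
print(matches_selector("beta.kubernetes.io/os!=linux", node))      # False
print(matches_selector("node-role.kubernetes.io/master==", node))  # False
print(matches_selector("", node))                                  # True
```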

4.2. Working with nodes

As an administrator, you can perform a number of tasks to make your clusters more efficient.

4.2.1. Understanding how to evacuate pods on nodes

Evacuating pods allows you to migrate all or selected pods from a given node or nodes.

You can only evacuate pods backed by a replication controller. The replication controller creates new pods on other nodes and removes the existing pods from the specified node(s).

Bare pods, meaning those not backed by a replication controller, are unaffected by default. You can evacuate a subset of pods by specifying a pod-selector. Pod selectors are based on labels, so all the pods with the specified label will be evacuated.

Nodes must first be marked unschedulable to perform pod evacuation.

$ oc adm cordon <node1>
NAME      STATUS                        ROLES     AGE       VERSION
<node1>   NotReady,SchedulingDisabled   worker    1d        v1.13.4+b626c2fe1

Use oc adm uncordon to mark the node as schedulable when done.

$ oc adm uncordon <node1>

The following command evacuates all or selected pods on one or more nodes:

$ oc adm drain <node1> <node2> [--pod-selector=<pod_selector>]

The following command forces deletion of bare pods using the --force option. When set to true, deletion continues even if there are pods not managed by a replication controller, ReplicaSet, job, daemonset, or StatefulSet:

$ oc adm drain <node1> <node2> --force=true

To set a period of time in seconds for each pod to terminate gracefully, use --grace-period. If the value is negative, the default value specified in the pod is used:

$ oc adm drain <node1> <node2> --grace-period=-1

The following command ignores DaemonSet-managed pods using the --ignore-daemonsets flag set to true:

$ oc adm drain <node1> <node2> --ignore-daemonsets=true

To set the length of time to wait before giving up, use the --timeout flag. A value of 0 sets an infinite length of time:

$ oc adm drain <node1> <node2> --timeout=5s

To delete pods even if some of them use emptyDir volumes, set the --delete-local-data flag to true. Local data is deleted when the node is drained:

$ oc adm drain <node1> <node2> --delete-local-data=true

The following command lists objects that will be migrated without actually performing the evacuation, using the --dry-run option set to true:

$ oc adm drain <node1> <node2> --dry-run=true

Instead of specifying specific node names (for example, <node1> <node2>), you can use the --selector=<node_selector> option to evacuate pods on selected nodes.
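The flag descriptions above suggest which pods can stop a drain. The following Python sketch models those rules only; it is not how oc adm drain is implemented, it ignores grace periods, timeouts, and pod selectors, and the Pod record and plan_drain function are hypothetical. Each pod records the kind of controller that owns it (None for a bare pod) and whether it uses an emptyDir volume.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pod:
    name: str
    owner_kind: Optional[str]  # e.g. "ReplicaSet" or "DaemonSet"; None for a bare pod
    uses_empty_dir: bool = False


def plan_drain(pods, force=False, ignore_daemonsets=False, delete_local_data=False):
    """Split pods into (to_evict, skipped, blockers) per the drain flags.

    A non-empty blockers list means the drain would stop until the matching
    flag (--ignore-daemonsets, --force, or --delete-local-data) is set.
    """
    to_evict, skipped, blockers = [], [], []
    for pod in pods:
        if pod.owner_kind == "DaemonSet":
            # DaemonSet-managed pods are either ignored (left in place) or block.
            (skipped if ignore_daemonsets else blockers).append(pod.name)
        elif pod.owner_kind is None and not force:
            blockers.append(pod.name)  # bare pod: needs --force=true
        elif pod.uses_empty_dir and not delete_local_data:
            blockers.append(pod.name)  # emptyDir data would be lost
        else:
            to_evict.append(pod.name)
    return to_evict, skipped, blockers


pods = [
    Pod("web-1", "ReplicaSet"),
    Pod("fluentd-x", "DaemonSet"),
    Pod("debug", None),
    Pod("cache-0", "StatefulSet", uses_empty_dir=True),
]
print(plan_drain(pods))
# (['web-1'], [], ['fluentd-x', 'debug', 'cache-0'])
print(plan_drain(pods, force=True, ignore_daemonsets=True, delete_local_data=True))
# (['web-1', 'debug', 'cache-0'], ['fluentd-x'], [])
```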

4.2.2. Understanding how to update labels on nodes

You can update any label on a node.

Node labels are not persisted after a node is deleted, even if the node is backed by a Machine.

Any change to a MachineSet is not applied to existing machines owned by the MachineSet. For example, labels edited or added to an existing MachineSet are not propagated to existing machines and Nodes associated with the MachineSet.

The following command adds or updates labels on a node:

$ oc label node <node> <key_1>=<value_1> ... <key_n>=<value_n>

For example:

$ oc label nodes webconsole-7f7f6 unhealthy=true

The following command adds or updates a label on all pods in the current namespace:

$ oc label pods --all <key_1>=<value_1>

For example:

$ oc label pods --all status=unhealthy

4.2.3. Understanding how to mark nodes as unschedulable or schedulable

By default, healthy nodes with a Ready status are marked as schedulable, meaning that new pods are allowed for placement on the node. Manually marking a node as unschedulable blocks any new pods from being scheduled on the node. Existing pods on the node are not affected.

The following command marks a node or nodes as unschedulable:

$ oc adm cordon <node>

For example:

$ oc adm cordon node1.example.com
node/node1.example.com cordoned

NAME                 LABELS                                     STATUS
node1.example.com    kubernetes.io/hostname=node1.example.com   Ready,SchedulingDisabled

The following command marks a currently unschedulable node or nodes as schedulable:

$ oc adm uncordon <node1>

Alternatively, instead of specifying specific node names (for example, <node>), you can use the --selector=<node_selector> option to mark selected nodes as schedulable or unschedulable.

4.2.4. Deleting nodes from a cluster

When you delete a node using the CLI, the node object is deleted in Kubernetes, but the pods that exist on the node are not deleted. Any bare pods not backed by a replication controller become inaccessible to OpenShift Container Platform. Pods backed by replication controllers are rescheduled to other available nodes. You must delete local manifest pods.

Procedure

To delete a node from the OpenShift Container Platform cluster, edit the appropriate MachineSet:

View the MachineSets that are in the cluster:

$ oc get machinesets -n openshift-machine-api

The MachineSets are listed in the form of <clusterid>-worker-<aws-region-az>.

Scale the MachineSet:

$ oc scale --replicas=2 machineset <machineset> -n openshift-machine-api


4.2.5. Additional resources

For more information on scaling your cluster using a MachineSet, see Manually scaling a MachineSet.

4.3. Managing Nodes

OpenShift Container Platform uses a KubeletConfig Custom Resource to manage the configuration of nodes. By creating an instance of a KubeletConfig, a managed MachineConfig is created to override settings on the node.

Logging in to remote machines for the purpose of changing their configuration is not supported.

4.3.1. Modifying Nodes

To make configuration changes to a cluster, or Machine Config Pool, you must create a Custom Resource (CR), or KubeletConfig instance. OpenShift Container Platform uses the Machine Config Controller to watch for changes introduced through the CR and applies the changes to the cluster.

Procedure

Obtain the label associated with the static Machine Config Pool CRD for the type of node you want to configure. Perform one of the following steps:

Check current labels of the desired machineconfigpool.

For example:

$ oc get machineconfigpool --show-labels
NAME     CONFIG                                             UPDATED   UPDATING   DEGRADED   LABELS
master   rendered-master-e05b81f5ca4db1d249a1bf32f9ec24fd   True      False      False      operator.machineconfiguration.openshift.io/required-for-upgrade=
worker   rendered-worker-f50e78e1bc06d8e82327763145bfcf62   True      False      False

Add a custom label to the desired machineconfigpool.

For example:

$ oc label machineconfigpool worker custom-kubelet=enabled

Create a KubeletConfig Custom Resource (CR) for your configuration change.

For example:

Sample configuration for a custom-config CR

Create the CR object.

$ oc create -f <file-name>

For example:

$ oc create -f master-kube-config.yaml

Most KubeletConfig options can be set by the user. The following options cannot be overwritten:

- CgroupDriver

- ClusterDNS

- ClusterDomain

- RuntimeRequestTimeout

- StaticPodPath
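If you generate KubeletConfig CRs from a script, a quick pre-check can catch attempts to set these options. The Python sketch below reports any protected option found in a proposed kubeletConfig stanza held as a dictionary. It assumes the options are spelled as the camelCase kubelet configuration fields (for example, clusterDomain for ClusterDomain); the helper name is hypothetical, and the sketch does not reproduce how the Machine Config Operator itself reacts to such a CR.

```python
# Options that a KubeletConfig CR cannot override, written as the camelCase
# field names used in a kubeletConfig stanza (an assumption of this sketch).
PROTECTED_FIELDS = {
    "cgroupDriver",
    "clusterDNS",
    "clusterDomain",
    "runtimeRequestTimeout",
    "staticPodPath",
}


def protected_overrides(kubelet_config: dict) -> list[str]:
    """Return the protected fields that a proposed kubeletConfig tries to set."""
    return sorted(PROTECTED_FIELDS.intersection(kubelet_config))


proposed = {"podsPerCore": 10, "maxPods": 250, "clusterDomain": "example.local"}
print(protected_overrides(proposed))  # ['clusterDomain']
```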

4.4. Managing the maximum number of Pods per Node

In OpenShift Container Platform, you can configure the number of pods that can run on a node based on the number of processor cores on the node, a hard limit, or both. If you use both options, the lower of the two limits the number of pods on a node.

Exceeding these values can result in:

- Increased CPU utilization by OpenShift Container Platform.

- Slow pod scheduling.

- Potential out-of-memory scenarios, depending on the amount of memory in the node.

- Exhausting the IP address pool.

- Resource overcommitting, leading to poor user application performance.

A pod that is holding a single container actually uses two containers. The second container sets up networking prior to the actual container starting. As a result, a node running 10 pods actually has 20 containers running.

The podsPerCore parameter limits the number of pods the node can run based on the number of processor cores on the node. For example, if podsPerCore is set to 10 on a node with 4 processor cores, the maximum number of pods allowed on the node is 40.

The maxPods parameter limits the number of pods the node can run to a fixed value, regardless of the properties of the node.
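Combining the two parameters amounts to taking the lower limit. The following Python sketch computes it; the function name is hypothetical, and it relies on the rule, stated later in this section, that setting podsPerCore to 0 disables the per-core limit.

```python
def effective_max_pods(cores: int, pods_per_core: int, max_pods: int) -> int:
    """Return the maximum number of pods a node can run.

    A pods_per_core value of 0 disables the per-core limit; otherwise the
    lower of the per-core limit and the fixed maxPods limit applies.
    """
    if pods_per_core == 0:
        return max_pods
    return min(pods_per_core * cores, max_pods)


print(effective_max_pods(cores=4, pods_per_core=10, max_pods=250))   # 40
print(effective_max_pods(cores=32, pods_per_core=10, max_pods=250))  # 250
print(effective_max_pods(cores=4, pods_per_core=0, max_pods=250))    # 250
```

The first call reproduces the 4-core example above.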

4.4.1. Configuring the maximum number of Pods per Node

Two parameters control the maximum number of pods that can be scheduled to a node: podsPerCore and maxPods. If you use both options, the lower of the two limits the number of pods on a node.

For example, if podsPerCore is set to 10 on a node with 4 processor cores, the maximum number of pods allowed on the node will be 40.

Prerequisite

Obtain the label associated with the static Machine Config Pool CRD for the type of node you want to configure. Perform one of the following steps:

View the Machine Config Pool:

$ oc describe machineconfigpool <name>

If a label has been added, it appears under labels in the output.

If the label is not present, add a key/value pair:

$ oc label machineconfigpool worker custom-kubelet=small-pods

Procedure

Create a Custom Resource (CR) for your configuration change.

Sample configuration for a max-pods CR

Note: Setting podsPerCore to 0 disables this limit. In the sample configuration, the default value for podsPerCore is 10 and the default value for maxPods is 250. This means that unless the node has 25 cores or more, by default, podsPerCore is the limiting factor.

List the Machine Config Pools to see if the change is applied. The UPDATING column reports True if the change is picked up by the Machine Config Controller:

$ oc get machineconfigpools
NAME     CONFIG                        UPDATED   UPDATING   DEGRADED
master   master-9cc2c72f205e103bb534   False     False      False
worker   worker-8cecd1236b33ee3f8a5e   False     True       False

Once the change is complete, the UPDATED column reports True.

$ oc get machineconfigpools
NAME     CONFIG                        UPDATED   UPDATING   DEGRADED
master   master-9cc2c72f205e103bb534   False     True       False
worker   worker-8cecd1236b33ee3f8a5e   True      False      False
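To check this from a script instead of reading the table, the following Python sketch parses captured `oc get machineconfigpools` output and reports whether a pool has finished updating, meaning UPDATED is True and UPDATING is False. It assumes the column layout shown above; the helper names are hypothetical, and a real script would rerun the command until the check passes.

```python
def pool_status(output: str) -> dict[str, dict[str, str]]:
    """Parse `oc get machineconfigpools` output into {pool: {column: value}}."""
    rows = [line.split() for line in output.strip().splitlines() if line.strip()]
    header, body = rows[0], rows[1:]
    return {row[0]: dict(zip(header, row)) for row in body}


def pool_updated(output: str, pool: str) -> bool:
    """True once the pool reports UPDATED=True and UPDATING=False."""
    row = pool_status(output)[pool]
    return row["UPDATED"] == "True" and row["UPDATING"] == "False"


in_progress = """
NAME     CONFIG                        UPDATED   UPDATING   DEGRADED
master   master-9cc2c72f205e103bb534   False     False      False
worker   worker-8cecd1236b33ee3f8a5e   False     True       False
"""
done = """
NAME     CONFIG                        UPDATED   UPDATING   DEGRADED
master   master-9cc2c72f205e103bb534   False     True       False
worker   worker-8cecd1236b33ee3f8a5e   True      False      False
"""
print(pool_updated(in_progress, "worker"))  # False
print(pool_updated(done, "worker"))         # True
```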

4.5. Using the Node Tuning Operator

Learn about the Node Tuning Operator and how you can use it to manage node-level tuning by orchestrating the tuned daemon.

4.5.1. About the Node Tuning Operator

The Node Tuning Operator helps you manage node-level tuning by orchestrating the tuned daemon. The majority of high-performance applications require some level of kernel tuning. The Node Tuning Operator provides a unified management interface to users of node-level sysctls and more flexibility to add custom tuning specified by user needs; custom tuning is currently a Technology Preview feature. The Operator manages the containerized tuned daemon for OpenShift Container Platform as a Kubernetes DaemonSet. It ensures the custom tuning specification is passed to all containerized tuned daemons running in the cluster in the format that the daemons understand. The daemons run on all nodes in the cluster, one per node.

The Node Tuning Operator is part of a standard OpenShift Container Platform installation in version 4.1 and later.

4.5.2. Accessing an example Node Tuning Operator specification

Use this process to access an example Node Tuning Operator specification.

Procedure

Run:

$ oc get Tuned/default -o yaml -n openshift-cluster-node-tuning-operator

4.5.3. Custom tuning specification

The custom resource (CR) for the operator has two major sections. The first section, profile:, is a list of tuned profiles and their names. The second, recommend:, defines the profile selection logic.

Multiple custom tuning specifications can co-exist as multiple CRs in the operator’s namespace. The existence of new CRs or the deletion of old CRs is detected by the Operator. All existing custom tuning specifications are merged and appropriate objects for the containerized tuned daemons are updated.

Profile data

The profile: section lists tuned profiles and their names.

Recommended profiles

The profile: selection logic is defined by the recommend: section of the CR:

If <match> is omitted, a profile match (for example, true) is assumed.

<match> is an optional array recursively defined as follows:

- label: <label_name>     # node or pod label name
  value: <label_value>    # optional node or pod label value; if omitted, the presence of <label_name> is enough to match
  type: <label_type>      # optional node or pod type ("node" or "pod"); if omitted, "node" is assumed
  <match>                 # an optional <match> array

If <match> is not omitted, all nested <match> sections must also evaluate to true. Otherwise, false is assumed and the profile with the respective <match> section will not be applied or recommended. Therefore, the nesting (child <match> sections) works as a logical AND operator. Conversely, if any item of the <match> array matches, the entire <match> array evaluates to true. Therefore, the array acts as a logical OR operator.

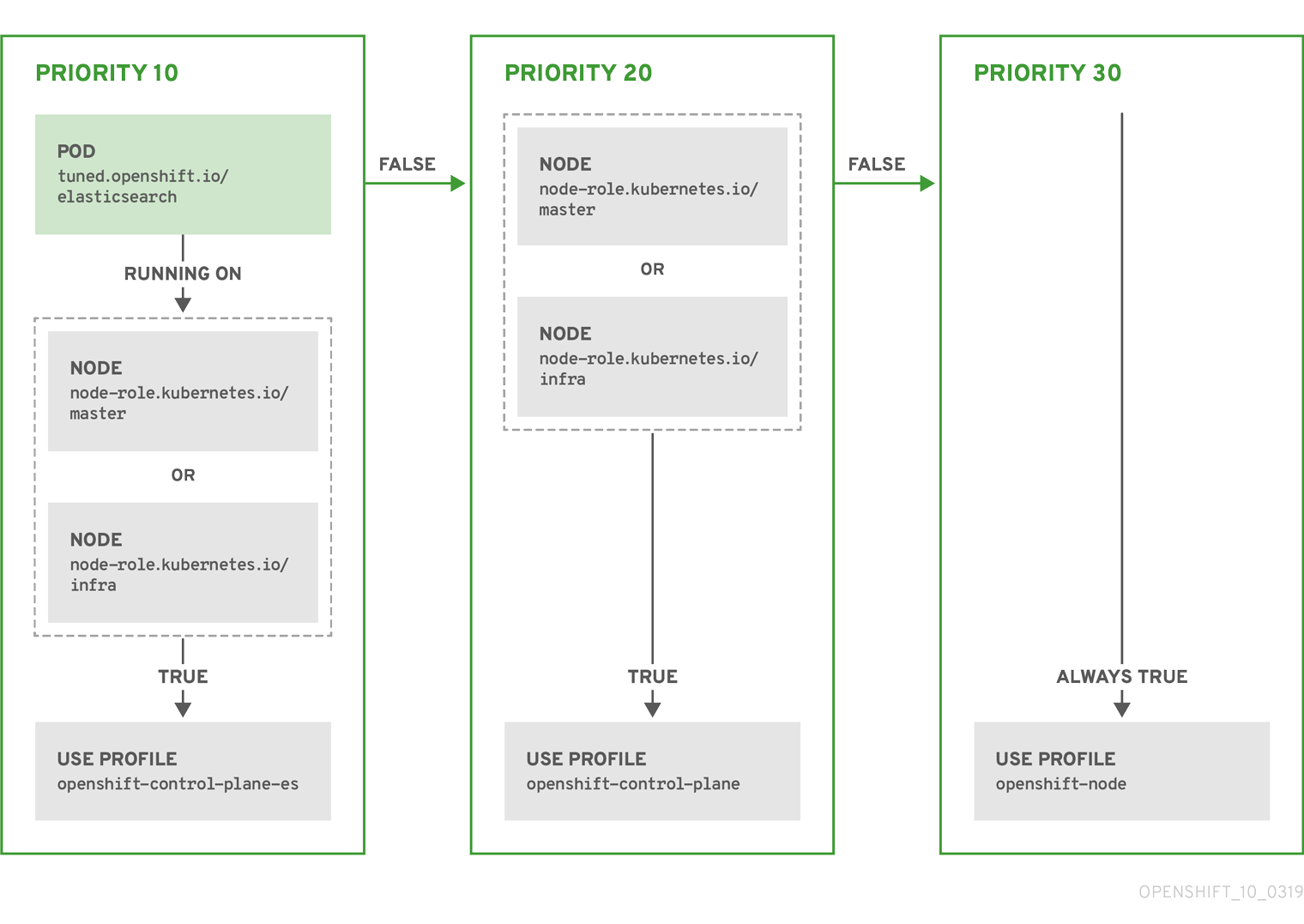

Example

The CR above is translated for the containerized tuned daemon into its recommend.conf file based on the profile priorities. The profile with the highest priority (10) is openshift-control-plane-es and, therefore, it is considered first. The containerized tuned daemon running on a given node looks to see if there is a pod running on the same node with the tuned.openshift.io/elasticsearch label set. If not, the entire <match> section evaluates as false. If there is such a pod with the label, in order for the <match> section to evaluate to true, the node label also needs to be node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

If the labels for the profile with priority 10 matched, the openshift-control-plane-es profile is applied and no other profile is considered. If the node/pod label combination did not match, the second highest priority profile (openshift-control-plane) is considered. This profile is applied if the containerized tuned pod runs on a node with labels node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

Finally, the profile openshift-node has the lowest priority of 30. It lacks the <match> section and, therefore, will always match. It acts as a profile catch-all to set openshift-node profile, if no other profile with higher priority matches on a given node.
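The selection logic in this example can be modelled in Python to make the AND/OR semantics explicit. This is a sketch, not the Node Tuning Operator's code: each <match> item is a dictionary with label, optional value, optional type, and optional nested match keys, and rules are tried in ascending order of their priority number. The priority of 20 for openshift-control-plane is assumed for illustration; the text above only says it is the second highest.

```python
from typing import Optional


def item_matches(item: dict, node_labels: dict, pod_labels: list[dict]) -> bool:
    """Evaluate one <match> item, including its nested <match> array (AND)."""
    key, value = item["label"], item.get("value")
    # type defaults to "node"; "pod" checks the pods running on the same node.
    candidates = pod_labels if item.get("type", "node") == "pod" else [node_labels]
    # Without a value, the presence of the label is enough.
    found = any(
        key in labels and (value is None or labels[key] == value)
        for labels in candidates
    )
    nested = item.get("match")
    return found and (nested is None or match_array(nested, node_labels, pod_labels))


def match_array(items: list[dict], node_labels: dict, pod_labels: list[dict]) -> bool:
    """A <match> array is true if any one of its items is true (logical OR)."""
    return any(item_matches(item, node_labels, pod_labels) for item in items)


def recommend(rules: list[dict], node_labels: dict, pod_labels: list[dict]) -> Optional[str]:
    """Return the profile of the matching rule with the lowest priority number."""
    for rule in sorted(rules, key=lambda r: r["priority"]):
        # A rule without a <match> section always matches.
        if "match" not in rule or match_array(rule["match"], node_labels, pod_labels):
            return rule["profile"]
    return None


control_plane = [
    {"label": "node-role.kubernetes.io/master"},
    {"label": "node-role.kubernetes.io/infra"},
]
rules = [
    {"priority": 10, "profile": "openshift-control-plane-es",
     "match": [{"label": "tuned.openshift.io/elasticsearch", "type": "pod",
                "match": control_plane}]},
    {"priority": 20, "profile": "openshift-control-plane", "match": control_plane},
    {"priority": 30, "profile": "openshift-node"},
]

master = {"node-role.kubernetes.io/master": ""}
worker = {"node-role.kubernetes.io/worker": ""}
es_pod = {"tuned.openshift.io/elasticsearch": ""}

print(recommend(rules, master, [es_pod]))  # openshift-control-plane-es
print(recommend(rules, master, []))        # openshift-control-plane
print(recommend(rules, worker, [es_pod]))  # openshift-node
```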

4.5.4. Default profiles set on a cluster

The following are the default profiles set on a cluster.

4.5.5. Supported Tuned daemon plug-ins

Excluding the [main] section, the following Tuned plug-ins are supported when using custom profiles defined in the profile: section of the Tuned CR:

- audio

- cpu

- disk

- eeepc_she

- modules

- mounts

- net

- scheduler

- scsi_host

- selinux

- sysctl

- sysfs

- usb

- video

- vm

There is some dynamic tuning functionality provided by some of these plug-ins that is not supported. The following Tuned plug-ins are currently not supported:

- bootloader

- script

- systemd

See Available Tuned Plug-ins and Getting Started with Tuned for more information.

4.6. Understanding node rebooting

To reboot a node without causing an outage for applications running on the platform, it is important to first evacuate the pods. For pods that are made highly available by the routing tier, nothing else needs to be done. For other pods needing storage, typically databases, it is critical to ensure that they can remain in operation with one pod temporarily going offline. While implementing resiliency for stateful pods is different for each application, in all cases it is important to configure the scheduler to use node anti-affinity to ensure that the pods are properly spread across available nodes.

Another challenge is how to handle nodes that are running critical infrastructure such as the router or the registry. The same node evacuation process applies, though it is important to understand certain edge cases.

4.6.1. Understanding infrastructure node rebooting

Infrastructure nodes are nodes that are labeled to run pieces of the OpenShift Container Platform environment. Currently, the easiest way to manage node reboots is to ensure that there are at least three nodes available to run infrastructure. The nodes to run the infrastructure are called master nodes.

The scenario below demonstrates a common mistake that can lead to service interruptions for the applications running on OpenShift Container Platform when only two nodes are available.

- Node A is marked unschedulable and all pods are evacuated.

- The registry pod running on that node is now redeployed on node B. This means node B is now running both registry pods.

- Node B is now marked unschedulable and is evacuated.

- The service exposing the two pod endpoints on node B, for a brief period of time, loses all endpoints until they are redeployed to node A.

The same process using three master nodes for infrastructure does not result in a service disruption. However, due to pod scheduling, the last node that is evacuated and brought back into rotation is left running zero registries. The other two nodes run two and one registries, respectively. The best solution is to rely on pod anti-affinity.

4.6.2. Rebooting a node using pod anti-affinity

Pod anti-affinity is slightly different than node anti-affinity. Node anti-affinity can be violated if there are no other suitable locations to deploy a pod. Pod anti-affinity can be set to either required or preferred.

With this in place, if only two infrastructure nodes are available and one is rebooted, the container image registry pod is prevented from running on the other node. oc get pods reports the pod as unready until a suitable node is available. Once a node is available and all pods are back in ready state, the next node can be restarted.

Procedure

To reboot a node using pod anti-affinity:

Edit the pod specification to configure pod anti-affinity. The configuration uses the following elements:

- A stanza to configure pod anti-affinity.
- A preferred rule.
- A weight for the preferred rule. The node with the highest weight is preferred.
- A description of the pod label that determines when the anti-affinity rule applies. Specify a key and value for the label.
- An operator that represents the relationship between the label on the existing pod and the set of values in the matchExpression parameters in the specification for the new pod. The operator can be In, NotIn, Exists, or DoesNotExist.

This example assumes the container image registry pod has a label of registry=default. Pod anti-affinity can use any Kubernetes match expression.

Enable the MatchInterPodAffinity scheduler predicate in the scheduling policy file.
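To make the operators and the required form of the rule concrete, the following Python sketch evaluates matchExpressions-style terms against pod labels and lists the nodes where a new registry pod could still be placed. It is a simplified model, not the scheduler: each node is treated as its own topology domain, preferred rules and weights are ignored, and the helper names and node names are hypothetical. With two infrastructure nodes, one cordoned for a reboot and the other already running a pod labeled registry=default, no node is eligible, which matches the unready pod described above.

```python
def expression_matches(expr: dict, labels: dict) -> bool:
    """Evaluate one matchExpressions entry (key, operator, values) against labels."""
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unsupported operator: {op}")


def eligible_nodes(nodes: dict[str, list[dict]], unschedulable: set[str],
                   expressions: list[dict]) -> list[str]:
    """Nodes where a new pod may be placed under a required anti-affinity rule.

    A node is excluded if it is unschedulable or already runs a pod whose
    labels satisfy every expression in the rule.
    """
    eligible = []
    for name, pods in nodes.items():
        if name in unschedulable:
            continue
        conflict = any(
            all(expression_matches(e, labels) for e in expressions) for labels in pods
        )
        if not conflict:
            eligible.append(name)
    return eligible


rule = [{"key": "registry", "operator": "In", "values": ["default"]}]
nodes = {
    "infra-a": [],                         # being rebooted, pods evacuated
    "infra-b": [{"registry": "default"}],  # still runs a registry pod
}
print(eligible_nodes(nodes, {"infra-a"}, rule))  # []
print(eligible_nodes(nodes, set(), rule))        # ['infra-a']
```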

4.6.3. Understanding how to reboot nodes running routers

In most cases, a pod running an OpenShift Container Platform router exposes a host port.

The PodFitsPorts scheduler predicate ensures that no router pods using the same port can run on the same node, and pod anti-affinity is achieved. If the routers are relying on IP failover for high availability, there is nothing else that is needed.
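The PodFitsPorts check can be illustrated with a few lines of Python: a pod fits a node only if none of the host ports it requests are already in use there. This is a simplified sketch that ignores protocols and host IP addresses; the node names and the ports 80 and 443 chosen for the router pod are illustrative assumptions.

```python
def pod_fits_ports(requested: set[int], used_on_node: set[int]) -> bool:
    """True if none of the requested host ports is already in use on the node."""
    return requested.isdisjoint(used_on_node)


def nodes_for_pod(requested: set[int], nodes: dict[str, set[int]]) -> list[str]:
    """Names of nodes where all of the pod's host ports are still free."""
    return [name for name, used in nodes.items() if pod_fits_ports(requested, used)]


router_ports = {80, 443}  # illustrative host ports for a router pod
nodes = {
    "infra-a": {80, 443},  # already runs a router pod
    "infra-b": set(),
    "infra-c": {8080},
}
print(nodes_for_pod(router_ports, nodes))  # ['infra-b', 'infra-c']
```

Because a second router pod can never land on infra-a, spreading router pods across nodes follows from the port conflict alone.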

For router pods relying on an external service such as AWS Elastic Load Balancing for high availability, it is that service’s responsibility to react to router pod restarts.

In rare cases, a router pod may not have a host port configured. In those cases, it is important to follow the recommended restart process for infrastructure nodes.

4.7. Freeing node resources using garbage collection

As an administrator, you can use OpenShift Container Platform to ensure that your nodes are running efficiently by freeing up resources through garbage collection.

The OpenShift Container Platform node performs two types of garbage collection:

- Container garbage collection: Removes terminated containers.

- Image garbage collection: Removes images not referenced by any running pods.

4.7.1. Understanding how terminated containers are removed through garbage collection

Container garbage collection can be performed using eviction thresholds.

When eviction thresholds are set for garbage collection, the node tries to keep any container for any pod accessible from the API. If the pod has been deleted, its containers are deleted as well. Containers are preserved as long as the pod is not deleted and the eviction threshold is not reached. If the node is under disk pressure, it removes containers, and their logs are no longer accessible using oc logs.

- eviction-soft - A soft eviction threshold pairs an eviction threshold with a required administrator-specified grace period.

- eviction-hard - A hard eviction threshold has no grace period, and if observed, OpenShift Container Platform takes immediate action.

If a node is oscillating above and below a soft eviction threshold, but not exceeding its associated grace period, the corresponding node condition constantly oscillates between true and false. As a consequence, the scheduler could make poor scheduling decisions.

To protect against this oscillation, use the eviction-pressure-transition-period flag to control how long OpenShift Container Platform must wait before transitioning out of a pressure condition. OpenShift Container Platform does not toggle the pressure condition back to false until the eviction threshold for that condition has gone unmet for the specified period.
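The following Python sketch models the behavior described above for a single signal. It is an illustration under stated assumptions, not kubelet code: the signal is an amount of available memory in MiB sampled every 10 seconds, the pressure condition is reported while the soft threshold was last met no more than the transition period ago, and eviction is triggered only after the threshold has been met continuously for the grace period. The class and function names are hypothetical. A hard threshold would behave like a grace period of 0.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvictionSignalModel:
    threshold: float        # soft threshold: met when the signal drops below it
    grace_period: int       # seconds the threshold must stay met before evicting
    transition_period: int  # eviction-pressure-transition-period, in seconds
    first_met: Optional[int] = None
    last_met: Optional[int] = None

    def observe(self, now: int, available: float) -> tuple[bool, bool]:
        """Record one sample and return (pressure_condition, should_evict)."""
        if available < self.threshold:
            if self.first_met is None:
                self.first_met = now
            self.last_met = now
        else:
            self.first_met = None  # the grace-period clock starts over
        # The condition stays true while the threshold was last met no more
        # than transition_period seconds ago, which damps oscillation.
        pressure = self.last_met is not None and now - self.last_met <= self.transition_period
        # Soft eviction acts only once the threshold has been met continuously
        # for the grace period.
        evict = self.first_met is not None and now - self.first_met >= self.grace_period
        return pressure, evict


def pressure_trace(transition_period: int) -> list[bool]:
    """Feed samples that hover around a 500 MiB threshold, one every 10 seconds."""
    model = EvictionSignalModel(threshold=500, grace_period=60,
                                transition_period=transition_period)
    samples = [450, 550, 450, 550, 450, 550]
    return [model.observe(t * 10, value)[0] for t, value in enumerate(samples)]


print(pressure_trace(transition_period=0))   # [True, False, True, False, True, False]
print(pressure_trace(transition_period=30))  # [True, True, True, True, True, True]
```

With a transition period of 0, the condition flips on every sample, which is the oscillation described above; with 30 seconds it stays true. Because the threshold is never met for 60 seconds in a row, neither run triggers an eviction.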

4.7.2. Understanding how images are removed through garbage collection

Image garbage collection relies on disk usage as reported by cAdvisor on the node to decide which images to remove from the node.

The policy for image garbage collection is based on two conditions:

- The percent of disk usage (expressed as an integer) which triggers image garbage collection. The default is 85.

- The percent of disk usage (expressed as an integer) to which image garbage collection attempts to reduce usage. The default is 80.

For image garbage collection, you can modify any of the following variables using a Custom Resource.

| Setting | Description |
|---|---|
| imageMinimumGCAge | The minimum age for an unused image before the image is removed by garbage collection. The default is 2m. |
| imageGCHighThresholdPercent | The percent of disk usage, expressed as an integer, which triggers image garbage collection. The default is 85. |
| imageGCLowThresholdPercent | The percent of disk usage, expressed as an integer, to which image garbage collection attempts to reduce usage. The default is 80. |

Two lists of images are retrieved in each garbage collector run:

- A list of images currently running in at least one pod.

- A list of images available on a host.

As new containers are run, new images appear. All images are marked with a time stamp. If the image is running (the first list above) or is newly detected (the second list above), it is marked with the current time. The remaining images are already marked from previous runs. All images are then sorted by the time stamp.

Once the collection starts, the oldest images get deleted first until the stopping criterion is met.

4.7.3. Configuring garbage collection for containers and images

As an administrator, you can configure how OpenShift Container Platform performs garbage collection by creating a kubeletConfig object for each Machine Config Pool.

OpenShift Container Platform supports only one kubeletConfig object for each Machine Config Pool.

You can configure any combination of the following:

- soft eviction for containers

- hard eviction for containers

- eviction for images

For soft container eviction you can also configure a grace period before eviction.

Prerequisites

Obtain the label associated with the static Machine Config Pool CRD for the type of node you want to configure. Perform one of the following steps:

View the Machine Config Pool:

$ oc describe machineconfigpool <name>

If a label has been added, it appears under labels in the output.

If the label is not present, add a key/value pair:

$ oc label machineconfigpool worker custom-kubelet=small-pods

Procedure

Create a Custom Resource (CR) for your configuration change.

Sample configuration for a container garbage collection CR:

- 1 Name for the object.

- 2 Selector label.

- 3 Type of eviction: EvictionSoft and EvictionHard.

- 4 Eviction thresholds based on a specific eviction trigger signal.

- 5 Grace periods for the soft eviction. This parameter does not apply to eviction-hard.

- 6 The duration to wait before transitioning out of an eviction pressure condition.

- 7 The minimum age for an unused image before the image is removed by garbage collection.

- 8 The percent of disk usage, expressed as an integer, that triggers image garbage collection.

- 9 The percent of disk usage, expressed as an integer, down to which image garbage collection attempts to free space.
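Because the sample YAML is not reproduced here, the following sketch shows one way to assemble and sanity-check a garbage collection KubeletConfig before running oc create. The kubeletConfig field names (evictionSoft, evictionSoftGracePeriod, evictionHard, evictionPressureTransitionPeriod, imageMinimumGCAge, imageGCHighThresholdPercent, imageGCLowThresholdPercent) are standard kubelet configuration fields; the `gc_kubelet_config` helper, the object name, the threshold values, and the two validation rules are illustrative assumptions. The output is JSON, which oc create -f also accepts.

```python
import json
from typing import Dict


def gc_kubelet_config(name: str, pool_label: Dict[str, str],
                      soft: Dict[str, str], grace: Dict[str, str], hard: Dict[str, str],
                      transition: str, min_age: str, high: int, low: int) -> dict:
    """Build a garbage collection KubeletConfig CR as a dict (illustrative sketch)."""
    # Every soft eviction threshold needs a matching grace period.
    missing = set(soft) - set(grace)
    if missing:
        raise ValueError(f"soft thresholds without a grace period: {sorted(missing)}")
    # Image GC starts at `high` percent disk usage and frees space down to `low` percent.
    if not 0 <= low < high <= 100:
        raise ValueError("imageGCLowThresholdPercent must be lower than imageGCHighThresholdPercent")
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "KubeletConfig",
        "metadata": {"name": name},
        "spec": {
            # Selects the Machine Config Pool by the label from the prerequisites.
            "machineConfigPoolSelector": {"matchLabels": pool_label},
            "kubeletConfig": {
                "evictionSoft": soft,
                "evictionSoftGracePeriod": grace,
                "evictionHard": hard,
                "evictionPressureTransitionPeriod": transition,
                "imageMinimumGCAge": min_age,
                "imageGCHighThresholdPercent": high,
                "imageGCLowThresholdPercent": low,
            },
        },
    }


cr = gc_kubelet_config(
    "gc-container", {"custom-kubelet": "small-pods"},
    soft={"memory.available": "500Mi"}, grace={"memory.available": "1m30s"},
    hard={"memory.available": "200Mi"}, transition="0s",
    min_age="5m", high=80, low=75)
print(json.dumps(cr, indent=2))
```

Redirecting the printed JSON to a file such as gc-container.yaml gives you a file to pass to oc create -f.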

Create the object:

oc create -f <file-name>.yaml

For example:

oc create -f gc-container.yaml

kubeletconfig.machineconfiguration.openshift.io/gc-container created

Verify that garbage collection is active. The Machine Config Pool you specified in the custom resource shows UPDATING as True until the change is fully implemented:

oc get machineconfigpool

NAME     CONFIG                                   UPDATED   UPDATING
master   rendered-master-546383f80705bd5aeaba93   True      False
worker   rendered-worker-b4c51bb33ccaae6fc4a6a5   False     True
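If you want to script this verification step, one minimal approach is to parse the table that oc get machineconfigpool prints and re-check until no pool reports UPDATING as True. The hypothetical `updating_pools` helper below only parses text; it assumes the column layout shown above, with whitespace-separated columns and no empty cells, and does not run oc itself.

```python
from typing import List


def updating_pools(table: str) -> List[str]:
    """Return the names of pools whose UPDATING column is True."""
    rows = [line.split() for line in table.strip().splitlines() if line.strip()]
    header = rows[0]
    name_col = header.index("NAME")
    updating_col = header.index("UPDATING")
    # Assumes whitespace-separated columns with no empty cells.
    return [row[name_col] for row in rows[1:] if row[updating_col] == "True"]


output = """NAME     CONFIG                                   UPDATED   UPDATING
master   rendered-master-546383f80705bd5aeaba93   True      False
worker   rendered-worker-b4c51bb33ccaae6fc4a6a5   False     True"""
print(updating_pools(output))  # ['worker']
```

Looking up the columns by header name rather than by position keeps the helper working if the command prints additional columns.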

4.8. Allocating resources for nodes in an OpenShift Container Platform cluster

To provide more reliable scheduling and minimize node resource overcommitment, each node can reserve a portion of its resources for use by all underlying node components (such as kubelet and kube-proxy) and the remaining system components (such as sshd and NetworkManager) on the host. After you specify these reservations, the scheduler has more information about the resources (for example, memory and CPU) that a node has allocated for pods.

4.8.1. Understanding how to allocate resources for nodes

CPU and memory resources reserved for node components in OpenShift Container Platform are based on two node settings:

| Setting | Description |
|---|---|
| kube-reserved | Resources reserved for node components. Default is none. |
| system-reserved | Resources reserved for the remaining system components. Default is none. |

If a flag is not set, it defaults to 0. If none of the flags are set, the allocated resource is set to the node’s capacity, as it was before allocatable resources were introduced.

4.8.1.1. How OpenShift Container Platform computes allocated resources

An allocated amount of a resource is computed based on the following formula:

[Allocatable] = [Node Capacity] - [kube-reserved] - [system-reserved] - [Hard-Eviction-Thresholds]


Withholding Hard-Eviction-Thresholds from allocatable is a behavior change that improves system reliability, now that allocatable is enforced for end-user pods at the node level. The experimental-allocatable-ignore-eviction setting is available to preserve the legacy behavior, but it will be deprecated in a future release.

If [Allocatable] is negative, it is set to 0.
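The formula and the clamp to 0 translate directly into code. In this sketch all quantities share one unit (MiB is assumed), unset reservations default to 0, and converting Kubernetes quantity strings is out of scope; the `allocatable` function name is illustrative.

```python
def allocatable(capacity: int, kube_reserved: int = 0, system_reserved: int = 0,
                hard_eviction: int = 0) -> int:
    """[Allocatable] = [Node Capacity] - [kube-reserved] - [system-reserved] - [Hard-Eviction-Thresholds]"""
    # Unset reservations default to 0.
    value = capacity - kube_reserved - system_reserved - hard_eviction
    # A negative result is set to 0.
    return max(value, 0)


print(allocatable(8192, kube_reserved=1024, system_reserved=512, hard_eviction=100))  # 6556
print(allocatable(1024, kube_reserved=2048))  # 0
```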

Each node reports the system resources used by the container runtime and kubelet. To help you configure --system-reserved and --kube-reserved, you can inspect the corresponding node’s resource usage using the node summary API, which is accessible at <master>/api/v1/nodes/<node>/proxy/stats/summary.
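As a sketch of how the summary API output might be inspected, the function below reads per-component CPU and memory usage from a parsed response. It assumes the Kubernetes stats/summary JSON layout (a node object with a systemContainers list whose entries carry name, cpu.usageNanoCores, and memory.workingSetBytes); verify these field names against the output from your own cluster. The `system_container_usage` helper and the trimmed sample response are hypothetical.

```python
import json
from typing import Dict


def system_container_usage(summary: dict) -> Dict[str, Dict[str, float]]:
    """Map each system container (for example kubelet or runtime) to its CPU and memory use."""
    usage = {}
    for container in summary.get("node", {}).get("systemContainers", []):
        nano_cores = container.get("cpu", {}).get("usageNanoCores", 0)
        working_set = container.get("memory", {}).get("workingSetBytes", 0)
        usage[container["name"]] = {
            "cpu_millicores": nano_cores / 1_000_000,   # 1 millicore = 1,000,000 nanocores
            "memory_mib": working_set / (1024 * 1024),  # bytes to MiB
        }
    return usage


# Hypothetical, heavily trimmed response body.
raw = ('{"node": {"nodeName": "example-node", "systemContainers": ['
       '{"name": "kubelet", "cpu": {"usageNanoCores": 150000000}, '
       '"memory": {"workingSetBytes": 104857600}}]}}')
usage = system_container_usage(json.loads(raw))
print(usage["kubelet"])  # {'cpu_millicores': 150.0, 'memory_mib': 100.0}
```

Comparing these figures over time with your planned kube-reserved and system-reserved values is one way to sanity-check the reservations.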

4.8.1.2. How nodes enforce resource constraints

The node can limit the total amount of resources that pods can consume based on the configured allocatable value. This feature significantly improves node reliability by preventing pods from starving system services, such as the container runtime and the node agent, of resources. Administrators are strongly encouraged to reserve resources based on the desired node utilization target to improve node reliability.

The node enforces resource constraints using a new cgroup hierarchy that enforces quality of service. All pods are launched in a dedicated cgroup hierarchy separate from system daemons.

Optionally, you can make the node enforce kube-reserved and system-reserved by specifying those tokens in the enforce-node-allocatable flag. If you do, you must also provide the corresponding --kube-reserved-cgroup or --system-reserved-cgroup. In future releases, the node and container runtime will be packaged in a common cgroup separate from system.slice. Until then, we do not recommend changing the default value of the enforce-node-allocatable flag.

Administrators should treat system daemons like Guaranteed pods. System daemons can burst within their bounding control groups, and this behavior must be managed as part of cluster deployments. Enforcing system-reserved limits can lead to critical system services being CPU starved or OOM killed on the node. We recommend enforcing system-reserved only if operators have profiled their nodes exhaustively to determine precise estimates and are confident in their ability to recover if any process in that group is OOM killed.

As a result, we strongly recommend that users enforce node allocatable only for pods by default, and set aside appropriate reservations for system daemons to maintain overall node reliability.

4.8.1.3. Understanding Eviction Thresholds

Memory pressure on a node can impact the entire node and all pods running on it. For example, if a system daemon uses more than its reserved amount of memory, an OOM event can occur. To avoid, or reduce the probability of, system OOMs, the node provides out-of-resource handling.

You can reserve some memory using the --eviction-hard flag. The node attempts to evict pods whenever memory availability on the node drops below the absolute value or percentage. If system daemons do not exist on a node, pods are limited to the memory capacity minus eviction-hard. For this reason, resources set aside as a buffer for eviction before reaching out-of-memory conditions are not available for pods.

The following is an example to illustrate the impact of node allocatable for memory:

- Node capacity is 32Gi.

- --kube-reserved is 2Gi.

- --system-reserved is 1Gi.

- --eviction-hard is set to 100Mi.

For this node, the effective node allocatable value is 28.9Gi. If the node and system components use up all their reservation, the memory available for pods is 28.9Gi, and the kubelet evicts pods when their usage exceeds this amount.

If you enforce node allocatable (28.9Gi) through top-level cgroups, pods can never exceed 28.9Gi. Evictions are not performed unless system daemons consume more than 3.1Gi of memory.

In the above example, if system daemons do not use up all their reservation, pods would face memcg OOM kills from their bounding cgroup before node evictions start. To better enforce QoS in this situation, the node applies the hard eviction thresholds to the top-level cgroup for all pods, setting its limit to Node Allocatable + Eviction Hard Thresholds.

If system daemons do not use up all their reservation, the node evicts pods whenever they consume more than 28.9Gi of memory. If eviction does not occur in time, pods are OOM killed when they consume 29Gi of memory (28.9Gi plus the 100Mi hard eviction threshold).
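The arithmetic in this example can be checked with a short script. It works in MiB (1Gi = 1024Mi) and reproduces the three figures quoted above: the allocatable value, the top-level pod cgroup limit (allocatable plus the hard eviction threshold), and the memory left for system daemons when pods are held to allocatable. The variable names are illustrative.

```python
GI = 1024  # MiB per GiB

capacity = 32 * GI
kube_reserved = 2 * GI
system_reserved = 1 * GI
eviction_hard = 100  # MiB

# Evictions start when pod usage exceeds node allocatable.
node_allocatable = capacity - kube_reserved - system_reserved - eviction_hard
# Top-level pod cgroup limit: pods are OOM killed here if eviction is too slow.
pod_cgroup_limit = node_allocatable + eviction_hard
# Memory left for system daemons when pods are held to allocatable.
daemon_headroom = capacity - node_allocatable

print(f"allocatable      = {node_allocatable / GI:.1f}Gi")  # 28.9Gi
print(f"pod cgroup limit = {pod_cgroup_limit / GI:.1f}Gi")  # 29.0Gi
print(f"daemon headroom  = {daemon_headroom / GI:.1f}Gi")   # 3.1Gi
```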

4.8.1.4. How the scheduler determines resource availability

The scheduler uses the value of node.Status.Allocatable instead of node.Status.Capacity to decide if a node will become a candidate for pod scheduling.

By default, the node will report its machine capacity as fully schedulable by the cluster.
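A simplified illustration of this rule: a pod fits only if the requests already placed on the node plus the new pod's request stay within allocatable, even when capacity would still have room. The `fits` helper is hypothetical and covers memory only, using the MiB figures from the memory example above; the real scheduler checks every requested resource type.

```python
def fits(pod_request: int, existing_requests: list, allocatable: int) -> bool:
    """Return True if the new pod's request fits within node.Status.Allocatable."""
    # Capacity is not consulted: requests are compared against Allocatable.
    return sum(existing_requests) + pod_request <= allocatable


capacity = 32768     # MiB, node.Status.Capacity in the memory example
allocatable = 29596  # MiB, node.Status.Allocatable (about 28.9Gi)

print(fits(2048, [28000], allocatable))  # False: 30048 > 29596, although below capacity
print(fits(1024, [28000], allocatable))  # True: 29024 <= 29596
```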

4.8.2. Configuring allocated resources for nodes

OpenShift Container Platform supports the CPU and memory resource types for allocation. If your administrator enabled the ephemeral storage technology preview, the ephemeral-storage resource type is supported as well. For the cpu type, the resource quantity is specified in units of cores, such as 200m, 0.5, or 1. For memory and ephemeral-storage, it is specified in units of bytes, such as 200Ki, 50Mi, or 5Gi.

As an administrator, you can set these using a Custom Resource (CR) through a set of <resource_type>=<resource_quantity> pairs (e.g., cpu=200m,memory=512Mi).
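To make the units concrete, the sketch below parses a <resource_type>=<resource_quantity> string such as cpu=200m,memory=512Mi into numbers: CPU in cores, and memory or ephemeral-storage in bytes. It handles only the suffixes mentioned here plus their decimal counterparts (m, Ki/Mi/Gi, k/M/G) and is not a full implementation of the Kubernetes quantity format; the function names are illustrative.

```python
from decimal import Decimal
from typing import Dict

# Binary (Ki, Mi, Gi) and decimal (k, M, G) suffixes for byte quantities.
_BYTE_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,
                  "k": 1000, "M": 1000**2, "G": 1000**3}


def parse_quantity(resource: str, value: str) -> Decimal:
    """Convert a quantity string to cores (cpu) or bytes (memory, ephemeral-storage)."""
    if resource == "cpu":
        # "200m" means 200 millicores, that is, 0.2 cores.
        if value.endswith("m"):
            return Decimal(value[:-1]) / 1000
        return Decimal(value)
    # Check two-letter suffixes before one-letter ones.
    for suffix in sorted(_BYTE_SUFFIXES, key=len, reverse=True):
        if value.endswith(suffix):
            return Decimal(value[:-len(suffix)]) * _BYTE_SUFFIXES[suffix]
    return Decimal(value)  # plain bytes


def parse_pairs(spec: str) -> Dict[str, Decimal]:
    """Parse 'cpu=200m,memory=512Mi' into {'cpu': Decimal('0.2'), 'memory': Decimal('536870912')}."""
    result = {}
    for pair in spec.split(","):
        key, _, value = pair.partition("=")
        result[key.strip()] = parse_quantity(key.strip(), value.strip())
    return result


print(parse_pairs("cpu=200m,memory=512Mi"))
```

Decimal is used instead of float so that values such as 0.2 cores stay exact.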

Prerequisites

To help you determine settings for --system-reserved and --kube-reserved, you can inspect the corresponding node’s resource usage using the node summary API, which is accessible at <master>/api/v1/nodes/<node>/proxy/stats/summary. Run the following command for your node:

curl <certificate details> https://<master>/api/v1/nodes/<node-name>/proxy/stats/summary

The REST API Overview has details about what to supply for <certificate details>.

For example, to access the resources from the cluster.node22 node, substitute cluster.node22 for <node-name> in the preceding command.

Obtain the label associated with the static Machine Config Pool CRD for the type of node you want to configure. Perform one of the following steps:

View the Machine Config Pool:

oc describe machineconfigpool <name>

If a label has been added, it appears under labels in the command output.

If the label is not present, add a key/value pair:

oc label machineconfigpool worker custom-kubelet=small-pods


Procedure

Create a Custom Resource (CR) for your configuration change.

Sample configuration for a resource allocation CR

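The sample CR is not reproduced here. As a hedged sketch, the helper below turns <resource_type>=<resource_quantity> pairs into the systemReserved and kubeReserved maps of a KubeletConfig object. systemReserved and kubeReserved are standard kubelet configuration fields; the helper names, the object name set-allocatable, the pool label, and the reservation values are illustrative assumptions. The result is printed as JSON, which oc create -f also accepts.

```python
import json
from typing import Dict


def pairs_to_map(spec: str) -> Dict[str, str]:
    """Turn 'cpu=500m,memory=512Mi' into {'cpu': '500m', 'memory': '512Mi'}."""
    result = {}
    for pair in spec.split(","):
        key, sep, value = pair.partition("=")
        if not sep or not key.strip() or not value.strip():
            raise ValueError(f"expected <resource_type>=<resource_quantity>, got {pair!r}")
        result[key.strip()] = value.strip()
    return result


def allocation_config(name: str, pool_label: Dict[str, str], system: str, kube: str) -> dict:
    """Build a KubeletConfig CR that reserves resources for system and node components."""
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "KubeletConfig",
        "metadata": {"name": name},
        "spec": {
            "machineConfigPoolSelector": {"matchLabels": pool_label},
            "kubeletConfig": {
                "systemReserved": pairs_to_map(system),  # corresponds to --system-reserved
                "kubeReserved": pairs_to_map(kube),      # corresponds to --kube-reserved
            },
        },
    }


cr = allocation_config("set-allocatable", {"custom-kubelet": "small-pods"},
                       system="cpu=500m,memory=512Mi", kube="cpu=500m,memory=512Mi")
print(json.dumps(cr, indent=2))
```

Because only one kubeletConfig object is supported for each Machine Config Pool, combine these fields with any garbage collection settings for the same pool in a single object rather than creating a second one.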

4.9. Viewing node audit logs

Audit provides a security-relevant, chronological set of records documenting the sequence of activities by individual users, administrators, or other components of the system that have affected the system.

4.9.1. About the API Audit Log

Audit works at the API server level, logging all requests coming to the server. Each audit log contains two entries:

The request line, containing:

- A unique ID that allows matching the response line

- The source IP of the request

- The HTTP method being invoked

- The original user invoking the operation

- The impersonated user for the operation (self means the user itself)

- The impersonated group for the operation (lookup means the user’s group)

- The namespace of the request, or <none>

- The URI as requested

The response line, containing:

- The unique ID from the request line

- The response code

You can view logs for the master nodes for the OpenShift Container Platform API server or the Kubernetes API server.

Example output for the Kubernetes API server:

ip-10-0-140-97.ec2.internal {"kind":"Event","apiVersion":"audit.k8s.io/v1beta1","metadata":{"creationTimestamp":"2019-04-09T19:56:58Z"},"level":"Metadata","timestamp":"2019-04-09T19:56:58Z","auditID":"6e96c88b-ab6f-44d2-b62e-d1413efd676b","stage":"ResponseComplete","requestURI":"/api/v1/nodes/audit-2019-04-09T14-07-27.129.log","verb":"get","user":{"username":"kube:admin","groups":["system:cluster-admins","system:authenticated"],"extra":{"scopes.authorization.openshift.io":["user:full"]}},"sourceIPs":["10.0.57.93"],"userAgent":"oc/v1.13.4+b626c2fe1 (linux/amd64) kubernetes/ba88cb2","objectRef":{"resource":"nodes","name":"audit-2019-04-09T14-07-27.129.log","apiVersion":"v1"},"responseStatus":{"metadata":{},"status":"Failure","reason":"NotFound","code":404},"requestReceivedTimestamp":"2019-04-09T19:56:58.982157Z","stageTimestamp":"2019-04-09T19:56:58.985300Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"cluster-admins\" of ClusterRole \"cluster-admin\" to Group \"system:cluster-admins\""}}
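Each line of this output is the node name followed by one JSON audit event. A minimal Python sketch for pulling out the fields described above (ID, source IPs, verb, user, impersonated user, URI, and response code) follows. The field names are taken from the example output plus the impersonatedUser field of the Kubernetes audit event schema; the `summarize_audit_line` helper and the trimmed sample line are hypothetical.

```python
import json


def summarize_audit_line(line: str) -> dict:
    """Split '<node> {json}' and return the fields most useful for auditing."""
    node, _, payload = line.partition(" ")
    event = json.loads(payload)
    return {
        "node": node,
        "auditID": event["auditID"],  # unique ID for the request
        "sourceIPs": event.get("sourceIPs", []),
        "verb": event["verb"],
        "user": event["user"]["username"],
        "impersonatedUser": event.get("impersonatedUser", {}).get("username"),
        "requestURI": event["requestURI"],
        "code": event.get("responseStatus", {}).get("code"),
    }


sample = ('ip-10-0-140-97.ec2.internal {"kind":"Event","auditID":"6e96c88b-ab6f-44d2-b62e-d1413efd676b",'
          '"requestURI":"/api/v1/nodes/audit-2019-04-09T14-07-27.129.log","verb":"get",'
          '"user":{"username":"kube:admin"},"sourceIPs":["10.0.57.93"],'
          '"responseStatus":{"metadata":{},"status":"Failure","reason":"NotFound","code":404}}')
print(summarize_audit_line(sample))
```

Splitting only on the first space keeps any spaces inside the JSON payload, such as those in userAgent, intact.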

4.9.2. Configuring the API Audit Log level

You can configure the audit feature to set log level, retention policy, and the type of events to log.

Procedure

Set the audit log level:

Get the name of the API server Custom Resource (CR):

oc get APIServer

NAME      AGE
cluster   18h

Edit the API server CR:

oc edit APIServer cluster

You can set each of the two log level settings to one of the following values. The two settings can have different values:

- Normal. Normal is the default and provides normal working log information, including helpful notices for auditing or common operations. Similar to glog=2.

- Debug. Debug is for troubleshooting problems. It contains a greater quantity of notices than Normal, but less information than Trace. Common operations might be logged. Similar to glog=4.

- Trace. Trace is for troubleshooting problems when Debug is not verbose enough. It logs every function call as part of a common operation, including tracing execution of a query. Similar to glog=6.

- TraceAll. TraceAll is for troubleshooting at the level of API content decoding. It contains complete body content. In production clusters, this setting causes performance degradation and results in a significant number of logs. Similar to glog=8.


4.9.3. Viewing the API Audit Log

You can view the basic audit log.

Procedure

To view the basic audit log:

View the OpenShift Container Platform API server logs:

If necessary, get the node IP and name of the log you want to view:

View the OpenShift Container Platform API server log for a specific master node and timestamp, or view all the logs for that master:

oc adm node-logs <node-ip> <log-name> --path=openshift-apiserver/<log-name>

For example:

oc adm node-logs ip-10-0-140-97.ec2.internal audit-2019-04-08T13-09-01.227.log --path=openshift-apiserver/audit-2019-04-08T13-09-01.227.log

oc adm node-logs ip-10-0-140-97.ec2.internal audit.log --path=openshift-apiserver/audit.log

The output appears similar to the following:

ip-10-0-140-97.ec2.internal {"kind":"Event","apiVersion":"audit.k8s.io/v1beta1","metadata":{"creationTimestamp":"2019-04-09T18:52:03Z"},"level":"Metadata","timestamp":"2019-04-09T18:52:03Z","auditID":"9708b50d-8956-4c87-b9eb-a53ba054c13d","stage":"ResponseComplete","requestURI":"/","verb":"get","user":{"username":"system:anonymous","groups":["system:unauthenticated"]},"sourceIPs":["10.128.0.1"],"userAgent":"Go-http-client/2.0","responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2019-04-09T18:52:03.914638Z","stageTimestamp":"2019-04-09T18:52:03.915080Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"cluster-status-binding\" of ClusterRole \"cluster-status\" to Group \"system:unauthenticated\""}}

View the Kubernetes API server logs:

If necessary, get the node IP and name of the log you want to view:

View the Kubernetes API server log for a specific master node and timestamp, or view all the logs for that master:

oc adm node-logs <node-ip> <log-name> --path=kube-apiserver/<log-name>

For example:

oc adm node-logs ip-10-0-140-97.ec2.internal audit-2019-04-09T14-07-27.129.log --path=kube-apiserver/audit-2019-04-09T14-07-27.129.log

oc adm node-logs ip-10-0-170-165.ec2.internal audit.log --path=kube-apiserver/audit.log

The output appears similar to the following:

ip-10-0-140-97.ec2.internal {"kind":"Event","apiVersion":"audit.k8s.io/v1beta1","metadata":{"creationTimestamp":"2019-04-09T19:56:58Z"},"level":"Metadata","timestamp":"2019-04-09T19:56:58Z","auditID":"6e96c88b-ab6f-44d2-b62e-d1413efd676b","stage":"ResponseComplete","requestURI":"/api/v1/nodes/audit-2019-04-09T14-07-27.129.log","verb":"get","user":{"username":"kube:admin","groups":["system:cluster-admins","system:authenticated"],"extra":{"scopes.authorization.openshift.io":["user:full"]}},"sourceIPs":["10.0.57.93"],"userAgent":"oc/v1.13.4+b626c2fe1 (linux/amd64) kubernetes/ba88cb2","objectRef":{"resource":"nodes","name":"audit-2019-04-09T14-07-27.129.log","apiVersion":"v1"},"responseStatus":{"metadata":{},"status":"Failure","reason":"NotFound","code":404},"requestReceivedTimestamp":"2019-04-09T19:56:58.982157Z","stageTimestamp":"2019-04-09T19:56:58.985300Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"cluster-admins\" of ClusterRole \"cluster-admin\" to Group \"system:cluster-admins\""}}
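The two procedures differ only in the directory given to --path: openshift-apiserver/ for the OpenShift Container Platform API server and kube-apiserver/ for the Kubernetes API server. If you script log collection, the hypothetical `node_logs_cmd` helper below builds the argument list for either case. As an assumption, it passes only the node name positionally and selects the file with --path. The sample Kubernetes output above records a request for /api/v1/nodes/audit-2019-04-09T14-07-27.129.log that returned NotFound, which suggests that the extra log-name argument in the examples is looked up as a node name. The helper only constructs the command; running it, for example with subprocess.run, is left to you.

```python
import shlex
from typing import List

# --path prefixes used by the two procedures above.
COMPONENT_DIRS = {
    "openshift": "openshift-apiserver",
    "kubernetes": "kube-apiserver",
}


def node_logs_cmd(node: str, component: str, log_name: str = "audit.log") -> List[str]:
    """Build an 'oc adm node-logs' argument list for one API server audit log."""
    if component not in COMPONENT_DIRS:
        raise ValueError(f"component must be one of {sorted(COMPONENT_DIRS)}")
    # Only the node is positional; the log file is selected with --path.
    return ["oc", "adm", "node-logs", node, f"--path={COMPONENT_DIRS[component]}/{log_name}"]


cmd = node_logs_cmd("ip-10-0-140-97.ec2.internal", "kubernetes")
print(shlex.join(cmd))  # oc adm node-logs ip-10-0-140-97.ec2.internal --path=kube-apiserver/audit.log
# To run it: subprocess.run(cmd, check=True, capture_output=True, text=True)
```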