Chapter 23. Multiple networks

23.1. Understanding multiple networks

In Kubernetes, container networking is delegated to networking plugins that implement the Container Network Interface (CNI).

OpenShift Container Platform uses the Multus CNI plugin to allow chaining of CNI plugins. During cluster installation, you configure your default pod network. The default network handles all ordinary network traffic for the cluster. You can define an additional network based on the available CNI plugins and attach one or more of these networks to your pods. You can define more than one additional network for your cluster, depending on your needs. This gives you flexibility when you configure pods that deliver network functionality, such as switching or routing.

23.1.1. Usage scenarios for an additional network

You can use an additional network in situations where network isolation is needed, including data plane and control plane separation. Isolating network traffic is useful for the following performance and security reasons:

- Performance: You can send traffic on two different planes to manage how much traffic travels along each plane.
- Security: You can send sensitive traffic onto a network plane that is managed specifically for security considerations, and you can separate private data that must not be shared between tenants or customers.

All of the pods in the cluster still use the cluster-wide default network to maintain connectivity across the cluster. Every pod has an eth0 interface that is attached to the cluster-wide pod network. You can view the interfaces for a pod by using the oc exec -it <pod_name> -- ip a command. If you add additional network interfaces that use Multus CNI, they are named net1, net2, …, netN.

To attach additional network interfaces to a pod, you must create configurations that define how the interfaces are attached. You specify each interface by using a NetworkAttachmentDefinition custom resource (CR). A CNI configuration inside each of these CRs defines how that interface is created.

23.1.2. Additional networks in OpenShift Container Platform

OpenShift Container Platform provides the following CNI plugins for creating additional networks in your cluster:

- bridge: Configure a bridge-based additional network to allow pods on the same host to communicate with each other and the host.

- host-device: Configure a host-device additional network to allow pods access to a physical Ethernet network device on the host system.

- ipvlan: Configure an ipvlan-based additional network to allow pods on a host to communicate with other hosts and pods on those hosts, similar to a macvlan-based additional network. Unlike a macvlan-based additional network, each pod shares the same MAC address as the parent physical network interface.

- vlan: Configure a vlan-based additional network to allow VLAN-based network isolation and connectivity for pods.

- macvlan: Configure a macvlan-based additional network to allow pods on a host to communicate with other hosts and pods on those hosts by using a physical network interface. Each pod that is attached to a macvlan-based additional network is provided a unique MAC address.

- SR-IOV: Configure an SR-IOV based additional network to allow pods to attach to a virtual function (VF) interface on SR-IOV capable hardware on the host system.

23.2. Configuring an additional network

As a cluster administrator, you can configure an additional network for your cluster. The following network types are supported:

- Bridge
- Host device
- VLAN
- IPVLAN
- MACVLAN
- OVN-Kubernetes

23.2.1. Approaches to managing an additional network

You can manage the lifecycle of an additional network in OpenShift Container Platform by using one of two approaches: modifying the Cluster Network Operator (CNO) configuration or applying a YAML manifest. The two approaches are mutually exclusive, and you can use only one approach at a time to manage an additional network. For either approach, the additional network is managed by a Container Network Interface (CNI) plugin that you configure. The two approaches are summarized here:

- Modifying the Cluster Network Operator (CNO) configuration: Configuring additional networks through the CNO is only possible for cluster administrators. The CNO automatically creates and manages the NetworkAttachmentDefinition object. By using this approach, you can define NetworkAttachmentDefinition objects at install time through configuration of the install-config.
- Applying a YAML manifest: You can manage the additional network directly by creating a NetworkAttachmentDefinition object. Compared to modifying the CNO configuration, this approach gives you more granular control and flexibility when it comes to configuration.

When deploying OpenShift Container Platform nodes with multiple network interfaces on Red Hat OpenStack Platform (RHOSP) with OVN-Kubernetes, the DNS configuration of the secondary interface might take precedence over the DNS configuration of the primary interface. In this case, remove the DNS nameservers for the subnet ID that is attached to the secondary interface by running the following command:

$ openstack subnet set --dns-nameserver 0.0.0.0 <subnet_id>

23.2.2. IP address assignment for additional networks

For additional networks, IP addresses can be assigned using an IP Address Management (IPAM) CNI plugin, which supports various assignment methods, including Dynamic Host Configuration Protocol (DHCP) and static assignment.

The DHCP IPAM CNI plugin responsible for dynamic assignment of IP addresses operates with two distinct components:

- CNI Plugin: Responsible for integrating with the Kubernetes networking stack to request and release IP addresses.

- DHCP IPAM CNI Daemon: A listener for DHCP events that coordinates with existing DHCP servers in the environment to handle IP address assignment requests. This daemon is not a DHCP server itself.

For networks requiring type: dhcp in their IPAM configuration, ensure the following:

- A DHCP server is available and running in the environment. The DHCP server is external to the cluster and is expected to be part of the customer’s existing network infrastructure.

- The DHCP server is appropriately configured to serve IP addresses to the nodes.

In cases where a DHCP server is unavailable in the environment, it is recommended to use the Whereabouts IPAM CNI plugin instead. The Whereabouts CNI provides similar IP address management capabilities without the need for an external DHCP server.

Use the Whereabouts CNI plugin when there is no external DHCP server or where static IP address management is preferred. The Whereabouts plugin includes a reconciler daemon to manage stale IP address allocations.

A DHCP lease must be periodically renewed throughout the container’s lifetime, so a separate daemon, the DHCP IPAM CNI Daemon, is required. To deploy the DHCP IPAM CNI daemon, modify the Cluster Network Operator (CNO) configuration to trigger the deployment of this daemon as part of the additional network setup.

23.2.3. Configuration for an additional network attachment

An additional network is configured by using the NetworkAttachmentDefinition API in the k8s.cni.cncf.io API group.

Do not store any sensitive information or a secret in the NetworkAttachmentDefinition object, because this information is accessible to the project administrator.

The configuration for the API is described in the following table:

| Field | Type | Description |
|---|---|---|
| metadata.name | string | The name for the additional network. |
| metadata.namespace | string | The namespace that the object is associated with. |
| spec.config | string | The CNI plugin configuration in JSON format. |

23.2.3.1. Configuration of an additional network through the Cluster Network Operator

The configuration for an additional network attachment is specified as part of the Cluster Network Operator (CNO) configuration.

The following YAML describes the configuration parameters for managing an additional network with the CNO:

Cluster Network Operator configuration
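The layout of that configuration is sketched below. The numbered comments correspond to the callouts that follow; <name>, <namespace>, and the JSON body are placeholders that you replace with your own values, and the rest of the spec stays as it is.

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  # ... existing cluster network settings stay unchanged ...
  additionalNetworks: # 1
  - name: <name> # 2
    namespace: <namespace> # 3
    rawCNIConfig: |- # 4
      {
        ...
      }
    type: Raw
```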

- 1: An array of one or more additional network configurations.
- 2: The name for the additional network attachment that you are creating. The name must be unique within the specified namespace.
- 3: The namespace to create the network attachment in. If you do not specify a value, then the default namespace is used.
  Important: To prevent namespace issues for the OVN-Kubernetes network plugin, do not name your additional network attachment default, because this namespace is reserved for the default additional network attachment.
- 4: A CNI plugin configuration in JSON format.

23.2.3.2. Configuration of an additional network from a YAML manifest

The configuration for an additional network is specified from a YAML configuration file, such as in the following example:
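A minimal sketch of such a manifest follows. The <name>, <namespace>, and JSON body are placeholders that you replace; pods refer to the attachment by its metadata.name value.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: <name>            # the name for the additional network
  namespace: <namespace>  # the namespace the object is associated with
spec:
  config: |-              # the CNI plugin configuration in JSON format
    {
      ...
    }
```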

23.2.4. Configurations for additional network types

The specific configuration fields for additional networks are described in the following sections.

23.2.4.1. Configuration for a bridge additional network

The following object describes the configuration parameters for the bridge CNI plugin:

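| Field | Type | Description |
|---|---|---|
| cniVersion | string | The CNI specification version. The 0.3.1 value is required. |
| name | string | The value for the name parameter you provided previously for the CNO configuration. |
| type | string | The name of the CNI plugin to configure: bridge. |
| ipam | object | The configuration object for the IPAM CNI plugin. The plugin manages IP address assignment for the attachment definition. |
| bridge | string | Optional: Specify the name of the virtual bridge to use. If the bridge interface does not exist on the host, it is created. The default value is cni0. |
| ipMasq | boolean | Optional: Set to true to enable IP masquerading for traffic that leaves the virtual network. The source IP address for all traffic is rewritten to the bridge's IP address. If the bridge does not have an IP address, this setting has no effect. The default value is false. |
| isGateway | boolean | Optional: Set to true to assign an IP address to the bridge. The default value is false. |
| isDefaultGateway | boolean | Optional: Set to true to configure the bridge as the default gateway for the virtual network. The default value is false. If isDefaultGateway is set to true, then isGateway is also set to true automatically. |
| forceAddress | boolean | Optional: Set to true to allow assignment of a previously assigned IP address to the virtual bridge. When set to false, an error occurs if an IPv4 address or an IPv6 address from overlapping subnets is assigned to the virtual bridge. The default value is false. |
| hairpinMode | boolean | Optional: Set to true to allow the virtual bridge to send an Ethernet frame back through the virtual port it was received on. This mode is also known as reflective relay. The default value is false. |
| promiscMode | boolean | Optional: Set to true to enable promiscuous mode on the bridge. The default value is false. |
| vlan | integer | Optional: Specify a virtual LAN (VLAN) tag as an integer value. By default, no VLAN tag is assigned. |
| preserveDefaultVlan | boolean | Optional: Indicates whether the default VLAN must be preserved on the veth end connected to the bridge. The default value is true. |
| mtu | integer | Optional: Set the maximum transmission unit (MTU) to the specified value. The default value is automatically set by the kernel. |
| enabledad | boolean | Optional: Enables duplicate address detection for the container side veth. The default value is false. |
| macspoofchk | boolean | Optional: Enables MAC spoof check, limiting the traffic originating from the container to the MAC address of the interface. The default value is false. |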

The VLAN parameter configures the VLAN tag on the host end of the veth and also enables the vlan_filtering feature on the bridge interface.

To configure an uplink for an L2 network, you must allow the VLAN on the uplink interface by running the following command, where VLAN_ID is the VLAN tag and DEV is the name of the uplink interface:

$ bridge vlan add vid VLAN_ID dev DEV

23.2.4.1.1. bridge configuration example

The following example configures an additional network named bridge-net:
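A sketch of such a configuration, written as a NetworkAttachmentDefinition manifest. The VLAN tag 2, isGateway set to true, and DHCP address assignment are illustrative assumptions. Because the bridge field is omitted, the plugin's default bridge (cni0) is used, and because vlan is set, you might also need to allow VLAN 2 on the uplink interface as described above. DHCP assignment also requires an external DHCP server and the DHCP IPAM CNI daemon.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: bridge-net
spec:
  # bridge plugin: no "bridge" key, so the default bridge name applies;
  # "vlan" tags the host end of the veth and enables vlan_filtering on the bridge.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "bridge-net",
      "type": "bridge",
      "isGateway": true,
      "vlan": 2,
      "ipam": {
        "type": "dhcp"
      }
    }
```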

23.2.4.2. Configuration for a host device additional network

Specify your network device by setting only one of the following parameters: device, hwaddr, kernelpath, or pciBusID.

The following object describes the configuration parameters for the host-device CNI plugin:

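| Field | Type | Description |
|---|---|---|
| cniVersion | string | The CNI specification version. The 0.3.1 value is required. |
| name | string | The value for the name parameter you provided previously for the CNO configuration. |
| type | string | The name of the CNI plugin to configure: host-device. |
| device | string | Optional: The name of the device, such as eth0. |
| hwaddr | string | Optional: The device hardware MAC address. |
| kernelpath | string | Optional: The Linux kernel device path, such as /sys/devices/pci0000:00/0000:00:1f.6. |
| pciBusID | string | Optional: The PCI address of the network device, such as 0000:00:1f.6. |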

23.2.4.2.1. host-device configuration example

The following example configures an additional network named hostdev-net:
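A minimal sketch, assuming the host has a spare interface named eth1 that you want to move into the pod. Exactly one device selector (device) is set, and no ipam object is included, so this sketch does not request IPAM address assignment.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: hostdev-net
spec:
  # Set exactly one of device, hwaddr, kernelpath, or pciBusID.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "hostdev-net",
      "type": "host-device",
      "device": "eth1"
    }
```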

23.2.4.3. Configuration for a VLAN additional network

The following object describes the configuration parameters for the VLAN CNI plugin:

| Field | Type | Description |
|---|---|---|
| cniVersion | string | The CNI specification version. The 0.3.1 value is required. |
| name | string | The value for the name parameter you provided previously for the CNO configuration. |
| type | string | The name of the CNI plugin to configure: vlan. |
| master | string | The Ethernet interface to associate with the network attachment. If a master is not specified, the interface for the default network route is used. |
| vlanId | integer | The ID of the VLAN. |
| ipam | object | The configuration object for the IPAM CNI plugin. The plugin manages IP address assignment for the attachment definition. |
| mtu | integer | Optional: Set the maximum transmission unit (MTU) to the specified value. The default value is automatically set by the kernel. |
| dns | object | Optional: DNS information to return, for example, a priority-ordered list of DNS nameservers. |
| linkInContainer | boolean | Optional: Specifies if the master interface is in the container network namespace or the main network namespace. |

23.2.4.3.1. vlan configuration example

The following example configures an additional network named vlan-net:
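A sketch of a VLAN attachment, assuming the parent interface is eth0 and the VLAN ID is 5. It uses the Whereabouts IPAM plugin with an example range; the MTU and DNS nameserver are also illustrative values.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: vlan-net
spec:
  # VLAN 5 on parent eth0 in the main network namespace; addresses come from the Whereabouts range.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "vlan-net",
      "type": "vlan",
      "master": "eth0",
      "vlanId": 5,
      "mtu": 1500,
      "linkInContainer": false,
      "ipam": {
        "type": "whereabouts",
        "range": "10.1.1.0/24"
      },
      "dns": {
        "nameservers": ["10.1.1.1"]
      }
    }
```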

23.2.4.4. Configuration for an IPVLAN additional network

The following object describes the configuration parameters for the IPVLAN CNI plugin:

| Field | Type | Description |
|---|---|---|
| cniVersion | string | The CNI specification version. The 0.3.1 value is required. |
| name | string | The value for the name parameter you provided previously for the CNO configuration. |
| type | string | The name of the CNI plugin to configure: ipvlan. |
| ipam | object | The configuration object for the IPAM CNI plugin. The plugin manages IP address assignment for the attachment definition. This is required unless the plugin is chained. |
| mode | string | Optional: The operating mode for the virtual network. The value must be l2, l3, or l3s. The default value is l2. |
| master | string | Optional: The Ethernet interface to associate with the network attachment. If a master is not specified, the interface for the default network route is used. |
| mtu | integer | Optional: Set the maximum transmission unit (MTU) to the specified value. The default value is automatically set by the kernel. |

- The ipvlan object does not allow virtual interfaces to communicate with the master interface. Therefore, the container cannot reach the host by using the ipvlan interface. Be sure that the container joins a network that provides connectivity to the host, such as a network supporting the Precision Time Protocol (PTP).
- A single master interface cannot simultaneously be configured to use both macvlan and ipvlan.
- For IP allocation schemes that cannot be interface agnostic, the ipvlan plugin can be chained with an earlier plugin that handles this logic. If the master is omitted, then the previous result must contain a single interface name for the ipvlan plugin to enslave. If ipam is omitted, then the previous result is used to configure the ipvlan interface.

23.2.4.4.1. ipvlan configuration example

The following example configures an additional network named ipvlan-net:
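A sketch of an IPVLAN attachment in l3 mode on an assumed parent interface eth1. The static address is an example value; because every pod that uses this attachment would receive the same address, this form suits a single pod.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: ipvlan-net
spec:
  # ipvlan shares the parent's MAC address; l3 mode routes between sub-interfaces.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "ipvlan-net",
      "type": "ipvlan",
      "master": "eth1",
      "mode": "l3",
      "ipam": {
        "type": "static",
        "addresses": [
          { "address": "192.168.10.10/24" }
        ]
      }
    }
```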

23.2.4.5. Configuration for a MACVLAN additional network

The following object describes the configuration parameters for the MAC Virtual LAN (MACVLAN) Container Network Interface (CNI) plugin:

| Field | Type | Description |
|---|---|---|
| cniVersion | string | The CNI specification version. The 0.3.1 value is required. |
| name | string | The value for the name parameter you provided previously for the CNO configuration. |
| type | string | The name of the CNI plugin to configure: macvlan. |
| ipam | object | The configuration object for the IPAM CNI plugin. The plugin manages IP address assignment for the attachment definition. |
| mode | string | Optional: Configures traffic visibility on the virtual network. Must be either bridge, passthru, private, or vepa. If a value is not provided, the default value is bridge. |
| master | string | Optional: The host network interface to associate with the newly created macvlan interface. If a value is not specified, then the default route interface is used. |
| mtu | integer | Optional: Set the maximum transmission unit (MTU) to the specified value. The default value is automatically set by the kernel. |

If you specify the master key for the plugin configuration, use a different physical network interface than the one that is associated with your primary network plugin to avoid possible conflicts.

23.2.4.5.1. MACVLAN configuration example

The following example configures an additional network named macvlan-net:
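A sketch of a MACVLAN attachment in bridge mode. The parent interface eth1 is an assumption (per the note above, choose an interface that your primary network plugin does not use), and DHCP assignment requires an external DHCP server and the DHCP IPAM CNI daemon.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: macvlan-net
spec:
  # Each pod gets its own MAC address on top of eth1.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "macvlan-net",
      "type": "macvlan",
      "master": "eth1",
      "mode": "bridge",
      "ipam": {
        "type": "dhcp"
      }
    }
```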

23.2.4.6. Configuration for an OVN-Kubernetes additional network

The Red Hat OpenShift Networking OVN-Kubernetes network plugin allows the configuration of secondary network interfaces for pods. To configure secondary network interfaces, you must define the configurations in the NetworkAttachmentDefinition custom resource definition (CRD).

Configuration for an OVN-Kubernetes additional network is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Pod and multi-network policy creation might remain in a pending state until the OVN-Kubernetes control plane agent in the nodes processes the associated network-attachment-definition CR.

The following sections provide example configurations for each of the topologies that OVN-Kubernetes currently allows for secondary networks.

Network names must be unique. For example, creating multiple NetworkAttachmentDefinition CRs with different configurations that reference the same network is unsupported.

23.2.4.6.1. OVN-Kubernetes network plugin JSON configuration table

The following table describes the configuration parameters for the OVN-Kubernetes CNI network plugin:

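| Field | Type | Description |
|---|---|---|
| cniVersion | string | The CNI specification version. The required value is 0.3.1. |
| name | string | The name of the network. These networks are not namespaced. For example, you can have a network named l2-network referenced from two different NetworkAttachmentDefinition objects that exist in two different namespaces. This ensures that pods using the NetworkAttachmentDefinition objects in their own namespaces can communicate over the same secondary network. However, those NetworkAttachmentDefinition objects must also share the same network-specific parameters, such as topology, subnets, mtu, and excludeSubnets. |
| type | string | The name of the CNI plugin to configure. The required value is ovn-k8s-cni-overlay. |
| topology | string | The topological configuration for the network. The required value is layer2. |
| subnets | string | The subnet to use for the network across the cluster. For layer2 topology deployments, IPv6 and dual-stack subnets are supported. When omitted, the logical switch implementing the network only provides layer 2 communication, and users must configure IPs for the pods. Port security only prevents MAC spoofing. |
| mtu | integer | The maximum transmission unit (MTU). If you do not set a value, a default value is set automatically. |
| netAttachDefName | string | The metadata namespace and name of the network attachment definition object where this configuration is included. For example, if this configuration is defined in a NetworkAttachmentDefinition in namespace ns1 named l2-network, set this value to ns1/l2-network. |
| excludeSubnets | string | A comma-separated list of CIDRs and IPs. IPs are removed from the assignable IP pool, and are never passed to the pods. |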

23.2.4.6.2. Configuration for a switched topology

The switched (layer 2) topology networks interconnect the workloads through a cluster-wide logical switch. This configuration can be used for IPv6 and dual-stack deployments.

Layer 2 switched topology networks only allow for the transfer of data packets between pods within a cluster.

The following NetworkAttachmentDefinition custom resource definition (CRD) YAML describes the fields needed to configure a switched secondary network.
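A sketch of a switched secondary network in namespace ns1. The network name l2-network, the subnet, the MTU, and the excluded range are example values; netAttachDefName must match the namespace and name of the object itself.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: l2-network
  namespace: ns1
spec:
  # One cluster-wide logical switch; 10.100.200.0/29 is withheld from pod assignment.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "l2-network",
      "type": "ovn-k8s-cni-overlay",
      "topology": "layer2",
      "subnets": "10.100.200.0/24",
      "mtu": 1300,
      "netAttachDefName": "ns1/l2-network",
      "excludeSubnets": "10.100.200.0/29"
    }
```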

23.2.4.6.3. Configuring pods for additional networks

You must specify the secondary network attachments through the k8s.v1.cni.cncf.io/networks annotation.

The following example provisions a pod with two secondary attachments, one for each of the attachment configurations presented in this guide.
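A sketch of such a pod. It assumes that NetworkAttachmentDefinition objects named l2-network and l2-network-b (a hypothetical second attachment with its own unique network name) exist in namespace ns1. The agnhost test image and its pause command are only a convenient long-running workload; any image works.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: tinypod
  namespace: ns1
  annotations:
    # Comma-separated attachment names; each one adds an interface (net1, net2, ...).
    k8s.v1.cni.cncf.io/networks: l2-network,l2-network-b
spec:
  containers:
  - name: agnhost-container
    image: registry.k8s.io/e2e-test-images/agnhost:2.36
    args:
    - pause
```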

23.2.4.6.4. Configuring pods with a static IP address

The following example provisions a pod with a static IP address.

- You can only specify the IP address for a pod’s secondary network attachment for layer 2 attachments.

- Specifying a static IP address for the pod is only possible when the attachment configuration does not feature subnets.
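A sketch of a pod that requests a static address on its secondary interface. It assumes an l2-network attachment in namespace ns1 whose configuration omits subnets; the MAC address, the interface name myiface1, and the address 192.0.2.20/24 are example values.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: tinypod
  namespace: ns1
  annotations:
    # JSON list of network selection elements; "ips" requests a static address.
    k8s.v1.cni.cncf.io/networks: |-
      [
        {
          "name": "l2-network",
          "mac": "02:03:04:05:06:07",
          "interface": "myiface1",
          "ips": ["192.0.2.20/24"]
        }
      ]
spec:
  containers:
  - name: agnhost-container
    image: registry.k8s.io/e2e-test-images/agnhost:2.36
    args:
    - pause
```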

23.2.5. Configuration of IP address assignment for an additional network

The IP address management (IPAM) Container Network Interface (CNI) plugin provides IP addresses for other CNI plugins.

You can use the following IP address assignment types:

- Static assignment.

- Dynamic assignment through a DHCP server. The DHCP server you specify must be reachable from the additional network.

- Dynamic assignment through the Whereabouts IPAM CNI plugin.

23.2.5.1. Static IP address assignment configuration

The following table describes the configuration for static IP address assignment:

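| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value static is required. |
| addresses | array | An array of objects specifying IP addresses to assign to the virtual interface. Both IPv4 and IPv6 IP addresses are supported. |
| routes | array | An array of objects specifying routes to configure inside the pod. |
| dns | object | Optional: An object specifying the DNS configuration. |

The addresses array requires objects with the following fields:

| Field | Type | Description |
|---|---|---|
| address | string | An IP address and network prefix that you specify. For example, if you specify 10.10.21.10/24, then the additional network is assigned an IP address of 10.10.21.10 and the netmask is 255.255.255.0. |
| gateway | string | The default gateway to route egress network traffic to. |

The routes array requires objects with the following fields:

| Field | Type | Description |
|---|---|---|
| dst | string | The IP address range in CIDR format, such as 192.168.17.0/24 or 0.0.0.0/0 for the default route. |
| gw | string | The gateway where network traffic is routed. |

The dns object accepts the following fields:

| Field | Type | Description |
|---|---|---|
| nameservers | array | An array of one or more IP addresses to send DNS queries to. |
| domain | string | The default domain to append to a hostname. For example, if the domain is set to example.com, a DNS lookup query for example-host is rewritten as example-host.example.com. |
| search | array | An array of domain names to append to an unqualified hostname, such as example-host, during a DNS lookup query. |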

Static IP address assignment configuration example
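A sketch that combines the fields from the preceding tables in a MACVLAN attachment. The parent interface eth1 and all addresses and domains are example values; because static assignment gives every pod the same address, this form suits a single pod.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: static-net
spec:
  # ipam.dns is a single object, while addresses and routes are arrays of objects.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "static-net",
      "type": "macvlan",
      "master": "eth1",
      "ipam": {
        "type": "static",
        "addresses": [
          { "address": "192.168.1.7/24", "gateway": "192.168.1.1" }
        ],
        "routes": [
          { "dst": "0.0.0.0/0", "gw": "192.168.1.1" }
        ],
        "dns": {
          "nameservers": ["192.168.1.1"],
          "domain": "example.com",
          "search": ["example.com"]
        }
      }
    }
```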

23.2.5.2. Dynamic IP address (DHCP) assignment configuration

The following JSON describes the configuration for dynamic IP address assignment with DHCP.

A pod obtains its original DHCP lease when it is created. The lease must be periodically renewed by the DHCP IPAM CNI daemon running on the cluster.

To trigger the deployment of the DHCP IPAM CNI daemon, you must create a shim network attachment by editing the Cluster Network Operator configuration, as in the following example:

Example shim network attachment definition
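The shim is a bridge-type attachment whose purpose is to make the CNO deploy the DHCP IPAM CNI daemon. A sketch of the relevant part of the CNO configuration, with dhcp-shim as an example name:

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  # ... existing settings stay unchanged ...
  additionalNetworks:
  - name: dhcp-shim
    namespace: default
    type: Raw
    rawCNIConfig: |-
      {
        "name": "dhcp-shim",
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": {
          "type": "dhcp"
        }
      }
```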

| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value dhcp is required. |

Dynamic IP address (DHCP) assignment configuration example

{
  "ipam": {
    "type": "dhcp"
  }
}

23.2.5.3. Dynamic IP address assignment configuration with Whereabouts

The Whereabouts CNI plugin allows the dynamic assignment of an IP address to an additional network without the use of a DHCP server.

The following table describes the configuration for dynamic IP address assignment with Whereabouts:

| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value whereabouts is required. |
| range | string | An IP address and range in CIDR notation. IP addresses are assigned from within this range of addresses. |
| exclude | array | Optional: A list of zero or more IP addresses and ranges in CIDR notation. IP addresses within an excluded address range are not assigned. |

Dynamic IP address assignment configuration example that uses Whereabouts
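A sketch of a MACVLAN attachment that uses Whereabouts. The parent interface eth1 and the ranges are example values: addresses come from 192.0.2.192/27, and 192.0.2.192 through 192.0.2.196 are never assigned. If you create this attachment from a YAML manifest rather than through the CNO, the whereabouts-reconciler daemon set is not created automatically.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: whereabouts-net
spec:
  # 192.0.2.192/30 excludes .192-.195 and 192.0.2.196/32 excludes .196.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "whereabouts-net",
      "type": "macvlan",
      "master": "eth1",
      "ipam": {
        "type": "whereabouts",
        "range": "192.0.2.192/27",
        "exclude": [
          "192.0.2.192/30",
          "192.0.2.196/32"
        ]
      }
    }
```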

23.2.5.4. Creating a whereabouts-reconciler daemon set

The Whereabouts reconciler is responsible for managing dynamic IP address assignments for the pods within a cluster by using the Whereabouts IP Address Management (IPAM) solution. It ensures that each pod gets a unique IP address from the specified IP address range. It also handles IP address releases when pods are deleted or scaled down.

You can also use a NetworkAttachmentDefinition custom resource (CR) for dynamic IP address assignment.

The whereabouts-reconciler daemon set is automatically created when you configure an additional network through the Cluster Network Operator. It is not automatically created when you configure an additional network from a YAML manifest.

To trigger the deployment of the whereabouts-reconciler daemon set, you must manually create a whereabouts-shim network attachment by editing the Cluster Network Operator custom resource (CR) file.

Use the following procedure to deploy the whereabouts-reconciler daemon set.

Procedure

1. Edit the Network.operator.openshift.io custom resource (CR) by running the following command:

$ oc edit network.operator.openshift.io cluster

2. Include a whereabouts-shim network attachment in the additionalNetworks section within the spec definition of the CR.
3. Save the file and exit the text editor.
4. Verify that the whereabouts-reconciler daemon set deployed successfully by running the following command:

$ oc get all -n openshift-multus | grep whereabouts-reconciler
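The additionalNetworks entry referenced in the procedure above might look like the following sketch, which follows the same shim pattern as the DHCP example; other fields under spec stay unchanged.

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  # ... existing settings stay unchanged ...
  additionalNetworks:
  - name: whereabouts-shim
    namespace: default
    type: Raw
    rawCNIConfig: |-
      {
        "name": "whereabouts-shim",
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": {
          "type": "whereabouts"
        }
      }
```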

23.2.5.5. Configuring the Whereabouts IP reconciler schedule

The Whereabouts IPAM CNI plugin runs the IP reconciler daily. This process cleans up any stranded IP allocations that might result in exhausting IPs and therefore prevent new pods from getting an IP allocated to them.

Use this procedure to change the frequency at which the IP reconciler runs.

Prerequisites

- You installed the OpenShift CLI (oc).
- You have access to the cluster as a user with the cluster-admin role.
- You have deployed the whereabouts-reconciler daemon set, and the whereabouts-reconciler pods are up and running.

Procedure

1. Run the following command to create a ConfigMap object named whereabouts-config in the openshift-multus namespace with a specific cron expression for the IP reconciler:

$ oc create configmap whereabouts-config -n openshift-multus --from-literal=reconciler_cron_expression="*/15 * * * *"

This cron expression indicates that the IP reconciler runs every 15 minutes. Adjust the expression based on your specific requirements.

Note: The whereabouts-reconciler daemon set can only consume a cron expression pattern that includes five fields. A sixth field, which is used to denote seconds, is currently not supported.

2. Retrieve information about resources related to the whereabouts-reconciler daemon set and pods within the openshift-multus namespace by running the following command:

$ oc get all -n openshift-multus | grep whereabouts-reconciler

3. Run the following command to verify that the whereabouts-reconciler pod runs the IP reconciler with the configured interval. Replace whereabouts-reconciler-2p7hw with the name of a pod from the previous output.

$ oc -n openshift-multus logs whereabouts-reconciler-2p7hw
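If you manage cluster configuration declaratively, the ConfigMap that the oc create configmap command above produces is equivalent to the following manifest, which you can apply with oc apply -f. This sketch reuses only the name, namespace, key, and value from that command.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: whereabouts-config
  namespace: openshift-multus
data:
  # Five-field cron expression: minute, hour, day of month, month, day of week.
  reconciler_cron_expression: "*/15 * * * *"
```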

23.2.6. Creating an additional network attachment with the Cluster Network Operator

The Cluster Network Operator (CNO) manages additional network definitions. When you specify an additional network to create, the CNO creates the NetworkAttachmentDefinition object automatically.

Do not edit the NetworkAttachmentDefinition objects that the Cluster Network Operator manages. Doing so might disrupt network traffic on your additional network.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

1. Optional: Create the namespace for the additional networks:

$ oc create namespace <namespace_name>

2. To edit the CNO configuration, enter the following command:

$ oc edit networks.operator.openshift.io cluster

3. Modify the CR by adding the configuration for the additional network that you are creating.
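For example, the following sketch adds an IPVLAN network named test-network-1, the name that appears in the example output of the verification step. The parent interface eth1, the l2 mode, and the static address are illustrative assumptions; replace <namespace> with your namespace.

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  # ... existing settings stay unchanged ...
  additionalNetworks:
  - name: test-network-1
    namespace: <namespace>
    type: Raw
    rawCNIConfig: |-
      {
        "cniVersion": "0.3.1",
        "name": "test-network-1",
        "type": "ipvlan",
        "master": "eth1",
        "mode": "l2",
        "ipam": {
          "type": "static",
          "addresses": [
            { "address": "192.168.1.23/24" }
          ]
        }
      }
```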

4. Save your changes and quit the text editor to commit your changes.

Verification

- Confirm that the CNO created the NetworkAttachmentDefinition object by running the following command. There might be a delay before the CNO creates the object.

$ oc get network-attachment-definitions -n <namespace>

where <namespace> specifies the namespace for the network attachment that you added to the CNO configuration.

Example output

NAME             AGE
test-network-1   14m

23.2.7. Creating an additional network attachment by applying a YAML manifest

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

1. Create a YAML file with your additional network configuration.
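For example, a manifest for a host-device network might look like the following sketch. The name next-net, the host interface eth1, and DHCP address assignment are illustrative assumptions.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: next-net
spec:
  # Moves host interface eth1 into the pod and requests an address over DHCP.
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "next-net",
      "type": "host-device",
      "device": "eth1",
      "ipam": {
        "type": "dhcp"
      }
    }
```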

2. To create the additional network, enter the following command:

$ oc apply -f <file>.yaml

where <file> specifies the name of the file containing the YAML manifest.

23.3. About virtual routing and forwarding

23.3.1. About virtual routing and forwarding

Virtual routing and forwarding (VRF) devices combined with IP rules provide the ability to create virtual routing and forwarding domains. VRF reduces the number of permissions needed by cloud-native network functions (CNFs) and provides increased visibility of the network topology of secondary networks. VRF is used to provide multi-tenancy functionality, for example, where each tenant has its own unique routing tables and requires different default gateways.

Processes can bind a socket to the VRF device. Packets through the bound socket use the routing table associated with the VRF device. An important feature of VRF is that it impacts only OSI model layer 3 traffic and above, so L2 tools, such as LLDP, are not affected. This allows higher priority IP rules, such as policy-based routing, to take precedence over the VRF device rules directing specific traffic.
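As an illustration, a VRF is typically attached by chaining the CNI VRF plugin after the plugin that creates the interface. The following sketch assumes a MACVLAN interface on eth1 with an example static address; vrf-1 and routing table 1001 are example values, and the VRF device is created in the pod if it does not already exist.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: macvlan-vrf
spec:
  # Plugin chain: macvlan creates the interface, then vrf places it in VRF "vrf-1".
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "macvlan-vrf",
      "plugins": [
        {
          "type": "macvlan",
          "master": "eth1",
          "ipam": {
            "type": "static",
            "addresses": [
              { "address": "192.168.1.23/24" }
            ]
          }
        },
        {
          "type": "vrf",
          "vrfname": "vrf-1",
          "table": 1001
        }
      ]
    }
```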

23.3.1.1. Benefits of secondary networks for pods for telecommunications operators

In telecommunications use cases, each CNF can potentially be connected to multiple different networks sharing the same address space. These secondary networks can potentially conflict with the cluster's main network CIDR. Using the CNI VRF plugin, network functions can be connected to different customers' infrastructure using the same IP address, keeping different customers isolated. These IP addresses can overlap with the OpenShift Container Platform IP address space. The CNI VRF plugin also reduces the number of permissions needed by CNFs and increases the visibility of network topologies of secondary networks.

23.4. Configuring multi-network policy

As a cluster administrator, you can configure a multi-network policy for additional networks. You can specify a multi-network policy for SR-IOV and macvlan additional networks. Macvlan additional networks are fully supported. Other types of additional networks, such as ipvlan, are not supported.

Support for configuring multi-network policies for SR-IOV additional networks is a Technology Preview feature and is only supported with kernel network interface cards (NICs). SR-IOV is not supported for Data Plane Development Kit (DPDK) applications.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Configured network policies are ignored in IPv6 networks.

23.4.1. Differences between multi-network policy and network policy

Although the MultiNetworkPolicy API implements the NetworkPolicy API, there are several important differences:

- You must use the MultiNetworkPolicy API:

  apiVersion: k8s.cni.cncf.io/v1beta1
  kind: MultiNetworkPolicy

- You must use the multi-networkpolicy resource name when using the CLI to interact with multi-network policies. For example, you can view a multi-network policy object with the oc get multi-networkpolicy <name> command, where <name> is the name of a multi-network policy.

- You can use the k8s.v1.cni.cncf.io/policy-for annotation on a MultiNetworkPolicy object to point to a NetworkAttachmentDefinition (NAD) custom resource (CR). The NAD CR defines the network to which the policy applies.

  Example multi-network policy that includes the k8s.v1.cni.cncf.io/policy-for annotation

  apiVersion: k8s.cni.cncf.io/v1beta1
  kind: MultiNetworkPolicy
  metadata:
    annotations:
      k8s.v1.cni.cncf.io/policy-for: <namespace_name>/<network_name>

  where:

  <namespace_name> specifies the namespace name.
  <network_name> specifies the name of a network attachment definition.

23.4.2. Enabling multi-network policy for the cluster

As a cluster administrator, you can enable multi-network policy support on your cluster.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in to the cluster with a user with cluster-admin privileges.

Procedure

Create the multinetwork-enable-patch.yaml file, which sets the useMultiNetworkPolicy field of the network.operator.openshift.io cluster object to true.

Configure the cluster to enable multi-network policy:

$ oc patch network.operator.openshift.io cluster --type=merge --patch-file=multinetwork-enable-patch.yaml

Example output

network.operator.openshift.io/cluster patched

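The procedure does not show the contents of multinetwork-enable-patch.yaml. A minimal sketch of that merge patch, assuming it only needs to set the useMultiNetworkPolicy field in the spec of the cluster network operator configuration:

```yaml
# multinetwork-enable-patch.yaml: merge patch applied to network.operator.openshift.io/cluster
spec:
  # Turns on MultiNetworkPolicy enforcement for additional networks
  useMultiNetworkPolicy: true
```

Because the patch is applied with --type=merge, other fields of the cluster network configuration are left as they are.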

23.4.3. Working with multi-network policy

As a cluster administrator, you can create, edit, view, and delete multi-network policies.

23.4.3.1. Prerequisites

- You have enabled multi-network policy support for your cluster.

23.4.3.2. Creating a multi-network policy using the CLI

To define granular rules describing ingress or egress network traffic allowed for namespaces in your cluster, you can create a multi-network policy.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace that the multi-network policy applies to.

Procedure

Create a policy rule:

Create a <policy_name>.yaml file:

$ touch <policy_name>.yaml

where:

<policy_name>- Specifies the multi-network policy file name.

Define a multi-network policy in the file that you just created, such as in the following examples:

Deny ingress from all pods in all namespaces

This is a fundamental policy, blocking all cross-pod networking other than cross-pod traffic allowed by the configuration of other Network Policies.
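The YAML for this example is not reproduced here. A minimal sketch, assuming the MultiNetworkPolicy spec mirrors the NetworkPolicy spec; the name deny-by-default is chosen to match the example output later in this procedure:

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: deny-by-default
  annotations:
    # Network attachment definition whose traffic this policy controls
    k8s.v1.cni.cncf.io/policy-for: <network_name>
spec:
  # An empty podSelector selects every pod in the namespace
  podSelector: {}
  policyTypes:
  - Ingress
  # No ingress rules: all incoming traffic on this network is denied
  ingress: []
```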

where:

<network_name>- Specifies the name of a network attachment definition.

Allow ingress from all pods in the same namespace
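A minimal sketch of this policy, assuming NetworkPolicy selector semantics; the name allow-same-namespace is hypothetical:

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: allow-same-namespace
  annotations:
    k8s.v1.cni.cncf.io/policy-for: <network_name>
spec:
  # Applies to every pod in the namespace where the policy is created
  podSelector: {}
  ingress:
  - from:
    # An empty podSelector without a namespaceSelector matches all pods in the same namespace
    - podSelector: {}
```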

where:

<network_name>- Specifies the name of a network attachment definition.

Allow ingress traffic to one pod from a particular namespace
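A minimal sketch of such a policy, assuming the target pod carries the label pod: pod-a and that namespace-y is matched through the kubernetes.io/metadata.name label, which Kubernetes sets automatically on every namespace. The policy name allow-traffic-pod is hypothetical.

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: allow-traffic-pod
  annotations:
    k8s.v1.cni.cncf.io/policy-for: <network_name>
spec:
  # Selects only the pod labeled pod: pod-a
  podSelector:
    matchLabels:
      pod: pod-a
  policyTypes:
  - Ingress
  ingress:
  - from:
    # Admits traffic from any pod in namespace-y
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: namespace-y
```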

This policy allows traffic to pods labelled pod-a from pods running in namespace-y.

where:

<network_name>- Specifies the name of a network attachment definition.

Restrict traffic to a service

When applied, this policy ensures that every pod with both labels app=bookstore and role=api can only be accessed by pods with the label app=bookstore. In this example, the application could be a REST API server, marked with the labels app=bookstore and role=api.

This example addresses the following use cases:

- Restricting the traffic to a service to only the other microservices that need to use it.

- Restricting the connections to a database to only permit the application using it.
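A minimal sketch of this policy, assuming NetworkPolicy selector semantics; the name api-allow is hypothetical:

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: api-allow
  annotations:
    k8s.v1.cni.cncf.io/policy-for: <network_name>
spec:
  # Protects pods that carry both labels
  podSelector:
    matchLabels:
      app: bookstore
      role: api
  ingress:
  - from:
    # Only pods labeled app=bookstore in the same namespace may connect
    - podSelector:
        matchLabels:
          app: bookstore
```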

where:

<network_name>- Specifies the name of a network attachment definition.

To create the multi-network policy object, enter the following command:

$ oc apply -f <policy_name>.yaml -n <namespace>

where:

<policy_name>- Specifies the multi-network policy file name.

<namespace>- Optional: Specifies the namespace if the object is defined in a different namespace than the current namespace.

Example output

multinetworkpolicy.k8s.cni.cncf.io/deny-by-default created


If you log in to the web console with cluster-admin privileges, you have a choice of creating a network policy in any namespace in the cluster directly in YAML or from a form in the web console.

23.4.3.3. Editing a multi-network policy

You can edit a multi-network policy in a namespace.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace where the multi-network policy exists.

Procedure

Optional: To list the multi-network policy objects in a namespace, enter the following command:

$ oc get multi-networkpolicy -n <namespace>

where:

<namespace>- Optional: Specifies the namespace if the object is defined in a different namespace than the current namespace.

Edit the multi-network policy object.

If you saved the multi-network policy definition in a file, edit the file and make any necessary changes, and then enter the following command.

$ oc apply -n <namespace> -f <policy_file>.yaml

where:

<namespace>- Optional: Specifies the namespace if the object is defined in a different namespace than the current namespace.

<policy_file>- Specifies the name of the file containing the network policy.

If you need to update the multi-network policy object directly, enter the following command:

$ oc edit multi-networkpolicy <policy_name> -n <namespace>

where:

<policy_name>- Specifies the name of the network policy.

<namespace>- Optional: Specifies the namespace if the object is defined in a different namespace than the current namespace.

Confirm that the multi-network policy object is updated.

$ oc describe multi-networkpolicy <policy_name> -n <namespace>

where:

<policy_name>- Specifies the name of the multi-network policy.

<namespace>- Optional: Specifies the namespace if the object is defined in a different namespace than the current namespace.

If you log in to the web console with cluster-admin privileges, you have a choice of editing a network policy in any namespace in the cluster directly in YAML or from the policy in the web console through the Actions menu.

23.4.3.4. Viewing multi-network policies using the CLI

You can examine the multi-network policies in a namespace.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace where the multi-network policy exists.

Procedure

List multi-network policies in a namespace:

To view multi-network policy objects defined in a namespace, enter the following command:

$ oc get multi-networkpolicy

Optional: To examine a specific multi-network policy, enter the following command:

$ oc describe multi-networkpolicy <policy_name> -n <namespace>

where:

<policy_name>- Specifies the name of the multi-network policy to inspect.

<namespace>- Optional: Specifies the namespace if the object is defined in a different namespace than the current namespace.

If you log in to the web console with cluster-admin privileges, you have a choice of viewing a network policy in any namespace in the cluster directly in YAML or from a form in the web console.

23.4.3.5. Deleting a multi-network policy using the CLI

You can delete a multi-network policy in a namespace.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace where the multi-network policy exists.

Procedure

To delete a multi-network policy object, enter the following command:

$ oc delete multi-networkpolicy <policy_name> -n <namespace>

where:

<policy_name>- Specifies the name of the multi-network policy.

<namespace>- Optional: Specifies the namespace if the object is defined in a different namespace than the current namespace.

Example output

multinetworkpolicy.k8s.cni.cncf.io/default-deny deleted


If you log in to the web console with cluster-admin privileges, you have a choice of deleting a network policy in any namespace in the cluster directly in YAML or from the policy in the web console through the Actions menu.

23.4.3.6. Creating a default deny all multi-network policy

This is a fundamental policy, blocking all cross-pod networking other than network traffic allowed by the configuration of other deployed network policies. This procedure enforces a deny-by-default policy.

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace that the multi-network policy applies to.

Procedure

Define a deny-by-default policy that denies ingress from all pods in all namespaces, and save the YAML in the deny-by-default.yaml file.
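A sketch of deny-by-default.yaml that lines up with the numbered callouts, assuming <network_name> is the network attachment definition to protect:

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: deny-by-default
  namespace: default                               # 1
  annotations:
    k8s.v1.cni.cncf.io/policy-for: <network_name>  # 2
spec:
  podSelector: {}                                  # 3
  policyTypes:
  - Ingress
  ingress: []                                      # 4
```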

1. namespace: default deploys this policy to the default namespace.

2. network_name specifies the name of a network attachment definition.

3. podSelector: is empty, which means that it matches all pods. Therefore, the policy applies to all pods in the default namespace.

4. There are no ingress rules specified. As a result, incoming traffic to all pods is dropped.

Apply the policy by entering the following command:

$ oc apply -f deny-by-default.yaml

Example output

multinetworkpolicy.k8s.cni.cncf.io/deny-by-default created


23.4.3.7. Creating a multi-network policy to allow traffic from external clients

With the deny-by-default policy in place, you can proceed to configure a policy that allows traffic from external clients to a pod with the label app=web.

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Follow this procedure to configure a policy that allows external clients to access the pod from the public Internet, either directly or through a load balancer. Traffic is only allowed to a pod with the label app=web.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace that the multi-network policy applies to.

Procedure

Create a policy that allows traffic from the public Internet directly or by using a load balancer to access the pod. Save the YAML in the web-allow-external.yaml file.

Apply the policy by entering the following command:

$ oc apply -f web-allow-external.yaml

Example output

multinetworkpolicy.k8s.cni.cncf.io/web-allow-external created

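The contents of web-allow-external.yaml are not shown in the procedure. A minimal sketch, assuming the web pod runs in the default namespace and that, as with NetworkPolicy, a single empty ingress rule matches traffic from every source:

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: web-allow-external
  namespace: default
  annotations:
    k8s.v1.cni.cncf.io/policy-for: <network_name>
spec:
  policyTypes:
  - Ingress
  # Only pods labeled app=web are opened up
  podSelector:
    matchLabels:
      app: web
  ingress:
  # An empty rule allows traffic from all sources, including external clients
  - {}
```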

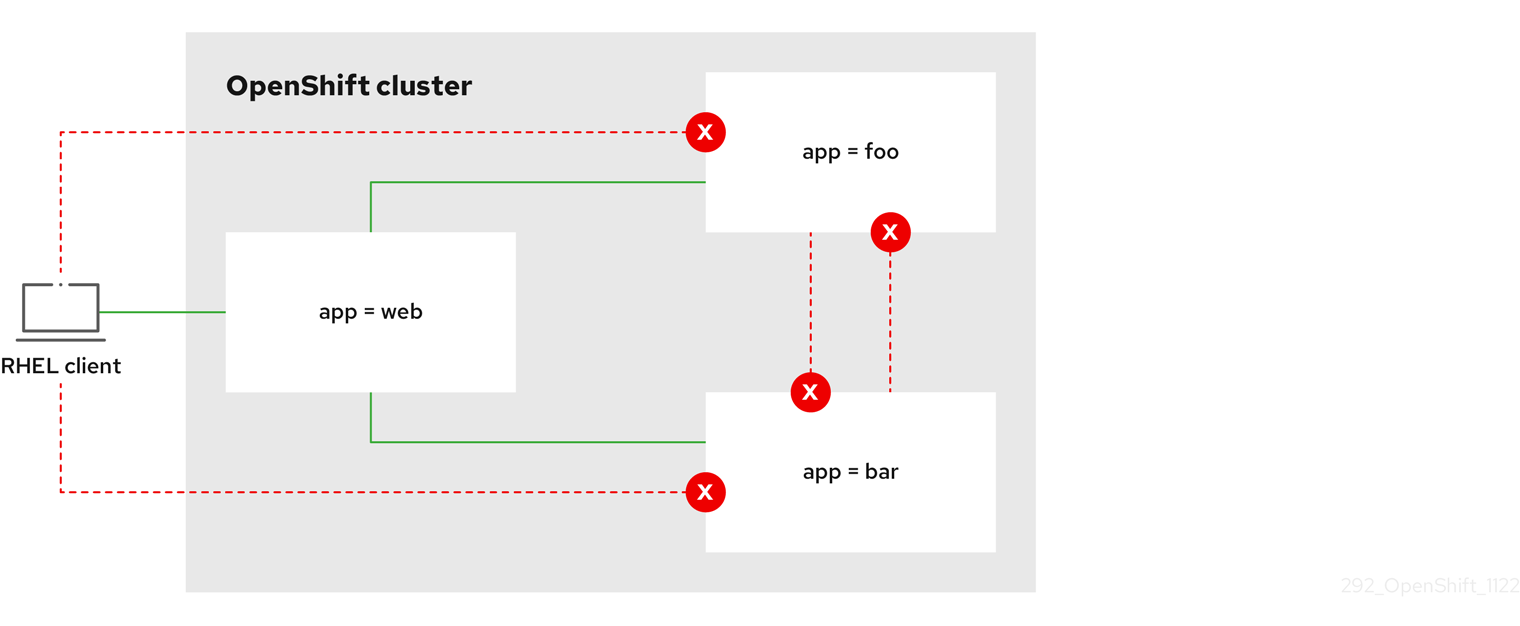

This policy allows traffic from all resources, including external traffic as illustrated in the following diagram:

23.4.3.8. Creating a multi-network policy allowing traffic to an application from all namespaces

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Follow this procedure to configure a policy that allows traffic from all pods in all namespaces to a particular application.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace that the multi-network policy applies to.

Procedure

Create a policy that allows traffic from all pods in all namespaces to a particular application. Save the YAML in the web-allow-all-namespaces.yaml file.

Note: By default, if you omit specifying a namespaceSelector, it does not select any namespaces, which means the policy allows traffic only from the namespace the network policy is deployed to.

Apply the policy by entering the following command:

$ oc apply -f web-allow-all-namespaces.yaml

Example output

multinetworkpolicy.k8s.cni.cncf.io/web-allow-all-namespaces created

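The contents of web-allow-all-namespaces.yaml are not shown in the procedure. A minimal sketch, assuming the target application is the app=web pod in the default namespace that the verification steps start:

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: web-allow-all-namespaces
  namespace: default
  annotations:
    k8s.v1.cni.cncf.io/policy-for: <network_name>
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
  - Ingress
  ingress:
  - from:
    # An empty namespaceSelector selects all namespaces (omitting it would select none)
    - namespaceSelector: {}
```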

Verification

Start a web service in the default namespace by entering the following command:

$ oc run web --namespace=default --image=nginx --labels="app=web" --expose --port=80

Run the following command to deploy an alpine image in the secondary namespace and to start a shell:

$ oc run test-$RANDOM --namespace=secondary --rm -i -t --image=alpine -- sh

Run the following command in the shell and observe that the request is allowed:

# wget -qO- --timeout=2 http://web.default

Expected output

The request succeeds, and the command prints the HTML of the default nginx welcome page.

23.4.3.9. Creating a multi-network policy allowing traffic to an application from a namespace

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Follow this procedure to configure a policy that allows traffic to a pod with the label app=web from a particular namespace. You might want to do this to:

- Restrict traffic to a production database only to namespaces where production workloads are deployed.

- Enable monitoring tools deployed to a particular namespace to scrape metrics from the current namespace.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You are working in the namespace that the multi-network policy applies to.

Procedure

Create a policy that allows traffic from all pods in a particular namespace with the label purpose=production. Save the YAML in the web-allow-prod.yaml file.

Apply the policy by entering the following command:

$ oc apply -f web-allow-prod.yaml

Example output

multinetworkpolicy.k8s.cni.cncf.io/web-allow-prod created

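The contents of web-allow-prod.yaml are not shown in the procedure. A minimal sketch, assuming the web pod runs in the default namespace, as in the verification steps:

```yaml
apiVersion: k8s.cni.cncf.io/v1beta1
kind: MultiNetworkPolicy
metadata:
  name: web-allow-prod
  namespace: default
  annotations:
    k8s.v1.cni.cncf.io/policy-for: <network_name>
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
  - Ingress
  ingress:
  - from:
    # Only namespaces labeled purpose=production are admitted
    - namespaceSelector:
        matchLabels:
          purpose: production
```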

Verification

Start a web service in the default namespace by entering the following command:

$ oc run web --namespace=default --image=nginx --labels="app=web" --expose --port=80

Run the following command to create the prod namespace:

$ oc create namespace prod

Run the following command to label the prod namespace:

$ oc label namespace/prod purpose=production

Run the following command to create the dev namespace:

$ oc create namespace dev

Run the following command to label the dev namespace:

$ oc label namespace/dev purpose=testing

Run the following command to deploy an alpine image in the dev namespace and to start a shell:

$ oc run test-$RANDOM --namespace=dev --rm -i -t --image=alpine -- sh

Run the following command in the shell and observe that the request is blocked:

# wget -qO- --timeout=2 http://web.default

Expected output

wget: download timed out

Run the following command to deploy an alpine image in the prod namespace and start a shell:

$ oc run test-$RANDOM --namespace=prod --rm -i -t --image=alpine -- sh

Run the following command in the shell and observe that the request is allowed:

# wget -qO- --timeout=2 http://web.default

Expected output

The request succeeds, and the command prints the HTML of the default nginx welcome page.

23.5. Attaching a pod to an additional network

As a cluster user, you can attach a pod to an additional network.

23.5.1. Adding a pod to an additional network

You can add a pod to an additional network. The pod continues to send normal cluster-related network traffic over the default network.

Additional networks are attached to a pod when the pod is created. You cannot attach additional networks to a pod that already exists.

The pod must be in the same namespace as the additional network.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in to the cluster.

Procedure

Add an annotation to the Pod object. Only one of the following annotation formats can be used:

To attach an additional network without any customization, add an annotation with the following format. Replace <network> with the name of the additional network to associate with the pod:

metadata:
  annotations:
    k8s.v1.cni.cncf.io/networks: <network>[,<network>,...] # 1

1. To specify more than one additional network, separate each network with a comma. Do not include whitespace around the commas. If you specify the same additional network multiple times, the pod has multiple network interfaces attached to that network.

To attach an additional network with customizations, add an annotation with the following format:
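A sketch of the customized format, assuming the JSON keys defined by the Kubernetes network attachment annotation: name is the NetworkAttachmentDefinition name, namespace is the namespace that contains it, and default-route is an optional gateway override. All values in angle brackets are placeholders.

```yaml
metadata:
  annotations:
    # A JSON list with one object per additional network
    k8s.v1.cni.cncf.io/networks: |-
      [
        {
          "name": "<network>",
          "namespace": "<namespace>",
          "default-route": ["<default-route>"]
        }
      ]
```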


To create the pod, enter the following command. Replace <name> with the name of the pod.

$ oc create -f <name>.yaml

Optional: To confirm that the annotation exists in the Pod CR, enter the following command, replacing <name> with the name of the pod.

$ oc get pod <name> -o yaml

In the following example, the example-pod pod is attached to the net1 additional network:
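The example output is not reproduced here. The following sketch shows only the shape of the relevant part of the oc get pod output, assuming the OVN-Kubernetes default network; <network>, <pod_ip>, <net1_ip>, and <net1_mac> are placeholders, not real output.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
  namespace: default
  annotations:
    k8s.v1.cni.cncf.io/networks: <network>
    k8s.v1.cni.cncf.io/network-status: |- # 1
      [{
          "name": "ovn-kubernetes",
          "interface": "eth0",
          "ips": ["<pod_ip>"],
          "default": true,
          "dns": {}
      },{
          "name": "<network>",
          "interface": "net1",
          "ips": ["<net1_ip>"],
          "mac": "<net1_mac>",
          "dns": {}
      }]
```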

1. The k8s.v1.cni.cncf.io/network-status parameter is a JSON array of objects. Each object describes the status of an additional network attached to the pod. The annotation value is stored as a plain text value.

23.5.1.1. Specifying pod-specific addressing and routing options

When attaching a pod to an additional network, you may want to specify further properties about that network in a particular pod. This allows you to change some aspects of routing, as well as specify static IP addresses and MAC addresses. To accomplish this, you can use the JSON formatted annotations.

Prerequisites

- The pod must be in the same namespace as the additional network.

- Install the OpenShift CLI (oc).

- You must log in to the cluster.

Procedure

To add a pod to an additional network while specifying addressing and/or routing options, complete the following steps:

Edit the Pod resource definition. If you are editing an existing Pod resource, run the following command to edit its definition in the default editor. Replace <name> with the name of the Pod resource to edit.

$ oc edit pod <name>

In the Pod resource definition, add the k8s.v1.cni.cncf.io/networks parameter to the pod metadata mapping. The k8s.v1.cni.cncf.io/networks parameter accepts a JSON string of a list of objects that reference NetworkAttachmentDefinition custom resource (CR) names, in addition to specifying additional properties.

metadata:
  annotations:
    k8s.v1.cni.cncf.io/networks: '[<network>[,<network>,...]]' # 1

1. Replace <network> with a JSON object as shown in the following examples. The single quotes are required.

In the following example, the annotation specifies which network attachment has the default route by using the default-route parameter.
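A sketch of such a pod, assuming two network attachment definitions named net1 and net2 exist in the pod's namespace. The gateway 192.0.2.1 is a placeholder from the documentation address range and <image> is a hypothetical container image. The name key (callout 1) selects each network, and the default-route key (callout 2) is set on net2 only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
  annotations:
    # net2 carries the default route through the gateway 192.0.2.1
    k8s.v1.cni.cncf.io/networks: '[
        {
          "name": "net1"
        },
        {
          "name": "net2",
          "default-route": ["192.0.2.1"]
        }
      ]'
spec:
  containers:
  - name: example-pod
    image: <image>  # hypothetical container image
```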

1. The name key is the name of the additional network to associate with the pod.

2. The default-route key specifies the gateway that traffic is routed over if no other routing entry is present in the routing table. If more than one default-route key is specified, the pod fails to become active.

The default route will cause any traffic that is not specified in other routes to be routed to the gateway.

Setting the default route to an interface other than the default network interface for OpenShift Container Platform may cause traffic that is anticipated for pod-to-pod traffic to be routed over another interface.

To verify the routing properties of a pod, use the oc command to run the ip command within the pod:

$ oc exec -it <pod_name> -- ip route

You can also check the pod’s k8s.v1.cni.cncf.io/network-status annotation to see which additional network has been assigned the default route: the object for that network in the JSON-formatted list contains the default-route key.

To set a static IP address or MAC address for a pod, you can use the JSON-formatted annotations. This requires that you create networks that specifically allow for this functionality, which you can specify in a rawCNIConfig for the CNO.

Edit the CNO CR by running the following command:

$ oc edit networks.operator.openshift.io cluster

The following YAML describes the configuration parameters for the CNO:

Cluster Network Operator YAML configuration
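A sketch of where these parameters sit in the CNO custom resource, assuming a Raw-type entry in the additionalNetworks collection; the values in angle brackets are placeholders, and the numbered comments match the callouts that follow.

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  additionalNetworks:
  - name: <name>                        # 1
    namespace: <namespace>              # 2
    rawCNIConfig: '<cni_configuration>' # 3: CNI plugin configuration as a JSON string
    type: Raw
```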

1. Specify a name for the additional network attachment that you are creating. The name must be unique within the specified namespace.

2. Specify the namespace to create the network attachment in. If you do not specify a value, then the default namespace is used.

3. Specify the CNI plugin configuration in JSON format, which is based on the following template.

The following object describes the configuration parameters for utilizing static MAC address and IP address using the macvlan CNI plugin:

macvlan CNI plugin JSON configuration object using static IP and MAC address
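A sketch of this configuration object, shown here embedded in the rawCNIConfig field of an additionalNetworks entry. The JSON carries the items the callouts describe: name (1), the plugins array (2), the ips capability (3), the master interface (4), and the mac capability (5). The master interface eth0 and the values in angle brackets are assumptions to adapt to your nodes.

```yaml
spec:
  additionalNetworks:
  - name: <name>
    namespace: <namespace>
    type: Raw
    # Chained macvlan + tuning configuration with static IP and MAC capabilities
    rawCNIConfig: |-
      {
        "cniVersion": "0.3.1",
        "name": "<name>",
        "plugins": [{
          "type": "macvlan",
          "capabilities": { "ips": true },
          "master": "eth0",
          "mode": "bridge",
          "ipam": {
            "type": "static"
          }
        }, {
          "capabilities": { "mac": true },
          "type": "tuning"
        }]
      }
```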

1. Specifies the name for the additional network attachment to create. The name must be unique within the specified namespace.

2. Specifies an array of CNI plugin configurations. The first object specifies a macvlan plugin configuration and the second object specifies a tuning plugin configuration.

3. Specifies that a request is made to enable the static IP address functionality of the CNI plugin runtime configuration capabilities.

4. Specifies the interface that the macvlan plugin uses.

5. Specifies that a request is made to enable the static MAC address functionality of a CNI plugin.

You can reference the preceding network attachment in a JSON-formatted annotation, along with keys that specify which static IP and MAC address are assigned to a given pod.

Edit the pod with:

$ oc edit pod <name>

macvlan CNI plugin JSON configuration object using static IP and MAC address
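In the pod definition, the annotation might look like the following sketch. <name> is the network attachment defined in the CNO configuration; 192.0.2.205/24 (from the documentation address range) and CA:FE:C0:FF:EE:00 are placeholder values, not values from this procedure.

```yaml
metadata:
  name: example-pod
  annotations:
    # ips and mac are honored only because the network enables those capabilities
    k8s.v1.cni.cncf.io/networks: '[
        {
          "name": "<name>",
          "ips": [ "192.0.2.205/24" ],
          "mac": "CA:FE:C0:FF:EE:00"
        }
      ]'
```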

Static IP addresses and MAC addresses do not have to be used at the same time; you can use them individually or together.

To verify the IP address and MAC properties of a pod with additional networks, use the oc command to execute the ip command within a pod.

$ oc exec -it <pod_name> -- ip a

23.6. Removing a pod from an additional network

As a cluster user, you can remove a pod from an additional network.

23.6.1. Removing a pod from an additional network

You can remove a pod from an additional network only by deleting the pod.

Prerequisites

- An additional network is attached to the pod.

- Install the OpenShift CLI (oc).

- Log in to the cluster.

Procedure

To delete the pod, enter the following command:

$ oc delete pod <name> -n <namespace>

- <name> is the name of the pod.

- <namespace> is the namespace that contains the pod.

23.7. Editing an additional network

As a cluster administrator, you can modify the configuration for an existing additional network.

23.7.1. Modifying an additional network attachment definition

As a cluster administrator, you can make changes to an existing additional network. Any existing pods attached to the additional network will not be updated.

Prerequisites

- You have configured an additional network for your cluster.

- Install the OpenShift CLI (oc).

- Log in as a user with cluster-admin privileges.

Procedure

To edit an additional network for your cluster, complete the following steps:

Run the following command to edit the Cluster Network Operator (CNO) CR in your default text editor:

$ oc edit networks.operator.openshift.io cluster

In the additionalNetworks collection, update the additional network with your changes.

Save your changes and quit the text editor to commit your changes.

Optional: Confirm that the CNO updated the NetworkAttachmentDefinition object by running the following command. Replace <network-name> with the name of the additional network to display. There might be a delay before the CNO updates the NetworkAttachmentDefinition object to reflect your changes.

$ oc get network-attachment-definitions <network-name> -o yaml

For example, the following console output displays a NetworkAttachmentDefinition object that is named net1:

$ oc get network-attachment-definitions net1 -o go-template='{{printf "%s\n" .spec.config}}'
{ "cniVersion": "0.3.1", "type": "macvlan", "master": "ens5", "mode": "bridge", "ipam": {"type":"static","routes":[{"dst":"0.0.0.0/0","gw":"10.128.2.1"}],"addresses":[{"address":"10.128.2.100/23","gateway":"10.128.2.1"}],"dns":{"nameservers":["172.30.0.10"],"domain":"us-west-2.compute.internal","search":["us-west-2.compute.internal"]}} }

23.8. Removing an additional network

As a cluster administrator, you can remove an additional network attachment.

23.8.1. Removing an additional network attachment definition

As a cluster administrator, you can remove an additional network from your OpenShift Container Platform cluster. The additional network is not removed from any pods it is attached to.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in as a user with cluster-admin privileges.

Procedure

To remove an additional network from your cluster, complete the following steps:

Edit the Cluster Network Operator (CNO) in your default text editor by running the following command:

$ oc edit networks.operator.openshift.io cluster

Modify the CR by removing the configuration from the additionalNetworks collection for the network attachment definition you are removing.
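A sketch of the result when the removed entry was the only one, assuming the rest of the cluster network configuration is left untouched:

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  # Other fields of the cluster network configuration stay as they are
  additionalNetworks: [] # 1
```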

1. If you are removing the configuration mapping for the only additional network attachment definition in the additionalNetworks collection, you must specify an empty collection.

- Save your changes and quit the text editor to commit your changes.

Optional: Confirm that the additional network CR was deleted by running the following command:

oc get network-attachment-definition --all-namespaces

$ oc get network-attachment-definition --all-namespacesCopy to Clipboard Copied! Toggle word wrap Toggle overflow
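For the case in the procedure where you remove the only entry in the additionalNetworks collection, the edited CNO configuration might look like the following minimal sketch. It shows only the fields relevant to this step; any other fields that already exist under spec in your cluster are assumed to stay as they are and are omitted here.

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  # Other existing spec fields are omitted from this sketch and are left unchanged.
  # Removing the last additional network attachment leaves an empty collection.
  additionalNetworks: []
```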

23.9. Assigning a secondary network to a VRF

As a cluster administrator, you can configure an additional network for a virtual routing and forwarding (VRF) domain by using the CNI VRF plugin. The virtual network that this plugin creates is associated with the physical interface that you specify.

Using a secondary network with a VRF instance has the following advantages:

- Workload isolation

- Isolate workload traffic by configuring a VRF instance for the additional network.

- Improved security

- Enable improved security through isolated network paths in the VRF domain.

- Multi-tenancy support

- Support multi-tenancy through network segmentation with a unique routing table in the VRF domain for each tenant.

Applications that use VRFs must bind to a specific device. The most common approach is to set the SO_BINDTODEVICE option on a socket. The SO_BINDTODEVICE option binds the socket to the device named by the interface name that you pass, for example, eth1. To use the SO_BINDTODEVICE option, the application must have the CAP_NET_RAW capability.
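If your application sets SO_BINDTODEVICE, its container needs the CAP_NET_RAW capability. The following minimal sketch requests that capability in the container securityContext. The pod name, container name, and image are hypothetical placeholders, and whether the pod is admitted with this capability also depends on the security context constraints (SCC) that apply to it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: vrf-app              # hypothetical pod name
spec:
  containers:
  - name: app                # hypothetical container name
    image: <image>           # placeholder: your application image
    securityContext:
      capabilities:
        # Kubernetes capability names omit the CAP_ prefix: NET_RAW grants CAP_NET_RAW.
        add: ["NET_RAW"]
```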

Using a VRF through the ip vrf exec command is not supported in OpenShift Container Platform pods. To use VRF, bind applications directly to the VRF interface.

23.9.1. Creating an additional network attachment with the CNI VRF plugin

The Cluster Network Operator (CNO) manages additional network definitions. When you specify an additional network to create, the CNO creates the NetworkAttachmentDefinition custom resource (CR) automatically.

Do not edit the NetworkAttachmentDefinition CRs that the Cluster Network Operator manages. Doing so might disrupt network traffic on your additional network.

To create an additional network attachment with the CNI VRF plugin, perform the following procedure.

Prerequisites

- Install the OpenShift Container Platform CLI (oc).

- Log in to the OpenShift cluster as a user with cluster-admin privileges.

Procedure

Create the Network custom resource (CR) for the additional network attachment and insert the rawCNIConfig configuration for the additional network. Save the YAML as the file additional-network-attachment.yaml. The rawCNIConfig configuration uses the following fields:

- plugins must be a list. The first item in the list must be the secondary network underpinning the VRF network. The second item in the list is the VRF plugin configuration.

- In the VRF plugin configuration, type must be set to vrf.

- vrfname is the name of the VRF that the interface is assigned to. If it does not exist in the pod, it is created.

- table is optional and specifies the routing table ID for the VRF. If it is not specified, the CNI assigns a free routing table ID to the VRF.

Note: VRF functions correctly only when the resource is of type netdevice.

Create the Network resource:

$ oc create -f additional-network-attachment.yaml

Confirm that the CNO created the NetworkAttachmentDefinition CR by running the following command. Replace <namespace> with the namespace that you specified when configuring the network attachment, for example, additional-network-1.

$ oc get network-attachment-definitions -n <namespace>

Example output

NAME                   AGE
additional-network-1   14m

Note: There might be a delay before the CNO creates the CR.
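A minimal sketch of additional-network-attachment.yaml follows. The plugin order and the type, vrfname, and table fields come from the field descriptions in the procedure, and vrf-1 with table 1001 matches the ip vrf show example output in the verification steps. Everything else is an illustrative assumption: the underlying secondary network is sketched as a macvlan interface on a node interface named eth1 with a static IPv4 address, and the attachment name, namespace, CNI network name, and address are placeholders. Because a Network object named cluster already exists in every OpenShift Container Platform cluster, oc create with this file is likely to fail with an AlreadyExists error; in that case, add the entry to additionalNetworks by running oc edit networks.operator.openshift.io cluster instead.

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  additionalNetworks:
  - name: additional-network-1        # illustrative attachment name
    namespace: additional-network-1   # illustrative namespace for the NetworkAttachmentDefinition
    type: Raw
    # The first plugin is the underlying secondary network (macvlan is an assumption);
    # the second plugin is the VRF plugin configuration.
    rawCNIConfig: |-
      {
        "cniVersion": "0.3.1",
        "name": "macvlan-vrf",
        "plugins": [
          {
            "type": "macvlan",
            "master": "eth1",
            "ipam": {
              "type": "static",
              "addresses": [ { "address": "192.168.1.23/24" } ]
            }
          },
          {
            "type": "vrf",
            "vrfname": "vrf-1",
            "table": 1001
          }
        ]
      }
```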

Verification

Create a pod and assign it to the additional network with the VRF instance:

Create a YAML file, for example, pod-additional-net.yaml, that defines the Pod resource. In the pod definition, specify the name of the additional network with the VRF instance.

Create the Pod resource by running the following command:

$ oc create -f pod-additional-net.yaml

Example output

pod/test-pod created

Verify that the pod network attachment is connected to the VRF additional network. Start a remote session with the pod and run the following command:

$ ip vrf show

Example output

Name              Table
-----------------------
vrf-1             1001

Confirm that the VRF interface is the controller for the additional interface:

$ ip link

Example output

5: net1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master red state UP mode
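The verification steps use a pod-additional-net.yaml file. The following is a minimal sketch of such a file, assuming that the network attachment is named additional-network-1 and lives in the additional-network-1 namespace (both illustrative, matching the earlier example output). Multus attaches the pod to the networks listed in the k8s.v1.cni.cncf.io/networks annotation. The container name, image, and command are hypothetical placeholders; the image must provide the ip command for the verification steps to work.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod                     # matches the example output "pod/test-pod created"
  namespace: additional-network-1    # illustrative; same namespace as the network attachment
  annotations:
    # Name of the additional network with the VRF instance (illustrative)
    k8s.v1.cni.cncf.io/networks: additional-network-1
spec:
  containers:
  - name: example-container          # hypothetical container name
    image: <image>                   # placeholder: an image that provides the ip command
    command: ["/bin/sh", "-c", "sleep 9000000"]
```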