Support

Getting support for OpenShift Container Platform

Abstract

Chapter 1. Support overview

Red Hat offers cluster administrators tools for gathering data for your cluster, monitoring, and troubleshooting.

1.1. Get support

Get support: Visit the Red Hat Customer Portal to review knowledge base articles, submit a support case, and review additional product documentation and resources.

1.2. Remote health monitoring issues

Remote health monitoring issues: OpenShift Container Platform collects telemetry and configuration data about your cluster and reports it to Red Hat by using the Telemeter Client and the Insights Operator. Red Hat uses this data to understand and resolve issues in connected cluster. Similar to connected clusters, you can Use remote health monitoring in a restricted network. OpenShift Container Platform collects data and monitors health using the following:

Telemetry: The Telemetry Client gathers and uploads the metrics values to Red Hat every four minutes and thirty seconds. Red Hat uses this data to:

- Monitor the clusters.

- Roll out OpenShift Container Platform upgrades.

- Improve the upgrade experience.

Insights Operator: By default, OpenShift Container Platform installs and enables the Insights Operator, which reports configuration and component failure status every two hours. The Insights Operator helps to:

- Identify potential cluster issues proactively.

- Provide a solution and preventive action in Red Hat OpenShift Cluster Manager.

You can review telemetry information.

If you have enabled remote health reporting, Use Insights to identify issues with your cluster. You can optionally disable remote health reporting.

1.3. Gather data about your cluster

Gather data about your cluster: Red Hat recommends gathering your debugging information when opening a support case. This helps Red Hat Support to perform a root cause analysis. A cluster administrator can use the following to gather data about your cluster:

-

The must-gather tool: Use the

must-gathertool to collect information about your cluster and to debug the issues. -

sosreport: Use the

sosreporttool to collect configuration details, system information, and diagnostic data for debugging purposes. - Cluster ID: Obtain the unique identifier for your cluster, when providing information to Red Hat Support.

-

Bootstrap node journal logs: Gather

bootkube.servicejournaldunit logs and container logs from the bootstrap node to troubleshoot bootstrap-related issues. -

Cluster node journal logs: Gather

journaldunit logs and logs within/var/logon individual cluster nodes to troubleshoot node-related issues. - A network trace: Provide a network packet trace from a specific OpenShift Container Platform cluster node or a container to Red Hat Support to help troubleshoot network-related issues.

-

Diagnostic data: Use the

redhat-support-toolcommand to gather(?) diagnostic data about your cluster.

1.4. Troubleshooting issues

A cluster administrator can monitor and troubleshoot the following OpenShift Container Platform component issues:

Installation issues: OpenShift Container Platform installation proceeds through various stages. You can perform the following:

- Monitor the installation stages.

- Determine at which stage installation issues occur.

- Investigate multiple installation issues.

- Gather logs from a failed installation.

Node issues: A cluster administrator can verify and troubleshoot node-related issues by reviewing the status, resource usage, and configuration of a node. You can query the following:

- Kubelet’s status on a node.

- Cluster node journal logs.

Crio issues: A cluster administrator can verify CRI-O container runtime engine status on each cluster node. If you experience container runtime issues, perform the following:

- Gather CRI-O journald unit logs.

- Cleaning CRI-O storage.

Operating system issues: OpenShift Container Platform runs on Red Hat Enterprise Linux CoreOS. If you experience operating system issues, you can investigate kernel crash procedures. Ensure the following:

- Enable kdump.

- Test the kdump configuration.

- Analyze a core dump.

Network issues: To troubleshoot Open vSwitch issues, a cluster administrator can perform the following:

- Configure the Open vSwitch log level temporarily.

- Configure the Open vSwitch log level permanently.

- Display Open vSwitch logs.

Operator issues: A cluster administrator can do the following to resolve Operator issues:

- Verify Operator subscription status.

- Check Operator pod health.

- Gather Operator logs.

Pod issues: A cluster administrator can troubleshoot pod-related issues by reviewing the status of a pod and completing the following:

- Review pod and container logs.

- Start debug pods with root access.

Source-to-image issues: A cluster administrator can observe the S2I stages to determine where in the S2I process a failure occurred. Gather the following to resolve Source-to-Image (S2I) issues:

- Source-to-Image diagnostic data.

- Application diagnostic data to investigate application failure.

Storage issues: A multi-attach storage error occurs when the mounting volume on a new node is not possible because the failed node cannot unmount the attached volume. A cluster administrator can do the following to resolve multi-attach storage issues:

- Enable multiple attachments by using RWX volumes.

- Recover or delete the failed node when using an RWO volume.

Monitoring issues: A cluster administrator can follow the procedures on the troubleshooting page for monitoring. If the metrics for your user-defined projects are unavailable or if Prometheus is consuming a lot of disk space, check the following:

- Investigate why user-defined metrics are unavailable.

- Determine why Prometheus is consuming a lot of disk space.

Logging issues: A cluster administrator can follow the procedures in the "Support" and "Troubleshooting logging" sections to resolve logging issues:

-

OpenShift CLI (

oc) issues: Investigate OpenShift CLI (oc) issues by increasing the log level.

Chapter 2. Managing your cluster resources

You can apply global configuration options in OpenShift Container Platform. Operators apply these configuration settings across the cluster.

2.1. Interacting with your cluster resources

You can interact with cluster resources by using the OpenShift CLI (oc) tool in OpenShift Container Platform. The cluster resources that you see after running the oc api-resources command can be edited.

Prerequisites

-

You have access to the cluster as a user with the

cluster-adminrole. -

You have access to the web console or you have installed the

ocCLI tool.

Procedure

To see which configuration Operators have been applied, run the following command:

oc api-resources -o name | grep config.openshift.io

$ oc api-resources -o name | grep config.openshift.ioCopy to Clipboard Copied! Toggle word wrap Toggle overflow To see what cluster resources you can configure, run the following command:

oc explain <resource_name>.config.openshift.io

$ oc explain <resource_name>.config.openshift.ioCopy to Clipboard Copied! Toggle word wrap Toggle overflow To see the configuration of custom resource definition (CRD) objects in the cluster, run the following command:

oc get <resource_name>.config -o yaml

$ oc get <resource_name>.config -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow To edit the cluster resource configuration, run the following command:

oc edit <resource_name>.config -o yaml

$ oc edit <resource_name>.config -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Chapter 3. Getting support

3.1. Getting support

If you experience difficulty with a procedure described in this documentation, or with OpenShift Container Platform in general, visit the Red Hat Customer Portal.

From the Customer Portal, you can:

- Search or browse through the Red Hat Knowledgebase of articles and solutions relating to Red Hat products.

- Submit a support case to Red Hat Support.

- Access other product documentation.

To identify issues with your cluster, you can use Insights in OpenShift Cluster Manager. Insights provides details about issues and, if available, information on how to solve a problem.

If you have a suggestion for improving this documentation or have found an error, submit a Jira issue for the most relevant documentation component. Please provide specific details, such as the section name and OpenShift Container Platform version.

3.2. About the Red Hat Knowledgebase

The Red Hat Knowledgebase provides rich content aimed at helping you make the most of Red Hat’s products and technologies. The Red Hat Knowledgebase consists of articles, product documentation, and videos outlining best practices on installing, configuring, and using Red Hat products. In addition, you can search for solutions to known issues, each providing concise root cause descriptions and remedial steps.

3.3. Searching the Red Hat Knowledgebase

In the event of an OpenShift Container Platform issue, you can perform an initial search to determine if a solution already exists within the Red Hat Knowledgebase.

Prerequisites

- You have a Red Hat Customer Portal account.

Procedure

- Log in to the Red Hat Customer Portal.

- Click Search.

In the search field, input keywords and strings relating to the problem, including:

- OpenShift Container Platform components (such as etcd)

- Related procedure (such as installation)

- Warnings, error messages, and other outputs related to explicit failures

- Click the Enter key.

- Optional: Select the OpenShift Container Platform product filter.

- Optional: Select the Documentation content type filter.

3.4. Submitting a support case

Prerequisites

-

You have access to the cluster as a user with the

cluster-adminrole. -

You have installed the OpenShift CLI (

oc). - You have a Red Hat Customer Portal account.

- You have a Red Hat Standard or Premium subscription.

Procedure

- Log in to the Customer Support page of the Red Hat Customer Portal.

- Click Get support.

On the Cases tab of the Customer Support page:

- Optional: Change the pre-filled account and owner details if needed.

- Select the appropriate category for your issue, such as Bug or Defect, and click Continue.

Enter the following information:

- In the Summary field, enter a concise but descriptive problem summary and further details about the symptoms being experienced, as well as your expectations.

- Select OpenShift Container Platform from the Product drop-down menu.

- Select 4.15 from the Version drop-down.

- Review the list of suggested Red Hat Knowledgebase solutions for a potential match against the problem that is being reported. If the suggested articles do not address the issue, click Continue.

- Review the updated list of suggested Red Hat Knowledgebase solutions for a potential match against the problem that is being reported. The list is refined as you provide more information during the case creation process. If the suggested articles do not address the issue, click Continue.

- Ensure that the account information presented is as expected, and if not, amend accordingly.

Check that the autofilled OpenShift Container Platform Cluster ID is correct. If it is not, manually obtain your cluster ID.

To manually obtain your cluster ID using the OpenShift Container Platform web console:

- Navigate to Home → Overview.

- Find the value in the Cluster ID field of the Details section.

Alternatively, it is possible to open a new support case through the OpenShift Container Platform web console and have your cluster ID autofilled.

- From the toolbar, navigate to (?) Help → Open Support Case.

- The Cluster ID value is autofilled.

To obtain your cluster ID using the OpenShift CLI (

oc), run the following command:oc get clusterversion -o jsonpath='{.items[].spec.clusterID}{"\n"}'$ oc get clusterversion -o jsonpath='{.items[].spec.clusterID}{"\n"}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Complete the following questions where prompted and then click Continue:

- What are you experiencing? What are you expecting to happen?

- Define the value or impact to you or the business.

- Where are you experiencing this behavior? What environment?

- When does this behavior occur? Frequency? Repeatedly? At certain times?

-

Upload relevant diagnostic data files and click Continue. It is recommended to include data gathered using the

oc adm must-gathercommand as a starting point, plus any issue specific data that is not collected by that command. - Input relevant case management details and click Continue.

- Preview the case details and click Submit.

Chapter 4. Remote health monitoring with connected clusters

4.1. About remote health monitoring

OpenShift Container Platform collects telemetry and configuration data about your cluster and reports it to Red Hat by using the Telemeter Client and the Insights Operator. The data that is provided to Red Hat enables the benefits outlined in this document.

A cluster that reports data to Red Hat through Telemetry and the Insights Operator is considered a connected cluster.

Telemetry is the term that Red Hat uses to describe the information being sent to Red Hat by the OpenShift Container Platform Telemeter Client. Lightweight attributes are sent from connected clusters to Red Hat to enable subscription management automation, monitor the health of clusters, assist with support, and improve customer experience.

The Insights Operator gathers OpenShift Container Platform configuration data and sends it to Red Hat. The data is used to produce insights about potential issues that a cluster might be exposed to. These insights are communicated to cluster administrators on OpenShift Cluster Manager.

More information is provided in this document about these two processes.

Telemetry and Insights Operator benefits

Telemetry and the Insights Operator enable the following benefits for end-users:

- Enhanced identification and resolution of issues. Events that might seem normal to an end-user can be observed by Red Hat from a broader perspective across a fleet of clusters. Some issues can be more rapidly identified from this point of view and resolved without an end-user needing to open a support case or file a Jira issue.

-

Advanced release management. OpenShift Container Platform offers the

candidate,fast, andstablerelease channels, which enable you to choose an update strategy. The graduation of a release fromfasttostableis dependent on the success rate of updates and on the events seen during upgrades. With the information provided by connected clusters, Red Hat can improve the quality of releases tostablechannels and react more rapidly to issues found in thefastchannels. - Targeted prioritization of new features and functionality. The data collected provides insights about which areas of OpenShift Container Platform are used most. With this information, Red Hat can focus on developing the new features and functionality that have the greatest impact for our customers.

- A streamlined support experience. You can provide a cluster ID for a connected cluster when creating a support ticket on the Red Hat Customer Portal. This enables Red Hat to deliver a streamlined support experience that is specific to your cluster, by using the connected information. This document provides more information about that enhanced support experience.

- Predictive analytics. The insights displayed for your cluster on OpenShift Cluster Manager are enabled by the information collected from connected clusters. Red Hat is investing in applying deep learning, machine learning, and artificial intelligence automation to help identify issues that OpenShift Container Platform clusters are exposed to.

4.1.1. About Telemetry

Telemetry sends a carefully chosen subset of the cluster monitoring metrics to Red Hat. The Telemeter Client fetches the metrics values every four minutes and thirty seconds and uploads the data to Red Hat. These metrics are described in this document.

This stream of data is used by Red Hat to monitor the clusters in real-time and to react as necessary to problems that impact our customers. It also allows Red Hat to roll out OpenShift Container Platform upgrades to customers to minimize service impact and continuously improve the upgrade experience.

This debugging information is available to Red Hat Support and Engineering teams with the same restrictions as accessing data reported through support cases. All connected cluster information is used by Red Hat to help make OpenShift Container Platform better and more intuitive to use.

4.1.1.1. Information collected by Telemetry

The following information is collected by Telemetry:

4.1.1.1.1. System information

- Version information, including the OpenShift Container Platform cluster version and installed update details that are used to determine update version availability

- Update information, including the number of updates available per cluster, the channel and image repository used for an update, update progress information, and the number of errors that occur in an update

- The unique random identifier that is generated during an installation

- Configuration details that help Red Hat Support to provide beneficial support for customers, including node configuration at the cloud infrastructure level, hostnames, IP addresses, Kubernetes pod names, namespaces, and services

- The OpenShift Container Platform framework components installed in a cluster and their condition and status

- Events for all namespaces listed as "related objects" for a degraded Operator

- Information about degraded software

- Information about the validity of certificates

- The name of the provider platform that OpenShift Container Platform is deployed on and the data center location

4.1.1.1.2. Sizing Information

- Sizing information about clusters, machine types, and machines, including the number of CPU cores and the amount of RAM used for each

- The number of etcd members and the number of objects stored in the etcd cluster

- Number of application builds by build strategy type

4.1.1.1.3. Usage information

- Usage information about components, features, and extensions

- Usage details about Technology Previews and unsupported configurations

Telemetry does not collect identifying information such as usernames or passwords. Red Hat does not intend to collect personal information. If Red Hat discovers that personal information has been inadvertently received, Red Hat will delete such information. To the extent that any telemetry data constitutes personal data, please refer to the Red Hat Privacy Statement for more information about Red Hat’s privacy practices.

4.1.2. About the Insights Operator

The Insights Operator periodically gathers configuration and component failure status and, by default, reports that data every two hours to Red Hat. This information enables Red Hat to assess configuration and deeper failure data than is reported through Telemetry.

Users of OpenShift Container Platform can display the report of each cluster in the Insights Advisor service on Red Hat Hybrid Cloud Console. If any issues have been identified, Insights provides further details and, if available, steps on how to solve a problem.

The Insights Operator does not collect identifying information, such as user names, passwords, or certificates. See Red Hat Insights Data & Application Security for information about Red Hat Insights data collection and controls.

Red Hat uses all connected cluster information to:

- Identify potential cluster issues and provide a solution and preventive actions in the Insights Advisor service on Red Hat Hybrid Cloud Console

- Improve OpenShift Container Platform by providing aggregated and critical information to product and support teams

- Make OpenShift Container Platform more intuitive

4.1.2.1. Information collected by the Insights Operator

The following information is collected by the Insights Operator:

- General information about your cluster and its components to identify issues that are specific to your OpenShift Container Platform version and environment

- Configuration files, such as the image registry configuration, of your cluster to determine incorrect settings and issues that are specific to parameters you set

- Errors that occur in the cluster components

- Progress information of running updates, and the status of any component upgrades

- Details of the platform that OpenShift Container Platform is deployed on and the region that the cluster is located in

- Cluster workload information transformed into discreet Secure Hash Algorithm (SHA) values, which allows Red Hat to assess workloads for security and version vulnerabilities without disclosing sensitive details

-

If an Operator reports an issue, information is collected about core OpenShift Container Platform pods in the

openshift-*andkube-*projects. This includes state, resource, security context, volume information, and more

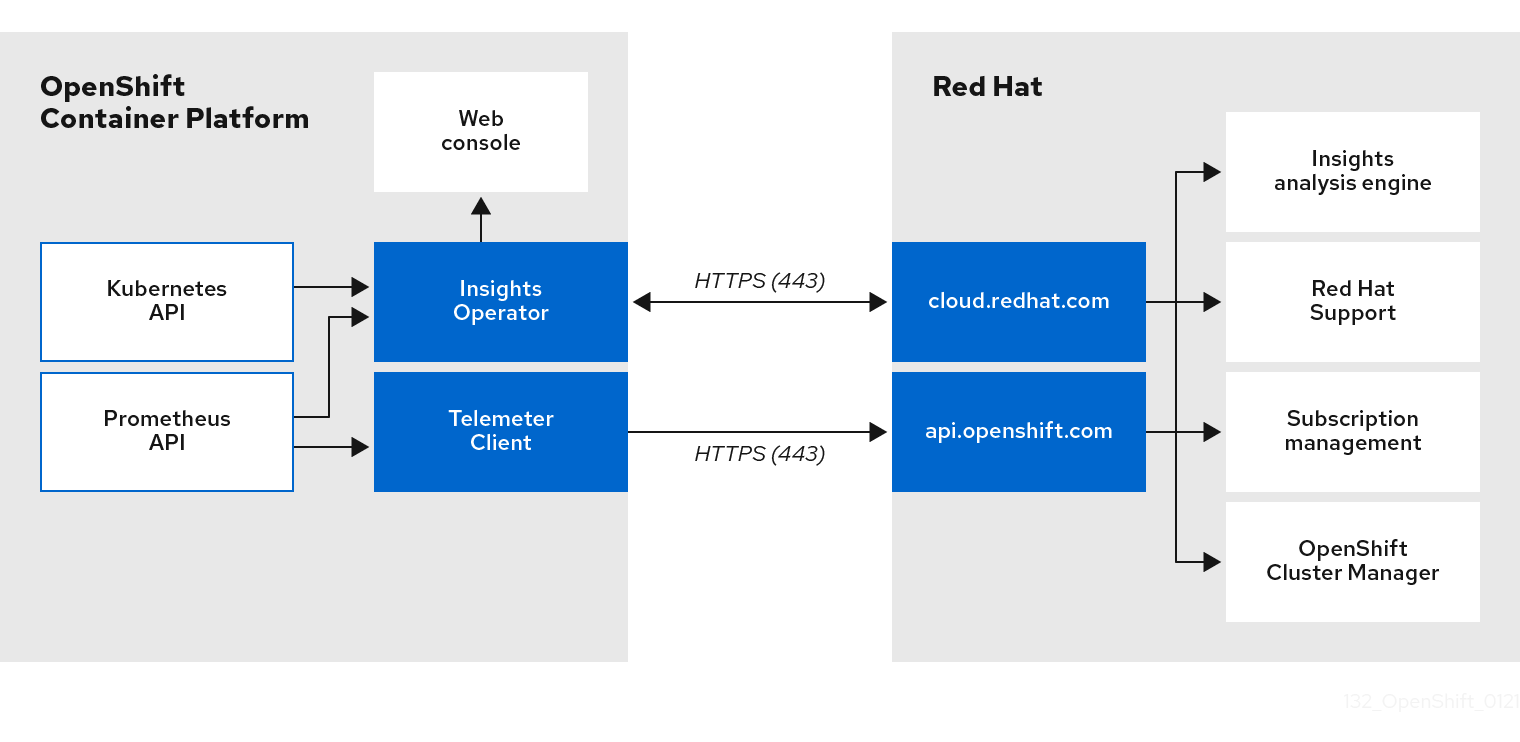

4.1.3. Understanding Telemetry and Insights Operator data flow

The Telemeter Client collects selected time series data from the Prometheus API. The time series data is uploaded to api.openshift.com every four minutes and thirty seconds for processing.

The Insights Operator gathers selected data from the Kubernetes API and the Prometheus API into an archive. The archive is uploaded to OpenShift Cluster Manager every two hours for processing. The Insights Operator also downloads the latest Insights analysis from OpenShift Cluster Manager. This is used to populate the Insights status pop-up that is included in the Overview page in the OpenShift Container Platform web console.

All of the communication with Red Hat occurs over encrypted channels by using Transport Layer Security (TLS) and mutual certificate authentication. All of the data is encrypted in transit and at rest.

Access to the systems that handle customer data is controlled through multi-factor authentication and strict authorization controls. Access is granted on a need-to-know basis and is limited to required operations.

Telemetry and Insights Operator data flow

4.1.4. Additional details about how remote health monitoring data is used

The information collected to enable remote health monitoring is detailed in Information collected by Telemetry and Information collected by the Insights Operator.

As further described in the preceding sections of this document, Red Hat collects data about your use of the Red Hat Product(s) for purposes such as providing support and upgrades, optimizing performance or configuration, minimizing service impacts, identifying and remediating threats, troubleshooting, improving the offerings and user experience, responding to issues, and for billing purposes if applicable.

Collection safeguards

Red Hat employs technical and organizational measures designed to protect the telemetry and configuration data.

Sharing

Red Hat may share the data collected through Telemetry and the Insights Operator internally within Red Hat to improve your user experience. Red Hat may share telemetry and configuration data with its business partners in an aggregated form that does not identify customers to help the partners better understand their markets and their customers’ use of Red Hat offerings or to ensure the successful integration of products jointly supported by those partners.

Third parties

Red Hat may engage certain third parties to assist in the collection, analysis, and storage of the Telemetry and configuration data.

User control / enabling and disabling telemetry and configuration data collection

You may disable OpenShift Container Platform Telemetry and the Insights Operator by following the instructions in Remote health reporting.

4.2. Showing data collected by remote health monitoring

As an administrator, you can review the metrics collected by Telemetry and the Insights Operator.

4.2.1. Showing data collected by Telemetry

You can view the cluster and components time series data captured by Telemetry.

Prerequisites

-

You have installed the OpenShift Container Platform CLI (

oc). -

You have access to the cluster as a user with the

cluster-adminrole or thecluster-monitoring-viewrole.

Procedure

- Log in to a cluster.

- Run the following command, which queries a cluster’s Prometheus service and returns the full set of time series data captured by Telemetry:

The following example contains some values that are specific to OpenShift Container Platform on AWS.

4.2.2. Showing data collected by the Insights Operator

You can review the data that is collected by the Insights Operator.

Prerequisites

-

Access to the cluster as a user with the

cluster-adminrole.

Procedure

Find the name of the currently running pod for the Insights Operator:

INSIGHTS_OPERATOR_POD=$(oc get pods --namespace=openshift-insights -o custom-columns=:metadata.name --no-headers --field-selector=status.phase=Running)

$ INSIGHTS_OPERATOR_POD=$(oc get pods --namespace=openshift-insights -o custom-columns=:metadata.name --no-headers --field-selector=status.phase=Running)Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the recent data archives collected by the Insights Operator:

oc cp openshift-insights/$INSIGHTS_OPERATOR_POD:/var/lib/insights-operator ./insights-data

$ oc cp openshift-insights/$INSIGHTS_OPERATOR_POD:/var/lib/insights-operator ./insights-dataCopy to Clipboard Copied! Toggle word wrap Toggle overflow

The recent Insights Operator archives are now available in the insights-data directory.

4.3. Remote health reporting

You can opt in, enable, or opt out, disable, reporting health and usage data for your cluster.

4.3.1. Enabling remote health reporting

If you or your organization have disabled remote health reporting, you can enable this feature again. You can see that remote health reporting is disabled from the message Insights not available in the Status tile on the OpenShift Container Platform web console Overview page.

To enable remote health reporting, you must change the global cluster pull secret with a new authorization token. Enabling remote health reporting enables both Insights Operator and Telemetry.

4.3.2. Changing your global cluster pull secret to enable remote health reporting

You can change your existing global cluster pull secret to enable remote health reporting. If you have disabled remote health monitoring, you must download a new pull secret with your console.openshift.com access token from Red Hat OpenShift Cluster Manager.

Prerequisites

-

Access to the cluster as a user with the

cluster-adminrole. - Access to OpenShift Cluster Manager.

Procedure

- Go to the Downloads page on the Red Hat Hybrid Cloud Console.

From Tokens → Pull secret, click the Download button.

The

pull-secretfile contains yourcloud.openshift.comaccess token in JSON format:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Download the global cluster pull secret to your local file system.

oc get secret/pull-secret -n openshift-config --template='{{index .data ".dockerconfigjson" | base64decode}}' > pull-secret$ oc get secret/pull-secret -n openshift-config --template='{{index .data ".dockerconfigjson" | base64decode}}' > pull-secretCopy to Clipboard Copied! Toggle word wrap Toggle overflow Make a backup copy of your pull secret.

cp pull-secret pull-secret-backup

$ cp pull-secret pull-secret-backupCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Open the

pull-secretfile in a text editor. -

Append the

cloud.openshift.comJSON entry from thepull-secretfile that you downloaded earlier into theauthsfile. - Save the file.

Update the secret in your cluster by running the following command:

oc set data secret/pull-secret -n openshift-config --from-file=.dockerconfigjson=pull-secret

oc set data secret/pull-secret -n openshift-config --from-file=.dockerconfigjson=pull-secretCopy to Clipboard Copied! Toggle word wrap Toggle overflow You might need to wait several minutes for the secret to update and your cluster to begin reporting.

Verification

For a verification check from the OpenShift Container Platform web console, complete the following steps:

- Go to the Overview page on the OpenShift Container Platform web console.

- View the Insights section in the Status tile that reports the number of issues found.

For a verification check from the OpenShift CLI (

oc), enter the following command and then check that the value of thestatusparameter statesfalse:oc get co insights -o jsonpath='{.status.conditions[?(@.type=="Disabled")]}'$ oc get co insights -o jsonpath='{.status.conditions[?(@.type=="Disabled")]}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

4.3.3. Consequences of disabling remote health reporting

In OpenShift Container Platform, customers can disable reporting usage information.

Before you disable remote health reporting, read the following benefits of a connected cluster:

- Red Hat can react more quickly to problems and better support our customers.

- Red Hat can better understand how product upgrades impact clusters.

- Connected clusters help to simplify the subscription and entitlement process.

- Connected clusters enable the OpenShift Cluster Manager service to offer an overview of your clusters and their subscription status.

Consider leaving health and usage reporting enabled for pre-production, test, and production clusters. This means that Red Hat can participate in qualifying OpenShift Container Platform in your environments and react more rapidly to product issues.

The following lists some consequences of disabling remote health reporting on a connected cluster:

- Red Hat cannot view the success of product upgrades or the health of your clusters without an open support case.

- Red Hat cannot use configuration data to better triage customer support cases and identify which configurations our customers find important.

- The OpenShift Cluster Manager cannot show data about your clusters, which includes health and usage information.

-

You must manually enter your subscription information in the

console.redhat.comweb console without the benefit of automatic usage reporting.

In restricted networks, Telemetry and Insights data still gets gathered through the appropriate configuration of your proxy.

4.3.4. Disabling remote health reporting

You can change your existing global cluster pull secret to disable remote health reporting. This configuration disables both Telemetry and the Insights Operator.

Prerequisites

-

You have access to the cluster as a user with the

cluster-adminrole.

Procedure

Download the global cluster pull secret to your local file system:

oc extract secret/pull-secret -n openshift-config --to=.

$ oc extract secret/pull-secret -n openshift-config --to=.Copy to Clipboard Copied! Toggle word wrap Toggle overflow In a text editor, edit the

.dockerconfigjsonfile that you downloaded by removing thecloud.openshift.comJSON entry:"cloud.openshift.com":{"auth":"<hash>","email":"<email_address>"}"cloud.openshift.com":{"auth":"<hash>","email":"<email_address>"}Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Save the file.

Update the secret in your cluster. For more information, see "Updating the global cluster pull secret".

You might need to wait several minutes for the secret to update in your cluster.

4.3.5. Registering your disconnected cluster

Register your disconnected OpenShift Container Platform cluster on the Red Hat Hybrid Cloud Console so that your cluster does not get impacted by disabling remote health reporting. For more information, see "Consequences of disabling remote health reporting".

By registering your disconnected cluster, you can continue to report your subscription usage to Red Hat. Red Hat can then return accurate usage and capacity trends associated with your subscription, so that you can use the returned information to better organize subscription allocations across all of your resources.

Prerequisites

-

You logged in to the OpenShift Container Platform web console as the

cluster-adminrole. - You can log in to the Red Hat Hybrid Cloud Console.

Procedure

- Go to the Register disconnected cluster web page on the Red Hat Hybrid Cloud Console.

- Optional: To access the Register disconnected cluster web page from the home page of the Red Hat Hybrid Cloud Console, go to the Clusters navigation menu item and then select the Register cluster button.

- Enter your cluster’s details in the provided fields on the Register disconnected cluster page.

- From the Subscription settings section of the page, select the subscription settings that apply to your Red Hat subscription offering.

- To register your disconnected cluster, select the Register cluster button.

- How does the subscriptions service show my subscription data?(Getting Started with the Subscription Service)

4.3.6. Updating the global cluster pull secret

You can update the global pull secret for your cluster by either replacing the current pull secret or appending a new pull secret.

Use the procedure when you need a separate registry to store images than the registry used during installation.

Prerequisites

-

You have access to the cluster as a user with the

cluster-adminrole.

Procedure

Optional: To append a new pull secret to the existing pull secret, complete the following steps:

Enter the following command to download the pull secret:

oc get secret/pull-secret -n openshift-config --template='{{index .data ".dockerconfigjson" | base64decode}}' > <pull_secret_location>$ oc get secret/pull-secret -n openshift-config --template='{{index .data ".dockerconfigjson" | base64decode}}' > <pull_secret_location>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

<pull_secret_location>: Include the path to the pull secret file.

Enter the following command to add the new pull secret:

oc registry login --registry="<registry>" \ --auth-basic="<username>:<password>" \ --to=<pull_secret_location>

$ oc registry login --registry="<registry>" \1 --auth-basic="<username>:<password>" \2 --to=<pull_secret_location>3 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

<registry>: Include the new registry. You can include many repositories within the same registry, for example:--registry="<registry/my-namespace/my-repository>.- 2

<username>:<password>: Include the credentials of the new registry.- 3

<pull_secret_location>: Include the path to the pull secret file.

You can also perform a manual update to the pull secret file.

Enter the following command to update the global pull secret for your cluster:

oc set data secret/pull-secret -n openshift-config --from-file=.dockerconfigjson=<pull_secret_location>

$ oc set data secret/pull-secret -n openshift-config --from-file=.dockerconfigjson=<pull_secret_location>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

<pull_secret_location>: Include the path to the new pull secret file.

This update rolls out to all nodes, which can take some time depending on the size of your cluster.

NoteAs of OpenShift Container Platform 4.7.4, changes to the global pull secret no longer trigger a node drain or reboot.

Additional resources

4.4. Using Insights to identify issues with your cluster

Insights repeatedly analyzes the data Insights Operator sends, which includes workload recommendations from Deployment Validation Operator (DVO). Users of OpenShift Container Platform can display the results in the Insights Advisor service on Red Hat Hybrid Cloud Console.

4.4.1. About Red Hat Insights Advisor for OpenShift Container Platform

You can use the Insights advisor service to assess and monitor the health of your OpenShift Container Platform clusters. Whether you are concerned about individual clusters, or with your whole infrastructure, it is important to be aware of the exposure of your cluster infrastructure to issues that can affect service availability, fault tolerance, performance, or security.

If the cluster has the Deployment Validation Operator (DVO) installed the recommendations also highlight workloads whose configuration might lead to cluster health issues.

The results of the Insights analysis are available in the Insights advisor service on Red Hat Hybrid Cloud Console. In the Red Hat Hybrid Cloud Console, you can perform the following actions:

- View clusters and workloads affected by specific recommendations.

- Use robust filtering capabilities to refine your results to those recommendations.

- Learn more about individual recommendations, details about the risks they present, and get resolutions tailored to your individual clusters.

- Share results with other stakeholders.

4.4.2. Understanding Insights Advisor recommendations

The Insights advisor service bundles information about various cluster states and component configurations that can negatively affect the service availability, fault tolerance, performance, or security of your clusters and workloads. This information set is called a recommendation in the Insights advisor service. Recommendations for clusters includes the following information:

- Name: A concise description of the recommendation

- Added: When the recommendation was published to the Insights Advisor service archive

- Category: Whether the issue has the potential to negatively affect service availability, fault tolerance, performance, or security

- Total risk: A value derived from the likelihood that the condition will negatively affect your cluster or workload, and the impact on operations if that were to happen

- Clusters: A list of clusters on which a recommendation is detected

- Description: A brief synopsis of the issue, including how it affects your clusters

4.4.3. Displaying potential issues with your cluster

This section describes how to display the Insights report in Insights Advisor on OpenShift Cluster Manager.

Note that Insights repeatedly analyzes your cluster and shows the latest results. These results can change, for example, if you fix an issue or a new issue has been detected.

Prerequisites

- Your cluster is registered on OpenShift Cluster Manager.

- Remote health reporting is enabled, which is the default.

- You are logged in to OpenShift Cluster Manager.

Procedure

Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

Depending on the result, the Insights advisor service displays one of the following:

- No matching recommendations found, if Insights did not identify any issues.

- A list of issues Insights has detected, grouped by risk (low, moderate, important, and critical).

- No clusters yet, if Insights has not yet analyzed the cluster. The analysis starts shortly after the cluster has been installed, registered, and connected to the internet.

If any issues are displayed, click the > icon in front of the entry for more details.

Depending on the issue, the details can also contain a link to more information from Red Hat about the issue.

4.4.4. Displaying all Insights advisor service recommendations

The Recommendations view, by default, only displays the recommendations that are detected on your clusters. However, you can view all of the recommendations in the advisor service’s archive.

Prerequisites

- Remote health reporting is enabled, which is the default.

- Your cluster is registered on Red Hat Hybrid Cloud Console.

- You are logged in to OpenShift Cluster Manager.

Procedure

- Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

Click the X icons next to the Clusters Impacted and Status filters.

You can now browse through all of the potential recommendations for your cluster.

4.4.5. Advisor recommendation filters

The Insights advisor service can return a large number of recommendations. To focus on your most critical recommendations, you can apply filters to the Advisor recommendations list to remove low-priority recommendations.

By default, filters are set to only show enabled recommendations that are impacting one or more clusters. To view all or disabled recommendations in the Insights library, you can customize the filters.

To apply a filter, select a filter type and then set its value based on the options that are available in the drop-down list. You can apply multiple filters to the list of recommendations.

You can set the following filter types:

- Name: Search for a recommendation by name.

- Total risk: Select one or more values from Critical, Important, Moderate, and Low indicating the likelihood and the severity of a negative impact on a cluster.

- Impact: Select one or more values from Critical, High, Medium, and Low indicating the potential impact to the continuity of cluster operations.

- Likelihood: Select one or more values from Critical, High, Medium, and Low indicating the potential for a negative impact to a cluster if the recommendation comes to fruition.

- Category: Select one or more categories from Service Availability, Performance, Fault Tolerance, Security, and Best Practice to focus your attention on.

- Status: Click a radio button to show enabled recommendations (default), disabled recommendations, or all recommendations.

- Clusters impacted: Set the filter to show recommendations currently impacting one or more clusters, non-impacting recommendations, or all recommendations.

- Risk of change: Select one or more values from High, Moderate, Low, and Very low indicating the risk that the implementation of the resolution could have on cluster operations.

4.4.5.1. Filtering Insights advisor service recommendations

As an OpenShift Container Platform cluster manager, you can filter the recommendations that are displayed on the recommendations list. By applying filters, you can reduce the number of reported recommendations and concentrate on your highest priority recommendations.

The following procedure demonstrates how to set and remove Category filters; however, the procedure is applicable to any of the filter types and respective values.

Prerequisites

You are logged in to the OpenShift Cluster Manager in the Hybrid Cloud Console.

Procedure

- Go to OpenShift > Advisor > Recommendations.

- In the main, filter-type drop-down list, select the Category filter type.

- Expand the filter-value drop-down list and select the checkbox next to each category of recommendation you want to view. Leave the checkboxes for unnecessary categories clear.

- Optional: Add additional filters to further refine the list.

Only recommendations from the selected categories are shown in the list.

Verification

- After applying filters, you can view the updated recommendations list. The applied filters are added next to the default filters.

4.4.5.2. Removing filters from Insights advisor service recommendations

You can apply multiple filters to the list of recommendations. When ready, you can remove them individually or completely reset them.

Removing filters individually

- Click the X icon next to each filter, including the default filters, to remove them individually.

Removing all non-default filters

- Click Reset filters to remove only the filters that you applied, leaving the default filters in place.

4.4.6. Disabling Insights advisor service recommendations

You can disable specific recommendations that affect your clusters, so that they no longer appear in your reports. It is possible to disable a recommendation for a single cluster or all of your clusters.

Disabling a recommendation for all of your clusters also applies to any future clusters.

Prerequisites

- Remote health reporting is enabled, which is the default.

- Your cluster is registered on OpenShift Cluster Manager.

- You are logged in to OpenShift Cluster Manager.

Procedure

- Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

- Optional: Use the Clusters Impacted and Status filters as needed.

Disable an alert by using one of the following methods:

To disable an alert:

-

Click the Options menu

for that alert, and then click Disable recommendation.

for that alert, and then click Disable recommendation.

- Enter a justification note and click Save.

-

Click the Options menu

To view the clusters affected by this alert before disabling the alert:

- Click the name of the recommendation to disable. You are directed to the single recommendation page.

- Review the list of clusters in the Affected clusters section.

- Click Actions → Disable recommendation to disable the alert for all of your clusters.

- Enter a justification note and click Save.

4.4.7. Enabling a previously disabled Insights advisor service recommendation

When a recommendation is disabled for all clusters, you no longer see the recommendation in the Insights advisor service. You can change this behavior.

Prerequisites

- Remote health reporting is enabled, which is the default.

- Your cluster is registered on OpenShift Cluster Manager.

- You are logged in to OpenShift Cluster Manager.

Procedure

- Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

Filter the recommendations to display on the disabled recommendations:

- From the Status drop-down menu, select Status.

- From the Filter by status drop-down menu, select Disabled.

- Optional: Clear the Clusters impacted filter.

- Locate the recommendation to enable.

-

Click the Options menu

, and then click Enable recommendation.

, and then click Enable recommendation.

4.4.8. About Insights advisor service recommendations for workloads

You can use the Red Hat Insights for OpenShift advisor service to view and manage information about recommendations that affect not only your clusters, but also your workloads. The advisor service takes advantage of deployment validation and helps OpenShift cluster administrators to see all runtime violations of deployment policies. You can see recommendations for workloads at OpenShift > Advisor > Workloads on the Red Hat Hybrid Cloud Console. For more information, see these additional resources:

- Information about Kubernetes workloads

- Boost your cluster operations with Deployment Validation and Insights Advisor for Workloads

- Identifying workload recommendations for namespaces in your clusters

- Viewing workload recommendations for namespaces in your cluster

- Excluding objects from workload recommendations in your clusters

4.4.9. Displaying the Insights status in the web console

Insights repeatedly analyzes your cluster and you can display the status of identified potential issues of your cluster in the OpenShift Container Platform web console. This status shows the number of issues in the different categories and, for further details, links to the reports in OpenShift Cluster Manager.

Prerequisites

- Your cluster is registered in OpenShift Cluster Manager.

- Remote health reporting is enabled, which is the default.

- You are logged in to the OpenShift Container Platform web console.

Procedure

- Navigate to Home → Overview in the OpenShift Container Platform web console.

Click Insights on the Status card.

The pop-up window lists potential issues grouped by risk. Click the individual categories or View all recommendations in Insights Advisor to display more details.

4.5. Using the Insights Operator

The Insights Operator periodically gathers configuration and component failure status and, by default, reports that data every two hours to Red Hat. This information enables Red Hat to assess configuration and deeper failure data than is reported through Telemetry. Users of OpenShift Container Platform can display the report in the Insights Advisor service on Red Hat Hybrid Cloud Console.

4.5.1. Configuring Insights Operator

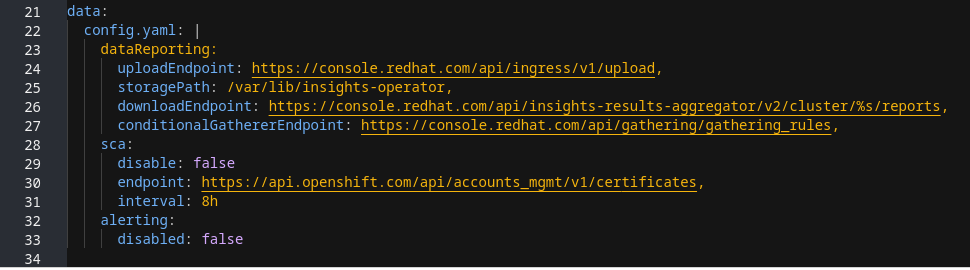

Insights Operator configuration is a combination of the default Operator configuration and the configuration that is stored in either the insights-config ConfigMap object in the openshift-insights namespace, OR in the support secret in the openshift-config namespace.

When a ConfigMap object or support secret exists, the contained attribute values override the default Operator configuration values. If both a ConfigMap object and a support secret exist, the Operator reads the ConfigMap object.

The ConfigMap object does not exist by default, so an OpenShift Container Platform cluster administrator must create it.

ConfigMap object configuration structure

This example of an insights-config ConfigMap object (config.yaml configuration) shows configuration options by using standard YAML formatting.

Configurable attributes and default values

The following table describes the available configuration attributes:

The insights-config ConfigMap object follows standard YAML formatting, wherein child values are below the parent attribute and indented two spaces. For the Obfuscation attribute, enter values as bulleted children of the parent attribute.

| Attribute name | Description | Value type | Default value |

|---|---|---|---|

enableGlobalObfuscation | Enables the global obfuscation of IP addresses and the cluster domain name. | Boolean |

|

endpoint | Sets the upload endpoint. | URL | |

clusterTransferEndpoint | The endpoint for checking and downloading cluster transfer data. | URL | https://api.openshift.com/api/accounts_mgmt/v1/cluster_transfers/ |

clusterTransferInterval | Sets the frequency for checking available cluster transfers. | Time interval |

|

conditionalGathererEndpoint | Sets the endpoint for providing conditional gathering rule definitions. | URL | |

httpProxy, httpsProxy, noProxy | Set custom proxy for Insights Operator. | URL | No default |

interval | Sets the data gathering and upload frequency. | Time interval |

|

reportEndpoint |

Specifies the endpoint for downloading the latest Insights analysis. For the specified default value, ensure that you replace | URL | https://console.redhat.com/api/insights-results-aggregator/v2/cluster/<cluster_id>/reports |

reportPullingDelay | Sets the delay between data upload and data download. | Time interval |

|

reportPullingTimeout | Sets the timeout value for the Insights download request. | Time interval |

|

reportMinRetryTime | Sets the time for when to retry a request. | Time interval |

|

scaEndpoint | Specifies the endpoint for downloading the simple content access (SCA) entitlements. | URL | https://api.openshift.com/api/accounts_mgmt/v1/entitlement_certificates |

scaInterval | Specifies the frequency of the simple content access entitlements download. | Time interval |

|

scaPullDisabled | Disables the simple content access entitlements download. | Boolean |

|

4.5.1.1. Creating the insights-config ConfigMap object

This procedure describes how to create the insights-config ConfigMap object for the Insights Operator to set custom configurations.

Red Hat recommends you consult Red Hat Support before making changes to the default Insights Operator configuration.

Prerequisites

- Remote health reporting is enabled, which is the default.

-

You are logged in to the OpenShift Container Platform web console as a user with

cluster-adminrole.

Procedure

- Go to Workloads → ConfigMaps and select Project: openshift-insights.

- Click Create ConfigMap.

Select Configure via: YAML view and enter your configuration preferences, for example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Optional: Select Form view and enter the necessary information that way.

- In the ConfigMap Name field, enter insights-config.

- In the Key field, enter config.yaml.

- For the Value field, either browse for a file to drag and drop into the field or enter your configuration parameters manually.

-

Click Create and you can see the

ConfigMapobject and configuration information.

4.5.2. Understanding Insights Operator alerts

The Insights Operator declares alerts through the Prometheus monitoring system to the Alertmanager. You can view these alerts in the Alerting UI in the OpenShift Container Platform web console by using one of the following methods:

- In the Administrator perspective, click Observe → Alerting.

- In the Developer perspective, click Observe → <project_name> → Alerts tab.

Currently, Insights Operator sends the following alerts when the conditions are met:

| Alert | Description |

|---|---|

|

| Insights Operator is disabled. |

|

| Simple content access is not enabled in Red Hat Subscription Management. |

|

| Insights has an active recommendation for the cluster. |

4.5.2.1. Disabling Insights Operator alerts

To prevent the Insights Operator from sending alerts to the cluster Prometheus instance, you create or edit the insights-config ConfigMap object.

Previously, a cluster administrator would create or edit the Insights Operator configuration using a support secret in the openshift-config namespace. Red Hat Insights now supports the creation of a ConfigMap object to configure the Operator. The Operator gives preference to the config map configuration over the support secret if both exist.

If the insights-config ConfigMap object does not exist, you must create it when you first add custom configurations. Note that configurations within the ConfigMap object take precedence over the default settings defined in the config/pod.yaml file.

Prerequisites

- Remote health reporting is enabled, which is the default.

-

You are logged in to the OpenShift Container Platform web console as

cluster-admin. -

The insights-config

ConfigMapobject exists in theopenshift-insightsnamespace.

Procedure

- Go to Workloads → ConfigMaps and select Project: openshift-insights.

-

Click on the insights-config

ConfigMapobject to open it. - Click Actions and select Edit ConfigMap.

- Click the YAML view radio button.

In the file, set the

alertingattribute todisabled: true.Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Click Save. The insights-config config-map details page opens.

-

Verify that the value of the

config.yamlalertingattribute is set todisabled: true.

After you save the changes, Insights Operator no longer sends alerts to the cluster Prometheus instance.

4.5.2.2. Enabling Insights Operator alerts

When alerts are disabled, the Insights Operator no longer sends alerts to the cluster Prometheus instance. You can reenable them.

Previously, a cluster administrator would create or edit the Insights Operator configuration using a support secret in the openshift-config namespace. Red Hat Insights now supports the creation of a ConfigMap object to configure the Operator. The Operator gives preference to the config map configuration over the support secret if both exist.

Prerequisites

- Remote health reporting is enabled, which is the default.

-

You are logged in to the OpenShift Container Platform web console as

cluster-admin. -

The insights-config

ConfigMapobject exists in theopenshift-insightsnamespace.

Procedure

- Go to Workloads → ConfigMaps and select Project: openshift-insights.

-

Click on the insights-config

ConfigMapobject to open it. - Click Actions and select Edit ConfigMap.

- Click the YAML view radio button.

In the file, set the

alertingattribute todisabled: false.Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Click Save. The insights-config config-map details page opens.

-

Verify that the value of the

config.yamlalertingattribute is set todisabled: false.

After you save the changes, Insights Operator again sends alerts to the cluster Prometheus instance.

4.5.3. Downloading your Insights Operator archive

Insights Operator stores gathered data in an archive located in the openshift-insights namespace of your cluster. You can download and review the data that is gathered by the Insights Operator.

Prerequisites

-

You have access to the cluster as a user with the

cluster-adminrole.

Procedure

Find the name of the running pod for the Insights Operator:

oc get pods --namespace=openshift-insights -o custom-columns=:metadata.name --no-headers --field-selector=status.phase=Running

$ oc get pods --namespace=openshift-insights -o custom-columns=:metadata.name --no-headers --field-selector=status.phase=RunningCopy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the recent data archives collected by the Insights Operator:

oc cp openshift-insights/<insights_operator_pod_name>:/var/lib/insights-operator ./insights-data

$ oc cp openshift-insights/<insights_operator_pod_name>:/var/lib/insights-operator ./insights-data1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Replace

<insights_operator_pod_name>with the pod name output from the preceding command.

The recent Insights Operator archives are now available in the insights-data directory.

4.5.4. Running an Insights Operator gather operation

You can run Insights Operator data gather operations on demand. The following procedures describe how to run the default list of gather operations using the OpenShift web console or CLI. You can customize the on demand gather function to exclude any gather operations you choose. Disabling gather operations from the default list degrades Insights Advisor’s ability to offer effective recommendations for your cluster. If you have previously disabled Insights Operator gather operations in your cluster, this procedure will override those parameters.

The DataGather custom resource is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

If you enable Technology Preview in your cluster, the Insights Operator runs gather operations in individual pods. This is part of the Technology Preview feature set for the Insights Operator and supports the new data gathering features.

4.5.4.1. Viewing Insights Operator gather durations

You can view the time it takes for the Insights Operator to gather the information contained in the archive. This helps you to understand Insights Operator resource usage and issues with Insights Advisor.

Prerequisites

- A recent copy of your Insights Operator archive.

Procedure

From your archive, open

/insights-operator/gathers.json.The file contains a list of Insights Operator gather operations:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

duration_in_msis the amount of time in milliseconds for each gather operation.

- Inspect each gather operation for abnormalities.

4.5.4.2. Running an Insights Operator gather operation using the web console

You can run an Insights Operator gather operation using the OpenShift Container Platform web console.

Prerequisites

-

You are logged in to the OpenShift Container Platform web console as a user with the

cluster-adminrole.

Procedure

- Navigate to Administration → CustomResourceDefinitions.

- On the CustomResourceDefinitions page, use the Search by name field to find the DataGather resource definition and click it.

- On the CustomResourceDefinition details page, click the Instances tab.

- Click Create DataGather.

To create a new

DataGatheroperation, edit the configuration file:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Replace the

<your_data_gather>with a unique name for your gather operation. - 2

- Enter individual gather operations to disable under the

gatherersparameter. This example disables theworkloadsdata gather operation and will run the remainder of the default operations. To run the complete list of default gather operations, leave thespecparameter empty. You can find the complete list of gather operations in the Insights Operator documentation.

- Click Save.

Verification

- Navigate to Workloads → Pods.

- On the Pods page, select the Project pulldown menu, and then turn on Show default projects.

-

Select the

openshift-insightsproject from the Project pulldown menu. -

Check that your new gather operation is prefixed with your chosen name under the list of pods in the

openshift-insightsproject. Upon completion, the Insights Operator automatically uploads the data to Red Hat for processing.

4.5.4.3. Running an Insights Operator gather operation using the OpenShift CLI

You can run an Insights Operator gather operation using the OpenShift Container Platform command-line interface.

Prerequisites

-

You are logged in to OpenShift Container Platform as a user with the

cluster-adminrole.

Procedure

Enter the following command to run the gather operation:

oc apply -f <your_datagather_definition>.yaml

$ oc apply -f <your_datagather_definition>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

<your_datagather_definition>.yamlwith a configuration file using the following parameters:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Replace the

<your_data_gather>with a unique name for your gather operation. - 2

- Enter individual gather operations to disable under the

gatherersparameter. This example disables theworkloadsdata gather operation and will run the remainder of the default operations. To run the complete list of default gather operations, leave thespecparameter empty. You can find the complete list of gather operations in the Insights Operator documentation.

Verification

-

Check that your new gather operation is prefixed with your chosen name under the list of pods in the

openshift-insightsproject. Upon completion, the Insights Operator automatically uploads the data to Red Hat for processing.

4.5.4.4. Disabling the Insights Operator gather operations

You can disable the Insights Operator gather operations. Disabling the gather operations gives you the ability to increase privacy for your organization as Insights Operator will no longer gather and send Insights cluster reports to Red Hat. This will disable Insights analysis and recommendations for your cluster without affecting other core functions that require communication with Red Hat such as cluster transfers. You can view a list of attempted gather operations for your cluster from the /insights-operator/gathers.json file in your Insights Operator archive. Be aware that some gather operations only occur when certain conditions are met and might not appear in your most recent archive.

The InsightsDataGather custom resource is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

If you enable Technology Preview in your cluster, the Insights Operator runs gather operations in individual pods. This is part of the Technology Preview feature set for the Insights Operator and supports the new data gathering features.

Prerequisites

-

You are logged in to the OpenShift Container Platform web console as a user with the

cluster-adminrole.

Procedure

- Navigate to Administration → CustomResourceDefinitions.

- On the CustomResourceDefinitions page, use the Search by name field to find the InsightsDataGather resource definition and click it.

- On the CustomResourceDefinition details page, click the Instances tab.

- Click cluster, and then click the YAML tab.

Disable the gather operations by performing one of the following edits to the

InsightsDataGatherconfiguration file:To disable all the gather operations, enter

allunder thedisabledGathererskey:Copy to Clipboard Copied! Toggle word wrap Toggle overflow To disable individual gather operations, enter their values under the

disabledGathererskey:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Example individual gather operation

Click Save.

After you save the changes, the Insights Operator gather configurations are updated and the operations will no longer occur.

Disabling gather operations degrades the Insights advisor service’s ability to offer effective recommendations for your cluster.

4.5.4.5. Enabling the Insights Operator gather operations

You can enable the Insights Operator gather operations, if the gather operations have been disabled.

The InsightsDataGather custom resource is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Prerequisites

-

You are logged in to the OpenShift Container Platform web console as a user with the

cluster-adminrole.

Procedure

- Navigate to Administration → CustomResourceDefinitions.

- On the CustomResourceDefinitions page, use the Search by name field to find the InsightsDataGather resource definition and click it.

- On the CustomResourceDefinition details page, click the Instances tab.

- Click cluster, and then click the YAML tab.

Enable the gather operations by performing one of the following edits:

To enable all disabled gather operations, remove the

gatherConfigstanza:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Remove the

gatherConfigstanza to enable all gather operations.

To enable individual gather operations, remove their values under the

disabledGathererskey:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Remove one or more gather operations.

Click Save.

After you save the changes, the Insights Operator gather configurations are updated and the affected gather operations start.

Disabling gather operations degrades Insights Advisor’s ability to offer effective recommendations for your cluster.

4.5.5. Obfuscating Deployment Validation Operator data

By default, when you install the Deployment Validation Operator (DVO), the name and unique identifier (UID) of a resource are included in the data that is captured and processed by the Insights Operator for OpenShift Container Platform. If you are a cluster administrator, you can configure the Insights Operator to obfuscate data from the Deployment Validation Operator (DVO). For example, you can obfuscate workload names in the archive file that is then sent to Red Hat.

To obfuscate the name of resources, you must manually set the obfuscation attribute in the insights-config ConfigMap object to include the workload_names value, as outlined in the following procedure.

Prerequisites

- Remote health reporting is enabled, which is the default.

- You are logged in to the OpenShift Container Platform web console with the "cluster-admin" role.

-

The insights-config

ConfigMapobject exists in theopenshift-insightsnamespace. - The cluster is self managed and the Deployment Validation Operator is installed.

Procedure

- Go to Workloads → ConfigMaps and select Project: openshift-insights.

-

Click the

insights-configConfigMapobject to open it. - Click Actions and select Edit ConfigMap.

- Click the YAML view radio button.

In the file, set the

obfuscationattribute with theworkload_namesvalue.Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Click Save. The insights-config config-map details page opens.

-

Verify that the value of the

config.yamlobfuscationattribute is set to- workload_names.

4.6. Using remote health reporting in a restricted network

You can manually gather and upload Insights Operator archives to diagnose issues from a restricted network.

To use the Insights Operator in a restricted network, you must:

- Create a copy of your Insights Operator archive.

- Upload the Insights Operator archive to console.redhat.com.

Additionally, you can select to obfuscate the Insights Operator data before upload.

4.6.1. Running an Insights Operator gather operation

You must run a gather operation to create an Insights Operator archive.

Prerequisites

-

You are logged in to OpenShift Container Platform as

cluster-admin.

Procedure

Create a file named

gather-job.yamlusing this template:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy your

insights-operatorimage version:oc get -n openshift-insights deployment insights-operator -o yaml

$ oc get -n openshift-insights deployment insights-operator -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Specifies your

insights-operatorimage version.

Paste your image version in

gather-job.yaml:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Replace any existing value with your

insights-operatorimage version.

Create the gather job:

oc apply -n openshift-insights -f gather-job.yaml

$ oc apply -n openshift-insights -f gather-job.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Find the name of the job pod:

oc describe -n openshift-insights job/insights-operator-job

$ oc describe -n openshift-insights job/insights-operator-jobCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - where

-

insights-operator-job-<your_job>is the name of the pod.

Verify that the operation has finished:

oc logs -n openshift-insights insights-operator-job-<your_job> insights-operator

$ oc logs -n openshift-insights insights-operator-job-<your_job> insights-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

I0407 11:55:38.192084 1 diskrecorder.go:34] Wrote 108 records to disk in 33ms

I0407 11:55:38.192084 1 diskrecorder.go:34] Wrote 108 records to disk in 33msCopy to Clipboard Copied! Toggle word wrap Toggle overflow Save the created archive:

oc cp openshift-insights/insights-operator-job-<your_job>:/var/lib/insights-operator ./insights-data

$ oc cp openshift-insights/insights-operator-job-<your_job>:/var/lib/insights-operator ./insights-dataCopy to Clipboard Copied! Toggle word wrap Toggle overflow Clean up the job:

oc delete -n openshift-insights job insights-operator-job

$ oc delete -n openshift-insights job insights-operator-jobCopy to Clipboard Copied! Toggle word wrap Toggle overflow

4.6.2. Uploading an Insights Operator archive

You can manually upload an Insights Operator archive to console.redhat.com to diagnose potential issues.

Prerequisites

-

You are logged in to OpenShift Container Platform as

cluster-admin. - You have a workstation with unrestricted internet access.

- You have created a copy of the Insights Operator archive.

Procedure

Download the

dockerconfig.jsonfile:oc extract secret/pull-secret -n openshift-config --to=.

$ oc extract secret/pull-secret -n openshift-config --to=.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy your

"cloud.openshift.com""auth"token from thedockerconfig.jsonfile:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Upload the archive to console.redhat.com:

curl -v -H "User-Agent: insights-operator/one10time200gather184a34f6a168926d93c330 cluster/<cluster_id>" -H "Authorization: Bearer <your_token>" -F "upload=@<path_to_archive>; type=application/vnd.redhat.openshift.periodic+tar" https://console.redhat.com/api/ingress/v1/upload

$ curl -v -H "User-Agent: insights-operator/one10time200gather184a34f6a168926d93c330 cluster/<cluster_id>" -H "Authorization: Bearer <your_token>" -F "upload=@<path_to_archive>; type=application/vnd.redhat.openshift.periodic+tar" https://console.redhat.com/api/ingress/v1/uploadCopy to Clipboard Copied! Toggle word wrap Toggle overflow where

<cluster_id>is your cluster ID,<your_token>is the token from your pull secret, and<path_to_archive>is the path to the Insights Operator archive.If the operation is successful, the command returns a

"request_id"and"account_number":Example output

* Connection #0 to host console.redhat.com left intact {"request_id":"393a7cf1093e434ea8dd4ab3eb28884c","upload":{"account_number":"6274079"}}%* Connection #0 to host console.redhat.com left intact {"request_id":"393a7cf1093e434ea8dd4ab3eb28884c","upload":{"account_number":"6274079"}}%Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification steps

- Log in to https://console.redhat.com/openshift.

- Click the Clusters menu in the left pane.

- To display the details of the cluster, click the cluster name.

Open the Insights Advisor tab of the cluster.

If the upload was successful, the tab displays one of the following:

- Your cluster passed all recommendations, if Insights Advisor did not identify any issues.

- A list of issues that Insights Advisor has detected, prioritized by risk (low, moderate, important, and critical).

4.6.3. Enabling Insights Operator data obfuscation

You can enable obfuscation to mask sensitive and identifiable IPv4 addresses and cluster base domains that the Insights Operator sends to console.redhat.com.

Although this feature is available, Red Hat recommends keeping obfuscation disabled for a more effective support experience.

Obfuscation assigns non-identifying values to cluster IPv4 addresses, and uses a translation table that is retained in memory to change IP addresses to their obfuscated versions throughout the Insights Operator archive before uploading the data to console.redhat.com.

For cluster base domains, obfuscation changes the base domain to a hardcoded substring. For example, cluster-api.openshift.example.com becomes cluster-api.<CLUSTER_BASE_DOMAIN>.

The following procedure enables obfuscation using the support secret in the openshift-config namespace.

Prerequisites

-

You are logged in to the OpenShift Container Platform web console as

cluster-admin.

Procedure

- Navigate to Workloads → Secrets.

- Select the openshift-config project.

- Search for the support secret using the Search by name field. If it does not exist, click Create → Key/value secret to create it.

-

Click the Options menu

, and then click Edit Secret.

, and then click Edit Secret.

- Click Add Key/Value.

-

Create a key named

enableGlobalObfuscationwith a value oftrue, and click Save. - Navigate to Workloads → Pods

-

Select the

openshift-insightsproject. -

Find the

insights-operatorpod. -

To restart the

insights-operatorpod, click the Options menu , and then click Delete Pod.

, and then click Delete Pod.

Verification

- Navigate to Workloads → Secrets.

- Select the openshift-insights project.

- Search for the obfuscation-translation-table secret using the Search by name field.

If the obfuscation-translation-table secret exists, then obfuscation is enabled and working.

Alternatively, you can inspect /insights-operator/gathers.json in your Insights Operator archive for the value "is_global_obfuscation_enabled": true.

4.7. Importing simple content access entitlements with Insights Operator