Hosted control planes

Using hosted control planes with OpenShift Container Platform

Abstract

Chapter 1. Hosted control planes release notes

Release notes contain information about new and deprecated features, changes, and known issues.

1.1. Hosted control planes release notes for OpenShift Container Platform 4.17

With this release, hosted control planes for OpenShift Container Platform 4.17 is available. Hosted control planes for OpenShift Container Platform 4.17 supports the multicluster engine for Kubernetes Operator version 2.7.

1.1.1. New features and enhancements

This release adds improvements related to the following concepts:

1.1.1.1. Custom taints and tolerations (Technology Preview)

You can now apply tolerations to hosted control plane pods by using the hcp CLI -tolerations argument or by using the hc.Spec.Tolerations API file. This feature is available as a Technology Preview feature. For more information, see Custom taints and tolerations.

1.1.1.2. Support for NVIDIA GPU devices on OpenShift Virtualization (Technology Preview)

For hosted control planes on OpenShift Virtualization, you can attach one or more NVIDIA graphics processing unit (GPU) devices to node pools. This feature is available as a Technology Preview feature. For more information, see Attaching NVIDIA GPU devices by using the hcp CLI and Attaching NVIDIA GPU devices by using the NodePool resource.

1.1.1.3. Support for tenancy on AWS

When you create a hosted cluster on AWS, you can indicate whether the EC2 instance should run on shared or single-tenant hardware. For more information, see Creating a hosted cluster on AWS.

1.1.1.4. Support for OpenShift Container Platform versions in hosted clusters

You can deploy a range of supported OpenShift Container Platform versions in a hosted cluster. For more information, see Supported OpenShift Container Platform versions in a hosted cluster.

1.1.1.5. Hosted control planes on OpenShift Virtualization in a disconnected environment is Generally Available

In this release, hosted control planes on OpenShift Virtualization in a disconnected environment is Generally Available. For more information, see Deploying hosted control planes on OpenShift Virtualization in a disconnected environment.

1.1.1.6. Hosted control planes for an ARM64 OpenShift Container Platform cluster on AWS is Generally Available

In this release, hosted control planes for an ARM64 OpenShift Container Platform cluster on AWS is Generally Available. For more information, see Running hosted clusters on an ARM64 architecture.

1.1.1.7. Hosted control planes on IBM Z is Generally Available

In this release, hosted control planes on IBM Z is Generally Available. For more information, see Deploying hosted control planes on IBM Z.

1.1.1.8. Hosted control planes on IBM Power is Generally Available

In this release, hosted control planes on IBM Power is Generally Available. For more information, see Deploying hosted control planes on IBM Power.

1.1.2. Bug fixes

- Previously, when a hosted cluster proxy was configured and it used an identity provider (IDP) that had an HTTP or HTTPS endpoint, the hostname of the IDP was not resolved before traffic was sent through the proxy. Consequently, hostnames that could only be resolved by the data plane failed to resolve for IDPs. With this update, a DNS lookup is performed before sending IDP traffic through the konnectivity tunnel. As a result, IDPs with hostnames that can only be resolved by the data plane can be verified by the Control Plane Operator. (OCPBUGS-41371)

- Previously, when the hosted cluster controllerAvailabilityPolicy was set to SingleReplica, podAntiAffinity on networking components blocked the availability of the components. With this release, the issue is resolved. (OCPBUGS-39313)

- Previously, the AdditionalTrustedCA that was specified in the hosted cluster image configuration was not reconciled into the openshift-config namespace, as expected by the image-registry-operator, and the component did not become available. With this release, the issue is resolved. (OCPBUGS-39225)

- Previously, Red Hat HyperShift periodic conformance jobs failed because of changes to the core operating system. These failed jobs caused the OpenShift API deployment to fail. With this release, an update recursively copies individual trusted certificate authority (CA) certificates instead of copying a single file, so that the periodic conformance jobs succeed and the OpenShift API runs as expected. (OCPBUGS-38941)

- Previously, the Konnectivity proxy agent in a hosted cluster always sent all TCP traffic through an HTTP/S proxy. It also ignored host names in the NO_PROXY configuration because it only received resolved IP addresses in its traffic. As a consequence, traffic that was not meant to be proxied, such as LDAP traffic, was proxied regardless of configuration. With this release, proxying is completed at the source (control plane) and the Konnectivity agent proxying configuration is removed. As a result, traffic that is not meant to be proxied, such as LDAP traffic, is not proxied anymore. The NO_PROXY configuration that includes host names is honored. (OCPBUGS-38637)

- Previously, the azure-disk-csi-driver-controller image was not getting appropriate override values when using registryOverride. This was intentional so as to avoid propagating the values to the azure-disk-csi-driver data plane images. With this update, the issue is resolved by adding a separate image override value. As a result, the azure-disk-csi-driver-controller can be used with registryOverride and no longer affects azure-disk-csi-driver data plane images. (OCPBUGS-38183)

- Previously, the AWS cloud controller manager within a hosted control plane that was running on a proxied management cluster would not use the proxy for cloud API communication. With this release, the issue is fixed. (OCPBUGS-37832)

- Previously, proxying for Operators that run in the control plane of a hosted cluster was performed through proxy settings on the Konnectivity agent pod that runs in the data plane. It was not possible to distinguish if proxying was needed based on application protocol.

  For parity with OpenShift Container Platform, IDP communication via HTTPS or HTTP should be proxied, but LDAP communication should not be proxied. This type of proxying also ignores NO_PROXY entries that rely on host names because by the time traffic reaches the Konnectivity agent, only the destination IP address is available.

  With this release, in hosted clusters, proxy is invoked in the control plane through konnectivity-https-proxy and konnectivity-socks5-proxy, and proxying traffic is stopped from the Konnectivity agent. As a result, traffic that is destined for LDAP servers is no longer proxied. Other HTTPS or HTTP traffic is proxied correctly. The NO_PROXY setting is honored when you specify hostnames. (OCPBUGS-37052)

- Previously, proxying for IDP communication occurred in the Konnectivity agent. By the time traffic reached Konnectivity, its protocol and hostname were no longer available. As a consequence, proxying was not done correctly for the OAUTH server pod. It did not distinguish between protocols that require proxying (http/s) and protocols that do not (ldap://). In addition, it did not honor the no_proxy variable that is configured in the HostedCluster.spec.configuration.proxy spec.

  With this release, you can configure the proxy on the Konnectivity sidecar of the OAUTH server so that traffic is routed appropriately, honoring your no_proxy settings. As a result, the OAUTH server can communicate properly with identity providers when a proxy is configured for the hosted cluster. (OCPBUGS-36932)

- Previously, the Hosted Cluster Config Operator (HCCO) did not delete the ImageDigestMirrorSet CR (IDMS) after you removed the ImageContentSources field from the HostedCluster object. As a consequence, the IDMS persisted in the HostedCluster object when it should not. With this release, the HCCO manages the deletion of IDMS resources from the HostedCluster object. (OCPBUGS-34820)

- Previously, deploying a hostedCluster in a disconnected environment required setting the hypershift.openshift.io/control-plane-operator-image annotation. With this update, the annotation is no longer needed. Additionally, the metadata inspector works as expected during the hosted Operator reconciliation, and OverrideImages is populated as expected. (OCPBUGS-34734)

- Previously, hosted clusters on AWS leveraged their VPC’s primary CIDR range to generate security group rules on the data plane. As a consequence, if you installed a hosted cluster into an AWS VPC with multiple CIDR ranges, the generated security group rules could be insufficient. With this update, security group rules are generated based on the provided machine CIDR range to resolve this issue. (OCPBUGS-34274)

- Previously, the OpenShift Cluster Manager container did not have the right TLS certificates. As a consequence, you could not use image streams in disconnected deployments. With this release, the TLS certificates are added as projected volumes to resolve this issue. (OCPBUGS-31446)

- Previously, the bulk destroy option in the multicluster engine for Kubernetes Operator console for OpenShift Virtualization did not destroy a hosted cluster. With this release, this issue is resolved. (ACM-10165)

- Previously, updates to the additionalTrustBundle parameter in a hosted control planes cluster configuration were not applied to compute nodes. With this release, a fix ensures that updates to the additionalTrustBundle parameter automatically apply to compute nodes that exist in your hosted control planes cluster. If you update to the version that contains this fix, an automatic rollout of the existing nodes occurs to pick up the bundle. (OCPBUGS-36680)
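The two proxy fixes above (OCPBUGS-38637 and OCPBUGS-37052) depend on one detail: a host name entry in NO_PROXY can be honored only where the host name is still known. The following Python sketch illustrates that point with a simplified matcher. The function name bypasses_proxy and the matching rules are assumptions made for illustration; this is not the Konnectivity or control plane implementation.

```python
import ipaddress


def bypasses_proxy(destination: str, no_proxy: str) -> bool:
    """Return True if destination matches an entry in a NO_PROXY-style list.

    Simplified, illustrative rules: exact host, domain suffix, exact IP
    address, or CIDR membership.
    """
    entries = [e.strip() for e in no_proxy.split(",") if e.strip()]

    # Determine whether the caller only knows an IP address.
    try:
        dest_ip = ipaddress.ip_address(destination)
    except ValueError:
        dest_ip = None

    for entry in entries:
        if dest_ip is not None:
            # With only an IP address, host name entries can never match.
            try:
                if dest_ip in ipaddress.ip_network(entry, strict=False):
                    return True
            except ValueError:
                continue  # entry is a host name, not an IP or CIDR
        else:
            suffix = entry.lstrip(".")
            if destination == suffix or destination.endswith("." + suffix):
                return True
    return False


# Evaluated where the host name is still known (the control plane side):
print(bypasses_proxy("ldap.example.com", "ldap.example.com,10.0.0.0/8"))
# Evaluated where only the resolved IP address is left (the agent side):
print(bypasses_proxy("192.0.2.10", "ldap.example.com"))
```

In this sketch, the same NO_PROXY value gives different answers depending on whether the host name or only the resolved address is available, which is why the fixes move the proxy decision to the control plane.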

1.1.3. Known issues

- If the annotation and the ManagedCluster resource name do not match, the multicluster engine for Kubernetes Operator console displays the cluster as Pending import. The cluster cannot be used by the multicluster engine Operator. The same issue happens when there is no annotation and the ManagedCluster name does not match the Infra-ID value of the HostedCluster resource.

- When you use the multicluster engine for Kubernetes Operator console to add a new node pool to an existing hosted cluster, the same version of OpenShift Container Platform might appear more than once in the list of options. You can select any instance in the list for the version that you want.

- When a node pool is scaled down to 0 workers, the list of hosts in the console still shows nodes in a Ready state. You can verify the number of nodes in two ways:

  - In the console, go to the node pool and verify that it has 0 nodes.

  - On the command-line interface, run the following commands:

    - Verify that 0 nodes are in the node pool by running the following command:

      $ oc get nodepool -A

    - Verify that 0 nodes are in the cluster by running the following command:

      $ oc get nodes --kubeconfig

    - Verify that 0 agents are reported as bound to the cluster by running the following command:

      $ oc get agents -A

- When you create a hosted cluster in an environment that uses the dual-stack network, you might encounter the following DNS-related issues:

  - CrashLoopBackOff state in the service-ca-operator pod: When the pod tries to reach the Kubernetes API server through the hosted control plane, the pod cannot reach the server because the data plane proxy in the kube-system namespace cannot resolve the request. This issue occurs because in the HAProxy setup, the front end uses an IP address and the back end uses a DNS name that the pod cannot resolve.

  - Pods stuck in ContainerCreating state: This issue occurs because the openshift-service-ca-operator cannot generate the metrics-tls secret that the DNS pods need for DNS resolution. As a result, the pods cannot resolve the Kubernetes API server.

  To resolve these issues, configure the DNS server settings for a dual-stack network.

- On the Agent platform, the hosted control planes feature periodically rotates the token that the Agent uses to pull ignition. As a result, if you have an Agent resource that was created some time ago, it might fail to pull ignition. As a workaround, in the Agent specification, delete the secret of the IgnitionEndpointTokenReference property, and then add or modify any label on the Agent resource. The system re-creates the secret with the new token.

- If you created a hosted cluster in the same namespace as its managed cluster, detaching the managed hosted cluster deletes everything in the managed cluster namespace, including the hosted cluster. The following situations can create a hosted cluster in the same namespace as its managed cluster:

  - You created a hosted cluster on the Agent platform through the multicluster engine for Kubernetes Operator console by using the default hosted cluster namespace.

  - You created a hosted cluster through the command-line interface or API by specifying the hosted cluster namespace to be the same as the hosted cluster name.

- If you configured a cluster-wide proxy for a new hosted cluster, the deployment of that cluster might fail because worker nodes cannot reach the Kubernetes API server when a cluster-wide proxy is configured. To resolve this problem, in the configuration file for the hosted cluster, add any of the following information to the noProxy field so that the traffic from the data plane to the Kubernetes API server skips the proxy:

  - The external API address.

  - The internal API address. The default is 172.20.0.1.

  - The phrase kubernetes.

  - The service network CIDR.

  - The cluster network CIDR.
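As a rough illustration of the noProxy workaround, the following Python sketch assembles a comma-separated value from the entries in the preceding list. The helper name build_no_proxy and the example host name and CIDR values are hypothetical, and the sketch includes every entry even though the text says that any of them can be added.

```python
import ipaddress


def build_no_proxy(external_api: str,
                   service_cidr: str,
                   cluster_cidr: str,
                   internal_api: str = "172.20.0.1") -> str:
    """Join the suggested entries into a comma-separated noProxy value."""
    # Fail early if a CIDR or the internal API address is malformed.
    ipaddress.ip_network(service_cidr)
    ipaddress.ip_network(cluster_cidr)
    ipaddress.ip_address(internal_api)

    entries = [external_api, internal_api, "kubernetes",
               service_cidr, cluster_cidr]

    # Drop duplicates while keeping the original order.
    unique = []
    for entry in entries:
        if entry not in unique:
            unique.append(entry)
    return ",".join(unique)


# Hypothetical values for illustration only.
print(build_no_proxy("api.example.test", "172.31.0.0/16", "10.132.0.0/14"))
```

You would then place the resulting string in the noProxy field of the hosted cluster proxy configuration.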

1.1.4. Generally Available and Technology Preview features

Features that are Generally Available (GA) are fully supported and are suitable for production use. Technology Preview (TP) features are experimental features and are not intended for production use. For more information about TP features, see the Technology Preview scope of support on the Red Hat Customer Portal.

For IBM Power and IBM Z, you must run the control plane on machine types based on 64-bit x86 architecture, and node pools on IBM Power or IBM Z.

The following table lists the GA and TP status of hosted control planes features by release:

| Feature | 4.15 | 4.16 | 4.17 |

|---|---|---|---|

| Hosted control planes for OpenShift Container Platform on Amazon Web Services (AWS) | Technology Preview | Generally Available | Generally Available |

| Hosted control planes for OpenShift Container Platform on bare metal | Generally Available | Generally Available | Generally Available |

| Hosted control planes for OpenShift Container Platform on OpenShift Virtualization | Generally Available | Generally Available | Generally Available |

| Hosted control planes for OpenShift Container Platform using non-bare-metal agent machines | Technology Preview | Technology Preview | Technology Preview |

| Hosted control planes for an ARM64 OpenShift Container Platform cluster on Amazon Web Services | Technology Preview | Technology Preview | Generally Available |

| Hosted control planes for OpenShift Container Platform on IBM Power | Technology Preview | Technology Preview | Generally Available |

| Hosted control planes for OpenShift Container Platform on IBM Z | Technology Preview | Technology Preview | Generally Available |

| Hosted control planes for OpenShift Container Platform on RHOSP | Not Available | Not Available | Developer Preview |

Chapter 2. Hosted control planes overview

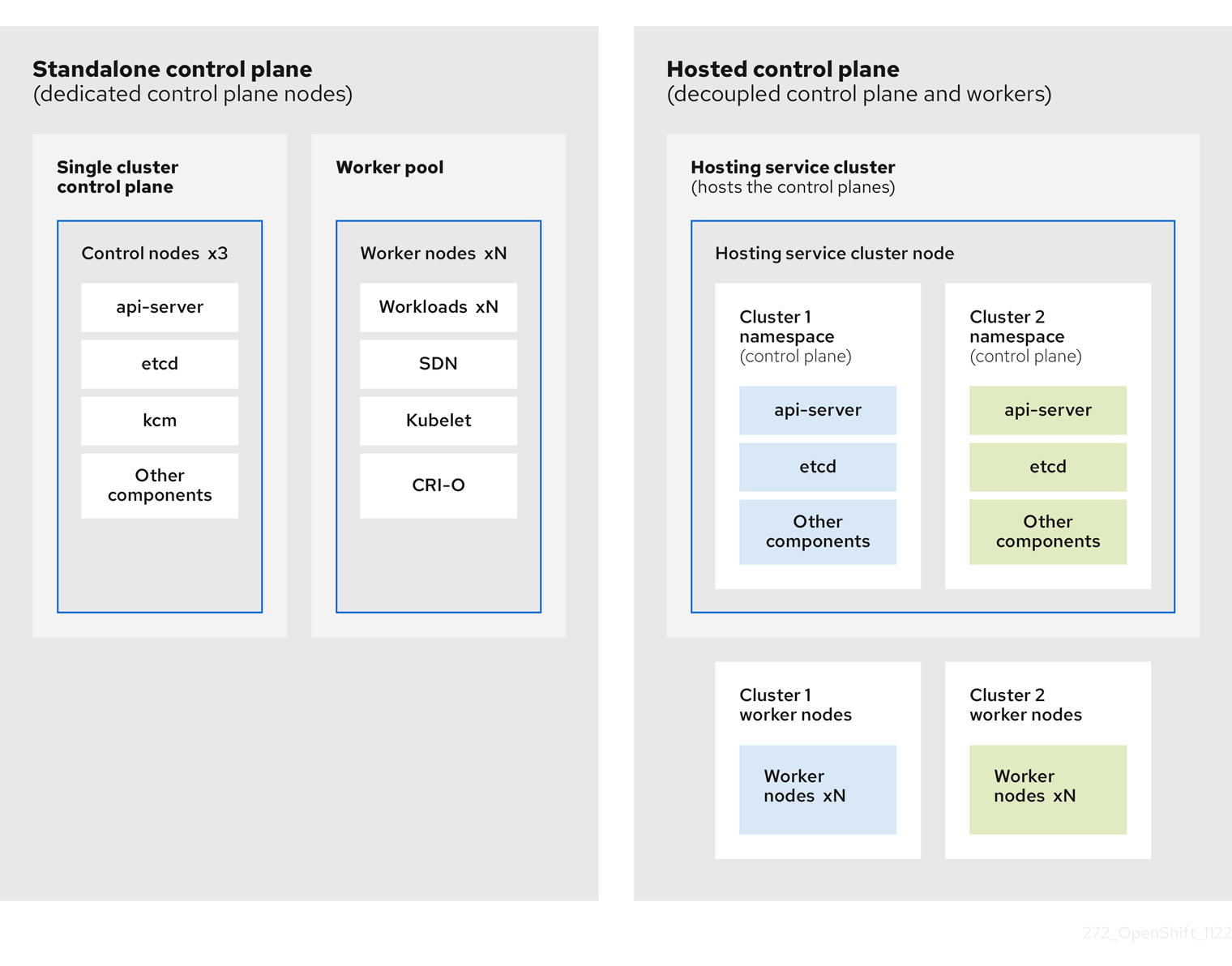

You can deploy OpenShift Container Platform clusters by using two different control plane configurations: standalone or hosted control planes. The standalone configuration uses dedicated virtual machines or physical machines to host the control plane. With hosted control planes for OpenShift Container Platform, you create control planes as pods on a management cluster without the need for dedicated virtual or physical machines for each control plane.

2.1. Introduction to hosted control planes

Hosted control planes is available by using a supported version of multicluster engine for Kubernetes Operator on the following platforms:

- Bare metal by using the Agent provider

- Non-bare-metal Agent machines, as a Technology Preview feature

- OpenShift Virtualization

- Amazon Web Services (AWS)

- IBM Z

- IBM Power

The hosted control planes feature is enabled by default.

The multicluster engine Operator is an integral part of Red Hat Advanced Cluster Management (RHACM) and is enabled by default with RHACM. However, you do not need RHACM in order to use hosted control planes.

2.1.1. Architecture of hosted control planes

OpenShift Container Platform is often deployed in a coupled, or standalone, model, where a cluster consists of a control plane and a data plane. The control plane includes an API endpoint, a storage endpoint, a workload scheduler, and an actuator that ensures state. The data plane includes compute, storage, and networking where workloads and applications run.

The standalone control plane is hosted by a dedicated group of nodes, which can be physical or virtual, with a minimum number to ensure quorum. The network stack is shared. Administrator access to a cluster offers visibility into the cluster’s control plane, machine management APIs, and other components that contribute to the state of a cluster.

Although the standalone model works well, some situations require an architecture where the control plane and data plane are decoupled. In those cases, the data plane is on a separate network domain with a dedicated physical hosting environment. The control plane is hosted by using high-level primitives such as deployments and stateful sets that are native to Kubernetes. The control plane is treated as any other workload.

2.1.2. Benefits of hosted control planes

With hosted control planes, you can pave the way for a true hybrid-cloud approach and enjoy several other benefits.

- The security boundaries between management and workloads are stronger because the control plane is decoupled and hosted on a dedicated hosting service cluster. As a result, you are less likely to leak credentials for clusters to other users. Because infrastructure secret account management is also decoupled, cluster infrastructure administrators cannot accidentally delete control plane infrastructure.

- With hosted control planes, you can run many control planes on fewer nodes. As a result, clusters are more affordable.

- Because the control planes consist of pods that are launched on OpenShift Container Platform, control planes start quickly. The same principles apply to control planes and workloads, such as monitoring, logging, and auto-scaling.

- From an infrastructure perspective, you can push registries, HAProxy, cluster monitoring, storage nodes, and other infrastructure components to the tenant’s cloud provider account, isolating usage to the tenant.

- From an operational perspective, multicluster management is more centralized, which results in fewer external factors that affect the cluster status and consistency. Site reliability engineers have a central place to debug issues and navigate to the cluster data plane, which can lead to shorter Time to Resolution (TTR) and greater productivity.

2.2. Differences between hosted control planes and OpenShift Container Platform

Hosted control planes is a form factor of OpenShift Container Platform. Hosted clusters and standalone OpenShift Container Platform clusters are configured and managed differently. See the following tables to understand the differences between OpenShift Container Platform and hosted control planes:

2.2.1. Cluster creation and lifecycle

| OpenShift Container Platform | Hosted control planes |

|---|---|

| You install a standalone OpenShift Container Platform cluster by using the | You install a hosted cluster by using the |

2.2.2. Cluster configuration

| OpenShift Container Platform | Hosted control planes |

|---|---|

| You configure cluster-scoped resources such as authentication, API server, and proxy by using the | You configure resources that impact the control plane in the |

2.2.3. etcd encryption

| OpenShift Container Platform | Hosted control planes |

|---|---|

| You configure etcd encryption by using the | You configure etcd encryption by using the |

2.2.4. Operators and control plane

| OpenShift Container Platform | Hosted control planes |

|---|---|

| A standalone OpenShift Container Platform cluster contains separate Operators for each control plane component. | A hosted cluster contains a single Operator named Control Plane Operator that runs in the hosted control plane namespace on the management cluster. |

| etcd uses storage that is mounted on the control plane nodes. The etcd cluster Operator manages etcd. | etcd uses a persistent volume claim for storage and is managed by the Control Plane Operator. |

| The Ingress Operator, network related Operators, and Operator Lifecycle Manager (OLM) run on the cluster. | The Ingress Operator, network related Operators, and Operator Lifecycle Manager (OLM) run in the hosted control plane namespace on the management cluster. |

| The OAuth server runs inside the cluster and is exposed through a route in the cluster. | The OAuth server runs inside the control plane and is exposed through a route, node port, or load balancer on the management cluster. |

2.2.5. Updates

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The Cluster Version Operator (CVO) orchestrates the update process and monitors the | The hosted control planes update results in a change to the |

| After you update an OpenShift Container Platform cluster, both the control plane and compute machines are updated. | After you update the hosted cluster, only the control plane is updated. You perform node pool updates separately. |

2.2.6. Machine configuration and management

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The | The |

| A set of control plane machines is available. | A set of control plane machines does not exist. |

| You enable a machine health check by using the | You enable a machine health check through the |

| You enable autoscaling by using the | You enable autoscaling through the |

| Machines and machine sets are exposed in the cluster. | Machines, machine sets, and machine deployments from upstream Cluster CAPI Operator are used to manage machines but are not exposed to the user. |

| All machine sets are upgraded automatically when you update the cluster. | You update your node pools independently from the hosted cluster updates. |

| Only an in-place upgrade is supported in the cluster. | Both replace and in-place upgrades are supported in the hosted cluster. |

| The Machine Config Operator manages configurations for machines. | The Machine Config Operator does not exist in hosted control planes. |

| You configure machine Ignition by using the | You configure the |

| The Machine Config Daemon (MCD) manages configuration changes and updates on each of the nodes. | For an in-place upgrade, the node pool controller creates a run-once pod that updates a machine based on your configuration. |

| You can modify the machine configuration resources such as the SR-IOV Operator. | You cannot modify the machine configuration resources. |

2.2.7. Networking

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The Kube API server communicates with nodes directly, because the Kube API server and nodes exist in the same Virtual Private Cloud (VPC). | The Kube API server communicates with nodes through Konnectivity. The Kube API server and nodes exist in different Virtual Private Clouds (VPCs). |

| Nodes communicate with the Kube API server through the internal load balancer. | Nodes communicate with the Kube API server through an external load balancer or a node port. |

2.2.8. Web console

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The web console shows the status of a control plane. | The web console does not show the status of a control plane. |

| You can update your cluster by using the web console. | You cannot update the hosted cluster by using the web console. |

| The web console displays the infrastructure resources such as machines. | The web console does not display the infrastructure resources. |

| You can configure machines through the | You cannot configure machines by using the web console. |

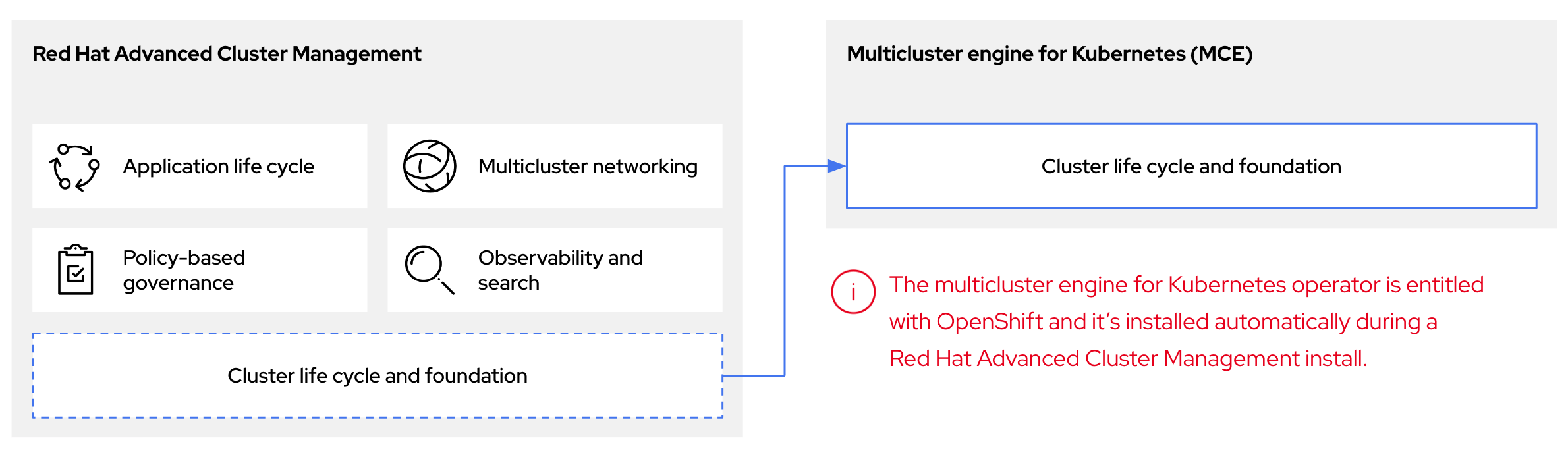

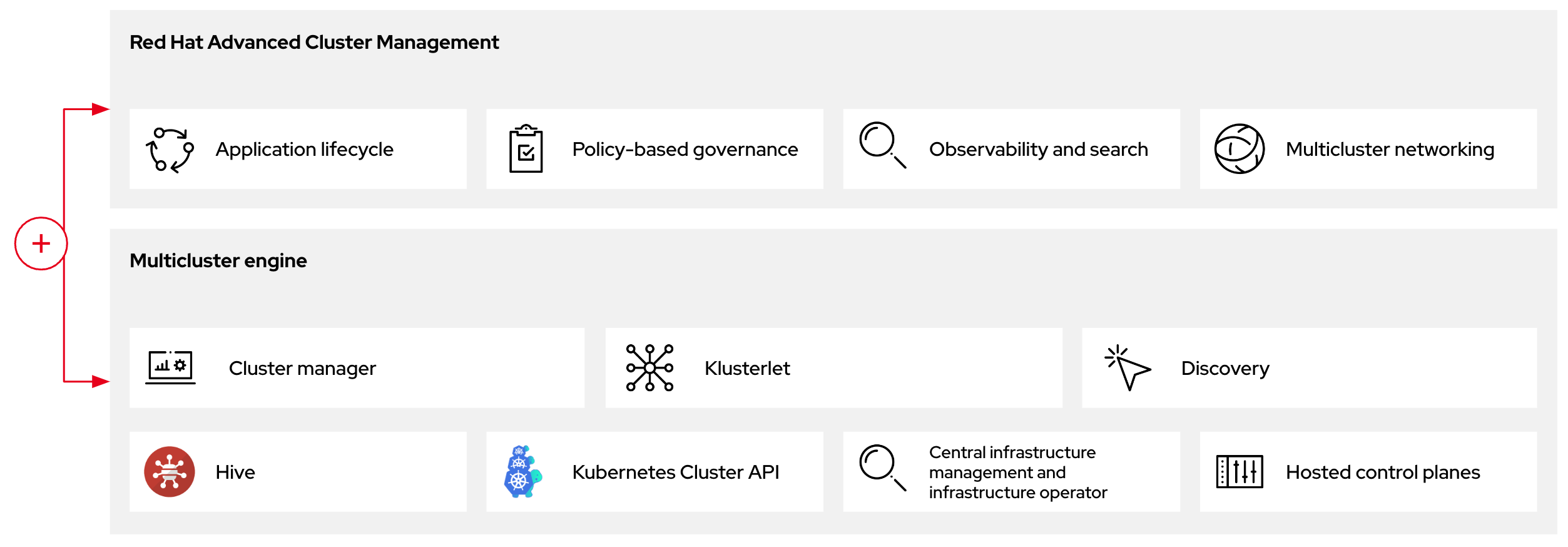

2.3. Relationship between hosted control planes, multicluster engine Operator, and RHACM

You can configure hosted control planes by using the multicluster engine for Kubernetes Operator. The multicluster engine Operator cluster lifecycle defines the process of creating, importing, managing, and destroying Kubernetes clusters across various infrastructure cloud providers, private clouds, and on-premises data centers.

The multicluster engine Operator is an integral part of Red Hat Advanced Cluster Management (RHACM) and is enabled by default with RHACM. However, you do not need RHACM in order to use hosted control planes.

The multicluster engine Operator is the cluster lifecycle Operator that provides cluster management capabilities for OpenShift Container Platform and RHACM hub clusters. The multicluster engine Operator enhances cluster fleet management and supports OpenShift Container Platform cluster lifecycle management across clouds and data centers.

Figure 2.1. Cluster life cycle and foundation

You can use the multicluster engine Operator with OpenShift Container Platform as a standalone cluster manager or as part of a RHACM hub cluster.

A management cluster is also known as the hosting cluster.

You can deploy OpenShift Container Platform clusters by using two different control plane configurations: standalone or hosted control planes. The standalone configuration uses dedicated virtual machines or physical machines to host the control plane. With hosted control planes for OpenShift Container Platform, you create control planes as pods on a management cluster without the need for dedicated virtual or physical machines for each control plane.

Figure 2.2. RHACM and the multicluster engine Operator introduction diagram

2.3.1. Discovering multicluster engine Operator hosted clusters in RHACM

If you want to bring hosted clusters to a Red Hat Advanced Cluster Management (RHACM) hub cluster to manage them with RHACM management components, see the instructions in the Red Hat Advanced Cluster Management official documentation.

2.4. Versioning for hosted control planes

The hosted control planes feature includes the following components, which might require independent versioning and support levels:

- Management cluster

- HyperShift Operator

- Hosted control planes (hcp) command-line interface (CLI)

- hypershift.openshift.io API

- Control Plane Operator

2.4.1. Management cluster

In management clusters for production use, you need multicluster engine for Kubernetes Operator, which is available through OperatorHub. The multicluster engine Operator bundles a supported build of the HyperShift Operator. For your management clusters to remain supported, you must use the version of OpenShift Container Platform that multicluster engine Operator runs on. In general, a new release of multicluster engine Operator runs on the following versions of OpenShift Container Platform:

- The latest General Availability version of OpenShift Container Platform

- Two versions before the latest General Availability version of OpenShift Container Platform

The full list of OpenShift Container Platform versions that you can install through the HyperShift Operator on a management cluster depends on the version of your HyperShift Operator. However, the list always includes at least the same OpenShift Container Platform version as the management cluster and two previous minor versions relative to the management cluster. For example, if the management cluster is running 4.17 and a supported version of multicluster engine Operator, the HyperShift Operator can install 4.17, 4.16, 4.15, and 4.14 hosted clusters.
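The guaranteed minimum from the preceding paragraph (the management cluster version plus two previous minor versions) can be sketched in Python as follows. The function name minimum_installable_versions is hypothetical. The sketch computes only the guaranteed floor, so it does not reproduce longer lists, such as the 4.14 entry in the example, that depend on the HyperShift Operator version.

```python
from typing import List


def minimum_installable_versions(management_version: str,
                                 previous_minors: int = 2) -> List[str]:
    """Return the management cluster minor version and the previous minors.

    Only the major.minor part of the version is considered.
    """
    major_str, minor_str = management_version.split(".")[:2]
    major, minor = int(major_str), int(minor_str)

    # Do not go below minor version 0.
    lowest = max(minor - previous_minors, 0)
    return [f"{major}.{m}" for m in range(minor, lowest - 1, -1)]


print(minimum_installable_versions("4.17"))
```

For the authoritative list on a given management cluster, rely on what the HyperShift Operator reports rather than on this calculation.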

With each major, minor, or patch version release of OpenShift Container Platform, two components of hosted control planes are released:

- The HyperShift Operator

- The hcp command-line interface (CLI)

2.4.2. HyperShift Operator

The HyperShift Operator manages the lifecycle of hosted clusters that are represented by the HostedCluster API resources. The HyperShift Operator is released with each OpenShift Container Platform release. The HyperShift Operator creates the supported-versions config map in the hypershift namespace. The config map contains the supported hosted cluster versions.

You can host different versions of control planes on the same management cluster.

Example supported-versions config map object
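As a hedged illustration of how such a config map might be read, the following Python sketch assumes that the config map stores a JSON document with a versions array under a supported-versions data key. That layout, the helper name supported_versions, and the sample values are assumptions for illustration; check the config map on your management cluster for the actual content.

```python
import json
from typing import List


def supported_versions(config_map: dict) -> List[str]:
    """Extract the version list from a supported-versions config map.

    Assumes data["supported-versions"] is a JSON string such as
    '{"versions": ["4.17", "4.16"]}'; returns an empty list otherwise.
    """
    raw = config_map.get("data", {}).get("supported-versions", "{}")
    return json.loads(raw).get("versions", [])


# Hypothetical config map content, written as a Python dictionary.
example = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "supported-versions", "namespace": "hypershift"},
    "data": {
        "supported-versions": '{"versions": ["4.17", "4.16", "4.15", "4.14"]}'
    },
}

print(supported_versions(example))
```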

2.4.3. hosted control planes CLI

You can use the hcp CLI to create hosted clusters. You can download the CLI from multicluster engine Operator. When you run the hcp version command, the output shows the latest OpenShift Container Platform version that the CLI supports against your kubeconfig file.

2.4.4. hypershift.openshift.io API

You can use the hypershift.openshift.io API resources, such as HostedCluster and NodePool, to create and manage OpenShift Container Platform clusters at scale. A HostedCluster resource contains the control plane and common data plane configuration. When you create a HostedCluster resource, you have a fully functional control plane with no attached nodes. A NodePool resource is a scalable set of worker nodes that is attached to a HostedCluster resource.
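The relationship between the two resources can be sketched in Python as plain dictionaries. This is a minimal sketch under stated assumptions: the v1beta1 API version, the spec.clusterName and spec.replicas field names, the clusters namespace, and the helper name node_pool_for are used for illustration, and real resources need additional fields, such as the release image and platform settings.

```python
def node_pool_for(hosted_cluster: dict, name: str, replicas: int) -> dict:
    """Build a skeleton NodePool that is attached to the given HostedCluster.

    The node pool lives in the same namespace as the hosted cluster and
    refers to it by name.
    """
    meta = hosted_cluster["metadata"]
    return {
        "apiVersion": hosted_cluster["apiVersion"],
        "kind": "NodePool",
        "metadata": {"name": name, "namespace": meta["namespace"]},
        "spec": {"clusterName": meta["name"], "replicas": replicas},
    }


# Hypothetical hosted cluster skeleton; required fields are omitted.
hosted_cluster = {
    "apiVersion": "hypershift.openshift.io/v1beta1",
    "kind": "HostedCluster",
    "metadata": {"name": "example", "namespace": "clusters"},
}

# A hosted cluster starts with no nodes; each node pool adds workers.
node_pool = node_pool_for(hosted_cluster, "example-workers", replicas=2)
print(node_pool["spec"])
```

Changing the replicas value in a node pool is how the set of worker nodes scales, independently of the control plane that the HostedCluster resource describes.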

The API version policy generally aligns with the policy for Kubernetes API versioning.

Updates for hosted control planes involve updating the hosted cluster and the node pools. For more information, see "Updates for hosted control planes".

2.4.5. Control Plane Operator

The Control Plane Operator is released as part of each OpenShift Container Platform payload release image for the following architectures:

- amd64

- arm64

- multi-arch

2.5. Glossary of common concepts and personas for hosted control planes

When you use hosted control planes for OpenShift Container Platform, it is important to understand its key concepts and the personas that are involved.

2.5.1. Concepts

- data plane

- The part of the cluster that includes the compute, storage, and networking where workloads and applications run.

- hosted cluster

- An OpenShift Container Platform cluster with its control plane and API endpoint hosted on a management cluster. The hosted cluster includes the control plane and its corresponding data plane.

- hosted cluster infrastructure

- Network, compute, and storage resources that exist in the tenant or end-user cloud account.

- hosted control plane

- An OpenShift Container Platform control plane that runs on the management cluster, which is exposed by the API endpoint of a hosted cluster. The components of a control plane include etcd, the Kubernetes API server, the Kubernetes controller manager, and a VPN.

- hosting cluster

- See management cluster.

- managed cluster

- A cluster that the hub cluster manages. This term is specific to the cluster lifecycle that the multicluster engine for Kubernetes Operator manages in Red Hat Advanced Cluster Management. A managed cluster is not the same thing as a management cluster. For more information, see Managed cluster.

- management cluster

- An OpenShift Container Platform cluster where the HyperShift Operator is deployed and where the control planes for hosted clusters are hosted. The management cluster is synonymous with the hosting cluster.

- management cluster infrastructure

- Network, compute, and storage resources of the management cluster.

- node pool

- A resource that manages a set of compute nodes that are associated with a hosted cluster. The compute nodes run applications and workloads within the hosted cluster.

2.5.2. Personas

- cluster instance administrator

- Users who assume this role are the equivalent of administrators in standalone OpenShift Container Platform. This user has the cluster-admin role in the provisioned cluster, but might not have power over when or how the cluster is updated or configured. This user might have read-only access to see some configuration projected into the cluster.

- cluster instance user

- Users who assume this role are the equivalent of developers in standalone OpenShift Container Platform. This user does not have a view into OperatorHub or machines.

- cluster service consumer

- Users who assume this role can request control planes and worker nodes, drive updates, or modify externalized configurations. Typically, this user does not manage or access cloud credentials or infrastructure encryption keys. The cluster service consumer persona can request hosted clusters and interact with node pools. Users who assume this role have RBAC to create, read, update, or delete hosted clusters and node pools within a logical boundary.

- cluster service provider

- Users who assume this role typically have the cluster-admin role on the management cluster and have RBAC to monitor and own the availability of the HyperShift Operator as well as the control planes for the tenant’s hosted clusters. The cluster service provider persona is responsible for several activities, including the following examples:

- Owning service-level objects for control plane availability, uptime, and stability

- Configuring the cloud account for the management cluster to host control planes

- Configuring the user-provisioned infrastructure, which includes the host awareness of available compute resources

Chapter 3. Preparing to deploy hosted control planes

3.1. Requirements for hosted control planes

In the context of hosted control planes, a management cluster is an OpenShift Container Platform cluster where the HyperShift Operator is deployed and where the control planes for hosted clusters are hosted.

The control plane is associated with a hosted cluster and runs as pods in a single namespace. When the cluster service consumer creates a hosted cluster, it creates a worker node that is independent of the control plane.

The following requirements apply to hosted control planes:

- In order to run the HyperShift Operator, your management cluster needs at least three worker nodes.

- You can run both the management cluster and the worker nodes on-premise, such as on a bare-metal platform or on OpenShift Virtualization. In addition, you can run both the management cluster and the worker nodes on cloud infrastructure, such as Amazon Web Services (AWS).

- If you use a mixed infrastructure, such as running the management cluster on AWS and your worker nodes on-premise, or running your worker nodes on AWS and your management cluster on-premise, you must use the PublicAndPrivate publishing strategy and follow the latency requirements in the support matrix.

- In Bare Metal Host (BMH) deployments, where the Bare Metal Operator starts machines, the hosted control plane must be able to reach baseboard management controllers (BMCs). If your security profile does not permit the Cluster Baremetal Operator to access the network where the BMHs have their BMCs in order to enable Redfish automation, you can use BYO ISO support. However, in BYO mode, OpenShift Container Platform cannot automate the powering on of BMHs.

3.1.1. Support matrix for hosted control planes

Because multicluster engine for Kubernetes Operator includes the HyperShift Operator, releases of hosted control planes align with releases of multicluster engine Operator. For more information, see OpenShift Operator Life Cycles.

3.1.1.1. Management cluster support

Any supported standalone OpenShift Container Platform cluster can be a management cluster.

A single-node OpenShift Container Platform cluster is not supported as a management cluster. If you have resource constraints, you can share infrastructure between a standalone OpenShift Container Platform control plane and hosted control planes. For more information, see "Shared infrastructure between hosted and standalone control planes".

The following table maps multicluster engine Operator versions to the management cluster versions that support them:

| Management cluster version | Supported multicluster engine Operator version |

|---|---|

| 4.14 - 4.15 | 2.4 |

| 4.14 - 4.16 | 2.5 |

| 4.14 - 4.17 | 2.6 |

| 4.15 - 4.17 | 2.7 |

3.1.1.2. Hosted cluster support

For hosted clusters, no direct relationship exists between the management cluster version and the hosted cluster version. The hosted cluster version depends on the HyperShift Operator that is included with your multicluster engine Operator version.

Ensure a maximum latency of 200 ms between the management cluster and hosted clusters. This requirement is especially important for mixed infrastructure deployments, such as when your management cluster is on AWS and your worker nodes are on-premise.

The following table maps multicluster engine Operator versions to the hosted cluster versions that you can create by using the HyperShift Operator that is associated with that version of multicluster engine Operator:

| Hosted cluster version | multicluster engine Operator 2.4 | multicluster engine Operator 2.5 | multicluster engine Operator 2.6 | multicluster engine Operator 2.7 |

|---|---|---|---|---|

| 4.14 | Yes | Yes | Yes | Yes |

| 4.15 | No | Yes | Yes | Yes |

| 4.16 | No | No | Yes | Yes |

| 4.17 | No | No | No | Yes |
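The support table above can be encoded as a small lookup, for example in a pre-flight check before you create a hosted cluster. The following Python sketch is illustrative only: the dictionary and the `is_hosted_version_supported` function are hypothetical names that are not part of any Red Hat tooling, and the data is transcribed by hand from the table.

```python
# Hosted cluster versions that each multicluster engine Operator version can
# create, transcribed by hand from the support table in this section.
SUPPORTED_HOSTED_VERSIONS = {
    "2.4": {"4.14"},
    "2.5": {"4.14", "4.15"},
    "2.6": {"4.14", "4.15", "4.16"},
    "2.7": {"4.14", "4.15", "4.16", "4.17"},
}


def is_hosted_version_supported(mce_version: str, hosted_version: str) -> bool:
    """Return True if the table lists hosted_version under mce_version."""
    # Unknown Operator versions are treated as unsupported (an assumption).
    return hosted_version in SUPPORTED_HOSTED_VERSIONS.get(mce_version, set())


if __name__ == "__main__":
    for mce, hosted in [("2.5", "4.15"), ("2.5", "4.16")]:
        print(mce, hosted, is_hosted_version_supported(mce, hosted))
```

Keeping the data in one dictionary means that only the table transcription needs to change when a new release is added.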

3.1.1.3. Hosted cluster platform support

The following table indicates which OpenShift Container Platform versions are supported for each platform of hosted control planes.

For IBM Power and IBM Z, you must run the control plane on machine types based on 64-bit x86 architecture, and node pools on IBM Power or IBM Z.

In the following table, Management cluster version refers to the OpenShift Container Platform version where the multicluster engine Operator is enabled:

| Hosted cluster platform | Management cluster version | Hosted cluster version |

|---|---|---|

| Amazon Web Services | 4.16 - 4.17 | 4.16 - 4.17 |

| IBM Power | 4.17 | 4.17 |

| IBM Z | 4.17 | 4.17 |

| OpenShift Virtualization | 4.14 - 4.17 | 4.14 - 4.17 |

| Bare metal | 4.14 - 4.17 | 4.14 - 4.17 |

| Non-bare-metal agent machines (Technology Preview) | 4.16 - 4.17 | 4.16 - 4.17 |

3.1.1.4. Updates of multicluster engine Operator

When you update to another version of the multicluster engine Operator, your hosted cluster can continue to run if the HyperShift Operator that is included in the version of multicluster engine Operator supports the hosted cluster version. The following table shows which hosted cluster versions are supported on which updated multicluster engine Operator versions:

| Updated multicluster engine Operator version | Supported hosted cluster version |

|---|---|

| Updated from 2.4 to 2.5 | OpenShift Container Platform 4.14 |

| Updated from 2.5 to 2.6 | OpenShift Container Platform 4.14 - 4.15 |

| Updated from 2.6 to 2.7 | OpenShift Container Platform 4.14 - 4.16 |

For example, if you have an OpenShift Container Platform 4.14 hosted cluster on the management cluster and you update from multicluster engine Operator 2.4 to 2.5, the hosted cluster can continue to run.

3.1.1.5. Technology Preview features

The following list indicates Technology Preview features for this release:

- Hosted control planes on IBM Z in a disconnected environment

- Custom taints and tolerations for hosted control planes

- NVIDIA GPU devices on hosted control planes for OpenShift Virtualization

3.1.2. CIDR ranges for hosted control planes

To successfully deploy hosted control planes on OpenShift Container Platform, define the network environment by using specific Classless Inter-Domain Routing (CIDR) subnet ranges. Establishing these nonoverlapping ranges ensures reliable communication between cluster components and prevents internal IP address conflicts.

Use the following required CIDR subnet ranges:

- v4InternalSubnet: 100.65.0.0/16 (OVN-Kubernetes)

- clusterNetwork: 10.132.0.0/14 (pod network)

- serviceNetwork: 172.31.0.0/16
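One way to check that another range in your environment does not collide with the ranges listed above is to compare them with the Python standard library `ipaddress` module. This is a minimal sketch under stated assumptions: the `find_overlaps` helper is a hypothetical name, and the candidate ranges in the usage example are made up for illustration.

```python
import ipaddress

# Required ranges listed above for hosted control planes.
REQUIRED_RANGES = {
    "v4InternalSubnet": ipaddress.ip_network("100.65.0.0/16"),
    "clusterNetwork": ipaddress.ip_network("10.132.0.0/14"),
    "serviceNetwork": ipaddress.ip_network("172.31.0.0/16"),
}


def find_overlaps(candidate: str) -> list[str]:
    """Return the names of the listed ranges that overlap the candidate CIDR."""
    network = ipaddress.ip_network(candidate)
    return [
        name
        for name, required in REQUIRED_RANGES.items()
        if network.overlaps(required)
    ]


if __name__ == "__main__":
    # Hypothetical candidate ranges, for illustration only.
    for cidr in ["192.168.0.0/24", "10.132.4.0/24"]:
        print(cidr, find_overlaps(cidr))
```

An empty list means that the candidate range does not intersect any of the three required ranges.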

3.2. Sizing guidance for hosted control planes

Many factors, including hosted cluster workload and worker node count, affect how many hosted control planes can fit within a certain number of worker nodes. Use this sizing guide to help with hosted cluster capacity planning. This guidance assumes a highly available hosted control planes topology. The load-based sizing examples were measured on a bare-metal cluster. Cloud-based instances might have different limiting factors, such as memory size.

You can override the following resource utilization sizing measurements and disable the metric service monitoring.

See the following highly available hosted control planes requirements, which were tested with OpenShift Container Platform version 4.12.9 and later:

- 78 pods

- Three 8 GiB PVs for etcd

- Minimum vCPU: approximately 5.5 cores

- Minimum memory: approximately 19 GiB

3.2.1. Pod limits

The maxPods setting for each node affects how many hosted clusters can fit in a control-plane node. It is important to note the maxPods value on all control-plane nodes. Plan for about 75 pods for each highly available hosted control plane.

For bare-metal nodes, the default maxPods setting of 250 is likely to be a limiting factor because roughly three hosted control planes fit for each node given the pod requirements, even if the machine has plenty of resources to spare. Setting the maxPods value to 500 by configuring the KubeletConfig value allows for greater hosted control plane density, which can help you take advantage of additional compute resources.
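The effect of the maxPods value can be approximated with integer division. The following Python sketch assumes the planning figure of about 75 pods for each highly available hosted control plane and, as a simplification, ignores any other pods that already run on the node. The function name is hypothetical.

```python
# Planning figure from this section: about 75 pods for each highly available
# hosted control plane.
PODS_PER_HOSTED_CONTROL_PLANE = 75


def hosted_control_planes_by_pod_limit(max_pods: int) -> int:
    """Whole hosted control planes that fit under a node's maxPods value.

    Simplification: pods that already run on the node are not subtracted.
    """
    return max_pods // PODS_PER_HOSTED_CONTROL_PLANE


if __name__ == "__main__":
    for max_pods in (250, 500):
        print(max_pods, hosted_control_planes_by_pod_limit(max_pods))
```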

3.2.2. Request-based resource limit

The maximum number of hosted control planes that the cluster can host is calculated based on the hosted control plane CPU and memory requests from the pods.

A highly available hosted control plane consists of 78 pods that request 5 vCPUs and 18 GiB memory. These baseline numbers are compared to the cluster worker node resource capacities to estimate the maximum number of hosted control planes.

3.2.3. Load-based limit

The maximum number of hosted control planes that the cluster can host is calculated based on the CPU and memory utilization of the hosted control plane pods when some workload is put on the hosted control plane Kubernetes API server.

The following method is used to measure the hosted control plane resource utilizations as the workload increases:

- A hosted cluster with 9 workers that use 8 vCPU and 32 GiB each, while using the KubeVirt platform

- The workload test profile that is configured to focus on API control-plane stress, based on the following definition:

- Created objects for each namespace, scaling up to 100 namespaces total

- Additional API stress with continuous object deletion and creation

- Workload queries-per-second (QPS) and Burst settings set high to remove any client-side throttling

As the load increases by 1000 QPS, the hosted control plane resource utilization increases by 9 vCPUs and 2.5 GB memory.

For general sizing purposes, consider a 1000 QPS API rate to be a medium hosted cluster load and a 2000 QPS API rate to be a heavy hosted cluster load.

This test provides an estimation factor to increase the compute resource utilization based on the expected API load. Exact utilization rates can vary based on the type and pace of the cluster workload.

The following example shows hosted control plane resource scaling for the workload and API rate definitions:

| QPS (API rate) | vCPU usage | Memory usage (GiB) |

|---|---|---|

| Low load (Less than 50 QPS) | 2.9 | 11.1 |

| Medium load (1000 QPS) | 11.9 | 13.6 |

| High load (2000 QPS) | 20.9 | 16.1 |

The hosted control plane sizing is about control-plane load and workloads that cause heavy API activity, etcd activity, or both. Hosted pod workloads that focus on data-plane loads, such as running a database, might not result in high API rates.
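The scaling in the table above is linear in the API rate, so it can be expressed as a short function. The following Python sketch treats the low-load row (2.9 vCPUs and 11.1 GiB) as the baseline and adds 9 vCPUs and 2.5 GiB for each 1000 QPS. That reading is an assumption inferred from the table values, and the function name is hypothetical.

```python
# Measured values quoted in this section.
IDLE_VCPU_USAGE = 2.9          # vCPUs at low load
IDLE_MEMORY_USAGE_GIB = 11.1   # GiB at low load
VCPU_PER_1000_QPS = 9.0        # additional vCPUs for each 1000 QPS
MEMORY_GIB_PER_1000_QPS = 2.5  # additional GiB for each 1000 QPS


def estimate_usage(qps: float) -> tuple[float, float]:
    """Estimate (vCPU usage, memory usage in GiB) of one hosted control plane."""
    load = qps / 1000
    vcpu = IDLE_VCPU_USAGE + load * VCPU_PER_1000_QPS
    memory_gib = IDLE_MEMORY_USAGE_GIB + load * MEMORY_GIB_PER_1000_QPS
    return vcpu, memory_gib


if __name__ == "__main__":
    for qps in (1000, 2000):
        vcpu, memory_gib = estimate_usage(qps)
        print(f"{qps} QPS: {vcpu:.1f} vCPUs, {memory_gib:.1f} GiB")
```

Exact utilization varies with the type and pace of the workload, so treat the result as a planning estimate rather than a guarantee.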

3.2.4. Sizing calculation example

This example provides sizing guidance for the following scenario:

- Three bare-metal workers that are labeled as hypershift.openshift.io/control-plane nodes

- maxPods value set to 500

- The expected API rate is medium or about 1000 QPS, according to the load-based limits

| Limit description | Server 1 | Server 2 |

|---|---|---|

| Number of vCPUs on worker node | 64 | 128 |

| Memory on worker node (GiB) | 128 | 256 |

| Maximum pods per worker | 500 | 500 |

| Number of workers used to host control planes | 3 | 3 |

| Maximum QPS target rate (API requests per second) | 1000 | 1000 |

| Calculated values based on worker node size and API rate | Server 1 | Server 2 | Calculation notes |

|---|---|---|---|

| Maximum hosted control planes per worker based on vCPU requests | 12.8 | 25.6 | Number of worker vCPUs ÷ 5 total vCPU requests per hosted control plane |

| Maximum hosted control planes per worker based on vCPU usage | 5.4 | 10.7 | Number of vCPUs ÷ (2.9 measured idle vCPU usage + (QPS target rate ÷ 1000) × 9.0 measured vCPU usage per 1000 QPS increase) |

| Maximum hosted control planes per worker based on memory requests | 7.1 | 14.2 | Worker memory GiB ÷ 18 GiB total memory request per hosted control plane |

| Maximum hosted control planes per worker based on memory usage | 9.4 | 18.8 | Worker memory GiB ÷ (11.1 measured idle memory usage + (QPS target rate ÷ 1000) × 2.5 measured memory usage per 1000 QPS increase) |

| Maximum hosted control planes per worker based on per node pod limit | 6.7 | 6.7 | 500 (maxPods value) |

| Minimum of previously mentioned maximums | 5.4 | 6.7 | |

| | vCPU limiting factor | | |

| Maximum number of hosted control planes within a management cluster | 16 | 20 | Minimum of previously mentioned maximums × 3 control-plane workers |
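The calculation in the preceding table can be reproduced with a few lines of Python. This is a sketch rather than the method that any Red Hat tool uses: the function names are hypothetical, the constants are the baseline figures quoted in this sizing guidance, and the pod limit assumes about 75 pods for each hosted control plane.

```python
import math

# Baseline measurements quoted in this sizing guidance.
VCPU_REQUEST_PER_HCP = 5.0
MEMORY_REQUEST_GIB_PER_HCP = 18.0
IDLE_VCPU_USAGE = 2.9
IDLE_MEMORY_USAGE_GIB = 11.1
VCPU_PER_1000_QPS = 9.0
MEMORY_GIB_PER_1000_QPS = 2.5
PODS_PER_HCP = 75  # planning figure from the pod limits section


def max_hcps_per_worker(vcpus, memory_gib, max_pods, qps):
    """Return (smallest per-worker maximum, name of the limiting factor)."""
    load = qps / 1000
    limits = {
        "vCPU requests": vcpus / VCPU_REQUEST_PER_HCP,
        "vCPU usage": vcpus / (IDLE_VCPU_USAGE + load * VCPU_PER_1000_QPS),
        "memory requests": memory_gib / MEMORY_REQUEST_GIB_PER_HCP,
        "memory usage": memory_gib
        / (IDLE_MEMORY_USAGE_GIB + load * MEMORY_GIB_PER_1000_QPS),
        "pod limit": max_pods / PODS_PER_HCP,
    }
    factor = min(limits, key=limits.get)
    return limits[factor], factor


def max_hcps_in_cluster(vcpus, memory_gib, max_pods, qps, workers):
    """Return (whole hosted control planes across all workers, limiting factor)."""
    per_worker, factor = max_hcps_per_worker(vcpus, memory_gib, max_pods, qps)
    # Round before flooring so that floating-point noise does not drop a
    # whole hosted control plane from the total.
    return math.floor(round(per_worker * workers, 6)), factor


if __name__ == "__main__":
    print("Server 1:", max_hcps_in_cluster(64, 128, 500, 1000, 3))
    print("Server 2:", max_hcps_in_cluster(128, 256, 500, 1000, 3))
```

Returning the limiting factor together with the count shows which resource to increase first if you need more density.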

| Name | Description |

|---|---|

| | Estimated maximum number of hosted control planes the cluster can host based on a highly available hosted control planes resource request. |

| | Estimated maximum number of hosted control planes the cluster can host if all hosted control planes make around 50 QPS to the cluster's Kube API server. |

| | Estimated maximum number of hosted control planes the cluster can host if all hosted control planes make around 1000 QPS to the cluster's Kube API server. |

| | Estimated maximum number of hosted control planes the cluster can host if all hosted control planes make around 2000 QPS to the cluster's Kube API server. |

| | Estimated maximum number of hosted control planes the cluster can host based on the existing average QPS of hosted control planes. If you do not have any active hosted control planes, you can expect low QPS. |

3.3. Overriding resource utilization measurements

The set of baseline measurements for resource utilization can vary in each hosted cluster.

3.3.1. Overriding resource utilization measurements for a hosted cluster

You can override resource utilization measurements based on the type and pace of your cluster workload.

Procedure

- Create the ConfigMap resource by running the following command:

$ oc create -f <your-config-map-file.yaml>

Replace <your-config-map-file.yaml> with the name of your YAML file that contains your hcp-sizing-baseline config map.

- Create the hcp-sizing-baseline config map in the local-cluster namespace to specify the measurements you want to override.

- Delete the hypershift-addon-agent deployment to restart the hypershift-addon-agent pod by running the following command:

$ oc delete deployment hypershift-addon-agent \
  -n open-cluster-management-agent-addon

Verification

- Observe the hypershift-addon-agent pod logs. Verify that the overridden measurements are updated in the config map by running the following command:

$ oc logs hypershift-addon-agent -n open-cluster-management-agent-addon

Your logs might resemble the following output:

Example output

2024-01-05T19:41:05.392Z INFO agent.agent-reconciler agent/agent.go:793 setting cpuRequestPerHCP to 5
2024-01-05T19:41:05.392Z INFO agent.agent-reconciler agent/agent.go:802 setting memoryRequestPerHCP to 18
2024-01-05T19:53:54.070Z INFO agent.agent-reconciler agent/hcp_capacity_calculation.go:141 The worker nodes have 12.000000 vCPUs
2024-01-05T19:53:54.070Z INFO agent.agent-reconciler agent/hcp_capacity_calculation.go:142 The worker nodes have 49.173369 GB memory

If the overridden measurements are not updated properly in the hcp-sizing-baseline config map, you might see the following error message in the hypershift-addon-agent pod logs:

Example error

2024-01-05T19:53:54.052Z ERROR agent.agent-reconciler agent/agent.go:788 failed to get configmap from the hub. Setting the HCP sizing baseline with default values. {"error": "configmaps \"hcp-sizing-baseline\" not found"}

3.3.2. Disabling the metric service monitoring

After you enable the hypershift-addon managed cluster add-on, metric service monitoring is configured by default so that OpenShift Container Platform monitoring can gather metrics from hypershift-addon.

Procedure

You can disable metric service monitoring by completing the following steps:

- Log in to your hub cluster by running the following command:

$ oc login

- Edit the hypershift-addon-deploy-config add-on deployment configuration specification by running the following command:

$ oc edit addondeploymentconfig hypershift-addon-deploy-config \
  -n multicluster-engine

- Add the disableMetrics=true customized variable to the specification. The disableMetrics=true customized variable disables metric service monitoring for both new and existing hypershift-addon managed cluster add-ons.

- Apply the changes to the configuration specification by running the following command:

$ oc apply -f <filename>.yaml

3.4. Installing the hosted control planes command-line interface

The hosted control planes command-line interface, hcp, is a tool that you can use to get started with hosted control planes. For Day 2 operations, such as management and configuration, use GitOps or your own automation tool.

3.4.1. Installing the hosted control planes command-line interface from the terminal

You can install the hosted control planes command-line interface (CLI), hcp, from the terminal.

Prerequisites

- On an OpenShift Container Platform cluster, you have installed multicluster engine for Kubernetes Operator 2.5 or later. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management. You can also install multicluster engine Operator without Red Hat Advanced Cluster Management as an Operator from OpenShift Container Platform OperatorHub.

Procedure

- Get the URL to download the hcp binary by running the following command:

$ oc get ConsoleCLIDownload hcp-cli-download -o json | jq -r ".spec"

- Download the hcp binary by running the following command:

$ wget <hcp_cli_download_url>

Replace <hcp_cli_download_url> with the URL that you obtained from the previous step.

- Unpack the downloaded archive by running the following command:

$ tar xvzf hcp.tar.gz

- Make the hcp binary file executable by running the following command:

$ chmod +x hcp

- Move the hcp binary file to a directory in your path by running the following command:

$ sudo mv hcp /usr/local/bin/.

If you download the CLI on a Mac computer, you might see a warning about the hcp binary file. You need to adjust your security settings to allow the binary file to be run.

Verification

- Verify that you see the list of available parameters by running the following command:

$ hcp create cluster <platform> --help

You can use the hcp create cluster command to create and manage hosted clusters. The supported platforms are aws, agent, and kubevirt.

3.4.2. Installing the hosted control planes command-line interface by using the web console

You can install the hosted control planes command-line interface (CLI), hcp, by using the OpenShift Container Platform web console.

Prerequisites

- On an OpenShift Container Platform cluster, you have installed multicluster engine for Kubernetes Operator 2.5 or later. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management. You can also install multicluster engine Operator without Red Hat Advanced Cluster Management as an Operator from OpenShift Container Platform OperatorHub.

Procedure

- From the OpenShift Container Platform web console, click the Help icon → Command Line Tools.

- Click Download hcp CLI for your platform.

- Unpack the downloaded archive by running the following command:

$ tar xvzf hcp.tar.gz

- Run the following command to make the binary file executable:

$ chmod +x hcp

- Run the following command to move the binary file to a directory in your path:

$ sudo mv hcp /usr/local/bin/.

If you download the CLI on a Mac computer, you might see a warning about the hcp binary file. You need to adjust your security settings to allow the binary file to be run.

Verification

- Verify that you see the list of available parameters by running the following command:

$ hcp create cluster <platform> --help

You can use the hcp create cluster command to create and manage hosted clusters. The supported platforms are aws, agent, and kubevirt.

3.4.3. Installing the hosted control planes command-line interface by using the content gateway

You can install the hosted control planes command-line interface (CLI), hcp, by using the content gateway.

Prerequisites

- On an OpenShift Container Platform cluster, you have installed multicluster engine for Kubernetes Operator 2.5 or later. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management. You can also install multicluster engine Operator without Red Hat Advanced Cluster Management as an Operator from OpenShift Container Platform OperatorHub.

Procedure

- Navigate to the content gateway and download the hcp binary.

- Unpack the downloaded archive by running the following command:

$ tar xvzf hcp.tar.gz

- Make the hcp binary file executable by running the following command:

$ chmod +x hcp

- Move the hcp binary file to a directory in your path by running the following command:

$ sudo mv hcp /usr/local/bin/.

If you download the CLI on a Mac computer, you might see a warning about the hcp binary file. You need to adjust your security settings to allow the binary file to be run.

Verification

- Verify that you see the list of available parameters by running the following command:

$ hcp create cluster <platform> --help

You can use the hcp create cluster command to create and manage hosted clusters. The supported platforms are aws, agent, and kubevirt.

3.5. Distributing hosted cluster workloads

Before you get started with hosted control planes for OpenShift Container Platform, you must properly label nodes so that the pods of hosted clusters can be scheduled into infrastructure nodes. Node labeling is also important for the following reasons:

- To ensure high availability and proper workload deployment. For example, you can set the node-role.kubernetes.io/infra label to avoid having the control-plane workload count toward your OpenShift Container Platform subscription.

- To ensure that control plane workloads are separate from other workloads in the management cluster.

Do not use the management cluster for your workload. Workloads must not run on nodes where control planes run.

3.5.1. Labeling management cluster nodes

Proper node labeling is a prerequisite to deploying hosted control planes.

As a management cluster administrator, you use the following labels and taints in management cluster nodes to schedule a control plane workload:

- hypershift.openshift.io/control-plane: true: Use this label and taint to dedicate a node to running hosted control plane workloads. By setting a value of true, you avoid sharing the control plane nodes with other components, for example, the infrastructure components of the management cluster or any other mistakenly deployed workload.

- hypershift.openshift.io/cluster: ${HostedControlPlane Namespace}: Use this label and taint when you want to dedicate a node to a single hosted cluster.

Apply the following labels on the nodes that host control-plane pods:

- node-role.kubernetes.io/infra: Use this label to avoid having the control-plane workload count toward your subscription.

- topology.kubernetes.io/zone: Use this label on the management cluster nodes to deploy highly available clusters across failure domains. The zone might be a location, rack name, or the hostname of the node where the zone is set. For example, a management cluster has the following nodes: worker-1a, worker-1b, worker-2a, and worker-2b. The worker-1a and worker-1b nodes are in rack1, and the worker-2a and worker-2b nodes are in rack2. To use each rack as an availability zone, enter the following commands:

$ oc label node/worker-1a node/worker-1b topology.kubernetes.io/zone=rack1

$ oc label node/worker-2a node/worker-2b topology.kubernetes.io/zone=rack2

Pods for a hosted cluster have tolerations, and the scheduler uses affinity rules to schedule them. Pods tolerate the control-plane taint and the cluster taint for their own hosted cluster. The scheduler prioritizes the scheduling of pods into nodes that are labeled with hypershift.openshift.io/control-plane and hypershift.openshift.io/cluster: ${HostedControlPlane Namespace}.

For the ControllerAvailabilityPolicy option, use HighlyAvailable, which is the default value that the hosted control planes command-line interface, hcp, deploys. When you use that option, you can schedule pods for each deployment within a hosted cluster across different failure domains by setting topology.kubernetes.io/zone as the topology key. Scheduling pods for a deployment within a hosted cluster across different failure domains is available only for highly available control planes.

Procedure

To enable a hosted cluster to require its pods to be scheduled into infrastructure nodes, set HostedCluster.spec.nodeSelector, as shown in the following example:

spec:
  nodeSelector:
    node-role.kubernetes.io/infra: ""

This way, hosted control planes for each hosted cluster are eligible infrastructure node workloads, and you do not need to entitle the underlying OpenShift Container Platform nodes.

3.5.2. Priority classes

Four built-in priority classes influence the priority and preemption of the hosted cluster pods. You can create the pods in the management cluster in the following order from highest to lowest:

- hypershift-operator: HyperShift Operator pods.

- hypershift-etcd: Pods for etcd.

- hypershift-api-critical: Pods that are required for API calls and resource admission to succeed. These pods include pods such as kube-apiserver, aggregated API servers, and web hooks.

- hypershift-control-plane: Pods in the control plane that are not API-critical but still need elevated priority, such as the cluster version Operator.

3.5.3. Custom taints and tolerations

By default, pods for a hosted cluster tolerate the control-plane and cluster taints. However, you can also use custom taints on nodes so that hosted clusters can tolerate those taints on a per-hosted-cluster basis by setting HostedCluster.spec.tolerations.

Passing tolerations for a hosted cluster is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Example configuration

spec:
  tolerations:
  - effect: NoSchedule
    key: kubernetes.io/custom
    operator: Exists

You can also set tolerations on the hosted cluster while you create a cluster by using the --toleration hcp CLI argument.

Example CLI argument

--toleration="key=kubernetes.io/custom,operator=Exists,effect=NoSchedule"

For fine-grained control of hosted cluster pod placement on a per-hosted-cluster basis, use custom tolerations with nodeSelectors. You can co-locate groups of hosted clusters and isolate them from other hosted clusters. You can also place hosted clusters in infra and control plane nodes.

Tolerations on the hosted cluster propagate only to the pods of the control plane. To configure other pods that run on the management cluster and infrastructure-related pods, such as the pods that run virtual machines, you need to use a different process.
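As a minimal sketch of pairing a custom toleration with a node selector, the following script writes a HostedCluster spec fragment to a file. The taint key comes from the example configuration in this section. The node label key and value, the output file name, and the assumption that you merge this fragment into your own HostedCluster definition are illustrative only and are not taken from the product documentation.

```shell
#!/usr/bin/env bash
# Sketch only: writes a HostedCluster spec fragment that pairs a custom
# toleration with a node selector. The node label below is hypothetical.
TAINT_KEY="kubernetes.io/custom"          # taint key from the example configuration
NODE_LABEL_KEY="example.com/hcp-group"    # hypothetical node label key
NODE_LABEL_VALUE="group-a"                # hypothetical node label value
OUT_FILE="${OUT_FILE:-hostedcluster-placement.yaml}"

cat > "${OUT_FILE}" <<EOF
spec:
  nodeSelector:
    ${NODE_LABEL_KEY}: ${NODE_LABEL_VALUE}
  tolerations:
  - effect: NoSchedule
    key: ${TAINT_KEY}
    operator: Exists
EOF

# Matching node preparation on the management cluster (not run by this script):
#   oc label node <node_name> example.com/hcp-group=group-a
#   oc adm taint nodes <node_name> kubernetes.io/custom:NoSchedule

# Print the fragment so that it can be reviewed before use.
cat "${OUT_FILE}"
```

Nodes that carry both the label and the taint then accept only control plane pods from hosted clusters that declare the matching selector and toleration, which is one way to isolate a group of hosted clusters.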

3.6. Enabling or disabling the hosted control planes feature

The hosted control planes feature and the hypershift-addon managed cluster add-on are enabled by default. If you want to disable the feature, or if you disabled it and want to manually enable it, see the following procedures.

3.6.1. Manually enabling the hosted control planes feature

If you need to manually enable hosted control planes, complete the following steps.

Procedure

Run the following command to enable the feature:

$ oc patch mce multiclusterengine --type=merge -p \
  '{"spec":{"overrides":{"components":[{"name":"hypershift","enabled": true}]}}}'

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

Run the following command to verify that the hypershift and hypershift-local-hosting features are enabled in the MultiClusterEngine custom resource:

$ oc get mce multiclusterengine -o yaml

Example output (relevant fields only)

spec:
  overrides:
    components:
    - name: hypershift
      enabled: true
    - name: hypershift-local-hosting
      enabled: true
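The oc patch commands that enable or disable these features differ only in the component name and the enabled value. The following sketch builds that payload in one place and looks up the MultiClusterEngine instance name instead of assuming the default. The function names mce_component_patch and set_mce_component are hypothetical helpers, not part of oc or hcp, and the lookup assumes that the first MultiClusterEngine instance in the cluster is the one to patch.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical, not part of oc or hcp.

# Print the merge-patch payload that sets one MultiClusterEngine component
# to enabled (true) or disabled (false).
mce_component_patch() {
  local component="$1" enabled="$2"
  printf '{"spec":{"overrides":{"components":[{"name":"%s","enabled": %s}]}}}' \
    "${component}" "${enabled}"
}

# Patch the first MultiClusterEngine instance found in the cluster.
# Requires oc and a logged-in session; defined here but not called.
set_mce_component() {
  local mce_name
  mce_name="$(oc get mce -o jsonpath='{.items[0].metadata.name}')" || return 1
  oc patch mce "${mce_name}" --type=merge -p "$(mce_component_patch "$1" "$2")"
}

# Show the payload for enabling the hypershift component.
mce_component_patch hypershift true
echo
```

With a logged-in session, a call such as set_mce_component hypershift true would apply the same change as the enable command in this procedure.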

3.6.1.1. Manually enabling the hypershift-addon managed cluster add-on for local-cluster

Enabling the hosted control planes feature automatically enables the hypershift-addon managed cluster add-on. If you need to enable the hypershift-addon managed cluster add-on manually, complete the following steps to use the hypershift-addon to install the HyperShift Operator on local-cluster.

Procedure

Create a file that defines the ManagedClusterAddon add-on named hypershift-addon.

Apply the file by running the following command:

$ oc apply -f <filename>

Replace filename with the name of the file that you created.

Confirm that the hypershift-addon managed cluster add-on is installed by running the following command:

$ oc get managedclusteraddons -n local-cluster hypershift-addon

If the add-on is installed, the output resembles the following example:

NAME               AVAILABLE   DEGRADED   PROGRESSING
hypershift-addon   True

Your hypershift-addon managed cluster add-on is installed and the hosting cluster is available to create and manage hosted clusters.
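As a sketch of the file that the first step of this procedure asks for, the following script writes a ManagedClusterAddOn manifest for hypershift-addon in the local-cluster namespace. The file name hypershift-addon.yaml and the installNamespace value are assumptions made for illustration; check them against your environment before you apply the file.

```shell
#!/usr/bin/env bash
# Sketch only: the file name and the installNamespace value are assumptions.
ADDON_FILE="${ADDON_FILE:-hypershift-addon.yaml}"

cat > "${ADDON_FILE}" <<'EOF'
apiVersion: addon.open-cluster-management.io/v1alpha1
kind: ManagedClusterAddOn
metadata:
  name: hypershift-addon
  namespace: local-cluster
spec:
  installNamespace: open-cluster-management-agent-addon
EOF

# Apply step from the procedure (not run by this script):
#   oc apply -f hypershift-addon.yaml

# Print the manifest so that it can be reviewed before it is applied.
cat "${ADDON_FILE}"
```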

3.6.2. Disabling the hosted control planes feature

You can uninstall the HyperShift Operator and disable the hosted control planes feature. When you disable the hosted control planes feature, you must destroy the hosted cluster and the managed cluster resource on multicluster engine Operator, as described in the Managing hosted clusters topics.

3.6.2.1. Uninstalling the HyperShift Operator

To uninstall the HyperShift Operator and disable the hypershift-addon from the local-cluster, complete the following steps:

Procedure

Run the following command to ensure that there is no hosted cluster running:

$ oc get hostedcluster -A

Important

If a hosted cluster is running, the HyperShift Operator does not uninstall, even if the hypershift-addon is disabled.

Disable the hypershift-addon by running the following command:

$ oc patch mce multiclusterengine --type=merge -p \
  '{"spec":{"overrides":{"components":[{"name":"hypershift-local-hosting","enabled": false}]}}}'

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

Note

You can also disable the hypershift-addon for the local-cluster from the multicluster engine Operator console after disabling the hypershift-addon.

3.6.2.2. Disabling the hosted control planes feature

To disable the hosted control planes feature, complete the following steps.

Prerequisites

- You uninstalled the HyperShift Operator. For more information, see "Uninstalling the HyperShift Operator".

Procedure

Run the following command to disable the hosted control planes feature:

$ oc patch mce multiclusterengine --type=merge -p \
  '{"spec":{"overrides":{"components":[{"name":"hypershift","enabled": false}]}}}'

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

You can verify that the hypershift and hypershift-local-hosting features are disabled in the MultiClusterEngine custom resource by running the following command:

$ oc get mce multiclusterengine -o yaml

See the following example, which shows only the relevant fields, where hypershift and hypershift-local-hosting have their enabled: flags set to false:

spec:
  overrides:
    components:
    - name: hypershift
      enabled: false
    - name: hypershift-local-hosting
      enabled: false

Chapter 4. Deploying hosted control planes

4.1. Deploying hosted control planes on AWS

To reduce infrastructure costs and improve cluster management efficiency, you can deploy hosted control planes on AWS. This configuration decouples the control plane from the data plane so that you can manage multiple clusters from a central management service.

A hosted cluster is an OpenShift Container Platform cluster with its API endpoint and control plane that are hosted on the management cluster. The hosted cluster includes the control plane and its corresponding data plane. To configure hosted control planes on premises, you must install multicluster engine for Kubernetes Operator in a management cluster. By deploying the HyperShift Operator on an existing managed cluster by using the hypershift-addon managed cluster add-on, you can enable that cluster as a management cluster and start to create the hosted cluster. The hypershift-addon managed cluster add-on is enabled by default for the local-cluster managed cluster.

You can use the multicluster engine Operator console or the hosted control plane command-line interface (CLI), hcp, to create a hosted cluster. The hosted cluster is automatically imported as a managed cluster. However, you can disable this automatic import into multicluster engine Operator.

4.1.1. Preparing to deploy hosted control planes on AWS

As you prepare to deploy hosted control planes on Amazon Web Services (AWS), consider the following information:

- Each hosted cluster must have a cluster-wide unique name. A hosted cluster name cannot be the same as any existing managed cluster in order for multicluster engine Operator to manage it.

- Do not use clusters as a hosted cluster name.

- Run the management cluster and workers on the same platform for hosted control planes.

- A hosted cluster cannot be created in the namespace of a multicluster engine Operator managed cluster.

4.1.1.1. Prerequisites to configure a management cluster

You must have the following prerequisites to configure the management cluster:

- You have installed the multicluster engine for Kubernetes Operator 2.5 or later on an OpenShift Container Platform cluster. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management (RHACM). The multicluster engine Operator can also be installed without RHACM as an Operator from the OpenShift Container Platform OperatorHub.

- You have at least one managed OpenShift Container Platform cluster for the multicluster engine Operator. The local-cluster is automatically imported in the multicluster engine Operator version 2.5 and later. You can check the status of your hub cluster by running the following command:

  $ oc get managedclusters local-cluster

- You have installed the aws command-line interface (CLI).

- You have installed the hosted control plane CLI, hcp.

4.1.2. Accessing a hosted cluster on AWS by using the hcp CLI

You can access the hosted cluster by using the hcp command-line interface (CLI) to generate the kubeconfig file.

Procedure

Generate the kubeconfig file by entering the following command:

$ hcp create kubeconfig --namespace <hosted_cluster_namespace> \
  --name <hosted_cluster_name> > <hosted_cluster_name>.kubeconfig

After you save the kubeconfig file, you can access the hosted cluster by entering the following command:

$ oc --kubeconfig <hosted_cluster_name>.kubeconfig get nodes

4.1.3. Creating the Amazon Web Services S3 bucket and S3 OIDC secret

Before you can create and manage hosted clusters on Amazon Web Services (AWS), you must create the S3 bucket and S3 OIDC secret.

Procedure

Create an S3 bucket that has public access to host OIDC discovery documents for your clusters by running the following commands. In each command, replace <bucket_name> with the name of the S3 bucket you are creating.

$ aws s3api create-bucket --bucket <bucket_name> \
  --create-bucket-configuration LocationConstraint=<region> \
  --region <region>

$ aws s3api delete-public-access-block --bucket <bucket_name>

$ aws s3api put-bucket-policy --bucket <bucket_name> \
  --policy file://policy.json

Note

If you are using a Mac computer, you must export the bucket name in order for the policy to work.
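The put-bucket-policy command reads policy.json, so that file must exist before you run it. The following is a minimal sketch of one way to generate it, assuming a policy that grants public read access to the objects in the bucket, which is what hosting OIDC discovery documents requires. The BUCKET_NAME variable and its default value are hypothetical placeholders. The variable is exported so that the same script also works with tools that read it from the environment.

```shell
#!/usr/bin/env bash
# Sketch only: writes a bucket policy that allows public reads of objects.
# BUCKET_NAME and its default value are hypothetical placeholders.
export BUCKET_NAME="${BUCKET_NAME:-example-oidc-bucket}"

cat > policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${BUCKET_NAME}/*"
    }
  ]
}
EOF

# Apply step from the procedure (not run by this script):
#   aws s3api put-bucket-policy --bucket "${BUCKET_NAME}" --policy file://policy.json

# Print the policy so that it can be reviewed before it is applied.
cat policy.json
```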

Create an OIDC S3 secret named hypershift-operator-oidc-provider-s3-credentials for the HyperShift Operator.

Save the secret in the local-cluster namespace.

See the following table to verify that the secret contains the following fields:

Table 4.1. Required fields for the AWS secret

Field name     Description
bucket         Contains an S3 bucket with public access to host OIDC discovery documents for your hosted clusters.
credentials    A reference to a file that contains the credentials of the default profile that can access the bucket. By default, HyperShift only uses the default profile to operate the bucket.
region         Specifies the region of the S3 bucket.

To create an AWS secret, run the following command: