Service Mesh

Service Mesh installation, usage, and release notes

Chapter 1. Service Mesh 3.x

1.1. Service Mesh overview

Red Hat OpenShift Service Mesh manages and secures communication between microservices by providing traffic management, advanced routing, and load balancing. Red Hat OpenShift Service Mesh also enhances security through features like mutual TLS, and offers observability with metrics, logging, and tracing to monitor and troubleshoot applications.

Because Red Hat OpenShift Service Mesh 3.0 releases on a different cadence from OpenShift Container Platform, the Service Mesh documentation is available as a separate documentation set at Red Hat OpenShift Service Mesh.

Chapter 2. Service Mesh 2.x

2.1. About OpenShift Service Mesh

Because Red Hat OpenShift Service Mesh releases on a different cadence from OpenShift Container Platform and because the Red Hat OpenShift Service Mesh Operator supports deploying multiple versions of the ServiceMeshControlPlane, the Service Mesh documentation does not maintain separate documentation sets for minor versions of the product. The current documentation set applies to the most recent version of Service Mesh unless version-specific limitations are called out in a particular topic or for a particular feature.

For additional information about the Red Hat OpenShift Service Mesh life cycle and supported platforms, refer to the Platform Life Cycle Policy.

2.1.1. Introduction to Red Hat OpenShift Service Mesh

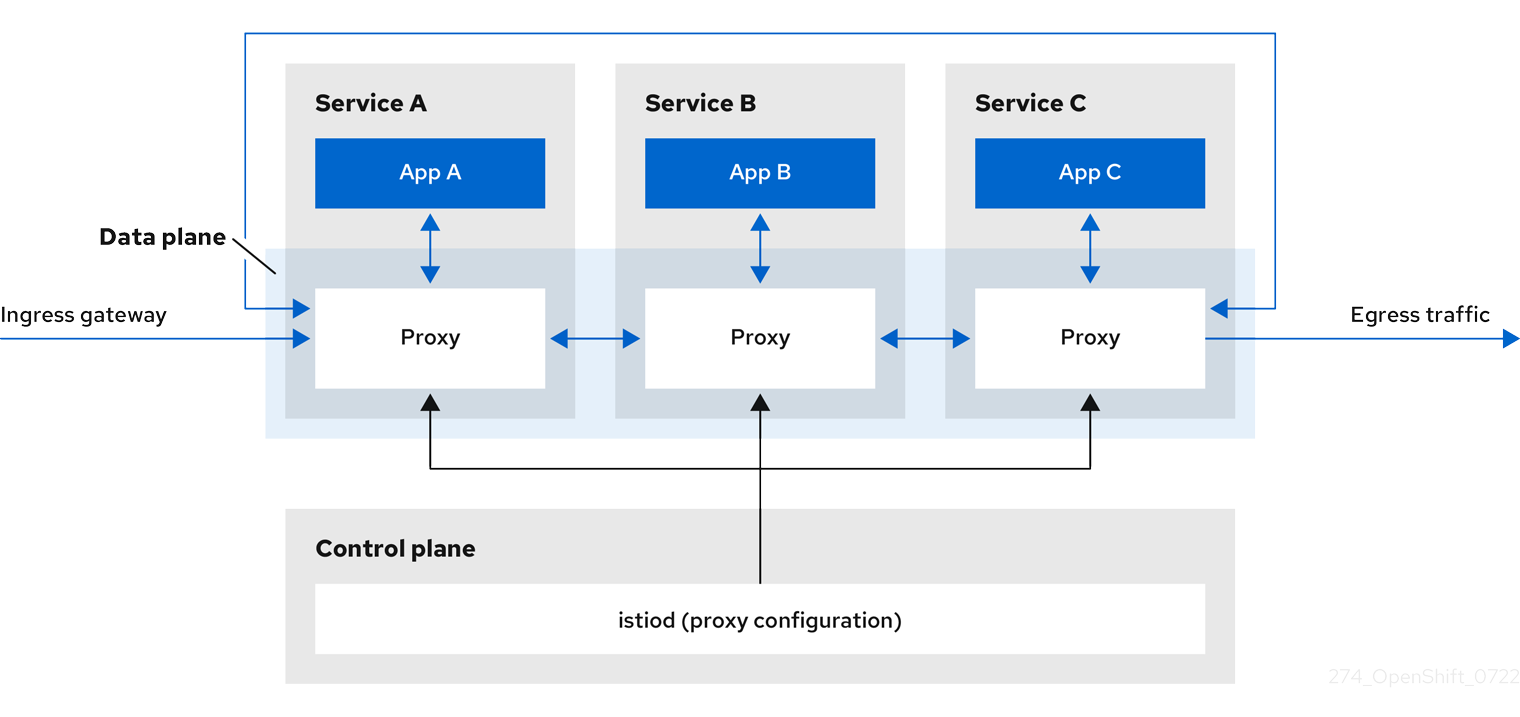

Red Hat OpenShift Service Mesh addresses a variety of problems in a microservice architecture by creating a centralized point of control in an application. It adds a transparent layer on existing distributed applications without requiring any changes to the application code.

Microservice architectures split the work of enterprise applications into modular services, which can make scaling and maintenance easier. However, as an enterprise application built on a microservice architecture grows in size and complexity, it becomes difficult to understand and manage. Service Mesh can address these architecture problems by capturing or intercepting traffic between services, and it can modify or redirect requests or create new requests to other services.

Service Mesh, which is based on the open source Istio project, provides an easy way to create a network of deployed services with discovery, load balancing, service-to-service authentication, failure recovery, metrics, and monitoring. A service mesh also provides more complex operational functionality, including A/B testing, canary releases, access control, and end-to-end authentication.
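For example, a canary release can be expressed as weighted routing between two versions of a service. The following is a minimal sketch that uses the Istio DestinationRule and VirtualService APIs; the reviews service, the v1 and v2 subsets, the version pod labels, and the 90/10 split are hypothetical values, not settings required by Service Mesh.

```yaml
# Hypothetical canary release: 90% of requests go to v1, 10% to v2.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews              # hypothetical service name
  subsets:
  - name: v1
    labels:
      version: v1            # pods labeled version=v1
  - name: v2
    labels:
      version: v2            # pods labeled version=v2
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
      weight: 90             # stable version keeps most of the traffic
    - destination:
        host: reviews
        subset: v2
      weight: 10             # canary version receives a small share
```

Shifting more traffic to the canary is a matter of changing the weights, without redeploying either version.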

Red Hat OpenShift Service Mesh 3 is generally available. For more information, see Red Hat OpenShift Service Mesh 3.0.

2.1.2. Core features

Red Hat OpenShift Service Mesh provides a number of key capabilities uniformly across a network of services:

- Traffic Management - Control the flow of traffic and API calls between services, make calls more reliable, and make the network more robust in the face of adverse conditions.

- Service Identity and Security - Provide services in the mesh with a verifiable identity and provide the ability to protect service traffic as it flows over networks of varying degrees of trustworthiness.

- Policy Enforcement - Apply organizational policy to the interaction between services, ensure access policies are enforced and resources are fairly distributed among consumers. Policy changes are made by configuring the mesh, not by changing application code.

- Telemetry - Gain understanding of the dependencies between services and the nature and flow of traffic between them, providing the ability to quickly identify issues.
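As an illustration of applying security and policy by configuring the mesh rather than changing application code, the following sketch uses Istio PeerAuthentication and AuthorizationPolicy resources. The bookinfo namespace, the app: reviews label, and the frontend service account are hypothetical; adapt them to your own workloads.

```yaml
# Require mutual TLS for all workloads in the hypothetical "bookinfo" namespace.
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: bookinfo
spec:
  mtls:
    mode: STRICT             # reject plain-text traffic to these workloads
---
# Allow only GET requests from the hypothetical "frontend" service account
# to workloads labeled app=reviews; requests that match no ALLOW rule are denied.
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: reviews-viewer
  namespace: bookinfo
spec:
  selector:
    matchLabels:
      app: reviews
  action: ALLOW
  rules:
  - from:
    - source:
        principals: ["cluster.local/ns/bookinfo/sa/frontend"]
    to:
    - operation:
        methods: ["GET"]
```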

2.2. Service Mesh Release Notes

2.2.1. Red Hat OpenShift Service Mesh version 2.6.13

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.13, and includes the ServiceMeshControlPlane resource version updates for 2.6.13.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

The most current version of the Red Hat OpenShift Service Mesh Operator can be used with all supported versions of Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource.
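A minimal sketch of how the version is selected, assuming a control plane named basic in the istio-system namespace (both hypothetical). The spec.version field takes a minor version such as v2.6, and the Operator then deploys the corresponding release that it includes.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                # hypothetical control plane name
  namespace: istio-system    # hypothetical control plane namespace
spec:
  version: v2.6              # Service Mesh minor version to deploy
```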

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.1.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.8 |
| Kiali Server | 1.73 |

2.2.1.2. Fixed issues

- Before this update, the Kiali custom resource (CR) went temporarily into an invalid state after you switched the Service Mesh control plane (SMCP) from MultiTenant to ClusterWide mode. As a consequence, users experienced a brief service disruption. With this release, the changes to the accessible_namespaces and cluster_wide_access settings made when updating from MultiTenant to ClusterWide avoid the temporary invalid state of the Kiali CR, ensuring a smoother transition.

- Before this update, the must-gather command failed because the rsync and tar tools were not available. As a consequence, users were unable to gather information from the cluster. With this release, the must-gather command uses alternative tools for data collection. As a result, the command successfully downloads data, improving cluster information retrieval.

2.2.2. Red Hat OpenShift Service Mesh version 2.6.12

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.12, and includes the ServiceMeshControlPlane resource version updates for 2.6.12.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.2.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.8 |
| Kiali Server | 1.73 |

2.2.2.2. Fixed issues

- Before this update, when you used the Kiali Operator 2.17 or later with Red Hat OpenShift Service Mesh 2.6 and enabled the Kiali add-on in the Service Mesh control plane (SMCP) configuration, the Kiali config map always set the cluster_wide_access value to true. As a consequence, Kiali kept cluster-wide permissions even if you selected a restricted mode in the SMCP. With this release, the Operator correctly sets the cluster_wide_access value based on the mode specified in the SMCP. As a result, Kiali permissions now correctly match your service mesh configuration.

2.2.3. Red Hat OpenShift Service Mesh version 2.6.11

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.11, and includes the ServiceMeshControlPlane resource version updates for 2.6.11.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.3.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73 |

2.2.4. Red Hat OpenShift Service Mesh version 2.6.10

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.10, and includes the ServiceMeshControlPlane resource version updates for 2.6.10.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.4.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73 |

2.2.5. Red Hat OpenShift Service Mesh version 2.6.9

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.9, and includes the following ServiceMeshControlPlane resource version updates: 2.6.9 and 2.5.12.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.5.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.22 |

2.2.6. Red Hat OpenShift Service Mesh version 2.5.12

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.9 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.6.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.22 |

2.2.7. Red Hat OpenShift Service Mesh version 2.6.8

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.8, and includes the following ServiceMeshControlPlane resource version updates: 2.6.8 and 2.5.11.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.7.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.21 |

2.2.8. Red Hat OpenShift Service Mesh version 2.5.11

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.8 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.8.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.21 |

2.2.9. Red Hat OpenShift Service Mesh version 2.6.7

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.7, and includes the following ServiceMeshControlPlane resource version updates: 2.6.7 and 2.5.10.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.9.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.20 |

2.2.10. Red Hat OpenShift Service Mesh version 2.5.10

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.7 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.10.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.20 |

2.2.11. Red Hat OpenShift Service Mesh version 2.6.6

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.6, and includes the following ServiceMeshControlPlane resource version updates: 2.6.6, 2.5.9, and 2.4.15.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.11.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.19 |

2.2.11.2. New features

- With this update, the Operator for Red Hat OpenShift Service Mesh 2.6 is renamed to Red Hat OpenShift Service Mesh 2 to align with the release of Red Hat OpenShift Service Mesh 3.0 and improve clarity.

2.2.12. Red Hat OpenShift Service Mesh version 2.5.9

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.6 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.12.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.19 |

2.2.13. Red Hat OpenShift Service Mesh version 2.4.15

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.6 and is supported on OpenShift Container Platform 4.14 and later.

2.2.13.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali Server | 1.65.20 |

2.2.14. Red Hat OpenShift Service Mesh version 2.6.5

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.5, and includes the following ServiceMeshControlPlane resource version updates: 2.6.5, 2.5.8, and 2.4.14.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

You can use the most current version of the Kiali Operator provided by Red Hat with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.14.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.18 |

2.2.14.2. New features

- Red Hat OpenShift Distributed Tracing Platform Stack is now supported on IBM Z.

2.2.14.3. Fixed issues

- OSSM-8608 Previously, terminating a Container Network Interface (CNI) pod during the installation phase while it was copying binaries could leave Istio-CNI temporary files on the node file system. Repeated occurrences could eventually fill up the node disk space. Now, existing temporary files are deleted before the CNI binary is copied, so that terminating a CNI pod during the installation phase leaves only one temporary file per Istio version on the node file system.

2.2.15. Red Hat OpenShift Service Mesh version 2.5.8

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.5 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.15.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.18 |

2.2.15.2. Fixed issues

- OSSM-8608 Previously, terminating a Container Network Interface (CNI) pod during the installation phase while it was copying binaries could leave Istio-CNI temporary files on the node file system. Repeated occurrences could eventually fill up the node disk space. Now, existing temporary files are deleted before the CNI binary is copied, so that terminating a CNI pod during the installation phase leaves only one temporary file per Istio version on the node file system.

2.2.16. Red Hat OpenShift Service Mesh version 2.4.14

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.5 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.16.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali Server | 1.65.19 |

2.2.16.2. Fixed issues

- OSSM-8608 Previously, terminating a Container Network Interface (CNI) pod during the installation phase while it was copying binaries could leave Istio-CNI temporary files on the node file system. Repeated occurrences could eventually fill up the node disk space. Now, existing temporary files are deleted before the CNI binary is copied, so that terminating a CNI pod during the installation phase leaves only one temporary file per Istio version on the node file system.

2.2.17. Red Hat OpenShift Service Mesh version 2.6.4

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.4, and includes the following ServiceMeshControlPlane resource version updates: 2.6.4, 2.5.7, and 2.4.13.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

The most current version of the Kiali Operator provided by Red Hat can be used with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.17.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.17 |

2.2.18. Red Hat OpenShift Service Mesh version 2.5.7

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.4 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.18.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.17 |

2.2.19. Red Hat OpenShift Service Mesh version 2.4.13

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.4 and is supported on OpenShift Container Platform 4.14 and later. This release addresses Common Vulnerabilities and Exposures (CVEs).

2.2.19.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali Server | 1.65.18 |

2.2.20. Red Hat OpenShift Service Mesh version 2.6.3

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.3, and includes the following ServiceMeshControlPlane resource version updates: 2.6.3, 2.5.6, and 2.4.12.

This release addresses Common Vulnerabilities and Exposures (CVEs) and is supported on OpenShift Container Platform 4.14 and later.

The most current version of the Kiali Operator provided by Red Hat can be used with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.20.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.16 |

2.2.21. Red Hat OpenShift Service Mesh version 2.5.6

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.3, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.14 and later.

2.2.21.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.16 |

2.2.22. Red Hat OpenShift Service Mesh version 2.4.12

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.3, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.14 and later.

2.2.22.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali Server | 1.65.17 |

2.2.23. Red Hat OpenShift Service Mesh version 2.6.2

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.2, and includes the following ServiceMeshControlPlane resource version updates: 2.6.2, 2.5.5, and 2.4.11.

This release addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.14 and later.

The most current version of the Kiali Operator provided by Red Hat can be used with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.23.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.7 |
| Kiali Server | 1.73.15 |

2.2.23.2. New features

- The cert-manager Operator for Red Hat OpenShift is now supported on IBM Power, IBM Z, and IBM® LinuxONE.

2.2.23.3. Fixed issues

- OSSM-8099 Previously, there was an issue supporting persistent session labels when the endpoints were in the draining phase. Now, there is a method of handling draining endpoints for the stateful header sessions.

- OSSM-8001 Previously, when runAsUser and runAsGroup were set to the same value in pods, the proxy GID was incorrectly set to match the container’s GID, causing traffic interception issues with iptables rules applied by Istio CNI. Now, containers can have the same value for runAsUser and runAsGroup, and iptables rules apply correctly.

- OSSM-8074 Previously, the Kiali Operator failed to install the Kiali server when a Service Mesh had a numeric-only namespace (for example, 12345). Now, namespaces with only numerals work correctly.

2.2.24. Red Hat OpenShift Service Mesh version 2.5.5

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.2, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.14 and later.

2.2.24.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.15 |

2.2.24.2. Fixed issues

- OSSM-8001 Previously, when the runAsUser and runAsGroup parameters were set to the same value in pods, the proxy GID was incorrectly set to match the container’s GID, causing traffic interception issues with iptables rules applied by Istio CNI. Now, containers can have the same value for the runAsUser and runAsGroup parameters, and iptables rules apply correctly.

- OSSM-8074 Previously, the Kiali Operator provided by Red Hat failed to install the Kiali Server when a Service Mesh had a numeric-only namespace (for example, 12345). Now, namespaces with only numerals work correctly.

2.2.25. Red Hat OpenShift Service Mesh version 2.4.11

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.2, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.14 and later.

2.2.25.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali Server | 1.65.16 |

2.2.25.2. Fixed issues

- OSSM-8001 Previously, when the runAsUser and runAsGroup parameters were set to the same value in pods, the proxy GID was incorrectly set to match the container’s GID, causing traffic interception issues with iptables rules applied by Istio CNI. Now, containers can have the same value for the runAsUser and runAsGroup parameters, and iptables rules apply correctly.

- OSSM-8074 Previously, the Kiali Operator provided by Red Hat failed to install the Kiali Server when a Service Mesh had a numeric-only namespace (for example, 12345). Now, namespaces with only numerals work correctly.

2.2.26. Red Hat OpenShift Service Mesh version 2.6.1

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.1, and includes the following ServiceMeshControlPlane resource version updates: 2.6.1, 2.5.4, and 2.4.10. This release addresses Common Vulnerabilities and Exposures (CVEs), contains a bug fix, and is supported on OpenShift Container Platform 4.14 and later.

The most current version of the Kiali Operator provided by Red Hat can be used with all supported versions of Red Hat OpenShift Service Mesh. The version of Service Mesh is specified by using the ServiceMeshControlPlane resource. The version of Service Mesh automatically ensures a compatible version of Kiali.

2.2.26.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.5 |
| Kiali Server | 1.73.14 |

2.2.26.2. Fixed issues

- OSSM-6766 Previously, the OpenShift Service Mesh Console (OSSMC) plugin failed if the user wanted to update a namespace (for example, enabling or disabling injection), or create any Istio object (for example, creating traffic policies). Now, the OpenShift Service Mesh Console (OSSMC) plugin does not fail if the user updates a namespace or creates any Istio object.

2.2.27. Red Hat OpenShift Service Mesh version 2.5.4

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.1, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.14 and later.

2.2.27.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali Server | 1.73.14 |

2.2.28. Red Hat OpenShift Service Mesh version 2.4.10

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.1, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.14 and later.

2.2.28.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali Server | 1.65.15 |

2.2.29. Red Hat OpenShift Service Mesh version 2.6.0

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.6.0, and includes the following ServiceMeshControlPlane resource version updates: 2.6.0, 2.5.3, and 2.4.9. This release adds new features, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.14 and later.

This release ends maintenance support for Red Hat OpenShift Service Mesh version 2.3. If you are using Service Mesh version 2.3, you should update to a supported version.

Red Hat OpenShift Service Mesh is designed for FIPS. Service Mesh uses the RHEL cryptographic libraries that have been submitted to NIST for FIPS 140-2/140-3 Validation on the x86_64, ppc64le, and s390x architectures. For more information about the NIST validation program, see Cryptographic Module Validation Program. For the latest NIST status for the individual versions of RHEL cryptographic libraries that have been submitted for validation, see Compliance Activities and Government Standards.

2.2.29.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.20.8 |
| Envoy Proxy | 1.28.5 |
| Kiali | 1.73.9 |

2.2.29.2. Istio 1.20 support

Service Mesh 2.6 is based on Istio 1.20, which provides new features and product enhancements, including:

- Native sidecars are supported on OpenShift Container Platform 4.16 or later.

- Traffic mirroring in Istio 1.20 now supports multiple destinations. This feature enables the mirroring of traffic to various endpoints, allowing for simultaneous observation across different service versions or configurations.
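The following sketch shows both features. It assumes the ENABLE_NATIVE_SIDECARS environment variable on the istiod (pilot) container as the switch for native sidecars, and the mirrors field that Istio 1.20 added to HTTP routes for multiple mirror destinations. The basic control plane name, the reviews host, and its v1, v2, and v3 subsets (which would need a matching DestinationRule) are hypothetical.

```yaml
# Sketch: enable native sidecars (OpenShift Container Platform 4.16 or later)
# by setting an environment variable on the istiod (pilot) container.
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                # hypothetical control plane name
  namespace: istio-system
spec:
  version: v2.6
  runtime:
    components:
      pilot:
        container:
          env:
            ENABLE_NATIVE_SIDECARS: "true"   # env values are strings
---
# Sketch: serve traffic from reviews v1 and mirror it to two other versions.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews                  # hypothetical service
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
    mirrors:                 # list of mirror targets (Istio 1.20 and later)
    - destination:
        host: reviews
        subset: v2
    - destination:
        host: reviews
        subset: v3
      percentage:
        value: 50.0          # mirror only half of the requests to v3
```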

While Red Hat OpenShift Service Mesh supports many Istio 1.20 features, the following exceptions should be noted:

- Ambient mesh is not supported

- QuickAssist Technology (QAT) PrivateKeyProvider in Istio is not supported

2.2.29.3. Istio and Kiali bundle image name changes

This release updates the Istio bundle image name and the Kiali bundle image name to better align with Red Hat naming conventions.

- Istio bundle image name: openshift-service-mesh/istio-operator-bundle

- Kiali bundle image name: openshift-service-mesh/kiali-operator-bundle

2.2.29.4. Integration with Red Hat OpenShift Distributed Tracing Platform and Red Hat build of OpenTelemetry

This release introduces generally available integration with the Red Hat OpenShift Distributed Tracing Platform and Red Hat build of OpenTelemetry tracing extension providers.

You can expose tracing data to the Red Hat OpenShift Distributed Tracing Platform by appending a named element with the opentelemetry provider to the spec.meshConfig.extensionProviders field in the ServiceMeshControlPlane resource. A Telemetry custom resource then configures Istio proxies to collect trace spans and send them to the OpenTelemetry Collector endpoint.
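A minimal sketch of this configuration, assuming an OpenTelemetry Collector Service named otel-collector in the istio-system namespace that accepts OTLP over gRPC on port 4317. The provider name otel, the control plane name, and the 100% sampling rate are illustrative choices, and placing the Telemetry resource (telemetry.istio.io/v1alpha1 in Istio 1.20) in the control plane namespace is assumed here to apply it to the whole mesh.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                # hypothetical control plane name
  namespace: istio-system
spec:
  meshConfig:
    extensionProviders:
    - name: otel             # hypothetical provider name, referenced below
      opentelemetry:
        port: 4317           # OTLP/gRPC port of the collector
        service: otel-collector.istio-system.svc.cluster.local  # hypothetical collector Service
---
apiVersion: telemetry.istio.io/v1alpha1
kind: Telemetry
metadata:
  name: mesh-default
  namespace: istio-system    # control plane namespace, assumed to apply mesh-wide
spec:
  tracing:
  - providers:
    - name: otel             # must match the extension provider name
    randomSamplingPercentage: 100   # trace every request; lower this in production
```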

You can create a Red Hat build of OpenTelemetry instance in a mesh namespace and configure it to send tracing data to a tracing platform backend service.
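The following is a hedged sketch of such an instance. It assumes the v1beta1 OpenTelemetryCollector API (older Operator releases use v1alpha1, where config is a string) and a hypothetical Tempo distributor Service as the tracing backend. The Operator exposes the collector through a Service named after the instance with a -collector suffix, here otel-collector.

```yaml
apiVersion: opentelemetry.io/v1beta1
kind: OpenTelemetryCollector
metadata:
  name: otel                 # exposed as the otel-collector Service
  namespace: istio-system    # a mesh namespace
spec:
  mode: deployment
  config:
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317   # receive spans from Istio proxies
    exporters:
      otlp:
        # hypothetical tracing backend Service
        endpoint: tempo-sample-distributor.tracing-system.svc.cluster.local:4317
        tls:
          insecure: true     # plain-text export, for illustration only
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [otlp]
```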

2.2.29.5. Red Hat OpenShift Distributed Tracing Platform (Jaeger) default setting change

This release disables Red Hat OpenShift Distributed Tracing Platform (Jaeger) by default for new instances of the ServiceMeshControlPlane resource.

When updating existing instances of the ServiceMeshControlPlane resource to Red Hat OpenShift Service Mesh version 2.6, Distributed Tracing Platform (Jaeger) remains enabled by default.
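If you update an existing instance and do not want to keep Distributed Tracing Platform (Jaeger), you can turn it off explicitly. A minimal sketch, assuming the spec.tracing.type field of the maistra.io/v2 ServiceMeshControlPlane API, where None disables the Jaeger integration and Jaeger keeps it; the control plane name and namespace are hypothetical.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                # hypothetical control plane name
  namespace: istio-system
spec:
  version: v2.6
  tracing:
    type: None               # set to Jaeger to keep the existing behavior
```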

Red Hat OpenShift Service Mesh 2.6 is the last release that includes support for Red Hat OpenShift Distributed Tracing Platform (Jaeger) and OpenShift Elasticsearch Operator. Both Distributed Tracing Platform (Jaeger) and OpenShift Elasticsearch Operator will be removed in the next release. If you are currently using Distributed Tracing Platform (Jaeger) and OpenShift Elasticsearch Operator, you need to switch to Red Hat OpenShift Distributed Tracing Platform and Red Hat build of OpenTelemetry.

2.2.29.6. Gateway API use is generally available for Red Hat OpenShift Service Mesh cluster-wide deployments

This release introduces General Availability for using the Kubernetes Gateway API version 1.0.0 with Red Hat OpenShift Service Mesh 2.6. This API use is limited to Red Hat OpenShift Service Mesh. The Gateway API custom resource definitions (CRDs) are not supported.

Gateway API is now enabled by default if cluster-wide mode is enabled (spec.mode: ClusterWide). It can be enabled even if the custom resource definitions (CRDs) are not installed in the cluster.
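A minimal sketch of a cluster-wide control plane together with a Gateway API resource, assuming the Gateway API v1.0.0 CRDs are already installed and that Istio provides a GatewayClass named istio. The control plane name, the bookinfo namespace, and the example-gateway name are hypothetical.

```yaml
# Cluster-wide control plane: Gateway API support is on by default in 2.6.
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                # hypothetical control plane name
  namespace: istio-system
spec:
  version: v2.6
  mode: ClusterWide
---
# Hypothetical Gateway; creating it requires the Gateway API CRDs.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: example-gateway
  namespace: bookinfo
spec:
  gatewayClassName: istio    # GatewayClass assumed to be provided by Istio
  listeners:
  - name: http
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: Same           # only routes in the same namespace can attach
```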

Gateway API for multitenant mesh deployments is still in Technology Preview.

Refer to the following table to determine which Gateway API version should be installed with the OpenShift Service Mesh version you are using:

| Service Mesh Version | Istio Version | Gateway API Version | Notes |
|---|---|---|---|
| 2.6 | 1.20.x | 1.0.0 | N/A |
| 2.5.x | 1.18.x | 0.6.2 | Use the experimental branch because |
| 2.4.x | 1.16.x | 0.5.1 | For multitenant mesh deployment, all Gateway API CRDs must be present. Use the experimental branch. |

You can disable this feature by setting the PILOT_ENABLE_GATEWAY_API environment variable to false in the ServiceMeshControlPlane resource.
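A sketch of that setting, assuming it is passed to the istiod (pilot) container through spec.runtime.components.pilot.container.env. Environment variable values in this map are strings, so false is quoted. The control plane name and namespace are hypothetical.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                # hypothetical control plane name
  namespace: istio-system
spec:
  version: v2.6
  mode: ClusterWide
  runtime:
    components:
      pilot:
        container:
          env:
            PILOT_ENABLE_GATEWAY_API: "false"   # turn off Gateway API support in istiod
```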

2.2.29.7. Fixed issues

- OSSM-6754 Previously, in OpenShift Container Platform 4.15, when users navigated to a Service details page, clicked the Service Mesh tab, and refreshed the page, the Service Mesh details page remained stuck on Service Mesh content information, even though the active tab was the default Details tab. Now, after a refresh, users can navigate through the different tabs of the Service details page without issue.

- OSSM-2101 Previously, the Istio Operator never deleted the istio-cni-node DaemonSet and other CNI resources when they were no longer needed. Now, after upgrading the Operator, if there is at least one SMCP installed in the cluster, the Operator reconciles this SMCP, and then deletes all unused CNI installations (even very old CNI versions as early as v2.0).

2.2.29.8. Kiali known issues

OSSM-6099 Installing the OpenShift Service Mesh Console (OSSMC) plugin fails on an IPv6 cluster.

Workaround: Install the OSSMC plugin on an IPv4 cluster.

2.2.30. Red Hat OpenShift Service Mesh version 2.5.3

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.0, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.12 and later.

2.2.30.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali | 1.73.9 |

2.2.31. Red Hat OpenShift Service Mesh version 2.4.9

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.6.0, addresses Common Vulnerabilities and Exposures (CVEs), and is supported on OpenShift Container Platform 4.12 and later.

2.2.31.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali | 1.65.11 |

2.2.32. Red Hat OpenShift Service Mesh version 2.5.2

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.5.2, and includes the following ServiceMeshControlPlane resource version updates: 2.5.2, 2.4.8 and 2.3.12. This release addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

2.2.32.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali | 1.73.8 |

2.2.32.2. Fixed issues

- OSSM-6331 Previously, the smcp.general.logging.componentLevels spec accepted invalid LogLevel values, and the ServiceMeshControlPlane resource was still created. Now, the terminal shows an error message if an invalid value is used, and the control plane is not created.

- OSSM-6290 Previously, the Project filter drop-down of the Istio Config list page did not work correctly. All istio config items were displayed from all namespaces even if you selected a specific project from the drop-down menu. Now, only the istio config items that belong to the selected project in the filter drop-down are displayed.

- OSSM-6298 Previously, when you clicked an item reference within the OpenShift Service Mesh Console (OSSMC) plugin, the console sometimes performed multiple redirects before opening the desired page. As a result, navigating back to the previous page that was open in the console caused your web browser to open the wrong page. Now, these redirects do not occur, and clicking Back in a web browser opens the correct page.

- OSSM-6299 Previously, in OpenShift Container Platform 4.15, when you clicked the Node graph menu option of any node menu within the traffic graph, the node graph was not displayed. Instead, the page refreshed with the same traffic graph. Now, clicking the Node graph menu option correctly displays the node graph.

- OSSM-6267 Previously, configuring a data source in Red Hat OpenShift Service Mesh 2.5 Grafana caused a data query authentication error, and users could not view data in the Istio service and workload dashboards. Now, upgrading an existing 2.5 SMCP to version 2.5.2 or later resolves the Grafana error.

2.2.33. Red Hat OpenShift Service Mesh version 2.4.8

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.5.2, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

The most current version of the Red Hat OpenShift Service Mesh Operator can be used with all supported versions of Service Mesh. The version of Service Mesh is specified using the ServiceMeshControlPlane resource.

2.2.33.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali | 1.65.11 |

2.2.34. Red Hat OpenShift Service Mesh version 2.3.12

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.5.2, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

The most current version of the Red Hat OpenShift Service Mesh Operator can be used with all supported versions of Service Mesh. The version of Service Mesh is specified using the ServiceMeshControlPlane resource.

2.2.34.1. Component updates

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.11 |
| Kiali | 1.57.14 |

2.2.35. Previous releases

These previous releases added features and improvements.

2.2.35.1. New features Red Hat OpenShift Service Mesh version 2.5.1

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.5.1, and includes the following ServiceMeshControlPlane resource version updates: 2.5.1, 2.4.7 and 2.3.11.

This release addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

2.2.35.1.1. Component versions for Red Hat OpenShift Service Mesh version 2.5.1

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali | 1.73.7 |

2.2.35.2. New features Red Hat OpenShift Service Mesh version 2.5

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.5.0, and includes the following ServiceMeshControlPlane resource version updates: 2.5.0, 2.4.6 and 2.3.10.

This release adds new features, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

This release ends maintenance support for OpenShift Service Mesh version 2.2. If you are using OpenShift Service Mesh version 2.2, you should update to a supported version.

2.2.35.2.1. Component versions for Red Hat OpenShift Service Mesh version 2.5

| Component | Version |
|---|---|
| Istio | 1.18.7 |
| Envoy Proxy | 1.26.8 |
| Kiali | 1.73.4 |

2.2.35.2.2. Istio 1.18 support

Service Mesh 2.5 is based on Istio 1.18, which brings in new features and product enhancements. While Red Hat OpenShift Service Mesh supports many Istio 1.18 features, the following exceptions should be noted:

- Ambient mesh is not supported

- QuickAssist Technology (QAT) PrivateKeyProvider in Istio is not supported

2.2.35.2.3. Cluster-Wide mesh migration

This release adds documentation for migrating from a multitenant mesh to a cluster-wide mesh. For more information, see the following documentation:

- "About migrating to a cluster-wide mesh"

- "Excluding namespaces from a cluster-wide mesh"

- "Defining which namespaces receive sidecar injection in a cluster-wide mesh"

- "Excluding individual pods from a cluster-wide mesh"

2.2.35.2.4. Red Hat OpenShift Service Mesh Operator on ARM-based clusters

This release provides the Red Hat OpenShift Service Mesh Operator on ARM-based clusters as a generally available feature.

2.2.35.2.5. Integration with Red Hat OpenShift Distributed Tracing Platform (Tempo) Stack

This release introduces a generally available integration of tracing extension providers. You can expose tracing data to the Red Hat OpenShift Distributed Tracing Platform (Tempo) stack by appending a named element and the zipkin provider to the spec.meshConfig.extensionProviders specification. A Telemetry custom resource then configures Istio proxies to collect trace spans and send them to the Tempo distributor service endpoint.

Red Hat OpenShift Distributed Tracing Platform (Tempo) Stack is not supported on IBM Z.
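The integration described above can be sketched as two resources: a zipkin extension provider in the ServiceMeshControlPlane, and a Telemetry resource that selects it. The provider name `tempo`, the distributor service address, and the port are hypothetical placeholders; substitute the endpoint of your own TempoStack instance.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                          # placeholder control plane name
  namespace: istio-system
spec:
  meshConfig:
    extensionProviders:
      - name: tempo                    # hypothetical provider name, referenced below
        zipkin:
          # hypothetical Tempo distributor service; replace with your TempoStack endpoint
          service: tempo-sample-distributor.tracing-system.svc.cluster.local
          port: 9411                   # assumed Zipkin receiver port
---
apiVersion: telemetry.istio.io/v1alpha1
kind: Telemetry
metadata:
  name: mesh-default
  namespace: istio-system              # placed in the control plane namespace to apply mesh-wide
spec:
  tracing:
    - providers:
        - name: tempo                  # must match the extension provider name
      randomSamplingPercentage: 100    # samples every request; consider lowering this in production
```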

2.2.35.2.6. OpenShift Service Mesh Console plugin

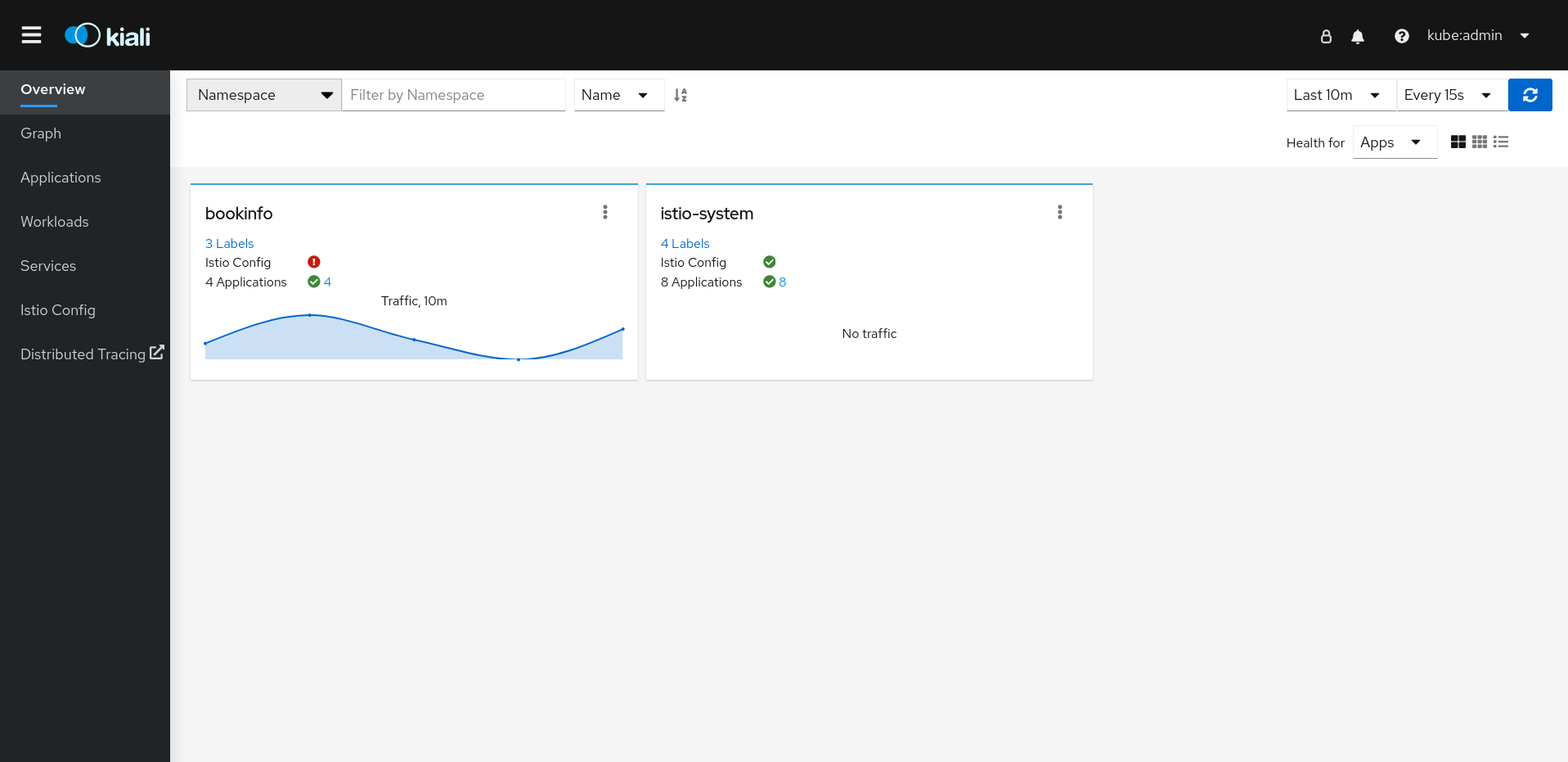

This release introduces a generally available version of the OpenShift Service Mesh Console (OSSMC) plugin.

The OSSMC plugin is an extension to the OpenShift Console that provides visibility into your Service Mesh. With the OSSMC plugin installed, a new Service Mesh menu option is available on the navigation pane of the web console, as well as new Service Mesh tabs that enhance existing Workloads and Service console pages.

The features of the OSSMC plugin are very similar to those of the standalone Kiali Console. The OSSMC plugin does not replace the Kiali Console, and after installing the OSSMC plugin, you can still access the standalone Kiali Console.

2.2.35.2.7. Istio OpenShift Routing (IOR) default setting change

The default setting for Istio OpenShift Routing (IOR) has changed. Starting with this release, automatic routes are disabled by default for new instances of the ServiceMeshControlPlane resource.

For new instances of the ServiceMeshControlPlane resource, you can use automatic routes by setting the enabled field to true in the gateways.openshiftRoute specification of the ServiceMeshControlPlane resource.

Example ServiceMeshControlPlane resource
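A minimal sketch of re-enabling automatic routes on a new control plane; the resource name and namespace are placeholders.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic              # placeholder control plane name
  namespace: istio-system  # placeholder control plane namespace
spec:
  gateways:
    openshiftRoute:
      enabled: true        # turns IOR automatic routes back on for this control plane
```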

When updating existing instances of the ServiceMeshControlPlane resource to Red Hat OpenShift Service Mesh version 2.5, automatic routes remain enabled by default.

2.2.35.2.8. Istio proxy concurrency configuration enhancement

The concurrency parameter in the networking.istio API configures how many worker threads the Istio proxy runs.

For consistency across deployments, Istio now configures the concurrency parameter based on the CPU limit allocated to the proxy container. For example, a limit of 2500m sets the concurrency parameter to 3. If you set the concurrency parameter to a different value, Istio uses that value to configure how many threads the proxy runs instead of using the CPU limit.

Previously, the default setting for the parameter was 2.
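To pin the worker thread count instead of deriving it from the CPU limit, one option is the upstream Istio ProxyConfig resource, which Service Mesh supports apart from its image field. The sketch below is illustrative only: the resource name, namespace, and `app: ratings` workload label are hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: ProxyConfig
metadata:
  name: ratings-proxyconfig   # hypothetical name
  namespace: bookinfo         # hypothetical namespace
spec:
  selector:
    matchLabels:
      app: ratings            # hypothetical workload label
  concurrency: 2              # explicit value overrides the CPU-limit-based default
```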

2.2.35.2.9. Gateway API CRD versions

OpenShift Container Platform Gateway API support is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

A new version of the Gateway API custom resource definition (CRD) is now available. Refer to the following table to determine which Gateway API version should be installed with the OpenShift Service Mesh version you are using:

| Service Mesh Version | Istio Version | Gateway API Version | Notes |
|---|---|---|---|
| 2.5.x | 1.18.x | 0.6.2 | Use the experimental branch because |
| 2.4.x | 1.16.x | 0.5.1 | For multitenant mesh deployment, all Gateway API CRDs must be present. Use the experimental branch. |

2.2.35.3. New features Red Hat OpenShift Service Mesh version 2.4.7

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.5.1, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

2.2.35.3.1. Component versions for Red Hat OpenShift Service Mesh version 2.4.7

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali | 1.65.11 |

2.2.35.4. New features Red Hat OpenShift Service Mesh version 2.4.6

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.5.0, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

2.2.35.4.1. Component versions for Red Hat OpenShift Service Mesh version 2.4.6

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali | 1.65.11 |

2.2.35.5. New features Red Hat OpenShift Service Mesh version 2.4.5

This release of Red Hat OpenShift Service Mesh updates the Red Hat OpenShift Service Mesh Operator version to 2.4.5, and includes the following ServiceMeshControlPlane resource version updates: 2.4.5, 2.3.9 and 2.2.12.

This release addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.11 and later.

2.2.35.5.1. Component versions included in Red Hat OpenShift Service Mesh version 2.4.5

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Kiali | 1.65.11 |

2.2.35.6. New features Red Hat OpenShift Service Mesh version 2.4.4

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.11 and later versions.

2.2.35.6.1. Component versions included in Red Hat OpenShift Service Mesh version 2.4.4

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.12 |
| Jaeger | 1.47.0 |
| Kiali | 1.65.10 |

2.2.35.7. New features Red Hat OpenShift Service Mesh version 2.4.3

- The Red Hat OpenShift Service Mesh Operator is now available on ARM-based clusters as a Technology Preview feature.

- The envoyExtAuthzGrpc field has been added, which is used to configure an external authorization provider using the gRPC API.

- Common Vulnerabilities and Exposures (CVEs) have been addressed.

- This release is supported on OpenShift Container Platform 4.10 and newer versions.

2.2.35.7.1. Component versions included in Red Hat OpenShift Service Mesh version 2.4.3

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.10 |
| Jaeger | 1.42.0 |
| Kiali | 1.65.8 |

2.2.35.7.2. Red Hat OpenShift Service Mesh operator to ARM-based clusters

The Red Hat OpenShift Service Mesh Operator on ARM-based clusters is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

This release makes the Red Hat OpenShift Service Mesh Operator available on ARM-based clusters as a Technology Preview feature. Images are available for Istio, Envoy, Prometheus, Kiali, and Grafana. Images are not available for Jaeger, so Jaeger must be disabled as a Service Mesh add-on.

2.2.35.7.3. Remote Procedure Calls (gRPC) API support for external authorization configuration

This enhancement adds the envoyExtAuthzGrpc field to configure an external authorization provider using the gRPC API.
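A minimal sketch of registering a gRPC external authorization provider in the mesh configuration. The provider name, service address, and port are hypothetical; an AuthorizationPolicy with `action: CUSTOM` would then reference the provider by name.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                                   # placeholder control plane name
  namespace: istio-system
spec:
  meshConfig:
    extensionProviders:
      - name: my-ext-authz-grpc                 # hypothetical provider name
        envoyExtAuthzGrpc:
          service: ext-authz.foo.svc.cluster.local  # hypothetical gRPC authorization service
          port: 9000                                # hypothetical gRPC port
```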

2.2.35.8. New features Red Hat OpenShift Service Mesh version 2.4.2

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.8.1. Component versions included in Red Hat OpenShift Service Mesh version 2.4.2

| Component | Version |
|---|---|
| Istio | 1.16.7 |
| Envoy Proxy | 1.24.10 |
| Jaeger | 1.42.0 |
| Kiali | 1.65.7 |

2.2.35.9. New features Red Hat OpenShift Service Mesh version 2.4.1

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.9.1. Component versions included in Red Hat OpenShift Service Mesh version 2.4.1

| Component | Version |
|---|---|
| Istio | 1.16.5 |
| Envoy Proxy | 1.24.8 |
| Jaeger | 1.42.0 |
| Kiali | 1.65.7 |

2.2.35.10. New features Red Hat OpenShift Service Mesh version 2.4

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.10.1. Component versions included in Red Hat OpenShift Service Mesh version 2.4

| Component | Version |
|---|---|
| Istio | 1.16.5 |
| Envoy Proxy | 1.24.8 |
| Jaeger | 1.42.0 |
| Kiali | 1.65.6 |

2.2.35.10.2. Cluster-wide deployments

This enhancement introduces a generally available version of cluster-wide deployments. A cluster-wide deployment contains a service mesh control plane that monitors resources for an entire cluster. The control plane uses a single query across all namespaces to monitor each Istio or Kubernetes resource that affects the mesh configuration. Reducing the number of queries the control plane performs in a cluster-wide deployment improves performance.

2.2.35.10.3. Support for discovery selectors

This enhancement introduces a generally available version of the meshConfig.discoverySelectors field, which can be used in cluster-wide deployments to limit the services the service mesh control plane can discover.
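A cluster-wide control plane that limits discovery with a selector might look like the following sketch. The `istio-discovery: enabled` namespace label is a hypothetical example; only namespaces carrying a matching label would be visible to the control plane.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                       # placeholder control plane name
  namespace: istio-system
spec:
  version: v2.4
  mode: ClusterWide                 # istiod queries each resource kind once across all namespaces
  meshConfig:
    discoverySelectors:
      - matchLabels:
          istio-discovery: enabled  # hypothetical namespace label
```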

2.2.35.10.4. Integration with cert-manager istio-csr

With this update, Red Hat OpenShift Service Mesh integrates with the cert-manager controller and the istio-csr agent. cert-manager adds certificates and certificate issuers as resource types in Kubernetes clusters, and simplifies the process of obtaining, renewing, and using those certificates. cert-manager provides and rotates an intermediate CA certificate for Istio. Integration with istio-csr enables users to delegate signing certificate requests from Istio proxies to cert-manager. ServiceMeshControlPlane v2.4 accepts CA certificates provided by cert-manager as the cacerts secret.

Integration with cert-manager and istio-csr is not supported on IBM Power®, IBM Z®, and IBM® LinuxONE.

2.2.35.10.5. Integration with external authorization systems

This enhancement introduces a generally available method of integrating Red Hat OpenShift Service Mesh with external authorization systems by using the action: CUSTOM field of the AuthorizationPolicy resource. Use the envoyExtAuthzHttp field to delegate the access control to an external authorization system.
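The following sketch pairs an HTTP external authorization provider with an AuthorizationPolicy that delegates to it. The provider name, service address, port, header, namespace, workload label, and path are all hypothetical placeholders.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                                     # placeholder control plane name
  namespace: istio-system
spec:
  meshConfig:
    extensionProviders:
      - name: my-ext-authz-http                   # hypothetical provider name
        envoyExtAuthzHttp:
          service: ext-authz.foo.svc.cluster.local  # hypothetical HTTP authorization service
          port: 8000                                # hypothetical port
          includeRequestHeadersInCheck: ["x-ext-authz"]  # headers forwarded to the authorizer
---
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: ext-authz
  namespace: foo                                  # hypothetical application namespace
spec:
  selector:
    matchLabels:
      app: httpbin                                # hypothetical workload label
  action: CUSTOM                                  # delegate the decision to the provider below
  provider:
    name: my-ext-authz-http                       # must match the extension provider name
  rules:
    - to:
        - operation:
            paths: ["/headers"]                   # only these requests are sent for authorization
```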

2.2.35.10.6. Integration with external Prometheus installation

This enhancement introduces a generally available version of the Prometheus extension provider. You can expose metrics to the OpenShift Container Platform monitoring stack or a custom Prometheus installation by setting the value of the extensionProviders field to prometheus in the spec.meshConfig specification. A Telemetry object then configures Istio proxies to collect traffic metrics. Service Mesh only supports the Telemetry API for Prometheus metrics.
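A minimal sketch of the two pieces involved: a `prometheus` extension provider in the control plane, and a mesh-wide Telemetry object that enables it. The resource names are placeholders.

```yaml
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                  # placeholder control plane name
  namespace: istio-system
spec:
  meshConfig:
    extensionProviders:
      - name: prometheus
        prometheus: {}         # no extra settings are required for this provider
---
apiVersion: telemetry.istio.io/v1alpha1
kind: Telemetry
metadata:
  name: enable-prometheus-metrics  # placeholder name
  namespace: istio-system          # control plane namespace, so it applies mesh-wide
spec:
  metrics:
    - providers:
        - name: prometheus     # must match the extension provider name
```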

2.2.35.10.7. Single stack IPv6 support

This enhancement introduces generally available support for single-stack IPv6 clusters, providing access to a broader range of IP addresses. Dual-stack IPv4/IPv6 clusters are not supported.

Single stack IPv6 support is not available on IBM Power®, IBM Z®, and IBM® LinuxONE.

2.2.35.10.8. OpenShift Container Platform Gateway API support

OpenShift Container Platform Gateway API support is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

This enhancement introduces an updated Technology Preview version of the OpenShift Container Platform Gateway API. By default, the OpenShift Container Platform Gateway API is disabled.

2.2.35.10.8.1. Enabling OpenShift Container Platform Gateway API

To enable the OpenShift Container Platform Gateway API, set the value of the enabled field to true in the techPreview.gatewayAPI specification of the ServiceMeshControlPlane resource.

```yaml
spec:
  techPreview:
    gatewayAPI:
      enabled: true
```

Previously, environment variables were used to enable the Gateway API.

2.2.35.10.9. Control plane deployment on infrastructure nodes

Service Mesh control plane deployment is now supported and documented on OpenShift infrastructure nodes. For more information, see the following documentation:

- Configuring all Service Mesh control plane components to run on infrastructure nodes

- Configuring individual Service Mesh control plane components to run on infrastructure nodes

2.2.35.10.10. Istio 1.16 support

Service Mesh 2.4 is based on Istio 1.16, which brings in new features and product enhancements. While many Istio 1.16 features are supported, the following exceptions should be noted:

- HBONE protocol for sidecars is an experimental feature that is not supported.

- Service Mesh on ARM64 architecture is not supported.

- OpenTelemetry API remains a Technology Preview feature.

2.2.35.11. New features Red Hat OpenShift Service Mesh version 2.3.11

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.5.1, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

2.2.35.11.1. Component versions for Red Hat OpenShift Service Mesh version 2.3.11

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.11 |
| Kiali | 1.57.14 |

2.2.35.12. New features Red Hat OpenShift Service Mesh version 2.3.10

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.5.0, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.12 and later.

2.2.35.12.1. Component versions for Red Hat OpenShift Service Mesh version 2.3.10

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.11 |
| Kiali | 1.57.14 |

2.2.35.13. New features Red Hat OpenShift Service Mesh version 2.3.9

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.4.5, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.11 and later.

2.2.35.13.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.9

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.11 |
| Jaeger | 1.47.0 |
| Kiali | 1.57.14 |

2.2.35.14. New features Red Hat OpenShift Service Mesh version 2.3.8

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.11 and later versions.

2.2.35.14.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.8

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.11 |
| Jaeger | 1.47.0 |
| Kiali | 1.57.13 |

2.2.35.15. New features Red Hat OpenShift Service Mesh version 2.3.7

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.15.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.7

| Component | Version |
|---|---|
| Istio | 1.14.6 |
| Envoy Proxy | 1.22.11 |
| Jaeger | 1.42.0 |
| Kiali | 1.57.11 |

2.2.35.16. New features Red Hat OpenShift Service Mesh version 2.3.6

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.16.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.6

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.11 |
| Jaeger | 1.42.0 |
| Kiali | 1.57.10 |

2.2.35.17. New features Red Hat OpenShift Service Mesh version 2.3.5

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.17.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.5

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.9 |
| Jaeger | 1.42.0 |
| Kiali | 1.57.10 |

2.2.35.18. New features Red Hat OpenShift Service Mesh version 2.3.4

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.18.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.4

| Component | Version |
|---|---|
| Istio | 1.14.6 |
| Envoy Proxy | 1.22.9 |
| Jaeger | 1.42.0 |
| Kiali | 1.57.9 |

2.2.35.19. New features Red Hat OpenShift Service Mesh version 2.3.3

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.19.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.3

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.9 |
| Jaeger | 1.42.0 |
| Kiali | 1.57.7 |

2.2.35.20. New features Red Hat OpenShift Service Mesh version 2.3.2

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.20.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.2

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.7 |
| Jaeger | 1.39 |
| Kiali | 1.57.6 |

2.2.35.21. New features Red Hat OpenShift Service Mesh version 2.3.1

This release of Red Hat OpenShift Service Mesh introduces new features, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.21.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3.1

| Component | Version |
|---|---|
| Istio | 1.14.5 |
| Envoy Proxy | 1.22.4 |
| Jaeger | 1.39 |
| Kiali | 1.57.5 |

2.2.35.22. New features Red Hat OpenShift Service Mesh version 2.3

This release of Red Hat OpenShift Service Mesh introduces new features, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.22.1. Component versions included in Red Hat OpenShift Service Mesh version 2.3

| Component | Version |
|---|---|
| Istio | 1.14.3 |
| Envoy Proxy | 1.22.4 |
| Jaeger | 1.38 |
| Kiali | 1.57.3 |

2.2.35.22.2. New Container Network Interface (CNI) DaemonSet container and ConfigMap

The openshift-operators namespace includes a new istio CNI DaemonSet istio-cni-node-v2-3 and a new ConfigMap resource, istio-cni-config-v2-3.

When upgrading to Service Mesh Control Plane 2.3, the existing istio-cni-node DaemonSet is not changed, and a new istio-cni-node-v2-3 DaemonSet is created.

This name change does not affect previous releases or any istio-cni-node CNI DaemonSet associated with a Service Mesh Control Plane deployed using a previous release.

2.2.35.22.3. Gateway injection support

This release introduces generally available support for gateway injection. Gateway configurations are applied to standalone Envoy proxies that run at the edge of the mesh, rather than to the sidecar Envoy proxies that run alongside your service workloads. This lets you customize gateway options. When using gateway injection, you must create the following resources in the namespace where you want to run your gateway proxy: Service, Deployment, Role, and RoleBinding.
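The Deployment and Service for an injected gateway might look like the sketch below, which follows the upstream Istio gateway injection pattern. The names, the `my-gateway-ns` namespace (assumed to be a mesh member), and the 8080 target port are hypothetical; the Role and RoleBinding are omitted for brevity.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: istio-ingressgateway            # hypothetical gateway name
  namespace: my-gateway-ns              # hypothetical namespace, assumed to be a mesh member
spec:
  selector:
    matchLabels:
      istio: ingressgateway
  template:
    metadata:
      annotations:
        inject.istio.io/templates: gateway  # use the gateway template instead of the sidecar template
      labels:
        istio: ingressgateway
        sidecar.istio.io/inject: "true"     # opt this pod into injection
    spec:
      containers:
        - name: istio-proxy
          image: auto                       # replaced with the proxy image at injection time
---
apiVersion: v1
kind: Service
metadata:
  name: istio-ingressgateway
  namespace: my-gateway-ns
spec:
  type: ClusterIP
  selector:
    istio: ingressgateway               # routes to the gateway pods above
  ports:
    - name: http
      port: 80
      targetPort: 8080                  # assumed non-privileged listener port
```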

2.2.35.22.4. Istio 1.14 Support

Service Mesh 2.3 is based on Istio 1.14, which brings in new features and product enhancements. While many Istio 1.14 features are supported, the following exceptions should be noted:

- ProxyConfig API is supported with the exception of the image field.

- Telemetry API is a Technology Preview feature.

- SPIRE runtime is not a supported feature.

2.2.35.22.5. OpenShift Service Mesh Console

OpenShift Service Mesh Console is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

This release introduces a Technology Preview version of the OpenShift Container Platform Service Mesh Console, which integrates the Kiali interface directly into the OpenShift web console. For additional information, see Introducing the OpenShift Service Mesh Console (A Technology Preview).

2.2.35.22.6. Cluster-wide deployment

Cluster-wide deployment is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

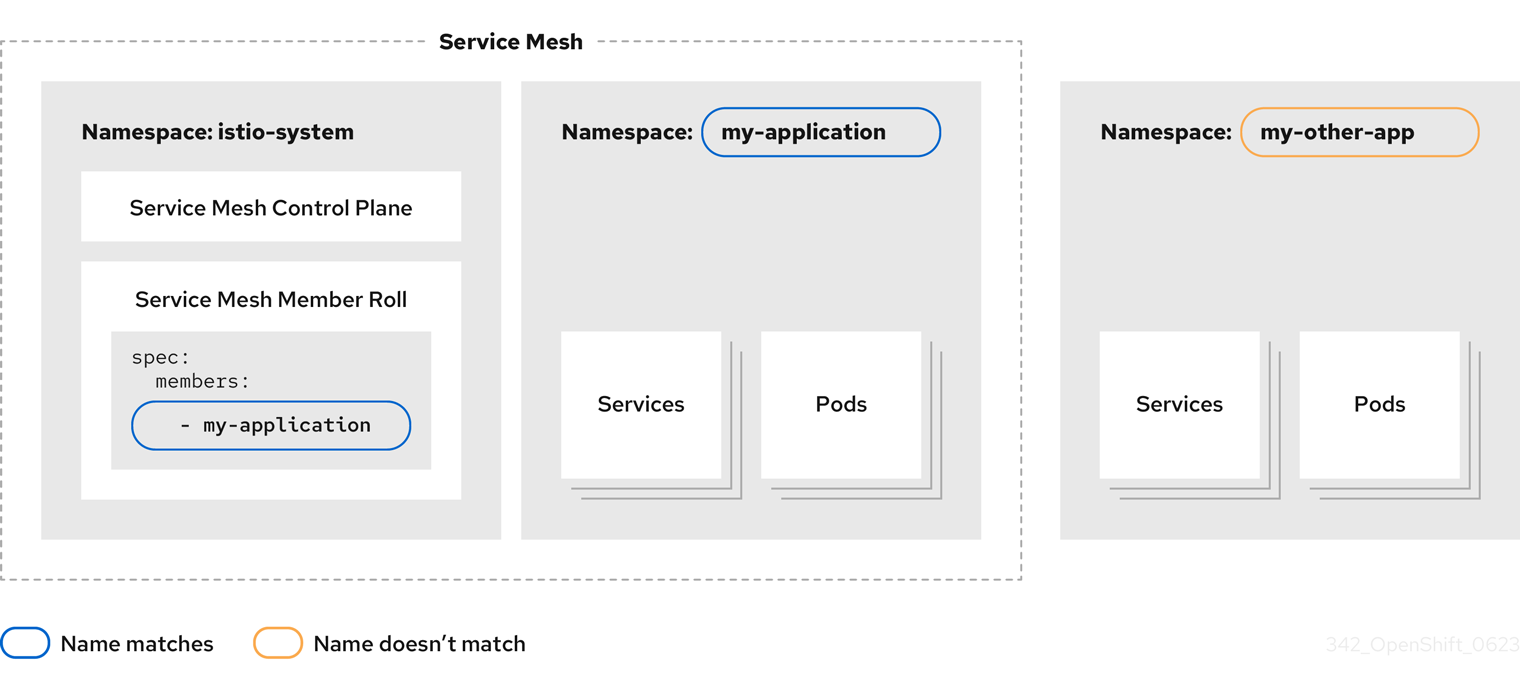

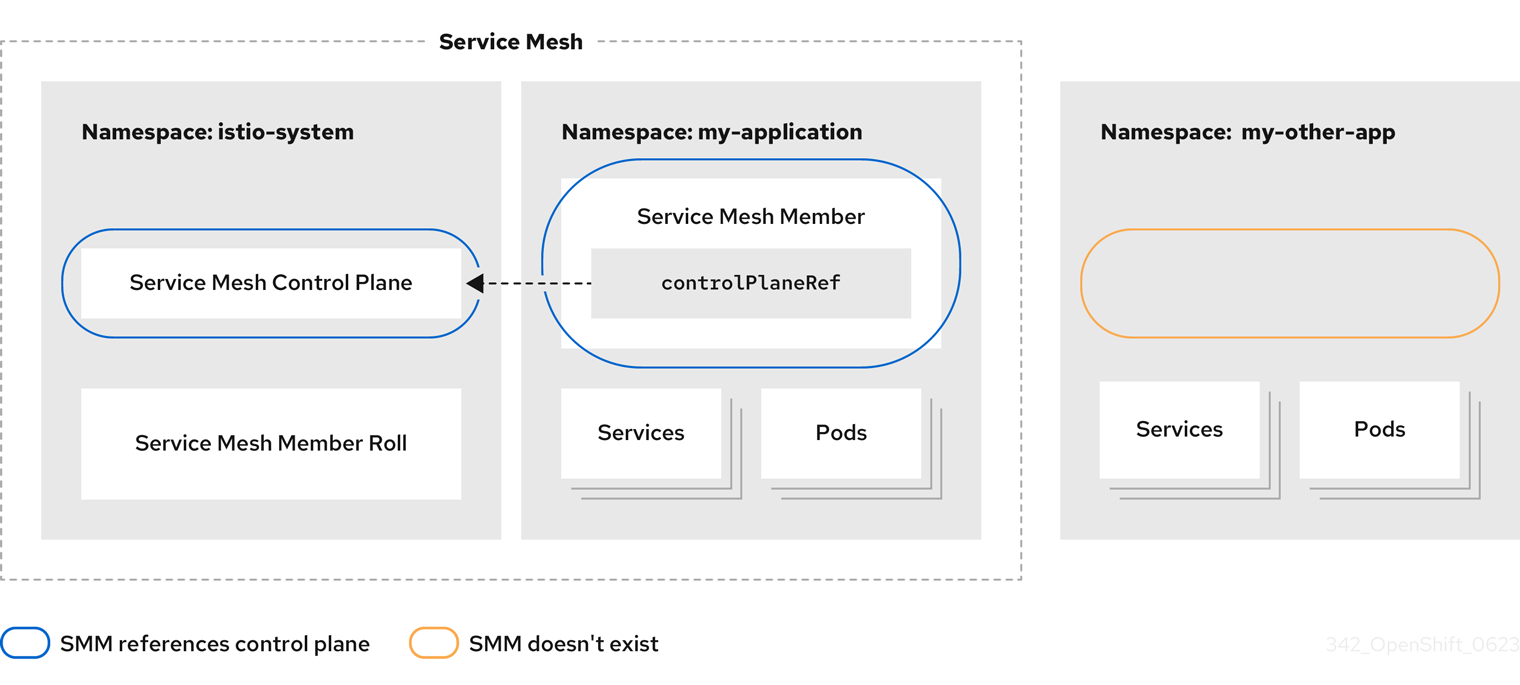

This release introduces cluster-wide deployment as a Technology Preview feature. A cluster-wide deployment contains a Service Mesh Control Plane that monitors resources for an entire cluster. The control plane uses a single query across all namespaces to monitor each Istio or Kubernetes resource kind that affects the mesh configuration. In contrast, the multitenant approach uses a query per namespace for each resource kind. Reducing the number of queries the control plane performs in a cluster-wide deployment improves performance.

This cluster-wide deployment documentation is only applicable for control planes deployed using SMCP v2.3. Cluster-wide deployments created using SMCP v2.3 are not compatible with cluster-wide deployments created using SMCP v2.4.

2.2.35.22.6.1. Configuring cluster-wide deployment

The following example ServiceMeshControlPlane object configures a cluster-wide deployment.

To create an SMCP for cluster-wide deployment, a user must belong to the cluster-admin ClusterRole. If the SMCP is configured for cluster-wide deployment, it must be the only SMCP in the cluster. You cannot change the control plane mode from multitenant to cluster-wide (or from cluster-wide to multitenant). If a multitenant control plane already exists, delete it and create a new one.

This example configures the SMCP for cluster-wide deployment. This configuration enables Istiod to monitor resources at the cluster level rather than monitoring each individual namespace.
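A minimal sketch of such an SMCP follows, written to a local file with a shell here-document. It assumes that SMCP v2.3 exposes cluster-wide mode as the Technology Preview field `spec.techPreview.controlPlaneMode: ClusterScoped`; verify the field against the documentation for your Operator version. The resource name `cluster-wide`, the `istio-system` namespace, and the file name are illustrative choices.

```shell
# Write a cluster-wide ServiceMeshControlPlane manifest to a local file.
# Assumption: SMCP v2.3 exposes cluster-wide mode under spec.techPreview.
# The name "cluster-wide" and the namespace "istio-system" are illustrative.
cat > smcp-cluster-wide.yaml <<'EOF'
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: cluster-wide
  namespace: istio-system
spec:
  version: v2.3
  techPreview:
    # Istiod monitors resources at the cluster level instead of per namespace.
    controlPlaneMode: ClusterScoped
EOF
```

As a user bound to the cluster-admin ClusterRole, confirm that `oc get smcp --all-namespaces` lists no other control plane, then create the resource with `oc apply -f smcp-cluster-wide.yaml`.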

The ServiceMeshMemberRoll (SMMR) must also be configured for cluster-wide deployment. This example configures the SMMR for cluster-wide deployment. This configuration adds all namespaces to the mesh, including any namespaces you create later. The following namespaces are not part of the mesh: kube, openshift, kube-*, and openshift-*.
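A corresponding sketch of the SMMR follows. It assumes that the wildcard member `'*'` is how a cluster-wide roll selects all namespaces, and that the roll uses the required name `default` in the control plane namespace (`istio-system` here, an illustrative choice).

```shell
# Write a ServiceMeshMemberRoll that adds every namespace to the mesh.
# The roll lives in the control plane namespace (istio-system is assumed).
cat > smmr-cluster-wide.yaml <<'EOF'
apiVersion: maistra.io/v1
kind: ServiceMeshMemberRoll
metadata:
  name: default
  namespace: istio-system
spec:
  members:
  # Wildcard: all namespaces, including ones created later.
  # kube, openshift, kube-* and openshift-* namespaces remain excluded.
  - '*'
EOF
```

Apply it with `oc apply -f smmr-cluster-wide.yaml` after the SMCP exists.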

2.2.35.23. New features Red Hat OpenShift Service Mesh version 2.2.12

This release of Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift Service Mesh Operator 2.4.5, addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.11 and later.

2.2.35.23.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.12

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.47.0 |
| Kiali | 1.48.11 |

2.2.35.24. New features Red Hat OpenShift Service Mesh version 2.2.11

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.11 and later versions.

2.2.35.24.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.11

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.47.0 |
| Kiali | 1.48.10 |

2.2.35.25. New features Red Hat OpenShift Service Mesh version 2.2.10

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.25.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.10

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.42.0 |
| Kiali | 1.48.8 |

2.2.35.26. New features Red Hat OpenShift Service Mesh version 2.2.9

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.26.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.9

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.42.0 |
| Kiali | 1.48.7 |

2.2.35.27. New features Red Hat OpenShift Service Mesh version 2.2.8

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.27.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.8

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.42.0 |
| Kiali | 1.48.7 |

2.2.35.28. New features Red Hat OpenShift Service Mesh version 2.2.7

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.10 and later versions.

2.2.35.28.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.7

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.42.0 |
| Kiali | 1.48.6 |

2.2.35.29. New features Red Hat OpenShift Service Mesh version 2.2.6

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.29.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.6

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.39 |
| Kiali | 1.48.5 |

2.2.35.30. New features Red Hat OpenShift Service Mesh version 2.2.5

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.30.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.5

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.39 |
| Kiali | 1.48.3 |

2.2.35.31. New features Red Hat OpenShift Service Mesh version 2.2.4

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.31.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.4

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.36.14 |
| Kiali | 1.48.3 |

2.2.35.32. New features Red Hat OpenShift Service Mesh version 2.2.3

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.32.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.3

| Component | Version |
|---|---|
| Istio | 1.12.9 |
| Envoy Proxy | 1.20.8 |
| Jaeger | 1.36 |
| Kiali | 1.48.3 |

2.2.35.33. New features Red Hat OpenShift Service Mesh version 2.2.2

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.33.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.2

| Component | Version |
|---|---|
| Istio | 1.12.7 |
| Envoy Proxy | 1.20.6 |
| Jaeger | 1.36 |
| Kiali | 1.48.2-1 |

2.2.35.33.2. Copy route labels

With this enhancement, in addition to copying annotations, you can copy specific labels for an OpenShift route. Red Hat OpenShift Service Mesh copies all labels and annotations present in the Istio Gateway resource (with the exception of annotations starting with kubectl.kubernetes.io) into the managed OpenShift Route resource.
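To illustrate, the following sketch writes an Istio Gateway whose metadata carries one label and one annotation. Assuming automatic OpenShift route management is enabled for the control plane, both would be copied to the managed route. All names, hosts, label keys, and annotation keys are hypothetical.

```shell
# Write an Istio Gateway whose labels and annotations should be copied
# to the OpenShift Route that Service Mesh manages for it.
# All names, hosts, labels, and annotation keys are hypothetical.
cat > gateway-with-labels.yaml <<'EOF'
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: bookinfo-gateway
  namespace: bookinfo
  labels:
    example.com/team: payments        # copied to the managed route
  annotations:
    example.com/owner: payments-team  # copied to the managed route
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - www.example.com
EOF
```

After you apply the file with `oc apply -f gateway-with-labels.yaml`, a command such as `oc get routes -n istio-system -l example.com/team=payments` should list the managed route, assuming the control plane and ingress gateway run in `istio-system`. The `kubectl.kubernetes.io/last-applied-configuration` annotation that `oc apply` adds to the Gateway is not copied, because it starts with `kubectl.kubernetes.io`.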

2.2.35.34. New features Red Hat OpenShift Service Mesh version 2.2.1

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.34.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2.1

| Component | Version |
|---|---|
| Istio | 1.12.7 |
| Envoy Proxy | 1.20.6 |
| Jaeger | 1.34.1 |
| Kiali | 1.48.2-1 |

2.2.35.35. New features Red Hat OpenShift Service Mesh 2.2

This release of Red Hat OpenShift Service Mesh adds new features and enhancements, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.35.1. Component versions included in Red Hat OpenShift Service Mesh version 2.2

| Component | Version |
|---|---|
| Istio | 1.12.7 |
| Envoy Proxy | 1.20.4 |
| Jaeger | 1.34.1 |
| Kiali | 1.48.0.16 |

2.2.35.35.2. WasmPlugin API

This release adds support for the WasmPlugin API and deprecates the ServiceMeshExtension API.
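As a sketch of the replacement API, the following writes a WasmPlugin resource that attaches a Wasm module to the ingress gateway. It assumes the `extensions.istio.io/v1alpha1` API version shipped with Istio 1.12. The module URL, resource name, namespace, and `pluginConfig` keys are hypothetical placeholders that depend on the module you deploy.

```shell
# Write a WasmPlugin manifest (the successor to the deprecated
# ServiceMeshExtension API). URL and pluginConfig keys are hypothetical.
cat > wasmplugin.yaml <<'EOF'
apiVersion: extensions.istio.io/v1alpha1
kind: WasmPlugin
metadata:
  name: example-filter
  namespace: istio-system
spec:
  # Apply the module only to workloads with this label (the ingress gateway).
  selector:
    matchLabels:
      istio: ingressgateway
  # OCI image that contains the compiled Wasm module.
  url: oci://registry.example.com/wasm/example-filter:1.0
  imagePullPolicy: IfNotPresent
  # Insert the filter in the authentication phase of the filter chain.
  phase: AUTHN
  # Module-specific configuration, passed to the module as-is.
  pluginConfig:
    example_setting: "enabled"
EOF
```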

2.2.35.35.3. ROSA support

This release introduces Service Mesh support for Red Hat OpenShift Service on AWS (ROSA), including multi-cluster federation.

2.2.35.35.4. istio-node DaemonSet renamed

In this release, the istio-node DaemonSet is renamed istio-cni-node to match the name in upstream Istio.

2.2.35.35.5. Envoy sidecar networking changes

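Istio 1.10 changed Envoy to forward traffic to the application container over eth0 rather than lo by default. As a result, an application that listens only on localhost no longer receives traffic through its sidecar.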

2.2.35.35.6. Service Mesh Control Plane 1.1

This release marks the end of support for Service Mesh Control Planes based on Service Mesh 1.1 for all platforms.

2.2.35.35.7. Istio 1.12 Support

Service Mesh 2.2 is based on Istio 1.12, which brings in new features and product enhancements. Although many Istio 1.12 features are supported, note the following exceptions:

- AuthPolicy Dry Run is a Technology Preview feature.

- gRPC Proxyless Service Mesh is a Technology Preview feature.

- Telemetry API is a Technology Preview feature.

- Discovery selectors are not supported.

- External control plane is not supported.

- Gateway injection is not supported.

2.2.35.35.8. Kubernetes Gateway API

Kubernetes Gateway API is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Kubernetes Gateway API is disabled by default. If the Kubernetes API deployment controller is disabled, you must manually deploy an ingress gateway and link it to the created Gateway object.

If the Kubernetes API deployment controller is enabled, then an ingress gateway automatically deploys when a Gateway object is created.

2.2.35.35.8.1. Installing the Gateway API CRDs

The Gateway API CRDs are not preinstalled on OpenShift clusters. Install the CRDs before you enable Gateway API support in the SMCP.

The following command installs the v0.4.0 Gateway API CRDs only if the gateways.gateway.networking.k8s.io CRD is not already present:

$ kubectl get crd gateways.gateway.networking.k8s.io || { kubectl kustomize "github.com/kubernetes-sigs/gateway-api/config/crd?ref=v0.4.0" | kubectl apply -f -; }

2.2.35.35.8.2. Enabling Kubernetes Gateway API

To enable the feature, set the Gateway API environment variables for the Istiod container in the ServiceMeshControlPlane.
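A sketch of the relevant SMCP fragment follows. It assumes the variable names used by upstream Istio 1.12 (`PILOT_ENABLE_GATEWAY_API`, `PILOT_ENABLE_GATEWAY_API_STATUS`, and, for automatic gateway deployment, `PILOT_ENABLE_GATEWAY_API_DEPLOYMENT_CONTROLLER`) and the `spec.runtime.components.pilot.container.env` path of the SMCP v2 API. Check both against your installed version.

```shell
# Write an SMCP fragment that enables Gateway API support in Istiod.
# Assumption: variable names follow upstream Istio 1.12.
cat > smcp-gateway-api-patch.yaml <<'EOF'
spec:
  runtime:
    components:
      pilot:
        container:
          env:
            PILOT_ENABLE_GATEWAY_API: "true"
            PILOT_ENABLE_GATEWAY_API_STATUS: "true"
            # Optional: deploy an ingress gateway automatically for each Gateway.
            PILOT_ENABLE_GATEWAY_API_DEPLOYMENT_CONTROLLER: "true"
EOF
```

One way to apply the fragment is a merge patch, for example `oc patch smcp basic -n istio-system --type merge -p "$(cat smcp-gateway-api-patch.yaml)"`, where `basic` and `istio-system` are placeholders for your SMCP name and namespace. Omit the deployment controller variable to keep the controller disabled and link gateways manually.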

You can restrict route attachment on Gateway API listeners by using the SameNamespace or All settings. Istio ignores label selectors in listeners.allowedRoutes.namespaces and falls back to the default behavior (SameNamespace).
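For illustration, the following writes a Gateway whose listener accepts routes from all namespaces. It assumes the `v1alpha2` API version installed by the v0.4.0 CRDs and the `istio` GatewayClass; in that schema the SameNamespace setting corresponds to the value `Same`. The name, namespace, and hostname are hypothetical.

```shell
# Write a Gateway API Gateway whose listener accepts routes from any namespace.
# "from: Same" would limit attachment to the Gateway's own namespace (the
# default); Istio ignores "from: Selector" label selectors.
cat > gateway-api-all-namespaces.yaml <<'EOF'
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: Gateway
metadata:
  name: example-gateway
  namespace: istio-ingress
spec:
  gatewayClassName: istio
  listeners:
  - name: http
    hostname: "*.example.com"
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: All
EOF
```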

2.2.35.35.8.3. Manually linking an existing gateway to a Gateway resource

If the Kubernetes API deployment controller is disabled, you must manually deploy and then link an ingress gateway to the created Gateway resource.
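A sketch of the linking step follows. It is based on the upstream Istio approach of naming the existing gateway Service in `spec.addresses` with type `Hostname`. The Service hostname `istio-ingressgateway.istio-system.svc.cluster.local` and the other names are assumptions; substitute the Service of the ingress gateway you deployed.

```shell
# Write a Gateway that links to an ingress gateway you deployed yourself
# by pointing spec.addresses at that gateway's Service hostname.
cat > gateway-manual-link.yaml <<'EOF'
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: Gateway
metadata:
  name: example-gateway
  namespace: istio-system
spec:
  gatewayClassName: istio
  addresses:
  # Hypothetical Service of the manually deployed ingress gateway.
  - type: Hostname
    value: istio-ingressgateway.istio-system.svc.cluster.local
  listeners:
  - name: http
    port: 80
    protocol: HTTP
EOF
```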

2.2.35.36. New features Red Hat OpenShift Service Mesh 2.1.6

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.36.1. Component versions included in Red Hat OpenShift Service Mesh version 2.1.6

| Component | Version |
|---|---|
| Istio | 1.9.9 |
| Envoy Proxy | 1.17.5 |
| Jaeger | 1.36 |
| Kiali | 1.36.16 |

2.2.35.37. New features Red Hat OpenShift Service Mesh 2.1.5.2

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs), contains bug fixes, and is supported on OpenShift Container Platform 4.9 and later versions.

2.2.35.37.1. Component versions included in Red Hat OpenShift Service Mesh version 2.1.5.2

| Component | Version |
|---|---|
| Istio | 1.9.9 |
| Envoy Proxy | 1.17.5 |
| Jaeger | 1.36 |
| Kiali | 1.24.17 |

2.2.35.38. New features Red Hat OpenShift Service Mesh 2.1.5.1