Hosted control planes

Using hosted control planes with OpenShift Container Platform

Abstract

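Chapter 1. Hosted control planes release notes

Release notes contain information about new and deprecated features, changes, and known issues.

With this release, hosted control planes for OpenShift Container Platform 4.17 is available. Hosted control planes for OpenShift Container Platform 4.17 supports multicluster engine for Kubernetes Operator version 2.7.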

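1.1.1. New features and enhancements

This release includes improvements in the following areas:

1.1.1.1. Custom taints and tolerations (Technology Preview)

You can now apply tolerations to hosted control plane pods by using the hcp CLI --tolerations argument or the hc.Spec.Tolerations API field. This feature is available as a Technology Preview feature. For more information, see Custom taints and tolerations.

1.1.1.2. NVIDIA GPU devices on hosted control planes for OpenShift Virtualization (Technology Preview)

For hosted control planes on OpenShift Virtualization, you can attach one or more NVIDIA graphics processing unit (GPU) devices to node pools. This feature is available as a Technology Preview feature. For more information, see Attaching NVIDIA GPU devices by using the hcp CLI and Attaching NVIDIA GPU devices by using the NodePool resource.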

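1.1.1.3. Support for tenancy on AWS

When you create a hosted cluster on AWS, you can specify whether the EC2 instances run on shared or single-tenant hardware. For more information, see Creating a hosted cluster on AWS.

1.1.1.4. Support for OpenShift Container Platform versions in hosted clusters

You can deploy a range of supported OpenShift Container Platform versions in a hosted cluster. For more information, see Supported OpenShift Container Platform versions in a hosted cluster.

1.1.1.5. Hosted control planes on OpenShift Virtualization in a disconnected environment is Generally Available

In this release, hosted control planes on OpenShift Virtualization in a disconnected environment is Generally Available. For more information, see Deploying hosted control planes on OpenShift Virtualization in a disconnected environment.

1.1.1.6. Hosted control planes for ARM64 OpenShift Container Platform clusters on AWS is Generally Available

In this release, hosted control planes for ARM64 OpenShift Container Platform clusters on AWS is Generally Available. For more information, see Running hosted clusters on an ARM64 architecture.

1.1.1.7. Hosted control planes on IBM Z is Generally Available

In this release, hosted control planes on IBM Z is Generally Available. For more information, see Deploying hosted control planes on IBM Z.

1.1.1.8. Hosted control planes on IBM Power is Generally Available

In this release, hosted control planes on IBM Power is Generally Available. For more information, see Deploying hosted control planes on IBM Power.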

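1.1.2. Bug fixes

- Previously, when you configured the hosted cluster proxy and used an identity provider (IDP) with an HTTP or HTTPS endpoint, the hostname of the IDP was not resolved before the traffic was sent through the proxy. Consequently, hostnames that only the data plane could resolve failed to resolve for IDPs. With this update, a DNS lookup is performed before IDP traffic is sent through the konnectivity tunnel. As a result, the Control Plane Operator can verify IDPs whose hostnames only the data plane can resolve. (OCPBUGS-41371)

- Previously, when the hosted cluster controllerAvailabilityPolicy was set to SingleReplica, podAntiAffinity on networking components blocked the availability of the components. With this release, the issue is resolved. (OCPBUGS-39313)

- Previously, the AdditionalTrustedCA that was specified in the hosted cluster image configuration was not reconciled into the openshift-config namespace, as the image-registry-operator expects, and the component did not become available. With this release, the issue is resolved. (OCPBUGS-39225)

- Previously, Red Hat HyperShift periodic conformance jobs failed because of changes to the core operating system. These failed jobs caused the OpenShift API deployment to fail. With this release, an update recursively copies individual trusted certificate authority (CA) certificates instead of copying a single file, so the periodic conformance jobs succeed and the OpenShift API runs as expected. (OCPBUGS-38941)

- Previously, the Konnectivity proxy agent in a hosted cluster always sent all TCP traffic through an HTTP/S proxy. It also ignored hostnames in the NO_PROXY configuration because it only received resolved IP addresses in its traffic. As a consequence, traffic that was not meant to be proxied, such as LDAP traffic, was proxied regardless of the configuration. With this release, proxying is done at the source (the control plane), and the proxy configuration in the Konnectivity agent is removed. As a result, traffic that is not meant to be proxied, such as LDAP traffic, is no longer proxied, and a NO_PROXY configuration that includes hostnames is honored. (OCPBUGS-38637)

- Previously, the azure-disk-csi-driver-controller image did not get the appropriate override values when you used registryOverride. This was intentional, to avoid propagating the values to the azure-disk-csi-driver data plane images. With this update, the issue is resolved by adding a separate image override value. As a result, the azure-disk-csi-driver-controller can be used with registryOverride and no longer affects the azure-disk-csi-driver data plane images. (OCPBUGS-38183)

- Previously, the AWS cloud controller manager in a hosted control plane that runs on a proxied management cluster did not use the proxy for cloud API communication. With this release, the issue is fixed. (OCPBUGS-37832)

- Previously, proxying for Operators that run in the control plane of a hosted cluster was performed through the proxy settings on the Konnectivity agent pod that runs in the data plane. It was not possible to decide whether proxying was needed based on the application protocol. For parity with OpenShift Container Platform, IDP communication over HTTPS or HTTP should be proxied, but LDAP communication should not. This type of proxying also ignored NO_PROXY entries that rely on hostnames, because by the time traffic reached the Konnectivity agent, only the destination IP address was available. With this release, in hosted clusters, the proxy is invoked in the control plane through konnectivity-https-proxy and konnectivity-socks5-proxy, and the Konnectivity agent no longer proxies traffic. As a result, traffic destined for LDAP servers is no longer proxied, other HTTPS or HTTP traffic is proxied correctly, and the NO_PROXY setting is honored when you specify hostnames. (OCPBUGS-37052)

- Previously, proxying for IDP communication occurred in the Konnectivity agent. By the time traffic reached Konnectivity, its protocol and hostname were no longer available. As a consequence, proxying was not done correctly for the OAuth server pod: it did not distinguish between protocols that require proxying (http/s) and protocols that do not (ldap://). In addition, it did not honor the no_proxy variable that is configured in the HostedCluster.spec.configuration.proxy spec. With this release, you can configure the proxy on the Konnectivity sidecar of the OAuth server so that traffic is routed appropriately and your no_proxy settings are honored. As a result, the OAuth server can communicate properly with identity providers when a proxy is configured for the hosted cluster. (OCPBUGS-36932)

- Previously, the Hosted Cluster Config Operator (HCCO) did not delete the ImageDigestMirrorSet CR (IDMS) after you removed the ImageContentSources field from the HostedCluster object. As a consequence, the IDMS persisted when it should not have. With this release, the HCCO manages the deletion of IDMS resources when they are removed from the HostedCluster object. (OCPBUGS-34820)

- Previously, deploying a hostedCluster in a disconnected environment required setting the hypershift.openshift.io/control-plane-operator-image annotation. With this update, the annotation is no longer needed. Additionally, the metadata inspector works as expected during the hosted Operator reconciliation, and OverrideImages is populated as expected. (OCPBUGS-34734)

- Previously, hosted clusters on AWS used the primary CIDR range of their VPC to generate security group rules on the data plane. As a consequence, if you installed a hosted cluster into an AWS VPC with multiple CIDR ranges, the generated security group rules could be insufficient. With this update, security group rules are generated based on the provided machine CIDR ranges, which resolves this issue. (OCPBUGS-34274)

- Previously, the OpenShift Cluster Manager container did not have the right TLS certificates. As a consequence, you could not use image streams in disconnected deployments. With this release, the TLS certificates are added as projected volumes, which resolves this issue. (OCPBUGS-31446)

- Previously, the bulk destroy option in the multicluster engine for Kubernetes Operator console for OpenShift Virtualization did not destroy a hosted cluster. With this release, this issue is resolved. (ACM-10165)

- Previously, updates to the additionalTrustBundle parameter in the hosted control planes cluster configuration were not applied to compute nodes. With this release, updates to the additionalTrustBundle parameter are automatically applied to the existing compute nodes in hosted control planes clusters. If you update to a version that contains this fix, an automatic rollout is initiated for existing nodes. (OCPBUGS-36680)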

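1.1.3. Known issues

- If the annotation and the ManagedCluster resource name do not match, the multicluster engine for Kubernetes Operator console displays the cluster as Pending import, and the multicluster engine Operator cannot use the cluster. The same issue happens when there is no annotation and the ManagedCluster name does not match the Infra-ID value of the HostedCluster resource.

- When you use the multicluster engine for Kubernetes Operator console to add a new node pool to an existing hosted cluster, the same version of OpenShift Container Platform might appear more than once in the list of options. You can select any instance in the list for the version that you want.

- When a node pool is scaled down to 0 workers, the list of hosts in the console still shows nodes in a Ready state. You can verify the number of nodes in two ways:
  - In the console, go to the node pool and verify that it has 0 nodes.
  - On the command-line interface, run the following commands:
    - Verify that 0 nodes are in the node pool by running the following command:

      $ oc get nodepool -A

    - Verify that 0 nodes are in the cluster by running the following command:

      $ oc get nodes --kubeconfig

    - Verify that 0 agents are reported as bound to the cluster by running the following command:

      $ oc get agents -A

- When you create a hosted cluster in an environment that uses the dual-stack network, you might encounter the following DNS-related issues:
  - CrashLoopBackOff state in the service-ca-operator pod: When the pod tries to reach the Kubernetes API server through the hosted control plane, the pod cannot reach the server because the data plane proxy in the kube-system namespace cannot resolve the request. This issue occurs because the front end uses an IP address and the back end uses a DNS name that the pod cannot resolve.
  - Pods stuck in the ContainerCreating state: This issue occurs because the openshift-service-ca-operator cannot generate the metrics-tls secret that the DNS pods need for DNS resolution. As a result, the pods cannot resolve the Kubernetes API server.

  To resolve these issues, configure the DNS server settings for a dual-stack network.

- On the Agent platform, the hosted control planes feature periodically rotates the token that the Agent uses to pull ignition. As a result, if you have an Agent resource that was created some time ago, it might fail to pull ignition. As a workaround, in the Agent specification, delete the secret of the IgnitionEndpointTokenReference property, then add or modify any label on the Agent resource. The system re-creates the secret with the new token.

- If you created a hosted cluster in the same namespace as its managed cluster, detaching the managed cluster deletes everything in the managed cluster namespace, including the hosted cluster. The following situations create a hosted cluster in the same namespace as its managed cluster:
  - You created a hosted cluster on the Agent platform through the multicluster engine for Kubernetes Operator console by using the default hosted cluster namespace.
  - You created a hosted cluster through the command-line interface or API and specified a hosted cluster namespace that is the same as the hosted cluster name.

- If you configure a cluster-wide proxy for a new hosted cluster, the deployment of that cluster might fail, because worker nodes cannot reach the Kubernetes API server when a cluster-wide proxy is configured. To work around this issue, in the configuration file for the hosted cluster, add any of the following information to the noProxy field so that traffic from the data plane skips the proxy:
  - The external API address.
  - The internal API address. The default is 172.20.0.1.
  - The word kubernetes.
  - The service network CIDR.
  - The cluster network CIDR.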
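To make the noProxy workaround concrete, the following Python sketch assembles the listed entries into one value. It assumes that the field takes a comma-separated string, as the cluster-wide proxy configuration of OpenShift Container Platform does. The external API address is a made-up placeholder, and the two CIDRs simply reuse the ranges from "CIDR ranges for hosted control planes"; substitute the values of your own hosted cluster.

```python
def build_no_proxy(external_api: str, service_cidr: str, cluster_cidr: str,
                   internal_api: str = "172.20.0.1") -> str:
    """Join the entries that let data-plane traffic bypass the proxy.

    Empty entries and duplicates are dropped; the original order is kept.
    """
    entries = [external_api, internal_api, "kubernetes", service_cidr, cluster_cidr]
    unique: list[str] = []
    for entry in entries:
        if entry and entry not in unique:
            unique.append(entry)
    return ",".join(unique)


# Placeholder values for illustration only.
no_proxy = build_no_proxy(
    external_api="api.example.hosted.test",
    service_cidr="172.31.0.0/16",
    cluster_cidr="10.132.0.0/14",
)
print(no_proxy)
# api.example.hosted.test,172.20.0.1,kubernetes,172.31.0.0/16,10.132.0.0/14
```

Building the value in one place keeps the order stable and avoids duplicate entries when the service and cluster networks are supplied from other configuration.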

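1.1.4. Generally Available and Technology Preview features

Generally Available (GA) features are fully supported and are suitable for production use. Technology Preview (TP) features are experimental features and are not intended for production use. For more information about TP features, see the scope of support on the Red Hat Customer Portal.

For IBM Power and IBM Z, you must run the control plane on machine types based on the 64-bit x86 architecture, and the node pools on IBM Power or IBM Z.

See the following table to learn about hosted control planes GA and TP features:

| Feature | 4.15 | 4.16 | 4.17 |
|---|---|---|---|
| Hosted control planes for OpenShift Container Platform on Amazon Web Services (AWS) | Technology Preview | Generally Available | Generally Available |
| Hosted control planes for OpenShift Container Platform on bare metal | Generally Available | Generally Available | Generally Available |
| Hosted control planes for OpenShift Container Platform on OpenShift Virtualization | Generally Available | Generally Available | Generally Available |
| Hosted control planes for OpenShift Container Platform by using non-bare-metal agent machines | Technology Preview | Technology Preview | Technology Preview |
| Hosted control planes for ARM64 OpenShift Container Platform clusters on Amazon Web Services | Technology Preview | Technology Preview | Generally Available |
| Hosted control planes for OpenShift Container Platform on IBM Power | Technology Preview | Technology Preview | Generally Available |
| Hosted control planes for OpenShift Container Platform on IBM Z | Technology Preview | Technology Preview | Generally Available |
| Hosted control planes for OpenShift Container Platform on RHOSP | Not Available | Not Available | Developer Preview |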

第 2 章 托管 control plane 概述

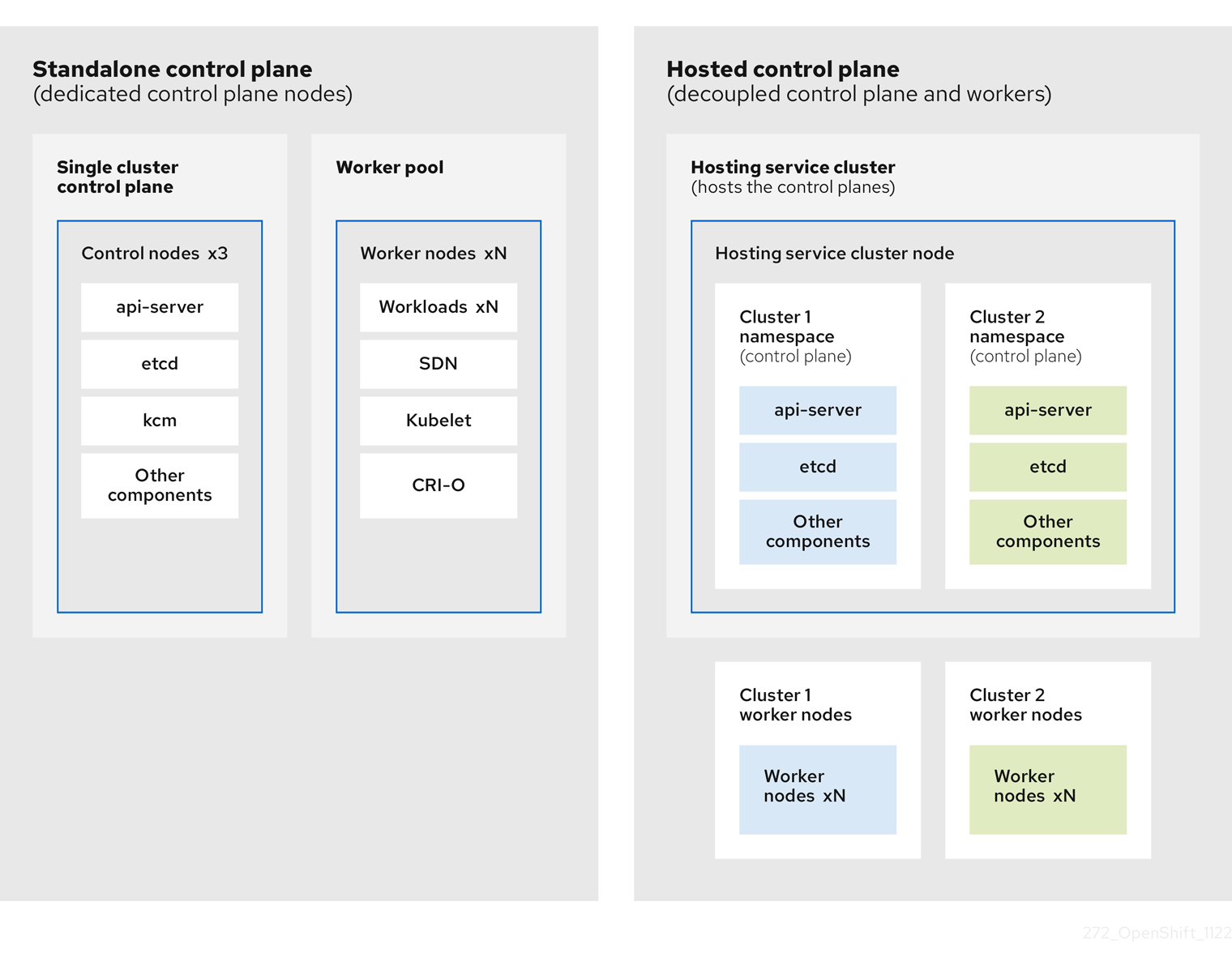

您可以使用两个不同的 control plane 配置部署 OpenShift Container Platform 集群:独立或托管的 control plane。独立配置使用专用虚拟机或物理机器来托管 control plane。通过为 OpenShift Container Platform 托管 control plane,您可以在托管集群中创建 pod 作为 control plane,而无需为每个 control plane 使用专用虚拟机或物理机器。

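2.1. Introduction to hosted control planes

You can use hosted control planes with a supported version of multicluster engine for Kubernetes Operator on the following platforms:

- Bare metal by using the Agent provider
- Non-bare-metal agent machines, as a Technology Preview feature
- OpenShift Virtualization
- Amazon Web Services (AWS)
- IBM Z
- IBM Power

The hosted control planes feature is enabled by default.

The multicluster engine Operator is an integral part of Red Hat Advanced Cluster Management (RHACM) and is enabled by default with RHACM. However, you do not need RHACM to use hosted control planes.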

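2.1.1. Architecture of hosted control planes

OpenShift Container Platform is often deployed in a coupled, or standalone, model, where a cluster consists of a control plane and a data plane. The control plane includes an API endpoint, a storage endpoint, a workload scheduler, and an actuator that ensures state. The data plane includes the compute, storage, and networking where workloads run.

The standalone control plane is hosted by a dedicated group of nodes, which can be physical or virtual, with a minimum number of nodes to ensure quorum. The network stack is shared. Administrator access to a cluster offers visibility into the cluster's control plane, machine management APIs, and other components that contribute to the state of the cluster.

Although the standalone model works well, some situations require an architecture where the control plane and the data plane are decoupled. In those cases, the data plane is on a separate network domain with a dedicated physical hosting environment. The control plane is hosted by using high-level primitives that are native to Kubernetes, such as deployments and stateful sets. The control plane is treated like any other workload.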

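2.1.2. Benefits of hosted control planes

With hosted control planes, you can pave the way for a true hybrid-cloud approach and enjoy several other benefits:

- The security boundaries between management and workloads are stronger, because the control plane is decoupled and hosted on a dedicated hosting service cluster. As a result, you are less likely to leak credentials for clusters to other users. Because infrastructure secret account management is also decoupled, cluster infrastructure administrators cannot accidentally delete control plane infrastructure.
- With hosted control planes, you can run many control planes on fewer nodes. As a result, clusters are more affordable.
- Because the control planes consist of pods that are launched on OpenShift Container Platform, control planes start quickly. The same principles apply to control planes and to workloads such as monitoring, logging, and auto-scaling.
- From an infrastructure perspective, you can push registries, HAProxy, cluster monitoring, storage nodes, and other infrastructure components to the tenant's cloud provider account, which isolates usage to the tenant.
- From an operational perspective, multicluster management is more centralized, which results in fewer external factors that affect the cluster status and consistency. Site reliability engineers have a central place to debug issues and navigate to the cluster data plane, which can lead to a shorter Time to Resolution (TTR) and greater productivity.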

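2.2. Differences between hosted control planes and OpenShift Container Platform

Hosted control planes is a form factor of OpenShift Container Platform. Hosted clusters and standalone OpenShift Container Platform clusters are configured and managed differently. See the following tables to understand the differences between OpenShift Container Platform and hosted control planes: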

2.2.1. Cluster creation and lifecycle

| OpenShift Container Platform | Hosted control planes |
|---|---|
| You can use … | In an existing OpenShift Container Platform cluster, you can use … |

2.2.2. Cluster configuration

| OpenShift Container Platform | Hosted control planes |
|---|---|
| You can use … | You can configure … that affect … |

2.2.3. etcd encryption

| OpenShift Container Platform | Hosted control planes |
|---|---|
| You can use … with AES-GCM or AES-CBC … | You can use … with AES-CBC or KMS … |

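2.2.4. Operators and control plane

| OpenShift Container Platform | Hosted control planes |
|---|---|
| A standalone OpenShift Container Platform cluster contains separate Operators for each control plane component. | A hosted cluster contains a single Operator named Control Plane Operator that runs in the hosted control plane namespace on the management cluster. |
| etcd uses storage that is mounted on the control plane nodes. The etcd cluster Operator manages etcd. | etcd uses a persistent volume claim for storage and is managed by the Control Plane Operator. |
| The Ingress Operator, network-related Operators, and Operator Lifecycle Manager (OLM) run on the cluster. | The Ingress Operator, network-related Operators, and Operator Lifecycle Manager (OLM) run in the hosted control plane namespace on the management cluster. |
| The OAuth server runs inside the cluster and is exposed through a route in the cluster. | The OAuth server runs inside the control plane and is exposed through a route, node port, or load balancer on the management cluster. |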

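2.2.5. Updates

| OpenShift Container Platform | Hosted control planes |
|---|---|
| The Cluster Version Operator (CVO) orchestrates the update process and monitors … | A hosted control planes update results in a change to … |
| After you update an OpenShift Container Platform cluster, both the control plane and the compute machines are updated. | After you update the hosted cluster, only the control plane is updated. You perform node pool updates separately. |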

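2.2.6. Machine configuration and management

| OpenShift Container Platform | Hosted control planes |
|---|---|
| A set of control plane machines exists. | A set of control plane machines does not exist. |
| You can use … | You can … through … |
| You can use … | You can … through … |
| Machines and machine sets are exposed in the cluster. | Machines, machine sets, and machine deployments from the upstream Cluster CAPI Operator are used to manage machines, but they are not exposed to the user. |
| All machine sets are upgraded automatically when you update the cluster. | You can update your node pools independently of the hosted cluster. |
| Only in-place upgrades are supported in the cluster. | Both replace and in-place upgrades are supported in hosted clusters. |
| The Machine Config Operator manages configurations for machines. | The Machine Config Operator does not exist in hosted control planes. |
| You can use … from … | You can … through … |
| The Machine Config Daemon (MCD) manages configuration changes and updates on each node. | For an in-place upgrade, the node pool controller creates a run-once pod that updates a machine based on your configuration. |
| You can modify machine configuration resources, such as the SR-IOV Operator. | You cannot modify machine configuration resources. |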

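2.2.7. Networking

| OpenShift Container Platform | Hosted control planes |
|---|---|
| The Kube API server communicates with nodes directly, because the Kube API server and the nodes exist in the same Virtual Private Cloud (VPC). | The Kube API server communicates with nodes through Konnectivity. The Kube API server and the nodes exist in different Virtual Private Clouds (VPCs). |
| Nodes communicate with the Kube API server through the internal load balancer. | Nodes communicate with the Kube API server through an external load balancer or a node port. |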

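2.2.8. Web console

| OpenShift Container Platform | Hosted control planes |
|---|---|
| The web console shows the status of the control plane. | The web console does not show the status of the control plane. |
| You can update your cluster by using the web console. | You cannot update the hosted cluster by using the web console. |
| The web console displays infrastructure resources, such as machines. | The web console does not display infrastructure resources. |
| You can use the web console through … | You cannot configure machines by using the web console. |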

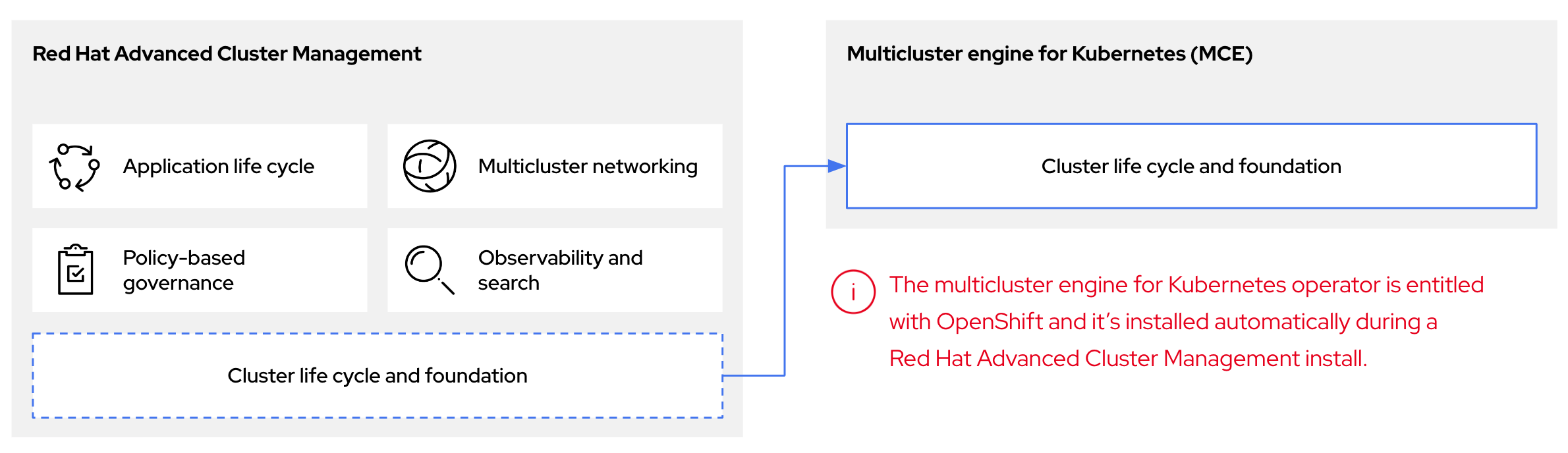

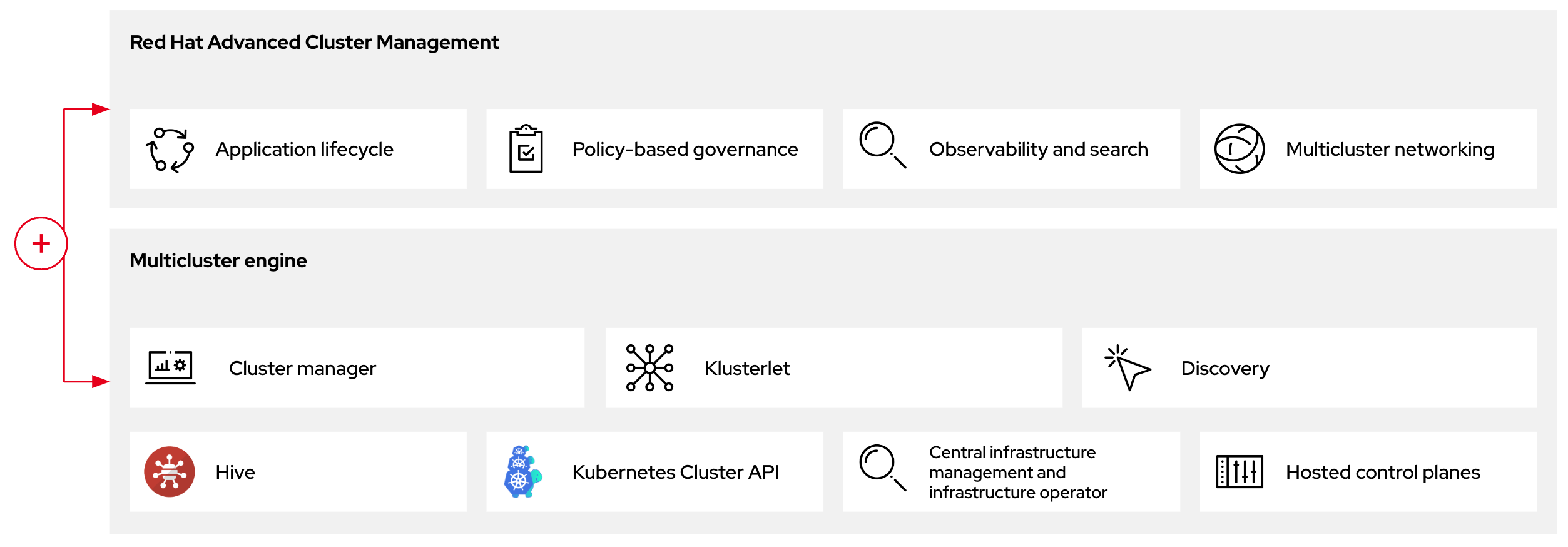

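2.3. Relationship between hosted control planes, multicluster engine Operator, and RHACM

You can configure hosted control planes by using the multicluster engine for Kubernetes Operator. The multicluster engine Operator cluster lifecycle defines the process of creating, importing, managing, and destroying Kubernetes clusters across various infrastructure cloud providers, private clouds, and on-premises data centers.

The multicluster engine Operator is an integral part of Red Hat Advanced Cluster Management (RHACM) and is enabled by default with RHACM. However, you do not need RHACM to use hosted control planes.

The multicluster engine Operator is the cluster lifecycle Operator that provides cluster management capabilities for OpenShift Container Platform and RHACM hub clusters. The multicluster engine Operator enhances cluster management capabilities and supports OpenShift Container Platform cluster lifecycle management across clouds and data centers.

Figure 2.1. Cluster lifecycle and foundation

You can use the multicluster engine Operator with OpenShift Container Platform as a standalone cluster manager or as part of an RHACM hub cluster.

A management cluster is also known as the hosting cluster.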


Figure 2.2. RHACM and multicluster engine Operator introduction diagram

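2.3.1. Discovering multicluster engine Operator hosted clusters in RHACM

If you want to bring hosted clusters to a Red Hat Advanced Cluster Management (RHACM) hub cluster to manage them with RHACM management components, see the instructions in the official Red Hat Advanced Cluster Management documentation.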

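2.4. Versioning for hosted control planes

The hosted control planes feature includes the following components, which might require independent versioning and support levels:

- Management cluster
- HyperShift Operator
- Hosted control planes (hcp) command-line interface (CLI)
- hypershift.openshift.io API
- Control Plane Operator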

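2.4.1. Management cluster

In management clusters for production use, you need the multicluster engine for Kubernetes Operator, which is available through OperatorHub. The multicluster engine Operator bundles a supported build of the HyperShift Operator. For your management clusters to remain supported, you must use a version of OpenShift Container Platform that the multicluster engine Operator runs on. In general, a new release of the multicluster engine Operator runs on the following versions of OpenShift Container Platform:

- The latest Generally Available version of OpenShift Container Platform
- Two versions before the latest Generally Available version of OpenShift Container Platform

The full list of OpenShift Container Platform versions that you can install through the HyperShift Operator on a management cluster depends on the version of your HyperShift Operator. However, the list always includes at least the same OpenShift Container Platform version as the management cluster and two previous minor versions relative to the management cluster. For example, if the management cluster is running 4.17 and a supported version of the multicluster engine Operator, the HyperShift Operator can install 4.17, 4.16, 4.15, and 4.14 hosted clusters.

With each major, minor, or patch version release of OpenShift Container Platform, two components of hosted control planes are released:

- The HyperShift Operator
- The hcp command-line interface (CLI)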

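2.4.2. HyperShift Operator

The HyperShift Operator manages the lifecycle of hosted clusters that are represented by HostedCluster API resources. The HyperShift Operator is released with each OpenShift Container Platform release. The HyperShift Operator creates the supported-versions config map in the hypershift namespace. The config map contains the supported hosted cluster versions.

You can host different versions of control planes on the same management cluster.

Example supported-versions config map object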

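2.4.3. Hosted control planes CLI

You can use the hcp CLI to create hosted clusters. You can download the CLI from the multicluster engine Operator. When you run the hcp version command, the output shows the latest OpenShift Container Platform version that the CLI supports against your kubeconfig file.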

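2.4.4. hypershift.openshift.io API

You can use hypershift.openshift.io API resources, such as HostedCluster and NodePool, to create and manage OpenShift Container Platform clusters at scale. A HostedCluster resource contains the control plane and the common data plane configuration. When you create a HostedCluster resource, you have a fully functional control plane with no attached nodes. A NodePool resource is a scalable set of worker nodes that is attached to a HostedCluster resource.

The API version policy generally aligns with the policy for Kubernetes API versioning.

Updates for hosted control planes involve updating the hosted cluster and the node pools. For more information, see "Updates for hosted control planes".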

2.4.5. Control Plane Operator

The Control Plane Operator is released as part of each OpenShift Container Platform payload release image for the following architectures:

- amd64
- arm64
- multi-arch

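2.5. Glossary of common concepts and personas for hosted control planes

When you use hosted control planes for OpenShift Container Platform, it is important to understand its key concepts and the personas that are involved.

2.5.1. Concepts

- data plane: The part of the cluster that includes the compute, storage, and networking where workloads run.
- hosted cluster: An OpenShift Container Platform cluster whose control plane and API endpoint are hosted on a management cluster. The hosted cluster includes the control plane and its corresponding data plane.
- hosted cluster infrastructure: Network, compute, and storage resources that exist in the tenant or end-user cloud account.
- hosted control plane: An OpenShift Container Platform control plane that runs on the management cluster and is exposed by the API endpoint of a hosted cluster. The components of a control plane include etcd, the Kubernetes API server, the Kubernetes controller manager, and a VPN.
- hosting cluster: See management cluster.
- managed cluster: A cluster that the hub cluster manages. This term is specific to the cluster lifecycle that the multicluster engine for Kubernetes Operator manages in Red Hat Advanced Cluster Management. A managed cluster is not the same thing as a management cluster. For more information, see Managed cluster.
- management cluster: An OpenShift Container Platform cluster where the HyperShift Operator is deployed and where the control planes for hosted clusters are hosted. The management cluster is synonymous with the hosting cluster.
- management cluster infrastructure: Network, compute, and storage resources of the management cluster.
- node pool: A resource that manages a set of compute nodes that are associated with a hosted cluster. The compute nodes run applications and workloads within the hosted cluster.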

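2.5.2. Personas

- cluster instance administrator: Users who assume this role are the equivalent of administrators in standalone OpenShift Container Platform. This user has the cluster-admin role in the provisioned cluster, but might not have power over when or how the cluster is updated or configured. This user might have read-only access to see some configuration that is projected into the cluster.
- cluster instance user: Users who assume this role are the equivalent of developers in standalone OpenShift Container Platform. This user does not have a view into OperatorHub or machines.
- cluster service consumer: Users who assume this role can request control planes and worker nodes, drive updates, or modify externalized configurations. Typically, this user does not manage or access cloud credentials or infrastructure encryption keys. The cluster service consumer persona can request hosted clusters and interact with node pools. Users who assume this role have RBAC to create, read, update, or delete hosted clusters and node pools within a logical boundary.
- cluster service provider: Users who assume this role typically have the cluster-admin role on the management cluster and have RBAC to monitor and own the availability of the HyperShift Operator as well as the control planes for the tenant's hosted clusters. The cluster service provider persona is responsible for several activities, including the following examples:
  - Owning service-level objectives for control plane availability, uptime, and stability
  - Configuring the cloud account for the management cluster to host control planes
  - Configuring the user-provisioned infrastructure, which includes the host awareness of available compute resources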

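Chapter 3. Preparing to deploy hosted control planes

3.1. Requirements for hosted control planes

In the context of hosted control planes, a management cluster is an OpenShift Container Platform cluster where the HyperShift Operator is deployed and where the control planes for hosted clusters are hosted.

The control plane is associated with a hosted cluster and runs as pods in a single namespace. When the cluster service consumer creates a hosted cluster, it creates a worker node that is independent of the control plane.

The following requirements apply to hosted control planes:

- To run the HyperShift Operator, your management cluster needs at least three worker nodes.
- You can run both the management cluster and the worker nodes on-premise, such as on a bare-metal platform or on OpenShift Virtualization. In addition, you can run both the management cluster and the worker nodes on cloud infrastructure, such as Amazon Web Services (AWS).
- If you use a mixed infrastructure, such as running the management cluster on AWS and your worker nodes on-premise, or running your worker nodes on AWS and your management cluster on-premise, you must use the PublicAndPrivate publishing strategy and follow the latency requirements in the support matrix.
- In Bare Metal Host (BMH) deployments, where machines are started through BMH resources, the hosted control plane must be able to reach the baseboard management controllers (BMCs). If your security profile does not permit the Cluster Baremetal Operator to access the network where the BMHs have their BMCs, which is required to enable Redfish automation, you can use BYO ISO support. However, in BYO mode, OpenShift Container Platform cannot automate powering on the BMHs.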

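3.1.1. Support matrix for hosted control planes

Because the multicluster engine for Kubernetes Operator includes the HyperShift Operator, releases of hosted control planes align with releases of the multicluster engine Operator. For more information, see OpenShift Operator Life Cycles.

3.1.1.1. Management cluster support

Any supported standalone OpenShift Container Platform cluster can be a management cluster.

A single-node OpenShift Container Platform cluster is not supported as a management cluster. If you have resource constraints, you can share infrastructure between a standalone OpenShift Container Platform control plane and hosted control planes. For more information, see "Shared infrastructure between hosted and standalone control planes".

The following table maps multicluster engine Operator versions to the management cluster versions that support them:

| Management cluster version | Supported multicluster engine Operator version |
|---|---|
| 4.14 - 4.15 | 2.4 |
| 4.14 - 4.16 | 2.5 |
| 4.14 - 4.17 | 2.6 |
| 4.15 - 4.17 | 2.7 |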

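3.1.1.2. Hosted cluster support

For hosted clusters, no direct relationship exists between the management cluster version and the hosted cluster version. The hosted cluster version depends on the HyperShift Operator that is included with your multicluster engine Operator version.

Ensure a maximum latency of 200 ms between the management cluster and hosted clusters. This requirement is especially important for mixed infrastructure deployments, such as when your management cluster is on AWS and your worker nodes are on-premise.

The following table maps multicluster engine Operator versions to the hosted cluster versions that you can create by using the HyperShift Operator that is associated with that multicluster engine Operator version:

| Hosted cluster version | multicluster engine Operator 2.4 | multicluster engine Operator 2.5 | multicluster engine Operator 2.6 | multicluster engine Operator 2.7 |
|---|---|---|---|---|
| 4.14 | Yes | Yes | Yes | Yes |
| 4.15 | No | Yes | Yes | Yes |
| 4.16 | No | No | Yes | Yes |
| 4.17 | No | No | No | Yes |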
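When you plan a deployment, you can check a combination against both this table and the table in "Management cluster support" at once. The following Python sketch is one way to do that; the two dictionaries are transcribed by hand from those tables, and the helper name can_create_hosted_cluster is made up for this illustration. Platform-specific limits from "Hosted cluster platform support" are not modeled.

```python
# Hosted cluster versions that each multicluster engine Operator version can
# create (transcribed from the hosted cluster support table).
HOSTED_VERSIONS_BY_MCE = {
    "2.4": ["4.14"],
    "2.5": ["4.14", "4.15"],
    "2.6": ["4.14", "4.15", "4.16"],
    "2.7": ["4.14", "4.15", "4.16", "4.17"],
}

# Management cluster versions that support each multicluster engine Operator
# version (transcribed from the management cluster support table).
MANAGEMENT_VERSIONS_BY_MCE = {
    "2.4": ["4.14", "4.15"],
    "2.5": ["4.14", "4.15", "4.16"],
    "2.6": ["4.14", "4.15", "4.16", "4.17"],
    "2.7": ["4.15", "4.16", "4.17"],
}


def can_create_hosted_cluster(management: str, mce: str, hosted: str) -> bool:
    """Return True only if the combination appears in both support tables."""
    return (management in MANAGEMENT_VERSIONS_BY_MCE.get(mce, [])
            and hosted in HOSTED_VERSIONS_BY_MCE.get(mce, []))


print(can_create_hosted_cluster("4.17", "2.7", "4.14"))  # True
print(can_create_hosted_cluster("4.14", "2.7", "4.17"))  # False: 2.7 needs a 4.15 or later management cluster
```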

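3.1.1.3. Hosted cluster platform support

The following table indicates which OpenShift Container Platform versions are supported for each platform of hosted control planes.

For IBM Power and IBM Z, you must run the control plane on machine types based on the 64-bit x86 architecture, and the node pools on IBM Power or IBM Z.

In the following table, the management cluster version is the OpenShift Container Platform version where the multicluster engine Operator is enabled:

| Hosted cluster platform | Management cluster version | Hosted cluster version |
|---|---|---|
| Amazon Web Services | 4.16 - 4.17 | 4.16 - 4.17 |
| IBM Power | 4.17 | 4.17 |
| IBM Z | 4.17 | 4.17 |
| OpenShift Virtualization | 4.14 - 4.17 | 4.14 - 4.17 |
| Bare metal | 4.14 - 4.17 | 4.14 - 4.17 |
| Non-bare-metal agent machines (Technology Preview) | 4.16 - 4.17 | 4.16 - 4.17 |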

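3.1.1.4. Updates of the multicluster engine Operator

When you update to another version of the multicluster engine Operator, your hosted cluster can continue to run if the HyperShift Operator that is included in the new multicluster engine Operator version supports the hosted cluster version. The following table shows which hosted cluster versions are supported after each multicluster engine Operator update:

| Multicluster engine Operator update | Supported hosted cluster versions |
|---|---|
| Updated from 2.4 to 2.5 | OpenShift Container Platform 4.14 |
| Updated from 2.5 to 2.6 | OpenShift Container Platform 4.14 - 4.15 |
| Updated from 2.6 to 2.7 | OpenShift Container Platform 4.14 - 4.16 |

For example, if you have an OpenShift Container Platform 4.14 hosted cluster on the management cluster and you update from multicluster engine Operator 2.4 to 2.5, the hosted cluster can continue to run.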
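Before an update, you can list the hosted clusters that this table does not cover. The following Python sketch transcribes the table into a dictionary; the helper name not_confirmed_after_update is hypothetical. An update path that does not appear in the table, such as skipping a version, is treated as confirming nothing, so the sketch errs on the side of flagging clusters for review.

```python
# Hosted cluster versions that keep running after each multicluster engine
# Operator update (transcribed from the preceding table).
SUPPORTED_AFTER_UPDATE = {
    ("2.4", "2.5"): {"4.14"},
    ("2.5", "2.6"): {"4.14", "4.15"},
    ("2.6", "2.7"): {"4.14", "4.15", "4.16"},
}


def not_confirmed_after_update(hosted_versions, from_mce: str, to_mce: str) -> list:
    """Return the hosted cluster versions that the table does not list for this update."""
    supported = SUPPORTED_AFTER_UPDATE.get((from_mce, to_mce), set())
    return sorted(v for v in hosted_versions if v not in supported)


print(not_confirmed_after_update(["4.14", "4.15"], "2.5", "2.6"))  # []
print(not_confirmed_after_update(["4.14"], "2.4", "2.6"))          # ['4.14']
```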

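3.1.1.5. Technology Preview features

The following list shows the Technology Preview features for this release:

- Hosted control planes on IBM Z in a disconnected environment
- Custom taints and tolerations for hosted control planes
- NVIDIA GPU devices on hosted control planes for OpenShift Virtualization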

3.1.2. CIDR ranges for hosted control planes

To deploy hosted control planes on OpenShift Container Platform, use the following required Classless Inter-Domain Routing (CIDR) subnet ranges:

- v4InternalSubnet: 100.65.0.0/16 (OVN-Kubernetes)
- clusterNetwork: 10.132.0.0/14 (pod network)
- serviceNetwork: 172.31.0.0/16

For more information about OpenShift Container Platform CIDR range definitions, see "CIDR range definitions".
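A quick way to confirm that these ranges do not collide with the networks already in use is to test every pair for overlap. The following Python sketch uses the standard ipaddress module. The management cluster ranges in it are an assumption: they are the OpenShift Container Platform defaults (10.128.0.0/14, 172.30.0.0/16, and 100.64.0.0/16), so replace them with the values that your management cluster actually uses.

```python
import ipaddress
from itertools import combinations

# Required hosted cluster ranges from the preceding list.
HOSTED = {
    "v4InternalSubnet": "100.65.0.0/16",
    "clusterNetwork": "10.132.0.0/14",
    "serviceNetwork": "172.31.0.0/16",
}

# Assumption: the management cluster uses the default OpenShift Container
# Platform ranges. Replace these with your management cluster's values.
MANAGEMENT = {
    "management OVN internal subnet": "100.64.0.0/16",
    "management clusterNetwork": "10.128.0.0/14",
    "management serviceNetwork": "172.30.0.0/16",
}


def overlapping_pairs(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named CIDR ranges that overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


print(overlapping_pairs({**HOSTED, **MANAGEMENT}))  # []
```

An empty list means that no two ranges share addresses; any returned pair names the ranges to change.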

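3.2. Sizing guidance for hosted control planes

Many factors, including the hosted cluster workload and the worker node count, affect how many hosted control planes can fit on a certain number of worker nodes. Use this sizing guide to help with hosted cluster capacity planning. This guidance assumes a highly available hosted control planes topology. The load-based sizing examples were measured on a bare-metal cluster. Cloud-based instances might have different limiting factors, such as memory size.

You can override the following resource utilization sizing measurements and disable the metric service monitoring.

See the following requirements for highly available hosted control planes, which were tested with OpenShift Container Platform version 4.12.9 and later:

- 78 pods
- Three 8 GiB PVs for etcd
- Minimum vCPU: approximately 5.5 cores
- Minimum memory: approximately 19 GiB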

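3.2.1. Pod limits

The maxPods setting for each node affects how many hosted clusters can fit on a control-plane node. It is important to note the maxPods value on all control-plane nodes. Plan for about 75 pods for each highly available hosted control plane.

For bare-metal nodes, the default maxPods setting of 250 is likely to be a limiting factor, because roughly three hosted control planes fit on each node given the pod requirements, even if the machine has plenty of resources to spare. Setting the maxPods value to 500 by configuring the KubeletConfig value allows for greater hosted control plane density, which can help you take advantage of additional compute resources.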
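The effect of maxPods follows from simple division by the roughly 75 pods that each highly available hosted control plane needs. The following Python sketch shows the arithmetic for the two maxPods values discussed above. It is a simplification that assumes every pod slot on the node is available to hosted control planes; in practice, other pods that run on the node also count toward maxPods.

```python
PODS_PER_HCP = 75  # planning figure for one highly available hosted control plane


def hcps_per_node_by_pods(max_pods: int, pods_per_hcp: int = PODS_PER_HCP) -> float:
    """Hosted control planes that fit on one node when only maxPods is considered."""
    return max_pods / pods_per_hcp


for max_pods in (250, 500):
    print(max_pods, round(hcps_per_node_by_pods(max_pods), 1))
# 250 3.3
# 500 6.7
```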

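3.2.2. Request-based resource limit

The maximum number of hosted control planes that the cluster can host is calculated based on the CPU and memory requests of the hosted control plane pods.

A highly available hosted control plane consists of 78 pods that request 5 vCPUs and 18 GiB of memory. These baseline numbers are compared to the resource capacities of the cluster worker nodes to estimate the maximum number of hosted control planes.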

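3.2.3. Load-based limit

The maximum number of hosted control planes that the cluster can host is calculated based on the CPU and memory utilization of the hosted control plane pods when some workload is put on the hosted control plane Kubernetes API server.

The following method is used to measure the hosted control plane resource utilization as the workload increases:

- A hosted cluster with 9 workers that use 8 vCPUs and 32 GiB each, on the KubeVirt platform
- A workload test profile that is configured to focus on API control-plane stress, based on the following definition:
  - Created objects for each namespace, scaling up to 100 namespaces total
  - Additional API stress with continuous object deletion and creation
  - Workload queries-per-second (QPS) and Burst settings set high to remove any client-side throttling

As the load increases by 1000 QPS, the hosted control plane resource utilization increases by 9 vCPUs and 2.5 GiB of memory.

For general sizing purposes, consider an API rate of 1000 QPS to be a medium hosted cluster load, and an API rate of 2000 QPS to be a heavy hosted cluster load.

This test provides an estimation factor for increasing the compute resource utilization based on the expected API load. Exact utilization rates can vary based on the type and pace of the cluster workload.

The following table shows hosted control plane resource scaling as defined by workload and API rate:

| QPS (API rate) | vCPU usage | Memory usage (GiB) |
|---|---|---|
| Low load (less than 50 QPS) | 2.9 | 11.1 |
| Medium load (1000 QPS) | 11.9 | 13.6 |
| Heavy load (2000 QPS) | 20.9 | 16.1 |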
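The rows of this table follow a straight line: the low-load baseline plus 9 vCPUs and 2.5 GiB of memory for every additional 1000 QPS. The following Python sketch encodes that linear model. Treating the low-load row as the value at 0 QPS is an assumption made for this illustration, and, as noted above, real utilization depends on the type and pace of your workload.

```python
# Measured baseline (the low-load row) and growth per 1000 QPS.
IDLE_VCPU, VCPU_PER_1K_QPS = 2.9, 9.0
IDLE_MEM_GIB, MEM_GIB_PER_1K_QPS = 11.1, 2.5


def estimated_usage(qps: float) -> tuple[float, float]:
    """Linear estimate of (vCPU, memory GiB) used by one hosted control plane."""
    scale = qps / 1000
    return (round(IDLE_VCPU + scale * VCPU_PER_1K_QPS, 1),
            round(IDLE_MEM_GIB + scale * MEM_GIB_PER_1K_QPS, 1))


for qps in (0, 1000, 2000):
    print(qps, estimated_usage(qps))
# 0 (2.9, 11.1)
# 1000 (11.9, 13.6)
# 2000 (20.9, 16.1)
```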

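Hosted control plane sizing is about control-plane load and about workloads that cause heavy API activity, etcd activity, or both. Hosted pod workloads that focus on data-plane loads, such as running a database, might not result in high API rates.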

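3.2.4. Sizing calculation example

This example provides sizing guidance for the following scenario:

- Three bare-metal workers that are labeled as hypershift.openshift.io/control-plane nodes
- The maxPods value set to 500
- An expected API rate that is medium, or about 1000 QPS, according to the load-based limits

| Limit description | Server 1 | Server 2 |
|---|---|---|
| Number of vCPUs on the worker node | 64 | 128 |
| Memory on the worker node (GiB) | 128 | 256 |
| Maximum pods per worker | 500 | 500 |
| Number of workers used to host control planes | 3 | 3 |
| Maximum QPS target rate (API requests per second) | 1000 | 1000 |

| Calculated values based on worker node size and API rate | Server 1 | Server 2 | Calculation notes |
|---|---|---|---|
| Maximum hosted control planes per worker based on vCPU requests | 12.8 | 25.6 | Number of worker vCPUs ÷ 5 total vCPU requests per hosted control plane |
| Maximum hosted control planes per worker based on vCPU usage | 5.4 | 10.7 | Number of vCPUs ÷ (2.9 measured idle vCPU usage + (QPS target rate ÷ 1000) × 9.0 measured vCPU usage per 1000 QPS increase) |
| Maximum hosted control planes per worker based on memory requests | 7.1 | 14.2 | Worker memory GiB ÷ 18 GiB total memory request per hosted control plane |
| Maximum hosted control planes per worker based on memory usage | 9.4 | 18.8 | Worker memory GiB ÷ (11.1 measured idle memory usage + (QPS target rate ÷ 1000) × 2.5 measured memory usage per 1000 QPS increase) |
| Maximum hosted control planes per worker based on the per-node pod limit | 6.7 | 6.7 | 500 maxPods ÷ 75 pods per hosted control plane |
| Minimum of the previously mentioned maximums | 5.4 | 6.7 | |
| | vCPU limiting factor | maxPods limiting factor | |
| Maximum number of hosted control planes within a management cluster | 16 | 20 | Minimum of the previously mentioned maximums × 3 control-plane workers |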
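The calculation in the preceding tables can be reproduced with the following Python sketch, which is handy when you want to try other worker sizes or QPS targets. It uses the per-hosted-control-plane figures from this chapter (5 vCPU and 18 GiB requests, 75 pods, and the measured idle and per-1000-QPS usage). It assumes that the cluster total is rounded down to whole hosted control planes, which matches the 16 and 20 in the table, and it ignores resources that other workloads consume on the worker nodes.

```python
import math

# Per-hosted-control-plane figures taken from the sizing sections of this chapter.
CPU_REQUEST, MEM_REQUEST_GIB, PODS_PER_HCP = 5, 18, 75
IDLE_VCPU, VCPU_PER_1K_QPS = 2.9, 9.0
IDLE_MEM_GIB, MEM_GIB_PER_1K_QPS = 11.1, 2.5


def per_worker_limits(vcpus: float, mem_gib: float, max_pods: int, qps: float) -> dict:
    """Maximum hosted control planes per worker under each individual limit."""
    scale = qps / 1000
    return {
        "vCPU requests": vcpus / CPU_REQUEST,
        "vCPU usage": vcpus / (IDLE_VCPU + scale * VCPU_PER_1K_QPS),
        "memory requests": mem_gib / MEM_REQUEST_GIB,
        "memory usage": mem_gib / (IDLE_MEM_GIB + scale * MEM_GIB_PER_1K_QPS),
        "maxPods": max_pods / PODS_PER_HCP,
    }


def cluster_capacity(vcpus: float, mem_gib: float, max_pods: int, qps: float,
                     workers: int) -> tuple:
    """Return the limiting factor and the whole number of hosted control planes."""
    limits = per_worker_limits(vcpus, mem_gib, max_pods, qps)
    factor = min(limits, key=limits.get)  # the smallest per-worker maximum wins
    return factor, math.floor(workers * limits[factor])


for name, vcpus, mem in (("Server 1", 64, 128), ("Server 2", 128, 256)):
    print(name, cluster_capacity(vcpus, mem, max_pods=500, qps=1000, workers=3))
# Server 1 ('vCPU usage', 16)
# Server 2 ('maxPods', 20)
```

Returning the name of the limiting factor alongside the count shows which setting to change first: more vCPUs help Server 1, while a higher maxPods value helps Server 2.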

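| Name | Description |
|---|---|
| … | The estimated maximum number of hosted control planes that the cluster can host, based on the resource requests of a highly available hosted control plane. |
| … | The estimated maximum number of hosted control planes that the cluster can host if all hosted control planes make around 50 QPS to the cluster Kube API server. |
| … | The estimated maximum number of hosted control planes that the cluster can host if all hosted control planes make around 1000 QPS to the cluster Kube API server. |
| … | The estimated maximum number of hosted control planes that the cluster can host if all hosted control planes make around 2000 QPS to the cluster Kube API server. |
| … | The estimated maximum number of hosted control planes that the cluster can host, based on the existing average QPS of hosted control planes. If you do not have active hosted control planes, you can expect low QPS. |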

3.3. Overriding resource utilization measurements

The set of baseline measurements for resource utilization can vary in each hosted cluster.

3.3.1. Overriding resource utilization measurements for a hosted cluster

You can override resource utilization measurements based on the type and pace of your cluster workload.

Procedure

Create the ConfigMap resource by running the following command:

$ oc create -f <your-config-map-file.yaml>

Replace <your-config-map-file.yaml> with the name of your YAML file that contains the hcp-sizing-baseline config map. Create the hcp-sizing-baseline config map in the local-cluster namespace to specify the measurements that you want to override. Your config map might resemble the following YAML file:

Delete the hypershift-addon-agent deployment to restart the hypershift-addon-agent pod by running the following command:

$ oc delete deployment hypershift-addon-agent \
  -n open-cluster-management-agent-addon

Verification

Observe the hypershift-addon-agent pod logs. Verify that the overridden measurements from the config map are applied by running the following command:

$ oc logs deployment/hypershift-addon-agent -n open-cluster-management-agent-addon

Your logs might resemble the following output:

Example output

2024-01-05T19:41:05.392Z INFO agent.agent-reconciler agent/agent.go:793 setting cpuRequestPerHCP to 5
2024-01-05T19:41:05.392Z INFO agent.agent-reconciler agent/agent.go:802 setting memoryRequestPerHCP to 18
2024-01-05T19:53:54.070Z INFO agent.agent-reconciler agent/hcp_capacity_calculation.go:141 The worker nodes have 12.000000 vCPUs
2024-01-05T19:53:54.070Z INFO agent.agent-reconciler agent/hcp_capacity_calculation.go:142 The worker nodes have 49.173369 GB memory

If the overridden measurements are not updated properly in the hcp-sizing-baseline config map, you might see the following error message in the hypershift-addon-agent pod logs:

Example error

2024-01-05T19:53:54.052Z ERROR agent.agent-reconciler agent/agent.go:788 failed to get configmap from the hub. Setting the HCP sizing baseline with default values. {"error": "configmaps \"hcp-sizing-baseline\" not found"}
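If you save the pod logs to a file, you can check them programmatically instead of reading them line by line. The following Python sketch is a hedged example that keys on the message wording in the example output and example error above ("setting <name> to <value>" and "Setting the HCP sizing baseline with default values"); that wording might differ between versions of the add-on, so treat the patterns as assumptions.

```python
import re

# Matches lines such as "... setting cpuRequestPerHCP to 5" in the add-on agent log.
SETTING = re.compile(r"setting (\w+) to (\S+)")
FALLBACK = "Setting the HCP sizing baseline with default values"


def applied_overrides(log_text: str) -> dict[str, str]:
    """Collect the sizing values that the agent reports setting; the last one wins."""
    values = {}
    for line in log_text.splitlines():
        match = SETTING.search(line)
        if match:
            values[match.group(1)] = match.group(2)
    return values


def fell_back_to_defaults(log_text: str) -> bool:
    """True if the agent reports that it used the default sizing baseline."""
    return FALLBACK in log_text


sample = """\
2024-01-05T19:41:05.392Z INFO agent.agent-reconciler agent/agent.go:793 setting cpuRequestPerHCP to 5
2024-01-05T19:41:05.392Z INFO agent.agent-reconciler agent/agent.go:802 setting memoryRequestPerHCP to 18
"""
print(applied_overrides(sample))      # {'cpuRequestPerHCP': '5', 'memoryRequestPerHCP': '18'}
print(fell_back_to_defaults(sample))  # False
```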

3.3.2. Disabling the metric service monitoring

After you enable the hypershift-addon managed cluster add-on, metric service monitoring is configured by default so that OpenShift Container Platform monitoring can gather metrics from hypershift-addon.

Procedure

You can disable the metric service monitoring by completing the following steps:

Log in to your hub cluster by running the following command:

$ oc login

Edit the hypershift-addon-deploy-config add-on deployment configuration specification by running the following command:

$ oc edit addondeploymentconfig hypershift-addon-deploy-config \
  -n multicluster-engine

Add the disableMetrics=true customized variable to the specification, as shown in the following example:

The disableMetrics=true customized variable disables metric service monitoring for both new and existing hypershift-addon managed cluster add-ons.

Apply the changes to the configuration specification by running the following command:

$ oc apply -f <filename>.yaml

3.4. Installing the hosted control planes command-line interface

The hosted control planes command-line interface, hcp, is a tool that you can use to get started with hosted control planes. For Day 2 operations, such as management and configuration, use GitOps or your own automation tool.

3.4.1. Installing the hosted control planes command-line interface from the terminal

You can install the hosted control planes command-line interface (CLI), hcp, from the terminal.

Prerequisites

- On an OpenShift Container Platform cluster, you have installed multicluster engine for Kubernetes Operator 2.5 or later. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management. You can also install the multicluster engine Operator without Red Hat Advanced Cluster Management, as an Operator from OperatorHub in OpenShift Container Platform.

Procedure

Get the URL to download the hcp binary by running the following command:

$ oc get ConsoleCLIDownload hcp-cli-download -o json | jq -r ".spec"

Download the hcp binary by running the following command:

$ wget <hcp_cli_download_url>

Replace hcp_cli_download_url with the URL that you obtained in the previous step.

Unpack the downloaded archive by running the following command:

$ tar xvzf hcp.tar.gz

Make the hcp binary file executable by running the following command:

$ chmod +x hcp

Move the hcp binary file to a directory in your path by running the following command:

$ sudo mv hcp /usr/local/bin/.
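The spec that the first command prints contains a links list, where each entry has an href (the URL) and a text (a label), as in any ConsoleCLIDownload resource. If you script the download, you can select the URL for your platform from that JSON. In the following Python sketch, the labels and URLs in sample_spec are invented placeholders, so inspect the real output of the command before you rely on particular label text.

```python
import json


def hcp_download_urls(spec_json: str) -> dict[str, str]:
    """Map each link label to its URL in a ConsoleCLIDownload spec."""
    spec = json.loads(spec_json)
    return {link.get("text", ""): link["href"] for link in spec.get("links", [])}


def pick_url(urls: dict[str, str], *keywords: str) -> str:
    """Return the first URL whose label contains all keywords (case-insensitive)."""
    for text, href in urls.items():
        if all(k.lower() in text.lower() for k in keywords):
            return href
    raise LookupError(f"no download link matches {keywords}")


# Hypothetical output of: oc get ConsoleCLIDownload hcp-cli-download -o json | jq -r ".spec"
sample_spec = json.dumps({
    "displayName": "hcp CLI",
    "links": [
        {"href": "https://hcp-cli.example.test/linux/amd64/hcp.tar.gz",
         "text": "Download hcp CLI for Linux for x86_64"},
        {"href": "https://hcp-cli.example.test/darwin/arm64/hcp.tar.gz",
         "text": "Download hcp CLI for Mac for ARM 64"},
    ],
})
print(pick_url(hcp_download_urls(sample_spec), "linux", "x86_64"))
# https://hcp-cli.example.test/linux/amd64/hcp.tar.gz
```

You can then pass the selected URL to the wget step in place of <hcp_cli_download_url>.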

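If you download the CLI on a Mac computer, you might see a warning about the hcp binary file. You need to adjust your security settings to allow the binary file to be run.

Verification

Verify that you can see the list of available parameters by running the following command:

$ hcp create cluster <platform> --help

You can use the hcp create cluster command to create and manage hosted clusters. The supported platforms are aws, agent, and kubevirt.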

3.4.2. Installing the hosted control planes command-line interface by using the web console

You can install the hosted control planes command-line interface (CLI), hcp, by using the OpenShift Container Platform web console.

Prerequisites

- On an OpenShift Container Platform cluster, you have installed multicluster engine for Kubernetes Operator 2.5 or later. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management. You can also install the multicluster engine Operator without Red Hat Advanced Cluster Management, as an Operator from OperatorHub in OpenShift Container Platform.

Procedure

- In the OpenShift Container Platform web console, click the Help icon → Command Line Tools.
- Click Download hcp CLI for your platform.

Unpack the downloaded archive by running the following command:

$ tar xvzf hcp.tar.gz

Make the binary file executable by running the following command:

$ chmod +x hcp

Move the binary file to a directory in your path by running the following command:

$ sudo mv hcp /usr/local/bin/.

If you download the CLI on a Mac computer, you might see a warning about the hcp binary file. You need to adjust your security settings to allow the binary file to be run.

Verification

Verify that you can see the list of available parameters by running the following command:

$ hcp create cluster <platform> --help

You can use the hcp create cluster command to create and manage hosted clusters. The supported platforms are aws, agent, and kubevirt.

3.4.3. Installing the hosted control planes command-line interface by using the content gateway

You can install the hosted control planes command-line interface (CLI), hcp, by using the content gateway.

Prerequisites

- On an OpenShift Container Platform cluster, you have installed multicluster engine for Kubernetes Operator 2.5 or later. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management. You can also install the multicluster engine Operator without Red Hat Advanced Cluster Management, as an Operator from OperatorHub in OpenShift Container Platform.

Procedure

- Navigate to the content gateway and download the hcp binary.

Unpack the downloaded archive by running the following command:

$ tar xvzf hcp.tar.gz

Make the hcp binary file executable by running the following command:

$ chmod +x hcp

Move the hcp binary file to a directory in your path by running the following command:

$ sudo mv hcp /usr/local/bin/.

If you download the CLI on a Mac computer, you might see a warning about the hcp binary file. You need to adjust your security settings to allow the binary file to be run.

Verification

Verify that you can see the list of available parameters by running the following command:

$ hcp create cluster <platform> --help

You can use the hcp create cluster command to create and manage hosted clusters. The supported platforms are aws, agent, and kubevirt.

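3.5. Distributing hosted cluster workloads

Before you get started with hosted control planes for OpenShift Container Platform, you must properly label nodes so that the pods of hosted clusters can be scheduled onto infrastructure nodes. Node labeling is also important for the following reasons:

- To ensure high availability and proper workload deployment. For example, you can set the node-role.kubernetes.io/infra label to avoid having the control-plane workload count toward your OpenShift Container Platform subscription.
- To ensure that control plane workloads are separate from other workloads in the management cluster.

Do not use the management cluster for your workloads. Workloads must not run on nodes where control planes run.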

3.5.1. Labeling management cluster nodes

Proper node labeling is a prerequisite to deploying hosted control planes.

As a management cluster administrator, you can use the following labels and taints in management cluster nodes to schedule a control plane workload:

- hypershift.openshift.io/control-plane: true: Use this label and taint to dedicate a node to running hosted control plane workloads. By setting a value of true, you avoid sharing the control plane nodes with other components, for example, the infrastructure components of the management cluster or any other mistakenly deployed workload.
- hypershift.openshift.io/cluster: ${HostedControlPlane Namespace}: Use this label and taint when you want to dedicate a node to a single hosted cluster.

Apply the following labels on the nodes that host control-plane pods:

- node-role.kubernetes.io/infra: Use this label to avoid having the control-plane workload count toward your subscription.
- topology.kubernetes.io/zone: Use this label on the management cluster nodes to deploy highly available clusters across failure domains. The zone might be a location, a rack name, or the hostname of the node where the zone is set. For example, a management cluster has the following nodes: worker-1a, worker-1b, worker-2a, and worker-2b. The worker-1a and worker-1b nodes are in rack1, and the worker-2a and worker-2b nodes are in rack2. To use each rack as an availability zone, enter the following commands:

$ oc label node/worker-1a node/worker-1b topology.kubernetes.io/zone=rack1

$ oc label node/worker-2a node/worker-2b topology.kubernetes.io/zone=rack2
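With many racks, writing these commands by hand is error-prone. The following Python sketch generates one oc label command per rack from a node-to-rack mapping. The RACKS mapping is a hypothetical inventory that mirrors the example above; replace it with your own. The sketch only prints the commands so that you can review them before you run them.

```python
import shlex

# Hypothetical inventory that mirrors the example above; replace with your own.
RACKS = {
    "rack1": ["worker-1a", "worker-1b"],
    "rack2": ["worker-2a", "worker-2b"],
}


def zone_label_commands(racks: dict[str, list[str]]) -> list[str]:
    """Build one oc label command per rack so that each rack becomes a zone."""
    commands = []
    for zone, nodes in sorted(racks.items()):
        args = ["oc", "label", *(f"node/{n}" for n in nodes),
                f"topology.kubernetes.io/zone={zone}"]
        commands.append(shlex.join(args))  # quotes any unsafe characters
    return commands


for cmd in zone_label_commands(RACKS):
    print(cmd)
# oc label node/worker-1a node/worker-1b topology.kubernetes.io/zone=rack1
# oc label node/worker-2a node/worker-2b topology.kubernetes.io/zone=rack2
```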

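Pods for a hosted cluster have tolerations, and the scheduler uses affinity rules to schedule them. The pods tolerate the control-plane and cluster taints. The scheduler schedules pods onto nodes that are labeled with hypershift.openshift.io/control-plane and hypershift.openshift.io/cluster: ${HostedControlPlane Namespace}.

For the ControllerAvailabilityPolicy option, use HighlyAvailable, which is the default value that the hosted control planes command-line interface, hcp, deploys. When you use that option, you can schedule pods for each deployment within a hosted cluster across different failure domains by setting topology.kubernetes.io/zone as the topology key. Scheduling pods for a deployment within a hosted cluster across different failure domains is available only for highly available control planes.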

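Procedure

To enable a hosted cluster to require its pods to be scheduled onto infrastructure nodes, set HostedCluster.spec.nodeSelector, as shown in the following example:

spec:
  nodeSelector:
    node-role.kubernetes.io/infra: ""

This way, the hosted control planes for each hosted cluster are eligible infrastructure node workloads, and you do not need to entitle the underlying OpenShift Container Platform nodes.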
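If the HostedCluster already exists, you can merge the same nodeSelector in with oc patch instead of editing the manifest. This bash sketch assumes that your version accepts a change to this field after creation. If it does not, set the field in the manifest before you create the cluster. The function names are hypothetical, and <name> and <namespace> stand for your hosted cluster.

```shell
#!/usr/bin/env bash
# Merge patch that adds the infra node selector to spec.nodeSelector.
infra_node_selector_patch() {
  printf '%s' '{"spec":{"nodeSelector":{"node-role.kubernetes.io/infra":""}}}'
}

# Hypothetical helper: apply the patch to one HostedCluster.
apply_infra_node_selector() {
  local name="$1" namespace="$2"
  oc patch hostedcluster "$name" -n "$namespace" --type=merge \
    -p "$(infra_node_selector_patch)"
}

# Usage: apply_infra_node_selector <name> <namespace>
```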

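3.5.2. Priority classes

Four built-in priority classes influence the priority and preemption of the hosted cluster pods. You can create the pods in the management cluster in the following order, from highest to lowest:

- hypershift-operator: HyperShift Operator pods.
- hypershift-etcd: Pods for etcd.
- hypershift-api-critical: Pods that are required for API calls and resource admission to succeed. These pods include kube-apiserver, aggregated API servers, and web hooks.
- hypershift-control-plane: Pods in the control plane that are not API-critical but still need elevated priority, such as the cluster version Operator.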

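3.5.3. Custom taints and tolerations

By default, pods for a hosted cluster tolerate the control-plane and cluster taints. However, you can also use custom taints on nodes so that hosted clusters can tolerate those taints on a per-hosted-cluster basis by setting HostedCluster.spec.tolerations.

Passing tolerations for a hosted cluster is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see the following link: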

Example configuration

spec:
  tolerations:
  - effect: NoSchedule
    key: kubernetes.io/custom
    operator: Exists

You can also set tolerations on the hosted cluster while you create a cluster by using the --toleration hcp CLI argument.

Example CLI argument

--toleration="key=kubernetes.io/custom,operator=Exists,effect=NoSchedule"
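When you generate these arguments in a script, a small builder keeps the key=...,operator=...,effect=... format consistent. This bash sketch follows the field order of the CLI example. The function name toleration_flag is hypothetical. It checks the effect against the three Kubernetes taint effects and the operator against Exists and Equal.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one --toleration argument for hcp create cluster.
toleration_flag() {
  local key="$1" operator="${2:-Exists}" effect="${3:-NoSchedule}"
  if [ -z "$key" ]; then
    echo "a taint key is required" >&2
    return 1
  fi
  case "$operator" in
    Exists|Equal) ;;
    *) echo "unknown toleration operator: $operator" >&2; return 1 ;;
  esac
  # Kubernetes taint effects are NoSchedule, PreferNoSchedule, and NoExecute.
  case "$effect" in
    NoSchedule|PreferNoSchedule|NoExecute) ;;
    *) echo "unknown taint effect: $effect" >&2; return 1 ;;
  esac
  printf -- '--toleration=key=%s,operator=%s,effect=%s\n' "$key" "$operator" "$effect"
}

# Usage (other flags omitted); an array keeps each flag a single argument:
#   flags=("$(toleration_flag kubernetes.io/custom)")
#   hcp create cluster aws --name <hosted_cluster_name> "${flags[@]}"
```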

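For fine-grained control over hosted cluster pod placement on a per-cluster basis, use custom tolerations with nodeSelectors. You can co-locate groups of hosted clusters and isolate them from other hosted clusters. You can also place hosted clusters on infra and control plane nodes.

Tolerations on the hosted cluster apply only to the pods of the control plane. To configure other pods that run on the management cluster and infrastructure-related pods, such as the pods that run virtual machines, you need to use a different process.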

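3.6. Enabling or disabling the hosted control planes feature

The hosted control planes feature and the hypershift-addon managed cluster add-on are enabled by default. If you want to disable the feature, or if you disabled it and want to manually enable it, see the following procedures.

3.6.1. Manually enabling the hosted control planes feature

If you need to manually enable hosted control planes, complete the following steps.

Procedure

Run the following command to enable the feature: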

$ oc patch mce multiclusterengine --type=merge -p \
  '{"spec":{"overrides":{"components":[{"name":"hypershift","enabled": true}]}}}'

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

Run the following command to verify that the hypershift and hypershift-local-hosting features are enabled in the MultiClusterEngine custom resource:

$ oc get mce multiclusterengine -o yaml

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

In the output, check that the enabled field is set to true for both the hypershift and hypershift-local-hosting components under spec.overrides.components.
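The enable and verify steps above can be scripted with two small bash helpers. This is a sketch. MCE_NAME and the function names are hypothetical. The read helper uses an oc JSONPath filter expression so that you get only the enabled value of one component, not the full YAML. A JSON merge patch replaces the whole components array with the array in the patch. That also applies to the commands above, so check the full resource afterward.

```shell
#!/usr/bin/env bash
# MCE_NAME defaults to "multiclusterengine"; set it if "oc get mce" shows another name.

# Turn one component on or off with the same kind of merge patch as above.
set_mce_component() {
  local component="$1" enabled="$2"
  case "$enabled" in
    true|false) ;;
    *) echo "enabled must be true or false" >&2; return 1 ;;
  esac
  local patch
  patch=$(printf '{"spec":{"overrides":{"components":[{"name":"%s","enabled":%s}]}}}' \
    "$component" "$enabled")
  oc patch mce "${MCE_NAME:-multiclusterengine}" --type=merge -p "$patch"
}

# Print only the "enabled" value of one component.
mce_component_enabled() {
  local component="$1"
  oc get mce "${MCE_NAME:-multiclusterengine}" \
    -o jsonpath="{.spec.overrides.components[?(@.name==\"${component}\")].enabled}"
}

# Usage:
#   set_mce_component hypershift true
#   for c in hypershift hypershift-local-hosting; do
#     printf '%s: %s\n' "$c" "$(mce_component_enabled "$c")"
#   done
```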

3.6.1.1. Manually enabling the hypershift-addon managed cluster add-on for local-cluster

Enabling the hosted control planes feature automatically enables the hypershift-addon managed cluster add-on. If you need to enable the hypershift-addon managed cluster add-on manually, complete the following steps to use the hypershift-addon to install the HyperShift Operator on local-cluster.

Procedure

Create a file that defines a ManagedClusterAddOn add-on named hypershift-addon in the local-cluster namespace.

Run the following command to apply the file:

$ oc apply -f <filename>

Replace <filename> with the name of the file that you created.

Run the following command to confirm that the hypershift-addon managed cluster add-on is installed:

$ oc get managedclusteraddons -n local-cluster hypershift-addon

If the add-on is installed, the output resembles the following example:

NAME               AVAILABLE   DEGRADED   PROGRESSING
hypershift-addon   True

Your hypershift-addon managed cluster add-on is installed, and the hosting cluster is available to create and manage hosted clusters.

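3.6.2. Disabling the hosted control planes feature

You can uninstall the HyperShift Operator and disable the hosted control planes feature. When you disable the hosted control planes feature, you must destroy the hosted clusters and the managed cluster resources on multicluster engine Operator, as described in the Managing hosted clusters topics.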

3.6.2.1. Uninstalling the HyperShift Operator

To uninstall the HyperShift Operator and disable the hypershift-addon from the local-cluster, complete the following steps:

Procedure

Run the following command to ensure that no hosted cluster is running:

$ oc get hostedcluster -A

Important: If a hosted cluster is running, the HyperShift Operator does not uninstall, even if the hypershift-addon is disabled.

Run the following command to disable the hypershift-addon:

$ oc patch mce multiclusterengine --type=merge -p \
  '{"spec":{"overrides":{"components":[{"name":"hypershift-local-hosting","enabled": false}]}}}'

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

Note: After disabling the hypershift-addon, you can also disable the hypershift-addon for the local-cluster from the multicluster engine Operator console.
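The Important note above implies an ordering: check for hosted clusters first, and patch only when none remain. This bash sketch encodes that guard. The function name and MCE_NAME are hypothetical. A failed oc get, for example because of missing permissions, is treated as an unknown state rather than as "no hosted clusters".

```shell
#!/usr/bin/env bash
# Hypothetical helper: disable hypershift-local-hosting only when no
# HostedCluster resources remain.
disable_local_hosting() {
  local hosted
  # "oc get" exits non-zero on errors, for example a missing CRD or no access.
  if ! hosted="$(oc get hostedcluster -A --no-headers)"; then
    echo "could not list hosted clusters" >&2
    return 1
  fi
  # With no resources, oc prints "No resources found" to stderr and nothing to stdout.
  if [ -n "$hosted" ]; then
    echo "hosted clusters still exist; destroy them first:" >&2
    printf '%s\n' "$hosted" >&2
    return 1
  fi
  oc patch mce "${MCE_NAME:-multiclusterengine}" --type=merge -p \
    '{"spec":{"overrides":{"components":[{"name":"hypershift-local-hosting","enabled":false}]}}}'
}
```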

3.6.2.2. Disabling the hosted control planes feature

To disable the hosted control planes feature, complete the following steps.

Prerequisites

- You uninstalled the HyperShift Operator. For more information, see "Uninstalling the HyperShift Operator".

Procedure

Run the following command to disable the hosted control planes feature:

$ oc patch mce multiclusterengine --type=merge -p \
  '{"spec":{"overrides":{"components":[{"name":"hypershift","enabled": false}]}}}'

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

You can verify that the hypershift and hypershift-local-hosting features are disabled in the MultiClusterEngine custom resource by running the following command:

$ oc get mce multiclusterengine -o yaml

The default MultiClusterEngine resource instance name is multiclusterengine, but you can get the MultiClusterEngine name from your cluster by running the following command: $ oc get mce.

In the output, the enabled: flag of the hypershift and hypershift-local-hosting components under spec.overrides.components is set to false.

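Chapter 4. Deploying hosted control planes

4.1. Deploying hosted control planes on AWS

A hosted cluster is an OpenShift Container Platform cluster with its API endpoint and control plane that are hosted on the management cluster. The hosted cluster includes the control plane and its corresponding data plane. To configure hosted control planes, you must install the multicluster engine for Kubernetes Operator in a management cluster. By deploying the HyperShift Operator on an existing managed cluster by using the hypershift-addon managed cluster add-on, you can enable that cluster as a management cluster and start to create the hosted cluster. By default, the hypershift-addon managed cluster add-on is enabled for the local-cluster managed cluster.

You can use the multicluster engine Operator console or the hosted control plane command-line interface (CLI), hcp, to create a hosted cluster. The hosted cluster is automatically imported as a managed cluster. However, you can disable this automatic import into multicluster engine Operator.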

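4.1.1. Preparing to deploy hosted control planes on AWS

As you prepare to deploy hosted control planes on Amazon Web Services (AWS), consider the following information:

- Each hosted cluster must have a cluster-wide unique name. A hosted cluster name cannot be the same as any existing managed cluster in order for multicluster engine Operator to manage it.
- Do not use clusters as a hosted cluster name.
- Run the management cluster and workers on the same platform for hosted control planes.
- A hosted cluster cannot be created in the namespace of a multicluster engine Operator managed cluster.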

4.1.1.1. Prerequisites to configure a management cluster

You must have the following prerequisites to configure the management cluster:

- You have installed the multicluster engine for Kubernetes Operator 2.5 and later on an OpenShift Container Platform cluster. The multicluster engine Operator is automatically installed when you install Red Hat Advanced Cluster Management (RHACM). The multicluster engine Operator can also be installed without RHACM as an Operator from the OpenShift Container Platform OperatorHub.
- You have at least one managed OpenShift Container Platform cluster for the multicluster engine Operator. The local-cluster is automatically imported in multicluster engine Operator version 2.5 and later. You can check the status of your hub cluster by running the following command:

$ oc get managedclusters local-cluster

- You have installed the aws command-line interface (CLI).
- You have installed the hosted control plane CLI, hcp.

4.1.2. Accessing a hosted cluster on AWS by using the hcp CLI

You can access the hosted cluster by using the hcp command-line interface (CLI) to generate the kubeconfig file.

Procedure

Generate the kubeconfig file by entering the following command:

$ hcp create kubeconfig --namespace <hosted_cluster_namespace> \
  --name <hosted_cluster_name> > <hosted_cluster_name>.kubeconfig

After you save the kubeconfig file, you can access the hosted cluster by entering the following command:

$ oc --kubeconfig <hosted_cluster_name>.kubeconfig get nodes

4.1.3. Creating the Amazon Web Services S3 bucket and S3 OIDC secret

Before you can create and manage hosted clusters on Amazon Web Services (AWS), you must create the S3 bucket and S3 OIDC secret.

Procedure

Create an S3 bucket that has public access to host OIDC discovery documents for your clusters by running the following commands:

$ aws s3api create-bucket --bucket <bucket_name> \
  --create-bucket-configuration LocationConstraint=<region> \
  --region <region>

$ aws s3api delete-public-access-block --bucket <bucket_name>

Create a bucket policy file named policy.json for <bucket_name>, and then attach it to the bucket by running the following command:

$ aws s3api put-bucket-policy --bucket <bucket_name> \
  --policy file://policy.json

In each command, replace <bucket_name> with the name of the S3 bucket that you are creating, and replace <region> with the AWS region of the bucket.

Note: If you use a Mac computer, you must export the bucket name for the policy to work.
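The bucket steps above can be combined into one bash function. This is a sketch under two assumptions: the policy grants public s3:GetObject on every object in the bucket, and the AWS CLI is already configured. The function names are hypothetical. S3 rejects an explicit LocationConstraint of us-east-1, so the function omits --create-bucket-configuration for that region. Because the shell expands the bucket name inside the heredoc, no exported variable is needed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a public-read policy for the OIDC bucket objects.
write_oidc_bucket_policy() {
  local bucket="$1" out="${2:-policy.json}"
  cat > "$out" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}

# Hypothetical helper: create the bucket, open it, and attach the policy.
create_oidc_bucket() {
  local bucket="$1" region="$2"
  if [ "$region" = "us-east-1" ]; then
    # us-east-1 does not accept an explicit LocationConstraint.
    aws s3api create-bucket --bucket "$bucket" --region "$region" || return 1
  else
    aws s3api create-bucket --bucket "$bucket" \
      --create-bucket-configuration "LocationConstraint=$region" \
      --region "$region" || return 1
  fi
  aws s3api delete-public-access-block --bucket "$bucket" || return 1
  write_oidc_bucket_policy "$bucket" policy.json || return 1
  aws s3api put-bucket-policy --bucket "$bucket" --policy file://policy.json
}
```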

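- Create an OIDC S3 secret named hypershift-operator-oidc-provider-s3-credentials for the HyperShift Operator.
- Save the secret in the local-cluster namespace.

See the following table to verify that the secret contains the following fields:

Table 4.1. Required fields for the AWS secret

| Field name | Description |
|---|---|
| bucket | Contains an S3 bucket with public access to host OIDC discovery documents for your hosted clusters. |
| credentials | A reference to a file that contains the credentials of the default profile that can access the bucket. By default, HyperShift uses only the default profile to operate the bucket. |
| region | Specifies the region of the S3 bucket. |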

To create an AWS secret, run the following command:

$ oc create secret generic <secret_name> \
  --from-file=credentials=<path>/.aws/credentials \
  --from-literal=bucket=<s3_bucket> \
  --from-literal=region=<region> \
  -n local-cluster

Note: Disaster recovery backup for the secret is not automatically enabled. To add the label that enables the hypershift-operator-oidc-provider-s3-credentials secret to be backed up for disaster recovery, run the following command:

$ oc label secret hypershift-operator-oidc-provider-s3-credentials \
  -n local-cluster cluster.open-cluster-management.io/backup=true

4.1.4. Creating a routable public zone for hosted clusters

To access applications in your hosted clusters, you must configure the routable public zone. If the public zone already exists, skip this step; creating it again can affect existing functions.

Procedure

To create a routable public zone for DNS records, enter the following command:

$ aws route53 create-hosted-zone \
  --name <basedomain> \
  --caller-reference $(whoami)-$(date --rfc-3339=date)

Replace <basedomain> with your base domain, for example, www.example.com.
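The date --rfc-3339=date option in the command above is a GNU extension. The BSD date that ships with macOS does not accept it. This bash sketch builds the same user-YYYY-MM-DD caller reference with the portable +%Y-%m-%d format. The function names are hypothetical. Route 53 treats a reused caller reference as a duplicate request, so a second attempt by the same user on the same day needs a different value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: portable replacement for $(whoami)-$(date --rfc-3339=date).
route53_caller_reference() {
  printf '%s-%s\n' "$(whoami)" "$(date +%Y-%m-%d)"
}

# Hypothetical helper: create the public zone for a base domain.
create_public_zone() {
  local base_domain="$1"
  aws route53 create-hosted-zone \
    --name "$base_domain" \
    --caller-reference "$(route53_caller_reference)"
}

# Usage: create_public_zone <basedomain>
```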

4.1.5. Creating an AWS IAM role and STS credentials

Before creating a hosted cluster on Amazon Web Services (AWS), you must create an AWS IAM role and STS credentials.

Procedure

Get the Amazon Resource Name (ARN) of your user by running the following command:

$ aws sts get-caller-identity --query "Arn" --output text

Example output

arn:aws:iam::1234567890:user/<aws_username>

Use this output as the value for <arn> in the next step.

Create a JSON file that contains the trust relationship configuration for your role. In the file, replace <arn> with the ARN of your user that you noted in the previous step.

Create the Identity and Access Management (IAM) role by running the following command:

$ aws iam create-role \
  --role-name <name> \
  --assume-role-policy-document file://<file_name>.json \
  --query "Role.Arn"

Replace <name> with the name of the role, and replace <file_name> with the name of the trust relationship file that you created in the previous step.

Example output

arn:aws:iam::820196288204:role/myrole

Create a JSON file named policy.json that contains the permission policies for your role.

Attach the policy.json file to your role by running the following command:

$ aws iam put-role-policy \
  --role-name <role_name> \
  --policy-name <policy_name> \
  --policy-document file://policy.json

Retrieve the STS credentials in a JSON file named sts-creds.json by running the following command:

$ aws sts get-session-token --output json > sts-creds.json

The sts-creds.json file contains a Credentials object with the temporary AccessKeyId, SecretAccessKey, SessionToken, and Expiration values.
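The trust relationship file for the role can be generated from the ARN that aws sts get-caller-identity returns. This bash sketch assumes that only that user needs to assume the role, so the policy allows sts:AssumeRole for that principal alone. The function name and the trust-policy.json file name are hypothetical. The ARN check also accepts other AWS partitions, such as arn:aws-cn.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a trust policy that lets one IAM user assume the role.
write_trust_policy() {
  local user_arn="$1" out="${2:-trust-policy.json}"
  case "$user_arn" in
    arn:aws*:iam::*) ;;
    *) echo "not an IAM ARN: $user_arn" >&2; return 1 ;;
  esac
  cat > "$out" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "${user_arn}"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Usage:
#   write_trust_policy "$(aws sts get-caller-identity --query Arn --output text)"
#   aws iam create-role --role-name <name> \
#     --assume-role-policy-document file://trust-policy.json --query "Role.Arn"
```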

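4.1.6. Enabling AWS PrivateLink for hosted control planes

To provision hosted control planes on Amazon Web Services (AWS) with PrivateLink, enable AWS PrivateLink for hosted control planes.

Procedure

- Create an AWS credential secret for the HyperShift Operator and name it hypershift-operator-private-link-credentials. The secret must reside in the namespace of the managed cluster that is used as the management cluster. If you used local-cluster, create the secret in the local-cluster namespace.
- See the following table to confirm that the secret contains the required fields:

| Field name | Description | Optional or required |
|---|---|---|
| region | Region for use with Private Link | Required |
| aws-access-key-id | The credential access key ID. | Required |
| aws-secret-access-key | The credential access key secret. | Required |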

To create an AWS secret, run the following command:

$ oc create secret generic <secret_name> \
  --from-literal=aws-access-key-id=<aws_access_key_id> \
  --from-literal=aws-secret-access-key=<aws_secret_access_key> \
  --from-literal=region=<region> -n local-cluster

Disaster recovery backup for the secret is not automatically enabled. Run the following command to add a label that enables the hypershift-operator-private-link-credentials secret to be backed up for disaster recovery:

$ oc label secret hypershift-operator-private-link-credentials \
  -n local-cluster \
  cluster.open-cluster-management.io/backup=""

4.1.7. Enabling external DNS for hosted control planes on AWS

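The control plane and the data plane are separate in hosted control planes. You can configure DNS in two independent areas:

- Ingress for workloads within the hosted cluster, such as the following domain: *.apps.service-consumer-domain.com.
- Ingress for service endpoints within the management cluster, such as API or OAuth endpoints through the service provider domain: service-provider-domain.com.

The input for hostedCluster.spec.dns manages the ingress for workloads within the hosted cluster. The input for hostedCluster.spec.services.servicePublishingStrategy.route.hostname manages the ingress for service endpoints within the management cluster.

External DNS creates name records for hosted cluster Services that specify a publishing type of LoadBalancer or Route and provide a hostname for that publishing type. For hosted clusters with Private or PublicAndPrivate endpoint access types, only the APIServer and OAuth services support hostnames. For Private hosted clusters, the DNS record resolves to a private IP address of a Virtual Private Cloud (VPC) endpoint in your VPC.

A hosted control plane exposes the following services:

- APIServer
- OIDC

You can expose these services by using the servicePublishingStrategy field in the HostedCluster specification. By default, for the LoadBalancer and Route types of servicePublishingStrategy, you can publish the service in one of the following ways:

- By using the hostname of the load balancer that is in the status of the Service with the LoadBalancer type.
- By using the status.host field of the Route resource.

However, when you deploy hosted control planes in a managed service context, those methods can expose the ingress subdomain of the underlying management cluster and limit options for the management cluster lifecycle and disaster recovery.

When a DNS indirection is layered on the LoadBalancer and Route publishing types, a managed service operator can publish all public hosted cluster services by using a service-level domain. This architecture allows remapping the DNS name to a new LoadBalancer or Route without exposing the ingress domain of the management cluster. Hosted control planes use external DNS to achieve that indirection layer.

You can deploy external-dns along with the HyperShift Operator in the hypershift namespace of the management cluster. External DNS watches for Services or Routes that have the external-dns.alpha.kubernetes.io/hostname annotation. That annotation is used to create a DNS record that points to the Service, such as an A record, or to the Route, such as a CNAME record.

You can use external DNS on cloud environments only. For the other environments, you need to manually configure DNS and services.

For more information about external DNS, see external DNS.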

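4.1.7.1. Prerequisites

Before you can set up external DNS for hosted control planes on Amazon Web Services (AWS), you must meet the following prerequisites:

- You created an external public domain.
- You have access to the AWS Route53 Management console.
- You enabled AWS PrivateLink for hosted control planes.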

4.1.7.2. Setting up external DNS for hosted control planes

You can provision hosted control planes with external DNS or service-level DNS.

- Create an Amazon Web Services (AWS) credential secret for the HyperShift Operator and name it hypershift-operator-external-dns-credentials in the local-cluster namespace.
- See the following table to verify that the secret has the required fields:

Table 4.3. Required fields for the AWS secret

| Field name | Description | Optional or required |
|---|---|---|
| provider | The DNS provider that manages the service-level DNS zone. | Required |
| domain-filter | The service-level domain. | Required |
| credentials | The credential file that supports all external DNS types. | Optional when you use AWS keys |
| aws-access-key-id | The credential access key ID. | Optional when you use the AWS DNS service |
| aws-secret-access-key | The credential access key secret. | Optional when you use the AWS DNS service |

To create an AWS secret, run the following command:

$ oc create secret generic <secret_name> \
  --from-literal=provider=aws \
  --from-literal=domain-filter=<domain_name> \
  --from-file=credentials=<path_to_aws_credentials_file> -n local-cluster

Note: Disaster recovery backup for the secret is not automatically enabled. To back up the secret for disaster recovery, add the backup label to the hypershift-operator-external-dns-credentials secret by entering the following command:

$ oc label secret hypershift-operator-external-dns-credentials \
  -n local-cluster \
  cluster.open-cluster-management.io/backup=""

4.1.7.3. Creating the public DNS hosted zone

The External DNS Operator uses the public DNS hosted zone to create your public hosted cluster.

You can create the public DNS hosted zone to use as the external DNS domain-filter. Complete the following steps in the AWS Route 53 management console.

Procedure

- In the Route 53 management console, click Create hosted zone.
- On the Hosted zone configuration page, type a domain name, verify that Public hosted zone is selected as the type, and click Create hosted zone.
- After the zone is created, on the Records tab, note the values in the Value/Route traffic to column.
- In the main domain, create an NS record to redirect the DNS requests to the delegated zone. In the Value field, enter the values that you noted in the previous step.
- Click Create records.

Verify that the DNS hosted zone is working by creating a test entry in the new subzone and testing it with a dig command, such as in the following example:

$ dig +short test.user-dest-public.aws.kerberos.com

Example output

192.168.1.1

To create a hosted cluster that sets the hostname for the LoadBalancer and Route services, enter the following command:

$ hcp create cluster aws --name=<hosted_cluster_name> \
  --endpoint-access=PublicAndPrivate \
  --external-dns-domain=<public_hosted_zone> ...

Replace <public_hosted_zone> with the public hosted zone that you created.
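Before you create the test entry, you can check the delegation itself. This bash sketch compares the NS records that public DNS returns for the new zone with the name servers listed in the Value/Route traffic to column. The function name check_delegation is hypothetical, and so are the name servers in the usage line. Trailing dots are stripped on both sides before the exact-match comparison.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_delegation <zone> <expected_ns>...
check_delegation() {
  local zone="$1"
  shift
  local actual ns
  # dig prints fully qualified names with a trailing dot; drop it.
  actual="$(dig +short NS "$zone" | sed 's/\.$//' | sort)"
  if [ -z "$actual" ]; then
    echo "no NS records for $zone" >&2
    return 1
  fi
  for ns in "$@"; do
    if ! printf '%s\n' "$actual" | grep -qxF "${ns%.}"; then
      echo "missing delegation to $ns" >&2
      return 1
    fi
  done
}

# Usage: check_delegation user-dest-public.aws.kerberos.com ns-1.awsdns-01.org ns-2.awsdns-02.com
```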

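The Control Plane Operator creates the Services and Routes resources and annotates them with the external-dns.alpha.kubernetes.io/hostname annotation. For Services and Routes, the Control Plane Operator uses the value of the hostname parameter in the servicePublishingStrategy field for the service endpoints. To create the DNS records, you can use a mechanism such as the external-dns deployment.

You can configure service-level DNS indirection for public services only. You cannot set a hostname for private services because they use the hypershift.local private zone.

The following table shows when it is valid to set a hostname for a service and endpoint combination:

| Service | Public | PublicAndPrivate | Private |
|---|---|---|---|
| APIServer | Y | Y | N |
| OAuthServer | Y | Y | N |
| Konnectivity | Y | N | N |
| Ignition | Y | N | N |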

4.1.7.4. Creating a hosted cluster by using the external DNS on AWS

To create a hosted cluster by using the PublicAndPrivate or Public publishing strategy on Amazon Web Services (AWS), you must have the following artifacts configured in your management cluster:

- The public DNS hosted zone
- The External DNS Operator
- The HyperShift Operator

You can deploy a hosted cluster by using the hcp command-line interface (CLI).

Procedure

To access your management cluster, enter the following command:

$ export KUBECONFIG=<path_to_management_cluster_kubeconfig>

Verify that the External DNS Operator is running by entering the following command:

$ oc get pod -n hypershift -lapp=external-dns

Example output

NAME                            READY   STATUS    RESTARTS   AGE
external-dns-7c89788c69-rn8gp   1/1     Running   0          40s

To create a hosted cluster by using external DNS, run the hcp create cluster aws command with the following values:

- The Amazon Resource Name (ARN), for example, arn:aws:iam::820196288204:role/myrole.
- The instance type, for example, m6i.xlarge.
- The AWS region, for example, us-east-1.
- The hosted cluster name, for example, my-external-aws.
- The public hosted zone that the service consumer owns, for example, service-consumer-domain.com.
- The node replica count, for example, 2.
- The path to your pull secret file.
- The OpenShift Container Platform version that you want to use, for example, 4.17.0-multi.
- The public hosted zone that the service provider owns, for example, service-provider-domain.com.
- The endpoint access, set to PublicAndPrivate. You can use external DNS with Public or PublicAndPrivate configurations only.
- The path to your AWS STS credentials file, for example, /home/user/sts-creds/sts-creds.json.
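The values listed above map onto hcp create cluster aws flags. This bash sketch assembles them from environment variables and refuses to run while any required value is empty. Only --name, --endpoint-access, and --external-dns-domain appear elsewhere in this section. The other flag names (--role-arn, --instance-type, --region, --base-domain, --node-pool-replicas, --pull-secret, --release-image, --sts-creds) are assumptions, so confirm them with hcp create cluster aws --help. The variable and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run hcp create cluster aws for the external DNS case.
hcp_create_external_dns_cluster() {
  local var
  for var in ROLE_ARN REGION CLUSTER_NAME SERVICE_CONSUMER_DOMAIN PULL_SECRET \
             RELEASE_IMAGE SERVICE_PROVIDER_DOMAIN STS_CREDS; do
    # ${!var} is bash indirect expansion: the value of the variable named $var.
    if [ -z "${!var:-}" ]; then
      echo "set $var first" >&2
      return 1
    fi
  done
  local -a args=(
    create cluster aws
    --role-arn "$ROLE_ARN"
    --instance-type "${INSTANCE_TYPE:-m6i.xlarge}"
    --region "$REGION"
    --name "$CLUSTER_NAME"
    --base-domain "$SERVICE_CONSUMER_DOMAIN"
    --node-pool-replicas "${NODE_REPLICAS:-2}"
    --pull-secret "$PULL_SECRET"
    --release-image "$RELEASE_IMAGE"
    --external-dns-domain="$SERVICE_PROVIDER_DOMAIN"
    --endpoint-access=PublicAndPrivate
    --sts-creds "$STS_CREDS"
  )
  hcp "${args[@]}"
}
```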

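4.1.8. Creating a hosted cluster on AWS

You can create a hosted cluster on Amazon Web Services (AWS) by using the hcp command-line interface (CLI).

By default, hosted control planes on Amazon Web Services (AWS) use an AMD64 hosted cluster. However, you can enable hosted control planes to run on an ARM64 hosted cluster. For more information, see "Running hosted clusters on an ARM64 architecture".

For compatible combinations of node pools and hosted clusters, see the following table:

| Hosted cluster | Node pools |
|---|---|
| AMD64 | AMD64 or ARM64 |
| ARM64 | ARM64 or AMD64 |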

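Prerequisites

- You have set up the hosted control plane CLI, hcp.
- You have enabled the local-cluster managed cluster as the management cluster.
- You created an AWS Identity and Access Management (IAM) role and AWS Security Token Service (STS) credentials.

Procedure

To create a hosted cluster on AWS, run the hcp create cluster aws command with the following values:

- The name of your hosted cluster, for example, example.
- Your infrastructure name. You must provide the same value for <hosted_cluster_name> and <infra_id>. Otherwise, the cluster might not appear correctly in the multicluster engine for Kubernetes Operator console.
- Your base domain, for example, example.com.
- The path to your AWS STS credentials file, for example, /home/user/sts-creds/sts-creds.json.
- The path to your pull secret, for example, /user/name/pullsecret.
- The AWS region name, for example, us-east-1.
- The node pool replica count, for example, 3.
- Optionally, a namespace. By default, all HostedCluster and NodePool custom resources are created in the clusters namespace. You can use the --namespace <namespace> parameter to create the HostedCluster and NodePool custom resources in a specific namespace.
- The Amazon Resource Name (ARN), for example, arn:aws:iam::820196288204:role/myrole.
- Optionally, the --render-into flag, if you want to indicate whether the EC2 instance runs on shared or single-tenant hardware. The --render-into flag renders the Kubernetes resources into the YAML file that you specify. Then, continue to the next step to edit the YAML file.

If you included the --render-into flag in the previous command, edit the specified YAML file. In the NodePool specification of the YAML file, indicate whether the EC2 instance should run on shared or single-tenant hardware.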

Verification

Verify the status of your hosted cluster to check that the value of AVAILABLE is True. Run the following command:

$ oc get hostedclusters -n <hosted_cluster_namespace>

Get a list of your node pools by running the following command:

$ oc get nodepools --namespace <hosted_cluster_namespace>
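Instead of re-running oc get until AVAILABLE turns True, you can block on the condition with oc wait. This bash sketch assumes that the HostedCluster exposes a condition named Available, which is what the AVAILABLE column reflects. The 30-minute default timeout is an arbitrary assumption, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for a hosted cluster, then list its node pools.
wait_for_hosted_cluster() {
  local name="$1" namespace="$2" timeout="${3:-30m}"
  oc wait "hostedcluster/$name" -n "$namespace" \
    --for=condition=Available --timeout="$timeout" || return 1
  oc get nodepools -n "$namespace"
}

# Usage: wait_for_hosted_cluster <hosted_cluster_name> <hosted_cluster_namespace>
```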

4.1.8.1. Accessing a hosted cluster on AWS

You can access the hosted cluster by getting the kubeconfig file and kubeadmin credential directly from resources.

You must be familiar with the access secrets for hosted clusters. The hosted cluster namespace contains hosted cluster resources, and the hosted control plane namespace is where the hosted control plane runs. The secret name formats are as follows:

- kubeconfig secret: <hosted_cluster_namespace>-<name>-admin-kubeconfig. For example, clusters-hypershift-demo-admin-kubeconfig.
- kubeadmin password secret: <hosted_cluster_namespace>-<name>-kubeadmin-password. For example, clusters-hypershift-demo-kubeadmin-password.

Procedure

The kubeconfig secret contains a Base64-encoded kubeconfig field, which you can decode and save into a file to use with the following command:

$ oc --kubeconfig <hosted_cluster_name>.kubeconfig get nodes

The kubeadmin password secret is also Base64-encoded. You can decode it and use the password to log in to the API server or console of the hosted cluster.
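The decode step above can be done in one pipeline: read the kubeconfig field with JSONPath, then pipe it through base64 -d. This bash sketch builds the secret name from the format listed above. The namespace that holds the secret is passed in separately, because the format gives only the secret name. The function name is hypothetical. base64 -d is the GNU spelling, and recent macOS releases accept it as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: save_hosted_kubeconfig <secret_namespace> <hosted_cluster_namespace> <name>
save_hosted_kubeconfig() {
  local secret_ns="$1" hc_ns="$2" name="$3"
  local secret="${hc_ns}-${name}-admin-kubeconfig"
  local encoded
  encoded="$(oc get secret "$secret" -n "$secret_ns" -o jsonpath='{.data.kubeconfig}')" || return 1
  if [ -z "$encoded" ]; then
    echo "secret $secret has no kubeconfig key" >&2
    return 1
  fi
  # Secret data is Base64-encoded; decode it into <name>.kubeconfig.
  printf '%s' "$encoded" | base64 -d > "${name}.kubeconfig"
}

# Usage:
#   save_hosted_kubeconfig <secret_namespace> clusters hypershift-demo
#   oc --kubeconfig hypershift-demo.kubeconfig get nodes
```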

4.1.8.2. Accessing the hosted cluster on AWS by using the kubeadmin credentials

After creating a hosted cluster on Amazon Web Services (AWS), you can access it by getting the kubeconfig file, the access secrets, and the kubeadmin credentials.

The hosted cluster namespace contains the hosted cluster resources and the access secrets. The hosted control plane runs in the hosted control plane namespace.

The secret name formats are as follows:

- kubeconfig secret: <hosted_cluster_namespace>-<name>-admin-kubeconfig. For example, clusters-hypershift-demo-admin-kubeconfig.
- kubeadmin password secret: <hosted_cluster_namespace>-<name>-kubeadmin-password. For example, clusters-hypershift-demo-kubeadmin-password.

The kubeadmin password secret is Base64-encoded, and the kubeconfig secret contains a Base64-encoded kubeconfig configuration. You must decode the Base64-encoded kubeconfig configuration and save it into a <hosted_cluster_name>.kubeconfig file.

Procedure

Use your <hosted_cluster_name>.kubeconfig file that contains the decoded kubeconfig configuration to access the hosted cluster. Enter the following command:

$ oc --kubeconfig <hosted_cluster_name>.kubeconfig get nodes

You must decode the kubeadmin password secret to log in to the API server or the console of the hosted cluster.

4.1.8.3. Accessing the hosted cluster on AWS by using the hcp CLI

You can access the hosted cluster by using the hcp command-line interface (CLI).

Procedure

Generate the kubeconfig file by entering the following command:

$ hcp create kubeconfig --namespace <hosted_cluster_namespace> \
  --name <hosted_cluster_name> > <hosted_cluster_name>.kubeconfig

After you save the kubeconfig file, access the hosted cluster by entering the following command:

$ oc --kubeconfig <hosted_cluster_name>.kubeconfig get nodes

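4.1.9. Configuring a custom API server certificate in a hosted cluster

To configure a custom certificate for the API server, specify the certificate details in the spec.configuration.apiServer section of your HostedCluster configuration.

You can configure a custom certificate during either day-1 or day-2 operations. However, because the service publishing strategy is immutable after you set it during hosted cluster creation, you must know the hostname of the Kubernetes API server that you plan to configure.

Prerequisites

- You created a Kubernetes secret that contains your custom certificate in the management cluster. The secret contains the following keys:
  - tls.crt: The certificate
  - tls.key: The private key
- If your HostedCluster configuration includes a service publishing strategy that uses a load balancer, ensure that the Subject Alternative Names (SANs) of the certificate do not conflict with the internal API endpoint (api-int). The internal API endpoint is automatically created and managed by your platform. If you use the same hostname in both the custom certificate and the internal API endpoint, routing conflicts can occur. The only exception to this rule is when you use AWS as the provider with either Private or PublicAndPrivate configurations. In those cases, the platform manages the SAN conflict.
- The certificate must be valid for the external API endpoint.
- The validity period of the certificate aligns with the expected life cycle of your cluster.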

Procedure

Create a secret with your custom certificate by entering the following command:

$ oc create secret tls sample-hosted-kas-custom-cert \
  --cert=path/to/cert.crt \
  --key=path/to/key.key \
  -n <hosted_cluster_namespace>

Update your HostedCluster configuration with your custom certificate details in the spec.configuration.apiServer section.

Apply the changes to your HostedCluster configuration by entering the following command:

$ oc apply -f <hosted_cluster_config>.yaml
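As an alternative to editing and re-applying the whole file, the certificate reference can be merged in with oc patch. This bash sketch assumes that spec.configuration.apiServer follows the OpenShift APIServer configuration schema, where servingCerts.namedCertificates pairs a list of host names with a TLS secret. The host name is a placeholder, the secret name matches the one created above, and the function names are hypothetical. A merge patch replaces any existing namedCertificates list.

```shell
#!/usr/bin/env bash
# Hypothetical helper: JSON merge patch that binds one host name to a TLS secret.
api_cert_patch() {
  local hostname="$1" secret="$2"
  printf '{"spec":{"configuration":{"apiServer":{"servingCerts":{"namedCertificates":[{"names":["%s"],"servingCertificate":{"name":"%s"}}]}}}}}' \
    "$hostname" "$secret"
}

# Hypothetical helper: apply the patch to a HostedCluster.
apply_api_cert() {
  local hc_name="$1" hc_ns="$2" hostname="$3" secret="${4:-sample-hosted-kas-custom-cert}"
  oc patch hostedcluster "$hc_name" -n "$hc_ns" --type=merge \
    -p "$(api_cert_patch "$hostname" "$secret")"
}

# Usage: apply_api_cert <hosted_cluster_name> <hosted_cluster_namespace> <api_hostname>
```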

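Verification

- Check the API server pods to ensure that the new certificate is mounted.
- Test the connection to the API server by using the custom domain name.
- Verify the certificate details in your browser or by using tools such as openssl.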

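4.1.10. Creating a hosted cluster in multiple zones on AWS

You can create a hosted cluster in multiple zones on Amazon Web Services (AWS) by using the hcp command-line interface (CLI).

Prerequisites

- You created an AWS Identity and Access Management (IAM) role and AWS Security Token Service (STS) credentials.

Procedure

Create a hosted cluster in multiple zones on AWS by running the hcp create cluster aws command with the following values:

- The name of your hosted cluster, for example, example.
- The node pool replica count, for example, 2.
- Your base domain, for example, example.com.
- The path to your pull secret, for example, /user/name/pullsecret.
- The Amazon Resource Name (ARN), for example, arn:aws:iam::820196288204:role/myrole.
- The AWS region name, for example, us-east-1.
- The availability zones within your AWS region, for example, us-east-1a and us-east-1b.
- The path to your AWS STS credentials file, for example, /home/user/sts-creds/sts-creds.json.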
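A mistyped zone from another region is easy to miss in the values above. This bash sketch checks each zone against the region before it joins them into a single comma-separated argument. Two points are assumptions: that the flag is spelled --zones and that it accepts a comma-separated list, so confirm both with hcp create cluster aws --help. The prefix test is a heuristic, because standard AWS zone names are the region name plus one letter, and it is not an API lookup. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: zones_flag <region> <zone>...
zones_flag() {
  local region="$1"
  shift
  local zone joined=""
  if [ "$#" -eq 0 ]; then
    echo "no zones given" >&2
    return 1
  fi
  for zone in "$@"; do
    # Accept only "<region>" followed by exactly one character, such as us-east-1a.
    case "$zone" in
      "$region"?) ;;
      *) echo "$zone is not in $region" >&2; return 1 ;;
    esac
    joined="${joined:+$joined,}$zone"
  done
  printf -- '--zones=%s\n' "$joined"
}

# Usage: zones_flag us-east-1 us-east-1a us-east-1b
```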

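For each specified zone, the following infrastructure is created:

- Public subnet
- Private subnet
- NAT gateway
- Private route table

A public route table is shared across public subnets.

One NodePool resource is created for each zone. The node pool name is suffixed by the zone name. The private subnet for the zone is set in spec.platform.aws.subnet.id.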

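4.1.10.1. Creating a hosted cluster by providing AWS STS credentials

When you create a hosted cluster by using the hcp create cluster aws command, you must provide Amazon Web Services (AWS) account credentials that can create infrastructure resources for your hosted cluster.

Infrastructure resources include the following examples:

- Virtual Private Cloud (VPC)
- Subnets
- Network address translation (NAT) gateways

You can provide the AWS credentials by using either of the following methods:

- The AWS Security Token Service (STS) credentials
- The AWS cloud provider secret from multicluster engine Operator

Procedure

To create a hosted cluster on AWS by providing AWS STS credentials, run the hcp create cluster aws command and specify the path to your AWS STS credentials file.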

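4.1.11. Running hosted clusters on an ARM64 architecture

By default, hosted control planes on Amazon Web Services (AWS) use an AMD64 hosted cluster. However, you can enable hosted control planes to run on an ARM64 hosted cluster.

For compatible combinations of node pools and hosted clusters, see the following table:

| Hosted cluster | Node pools |
|---|---|
| AMD64 | AMD64 or ARM64 |
| ARM64 | ARM64 or AMD64 |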

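4.1.11.1. Creating a hosted cluster on an ARM64 OpenShift Container Platform cluster

You can run a hosted cluster on an ARM64 OpenShift Container Platform cluster for Amazon Web Services (AWS) by overriding the default release image with a multi-architecture release image.

If you do not use a multi-architecture release image, the compute nodes in the node pool are not created, and reconciliation of the node pool stops until you either use a multi-architecture release image in the hosted cluster or update the NodePool custom resource based on the release image.

Prerequisites

- You must have an OpenShift Container Platform cluster with a 64-bit ARM infrastructure that is installed on AWS. For more information, see Create an OpenShift Container Platform Cluster: AWS (ARM).
- You must create an AWS Identity and Access Management (IAM) role and AWS Security Token Service (STS) credentials. For more information, see "Creating an AWS IAM role and STS credentials".

Procedure

Create a hosted cluster on an ARM64 OpenShift Container Platform cluster by running the hcp create cluster aws command with the following values:

- The name of your hosted cluster, for example, example.
- The node pool replica count, for example, 3.
- Your base domain, for example, example.com.
- The path to your pull secret, for example, /user/name/pullsecret.
- The path to your AWS STS credentials file, for example, /home/user/sts-creds/sts-creds.json.
- The AWS region name, for example, us-east-1.
- The OpenShift Container Platform version that you want to use, for example, 4.17.0-multi. If you are using a disconnected environment, replace <ocp_release_image> with the digest image. To extract the OpenShift Container Platform release image digest, see "Extracting the OpenShift Container Platform release image digest".
- The Amazon Resource Name (ARN), for example, arn:aws:iam::820196288204:role/myrole.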
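Because a single-architecture release image silently stalls node pool reconciliation, a pre-flight check on the image reference can save a wait. This bash sketch is a heuristic. It assumes that tag-based multi-architecture references end in -multi, as in the 4.17.0-multi example. It cannot judge digest references (used in disconnected environments) from their names, so it lets them through with a warning. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: require_multi_arch_image <release_image_reference>
require_multi_arch_image() {
  local image="$1"
  case "$image" in
    *@sha256:*)
      echo "warning: cannot verify the architecture of $image from its name" >&2
      return 0 ;;
    *:*-multi)
      return 0 ;;
    *)
      echo "$image does not look like a multi-architecture release image" >&2
      return 1 ;;
  esac
}

# Usage: require_multi_arch_image quay.io/openshift-release-dev/ocp-release:4.17.0-multi
```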

4.1.11.2. Creating an ARM or AMD NodePool object on AWS hosted clusters

You can schedule application workloads, which are the NodePool objects, on 64-bit ARM and AMD from the same hosted control plane. You can define the arch field in the NodePool specification to set the required processor architecture for the NodePool object. The valid values for the arch field are as follows:

- arm64
- amd64

Prerequisites

- You must have a multi-architecture image for the HostedCluster custom resource to use. You can access multi-architecture nightly images.

Procedure

Add an ARM or AMD NodePool object to the hosted cluster on AWS by running the following command:

$ hcp create nodepool aws \
  --cluster-name <hosted_cluster_name> \
  --name <node_pool_name> \
  --node-count <node_pool_replica_count> \
  --arch <architecture>

Replace <hosted_cluster_name> with the name of your hosted cluster, <node_pool_name> with the name of the node pool, <node_pool_replica_count> with the number of nodes, and <architecture> with arm64 or amd64.
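To run both architectures from the same hosted control plane, you can run the command above once per architecture. This bash sketch uses only the flags shown in the command. Deriving each pool name from the cluster name plus the architecture is an arbitrary naming assumption, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add_arch_nodepools <hosted_cluster_name> [replicas]
add_arch_nodepools() {
  local cluster="$1" replicas="${2:-2}" arch
  for arch in arm64 amd64; do
    hcp create nodepool aws \
      --cluster-name "$cluster" \
      --name "${cluster}-${arch}" \
      --node-count "$replicas" \
      --arch "$arch" || return 1
  done
}

# Usage: add_arch_nodepools example 3
```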

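4.1.12. Creating a private hosted cluster on AWS

After you enable the local-cluster as the hosting cluster, you can deploy a hosted cluster or a private hosted cluster on Amazon Web Services (AWS).

By default, hosted clusters are publicly accessible through public DNS and the default router for the management cluster.

For private clusters on AWS, all communication with the hosted cluster occurs over AWS PrivateLink.

Prerequisites

- You enabled AWS PrivateLink. For more information, see "Enabling AWS PrivateLink".
- You created an AWS Identity and Access Management (IAM) role and AWS Security Token Service (STS) credentials. For more information, see "Creating an AWS IAM role and STS credentials" and "Identity and Access Management (IAM) permissions".
- You configured a bastion instance on AWS.

Procedure

Create a private hosted cluster on AWS by running the hcp create cluster aws command with the following values:

- The name of your hosted cluster, for example, example.
- The node pool replica count, for example, 3.
- Your base domain, for example, example.com.
- The path to your pull secret, for example, /user/name/pullsecret.
- The path to your AWS STS credentials file, for example, /home/user/sts-creds/sts-creds.json.
- The AWS region name, for example, us-east-1.
- Whether the cluster is public or private.
- The Amazon Resource Name (ARN), for example, arn:aws:iam::820196288204:role/myrole. For more information about ARN roles, see "Identity and Access Management (IAM) permissions".

The following API endpoints for the hosted cluster are accessible through a private DNS zone:

- api.<hosted_cluster_name>.hypershift.local
- *.apps.<hosted_cluster_name>.hypershift.local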
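Because the private endpoints resolve only inside the VPC, a quick check from the bastion instance confirms both the private zone and the PrivateLink path. This bash sketch assumes that the API listens on port 6443, which is the default APIServer port elsewhere in this document; adjust it if your setup differs. curl returns non-zero only when the connection or TLS handshake fails. The HTTP status is not checked, so an authorization error still counts as reachable. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run on the bastion; check_private_api <hosted_cluster_name>
check_private_api() {
  local name="$1" host ip
  host="api.${name}.hypershift.local"
  ip="$(dig +short "$host" | head -n1)"
  if [ -z "$ip" ]; then
    echo "$host does not resolve; run this from inside the VPC" >&2
    return 1
  fi
  echo "$host -> $ip"
  # -k skips certificate verification; this is a reachability test only.
  curl -sk --max-time 10 "https://${host}:6443/version" >/dev/null
}
```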

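4.2. Deploying hosted control planes on bare metal

You can deploy hosted control planes by configuring a cluster to function as a management cluster. The management cluster is the OpenShift Container Platform cluster where the control planes are hosted. In some contexts, the management cluster is also known as the hosting cluster.

The management cluster is not the same thing as the managed cluster. A managed cluster is a cluster that the hub cluster manages.

The hosted control planes feature is enabled by default.

The multicluster engine Operator supports only the default local-cluster, which is a managed hub cluster, and the hub cluster as the management cluster. If you have Red Hat Advanced Cluster Management installed, you can use the managed hub cluster, also known as the local-cluster, as the management cluster.

A hosted cluster is an OpenShift Container Platform cluster with its API endpoint and control plane that are hosted on the management cluster. The hosted cluster includes the control plane and its corresponding data plane. You can use the multicluster engine Operator console or the hosted control plane command-line interface (CLI), hcp, to create a hosted cluster.

The hosted cluster is automatically imported as a managed cluster. If you want to disable this automatic import feature, see "Disabling the automatic import of hosted clusters into multicluster engine Operator".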

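4.2.1. Preparing to deploy hosted control planes on bare metal

As you prepare to deploy hosted control planes on bare metal, consider the following information:

- Run the management cluster and workers on the same platform for hosted control planes.
- All bare-metal hosts require a manual start with a Discovery Image ISO that the central infrastructure management provides. You can start the hosts manually or through automation by using Cluster-Baremetal-Operator. After each host starts, it runs an Agent process to discover the host details and complete the installation. An Agent custom resource represents each host.
- When you configure storage for hosted control planes, consider the recommended etcd practices. To ensure that you meet the latency requirements, dedicate a fast storage device to all hosted control plane etcd instances that run on each control-plane node. You can use LVM storage to configure a local storage class for hosted etcd pods. For more information, see "Recommended etcd practices" and "Persistent storage using logical volume manager storage".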

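4.2.1.1. Prerequisites to configure a management cluster

- You need the multicluster engine for Kubernetes Operator 2.2 and later installed on an OpenShift Container Platform cluster. You can install multicluster engine Operator as an Operator from the OpenShift Container Platform OperatorHub.
- The multicluster engine Operator must have at least one managed OpenShift Container Platform cluster. The local-cluster is automatically imported in multicluster engine Operator 2.2 and later. For more information about the local-cluster, see Advanced configuration in the Red Hat Advanced Cluster Management documentation. You can check the status of your hub cluster by running the following command:

$ oc get managedclusters local-cluster

- You must add the topology.kubernetes.io/zone label to the bare-metal hosts on your management cluster. Ensure that each host has a unique value for topology.kubernetes.io/zone. Otherwise, all of the hosted control plane pods are scheduled on a single node, causing a single point of failure.
- To provision hosted control planes on bare metal, you can use the Agent platform. The Agent platform uses the central infrastructure management service to add worker nodes to a hosted cluster. For more information, see Enabling the central infrastructure management service.
- You need to install the hosted control plane command-line interface.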

4.2.1.2. 裸机防火墙、端口和服务要求

您必须满足防火墙、端口和服务要求,以便端口可以在管理集群、control plane 和托管集群之间进行通信。

服务在其默认端口上运行。但是,如果您使用 NodePort 发布策略,服务在由 NodePort 服务分配的端口上运行。

使用防火墙规则、安全组或其他访问控制来仅限制对所需源的访问。除非需要,否则请避免公开公开端口。对于生产环境部署,请使用负载均衡器来简化通过单个 IP 地址的访问。

如果您的 hub 集群有代理配置,请通过将所有托管集群 API 端点添加到 Proxy 对象的 noProxy 字段来确保它可以访问托管集群 API 端点。如需更多信息,请参阅"配置集群范围代理"。

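Hosted control planes expose the following services on bare metal:

APIServer
- The APIServer service runs on port 6443 by default and requires ingress access for communication between the control plane components.
- If you use MetalLB load balancing, allow ingress access to the IP range that is used for load balancer IP addresses.

OAuthServer
- The OAuthServer service runs on port 443 by default when you use the route and ingress to expose the service.
- If you use the NodePort publishing strategy, use a firewall rule for the OAuthServer service.

Konnectivity
- The Konnectivity service runs on port 443 by default when you use the route and ingress to expose the service.
- The Konnectivity agent establishes a reverse tunnel that allows the control plane to access the network of the hosted cluster. The agent uses egress to connect to the Konnectivity server. The server is exposed either by a route on port 443 or by a manually assigned NodePort.
- If the cluster API server address is an internal IP address, allow access from the workload subnets to the IP address on port 6443.
- If the address is an external IP address, allow egress on port 6443 from the nodes to that external IP address.

Ignition
- The Ignition service runs on port 443 by default when you use the route and ingress to expose the service.
- If you use the NodePort publishing strategy, use a firewall rule for the Ignition service.

The following services are not required on bare metal:

- OVNSbDb
- OIDC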
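To confirm that the firewall rules allow this traffic, you can test a TCP connection from the relevant host. Examples include egress from the nodes to the API server on port 6443, or to the routes on port 443. This is a minimal sketch. It assumes that bash with /dev/tcp support and the coreutils timeout command are available on that host. check_port is a hypothetical helper. A successful connection shows only that the port is open, not that the service behind it is healthy.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if a TCP connection to host:port opens
# within the given number of seconds (default 3).
# Requires bash built with /dev/tcp support and the coreutils "timeout" command.

check_port() {
  local host="$1" port="$2" seconds="${3:-3}"
  # A child bash opens the socket on file descriptor 3; a refused or
  # timed-out connection makes it exit with a non-zero status.
  timeout "$seconds" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Usage (host names are placeholders):
#   check_port api.example.krnl.es 6443 && echo "API server port reachable"
#   check_port <route_host> 443 || echo "port 443 not reachable"
```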

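4.2.1.3. Bare metal infrastructure requirements

The Agent platform does not create any infrastructure, but it has the following infrastructure requirements:

- Agents: An Agent represents a host that is booted with a discovery image and is ready to be provisioned as an OpenShift Container Platform node.

- DNS: The API and ingress endpoints must be routable.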

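4.2.2. DNS configuration on bare metal

The API server for the hosted cluster is exposed as a NodePort service. A DNS entry must exist for api.<hosted_cluster_name>.<base_domain> that points to a destination from which the API server can be reached.

The DNS entry can be as simple as a record that points to one of the nodes in the management cluster that runs the hosted control plane. The entry can also point to a load balancer that is deployed to redirect incoming traffic to the ingress pods.

Example DNS configuration

In the previous example, *.apps.example.krnl.es. IN A 192.168.122.23 is either a node in the hosted cluster or, if a load balancer is configured, the load balancer.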

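If you are configuring DNS for a disconnected environment on an IPv6 network, the configuration looks like the following example.

Example DNS configuration for an IPv6 network

If you are configuring DNS for a disconnected environment on a dual-stack network, be sure to include DNS entries for both IPv4 and IPv6.

Example DNS configuration for a dual-stack network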
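The following bash sketch prints records in the same BIND-style format as the example line *.apps.example.krnl.es. IN A 192.168.122.23. It assumes one address for the API endpoint and one for the ingress wildcard. It emits an AAAA record when an address contains a colon, so for a dual-stack network you can run it once with the IPv4 addresses and once with the IPv6 addresses. The function names and the API address 192.168.122.20 in the usage comment are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: print BIND-style records for a hosted cluster.
#   dns_records <hosted_cluster_name>.<base_domain> <api_address> <ingress_address>
# Addresses that contain ':' are treated as IPv6 and get AAAA records.

record_type() {
  case "$1" in
    *:*) echo AAAA ;;
    *) echo A ;;
  esac
}

dns_records() {
  local domain="$1" api_ip="$2" apps_ip="$3"
  # printf reuses the format string for each group of three arguments
  printf '%s IN %s %s\n' \
    "api.${domain}." "$(record_type "$api_ip")" "$api_ip" \
    "*.apps.${domain}." "$(record_type "$apps_ip")" "$apps_ip"
}

# Usage (192.168.122.20 is a hypothetical API address):
#   dns_records example.krnl.es 192.168.122.20 192.168.122.23
# For a dual-stack network, call it again with the IPv6 addresses.
```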

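4.2.3. Creating an InfraEnv resource

Before you can create a hosted cluster on bare metal, you need an InfraEnv resource.

4.2.3.1. Creating the InfraEnv resource and adding nodes

On hosted control planes, the control-plane components run as pods on the management cluster while the data plane runs on dedicated nodes. You can use the Assisted Service to boot your hardware with a discovery ISO that adds your hardware to a hardware inventory. Later, when you create a hosted cluster, the hardware from the inventory is used to provision the data-plane nodes. The object that is used to get the discovery ISO is an InfraEnv resource. You need to create a BareMetalHost object that configures the cluster to boot the bare metal nodes from the discovery ISO.

Procedure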

Create a namespace to store your hardware inventory by entering the following command:

$ oc --kubeconfig ~/<directory_example>/mgmt-kubeconfig create \
    namespace <namespace_example>

where:

- <directory_example> is the name of the directory where the kubeconfig file for the management cluster is saved.
- <namespace_example> is the name of the namespace that you are creating, for example, hardware-inventory.

Example output

namespace/hardware-inventory created

Copy the pull secret of the management cluster by entering the following command:

where:

- <directory_example> is the name of the directory where the kubeconfig file for the management cluster is saved.
- <namespace_example> is the name of the namespace that you created, for example, hardware-inventory.

Example output

secret/pull-secret created
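One way to copy the pull secret is to export it from the openshift-config namespace, strip the metadata that the API server populates, point it at the new namespace, and apply the result. The following bash sketch shows the filtering step. It assumes the line-oriented YAML that oc get -o yaml prints. retarget_secret is a hypothetical helper, not the exact command from this procedure.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read Secret YAML on stdin, drop server-populated
# metadata (uid, resourceVersion, creationTimestamp), and rewrite the
# namespace line to the target namespace.
#   retarget_secret <target_namespace>

retarget_secret() {
  local target_ns="$1"
  grep -vE '^[[:space:]]*(uid|resourceVersion|creationTimestamp):' |
    sed -E "s/^([[:space:]]*namespace:).*/\1 ${target_ns}/"
}

# Usage (placeholders as used in this procedure):
#   oc --kubeconfig ~/<directory_example>/mgmt-kubeconfig -n openshift-config \
#     get secret pull-secret -o yaml \
#     | retarget_secret <namespace_example> \
#     | oc --kubeconfig ~/<directory_example>/mgmt-kubeconfig apply -f -
```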

Create the InfraEnv resource by adding the following content to a YAML file:

Apply the changes to the YAML file by entering the following command:

$ oc apply -f <infraenv_config>.yaml

Replace <infraenv_config> with the name of your file.
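As a minimal sketch of an InfraEnv manifest for this procedure, the following bash function prints a resource named hosted, which is the name that the verification command in this procedure queries. The resource references the pull-secret secret in the same namespace. The choice of fields, the SSH key argument, and the helper name infraenv_manifest are assumptions. Check the InfraEnv API reference for your version before relying on it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a minimal InfraEnv manifest.
#   infraenv_manifest <namespace> <ssh_public_key> [name]
# The name defaults to "hosted"; pullSecretRef points at the pull-secret
# secret that was copied into the same namespace.

infraenv_manifest() {
  local namespace="$1" ssh_key="$2" name="${3:-hosted}"
  cat <<EOF
apiVersion: agent-install.openshift.io/v1beta1
kind: InfraEnv
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  pullSecretRef:
    name: pull-secret
  sshAuthorizedKey: '${ssh_key}'
EOF
}

# Usage (the key path is a placeholder):
#   infraenv_manifest hardware-inventory "$(cat ~/.ssh/id_ed25519.pub)" > infraenv.yaml
#   oc --kubeconfig ~/<directory_example>/mgmt-kubeconfig apply -f infraenv.yaml
```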

Verify that the InfraEnv resource was created by entering the following command:

$ oc --kubeconfig ~/<directory_example>/mgmt-kubeconfig \
    -n <namespace_example> get infraenv hosted

Add bare metal hosts by using one of the following two methods:

If you do not use the Metal3 Operator, obtain the discovery ISO from the InfraEnv resource and boot the hosts manually by completing the following steps:

Download the live ISO by entering the following commands:

$ oc get infraenv -A

$ oc get infraenv <infraenv_name> -n <namespace_example> -o jsonpath='{.status.isoDownloadURL}'

The second command prints the ISO download URL, <iso_url>, where <infraenv_name> is the name that the first command lists, for example, hosted. Download the ISO from <iso_url>.

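Boot the ISO. The node communicates with the Assisted Service and registers as an agent in the same namespace as the InfraEnv resource.

For each agent, set the installation disk ID and host name, and approve the agent to indicate that it is ready for use. Enter the following commands: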

$ oc -n <hosted_control_plane_namespace> get agents

Example output

NAME                                   CLUSTER   APPROVED   ROLE          STAGE
86f7ac75-4fc4-4b36-8130-40fa12602218                        auto-assign
e57a637f-745b-496e-971d-1abbf03341ba                        auto-assign

$ oc -n <hosted_control_plane_namespace> \
    patch agent 86f7ac75-4fc4-4b36-8130-40fa12602218 \
    -p '{"spec":{"installation_disk_id":"/dev/sda","approved":true,"hostname":"worker-0.example.krnl.es"}}' \
    --type merge

$ oc -n <hosted_control_plane_namespace> \
    patch agent e57a637f-745b-496e-971d-1abbf03341ba \
    -p '{"spec":{"installation_disk_id":"/dev/sda","approved":true,"hostname":"worker-1.example.krnl.es"}}' \
    --type merge

$ oc -n <hosted_control_plane_namespace> get agents

Example output

NAME                                   CLUSTER   APPROVED   ROLE          STAGE
86f7ac75-4fc4-4b36-8130-40fa12602218             true       auto-assign
e57a637f-745b-496e-971d-1abbf03341ba             true       auto-assign
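When many agents are discovered, patching them one by one becomes repetitive. This bash sketch builds the same merge patch as the commands above and applies it to each agent name that you pass. It numbers the host names worker-0, worker-1, and so on. The helpers agent_patch and approve_agents and the OC override are hypothetical, and the sketch assumes that every host installs to the same disk.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for approving discovered agents.
# OC defaults to "oc"; it can point at a stub to print commands instead.

# Print the merge patch that sets the disk, approves, and sets the host name.
agent_patch() {
  local disk="$1" hostname="$2"
  printf '{"spec":{"installation_disk_id":"%s","approved":true,"hostname":"%s"}}' \
    "$disk" "$hostname"
}

# approve_agents <namespace> <disk> <domain> <agent_name>...
approve_agents() {
  local namespace="$1" disk="$2" domain="$3"
  shift 3
  local oc="${OC:-oc}" i=0 agent
  for agent in "$@"; do
    "$oc" -n "$namespace" patch agent "$agent" \
      -p "$(agent_patch "$disk" "worker-${i}.${domain}")" --type merge || return 1
    i=$((i + 1))
  done
}

# Usage (agent names come from "oc -n <hosted_control_plane_namespace> get agents"):
#   approve_agents <hosted_control_plane_namespace> /dev/sda example.krnl.es \
#     86f7ac75-4fc4-4b36-8130-40fa12602218 e57a637f-745b-496e-971d-1abbf03341ba
```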

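If you use the Metal3 Operator, you can automate the bare metal host registration by creating the following objects:

Create a YAML file and add the following content to it:

where:

- <namespace_example> is your namespace.
- <password> is the password of your secret.
- <username> is the user name of your secret.
- <bmc_address> is the BMC address for the BareMetalHost object.

Note: When you apply this YAML file, the following objects are created:

- A secret with credentials for the baseboard management controller (BMC)
- A BareMetalHost object
- A role for the HyperShift Operator to be able to manage the agents

Note how the InfraEnv resource is referenced in the BareMetalHost object by using the infraenvs.agent-install.openshift.io: hosted custom label. This ensures that the nodes are booted with the generated ISO.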
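The following bash sketch prints a BMC credentials secret and a BareMetalHost that carries the infraenvs.agent-install.openshift.io label described above. It is a minimal illustration under stated assumptions. The boot MAC address parameter, the <name>-bmc-secret naming scheme, and the helper name bmh_manifests are hypothetical. The role for the HyperShift Operator that the documented YAML also creates is not included.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a BMC secret and a BareMetalHost for one host.
#   bmh_manifests <namespace> <name> <bmc_address> <boot_mac> <username> <password> [infraenv]
# The infraenvs.agent-install.openshift.io label ties the host to the InfraEnv
# (default "hosted") so that it boots the generated discovery ISO.

b64() {
  # Encode without line wrapping so long values stay on one YAML line
  printf '%s' "$1" | base64 | tr -d '\n'
}

bmh_manifests() {
  local namespace="$1" name="$2" bmc_address="$3" mac="$4"
  local username="$5" password="$6" infraenv="${7:-hosted}"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}-bmc-secret
  namespace: ${namespace}
type: Opaque
data:
  username: $(b64 "$username")
  password: $(b64 "$password")
---
apiVersion: metal3.io/v1alpha1
kind: BareMetalHost
metadata:
  name: ${name}
  namespace: ${namespace}
  labels:
    infraenvs.agent-install.openshift.io: ${infraenv}
spec:
  online: true
  bootMACAddress: ${mac}
  bmc:
    address: ${bmc_address}
    credentialsName: ${name}-bmc-secret
EOF
}

# Usage (all values are placeholders):
#   bmh_manifests hardware-inventory hosted-worker0 <bmc_address> <boot_mac> \
#     <username> <password> > <bare_metal_host_config>.yaml
```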

Apply the changes to the YAML file by entering the following command:

$ oc apply -f <bare_metal_host_config>.yaml

Replace <bare_metal_host_config> with the name of your file.

Enter the following command, and then wait a few minutes for the BareMetalHost objects to move to the Provisioning state:

$ oc --kubeconfig ~/<directory_example>/mgmt-kubeconfig -n <namespace_example> get bmh

Example output

NAME             STATE          CONSUMER   ONLINE   ERROR   AGE
hosted-worker0   provisioning              true             106s
hosted-worker1   provisioning              true             106s
hosted-worker2   provisioning              true             106s

Verify that the nodes are booted and show up as agents by entering the following command. This process might take a few minutes, and you might need to enter the command multiple times.

$ oc --kubeconfig ~/<directory_example>/mgmt-kubeconfig -n <namespace_example> get agent

Example output

NAME                                   CLUSTER   APPROVED   ROLE          STAGE
aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaa0201             true       auto-assign
aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaa0202             true       auto-assign
aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaa0203             true       auto-assign
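Instead of re-entering oc get bmh or oc get agent by hand, you can poll until a condition holds. This bash sketch assumes that the STATE column corresponds to .status.provisioning.state of each BareMetalHost. wait_until and all_bmh_in_state are hypothetical helpers, and the attempt count and interval in the usage comment are arbitrary examples.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for polling instead of re-running commands by hand.

# wait_until <attempts> <interval_seconds> <command...>
# Runs the command until it succeeds; returns 1 if every attempt fails.
wait_until() {
  local attempts="$1" interval="$2" n
  shift 2
  for ((n = 1; n <= attempts; n++)); do
    "$@" && return 0
    # Do not sleep after the final failed attempt
    ((n < attempts)) && sleep "$interval"
  done
  return 1
}

# Succeeds when every BareMetalHost in the namespace reports the given state,
# read from .status.provisioning.state (assumed to back the STATE column).
all_bmh_in_state() {
  local namespace="$1" state="$2" states
  states="$(oc -n "$namespace" get bmh \
    -o jsonpath='{range .items[*]}{.status.provisioning.state}{"\n"}{end}')" || return 1
  [ -n "$states" ] && ! printf '%s\n' "$states" | grep -qvx -- "$state"
}

# Usage (30 attempts, 20 seconds apart):
#   wait_until 30 20 all_bmh_in_state hardware-inventory provisioning
```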

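4.2.3.2. Creating an InfraEnv resource by using the console

To create an InfraEnv resource by using the console, complete the following steps.

Procedure

- Open the OpenShift Container Platform web console and log in by entering your administrator credentials. For instructions to open the console, see "Accessing the web console".

- In the console header, ensure that All Clusters is selected.

- Click Infrastructure → Host inventory → Create infrastructure environment.

- After you create the InfraEnv resource, add bare metal hosts from within the InfraEnv view by clicking Add hosts and selecting from the available options.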

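4.2.4. Creating a hosted cluster on bare metal

You can create a hosted cluster on bare metal by using the command-line interface (CLI), the console, or a mirror registry.

4.2.4.1. Creating a hosted cluster by using the CLI

On bare metal infrastructure, you can create or import a hosted cluster. After you enable the Assisted Installer as an add-on to the multicluster engine Operator and you create a hosted cluster with the Agent platform, the HyperShift Operator installs the Agent Cluster API provider in the hosted control plane namespace. The Agent Cluster API provider connects a management cluster that hosts the control plane and a hosted cluster that consists of only the compute nodes.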

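Prerequisites

- Each hosted cluster must have a cluster-wide unique name. A hosted cluster name cannot be the same as any existing managed cluster. Otherwise, the multicluster engine Operator cannot manage the hosted cluster.

- Do not use the word cluster as a hosted cluster name.

- You cannot create a hosted cluster in the namespace of a multicluster engine Operator managed cluster.

- For best security and management practices, create a hosted cluster separate from other hosted clusters.

- Verify that you have a default storage class configured for your cluster. Otherwise, you might see pending persistent volume claims (PVCs).

- By default, when you use the hcp create cluster agent command, the command creates a hosted cluster with configured node ports. The preferred publishing strategy for hosted clusters on bare metal exposes services through a load balancer. If you create a hosted cluster by using the web console or Red Hat Advanced Cluster Management, you must manually specify the servicePublishingStrategy information in the HostedCluster custom resource to set a publishing strategy for any service other than the Kubernetes API server.

- Ensure that you meet the requirements for hosted control planes on bare metal that are described in "Requirements for hosted control plane", which include requirements related to infrastructure, firewalls, ports, and services. For example, those requirements describe how to add the appropriate zone labels to the bare metal hosts in your management cluster, as shown in the following example:

  $ oc label node [compute-node-1] topology.kubernetes.io/zone=zone1

  $ oc label node [compute-node-2] topology.kubernetes.io/zone=zone2

  $ oc label node [compute-node-3] topology.kubernetes.io/zone=zone3

- Ensure that you have added bare metal nodes to a hardware inventory.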
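You can check the naming rules in these prerequisites before you run hcp create cluster agent. This bash sketch rejects the name cluster and any name already used by a managed cluster. It also applies a DNS-1123 label check (lowercase alphanumeric characters and hyphens, at most 63 characters), which is an assumption of the sketch rather than a documented hosted cluster rule. validate_hosted_cluster_name and the OC override are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for a hosted cluster name.
# OC defaults to "oc"; it can point at a stub for testing.

validate_hosted_cluster_name() {
  local name="$1" oc="${OC:-oc}"
  # Assumed DNS-1123 label rule: lowercase alphanumerics and '-', 1-63 chars
  local re='^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'

  if [ "$name" = "cluster" ]; then
    echo "error: do not use 'cluster' as a hosted cluster name" >&2
    return 1
  fi
  if [ "${#name}" -gt 63 ] || ! [[ "$name" =~ $re ]]; then
    echo "error: '${name}' is not a valid DNS-1123 label" >&2
    return 1
  fi
  # The name must not match any existing managed cluster
  if "$oc" get managedclusters \
      -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}' |
      grep -qxF -- "$name"; then
    echo "error: a managed cluster named '${name}' already exists" >&2
    return 1
  fi
}

# Usage:
#   validate_hosted_cluster_name example
```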

Procedure

Create a namespace by running the following command:

$ oc create ns <hosted_cluster_namespace>

Replace