This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Scalability and performance

Scaling your OpenShift Container Platform cluster and tuning performance in production environments

Chapter 1. Recommended host practices

This topic provides recommended host practices for OpenShift Container Platform.

These guidelines apply to OpenShift Container Platform with software-defined networking (SDN), not Open Virtual Network (OVN).

1.1. Recommended node host practices

The OpenShift Container Platform node configuration file contains important options. For example, two parameters control the maximum number of pods that can be scheduled to a node: podsPerCore and maxPods.

When both options are in use, the lower of the two values limits the number of pods on a node. Exceeding these values can result in:

- Increased CPU utilization.

- Slow pod scheduling.

- Potential out-of-memory scenarios, depending on the amount of memory in the node.

- Exhausting the pool of IP addresses.

- Resource overcommitting, leading to poor user application performance.

In Kubernetes, a pod that is holding a single container actually uses two containers. The second container is used to set up networking prior to the actual container starting. Therefore, a system running 10 pods will actually have 20 containers running.

Disk IOPS throttling from the cloud provider might have an impact on CRI-O and kubelet. They might get overloaded when there are a large number of I/O intensive pods running on the nodes. It is recommended that you monitor the disk I/O on the nodes and use volumes with sufficient throughput for the workload.

podsPerCore sets the number of pods the node can run based on the number of processor cores on the node. For example, if podsPerCore is set to 10 on a node with 4 processor cores, the maximum number of pods allowed on the node will be 40.

kubeletConfig:
  podsPerCore: 10

Setting podsPerCore to 0 disables this limit. The default is 0. podsPerCore cannot exceed maxPods.

maxPods sets the number of pods the node can run to a fixed value, regardless of the properties of the node.

kubeletConfig:
  maxPods: 250
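The interaction between the two limits can be sketched in a few lines of Python. This is only an illustration of the rule stated above (the lower value wins, and a podsPerCore of 0 disables the per-core limit); the function name and its arguments are hypothetical and are not part of any OpenShift or kubelet API.

```python
def effective_pod_limit(cores: int, pods_per_core: int, max_pods: int) -> int:
    """Return the pod limit that applies to a node under the rule described above.

    pods_per_core == 0 means the per-core limit is disabled, so only
    max_pods applies. Otherwise the lower of the two values wins.
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    if pods_per_core == 0:
        return max_pods
    return min(pods_per_core * cores, max_pods)


# Example from the text: podsPerCore of 10 on a node with 4 cores.
limit = effective_pod_limit(cores=4, pods_per_core=10, max_pods=250)
print(limit)
```

With the values from the text, the per-core limit (40) is lower than maxPods (250), so 40 is the value that applies.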

1.2. Creating a KubeletConfig CRD to edit kubelet parameters

The kubelet configuration is currently serialized as an Ignition configuration, so it can be directly edited. However, there is also a new kubelet-config-controller added to the Machine Config Controller (MCC). This lets you use a KubeletConfig custom resource (CR) to edit the kubelet parameters.

As the fields in the kubeletConfig object are passed directly to the kubelet from upstream Kubernetes, the kubelet validates those values directly. Invalid values in the kubeletConfig object might cause cluster nodes to become unavailable. For valid values, see the Kubernetes documentation.

Consider the following guidance:

- Create one KubeletConfig CR for each machine config pool with all the config changes you want for that pool. If you are applying the same content to all of the pools, you need only one KubeletConfig CR for all of the pools.

- Edit an existing KubeletConfig CR to modify existing settings or add new settings, instead of creating a CR for each change. It is recommended that you create a CR only to modify a different machine config pool, or for changes that are intended to be temporary, so that you can revert the changes.

- As needed, create multiple KubeletConfig CRs with a limit of 10 per cluster. For the first KubeletConfig CR, the Machine Config Operator (MCO) creates a machine config appended with kubelet. With each subsequent CR, the controller creates another kubelet machine config with a numeric suffix. For example, if you have a kubelet machine config with a -2 suffix, the next kubelet machine config is appended with -3.

If you want to delete the machine configs, delete them in reverse order to avoid exceeding the limit. For example, you delete the kubelet-3 machine config before deleting the kubelet-2 machine config.

If you have a machine config with a kubelet-9 suffix, and you create another KubeletConfig CR, a new machine config is not created, even if there are fewer than 10 kubelet machine configs.
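The suffix behavior described above can be modeled with a small Python sketch. It is a simplified model of the documented behavior, not the controller's actual code: the function name is hypothetical, and representing the first, unsuffixed kubelet machine config as suffix 0 is an assumption made for illustration.

```python
from typing import List, Optional

# From the text: once a kubelet-9 machine config exists, no further one is created.
HIGHEST_SUFFIX = 9


def next_kubelet_suffix(existing_suffixes: List[int]) -> Optional[int]:
    """Return the suffix the next kubelet machine config would get, or None.

    existing_suffixes holds the numeric suffixes currently present.
    The unsuffixed first config is represented as 0 (an assumption).
    The next suffix is based on the highest existing one, so gaps left
    by deleting lower-numbered configs are not reused.
    """
    if not existing_suffixes:
        return 0
    highest = max(existing_suffixes)
    if highest >= HIGHEST_SUFFIX:
        return None  # limit reached, even if fewer than 10 configs exist
    return highest + 1


print(next_kubelet_suffix([0, 1, 2]))  # next config is appended with -3
print(next_kubelet_suffix([0, 1, 3]))  # kubelet-2 was deleted first; the gap is not reused
print(next_kubelet_suffix([9]))        # no new machine config is created
```

Under this model, deleting in reverse order matters because only removing the highest-numbered config lowers the next suffix.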

Example KubeletConfig CR

$ oc get kubeletconfig

NAME AGE
set-max-pods 15m

Example showing a KubeletConfig machine config

$ oc get mc | grep kubelet

...
99-worker-generated-kubelet-1 b5c5119de007945b6fe6fb215db3b8e2ceb12511 3.2.0 26m
...

The following procedure is an example to show how to configure the maximum number of pods per node on the worker nodes.

Prerequisites

Obtain the label associated with the static MachineConfigPool CR for the type of node you want to configure. Perform one of the following steps:

View the machine config pool:

$ oc describe machineconfigpool <name>

For example:

$ oc describe machineconfigpool worker

If a label has been added, it appears under labels in the command output.

If the label is not present, add a key/value pair:

$ oc label machineconfigpool worker custom-kubelet=set-max-pods

Procedure

View the available machine configuration objects that you can select:

$ oc get machineconfig

By default, the two kubelet-related configs are 01-master-kubelet and 01-worker-kubelet.

Check the current value for the maximum pods per node:

$ oc describe node <node_name>

For example:

$ oc describe node ci-ln-5grqprb-f76d1-ncnqq-worker-a-mdv94

Look for value: pods: <value> in the Allocatable stanza.

Set the maximum pods per node on the worker nodes by creating a custom resource file that contains the kubelet configuration. The commands in this procedure assume that the file is named change-maxPods-cr.yaml.

Note: The rate at which the kubelet talks to the API server depends on queries per second (QPS) and burst values. The default values, 50 for kubeAPIQPS and 100 for kubeAPIBurst, are sufficient if there are limited pods running on each node. It is recommended to update the kubelet QPS and burst rates if there are enough CPU and memory resources on the node.

Update the machine config pool for workers with the label:

$ oc label machineconfigpool worker custom-kubelet=large-pods

Create the KubeletConfig object:

$ oc create -f change-maxPods-cr.yaml

Verify that the KubeletConfig object is created:

$ oc get kubeletconfig

Example output

NAME AGE
set-max-pods 15m

Depending on the number of worker nodes in the cluster, wait for the worker nodes to be rebooted one by one. For a cluster with 3 worker nodes, this could take about 10 to 15 minutes.

Verify that the changes are applied to the node:

Check on a worker node that the maxPods value changed:

$ oc describe node <node_name>

Locate the Allocatable stanza. The pods parameter should report the value you set in the KubeletConfig object.

Verify the change in the KubeletConfig object:

$ oc get kubeletconfigs set-max-pods -o yaml

This should show a status: "True" and type:Success.

1.4. Control plane node sizing

The control plane node resource requirements depend on the number of nodes in the cluster. The following control plane node size recommendations are based on the results of control plane density focused testing. The control plane tests create the following objects across the cluster in each of the namespaces depending on the node counts:

- 12 image streams

- 3 build configurations

- 6 builds

- 1 deployment with 2 pod replicas mounting two secrets each

- 2 deployments with 1 pod replica mounting two secrets

- 3 services pointing to the previous deployments

- 3 routes pointing to the previous deployments

- 10 secrets, 2 of which are mounted by the previous deployments

- 10 config maps, 2 of which are mounted by the previous deployments

| Number of worker nodes | Cluster load (namespaces) | CPU cores | Memory (GB) |

|---|---|---|---|

| 25 | 500 | 4 | 16 |

| 100 | 1000 | 8 | 32 |

| 250 | 4000 | 16 | 96 |

On a large and dense cluster with three masters or control plane nodes, CPU and memory usage spikes when one of the nodes is stopped, rebooted, or fails. Failures can be due to unexpected issues with power, network, or underlying infrastructure, in addition to intentional cases where the cluster is restarted after shutting it down to save costs. The remaining two control plane nodes must handle the load in order to be highly available, which leads to an increase in resource usage. This is also expected during upgrades because the masters are cordoned, drained, and rebooted serially to apply the operating system updates, as well as the control plane Operators update. To avoid cascading failures, keep the overall CPU and memory resource usage on the control plane nodes to at most 60% of all available capacity to handle the resource usage spikes. Increase the CPU and memory on the control plane nodes accordingly to avoid potential downtime due to lack of resources.
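The reasoning behind the 60% guideline can be made concrete with a short calculation. The Python sketch below assumes, purely for illustration, that the load of a lost control plane node is redistributed evenly across the remaining nodes; real clusters will not behave this uniformly, and the function name is hypothetical.

```python
def usage_after_node_loss(per_node_usage: float, nodes: int = 3, lost: int = 1) -> float:
    """Estimate per-node usage after some control plane nodes become unavailable.

    per_node_usage is a fraction of capacity (0.60 means 60%).
    Assumes the total load stays constant and is spread evenly over
    the remaining nodes (a simplifying assumption).
    """
    remaining = nodes - lost
    if remaining <= 0:
        raise ValueError("at least one node must remain")
    return per_node_usage * nodes / remaining


# Three control plane nodes at 60% each, one node lost.
estimate = usage_after_node_loss(0.60)
print(f"{estimate:.0%}")
```

Under this assumption, three nodes running at 60% leave the two survivors at roughly 90%, which is why running hotter than 60% leaves little room for a node failure or a rolling upgrade.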

The node sizing varies depending on the number of nodes and object counts in the cluster. It also depends on whether the objects are actively being created on the cluster. During object creation, the control plane is more active in terms of resource usage compared to when the objects are in the running phase.

Operator Lifecycle Manager (OLM) runs on the control plane nodes, and its memory footprint depends on the number of namespaces and user-installed Operators that OLM needs to manage on the cluster. Control plane nodes need to be sized accordingly to avoid OOM kills. The following data points are based on the results from cluster maximums testing.

| Number of namespaces | OLM memory at idle state (GB) | OLM memory with 5 user operators installed (GB) |

|---|---|---|

| 500 | 0.823 | 1.7 |

| 1000 | 1.2 | 2.5 |

| 1500 | 1.7 | 3.2 |

| 2000 | 2 | 4.4 |

| 3000 | 2.7 | 5.6 |

| 4000 | 3.8 | 7.6 |

| 5000 | 4.2 | 9.02 |

| 6000 | 5.8 | 11.3 |

| 7000 | 6.6 | 12.9 |

| 8000 | 6.9 | 14.8 |

| 9000 | 8 | 17.7 |

| 10,000 | 9.9 | 21.6 |

If you used an installer-provisioned infrastructure installation method, you cannot modify the control plane node size in a running OpenShift Container Platform 4.8 cluster. Instead, you must estimate your total node count and use the suggested control plane node size during installation.

The recommendations are based on the data points captured on OpenShift Container Platform clusters with OpenShift SDN as the network plugin.

In OpenShift Container Platform 4.8, half of a CPU core (500 millicore) is now reserved by the system by default compared to OpenShift Container Platform 3.11 and previous versions. The sizes are determined taking that into consideration.

1.4.1. Increasing the flavor size of the Amazon Web Services (AWS) master instances

When you have overloaded AWS master nodes in a cluster and the master nodes require more resources, you can increase the flavor size of the master instances.

It is recommended to back up etcd before increasing the flavor size of the AWS master instances.

Prerequisites

- You have an IPI (installer-provisioned infrastructure) or UPI (user-provisioned infrastructure) cluster on AWS.

Procedure

- Open the AWS console, fetch the master instances.

- Stop one master instance.

- Select the stopped instance, and click Actions → Instance Settings → Change instance type.

- Change the instance to a larger type, ensuring that the type is the same base as the previous selection, and apply changes. For example, you can change m5.xlarge to m5.2xlarge or m5.4xlarge.

- Back up the instance, and repeat the steps for the next master instance.

1.5. Recommended etcd practices

Because etcd writes data to disk and persists proposals on disk, its performance depends on disk performance. Although etcd is not particularly I/O intensive, it requires a low latency block device for optimal performance and stability. Because etcd’s consensus protocol depends on persistently storing metadata to a log (WAL), etcd is sensitive to disk-write latency. Slow disks and disk activity from other processes can cause long fsync latencies.

Those latencies can cause etcd to miss heartbeats, not commit new proposals to the disk on time, and ultimately experience request timeouts and temporary leader loss. High write latencies also lead to OpenShift API slowness, which affects cluster performance. For these reasons, avoid colocating other workloads on the control plane nodes.

In terms of latency, run etcd on top of a block device that can write at least 50 IOPS of 8000 bytes long sequentially, that is, with a latency of 20 ms. Keep in mind that etcd uses fdatasync to synchronize each write in the WAL. For heavily loaded clusters, sequential 500 IOPS of 8000 bytes (2 ms) are recommended. To measure those numbers, you can use a benchmarking tool, such as fio.
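The IOPS and latency figures above are two views of the same number. As a rough sketch in Python, assuming writes are strictly sequential so that each write must finish its sync before the next one starts, the per-write latency budget is simply the inverse of the IOPS figure. The function name is hypothetical.

```python
def sync_write_latency_budget_ms(sequential_iops: float) -> float:
    """Return the per-write latency budget, in milliseconds, implied by an IOPS figure.

    Assumes strictly serial writes: each write completes (including
    its sync) before the next begins, so latency = 1 second / IOPS.
    """
    if sequential_iops <= 0:
        raise ValueError("sequential_iops must be positive")
    return 1000.0 / sequential_iops


print(sync_write_latency_budget_ms(50))   # minimum recommendation
print(sync_write_latency_budget_ms(500))  # heavily loaded clusters
```

This reproduces the 20 ms and 2 ms values quoted in the text for 50 and 500 sequential IOPS.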

To achieve such performance, run etcd on machines that are backed by SSD or NVMe disks with low latency and high throughput. Consider single-level cell (SLC) solid-state drives (SSDs), which provide 1 bit per memory cell, are durable and reliable, and are ideal for write-intensive workloads.

The following hard disk features provide optimal etcd performance:

- Low latency to support fast read operation.

- High-bandwidth writes for faster compactions and defragmentation.

- High-bandwidth reads for faster recovery from failures.

- Solid state drives as a minimum selection; however, NVMe drives are preferred.

- Server-grade hardware from various manufacturers for increased reliability.

- RAID 0 technology for increased performance.

- Dedicated etcd drives. Do not place log files or other heavy workloads on etcd drives.

Avoid NAS or SAN setups and spinning drives. Always benchmark by using utilities such as fio. Continuously monitor the cluster performance as it increases.

Avoid using the Network File System (NFS) protocol or other network based file systems.

Some key metrics to monitor on a deployed OpenShift Container Platform cluster are p99 of etcd disk write ahead log duration and the number of etcd leader changes. Use Prometheus to track these metrics.

To validate the hardware for etcd before or after you create the OpenShift Container Platform cluster, you can use fio.

Prerequisites

- Container runtimes such as Podman or Docker are installed on the machine that you’re testing.

- Data is written to the /var/lib/etcd path.

Procedure

Run fio and analyze the results:

If you use Podman, run this command:

$ sudo podman run --volume /var/lib/etcd:/var/lib/etcd:Z quay.io/openshift-scale/etcd-perf

If you use Docker, run this command:

$ sudo docker run --volume /var/lib/etcd:/var/lib/etcd:Z quay.io/openshift-scale/etcd-perf

The output reports whether the disk is fast enough to host etcd by checking whether the 99th percentile of the fsync metric captured from the run is less than 20 ms. A few of the most important etcd metrics that might be affected by I/O performance are as follows:

- etcd_disk_wal_fsync_duration_seconds_bucket metric reports the etcd WAL fsync duration

- etcd_disk_backend_commit_duration_seconds_bucket metric reports the etcd backend commit latency duration

- etcd_server_leader_changes_seen_total metric reports the leader changes
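The pass or fail check on the 99th percentile can be illustrated with a short Python sketch. This is not the implementation used by the etcd-perf image; it uses the nearest-rank percentile method as one reasonable choice, and the sample values are invented for the example.

```python
from typing import Sequence


def p99(samples_ms: Sequence[float]) -> float:
    """Return the 99th percentile of the samples using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples_ms)
    # Integer ceiling of 0.99 * n, avoiding floating-point rounding.
    rank = (99 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def disk_fast_enough(samples_ms: Sequence[float], threshold_ms: float = 20.0) -> bool:
    """Return True if the p99 fsync latency is below the threshold from the text."""
    return p99(samples_ms) < threshold_ms


# Hypothetical fsync latencies in milliseconds: one slow outlier in 100 samples.
mostly_fast = [1.0] * 99 + [50.0]
# Two slow outliers in 100 samples push the p99 above the threshold.
too_slow = [1.0] * 98 + [50.0, 50.0]

print(disk_fast_enough(mostly_fast))
print(disk_fast_enough(too_slow))
```

The point of using a high percentile rather than an average is that a small number of slow syncs is enough to disturb etcd, even when the typical write is fast.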

Because etcd replicates the requests among all the members, its performance strongly depends on network input/output (I/O) latency. High network latencies result in etcd heartbeats taking longer than the election timeout, which results in leader elections that are disruptive to the cluster. A key metric to monitor on a deployed OpenShift Container Platform cluster is the 99th percentile of etcd network peer latency on each etcd cluster member. Use Prometheus to track the metric.

The histogram_quantile(0.99, rate(etcd_network_peer_round_trip_time_seconds_bucket[2m])) metric reports the round trip time for etcd to finish replicating the client requests between the members. Ensure that it is less than 50 ms.

1.6. Defragmenting etcd data

For large and dense clusters, etcd can suffer from poor performance if the keyspace grows too large and exceeds the space quota. Periodically maintain and defragment etcd to free up space in the data store. Monitor Prometheus for etcd metrics and defragment it when required; otherwise, etcd can raise a cluster-wide alarm that puts the cluster into a maintenance mode that accepts only key reads and deletes.

Monitor these key metrics:

- etcd_server_quota_backend_bytes, which is the current quota limit

- etcd_mvcc_db_total_size_in_use_in_bytes, which indicates the actual database usage after a history compaction

- etcd_debugging_mvcc_db_total_size_in_bytes, which shows the database size, including free space waiting for defragmentation

Defragment etcd data to reclaim disk space after events that cause disk fragmentation, such as etcd history compaction.

History compaction is performed automatically every five minutes and leaves gaps in the back-end database. This fragmented space is available for use by etcd, but is not available to the host file system. You must defragment etcd to make this space available to the host file system.

Because etcd writes data to disk, its performance strongly depends on disk performance. Consider defragmenting etcd every month, twice a month, or as needed for your cluster. You can also monitor the etcd_db_total_size_in_bytes metric to determine whether defragmentation is necessary.

You can also determine whether defragmentation is needed by checking the etcd database size in MB that will be freed by defragmentation with the PromQL expression: (etcd_mvcc_db_total_size_in_bytes - etcd_mvcc_db_total_size_in_use_in_bytes)/1024/1024
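The same arithmetic as the PromQL expression can be written out in Python to make the units explicit. The function name is hypothetical, and the byte values are illustrative inputs chosen for the example, not measurements.

```python
def reclaimable_mb(db_total_size_bytes: int, db_in_use_bytes: int) -> float:
    """Return the space, in MB, that defragmentation is expected to free.

    Mirrors the PromQL expression above: (total - in use) / 1024 / 1024.
    """
    return (db_total_size_bytes - db_in_use_bytes) / 1024 / 1024


# Illustrative values: a 104 MB database file of which 41 MB is in use.
total = 104 * 1024 * 1024
in_use = 41 * 1024 * 1024
print(reclaimable_mb(total, in_use))
```

A result close to zero suggests that defragmentation would reclaim little space and can be deferred.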

Defragmenting etcd is a blocking action. The etcd member will not respond until defragmentation is complete. For this reason, wait at least one minute between defragmentation actions on each of the pods to allow the cluster to recover.

Follow this procedure to defragment etcd data on each etcd member.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

Procedure

Determine which etcd member is the leader, because the leader should be defragmented last.

Get the list of etcd pods:

$ oc get pods -n openshift-etcd -o wide | grep -v quorum-guard | grep etcd

Example output

etcd-ip-10-0-159-225.example.redhat.com 3/3 Running 0 175m 10.0.159.225 ip-10-0-159-225.example.redhat.com <none> <none>
etcd-ip-10-0-191-37.example.redhat.com 3/3 Running 0 173m 10.0.191.37 ip-10-0-191-37.example.redhat.com <none> <none>
etcd-ip-10-0-199-170.example.redhat.com 3/3 Running 0 176m 10.0.199.170 ip-10-0-199-170.example.redhat.com <none> <none>

Choose a pod and run the following command to determine which etcd member is the leader:

$ oc rsh -n openshift-etcd etcd-ip-10-0-159-225.example.redhat.com etcdctl endpoint status --cluster -w table

Based on the IS LEADER column of the command output, in this example the https://10.0.199.170:2379 endpoint is the leader. Matching this endpoint with the output of the previous step, the pod name of the leader is etcd-ip-10-0-199-170.example.redhat.com.

Defragment an etcd member.

Connect to the running etcd container, passing in the name of a pod that is not the leader:

$ oc rsh -n openshift-etcd etcd-ip-10-0-159-225.example.redhat.com

Unset the ETCDCTL_ENDPOINTS environment variable:

sh-4.4# unset ETCDCTL_ENDPOINTS

Defragment the etcd member:

sh-4.4# etcdctl --command-timeout=30s --endpoints=https://localhost:2379 defrag

Example output

Finished defragmenting etcd member[https://localhost:2379]

If a timeout error occurs, increase the value for --command-timeout until the command succeeds.

Verify that the database size was reduced:

sh-4.4# etcdctl endpoint status -w table --cluster

In this example, the database size for this etcd member is now 41 MB as opposed to the starting size of 104 MB.

Repeat these steps to connect to each of the other etcd members and defragment them. Always defragment the leader last.

Wait at least one minute between defragmentation actions to allow the etcd pod to recover. Until the etcd pod recovers, the etcd member will not respond.

If any NOSPACE alarms were triggered due to the space quota being exceeded, clear them.

Check if there are any NOSPACE alarms:

sh-4.4# etcdctl alarm list

Example output

memberID:12345678912345678912 alarm:NOSPACE

Clear the alarms:

sh-4.4# etcdctl alarm disarm
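The ordering rule from the procedure above (defragment every non-leader member first, the leader last, and pause between members) can be sketched as a small planning helper in Python. It only computes the order and does not run any commands; the function name is hypothetical, and the pod names are the ones from the example output in this section.

```python
from typing import List

# From the text: wait at least one minute between defragmentation actions.
WAIT_BETWEEN_MEMBERS_SECONDS = 60


def defrag_order(members: List[str], leader: str) -> List[str]:
    """Return the members in the order they should be defragmented, leader last."""
    if leader not in members:
        raise ValueError("leader must be one of the members")
    followers = [m for m in members if m != leader]
    return followers + [leader]


pods = [
    "etcd-ip-10-0-159-225.example.redhat.com",
    "etcd-ip-10-0-191-37.example.redhat.com",
    "etcd-ip-10-0-199-170.example.redhat.com",
]
order = defrag_order(pods, leader="etcd-ip-10-0-199-170.example.redhat.com")
for step, pod in enumerate(order, start=1):
    print(f"{step}. defragment {pod}, then wait {WAIT_BETWEEN_MEMBERS_SECONDS}s")
```

Because leadership can change while you work, it is reasonable to re-check the leader before defragmenting the final member.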

1.7. OpenShift Container Platform infrastructure components

The following infrastructure workloads do not incur OpenShift Container Platform worker subscriptions:

- Kubernetes and OpenShift Container Platform control plane services that run on masters

- The default router

- The integrated container image registry

- The HAProxy-based Ingress Controller

- The cluster metrics collection, or monitoring service, including components for monitoring user-defined projects

- Cluster aggregated logging

- Service brokers

- Red Hat Quay

- Red Hat OpenShift Container Storage

- Red Hat Advanced Cluster Manager

- Red Hat Advanced Cluster Security for Kubernetes

- Red Hat OpenShift GitOps

- Red Hat OpenShift Pipelines

Any node that runs any other container, pod, or component is a worker node that your subscription must cover.

1.8. Moving the monitoring solution

The monitoring stack includes multiple components, including Prometheus, Grafana, and Alertmanager. The Cluster Monitoring Operator manages this stack. To redeploy the monitoring stack to infrastructure nodes, you can create and apply a custom config map.

Procedure

Edit the cluster-monitoring-config config map and change the nodeSelector to use the infra label:

$ oc edit configmap cluster-monitoring-config -n openshift-monitoring

Watch the monitoring pods move to the new machines:

$ watch 'oc get pod -n openshift-monitoring -o wide'

If a component has not moved to the infra node, delete the pod with this component:

$ oc delete pod -n openshift-monitoring <pod>

The component from the deleted pod is re-created on the infra node.

1.9. Moving the default registry

You can configure the registry Operator to deploy its pods to different nodes.

Prerequisites

- Configure additional machine sets in your OpenShift Container Platform cluster.

Procedure

View the config/instance object:

$ oc get configs.imageregistry.operator.openshift.io/cluster -o yaml

Edit the config/instance object:

$ oc edit configs.imageregistry.operator.openshift.io/cluster

Add a nodeSelector parameter with the appropriate value to the component you want to move. You can use <key>: <value> pairs, based on the value specified for the node. If you added a taint to the infrastructure node, also add a matching toleration.

Verify the registry pod has been moved to the infrastructure node.

Run the following command to identify the node where the registry pod is located:

$ oc get pods -o wide -n openshift-image-registry

Confirm the node has the label you specified:

$ oc describe node <node_name>

Review the command output and confirm that node-role.kubernetes.io/infra is in the LABELS list.

1.10. Moving the router

You can deploy the router pod to a different machine set. By default, the pod is deployed to a worker node.

Prerequisites

- Configure additional machine sets in your OpenShift Container Platform cluster.

Procedure

View the IngressController custom resource for the router Operator:

$ oc get ingresscontroller default -n openshift-ingress-operator -o yaml

Edit the ingresscontroller resource and change the nodeSelector to use the infra label:

$ oc edit ingresscontroller default -n openshift-ingress-operator

Add a nodeSelector parameter with the appropriate value to the component you want to move. You can use <key>: <value> pairs, based on the value specified for the node. If you added a taint to the infrastructure node, also add a matching toleration.

Confirm that the router pod is running on the infra node.

View the list of router pods and note the node name of the running pod:

$ oc get pod -n openshift-ingress -o wide

Example output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
router-default-86798b4b5d-bdlvd 1/1 Running 0 28s 10.130.2.4 ip-10-0-217-226.ec2.internal <none> <none>
router-default-955d875f4-255g8 0/1 Terminating 0 19h 10.129.2.4 ip-10-0-148-172.ec2.internal <none> <none>

In this example, the running pod is on the ip-10-0-217-226.ec2.internal node.

View the node status of the running pod, specifying the <node_name> that you obtained from the pod list:

$ oc get node <node_name>

Example output

NAME STATUS ROLES AGE VERSION
ip-10-0-217-226.ec2.internal Ready infra,worker 17h v1.21.0

Because the role list includes infra, the pod is running on the correct node.

1.11. Infrastructure node sizing

Infrastructure nodes are nodes that are labeled to run pieces of the OpenShift Container Platform environment. The infrastructure node resource requirements depend on the cluster age, nodes, and objects in the cluster, as these factors can lead to an increase in the number of metrics or time series in Prometheus. The following infrastructure node size recommendations are based on the results of cluster maximums and control plane density focused testing.

| Number of worker nodes | CPU cores | Memory (GB) |

|---|---|---|

| 25 | 4 | 16 |

| 100 | 8 | 32 |

| 250 | 16 | 128 |

| 500 | 32 | 128 |

In general, three infrastructure nodes are recommended per cluster.
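The sizing table above can be turned into a simple lookup. The Python sketch below assumes that a cluster whose worker count falls between two rows should use the next larger row; that rounding rule is an assumption for illustration and is not stated in the table. The function name is hypothetical.

```python
from typing import Optional, Tuple

# (maximum worker nodes, CPU cores, memory in GB), copied from the table above.
INFRA_SIZING = [
    (25, 4, 16),
    (100, 8, 32),
    (250, 16, 128),
    (500, 32, 128),
]


def recommend_infra_size(worker_nodes: int) -> Optional[Tuple[int, int]]:
    """Return (cpu_cores, memory_gb) for an infrastructure node, or None.

    Picks the first row whose worker count is at least worker_nodes
    (rounding up is an assumption). Returns None beyond the tested range.
    """
    if worker_nodes < 0:
        raise ValueError("worker_nodes must not be negative")
    for max_workers, cpu_cores, memory_gb in INFRA_SIZING:
        if worker_nodes <= max_workers:
            return cpu_cores, memory_gb
    return None


print(recommend_infra_size(60))
print(recommend_infra_size(600))
```

Returning None for clusters larger than the biggest tested size is deliberate: the table gives no data to extrapolate from.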

These sizing recommendations are based on scale tests, which create a large number of objects across the cluster. These tests include reaching some of the cluster maximums. In the case of 250 and 500 node counts on an OpenShift Container Platform 4.8 cluster, these maximums are 10000 namespaces with 61000 pods, 10000 deployments, 181000 secrets, 400 config maps, and so on. Prometheus is a highly memory intensive application; the resource usage depends on various factors including the number of nodes, objects, the Prometheus metrics scraping interval, metrics or time series, and the age of the cluster. The disk size also depends on the retention period. You must take these factors into consideration and size the infrastructure nodes accordingly.

These sizing recommendations are only applicable for the Prometheus, Router, and Registry infrastructure components, which are installed during cluster installation. Logging is a day-two operation and is not included in these recommendations.

In OpenShift Container Platform 4.8, half of a CPU core (500 millicore) is reserved by the system by default, unlike in OpenShift Container Platform 3.11 and previous versions. This influences the stated sizing recommendations.

Chapter 2. Recommended host practices for IBM Z & LinuxONE environments

This topic provides recommended host practices for OpenShift Container Platform on IBM Z and LinuxONE.

The s390x architecture is unique in many aspects. Therefore, some recommendations made here might not apply to other platforms.

Unless stated otherwise, these practices apply to both z/VM and Red Hat Enterprise Linux (RHEL) KVM installations on IBM Z and LinuxONE.

2.1. Managing CPU overcommitment

In a highly virtualized IBM Z environment, you must carefully plan the infrastructure setup and sizing. One of the most important features of virtualization is the capability to do resource overcommitment, that is, allocating more resources to the virtual machines than are actually available at the hypervisor level. This is very workload dependent and there is no golden rule that can be applied to all setups.

Depending on your setup, consider these best practices regarding CPU overcommitment:

- At LPAR level (PR/SM hypervisor), avoid assigning all available physical cores (IFLs) to each LPAR. For example, with four physical IFLs available, you should not define three LPARs with four logical IFLs each.

- Check and understand LPAR shares and weights.

- An excessive number of virtual CPUs can adversely affect performance. Do not define more virtual processors for a guest than there are logical processors defined for the LPAR.

- Configure the number of virtual processors per guest for peak workload, not more.

- Start small and monitor the workload. Increase the vCPU number incrementally if necessary.

- Not all workloads are suitable for high overcommitment ratios. If the workload is CPU intensive, you will probably not be able to achieve high ratios without performance problems. Workloads that are more I/O intensive can keep consistent performance even with high overcommitment ratios.
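The LPAR example in the list above can be checked with simple arithmetic. The following sketch uses hypothetical helper names (`cpu_overcommit_ratio`, `guest_vcpus_ok`) to compute the ratio of logical to physical IFLs and to apply the rule that a guest should not have more virtual processors than the LPAR has logical processors. It does not query PR/SM or z/VM; all numbers are supplied by the caller.

```python
from typing import Sequence


def cpu_overcommit_ratio(physical_ifls: int, logical_ifls_per_lpar: Sequence[int]) -> float:
    """Return the total logical IFLs defined across all LPARs per physical IFL."""
    if physical_ifls <= 0:
        raise ValueError("physical_ifls must be positive")
    return sum(logical_ifls_per_lpar) / physical_ifls


def guest_vcpus_ok(guest_vcpus: int, lpar_logical_processors: int) -> bool:
    """True if the guest defines no more vCPUs than the LPAR has logical processors."""
    return guest_vcpus <= lpar_logical_processors


if __name__ == "__main__":
    # The discouraged layout from the text: four physical IFLs,
    # three LPARs with four logical IFLs each.
    print(cpu_overcommit_ratio(4, [4, 4, 4]))
    print(guest_vcpus_ok(guest_vcpus=6, lpar_logical_processors=4))
```

Which ratio is acceptable remains workload dependent, so the sketch reports the ratio rather than enforcing a threshold.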

2.2. Disable Transparent Huge Pages

Transparent Huge Pages (THP) attempt to automate most aspects of creating, managing, and using huge pages. Because THP manages huge pages automatically, the result is not always optimal for all types of workloads. THP can lead to performance regressions, since many applications handle huge pages on their own. Therefore, consider disabling THP.

2.3. Boost networking performance with Receive Flow Steering

Receive Flow Steering (RFS) extends Receive Packet Steering (RPS) by further reducing network latency. RFS is technically based on RPS and improves the efficiency of packet processing by increasing the CPU cache hit rate. To achieve this, RFS determines the most convenient CPU for computation, also taking queue length into account, so that cache hits are more likely to occur within the CPU. As a result, the CPU cache is invalidated less often and fewer cycles are needed to rebuild it, which can help reduce packet processing run time.

2.3.1. Use the Machine Config Operator (MCO) to activate RFS

Procedure

Copy the following MCO sample profile into a YAML file. For example, enable-rfs.yaml:

Create the MCO profile:

$ oc create -f enable-rfs.yaml

Verify that an entry named 50-enable-rfs is listed:

$ oc get mc

To deactivate, enter:

$ oc delete mc 50-enable-rfs

2.4. Choose your networking setup

The networking stack is one of the most important components for a Kubernetes-based product like OpenShift Container Platform. For IBM Z setups, the networking setup depends on the hypervisor of your choice. Depending on the workload and the application, the best fit usually changes with the use case and the traffic pattern.

Depending on your setup, consider these best practices:

- Consider all options regarding networking devices to optimize your traffic pattern. Explore the advantages of OSA-Express, RoCE Express, HiperSockets, z/VM VSwitch, Linux Bridge (KVM), and others to decide which option leads to the greatest benefit for your setup.

- Always use the latest available NIC version. For example, OSA Express 7S 10 GbE shows great improvement compared to OSA Express 6S 10 GbE with transactional workload types, although both are 10 GbE adapters.

- Each virtual switch adds an additional layer of latency.

- The load balancer plays an important role for network communication outside the cluster. Consider using a production-grade hardware load balancer if this is critical for your application.

- OpenShift Container Platform SDN introduces flows and rules, which impact the networking performance. Make sure to consider pod affinities and placements, to benefit from the locality of services where communication is critical.

- Balance the trade-off between performance and functionality.

2.5. Ensure high disk performance with HyperPAV on z/VM

DASD and ECKD devices are commonly used disk types in IBM Z environments. In a typical OpenShift Container Platform setup in z/VM environments, DASD disks are commonly used to support the local storage for the nodes. You can set up HyperPAV alias devices to provide more throughput and overall better I/O performance for the DASD disks that support the z/VM guests.

Using HyperPAV for the local storage devices leads to a significant performance benefit. However, you must be aware that there is a trade-off between throughput and CPU costs.

2.5.1. Use the Machine Config Operator (MCO) to activate HyperPAV aliases in nodes using z/VM full-pack minidisks

For z/VM-based OpenShift Container Platform setups that use full-pack minidisks, you can leverage the advantage of MCO profiles by activating HyperPAV aliases in all of the nodes. You must add YAML configurations for both control plane and compute nodes.

Procedure

Copy the following MCO sample profile into a YAML file for the control plane node. For example, 05-master-kernelarg-hpav.yaml:

Copy the following MCO sample profile into a YAML file for the compute node. For example, 05-worker-kernelarg-hpav.yaml:

Note: You must modify the rd.dasd arguments to fit the device IDs.

Create the MCO profiles:

$ oc create -f 05-master-kernelarg-hpav.yaml

$ oc create -f 05-worker-kernelarg-hpav.yaml

To deactivate, enter:

$ oc delete -f 05-master-kernelarg-hpav.yaml

$ oc delete -f 05-worker-kernelarg-hpav.yaml

2.6. RHEL KVM on IBM Z host recommendations

Optimizing a KVM virtual server environment strongly depends on the workloads of the virtual servers and on the available resources. The same action that enhances performance in one environment can have adverse effects in another. Finding the best balance for a particular setting can be a challenge and often involves experimentation.

The following section introduces some best practices when using OpenShift Container Platform with RHEL KVM on IBM Z and LinuxONE environments.

2.6.1. Use multiple queues for your VirtIO network interfaces

With multiple virtual CPUs, you can transfer packets in parallel if you provide multiple queues for incoming and outgoing packets. Use the queues attribute of the driver element to configure multiple queues. Specify an integer of at least 2 that does not exceed the number of virtual CPUs of the virtual server.

The following example specification configures two input and output queues for a network interface:

<interface type="direct">

<source network="net01"/>

<model type="virtio"/>

<driver ... queues="2"/>

</interface>

Multiple queues are designed to provide enhanced performance for a network interface, but they also use memory and CPU resources. Start with defining two queues for busy interfaces. Next, try two queues for interfaces with less traffic or more than two queues for busy interfaces.
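The constraint on the queues attribute (an integer of at least 2 that does not exceed the number of virtual CPUs) can be expressed as a small check. The helper name `is_valid_queue_count` is hypothetical and the sketch only validates a number; it does not read or modify a libvirt domain definition.

```python
def is_valid_queue_count(queues: int, vcpus: int) -> bool:
    """Check a candidate value for the queues attribute of the driver element.

    The value must be an integer of at least 2 and must not exceed the number
    of virtual CPUs of the virtual server.
    """
    if isinstance(queues, bool) or not isinstance(queues, int):
        return False
    return 2 <= queues <= vcpus


if __name__ == "__main__":
    for candidate in (1, 2, 4, 5):
        print(candidate, is_valid_queue_count(candidate, vcpus=4))
```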

2.6.2. Use I/O threads for your virtual block devices

To make virtual block devices use I/O threads, you must configure one or more I/O threads for the virtual server and configure each virtual block device to use one of these I/O threads.

The following example specifies <iothreads>3</iothreads> to configure three I/O threads, with consecutive decimal thread IDs 1, 2, and 3. The iothread="2" parameter on the driver element of the disk device specifies that the device uses the I/O thread with ID 2.

Sample I/O thread specification
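As a minimal sketch of the two settings described above, the following Python snippet builds a domain XML fragment with the standard library. It contains only the `<iothreads>` element and one disk whose driver element carries the `iothread` attribute. The function name `build_domain_fragment` is hypothetical, the driver attributes other than `iothread` are borrowed from the guest caching example in this chapter, and a real domain definition needs further elements (name, memory, disk source and target) that are omitted here.

```python
import xml.etree.ElementTree as ET


def build_domain_fragment(iothreads: int, disk_iothread: int) -> str:
    """Return a partial libvirt domain XML string that configures I/O threads.

    iothreads:     number of I/O threads for the virtual server (IDs 1..iothreads)
    disk_iothread: ID of the I/O thread that the disk device should use
    """
    if iothreads < 1:
        raise ValueError("configure at least one I/O thread")
    if not 1 <= disk_iothread <= iothreads:
        raise ValueError("disk_iothread must be one of the configured thread IDs")

    domain = ET.Element("domain")
    ET.SubElement(domain, "iothreads").text = str(iothreads)

    devices = ET.SubElement(domain, "devices")
    disk = ET.SubElement(devices, "disk", {"type": "block", "device": "disk"})
    # The iothread attribute binds this disk to one of the configured threads.
    ET.SubElement(
        disk,
        "driver",
        {
            "name": "qemu",
            "type": "raw",
            "cache": "none",
            "io": "native",
            "iothread": str(disk_iothread),
        },
    )
    return ET.tostring(domain, encoding="unicode")


if __name__ == "__main__":
    print(build_domain_fragment(iothreads=3, disk_iothread=2))
```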

Threads can increase the performance of I/O operations for disk devices, but they also use memory and CPU resources. You can configure multiple devices to use the same thread. The best mapping of threads to devices depends on the available resources and the workload.

Start with a small number of I/O threads. Often, a single I/O thread for all disk devices is sufficient. Do not configure more threads than the number of virtual CPUs, and do not configure idle threads.

You can use the virsh iothreadadd command to add I/O threads with specific thread IDs to a running virtual server.

2.6.3. Avoid virtual SCSI devices

Configure virtual SCSI devices only if you need to address the device through SCSI-specific interfaces. Configure disk space as virtual block devices rather than virtual SCSI devices, regardless of the backing on the host.

However, you might need SCSI-specific interfaces for:

- A LUN for a SCSI-attached tape drive on the host.

- A DVD ISO file on the host file system that is mounted on a virtual DVD drive.

2.6.4. Configure guest caching for disk

Configure your disk devices to do caching by the guest and not by the host.

Ensure that the driver element of the disk device includes the cache="none" and io="native" parameters.

<disk type="block" device="disk">

<driver name="qemu" type="raw" cache="none" io="native" iothread="1"/>

...

</disk>

2.6.5. Exclude the memory balloon device

Unless you need a dynamic memory size, do not define a memory balloon device and ensure that libvirt does not create one for you. Include the memballoon parameter as a child of the devices element in your domain configuration XML file.

To prevent libvirt from creating the device, set the model to none:

<memballoon model="none"/>

2.6.6. Tune the CPU migration algorithm of the host scheduler

Do not change the scheduler settings unless you are an expert who understands the implications. Do not apply changes to production systems without testing them and confirming that they have the intended effect.

The kernel.sched_migration_cost_ns parameter specifies a time interval in nanoseconds. After the last execution of a task, the CPU cache is considered to have useful content until this interval expires. Increasing this interval results in fewer task migrations. The default value is 500000 ns.

If the CPU idle time is higher than expected when there are runnable processes, try reducing this interval. If tasks bounce between CPUs or nodes too often, try increasing it.

To dynamically set the interval to 60000 ns, enter the following command:

# sysctl kernel.sched_migration_cost_ns=60000

To persistently change the value to 60000 ns, add the following entry to /etc/sysctl.conf:

kernel.sched_migration_cost_ns=60000

2.6.7. Disable the cpuset cgroup controller

This setting applies only to KVM hosts with cgroups version 1. To enable CPU hotplug on the host, disable the cpuset cgroup controller.

Procedure

- Open /etc/libvirt/qemu.conf with an editor of your choice.

- Go to the cgroup_controllers line.

- Duplicate the entire line and remove the leading number sign (#) from the copy.

- Remove the cpuset entry, as follows:

cgroup_controllers = [ "cpu", "devices", "memory", "blkio", "cpuacct" ]

- For the new setting to take effect, you must restart the libvirtd daemon:

  - Stop all virtual machines.

  - Run the following command:

# systemctl restart libvirtd

  - Restart the virtual machines.

This setting persists across host reboots.
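The editing steps of this procedure can be sketched as a text transformation. The function `disable_cpuset` is hypothetical, and the commented default line used in the usage example is an assumption about what the file contains; check your own /etc/libvirt/qemu.conf before relying on it. The sketch only returns the new text. It does not write the file or restart libvirtd.

```python
import re

# Matches a commented-out setting such as:
#   #cgroup_controllers = [ "cpu", "devices", "cpuset" ]
COMMENTED_SETTING = re.compile(r'^\s*#\s*cgroup_controllers\s*=\s*\[(.*)\]\s*$')


def disable_cpuset(conf_text: str) -> str:
    """Duplicate the commented cgroup_controllers line, uncomment the copy,
    and drop the cpuset entry from it. All other lines are kept unchanged."""
    result = []
    for line in conf_text.splitlines():
        result.append(line)
        found = COMMENTED_SETTING.match(line)
        if found:
            controllers = re.findall(r'"([^"]+)"', found.group(1))
            kept = [name for name in controllers if name != "cpuset"]
            quoted = ", ".join('"{}"'.format(name) for name in kept)
            result.append("cgroup_controllers = [ {} ]".format(quoted))
    return "\n".join(result) + "\n"


if __name__ == "__main__":
    # Assumed shape of the commented default line.
    sample = '#cgroup_controllers = [ "cpu", "devices", "memory", "blkio", "cpuset", "cpuacct" ]\n'
    print(disable_cpuset(sample), end="")
```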

2.6.8. Tune the polling period for idle virtual CPUs

When a virtual CPU becomes idle, KVM polls for wakeup conditions for the virtual CPU before allocating the host resource. You can specify the time interval during which polling takes place in sysfs at /sys/module/kvm/parameters/halt_poll_ns. During the specified time, polling reduces the wakeup latency for the virtual CPU at the expense of resource usage. Depending on the workload, a longer or shorter time for polling can be beneficial. The time interval is specified in nanoseconds. The default is 50000 ns.

To optimize for low CPU consumption, enter a small value or write 0 to disable polling:

# echo 0 > /sys/module/kvm/parameters/halt_poll_ns

To optimize for low latency, for example for transactional workloads, enter a large value:

# echo 80000 > /sys/module/kvm/parameters/halt_poll_ns

Chapter 3. Recommended cluster scaling practices

The guidance in this section is only relevant for installations with cloud provider integration.

These guidelines apply to OpenShift Container Platform with software-defined networking (SDN), not Open Virtual Network (OVN).

Apply the following best practices to scale the number of worker machines in your OpenShift Container Platform cluster. You scale the worker machines by increasing or decreasing the number of replicas that are defined in the worker machine set.

3.1. Recommended practices for scaling the cluster

When scaling up the cluster to higher node counts:

- Spread nodes across all of the available zones for higher availability.

- Scale up by no more than 25 to 50 machines at once.

- Consider creating new machine sets in each available zone with alternative instance types of similar size to help mitigate any periodic provider capacity constraints. For example, on AWS, use m5.large and m5d.large.

Cloud providers might implement a quota for API services. Therefore, gradually scale the cluster.

The controller might not be able to create the machines if the replicas in the machine sets are set to higher numbers all at one time. The process is limited by the number of requests that the cloud platform on which OpenShift Container Platform is deployed can handle. While creating, checking, and updating the machines with their status, the controller sends more queries. Because the cloud platform has API request limits, excessive queries might lead to machine creation failures.
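Gradual scaling can be planned as a series of intermediate replica counts. The sketch below uses a hypothetical helper, `scale_steps`, to split a scale-up into batches no larger than a chosen size. The default of 50 reflects the upper end of the 25 to 50 machine guidance above. Applying each step (for example with `oc scale`) and waiting for the machines to become ready is left to the operator.

```python
from typing import List


def scale_steps(current: int, target: int, batch: int = 50) -> List[int]:
    """Return the replica counts to apply, in order, when scaling up from
    current to target without adding more than batch machines per step."""
    if batch <= 0:
        raise ValueError("batch must be positive")
    if target < current:
        raise ValueError("this sketch only covers scaling up")
    steps = []
    replicas = current
    while replicas < target:
        replicas = min(replicas + batch, target)
        steps.append(replicas)
    return steps


if __name__ == "__main__":
    for replicas in scale_steps(current=10, target=130, batch=50):
        print(f"scale machine set to {replicas} replicas, then wait for readiness")
```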

Enable machine health checks when scaling to large node counts. In case of failures, the health checks monitor the condition and automatically repair unhealthy machines.

Scaling large and dense clusters to lower node counts might take a long time because the process involves draining or evicting the objects running on the nodes that are being terminated in parallel. Also, the client might start to throttle the requests if there are too many objects to evict. The default client QPS and burst rates are currently set to 5 and 10 respectively, and they cannot be modified in OpenShift Container Platform.
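To get a feel for how client throttling affects scale-down time, the following sketch estimates a lower bound for the time needed to send a given number of eviction requests. It assumes a simple token-bucket model (the first `burst` requests go out immediately, the rest at `qps` requests per second) and one request per evicted object. Both are simplifying assumptions of this example, and real drains take longer because of graceful termination and API latency. The function name `min_eviction_seconds` is hypothetical.

```python
def min_eviction_seconds(objects: int, qps: float = 5.0, burst: int = 10) -> float:
    """Lower-bound estimate, in seconds, for issuing one request per object
    under a token-bucket limit with the given QPS and burst values."""
    if objects < 0 or burst < 0:
        raise ValueError("objects and burst must not be negative")
    if qps <= 0:
        raise ValueError("qps must be positive")
    return max(0, objects - burst) / qps


if __name__ == "__main__":
    for count in (100, 5000):
        print(count, "objects:", min_eviction_seconds(count), "seconds at least")
```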

3.2. Modifying a machine set

To make changes to a machine set, edit the MachineSet YAML. Then, remove all machines associated with the machine set by deleting each machine or scaling down the machine set to 0 replicas. Then, scale the replicas back to the desired number. Changes you make to a machine set do not affect existing machines.

If you need to scale a machine set without making other changes, you do not need to delete the machines.

By default, the OpenShift Container Platform router pods are deployed on workers. Because the router is required to access some cluster resources, including the web console, do not scale the worker machine set to 0 unless you first relocate the router pods.

Prerequisites

- Install an OpenShift Container Platform cluster and the oc command line.

- Log in to oc as a user with cluster-admin permission.

Procedure

Edit the machine set:

$ oc edit machineset <machineset> -n openshift-machine-api

Scale down the machine set to 0:

$ oc scale --replicas=0 machineset <machineset> -n openshift-machine-api

Or:

$ oc edit machineset <machineset> -n openshift-machine-api

Tip: You can alternatively apply the following YAML to scale the machine set:

Wait for the machines to be removed.

Scale up the machine set as needed:

$ oc scale --replicas=2 machineset <machineset> -n openshift-machine-api

Or:

$ oc edit machineset <machineset> -n openshift-machine-api

Tip: You can alternatively apply the following YAML to scale the machine set:

Wait for the machines to start. The new machines contain changes you made to the machine set.

3.3. About machine health checks

Machine health checks automatically repair unhealthy machines in a particular machine pool.

To monitor machine health, create a resource to define the configuration for a controller. Set a condition to check, such as staying in the NotReady status for five minutes or displaying a permanent condition in the node-problem-detector, and a label for the set of machines to monitor.

You cannot apply a machine health check to a machine with the master role.

The controller that observes a MachineHealthCheck resource checks for the defined condition. If a machine fails the health check, the machine is automatically deleted and one is created to take its place. When a machine is deleted, you see a machine deleted event.

To limit the disruptive impact of the machine deletion, the controller drains and deletes only one node at a time. If there are more unhealthy machines than the maxUnhealthy threshold allows for in the targeted pool of machines, remediation stops so that you can intervene manually.

Consider the timeouts carefully, accounting for workloads and requirements.

- Long timeouts can result in long periods of downtime for the workload on the unhealthy machine.

- Timeouts that are too short can result in a remediation loop. For example, the timeout for checking the NotReady status must be long enough to allow the machine to complete the startup process.

To stop the check, remove the resource.

For example, you should stop the check during the upgrade process because the nodes in the cluster might become temporarily unavailable. The MachineHealthCheck might identify such nodes as unhealthy and reboot them. To avoid rebooting such nodes, remove any MachineHealthCheck resource that you have deployed before updating the cluster. However, a MachineHealthCheck resource that is deployed by default (such as machine-api-termination-handler) cannot be removed and will be recreated.

3.3.1. Limitations when deploying machine health checks

There are limitations to consider before deploying a machine health check:

- Only machines owned by a machine set are remediated by a machine health check.

- Control plane machines are not currently supported and are not remediated if they are unhealthy.

- If the node for a machine is removed from the cluster, a machine health check considers the machine to be unhealthy and remediates it immediately.

- If the corresponding node for a machine does not join the cluster after the nodeStartupTimeout, the machine is remediated.

- A machine is remediated immediately if the Machine resource phase is Failed.

3.4. Sample MachineHealthCheck resource

The MachineHealthCheck resource for all cloud-based installation types other than bare metal resembles the following YAML file:

- 1: Specify the name of the machine health check to deploy.

- 2, 3: Specify a label for the machine pool that you want to check.

- 4: Specify the machine set to track in <cluster_name>-<label>-<zone> format. For example, prod-node-us-east-1a.

- 5, 6: Specify the timeout duration for a node condition. If a condition is met for the duration of the timeout, the machine will be remediated. Long timeouts can result in long periods of downtime for a workload on an unhealthy machine.

- 7: Specify the number of machines allowed to be concurrently remediated in the targeted pool. This can be set as a percentage or an integer. If the number of unhealthy machines exceeds the limit set by maxUnhealthy, remediation is not performed.

- 8: Specify the timeout duration that a machine health check must wait for a node to join the cluster before a machine is determined to be unhealthy.

The matchLabels are examples only; you must map your machine groups based on your specific needs.
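The fields described in the callouts can be assembled programmatically. The following sketch builds a MachineHealthCheck manifest as a Python dictionary and prints it as JSON, which `oc apply -f` also accepts. The helper name `machine_health_check` is hypothetical. The field and label names follow the machine.openshift.io/v1beta1 API as best understood here and should be verified against your cluster. The default values (300s, 40%, 10m) are illustrative assumptions, not recommendations.

```python
import json


def machine_health_check(name, role, machineset,
                         timeout="300s", max_unhealthy="40%",
                         node_startup_timeout="10m"):
    """Return a MachineHealthCheck manifest as a dictionary."""
    return {
        "apiVersion": "machine.openshift.io/v1beta1",
        "kind": "MachineHealthCheck",
        "metadata": {"name": name, "namespace": "openshift-machine-api"},
        "spec": {
            # Labels that select the machine pool to check (examples only).
            "selector": {
                "matchLabels": {
                    "machine.openshift.io/cluster-api-machine-role": role,
                    "machine.openshift.io/cluster-api-machine-type": role,
                    "machine.openshift.io/cluster-api-machineset": machineset,
                }
            },
            # Node conditions that mark a machine unhealthy once the timeout passes.
            "unhealthyConditions": [
                {"type": "Ready", "status": "False", "timeout": timeout},
                {"type": "Ready", "status": "Unknown", "timeout": timeout},
            ],
            "maxUnhealthy": max_unhealthy,
            "nodeStartupTimeout": node_startup_timeout,
        },
    }


if __name__ == "__main__":
    manifest = machine_health_check("example", "worker", "prod-node-us-east-1a")
    print(json.dumps(manifest, indent=2))
```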

3.4.1. Short-circuiting machine health check remediation

Short-circuiting ensures that machine health checks remediate machines only when the cluster is healthy. Short-circuiting is configured through the maxUnhealthy field in the MachineHealthCheck resource.

If the user defines a value for the maxUnhealthy field, before remediating any machines, the MachineHealthCheck compares the value of maxUnhealthy with the number of machines within its target pool that it has determined to be unhealthy. Remediation is not performed if the number of unhealthy machines exceeds the maxUnhealthy limit.

If maxUnhealthy is not set, the value defaults to 100% and the machines are remediated regardless of the state of the cluster.

The appropriate maxUnhealthy value depends on the scale of the cluster you deploy and how many machines the MachineHealthCheck covers. For example, you can use the maxUnhealthy value to cover multiple machine sets across multiple availability zones so that if you lose an entire zone, your maxUnhealthy setting prevents further remediation within the cluster.

The maxUnhealthy field can be set as either an integer or percentage. There are different remediation implementations depending on the maxUnhealthy value.

3.4.1.1. Setting maxUnhealthy by using an absolute value

If maxUnhealthy is set to 2:

- Remediation will be performed if 2 or fewer nodes are unhealthy

- Remediation will not be performed if 3 or more nodes are unhealthy

These values are independent of how many machines are being checked by the machine health check.

3.4.1.2. Setting maxUnhealthy by using percentages

If maxUnhealthy is set to 40% and there are 25 machines being checked:

- Remediation will be performed if 10 or fewer nodes are unhealthy

- Remediation will not be performed if 11 or more nodes are unhealthy

If maxUnhealthy is set to 40% and there are 6 machines being checked:

- Remediation will be performed if 2 or fewer nodes are unhealthy

- Remediation will not be performed if 3 or more nodes are unhealthy

The allowed number of machines is rounded down when the percentage of maxUnhealthy machines that are checked is not a whole number.
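The absolute and percentage cases above reduce to one small calculation. The sketch below, with the hypothetical helpers `allowed_unhealthy` and `remediation_allowed`, reproduces the documented examples using integer arithmetic so that percentages are rounded down. It illustrates the arithmetic only and is not the controller's actual implementation.

```python
from typing import Union


def allowed_unhealthy(max_unhealthy: Union[int, str], total_machines: int) -> int:
    """Return how many unhealthy machines still permit remediation.

    max_unhealthy is either an integer (absolute value) or a string such as
    "40%". Percentages are rounded down to a whole number of machines.
    """
    if isinstance(max_unhealthy, str) and max_unhealthy.endswith("%"):
        percent = int(max_unhealthy[:-1])
        return (percent * total_machines) // 100
    return int(max_unhealthy)


def remediation_allowed(max_unhealthy: Union[int, str],
                        total_machines: int, unhealthy: int) -> bool:
    """True if the number of unhealthy machines does not exceed the limit."""
    return unhealthy <= allowed_unhealthy(max_unhealthy, total_machines)


if __name__ == "__main__":
    print(allowed_unhealthy("40%", 25))
    print(allowed_unhealthy("40%", 6))
    print(remediation_allowed(2, 100, 3))
```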

3.5. Creating a MachineHealthCheck resource

You can create a MachineHealthCheck resource for all MachineSets in your cluster. You should not create a MachineHealthCheck resource that targets control plane machines.

Prerequisites

- Install the oc command line interface.

Procedure

- Create a healthcheck.yml file that contains the definition of your machine health check.

- Apply the healthcheck.yml file to your cluster:

$ oc apply -f healthcheck.yml

Chapter 4. Using the Node Tuning Operator

Learn about the Node Tuning Operator and how you can use it to manage node-level tuning by orchestrating the TuneD daemon.

4.1. About the Node Tuning Operator

The Node Tuning Operator helps you manage node-level tuning by orchestrating the TuneD daemon. The majority of high-performance applications require some level of kernel tuning. The Node Tuning Operator provides a unified management interface to users of node-level sysctls and more flexibility to add custom tuning specified by user needs.

The Operator manages the containerized TuneD daemon for OpenShift Container Platform as a Kubernetes daemon set. It ensures the custom tuning specification is passed to all containerized TuneD daemons running in the cluster in the format that the daemons understand. The daemons run on all nodes in the cluster, one per node.

Node-level settings applied by the containerized TuneD daemon are rolled back on an event that triggers a profile change or when the containerized TuneD daemon is terminated gracefully by receiving and handling a termination signal.

The Node Tuning Operator is part of a standard OpenShift Container Platform installation in version 4.1 and later.

4.2. Accessing an example Node Tuning Operator specification

Use this process to access an example Node Tuning Operator specification.

Procedure

Run:

$ oc get Tuned/default -o yaml -n openshift-cluster-node-tuning-operator

The default CR is meant for delivering standard node-level tuning for OpenShift Container Platform and it can only be modified to set the Operator Management state. Any other custom changes to the default CR will be overwritten by the Operator. For custom tuning, create your own Tuned CRs. Newly created CRs are combined with the default CR, and custom tuning is applied to OpenShift Container Platform nodes based on node or pod labels and profile priorities.

While in certain situations the support for pod labels can be a convenient way of automatically delivering required tuning, this practice is strongly discouraged, especially in large-scale clusters. The default Tuned CR ships without pod label matching. If a custom profile is created with pod label matching, then the functionality will be enabled at that time. The pod label functionality might be deprecated in future versions of the Node Tuning Operator.

4.3. Default profiles set on a cluster

The following are the default profiles set on a cluster.

4.4. Verifying that the TuneD profiles are applied

Verify the TuneD profiles that are applied to your cluster node.

$ oc get profile -n openshift-cluster-node-tuning-operator

Example output

- NAME: Name of the Profile object. There is one Profile object per node and their names match.

- TUNED: Name of the desired TuneD profile to apply.

- APPLIED: True if the TuneD daemon applied the desired profile. (True/False/Unknown).

- DEGRADED: True if any errors were reported during application of the TuneD profile (True/False/Unknown).

- AGE: Time elapsed since the creation of Profile object.

4.5. Custom tuning specification

The custom resource (CR) for the Operator has two major sections. The first section, profile:, is a list of TuneD profiles and their names. The second, recommend:, defines the profile selection logic.

Multiple custom tuning specifications can co-exist as multiple CRs in the Operator’s namespace. The existence of new CRs or the deletion of old CRs is detected by the Operator. All existing custom tuning specifications are merged and appropriate objects for the containerized TuneD daemons are updated.

Management state

The Operator Management state is set by adjusting the default Tuned CR. By default, the Operator is in the Managed state and the spec.managementState field is not present in the default Tuned CR. Valid values for the Operator Management state are as follows:

- Managed: the Operator will update its operands as configuration resources are updated

- Unmanaged: the Operator will ignore changes to the configuration resources

- Removed: the Operator will remove its operands and resources the Operator provisioned

Profile data

The profile: section lists TuneD profiles and their names.

Recommended profiles

The profile: selection logic is defined by the recommend: section of the CR. The recommend: section is a list of items that recommend profiles based on selection criteria.

recommend:

<recommend-item-1>

# ...

<recommend-item-n>

The individual items of the list:

- 1: Optional.

- 2: A dictionary of key/value MachineConfig labels. The keys must be unique.

- 3: If omitted, profile match is assumed unless a profile with a higher priority matches first or machineConfigLabels is set.

- 4: An optional list.

- 5: Profile ordering priority. Lower numbers mean higher priority (0 is the highest priority).

- 6: A TuneD profile to apply on a match. For example tuned_profile_1.

- 7: Optional operand configuration.

- 8: Turn debugging on or off for the TuneD daemon. Options are true for on or false for off. The default is false.

<match> is an optional list recursively defined as follows:

- label: <label_name>

value: <label_value>

type: <label_type>

<match>

If <match> is not omitted, all nested <match> sections must also evaluate to true. Otherwise, false is assumed and the profile with the respective <match> section will not be applied or recommended. Therefore, the nesting (child <match> sections) works as a logical AND operator. Conversely, if any item of the <match> list matches, the entire <match> list evaluates to true. Therefore, the list acts as a logical OR operator.
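The AND/OR semantics of nested <match> sections can be sketched as a short recursive evaluator. This is an illustration of the logic only, not the Operator's code. The function names are hypothetical, and two details are assumptions of this sketch: an item without a `value` matches on the presence of the label alone, and an item without a `type` is checked against node labels.

```python
from typing import Dict, List


def item_matches(item: dict, node_labels: Dict[str, str],
                 pod_labels: Dict[str, str]) -> bool:
    """Evaluate one <match> item, including its nested <match> list (logical AND)."""
    labels = pod_labels if item.get("type", "node") == "pod" else node_labels
    name = item["label"]
    if name not in labels:
        return False
    if "value" in item and labels[name] != item["value"]:
        return False
    nested = item.get("match")
    if not nested:
        return True
    # The item matched, so the nested list must evaluate to true as well.
    return match_list(nested, node_labels, pod_labels)


def match_list(items: List[dict], node_labels: Dict[str, str],
               pod_labels: Dict[str, str]) -> bool:
    """Evaluate a <match> list: true if any item matches (logical OR)."""
    return any(item_matches(item, node_labels, pod_labels) for item in items)


if __name__ == "__main__":
    # A pod label that must coincide with a master or infra node label.
    match = [{
        "label": "tuned.openshift.io/elasticsearch",
        "type": "pod",
        "match": [
            {"label": "node-role.kubernetes.io/master"},
            {"label": "node-role.kubernetes.io/infra"},
        ],
    }]
    pods = {"tuned.openshift.io/elasticsearch": ""}
    print(match_list(match, {"node-role.kubernetes.io/infra": ""}, pods))
    print(match_list(match, {"node-role.kubernetes.io/worker": ""}, pods))
```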

If machineConfigLabels is defined, machine config pool based matching is turned on for the given recommend: list item. <mcLabels> specifies the labels for a machine config. The machine config is created automatically to apply host settings, such as kernel boot parameters, for the profile <tuned_profile_name>. This involves finding all machine config pools with machine config selector matching <mcLabels> and setting the profile <tuned_profile_name> on all nodes that are assigned the found machine config pools. To target nodes that have both master and worker roles, you must use the master role.

The list items match and machineConfigLabels are connected by the logical OR operator. The match item is evaluated first in a short-circuit manner. Therefore, if it evaluates to true, the machineConfigLabels item is not considered.

When using machine config pool based matching, it is advised to group nodes with the same hardware configuration into the same machine config pool. Not following this practice might result in TuneD operands calculating conflicting kernel parameters for two or more nodes sharing the same machine config pool.

Example: node or pod label based matching

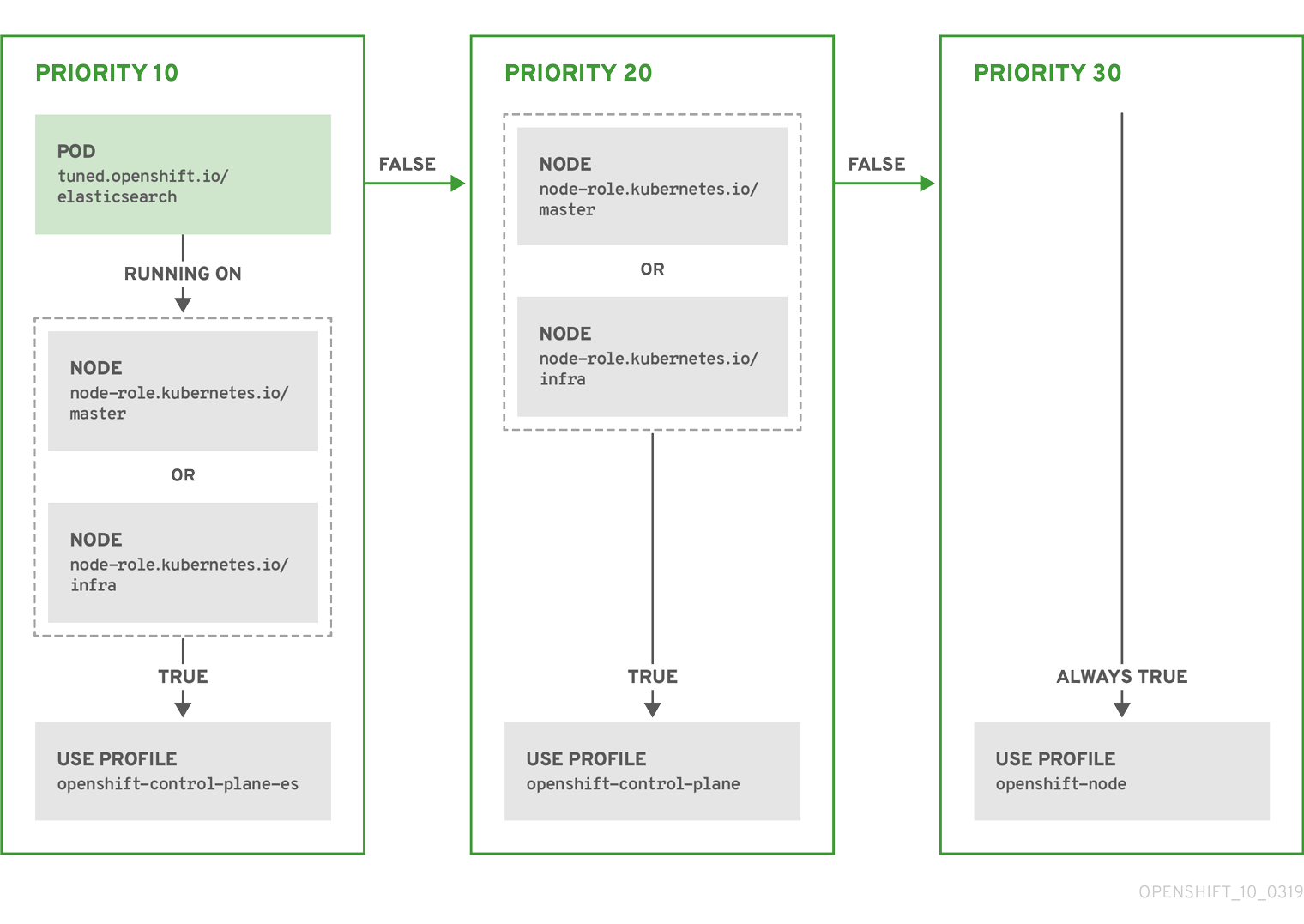
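For readers who cannot view the image, the following is a sketch of a Tuned CR that is consistent with the description in this section. It is not a verbatim copy of the original example: the metadata.name value and the priority of 20 for the second profile are assumptions, while the profile names, labels, and the priorities 10 and 30 come from the surrounding text.

```yaml
# Sketch only: reconstructed from the prose description, not the original example.
apiVersion: tuned.openshift.io/v1
kind: Tuned
metadata:
  name: openshift-node-custom          # assumed name
  namespace: openshift-cluster-node-tuning-operator
spec:
  recommend:
  # Highest priority: requires a pod with the elasticsearch label on the node
  # AND a master or infra node label.
  - match:
    - label: tuned.openshift.io/elasticsearch
      type: pod
      match:
      - label: node-role.kubernetes.io/master
      - label: node-role.kubernetes.io/infra
    priority: 10
    profile: openshift-control-plane-es
  # Second highest priority: master or infra node label.
  - match:
    - label: node-role.kubernetes.io/master
    - label: node-role.kubernetes.io/infra
    priority: 20                       # assumed value between 10 and 30
    profile: openshift-control-plane
  # Catch-all: no match section, so it always matches.
  - priority: 30
    profile: openshift-node
```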

The CR above is translated for the containerized TuneD daemon into its recommend.conf file based on the profile priorities. The profile with the highest priority (10) is openshift-control-plane-es and, therefore, it is considered first. The containerized TuneD daemon running on a given node looks to see if there is a pod running on the same node with the tuned.openshift.io/elasticsearch label set. If not, the entire <match> section evaluates as false. If there is such a pod with the label, in order for the <match> section to evaluate to true, the node label also needs to be node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

If the labels for the profile with priority 10 match, the openshift-control-plane-es profile is applied and no other profile is considered. If the node/pod label combination does not match, the second highest priority profile (openshift-control-plane) is considered. This profile is applied if the containerized TuneD pod runs on a node with labels node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

Finally, the profile openshift-node has the lowest priority of 30. It lacks the <match> section and, therefore, always matches. It acts as a catch-all that sets the openshift-node profile if no other profile with a higher priority matches on a given node.

Example: machine config pool based matching
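A Tuned CR that uses machine config pool based matching might look like the following sketch. The CR name, the profile content, the priority, and the worker-custom role label are assumptions made for illustration; only the machineConfigLabels, priority, and profile keys of the recommend: item are taken from the description earlier in this chapter.

```yaml
# Sketch only: names and values are illustrative assumptions.
apiVersion: tuned.openshift.io/v1
kind: Tuned
metadata:
  name: openshift-node-custom          # assumed name
  namespace: openshift-cluster-node-tuning-operator
spec:
  profile:
  - name: openshift-node-custom
    data: |
      [main]
      summary=Custom OpenShift node profile (illustrative)
      include=openshift-node
      [sysctl]
      # Placeholder setting; replace with the tuning you need.
      vm.dirty_ratio=10
  recommend:
  # Matches machine config pools whose machine config selector
  # matches the labels below (assumed custom pool "worker-custom").
  - machineConfigLabels:
      machineconfiguration.openshift.io/role: "worker-custom"
    priority: 20
    profile: openshift-node-custom
```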

To minimize node reboots, label the target nodes with a label the machine config pool’s node selector will match, then create the Tuned CR above and finally create the custom machine config pool itself.

4.6. Custom tuning examples

Using TuneD profiles from the default CR

The following CR applies custom node-level tuning for OpenShift Container Platform nodes with label tuned.openshift.io/ingress-node-label set to any value.

Example: custom tuning using the openshift-control-plane TuneD profile
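A CR of this kind might look like the following sketch. The CR and profile names, the priority, and the sysctl settings are assumptions for illustration; the match label and the inclusion of the default openshift-control-plane profile follow the text of this section.

```yaml
# Sketch only: names, priority, and sysctl values are illustrative assumptions.
apiVersion: tuned.openshift.io/v1
kind: Tuned
metadata:
  name: ingress                        # assumed name
  namespace: openshift-cluster-node-tuning-operator
spec:
  profile:
  - name: openshift-ingress            # assumed profile name
    data: |
      [main]
      summary=A custom OpenShift ingress profile
      # Build on the default profile shipped in the default Tuned CR.
      include=openshift-control-plane
      [sysctl]
      net.ipv4.ip_local_port_range="1024 65535"
      net.ipv4.tcp_tw_reuse=1
  recommend:
  # No value given: the label only needs to be present, with any value.
  - match:
    - label: tuned.openshift.io/ingress-node-label
    priority: 10
    profile: openshift-ingress
```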

Custom profile writers are strongly encouraged to include the default TuneD daemon profiles shipped within the default Tuned CR. The example above uses the default openshift-control-plane profile to accomplish this.

Using built-in TuneD profiles

Given the successful rollout of the NTO-managed daemon set, the TuneD operands all manage the same version of the TuneD daemon. To list the built-in TuneD profiles supported by the daemon, query any TuneD pod in the following way:

oc exec $tuned_pod -n openshift-cluster-node-tuning-operator -- find /usr/lib/tuned/ -name tuned.conf -printf '%h\n' | sed 's|^.*/||'

You can use the profile names retrieved by this command in your custom tuning specification.

Example: using built-in hpc-compute TuneD profile
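A CR of this kind might look like the following sketch. The CR name, the match label, and the priority are assumptions for illustration; the combination of the built-in hpc-compute profile with the openshift-node profile follows the text of this section.

```yaml
# Sketch only: names, label, and priority are illustrative assumptions.
apiVersion: tuned.openshift.io/v1
kind: Tuned
metadata:
  name: openshift-node-hpc-compute     # assumed name
  namespace: openshift-cluster-node-tuning-operator
spec:
  profile:
  - name: openshift-node-hpc-compute
    data: |
      [main]
      summary=Custom OpenShift node profile for HPC compute workloads
      # Combine OpenShift-specific node tuning with the built-in profile.
      include=openshift-node,hpc-compute
  recommend:
  - match:
    - label: tuned.openshift.io/openshift-node-hpc-compute   # assumed label
    priority: 20
    profile: openshift-node-hpc-compute
```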

In addition to the built-in hpc-compute profile, the example above includes the openshift-node TuneD daemon profile shipped within the default Tuned CR to use OpenShift-specific tuning for compute nodes.

4.7. Supported TuneD daemon plugins

Excluding the [main] section, the following TuneD plugins are supported when using custom profiles defined in the profile: section of the Tuned CR:

- audio

- cpu

- disk

- eeepc_she

- modules

- mounts

- net

- scheduler

- scsi_host

- selinux

- sysctl

- sysfs

- usb

- video

- vm

Some of these plugins provide dynamic tuning functionality that is not supported. The following TuneD plugins are currently not supported:

- bootloader

- script

- systemd

See Available TuneD Plugins and Getting Started with TuneD for more information.

Chapter 5. Using Cluster Loader

Cluster Loader is a tool that deploys large numbers of various objects to a cluster, which creates user-defined cluster objects. Build, configure, and run Cluster Loader to measure performance metrics of your OpenShift Container Platform deployment at various cluster states.

Cluster Loader is now deprecated and will be removed in a future release.

5.1. Installing Cluster Loader

Procedure

To pull the container image, run:

podman pull quay.io/openshift/origin-tests:4.8


5.2. Running Cluster Loader

Prerequisites

- The repository will prompt you to authenticate. The registry credentials allow you to access the image, which is not publicly available. Use your existing authentication credentials from installation.

Procedure

Execute Cluster Loader using the built-in test configuration, which deploys five template builds and waits for them to complete:

    podman run -v ${LOCAL_KUBECONFIG}:/root/.kube/config:z -i \
    quay.io/openshift/origin-tests:4.8 /bin/bash -c 'export KUBECONFIG=/root/.kube/config && \
    openshift-tests run-test "[sig-scalability][Feature:Performance] Load cluster \
    should populate the cluster [Slow][Serial] [Suite:openshift]"'

Alternatively, execute Cluster Loader with a user-defined configuration by setting the VIPERCONFIG environment variable.

Here, ${LOCAL_KUBECONFIG} refers to the path to the kubeconfig on your local file system. For a user-defined configuration, a directory called ${LOCAL_CONFIG_FILE_PATH}, which contains a configuration file called test.yaml, is mounted into the container. Additionally, if test.yaml references any external template files or podspec files, they should also be mounted into the container.

5.3. Configuring Cluster Loader

The tool creates multiple namespaces (projects), which contain multiple templates or pods.

5.3.1. Example Cluster Loader configuration file

Cluster Loader’s configuration file is a basic YAML file:
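A configuration of this shape might look like the following sketch. The field names are given as recalled from Cluster Loader configurations and should be verified against your version; the project base name, template file, and numeric values are assumptions for illustration. The comments mark the positions that the numbered notes refer to.

```yaml
# Sketch only: verify field names against your Cluster Loader version;
# the base name, template file, and values are illustrative assumptions.
provider: local                 # (1)
ClusterLoader:
  cleanup: true
  projects:
    - num: 1
      basename: clusterloader-cakephp-mysql
      tuning: default           # refers to the tuning set named below
      ifexists: reuse
      templates:
        - num: 1
          file: cakephp-mysql.json
  tuningsets:                   # (2)
    - name: default
      pods:
        stepping:               # (3)
          stepsize: 5
          pause: 0 s
        rate_limit:             # (4)
          delay: 0 ms
```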

- 1

- Optional setting for end-to-end tests. Set to local to avoid extra log messages.

- 2

- The tuning sets allow rate limiting and stepping, the ability to create several batches of pods while pausing in between sets. Cluster Loader monitors completion of the previous step before continuing.

- 3

- Stepping will pause for M seconds after each N objects are created.

- 4

- Rate limiting will wait M milliseconds between the creation of objects.

This example assumes that references to any external template files or pod spec files are also mounted into the container.

If you are running Cluster Loader on Microsoft Azure, then you must set the AZURE_AUTH_LOCATION variable to a file that contains the output of terraform.azure.auto.tfvars.json, which is present in the installer directory.

5.3.2. Configuration fields

| Field | Description |
|---|---|
| | Set to |
| | A sub-object with one or many definition(s). Under |
| | A sub-object with one definition per configuration. |
| | An optional sub-object with one definition per configuration. Adds synchronization possibilities during object creation. |

| Field | Description |
|---|---|
| | An integer. One definition of the count of how many projects to create. |
| | A string. One definition of the base name for the project. The count of identical namespaces will be appended to |
| | A string. One definition of what tuning set you want to apply to the objects, which you deploy inside this namespace. |
| | A string containing either |
| | A list of key-value pairs. The key is the config map name and the value is a path to a file from which you create the config map. |
| | A list of key-value pairs. The key is the secret name and the value is a path to a file from which you create the secret. |
| | A sub-object with one or many definition(s) of pods to deploy. |
| | A sub-object with one or many definition(s) of templates to deploy. |

| Field | Description |
|---|---|
| | An integer. The number of pods or templates to deploy. |
| | A string. The docker image URL to a repository where it can be pulled. |
| | A string. One definition of the base name for the template (or pod) that you want to create. |
| | A string. The path to a local file, which is either a pod spec or template to be created. |
| | Key-value pairs. Under |

| Field | Description |
|---|---|
| | A string. The name of the tuning set which will match the name specified when defining a tuning in a project. |
| | A sub-object identifying the |
| | A sub-object identifying the |

| Field | Description |
|---|---|
| | A sub-object. A stepping configuration used if you want to create an object in a step creation pattern. |
| | A sub-object. A rate-limiting tuning set configuration to limit the object creation rate. |

| Field | Description |
|---|---|
| | An integer. How many objects to create before pausing object creation. |
| | An integer. How many seconds to pause after creating the number of objects defined in |
| | An integer. How many seconds to wait before failure if the object creation is not successful. |
| | An integer. How many milliseconds (ms) to wait between creation requests. |

| Field | Description |
|---|---|
| | A sub-object with |
| | A boolean. Wait for pods with labels matching |
| | A boolean. Wait for pods with labels matching |
| | A list of selectors to match pods in |
| | A string. The synchronization timeout period to wait for pods in |

5.4. Known issues

- Cluster Loader fails when called without configuration. (BZ#1761925)

- If the IDENTIFIER parameter is not defined in user templates, template creation fails with error: unknown parameter name "IDENTIFIER". If you deploy templates, add this parameter to your template to avoid this error:

    { "name": "IDENTIFIER", "description": "Number to append to the name of resources", "value": "1" }

  If you deploy pods, adding the parameter is unnecessary.

Chapter 6. Using CPU Manager

CPU Manager manages groups of CPUs and constrains workloads to specific CPUs.

CPU Manager is useful for workloads that have some of these attributes:

- Require as much CPU time as possible.

- Are sensitive to processor cache misses.

- Are low-latency network applications.

- Coordinate with other processes and benefit from sharing a single processor cache.

6.1. Setting up CPU Manager

Procedure

Optional: Label a node:

oc label node perf-node.example.com cpumanager=true

Edit the MachineConfigPool of the nodes where CPU Manager should be enabled. In this example, all workers have CPU Manager enabled:

oc edit machineconfigpool worker

Add a label to the worker machine config pool:

    metadata:
      creationTimestamp: 2020-xx-xxx
      generation: 3
      labels:
        custom-kubelet: cpumanager-enabled

Create a