5.4. Creating Distributed Volumes

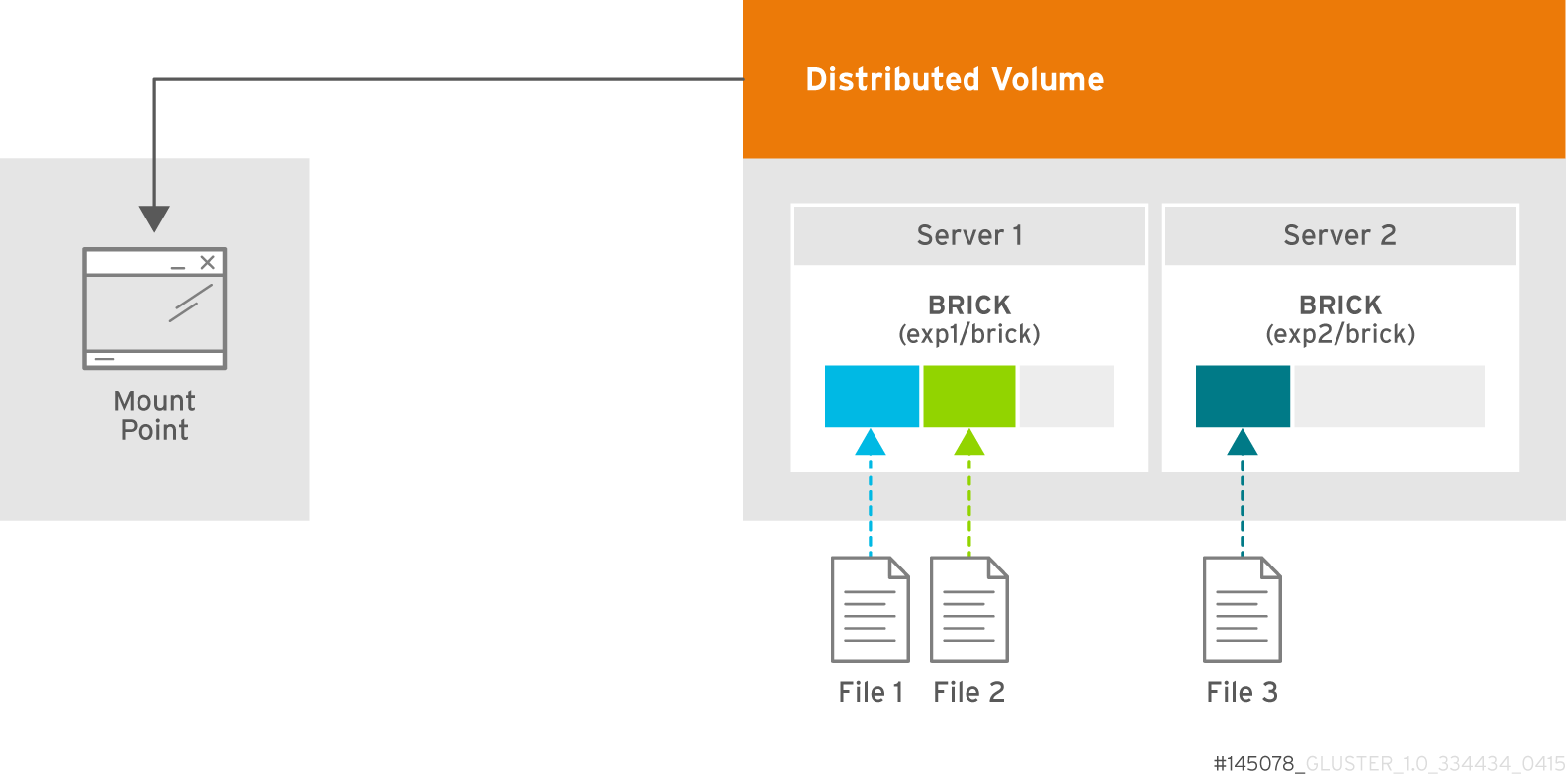

This type of volume spreads files across the bricks in the volume.

Figure 5.1. Illustration of a Distributed Volume
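To make the idea of spreading files across bricks concrete, the following is a minimal sketch that maps each file name to one brick by hashing the name. It is an illustration only: the `pick_brick` function is hypothetical, `cksum` is a stand-in hash, and Gluster's actual placement logic is not reproduced here. The brick names are borrowed from the examples later in this section.

```shell
#!/bin/sh
# Illustrative only: hash a file name to choose one brick.
# This is NOT Gluster's real placement algorithm; cksum is a stand-in hash
# and pick_brick is a hypothetical helper.

# pick_brick FILE BRICK...  prints the brick chosen for FILE.
pick_brick() (
    [ "$#" -ge 2 ] || exit 1
    name=$1
    shift
    # CRC of the file name (first field of cksum's output).
    crc=$(printf '%s' "$name" | cksum | cut -d ' ' -f 1)
    # Drop (crc mod brick-count) bricks; the next one is the choice.
    shift $(( crc % $# ))
    printf '%s\n' "$1"
)

# Show where a few sample files would land on a two-brick volume.
for f in report.pdf photo.jpg notes.txt backup.tar; do
    printf '%s -> %s\n' "$f" \
        "$(pick_brick "$f" server1:/rhgs/brick1 server2:/rhgs/brick1)"
done
```

Because each file lives on exactly one brick in this model, losing a brick makes every file mapped to it unavailable, which is the risk the warning below describes.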

Warning

Distributed volumes can suffer significant data loss during a disk or server failure because directory contents are spread randomly across the bricks in the volume. They therefore require an architecture review before being used in production.

If you intend to use distributed-only volumes, contact your Red Hat account team to arrange an architecture review.

Use distributed volumes only where redundancy is either not important, or is provided by other hardware or software layers. In other cases, use one of the volume types that provide redundancy, for example distributed-replicated volumes.

Limitations of distributed-only volumes include:

- No in-service upgrades: distributed-only volumes need to be taken offline during upgrades.

- Temporary inconsistencies in directory entries and inodes when a node fails.

- I/O operations that block or fail when a node is unavailable or fails.

- Permanent loss of data.

Create a Distributed Volume

Use the gluster volume create command to create different types of volumes, and the gluster volume info command to verify successful volume creation.
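The overall sequence this section walks through (create, tune, start, verify) can be sketched as a dry run that only prints the commands in order. The helper name `plan_distributed_volume` is hypothetical, and nothing is executed against a storage pool; the printed commands use the forms that appear in this section.

```shell
#!/bin/sh
# Dry run: prints the commands in order instead of executing them.
# plan_distributed_volume is a hypothetical helper name.

# plan_distributed_volume VOLNAME BRICK...
plan_distributed_volume() (
    [ "$#" -ge 2 ] || { echo "usage: plan_distributed_volume VOLNAME BRICK..." >&2; exit 1; }
    vol=$1
    shift
    # 1. Create the volume from the listed bricks (default transport).
    printf 'gluster volume create %s %s\n' "$vol" "$*"
    # 2. Disable client I/O threads, as recommended for distributed volumes.
    printf 'gluster volume set %s performance.client-io-threads off\n' "$vol"
    # 3. Start the volume so that data can be accessed.
    printf 'gluster volume start %s\n' "$vol"
    # 4. Optionally display the volume information.
    printf 'gluster volume info %s\n' "$vol"
)

plan_distributed_volume glustervol server1:/rhgs/brick1 server2:/rhgs/brick1
```

Reviewing the printed plan before running the commands by hand is one way to catch a mistyped brick path early.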

Prerequisites

- A trusted storage pool has been created, as described in Section 4.1, “Adding Servers to the Trusted Storage Pool”.

- Understand how to start and stop volumes, as described in Section 5.10, “Starting Volumes”.

- Run the gluster volume create command to create the distributed volume.

  The syntax is gluster volume create NEW-VOLNAME [transport tcp | rdma (Deprecated) | tcp,rdma] NEW-BRICK...

  The default value for transport is tcp. Other options can be passed such as auth.allow or auth.reject. See Section 11.1, “Configuring Volume Options” for a full list of parameters.

  Red Hat recommends disabling the performance.client-io-threads option on distributed volumes, as this option tends to worsen performance. Run the following command to disable performance.client-io-threads:

      # gluster volume set VOLNAME performance.client-io-threads off

Example 5.1. Distributed Volume with Two Storage Servers

    # gluster v create glustervol server1:/rhgs/brick1 server2:/rhgs/brick1
    volume create: glustervol: success: please start the volume to access data

Example 5.2. Distributed Volume over InfiniBand with Four Servers

    # gluster v create glustervol transport rdma server1:/rhgs/brick1 server2:/rhgs/brick1 server3:/rhgs/brick1 server4:/rhgs/brick1
    volume create: glustervol: success: please start the volume to access data
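The command lines in Examples 5.1 and 5.2 follow directly from the syntax given earlier. As a minimal sketch, the helper below assembles such a command line from a volume name, a transport, and a list of bricks, and prints it rather than running it. The function name `build_create_cmd` and its argument order are assumptions made for illustration; only the transport values listed in the syntax are accepted.

```shell
#!/bin/sh
# Dry-run helper: prints a "gluster volume create" command line instead of
# running it. The function name and its argument order are hypothetical.

# build_create_cmd VOLNAME TRANSPORT BRICK...
build_create_cmd() (
    [ "$#" -ge 3 ] || { echo "usage: build_create_cmd VOLNAME TRANSPORT BRICK..." >&2; exit 1; }
    vol=$1
    transport=$2
    shift 2
    # Accept only the transport values listed in the documented syntax.
    case $transport in
        tcp|rdma|tcp,rdma) ;;
        *) echo "unsupported transport: $transport" >&2; exit 1 ;;
    esac
    if [ "$transport" = tcp ]; then
        # tcp is the default, so the transport clause can be left out.
        printf 'gluster volume create %s %s\n' "$vol" "$*"
    else
        printf 'gluster volume create %s transport %s %s\n' "$vol" "$transport" "$*"
    fi
)

# Same shape as Example 5.1 (two servers, default transport).
build_create_cmd glustervol tcp server1:/rhgs/brick1 server2:/rhgs/brick1
# Same shape as Example 5.2 (four servers over rdma).
build_create_cmd glustervol rdma server1:/rhgs/brick1 server2:/rhgs/brick1 \
    server3:/rhgs/brick1 server4:/rhgs/brick1
```

The helper prints the long form `gluster volume create`; the examples in this section use the abbreviated `gluster v create`.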

- Run the gluster volume start VOLNAME command to start the volume.

      # gluster v start glustervol
      volume start: glustervol: success

- Run the gluster volume info command to optionally display the volume information.

  The following output is the result of Example 5.1, “Distributed Volume with Two Storage Servers”.