7.6. Host Resilience

7.6.1. Host High Availability

7.6.2. Power Management by Proxy in Red Hat Virtualization

- Any host in the same cluster as the host requiring fencing.

- Any host in the same data center as the host requiring fencing.

7.6.3. Setting Fencing Parameters on a Host

Procedure 7.16. Setting fencing parameters on a host

- Use the Hosts resource tab, tree mode, or the search function to find and select the host in the results list.

- Click Edit to open the Edit Host window.

- Click the Power Management tab.

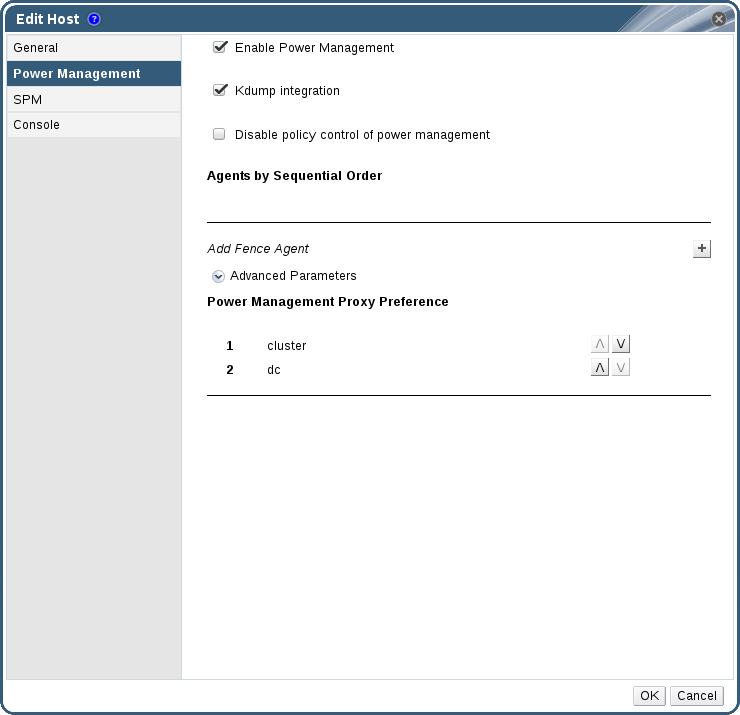

Figure 7.2. Power Management Settings

- Select the Enable Power Management check box to enable the fields.

- Select the Kdump integration check box to prevent the host from being fenced while it performs a kernel crash dump.

Important

When you enable Kdump integration on an existing host, the host must be reinstalled for kdump to be configured. See Section 7.5.11, “Reinstalling Hosts”.

- Optionally, select the Disable policy control of power management check box if you do not want your host's power management to be controlled by the Scheduling Policy of the host's cluster.

- Click the plus (+) button to add a new power management device. The Edit fence agent window opens.

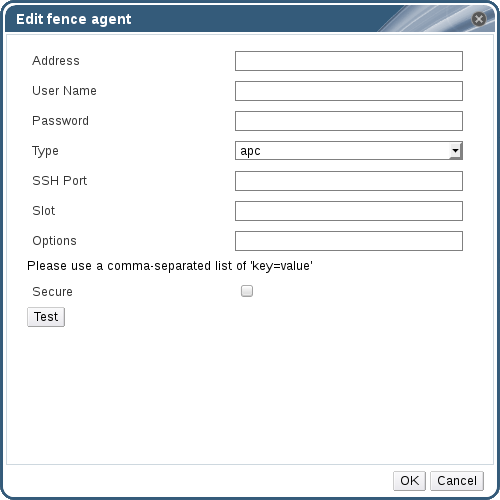

Figure 7.3. Edit fence agent

- Enter the Address, User Name, and Password of the power management device.

- Select the power management device Type from the drop-down list.

Note

For more information on how to set up a custom power management device, see https://access.redhat.com/articles/1238743.

- Enter the SSH Port number used by the power management device to communicate with the host.

- Enter the Slot number used to identify the blade of the power management device.

- Enter the Options for the power management device. Use a comma-separated list of 'key=value' entries.

- Select the Secure check box to enable the power management device to connect securely to the host.

- Click Test to ensure the settings are correct. The message "Test Succeeded, Host Status is: on" is displayed upon successful verification.

Warning

Power management parameters (userid, password, options, etc.) are tested by Red Hat Virtualization Manager only during setup and manually after that. If you choose to ignore alerts about incorrect parameters, or if the parameters are changed on the power management hardware without the corresponding change in Red Hat Virtualization Manager, fencing is likely to fail when most needed.

- Click OK to close the Edit fence agent window.

- In the Power Management tab, optionally expand the Advanced Parameters and use the up and down buttons to specify the order in which the Manager will search the host's cluster and dc (datacenter) for a fencing proxy.

- Click OK.
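The Options field in the Edit fence agent window takes a comma-separated list of key=value entries. As a minimal sketch, a string of that form could be checked before it is entered. The helper name `parse_fence_options` and the sample keys are illustrative assumptions, not part of Red Hat Virtualization.

```python
def parse_fence_options(options):
    """Split a comma-separated 'key=value' string into a dict.

    Hypothetical helper for illustration only; raises ValueError for any
    entry that does not follow the key=value form.
    """
    parsed = {}
    for entry in options.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas and empty input
        key, sep, value = entry.partition("=")
        if not sep or not key.strip():
            raise ValueError(f"not a key=value entry: {entry!r}")
        parsed[key.strip()] = value.strip()
    return parsed


# The keys below are made-up sample values, not documented agent options.
print(parse_fence_options("power_wait=4, retry=3"))
```

Which keys a given power management device accepts depends on its fence agent; the sketch only checks the shape of the string.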

7.6.4. fence_kdump Advanced Configuration

Select a host to view the status of the kdump service in the General tab of the details pane:

- Enabled: kdump is configured properly and the kdump service is running.

- Disabled: the kdump service is not running (in this case kdump integration will not work properly).

- Unknown: happens only for hosts with an older VDSM version that does not report kdump status.

Enabling Kdump integration in the Power Management tab of the New Host or Edit Host window configures a standard fence_kdump setup. If the environment's network configuration is simple and the Manager's FQDN is resolvable on all hosts, the default fence_kdump settings are sufficient for use.

engine-config:

engine-config -s FenceKdumpDestinationAddress=A.B.C.D

- The Manager has two NICs, where one of these is public-facing, and the second is the preferred destination for fence_kdump messages.

- You need to execute the fence_kdump listener on a different IP or port.

- You need to set a custom interval for fence_kdump notification messages, to prevent possible packet loss.

7.6.4.1. fence_kdump listener Configuration

Procedure 7.17. Manually Configuring the fence_kdump Listener

- Create a new file (for example, my-fence-kdump.conf) in /etc/ovirt-engine/ovirt-fence-kdump-listener.conf.d/

- Enter your customization with the syntax OPTION=value and save the file.

Important

The edited values must also be changed in engine-config as outlined in the fence_kdump Listener Configuration Options table in Section 7.6.4.2, “Configuring fence_kdump on the Manager”.

- Restart the fence_kdump listener:

systemctl restart ovirt-fence-kdump-listener.service


| Variable | Description | Default | Note |
|---|---|---|---|
| LISTENER_ADDRESS | Defines the IP address to receive fence_kdump messages on. | 0.0.0.0 | If the value of this parameter is changed, it must match the value of FenceKdumpDestinationAddress in engine-config. |
| LISTENER_PORT | Defines the port to receive fence_kdump messages on. | 7410 | If the value of this parameter is changed, it must match the value of FenceKdumpDestinationPort in engine-config. |
| HEARTBEAT_INTERVAL | Defines the interval in seconds of the listener's heartbeat updates. | 30 | If the value of this parameter is changed, it must be half the size or smaller than the value of FenceKdumpListenerTimeout in engine-config. |
| SESSION_SYNC_INTERVAL | Defines the interval in seconds to synchronize the listener's host kdumping sessions in memory to the database. | 5 | If the value of this parameter is changed, it must be half the size or smaller than the value of KdumpStartedTimeout in engine-config. |
| REOPEN_DB_CONNECTION_INTERVAL | Defines the interval in seconds to reopen the database connection which was previously unavailable. | 30 | - |
| KDUMP_FINISHED_TIMEOUT | Defines the maximum timeout in seconds after the last received message from kdumping hosts after which the host kdump flow is marked as FINISHED. | 60 | If the value of this parameter is changed, it must be double the size or higher than the value of FenceKdumpMessageInterval in engine-config. |
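The listener options above use the OPTION=value syntax from Procedure 7.17. The following is a minimal sketch of overlaying customizations on the defaults from the table. It assumes a drop-in file contains only OPTION=value lines, blank lines, and `#` comments; the comment syntax and the helper name `load_listener_options` are assumptions made for illustration.

```python
# Defaults copied from the fence_kdump listener options table.
LISTENER_DEFAULTS = {
    "LISTENER_ADDRESS": "0.0.0.0",
    "LISTENER_PORT": "7410",
    "HEARTBEAT_INTERVAL": "30",
    "SESSION_SYNC_INTERVAL": "5",
    "REOPEN_DB_CONNECTION_INTERVAL": "30",
    "KDUMP_FINISHED_TIMEOUT": "60",
}


def load_listener_options(text):
    """Overlay OPTION=value lines from `text` on the documented defaults.

    Hypothetical helper; rejects lines that are not OPTION=value or that
    name an option missing from the table.
    """
    options = dict(LISTENER_DEFAULTS)
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and (assumed) comment lines
        name, sep, value = line.partition("=")
        name = name.strip()
        if not sep or name not in LISTENER_DEFAULTS:
            raise ValueError(f"unrecognized line: {line!r}")
        options[name] = value.strip()
    return options


sample = "LISTENER_PORT=7411\nHEARTBEAT_INTERVAL=20\n"
print(load_listener_options(sample))
```

Rejecting unknown option names catches typos early, which matters here because a misspelled option would otherwise silently leave the default in effect.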

7.6.4.2. Configuring fence_kdump on the Manager

engine-config -g OPTION

Procedure 7.18. Manually Configuring Kdump with engine-config

- Edit kdump's configuration using the engine-config command:

engine-config -s OPTION=value

Important

The edited values must also be changed in the fence_kdump listener configuration file as outlined in the Kdump Configuration Options table. See Section 7.6.4.1, “fence_kdump listener Configuration”.

- Restart the ovirt-engine service:

systemctl restart ovirt-engine.service

- Reinstall all hosts with Kdump integration enabled, if required (see the table below).

engine-config:

| Variable | Description | Default | Note |
|---|---|---|---|
| FenceKdumpDestinationAddress | Defines the hostname(s) or IP address(es) to send fence_kdump messages to. If empty, the Manager's FQDN is used. | Empty string (Manager FQDN is used) | If the value of this parameter is changed, it must match the value of LISTENER_ADDRESS in the fence_kdump listener configuration file, and all hosts with Kdump integration enabled must be reinstalled. |
| FenceKdumpDestinationPort | Defines the port to send fence_kdump messages to. | 7410 | If the value of this parameter is changed, it must match the value of LISTENER_PORT in the fence_kdump listener configuration file, and all hosts with Kdump integration enabled must be reinstalled. |
| FenceKdumpMessageInterval | Defines the interval in seconds between messages sent by fence_kdump. | 5 | If the value of this parameter is changed, it must be half the size or smaller than the value of KDUMP_FINISHED_TIMEOUT in the fence_kdump listener configuration file, and all hosts with Kdump integration enabled must be reinstalled. |
| FenceKdumpListenerTimeout | Defines the maximum timeout in seconds since the last heartbeat to consider the fence_kdump listener alive. | 90 | If the value of this parameter is changed, it must be double the size or higher than the value of HEARTBEAT_INTERVAL in the fence_kdump listener configuration file. |
| KdumpStartedTimeout | Defines the maximum timeout in seconds to wait until the first message from the kdumping host is received (to detect that host kdump flow has started). | 30 | If the value of this parameter is changed, it must be double the size or higher than the value of SESSION_SYNC_INTERVAL in the fence_kdump listener configuration file, and FenceKdumpMessageInterval. |
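The Note columns of the two options tables describe how listener values and engine-config values must relate to each other. As a rough sketch, those relationships could be checked together before restarting the services. The function name `check_kdump_settings` is hypothetical, the address match is left out because an empty FenceKdumpDestinationAddress falls back to the Manager's FQDN, and the last rule reflects one reading of the KdumpStartedTimeout note.

```python
# Defaults copied from the two options tables (interval values in seconds).
LISTENER = {
    "LISTENER_PORT": 7410,
    "HEARTBEAT_INTERVAL": 30,
    "SESSION_SYNC_INTERVAL": 5,
    "KDUMP_FINISHED_TIMEOUT": 60,
}
ENGINE = {
    "FenceKdumpDestinationPort": 7410,
    "FenceKdumpMessageInterval": 5,
    "FenceKdumpListenerTimeout": 90,
    "KdumpStartedTimeout": 30,
}


def check_kdump_settings(listener, engine):
    """Return a list of violated rules; an empty list means consistent."""
    problems = []
    if listener["LISTENER_PORT"] != engine["FenceKdumpDestinationPort"]:
        problems.append("LISTENER_PORT must match FenceKdumpDestinationPort")
    if 2 * listener["HEARTBEAT_INTERVAL"] > engine["FenceKdumpListenerTimeout"]:
        problems.append("HEARTBEAT_INTERVAL must be at most half of FenceKdumpListenerTimeout")
    if 2 * listener["SESSION_SYNC_INTERVAL"] > engine["KdumpStartedTimeout"]:
        problems.append("SESSION_SYNC_INTERVAL must be at most half of KdumpStartedTimeout")
    if listener["KDUMP_FINISHED_TIMEOUT"] < 2 * engine["FenceKdumpMessageInterval"]:
        problems.append("KDUMP_FINISHED_TIMEOUT must be at least double FenceKdumpMessageInterval")
    # Assumed reading of the KdumpStartedTimeout note in the table above.
    if engine["KdumpStartedTimeout"] < 2 * engine["FenceKdumpMessageInterval"]:
        problems.append("KdumpStartedTimeout must be at least double FenceKdumpMessageInterval")
    return problems


print(check_kdump_settings(LISTENER, ENGINE))  # defaults violate no rule
changed = dict(LISTENER, HEARTBEAT_INTERVAL=60)
print(check_kdump_settings(changed, ENGINE))   # heartbeat rule is reported
```

Returning every violated rule, rather than stopping at the first one, makes it easier to correct both configuration locations in a single pass.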

7.6.5. Soft-Fencing Hosts

- On the first network failure, the status of the host changes to "connecting".

- The Manager then makes three attempts to ask VDSM for its status, or it waits for an interval determined by the load on the host. The interval is calculated as TimeoutToResetVdsInSeconds (default 60 seconds) + DelayResetPerVmInSeconds (default 0.5 seconds) × (the number of virtual machines running on the host) + DelayResetForSpmInSeconds (default 20 seconds) × 1 if the host runs as SPM, or × 0 if it does not. To give VDSM the maximum amount of time to respond, the Manager chooses the longer of the two options (three attempts to retrieve the status of VDSM, or the interval determined by the formula).

- If the host does not respond when that interval has elapsed, vdsm restart is executed via SSH.

- If vdsm restart does not succeed in re-establishing the connection between the host and the Manager, the status of the host changes to Non Responsive and, if power management is configured, fencing is handed off to the external fencing agent.
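The load-based interval described in the steps above can be worked through with a short sketch. The function and parameter names are illustrative; only the three configuration values and their defaults come from the text. The time taken by the three status attempts is not stated, so the "longer of the two" comparison is not modeled.

```python
def soft_fencing_interval(running_vms, is_spm,
                          timeout_to_reset_vds=60.0,   # TimeoutToResetVdsInSeconds
                          delay_reset_per_vm=0.5,      # DelayResetPerVmInSeconds
                          delay_reset_for_spm=20.0):   # DelayResetForSpmInSeconds
    """Return the load-based wait, in seconds, before vdsm restart is tried."""
    spm_delay = delay_reset_for_spm if is_spm else 0.0
    return timeout_to_reset_vds + delay_reset_per_vm * running_vms + spm_delay


# A host running 10 virtual machines that is also the SPM:
# 60 + 0.5 * 10 + 20 = 85 seconds.
print(soft_fencing_interval(10, True))   # 85.0
# An idle host that is not the SPM waits only the base timeout.
print(soft_fencing_interval(0, False))   # 60.0
```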

Note

7.6.6. Using Host Power Management Functions

When power management has been configured for a host, you can access a number of options from the Administration Portal interface. While each power management device has its own customizable options, they all support the basic options to start, stop, and restart a host.

Procedure 7.19. Using Host Power Management Functions

- Use the Hosts resource tab, tree mode, or the search function to find and select the host in the results list.

- Click the Power Management drop-down menu.

- Select one of the following options:

- Restart: This option stops the host and waits until the host's status changes to Down. When the agent has verified that the host is down, the highly available virtual machines are restarted on another host in the cluster. The agent then restarts this host. When the host is ready for use its status displays as Up.

- Start: This option starts the host and lets it join a cluster. When it is ready for use its status displays as Up.

- Stop: This option powers off the host. Before using this option, ensure that the virtual machines running on the host have been migrated to other hosts in the cluster. Otherwise the virtual machines will crash and only the highly available virtual machines will be restarted on another host. When the host has been stopped its status displays as Non-Operational.

Important

When two fencing agents are defined on a host, they can be used concurrently or sequentially. For concurrent agents, both agents have to respond to the Stop command for the host to be stopped; and when one agent responds to the Start command, the host will go up. For sequential agents, to start or stop a host, the primary agent is used first; if it fails, the secondary agent is used.

- Selecting one of the above options opens a confirmation window. Click OK to confirm and proceed.

The selected action is performed.
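The difference between concurrent and sequential fencing agents, as described in the Important note in this procedure, can be summarized in a small model. This is a sketch of the stated rules only; the function name and its boolean inputs are assumptions and do not correspond to any Manager API.

```python
def fence_action_succeeds(action, primary_ok, secondary_ok, concurrent):
    """Model whether a Start or Stop succeeds on a host with two agents.

    primary_ok / secondary_ok say whether each agent responds to the
    command successfully. Hypothetical model for illustration only.
    """
    if action not in ("start", "stop"):
        raise ValueError(f"unsupported action: {action!r}")
    if concurrent:
        if action == "stop":
            # Both agents have to respond for the host to be stopped.
            return primary_ok and secondary_ok
        # One responding agent is enough for the host to go up.
        return primary_ok or secondary_ok
    # Sequential: the primary agent is used first; the secondary agent
    # is used only if the primary fails.
    return primary_ok or secondary_ok


# With one failing agent, a concurrent Stop fails but a sequential Stop
# can still succeed through the other agent.
print(fence_action_succeeds("stop", True, False, concurrent=True))
print(fence_action_succeeds("stop", False, True, concurrent=False))
```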

7.6.7. Manually Fencing or Isolating a Non Responsive Host

If a host unpredictably goes into a non-responsive state, for example, due to a hardware failure, it can significantly affect the performance of the environment. If you do not have a power management device, or it is incorrectly configured, you can reboot the host manually.

Warning

Procedure 7.20. Manually fencing or isolating a non-responsive host

- On the Hosts tab, select the host. The status must display as non-responsive.

- Manually reboot the host. This could mean physically entering the lab and rebooting the host.

- On the Administration Portal, right-click the host entry and select the Confirm Host has been Rebooted button.

- A message displays prompting you to ensure that the host has been shut down or rebooted. Select the Approve Operation check box and click OK.

You have manually rebooted your host, allowing highly available virtual machines to be started on active hosts. You confirmed your manual fencing action in the Administration Portal, and the host is back online.