1.3. Scheduling Policies

A scheduling policy is a set of rules that defines how virtual machines are distributed among the hosts in the cluster to which the policy is applied. A scheduling policy determines this logic through a combination of filters, weightings, and a load balancing policy. Filter modules apply hard enforcement: each filter removes hosts that do not meet the conditions it specifies. Weight modules apply soft enforcement: they control the relative priority of the factors considered when determining the hosts in a cluster on which a virtual machine can run.
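The difference between hard and soft enforcement can be illustrated with a minimal Python sketch. It is not the Manager's implementation: the `Host` and `VM` records, the example filter and weight functions, and the convention that the lowest total score wins are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Host:  # hypothetical host record
    name: str
    free_memory_mb: int
    cpu_load: float  # percent, 0-100
    vm_count: int


@dataclass
class VM:  # hypothetical virtual machine record
    name: str
    memory_mb: int


Filter = Callable[[Host, VM], bool]
Weight = Callable[[Host, VM], float]


def has_enough_memory(host: Host, vm: VM) -> bool:
    # Example filter (hard enforcement): the host either qualifies or it does not.
    return host.free_memory_mb >= vm.memory_mb


def cpu_load_score(host: Host, vm: VM) -> float:
    # Example weight (soft enforcement): a busier host gets a worse (higher) score.
    return host.cpu_load


def vm_count_score(host: Host, vm: VM) -> float:
    # Example weight: a host running more virtual machines gets a worse score.
    return float(host.vm_count)


def select_host(
    hosts: List[Host],
    vm: VM,
    filters: List[Filter],
    weights: List[Tuple[Weight, int]],
) -> Optional[Host]:
    # Step 1: filters remove every host that fails any condition.
    candidates = [h for h in hosts if all(f(h, vm) for f in filters)]
    if not candidates:
        return None

    # Step 2: weights rank the remaining hosts; each factor scales one weight.
    def total(host: Host) -> float:
        return sum(factor * weight(host, vm) for weight, factor in weights)

    # Assumption: the lowest total score is treated as the best host.
    return min(candidates, key=total)
```

In this sketch a host that fails a filter can never be selected, no matter how well it scores, whereas changing a weight factor only changes the ranking among the hosts that passed.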

The Red Hat Virtualization Manager provides five default scheduling policies: Evenly_Distributed, Cluster_Maintenance, None, Power_Saving, and VM_Evenly_Distributed. You can also define new scheduling policies that provide fine-grained control over the distribution of virtual machines. Regardless of the scheduling policy, a virtual machine will not start on a host with an overloaded CPU. By default, a host’s CPU is considered overloaded if it has a load of more than 80% for 5 minutes, but these values can be changed using scheduling policies. See Section 5.2.5, “Scheduling Policy Settings Explained” for more information about the properties of each scheduling policy.
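As a rough illustration of the default overload rule (a load above 80% sustained for 5 minutes), the following Python sketch checks a series of load samples. The sample format and the function name are hypothetical, and the Manager may evaluate the condition differently.

```python
from typing import Sequence, Tuple


def is_cpu_overloaded(
    samples: Sequence[Tuple[float, float]],
    high_utilization: float = 80.0,
    duration_minutes: float = 5.0,
) -> bool:
    """Return True if the load stayed above the threshold for the whole duration.

    samples: (timestamp_in_seconds, cpu_load_percent) pairs, oldest first.
    """
    overload_start = None
    for timestamp, load in samples:
        if load > high_utilization:
            # Remember when the current run of high load began.
            if overload_start is None:
                overload_start = timestamp
        else:
            # Any sample at or below the threshold resets the run.
            overload_start = None

    if overload_start is None:
        return False
    latest_timestamp = samples[-1][0]
    return latest_timestamp - overload_start >= duration_minutes * 60
```

A short spike above the threshold does not mark the host as overloaded in this sketch; only an uninterrupted run that spans the full duration does.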

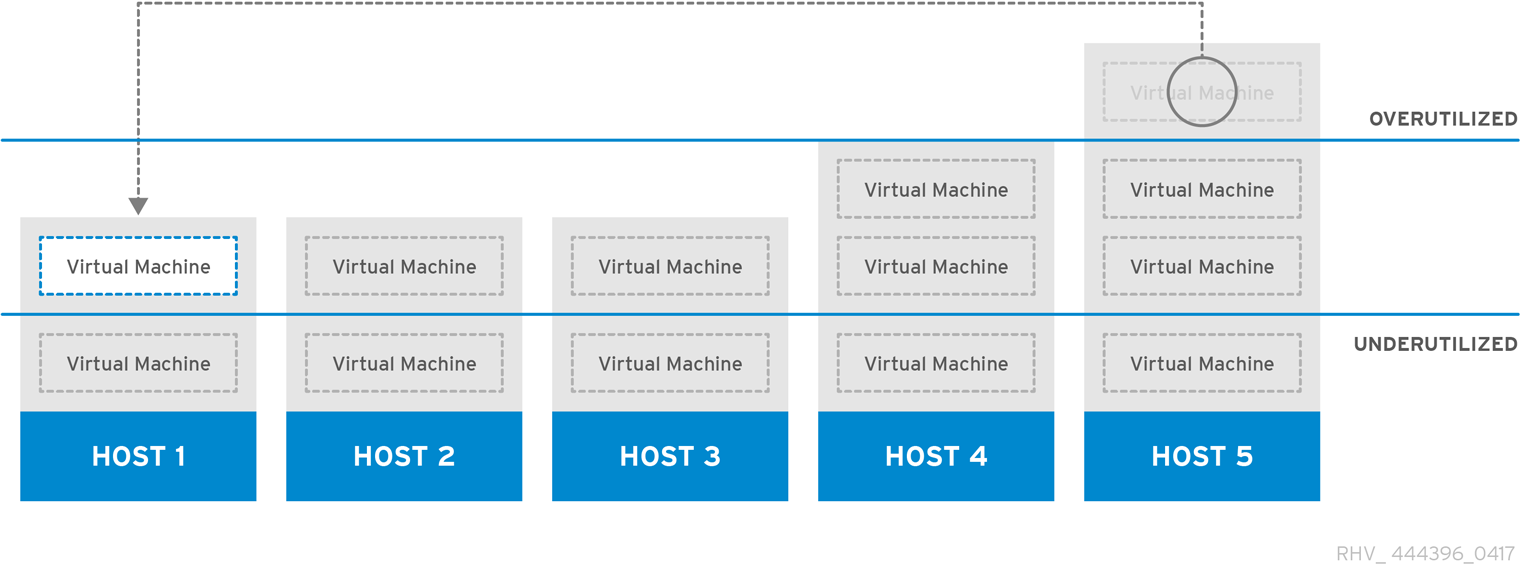

Figure 1.4. Evenly Distributed Scheduling Policy

The Evenly_Distributed scheduling policy distributes the memory and CPU processing load evenly across all hosts in the cluster. Additional virtual machines attached to a host will not start if that host has reached the defined CpuOverCommitDurationMinutes, HighUtilization, or MaxFreeMemoryForOverUtilized.

The VM_Evenly_Distributed scheduling policy distributes virtual machines evenly between hosts based on a count of the virtual machines. The cluster is considered unbalanced if any host is running more virtual machines than the HighVmCount and there is at least one host with a virtual machine count that falls outside of the MigrationThreshold.
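The unbalanced-cluster condition can be sketched in Python as follows. This is an interpretation, not the Manager's code: it assumes that a host "falls outside of the MigrationThreshold" when it runs more than `migration_threshold` fewer virtual machines than the busiest host. The function and parameter names are hypothetical.

```python
from typing import Dict


def is_cluster_unbalanced(
    vm_counts: Dict[str, int],
    high_vm_count: int,
    migration_threshold: int,
) -> bool:
    """vm_counts maps a host name to the number of virtual machines it runs."""
    if not vm_counts:
        return False

    busiest = max(vm_counts.values())

    # Condition 1: some host runs more virtual machines than HighVmCount.
    if busiest <= high_vm_count:
        return False

    # Condition 2 (assumed meaning): at least one host trails the busiest
    # host by more than MigrationThreshold virtual machines.
    return any(busiest - count > migration_threshold for count in vm_counts.values())
```

Both conditions must hold in this sketch: a crowded host alone does not make the cluster unbalanced if every other host is similarly loaded.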

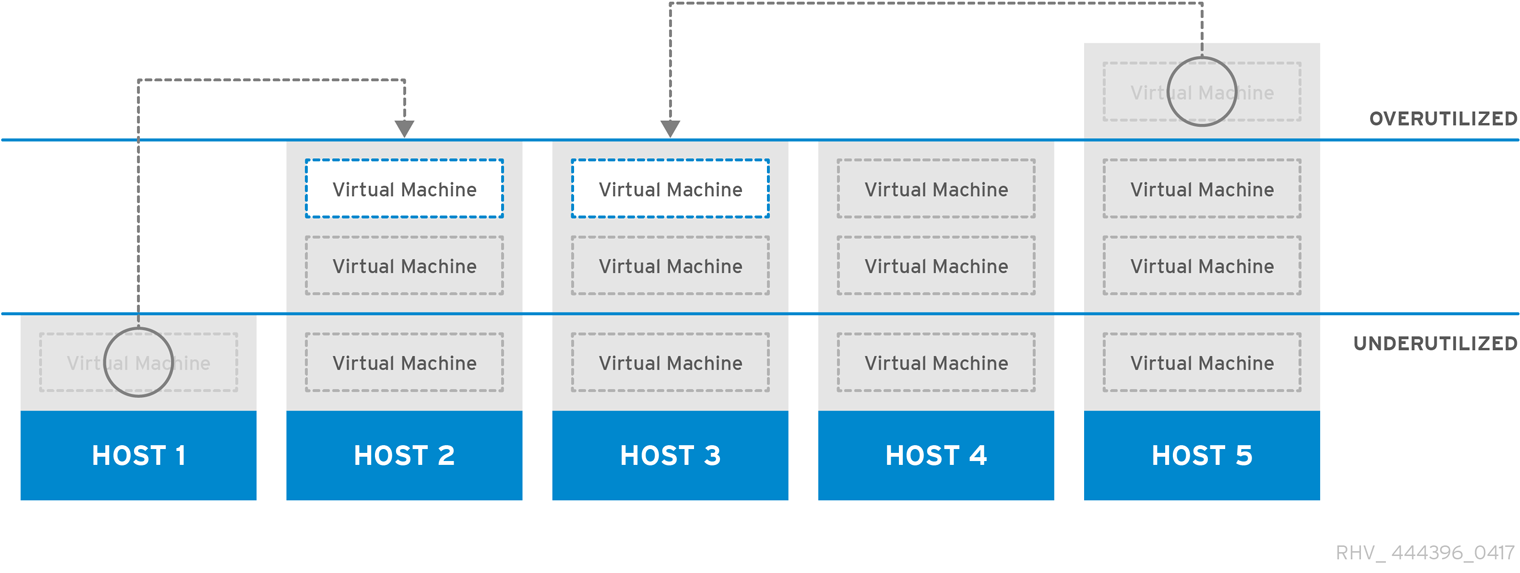

Figure 1.5. Power Saving Scheduling Policy

The Power_Saving scheduling policy distributes the memory and CPU processing load across a subset of available hosts to reduce power consumption on underutilized hosts. A host with a CPU load below the low utilization value for longer than the defined time interval will migrate all of its virtual machines to other hosts so that it can be powered down. Additional virtual machines attached to a host will not start if that host has reached the defined high utilization value.
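The two thresholds described for Power_Saving can be sketched in Python as a simple per-host classification. The `HostLoad` record, the function name, and the returned labels are hypothetical; the sketch only mirrors the rules stated above.

```python
from dataclasses import dataclass


@dataclass
class HostLoad:  # hypothetical record of a host's recent CPU behaviour
    name: str
    cpu_load: float           # current CPU load in percent
    minutes_below_low: float  # how long the load has stayed below the low value


def classify_host(
    host: HostLoad,
    low_utilization: float,
    high_utilization: float,
    interval_minutes: float,
) -> str:
    # Underutilized for longer than the interval: move its virtual machines
    # elsewhere so that the host can be powered down.
    if host.cpu_load < low_utilization and host.minutes_below_low > interval_minutes:
        return "evacuate"
    # At or above the high utilization value: no additional virtual machines start here.
    if host.cpu_load >= high_utilization:
        return "no_new_vms"
    return "normal"
```

For example, with a low value of 20, a high value of 80, and an interval of 2 minutes (illustrative numbers only), a host idling at 5% for 10 minutes would be classified as `"evacuate"`.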

Set the None policy to have no load or power sharing between hosts for running virtual machines. This is the default mode. When a virtual machine is started, the memory and CPU processing load is spread evenly across all hosts in the cluster. Additional virtual machines attached to a host will not start if that host has reached the defined CpuOverCommitDurationMinutes, HighUtilization, or MaxFreeMemoryForOverUtilized.

The Cluster_Maintenance scheduling policy limits activity in a cluster during maintenance tasks. When the Cluster_Maintenance policy is set, no new virtual machines may be started, except highly available virtual machines. If host failure occurs, highly available virtual machines will restart properly and any virtual machine can migrate.

1.3.1. Creating a Scheduling Policy

You can create new scheduling policies to control the logic by which virtual machines are distributed among the hosts of a given cluster in your Red Hat Virtualization environment.

Creating a Scheduling Policy

- Click […].

- Click the Scheduling Policies tab.

- Click New.

- Enter a Name and Description for the scheduling policy.

Configure filter modules:

- In the Filter Modules section, drag and drop the preferred filter modules to apply to the scheduling policy from the Disabled Filters section into the Enabled Filters section.

- A filter module can also be set as First, which gives it the highest priority, or Last, which gives it the lowest priority, for basic optimization. To set the priority, right-click the filter module, hover the cursor over Position, and select First or Last.

Configure weight modules:

- In the Weights Modules section, drag and drop the preferred weights modules to apply to the scheduling policy from the Disabled Weights section into the Enabled Weights & Factors section.

- Use the + and - buttons to the left of the enabled weight modules to increase or decrease the weight of those modules.

Specify a load balancing policy:

- From the drop-down menu in the Load Balancer section, select the load balancing policy to apply to the scheduling policy.

- From the drop-down menu in the Properties section, select a load balancing property to apply to the scheduling policy and use the text field to the right of that property to specify a value.

- Use the + and - buttons to add or remove additional properties.

- Click OK.
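The choices made in this procedure can be pictured as a small data model. The Python sketch below is purely illustrative and does not use any Red Hat Virtualization API: the class, its methods, and the module names used with it (such as `filter-a`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SchedulingPolicyDraft:  # hypothetical model of the dialog's fields
    name: str
    description: str = ""
    enabled_filters: List[str] = field(default_factory=list)
    first_filter: Optional[str] = None
    last_filter: Optional[str] = None
    weights: Dict[str, int] = field(default_factory=dict)  # module name -> factor
    load_balancer: Optional[str] = None
    properties: Dict[str, str] = field(default_factory=dict)

    def enable_filter(self, name: str, position: Optional[str] = None) -> None:
        # Mirrors moving a filter from Disabled Filters to Enabled Filters.
        if name not in self.enabled_filters:
            self.enabled_filters.append(name)
        if position == "First":
            self.first_filter = name
            if self.last_filter == name:
                self.last_filter = None
        elif position == "Last":
            self.last_filter = name
            if self.first_filter == name:
                self.first_filter = None

    def enable_weight(self, name: str, factor: int = 1) -> None:
        # Mirrors the + and - buttons; the minimum factor of 1 is an assumption.
        self.weights[name] = max(1, factor)

    def set_property(self, key: str, value: str) -> None:
        # Properties belong to the selected load balancing policy.
        if self.load_balancer is None:
            raise ValueError("select a load balancer before adding properties")
        self.properties[key] = value

    def ordered_filters(self) -> List[str]:
        # First goes to the front, Last to the back, the rest keep their order.
        pinned = (self.first_filter, self.last_filter)
        middle = [f for f in self.enabled_filters if f not in pinned]
        head = [self.first_filter] if self.first_filter else []
        tail = [self.last_filter] if self.last_filter else []
        return head + middle + tail
```

A draft built this way keeps the three parts of a policy separate: an ordered set of filters, a set of weighted modules, and an optional load balancer with its properties.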

1.3.2. Explanation of Settings in the New Scheduling Policy and Edit Scheduling Policy Windows

The following table details the options available in the New Scheduling Policy and Edit Scheduling Policy windows.

| Field Name | Description |
|---|---|
| Name | The name of the scheduling policy. This is the name used to refer to the scheduling policy in the Red Hat Virtualization Manager. |
| Description | A description of the scheduling policy. This field is recommended but not mandatory. |
| Filter Modules | A set of filters for controlling the hosts on which a virtual machine in a cluster can run. Enabling a filter will filter out hosts that do not meet the conditions specified by that filter. |
| Weights Modules | A set of weightings for controlling the relative priority of factors considered when determining the hosts in a cluster on which a virtual machine can run. |
| Load Balancer | This drop-down menu allows you to select a load balancing module to apply. Load balancing modules determine the logic used to migrate virtual machines from hosts experiencing high usage to hosts experiencing lower usage. |
| Properties | This drop-down menu allows you to add or remove properties for load balancing modules, and is only available when you have selected a load balancing module for the scheduling policy. No properties are defined by default, and the properties that are available are specific to the load balancing module that is selected. Use the + and - buttons to add or remove additional properties to or from the load balancing module. |