This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 2. Service Mesh 1.x

2.1. Service Mesh Release Notes

You are viewing documentation for a Red Hat OpenShift Service Mesh release that is no longer supported.

Service Mesh version 1.0 and 1.1 control planes are no longer supported. For information about upgrading your service mesh control plane, see Upgrading Service Mesh.

For information about the support status of a particular Red Hat OpenShift Service Mesh release, see the Product lifecycle page.

2.1.1. Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

2.1.2. Introduction to Red Hat OpenShift Service Mesh

Red Hat OpenShift Service Mesh addresses a variety of problems in a microservice architecture by creating a centralized point of control in an application. It adds a transparent layer on existing distributed applications without requiring any changes to the application code.

Microservice architectures split the work of enterprise applications into modular services, which can make scaling and maintenance easier. However, as an enterprise application built on a microservice architecture grows in size and complexity, it becomes difficult to understand and manage. Service Mesh can address those architecture problems by capturing or intercepting traffic between services and can modify, redirect, or create new requests to other services.

Service Mesh, which is based on the open source Istio project, provides an easy way to create a network of deployed services that provides discovery, load balancing, service-to-service authentication, failure recovery, metrics, and monitoring. A service mesh also provides more complex operational functionality, including A/B testing, canary releases, access control, and end-to-end authentication.

2.1.3. Getting support

If you experience difficulty with a procedure described in this documentation, or with OpenShift Container Platform in general, visit the Red Hat Customer Portal. From the Customer Portal, you can:

- Search or browse through the Red Hat Knowledgebase of articles and solutions relating to Red Hat products.

- Submit a support case to Red Hat Support.

- Access other product documentation.

To identify issues with your cluster, you can use Insights in OpenShift Cluster Manager. Insights provides details about issues and, if available, information on how to solve a problem.

If you have a suggestion for improving this documentation or have found an error, submit a Jira issue for the most relevant documentation component. Please provide specific details, such as the section name and OpenShift Container Platform version.

When opening a support case, it is helpful to provide debugging information about your cluster to Red Hat Support.

The must-gather tool enables you to collect diagnostic information about your OpenShift Container Platform cluster, including virtual machines and other data related to Red Hat OpenShift Service Mesh.

For prompt support, supply diagnostic information for both OpenShift Container Platform and Red Hat OpenShift Service Mesh.

2.1.3.1. About the must-gather tool

The oc adm must-gather CLI command collects the information from your cluster that is most likely needed for debugging issues, including:

- Resource definitions

- Service logs

By default, the oc adm must-gather command uses the default plugin image and writes into ./must-gather.local.

Alternatively, you can collect specific information by running the command with the appropriate arguments as described in the following sections:

- To collect data related to one or more specific features, use the --image argument with an image, as listed in a following section.

  For example:

  $ oc adm must-gather --image=registry.redhat.io/container-native-virtualization/cnv-must-gather-rhel8:v4.10.0

- To collect the audit logs, use the -- /usr/bin/gather_audit_logs argument, as described in a following section.

  For example:

  $ oc adm must-gather -- /usr/bin/gather_audit_logs

  Note: Audit logs are not collected as part of the default set of information to reduce the size of the files.

When you run oc adm must-gather, a new pod with a random name is created in a new project on the cluster. The data is collected on that pod and saved in a new directory that starts with must-gather.local. This directory is created in the current working directory.

For example:

NAMESPACE                     NAME                READY   STATUS    RESTARTS   AGE
...
openshift-must-gather-5drcj   must-gather-bklx4   2/2     Running   0          72s
openshift-must-gather-5drcj   must-gather-s8sdh   2/2     Running   0          72s
...

2.1.3.2. Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift Container Platform CLI (oc) installed.

2.1.3.3. About collecting service mesh data

You can use the oc adm must-gather CLI command to collect information about your cluster, including features and objects associated with Red Hat OpenShift Service Mesh.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift Container Platform CLI (oc) installed.

Procedure

- To collect Red Hat OpenShift Service Mesh data with must-gather, you must specify the Red Hat OpenShift Service Mesh image.

  $ oc adm must-gather --image=registry.redhat.io/openshift-service-mesh/istio-must-gather-rhel8:2.4

- To collect Red Hat OpenShift Service Mesh data for a specific Service Mesh control plane namespace with must-gather, you must specify the Red Hat OpenShift Service Mesh image and namespace. In this example, after gather, replace <namespace> with your Service Mesh control plane namespace, such as istio-system.

  $ oc adm must-gather --image=registry.redhat.io/openshift-service-mesh/istio-must-gather-rhel8:2.4 gather <namespace>
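As a rough sketch of how the two invocations above relate, the following shell function prints the must-gather command with or without a control plane namespace. The function name mesh_must_gather_cmd is hypothetical; the image reference is the one used in this section. The function only prints the command, so you can review it before running it yourself.

```shell
# Image reference taken from this section.
MESH_IMAGE="registry.redhat.io/openshift-service-mesh/istio-must-gather-rhel8:2.4"

# Hypothetical helper: print the must-gather command for the whole mesh,
# or for one control plane namespace when an argument is given.
mesh_must_gather_cmd() {
  if [ -n "${1:-}" ]; then
    echo "oc adm must-gather --image=${MESH_IMAGE} gather $1"
  else
    echo "oc adm must-gather --image=${MESH_IMAGE}"
  fi
}

mesh_must_gather_cmd                # all Service Mesh data
mesh_must_gather_cmd istio-system   # one control plane namespace
```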

2.1.4. Red Hat OpenShift Service Mesh supported configurations

The following are the only supported configurations for the Red Hat OpenShift Service Mesh:

- OpenShift Container Platform version 4.6 or later.

OpenShift Online and Red Hat OpenShift Dedicated are not supported for Red Hat OpenShift Service Mesh.

- The deployment must be contained within a single OpenShift Container Platform cluster that is not federated.

- This release of Red Hat OpenShift Service Mesh is only available on OpenShift Container Platform x86_64.

- This release only supports configurations where all Service Mesh components are contained in the OpenShift Container Platform cluster in which it operates. It does not support management of microservices that reside outside of the cluster, or in a multi-cluster scenario.

- This release only supports configurations that do not integrate external services such as virtual machines.

For additional information about Red Hat OpenShift Service Mesh lifecycle and supported configurations, refer to the Support Policy.

2.1.4.1. Supported configurations for Kiali on Red Hat OpenShift Service Mesh

- The Kiali observability console is only supported on the two most recent releases of the Chrome, Edge, Firefox, or Safari browsers.

2.1.4.2. Supported Mixer adapters

This release only supports the following Mixer adapter:

- 3scale Istio Adapter

2.1.5. New Features

Red Hat OpenShift Service Mesh provides a number of key capabilities uniformly across a network of services:

- Traffic Management - Control the flow of traffic and API calls between services, make calls more reliable, and make the network more robust in the face of adverse conditions.

- Service Identity and Security - Provide services in the mesh with a verifiable identity and provide the ability to protect service traffic as it flows over networks of varying degrees of trustworthiness.

- Policy Enforcement - Apply organizational policy to the interaction between services, ensure access policies are enforced and resources are fairly distributed among consumers. Policy changes are made by configuring the mesh, not by changing application code.

- Telemetry - Gain understanding of the dependencies between services and the nature and flow of traffic between them, providing the ability to quickly identify issues.

2.1.5.1. New features Red Hat OpenShift Service Mesh 1.1.18.2

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs).

2.1.5.1.1. Component versions included in Red Hat OpenShift Service Mesh version 1.1.18.2

| Component | Version |

|---|---|

| Istio | 1.4.10 |

| Jaeger | 1.30.2 |

| Kiali | 1.12.21.1 |

| 3scale Istio Adapter | 1.0.0 |

2.1.5.2. New features Red Hat OpenShift Service Mesh 1.1.18.1

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs).

2.1.5.2.1. Component versions included in Red Hat OpenShift Service Mesh version 1.1.18.1

| Component | Version |

|---|---|

| Istio | 1.4.10 |

| Jaeger | 1.30.2 |

| Kiali | 1.12.20.1 |

| 3scale Istio Adapter | 1.0.0 |

2.1.5.3. New features Red Hat OpenShift Service Mesh 1.1.18

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs).

2.1.5.3.1. Component versions included in Red Hat OpenShift Service Mesh version 1.1.18

| Component | Version |

|---|---|

| Istio | 1.4.10 |

| Jaeger | 1.24.0 |

| Kiali | 1.12.18 |

| 3scale Istio Adapter | 1.0.0 |

2.1.5.4. New features Red Hat OpenShift Service Mesh 1.1.17.1

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs).

2.1.5.4.1. Change in how Red Hat OpenShift Service Mesh handles URI fragments

Red Hat OpenShift Service Mesh contains a remotely exploitable vulnerability, CVE-2021-39156, where an HTTP request with a fragment (a section at the end of a URI that begins with a # character) in the URI path could bypass the Istio URI path-based authorization policies. For instance, suppose an Istio authorization policy denies requests sent to the URI path /user/profile. In the vulnerable versions, a request with URI path /user/profile#section1 bypasses the deny policy and routes to the backend (with the normalized URI path /user/profile%23section1), possibly leading to a security incident.

You are impacted by this vulnerability if you use authorization policies with DENY actions and operation.paths, or ALLOW actions and operation.notPaths.

With the mitigation, the fragment part of the request’s URI is removed before the authorization and routing. This prevents a request with a fragment in its URI from bypassing authorization policies which are based on the URI without the fragment part.
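The effect of the mitigation can be illustrated with a small shell model. This is only a sketch of the comparison, not the proxy's implementation; the helper names strip_fragment and check_path are hypothetical, and the denied path is the /user/profile example from this section.

```shell
# Illustrative only: strip the fragment (the first '#' and everything after it).
strip_fragment() {
  printf '%s\n' "$1" | sed 's/#.*$//'
}

# Hypothetical exact-match check against a single denied path.
check_path() {
  if [ "$1" = "/user/profile" ]; then echo "DENY"; else echo "ALLOW"; fi
}

request="/user/profile#section1"
check_path "$request"                       # compared as-is, the deny rule is missed
check_path "$(strip_fragment "$request")"   # compared after stripping the fragment
```

The first call prints ALLOW because the raw path differs from the denied path; the second prints DENY because the fragment is removed before the comparison.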

2.1.5.4.2. Required update for authorization policies

Istio generates hostnames for both the hostname itself and all matching ports. For instance, a virtual service or Gateway for a host of "httpbin.foo" generates a config matching "httpbin.foo and httpbin.foo:*". However, exact match authorization policies only match the exact string given for the hosts or notHosts fields.

Your cluster is impacted if you have AuthorizationPolicy resources using exact string comparison for the rule to determine hosts or notHosts.

You must update your authorization policy rules to use prefix match instead of exact match. For example, replace hosts: ["httpbin.com"] with hosts: ["httpbin.com:*"] in the first AuthorizationPolicy example.
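To see why an exact host string misses requests that carry a port, consider the following shell model. It uses shell glob patterns as a stand-in for Istio host matching, which is an assumption made for illustration; host_matches is a hypothetical helper, not part of any Istio or OpenShift tooling.

```shell
# Illustrative only: shell glob patterns stand in for Istio host matching.
# Returns 0 when the request host matches the policy pattern.
host_matches() {
  # $1 = pattern from the policy, $2 = Host header of the request
  case "$2" in
    $1) return 0 ;;
    *)  return 1 ;;
  esac
}

for pattern in "httpbin.com" "httpbin.com:*"; do
  for host in "httpbin.com" "httpbin.com:8080"; do
    if host_matches "$pattern" "$host"; then result="match"; else result="no match"; fi
    echo "pattern=$pattern host=$host -> $result"
  done
done
```

In this model, the exact pattern httpbin.com does not match httpbin.com:8080, and the prefix pattern httpbin.com:* does not match a bare httpbin.com. Check how your own policies behave against both forms before relying on either one.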

First example AuthorizationPolicy using prefix match

Second example AuthorizationPolicy using prefix match

2.1.5.5. New features Red Hat OpenShift Service Mesh 1.1.17

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.6. New features Red Hat OpenShift Service Mesh 1.1.16

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.7. New features Red Hat OpenShift Service Mesh 1.1.15

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.8. New features Red Hat OpenShift Service Mesh 1.1.14

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

There are manual steps that must be completed to address CVE-2021-29492 and CVE-2021-31920.

2.1.5.8.1. Manual updates required by CVE-2021-29492 and CVE-2021-31920

Istio contains a remotely exploitable vulnerability where an HTTP request path with multiple slashes or escaped slash characters (%2F or %5C) could potentially bypass an Istio authorization policy when path-based authorization rules are used.

For example, assume an Istio cluster administrator defines an authorization DENY policy to reject the request at path /admin. A request sent to the URL path //admin will NOT be rejected by the authorization policy.

According to RFC 3986, the path //admin with multiple slashes should technically be treated as a different path from the /admin. However, some backend services choose to normalize the URL paths by merging multiple slashes into a single slash. This can result in a bypass of the authorization policy (//admin does not match /admin), and a user can access the resource at path /admin in the backend; this would represent a security incident.

Your cluster is impacted by this vulnerability if you have authorization policies using ALLOW action + notPaths field or DENY action + paths field patterns. These patterns are vulnerable to unexpected policy bypasses.

Your cluster is NOT impacted by this vulnerability if:

- You don’t have authorization policies.

- Your authorization policies don’t define paths or notPaths fields.

- Your authorization policies use ALLOW action + paths field or DENY action + notPaths field patterns. These patterns could only cause unexpected rejection instead of policy bypasses. The upgrade is optional for these cases.
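The impact rules above amount to a small decision table. The following sketch encodes them in a hypothetical shell helper, policy_exposure, which takes the policy action and the path field in use; it is a reading aid, not a tool that inspects real AuthorizationPolicy resources.

```shell
# Hypothetical helper: classify a rule by its action ($1) and path field ($2).
policy_exposure() {
  case "$1:$2" in
    DENY:paths|ALLOW:notPaths) echo "possible bypass" ;;
    ALLOW:paths|DENY:notPaths) echo "possible unexpected rejection" ;;
    *)                         echo "not impacted" ;;
  esac
}

policy_exposure DENY paths     # vulnerable pattern
policy_exposure ALLOW paths    # upgrade optional
policy_exposure ALLOW none     # no paths or notPaths field defined
```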

The Red Hat OpenShift Service Mesh configuration location for path normalization is different from the Istio configuration.

2.1.5.8.2. Updating the path normalization configuration

Istio authorization policies can be based on the URL paths in the HTTP request. Path normalization, also known as URI normalization, modifies and standardizes the incoming requests' paths so that the normalized paths can be processed in a standard way. Syntactically different paths may be equivalent after path normalization.

Istio supports the following normalization schemes on the request paths before evaluating against the authorization policies and routing the requests:

| Option | Description | Example | Notes |

|---|---|---|---|

| NONE | No normalization is done. Anything received by Envoy will be forwarded exactly as-is to any backend service. | | This setting is vulnerable to CVE-2021-31920. |

| BASE | This is currently the option used in the default installation of Istio. This applies the … | | This setting is vulnerable to CVE-2021-31920. |

| MERGE_SLASHES | Slashes are merged after the BASE normalization. | | Update to this setting to mitigate CVE-2021-31920. |

| DECODE_AND_MERGE_SLASHES | The strictest setting when you allow all traffic by default. This setting is recommended, with the caveat that you must thoroughly test your authorization policies routes. Percent-encoded slash and backslash characters ( … | | Update to this setting to mitigate CVE-2021-31920. This setting is more secure, but also has the potential to break applications. Test your applications before deploying to production. |

The normalization algorithms are conducted in the following order:

- Percent-decode %2F, %2f, %5C and %5c.

- The RFC 3986 and other normalization implemented by the normalize_path option in Envoy.

- Merge slashes.

While these normalization options represent recommendations from HTTP standards and common industry practices, an application may interpret a URL in any way it chooses. When using denial policies, ensure that you understand how your application behaves.
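The ordering of the steps above can be approximated in a few lines of shell. This is a simplified sketch and not Envoy's implementation: the function name normalize_path_sketch is hypothetical, and the RFC 3986 step is deliberately left out, so only the decoding and slash-merging steps are modeled.

```shell
# Simplified sketch of the normalization order; not Envoy's implementation.
normalize_path_sketch() {
  # Step 1: percent-decode %2F/%2f to '/' and %5C/%5c to '\'.
  decoded=$(printf '%s\n' "$1" | sed -e 's|%2[Ff]|/|g' -e 's|%5[Cc]|\\|g')
  # Step 2 (RFC 3986 normalization) is omitted from this sketch.
  # Step 3: merge runs of slashes into a single slash.
  printf '%s\n' "$decoded" | sed -e 's|//*|/|g'
}

normalize_path_sketch '//admin'
normalize_path_sketch '/admin%2F%2fusers'
```

Because decoding happens before merging, a path such as /admin%2F%2fusers first becomes /admin//users and is then merged to /admin/users, the same result a backend that decodes and merges slashes would see.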

2.1.5.8.3. Path normalization configuration examples

Ensuring Envoy normalizes request paths to match your backend services' expectations is critical to the security of your system. The following examples can be used as a reference for you to configure your system. The normalized URL paths, or the original URL paths if NONE is selected, will be:

- Used to check against the authorization policies.

- Forwarded to the backend application.

| If your application… | Choose… |

|---|---|

| Relies on the proxy to do normalization | |

| Normalizes request paths based on RFC 3986 and does not merge slashes. | |

| Normalizes request paths based on RFC 3986 and merges slashes, but does not decode percent-encoded slashes. | |

| Normalizes request paths based on RFC 3986, decodes percent-encoded slashes, and merges slashes. | |

| Processes request paths in a way that is incompatible with RFC 3986. | |

2.1.5.8.4. Configuring your SMCP for path normalization

To configure path normalization for Red Hat OpenShift Service Mesh, specify the following in your ServiceMeshControlPlane. Use the configuration examples to help determine the settings for your system.

SMCP v1 pathNormalization

spec:
  global:
    pathNormalization: <option>
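If you prefer the CLI to editing the resource by hand, the same field could be set with a merge patch. The sketch below only prints an oc patch command for review. The helper name path_normalization_patch_cmd is hypothetical, the option names follow upstream Istio naming and are an assumption here, and basic-install and istio-system are example values used elsewhere in this document.

```shell
# Hypothetical helper: print an `oc patch` command that sets
# spec.global.pathNormalization on a ServiceMeshControlPlane.
# Option names follow upstream Istio naming (an assumption in this sketch).
path_normalization_patch_cmd() {
  smcp_name="$1"; namespace="$2"; option="$3"
  case "$option" in
    NONE|BASE|MERGE_SLASHES|DECODE_AND_MERGE_SLASHES) ;;
    *) echo "unknown option: $option" >&2; return 1 ;;
  esac
  patch="{\"spec\":{\"global\":{\"pathNormalization\":\"${option}\"}}}"
  echo "oc patch smcp ${smcp_name} -n ${namespace} --type=merge -p '${patch}'"
}

path_normalization_patch_cmd basic-install istio-system MERGE_SLASHES
```

Printing the command rather than running it leaves room to check the option against your application's behavior before changing the control plane.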

2.1.5.9. New features Red Hat OpenShift Service Mesh 1.1.13

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.10. New features Red Hat OpenShift Service Mesh 1.1.12

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.11. New features Red Hat OpenShift Service Mesh 1.1.11

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.12. New features Red Hat OpenShift Service Mesh 1.1.10

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.13. New features Red Hat OpenShift Service Mesh 1.1.9

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.14. New features Red Hat OpenShift Service Mesh 1.1.8

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.15. New features Red Hat OpenShift Service Mesh 1.1.7

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.16. New features Red Hat OpenShift Service Mesh 1.1.6

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.17. New features Red Hat OpenShift Service Mesh 1.1.5

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

This release also added support for configuring cipher suites.

2.1.5.18. New features Red Hat OpenShift Service Mesh 1.1.4

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

There are manual steps that must be completed to address CVE-2020-8663.

2.1.5.18.1. Manual updates required by CVE-2020-8663

The fix for CVE-2020-8663 (envoy: Resource exhaustion when accepting too many connections) added a configurable limit on downstream connections. You must set this limit to mitigate the vulnerability.

These manual steps are required to mitigate this CVE whether you are using the 1.1 version or the 1.0 version of Red Hat OpenShift Service Mesh.

This new configuration option is called overload.global_downstream_max_connections, and it is configurable as a proxy runtime setting. Perform the following steps to configure limits at the Ingress Gateway.

Procedure

- Create a file named bootstrap-override.json with the following text to force the proxy to override the bootstrap template and load runtime configuration from disk:

  { "runtime": { "symlink_root": "/var/lib/istio/envoy/runtime" } }

- Create a secret from the bootstrap-override.json file, replacing <SMCPnamespace> with the namespace where you created the service mesh control plane (SMCP):

  $ oc create secret generic -n <SMCPnamespace> gateway-bootstrap --from-file=bootstrap-override.json

- Update the SMCP configuration to activate the override.

  Updated SMCP configuration example #1

- To set the new configuration option, create a secret that has the desired value for the overload.global_downstream_max_connections setting. The following example uses a value of 10000:

  $ oc create secret generic -n <SMCPnamespace> gateway-settings --from-literal=overload.global_downstream_max_connections=10000

- Update the SMCP again to mount the secret in the location where Envoy is looking for runtime configuration:

  Updated SMCP configuration example #2
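The file and secret steps of this procedure can be sketched as a short script. It writes bootstrap-override.json to the current directory and prints the two secret-creation commands for review instead of running them. The SMCP_NAMESPACE and MAX_CONNECTIONS variables and their defaults are assumptions for illustration, and the script does not cover the two SMCP updates.

```shell
# Write the bootstrap override described in the procedure.
cat > bootstrap-override.json <<'EOF'
{
  "runtime": {
    "symlink_root": "/var/lib/istio/envoy/runtime"
  }
}
EOF

# Assumed values: adjust the namespace and limit for your environment.
SMCP_NAMESPACE="${SMCP_NAMESPACE:-istio-system}"
MAX_CONNECTIONS="${MAX_CONNECTIONS:-10000}"

# Print the secret-creation commands for review instead of running them.
echo "oc create secret generic -n ${SMCP_NAMESPACE} gateway-bootstrap --from-file=bootstrap-override.json"
echo "oc create secret generic -n ${SMCP_NAMESPACE} gateway-settings --from-literal=overload.global_downstream_max_connections=${MAX_CONNECTIONS}"
```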

2.1.5.18.2. Upgrading from Elasticsearch 5 to Elasticsearch 6

When updating from Elasticsearch 5 to Elasticsearch 6, you must delete your Jaeger instance and then recreate it because of an issue with certificates. Recreating the Jaeger instance triggers the creation of a new set of certificates. If you are using persistent storage, the same volumes can be mounted for the new Jaeger instance as long as the Jaeger name and namespace for the new Jaeger instance are the same as those of the deleted Jaeger instance.

Procedure if Jaeger is installed as part of Red Hat Service Mesh

- Determine the name of your Jaeger custom resource file:

  $ oc get jaeger -n istio-system

  You should see something like the following:

  NAME     AGE
  jaeger   3d21h

- Copy the generated custom resource file into a temporary directory:

  $ oc get jaeger jaeger -oyaml -n istio-system > /tmp/jaeger-cr.yaml

- Delete the Jaeger instance:

  $ oc delete jaeger jaeger -n istio-system

- Recreate the Jaeger instance from your copy of the custom resource file:

  $ oc create -f /tmp/jaeger-cr.yaml -n istio-system

- Delete the copy of the generated custom resource file:

  $ rm /tmp/jaeger-cr.yaml

Procedure if Jaeger is not installed as part of Red Hat Service Mesh

Before you begin, create a copy of your Jaeger custom resource file.

- Delete the Jaeger instance by deleting the custom resource file:

  $ oc delete -f <jaeger-cr-file>

  For example:

  $ oc delete -f jaeger-prod-elasticsearch.yaml

- Recreate your Jaeger instance from the backup copy of your custom resource file:

  $ oc create -f <jaeger-cr-file>

- Validate that your Pods have restarted:

  $ oc get pods -n jaeger-system -w

2.1.5.19. New features Red Hat OpenShift Service Mesh 1.1.3

This release of Red Hat OpenShift Service Mesh addresses Common Vulnerabilities and Exposures (CVEs) and bug fixes.

2.1.5.20. New features Red Hat OpenShift Service Mesh 1.1.2

This release of Red Hat OpenShift Service Mesh addresses a security vulnerability.

2.1.5.21. New features Red Hat OpenShift Service Mesh 1.1.1

This release of Red Hat OpenShift Service Mesh adds support for a disconnected installation.

2.1.5.22. New features Red Hat OpenShift Service Mesh 1.1.0

This release of Red Hat OpenShift Service Mesh adds support for Istio 1.4.6 and Jaeger 1.17.1.

2.1.5.22.1. Manual updates from 1.0 to 1.1

If you are updating from Red Hat OpenShift Service Mesh 1.0 to 1.1, you must update the ServiceMeshControlPlane resource to update the control plane components to the new version.

- In the web console, click the Red Hat OpenShift Service Mesh Operator.

- Click the Project menu and choose the project where your ServiceMeshControlPlane is deployed from the list, for example istio-system.

- Click the name of your control plane, for example basic-install.

- Click YAML and add a version field to the spec: of your ServiceMeshControlPlane resource. For example, to update to Red Hat OpenShift Service Mesh 1.1.0, add version: v1.1.

spec:
  version: v1.1
  ...

The version field specifies the version of Service Mesh to install and defaults to the latest available version.

Note that support for Red Hat OpenShift Service Mesh v1.0 ended in October, 2020. You must upgrade to either v1.1 or v2.0.

2.1.6. Deprecated features

Some features available in previous releases have been deprecated or removed.

Deprecated functionality is still included in OpenShift Container Platform and continues to be supported; however, it will be removed in a future release of this product and is not recommended for new deployments.

2.1.6.1. Deprecated features Red Hat OpenShift Service Mesh 1.1.5

The following custom resources were deprecated in release 1.1.5 and were removed in release 1.1.12:

- Policy - The Policy resource is deprecated and will be replaced by the PeerAuthentication resource in a future release.

- MeshPolicy - The MeshPolicy resource is deprecated and will be replaced by the PeerAuthentication resource in a future release.

- v1alpha1 RBAC API - The v1alpha1 RBAC policy is deprecated by the v1beta1 AuthorizationPolicy. RBAC (Role Based Access Control) defines ServiceRole and ServiceRoleBinding objects.

  - ServiceRole

  - ServiceRoleBinding

- RbacConfig - RbacConfig implements the Custom Resource Definition for controlling Istio RBAC behavior.

  - ClusterRbacConfig (versions prior to Red Hat OpenShift Service Mesh 1.0)

  - ServiceMeshRbacConfig (Red Hat OpenShift Service Mesh version 1.0 and later)

- In Kiali, the login and LDAP strategies are deprecated. A future version will introduce authentication using OpenID providers.

The following components are also deprecated in this release and will be replaced by the Istiod component in a future release.

- Mixer - access control and usage policies

- Pilot - service discovery and proxy configuration

- Citadel - certificate generation

- Galley - configuration validation and distribution

2.1.7. Known issues

These limitations exist in Red Hat OpenShift Service Mesh:

- Red Hat OpenShift Service Mesh does not support IPv6, as it is not supported by the upstream Istio project, nor fully supported by OpenShift Container Platform.

- Graph layout - The layout for the Kiali graph can render differently, depending on your application architecture and the data to display (number of graph nodes and their interactions). Because it is difficult if not impossible to create a single layout that renders nicely for every situation, Kiali offers a choice of several different layouts. To choose a different layout, you can choose a different Layout Schema from the Graph Settings menu.

- The first time you access related services such as Jaeger and Grafana from the Kiali console, you must accept the certificate and re-authenticate using your OpenShift Container Platform login credentials. This happens due to an issue with how the framework displays embedded pages in the console.

2.1.7.1. Service Mesh known issues

These are the known issues in Red Hat OpenShift Service Mesh:

- Jaeger/Kiali Operator upgrade blocked with operator pending When upgrading the Jaeger or Kiali Operators with Service Mesh 1.0.x installed, the operator status shows as Pending.

  Workaround: See the linked Knowledge Base article for more information.

- Istio-14743 Due to limitations in the version of Istio that this release of Red Hat OpenShift Service Mesh is based on, there are several applications that are currently incompatible with Service Mesh. See the linked community issue for details.

- MAISTRA-858 The following Envoy log messages describing deprecated options and configurations associated with Istio 1.1.x are expected:

  - [2019-06-03 07:03:28.943][19][warning][misc] [external/envoy/source/common/protobuf/utility.cc:129] Using deprecated option 'envoy.api.v2.listener.Filter.config'. This configuration will be removed from Envoy soon.

  - [2019-08-12 22:12:59.001][13][warning][misc] [external/envoy/source/common/protobuf/utility.cc:174] Using deprecated option 'envoy.api.v2.Listener.use_original_dst' from file lds.proto. This configuration will be removed from Envoy soon.

- MAISTRA-806 Evicted Istio Operator Pod causes mesh and CNI not to deploy.

  Workaround: If the istio-operator pod is evicted while deploying the control plane, delete the evicted istio-operator pod.

- MAISTRA-681 When the control plane has many namespaces, it can lead to performance issues.

- MAISTRA-465 The Maistra Operator fails to create a service for operator metrics.

- MAISTRA-453 If you create a new project and deploy pods immediately, sidecar injection does not occur. The operator fails to add the maistra.io/member-of before the pods are created, therefore the pods must be deleted and recreated for sidecar injection to occur.

- MAISTRA-158 Applying multiple gateways referencing the same hostname will cause all gateways to stop functioning.

2.1.7.2. Kiali known issues

New issues for Kiali should be created in the OpenShift Service Mesh project with the Component set to Kiali.

These are the known issues in Kiali:

- KIALI-2206 When you are accessing the Kiali console for the first time, and there is no cached browser data for Kiali, the “View in Grafana” link on the Metrics tab of the Kiali Service Details page redirects to the wrong location. The only way you would encounter this issue is if you are accessing Kiali for the first time.

- KIALI-507 Kiali does not support Internet Explorer 11. This is because the underlying frameworks do not support Internet Explorer. To access the Kiali console, use one of the two most recent versions of the Chrome, Edge, Firefox or Safari browser.

2.1.7.3. Red Hat OpenShift distributed tracing known issues

These limitations exist in Red Hat OpenShift distributed tracing:

- Apache Spark is not supported.

- The streaming deployment via AMQ/Kafka is unsupported on IBM Z and IBM Power Systems.

These are the known issues for Red Hat OpenShift distributed tracing:

- OBSDA-220 In some cases, if you try to pull an image using distributed tracing data collection, the image pull fails and a Failed to pull image error message appears. There is no workaround for this issue.

- TRACING-2057 The Kafka API has been updated to v1beta2 to support the Strimzi Kafka Operator 0.23.0. However, this API version is not supported by AMQ Streams 1.6.3. If you have the following environment, your Jaeger services will not be upgraded, and you cannot create new Jaeger services or modify existing Jaeger services:

  - Jaeger Operator channel: 1.17.x stable or 1.20.x stable

  - AMQ Streams Operator channel: amq-streams-1.6.x

  To resolve this issue, switch the subscription channel for your AMQ Streams Operator to either amq-streams-1.7.x or stable.

2.1.8. Fixed issues

The following issues have been resolved in the current release:

2.1.8.1. Service Mesh fixed issues

- MAISTRA-2371 Handle tombstones in listerInformer. The updated cache codebase was not handling tombstones when translating the events from the namespace caches to the aggregated cache, leading to a panic in the go routine.

- OSSM-542 Galley is not using the new certificate after rotation.

- OSSM-99 Workloads generated from direct pod without labels may crash Kiali.

- OSSM-93 IstioConfigList can’t filter by two or more names.

- OSSM-92 Cancelling unsaved changes on the VS/DR YAML edit page does not cancel the changes.

- OSSM-90 Traces not available on the service details page.

- MAISTRA-1649 Headless services conflict when in different namespaces. When deploying headless services within different namespaces the endpoint configuration is merged and results in invalid Envoy configurations being pushed to the sidecars.

- MAISTRA-1541 Panic in kubernetesenv when the controller is not set on owner reference. If a pod has an ownerReference which does not specify the controller, this will cause a panic within the kubernetesenv cache.go code.

- MAISTRA-1352 Cert-manager Custom Resource Definitions (CRD) from the control plane installation have been removed for this release and future releases. If you have already installed Red Hat OpenShift Service Mesh, the CRDs must be removed manually if cert-manager is not being used.

- MAISTRA-1001 Closing HTTP/2 connections could lead to segmentation faults in istio-proxy.

- MAISTRA-932 Added the requires metadata to add dependency relationship between Jaeger Operator and OpenShift Elasticsearch Operator. Ensures that when the Jaeger Operator is installed, it automatically deploys the OpenShift Elasticsearch Operator if it is not available.

- MAISTRA-862 Galley dropped watches and stopped providing configuration to other components after many namespace deletions and re-creations.

- MAISTRA-833 Pilot stopped delivering configuration after many namespace deletions and re-creations.

- MAISTRA-684 The default Jaeger version in the istio-operator is 1.12.0, which does not match Jaeger version 1.13.1 that shipped in Red Hat OpenShift Service Mesh 0.12.TechPreview.

- MAISTRA-622 In Maistra 0.12.0/TP12, permissive mode does not work. The user has the option to use Plain text mode or Mutual TLS mode, but not permissive.

- MAISTRA-572 Jaeger cannot be used with Kiali. In this release Jaeger is configured to use the OAuth proxy, but is also only configured to work through a browser and does not allow service access. Kiali cannot properly communicate with the Jaeger endpoint and it considers Jaeger to be disabled. See also TRACING-591.

- MAISTRA-357 In OpenShift 4 Beta on AWS, it is not possible, by default, to access a TCP or HTTPS service through the ingress gateway on a port other than port 80. The AWS load balancer has a health check that verifies if port 80 on the service endpoint is active. Without a service running on port 80, the load balancer health check fails.

- MAISTRA-348 OpenShift 4 Beta on AWS does not support ingress gateway traffic on ports other than 80 or 443. If you configure your ingress gateway to handle TCP traffic with a port number other than 80 or 443, you have to use the service hostname provided by the AWS load balancer rather than the OpenShift router as a workaround.

- MAISTRA-193 Unexpected console info messages are visible when health checking is enabled for citadel.

- Bug 1821432 Toggle controls in OpenShift Container Platform Control Resource details page do not update the CR correctly. UI Toggle controls in the Service Mesh Control Plane (SMCP) Overview page in the OpenShift Container Platform web console sometimes update the wrong field in the resource. To update a ServiceMeshControlPlane resource, edit the YAML content directly or update the resource from the command line instead of clicking the toggle controls.

2.1.8.2. Kiali fixed issues

- KIALI-3239 If a Kiali Operator pod has failed with a status of “Evicted” it blocks the Kiali operator from deploying. The workaround is to delete the Evicted pod and redeploy the Kiali operator.

- KIALI-3118 After changes to the ServiceMeshMemberRoll, for example adding or removing projects, the Kiali pod restarts and then displays errors on the Graph page while the Kiali pod is restarting.

- KIALI-3096 Runtime metrics fail in Service Mesh. There is an OAuth filter between the Service Mesh and Prometheus, requiring a bearer token to be passed to Prometheus before access is granted. Kiali has been updated to use this token when communicating to the Prometheus server, but the application metrics are currently failing with 403 errors.

- KIALI-3070 This bug only affects custom dashboards, not the default dashboards. When you select labels in metrics settings and refresh the page, your selections are retained in the menu but your selections are not displayed on the charts.

- KIALI-2686 When the control plane has many namespaces, it can lead to performance issues.

2.1.8.3. Red Hat OpenShift distributed tracing fixed issues

- OSSM-1910 Because of an issue introduced in version 2.6, TLS connections could not be established with OpenShift Container Platform Service Mesh. This update resolves the issue by changing the service port names to match conventions used by OpenShift Container Platform Service Mesh and Istio.

- OBSDA-208 Before this update, the default 200m CPU and 256Mi memory resource limits could cause distributed tracing data collection to restart continuously on large clusters. This update resolves the issue by removing these resource limits.

- OBSDA-222 Before this update, spans could be dropped in the OpenShift Container Platform distributed tracing platform. To help prevent this issue from occurring, this release updates version dependencies.

- TRACING-2337 Jaeger is logging a repetitive warning message in the Jaeger logs similar to the following:

  {"level":"warn","ts":1642438880.918793,"caller":"channelz/logging.go:62","msg":"[core]grpc: Server.Serve failed to create ServerTransport: connection error: desc = \"transport: http2Server.HandleStreams received bogus greeting from client: \\\"\\\\x16\\\\x03\\\\x01\\\\x02\\\\x00\\\\x01\\\\x00\\\\x01\\\\xfc\\\\x03\\\\x03vw\\\\x1a\\\\xc9T\\\\xe7\\\\xdaCj\\\\xb7\\\\x8dK\\\\xa6\\\"\"","system":"grpc","grpc_log":true}

  This issue was resolved by exposing only the HTTP(S) port of the query service, and not the gRPC port.

- TRACING-2009 The Jaeger Operator has been updated to include support for the Strimzi Kafka Operator 0.23.0.

- TRACING-1907 The Jaeger agent sidecar injection was failing due to missing config maps in the application namespace. The config maps were getting automatically deleted due to an incorrect OwnerReference field setting and as a result, the application pods were not moving past the "ContainerCreating" stage. The incorrect settings have been removed.

- TRACING-1725 Follow-up to TRACING-1631. Additional fix to ensure that Elasticsearch certificates are properly reconciled when there are multiple Jaeger production instances, using same name but within different namespaces. See also BZ-1918920.

- TRACING-1631 Multiple Jaeger production instances, using same name but within different namespaces, causing Elasticsearch certificate issue. When multiple service meshes were installed, all of the Jaeger Elasticsearch instances had the same Elasticsearch secret instead of individual secrets, which prevented the OpenShift Elasticsearch Operator from communicating with all of the Elasticsearch clusters.

- TRACING-1300 Failed connection between Agent and Collector when using Istio sidecar. An update of the Jaeger Operator enabled TLS communication by default between a Jaeger sidecar agent and the Jaeger Collector.

- TRACING-1208 Authentication "500 Internal Error" when accessing Jaeger UI. When trying to authenticate to the UI using OAuth, authentication fails with a 500 error because the oauth-proxy sidecar does not trust the custom CA bundle defined at installation time with the additionalTrustBundle.

- TRACING-1166 It is not currently possible to use the Jaeger streaming strategy within a disconnected environment. When a Kafka cluster is being provisioned, it results in an error: Failed to pull image registry.redhat.io/amq7/amq-streams-kafka-24-rhel7@sha256:f9ceca004f1b7dccb3b82d9a8027961f9fe4104e0ed69752c0bdd8078b4a1076.

- TRACING-809 Jaeger Ingester is incompatible with Kafka 2.3. When there are two or more instances of the Jaeger Ingester and enough traffic it will continuously generate rebalancing messages in the logs. This is due to a regression in Kafka 2.3 that was fixed in Kafka 2.3.1. For more information, see Jaegertracing-1819.

- BZ-1918920/LOG-1619 The Elasticsearch pods do not get restarted automatically after an update.

  Workaround: Restart the pods manually.

2.2. Understanding Service Mesh

Red Hat OpenShift Service Mesh provides a platform for behavioral insight and operational control over your networked microservices in a service mesh. With Red Hat OpenShift Service Mesh, you can connect, secure, and monitor microservices in your OpenShift Container Platform environment.

2.2.1. Understanding service mesh

A service mesh is the network of microservices that make up applications in a distributed microservice architecture and the interactions between those microservices. When a service mesh grows in size and complexity, it can become harder to understand and manage.

Based on the open source Istio project, Red Hat OpenShift Service Mesh adds a transparent layer on existing distributed applications without requiring any changes to the service code. You add Red Hat OpenShift Service Mesh support to services by deploying a special sidecar proxy to relevant services in the mesh that intercepts all network communication between microservices. You configure and manage the Service Mesh using the Service Mesh control plane features.

Red Hat OpenShift Service Mesh gives you an easy way to create a network of deployed services that provide:

- Discovery

- Load balancing

- Service-to-service authentication

- Failure recovery

- Metrics

- Monitoring

Red Hat OpenShift Service Mesh also provides more complex operational functions including:

- A/B testing

- Canary releases

- Access control

- End-to-end authentication

2.2.2. Red Hat OpenShift Service Mesh Architecture

Red Hat OpenShift Service Mesh is logically split into a data plane and a control plane:

The data plane is a set of intelligent proxies deployed as sidecars. These proxies intercept and control all inbound and outbound network communication between microservices in the service mesh. Sidecar proxies also communicate with Mixer, the general-purpose policy and telemetry hub.

- Envoy proxy intercepts all inbound and outbound traffic for all services in the service mesh. Envoy is deployed as a sidecar to the relevant service in the same pod.

The control plane manages and configures proxies to route traffic, and configures Mixers to enforce policies and collect telemetry.

- Mixer enforces access control and usage policies (such as authorization, rate limits, quotas, authentication, and request tracing) and collects telemetry data from the Envoy proxy and other services.

- Pilot configures the proxies at runtime. Pilot provides service discovery for the Envoy sidecars, traffic management capabilities for intelligent routing (for example, A/B tests or canary deployments), and resiliency (timeouts, retries, and circuit breakers).

- Citadel issues and rotates certificates. Citadel provides strong service-to-service and end-user authentication with built-in identity and credential management. You can use Citadel to upgrade unencrypted traffic in the service mesh. Operators can enforce policies based on service identity rather than on network controls using Citadel.

- Galley ingests the service mesh configuration, then validates, processes, and distributes the configuration. Galley protects the other service mesh components from obtaining user configuration details from OpenShift Container Platform.

Red Hat OpenShift Service Mesh also uses the istio-operator to manage the installation of the control plane. An Operator is a piece of software that enables you to implement and automate common activities in your OpenShift Container Platform cluster. It acts as a controller, allowing you to set or change the desired state of objects in your cluster.

2.2.3. Understanding Kiali

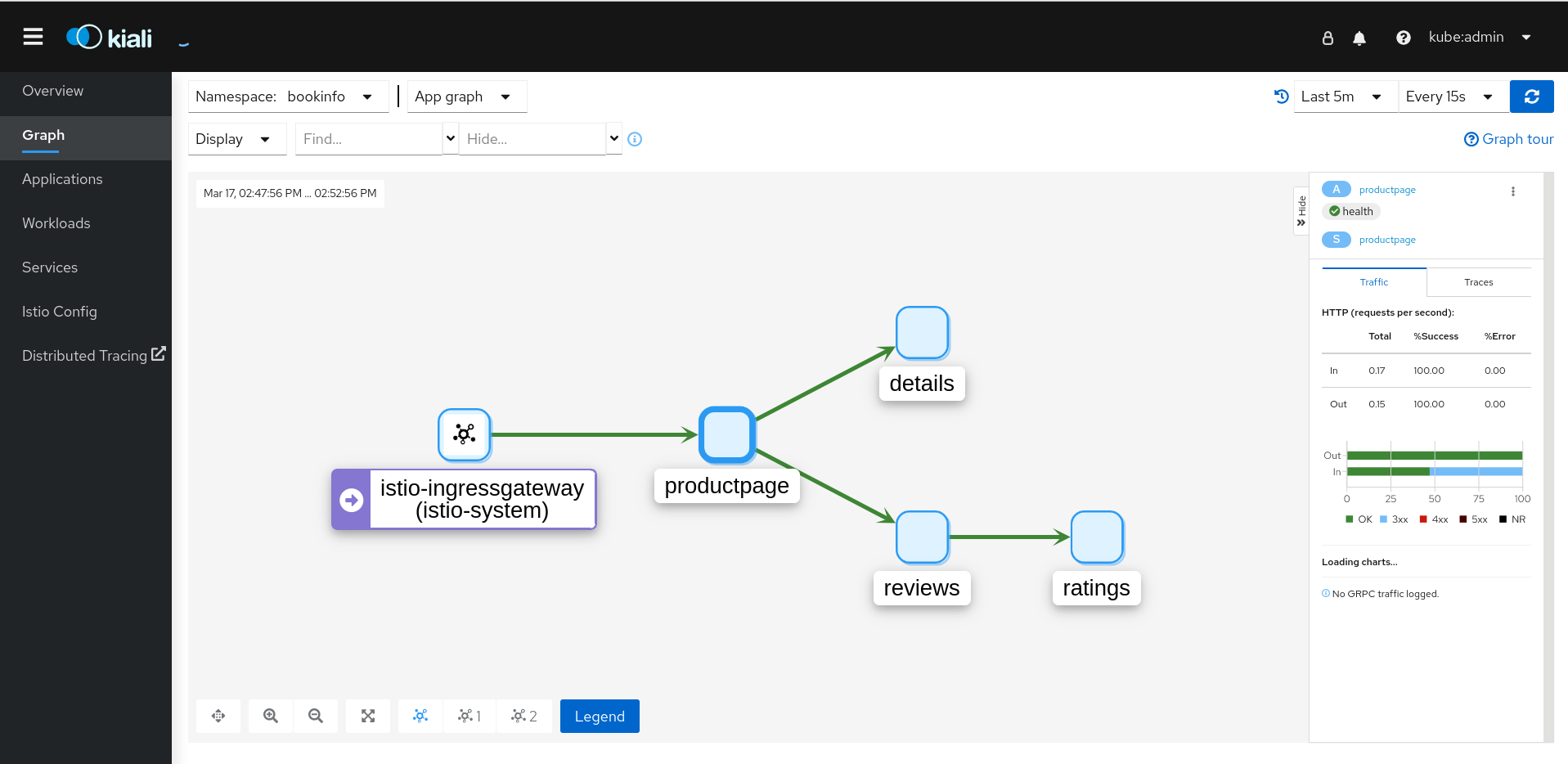

Kiali provides visibility into your service mesh by showing you the microservices in your service mesh, and how they are connected.

2.2.3.1. Kiali overview

Kiali provides observability into the Service Mesh running on OpenShift Container Platform. Kiali helps you define, validate, and observe your Istio service mesh. It helps you to understand the structure of your service mesh by inferring the topology, and also provides information about the health of your service mesh.

Kiali provides an interactive graph view of your namespace in real time that provides visibility into features like circuit breakers, request rates, latency, and even graphs of traffic flows. Kiali offers insights about components at different levels, from Applications to Services and Workloads, and can display the interactions with contextual information and charts on the selected graph node or edge. Kiali also provides the ability to validate your Istio configurations, such as gateways, destination rules, virtual services, mesh policies, and more. Kiali provides detailed metrics, and a basic Grafana integration is available for advanced queries. Distributed tracing is provided by integrating Jaeger into the Kiali console.

Kiali is installed by default as part of the Red Hat OpenShift Service Mesh.

2.2.3.2. Kiali architecture

Kiali is based on the open source Kiali project. Kiali is composed of two components: the Kiali application and the Kiali console.

- Kiali application (back end) – This component runs in the container application platform and communicates with the service mesh components, retrieves and processes data, and exposes this data to the console. The Kiali application does not need storage. When deploying the application to a cluster, configurations are set in ConfigMaps and secrets.

- Kiali console (front end) – The Kiali console is a web application. The Kiali application serves the Kiali console, which then queries the back end for data to present it to the user.

In addition, Kiali depends on external services and components provided by the container application platform and Istio.

- Red Hat Service Mesh (Istio) - Istio is a Kiali requirement. Istio is the component that provides and controls the service mesh. Although Kiali and Istio can be installed separately, Kiali depends on Istio and will not work if it is not present. Kiali needs to retrieve Istio data and configurations, which are exposed through Prometheus and the cluster API.

- Prometheus - A dedicated Prometheus instance is included as part of the Red Hat OpenShift Service Mesh installation. When Istio telemetry is enabled, metrics data are stored in Prometheus. Kiali uses this Prometheus data to determine the mesh topology, display metrics, calculate health, show possible problems, and so on. Kiali communicates directly with Prometheus and assumes the data schema used by Istio Telemetry. Prometheus is an Istio dependency and a hard dependency for Kiali, and many of Kiali’s features will not work without Prometheus.

- Cluster API - Kiali uses the API of the OpenShift Container Platform (cluster API) to fetch and resolve service mesh configurations. Kiali queries the cluster API to retrieve, for example, definitions for namespaces, services, deployments, pods, and other entities. Kiali also makes queries to resolve relationships between the different cluster entities. The cluster API is also queried to retrieve Istio configurations like virtual services, destination rules, route rules, gateways, quotas, and so on.

- Jaeger - Jaeger is optional, but is installed by default as part of the Red Hat OpenShift Service Mesh installation. When you install the distributed tracing platform as part of the default Red Hat OpenShift Service Mesh installation, the Kiali console includes a tab to display distributed tracing data. Note that tracing data will not be available if you disable Istio’s distributed tracing feature. Also note that the user must have access to the namespace where the Service Mesh control plane is installed to view tracing data.

- Grafana - Grafana is optional, but is installed by default as part of the Red Hat OpenShift Service Mesh installation. When available, the metrics pages of Kiali display links to direct the user to the same metric in Grafana. Note that the user must have access to the namespace where the Service Mesh control plane is installed to view links to the Grafana dashboard and view Grafana data.

2.2.3.3. Kiali features

The Kiali console is integrated with Red Hat Service Mesh and provides the following capabilities:

- Health – Quickly identify issues with applications, services, or workloads.

- Topology – Visualize how your applications, services, or workloads communicate via the Kiali graph.

- Metrics – Predefined metrics dashboards let you chart service mesh and application performance for Go, Node.js, Quarkus, Spring Boot, Thorntail, and Vert.x. You can also create your own custom dashboards.

- Tracing – Integration with Jaeger lets you follow the path of a request through various microservices that make up an application.

- Validations – Perform advanced validations on the most common Istio objects (Destination Rules, Service Entries, Virtual Services, and so on).

- Configuration – Optional ability to create, update and delete Istio routing configuration using wizards or directly in the YAML editor in the Kiali Console.

2.2.4. Understanding Jaeger

Every time a user takes an action in an application, a request is executed by the architecture that may require dozens of different services to participate to produce a response. The path of this request is a distributed transaction. Jaeger lets you perform distributed tracing, which follows the path of a request through various microservices that make up an application.

Distributed tracing is a technique that is used to tie the information about different units of work together—usually executed in different processes or hosts—to understand a whole chain of events in a distributed transaction. Distributed tracing lets developers visualize call flows in large service oriented architectures. It can be invaluable in understanding serialization, parallelism, and sources of latency.

Jaeger records the execution of individual requests across the whole stack of microservices, and presents them as traces. A trace is a data/execution path through the system. An end-to-end trace is composed of one or more spans.

A span represents a logical unit of work in Jaeger that has an operation name, the start time of the operation, and the duration. Spans may be nested and ordered to model causal relationships.
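The relationship between a trace and its spans can be sketched as a small tree. The following Python model is only an illustration, not Jaeger's data model or client API: the `Span` class, the `walk` helper, the microsecond unit, and the operation names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Span:
    """One logical unit of work: operation name, start time, and duration."""
    operation_name: str
    start_us: int      # start time in microseconds (unit chosen for this sketch)
    duration_us: int
    children: List["Span"] = field(default_factory=list)

    @property
    def end_us(self) -> int:
        return self.start_us + self.duration_us

    def add_child(self, child: "Span") -> "Span":
        """Nest a span under this one to model a causal relationship."""
        self.children.append(child)
        return child


def walk(span: Span, depth: int = 0) -> Iterator[Tuple[int, Span]]:
    """Yield (depth, span) pairs, parents first, children ordered by start time."""
    yield depth, span
    for child in sorted(span.children, key=lambda s: s.start_us):
        yield from walk(child, depth + 1)


def build_example_trace() -> Span:
    """Build a made-up end-to-end trace: one root span with nested children."""
    root = Span("GET /checkout", 0, 120_000)
    root.add_child(Span("auth.verify", 5_000, 20_000))
    cart = root.add_child(Span("cart.load", 30_000, 60_000))
    cart.add_child(Span("db.query", 35_000, 40_000))
    return root


if __name__ == "__main__":
    for depth, span in walk(build_example_trace()):
        print("  " * depth + f"{span.operation_name} start={span.start_us} dur={span.duration_us}")
```

Here the root span is the whole request, and each nested span is work done on its behalf, which is how nesting and ordering express causality.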

2.2.4.1. Distributed tracing overview

As a service owner, you can use distributed tracing to instrument your services to gather insights into your service architecture. You can use distributed tracing for monitoring, network profiling, and troubleshooting the interaction between components in modern, cloud-native, microservices-based applications.

With distributed tracing you can perform the following functions:

- Monitor distributed transactions

- Optimize performance and latency

- Perform root cause analysis

Red Hat OpenShift distributed tracing consists of two main components:

- Red Hat OpenShift distributed tracing platform - This component is based on the open source Jaeger project.

- Red Hat OpenShift distributed tracing data collection - This component is based on the open source OpenTelemetry project.

Jaeger does not use FIPS validated cryptographic modules.

2.2.4.2. Distributed tracing architecture

The distributed tracing platform is based on the open source Jaeger project. The distributed tracing platform is made up of several components that work together to collect, store, and display tracing data.

- Jaeger Client (Tracer, Reporter, instrumented application, client libraries) - Jaeger clients are language-specific implementations of the OpenTracing API. They can be used to instrument applications for distributed tracing either manually or with a variety of existing open source frameworks, such as Camel (Fuse), Spring Boot (RHOAR), MicroProfile (RHOAR/Thorntail), Wildfly (EAP), and many more, that are already integrated with OpenTracing.

- Jaeger Agent (Server Queue, Processor Workers) - The Jaeger agent is a network daemon that listens for spans sent over User Datagram Protocol (UDP), which it batches and sends to the collector. The agent is meant to be placed on the same host as the instrumented application. This is typically accomplished by having a sidecar in container environments like Kubernetes.

- Jaeger Collector (Queue, Workers) - Similar to the Agent, the Collector is able to receive spans and place them in an internal queue for processing. This allows the collector to return immediately to the client/agent instead of waiting for the span to make its way to the storage.

- Storage (Data Store) - Collectors require a persistent storage backend. Jaeger has a pluggable mechanism for span storage. Note that for this release, the only supported storage is Elasticsearch.

- Query (Query Service) - Query is a service that retrieves traces from storage.

- Ingester (Ingester Service) - Jaeger can use Apache Kafka as a buffer between the collector and the actual backing storage (Elasticsearch). Ingester is a service that reads data from Kafka and writes to another storage backend (Elasticsearch).

- Jaeger Console – Jaeger provides a user interface that lets you visualize your distributed tracing data. On the Search page, you can find traces and explore details of the spans that make up an individual trace.
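The hand-off between the agent and the collector described above can be sketched as a toy pipeline. This is a simplified illustration under stated assumptions, not Jaeger code: the `Agent` and `Collector` classes, the `batch_size` parameter, and the in-memory list standing in for Elasticsearch are all hypothetical, and no UDP transport is modeled.

```python
from collections import deque
from typing import Deque, List


class Collector:
    """Accepts batches into an internal queue and persists them later."""

    def __init__(self) -> None:
        self.queue: Deque[str] = deque()
        self.storage: List[str] = []  # stand-in for the storage backend

    def receive(self, batch: List[str]) -> None:
        # Enqueue only, so the caller is not blocked on storage writes.
        self.queue.extend(batch)

    def process(self) -> None:
        # Worker step: drain the queue into storage.
        while self.queue:
            self.storage.append(self.queue.popleft())


class Agent:
    """Buffers spans from the local application and forwards them in batches."""

    def __init__(self, collector: Collector, batch_size: int = 3) -> None:
        self.collector = collector
        self.batch_size = batch_size
        self.buffer: List[str] = []

    def report(self, span: str) -> None:
        self.buffer.append(span)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.collector.receive(self.buffer)
            self.buffer = []


if __name__ == "__main__":
    collector = Collector()
    agent = Agent(collector)
    for i in range(4):
        agent.report(f"span-{i}")
    agent.flush()
    collector.process()
    print(collector.storage)
```

The design point the sketch tries to show is the decoupling: `receive` returns as soon as the batch is queued, and a separate processing step writes to storage.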

2.2.4.3. Red Hat OpenShift distributed tracing features

Red Hat OpenShift distributed tracing provides the following capabilities:

- Integration with Kiali – When properly configured, you can view distributed tracing data from the Kiali console.

- High scalability – The distributed tracing back end is designed to have no single points of failure and to scale with the business needs.

- Distributed Context Propagation – Enables you to connect data from different components together to create a complete end-to-end trace.

- Backwards compatibility with Zipkin – Red Hat OpenShift distributed tracing has APIs that enable it to be used as a drop-in replacement for Zipkin, but Red Hat is not supporting Zipkin compatibility in this release.

2.2.5. Next steps

- Prepare to install Red Hat OpenShift Service Mesh in your OpenShift Container Platform environment.

2.3. Service Mesh and Istio differences

An installation of Red Hat OpenShift Service Mesh differs from upstream Istio community installations in multiple ways. The modifications to Red Hat OpenShift Service Mesh are sometimes necessary to resolve issues, provide additional features, or to handle differences when deploying on OpenShift Container Platform.

The current release of Red Hat OpenShift Service Mesh differs from the current upstream Istio community release in the following ways:

2.3.1. Multitenant installations

Whereas upstream Istio takes a single tenant approach, Red Hat OpenShift Service Mesh supports multiple independent control planes within the cluster. Red Hat OpenShift Service Mesh uses a multitenant operator to manage the control plane lifecycle.

Red Hat OpenShift Service Mesh installs a multitenant control plane by default. You specify the projects that can access the Service Mesh, and isolate the Service Mesh from other control plane instances.

2.3.1.1. Multitenancy versus cluster-wide installations

The main difference between a multitenant installation and a cluster-wide installation is the scope of privileges used by istiod. The components no longer use the cluster-scoped Role Based Access Control (RBAC) resource ClusterRoleBinding.

Every project in the ServiceMeshMemberRoll members list will have a RoleBinding for each service account associated with the control plane deployment and each control plane deployment will only watch those member projects. Each member project has a maistra.io/member-of label added to it, where the member-of value is the project containing the control plane installation.

Red Hat OpenShift Service Mesh configures each member project to ensure network access between itself, the control plane, and other member projects. The exact configuration differs depending on how OpenShift Container Platform software-defined networking (SDN) is configured. See About OpenShift SDN for additional details.

If the OpenShift Container Platform cluster is configured to use the SDN plugin:

- NetworkPolicy: Red Hat OpenShift Service Mesh creates a NetworkPolicy resource in each member project allowing ingress to all pods from the other members and the control plane. If you remove a member from Service Mesh, this NetworkPolicy resource is deleted from the project.

  Note: This also restricts ingress to only member projects. If you require ingress from non-member projects, you need to create a NetworkPolicy to allow that traffic through.

- Multitenant: Red Hat OpenShift Service Mesh joins the NetNamespace for each member project to the NetNamespace of the control plane project (the equivalent of running oc adm pod-network join-projects --to control-plane-project member-project). If you remove a member from the Service Mesh, its NetNamespace is isolated from the control plane (the equivalent of running oc adm pod-network isolate-projects member-project).

- Subnet: No additional configuration is performed.
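For the NetworkPolicy mode, the following Python sketch builds a manifest of the general shape described above: ingress to all pods in a member project, allowed only from projects that carry the maistra.io/member-of label for the same control plane. It is an approximation and not the resource the Operator actually generates. The function name and the policy name `allow-from-mesh-members` are hypothetical, and the sketch assumes the control plane project itself also carries the label; if it does not, a second selector for that project would be needed.

```python
def member_network_policy(member_project: str, control_plane_project: str) -> dict:
    """Build a NetworkPolicy manifest (as a dict) for one member project."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": "allow-from-mesh-members",  # hypothetical name
            "namespace": member_project,
        },
        "spec": {
            "podSelector": {},  # empty selector: applies to all pods in the project
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {
                            # Assumption: every mesh project, including the control
                            # plane project, is labeled with maistra.io/member-of.
                            "namespaceSelector": {
                                "matchLabels": {
                                    "maistra.io/member-of": control_plane_project
                                }
                            }
                        }
                    ]
                }
            ],
        },
    }


if __name__ == "__main__":
    import json
    print(json.dumps(member_network_policy("bookinfo", "istio-system"), indent=2))
```

Because the policy selects all pods and lists only mesh members as sources, traffic from non-member projects is dropped unless you add a further NetworkPolicy, which matches the note in the list above.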

2.3.1.2. Cluster scoped resources

Upstream Istio relies on two cluster-scoped resources: MeshPolicy and ClusterRbacConfig. These are not compatible with a multitenant cluster and have been replaced as described below.

- ServiceMeshPolicy replaces MeshPolicy for configuration of control-plane-wide authentication policies. This must be created in the same project as the control plane.

- ServiceMeshRbacConfig replaces ClusterRbacConfig for configuration of control-plane-wide role based access control. This must be created in the same project as the control plane.

2.3.2. Differences between Istio and Red Hat OpenShift Service Mesh

An installation of Red Hat OpenShift Service Mesh differs from an installation of Istio in multiple ways. The modifications to Red Hat OpenShift Service Mesh are sometimes necessary to resolve issues, provide additional features, or to handle differences when deploying on OpenShift Container Platform.

2.3.2.1. Command line tool

The command line tool for Red Hat OpenShift Service Mesh is oc. Red Hat OpenShift Service Mesh does not support istioctl.

2.3.2.2. Automatic injection

The upstream Istio community installation automatically injects the sidecar into pods within the projects you have labeled.

Red Hat OpenShift Service Mesh does not automatically inject the sidecar into any pods, but requires you to opt in to injection using an annotation without labeling projects. This method requires fewer privileges and does not conflict with other OpenShift capabilities such as builder pods. To enable automatic injection, specify the sidecar.istio.io/inject annotation as described in the Automatic sidecar injection section.
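As a rough illustration of the opt-in, the Python sketch below adds the sidecar.istio.io/inject annotation to the pod template of a Deployment manifest held as a dictionary. The helper name `enable_sidecar_injection` and the sample Deployment are hypothetical. The sketch assumes the annotation belongs on the pod template (spec.template.metadata.annotations) rather than on the Deployment's own metadata.

```python
import copy


def enable_sidecar_injection(deployment: dict) -> dict:
    """Return a copy of a Deployment manifest whose pod template opts in to injection."""
    patched = copy.deepcopy(deployment)  # leave the caller's manifest untouched
    template_meta = (
        patched.setdefault("spec", {})
        .setdefault("template", {})
        .setdefault("metadata", {})
    )
    # Annotation values are strings, so the value is "true" and not a boolean.
    template_meta.setdefault("annotations", {})["sidecar.istio.io/inject"] = "true"
    return patched


if __name__ == "__main__":
    # Made-up minimal Deployment used only for this example.
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "sleep"},
        "spec": {"template": {"metadata": {"labels": {"app": "sleep"}}}},
    }
    print(enable_sidecar_injection(deployment)["spec"]["template"]["metadata"])
```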

2.3.2.3. Istio Role Based Access Control features

Istio Role Based Access Control (RBAC) provides a mechanism you can use to control access to a service. You can identify subjects by user name or by specifying a set of properties and apply access controls accordingly.

The upstream Istio community installation includes options to perform exact header matches, match wildcards in headers, or check for a header containing a specific prefix or suffix.

Red Hat OpenShift Service Mesh extends the ability to match request headers by using a regular expression. Specify a property key of request.regex.headers with a regular expression.
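The difference between the two matching styles can be illustrated in a few lines of Python. This is a conceptual sketch only and not the proxy's implementation: both function names are hypothetical, the wildcard handling is a simplification of the exact, prefix, and suffix matches described above, and the sketch assumes a regular expression must match the whole header value.

```python
import re


def match_header_value(pattern: str, value: str) -> bool:
    """Exact match, prefix match ("abc*"), or suffix match ("*abc")."""
    if pattern.endswith("*"):
        return value.startswith(pattern[:-1])
    if pattern.startswith("*"):
        return value.endswith(pattern[1:])
    return pattern == value


def match_header_regex(expression: str, value: str) -> bool:
    """Regular-expression match; assumes the whole value must match."""
    return re.fullmatch(expression, value) is not None


if __name__ == "__main__":
    print(match_header_value("test-*", "test-abc"))
    print(match_header_regex(r"v[0-9]+", "v12"))
```

A regular expression can express constraints that a single prefix or suffix wildcard cannot, such as "the letter v followed only by digits".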

Upstream Istio community matching request headers example

Red Hat OpenShift Service Mesh matching request headers by using regular expressions

2.3.2.4. OpenSSL

Red Hat OpenShift Service Mesh replaces BoringSSL with OpenSSL. OpenSSL is a software library that contains an open source implementation of the Secure Sockets Layer (SSL) and Transport Layer Security (TLS) protocols. The Red Hat OpenShift Service Mesh Proxy binary dynamically links the OpenSSL libraries (libssl and libcrypto) from the underlying Red Hat Enterprise Linux operating system.

2.3.2.5. Component modifications

- A maistra-version label has been added to all resources.

- All Ingress resources have been converted to OpenShift Route resources.

- Grafana, Tracing (Jaeger), and Kiali are enabled by default and exposed through OpenShift routes.

- Godebug has been removed from all templates.

- The istio-multi ServiceAccount and ClusterRoleBinding have been removed, as well as the istio-reader ClusterRole.

2.3.2.6. Envoy, Secret Discovery Service, and certificates

- Red Hat OpenShift Service Mesh does not support QUIC-based services.

- Deployment of TLS certificates using the Secret Discovery Service (SDS) functionality of Istio is not currently supported in Red Hat OpenShift Service Mesh. The Istio implementation depends on a nodeagent container that uses hostPath mounts.

2.3.2.7. Istio Container Network Interface (CNI) plugin

Red Hat OpenShift Service Mesh includes a CNI plugin, which provides you with an alternate way to configure application pod networking. The CNI plugin replaces the init-container network configuration, eliminating the need to grant service accounts and projects access to Security Context Constraints (SCCs) with elevated privileges.

2.3.2.8. Routes for Istio Gateways

OpenShift routes for Istio Gateways are automatically managed in Red Hat OpenShift Service Mesh. Every time an Istio Gateway is created, updated or deleted inside the service mesh, an OpenShift route is created, updated or deleted.

A Red Hat OpenShift Service Mesh control plane component called Istio OpenShift Routing (IOR) synchronizes the gateway route. For more information, see Automatic route creation.
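
For illustration, a Gateway such as the following would be expected to cause IOR to create one OpenShift route per listed host. This is a minimal sketch: the Gateway name and hostnames are hypothetical, and the selector assumes the default ingress gateway labels.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: gateway1                  # hypothetical name
spec:
  selector:
    istio: ingressgateway         # assumes the default ingress gateway label
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:                        # hypothetical hostnames
    - www.bookinfo.com
    - bookinfo.example.com
```

Deleting the Gateway, or removing a host from it, should remove the corresponding route in the same way.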

2.3.2.8.1. Catch-all domains

Catch-all domains ("*") are not supported. If one is found in the Gateway definition, Red Hat OpenShift Service Mesh creates the route but relies on OpenShift to generate a default hostname. The resulting route is therefore not a catch-all ("*") route; instead, it has a hostname in the form <route-name>[-<project>].<suffix>. See the OpenShift documentation for more information about how default hostnames work and how a cluster administrator can customize them.

2.3.2.8.2. Subdomains

Subdomains (for example, "*.domain.com") are supported. However, this ability is not enabled by default in OpenShift Container Platform. Red Hat OpenShift Service Mesh creates the route with the subdomain, but the route takes effect only if OpenShift Container Platform is configured to allow it.
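
One way a cluster administrator might allow wildcard routes is through the wildcard policy of the Ingress Controller. The sketch below assumes the default Ingress Controller and the routeAdmission.wildcardPolicy field available in recent OpenShift Container Platform 4 releases; confirm it against the documentation for your cluster version. The Gateway name and domain are hypothetical.

```yaml
# Assumption: the default IngressController is the one serving mesh routes.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  routeAdmission:
    wildcardPolicy: WildcardsAllowed   # the default policy does not admit wildcard routes
---
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: subdomain-gateway              # hypothetical name
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*.domain.com"                   # subdomain wildcard, quoted for YAML
```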

2.3.2.8.3. Transport layer security

Transport Layer Security (TLS) is supported. This means that, if the Gateway contains a tls section, the OpenShift Route will be configured to support TLS.
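
A Gateway with a tls section might look like the following sketch. The Gateway name, hostname, and certificate paths are hypothetical; the paths follow the convention of certificates mounted into the ingress gateway pod, which is an assumption about your deployment.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: secure-gateway            # hypothetical name
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      # Assumed mount paths for the gateway certificate and key.
      serverCertificate: /etc/istio/ingressgateway-certs/tls.crt
      privateKey: /etc/istio/ingressgateway-certs/tls.key
    hosts:
    - bookinfo.example.com        # hypothetical hostname
```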

2.3.3. Kiali and service mesh

Installing Kiali via the Service Mesh on OpenShift Container Platform differs from community Kiali installations in multiple ways. These modifications are sometimes necessary to resolve issues, provide additional features, or to handle differences when deploying on OpenShift Container Platform.

- Kiali has been enabled by default.

- Ingress has been enabled by default.

- Updates have been made to the Kiali ConfigMap.

- Updates have been made to the ClusterRole settings for Kiali.

- Do not edit the ConfigMap, because your changes might be overwritten by the Service Mesh or Kiali Operators. Files that the Kiali Operator manages have a kiali.io/ label or annotation. Updating the Operator files should be restricted to those users with cluster-admin privileges. If you use Red Hat OpenShift Dedicated, updating the Operator files should be restricted to those users with dedicated-admin privileges.

2.3.4. Distributed tracing and service mesh

Installing the distributed tracing platform with the Service Mesh on OpenShift Container Platform differs from community Jaeger installations in multiple ways. These modifications are sometimes necessary to resolve issues, provide additional features, or to handle differences when deploying on OpenShift Container Platform.

- Distributed tracing has been enabled by default for Service Mesh.

- Ingress has been enabled by default for Service Mesh.

- The Zipkin port name has changed to jaeger-collector-zipkin (from http).

- Jaeger uses Elasticsearch for storage by default when you select either the production or streaming deployment option.

- The community version of Istio provides a generic "tracing" route. Red Hat OpenShift Service Mesh uses a "jaeger" route that is installed by the Red Hat OpenShift distributed tracing platform Operator and is already protected by OAuth.

- Red Hat OpenShift Service Mesh uses a sidecar for the Envoy proxy, and Jaeger also uses a sidecar, for the Jaeger agent. These two sidecars are configured separately and should not be confused with each other. The proxy sidecar creates spans related to the pod’s ingress and egress traffic. The agent sidecar receives the spans emitted by the application and sends them to the Jaeger Collector.
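
To make the storage behavior concrete, a Jaeger custom resource that selects the production deployment option with Elasticsearch storage could be sketched as follows. The resource name and node count are hypothetical, and the storage type is written out explicitly here rather than relying on the default.

```yaml
apiVersion: jaegertracing.io/v1
kind: Jaeger
metadata:
  name: jaeger-production         # hypothetical name
  namespace: istio-system         # example control plane project
spec:
  strategy: production            # or: streaming
  storage:
    type: elasticsearch
    elasticsearch:
      nodeCount: 3                # hypothetical sizing; adjust for your environment
```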

2.4. Preparing to install Service Mesh

Before you install Red Hat OpenShift Service Mesh, review the installation activities and ensure that you meet the prerequisites.

2.4.1. Prerequisites

- Possess an active OpenShift Container Platform subscription on your Red Hat account. If you do not have a subscription, contact your sales representative for more information.

- Review the OpenShift Container Platform 4.10 overview.

- Install OpenShift Container Platform 4.10.

  - Install OpenShift Container Platform 4.10 on AWS

  - Install OpenShift Container Platform 4.10 on user-provisioned AWS

  - Install OpenShift Container Platform 4.10 on bare metal

  - Install OpenShift Container Platform 4.10 on vSphere

  Note: If you are installing Red Hat OpenShift Service Mesh on a restricted network, follow the instructions for your chosen OpenShift Container Platform infrastructure.

- Install the version of the OpenShift Container Platform command line utility (the oc client tool) that matches your OpenShift Container Platform version and add it to your path.

  - If you are using OpenShift Container Platform 4.10, see About the OpenShift CLI.

2.4.2. Red Hat OpenShift Service Mesh supported configurations

The following are the only supported configurations for the Red Hat OpenShift Service Mesh:

- OpenShift Container Platform version 4.6 or later.

OpenShift Online and Red Hat OpenShift Dedicated are not supported for Red Hat OpenShift Service Mesh.

- The deployment must be contained within a single OpenShift Container Platform cluster that is not federated.

- This release of Red Hat OpenShift Service Mesh is only available on OpenShift Container Platform x86_64.

- This release only supports configurations where all Service Mesh components are contained in the OpenShift Container Platform cluster in which it operates. It does not support management of microservices that reside outside of the cluster, or in a multi-cluster scenario.

- This release only supports configurations that do not integrate external services such as virtual machines.

For additional information about Red Hat OpenShift Service Mesh lifecycle and supported configurations, refer to the Support Policy.

2.4.2.1. Supported configurations for Kiali on Red Hat OpenShift Service Mesh

- The Kiali observability console is only supported on the two most recent releases of the Chrome, Edge, Firefox, or Safari browsers.

2.4.2.2. Supported Mixer adapters

This release only supports the following Mixer adapter:

- 3scale Istio Adapter

2.4.3. Operator overview

Red Hat OpenShift Service Mesh requires the following four Operators:

- OpenShift Elasticsearch - (Optional) Provides database storage for tracing and logging with the distributed tracing platform. It is based on the open source Elasticsearch project.

- Red Hat OpenShift distributed tracing platform - Provides distributed tracing to monitor and troubleshoot transactions in complex distributed systems. It is based on the open source Jaeger project.

- Kiali Operator provided by Red Hat - Provides observability for your service mesh. You can view configurations, monitor traffic, and analyze traces in a single console. It is based on the open source Kiali project.

- Red Hat OpenShift Service Mesh - Allows you to connect, secure, control, and observe the microservices that comprise your applications. The Service Mesh Operator defines and monitors the ServiceMeshControlPlane resources that manage the deployment, updating, and deletion of the Service Mesh components. It is based on the open source Istio project.

See Configuring the log store for details on configuring the default Jaeger parameters for Elasticsearch in a production environment.

2.4.4. Next steps

- Install Red Hat OpenShift Service Mesh in your OpenShift Container Platform environment.

2.5. Installing Service Mesh

Installing the Service Mesh involves installing the OpenShift Elasticsearch, Jaeger, Kiali and Service Mesh Operators, creating and managing a ServiceMeshControlPlane resource to deploy the control plane, and creating a ServiceMeshMemberRoll resource to specify the namespaces associated with the Service Mesh.

Mixer’s policy enforcement is disabled by default. You must enable it to run policy tasks. See Update Mixer policy enforcement for instructions on enabling Mixer policy enforcement.

Multi-tenant control plane installations are the default configuration.

The Service Mesh documentation uses istio-system as the example project, but you can deploy the service mesh to any project.
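
As an illustration of the two resources named above, the following sketch pairs a minimal ServiceMeshControlPlane with a ServiceMeshMemberRoll in the istio-system example project. The control plane name, the enabled add-ons, the Jaeger template, and the member project bookinfo are assumptions chosen for the example, not required values.

```yaml
apiVersion: maistra.io/v1
kind: ServiceMeshControlPlane
metadata:
  name: basic-install             # hypothetical name
  namespace: istio-system
spec:
  istio:
    kiali:
      enabled: true
    grafana:
      enabled: true
    tracing:
      enabled: true
      jaeger:
        template: all-in-one      # in-memory storage, suited to evaluation only
---
apiVersion: maistra.io/v1
kind: ServiceMeshMemberRoll
metadata:
  name: default                   # the member roll is expected to be named default
  namespace: istio-system
spec:
  members:
  - bookinfo                      # hypothetical project to add to the mesh
```

Both resources can only be created after the Operators described in this section are installed.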

2.5.1. Prerequisites

- Follow the Preparing to install Red Hat OpenShift Service Mesh process.

- An account with the cluster-admin role.

The Service Mesh installation process uses the OperatorHub to install the ServiceMeshControlPlane custom resource definition within the openshift-operators project. The Red Hat OpenShift Service Mesh Operator defines and monitors the ServiceMeshControlPlane resources related to the deployment, update, and deletion of the control plane.

Starting with Red Hat OpenShift Service Mesh 1.1.18.2, you must install the OpenShift Elasticsearch Operator, the Jaeger Operator, and the Kiali Operator before the Red Hat OpenShift Service Mesh Operator can install the control plane.

2.5.2. Installing the OpenShift Elasticsearch Operator

The default Red Hat OpenShift distributed tracing platform deployment uses in-memory storage because it is designed to be installed quickly for those evaluating Red Hat OpenShift distributed tracing, giving demonstrations, or using Red Hat OpenShift distributed tracing platform in a test environment. If you plan to use Red Hat OpenShift distributed tracing platform in production, you must install and configure a persistent storage option, in this case, Elasticsearch.

Prerequisites

- You have access to the OpenShift Container Platform web console.

- You have access to the cluster as a user with the cluster-admin role. If you use Red Hat OpenShift Dedicated, you must have an account with the dedicated-admin role.

Do not install Community versions of the Operators. Community Operators are not supported.

If you have already installed the OpenShift Elasticsearch Operator as part of OpenShift Logging, you do not need to install the OpenShift Elasticsearch Operator again. The Red Hat OpenShift distributed tracing platform Operator creates the Elasticsearch instance using the installed OpenShift Elasticsearch Operator.
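
If you prefer a declarative approach, the web console steps in the procedure that follows can be approximated with Operator Lifecycle Manager resources. This is a sketch under assumptions: the package name elasticsearch-operator and the redhat-operators catalog source are not stated in this section and should be checked against the OperatorHub entry on your cluster. The stable channel and the openshift-operators-redhat project match the procedure.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-operators-redhat
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: openshift-operators-redhat
  namespace: openshift-operators-redhat
spec: {}                            # no target namespaces: available to all projects
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: elasticsearch-operator
  namespace: openshift-operators-redhat
spec:
  channel: stable                   # the Update Channel selected in the procedure
  installPlanApproval: Automatic
  name: elasticsearch-operator      # assumed package name
  source: redhat-operators          # assumed catalog source
  sourceNamespace: openshift-marketplace
```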

Procedure

- Log in to the OpenShift Container Platform web console as a user with the cluster-admin role. If you use Red Hat OpenShift Dedicated, you must have an account with the dedicated-admin role.

- Navigate to Operators → OperatorHub.

- Type Elasticsearch into the filter box to locate the OpenShift Elasticsearch Operator.

- Click the OpenShift Elasticsearch Operator provided by Red Hat to display information about the Operator.

- Click Install.

- On the Install Operator page, select the stable Update Channel. This automatically updates your Operator as new versions are released.

- Accept the default All namespaces on the cluster (default). This installs the Operator in the default openshift-operators-redhat project and makes the Operator available to all projects in the cluster.

  Note: The Elasticsearch installation requires the openshift-operators-redhat namespace for the OpenShift Elasticsearch Operator. The other Red Hat OpenShift distributed tracing Operators are installed in the