This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.Questo contenuto non è disponibile nella lingua selezionata.

Network Observability

The Network Observability Operator

Abstract

Chapter 1. Network Observability Operator release notes

The Network Observability Operator enables administrators to observe and analyze network traffic flows for OpenShift Container Platform clusters.

These release notes track the development of the Network Observability Operator in the OpenShift Container Platform.

For an overview of the Network Observability Operator, see About Network Observability Operator.

1.1. Network Observability Operator 1.4.2

The following advisory is available for the Network Observability Operator 1.4.2:

1.1.1. CVEs

1.2. Network Observability Operator 1.4.1

The following advisory is available for the Network Observability Operator 1.4.1:

1.2.1. CVEs

1.2.2. Bug fixes

- In 1.4, there was a known issue when sending network flow data to Kafka. The Kafka message key was ignored, causing an error with connection tracking. Now the key is used for partitioning, so each flow from the same connection is sent to the same processor. (NETOBSERV-926)

-

In 1.4, the

Innerflow direction was introduced to account for flows between pods running on the same node. Flows with theInnerdirection were not taken into account in the generated Prometheus metrics derived from flows, resulting in under-evaluated bytes and packets rates. Now, derived metrics are including flows with theInnerdirection, providing correct bytes and packets rates. (NETOBSERV-1344)

1.3. Network Observability Operator 1.4.0

The following advisory is available for the Network Observability Operator 1.4.0:

1.3.1. Channel removal

You must switch your channel from v1.0.x to stable to receive the latest Operator updates. The v1.0.x channel is now removed.

1.3.2. New features and enhancements

1.3.2.1. Notable enhancements

The 1.4 release of the Network Observability Operator adds improvements and new capabilities to the OpenShift Container Platform web console plugin and the Operator configuration.

Web console enhancements:

- In the Query Options, the Duplicate flows checkbox is added to choose whether or not to show duplicated flows.

-

You can now filter source and destination traffic with

One-way,

One-way,

Back-and-forth, and Swap filters.

Back-and-forth, and Swap filters.

The Network Observability metrics dashboards in Observe → Dashboards → NetObserv and NetObserv / Health are modified as follows:

- The NetObserv dashboard shows top bytes, packets sent, packets received per nodes, namespaces, and workloads. Flow graphs are removed from this dashboard.

- The NetObserv / Health dashboard shows flows overhead as well as top flow rates per nodes, namespaces, and workloads.

- Infrastructure and Application metrics are shown in a split-view for namespaces and workloads.

For more information, see Network Observability metrics and Quick filters.

Configuration enhancements:

- You now have the option to specify different namespaces for any configured ConfigMap or Secret reference, such as in certificates configuration.

-

The

spec.processor.clusterNameparameter is added so that the name of the cluster appears in the flows data. This is useful in a multi-cluster context. When using OpenShift Container Platform, leave empty to make it automatically determined.

For more information, see Flow Collector sample resource and Flow Collector API Reference.

1.3.2.2. Network Observability without Loki

The Network Observability Operator is now functional and usable without Loki. If Loki is not installed, it can only export flows to KAFKA or IPFIX format and provide metrics in the Network Observability metrics dashboards. For more information, see Network Observability without Loki.

1.3.2.3. DNS tracking

In 1.4, the Network Observability Operator makes use of eBPF tracepoint hooks to enable DNS tracking. You can monitor your network, conduct security analysis, and troubleshoot DNS issues in the Network Traffic and Overview pages in the web console.

For more information, see Configuring DNS tracking and Working with DNS tracking.

1.3.2.4. SR-IOV support

You can now collect traffic from a cluster with Single Root I/O Virtualization (SR-IOV) device. For more information, see Configuring the monitoring of SR-IOV interface traffic.

1.3.2.5. IPFIX exporter support

You can now export eBPF-enriched network flows to the IPFIX collector. For more information, see Export enriched network flow data.

1.3.2.6. s390x architecture support

Network Observability Operator can now run on s390x architecture. Previously it ran on amd64, ppc64le, or arm64.

1.3.3. Bug fixes

- Previously, the Prometheus metrics exported by Network Observability were computed out of potentially duplicated network flows. In the related dashboards, from Observe → Dashboards, this could result in potentially doubled rates. Note that dashboards from the Network Traffic view were not affected. Now, network flows are filtered to eliminate duplicates prior to metrics calculation, which results in correct traffic rates displayed in the dashboards. (NETOBSERV-1131)

-

Previously, the Network Observability Operator agents were not able to capture traffic on network interfaces when configured with Multus or SR-IOV, non-default network namespaces. Now, all available network namespaces are recognized and used for capturing flows, allowing capturing traffic for SR-IOV. There are configurations needed for the

FlowCollectorandSRIOVnetworkcustom resource to collect traffic. (NETOBSERV-1283) -

Previously, in the Network Observability Operator details from Operators → Installed Operators, the

FlowCollectorStatus field might have reported incorrect information about the state of the deployment. The status field now shows the proper conditions with improved messages. The history of events is kept, ordered by event date. (NETOBSERV-1224) -

Previously, during spikes of network traffic load, certain eBPF pods were OOM-killed and went into a

CrashLoopBackOffstate. Now, theeBPFagent memory footprint is improved, so pods are not OOM-killed and entering aCrashLoopBackOffstate. (NETOBSERV-975) -

Previously when

processor.metrics.tlswas set toPROVIDEDtheinsecureSkipVerifyoption value was forced to betrue. Now you can setinsecureSkipVerifytotrueorfalse, and provide a CA certificate if needed. (NETOBSERV-1087)

1.3.4. Known issues

-

Since the 1.2.0 release of the Network Observability Operator, using Loki Operator 5.6, a Loki certificate change periodically affects the

flowlogs-pipelinepods and results in dropped flows rather than flows written to Loki. The problem self-corrects after some time, but it still causes temporary flow data loss during the Loki certificate change. This issue has only been observed in large-scale environments of 120 nodes or greater. (NETOBSERV-980) -

Currently, when

spec.agent.ebpf.featuresincludes DNSTracking, larger DNS packets require theeBPFagent to look for DNS header outside of the 1st socket buffer (SKB) segment. A neweBPFagent helper function needs to be implemented to support it. Currently, there is no workaround for this issue. (NETOBSERV-1304) -

Currently, when

spec.agent.ebpf.featuresincludes DNSTracking, DNS over TCP packets requires theeBPFagent to look for DNS header outside of the 1st SKB segment. A neweBPFagent helper function needs to be implemented to support it. Currently, there is no workaround for this issue. (NETOBSERV-1245) -

Currently, when using a

KAFKAdeployment model, if conversation tracking is configured, conversation events might be duplicated across Kafka consumers, resulting in inconsistent tracking of conversations, and incorrect volumetric data. For that reason, it is not recommended to configure conversation tracking whendeploymentModelis set toKAFKA. (NETOBSERV-926) -

Currently, when the

processor.metrics.server.tls.typeis configured to use aPROVIDEDcertificate, the operator enters an unsteady state that might affect its performance and resource consumption. It is recommended to not use aPROVIDEDcertificate until this issue is resolved, and instead using an auto-generated certificate, settingprocessor.metrics.server.tls.typetoAUTO. (NETOBSERV-1293

1.4. Network Observability Operator 1.3.0

The following advisory is available for the Network Observability Operator 1.3.0:

1.4.1. Channel deprecation

You must switch your channel from v1.0.x to stable to receive future Operator updates. The v1.0.x channel is deprecated and planned for removal in the next release.

1.4.2. New features and enhancements

1.4.2.1. Multi-tenancy in Network Observability

- System administrators can allow and restrict individual user access, or group access, to the flows stored in Loki. For more information, see Multi-tenancy in Network Observability.

1.4.2.2. Flow-based metrics dashboard

- This release adds a new dashboard, which provides an overview of the network flows in your OpenShift Container Platform cluster. For more information, see Network Observability metrics.

1.4.2.3. Troubleshooting with the must-gather tool

- Information about the Network Observability Operator can now be included in the must-gather data for troubleshooting. For more information, see Network Observability must-gather.

1.4.2.4. Multiple architectures now supported

-

Network Observability Operator can now run on an

amd64,ppc64le, orarm64architectures. Previously, it only ran onamd64.

1.4.3. Deprecated features

1.4.3.1. Deprecated configuration parameter setting

The release of Network Observability Operator 1.3 deprecates the spec.Loki.authToken HOST setting. When using the Loki Operator, you must now only use the FORWARD setting.

1.4.4. Bug fixes

-

Previously, when the Operator was installed from the CLI, the

RoleandRoleBindingthat are necessary for the Cluster Monitoring Operator to read the metrics were not installed as expected. The issue did not occur when the operator was installed from the web console. Now, either way of installing the Operator installs the requiredRoleandRoleBinding. (NETOBSERV-1003) -

Since version 1.2, the Network Observability Operator can raise alerts when a problem occurs with the flows collection. Previously, due to a bug, the related configuration to disable alerts,

spec.processor.metrics.disableAlertswas not working as expected and sometimes ineffectual. Now, this configuration is fixed so that it is possible to disable the alerts. (NETOBSERV-976) -

Previously, when Network Observability was configured with

spec.loki.authTokenset toDISABLED, only akubeadmincluster administrator was able to view network flows. Other types of cluster administrators received authorization failure. Now, any cluster administrator is able to view network flows. (NETOBSERV-972) -

Previously, a bug prevented users from setting

spec.consolePlugin.portNaming.enabletofalse. Now, this setting can be set tofalseto disable port-to-service name translation. (NETOBSERV-971) - Previously, the metrics exposed by the console plugin were not collected by the Cluster Monitoring Operator (Prometheus), due to an incorrect configuration. Now the configuration has been fixed so that the console plugin metrics are correctly collected and accessible from the OpenShift Container Platform web console. (NETOBSERV-765)

-

Previously, when

processor.metrics.tlswas set toAUTOin theFlowCollector, theflowlogs-pipeline servicemonitordid not adapt the appropriate TLS scheme, and metrics were not visible in the web console. Now the issue is fixed for AUTO mode. (NETOBSERV-1070) -

Previously, certificate configuration, such as used for Kafka and Loki, did not allow specifying a namespace field, implying that the certificates had to be in the same namespace where Network Observability is deployed. Moreover, when using Kafka with TLS/mTLS, the user had to manually copy the certificate(s) to the privileged namespace where the

eBPFagent pods are deployed and manually manage certificate updates, such as in the case of certificate rotation. Now, Network Observability setup is simplified by adding a namespace field for certificates in theFlowCollectorresource. As a result, users can now install Loki or Kafka in different namespaces without needing to manually copy their certificates in the Network Observability namespace. The original certificates are watched so that the copies are automatically updated when needed. (NETOBSERV-773) - Previously, the SCTP, ICMPv4 and ICMPv6 protocols were not covered by the Network Observability agents, resulting in a less comprehensive network flows coverage. These protocols are now recognized to improve the flows coverage. (NETOBSERV-934)

1.4.5. Known issues

-

When

processor.metrics.tlsis set toPROVIDEDin theFlowCollector, theflowlogs-pipelineservicemonitoris not adapted to the TLS scheme. (NETOBSERV-1087) -

Since the 1.2.0 release of the Network Observability Operator, using Loki Operator 5.6, a Loki certificate change periodically affects the

flowlogs-pipelinepods and results in dropped flows rather than flows written to Loki. The problem self-corrects after some time, but it still causes temporary flow data loss during the Loki certificate change. This issue has only been observed in large-scale environments of 120 nodes or greater.(NETOBSERV-980)

1.5. Network Observability Operator 1.2.0

The following advisory is available for the Network Observability Operator 1.2.0:

1.5.1. Preparing for the next update

The subscription of an installed Operator specifies an update channel that tracks and receives updates for the Operator. Until the 1.2 release of the Network Observability Operator, the only channel available was v1.0.x. The 1.2 release of the Network Observability Operator introduces the stable update channel for tracking and receiving updates. You must switch your channel from v1.0.x to stable to receive future Operator updates. The v1.0.x channel is deprecated and planned for removal in a following release.

1.5.2. New features and enhancements

1.5.2.1. Histogram in Traffic Flows view

- You can now choose to show a histogram bar chart of flows over time. The histogram enables you to visualize the history of flows without hitting the Loki query limit. For more information, see Using the histogram.

1.5.2.2. Conversation tracking

- You can now query flows by Log Type, which enables grouping network flows that are part of the same conversation. For more information, see Working with conversations.

1.5.2.3. Network Observability health alerts

-

The Network Observability Operator now creates automatic alerts if the

flowlogs-pipelineis dropping flows because of errors at the write stage or if the Loki ingestion rate limit has been reached. For more information, see Viewing health information.

1.5.3. Bug fixes

-

Previously, after changing the

namespacevalue in the FlowCollector spec,eBPFagent pods running in the previous namespace were not appropriately deleted. Now, the pods running in the previous namespace are appropriately deleted. (NETOBSERV-774) -

Previously, after changing the

caCert.namevalue in the FlowCollector spec (such as in Loki section), FlowLogs-Pipeline pods and Console plug-in pods were not restarted, therefore they were unaware of the configuration change. Now, the pods are restarted, so they get the configuration change. (NETOBSERV-772) - Previously, network flows between pods running on different nodes were sometimes not correctly identified as being duplicates because they are captured by different network interfaces. This resulted in over-estimated metrics displayed in the console plug-in. Now, flows are correctly identified as duplicates, and the console plug-in displays accurate metrics. (NETOBSERV-755)

- The "reporter" option in the console plug-in is used to filter flows based on the observation point of either source node or destination node. Previously, this option mixed the flows regardless of the node observation point. This was due to network flows being incorrectly reported as Ingress or Egress at the node level. Now, the network flow direction reporting is correct. The "reporter" option filters for source observation point, or destination observation point, as expected. (NETOBSERV-696)

- Previously, for agents configured to send flows directly to the processor as gRPC+protobuf requests, the submitted payload could be too large and is rejected by the processors' GRPC server. This occurred under very-high-load scenarios and with only some configurations of the agent. The agent logged an error message, such as: grpc: received message larger than max. As a consequence, there was information loss about those flows. Now, the gRPC payload is split into several messages when the size exceeds a threshold. As a result, the server maintains connectivity. (NETOBSERV-617)

1.5.4. Known issue

-

In the 1.2.0 release of the Network Observability Operator, using Loki Operator 5.6, a Loki certificate transition periodically affects the

flowlogs-pipelinepods and results in dropped flows rather than flows written to Loki. The problem self-corrects after some time, but it still causes temporary flow data loss during the Loki certificate transition. (NETOBSERV-980)

1.5.5. Notable technical changes

-

Previously, you could install the Network Observability Operator using a custom namespace. This release introduces the

conversion webhookwhich changes theClusterServiceVersion. Because of this change, all the available namespaces are no longer listed. Additionally, to enable Operator metrics collection, namespaces that are shared with other Operators, like theopenshift-operatorsnamespace, cannot be used. Now, the Operator must be installed in theopenshift-netobserv-operatornamespace. You cannot automatically upgrade to the new Operator version if you previously installed the Network Observability Operator using a custom namespace. If you previously installed the Operator using a custom namespace, you must delete the instance of the Operator that was installed and re-install your operator in theopenshift-netobserv-operatornamespace. It is important to note that custom namespaces, such as the commonly usednetobservnamespace, are still possible for theFlowCollector, Loki, Kafka, and other plug-ins. (NETOBSERV-907)(NETOBSERV-956)

1.6. Network Observability Operator 1.1.0

The following advisory is available for the Network Observability Operator 1.1.0:

The Network Observability Operator is now stable and the release channel is upgraded to v1.1.0.

1.6.1. Bug fix

-

Previously, unless the Loki

authTokenconfiguration was set toFORWARDmode, authentication was no longer enforced, allowing any user who could connect to the OpenShift Container Platform console in an OpenShift Container Platform cluster to retrieve flows without authentication. Now, regardless of the LokiauthTokenmode, only cluster administrators can retrieve flows. (BZ#2169468)

Chapter 2. About Network Observability

Red Hat offers cluster administrators the Network Observability Operator to observe the network traffic for OpenShift Container Platform clusters. The Network Observability Operator uses the eBPF technology to create network flows. The network flows are then enriched with OpenShift Container Platform information and stored in Loki. You can view and analyze the stored network flows information in the OpenShift Container Platform console for further insight and troubleshooting.

2.1. Optional dependencies of the Network Observability Operator

- Loki Operator: Loki is the backend that is used to store all collected flows. It is recommended to install Loki to use with the Network Observability Operator. You can choose to use Network Observability without Loki, but there are some considerations for doing this, as described in the linked section. If you choose to install Loki, it is recommended to use the Loki Operator, as it is supported by Red Hat.

- Grafana Operator: You can install Grafana for creating custom dashboards and querying capabilities, by using an open source product, such as the Grafana Operator. Red Hat does not support the Grafana Operator.

- AMQ Streams Operator: Kafka provides scalability, resiliency and high availability in the OpenShift Container Platform cluster for large scale deployments. If you choose to use Kafka, it is recommended to use the AMQ Streams Operator, because it is supported by Red Hat.

2.2. Network Observability Operator

The Network Observability Operator provides the Flow Collector API custom resource definition. A Flow Collector instance is created during installation and enables configuration of network flow collection. The Flow Collector instance deploys pods and services that form a monitoring pipeline where network flows are then collected and enriched with the Kubernetes metadata before storing in Loki. The eBPF agent, which is deployed as a daemonset object, creates the network flows.

2.3. OpenShift Container Platform console integration

OpenShift Container Platform console integration offers overview, topology view and traffic flow tables.

2.3.1. Network Observability metrics dashboards

On the Overview tab in the OpenShift Container Platform console, you can view the overall aggregated metrics of the network traffic flow on the cluster. You can choose to display the information by node, namespace, owner, pod, and service. Filters and display options can further refine the metrics.

In Observe → Dashboards, the Netobserv dashboard provides a quick overview of the network flows in your OpenShift Container Platform cluster. You can view distillations of the network traffic metrics in the following categories:

- Top byte rates received per source and destination nodes

- Top byte rates received per source and destination namespaces

- Top byte rates received per source and destination workloads

Infrastructure and Application metrics are shown in a split-view for namespace and workloads. You can configure the FlowCollector spec.processor.metrics to add or remove metrics by changing the ignoreTags list. For more information about available tags, see the Flow Collector API Reference

Also in Observe → Dashboards, the Netobserv/Health dashboard provides metrics about the health of the Operator in the following categories.

- Flows

- Flows Overhead

- Top flow rates per source and destination nodes

- Top flow rates per source and destination namespaces

- Top flow rates per source and destination workloads

- Agents

- Processor

- Operator

Infrastructure and Application metrics are shown in a split-view for namespace and workloads.

2.3.2. Network Observability topology views

The OpenShift Container Platform console offers the Topology tab which displays a graphical representation of the network flows and the amount of traffic. The topology view represents traffic between the OpenShift Container Platform components as a network graph. You can refine the graph by using the filters and display options. You can access the information for node, namespace, owner, pod, and service.

2.3.3. Traffic flow tables

The traffic flow table view provides a view for raw flows, non aggregated filtering options, and configurable columns. The OpenShift Container Platform console offers the Traffic flows tab which displays the data of the network flows and the amount of traffic.

Chapter 3. Installing the Network Observability Operator

Installing Loki is a recommended prerequisite for using the Network Observability Operator. You can choose to use Network Observability without Loki, but there are some considerations for doing this, described in the previously linked section.

The Loki Operator integrates a gateway that implements multi-tenancy and authentication with Loki for data flow storage. The LokiStack resource manages Loki, which is a scalable, highly-available, multi-tenant log aggregation system, and a web proxy with OpenShift Container Platform authentication. The LokiStack proxy uses OpenShift Container Platform authentication to enforce multi-tenancy and facilitate the saving and indexing of data in Loki log stores.

The Loki Operator can also be used for configuring the LokiStack log store. The Network Observability Operator requires a dedicated LokiStack separate from the logging.

3.1. Network Observability without Loki

You can use Network Observability without Loki by not performing the Loki installation steps and skipping directly to "Installing the Network Observability Operator". If you only want to export flows to a Kafka consumer or IPFIX collector, or you only need dashboard metrics, then you do not need to install Loki or provide storage for Loki. Without Loki, there won’t be a Network Traffic panel under Observe, which means there is no overview charts, flow table, or topology. The following table compares available features with and without Loki:

| With Loki | Without Loki | |

|---|---|---|

| Exporters |

|

|

| Flow-based metrics and dashboards |

|

|

| Traffic Flow Overview, Table and Topology views |

|

|

| Quick Filters |

|

|

| OpenShift Container Platform console Network Traffic tab integration |

|

|

3.2. Installing the Loki Operator

The Loki Operator versions 5.7+ are the supported Loki Operator versions for Network Observabilty; these versions provide the ability to create a LokiStack instance using the openshift-network tenant configuration mode and provide fully-automatic, in-cluster authentication and authorization support for Network Observability. There are several ways you can install Loki. One way is by using the OpenShift Container Platform web console Operator Hub.

Prerequisites

- Supported Log Store (AWS S3, Google Cloud Storage, Azure, Swift, Minio, OpenShift Data Foundation)

- OpenShift Container Platform 4.10+

- Linux Kernel 4.18+

Procedure

- In the OpenShift Container Platform web console, click Operators → OperatorHub.

- Choose Loki Operator from the list of available Operators, and click Install.

- Under Installation Mode, select All namespaces on the cluster.

Verification

- Verify that you installed the Loki Operator. Visit the Operators → Installed Operators page and look for Loki Operator.

- Verify that Loki Operator is listed with Status as Succeeded in all the projects.

To uninstall Loki, refer to the uninstallation process that corresponds with the method you used to install Loki. You might have remaining ClusterRoles and ClusterRoleBindings, data stored in object store, and persistent volume that must be removed.

3.2.1. Creating a secret for Loki storage

The Loki Operator supports a few log storage options, such as AWS S3, Google Cloud Storage, Azure, Swift, Minio, OpenShift Data Foundation. The following example shows how to create a secret for AWS S3 storage. The secret created in this example, loki-s3, is referenced in "Creating a LokiStack resource". You can create this secret in the web console or CLI.

-

Using the web console, navigate to the Project → All Projects dropdown and select Create Project. Name the project

netobservand click Create. Navigate to the Import icon, +, in the top right corner. Paste your YAML file into the editor.

The following shows an example secret YAML file for S3 storage:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- The installation examples in this documentation use the same namespace,

netobserv, across all components. You can optionally use a different namespace for the different components

Verification

- Once you create the secret, you should see it listed under Workloads → Secrets in the web console.

3.2.2. Creating a LokiStack custom resource

You can deploy a LokiStack using the web console or CLI to create a namespace, or new project.

Querying application logs for multiple namespaces as a cluster-admin user, where the sum total of characters of all of the namespaces in the cluster is greater than 5120, results in the error Parse error: input size too long (XXXX > 5120). For better control over access to logs in LokiStack, make the cluster-admin user a member of the cluster-admin group. If the cluster-admin group does not exist, create it and add the desired users to it.

For more information about creating a cluster-admin group, see the "Additional resources" section.

Procedure

- Navigate to Operators → Installed Operators, viewing All projects from the Project dropdown.

- Look for Loki Operator. In the details, under Provided APIs, select LokiStack.

- Click Create LokiStack.

Ensure the following fields are specified in either Form View or YAML view:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- The installation examples in this documentation use the same namespace,

netobserv, across all components. You can optionally use a different namespace. - 2

- Use a storage class name that is available on the cluster for

ReadWriteOnceaccess mode. You can useoc get storageclassesto see what is available on your cluster.

ImportantYou must not reuse the same

LokiStackthat is used for cluster logging.- Click Create.

3.2.2.1. Deployment Sizing

Sizing for Loki follows the format of N<x>.<size> where the value <N> is the number of instances and <size> specifies performance capabilities.

1x.extra-small is for demo purposes only, and is not supported.

| 1x.extra-small | 1x.small | 1x.medium | |

|---|---|---|---|

| Data transfer | Demo use only. | 500GB/day | 2TB/day |

| Queries per second (QPS) | Demo use only. | 25-50 QPS at 200ms | 25-75 QPS at 200ms |

| Replication factor | None | 2 | 3 |

| Total CPU requests | 5 vCPUs | 36 vCPUs | 54 vCPUs |

| Total Memory requests | 7.5Gi | 63Gi | 139Gi |

| Total Disk requests | 150Gi | 300Gi | 450Gi |

3.2.3. LokiStack ingestion limits and health alerts

The LokiStack instance comes with default settings according to the configured size. It is possible to override some of these settings, such as the ingestion and query limits. You might want to update them if you get Loki errors showing up in the Console plugin, or in flowlogs-pipeline logs. An automatic alert in the web console notifies you when these limits are reached.

Here is an example of configured limits:

For more information about these settings, see the LokiStack API reference.

3.2.4. Configuring authorization and multi-tenancy

Define ClusterRole and ClusterRoleBinding. The netobserv-reader ClusterRole enables multi-tenancy and allows individual user access, or group access, to the flows stored in Loki. You can create a YAML file to define these roles.

Procedure

- Using the web console, click the Import icon, +.

- Drop your YAML file into the editor and click Create:

Example ClusterRole reader yaml

- 1

- This role can be used for multi-tenancy.

Example ClusterRole writer yaml

Example ClusterRoleBinding yaml

- 1

- The

flowlogs-pipelinewrites to Loki. If you are using Kafka, this value isflowlogs-pipeline-transformer.

3.2.5. Enabling multi-tenancy in Network Observability

Multi-tenancy in the Network Observability Operator allows and restricts individual user access, or group access, to the flows stored in Loki. Access is enabled for project admins. Project admins who have limited access to some namespaces can access flows for only those namespaces.

Prerequisite

- You have installed Loki Operator version 5.7

-

The

FlowCollectorspec.loki.authTokenconfiguration must be set toFORWARD. - You must be logged in as a project administrator

Procedure

Authorize reading permission to

user1by running the following command:oc adm policy add-cluster-role-to-user netobserv-reader user1

$ oc adm policy add-cluster-role-to-user netobserv-reader user1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Now, the data is restricted to only allowed user namespaces. For example, a user that has access to a single namespace can see all the flows internal to this namespace, as well as flows going from and to this namespace. Project admins have access to the Administrator perspective in the OpenShift Container Platform console to access the Network Flows Traffic page.

3.3. Installing the Network Observability Operator

You can install the Network Observability Operator using the OpenShift Container Platform web console Operator Hub. When you install the Operator, it provides the FlowCollector custom resource definition (CRD). You can set specifications in the web console when you create the FlowCollector.

The actual memory consumption of the Operator depends on your cluster size and the number of resources deployed. Memory consumption might need to be adjusted accordingly. For more information refer to "Network Observability controller manager pod runs out of memory" in the "Important Flow Collector configuration considerations" section.

Prerequisites

- If you choose to use Loki, install the Loki Operator version 5.7+.

-

You must have

cluster-adminprivileges. -

One of the following supported architectures is required:

amd64,ppc64le,arm64, ors390x. - Any CPU supported by Red Hat Enterprise Linux (RHEL) 9.

- Must be configured with OVN-Kubernetes or OpenShift SDN as the main network plugin, and optionally using secondary interfaces, such as Multus and SR-IOV.

This documentation assumes that your LokiStack instance name is loki. Using a different name requires additional configuration.

Procedure

- In the OpenShift Container Platform web console, click Operators → OperatorHub.

- Choose Network Observability Operator from the list of available Operators in the OperatorHub, and click Install.

-

Select the checkbox

Enable Operator recommended cluster monitoring on this Namespace. - Navigate to Operators → Installed Operators. Under Provided APIs for Network Observability, select the Flow Collector link.

Navigate to the Flow Collector tab, and click Create FlowCollector. Make the following selections in the form view:

-

spec.agent.ebpf.Sampling: Specify a sampling size for flows. Lower sampling sizes will have higher impact on resource utilization. For more information, see the "FlowCollector API reference",

spec.agent.ebpf. If you are using Loki, set the following specifications:

- spec.loki.enable: Select the check box to enable storing flows in Loki.

-

spec.loki.url: Since authentication is specified separately, this URL needs to be updated to

https://loki-gateway-http.netobserv.svc:8080/api/logs/v1/network. The first part of the URL, "loki", must match the name of yourLokiStack. -

spec.loki.authToken: Select the

FORWARDvalue. -

spec.loki.statusUrl: Set this to

https://loki-query-frontend-http.netobserv.svc:3100/. The first part of the URL, "loki", must match the name of yourLokiStack. - spec.loki.tls.enable: Select the checkbox to enable TLS.

spec.loki.statusTls: The

enablevalue is false by default.For the first part of the certificate reference names:

loki-gateway-ca-bundle,loki-ca-bundle, andloki-query-frontend-http,loki, must match the name of yourLokiStack.

-

Optional: If you are in a large-scale environment, consider configuring the

FlowCollectorwith Kafka for forwarding data in a more resilient, scalable way. See "Configuring the Flow Collector resource with Kafka storage" in the "Important Flow Collector configuration considerations" section. -

Optional: Configure other optional settings before the next step of creating the

FlowCollector. For example, if you choose not to use Loki, then you can configure exporting flows to Kafka or IPFIX. See "Export enriched network flow data to Kafka and IPFIX" and more in the "Important Flow Collector configuration considerations" section. - Click Create.

-

spec.agent.ebpf.Sampling: Specify a sampling size for flows. Lower sampling sizes will have higher impact on resource utilization. For more information, see the "FlowCollector API reference",

Verification

To confirm this was successful, when you navigate to Observe you should see Network Traffic listed in the options.

In the absence of Application Traffic within the OpenShift Container Platform cluster, default filters might show that there are "No results", which results in no visual flow. Beside the filter selections, select Clear all filters to see the flow.

If you installed Loki using the Loki Operator, it is advised not to use querierUrl, as it can break the console access to Loki. If you installed Loki using another type of Loki installation, this does not apply.

3.5. Installing Kafka (optional)

The Kafka Operator is supported for large scale environments. Kafka provides high-throughput and low-latency data feeds for forwarding network flow data in a more resilient, scalable way. You can install the Kafka Operator as Red Hat AMQ Streams from the Operator Hub, just as the Loki Operator and Network Observability Operator were installed. Refer to "Configuring the FlowCollector resource with Kafka" to configure Kafka as a storage option.

To uninstall Kafka, refer to the uninstallation process that corresponds with the method you used to install.

3.6. Uninstalling the Network Observability Operator

You can uninstall the Network Observability Operator using the OpenShift Container Platform web console Operator Hub, working in the Operators → Installed Operators area.

Procedure

Remove the

FlowCollectorcustom resource.- Click Flow Collector, which is next to the Network Observability Operator in the Provided APIs column.

-

Click the options menu

for the cluster and select Delete FlowCollector.

for the cluster and select Delete FlowCollector.

Uninstall the Network Observability Operator.

- Navigate back to the Operators → Installed Operators area.

-

Click the options menu

next to the Network Observability Operator and select Uninstall Operator.

next to the Network Observability Operator and select Uninstall Operator.

-

Home → Projects and select

openshift-netobserv-operator - Navigate to Actions and select Delete Project

Remove the

FlowCollectorcustom resource definition (CRD).- Navigate to Administration → CustomResourceDefinitions.

-

Look for FlowCollector and click the options menu

.

.

Select Delete CustomResourceDefinition.

ImportantThe Loki Operator and Kafka remain if they were installed and must be removed separately. Additionally, you might have remaining data stored in an object store, and a persistent volume that must be removed.

Chapter 4. Network Observability Operator in OpenShift Container Platform

Network Observability is an OpenShift operator that deploys a monitoring pipeline to collect and enrich network traffic flows that are produced by the Network Observability eBPF agent.

4.1. Viewing statuses

The Network Observability Operator provides the Flow Collector API. When a Flow Collector resource is created, it deploys pods and services to create and store network flows in the Loki log store, as well as to display dashboards, metrics, and flows in the OpenShift Container Platform web console.

Procedure

Run the following command to view the state of

FlowCollector:oc get flowcollector/cluster

$ oc get flowcollector/clusterCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME AGENT SAMPLING (EBPF) DEPLOYMENT MODEL STATUS cluster EBPF 50 DIRECT Ready

NAME AGENT SAMPLING (EBPF) DEPLOYMENT MODEL STATUS cluster EBPF 50 DIRECT ReadyCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the status of pods running in the

netobservnamespace by entering the following command:oc get pods -n netobserv

$ oc get pods -n netobservCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

flowlogs-pipeline pods collect flows, enriches the collected flows, then send flows to the Loki storage. netobserv-plugin pods create a visualization plugin for the OpenShift Container Platform Console.

Check the status of pods running in the namespace

netobserv-privilegedby entering the following command:oc get pods -n netobserv-privileged

$ oc get pods -n netobserv-privilegedCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

netobserv-ebpf-agent pods monitor network interfaces of the nodes to get flows and send them to flowlogs-pipeline pods.

If you are using the Loki Operator, check the status of pods running in the

openshift-operators-redhatnamespace by entering the following command:oc get pods -n openshift-operators-redhat

$ oc get pods -n openshift-operators-redhatCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

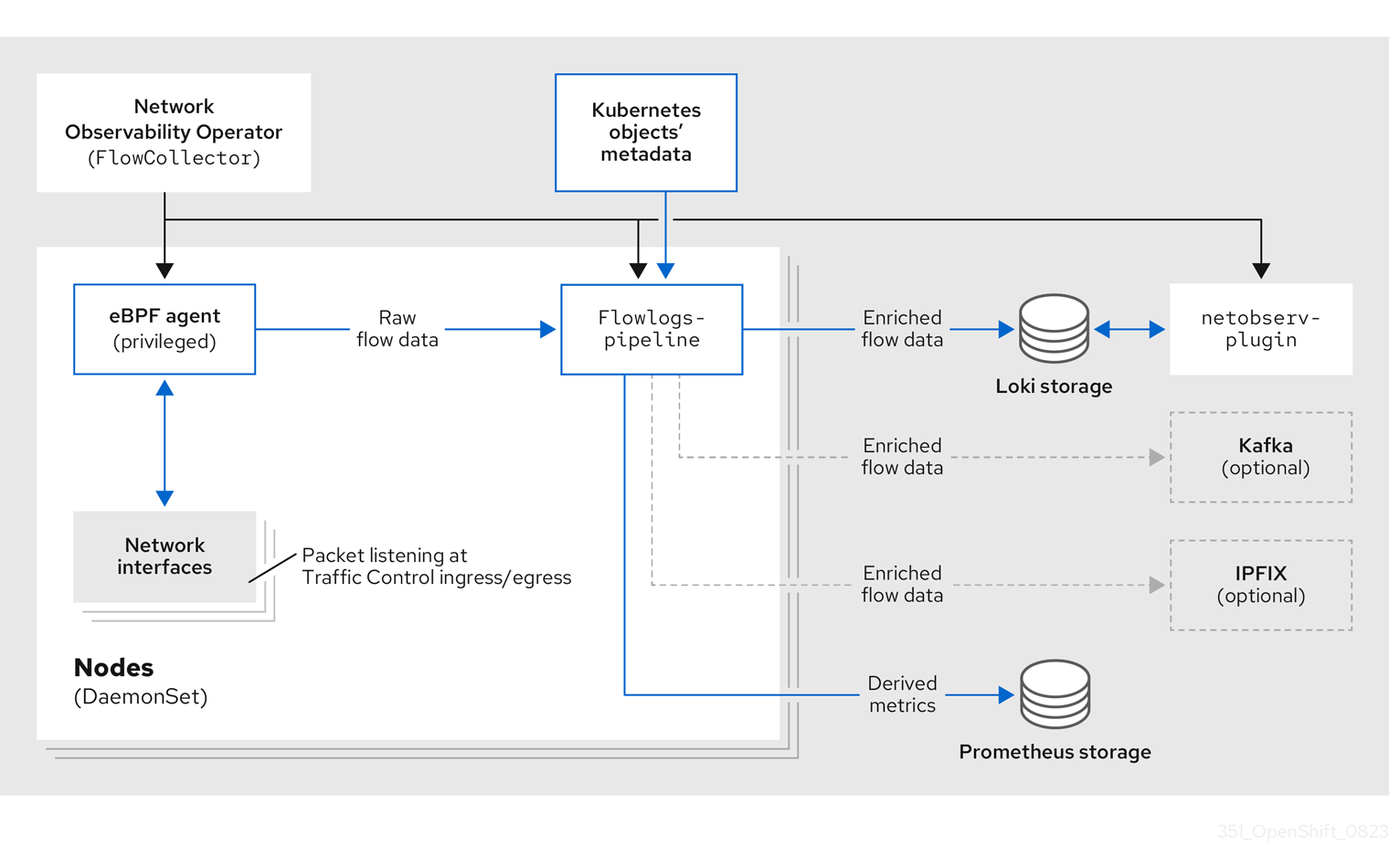

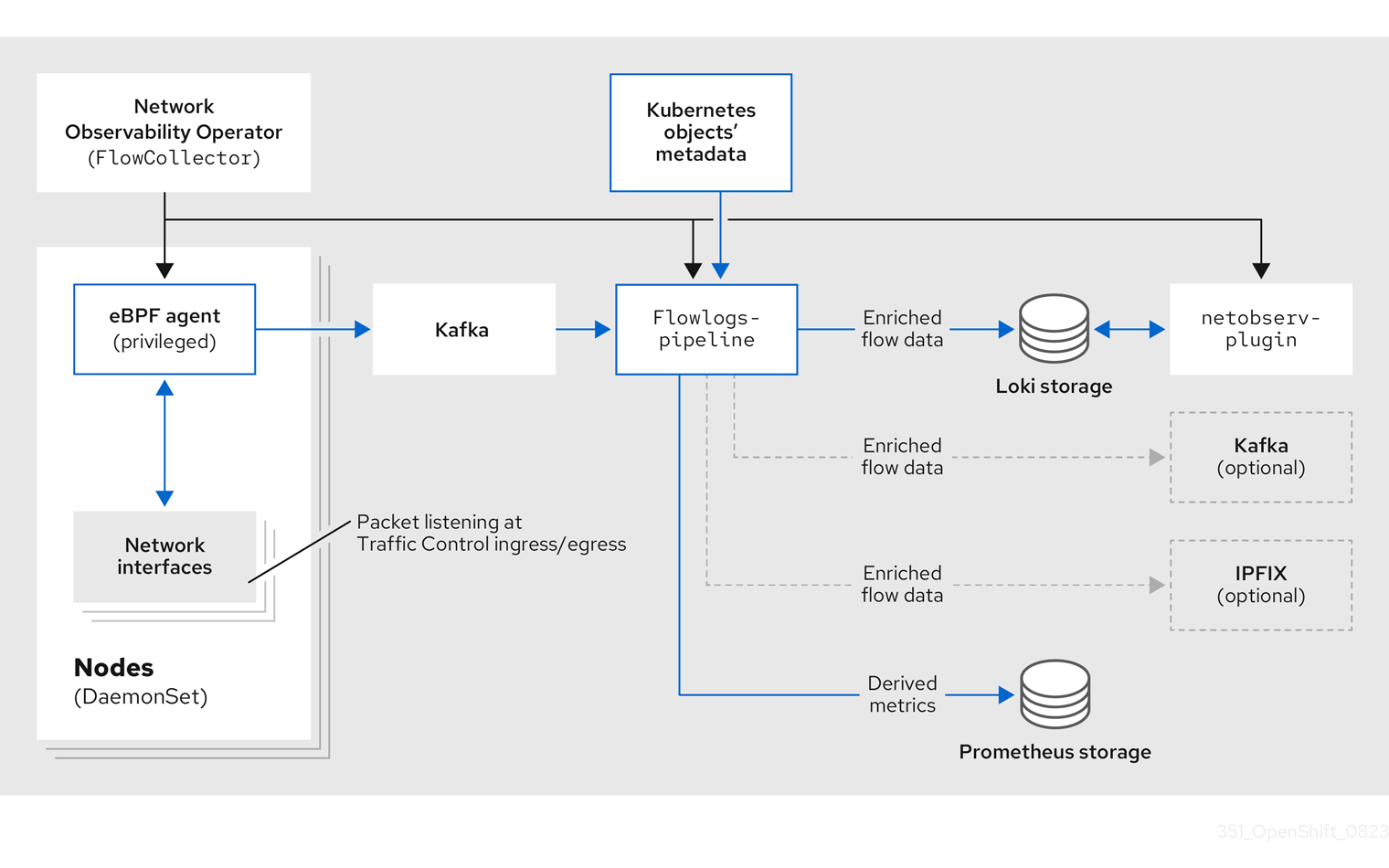

4.2. Network Observablity Operator architecture

The Network Observability Operator provides the FlowCollector API, which is instantiated at installation and configured to reconcile the eBPF agent, the flowlogs-pipeline, and the netobserv-plugin components. Only a single FlowCollector per cluster is supported.

The eBPF agent runs on each cluster node with some privileges to collect network flows. The flowlogs-pipeline receives the network flows data and enriches the data with Kubernetes identifiers. If you are using Loki, the flowlogs-pipeline sends flow logs data to Loki for storing and indexing. The netobserv-plugin, which is a dynamic OpenShift Container Platform web console plugin, queries Loki to fetch network flows data. Cluster-admins can view the data in the web console.

If you are using the Kafka option, the eBPF agent sends the network flow data to Kafka, and the flowlogs-pipeline reads from the Kafka topic before sending to Loki, as shown in the following diagram.

4.3. Viewing Network Observability Operator status and configuration

You can inspect the status and view the details of the FlowCollector using the oc describe command.

Procedure

Run the following command to view the status and configuration of the Network Observability Operator:

oc describe flowcollector/cluster

$ oc describe flowcollector/clusterCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Chapter 5. Configuring the Network Observability Operator

You can update the Flow Collector API resource to configure the Network Observability Operator and its managed components. The Flow Collector is explicitly created during installation. Since this resource operates cluster-wide, only a single FlowCollector is allowed, and it has to be named cluster.

5.1. View the FlowCollector resource

You can view and edit YAML directly in the OpenShift Container Platform web console.

Procedure

- In the web console, navigate to Operators → Installed Operators.

- Under the Provided APIs heading for the NetObserv Operator, select Flow Collector.

-

Select cluster then select the YAML tab. There, you can modify the

FlowCollectorresource to configure the Network Observability operator.

The following example shows a sample FlowCollector resource for OpenShift Container Platform Network Observability operator:

Sample FlowCollector resource

- 1

- The Agent specification,

spec.agent.type, must beEBPF. eBPF is the only OpenShift Container Platform supported option. - 2

- You can set the Sampling specification,

spec.agent.ebpf.sampling, to manage resources. Lower sampling values might consume a large amount of computational, memory and storage resources. You can mitigate this by specifying a sampling ratio value. A value of 100 means 1 flow every 100 is sampled. A value of 0 or 1 means all flows are captured. The lower the value, the increase in returned flows and the accuracy of derived metrics. By default, eBPF sampling is set to a value of 50, so 1 flow every 50 is sampled. Note that more sampled flows also means more storage needed. It is recommend to start with default values and refine empirically, to determine which setting your cluster can manage. - 3

- The optional specifications

spec.processor.logTypes,spec.processor.conversationHeartbeatInterval, andspec.processor.conversationEndTimeoutcan be set to enable conversation tracking. When enabled, conversation events are queryable in the web console. The values forspec.processor.logTypesare as follows:FLOWSCONVERSATIONS,ENDED_CONVERSATIONS, orALL. Storage requirements are highest forALLand lowest forENDED_CONVERSATIONS. - 4

- The Loki specification,

spec.loki, specifies the Loki client. The default values match the Loki install paths mentioned in the Installing the Loki Operator section. If you used another installation method for Loki, specify the appropriate client information for your install. - 5

- The original certificates are copied to the Network Observability instance namespace and watched for updates. When not provided, the namespace defaults to be the same as "spec.namespace". If you chose to install Loki in a different namespace, you must specify it in the

spec.loki.tls.caCert.namespacefield. Similarly, thespec.exporters.kafka.tls.caCert.namespacefield is available for Kafka installed in a different namespace. - 6

- The

spec.quickFiltersspecification defines filters that show up in the web console. TheApplicationfilter keys,src_namespaceanddst_namespace, are negated (!), so theApplicationfilter shows all traffic that does not originate from, or have a destination to, anyopenshift-ornetobservnamespaces. For more information, see Configuring quick filters below.

5.2. Configuring the Flow Collector resource with Kafka

You can configure the FlowCollector resource to use Kafka for high-throughput and low-latency data feeds. A Kafka instance needs to be running, and a Kafka topic dedicated to OpenShift Container Platform Network Observability must be created in that instance. For more information, see Kafka documentation with AMQ Streams.

Prerequisites

- Kafka is installed. Red Hat supports Kafka with AMQ Streams Operator.

Procedure

- In the web console, navigate to Operators → Installed Operators.

- Under the Provided APIs heading for the Network Observability Operator, select Flow Collector.

- Select the cluster and then click the YAML tab.

-

Modify the

FlowCollectorresource for OpenShift Container Platform Network Observability Operator to use Kafka, as shown in the following sample YAML:

Sample Kafka configuration in FlowCollector resource

- 1

- Set

spec.deploymentModeltoKAFKAinstead ofDIRECTto enable the Kafka deployment model. - 2

spec.kafka.addressrefers to the Kafka bootstrap server address. You can specify a port if needed, for instancekafka-cluster-kafka-bootstrap.netobserv:9093for using TLS on port 9093.- 3

spec.kafka.topicshould match the name of a topic created in Kafka.- 4

spec.kafka.tlscan be used to encrypt all communications to and from Kafka with TLS or mTLS. When enabled, the Kafka CA certificate must be available as a ConfigMap or a Secret, both in the namespace where theflowlogs-pipelineprocessor component is deployed (default:netobserv) and where the eBPF agents are deployed (default:netobserv-privileged). It must be referenced withspec.kafka.tls.caCert. When using mTLS, client secrets must be available in these namespaces as well (they can be generated for instance using the AMQ Streams User Operator) and referenced withspec.kafka.tls.userCert.

5.3. Export enriched network flow data

You can send network flows to Kafka, IPFIX, or both at the same time. Any processor or storage that supports Kafka or IPFIX input, such as Splunk, Elasticsearch, or Fluentd, can consume the enriched network flow data.

Prerequisites

-

Your Kafka or IPFIX collector endpoint(s) are available from Network Observability

flowlogs-pipelinepods.

Procedure

- In the web console, navigate to Operators → Installed Operators.

- Under the Provided APIs heading for the NetObserv Operator, select Flow Collector.

- Select cluster and then select the YAML tab.

Edit the

FlowCollectorto configurespec.exportersas follows:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 2

- The Network Observability Operator exports all flows to the configured Kafka topic.

- 3

- You can encrypt all communications to and from Kafka with SSL/TLS or mTLS. When enabled, the Kafka CA certificate must be available as a ConfigMap or a Secret, both in the namespace where the

flowlogs-pipelineprocessor component is deployed (default: netobserv). It must be referenced withspec.exporters.tls.caCert. When using mTLS, client secrets must be available in these namespaces as well (they can be generated for instance using the AMQ Streams User Operator) and referenced withspec.exporters.tls.userCert. - 1 4

- You can export flows to IPFIX instead of or in conjunction with exporting flows to Kafka.

- 5

- You have the option to specify transport. The default value is

tcpbut you can also specifyudp.

- After configuration, network flows data can be sent to an available output in a JSON format. For more information, see Network flows format reference.

5.4. Updating the Flow Collector resource

As an alternative to editing YAML in the OpenShift Container Platform web console, you can configure specifications, such as eBPF sampling, by patching the flowcollector custom resource (CR):

Procedure

Run the following command to patch the

flowcollectorCR and update thespec.agent.ebpf.samplingvalue:oc patch flowcollector cluster --type=json -p "[{"op": "replace", "path": "/spec/agent/ebpf/sampling", "value": <new value>}] -n netobserv"$ oc patch flowcollector cluster --type=json -p "[{"op": "replace", "path": "/spec/agent/ebpf/sampling", "value": <new value>}] -n netobserv"Copy to Clipboard Copied! Toggle word wrap Toggle overflow

5.5. Configuring quick filters

You can modify the filters in the FlowCollector resource. Exact matches are possible using double-quotes around values. Otherwise, partial matches are used for textual values. The bang (!) character, placed at the end of a key, means negation. See the sample FlowCollector resource for more context about modifying the YAML.

The filter matching types "all of" or "any of" is a UI setting that the users can modify from the query options. It is not part of this resource configuration.

Here is a list of all available filter keys:

| Universal* | Source | Destination | Description |

|---|---|---|---|

| namespace |

|

| Filter traffic related to a specific namespace. |

| name |

|

| Filter traffic related to a given leaf resource name, such as a specific pod, service, or node (for host-network traffic). |

| kind |

|

| Filter traffic related to a given resource kind. The resource kinds include the leaf resource (Pod, Service or Node), or the owner resource (Deployment and StatefulSet). |

| owner_name |

|

| Filter traffic related to a given resource owner; that is, a workload or a set of pods. For example, it can be a Deployment name, a StatefulSet name, etc. |

| resource |

|

|

Filter traffic related to a specific resource that is denoted by its canonical name, that identifies it uniquely. The canonical notation is |

| address |

|

| Filter traffic related to an IP address. IPv4 and IPv6 are supported. CIDR ranges are also supported. |

| mac |

|

| Filter traffic related to a MAC address. |

| port |

|

| Filter traffic related to a specific port. |

| host_address |

|

| Filter traffic related to the host IP address where the pods are running. |

| protocol | N/A | N/A | Filter traffic related to a protocol, such as TCP or UDP. |

-

Universal keys filter for any of source or destination. For example, filtering

name: 'my-pod'means all traffic frommy-podand all traffic tomy-pod, regardless of the matching type used, whether Match all or Match any.

5.6. Configuring monitoring for SR-IOV interface traffic

In order to collect traffic from a cluster with a Single Root I/O Virtualization (SR-IOV) device, you must set the FlowCollector spec.agent.ebpf.privileged field to true. Then, the eBPF agent monitors other network namespaces in addition to the host network namespaces, which are monitored by default. When a pod with a virtual functions (VF) interface is created, a new network namespace is created. With SRIOVNetwork policy IPAM configurations specified, the VF interface is migrated from the host network namespace to the pod network namespace.

Prerequisites

- Access to an OpenShift Container Platform cluster with a SR-IOV device.

-

The

SRIOVNetworkcustom resource (CR)spec.ipamconfiguration must be set with an IP address from the range that the interface lists or from other plugins.

Procedure

- In the web console, navigate to Operators → Installed Operators.

- Under the Provided APIs heading for the NetObserv Operator, select Flow Collector.

- Select cluster and then select the YAML tab.

Configure the

FlowCollectorcustom resource. A sample configuration is as follows:Configure

FlowCollectorfor SR-IOV monitoringCopy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- The

spec.agent.ebpf.privilegedfield value must be set totrueto enable SR-IOV monitoring.

5.7. Resource management and performance considerations

The amount of resources required by Network Observability depends on the size of your cluster and your requirements for the cluster to ingest and store observability data. To manage resources and set performance criteria for your cluster, consider configuring the following settings. Configuring these settings might meet your optimal setup and observability needs.

The following settings can help you manage resources and performance from the outset:

- eBPF Sampling

-

You can set the Sampling specification,

spec.agent.ebpf.sampling, to manage resources. Smaller sampling values might consume a large amount of computational, memory and storage resources. You can mitigate this by specifying a sampling ratio value. A value of100means 1 flow every 100 is sampled. A value of0or1means all flows are captured. Smaller values result in an increase in returned flows and the accuracy of derived metrics. By default, eBPF sampling is set to a value of 50, so 1 flow every 50 is sampled. Note that more sampled flows also means more storage needed. Consider starting with the default values and refine empirically, in order to determine which setting your cluster can manage. - Restricting or excluding interfaces

-

Reduce the overall observed traffic by setting the values for

spec.agent.ebpf.interfacesandspec.agent.ebpf.excludeInterfaces. By default, the agent fetches all the interfaces in the system, except the ones listed inexcludeInterfacesandlo(local interface). Note that the interface names might vary according to the Container Network Interface (CNI) used.

The following settings can be used to fine-tune performance after the Network Observability has been running for a while:

- Resource requirements and limits

-

Adapt the resource requirements and limits to the load and memory usage you expect on your cluster by using the

spec.agent.ebpf.resourcesandspec.processor.resourcesspecifications. The default limits of 800MB might be sufficient for most medium-sized clusters. - Cache max flows timeout

-

Control how often flows are reported by the agents by using the eBPF agent’s

spec.agent.ebpf.cacheMaxFlowsandspec.agent.ebpf.cacheActiveTimeoutspecifications. A larger value results in less traffic being generated by the agents, which correlates with a lower CPU load. However, a larger value leads to a slightly higher memory consumption, and might generate more latency in the flow collection.

5.7.1. Resource considerations

The following table outlines examples of resource considerations for clusters with certain workload sizes.

The examples outlined in the table demonstrate scenarios that are tailored to specific workloads. Consider each example only as a baseline from which adjustments can be made to accommodate your workload needs.

| Extra small (10 nodes) | Small (25 nodes) | Medium (65 nodes) [2] | Large (120 nodes) [2] | |

|---|---|---|---|---|

| Worker Node vCPU and memory | 4 vCPUs| 16GiB mem [1] | 16 vCPUs| 64GiB mem [1] | 16 vCPUs| 64GiB mem [1] | 16 vCPUs| 64GiB Mem [1] |

| LokiStack size |

|

|

|

|

| Network Observability controller memory limit | 400Mi (default) | 400Mi (default) | 400Mi (default) | 800Mi |

| eBPF sampling rate | 50 (default) | 50 (default) | 50 (default) | 50 (default) |

| eBPF memory limit | 800Mi (default) | 800Mi (default) | 2000Mi | 800Mi (default) |

| FLP memory limit | 800Mi (default) | 800Mi (default) | 800Mi (default) | 800Mi (default) |

| FLP Kafka partitions | N/A | 48 | 48 | 48 |

| Kafka consumer replicas | N/A | 24 | 24 | 24 |

| Kafka brokers | N/A | 3 (default) | 3 (default) | 3 (default) |

- Tested with AWS M6i instances.

-

In addition to this worker and its controller, 3 infra nodes (size

M6i.12xlarge) and 1 workload node (sizeM6i.8xlarge) were tested.

Chapter 6. Network Policy

As a user with the admin role, you can create a network policy for the netobserv namespace.

6.1. Creating a network policy for Network Observability

You might need to create a network policy to secure ingress traffic to the netobserv namespace. In the web console, you can create a network policy using the form view.

Procedure

- Navigate to Networking → NetworkPolicies.

-

Select the

netobservproject from the Project dropdown menu. -

Name the policy. For this example, the policy name is

allow-ingress. - Click Add ingress rule three times to create three ingress rules.

Specify the following in the form:

Make the following specifications for the first Ingress rule:

- From the Add allowed source dropdown menu, select Allow pods from the same namespace.

Make the following specifications for the second Ingress rule:

- From the Add allowed source dropdown menu, select Allow pods from inside the cluster.

- Click + Add namespace selector.

-

Add the label,

kubernetes.io/metadata.name, and the selector,openshift-console.

Make the following specifications for the third Ingress rule:

- From the Add allowed source dropdown menu, select Allow pods from inside the cluster.

- Click + Add namespace selector.

-

Add the label,

kubernetes.io/metadata.name, and the selector,openshift-monitoring.

Verification

- Navigate to Observe → Network Traffic.

- View the Traffic Flows tab, or any tab, to verify that the data is displayed.

- Navigate to Observe → Dashboards. In the NetObserv/Health selection, verify that the flows are being ingested and sent to Loki, which is represented in the first graph.

6.2. Example network policy

The following annotates an example NetworkPolicy object for the netobserv namespace:

Sample network policy

- 1

- A selector that describes the pods to which the policy applies. The policy object can only select pods in the project that defines the

NetworkPolicyobject. In this documentation, it would be the project in which the Network Observability Operator is installed, which is thenetobservproject. - 2

- A selector that matches the pods from which the policy object allows ingress traffic. The default is that the selector matches pods in the same namespace as the

NetworkPolicy. - 3

- When the

namespaceSelectoris specified, the selector matches pods in the specified namespace.

Chapter 7. Observing the network traffic

As an administrator, you can observe the network traffic in the OpenShift Container Platform console for detailed troubleshooting and analysis. This feature helps you get insights from different graphical representations of traffic flow. There are several available views to observe the network traffic.

7.1. Observing the network traffic from the Overview view

The Overview view displays the overall aggregated metrics of the network traffic flow on the cluster. As an administrator, you can monitor the statistics with the available display options.

7.1.1. Working with the Overview view

As an administrator, you can navigate to the Overview view to see the graphical representation of the flow rate statistics.

Procedure

- Navigate to Observe → Network Traffic.

- In the Network Traffic page, click the Overview tab.

You can configure the scope of each flow rate data by clicking the menu icon.

7.1.2. Configuring advanced options for the Overview view

You can customize the graphical view by using advanced options. To access the advanced options, click Show advanced options.You can configure the details in the graph by using the Display options drop-down menu. The options available are:

- Metric type: The metrics to be shown in Bytes or Packets. The default value is Bytes.

- Scope: To select the detail of components between which the network traffic flows. You can set the scope to Node, Namespace, Owner, or Resource. Owner is an aggregation of resources. Resource can be a pod, service, node, in case of host-network traffic, or an unknown IP address. The default value is Namespace.

- Truncate labels: Select the required width of the label from the drop-down list. The default value is M.

7.1.2.1. Managing panels

You can select the required statistics to be displayed, and reorder them. To manage columns, click Manage panels.

7.1.2.2. DNS tracking

You can configure graphical representation of Domain Name System (DNS) tracking of network flows in the Overview view. Using DNS tracking with extended Berkeley Packet Filter (eBPF) tracepoint hooks can serve various purposes:

- Network Monitoring: Gain insights into DNS queries and responses, helping network administrators identify unusual patterns, potential bottlenecks, or performance issues.

- Security Analysis: Detect suspicious DNS activities, such as domain name generation algorithms (DGA) used by malware, or identify unauthorized DNS resolutions that might indicate a security breach.

- Troubleshooting: Debug DNS-related issues by tracing DNS resolution steps, tracking latency, and identifying misconfigurations.

When DNS tracking is enabled, you can see the following metrics represented in a chart in the Overview. See the Additional Resources in this section for more information about enabling and working with this view.

- Top 5 average DNS latencies

- Top 5 DNS response code

- Top 5 DNS response code stacked with total

This feature is supported for IPv4 and IPv6 UDP protocol.

7.2. Observing the network traffic from the Traffic flows view

The Traffic flows view displays the data of the network flows and the amount of traffic in a table. As an administrator, you can monitor the amount of traffic across the application by using the traffic flow table.

7.2.1. Working with the Traffic flows view

As an administrator, you can navigate to Traffic flows table to see network flow information.

Procedure

- Navigate to Observe → Network Traffic.

- In the Network Traffic page, click the Traffic flows tab.

You can click on each row to get the corresponding flow information.

7.2.2. Configuring advanced options for the Traffic flows view

You can customize and export the view by using Show advanced options. You can set the row size by using the Display options drop-down menu. The default value is Normal.

7.2.2.1. Managing columns

You can select the required columns to be displayed, and reorder them. To manage columns, click Manage columns.

7.2.2.2. Exporting the traffic flow data

You can export data from the Traffic flows view.

Procedure

- Click Export data.

- In the pop-up window, you can select the Export all data checkbox to export all the data, and clear the checkbox to select the required fields to be exported.

- Click Export.

7.2.3. Working with conversation tracking

As an administrator, you can you can group network flows that are part of the same conversation. A conversation is defined as a grouping of peers that are identified by their IP addresses, ports, and protocols, resulting in an unique Conversation Id. You can query conversation events in the web console. These events are represented in the web console as follows:

- Conversation start: This event happens when a connection is starting or TCP flag intercepted

-

Conversation tick: This event happens at each specified interval defined in the

FlowCollectorspec.processor.conversationHeartbeatIntervalparameter while the connection is active. -

Conversation end: This event happens when the

FlowCollectorspec.processor.conversationEndTimeoutparameter is reached or the TCP flag is intercepted. - Flow: This is the network traffic flow that occurs within the specified interval.

Procedure

- In the web console, navigate to Operators → Installed Operators.

- Under the Provided APIs heading for the NetObserv Operator, select Flow Collector.

- Select cluster then select the YAML tab.

Configure the

FlowCollectorcustom resource so thatspec.processor.logTypes,conversationEndTimeout, andconversationHeartbeatIntervalparameters are set according to your observation needs. A sample configuration is as follows:Configure

FlowCollectorfor conversation trackingCopy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- The Conversation end event represents the point when the

conversationEndTimeoutis reached or the TCP flag is intercepted. - 2

- When

logTypesis set toFLOWS, only the Flow event is exported. If you set the value toALL, both conversation and flow events are exported and visible in the Network Traffic page. To focus only on conversation events, you can specifyCONVERSATIONSwhich exports the Conversation start, Conversation tick and Conversation end events; orENDED_CONVERSATIONSexports only the Conversation end events. Storage requirements are highest forALLand lowest forENDED_CONVERSATIONS. - 3

- The Conversation tick event represents each specified interval defined in the

FlowCollectorconversationHeartbeatIntervalparameter while the network connection is active.

NoteIf you update the

logTypeoption, the flows from the previous selection do not clear from the console plugin. For example, if you initially setlogTypetoCONVERSATIONSfor a span of time until 10 AM and then move toENDED_CONVERSATIONS, the console plugin shows all conversation events before 10 AM and only ended conversations after 10 AM.-

Refresh the Network Traffic page on the Traffic flows tab. Notice there are two new columns, Event/Type and Conversation Id. All the Event/Type fields are

Flowwhen Flow is the selected query option. - Select Query Options and choose the Log Type, Conversation. Now the Event/Type shows all of the desired conversation events.

- Next you can filter on a specific conversation ID or switch between the Conversation and Flow log type options from the side panel.

7.2.4. Working with DNS tracking

Using DNS tracking, you can monitor your network, conduct security analysis, and troubleshoot DNS issues. You can track DNS by editing the FlowCollector to the specifications in the following YAML example.

CPU and memory usage increases are observed in the eBPF agent when this feature is enabled.

Procedure

- In the web console, navigate to Operators → Installed Operators.

- Under the Provided APIs heading for the NetObserv Operator, select Flow Collector.

- Select cluster then select the YAML tab.

Configure the

FlowCollectorcustom resource. A sample configuration is as follows:Configure

FlowCollectorfor DNS trackingCopy to Clipboard Copied! Toggle word wrap Toggle overflow When you refresh the Network Traffic page, there are new DNS representations you can choose to view in the Overview and Traffic Flow views and new filters you can apply.

- Select new DNS choices in Manage panels to display graphical visualizations and DNS metrics in the Overview.

- Select new choices in Manage columns to add DNS columns to the Traffic Flows view.

- Filter on specific DNS metrics, such as DNS Id, DNS Latency and DNS Response Code, and see more information from the side panel.

7.2.4.1. Using the histogram

You can click Show histogram to display a toolbar view for visualizing the history of flows as a bar chart. The histogram shows the number of logs over time. You can select a part of the histogram to filter the network flow data in the table that follows the toolbar.

7.3. Observing the network traffic from the Topology view

The Topology view provides a graphical representation of the network flows and the amount of traffic. As an administrator, you can monitor the traffic data across the application by using the Topology view.

7.3.1. Working with the Topology view

As an administrator, you can navigate to the Topology view to see the details and metrics of the component.

Procedure

- Navigate to Observe → Network Traffic.

- In the Network Traffic page, click the Topology tab.

You can click each component in the Topology to view the details and metrics of the component.

7.3.2. Configuring the advanced options for the Topology view

You can customize and export the view by using Show advanced options. The advanced options view has the following features:

- Find in view: To search the required components in the view.

Display options: To configure the following options:

- Layout: To select the layout of the graphical representation. The default value is ColaNoForce.

- Scope: To select the scope of components between which the network traffic flows. The default value is Namespace.

- Groups: To enchance the understanding of ownership by grouping the components. The default value is None.

- Collapse groups: To expand or collapse the groups. The groups are expanded by default. This option is disabled if Groups has value None.

- Show: To select the details that need to be displayed. All the options are checked by default. The options available are: Edges, Edges label, and Badges.

- Truncate labels: To select the required width of the label from the drop-down list. The default value is M.

7.3.2.1. Exporting the topology view

To export the view, click Export topology view. The view is downloaded in PNG format.

7.4. Filtering the network traffic

By default, the Network Traffic page displays the traffic flow data in the cluster based on the default filters configured in the FlowCollector instance. You can use the filter options to observe the required data by changing the preset filter.

- Query Options

You can use Query Options to optimize the search results, as listed below:

- Log Type: The available options Conversation and Flows provide the ability to query flows by log type, such as flow log, new conversation, completed conversation, and a heartbeat, which is a periodic record with updates for long conversations. A conversation is an aggregation of flows between the same peers.

-

Duplicated flows: A flow might be reported from several interfaces, and from both source and destination nodes, making it appear in the data several times. By selecting this query option, you can choose to show duplicated flows. Duplicated flows have the same sources and destinations, including ports, and also have the same protocols, with the exception of

InterfaceandDirectionfields. Duplicates are hidden by default. Use the Direction filter in the Common section of the dropdown list to switch between ingress and egress traffic. - Match filters: You can determine the relation between different filter parameters selected in the advanced filter. The available options are Match all and Match any. Match all provides results that match all the values, and Match any provides results that match any of the values entered. The default value is Match all.

- Limit: The data limit for internal backend queries. Depending upon the matching and the filter settings, the number of traffic flow data is displayed within the specified limit.

- Quick filters

-

The default values in Quick filters drop-down menu are defined in the

FlowCollectorconfiguration. You can modify the options from console. - Advanced filters

- You can set the advanced filters, Common, Source, or Destination, by selecting the parameter to be filtered from the dropdown list. The flow data is filtered based on the selection. To enable or disable the applied filter, you can click on the applied filter listed below the filter options.

You can toggle between

![]() One way and

One way and

![]()

![]() Back and forth filtering. The

Back and forth filtering. The

![]() One way filter shows only Source and Destination traffic according to your filter selections. You can use Swap to change the directional view of the Source and Destination traffic. The

One way filter shows only Source and Destination traffic according to your filter selections. You can use Swap to change the directional view of the Source and Destination traffic. The

![]()

![]() Back and forth filter includes return traffic with the Source and Destination filters. The directional flow of network traffic is shown in the Direction column in the Traffic flows table as

Back and forth filter includes return traffic with the Source and Destination filters. The directional flow of network traffic is shown in the Direction column in the Traffic flows table as Ingress`or `Egress for inter-node traffic and `Inner`for traffic inside a single node.

You can click Reset defaults to remove the existing filters, and apply the filter defined in FlowCollector configuration.

To understand the rules of specifying the text value, click Learn More.

Alternatively, you can access the traffic flow data in the Network Traffic tab of the Namespaces, Services, Routes, Nodes, and Workloads pages which provide the filtered data of the corresponding aggregations.

Chapter 8. Monitoring the Network Observability Operator

You can use the web console to monitor alerts related to the health of the Network Observability Operator.

8.1. Viewing health information

You can access metrics about health and resource usage of the Network Observability Operator from the Dashboards page in the web console. A health alert banner that directs you to the dashboard can appear on the Network Traffic and Home pages in the event that an alert is triggered. Alerts are generated in the following cases:

-

The

NetObservLokiErroralert occurs if theflowlogs-pipelineworkload is dropping flows because of Loki errors, such as if the Loki ingestion rate limit has been reached. -

The

NetObservNoFlowsalert occurs if no flows are ingested for a certain amount of time.

Prerequisites

- You have the Network Observability Operator installed.

-

You have access to the cluster as a user with the

cluster-adminrole or with view permissions for all projects.

Procedure

- From the Administrator perspective in the web console, navigate to Observe → Dashboards.

- From the Dashboards dropdown, select Netobserv/Health. Metrics about the health of the Operator are displayed on the page.

8.1.1. Disabling health alerts

You can opt out of health alerting by editing the FlowCollector resource:

- In the web console, navigate to Operators → Installed Operators.

- Under the Provided APIs heading for the NetObserv Operator, select Flow Collector.

- Select cluster then select the YAML tab.

-

Add

spec.processor.metrics.disableAlertsto disable health alerts, as in the following YAML sample:

- 1

- You can specify one or a list with both types of alerts to disable.

8.2. Creating Loki rate limit alerts for the NetObserv dashboard

You can create custom rules for the Netobserv dashboard metrics to trigger alerts when Loki rate limits have been reached.

An example of an alerting rule configuration YAML file is as follows:

Chapter 9. FlowCollector configuration parameters

FlowCollector is the Schema for the network flows collection API, which pilots and configures the underlying deployments.

9.1. FlowCollector API specifications

- Description

-

FlowCollectoris the schema for the network flows collection API, which pilots and configures the underlying deployments. - Type

-

object

| Property | Type | Description |

|---|---|---|

|

|