10.3. Preparing to Deploy Geo-replication

This section provides an overview of geo-replication deployment scenarios, lists prerequisites, and describes how to set up the environment for a geo-replication session.

10.3.1. Exploring Geo-replication Deployment Scenarios

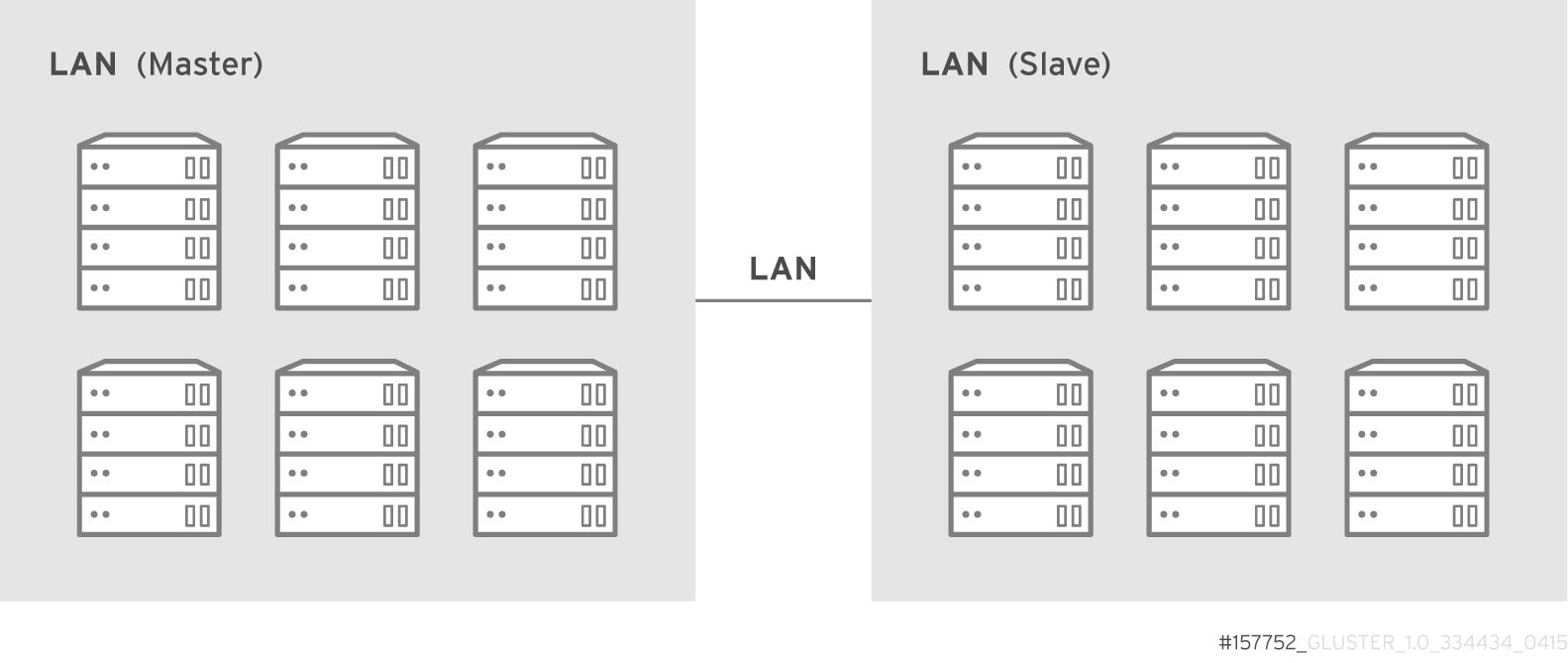

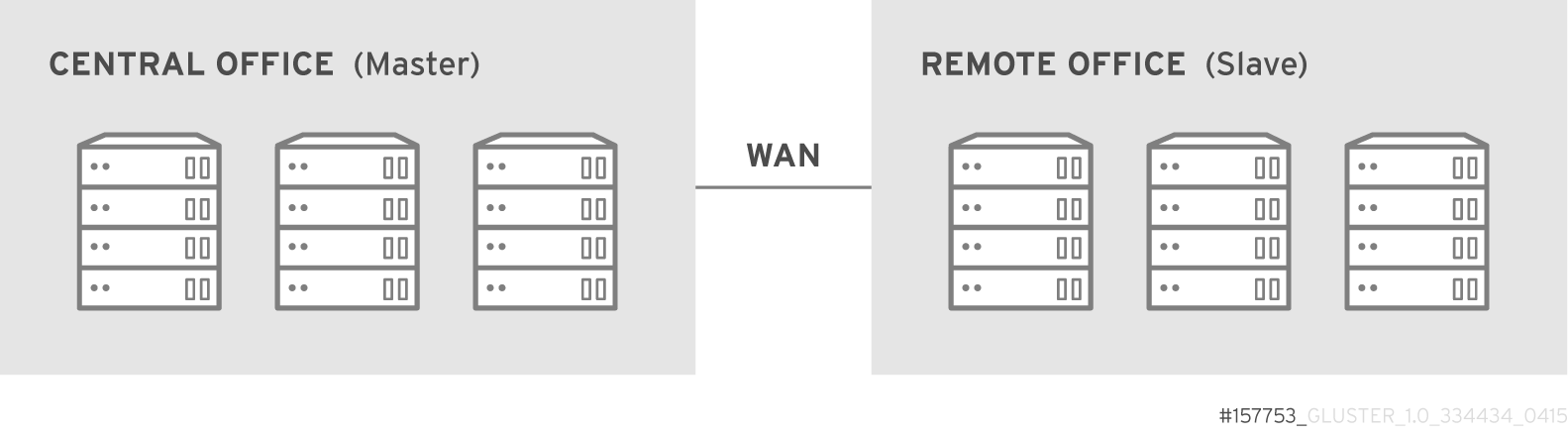

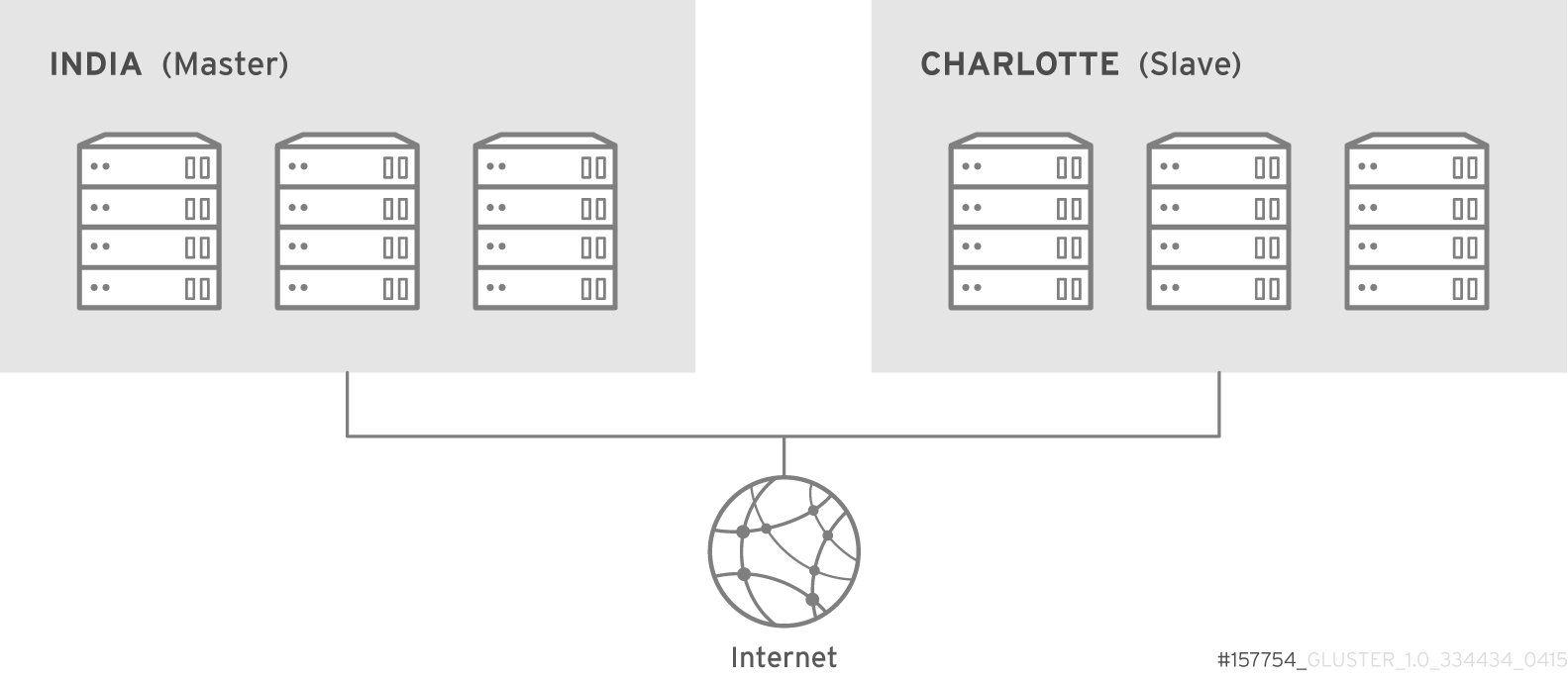

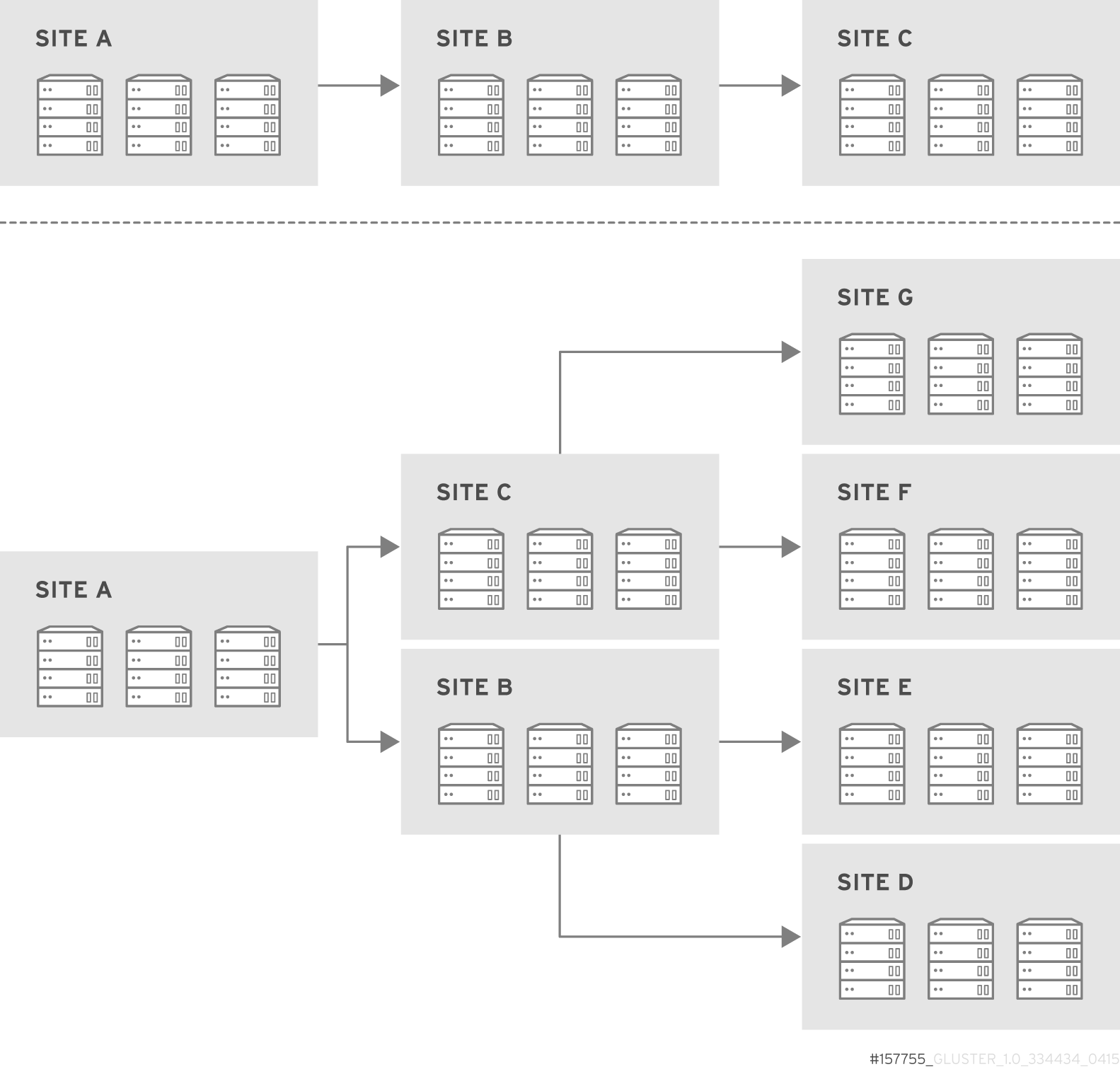

Geo-replication provides an incremental replication service over Local Area Networks (LANs), Wide Area Networks (WANs), and the Internet. This section illustrates the most common deployment scenarios for geo-replication, including the following:

- Geo-replication over LAN

- Geo-replication over WAN

- Geo-replication over the Internet

- Multi-site cascading geo-replication

Geo-replication over LAN

Geo-replication over WAN

Geo-replication over Internet

Multi-site cascading Geo-replication

10.3.2. Geo-replication Deployment Overview

Deploying geo-replication involves the following steps:

- Verify that your environment matches the minimum system requirements. See Section 10.3.3, “Prerequisites”.

- Determine the appropriate deployment scenario. See Section 10.3.1, “Exploring Geo-replication Deployment Scenarios”.

- Start geo-replication on the master and slave systems. See Section 10.4, “Starting Geo-replication”.

10.3.3. Prerequisites

The following are prerequisites for deploying geo-replication:

- The master and slave volumes must run the same version of Red Hat Gluster Storage.

- A slave node must not be a peer of any of the nodes of the Master trusted storage pool.

- Passwordless SSH access is required between one node of the master volume (the node from which the geo-replication create command will be executed), and one node of the slave volume (the node whose IP/hostname will be mentioned in the slave name when running the geo-replication create command).

  Create the public and private keys using ssh-keygen (without passphrase) on the master node:

  # ssh-keygen

  Copy the public key to the slave node using the following command:

  # ssh-copy-id -i identity_file root@slave_node_IPaddress/Hostname

  If you are setting up a non-root geo-replication session, then copy the public key to the respective user location.

  Note

  - Passwordless SSH access is required from the master node to the slave node, whereas passwordless SSH access is not required from the slave node to the master node.

  - The ssh-copy-id command does not work if the ssh authorized_keys file is configured in a custom location. You must copy the contents of the .ssh/id_rsa.pub file from the Master and paste it into the authorized_keys file in the custom location on the Slave node.

- A passwordless SSH connection is also required for gsyncd between every node in the master to every node in the slave. The gluster system:: execute gsec_create command creates secret-pem files on all the nodes in the master, and is used to implement the passwordless SSH connection. The push-pem option in the geo-replication create command pushes these keys to all the nodes in the slave.

  For more information on the gluster system::execute gsec_create and push-pem commands, see Section 10.3.4.1, “Setting Up your Environment for Geo-replication Session”.
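
Before creating a session, it can help to confirm that key-based login from the master node really works without a prompt. The following is a minimal sketch, not part of the product tooling: the function names and the host slave1.example.com are hypothetical, and it assumes the OpenSSH client, whose BatchMode option makes ssh fail rather than ask for a password.

```shell
#!/bin/sh
# Hypothetical helpers; the user and host values are placeholders.

# Print the probe command without running it (dry run).
ssh_probe_cmd() {
    # $1 = login user on the slave, $2 = slave host name or IP address
    printf 'ssh -o BatchMode=yes -o ConnectTimeout=5 %s@%s true\n' "$1" "$2"
}

# Run the probe. Returns 0 only if login succeeds without any prompt,
# because BatchMode=yes disables password and passphrase prompts.
check_passwordless_ssh() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1@$2" true
}

# Dry run for the root user on a hypothetical slave node.
ssh_probe_cmd root slave1.example.com
```

On the master node, `check_passwordless_ssh root slave1.example.com && echo ok` would then report whether the key copied with ssh-copy-id is accepted.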

10.3.4. Setting Up your Environment

You can set up your environment for a geo-replication session in the following ways:

- Section 10.3.4.1, “Setting Up your Environment for Geo-replication Session” - In this method, the slave mount is owned by the root user.

- Section 10.3.4.2, “Setting Up your Environment for a Secure Geo-replication Slave” - This method is more secure as the slave mount is owned by a normal user.

10.3.4.1. Setting Up your Environment for Geo-replication Session

Time Synchronization

Before configuring the geo-replication environment, ensure that the time on all the servers is synchronized.

- The time must be uniform across all servers that host bricks of a geo-replicated master volume. It is recommended to set up an NTP (Network Time Protocol) service to keep the bricks' time synchronized and to avoid the effects of out-of-sync clocks.

  For example: In a replicated volume where brick1 of the master has the time 12:20, and brick2 of the master has the time 12:10 with a 10 minute time lag, all the changes on brick2 during this period may go unnoticed during synchronization of files with a Slave.

  For more information on configuring NTP, see https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/6/html/Deployment_Guide/ch-Configuring_NTP_Using_ntpd.html.
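
The 10 minute lag in the example above corresponds to a skew of 600 seconds. As a rough illustration, the sketch below compares two epoch timestamps and checks them against a tolerance. The function names and the 5 second tolerance are assumptions made for this example only; in practice each timestamp could be collected with something like `ssh HOST date +%s`.

```shell
#!/bin/sh
# Hypothetical helpers for comparing clocks of two brick servers.

# Print the absolute difference, in seconds, between two epoch timestamps.
clock_skew_seconds() {
    _skew=$(( $1 - $2 ))
    if [ "$_skew" -lt 0 ]; then
        _skew=$(( 0 - _skew ))
    fi
    echo "$_skew"
}

# Succeed if the two timestamps differ by at most $3 seconds.
skew_within() {
    [ "$(clock_skew_seconds "$1" "$2")" -le "$3" ]
}

# brick1 at 12:20 and brick2 at 12:10, expressed as seconds since midnight.
clock_skew_seconds 44400 43800
```

A call such as `skew_within "$t1" "$t2" 5 || echo "clocks differ too much"` could be run before the session is created.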

Creating Geo-replication Sessions

- To create a common pem pub file, run the following command on the master node where the passwordless SSH connection is configured:

  # gluster system:: execute gsec_create

- Create the geo-replication session using the following command. The push-pem option is needed to perform the necessary pem-file setup on the slave nodes.

  # gluster volume geo-replication MASTER_VOL SLAVE_HOST::SLAVE_VOL create push-pem [force]

  For example:

  # gluster volume geo-replication Volume1 example.com::slave-vol create push-pem

  Note

  There must be passwordless SSH access between the node from which this command is run, and the slave host specified in the above command. This command performs the slave verification, which includes checking for a valid slave URL, valid slave volume, and available space on the slave. If the verification fails, you can use the force option which will ignore the failed verification and create a geo-replication session.

- Configure the meta-volume for geo-replication:

  # gluster volume geo-replication MASTER_VOL SLAVE_HOST::SLAVE_VOL config use_meta_volume true

  For example:

  # gluster volume geo-replication Volume1 example.com::slave-vol config use_meta_volume true

  For more information on configuring meta-volume, see Section 10.3.5, “Configuring a Meta-Volume”.

- Start the geo-replication by running the following command on the master node:

  # gluster volume geo-replication MASTER_VOL SLAVE_HOST::SLAVE_VOL start [force]

- Verify the status of the created session by running the following command:

  # gluster volume geo-replication MASTER_VOL SLAVE_HOST::SLAVE_VOL status
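
The steps above always use the same MASTER_VOL SLAVE_HOST::SLAVE_VOL triple, so the sequence can be sketched as a small dry-run helper. This is only an illustration: the function name georep_session_cmds is hypothetical, nothing is executed, and the optional force flag is left out.

```shell
#!/bin/sh
# Hypothetical dry-run helper: prints the commands for a root
# geo-replication session in the order described above.
georep_session_cmds() {
    master_vol=$1
    slave_host=$2
    slave_vol=$3
    session="${master_vol} ${slave_host}::${slave_vol}"

    echo "gluster system:: execute gsec_create"
    echo "gluster volume geo-replication ${session} create push-pem"
    echo "gluster volume geo-replication ${session} config use_meta_volume true"
    echo "gluster volume geo-replication ${session} start"
    echo "gluster volume geo-replication ${session} status"
}

# Print the sequence for the example volume names used in this section.
georep_session_cmds Volume1 example.com slave-vol
```

Printing the commands first makes it easy to review the session name before anything is run on the master node.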

10.3.4.2. Setting Up your Environment for a Secure Geo-replication Slave

Geo-replication supports access to Red Hat Gluster Storage slaves through SSH using an unprivileged account (user account with non-zero UID). This method is more secure and it reduces the master's capabilities over slave to the minimum. This feature relies on mountbroker, an internal service of glusterd which manages the mounts for unprivileged slave accounts. You must perform additional steps to configure glusterd with the appropriate mountbroker's access control directives. The following example demonstrates this process:

Perform the following steps on all the Slave nodes to set up an auxiliary glusterFS mount for the unprivileged account:

- In all the slave nodes, create a new group. For example, geogroup.

  Note

  You must not use multiple groups for the mountbroker setup. You can create multiple user accounts but the group should be the same for all the non-root users.

- In all the slave nodes, create an unprivileged account. For example, geoaccount. Add geoaccount as a member of the geogroup group.

- On any one of the Slave nodes, run the following command to set up the mountbroker root directory and group.

  # gluster-mountbroker setup <MOUNT ROOT> <GROUP>

  For example,

  # gluster-mountbroker setup /var/mountbroker-root geogroup

- On any one of the Slave nodes, run the following command to add the volume and user to the mountbroker service.

  # gluster-mountbroker add <VOLUME> <USER>

  For example,

  # gluster-mountbroker add slavevol geoaccount

- Check the status of the setup. The output displays the mountbroker status for every peer node in the slave cluster.

- Restart the glusterd service on all the Slave nodes.

  # service glusterd restart

After you set up an auxiliary glusterFS mount for the unprivileged account on all the Slave nodes, perform the following steps to set up a non-root geo-replication session:

- Set up passwordless SSH from one of the master nodes to the user on one of the slave nodes. For example, to set up passwordless SSH to the user geoaccount:

  # ssh-keygen

  # ssh-copy-id -i identity_file geoaccount@slave_node_IPaddress/Hostname

- Create a common pem pub file by running the following command on the master node, where the passwordless SSH connection is configured to the user on the slave node:

  # gluster system:: execute gsec_create

- Create a geo-replication relationship between the master and the slave to the user by running the following command on the master node. For example,

  # gluster volume geo-replication MASTERVOL geoaccount@SLAVENODE::slavevol create push-pem

  If you have multiple slave volumes and/or multiple accounts, create a geo-replication session with that particular user and volume. For example,

  # gluster volume geo-replication MASTERVOL geoaccount2@SLAVENODE::slavevol2 create push-pem

- On the slave node that is used to create the relationship, run /usr/libexec/glusterfs/set_geo_rep_pem_keys.sh as root with the user name, master volume name, and slave volume name as the arguments. For example,

  # /usr/libexec/glusterfs/set_geo_rep_pem_keys.sh geoaccount MASTERVOL SLAVEVOL_NAME

- Configure the meta-volume for geo-replication:

  # gluster volume geo-replication MASTER_VOL SLAVE_HOST::SLAVE_VOL config use_meta_volume true

  For example:

  # gluster volume geo-replication Volume1 example.com::slave-vol config use_meta_volume true

  For more information on configuring meta-volume, see Section 10.3.5, “Configuring a Meta-Volume”.

- Start the geo-replication with the slave user by running the following command on the master node. For example,

  # gluster volume geo-replication MASTERVOL geoaccount@SLAVENODE::slavevol start

- Verify the status of the geo-replication session by running the following command on the master node:

  # gluster volume geo-replication MASTERVOL geoaccount@SLAVENODE::slavevol status
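
The slave-side mountbroker commands in the first procedure differ in where they run: some on any one slave node, one on every slave node. The sketch below prints them as an annotated checklist. It is a dry run under stated assumptions: the function name mountbroker_cmds is hypothetical, and creating the group and account is left out because this section does not prescribe a command for it.

```shell
#!/bin/sh
# Hypothetical dry-run helper: prints the slave-side mountbroker commands
# with a comment line saying where each group of commands belongs.
mountbroker_cmds() {
    mount_root=$1
    group=$2
    volume=$3
    user=$4

    echo "# on any one slave node"
    echo "gluster-mountbroker setup ${mount_root} ${group}"
    echo "gluster-mountbroker add ${volume} ${user}"
    echo "# on every slave node"
    echo "service glusterd restart"
}

# Values taken from the examples in this section.
mountbroker_cmds /var/mountbroker-root geogroup slavevol geoaccount
```

Because the annotations are shell comments, the printed text can be pasted into a terminal on the appropriate nodes after review.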

Deleting mountbroker geo-replication options after deleting a session

After a mountbroker geo-replication session is deleted, use the following command to remove volumes per mountbroker user.

# gluster-mountbroker remove [--volume volume] [--user user]

For example,

# gluster-mountbroker remove --volume slavevol --user geoaccount

# gluster-mountbroker remove --user geoaccount

# gluster-mountbroker remove --volume slavevol

If the volume to be removed is the last one for the mountbroker user, the user is also removed.
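
The three example invocations differ only in which of the two options is present. A minimal sketch of a helper that assembles the command line from an optional volume and an optional user follows. The function name is hypothetical, and rejecting a call with neither value is an assumption made here for safety, not documented behavior of gluster-mountbroker.

```shell
#!/bin/sh
# Hypothetical helper: build a "gluster-mountbroker remove" command line.
# $1 = volume (may be empty), $2 = user (may be empty).
mountbroker_remove_cmd() {
    vol=$1
    user=$2

    # Assumption for this sketch: refuse to build a command with no target.
    if [ -z "$vol" ] && [ -z "$user" ]; then
        echo "need a volume, a user, or both" >&2
        return 1
    fi

    cmd="gluster-mountbroker remove"
    if [ -n "$vol" ]; then
        cmd="$cmd --volume $vol"
    fi
    if [ -n "$user" ]; then
        cmd="$cmd --user $user"
    fi
    echo "$cmd"
}

# Prints the command only; nothing is removed.
mountbroker_remove_cmd slavevol geoaccount
```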

Important

If you have a secured geo-replication setup, you must prefix the unprivileged user account to the slave volume in the command. For example, to execute a geo-replication status command, run the following:

# gluster volume geo-replication MASTERVOL geoaccount@SLAVENODE::slavevol status

In this command, geoaccount is the name of the unprivileged user account.
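
Forgetting the user prefix is an easy mistake in scripts that handle both root and non-root sessions. One way to keep the slave argument consistent is to build it in a single place, as in this sketch. The function name georep_slave_url is hypothetical; an empty user is taken to mean a session without a user prefix.

```shell
#!/bin/sh
# Hypothetical helper: build the slave argument for geo-replication commands.
# $1 = unprivileged user (empty for no prefix), $2 = slave host, $3 = slave volume.
georep_slave_url() {
    if [ -n "$1" ]; then
        echo "${1}@${2}::${3}"
    else
        echo "${2}::${3}"
    fi
}

# Print (not run) the status command for the secured setup shown above.
echo "gluster volume geo-replication MASTERVOL $(georep_slave_url geoaccount SLAVENODE slavevol) status"
```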

10.3.5. Configuring a Meta-Volume

For effective handling of node fail-overs in the Master volume, geo-replication requires shared storage to be available across all nodes of the cluster. Hence, you must ensure that a gluster volume named gluster_shared_storage is created in the cluster, and is mounted at /var/run/gluster/shared_storage on all the nodes in the cluster. For more information on setting up shared storage volume, see Section 11.8, “Setting up Shared Storage Volume”.

- Configure the meta-volume for geo-replication:

  # gluster volume geo-replication MASTER_VOL SLAVE_HOST::SLAVE_VOL config use_meta_volume true

  For example:

  # gluster volume geo-replication Volume1 example.com::slave-vol config use_meta_volume true
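
Since the meta-volume depends on the shared storage mount, a quick check on each node before enabling use_meta_volume may save troubleshooting later. The sketch below scans a mount table in /proc/mounts format for the expected mount point. The function name is hypothetical, and the check assumes the mount point is listed under the exact path given; on systems where /var/run is a symbolic link to /run, the path to look for would need to be passed as the first argument.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the mount table read from stdin
# (in /proc/mounts format) lists the shared storage mount point.
# $1 = mount point to look for (defaults to the path named in this section).
shared_storage_mounted() {
    awk -v mp="${1:-/var/run/gluster/shared_storage}" '
        $2 == mp { found = 1 }     # field 2 of each entry is the mount point
        END { exit !found }        # exit status 0 only when a match was seen
    '
}

# Example use on a node (not run here):
#   shared_storage_mounted < /proc/mounts && echo "shared storage is mounted"
```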