Storage

Configuring and managing storage in OpenShift Container Platform


Chapter 1. OpenShift Container Platform storage overview

OpenShift Container Platform supports multiple types of storage, for both on-premises and cloud providers. You can manage container storage for persistent and non-persistent data in an OpenShift Container Platform cluster.

1.1. Glossary of common terms for OpenShift Container Platform storage

This glossary defines common terms that are used in the storage content.

- Access modes

Volume access modes describe volume capabilities. You can use access modes to match a persistent volume claim (PVC) to a persistent volume (PV). The following are examples of access modes:

- ReadWriteOnce (RWO)

- ReadOnlyMany (ROX)

- ReadWriteMany (RWX)

- ReadWriteOncePod (RWOP)

- Cinder

- The Block Storage service for Red Hat OpenStack Platform (RHOSP) that manages the administration, security, and scheduling of all volumes.

- Config map

- A config map provides a way to inject configuration data into pods. You can reference the data stored in a config map in a volume of type ConfigMap. Applications running in a pod can use this data.

- Container Storage Interface (CSI)

- An API specification for the management of container storage across different container orchestration (CO) systems.

- Dynamic Provisioning

- A framework that allows you to create storage volumes on demand, eliminating the need for cluster administrators to pre-provision persistent storage.

- Ephemeral storage

- Pods and containers can require temporary or transient local storage for their operation. The lifetime of this ephemeral storage does not extend beyond the life of the individual pod, and this ephemeral storage cannot be shared across pods.

- Fibre Channel

- A networking technology that is used to transfer data among data centers, computer servers, switches and storage.

- FlexVolume

- FlexVolume is an out-of-tree plugin interface that uses an exec-based model to interface with storage drivers. You must install the FlexVolume driver binaries in a pre-defined volume plugin path on each node and in some cases the control plane nodes.

- fsGroup

- The fsGroup defines a file system group ID of a pod.

- iSCSI

- Internet Small Computer Systems Interface (iSCSI) is an Internet Protocol-based storage networking standard for linking data storage facilities. An iSCSI volume allows an existing iSCSI (SCSI over IP) volume to be mounted into your Pod.

- hostPath

- A hostPath volume in an OpenShift Container Platform cluster mounts a file or directory from the host node’s filesystem into your pod.

- KMS key

- The Key Management Service (KMS) helps you achieve the required level of encryption of your data across different services. You can use the KMS key to encrypt, decrypt, and re-encrypt data.

- Local volumes

- A local volume represents a mounted local storage device such as a disk, partition or directory.

- NFS

- Network File System (NFS) allows remote hosts to mount file systems over a network and interact with those file systems as though they are mounted locally. This enables system administrators to consolidate resources onto centralized servers on the network.

- OpenShift Data Foundation

- A provider of agnostic persistent storage for OpenShift Container Platform that supports file, block, and object storage, either in-house or in hybrid clouds.

- Persistent storage

- Pods and containers can require permanent storage for their operation. OpenShift Container Platform uses the Kubernetes persistent volume (PV) framework to allow cluster administrators to provision persistent storage for a cluster. Developers can use PVC to request PV resources without having specific knowledge of the underlying storage infrastructure.

- Persistent volumes (PV)

- OpenShift Container Platform uses the Kubernetes persistent volume (PV) framework to allow cluster administrators to provision persistent storage for a cluster. Developers can use PVC to request PV resources without having specific knowledge of the underlying storage infrastructure.

- Persistent volume claims (PVCs)

- You can use a PVC to mount a PersistentVolume into a Pod. You can access the storage without knowing the details of the cloud environment.

- Pod

- One or more containers with shared resources, such as volume and IP addresses, running in your OpenShift Container Platform cluster. A pod is the smallest compute unit defined, deployed, and managed.

- Reclaim policy

- A policy that tells the cluster what to do with the volume after it is released. A volume’s reclaim policy can be Retain, Recycle, or Delete.

- Role-based access control (RBAC)

- Role-based access control (RBAC) is a method of regulating access to computer or network resources based on the roles of individual users within your organization.

- Stateless applications

- A stateless application is an application program that does not save client data generated in one session for use in the next session with that client.

- Stateful applications

- A stateful application is an application program that saves data to persistent disk storage. A server, client, and applications can use persistent disk storage. You can use the StatefulSet object in OpenShift Container Platform to manage the deployment and scaling of a set of pods. The object also provides guarantees about the ordering and uniqueness of these pods.

- Static provisioning

- A cluster administrator creates a number of PVs. PVs contain the details of storage. PVs exist in the Kubernetes API and are available for consumption.

- Storage

- OpenShift Container Platform supports many types of storage, for both on-premises and cloud providers. You can manage container storage for persistent and non-persistent data in an OpenShift Container Platform cluster.

- Storage class

- A storage class provides a way for administrators to describe the classes of storage they offer. Different classes might map to quality of service levels, backup policies, or arbitrary policies determined by the cluster administrators.

- VMware vSphere’s Virtual Machine Disk (VMDK) volumes

- Virtual Machine Disk (VMDK) is a file format that describes containers for the virtual hard disk drives that are used in virtual machines.

1.2. Storage types

OpenShift Container Platform storage is broadly classified into two categories, namely ephemeral storage and persistent storage.

1.2.1. Ephemeral storage

Pods and containers are ephemeral or transient in nature and designed for stateless applications. Ephemeral storage allows administrators and developers to better manage the local storage for some of their operations. For more information about ephemeral storage overview, types, and management, see Understanding ephemeral storage.

1.2.2. Persistent storage

Stateful applications deployed in containers require persistent storage. OpenShift Container Platform uses a pre-provisioned storage framework called persistent volumes (PV) to allow cluster administrators to provision persistent storage. The data inside these volumes can exist beyond the lifecycle of an individual pod. Developers can use persistent volume claims (PVCs) to request storage requirements. For more information about persistent storage overview, configuration, and lifecycle, see Understanding persistent storage.

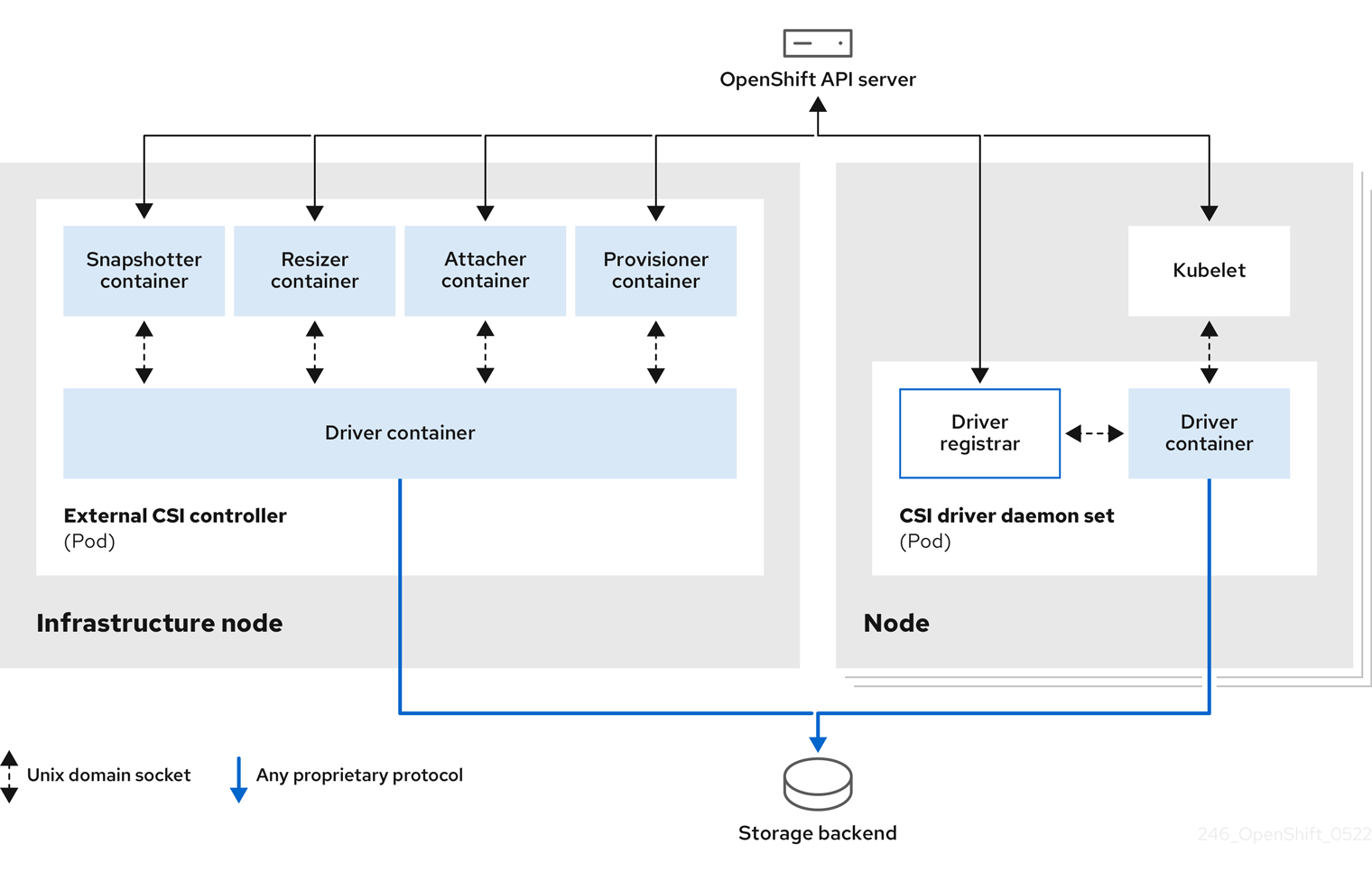

1.3. Container Storage Interface (CSI)

CSI is an API specification for the management of container storage across different container orchestration (CO) systems. You can manage the storage volumes within the container native environments, without having specific knowledge of the underlying storage infrastructure. With the CSI, storage works uniformly across different container orchestration systems, regardless of the storage vendors you are using. For more information about CSI, see Using Container Storage Interface (CSI).

1.4. Dynamic Provisioning

Dynamic Provisioning allows you to create storage volumes on-demand, eliminating the need for cluster administrators to pre-provision storage. For more information about dynamic provisioning, see Dynamic provisioning.

Chapter 2. Understanding ephemeral storage

2.1. Overview

In addition to persistent storage, pods and containers can require ephemeral or transient local storage for their operation. The lifetime of this ephemeral storage does not extend beyond the life of the individual pod, and this ephemeral storage cannot be shared across pods.

Pods use ephemeral local storage for scratch space, caching, and logs. Issues related to the lack of local storage accounting and isolation include the following:

- Pods cannot detect how much local storage is available to them.

- Pods cannot request guaranteed local storage.

- Local storage is a best-effort resource.

- Pods can be evicted due to other pods filling the local storage, after which new pods are not admitted until sufficient storage is reclaimed.

Unlike persistent volumes, ephemeral storage is unstructured and the space is shared between all pods running on a node, in addition to other uses by the system, the container runtime, and OpenShift Container Platform. The ephemeral storage framework allows pods to specify their transient local storage needs. It also allows OpenShift Container Platform to schedule pods where appropriate, and to protect the node against excessive use of local storage.

While the ephemeral storage framework allows administrators and developers to better manage local storage, I/O throughput and latency are not directly affected.

2.2. Types of ephemeral storage

Ephemeral local storage is always made available in the primary partition. There are two basic ways of creating the primary partition: root and runtime.

2.2.1. Root

This partition holds the kubelet root directory, /var/lib/kubelet/ by default, and /var/log/ directory. This partition can be shared between user pods, the OS, and Kubernetes system daemons. This partition can be consumed by pods through EmptyDir volumes, container logs, image layers, and container-writable layers. Kubelet manages shared access and isolation of this partition. This partition is ephemeral, and applications cannot expect any performance SLAs, such as disk IOPS, from this partition.

2.2.2. Runtime

This is an optional partition that runtimes can use for overlay file systems. OpenShift Container Platform attempts to identify and provide shared access along with isolation to this partition. Container image layers and writable layers are stored here. If the runtime partition exists, the root partition does not hold any image layer or other writable storage.

2.3. Ephemeral storage management

Cluster administrators can manage ephemeral storage within a project by setting quotas that define the limit ranges and number of requests for ephemeral storage across all pods in a non-terminal state. Developers can also set requests and limits on this compute resource at the pod and container level.
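As an illustration of a project-level quota, the following is a minimal sketch of a ResourceQuota object that caps the total ephemeral storage requests and limits in one project. The object name, project name, and quantities are hypothetical and are not taken from this document.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: ephemeral-storage-quota      # hypothetical name
  namespace: my-project              # hypothetical project
spec:
  hard:
    # Sum of ephemeral storage requests across all pods in a non-terminal state
    requests.ephemeral-storage: 10Gi
    # Sum of ephemeral storage limits across all pods in a non-terminal state
    limits.ephemeral-storage: 20Gi
```

With a quota like this in place, a pod whose requests or limits would push the project total past the configured values is rejected at creation time.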

You can manage local ephemeral storage by specifying requests and limits. Each container in a pod can specify the following:

- spec.containers[].resources.limits.ephemeral-storage

- spec.containers[].resources.requests.ephemeral-storage

2.3.1. Ephemeral storage limits and requests units

Limits and requests for ephemeral storage are measured in byte quantities. You can express storage as a plain integer or as a fixed-point number using one of these suffixes: E, P, T, G, M, k. You can also use the power-of-two equivalents: Ei, Pi, Ti, Gi, Mi, Ki.

For example, the following quantities all represent approximately the same value: 128974848, 129e6, 129M, and 123Mi.

The suffixes for each byte quantity are case-sensitive. Be sure to use the correct case. Use an uppercase "M", as in "400M", to set the request at 400 megabytes. Use "400Mi" to request 400 mebibytes. If you specify "400m" of ephemeral storage, the storage request is only 0.4 bytes.
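To illustrate how much the suffix case matters, the following fragment of a container specification is a sketch rather than a listing from the product documentation. The byte values in the comments follow directly from the suffix definitions: M is a power of ten and Mi is a power of two.

```yaml
resources:
  requests:
    ephemeral-storage: "400M"    # 400 megabytes = 400,000,000 bytes
  limits:
    ephemeral-storage: "400Mi"   # 400 mebibytes = 419,430,400 bytes
  # Writing "400m" instead would mean 400 milli-bytes, that is 0.4 bytes.
```

The limit is deliberately the larger of the two values here, because a limit must not be lower than the corresponding request.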

2.3.2. Ephemeral storage requests and limits example

The following example configuration file shows a pod with two containers:

- Each container requests 2GiB of local ephemeral storage.

- Each container has a limit of 4GiB of local ephemeral storage.

At the pod level, kubelet works out an overall pod storage limit by adding up the limits of all the containers in that pod.

- In this case, the total storage usage at the pod level is the sum of the disk usage from all containers plus the pod’s emptyDir volumes.

- Therefore, the pod has a request of 4GiB of local ephemeral storage, and a limit of 8GiB of local ephemeral storage.

Example ephemeral storage configuration with quotas and limits
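The listing for this example is not reproduced in this text. A minimal sketch that is consistent with the description above might look like the following. The pod name, container names, image references, volume name, and mount path are hypothetical placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend                                   # hypothetical name
spec:
  containers:
    - name: app
      image: images.my-company.example/app:v4      # hypothetical image
      resources:
        requests:
          ephemeral-storage: "2Gi"                 # container request
        limits:
          ephemeral-storage: "4Gi"                 # container limit
      volumeMounts:
        - name: ephemeral
          mountPath: "/tmp"
    - name: log-aggregator
      image: images.my-company.example/log-aggregator:v6   # hypothetical image
      resources:
        requests:
          ephemeral-storage: "2Gi"
        limits:
          ephemeral-storage: "4Gi"
      volumeMounts:
        - name: ephemeral
          mountPath: "/tmp"
  volumes:
    - name: ephemeral
      emptyDir: {}                                 # counts toward the pod-level usage
```

Adding the two containers together gives the pod-level request of 4GiB (2GiB + 2GiB) and the pod-level limit of 8GiB (4GiB + 4GiB).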

2.3.3. Ephemeral storage configuration affects pod scheduling and eviction

The settings in the pod spec affect both how the scheduler makes a decision about scheduling pods and when kubelet evicts pods.

- First, the scheduler ensures that the sum of the resource requests of the scheduled containers is less than the capacity of the node. In this case, the pod can be assigned to a node only if the node’s available ephemeral storage (allocatable resource) is more than 4GiB.

- Second, at the container level, because the first container sets a resource limit, kubelet eviction manager measures the disk usage of this container and evicts the pod if the storage usage of the container exceeds its limit (4GiB). The kubelet eviction manager also marks the pod for eviction if the total usage exceeds the overall pod storage limit (8GiB).

For information about defining quotas for projects, see Quota setting per project.

2.4. Monitoring ephemeral storage

You can use /bin/df as a tool to monitor ephemeral storage usage on the volume where ephemeral container data is located, which is /var/lib/kubelet and /var/lib/containers. The available space for only /var/lib/kubelet is shown when you use the df command if /var/lib/containers is placed on a separate disk by the cluster administrator.

Procedure

To show the human-readable values of used and available space in /var/lib, enter the following command:

    $ df -h /var/lib

The output shows the ephemeral storage usage in /var/lib:

Example output

    Filesystem                         Size  Used Avail Use% Mounted on
    /dev/disk/by-partuuid/4cd1448a-01   69G   32G   34G  49% /

Chapter 3. Understanding persistent storage

3.1. Persistent storage overview

Managing storage is a distinct problem from managing compute resources. OpenShift Container Platform uses the Kubernetes persistent volume (PV) framework to allow cluster administrators to provision persistent storage for a cluster. Developers can use persistent volume claims (PVCs) to request PV resources without having specific knowledge of the underlying storage infrastructure.

PVCs are specific to a project, and are created and used by developers as a means to use a PV. PV resources on their own are not scoped to any single project; they can be shared across the entire OpenShift Container Platform cluster and claimed from any project. After a PV is bound to a PVC, that PV cannot then be bound to additional PVCs. This has the effect of scoping a bound PV to a single namespace, that of the binding project.

PVs are defined by a PersistentVolume API object, which represents a piece of existing storage in the cluster that was either statically provisioned by the cluster administrator or dynamically provisioned using a StorageClass object. It is a resource in the cluster just like a node is a cluster resource.

PVs are volume plugins like Volumes but have a lifecycle that is independent of any individual pod that uses the PV. PV objects capture the details of the implementation of the storage, be that NFS, iSCSI, or a cloud-provider-specific storage system.

High availability of storage in the infrastructure is left to the underlying storage provider.

PVCs are defined by a PersistentVolumeClaim API object, which represents a request for storage by a developer. It is similar to a pod in that pods consume node resources and PVCs consume PV resources. For example, pods can request specific levels of resources, such as CPU and memory, while PVCs can request specific storage capacity and access modes. For example, they can be mounted once read-write or many times read-only.

3.2. Lifecycle of a volume and claim

PVs are resources in the cluster. PVCs are requests for those resources and also act as claim checks to the resource. The interaction between PVs and PVCs has the following lifecycle.

3.2.1. Provision storage

In response to requests from a developer defined in a PVC, a cluster administrator configures one or more dynamic provisioners that provision storage and a matching PV.

Alternatively, a cluster administrator can create a number of PVs in advance that carry the details of the real storage that is available for use. PVs exist in the API and are available for use.

3.2.2. Bind claims

When you create a PVC, you request a specific amount of storage, specify the required access mode, and optionally name a storage class that describes and classifies the storage. The control loop in the control plane watches for new PVCs and binds each new PVC to an appropriate PV. If an appropriate PV does not exist, a provisioner for the storage class creates one.

The size of the bound PV might exceed the size that your PVC requested. This is especially true with manually provisioned PVs. To minimize the excess, OpenShift Container Platform binds to the smallest PV that matches all other criteria.

Claims remain unbound indefinitely if a matching volume does not exist or cannot be created with any available provisioner servicing a storage class. Claims are bound as matching volumes become available. For example, a cluster with many manually provisioned 50Gi volumes would not match a PVC requesting 100Gi. The PVC can be bound when a 100Gi PV is added to the cluster.

3.2.3. Use pods and claimed PVs

Pods use claims as volumes. The cluster inspects the claim to find the bound volume and mounts that volume for a pod. For those volumes that support multiple access modes, you must specify which mode applies when you use the claim as a volume in a pod.

Once you have a claim and that claim is bound, the bound PV belongs to you for as long as you need it. You can schedule pods and access claimed PVs by including persistentVolumeClaim in the pod’s volumes block.

If you attach persistent volumes that have high file counts to pods, those pods can fail or can take a long time to start. For more information, see When using Persistent Volumes with high file counts in OpenShift, why do pods fail to start or take an excessive amount of time to achieve "Ready" state?.

3.2.4. Storage Object in Use Protection

The Storage Object in Use Protection feature ensures that PVCs in active use by a pod and PVs that are bound to PVCs are not removed from the system, as this can result in data loss.

Storage Object in Use Protection is enabled by default.

A PVC is in active use by a pod when a Pod object exists that uses the PVC.

If a user deletes a PVC that is in active use by a pod, the PVC is not removed immediately. PVC removal is postponed until the PVC is no longer actively used by any pods. Also, if a cluster admin deletes a PV that is bound to a PVC, the PV is not removed immediately. PV removal is postponed until the PV is no longer bound to a PVC.

3.2.5. Release a persistent volume

When you are finished with a volume, you can delete the PVC object from the API, which allows reclamation of the resource. The volume is considered released when the claim is deleted, but it is not yet available for another claim. The previous claimant’s data remains on the volume and must be handled according to policy.

3.2.6. Reclaim policy for persistent volumes

The reclaim policy of a persistent volume tells the cluster what to do with the volume after it is released. A volume’s reclaim policy can be Retain, Recycle, or Delete.

- Retain reclaim policy allows manual reclamation of the resource for those volume plugins that support it.

- Recycle reclaim policy recycles the volume back into the pool of unbound persistent volumes once it is released from its claim.

The Recycle reclaim policy is deprecated in OpenShift Container Platform 4. Dynamic provisioning is recommended for equivalent and better functionality.

- Delete reclaim policy deletes both the PersistentVolume object from OpenShift Container Platform and the associated storage asset in external infrastructure, such as Amazon Elastic Block Store (Amazon EBS) or VMware vSphere.

Dynamically provisioned volumes are always deleted.

3.2.7. Reclaiming a persistent volume manually

When a persistent volume claim (PVC) is deleted, the persistent volume (PV) still exists and is considered "released". However, the PV is not yet available for another claim because the data of the previous claimant remains on the volume.

Procedure

To manually reclaim the PV as a cluster administrator:

- Delete the PV by running the following command:

    $ oc delete pv <pv_name>

The associated storage asset in the external infrastructure, such as an AWS EBS, GCE PD, Azure Disk, or Cinder volume, still exists after the PV is deleted.

- Clean up the data on the associated storage asset.

- Delete the associated storage asset. Alternatively, to reuse the same storage asset, create a new PV with the storage asset definition.

The reclaimed PV is now available for use by another PVC.

3.2.8. Changing the reclaim policy of a persistent volume

You can change the reclaim policy of a persistent volume.

Procedure

List the persistent volumes in your cluster:

    $ oc get pv

Example output

    NAME                                       CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
    pvc-b6efd8da-b7b5-11e6-9d58-0ed433a7dd94   4Gi        RWO           Delete          Bound    default/claim1   manual                  10s
    pvc-b95650f8-b7b5-11e6-9d58-0ed433a7dd94   4Gi        RWO           Delete          Bound    default/claim2   manual                  6s
    pvc-bb3ca71d-b7b5-11e6-9d58-0ed433a7dd94   4Gi        RWO           Delete          Bound    default/claim3   manual                  3s

Choose one of your persistent volumes and change its reclaim policy:

    $ oc patch pv <your-pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'

Verify that your chosen persistent volume has the right policy:

    $ oc get pv

Example output

    NAME                                       CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
    pvc-b6efd8da-b7b5-11e6-9d58-0ed433a7dd94   4Gi        RWO           Delete          Bound    default/claim1   manual                  10s
    pvc-b95650f8-b7b5-11e6-9d58-0ed433a7dd94   4Gi        RWO           Delete          Bound    default/claim2   manual                  6s
    pvc-bb3ca71d-b7b5-11e6-9d58-0ed433a7dd94   4Gi        RWO           Retain          Bound    default/claim3   manual                  3s

In the preceding output, the volume bound to claim default/claim3 now has a Retain reclaim policy. The volume will not be automatically deleted when a user deletes claim default/claim3.

3.3. Persistent volumes

Each PV contains a spec and status, which is the specification and status of the volume, for example:

PersistentVolume object definition example
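The object definition itself is missing from this text. As a sketch of what a PersistentVolume definition can look like, the following uses an NFS backend. The volume name, capacity, NFS server, and export path are hypothetical, and the choice of NFS is an assumption made only for illustration.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                         # hypothetical name
spec:
  capacity:
    storage: 5Gi                       # storage capacity of the volume
  accessModes:
    - ReadWriteOnce                    # access modes supported by this volume
  persistentVolumeReclaimPolicy: Retain
  nfs:                                 # assumed backend for this sketch
    server: nfs.example.com            # hypothetical server
    path: /exports/pv0001              # hypothetical export path
# The status section is populated by the cluster, not written by the
# administrator. It reports the volume phase, such as Available or Bound.
```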

3.3.1. Types of PVs

OpenShift Container Platform supports the following persistent volume plugins:

- AliCloud Disk

- AWS Elastic Block Store (EBS)

- AWS Elastic File Store (EFS)

- Azure Disk

- Azure File

- Cinder

- Fibre Channel

- GCP Persistent Disk

- GCP Filestore

- IBM Power Virtual Server Block

- IBM® VPC Block

- HostPath

- iSCSI

- Local volume

- LVM Storage

- NFS

- OpenStack Manila

- Red Hat OpenShift Data Foundation

- VMware vSphere

3.3.2. Capacity

Generally, a persistent volume (PV) has a specific storage capacity. This is set by using the capacity attribute of the PV.

Currently, storage capacity is the only resource that can be set or requested. Future attributes may include IOPS, throughput, and so on.

3.3.3. Access modes

A persistent volume can be mounted on a host in any way supported by the resource provider. Providers have different capabilities and each PV’s access modes are set to the specific modes supported by that particular volume. For example, NFS can support multiple read-write clients, but a specific NFS PV might be exported on the server as read-only. Each PV gets its own set of access modes describing that specific PV’s capabilities.

Claims are matched to volumes with similar access modes. The only two matching criteria are access modes and size. A claim’s access modes represent a request. Therefore, you might be granted more, but never less. For example, if a claim requests RWO, but the only volume available is an NFS PV (RWO+ROX+RWX), the claim would then match NFS because it supports RWO.

Direct matches are always attempted first. The volume’s modes must match or contain more modes than you requested. The size must be greater than or equal to what is expected. If two types of volumes, such as NFS and iSCSI, have the same set of access modes, either of them can match a claim with those modes. There is no ordering between types of volumes and no way to choose one type over another.

All volumes with the same modes are grouped, and then sorted by size, smallest to largest. The binder gets the group with matching modes and iterates over each, in size order, until one size matches.

Volume access modes describe volume capabilities. They are not enforced constraints. The storage provider is responsible for runtime errors resulting from invalid use of the resource. Errors in the provider show up at runtime as mount errors.

For example, NFS offers ReadWriteOnce access mode. If you want to use the volume’s ROX capability, mark the claims as ReadOnlyMany.

iSCSI and Fibre Channel volumes do not currently have any fencing mechanisms. You must ensure the volumes are only used by one node at a time. In certain situations, such as draining a node, the volumes can be used simultaneously by two nodes. Before draining the node, delete the pods that use the volumes.

The following table lists the access modes:

| Access Mode | CLI abbreviation | Description |
|---|---|---|
| ReadWriteOnce | RWO | The volume can be mounted as read-write by a single node. |
| ReadWriteOncePod [1] | RWOP | The volume can be mounted as read-write by a single pod on a single node. |
| ReadOnlyMany | ROX | The volume can be mounted as read-only by many nodes. |
| ReadWriteMany | RWX | The volume can be mounted as read-write by many nodes. |

[1] ReadWriteOncePod access mode for persistent volumes is a Technology Preview feature.

ReadWriteOncePod access mode for persistent volumes is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

| Volume plugin | ReadWriteOnce [1] | ReadWriteOncePod [2] | ReadOnlyMany | ReadWriteMany |
|---|---|---|---|---|
| AliCloud Disk | ✅ | ✅ | - | - |
| AWS EBS [3] | ✅ | ✅ | - | - |
| AWS EFS | ✅ | ✅ | ✅ | ✅ |
| Azure File | ✅ | ✅ | ✅ | ✅ |
| Azure Disk | ✅ | ✅ | - | - |
| Cinder | ✅ | ✅ | - | - |
| Fibre Channel | ✅ | ✅ | ✅ | ✅ [4] |
| GCP Persistent Disk | ✅ | ✅ | - | - |
| GCP Filestore | ✅ | ✅ | ✅ | ✅ |
| HostPath | ✅ | ✅ | - | - |
| IBM Power Virtual Server Disk | ✅ | ✅ | ✅ | ✅ |
| IBM® VPC Disk | ✅ | ✅ | - | - |
| iSCSI | ✅ | ✅ | ✅ | ✅ [4] |
| Local volume | ✅ | ✅ | - | - |
| LVM Storage | ✅ | ✅ | - | - |
| NFS | ✅ | ✅ | ✅ | ✅ |
| OpenStack Manila | - | ✅ | - | ✅ |
| Red Hat OpenShift Data Foundation | ✅ | ✅ | - | ✅ |
| VMware vSphere | ✅ | ✅ | - | ✅ [5] |

[1] ReadWriteOnce (RWO) volumes cannot be mounted on multiple nodes. If a node fails, the system does not allow the attached RWO volume to be mounted on a new node because it is already assigned to the failed node. If you encounter a multi-attach error message as a result, force delete the pod on a shutdown or crashed node to avoid data loss in critical workloads, such as when dynamic persistent volumes are attached.

[2] ReadWriteOncePod is a Technology Preview feature.

[3] Use a recreate deployment strategy for pods that rely on AWS EBS.

[4] Only raw block volumes support the ReadWriteMany (RWX) access mode for Fibre Channel and iSCSI. For more information, see "Block volume support".

[5] If the underlying vSphere environment supports the vSAN file service, then the vSphere Container Storage Interface (CSI) Driver Operator installed by OpenShift Container Platform supports provisioning of ReadWriteMany (RWX) volumes. If you do not have vSAN file service configured, and you request RWX, the volume fails to get created and an error is logged. For more information, see "Using Container Storage Interface" → "VMware vSphere CSI Driver Operator".

3.3.4. Phase

Volumes can be found in one of the following phases:

| Phase | Description |

|---|---|

| Available | A free resource not yet bound to a claim. |

| Bound | The volume is bound to a claim. |

| Released | The claim was deleted, but the resource is not yet reclaimed by the cluster. |

| Failed | The volume has failed its automatic reclamation. |

You can view the name of the PVC that is bound to the PV by running the following command:

    $ oc get pv <pv_claim>

3.3.4.1. Mount options

You can specify mount options while mounting a PV by using the attribute mountOptions.

For example:

Mount options example
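The listing for this example is not included in this text. A sketch of a PV that sets mountOptions might look like the following. The NFS backend, server address, export path, and the specific option shown are assumptions chosen for illustration, and NFS is one of the PV types that this section lists as supporting mount options.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                  # hypothetical name
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  mountOptions:                 # (1) options applied when the PV is mounted
    - nfsvers=4.1               # assumed option: request NFS protocol version 4.1
  nfs:
    server: 192.0.2.10          # hypothetical address from a documentation range
    path: /exports/data         # hypothetical export path
  persistentVolumeReclaimPolicy: Retain
```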

- 1

- Specified mount options are used while mounting the PV to the disk.

The following PV types support mount options:

- AWS Elastic Block Store (EBS)

- Azure Disk

- Azure File

- Cinder

- GCE Persistent Disk

- iSCSI

- Local volume

- NFS

- Red Hat OpenShift Data Foundation (Ceph RBD only)

- VMware vSphere

Fibre Channel and HostPath PVs do not support mount options.

3.3.5. Persistent volume claims

Each PersistentVolumeClaim object contains spec and status fields, which are the specification and status of the persistent volume claim (PVC), for example:

PersistentVolumeClaim object definition example
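The object definition is missing from this text. A minimal sketch of a PersistentVolumeClaim follows. The claim name, requested size, and storage class name are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim                 # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce             # requested access mode
  resources:
    requests:
      storage: 8Gi              # requested capacity
  storageClassName: gold        # hypothetical storage class, optional
# The status section is filled in by the cluster after the claim is created.
```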

3.3.6. Storage classes

Claims can optionally request a specific storage class by specifying the storage class’s name in the storageClassName attribute. Only PVs of the requested class, ones with the same storageClassName as the PVC, can be bound to the PVC. The cluster administrator can configure dynamic provisioners to service one or more storage classes. The cluster administrator can create a PV on-demand that matches the specifications in the PVC.

The Cluster Storage Operator might install a default storage class depending on the platform in use. This storage class is owned and controlled by the Operator. It cannot be deleted or modified beyond defining annotations and labels. If different behavior is desired, you must define a custom storage class.

The cluster administrator can also set a default storage class for all PVCs. When a default storage class is configured, the PVC must explicitly ask for StorageClass or storageClassName annotations set to "" to be bound to a PV without a storage class.

If more than one storage class is marked as default, a PVC can only be created if the storageClassName is explicitly specified. Therefore, only one storage class should be set as the default.

3.3.7. Access modes

Claims use the same conventions as volumes when requesting storage with specific access modes.

3.3.8. Resources

Claims, like pods, can request specific quantities of a resource. In this case, the request is for storage. The same resource model applies to volumes and claims.

3.3.9. Claims as volumes

Pods access storage by using the claim as a volume. Claims must exist in the same namespace as the pod using the claim. The cluster finds the claim in the pod’s namespace and uses it to get the PersistentVolume backing the claim. The volume is mounted to the host and into the pod, for example:

Mount volume to the host and into the pod example

- 1

- Path to mount the volume inside the pod.

- 2

- Name of the volume to mount. Do not mount to the container root, /, or any path that is the same in the host and the container. This can corrupt your host system if the container is sufficiently privileged, such as the host /dev/pts files. It is safe to mount the host by using /host.

- 3

- Name of the PVC, that exists in the same namespace, to use.
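The three callouts above map onto a pod specification along the following lines. This is a sketch only: the pod name, container image, mount path, volume name, and claim name are hypothetical.

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: mypod
spec:
  containers:
    - name: myfrontend
      image: registry.example.com/myapp:latest   # hypothetical image
      volumeMounts:
        - mountPath: "/var/www/html"   # (1) path to mount the volume inside the pod
          name: mypd                   # (2) name of the volume to mount
  volumes:
    - name: mypd
      persistentVolumeClaim:
        claimName: myclaim             # (3) PVC in the same namespace as the pod
```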

3.4. Block volume support

OpenShift Container Platform can statically provision raw block volumes. These volumes do not have a file system, and can provide performance benefits for applications that either write to the disk directly or implement their own storage service.

Raw block volumes are provisioned by specifying volumeMode: Block in the PV and PVC specification.

Pods using raw block volumes must be configured to allow privileged containers.

The following table displays which volume plugins support block volumes.

| Volume Plugin | Manually provisioned | Dynamically provisioned | Fully supported |
|---|---|---|---|
| Amazon Elastic Block Store (Amazon EBS) | ✅ | ✅ | ✅ |
| Amazon Elastic File Storage (Amazon EFS) | | | |
| AliCloud Disk | ✅ | ✅ | ✅ |
| Azure Disk | ✅ | ✅ | ✅ |
| Azure File | | | |
| Cinder | ✅ | ✅ | ✅ |
| Fibre Channel | ✅ | ✅ | |
| GCP | ✅ | ✅ | ✅ |
| HostPath | | | |
| IBM VPC Disk | ✅ | ✅ | ✅ |
| iSCSI | ✅ | ✅ | |
| Local volume | ✅ | ✅ | |
| LVM Storage | ✅ | ✅ | ✅ |
| NFS | | | |
| Red Hat OpenShift Data Foundation | ✅ | ✅ | ✅ |
| VMware vSphere | ✅ | ✅ | ✅ |

Using any of the block volumes that can be provisioned manually, but are not provided as fully supported, is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

3.4.1. Block volume examples

PV example

- 1

- volumeMode must be set to Block to indicate that this PV is a raw block volume.

PVC example

- 1

- volumeMode must be set to Block to indicate that a raw block PVC is requested.

Pod specification example

- 1

- volumeDevices, instead of volumeMounts, is used for block devices. Only PersistentVolumeClaim sources can be used with raw block volumes.

- 2

- devicePath, instead of mountPath, represents the path to the physical device where the raw block is mapped to the system.

- 3

- The volume source must be of type persistentVolumeClaim and must match the name of the PVC as expected.
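The PV, PVC, and pod descriptions above can be sketched as the following three manifests. All names, sizes, the Fibre Channel WWN and LUN, the container image, and the device path are illustrative assumptions; only the volumeMode, volumeDevices, devicePath, and persistentVolumeClaim fields come from the text.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: block-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  volumeMode: Block                      # marks this PV as a raw block volume
  persistentVolumeReclaimPolicy: Retain
  fc:
    targetWWNs: ["50060e801049cfd1"]     # placeholder WWN
    lun: 0
    readOnly: false
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: block-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block                      # requests a raw block volume
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-block-volume
spec:
  containers:
    - name: fc-container
      image: registry.example.com/tools:latest   # hypothetical image
      command: ["/bin/sh", "-c"]
      args: ["tail -f /dev/null"]
      volumeDevices:                     # used instead of volumeMounts
        - name: data
          devicePath: /dev/xvda          # used instead of mountPath
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: block-pvc             # must match the PVC name
```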

| Value | Default |
|---|---|
| Filesystem | Yes |
| Block | No |

| PV volumeMode | PVC volumeMode | Binding result |
|---|---|---|
| Filesystem | Filesystem | Bind |
| Unspecified | Unspecified | Bind |
| Filesystem | Unspecified | Bind |
| Unspecified | Filesystem | Bind |
| Block | Block | Bind |
| Unspecified | Block | No Bind |
| Block | Unspecified | No Bind |
| Filesystem | Block | No Bind |
| Block | Filesystem | No Bind |

Unspecified values result in the default value of Filesystem.

3.5. Using fsGroup to reduce pod timeouts

If a storage volume contains many files (~1,000,000 or greater), you may experience pod timeouts.

This can occur because, by default, OpenShift Container Platform recursively changes ownership and permissions for the contents of each volume to match the fsGroup specified in a pod’s securityContext when that volume is mounted. For large volumes, checking and changing ownership and permissions can be time consuming, slowing pod startup. You can use the fsGroupChangePolicy field inside a securityContext to control the way that OpenShift Container Platform checks and manages ownership and permissions for a volume.

fsGroupChangePolicy defines behavior for changing ownership and permission of the volume before being exposed inside a pod. This field only applies to volume types that support fsGroup-controlled ownership and permissions. This field has two possible values:

- OnRootMismatch: Only change permissions and ownership if the permissions and ownership of the root directory do not match the expected permissions of the volume. This can help shorten the time it takes to change ownership and permissions of a volume, which reduces pod timeouts.

- Always: Always change permissions and ownership of the volume when the volume is mounted.

fsGroupChangePolicy example

- 1

- OnRootMismatch specifies skipping recursive permission change, thus helping to avoid pod timeout problems.
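A minimal sketch of a pod that sets this policy follows. The pod name, image, claim name, and the numeric user and group IDs are placeholders; fsGroupChangePolicy sits in the pod-level securityContext next to fsGroup.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: fsgroup-policy-example           # placeholder name
spec:
  securityContext:
    runAsUser: 1000                      # placeholder IDs
    runAsGroup: 3000
    fsGroup: 2000
    fsGroupChangePolicy: "OnRootMismatch"   # skip the recursive change when the root directory already matches
  containers:
    - name: app
      image: registry.example.com/myapp:latest   # hypothetical image
      volumeMounts:
        - mountPath: /data
          name: data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: myclaim               # placeholder PVC name
```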

The fsGroupChangePolicy field has no effect on ephemeral volume types, such as secret, configMap, and emptyDir.

Chapter 4. Configuring persistent storage

4.1. Persistent storage using AWS Elastic Block Store

OpenShift Container Platform supports Amazon Elastic Block Store (EBS) volumes. You can provision your OpenShift Container Platform cluster with persistent storage by using Amazon EC2.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure. You can dynamically provision Amazon EBS volumes. Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims are specific to a project or namespace and can be requested by users. You can define a KMS key to encrypt container-persistent volumes on AWS. By default, newly created clusters using OpenShift Container Platform version 4.10 and later use gp3 storage and the AWS EBS CSI driver.

High-availability of storage in the infrastructure is left to the underlying storage provider.

OpenShift Container Platform 4.12 and later provides automatic migration for the AWS Block in-tree volume plugin to its equivalent CSI driver.

CSI automatic migration should be seamless. Migration does not change how you use all existing API objects, such as persistent volumes, persistent volume claims, and storage classes. For more information about migration, see CSI automatic migration.

4.1.1. Creating the EBS storage class

Storage classes are used to differentiate and delineate storage levels and usages. By defining a storage class, users can obtain dynamically provisioned persistent volumes.
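For orientation, a storage class for the AWS EBS CSI driver might look like the following sketch. The class name and the binding, expansion, and reclaim settings are assumptions for illustration; the gp3 volume type reflects the default mentioned earlier in this section.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp3-example                      # placeholder name
provisioner: ebs.csi.aws.com             # AWS EBS CSI driver
parameters:
  type: gp3                              # EBS volume type
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer  # delay provisioning until a pod uses the claim
allowVolumeExpansion: true
```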

4.1.2. Creating the persistent volume claim

Prerequisites

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform.

Procedure

- In the OpenShift Container Platform console, click Storage → Persistent Volume Claims.

- In the persistent volume claims overview, click Create Persistent Volume Claim.

Define the desired options on the page that appears.

- Select the previously-created storage class from the drop-down menu.

- Enter a unique name for the storage claim.

- Select the access mode. This selection determines the read and write access for the storage claim.

- Define the size of the storage claim.

- Click Create to create the persistent volume claim and generate a persistent volume.

4.1.3. Volume format

Before OpenShift Container Platform mounts the volume and passes it to a container, it checks that the volume contains a file system as specified by the fsType parameter in the persistent volume definition. If the device is not formatted with the file system, all data from the device is erased and the device is automatically formatted with the given file system.

This verification enables you to use unformatted AWS volumes as persistent volumes, because OpenShift Container Platform formats them before the first use.

4.1.4. Maximum number of EBS volumes on a node

By default, OpenShift Container Platform supports a maximum of 39 EBS volumes attached to one node. This limit is consistent with the AWS volume limits. The volume limit depends on the instance type.

As a cluster administrator, you must use either in-tree or Container Storage Interface (CSI) volumes and their respective storage classes, but never both volume types at the same time. The maximum attached EBS volume number is counted separately for in-tree and CSI volumes, which means you could have up to 39 EBS volumes of each type.

For information about accessing additional storage options, such as volume snapshots, that are not possible with in-tree volume plug-ins, see AWS Elastic Block Store CSI Driver Operator.

4.1.5. Encrypting container persistent volumes on AWS with a KMS key

Defining a KMS key to encrypt container-persistent volumes on AWS is useful when you have explicit compliance and security guidelines when deploying to AWS.

Prerequisites

- Underlying infrastructure must contain storage.

- You must create a customer KMS key on AWS.

Procedure

Create a storage class:

- 1

- Specifies the name of the storage class.

- 2

- File system that is created on provisioned volumes.

- 3

- Specifies the full Amazon Resource Name (ARN) of the key to use when encrypting the container-persistent volume. If you do not provide any key, but the encrypted field is set to true, then the default KMS key is used. See Finding the key ID and key ARN on AWS in the AWS documentation.

Create a persistent volume claim (PVC) with the storage class specifying the KMS key:

Create workload containers to consume the PVC:
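The three objects in this procedure can be sketched as follows. Treat this as an assumption-laden outline: the object names, the container image, the sizes, and the ARN value are placeholders, and the fsType, encrypted, and kmsKeyId parameters correspond to the callouts described above.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-kms-sc                       # (1) placeholder storage class name
parameters:
  fsType: ext4                           # (2) file system created on provisioned volumes
  encrypted: "true"
  kmsKeyId: "<full_ARN_of_your_KMS_key>" # (3) placeholder; omit to use the default KMS key
provisioner: ebs.csi.aws.com
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  storageClassName: ebs-kms-sc           # the storage class that specifies the KMS key
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
    - name: httpd
      image: registry.example.com/httpd:latest   # hypothetical image
      ports:
        - containerPort: 80
      volumeMounts:
        - mountPath: /mnt/storage
          name: data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: mypvc
```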

4.2. Persistent storage using Azure

OpenShift Container Platform supports Microsoft Azure Disk volumes. You can provision your OpenShift Container Platform cluster with persistent storage using Azure. Some familiarity with Kubernetes and Azure is assumed. The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure. Azure Disk volumes can be provisioned dynamically. Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims are specific to a project or namespace and can be requested by users.

OpenShift Container Platform 4.11 and later provides automatic migration for the Azure Disk in-tree volume plugin to its equivalent CSI driver.

CSI automatic migration should be seamless. Migration does not change how you use all existing API objects, such as persistent volumes, persistent volume claims, and storage classes. For more information about migration, see CSI automatic migration.

High availability of storage in the infrastructure is left to the underlying storage provider.

4.2.1. Creating the Azure storage class

Storage classes are used to differentiate and delineate storage levels and usages. By defining a storage class, users can obtain dynamically provisioned persistent volumes.

Procedure

- In the OpenShift Container Platform console, click Storage → Storage Classes.

- In the storage class overview, click Create Storage Class.

Define the desired options on the page that appears.

- Enter a name to reference the storage class.

- Enter an optional description.

- Select the reclaim policy.

- Select kubernetes.io/azure-disk from the drop-down list.

- Enter the storage account type. This corresponds to your Azure storage account SKU tier. Valid options are Premium_LRS, PremiumV2_LRS, Standard_LRS, StandardSSD_LRS, and UltraSSD_LRS.

Important

The skuname PremiumV2_LRS is not supported in all regions, and in some supported regions, not all of the availability zones are supported. For more information, see Azure doc.

- Enter the kind of account. Valid options are shared, dedicated, and managed.

Important

Red Hat only supports the use of kind: Managed in the storage class.

With Shared and Dedicated, Azure creates unmanaged disks, while OpenShift Container Platform creates a managed disk for machine OS (root) disks. But because Azure Disk does not allow the use of both managed and unmanaged disks on a node, unmanaged disks created with Shared or Dedicated cannot be attached to OpenShift Container Platform nodes.

- Enter additional parameters for the storage class as desired.

- Click Create to create the storage class.

4.2.2. Creating the persistent volume claim

Prerequisites

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform.

Procedure

- In the OpenShift Container Platform console, click Storage → Persistent Volume Claims.

- In the persistent volume claims overview, click Create Persistent Volume Claim.

Define the desired options on the page that appears.

- Select the previously-created storage class from the drop-down menu.

- Enter a unique name for the storage claim.

- Select the access mode. This selection determines the read and write access for the storage claim.

- Define the size of the storage claim.

- Click Create to create the persistent volume claim and generate a persistent volume.

4.2.3. Volume format

Before OpenShift Container Platform mounts the volume and passes it to a container, it checks that it contains a file system as specified by the fsType parameter in the persistent volume definition. If the device is not formatted with the file system, all data from the device is erased and the device is automatically formatted with the given file system.

This allows using unformatted Azure volumes as persistent volumes, because OpenShift Container Platform formats them before the first use.

4.2.4. Machine sets that deploy machines with ultra disks using PVCs

You can create a machine set running on Azure that deploys machines with ultra disks. Ultra disks are high-performance storage that are intended for use with the most demanding data workloads.

Both the in-tree plugin and CSI driver support using PVCs to enable ultra disks. You can also deploy machines with ultra disks as data disks without creating a PVC.

4.2.4.1. Creating machines with ultra disks by using machine sets

You can deploy machines with ultra disks on Azure by editing your machine set YAML file.

Prerequisites

- Have an existing Microsoft Azure cluster.

Procedure

Copy an existing Azure MachineSet custom resource (CR) and edit it by running the following command:

$ oc edit machineset <machine_set_name>

where <machine_set_name> is the machine set that you want to provision machines with ultra disks.

Add the following lines in the positions indicated:

Create a machine set using the updated configuration by running the following command:

$ oc create -f <machine_set_name>.yaml

Create a storage class that contains the following YAML definition:

- 1

- Specify the name of the storage class. This procedure uses ultra-disk-sc for this value.

- 2

- Specify the number of IOPS for the storage class.

- 3

- Specify the throughput in MBps for the storage class.

- 4

- For Azure Kubernetes Service (AKS) version 1.21 or later, use disk.csi.azure.com. For earlier versions of AKS, use kubernetes.io/azure-disk.

- 5

- Optional: Specify this parameter to wait for the creation of the pod that will use the disk.

Create a persistent volume claim (PVC) to reference the ultra-disk-sc storage class that contains the following YAML definition:

Create a pod that contains the following YAML definition:
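The storage class, PVC, and pod from this procedure can be sketched as follows. The IOPS and throughput figures, the PVC and pod names, the image, the mount path, and the disk: ultrassd node label are illustrative assumptions; the node selector must match whatever label your machine set applies to ultra-disk-capable nodes.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ultra-disk-sc                    # (1) name used throughout this procedure
parameters:
  cachingMode: None
  diskIopsReadWrite: "2000"              # (2) placeholder IOPS value
  diskMbpsReadWrite: "320"               # (3) placeholder throughput in MBps
  kind: managed
  skuname: UltraSSD_LRS
provisioner: disk.csi.azure.com          # (4) CSI provisioner
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer  # (5) optional: wait for the consuming pod
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ultra-disk                       # placeholder PVC name
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ultra-disk-sc
  resources:
    requests:
      storage: 4Gi                       # placeholder size
---
apiVersion: v1
kind: Pod
metadata:
  name: ultra-disk-pod                   # placeholder pod name
spec:
  nodeSelector:
    disk: ultrassd                       # hypothetical label set by the machine set
  containers:
    - name: app
      image: registry.example.com/tools:latest   # hypothetical image
      command: ["sleep", "infinity"]
      volumeMounts:
        - mountPath: "/mnt/azure"
          name: volume
  volumes:
    - name: volume
      persistentVolumeClaim:
        claimName: ultra-disk
```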

Verification

Validate that the machines are created by running the following command:

$ oc get machines

The machines should be in the Running state.

For a machine that is running and has a node attached, validate the partition by running the following command:

$ oc debug node/<node_name> -- chroot /host lsblk

In this command, oc debug node/<node_name> starts a debugging shell on the node <node_name> and passes a command with --. The passed command chroot /host provides access to the underlying host OS binaries, and lsblk shows the block devices that are attached to the host OS machine.

Next steps

To use an ultra disk from within a pod, create a workload that uses the mount point. Create a YAML file similar to the following example:


4.2.4.2. Troubleshooting resources for machine sets that enable ultra disks

Use the information in this section to understand and recover from issues you might encounter.

4.2.4.2.1. Unable to mount a persistent volume claim backed by an ultra disk

If there is an issue mounting a persistent volume claim backed by an ultra disk, the pod becomes stuck in the ContainerCreating state and an alert is triggered.

For example, if the additionalCapabilities.ultraSSDEnabled parameter is not set on the machine that backs the node that hosts the pod, the following error message appears:

StorageAccountType UltraSSD_LRS can be used only when additionalCapabilities.ultraSSDEnabled is set.

To resolve this issue, describe the pod by running the following command:

$ oc -n <stuck_pod_namespace> describe pod <stuck_pod_name>

4.3. Persistent storage using Azure File

OpenShift Container Platform supports Microsoft Azure File volumes. You can provision your OpenShift Container Platform cluster with persistent storage using Azure. Some familiarity with Kubernetes and Azure is assumed.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure. You can provision Azure File volumes dynamically.

Persistent volumes are not bound to a single project or namespace, and you can share them across the OpenShift Container Platform cluster. Persistent volume claims are specific to a project or namespace, and can be requested by users for use in applications.

High availability of storage in the infrastructure is left to the underlying storage provider.

Azure File volumes use Server Message Block.

OpenShift Container Platform 4.13 and later provides automatic migration for the Azure File in-tree volume plugin to its equivalent CSI driver.

CSI automatic migration should be seamless. Migration does not change how you use all existing API objects, such as persistent volumes, persistent volume claims, and storage classes. For more information about migration, see CSI automatic migration.


4.3.1. Create the Azure File share persistent volume claim

To create the persistent volume claim, you must first define a Secret object that contains the Azure account and key. This secret is used in the PersistentVolume definition, and will be referenced by the persistent volume claim for use in applications.

Prerequisites

- An Azure File share exists.

- The credentials to access this share, specifically the storage account and key, are available.

Procedure

Create a Secret object that contains the Azure File credentials:

$ oc create secret generic <secret-name> --from-literal=azurestorageaccountname=<storage-account> \
    --from-literal=azurestorageaccountkey=<storage-account-key>

Create a PersistentVolume object that references the Secret object you created:

Create a PersistentVolumeClaim object that maps to the persistent volume you created:

- 1

- The name of the persistent volume claim.

- 2

- The size of this persistent volume claim.

- 3

- The name of the storage class that is used to provision the persistent volume. Specify the storage class used in the PersistentVolume definition.

- 4

- The name of the existing PersistentVolume object that references the Azure File share.
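The PersistentVolume and PersistentVolumeClaim objects described in this procedure can be sketched as follows. The object names, share name, storage class name, and sizes are placeholders, and azure-file-secret stands in for the name of the Secret object created in the first step.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                           # placeholder PV name
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: azure-file-sc        # placeholder storage class name
  azureFile:
    secretName: azure-file-secret        # Secret that holds the account name and key
    shareName: share-1                   # placeholder Azure File share name
    readOnly: false
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: claim1                           # (1) name of the claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi                       # (2) size of the claim
  storageClassName: azure-file-sc        # (3) same storage class as the PV
  volumeName: pv0001                     # (4) existing PV that references the share
```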

4.3.2. Mount the Azure File share in a pod

After the persistent volume claim has been created, it can be used by an application. The following example demonstrates mounting this share inside a pod.

Prerequisites

- A persistent volume claim exists that is mapped to the underlying Azure File share.

Procedure

Create a pod that mounts the existing persistent volume claim:

- 1

- The name of the pod.

- 2

- The path to mount the Azure File share inside the pod. Do not mount to the container root, /, or any path that is the same in the host and the container. This can corrupt your host system if the container is sufficiently privileged, such as the host /dev/pts files. It is safe to mount the host by using /host.

- 3

- The name of the PersistentVolumeClaim object that has been previously created.
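A pod matching these callouts might look like the following sketch. The pod name, image, mount path, volume name, and claim name are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-name                         # (1) name of the pod
spec:
  containers:
    - name: app
      image: registry.example.com/myapp:latest   # hypothetical image
      volumeMounts:
        - mountPath: "/data"             # (2) where the share is mounted in the pod
          name: azure-file-share
  volumes:
    - name: azure-file-share
      persistentVolumeClaim:
        claimName: claim1                # (3) previously created PVC
```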

4.4. Persistent storage using Cinder

OpenShift Container Platform supports OpenStack Cinder. Some familiarity with Kubernetes and OpenStack is assumed.

Cinder volumes can be provisioned dynamically. Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims are specific to a project or namespace and can be requested by users.

OpenShift Container Platform 4.11 and later provides automatic migration for the Cinder in-tree volume plugin to its equivalent CSI driver.

CSI automatic migration should be seamless. Migration does not change how you use all existing API objects, such as persistent volumes, persistent volume claims, and storage classes. For more information about migration, see CSI automatic migration.

4.4.1. Manual provisioning with Cinder

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform.

Prerequisites

- OpenShift Container Platform configured for Red Hat OpenStack Platform (RHOSP)

- Cinder volume ID

4.4.1.1. Creating the persistent volume

You must define your persistent volume (PV) in an object definition before creating it in OpenShift Container Platform:

Procedure

Save your object definition to a file.

cinder-persistentvolume.yaml

- 1

- The name of the volume that is used by persistent volume claims or pods.

- 2

- The amount of storage allocated to this volume.

- 3

- Indicates cinder for Red Hat OpenStack Platform (RHOSP) Cinder volumes.

- 4

- The file system that is created when the volume is mounted for the first time.

- 5

- The Cinder volume to use.

Important

Do not change the fstype parameter value after the volume is formatted and provisioned. Changing this value can result in data loss and pod failure.

Create the object from the definition file that you saved in the previous step.

$ oc create -f cinder-persistentvolume.yaml
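A sketch of what cinder-persistentvolume.yaml could contain, following the five callouts above. The PV name, capacity, file system type, and volume ID are placeholders; substitute the ID of your own Cinder volume.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                           # (1) name used by claims or pods
spec:
  capacity:
    storage: 5Gi                         # (2) storage allocated to this volume
  accessModes:
    - ReadWriteOnce
  cinder:                                # (3) RHOSP Cinder volume source
    fsType: ext3                         # (4) file system created on first mount
    volumeID: "<cinder_volume_id>"       # (5) placeholder for the Cinder volume ID
```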

4.4.1.2. Persistent volume formatting

You can use unformatted Cinder volumes as PVs because OpenShift Container Platform formats them before the first use.

Before OpenShift Container Platform mounts the volume and passes it to a container, the system checks that it contains a file system as specified by the fsType parameter in the PV definition. If the device is not formatted with the file system, all data from the device is erased and the device is automatically formatted with the given file system.

4.4.1.3. Cinder volume security

If you use Cinder PVs in your application, configure security for their deployment configurations.

Prerequisites

- An SCC must be created that uses the appropriate fsGroup strategy.

Procedure

Create a service account and add it to the SCC:

$ oc create serviceaccount <service_account>

$ oc adm policy add-scc-to-user <new_scc> -z <service_account> -n <project>

In your application’s deployment configuration, provide the service account name and securityContext:

- 1

- The number of copies of the pod to run.

- 2

- The label selector of the pod to run.

- 3

- A template for the pod that the controller creates.

- 4

- The labels on the pod. They must include labels from the label selector.

- 5

- The maximum name length after expanding any parameters is 63 characters.

- 6

- Specifies the service account you created.

- 7

- Specifies an fsGroup for the pods.
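The seven callouts above describe a controller manifest along these lines. This sketch assumes a ReplicationController; the object name, labels, image, port, service account name, and fsGroup value are all placeholders.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: frontend-1
spec:
  replicas: 1                            # (1) number of pod copies to run
  selector:                              # (2) label selector of the pod to run
    name: frontend
  template:                              # (3) template for pods the controller creates
    metadata:
      labels:                            # (4) must include the selector labels
        name: frontend                   # (5) at most 63 characters after parameter expansion
    spec:
      containers:
        - name: helloworld
          image: registry.example.com/hello:latest   # hypothetical image
          ports:
            - containerPort: 8080
              protocol: TCP
      restartPolicy: Always
      serviceAccountName: cinder-sa      # (6) placeholder for the service account you created
      securityContext:
        fsGroup: 7777                    # (7) placeholder fsGroup for the pods
```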

4.5. Persistent storage using Fibre Channel

OpenShift Container Platform supports Fibre Channel, allowing you to provision your OpenShift Container Platform cluster with persistent storage using Fibre Channel volumes. Some familiarity with Kubernetes and Fibre Channel is assumed.

Persistent storage using Fibre Channel is not supported on ARM architecture based infrastructures.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure. Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims are specific to a project or namespace and can be requested by users.

High availability of storage in the infrastructure is left to the underlying storage provider.

4.5.1. Provisioning

To provision Fibre Channel volumes using the PersistentVolume API, the following must be available:

- The targetWWNs (array of Fibre Channel target’s World Wide Names).

- A valid LUN number.

- The filesystem type.

A persistent volume and a LUN have a one-to-one mapping between them.

Prerequisites

- Fibre Channel LUNs must exist in the underlying infrastructure.

PersistentVolume object definition

- 1

- World wide identifiers (WWIDs). Either FC wwids or a combination of FC targetWWNs and lun must be set, but not both simultaneously. The FC WWID identifier is recommended over the WWNs target because it is guaranteed to be unique for every storage device, and independent of the path that is used to access the device. The WWID identifier can be obtained by issuing a SCSI Inquiry to retrieve the Device Identification Vital Product Data (page 0x83) or Unit Serial Number (page 0x80). FC WWIDs are identified as /dev/disk/by-id/ to reference the data on the disk, even if the path to the device changes and even when accessing the device from different systems.

- 2 3

- Fibre Channel WWNs are identified as /dev/disk/by-path/pci-<IDENTIFIER>-fc-0x<WWN>-lun-<LUN#>, but you do not need to provide any part of the path leading up to the WWN, including the 0x, and anything after, including the - (hyphen).

Changing the value of the fstype parameter after the volume has been formatted and provisioned can result in data loss and pod failure.
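A Fibre Channel PersistentVolume definition consistent with the callouts above might look like the following sketch. The PV name, capacity, WWNs, LUN number, and file system type are placeholders. The sketch uses the targetWWNs and lun combination; to identify the device by WWID instead, replace those two fields with a wwids list, because the two forms must not be set together.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                           # placeholder name
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  fc:
    # Alternative to targetWWNs + lun (do not set both):
    # wwids: ["<wwid>"]
    targetWWNs: ["500a0981891b8dc5", "500a0981991b8dc5"]   # placeholder WWNs, without the 0x prefix
    lun: 2                               # placeholder LUN number
    fsType: ext4                         # do not change after the volume is formatted
```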

4.5.1.1. Enforcing disk quotas

Use LUN partitions to enforce disk quotas and size constraints. Each LUN is mapped to a single persistent volume, and unique names must be used for persistent volumes.

Enforcing quotas in this way allows the end user to request persistent storage by a specific amount, such as 10Gi, and be matched with a corresponding volume of equal or greater capacity.

4.5.1.2. Fibre Channel volume security

Users request storage with a persistent volume claim. This claim only lives in the user’s namespace, and can only be referenced by a pod within that same namespace. Any attempt to access a persistent volume across a namespace causes the pod to fail.

Each Fibre Channel LUN must be accessible by all nodes in the cluster.

4.6. Persistent storage using FlexVolume

FlexVolume is a deprecated feature. Deprecated functionality is still included in OpenShift Container Platform and continues to be supported; however, it will be removed in a future release of this product and is not recommended for new deployments.

An out-of-tree Container Storage Interface (CSI) driver is the recommended way to write volume drivers in OpenShift Container Platform. Maintainers of FlexVolume drivers should implement a CSI driver and move users of FlexVolume to CSI. Users of FlexVolume should move their workloads to a CSI driver.

For the most recent list of major functionality that has been deprecated or removed within OpenShift Container Platform, refer to the Deprecated and removed features section of the OpenShift Container Platform release notes.

OpenShift Container Platform supports FlexVolume, an out-of-tree plugin that uses an executable model to interface with drivers.

To use storage from a back-end that does not have a built-in plugin, you can extend OpenShift Container Platform through FlexVolume drivers and provide persistent storage to applications.

Pods interact with FlexVolume drivers through the flexvolume in-tree plugin.

4.6.1. About FlexVolume drivers

A FlexVolume driver is an executable file that resides in a well-defined directory on all nodes in the cluster. OpenShift Container Platform calls the FlexVolume driver whenever it needs to mount or unmount a volume represented by a PersistentVolume object with flexVolume as the source.

Attach and detach operations are not supported in OpenShift Container Platform for FlexVolume.

4.6.2. FlexVolume driver example

The first command-line argument of the FlexVolume driver is always an operation name. Other parameters are specific to each operation. Most of the operations take a JavaScript Object Notation (JSON) string as a parameter. This parameter is a complete JSON string, and not the name of a file with the JSON data.

The JSON parameter passed to the FlexVolume driver contains:

- All flexVolume.options.

- Some options from flexVolume prefixed by kubernetes.io/, such as fsType and readwrite.

- The content of the referenced secret, if specified, prefixed by kubernetes.io/secret/.

FlexVolume driver JSON input example

OpenShift Container Platform expects JSON data on standard output of the driver. When not specified, the output describes the result of the operation.

FlexVolume driver default output example

{
  "status": "<Success/Failure/Not supported>",
  "message": "<Reason for success/failure>"
}

The exit code of the driver should be 0 for success and 1 for error.

Operations should be idempotent, which means that the mounting of an already mounted volume should result in a successful operation.

4.6.3. Installing FlexVolume drivers

FlexVolume drivers that are used to extend OpenShift Container Platform are executed only on the node. To implement FlexVolumes, a list of operations to call and the installation path are all that is required.

Prerequisites

FlexVolume drivers must implement these operations:

init

Initializes the driver. It is called during initialization of all nodes.

- Arguments: none

- Executed on: node

- Expected output: default JSON

mount

Mounts a volume to a directory. This can include anything that is necessary to mount the volume, including finding the device and then mounting the device.

- Arguments: <mount-dir> <json>

- Executed on: node

- Expected output: default JSON

unmount

Unmounts a volume from a directory. This can include anything that is necessary to clean up the volume after unmounting.

- Arguments: <mount-dir>

- Executed on: node

- Expected output: default JSON

mountdevice

Mounts a volume's device to a directory where individual pods can then bind mount.

This call-out does not pass "secrets" specified in the FlexVolume spec. If your driver requires secrets, do not implement this call-out.

- Arguments: <mount-dir> <json>

- Executed on: node

- Expected output: default JSON

unmountdevice

Unmounts a volume's device from a directory.

- Arguments: <mount-dir>

- Executed on: node

- Expected output: default JSON

All other operations should return JSON with {"status": "Not supported"} and exit code 1.

Procedure

To install the FlexVolume driver:

- Ensure that the executable file exists on all nodes in the cluster.

- Place the executable file at the volume plugin path: /etc/kubernetes/kubelet-plugins/volume/exec/<vendor>~<driver>/<driver>.

For example, to install the FlexVolume driver for the storage foo, place the executable file at: /etc/kubernetes/kubelet-plugins/volume/exec/openshift.com~foo/foo.

4.6.4. Consuming storage using FlexVolume drivers

Each PersistentVolume object in OpenShift Container Platform represents one storage asset in the storage back-end, such as a volume.

Procedure

- Use the PersistentVolume object to reference the installed storage.

Persistent volume object definition using FlexVolume drivers example
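The following is a minimal sketch of such a definition, not an authoritative listing. All names and values (pv0001, openshift.com/foo, foo-secret, fooServer, fooVolumeName) are hypothetical placeholders, and the numbered comments correspond to the numbered descriptions that follow.

```yaml
# Hypothetical values throughout; adapt them to your driver and back end.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                  # 1
spec:
  capacity:
    storage: 1Gi                # 2
  accessModes:
    - ReadWriteOnce
  flexVolume:
    driver: openshift.com/foo   # 3
    fsType: ext4                # 4
    secretRef:                  # 5
      name: foo-secret
    readOnly: true              # 6
    options:                    # 7
      fooServer: "192.168.0.1:1234"
      fooVolumeName: bar
```

Assuming the behavior described in the FlexVolume driver example section, the JSON string passed to the driver for this volume would carry the two options keys as written, plus the file system type, the read/write flag, and the secret content under their kubernetes.io/ prefixed keys.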

- 1: The name of the volume. This is how it is identified through persistent volume claims or from pods. This name can be different from the name of the volume on back-end storage.

- 2: The amount of storage allocated to this volume.

- 3: The name of the driver. This field is mandatory.

- 4: The file system that is present on the volume. This field is optional.

- 5: The reference to a secret. Keys and values from this secret are provided to the FlexVolume driver on invocation. This field is optional.

- 6: The read-only flag. This field is optional.

- 7: The additional options for the FlexVolume driver. In addition to the flags specified by the user in the options field, the following flags are also passed to the executable:

"fsType":"<FS type>", "readwrite":"<rw>", "secret/key1":"<secret1>" ... "secret/keyN":"<secretN>"

Secrets are passed only to mount or unmount call-outs.

4.7. Persistent storage using GCE Persistent Disk

OpenShift Container Platform supports GCE Persistent Disk volumes (gcePD). You can provision your OpenShift Container Platform cluster with persistent storage using GCE. Some familiarity with Kubernetes and GCE is assumed.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure.

GCE Persistent Disk volumes can be provisioned dynamically.

Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims are specific to a project or namespace and can be requested by users.

OpenShift Container Platform 4.12 and later provides automatic migration for the GCE Persistent Disk in-tree volume plugin to its equivalent CSI driver.

CSI automatic migration should be seamless. Migration does not change how you use all existing API objects, such as persistent volumes, persistent volume claims, and storage classes.

For more information about migration, see CSI automatic migration.

High availability of storage in the infrastructure is left to the underlying storage provider.

4.7.1. Creating the GCE storage class

Storage classes are used to differentiate and delineate storage levels and usages. By defining a storage class, users can obtain dynamically provisioned persistent volumes.
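As an illustration, a storage class for dynamically provisioned GCE Persistent Disk volumes might look like the following sketch. The class name gce-standard and the chosen parameter values are assumptions made for this example; the provisioner shown is the in-tree GCE PD provisioner name.

```yaml
# Sketch only: the name and parameter values are hypothetical choices.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gce-standard
provisioner: kubernetes.io/gce-pd        # in-tree GCE PD provisioner
parameters:
  type: pd-standard                      # disk type; pd-ssd is another option
  replication-type: none                 # zonal (non-regional) disk
volumeBindingMode: WaitForFirstConsumer  # delay provisioning until a pod is scheduled
allowVolumeExpansion: true
reclaimPolicy: Delete
```

Delaying binding until a consuming pod is scheduled is one reasonable choice here, because it lets the disk be created in the same zone as the pod.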

4.7.2. Creating the persistent volume claim

Prerequisites

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform.

Procedure

- In the OpenShift Container Platform console, click Storage → Persistent Volume Claims.

- In the persistent volume claims overview, click Create Persistent Volume Claim.

Define the desired options on the page that appears.

- Select the previously-created storage class from the drop-down menu.

- Enter a unique name for the storage claim.

- Select the access mode. This selection determines the read and write access for the storage claim.

- Define the size of the storage claim.

- Click Create to create the persistent volume claim and generate a persistent volume.
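The same claim can also be expressed as an object definition instead of through the console. The following sketch assumes a storage class named gce-standard already exists; the claim name and the requested size are hypothetical.

```yaml
# Sketch only: pvc-gce and gce-standard are hypothetical names.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-gce                   # unique name for the storage claim
spec:
  storageClassName: gce-standard  # previously created storage class
  accessModes:
    - ReadWriteOnce               # read and write access by a single node
  resources:
    requests:
      storage: 10Gi               # size of the storage claim
```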

4.7.3. Volume format

Before OpenShift Container Platform mounts the volume and passes it to a container, it checks that the volume contains a file system as specified by the fsType parameter in the persistent volume definition. If the device is not formatted with the file system, all data from the device is erased and the device is automatically formatted with the given file system.

This verification enables you to use unformatted GCE volumes as persistent volumes, because OpenShift Container Platform formats them before the first use.

4.8. Persistent storage using iSCSI

You can provision your OpenShift Container Platform cluster with persistent storage using iSCSI. Some familiarity with Kubernetes and iSCSI is assumed.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure.

High availability of storage in the infrastructure is left to the underlying storage provider.

When you use iSCSI on Amazon Web Services, you must update the default security policy to include TCP traffic between nodes on the iSCSI ports. By default, they are ports 860 and 3260.

Users must ensure that the iSCSI initiator is already configured on all OpenShift Container Platform nodes by installing the iscsi-initiator-utils package and configuring their initiator name in /etc/iscsi/initiatorname.iscsi. The iscsi-initiator-utils package is already installed on deployments that use Red Hat Enterprise Linux CoreOS (RHCOS).

For more information, see Managing Storage Devices.

4.8.1. Provisioning

You can verify that the storage exists in the underlying infrastructure before mounting it as a volume in OpenShift Container Platform. All that iSCSI requires is the iSCSI target portal, a valid iSCSI Qualified Name (IQN), a valid LUN number, the file system type, and the PersistentVolume API.

Procedure

- Verify that the storage exists in the underlying infrastructure before mounting it as a volume in OpenShift Container Platform by creating the following PersistentVolume object definition:

PersistentVolume object definition
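A minimal sketch of such a definition follows. It covers only the items listed above (target portal, IQN, LUN number, and file system type); the portal address, IQN, and object name are hypothetical placeholders.

```yaml
# Sketch only: the address, IQN, and name are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: iscsi-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  iscsi:
    targetPortal: 10.16.154.81:3260                   # iSCSI target portal
    iqn: iqn.2014-12.example.server:storage.target00  # iSCSI Qualified Name
    lun: 0                                            # LUN number
    fsType: ext4                                      # file system type
```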

4.8.2. Enforce disk quotas

Use LUN partitions to enforce disk quotas and size constraints. Each LUN is one persistent volume. Kubernetes enforces unique names for persistent volumes.

Enforcing quotas in this way allows the user to request persistent storage by a specific amount (for example, 10Gi) and be matched with a corresponding volume of equal or greater capacity.
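For instance, a claim for 10Gi could be sketched as follows. The claim name is hypothetical, and the empty storageClassName is an assumption made so that the claim binds to a pre-provisioned iSCSI persistent volume rather than triggering dynamic provisioning through a default storage class.

```yaml
# Sketch only: iscsi-claim is a hypothetical name.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: iscsi-claim
spec:
  storageClassName: ""   # bind to an existing PV instead of provisioning dynamically
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi      # matched with a volume of equal or greater capacity
```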

4.8.3. iSCSI volume security

Users request storage with a PersistentVolumeClaim object. This claim only lives in the user’s namespace and can only be referenced by a pod within that same namespace. Any attempt to access a persistent volume claim across a namespace causes the pod to fail.

Each iSCSI LUN must be accessible by all nodes in the cluster.

4.8.3.1. Challenge Handshake Authentication Protocol (CHAP) configuration

Optionally, OpenShift Container Platform can use CHAP to authenticate itself to iSCSI targets:
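The following sketch shows one way a persistent volume might enable CHAP. The portal address, IQN, and the secret name chap-secret are hypothetical, and the secret holding the CHAP credentials is assumed to exist already.

```yaml
# Sketch only: the address, IQN, and secret name are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: iscsi-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  iscsi:
    targetPortal: 10.0.0.1:3260
    iqn: iqn.2016-04.test.com:storage.target00
    lun: 0
    fsType: ext4
    chapAuthDiscovery: true   # enable CHAP authentication for iSCSI discovery
    chapAuthSession: true     # enable CHAP authentication for the iSCSI session
    secretRef:
      name: chap-secret       # secret that holds the CHAP user name and password
```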

4.8.4. iSCSI multipathing

For iSCSI-based storage, you can configure multiple paths by using the same IQN for more than one target portal IP address. Multipathing ensures access to the persistent volume when one or more of the components in a path fail.

Procedure

- To specify multi-paths in the pod specification, specify a value in the portals field of the PersistentVolume definition object.

Example PersistentVolume object with a value specified in the portals field.
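As a sketch, such an object could look like the following. The portal addresses and IQN are hypothetical, and the numbered comment corresponds to the numbered description that follows.

```yaml
# Sketch only: all addresses and the IQN are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: iscsi-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  iscsi:
    targetPortal: 10.0.0.1:3260
    portals: ['10.0.2.16:3260', '10.0.2.17:3260', '10.0.2.18:3260']  # 1
    iqn: iqn.2016-04.test.com:storage.target00
    lun: 0
    fsType: ext4
    readOnly: false
```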

- 1: Add additional target portals using the portals field.

4.8.5. iSCSI custom initiator IQN

Configure the custom initiator iSCSI Qualified Name (IQN) if the iSCSI targets are restricted to certain IQNs, but the nodes that the iSCSI PVs are attached to are not guaranteed to have these IQNs.

Procedure

- To specify a custom initiator IQN, update the initiatorName field in the PersistentVolume definition object.

Example PersistentVolume object with a value specified in the initiatorName field.
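As a sketch, such an object could look like the following. The addresses and IQNs are hypothetical, and the numbered comment corresponds to the numbered description that follows.

```yaml
# Sketch only: the addresses and IQNs are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: iscsi-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  iscsi:
    targetPortal: 10.0.0.1:3260
    portals: ['10.0.2.16:3260', '10.0.2.17:3260', '10.0.2.18:3260']
    iqn: iqn.2016-04.test.com:storage.target00
    lun: 0
    initiatorName: iqn.2016-04.test.com:custom.iqn  # 1
    fsType: ext4
    readOnly: false
```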

- 1: Specify the name of the initiator.

4.9. Persistent storage using NFS

OpenShift Container Platform clusters can be provisioned with persistent storage using NFS. Persistent volumes (PVs) and persistent volume claims (PVCs) provide a convenient method for sharing a volume across a project. While the NFS-specific information contained in a PV definition could also be defined directly in a Pod definition, doing so does not create the volume as a distinct cluster resource, making the volume more susceptible to conflicts.

4.9.1. Provisioning