Architecture

Architecture overview


Chapter 1. Architecture overview

OpenShift Dedicated is a cloud-based Kubernetes container platform. Because OpenShift Dedicated is built on Kubernetes, the two share the same underlying technology. For more information about OpenShift Dedicated and Kubernetes, see Product architecture.

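1.1. Glossary of common terms for OpenShift Dedicated architecture

This glossary defines common terms that are used in the architecture content.

- access policies

- A set of roles that dictate how users, applications, and entities within a cluster interact with one another. An access policy increases cluster security.

- admission plugins

- Admission plugins enforce security policies, resource limitations, or configuration requirements.

- authentication

- To control access to OpenShift Dedicated, an administrator with the dedicated-admin role configures user authentication so that only approved users can access the cluster. To interact with an OpenShift Dedicated cluster, you must authenticate to the OpenShift Dedicated API. You can authenticate by providing an OAuth access token or an X.509 client certificate in your requests to the OpenShift Dedicated API.

- bootstrap

- A temporary machine that runs minimal Kubernetes and deploys the OpenShift Dedicated control plane.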

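- certificate signing requests (CSRs)

- A resource requests a denoted signer to sign a certificate. This request might be approved or denied.

- Cluster Version Operator (CVO)

- An Operator that checks with the OpenShift Update Service to see the valid updates and update paths based on current component versions and information in the graph.

- compute nodes

- Nodes that are responsible for executing workloads for cluster users. Compute nodes are also known as worker nodes.

- configuration drift

- A situation where the configuration on a node does not match what the machine config specifies.

- containers

- Lightweight, executable images that consist of software and all of its dependencies. Because containers virtualize the operating system, you can run containers anywhere, such as data centers, public or private clouds, and local hosts.

- container orchestration engine

- Software that automates the deployment, management, scaling, and networking of containers.

- container workloads

- Applications that are packaged and deployed in containers.

- control groups (cgroups)

- Partitions sets of processes into groups to manage and limit the resources that the processes consume.

- control plane

- A container orchestration layer that exposes the API and interfaces to define, deploy, and manage the life cycle of containers. Control planes are also known as control plane machines.

- CRI-O

- A Kubernetes-native container runtime implementation that integrates with the operating system to deliver an efficient Kubernetes experience.

- deployment

- A Kubernetes resource object that maintains the life cycle of an application.

- Dockerfile

- A text file that contains the user commands to perform on a terminal to assemble the image.

- hybrid cloud deployments

- Deployments that deliver a consistent platform across bare metal, virtual, private, and public cloud environments. This offers speed, agility, and portability.

- Ignition

- A utility that RHCOS uses to manipulate disks during initial configuration. It completes common disk tasks, including partitioning disks, formatting partitions, writing files, and configuring users.

- installer-provisioned infrastructure

- The installation program deploys and configures the infrastructure that the cluster runs on.

- kubelet

- A primary node agent that runs on each node in the cluster to ensure that containers are running in a pod.

- Kubernetes manifest

- Specifications of a Kubernetes API object in JSON or YAML format. A configuration file can include deployments, config maps, secrets, and daemon sets.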

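- Machine Config Daemon (MCD)

- A daemon that regularly checks the nodes for configuration drift.

- Machine Config Operator (MCO)

- An Operator that applies the new configuration to your cluster machines.

- machine config pools (MCP)

- A group of machines, such as control plane components or user workloads, that are based on the resources that they handle.

- metadata

- Additional information about cluster deployment artifacts.

- microservices

- An approach to writing software. By using microservices, applications can be separated into the smallest components that are independent of each other.

- mirror registry

- A registry that holds the mirror of OpenShift Dedicated images.

- monolithic applications

- Applications that are self-contained, built, and packaged as a single piece.

- namespaces

- A namespace isolates specific system resources that are visible to all processes. Inside a namespace, only processes that are members of that namespace can see those resources.

- networking

- Network information of an OpenShift Dedicated cluster.

- node

- A worker machine in an OpenShift Dedicated cluster. A node is either a virtual machine (VM) or a physical machine.

- OpenShift CLI (oc)

- A command-line tool to run OpenShift Dedicated commands on the terminal.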

- OpenShift Update Service (OSUS)

- For clusters with internet access, Red Hat provides over-the-air updates by using the OpenShift Update Service as a hosted service behind public APIs.

- OpenShift image registry

- A registry provided by OpenShift Dedicated to manage images.

- Operator

- The preferred method of packaging, deploying, and managing a Kubernetes application in an OpenShift Dedicated cluster. An Operator takes human operational knowledge and encodes it into software that is easily packaged and shared with customers.

- OperatorHub

- A platform that contains various OpenShift Dedicated Operators to install.

- Operator Lifecycle Manager (OLM)

- OLM helps you to install, update, and manage the life cycle of Kubernetes-native applications. OLM is an open source toolkit designed to manage Operators in an effective, automated, and scalable way.

- OSTree

- An upgrade system for Linux-based operating systems that performs atomic upgrades of complete file system trees. OSTree tracks meaningful changes to the file system tree by using an addressable object store, and is designed to complement existing package management systems.

- over-the-air (OTA) updates

- The OpenShift Update Service (OSUS) provides over-the-air updates to OpenShift Dedicated, including Red Hat Enterprise Linux CoreOS (RHCOS).

- pod

- One or more containers with shared resources, such as volumes and IP addresses, running in your OpenShift Dedicated cluster. A pod is the smallest compute unit defined, deployed, and managed.

- private registry

- OpenShift Dedicated can use any server implementing the container image registry API as a source of the image, which allows developers to push and pull their private container images.

- public registry

- OpenShift Dedicated can use any server implementing the container image registry API as a source of the image, which allows developers to push and pull their public container images.

- Red Hat OpenShift Cluster Manager

- A managed service where you can install, modify, operate, and upgrade your OpenShift Dedicated clusters.

- Red Hat Quay Container Registry

- A Quay.io container registry that serves most of the container images and Operators to OpenShift Dedicated clusters.

- replication controllers

- An asset that indicates how many pod replicas are required to run at a time.

- role-based access control (RBAC)

- A key security control to ensure that cluster users and workloads have access only to the resources required to execute their roles.

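- routes

- Routes expose a service to allow network access to pods from users and applications outside the OpenShift Dedicated instance.

- scaling

- The increasing or decreasing of resource capacity.

- service

- A service exposes a running application on a set of pods.

- Source-to-Image (S2I) image

- An image created based on the programming language of the application source code in OpenShift Dedicated to deploy applications.

- storage

- OpenShift Dedicated supports many types of storage for cloud providers. You can manage container storage for persistent and non-persistent data in an OpenShift Dedicated cluster.

- Telemetry

- A component to collect information such as the size, health, and status of OpenShift Dedicated.

- template

- A template describes a set of objects that can be parameterized and processed to produce a list of objects for creation by OpenShift Dedicated.

- web console

- A user interface (UI) to manage OpenShift Dedicated.

- worker node

- Nodes that are responsible for executing workloads for cluster users. Worker nodes are also known as compute nodes.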

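1.2. Differences between OpenShift Dedicated and OpenShift Container Platform

OpenShift Dedicated uses the same code base as OpenShift Container Platform but is installed in an opinionated way that is optimized for performance, scalability, and security. OpenShift Dedicated is a fully managed service; therefore, many of the components and settings that you set up manually in OpenShift Container Platform are set up for you by default in OpenShift Dedicated.

Review the following differences between OpenShift Dedicated and a standard installation of OpenShift Container Platform on your own infrastructure:

| OpenShift Container Platform | OpenShift Dedicated |
|---|---|
| The customer installs and configures OpenShift Container Platform. | OpenShift Dedicated is installed through Red Hat OpenShift Cluster Manager in a standardized way that is optimized for performance, scalability, and security. |
| Customers can choose their computing resources. | OpenShift Dedicated is hosted and managed in a public cloud (Amazon Web Services or Google Cloud) that is either owned by Red Hat or provided by the customer. |
| Customers have top-level administrative privileges to the infrastructure. | Customers have a built-in administrator group (…) |
| Customers can use all supported features and configuration settings available in OpenShift Container Platform. | Some OpenShift Container Platform features and configuration settings might not be available or changeable in OpenShift Dedicated. |
| | Red Hat sets up the control plane and manages the control plane components for you. The control plane is highly available. |
| Customers are responsible for updating the underlying infrastructure for the control plane and worker nodes. You can use the OpenShift web console to update OpenShift Container Platform versions. | Red Hat automatically notifies the customer when updates are available. You can manually or automatically schedule updates in OpenShift Cluster Manager. |
| Support is provided based on the terms of the Red Hat subscription or the cloud provider. | Engineered, operated, and supported by Red Hat with a 99.95% uptime SLA and 24x7 coverage. For details, see Red Hat Enterprise Agreement Appendix 4 (Online Subscription Services). |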

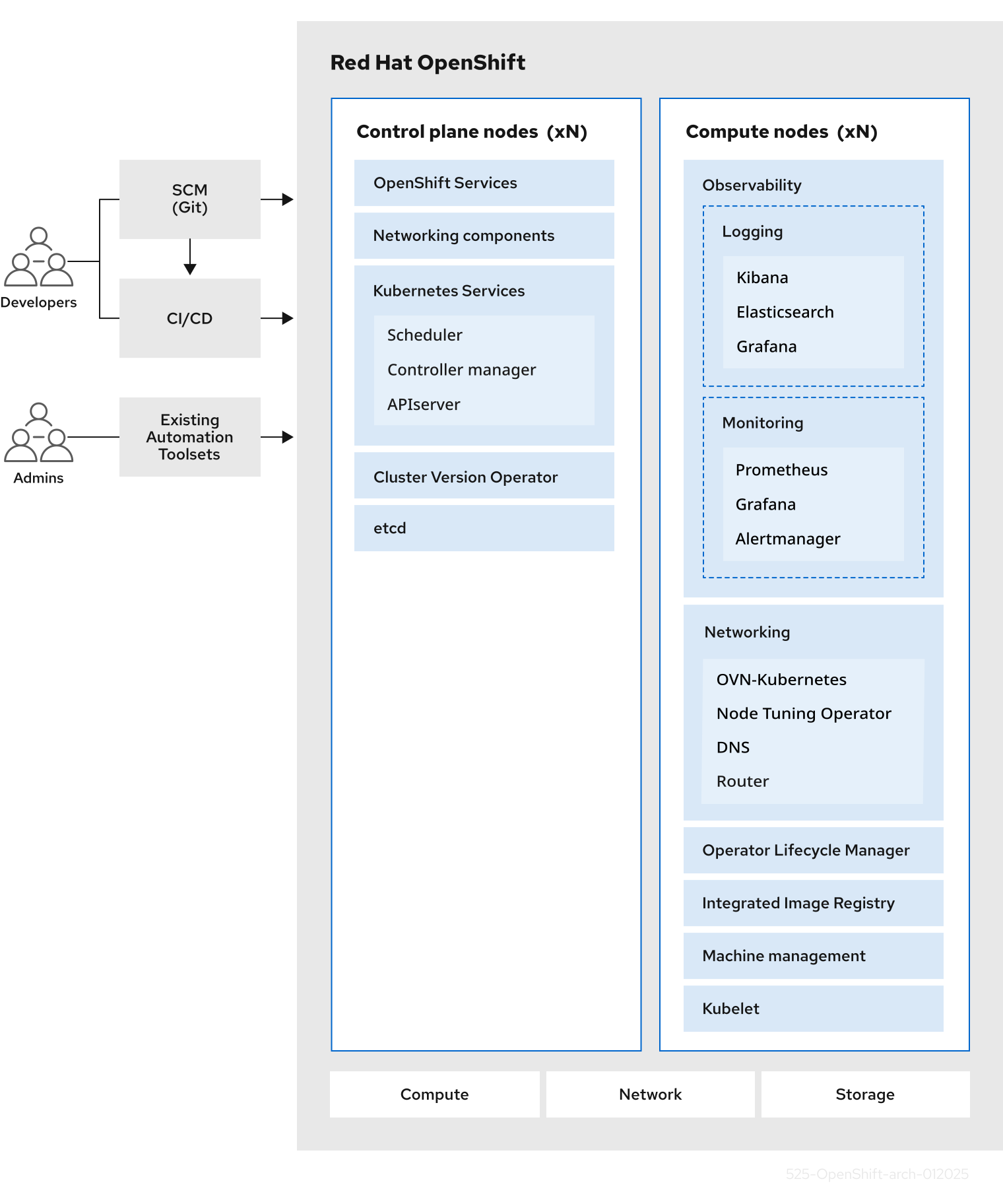

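1.3. About the control plane

The control plane manages the worker nodes and the pods in your cluster. You can configure nodes with machine config pools (MCPs). MCPs are groups of machines, such as control plane components or user workloads, that are based on the resources that they handle. OpenShift Dedicated assigns different roles to hosts. These roles define the function of a machine in a cluster. The cluster contains definitions for the standard control plane and worker role types.

You can use Operators to package, deploy, and manage services on the control plane. Operators are important components in OpenShift Dedicated because they provide the following services:

- Perform health checks

- Provide ways to watch applications

- Manage over-the-air updates

- Ensure applications stay in the specified state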

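1.4. About containerized applications for developers

As a developer, you can use different tools, methods, and formats to develop your containerized application based on your unique requirements, for example:

- Use various build-tool, base-image, and registry options to build a simple container application.

- Use supporting components such as the software catalog and templates to develop your application.

- Package and deploy your application as an Operator.

You can also create a Kubernetes manifest and store it in a Git repository. Kubernetes works on basic units called pods. A pod is a single instance of a running process in your cluster. Pods can contain one or more containers. You can create a service by grouping a set of pods and their access policies. Services provide permanent internal IP addresses and host names for other applications to use as pods are created and destroyed. Kubernetes defines workloads based on the type of your application.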

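1.5. About admission plugins

You can use admission plugins to regulate how OpenShift Dedicated functions. After a resource request is authenticated and authorized, admission plugins intercept the resource request to the master API to validate it and to ensure that scaling policies are adhered to. Admission plugins are used to enforce security policies, resource limitations, configuration requirements, and other settings.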
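To make the interception step concrete, here is a minimal Python sketch of an admission chain. It assumes that each plugin is a plain function that receives an already authenticated and authorized request and either returns it (possibly modified) or raises an error. The `Request` fields, the `owner` label, and the limit of 10 replicas are hypothetical; real admission plugins run inside the API server and are configured there, not written like this.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:
    """A simplified resource request that has already been authenticated and authorized."""
    user: str
    kind: str
    namespace: str
    replicas: int = 1
    labels: Dict[str, str] = field(default_factory=dict)


class AdmissionError(Exception):
    """Raised when an admission plugin rejects a request."""


def default_owner_label(req: Request) -> Request:
    # Mutating step (hypothetical policy): record who created the resource.
    req.labels.setdefault("owner", req.user)
    return req


def enforce_replica_limit(req: Request, max_replicas: int = 10) -> Request:
    # Validating step (hypothetical scaling policy): reject oversized requests.
    if req.replicas > max_replicas:
        raise AdmissionError(
            f"{req.kind} in {req.namespace} requests {req.replicas} replicas; limit is {max_replicas}"
        )
    return req


Plugin = Callable[[Request], Request]
CHAIN: List[Plugin] = [default_owner_label, enforce_replica_limit]


def admit(req: Request, plugins: List[Plugin] = CHAIN) -> Request:
    """Pass the request through each plugin in order; any plugin may modify or reject it."""
    for plugin in plugins:
        req = plugin(req)
    return req
```

Ordering matters in this sketch: the defaulting plugin runs before the validating one, so validation sees the request in its final form.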

1.6. About Linux cgroup version 2

OpenShift Dedicated uses Linux control group version 2 (cgroup v2) in your cluster.

cgroup v2 offers several improvements over cgroup v1, including a unified hierarchy, safer sub-tree delegation, features such as Pressure Stall Information, and enhanced resource management and isolation. However, cgroup v2 has different CPU, memory, and I/O management characteristics than cgroup v1. Therefore, some workloads might experience slight differences in memory or CPU usage on clusters that run cgroup v2.

- If you run third-party monitoring and security agents that depend on the cgroup file system, update the agents to a version that supports cgroup v2.

- If you have configured cgroup v2 and run cAdvisor as a stand-alone daemon set for monitoring pods and containers, update cAdvisor to v0.43.0 or later.

If you deploy Java applications, use versions that fully support cgroup v2, such as the following packages:

- OpenJDK/HotSpot: jdk8u372, 11.0.16, 15 and later

- Node.js 20.3.0 and later

- IBM Semeru Runtimes: jdk8u345-b01, 11.0.16.0, 17.0.4.0, 18.0.2.0 and later

- IBM SDK Java Technology Edition Version (IBM Java): 8.0.7.15 and later
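Before updating agents or runtimes, you might want to confirm which cgroup version a node or container actually sees. The following Python sketch relies on the fact that the root of a cgroup v2 unified hierarchy contains a `cgroup.controllers` file; the function name and the default mount point `/sys/fs/cgroup` are assumptions about a typical Linux layout.

```python
from pathlib import Path


def cgroup_version(root: str = "/sys/fs/cgroup") -> int:
    """Return 2 when `root` is a cgroup v2 unified hierarchy, otherwise 1.

    The root of a cgroup v2 hierarchy contains a cgroup.controllers file;
    a cgroup v1 mount point (usually a tmpfs holding per-controller
    directories) does not. A path that does not exist also yields 1.
    """
    return 2 if (Path(root) / "cgroup.controllers").is_file() else 1


if __name__ == "__main__":
    print(f"cgroup v{cgroup_version()}")
```

From a shell on the node, `stat -fc %T /sys/fs/cgroup/` gives the same answer: it prints `cgroup2fs` for cgroup v2 and `tmpfs` for cgroup v1.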

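Chapter 2. OpenShift Dedicated architecture

2.1. Introduction to OpenShift Dedicated

OpenShift Dedicated is a platform for developing and running containerized applications. It is designed to allow applications and the data centers that support them to expand from just a few machines and applications to thousands of machines that serve millions of clients.

With its foundation in Kubernetes, OpenShift Dedicated incorporates the same technology that serves as the engine for massive telecommunications, streaming video, gaming, banking, and other applications. Its implementation in open Red Hat technologies lets you extend your containerized applications beyond a single cloud to on-premise and multi-cloud environments.

2.1.1. About Kubernetes

Although container images and the containers that run from them are the primary building blocks for modern application development, running them at scale requires a reliable and flexible distribution system. Kubernetes is the de facto standard for orchestrating containers.

Kubernetes is an open source container orchestration engine for automating the deployment, scaling, and management of containerized applications. The general concept of Kubernetes is fairly simple:

- Start with one or more worker nodes to run the container workloads.

- Manage the deployment of those workloads from one or more control plane nodes.

- Wrap containers in a deployment unit called a pod. Using pods provides extra metadata with the container and offers the ability to group several containers in a single deployment entity.

- Create special kinds of assets. For example, services are represented by a set of pods and a policy that defines how they are accessed. This policy allows containers to connect to the services that they need even if they do not have the specific IP addresses for the services. Replication controllers are another special asset that indicates how many pod replicas are required to run at a time. You can use this capability to automatically scale your application to adapt to its current demand.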
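The replication-controller idea in the last item boils down to a loop that compares the desired replica count with the pods that actually exist and closes the gap. The Python sketch below shows a single pass of that loop. Pods are modeled as plain name strings and the `web-N` naming scheme is hypothetical; a real controller watches the API server and creates or deletes pod objects rather than editing a list.

```python
import itertools
from typing import List

_next_id = itertools.count(1)


def reconcile(desired: int, pods: List[str]) -> List[str]:
    """Run one reconciliation pass and return the pod list after it.

    Missing replicas are "created" with hypothetical generated names and
    surplus replicas are "deleted" from the end of the list, so the result
    always has exactly `desired` entries.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    pods = list(pods)  # work on a copy; the caller's observed state is unchanged
    while len(pods) < desired:
        pods.append(f"web-{next(_next_id)}")
    del pods[desired:]
    return pods


# A pod crashed: 3 replicas are desired but only 2 are running.
print(reconcile(3, ["web-a", "web-b"]))
# Demand dropped and the desired count was lowered to 1.
print(reconcile(1, ["web-a", "web-b", "web-c"]))
```

Because the function always converges on the desired count no matter where it starts, running it repeatedly is safe, which is the property that lets a controller recover from crashes or manual changes.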

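In only a few years, Kubernetes has seen massive cloud and on-premise adoption. The open source development model allows many people to extend Kubernetes by implementing different technologies for components such as networking, storage, and authentication.

2.1.2. The benefits of containerized applications

Using containerized applications offers many advantages over using traditional deployment methods. Where applications were once expected to be installed on operating systems that included all their dependencies, containers let an application carry its dependencies with it. Creating containerized applications offers many benefits.

2.1.2.1. Operating system benefits

Containers use small, dedicated Linux operating systems without a kernel. Their file system, networking, cgroups, process tables, and namespaces are separate from the host Linux system, but the containers can integrate with the hosts seamlessly when necessary. Being based on Linux allows containers to use all the advantages that come with the open source development model of rapid innovation.

Because each container uses a dedicated operating system, you can deploy applications that require conflicting software dependencies on the same host. Each container carries its own dependent software and manages its own interfaces, such as networking and file systems, so applications never need to compete for those assets.

2.1.2.2. Deployment and scaling benefits

If you employ rolling upgrades between major releases of your application, you can continuously improve your applications without downtime and still maintain compatibility with the current release.

You can also deploy and test a new version of an application alongside the existing version. If the container passes your tests, deploy more new containers and remove the old ones.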
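The rolling-upgrade pattern can be sketched in a few lines of Python. This is a simulation under stated assumptions: pods are strings tagged with a version, a new pod is ready the moment it starts, and exactly one extra pod may run during the update (similar in spirit to a Kubernetes Deployment with `maxSurge` set to 1 and `maxUnavailable` set to 0). It shows why serving capacity never drops below the desired count.

```python
from typing import List


def rolling_update(replicas: int, old: str, new: str) -> List[List[str]]:
    """Simulate a one-pod-at-a-time rolling update and return every intermediate state.

    Each step first starts one pod of the new version (a surge of one) and,
    assuming it becomes ready immediately, then removes one old pod. The number
    of running pods therefore never drops below `replicas`.
    """
    state = [old] * replicas
    history = [list(state)]
    for _ in range(replicas):
        state.append(new)      # start one new-version pod
        history.append(list(state))
        state.remove(old)      # retire one old-version pod
        history.append(list(state))
    return history


for step in rolling_update(3, "v1", "v2"):
    print(step)
```

If a new pod never became ready, a real rollout would stop before removing the next old pod, which is what keeps the existing release serving traffic.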

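Because all the software dependencies for an application are resolved within the container itself, you can use a standardized operating system on each host in your data center. You do not need to configure a specific operating system for each application host. When your data center needs more capacity, you can deploy another generic host system.

Similarly, scaling containerized applications is simple. OpenShift Dedicated offers a simple, standard way of scaling any containerized service. For example, if you build applications as a set of microservices rather than large, monolithic applications, you can scale the individual microservices independently to meet demand. This capability allows you to scale only the required services instead of the entire application, which can allow you to meet application demands while using minimal resources.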
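Scaling each microservice on its own can be made concrete with the rule that the Kubernetes HorizontalPodAutoscaler documents: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies it to two hypothetical microservices with a CPU target of 60%. It omits the tolerance band and the minimum and maximum replica bounds that the real autoscaler also applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Return the replica count suggested by the HPA scaling rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)


# Hypothetical microservices, each scaled independently against a 60% CPU target.
services = {"checkout": (4, 90.0), "catalog": (4, 30.0)}
for name, (replicas, cpu_percent) in services.items():
    print(name, desired_replicas(replicas, cpu_percent, 60.0))
```

Only the busy `checkout` service grows (to 6 replicas) while the lightly loaded `catalog` service shrinks (to 2), which is the resource saving described above.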

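2.1.3. OpenShift Dedicated overview

OpenShift Dedicated provides enterprise-ready enhancements to Kubernetes, including the following enhancements:

- Integrated Red Hat technology. Major components in OpenShift Dedicated come from Red Hat Enterprise Linux (RHEL) and related Red Hat technologies. OpenShift Dedicated benefits from the intense testing and certification initiatives for Red Hat's enterprise quality software.

- Open source development model. Development is completed in the open, and the source code is available from public software repositories. This open collaboration fosters rapid innovation and development.

Although Kubernetes excels at managing your applications, it does not specify or manage platform-level requirements or deployment processes. Powerful and flexible platform management tools and processes are important benefits that OpenShift Dedicated 4 offers. The following sections describe some unique features and benefits of OpenShift Dedicated.

2.1.3.1. Custom operating system

OpenShift Dedicated uses Red Hat Enterprise Linux CoreOS (RHCOS) as the operating system for all control plane and worker nodes.

RHCOS includes:

- Ignition, which OpenShift Dedicated uses as a firstboot system configuration for initially bringing up and configuring machines.

- CRI-O, a Kubernetes-native container runtime implementation that integrates closely with the operating system to deliver an efficient and optimized Kubernetes experience. CRI-O provides facilities for running, stopping, and restarting containers. It fully replaces the Docker Container Engine, which was used in OpenShift Dedicated 3.

- Kubelet, the primary node agent for Kubernetes that is responsible for launching and monitoring containers.

2.1.3.2. Simplified update process

Updating or upgrading OpenShift Dedicated is a simple, highly automated process. Because OpenShift Dedicated completely controls the systems and services that run on each machine, including the operating system itself, from a central control plane, upgrades are designed to become automatic events.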
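Automated updates still have to follow supported update paths. The Cluster Version Operator checks the update service for valid updates and update paths based on graph information; the Python sketch below models such a graph as a dictionary of allowed edges and finds the shortest path with a breadth-first search. The version numbers and edges are hypothetical, and this is not how the CVO or the OpenShift Update Service is implemented; it only illustrates why reaching a target can require a path rather than a single jump.

```python
from collections import deque
from typing import Dict, List, Optional


def update_path(graph: Dict[str, List[str]], current: str, target: str) -> Optional[List[str]]:
    """Return the shortest list of versions from current to target, or None if no path exists.

    graph maps each version to the versions it can be updated to directly.
    """
    previous: Dict[str, Optional[str]] = {current: None}
    queue = deque([current])
    while queue:
        version = queue.popleft()
        if version == target:
            path: List[str] = []
            step: Optional[str] = version
            while step is not None:  # walk back to the starting version
                path.append(step)
                step = previous[step]
            return path[::-1]
        for nxt in graph.get(version, []):
            if nxt not in previous:  # the first visit is the shortest route in BFS
                previous[nxt] = version
                queue.append(nxt)
    return None


# Hypothetical update graph: 4.17.2 is a dead end, 4.17.3 leads to 4.18.2.
graph = {"4.17.1": ["4.17.2", "4.17.3"], "4.17.2": [], "4.17.3": ["4.18.2"]}
print(update_path(graph, "4.17.1", "4.18.2"))
```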

2.1.3.3. Other key features

Operators are both the fundamental unit of the OpenShift Dedicated 4 code base and a convenient way to deploy applications and software components for your applications to use. In OpenShift Dedicated, Operators serve as the platform foundation and remove the need for manual upgrades of operating systems and control plane applications. OpenShift Dedicated Operators such as the Cluster Version Operator and Machine Config Operator allow simplified, cluster-wide management of those critical components.

Operator Lifecycle Manager (OLM) and the software catalog provide facilities for storing Operators and distributing them to people who develop and deploy applications.

The Red Hat Quay Container Registry is a Quay.io container registry that serves most of the container images and Operators to OpenShift Dedicated clusters. Quay.io is a public registry version of Red Hat Quay that stores millions of images and tags.

Other enhancements to Kubernetes in OpenShift Dedicated include improvements in software defined networking (SDN), authentication, log aggregation, monitoring, and routing. OpenShift Dedicated also offers a comprehensive web console and the custom OpenShift CLI (oc) interface.

Chapter 3. OpenShift Dedicated on Google Cloud architecture models

With OpenShift Dedicated on Google Cloud, you can create clusters that are accessible over public or private networks.

3.1. Private OpenShift Dedicated on Google Cloud architecture on public and private networks

You can customize the access patterns for your API server endpoint and for Red Hat SRE management by selecting one of the following network configuration types:

- Private cluster with Private Service Connect (PSC)

- Private cluster without PSC

- Public cluster

Red Hat recommends using PSC when deploying a private OpenShift Dedicated cluster on Google Cloud. PSC ensures secure, private connectivity between Red Hat infrastructure, Site Reliability Engineering (SRE), and private OpenShift clusters.

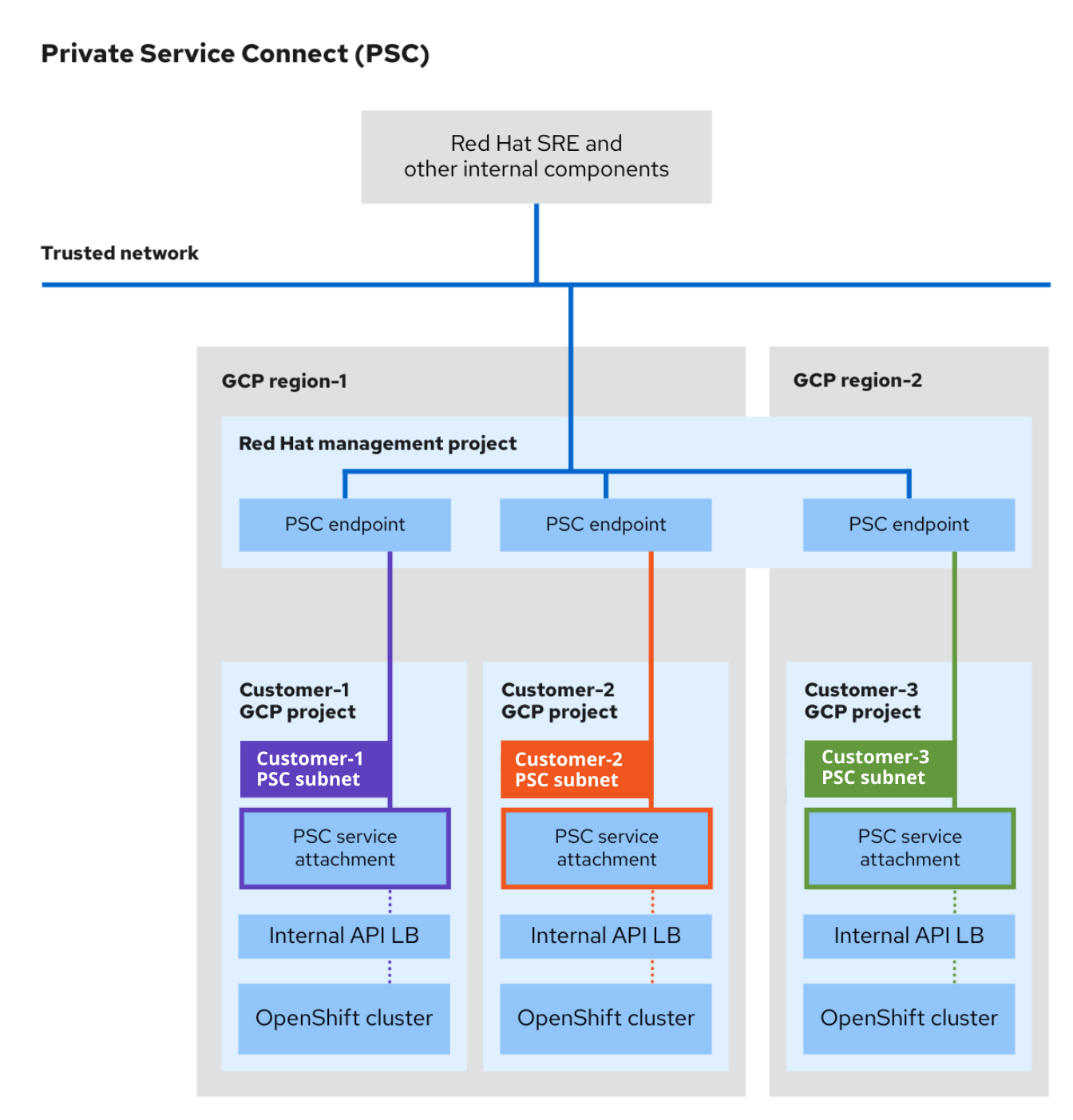

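3.2. Understanding Private Service Connect

Private Service Connect (PSC), a Google Cloud networking feature, enables private communication between services across different projects or organizations within Google Cloud. Users that implement PSC as part of their network connectivity can deploy OpenShift Dedicated clusters in a private and secured environment within Google Cloud without any public-facing cloud resources.

For more information about PSC, see Private Service Connect.

PSC is available only with OpenShift Dedicated version 4.17 and later, and is supported only by the Customer Cloud Subscription (CCS) infrastructure type.

3.2.1. Private Service Connect architecture

The PSC architecture includes producer services and consumer services. Using PSC, consumers can access producer services privately from inside their VPC network. Similarly, producers can host services in their own separate VPC networks and offer a private connection to their consumers.

The following image depicts how Red Hat SREs and other internal resources access and support clusters created with PSC.

- A unique PSC service attachment is created for each OSD cluster in the customer's Google Cloud project. The PSC service attachment points to the cluster API server load balancer that is created in the customer's Google Cloud project.

- Similar to service attachments, a unique PSC endpoint is created in the Red Hat Management Google Cloud project for each OSD cluster.

- A dedicated subnet for Google Cloud Private Service Connect is created in the cluster's network within the customer's Google Cloud project. This is a special subnet type through which producer services are published by using PSC service attachments. The subnet is used to perform source NAT (SNAT) on incoming requests to the cluster API server. Additionally, the PSC subnet must be within the machine CIDR range and cannot be used by more than one service attachment.

- Red Hat internal resources and SREs access private OSD clusters by using the connectivity between a PSC endpoint and a service attachment. Even though the traffic transits multiple VPC networks, it remains entirely within Google Cloud.

- Access to PSC service attachments is possible only through the Red Hat Management project.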
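The two constraints on the PSC subnet in the third item (it must lie inside the machine CIDR, and it cannot back more than one service attachment) are easy to check before you create a cluster. The following Python sketch uses the standard `ipaddress` module. The CIDR values are hypothetical, and the list of subnets already used by service attachments is an input you would have to gather yourself; this is a pre-flight illustration, not the validation that Red Hat or Google Cloud performs.

```python
import ipaddress
from typing import Iterable, List


def psc_subnet_problems(machine_cidr: str, psc_subnet: str,
                        subnets_used_by_attachments: Iterable[str] = ()) -> List[str]:
    """Return the reasons a proposed PSC subnet breaks the rules (empty list means OK).

    Rule 1: the PSC subnet must lie inside the machine CIDR range.
    Rule 2: the PSC subnet must not already back another service attachment.
    """
    machine = ipaddress.ip_network(machine_cidr)
    psc = ipaddress.ip_network(psc_subnet)
    problems = []
    # subnet_of() raises TypeError across IPv4/IPv6, so compare versions first.
    if psc.version != machine.version or not psc.subnet_of(machine):
        problems.append(f"{psc} is outside the machine CIDR {machine}")
    if any(psc == ipaddress.ip_network(s) for s in subnets_used_by_attachments):
        problems.append(f"{psc} is already used by another service attachment")
    return problems


print(psc_subnet_problems("10.0.0.0/17", "10.0.64.0/29"))
print(psc_subnet_problems("10.0.0.0/17", "10.1.0.0/29"))
```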

Figure 3.1. PSC architecture overview

3.3. Private OpenShift Dedicated on Google Cloud with the Private Service Connect architecture model

With a private Google Cloud Private Service Connect (PSC) network configuration, your cluster API server endpoint and application routes are private. Public subnets or NAT gateways are not required in your VPC for egress. Red Hat SRE management accesses the cluster over Google Cloud PSC-enabled private connectivity. The default ingress controller is private. Additional ingress controllers can be public or private. The following diagram shows network connectivity for a private cluster with PSC.

Figure 3.2. OpenShift Dedicated on Google Cloud deployed on a private network with PSC

3.4. Private OpenShift Dedicated on Google Cloud without the Private Service Connect (PSC) architecture model

With a private network configuration, your cluster API server endpoint and application routes are private. Private OpenShift Dedicated on Google Cloud clusters use some public subnets, but no control plane or worker nodes are deployed in public subnets.

Red Hat recommends using Private Service Connect (PSC) when deploying a private OpenShift Dedicated cluster on Google Cloud. PSC ensures secure, private connectivity between Red Hat infrastructure, Site Reliability Engineering (SRE), and private OpenShift clusters.

Red Hat SRE management accesses the cluster through a public load balancer endpoint that is restricted to Red Hat IP addresses. The API server endpoint is private. A separate Red Hat API server endpoint is public, but it is restricted to Red Hat trusted IP addresses. The default ingress controller can be public or private. The following image shows network connectivity for a private cluster without Private Service Connect (PSC).

Figure 3.3. OpenShift Dedicated on Google Cloud deployed on a private network without PSC

3.5. Public OpenShift Dedicated on Google Cloud architecture model

With a public network configuration, your cluster API server endpoint and application routes are internet-facing. The default ingress controller can be public or private. The following image shows network connectivity for a public cluster.

Figure 3.4. OpenShift Dedicated on Google Cloud deployed on a public network

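Chapter 4. Control plane architecture

The control plane, which is composed of control plane machines, manages the OpenShift Dedicated cluster. The control plane machines manage workloads on the compute machines, which are also known as worker machines. The cluster itself manages all upgrades to the machines through the actions of the Cluster Version Operator (CVO) and a set of individual Operators.

4.1. Machine roles in OpenShift Dedicated

OpenShift Dedicated assigns hosts different roles. These roles define the function of a machine within the cluster. The cluster contains definitions for the standard master and worker role types.

4.1.1. The cluster workers

In a Kubernetes cluster, worker nodes run and manage the actual workloads requested by Kubernetes users. The worker nodes advertise their capacity, and the scheduler, which is a control plane service, determines on which nodes to start pods and containers. The following important services run on each worker node:

- CRI-O, which is the container engine.

- kubelet, which is the service that accepts and fulfills requests for running and stopping container workloads.

- A service proxy, which manages communication for pods across workers.

- The crun or runC low-level container runtime, which creates and runs containers.

For information about how to enable runC instead of the default crun, see the documentation for creating a ContainerRuntimeConfig CR.

In OpenShift Dedicated, compute machine sets control the compute machines, which are assigned the worker machine role. Machines with the worker role drive compute workloads that are governed by a specific machine pool that autoscales them. Because OpenShift Dedicated has the capacity to support multiple machine types, the machines with the worker role are classed as compute machines. In this release, the terms worker machine and compute machine are used interchangeably because the only default type of compute machine is the worker machine. In future versions of OpenShift Dedicated, different types of compute machines, such as infrastructure machines, might be used by default.

A compute machine set is a group of compute machine resources under the machine-api namespace. Compute machine sets are configurations that are designed to start new compute machines on a specific cloud provider. Machine config pools (MCPs) are part of the Machine Config Operator (MCO) namespace. An MCP groups machines together so that the MCO can manage their configurations and facilitate their upgrades.

4.1.2. The cluster control plane

In a Kubernetes cluster, the master nodes run services that are required to control the Kubernetes cluster. In OpenShift Dedicated, the control plane is comprised of control plane machines that have a master machine role. They contain more than just the Kubernetes services for managing the OpenShift Dedicated cluster.

For most OpenShift Dedicated clusters, control plane machines are defined by a series of standalone machine API resources. Control planes are managed with control plane machine sets. Extra controls apply to control plane machines to prevent you from deleting all control plane machines and breaking your cluster.

Single availability zone clusters and multiple availability zone clusters require a minimum of three control plane nodes.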
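etcd, which runs on the control plane, needs a majority (quorum) of its members to agree before it commits a write, which helps explain why three nodes is the minimum. For n members, the quorum is floor(n/2) + 1 and the number of member failures tolerated is n minus the quorum. The short Python sketch below tabulates this; it is a worked example of the general etcd majority rule, not a description of how OpenShift Dedicated sizes its control plane.

```python
def etcd_quorum(members: int) -> tuple:
    """Return (quorum size, tolerated member failures) for an etcd cluster of `members` nodes."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    quorum = members // 2 + 1
    return quorum, members - quorum


for n in range(1, 6):
    quorum, tolerated = etcd_quorum(n)
    print(f"{n} members: quorum {quorum}, tolerates {tolerated} failure(s)")
```

Three members tolerate one failure, while two members tolerate none, so a second member on its own does not improve availability.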

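Services that fall under the Kubernetes category on the control plane include the Kubernetes API server, etcd, the Kubernetes controller manager, and the Kubernetes scheduler.

| Component | Description |
|---|---|
| Kubernetes API server | The Kubernetes API server validates and configures the data for pods, services, and replication controllers. It also provides a focal point for the shared state of the cluster. |
| etcd | etcd stores the persistent control plane state while other components watch etcd for changes to bring themselves into the specified state. |
| Kubernetes controller manager | The Kubernetes controller manager watches etcd for changes to objects such as replication, namespace, and service account controller objects, and then uses the API to enforce the specified state. Several such processes create a cluster with one active leader at a time. |
| Kubernetes scheduler | The Kubernetes scheduler watches for newly created pods without an assigned node and selects the best node to host the pod. |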
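The scheduler's job of selecting the best node can be pictured as two phases: filter out nodes that cannot fit the pod, then score the rest. The Python sketch below uses a single resource filter and a single hypothetical scoring rule (most CPU left over); the real kube-scheduler runs many configurable filter and score plugins, and the node names and capacities here are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def schedule(nodes: List[Node], cpu_request: int, memory_request: int) -> Optional[str]:
    """Pick a node for one pod by using a filter phase and a scoring phase."""
    # Filter: drop nodes that cannot satisfy the pod's CPU and memory requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_request and n.free_memory_mib >= memory_request
    ]
    if not feasible:
        return None  # no node fits; the pod would stay Pending
    # Score (hypothetical rule): prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n.free_cpu_millicores - cpu_request)
    return best.name


nodes = [Node("worker-a", 500, 1024), Node("worker-b", 2000, 512), Node("worker-c", 1500, 4096)]
print(schedule(nodes, cpu_request=400, memory_request=1024))  # prints worker-c
```

`worker-b` has the most free CPU but is filtered out for lack of memory, so `worker-c` wins the scoring phase.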

There are also OpenShift services that run on the control plane, which include the OpenShift API server, OpenShift controller manager, OpenShift OAuth API server, and OpenShift OAuth server.

| Component | Description |
|---|---|
| OpenShift API server | The OpenShift API server validates and configures the data for OpenShift resources, such as projects, routes, and templates. The OpenShift API server is managed by the OpenShift API Server Operator. |
| OpenShift controller manager | The OpenShift controller manager watches etcd for changes to OpenShift objects, such as project, route, and template controller objects, and then uses the API to enforce the specified state. The OpenShift controller manager is managed by the OpenShift Controller Manager Operator. |
| OpenShift OAuth API server | The OpenShift OAuth API server validates and configures the data to authenticate to OpenShift Dedicated, such as users, groups, and OAuth tokens. The OpenShift OAuth API server is managed by the Cluster Authentication Operator. |
| OpenShift OAuth server | Users request tokens from the OpenShift OAuth server to authenticate themselves to the API. The OpenShift OAuth server is managed by the Cluster Authentication Operator. |

Some of these services on the control plane machines run as systemd services, while others run as static pods.

Systemd services are appropriate for services that must always come up on a particular system shortly after it starts. For control plane machines, those include sshd, which allows remote login. They also include services such as:

- The CRI-O container engine (crio), which runs and manages the containers. OpenShift Dedicated 4 uses CRI-O instead of the Docker Container Engine.

- Kubelet (kubelet), which accepts requests for managing containers on the machine from control plane services.

CRI-O and Kubelet must run directly on the host as systemd services because they need to be running before you can run other containers.

The installer-* and revision-pruner-* control plane pods must run with root permissions because they write to the /etc/kubernetes directory, which is owned by the root user. These pods are in the following namespaces:

- openshift-etcd

- openshift-kube-apiserver

- openshift-kube-controller-manager

- openshift-kube-scheduler

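4.2. Operators in OpenShift Dedicated

Operators are among the most important components of OpenShift Dedicated. They are the preferred method of packaging, deploying, and managing services on the control plane. They can also provide advantages to applications that users run.

Operators integrate with Kubernetes APIs and CLI tools such as kubectl and the OpenShift CLI (oc). They provide the means of monitoring applications, performing health checks, managing over-the-air (OTA) updates, and ensuring that applications remain in your specified state.

Operators also offer a more granular configuration experience. You configure each component by modifying the API that the Operator exposes instead of modifying a global configuration file.

Because CRI-O and the Kubelet run on every node, almost every other cluster function can be managed on the control plane by using Operators. Components that are added to the control plane by using Operators include critical networking and credential services.

In OpenShift Dedicated, Operators are managed by two different systems, depending on their purpose. Both follow similar Operator concepts and goals.

- Cluster Operators

- Managed by the Cluster Version Operator (CVO) and installed by default to perform cluster functions.

- Optional add-on Operators

- Managed by Operator Lifecycle Manager (OLM) and can be made accessible for users to run in their applications. Also known as OLM-based Operators.

4.2.1. Add-on Operators

Operator Lifecycle Manager (OLM) and the software catalog are default components in OpenShift Dedicated that help manage Kubernetes-native applications as Operators. Together they provide the system for discovering, installing, and managing the optional add-on Operators available on the cluster.

Using the software catalog in the OpenShift Dedicated web console, administrators with the dedicated-admin role and authorized users can select Operators to install from catalogs of Operators. After you install an Operator from the software catalog, it can be made available globally or in specific namespaces to run in user applications.

Default catalog sources are available that include Red Hat Operators, certified Operators, and community Operators. Administrators with the dedicated-admin role can also add their own custom catalog sources, which can contain a custom set of Operators.

All Operators listed in the Operator Hub marketplace should be available for installation. These Operators are considered customer workloads and are not monitored by Red Hat Site Reliability Engineering (SRE).

OLM does not manage the cluster Operators that comprise the OpenShift Dedicated architecture.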

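4.3. Overview of etcd

etcd is a consistent, distributed key-value store that holds small amounts of data that can fit entirely in memory. Although etcd is a core component of many projects, it is the primary data store for Kubernetes, which is the standard system for container orchestration.

4.3.1. Benefits of using etcd

By using etcd, you can benefit in several ways:

- Maintain consistent uptime for your cloud-native applications, and keep them working even if individual servers fail

- Store and replicate all cluster states for Kubernetes

- Distribute configuration data to provide redundancy and resiliency for the configuration of nodes

4.3.2. How etcd works

To ensure a reliable approach to cluster configuration and management, etcd uses the etcd Operator. The Operator simplifies the use of etcd on a Kubernetes container platform like OpenShift Dedicated. With the etcd Operator, you can create or discard etcd members, resize clusters, perform backups, and upgrade etcd.

The etcd Operator observes, analyzes, and acts:

- It observes the cluster state by using the Kubernetes API.

- It analyzes differences between the current state and the state that you want.

- It fixes the differences through the etcd cluster management APIs, the Kubernetes API, or both.

etcd holds the cluster state, which is constantly updated. This state is continuously persisted, which leads to a high number of small changes at high frequency. As a result, Red Hat Site Reliability Engineering (SRE) backs the etcd cluster members with fast, low-latency I/O.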

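4.4. Automatic updates to the control plane configuration

On OpenShift Dedicated clusters, the control plane machine set automatically propagates changes to your control plane configuration. When a control plane machine needs to be replaced, the Control Plane Machine Set Operator creates a replacement machine based on the configuration specified by the ControlPlaneMachineSet custom resource (CR). When the new control plane machine is ready, the Operator safely drains and terminates the old control plane machine in a way that mitigates any potential negative effects on cluster API or workload availability.

You cannot request that control plane machine replacements occur only during a maintenance window. The Control Plane Machine Set Operator acts to ensure cluster stability, and waiting for a maintenance window could compromise that stability.

A control plane machine can be marked for replacement at any time, usually because the machine has gone out of spec or entered an unhealthy state. Such replacements are a normal part of the cluster lifecycle and are not a cause for concern. If a failure occurs while a control plane node is being replaced, SRE is alerted to the issue automatically.

Depending on when the OpenShift Dedicated cluster was originally created, the introduction of control plane machine sets might leave one or two control plane nodes with labels or machine names that are inconsistent with the other control plane nodes, for example, clustername-master-0, clustername-master-1, and clustername-master-2-abcxyz. Such naming inconsistencies do not affect how the cluster works and are not a cause for concern.

4.5. Recovering failed control plane machines

The Control Plane Machine Set Operator automates the recovery of control plane machines. When a control plane machine is deleted, the Operator creates a replacement with the configuration that is specified in the ControlPlaneMachineSet custom resource (CR).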

Chapter 5. NVIDIA GPU architecture overview

NVIDIA supports the use of graphics processing unit (GPU) resources on OpenShift Dedicated. OpenShift Dedicated is a security-focused and hardened Kubernetes platform developed and supported by Red Hat for deploying and managing Kubernetes clusters at scale. OpenShift Dedicated includes enhancements to Kubernetes so that users can easily configure and use NVIDIA GPU resources to accelerate workloads.

The NVIDIA GPU Operator uses the Operator framework within OpenShift Dedicated to manage the full lifecycle of the NVIDIA software components required to run GPU-accelerated workloads.

These components include the NVIDIA drivers (to enable CUDA), the Kubernetes device plugin for GPUs, the NVIDIA Container Toolkit, automatic node tagging using GPU Feature Discovery (GFD), DCGM-based monitoring, and others.

The NVIDIA GPU Operator is supported only by NVIDIA. For more information about obtaining support from NVIDIA, see Obtaining Support from NVIDIA.

5.1. NVIDIA GPU prerequisites

- A working OpenShift cluster with at least one GPU worker node.

- Access to the OpenShift cluster as a cluster-admin to perform the required steps.

- The OpenShift CLI (oc) is installed.

- The Node Feature Discovery (NFD) Operator is installed and a nodefeaturediscovery instance is created.

5.2. GPUs and OpenShift Dedicated

You can deploy OpenShift Dedicated on NVIDIA GPU instance types.

It is important that this compute instance is a GPU-accelerated compute instance and that the GPU type matches the list of supported GPUs from NVIDIA AI Enterprise. For example, T4, V100, and A100 are part of this list.

You can access the containerized GPUs by using one of the following methods:

- GPU passthrough, to access and use GPU hardware within a virtual machine (VM).

- GPU (vGPU) time-slicing, when the entire GPU is not required.

5.3. GPU sharing methods

Red Hat and NVIDIA have developed GPU concurrency and sharing mechanisms to simplify GPU-accelerated computing on an enterprise-level OpenShift Dedicated cluster.

Applications typically have different compute requirements that can leave GPUs underutilized. Providing the right amount of compute resources for each workload is critical to reduce deployment cost and maximize GPU utilization.

Concurrency mechanisms for improving GPU utilization range from programming model APIs to system software and hardware partitioning, including virtualization. The following list shows the GPU concurrency mechanisms:

- Compute Unified Device Architecture (CUDA) streams

- Time-slicing

- CUDA Multi-Process Service (MPS)

- Multi-instance GPU (MIG)

- Virtualization with vGPU


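5.3.1. CUDA streams

Compute Unified Device Architecture (CUDA) is a parallel computing platform and programming model developed by NVIDIA for general computing on GPUs.

A stream is a sequence of operations that executes in issue order on the GPU. CUDA commands are typically executed sequentially in a default stream, and a task does not start until the preceding task has completed.

Asynchronous processing of operations across different streams allows tasks to execute in parallel. A task issued in one stream can run before, during, or after a task issued into another stream. This allows the GPU to run multiple tasks simultaneously in no prescribed order, leading to improved performance.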
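The benefit of multiple streams can be estimated with a deliberately simple timing model. The Python sketch below assumes the GPU has enough free resources to run every stream at once and that there are no dependencies between streams; in practice, overlap is limited by available GPU resources, and the durations shown are hypothetical. It is arithmetic about scheduling, not CUDA code.

```python
from typing import List


def default_stream_time(streams: List[List[float]]) -> float:
    """Every operation runs in issue order on one stream, so durations simply add up."""
    return sum(sum(ops) for ops in streams)


def concurrent_streams_time(streams: List[List[float]]) -> float:
    """Idealized multi-stream case: operations stay ordered within each stream,
    but streams overlap freely, so the longest stream sets the finish time."""
    return max((sum(ops) for ops in streams), default=0.0)


# Hypothetical kernel durations in milliseconds for three independent streams.
streams = [[2.0, 3.0], [4.0], [1.0, 1.0, 1.0]]
print(default_stream_time(streams), concurrent_streams_time(streams))  # prints 12.0 5.0
```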

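5.3.2. Time-slicing

GPU time-slicing interleaves workloads scheduled on overloaded GPUs when you are running multiple CUDA applications.

You can enable time-slicing of GPUs on Kubernetes by defining a set of replicas for a GPU, each of which can be independently distributed to a pod to run workloads on. Unlike multi-instance GPU (MIG), time-slicing provides no memory or fault isolation between replicas, but for some workloads this is better than not sharing at all. Internally, GPU time-slicing multiplexes workloads from replicas of the same underlying GPU.

You can apply a cluster-wide default configuration for time-slicing. You can also apply node-specific configurations. For example, you can apply a time-slicing configuration only to nodes with Tesla T4 GPUs and leave nodes with other GPU models unchanged.

You can combine these two approaches by applying a cluster-wide default configuration and then labeling nodes so that those nodes receive a node-specific configuration.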
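Combining a cluster-wide default with labeled, node-specific configurations can be modeled as a lookup: the node's label picks a named configuration, and each configuration says how many time-sliced replicas every physical GPU is advertised as. In the Python sketch below, the configuration names and replica counts are hypothetical, and the label key is an assumption modeled on the NVIDIA GPU Operator documentation; in a real cluster, the GPU Operator and its device plugin perform this resolution.

```python
from typing import Dict

# Node label that selects a named time-slicing configuration. The key is an
# assumption modeled on the NVIDIA GPU Operator docs; verify it before use.
CONFIG_LABEL = "nvidia.com/device-plugin.config"


def advertised_gpus(node_labels: Dict[str, str], physical_gpus: int,
                    replicas_per_config: Dict[str, int], default_config: str) -> int:
    """Return how many schedulable GPU resources a node advertises under time-slicing.

    A node-specific label wins; otherwise the cluster-wide default applies.
    An unknown configuration name is treated as "no sharing" (1 replica per GPU).
    """
    config = node_labels.get(CONFIG_LABEL, default_config)
    return physical_gpus * replicas_per_config.get(config, 1)


# Hypothetical setup: slice Tesla T4 GPUs four ways and leave other models unshared.
configs = {"default": 1, "tesla-t4": 4}
print(advertised_gpus({CONFIG_LABEL: "tesla-t4"}, 1, configs, "default"))  # prints 4
print(advertised_gpus({}, 8, configs, "default"))  # prints 8
```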

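5.3.3. CUDA Multi-Process Service

CUDA Multi-Process Service (MPS) allows a single GPU to be used by multiple CUDA processes. The processes run in parallel on the GPU, eliminating saturation of the GPU compute resources. MPS also enables concurrent execution, or overlapping, of kernel operations and memory copying from different processes to enhance utilization.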


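5.3.4. Multi-instance GPU

Using Multi-instance GPU (MIG), you can split GPU compute units and memory into multiple MIG instances. Each of these instances represents a standalone GPU device from a system perspective and can be connected to any application, container, or virtual machine running on the node. The software that uses the GPU treats each of these MIG instances as an individual GPU.

MIG is useful when you have an application that does not require the full power of an entire GPU. The MIG feature of the new NVIDIA Ampere architecture enables you to split your hardware resources into multiple GPU instances, each of which is available to the operating system as an independent CUDA-enabled GPU.

NVIDIA GPU Operator version 1.7.0 and higher provides MIG support for the A100 and A30 Ampere cards. These GPU instances are designed to support up to seven independent CUDA applications, each running in full isolation with dedicated hardware resources.

5.3.5. Virtualization with vGPU

Virtual machines (VMs) can directly access a single physical GPU by using NVIDIA vGPU. You can create virtual GPUs that can be shared by VMs across the enterprise and accessed by other devices.

This capability combines the power of GPU performance with the management and security benefits provided by vGPU. Additional benefits provided by vGPU include proactive management and monitoring for your VM environment, workload balancing for mixed VDI and compute workloads, and resource sharing across multiple VMs.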


5.4. NVIDIA GPU features for OpenShift Dedicated

- NVIDIA Container Toolkit

- NVIDIA Container Toolkit enables you to create and run GPU-accelerated containers. The toolkit includes a container runtime library and utilities to automatically configure containers to use NVIDIA GPUs.

- NVIDIA AI Enterprise

NVIDIA AI Enterprise is an end-to-end, cloud-native suite of AI and data analytics software optimized, certified, and supported with NVIDIA-Certified systems.

NVIDIA AI Enterprise includes support for Red Hat OpenShift Dedicated. The following installation methods are supported:

- OpenShift Dedicated on bare metal or on VMware vSphere with GPU passthrough.

- OpenShift Dedicated on VMware vSphere with NVIDIA vGPU.

- GPU Feature Discovery

NVIDIA GPU Feature Discovery for Kubernetes is a software component that enables you to automatically generate labels for the GPUs available on a node. GPU Feature Discovery uses Node Feature Discovery (NFD) to perform this labeling.

The Node Feature Discovery (NFD) Operator manages the discovery of hardware features and configurations in an OpenShift Container Platform cluster by labeling nodes with hardware-specific information. NFD labels the host with node-specific attributes, such as PCI cards, kernel, OS version, and so on.

You can find the NFD Operator in the Operator Hub by searching for "Node Feature Discovery".

- NVIDIA GPU Operator with OpenShift Virtualization

Previously, the GPU Operator provisioned worker nodes only for running GPU-accelerated containers. Now, the GPU Operator can also provision worker nodes for running GPU-accelerated virtual machines (VMs).

You can configure the GPU Operator to deploy different software components to worker nodes depending on which GPU workload is configured to run on those nodes.

- GPU Monitoring dashboard

- You can install a monitoring dashboard to display GPU usage information on the cluster Observe page in the OpenShift Dedicated web console. GPU utilization information includes the number of available GPUs, power consumption (in watts), temperature (in degrees Celsius), utilization (in percent), and other metrics for each GPU.

第6章 OpenShift Dedicated の開発について

高品質のエンタープライズアプリケーションの開発および実行時にコンテナーの各種機能をフルに活用できるようにするには、使用する環境が、コンテナーの以下の機能を可能にするツールでサポートされている必要があります。

- 他のコンテナー化された/されていないサービスに接続できる分離したマイクロサービスとして作成される。たとえば、アプリケーションをデータベースに結合したり、アプリケーションにモニタリングアプリケーションを割り当てることが必要になることがあります。

- 回復性がある。サーバーがクラッシュしたときやメンテナンスのために停止する必要があるとき、またはまもなく使用停止になる場合などに、コンテナーを別のマシンで起動できます。

- 自動化されている。コードの変更を自動的に選択し、新規バージョンの起動およびデプロイを自動化します。

- スケールアップまたは複製が可能である。需要の上下に合わせてクライアントに対応するインスタンスの数を増やしたり、インスタンスの数を減らしたりできます。

- アプリケーションの種類に応じて複数の異なる方法で実行できる。たとえば、あるアプリケーションは月一回実行してレポートを作成した後に終了させる場合があります。別のアプリケーションは継続的に実行して、クライアントに対する高可用性が必要になる場合があります。

- 管理された状態を保つ。アプリケーションの状態を監視し、異常が発生したら対応できるようにします。

コンテナーが広く浸透し、エンタープライズレベルでの対応を可能にするためのツールや方法への要求が高まっていることにより、多くのオプションがコンテナーで利用できるようになりました。

このセクションの残りの部分では、OpenShift Dedicated で Kubernetes のコンテナー化されたアプリケーションをビルドし、デプロイする際に作成できるアセットの各種のオプションを説明します。また、各種の異なるアプリケーションや開発要件に適した方法も説明します。

6.1. コンテナー化されたアプリケーションの開発について

コンテナーを使用したアプリケーションの開発にはさまざまな方法を状況に合わせて使用できます。単一コンテナーの開発から、最終的にそのコンテナーの大企業のミッションクリティカルなアプリケーションとしてのデプロイに対応する一連の方法の概要を示します。それぞれのアプローチと共に、コンテナー化されたアプリケーションの開発に使用できる各種のツール、フォーマットおよび方法を説明します。扱う内容は以下の通りです。

- Building a simple container and storing it in a registry

- Creating a Kubernetes manifest and saving it to a Git repository

- Making an Operator to share your application with others

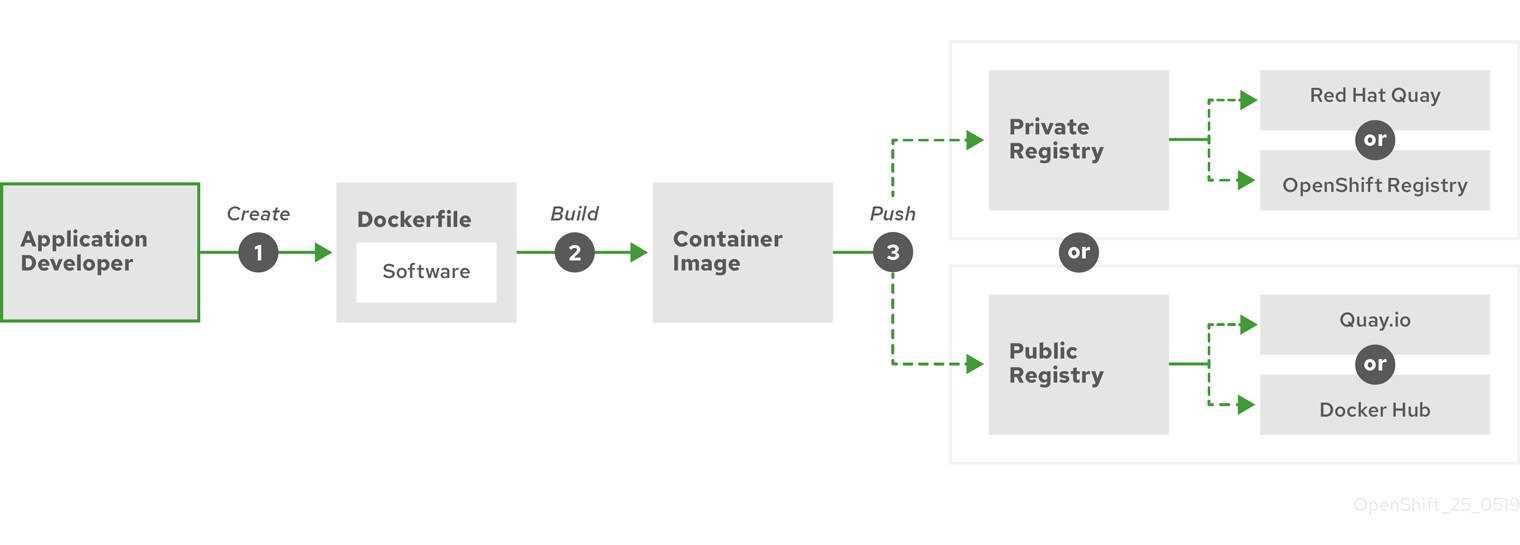

6.2. Building a simple container

Suppose that you have an idea for an application and you want to containerize it.

First, you need a tool for building a container, such as buildah or docker, and a file that describes what goes in your container. This file is typically a Dockerfile.

Next, you need a location to push the resulting container image to, so that you can pull it and run it wherever you want. This location is a container registry.

Examples of each of these components are installed by default on most Linux operating systems. The exception is the Dockerfile, which you provide yourself.

The following diagram shows the process of building and pushing an image:

Figure 6.1. Create a simple containerized application and push it to a registry

If you use a computer that runs Red Hat Enterprise Linux (RHEL) as the operating system, the process of creating a containerized application requires the following steps:

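- Install container build tools: RHEL contains a set of tools, including podman, buildah, and skopeo, that you use to build and manage containers.

- Create a Dockerfile to combine the base image and software: Information about building your container goes into a file named Dockerfile. In that file, you identify the base image that you build from, the software packages that you install, and the software that you copy into the container. You also identify parameter values, such as the network ports that you expose outside the container and the volumes that you mount inside the container. Put your Dockerfile and the software that you want to containerize in a directory on your RHEL system.

- Run buildah or docker build: Run the buildah build-using-dockerfile or docker build command to pull your chosen base image to the local system and create a container image that is stored locally. You can also use buildah to build container images without a Dockerfile.

- Tag and push to a registry: Add a tag to your new container image that identifies the location of the registry in which you want to store and share your container. Then push that image to the registry by running the podman push or docker push command.

- Pull and run the image: From any system that has a container client tool, such as podman or docker, run a command that identifies your new image, for example podman run <image_name> or docker run <image_name>. Here, <image_name> is the name of your new image, which resembles quay.io/myrepo/myapp:latest. The registry might require credentials to push and pull images.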
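To make the tagging step concrete, the following Python sketch splits a reference such as quay.io/myrepo/myapp:latest into registry, repository, and tag, and lists the command lines for the build, tag, push, and run steps without executing them. The parsing rule (the first path component is a registry host if it contains "." or ":" or is "localhost") is a simplified heuristic assumed for illustration, not the exact logic that podman or docker use, and digests are not handled. The local name myapp is hypothetical.

```python
from typing import List, NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: Optional[str]  # for example "quay.io"; None if the name is unqualified
    repository: str          # for example "myrepo/myapp"
    tag: str                 # "latest" when no tag is given


def parse_image_ref(ref: str) -> ImageRef:
    """Split a tag-style reference such as quay.io/myrepo/myapp:latest (no digests)."""
    name, tag = ref, "latest"
    colon, last_slash = ref.rfind(":"), ref.rfind("/")
    if colon > last_slash:  # only a colon after the last "/" introduces a tag
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    # Heuristic: the first component names a registry host if it contains
    # "." or ":" (a port), or is "localhost".
    if rest and ("." in first or ":" in first or first == "localhost"):
        return ImageRef(first, rest, tag)
    return ImageRef(None, name, tag)


def build_tag_push_run(local_name: str, ref: str, context_dir: str = ".") -> List[List[str]]:
    """Command lines for the build, tag, push, and run steps (printed, not executed)."""
    return [
        ["buildah", "build-using-dockerfile", "-t", local_name, context_dir],
        ["podman", "tag", local_name, ref],
        ["podman", "push", ref],
        ["podman", "run", ref],
    ]


ref = "quay.io/myrepo/myapp:latest"
print(parse_image_ref(ref))
# ImageRef(registry='quay.io', repository='myrepo/myapp', tag='latest')
for cmd in build_tag_push_run("myapp", ref):
    print(" ".join(cmd))
```

Tagging with the full registry path is what tells podman push where the image goes, which is why the tag step comes before the push step.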

6.2.1. Container build tool options

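Building and managing containers with buildah, podman, and skopeo produces industry-standard container images that include features tuned specifically for deploying containers in OpenShift Dedicated or other Kubernetes environments. These tools are daemonless and can run without root privileges, so they require less overhead to run.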

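Support for Docker Container Engine as a container runtime was deprecated in Kubernetes 1.20 and removed in Kubernetes 1.24. However, Docker-produced images continue to work in your cluster with all runtimes, including CRI-O. For more information, see the Kubernetes blog announcement.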

When you ultimately run your containers in OpenShift Dedicated, you use the CRI-O container engine. CRI-O runs on every worker and control plane machine in an OpenShift Dedicated cluster, but CRI-O is not yet supported as a standalone runtime outside of OpenShift Dedicated.

6.2.2. Base image options

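The base image that you choose to build your application on contains a set of software that resembles a Linux system to your application. When you build your own image, your software is placed into that file system and sees that file system as though it were its operating system. Your choice of base image has a major impact on how secure, efficient, and upgradeable your container is in the future.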

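Red Hat provides a new set of base images called Red Hat Universal Base Images (UBI). These images are based on Red Hat Enterprise Linux and are similar to the base images that Red Hat has offered in the past, with one major difference: they are freely redistributable without a Red Hat subscription. As a result, you can build your application on UBI images without having to worry about how the images are shared or needing to create different images for different environments.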

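These UBI images come in standard, minimal, and init versions. You can also use the Red Hat Software Collections images as a foundation for applications that rely on specific runtime environments, such as Node.js, Perl, or Python. Special versions of some of these runtime base images are called Source-to-Image (S2I) images. With S2I images, you can insert your code into a base image environment that is ready to run that code.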

You can use S2I images directly from the OpenShift Dedicated web UI. In the Developer perspective, navigate to the +Add view, and in the Developer Catalog tile, view all of the services that are available in the Developer Catalog.

Figure 6.2. Choose S2I base images for applications that need specific runtimes

6.2.3. Registry options

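A container registry is where you store container images so that you can share them with others and make them available to the platform where they ultimately run. You can choose large public container registries that offer free accounts, or premium versions that offer more storage and special features. You can also install your own registry, either exclusive to your organization or selectively shared with others.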

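To get Red Hat images and certified partner images, you can pull from the Red Hat Registry. The Red Hat Registry has two locations: registry.access.redhat.com, which is unauthenticated and deprecated, and registry.redhat.io, which requires authentication. You can learn about the Red Hat and partner images in the Red Hat Registry from the Container images section of the Red Hat Ecosystem Catalog. Besides listing Red Hat container images, the catalog shows detailed information about the contents and quality of those images, including health scores that are based on applied security updates.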

Large public registries include Docker Hub and Quay.io. The Quay.io registry is owned and managed by Red Hat. Many of the components used in OpenShift Dedicated are stored in Quay.io, including the container images and Operators that are used to deploy OpenShift Dedicated itself. Quay.io can also store other types of content, such as Helm charts.

If you want your own private container registry, OpenShift Dedicated itself includes one, which is installed with OpenShift Dedicated and runs on its cluster. Red Hat also offers a private version of the Quay.io registry called Red Hat Quay. Red Hat Quay includes geo-replication, Git build triggers, Clair image scanning, and many other features.

All of the registries mentioned here can require credentials to download images from them. Some of those credentials are provided cluster-wide by OpenShift Dedicated, while other credentials can be assigned to individuals.

6.3. Creating a Kubernetes manifest for OpenShift Dedicated

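Although the container image is the basic building block of a containerized application, more information is required to manage and deploy that application in a Kubernetes environment such as OpenShift Dedicated. The typical next steps after you create an image are to: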

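- Understand the different resources that you work with in Kubernetes manifests

- Decide what kind of application you are running

- Gather supporting components

- Create a manifest and store it in a Git repository. Keeping the manifest in a source version control system lets you audit and track it, promote and deploy it to the next environment, roll it back to an earlier version if necessary, and share it with others.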

6.3.1. About Kubernetes pods and services

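Whereas the container image is the basic unit with Docker, the basic unit that Kubernetes works with is called a pod. Pods are the next step in building out an application. A pod can contain one or more containers. The key point is that the pod is the single unit that you deploy, scale, and manage.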

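Scalability and namespaces are probably the main things to consider when you decide what goes in a pod. For ease of deployment, you might deploy a container in a pod and include its own logging and monitoring containers in the same pod. Later, when you run the pod and need to scale up an additional instance, those other containers are scaled up with it. As for namespaces (here, the Linux kernel namespaces that isolate processes), the containers in a pod share the same network interfaces, shared storage volumes, and resource limits, such as memory and CPU. This makes it easier to manage the contents of the pod as a single unit. Containers in a pod can also communicate with each other by using standard inter-process communication mechanisms, such as System V semaphores or POSIX shared memory.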

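While individual pods are the scalable unit in Kubernetes, a service provides a way to group a set of pods together into a complete, stable application that can perform tasks such as load balancing. A service is also more permanent than a pod, because the service remains available at the same IP address until you delete it. When the service is in use, clients request it by name, and the OpenShift Dedicated cluster resolves that name to the IP addresses and ports where the pods that make up the service can be reached.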
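A service finds its pods through a label selector. The following Python sketch models that matching rule (every key and value in the selector must appear in the pod's labels) and builds a v1 Service manifest as a dict that can be written out as JSON for oc apply -f. The names myapp and other, and the port numbers, are hypothetical.

```python
import json
from typing import Dict


def service_selects(selector: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """True when every key/value pair of the Service selector appears in the pod's labels."""
    if not selector:
        # A Service without a selector does not pick up pods automatically.
        return False
    return all(pod_labels.get(k) == v for k, v in selector.items())


def service_manifest(name: str, selector: Dict[str, str], port: int, target_port: int) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": selector,
            # Clients connect to `port`; traffic is forwarded to `targetPort` on the pods.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


svc = service_manifest("myapp", {"app": "myapp"}, 80, 8080)
pods = {"myapp-1": {"app": "myapp", "tier": "web"}, "other-1": {"app": "other"}}
print([name for name, labels in pods.items() if service_selects(svc["spec"]["selector"], labels)])
# ['myapp-1']
print(json.dumps(svc, indent=2))  # JSON manifest, for example for oc apply -f
```

Extra labels on a pod (tier here) do not prevent a match, which is what lets one pod be selected by several services.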

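By their nature, containerized applications are separated from the operating systems where they run and, by extension, from their users. Part of your Kubernetes manifest describes how to expose the application to internal and external networks by defining network policies, which allow fine-grained control over communication with your containerized applications. To connect incoming HTTP, HTTPS, and other requests from outside your cluster to services inside your cluster, you can use an Ingress resource.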

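If your container requires on-disk storage instead of database storage, which might be provided through a service, you can add volumes to your manifests to make that storage available to your pods. You can configure the manifests to create persistent volumes (PVs) or to dynamically create volumes that are added to your pod definitions.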
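The following Python sketch shows one common way to wire this up: a PersistentVolumeClaim, and a pod that mounts the claim through a named volume. The claim, pod, image, size, and mount path values are hypothetical. Omitting storageClassName assumes that the cluster has a default storage class that can provision the volume dynamically.

```python
import json
from typing import Optional


def pvc_manifest(name: str, size: str, storage_class: Optional[str] = None) -> dict:
    spec = {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class:
        spec["storageClassName"] = storage_class
    # With no storageClassName, the cluster's default storage class (if any) applies,
    # which can let a volume be provisioned dynamically.
    return {"apiVersion": "v1", "kind": "PersistentVolumeClaim",
            "metadata": {"name": name}, "spec": spec}


def pod_with_claim(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                # Mount the volume named "data" at mount_path inside the container.
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            # The pod-level volume "data" is backed by the claim.
            "volumes": [{"name": "data",
                         "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }


claim = pvc_manifest("myapp-data", "1Gi")
pod = pod_with_claim("myapp", "quay.io/myrepo/myapp:latest", "myapp-data", "/var/lib/myapp")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [claim, pod]}, indent=2))
```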

After you define the group of pods that make up your application, you can define those pods in Deployment and DeploymentConfig objects.

6.3.2. Application types

Next, consider how your application type influences how it should run.

Kubernetes defines different types of workloads that suit different kinds of applications. To determine the appropriate workload for your application, consider whether the application is:

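- Meant to run to completion and be done. For example, an application that starts up to produce a report is expected to exit when the report is complete, and it might not run again for a month. Suitable OpenShift Dedicated objects for these types of applications include Job and CronJob objects.

- Expected to run continuously. For long-running applications, you can write a deployment.

- Required to be highly available. If your application requires high availability, size your deployment to have more than one instance. A Deployment or DeploymentConfig object can incorporate a replica set for this type of application. With replica sets, pods run across multiple nodes, so the application stays available even if a worker goes down.

- Required to run on every node. Some types of Kubernetes applications are intended to run in your cluster on every master or worker node. DNS and monitoring applications are examples of applications that need to run continuously on every node. You can run this type of application as a daemon set. You can also run a daemon set on a subset of nodes, based on node labels.

- Required to have lifecycle management. When you want to hand off your application so that others can use it, consider creating an Operator. Operators let you build in intelligence, so that they can handle tasks such as backups and upgrades automatically. Combined with Operator Lifecycle Manager (OLM), Operators can be exposed by cluster managers to selected namespaces so that users in the cluster can run them.

- Subject to identity or numbering requirements. An application might have identity or numbering requirements. For example, you might be required to run exactly three instances of the application and to name the instances 0, 1, and 2. A stateful set is suitable for this application. Stateful sets are most useful for applications that require independent storage, such as databases and ZooKeeper clusters.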
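The rules of thumb in this list can be condensed into a small decision function. The following Python sketch is a hypothetical helper, not an OpenShift API: it maps a few application traits to a workload kind and a minimum replica count, using two replicas as the smallest size that satisfies "more than one instance" for high availability.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class AppTraits:
    runs_to_completion: bool = False  # for example, generates a report and exits
    on_schedule: bool = False         # for example, runs once a month
    every_node: bool = False          # for example, DNS or monitoring agents
    stable_identity: bool = False     # for example, instances named 0, 1, and 2
    highly_available: bool = False


def suggest_workload(t: AppTraits) -> Tuple[str, Optional[int]]:
    """Return (workload kind, minimum replicas); None where replicas do not apply."""
    if t.runs_to_completion:
        return ("CronJob" if t.on_schedule else "Job", None)
    if t.every_node:
        return ("DaemonSet", None)  # one pod per (selected) node, not a replica count
    replicas = 2 if t.highly_available else 1  # HA needs more than one instance
    if t.stable_identity:
        return ("StatefulSet", replicas)
    return ("Deployment", replicas)


print(suggest_workload(AppTraits(runs_to_completion=True, on_schedule=True)))  # ('CronJob', None)
print(suggest_workload(AppTraits(highly_available=True)))                      # ('Deployment', 2)
```

Lifecycle management is deliberately absent: an Operator is not a workload kind of its own, but a controller that creates and manages objects such as these on your behalf.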

6.3.3. Available supporting components

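The application that you write might need supporting components, such as a database or a logging component. To meet that need, you might be able to get the required component from the following catalogs, which are available in the OpenShift Dedicated web console: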

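- Software catalog, which is available in each OpenShift Dedicated 4 cluster. The software catalog makes Operators from Red Hat, certified Red Hat partners, and community members available to the cluster operator. The cluster operator can make those Operators available in all namespaces or in selected namespaces in the cluster, so developers can launch them and configure them with their applications.

- Templates, which are useful for one-off types of applications, where the lifecycle of a component is not important after it is installed. A template provides an easy way to start developing a Kubernetes application with minimal overhead. A template can be a list of resource definitions, such as Deployment, Service, Route, or other objects. If you want to change names or resources, you can set these values as parameters in the template.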

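You can configure the supporting Operators and templates for the specific needs of your development team, and then make them available in the namespaces where your developers work. Many people add shared templates to the openshift namespace because it is accessible from all other namespaces.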
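OpenShift templates mark parameters with ${PARAMETER_NAME} placeholders, and oc process fills them in. The following Python sketch imitates that substitution for illustration only; it is not how oc process is implemented. It uses string.Template, whose ${NAME} syntax happens to match. The template contents and parameter names are hypothetical, and the sketch assumes that every placeholder has a value.

```python
import json
from string import Template
from typing import Dict, List

# Hypothetical, trimmed-down template: a parameter list plus objects that use ${PARAM}.
TEMPLATE = {
    "parameters": [
        {"name": "NAME", "value": "myapp"},      # has a default value
        {"name": "IMAGE", "required": True},      # must be supplied
    ],
    "objects": [{
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "${NAME}", "labels": {"app": "${NAME}"}},
        "spec": {"containers": [{"name": "app", "image": "${IMAGE}"}]},
    }],
}


def process(template: dict, overrides: Dict[str, str]) -> List[dict]:
    values: Dict[str, str] = {}
    for param in template["parameters"]:
        name = param["name"]
        if name in overrides:
            value = overrides[name]
        elif "value" in param:
            value = param["value"]
        elif param.get("required"):
            raise ValueError(f"missing required parameter {name}")
        else:
            value = ""
        # JSON-escape the value so it can be spliced into the serialized objects.
        values[name] = json.dumps(str(value))[1:-1]
    # Substitute ${NAME}-style placeholders in the serialized objects, then parse back.
    text = Template(json.dumps(template["objects"])).substitute(values)
    return json.loads(text)


objects = process(TEMPLATE, {"IMAGE": "quay.io/myrepo/myapp:latest"})
print(objects[0]["metadata"]["name"], objects[0]["spec"]["containers"][0]["image"])
# myapp quay.io/myrepo/myapp:latest
```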

6.3.4. Applying the manifest

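Kubernetes manifests give you a more complete picture of the components that make up your Kubernetes applications. You write these manifests as YAML files and deploy them by applying them to the cluster, for example by running the oc apply command.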

6.3.5. Next steps

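At this point, consider ways to automate your container development process. Ideally, you have a CI pipeline that builds the images and pushes them to a registry. In particular, a GitOps pipeline integrates your container development with the Git repositories that store the software required to build your applications.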

The workflow up to this point might look like this:

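- Day 1: You write some YAML. You then run the oc apply command to apply that YAML to the cluster and test that it works.

- Day 2: You put your YAML container configuration file into your own Git repository. From there, people who want to install the app, or help you improve it, can pull down the YAML and apply it to their cluster to run the app.

- Day 3: Consider writing an Operator for your application.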

6.4. Developing for Operators

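If you make your application available for others to run, packaging and deploying it as an Operator might be the better choice. As noted earlier, Operators add a lifecycle component to your application, which acknowledges that the job of running an application is not finished as soon as it is installed.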

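When you create an application as an Operator, you can build in your own knowledge of how to run and maintain the application. You can build in features for upgrading the application, backing it up, scaling it, or tracking its state. If you configure the application correctly, maintenance tasks, such as updating the Operator, can happen automatically and invisibly to the Operator's users.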

One example of a useful Operator is one that is set up to back up data automatically at particular times. Because the Operator manages the application's backups at the set times, a system administrator does not have to remember to do it.

Any application maintenance that has traditionally been done by hand, such as backing up data or rotating certificates, can be automated with an Operator.
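At the heart of such an Operator is a reconcile loop that compares the desired state (the custom resource's spec) with the observed state (its status) and acts only when they differ. Real Operators are typically written in Go with a framework such as the Operator SDK. The following Python sketch models only the decision logic for the backup example, using hypothetical BackupSpec and BackupStatus types.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Optional


@dataclass
class BackupSpec:             # desired state, as a hypothetical custom resource spec
    interval: timedelta


@dataclass
class BackupStatus:           # observed state, recorded by the Operator
    last_backup: Optional[datetime] = None


def reconcile(spec: BackupSpec, status: BackupStatus, now: datetime,
              run_backup: Callable[[], None]) -> datetime:
    """One reconcile pass: back up only if a backup is due; return the next check time."""
    due = status.last_backup is None or now - status.last_backup >= spec.interval
    if due:
        run_backup()
        status.last_backup = now
    return status.last_backup + spec.interval


calls = []
spec, status = BackupSpec(interval=timedelta(days=1)), BackupStatus()
t0 = datetime(2024, 1, 1)
print(reconcile(spec, status, t0, lambda: calls.append("backup")))                       # 2024-01-02 00:00:00
print(reconcile(spec, status, t0 + timedelta(hours=1), lambda: calls.append("backup")))  # 2024-01-02 00:00:00
print(calls)  # ['backup']
```

Because the function is idempotent (calling it again before the interval has passed does nothing), it is safe to run on every event or timer tick, which is the property that reconcile loops rely on.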

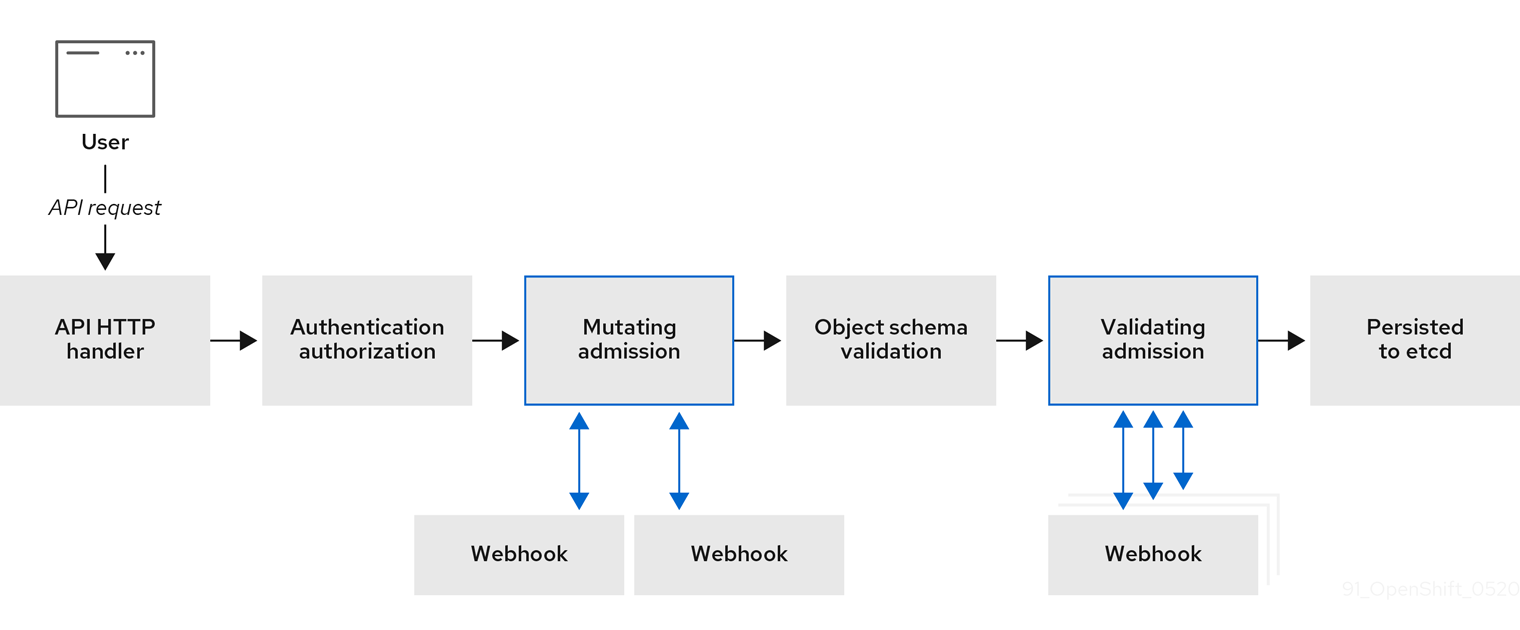

Chapter 7. Admission plugins

Admission plugins are used to help regulate how OpenShift Dedicated functions.

7.1. About admission plugins

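Admission plugins intercept requests to the master API to validate resource requests. After a request is authenticated and authorized, the admission plugins ensure that any associated policies are followed. For example, they are commonly used to enforce security policy, resource limitations, or configuration requirements.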

Admission plugins run in sequence as an admission chain. If any admission plugin in the sequence rejects a request, the whole chain is aborted and an error is returned.

OpenShift Dedicated has a default set of admission plugins enabled for each resource type. They are required for the master to function properly. Admission plugins ignore resources that they are not responsible for.

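In addition to the defaults, the admission chain can be extended dynamically through webhook admission plugins that call out to custom webhook servers. There are two types of webhook admission plugins: a mutating admission plugin and a validating admission plugin. The mutating admission plugin runs first and can both modify resources and validate requests. The validating admission plugin validates requests and runs after the mutating admission plugin, so that modifications triggered by the mutating admission plugin can also be validated.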
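The ordering described above (mutations first, then validations, with the first rejection stopping everything) can be modeled in a few lines. The following Python sketch is a simplified in-process model with hypothetical plugin functions. Real plugins are compiled into the API server or reached over HTTPS as webhooks, and the sketch calls every plugin in order.

```python
from typing import Callable, List, Optional


class AdmissionRejected(Exception):
    """Raised by any plugin to reject the request and abort the chain."""


Mutator = Callable[[dict], dict]             # may return a modified object, or raise
Validator = Callable[[dict], Optional[str]]  # None admits; a string is a rejection reason


def run_admission_chain(obj: dict, mutators: List[Mutator],
                        validators: List[Validator]) -> dict:
    # Mutating phase first, in order; each plugin sees the previous plugin's output.
    for mutate in mutators:
        obj = mutate(obj)
    # Validating phase afterwards, so it also checks the mutations.
    for validate in validators:
        reason = validate(obj)
        if reason is not None:
            raise AdmissionRejected(reason)  # first rejection stops the chain
    return obj


def add_default_label(obj: dict) -> dict:
    labels = obj.setdefault("metadata", {}).setdefault("labels", {})
    labels.setdefault("env", "dev")
    return obj


def require_env_label(obj: dict) -> Optional[str]:
    labels = obj.get("metadata", {}).get("labels", {})
    return None if "env" in labels else "missing env label"


admitted = run_admission_chain({"metadata": {"name": "p"}}, [add_default_label], [require_env_label])
print(admitted["metadata"]["labels"])  # {'env': 'dev'}
```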

Calling webhook servers through a mutating admission plugin can produce side effects on resources that are related to the target object. In such situations, you must take steps to verify that the end result is as expected.

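Use dynamic admission cautiously, because it affects cluster control plane operations. When you call webhook servers through webhook admission plugins in OpenShift Dedicated 4.14, make sure that you have read the documentation fully and have tested for the side effects of mutations. If a request does not pass through the entire admission chain, include steps to restore resources to the state they were in before the mutation.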

7.2. Default admission plugins

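Default validating and mutating admission plugins are enabled in OpenShift Dedicated 4. These default plugins contribute to fundamental control plane functionality, such as ingress policy, cluster resource limit override, and quota policy.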

Do not run workloads in, or share access to, default projects. Default projects are reserved for running core cluster components.

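The following default projects are considered highly privileged: default, kube-public, kube-system, openshift, openshift-infra, openshift-node, and any other system-created project that has the openshift.io/run-level label set to 0 or 1. Functionality that relies on admission plugins, such as pod security admission, security context constraints, cluster resource quotas, and image reference resolution, does not work in highly privileged projects.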

The following lists contain the default admission plugins:

Example 7.1. Validating admission plugins

- LimitRanger

- ServiceAccount

- PodNodeSelector

- Priority

- PodTolerationRestriction

- OwnerReferencesPermissionEnforcement

- PersistentVolumeClaimResize

- RuntimeClass

- CertificateApproval

- CertificateSigning

- CertificateSubjectRestriction

- autoscaling.openshift.io/ManagementCPUsOverride

- authorization.openshift.io/RestrictSubjectBindings

- scheduling.openshift.io/OriginPodNodeEnvironment

- network.openshift.io/ExternalIPRanger

- network.openshift.io/RestrictedEndpointsAdmission

- image.openshift.io/ImagePolicy

- security.openshift.io/SecurityContextConstraint

- security.openshift.io/SCCExecRestrictions

- route.openshift.io/IngressAdmission

- config.openshift.io/ValidateAPIServer

- config.openshift.io/ValidateAuthentication

- config.openshift.io/ValidateFeatureGate

- config.openshift.io/ValidateConsole

- operator.openshift.io/ValidateDNS

- config.openshift.io/ValidateImage

- config.openshift.io/ValidateOAuth

- config.openshift.io/ValidateProject

- config.openshift.io/DenyDeleteClusterConfiguration

- config.openshift.io/ValidateScheduler

- quota.openshift.io/ValidateClusterResourceQuota

- security.openshift.io/ValidateSecurityContextConstraints

- authorization.openshift.io/ValidateRoleBindingRestriction

- config.openshift.io/ValidateNetwork

- operator.openshift.io/ValidateKubeControllerManager

- ValidatingAdmissionWebhook

- ResourceQuota

- quota.openshift.io/ClusterResourceQuota

Example 7.2. Mutating admission plugins

- NamespaceLifecycle

- LimitRanger

- ServiceAccount

- NodeRestriction

- TaintNodesByCondition

- PodNodeSelector

- Priority

- DefaultTolerationSeconds

- PodTolerationRestriction

- DefaultStorageClass

- StorageObjectInUseProtection

- RuntimeClass

- DefaultIngressClass

- autoscaling.openshift.io/ManagementCPUsOverride

- scheduling.openshift.io/OriginPodNodeEnvironment

- image.openshift.io/ImagePolicy

- security.openshift.io/SecurityContextConstraint

- security.openshift.io/DefaultSecurityContextConstraints

- MutatingAdmissionWebhook

7.3. Webhook admission plugins

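In addition to the OpenShift Dedicated default admission plugins, you can implement dynamic admission through webhook admission plugins, which call webhook servers to extend the functionality of the admission chain. Webhook servers are called over HTTPS at defined endpoints.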

OpenShift Dedicated has two types of webhook admission plugins:

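- During the admission process, the mutating admission plugin can perform tasks such as injecting affinity labels.

- At the end of the admission process, the validating admission plugin can be used to make sure that an object is configured properly, for example by checking that affinity labels are as expected. If the validation passes, OpenShift Dedicated schedules the object as configured.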

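When an API request comes in, the mutating or validating admission plugin uses the list of external webhooks in its configuration and calls them. Validating webhooks are called in parallel; mutating webhooks are called one after another, because each one can modify the object that the next one receives.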

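- If all of the webhooks approve the request, the admission chain continues.

- If any of the webhooks denies the request, the admission request is denied, and the reason given is based on the first denial.

- If more than one webhook denies the admission request, only the first denial reason is returned to the user.

- If an error occurs when a webhook is called, either the request is denied or the webhook is ignored, depending on the failure policy that is set. If the failure policy is set to Ignore, the request is unconditionally accepted in the event of a failure. If the policy is set to Fail, the failed request is denied. Using Ignore can result in unpredictable behavior for all clients.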
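The following Python sketch models these rules for validating webhooks: the calls run in parallel, a call that raises is handled according to its failure policy, and only the first denial reason is returned. In this sketch, "first" means first in the configured order, which pool.map preserves. The Webhook type is hypothetical, and its call field stands in for the real HTTPS request.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Verdict = Tuple[bool, Optional[str]]  # (allowed, denial reason)


@dataclass
class Webhook:
    name: str
    call: Callable[[dict], Verdict]  # stands in for the HTTPS call; may raise
    failure_policy: str = "Fail"     # "Fail" or "Ignore"


def run_validating_webhooks(obj: dict, hooks: List[Webhook]) -> Verdict:
    def invoke(hook: Webhook) -> Verdict:
        try:
            return hook.call(obj)
        except Exception as exc:  # connection error, timeout, or a bad response
            if hook.failure_policy == "Ignore":
                return True, None  # the failure is ignored and the request is accepted
            return False, f"{hook.name}: call failed: {exc}"

    # Validating webhooks are called in parallel; map() keeps the configured order.
    with ThreadPoolExecutor() as pool:
        verdicts = list(pool.map(invoke, hooks))
    for allowed, reason in verdicts:
        if not allowed:
            return False, reason  # only the first denial reason is returned
    return True, None


def timed_out(obj: dict) -> Verdict:
    raise TimeoutError("timed out")


hooks = [Webhook("labels", lambda o: (True, None)),
         Webhook("quota", lambda o: (False, "quota exceeded")),
         Webhook("flaky", timed_out, failure_policy="Ignore")]
print(run_validating_webhooks({}, hooks))  # (False, 'quota exceeded')
```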

The following diagram shows the sequential admission chain process, in which multiple webhook servers are called.

Figure 7.1. API admission chain with mutating and validating admission plugins

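One example use case for webhook admission plugins is a requirement that all pods have a common set of labels. In this example, the mutating admission plugin can inject the labels, and the validating admission plugin can check that the labels are as expected. OpenShift Dedicated then schedules pods that include the required labels and rejects pods that do not.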
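The following Python sketch shows both halves of this use case. The mutating side computes a JSON Patch (RFC 6902) that adds the required labels and wraps it in an admission.k8s.io/v1 AdmissionReview response, with the patch base64-encoded and patchType set to JSONPatch. The validating side checks for the labels. The label team=payments is a hypothetical example, and the HTTPS server that would receive the AdmissionReview request is omitted.

```python
import base64
import json
from typing import Dict, List

REQUIRED_LABELS: Dict[str, str] = {"team": "payments"}  # hypothetical common labels


def label_patch(pod: dict, required: Dict[str, str]) -> List[dict]:
    """JSON Patch (RFC 6902) operations that add or correct the required labels."""
    labels = pod.get("metadata", {}).get("labels")
    if labels is None:
        # No labels map yet: create it in a single operation.
        return [{"op": "add", "path": "/metadata/labels", "value": dict(required)}]
    ops = []
    for key, value in required.items():
        if labels.get(key) != value:
            # JSON Pointer escaping: "~" becomes "~0" and "/" becomes "~1".
            escaped = key.replace("~", "~0").replace("/", "~1")
            ops.append({"op": "add", "path": f"/metadata/labels/{escaped}", "value": value})
    return ops


def mutating_review_response(uid: str, pod: dict) -> dict:
    """AdmissionReview (admission.k8s.io/v1) response carrying a base64-encoded patch."""
    response = {"uid": uid, "allowed": True}
    patch = label_patch(pod, REQUIRED_LABELS)
    if patch:
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(json.dumps(patch).encode()).decode()
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}


def has_required_labels(pod: dict) -> bool:
    """The check a validating webhook could run after the mutation."""
    labels = pod.get("metadata", {}).get("labels") or {}
    return all(labels.get(k) == v for k, v in REQUIRED_LABELS.items())


pod = {"metadata": {"name": "p", "labels": {"app": "myapp"}}}
print(label_patch(pod, REQUIRED_LABELS))
# [{'op': 'add', 'path': '/metadata/labels/team', 'value': 'payments'}]
```

The JSON Patch add operation replaces a member that already exists, so the same operation both adds a missing label and corrects a wrong value.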

Common webhook admission plugin use cases include:

- Namespace reservation.

- Limiting custom network resources that are managed by the SR-IOV network device plugin.

- Pod priority class validation.

The maximum default webhook timeout value in OpenShift Dedicated is 13 seconds, and it cannot be changed.

7.4. Types of webhook admission plugins

Cluster administrators can call out to webhook servers through the mutating admission plugin or the validating admission plugin in the API server admission chain.

7.4.1. Mutating admission plugin

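The mutating admission plugin is invoked during the mutation phase of the admission process, which allows resource content to be modified before it is persisted. One example of a webhook that can be called through the mutating admission plugin is the Pod Node Selector feature, which uses an annotation on a namespace to find a label selector and adds it to the pod specification.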
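The following Python sketch models that mutation. It reads a comma-separated key=value selector from a namespace annotation and merges it into the pod's nodeSelector. The annotation key openshift.io/node-selector is an assumption for this sketch, and only equality selectors are parsed. In this sketch, the namespace value overwrites a conflicting pod value; the real plugin might instead reject such a pod.

```python
from typing import Dict

# Assumed annotation key for a project's default node selector (sketch only).
NODE_SELECTOR_ANNOTATION = "openshift.io/node-selector"


def parse_selector(text: str) -> Dict[str, str]:
    """Parse "key1=value1,key2=value2" (equality-based selectors only)."""
    result: Dict[str, str] = {}
    for part in (p.strip() for p in text.split(",")):
        if part:
            key, _, value = part.partition("=")
            result[key.strip()] = value.strip()
    return result


def apply_namespace_node_selector(namespace: dict, pod: dict) -> dict:
    """Mutation step: merge the namespace's node selector into the pod spec."""
    annotations = namespace.get("metadata", {}).get("annotations") or {}
    ns_selector = parse_selector(annotations.get(NODE_SELECTOR_ANNOTATION, ""))
    spec = pod.setdefault("spec", {})
    merged = dict(spec.get("nodeSelector") or {})
    merged.update(ns_selector)  # in this sketch the namespace value wins on conflict
    if merged:
        spec["nodeSelector"] = merged
    return pod


ns = {"metadata": {"annotations": {NODE_SELECTOR_ANNOTATION: "region=east, type=gpu"}}}
pod = {"spec": {"nodeSelector": {"disk": "ssd"}}}
print(apply_namespace_node_selector(ns, pod)["spec"]["nodeSelector"])
# {'disk': 'ssd', 'region': 'east', 'type': 'gpu'}
```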

Sample mutating admission plugin configuration:

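- 1: Specifies a mutating admission plugin configuration.

- 2: The name for the MutatingWebhookConfiguration object. Replace <webhook_name> with the appropriate value.

- 3: The name of the webhook to call. Replace <webhook_name> with the appropriate value.

- 4: Information about how to connect to, trust, and send data to the webhook server.

- 5: The namespace where the front-end service is created.

- 6: The name of the front-end service.

- 7: The webhook URL used for admission requests. Replace <webhook_url> with the appropriate value.

- 8: A PEM-encoded CA certificate that signs the server certificate that is used by the webhook server. Replace <ca_signing_certificate> with the appropriate certificate in base64 format.

- 9: Rules that define when the API server must use this webhook admission plugin.

- 10: One or more operations that trigger the API server to call this webhook admission plugin. Possible values are CREATE, UPDATE, DELETE, or CONNECT. Replace <operation> and <resource> with the appropriate values.

- 11: Specifies how the policy proceeds if the webhook server is unavailable. Replace <policy> with either Ignore (to unconditionally accept the request in the event of a failure) or Fail (to deny the failed request). Using Ignore can result in unpredictable behavior for all clients.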
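The following Python sketch builds a configuration with the fields that these callouts describe, using the admissionregistration.k8s.io/v1 API. The numbers in the comments show which callout each field is assumed to correspond to. The values pod-labels.example.com, webhook-system, pod-labels, /mutate, and the placeholder CA bundle are hypothetical. Two fields not covered by the callouts, sideEffects and admissionReviewVersions, are required by the v1 API. The webhook name must be a fully qualified name with at least three dot-separated segments.

```python
import json
from typing import List

ALLOWED_OPERATIONS = {"CREATE", "UPDATE", "DELETE", "CONNECT", "*"}


def mutating_webhook_configuration(
        webhook_name: str, namespace: str, service_name: str, webhook_path: str,
        ca_bundle_b64: str, operations: List[str], resources: List[str],
        policy: str) -> dict:
    if policy not in ("Ignore", "Fail"):
        raise ValueError("policy must be Ignore or Fail")
    unknown = set(operations) - ALLOWED_OPERATIONS
    if unknown:
        raise ValueError(f"unsupported operations: {sorted(unknown)}")
    return {
        "apiVersion": "admissionregistration.k8s.io/v1",
        "kind": "MutatingWebhookConfiguration",           # (1)
        "metadata": {"name": webhook_name},                 # (2)
        "webhooks": [{
            "name": webhook_name,                           # (3) 3+ dot-separated segments
            "clientConfig": {                               # (4)
                "service": {
                    "namespace": namespace,                 # (5)
                    "name": service_name,                   # (6)
                    "path": webhook_path,                   # (7)
                },
                "caBundle": ca_bundle_b64,                  # (8) base64-encoded PEM CA bundle
            },
            "rules": [{                                     # (9)
                "operations": operations,                   # (10)
                "apiGroups": [""],                          # "" is the core API group
                "apiVersions": ["v1"],
                "resources": resources,
            }],
            "failurePolicy": policy,                        # (11)
            # Both fields below are required by the v1 API.
            "sideEffects": "None",
            "admissionReviewVersions": ["v1"],
        }],
    }


config = mutating_webhook_configuration(
    "pod-labels.example.com", "webhook-system", "pod-labels", "/mutate",
    "<base64 CA bundle>", ["CREATE"], ["pods"], "Fail")
print(json.dumps(config, indent=2))
```

The operations check rejects lowercase values such as create, which the API server does not accept.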

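In OpenShift Dedicated 4.14, using a mutating admission plugin on objects that are created by users or by control loops is not recommended, because it can return unexpected results, for example when values that were set in the initial request are overwritten.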

7.4.2. Validating admission plugin

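A validating admission plugin is invoked during the validation phase of the admission process. This phase lets you enforce invariants on particular API resources, which ensures that the resource does not change again. The Pod Node Selector is also an example of a webhook that is called by the validating admission plugin, to ensure that all nodeSelector fields are constrained by the node selector restrictions on the namespace.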
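As a sketch of the validation side of this example, the following Python function admits a pod only if its nodeSelector contains every key and value that the namespace requires, and otherwise returns a denial reason. The function name and message are hypothetical, and the namespace selector is assumed to have been parsed into a dict already.

```python
from typing import Dict, Optional, Tuple


def validate_node_selector(ns_selector: Dict[str, str], pod: dict) -> Tuple[bool, Optional[str]]:
    """Admit the pod only if its nodeSelector contains every namespace-required pair."""
    pod_selector = pod.get("spec", {}).get("nodeSelector") or {}
    violations = sorted(k for k, v in ns_selector.items() if pod_selector.get(k) != v)
    if violations:
        return False, "nodeSelector violates namespace restriction on: " + ", ".join(violations)
    return True, None


print(validate_node_selector({"region": "east"}, {"spec": {"nodeSelector": {"region": "west"}}}))
# (False, 'nodeSelector violates namespace restriction on: region')
```

Because this check runs after the mutation phase, a pod that a mutating plugin has already given the namespace's selector passes, while a pod whose selector was changed to contradict it is rejected.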

Sample validating admission plugin configuration:

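- 1: Specifies a validating admission plugin configuration.

- 2: The name for the ValidatingWebhookConfiguration object. Replace <webhook_name> with the appropriate value.

- 3: The name of the webhook to call. Replace <webhook_name> with the appropriate value.

- 4: Information about how to connect to, trust, and send data to the webhook server.

- 5: The namespace where the front-end service is created.

- 6: The name of the front-end service.

- 7: The webhook URL used for admission requests. Replace <webhook_url> with the appropriate value.

- 8: A PEM-encoded CA certificate that signs the server certificate that is used by the webhook server. Replace <ca_signing_certificate> with the appropriate certificate in base64 format.

- 9: Rules that define when the API server must use this webhook admission plugin.

- 10: One or more operations that trigger the API server to call this webhook admission plugin. Possible values are CREATE, UPDATE, DELETE, or CONNECT. Replace <operation> and <resource> with the appropriate values.

- 11: Specifies how the policy proceeds if the webhook server is unavailable. Replace <policy> with either Ignore (to unconditionally accept the request in the event of a failure) or Fail (to deny the failed request). Using Ignore can result in unpredictable behavior for all clients.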
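A webhook server registered this way receives an AdmissionReview request and must answer with an AdmissionReview response that echoes the request's uid and sets allowed. The following Python sketch builds that response for a hypothetical validating rule that requires an app label on pods. The HTTPS server that would call handle_review is omitted, and the 403 status code is an assumed choice for denials.

```python
import json
from typing import Optional


def review_response(request_uid: str, allowed: bool, message: Optional[str] = None) -> dict:
    """admission.k8s.io/v1 AdmissionReview response; the uid must echo the request."""
    response = {"uid": request_uid, "allowed": allowed}
    if not allowed:
        response["status"] = {"code": 403, "message": message or "denied"}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}


def handle_review(body: str) -> str:
    """Hypothetical validating webhook handler: require an "app" label on pods."""
    request = json.loads(body)["request"]
    labels = request["object"].get("metadata", {}).get("labels") or {}
    if "app" not in labels:
        return json.dumps(review_response(request["uid"], False, "pods must have an app label"))
    return json.dumps(review_response(request["uid"], True))


body = json.dumps({"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
                   "request": {"uid": "1234", "object": {"metadata": {"name": "p"}}}})
print(handle_review(body))
```

The message in status is what the user sees when the request is denied. If several webhooks deny the same request, only the first denial reason is returned.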

Legal Notice

Copyright © Red Hat

OpenShift documentation is licensed under the Apache License 2.0 (https://www.apache.org/licenses/LICENSE-2.0).

Modified versions must remove all Red Hat trademarks.

Portions adapted from https://github.com/kubernetes-incubator/service-catalog/ with modifications by Red Hat.

Red Hat, Red Hat Enterprise Linux, the Red Hat logo, the Shadowman logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries.

Linux® is the registered trademark of Linus Torvalds in the United States and other countries.

Java® is a registered trademark of Oracle and/or its affiliates.

XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries.

MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

Node.js® is an official trademark of the OpenJS Foundation.

The OpenStack® Word Mark and OpenStack logo are either registered trademarks/service marks or trademarks/service marks of the OpenStack Foundation, in the United States and other countries and are used with the OpenStack Foundation’s permission. We are not affiliated with, endorsed or sponsored by the OpenStack Foundation, or the OpenStack community.

All other trademarks are the property of their respective owners.