Support

OpenShift Dedicated Support.

Chapter 1. Support overview

Red Hat offers cluster administrators tools for gathering data about a cluster, monitoring its health, and troubleshooting issues.

1.1. Get support

Get support: Visit the Red Hat Customer Portal to review knowledge base articles, submit a support case, and review additional product documentation and resources.

1.2. Remote health monitoring issues

Remote health monitoring issues: OpenShift Dedicated collects telemetry and configuration data about your cluster and reports it to Red Hat by using the Telemeter Client and the Insights Operator. Red Hat uses this data to understand and resolve issues in a connected cluster. OpenShift Dedicated collects data and monitors health using the following:

Telemetry: The Telemeter Client gathers and uploads the metrics values to Red Hat every four minutes and thirty seconds. Red Hat uses this data to:

- Monitor the clusters.

- Roll out OpenShift Dedicated upgrades.

- Improve the upgrade experience.

Insights Operator: By default, OpenShift Dedicated installs and enables the Insights Operator, which reports configuration and component failure status every two hours. The Insights Operator helps to:

- Identify potential cluster issues proactively.

- Provide a solution and preventive action in Red Hat OpenShift Cluster Manager.

You can review telemetry information.

Remote health reporting is always enabled on OpenShift Dedicated, so you can use Red Hat Lightspeed to identify issues with your cluster.

1.3. Gather data about your cluster

Gather data about your cluster: Red Hat recommends gathering your debugging information when opening a support case. This helps Red Hat Support to perform a root cause analysis. A cluster administrator can use the following to gather data about your cluster:

- must-gather tool: Use the must-gather tool to collect information about your cluster and to debug the issues.

- sosreport: Use the sosreport tool to collect configuration details, system information, and diagnostic data for debugging purposes.

- Cluster ID: Obtain the unique identifier for your cluster, when providing information to Red Hat Support.

- Cluster node journal logs: Gather journald unit logs and logs within /var/log on individual cluster nodes to troubleshoot node-related issues.

- Network trace: Provide a network packet trace from a specific OpenShift Dedicated cluster node or a container to Red Hat Support to help troubleshoot network-related issues.
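As a rough illustration of the data-gathering tools listed above, the following sketch wraps the oc adm must-gather and oc adm node-logs subcommands in small shell functions. The function names and argument values are hypothetical, and the sketch assumes that you are already logged in with a role that is permitted to run these commands on your cluster.

```shell
# Hypothetical wrappers around existing oc subcommands. The function names
# are illustrative only and are not part of OpenShift Dedicated.

# Collect must-gather data into a local directory to attach to a support case.
gather_cluster_data() {
  dest_dir="$1"
  oc adm must-gather --dest-dir="$dest_dir"
}

# Fetch the journald logs of one systemd unit (for example, kubelet) from a node.
gather_node_unit_logs() {
  node_name="$1"
  unit="$2"
  oc adm node-logs "$node_name" -u "$unit"
}

# Fetch a log file or directory listing located under /var/log on a node.
gather_node_log_file() {
  node_name="$1"
  log_path="$2"   # path relative to /var/log
  oc adm node-logs "$node_name" --path="$log_path"
}
```

For example, gather_node_unit_logs <node_name> kubelet prints the kubelet journal for one node, which you can redirect to a file before uploading it to a support case.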

1.4. Troubleshooting issues

A cluster administrator can monitor and troubleshoot the following OpenShift Dedicated component issues:

Node issues: A cluster administrator can verify and troubleshoot node-related issues by reviewing the status, resource usage, and configuration of a node. You can query the following:

- Kubelet’s status on a node.

- Cluster node journal logs.

Operator issues: A cluster administrator can do the following to resolve Operator issues:

- Verify Operator subscription status.

- Check Operator pod health.

- Gather Operator logs.

Pod issues: A cluster administrator can troubleshoot pod-related issues by reviewing the status of a pod and completing the following:

- Review pod and container logs.

- Start debug pods with root access.

Source-to-image issues: A cluster administrator can observe the S2I stages to determine where in the S2I process a failure occurred. Gather the following to resolve Source-to-Image (S2I) issues:

- Source-to-Image diagnostic data.

- Application diagnostic data to investigate application failure.

Storage issues: A multi-attach storage error occurs when a volume cannot be mounted on a new node because the failed node cannot unmount the attached volume. A cluster administrator can do the following to resolve multi-attach storage issues:

- Enable multiple attachments by using RWX volumes.

- Recover or delete the failed node when using an RWO volume.

Monitoring issues: A cluster administrator can follow the procedures on the troubleshooting page for monitoring. If the metrics for your user-defined projects are unavailable or if Prometheus is consuming a lot of disk space, check the following:

- Investigate why user-defined metrics are unavailable.

- Determine why Prometheus is consuming a lot of disk space.

OpenShift CLI (oc) issues: Investigate OpenShift CLI (oc) issues by increasing the log level.
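To make the last item concrete, here is a minimal sketch of rerunning an oc command with a higher client log level by using the global --loglevel flag. The oc_verbose function name is hypothetical, and the level you need depends on how much detail you want to see; higher values print more.

```shell
# Hypothetical helper: rerun any oc subcommand with verbose client logging.
# --loglevel is a global oc flag; the helper name is illustrative only.
oc_verbose() {
  level="$1"   # first argument: the log level to use
  shift        # remaining arguments: the oc subcommand and its options
  oc "$@" --loglevel="$level"
}
```

For example, oc_verbose 6 get pods runs oc get pods --loglevel=6, which adds request-level detail to the output on stderr.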

Chapter 2. Managing your cluster resources

You can apply global configuration options in OpenShift Dedicated. Operators apply these configuration settings across the cluster.

2.1. Interacting with your cluster resources

You can interact with cluster resources by using the OpenShift CLI (oc) tool in OpenShift Dedicated. You can edit the cluster resources that the oc api-resources command lists.

Prerequisites

- You have access to the cluster as a user with the dedicated-admin role.

- You have access to the web console or you have installed the oc CLI tool.

Procedure

To see which configuration Operators have been applied, run the following command:

$ oc api-resources -o name | grep config.openshift.io

To see what cluster resources you can configure, run the following command:

$ oc explain <resource_name>.config.openshift.io

To see the configuration of custom resource definition (CRD) objects in the cluster, run the following command:

$ oc get <resource_name>.config -o yaml

To edit the cluster resource configuration, run the following command:

$ oc edit <resource_name>.config -o yaml
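The read-only steps of this procedure can be combined into one pass. The following sketch lists every resource in the config.openshift.io group and prints its current YAML. The dump_cluster_configs function name is hypothetical, and the sketch assumes that your role can read each of these resources.

```shell
# Hypothetical helper: print the YAML of every config.openshift.io resource.
# It only reads data; it never edits the cluster configuration.
dump_cluster_configs() {
  for resource in $(oc api-resources -o name | grep config.openshift.io); do
    echo "--- $resource"
    oc get "$resource" -o yaml
  done
}
```

Redirecting the output to a file, for example dump_cluster_configs > cluster-configs.yaml, gives you a snapshot to compare against after you edit a resource.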

Chapter 3. Getting support

You can get support for OpenShift Dedicated by searching the knowledge base, submitting a support case, and using remote health monitoring tools.

3.1. Getting support

If you experience difficulty with a procedure described in this documentation, or with OpenShift Dedicated in general, visit the Red Hat Customer Portal.

From the Customer Portal, you can:

- Search or browse through the Red Hat Knowledgebase of articles and solutions relating to Red Hat products.

- Submit a support case to Red Hat Support.

- Access other product documentation.

To identify issues with your cluster, you can use Red Hat Lightspeed in OpenShift Cluster Manager. Red Hat Lightspeed provides details about issues and, if available, information on how to solve a problem.

If you have a suggestion for improving this documentation or have found an error, submit a Jira issue for the most relevant documentation component. Please provide specific details, such as the section name and OpenShift Dedicated version.

3.2. About the Red Hat Knowledgebase

The Red Hat Knowledgebase provides rich content aimed at helping you make the most of Red Hat’s products and technologies. The Red Hat Knowledgebase consists of articles, product documentation, and videos outlining best practices on installing, configuring, and using Red Hat products. In addition, you can search for solutions to known issues, each providing concise root cause descriptions and remedial steps.

3.3. Searching the Red Hat Knowledgebase

In the event of an OpenShift Dedicated issue, you can perform an initial search to determine if a solution already exists within the Red Hat Knowledgebase.

Prerequisites

- You have a Red Hat Customer Portal account.

Procedure

- Log in to the Red Hat Customer Portal.

- Click Search.

In the search field, input keywords and strings relating to the problem, including:

- OpenShift Dedicated components (such as etcd)

- Related procedure (such as installation)

- Warnings, error messages, and other outputs related to explicit failures

- Press the Enter key.

- Optional: Select the OpenShift Dedicated product filter.

- Optional: Select the Documentation content type filter.

3.4. Submitting a support case

Submit a support case to Red Hat Support to get help with issues you encounter with OpenShift Dedicated.

Prerequisites

- You have access to the cluster as a user with the dedicated-admin role.

- You have installed the OpenShift CLI (oc).

- You have access to the Red Hat OpenShift Cluster Manager.

Procedure

- Log in to the Customer Support page of the Red Hat Customer Portal.

- Click Get support.

On the Cases tab of the Customer Support page:

- Optional: Change the pre-filled account and owner details if needed.

- Select the appropriate category for your issue, such as Bug or Defect, and click Continue.

Enter the following information:

- In the Summary field, enter a concise but descriptive problem summary and further details about the symptoms being experienced, as well as your expectations.

- Select OpenShift Dedicated from the Product drop-down menu.

- Review the list of suggested Red Hat Knowledgebase solutions for a potential match against the problem that is being reported. If the suggested articles do not address the issue, click Continue.

- Review the updated list of suggested Red Hat Knowledgebase solutions for a potential match against the problem that is being reported. The list is refined as you provide more information during the case creation process. If the suggested articles do not address the issue, click Continue.

- Ensure that the account information presented is as expected, and if not, amend accordingly.

Check that the autofilled OpenShift Dedicated Cluster ID is correct. If it is not, manually obtain your cluster ID.

To manually obtain your cluster ID using OpenShift Cluster Manager:

- Navigate to Cluster List.

- Click on the name of the cluster you need to open a support case for.

- Find the value in the Cluster ID field of the Details section of the Overview tab.

To manually obtain your cluster ID using the OpenShift Dedicated web console:

- Navigate to Home → Overview.

- Find the value in the Cluster ID field of the Details section.

Alternatively, it is possible to open a new support case through the OpenShift Dedicated web console and have your cluster ID autofilled.

- From the toolbar, navigate to (?) Help → Open Support Case.

- The Cluster ID value is autofilled.

To obtain your cluster ID using the OpenShift CLI (oc), run the following command:

$ oc get clusterversion -o jsonpath='{.items[].spec.clusterID}{"\n"}'

Complete the following questions where prompted and then click Continue:

- What are you experiencing? What are you expecting to happen?

- Define the value or impact to you or the business.

- Where are you experiencing this behavior? What environment?

- When does this behavior occur? Frequency? Repeatedly? At certain times?

- Upload relevant diagnostic data files and click Continue.

- Input relevant case management details and click Continue.

- Preview the case details and click Submit.

Chapter 4. Remote health monitoring with connected clusters

4.1. About remote health monitoring

OpenShift Dedicated collects telemetry and configuration data about your cluster and reports it to Red Hat by using the Telemeter Client and the Insights Operator. The data that is provided to Red Hat enables the benefits outlined in this document.

A cluster that reports data to Red Hat through Telemetry and the Insights Operator is considered a connected cluster.

Telemetry is the term that Red Hat uses to describe the information being sent to Red Hat by the OpenShift Dedicated Telemeter Client. Lightweight attributes are sent from connected clusters to Red Hat to enable subscription management automation, monitor the health of clusters, assist with support, and improve customer experience.

The Insights Operator gathers OpenShift Dedicated configuration data and sends it to Red Hat. The data is used to produce insights about potential issues that a cluster might be exposed to. These insights are communicated to cluster administrators on OpenShift Cluster Manager.

More information is provided in this document about these two processes.

4.1.1. Telemetry and Insights Operator benefits

Telemetry and the Insights Operator enable the following benefits for end-users:

- Enhanced identification and resolution of issues. Events that might seem normal to an end-user can be observed by Red Hat from a broader perspective across a fleet of clusters. Some issues can be more rapidly identified from this point of view and resolved without an end-user needing to open a support case or file a Jira issue.

- Advanced release management. OpenShift Dedicated offers the candidate, fast, and stable release channels, which enable you to choose an update strategy. The graduation of a release from fast to stable is dependent on the success rate of updates and on the events seen during upgrades. With the information provided by connected clusters, Red Hat can improve the quality of releases to stable channels and react more rapidly to issues found in the fast channels.

- Targeted prioritization of new features and functionality. The data collected provides insights about which areas of OpenShift Dedicated are used most. With this information, Red Hat can focus on developing the new features and functionality that have the greatest impact for our customers.

- A streamlined support experience. You can provide a cluster ID for a connected cluster when creating a support ticket on the Red Hat Customer Portal. This enables Red Hat to deliver a streamlined support experience that is specific to your cluster, by using the connected information. This document provides more information about that enhanced support experience.

- Predictive analytics. The insights displayed for your cluster on OpenShift Cluster Manager are enabled by the information collected from connected clusters. Red Hat is investing in applying deep learning, machine learning, and artificial intelligence automation to help identify issues that OpenShift Dedicated clusters are exposed to.

On OpenShift Dedicated, remote health reporting is always enabled. You cannot opt out of it.

4.1.2. About Telemetry

Telemetry sends a carefully chosen subset of the cluster monitoring metrics to Red Hat. The Telemeter Client fetches the metrics values every four minutes and thirty seconds and uploads the data to Red Hat. These metrics are described in this document.

This stream of data is used by Red Hat to monitor the clusters in real-time and to react as necessary to problems that impact our customers. It also allows Red Hat to roll out OpenShift Dedicated upgrades to customers to minimize service impact and continuously improve the upgrade experience.

This debugging information is available to Red Hat Support and Engineering teams with the same restrictions as accessing data reported through support cases. All connected cluster information is used by Red Hat to help make OpenShift Dedicated better and more intuitive to use.

4.1.5. About the Insights Operator

The Insights Operator periodically gathers configuration and component failure status and, by default, reports that data every two hours to Red Hat. This information enables Red Hat to assess configuration and deeper failure data than is reported through Telemetry.

Users of OpenShift Dedicated can display the report of each cluster in the Advisor service on Red Hat Hybrid Cloud Console. If any issues have been identified, Red Hat Lightspeed provides further details and, if available, steps on how to solve a problem.

The Insights Operator does not collect identifying information, such as user names, passwords, or certificates. See Red Hat Lightspeed Data & Application Security for information about Red Hat Lightspeed data collection and controls.

Red Hat uses all connected cluster information to:

- Identify potential cluster issues and provide a solution and preventive actions in the Advisor service on Red Hat Hybrid Cloud Console

- Improve OpenShift Dedicated by providing aggregated and critical information to product and support teams

- Make OpenShift Dedicated more intuitive

4.1.5.1. Information collected by the Insights Operator

The following information is collected by the Insights Operator:

- General information about your cluster and its components to identify issues that are specific to your OpenShift Dedicated version and environment.

- Configuration files, such as the image registry configuration, of your cluster to determine incorrect settings and issues that are specific to parameters you set.

- Errors that occur in the cluster components.

- Progress information of running updates, and the status of any component upgrades.

- Details of the platform that OpenShift Dedicated is deployed on and the region that the cluster is located in.

- If an Operator reports an issue, information is collected about core OpenShift Dedicated pods in the openshift-* and kube-* projects. This includes state, resource, security context, volume information, and more.

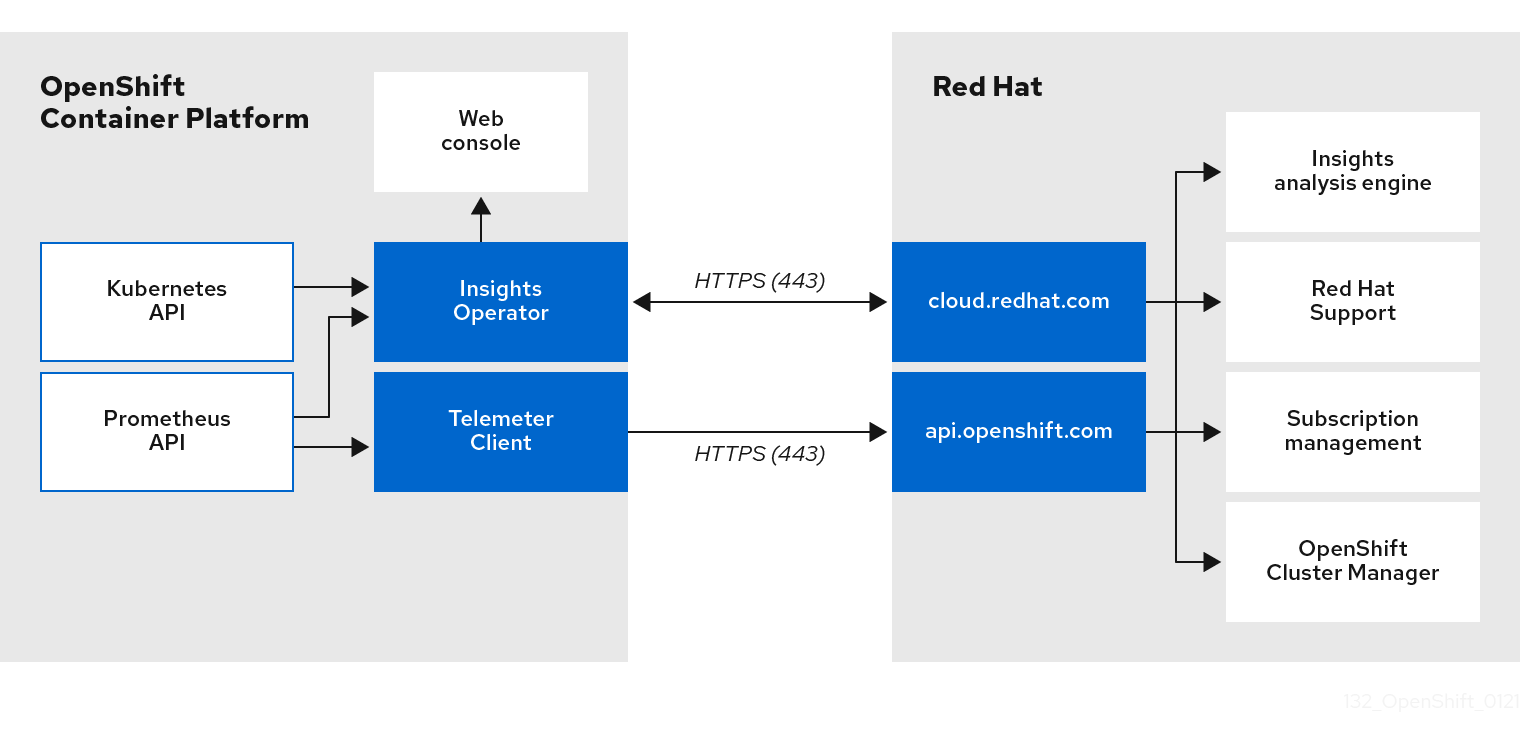

4.1.7. Understanding Telemetry and Insights Operator data flow

The Telemeter Client collects selected time series data from the Prometheus API. The time series data is uploaded to api.openshift.com every four minutes and thirty seconds for processing.

The Insights Operator gathers selected data from the Kubernetes API and the Prometheus API into an archive. The archive is uploaded to OpenShift Cluster Manager every two hours for processing. The Insights Operator also downloads the latest Red Hat Lightspeed analysis from OpenShift Cluster Manager. This is used to populate the Red Hat Lightspeed status pop-up that is included in the Overview page in the OpenShift Dedicated web console.
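To put the two reporting intervals in perspective, the following arithmetic converts them into uploads per day. It is only an illustration, assuming that every upload happens exactly on schedule; the variable names are made up for this example.

```shell
# Convert the documented upload intervals into uploads per day.
seconds_per_day=86400
telemeter_interval=270    # four minutes and thirty seconds, in seconds
insights_interval=7200    # two hours, in seconds

telemeter_uploads=$((seconds_per_day / telemeter_interval))
insights_uploads=$((seconds_per_day / insights_interval))

echo "Telemeter Client uploads per day: $telemeter_uploads"    # 320
echo "Insights Operator uploads per day: $insights_uploads"    # 12
```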

All of the communication with Red Hat occurs over encrypted channels by using Transport Layer Security (TLS) and mutual certificate authentication. All of the data is encrypted in transit and at rest.

Access to the systems that handle customer data is controlled through multi-factor authentication and strict authorization controls. Access is granted on a need-to-know basis and is limited to required operations.

4.1.7.1. Telemetry and Insights Operator data flow

4.1.9. Additional details about how remote health monitoring data is used

The information collected to enable remote health monitoring is detailed in Information collected by Telemetry and Information collected by the Insights Operator.

As further described in the preceding sections of this document, Red Hat collects data about your use of the Red Hat Product(s) for purposes such as providing support and upgrades, optimizing performance or configuration, minimizing service impacts, identifying and remediating threats, troubleshooting, improving the offerings and user experience, responding to issues, and for billing purposes if applicable.

4.1.10. Collection safeguards

Red Hat employs technical and organizational measures designed to protect the telemetry and configuration data.

4.1.11. Sharing

Red Hat might share the data collected through Telemetry and the Insights Operator internally within Red Hat to improve your user experience. Red Hat might share telemetry and configuration data with its business partners in an aggregated form that does not identify customers to help the partners better understand their markets and their customers' use of Red Hat offerings or to ensure the successful integration of products jointly supported by those partners.

4.1.12. Third parties

Red Hat may engage certain third parties to assist in the collection, analysis, and storage of the telemetry and configuration data.

4.2. Showing data collected by remote health monitoring

As an administrator, you can review the metrics collected by Telemetry and the Insights Operator.

4.2.1. Showing data collected by Telemetry

You can view the cluster and components time series data captured by Telemetry.

Prerequisites

- You have installed the OpenShift CLI (oc).

- You have access to the cluster as a user with the dedicated-admin role.

Procedure

- Log in to a cluster.

Run the following command, which queries a cluster’s Prometheus service and returns the full set of time series data captured by Telemetry:

Note: The following example contains some values that are specific to OpenShift Dedicated on AWS.

4.3. Using Red Hat Lightspeed to identify issues with your cluster

Red Hat Lightspeed repeatedly analyzes the data Insights Operator sends, which includes workload recommendations from Deployment Validation Operator (DVO). Users of OpenShift Dedicated can display the results in the Advisor service on Red Hat Hybrid Cloud Console.

4.3.1. About Red Hat Lightspeed Advisor for OpenShift Dedicated

You can use the Red Hat Lightspeed advisor service to assess and monitor the health of your OpenShift Dedicated clusters. Whether you are concerned about individual clusters, or with your whole infrastructure, it is important to be aware of the exposure of your cluster infrastructure to issues that can affect service availability, fault tolerance, performance, or security.

If the cluster has the Deployment Validation Operator (DVO) installed, the recommendations also highlight workloads whose configuration might lead to cluster health issues.

The results of the Red Hat Lightspeed analysis are available in the Red Hat Lightspeed advisor service on Red Hat Hybrid Cloud Console. In the Red Hat Hybrid Cloud Console, you can perform the following actions:

- View clusters and workloads affected by specific recommendations.

- Use robust filtering capabilities to narrow your results to specific recommendations.

- Learn more about individual recommendations, details about the risks they present, and get resolutions tailored to your individual clusters.

- Share results with other stakeholders.

4.3.2. Understanding Red Hat Lightspeed advisor service recommendations

The Red Hat Lightspeed advisor service bundles information about various cluster states and component configurations that can negatively affect the service availability, fault tolerance, performance, or security of your clusters and workloads. This information set is called a recommendation in the Red Hat Lightspeed advisor service. Recommendations for clusters include the following information:

- Name: A concise description of the recommendation

- Added: When the recommendation was published to the Red Hat Lightspeed advisor service archive

- Category: Whether the issue has the potential to negatively affect service availability, fault tolerance, performance, or security

- Total risk: A value derived from the likelihood that the condition will negatively affect your cluster or workload, and the impact on operations if that were to happen

- Clusters: A list of clusters on which a recommendation is detected

- Description: A brief synopsis of the issue, including how it affects your clusters

4.3.3. Displaying potential issues with your cluster

This section describes how to display the Red Hat Lightspeed report in Red Hat Lightspeed Advisor on OpenShift Cluster Manager.

Note that Red Hat Lightspeed repeatedly analyzes your cluster and shows the latest results. These results can change, for example, if you fix an issue or a new issue has been detected.

Prerequisites

- Your cluster is registered on OpenShift Cluster Manager.

- Remote health reporting is enabled, which is the default.

- You are logged in to OpenShift Cluster Manager.

Procedure

Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

Depending on the result, the Red Hat Lightspeed advisor service displays one of the following:

- No matching recommendations found, if Red Hat Lightspeed did not identify any issues.

- A list of issues Red Hat Lightspeed has detected, grouped by risk (low, moderate, important, and critical).

- No clusters yet, if Red Hat Lightspeed has not yet analyzed the cluster. The analysis starts shortly after the cluster has been installed, registered, and connected to the internet.

If any issues are displayed, click the > icon in front of the entry for more details.

Depending on the issue, the details can also contain a link to more information from Red Hat about the issue.

4.3.4. Displaying all Red Hat Lightspeed advisor service recommendations

The Recommendations view, by default, only displays the recommendations that are detected on your clusters. However, you can view all of the recommendations in the advisor service’s archive.

Prerequisites

- Remote health reporting is enabled, which is the default.

- Your cluster is registered on Red Hat Hybrid Cloud Console.

- You are logged in to OpenShift Cluster Manager.

Procedure

- Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

Click the X icons next to the Clusters Impacted and Status filters.

You can now browse through all of the potential recommendations for your cluster.

4.3.5. Advisor recommendation filters

The Red Hat Lightspeed advisor service can return a large number of recommendations. To focus on your most critical recommendations, you can apply filters to the Advisor recommendations list to remove low-priority recommendations.

By default, filters are set to only show enabled recommendations that are impacting one or more clusters. To view all or disabled recommendations in the Red Hat Lightspeed library, you can customize the filters.

To apply a filter, select a filter type and then set its value based on the options that are available in the drop-down list. You can apply multiple filters to the list of recommendations.

You can set the following filter types:

- Name: Search for a recommendation by name.

- Total risk: Select one or more values from Critical, Important, Moderate, and Low indicating the likelihood and the severity of a negative impact on a cluster.

- Impact: Select one or more values from Critical, High, Medium, and Low indicating the potential impact to the continuity of cluster operations.

- Likelihood: Select one or more values from Critical, High, Medium, and Low indicating the potential for a negative impact to a cluster if the recommendation comes to fruition.

- Category: Select one or more categories from Service Availability, Performance, Fault Tolerance, Security, and Best Practice to focus your attention on.

- Status: Click a radio button to show enabled recommendations (default), disabled recommendations, or all recommendations.

- Clusters impacted: Set the filter to show recommendations currently impacting one or more clusters, non-impacting recommendations, or all recommendations.

- Risk of change: Select one or more values from High, Moderate, Low, and Very low indicating the risk that the implementation of the resolution could have on cluster operations.

4.3.5.1. Filtering Red Hat Lightspeed advisor service recommendations

As an OpenShift Dedicated cluster manager, you can filter the recommendations that are displayed on the recommendations list. By applying filters, you can reduce the number of reported recommendations and concentrate on your highest priority recommendations.

The following procedure demonstrates how to set and remove Category filters; however, the procedure is applicable to any of the filter types and respective values.

Prerequisites

You are logged in to the OpenShift Cluster Manager in the Hybrid Cloud Console.

Procedure

- Go to OpenShift > Advisor > Recommendations.

- In the main, filter-type drop-down list, select the Category filter type.

- Expand the filter-value drop-down list and select the checkbox next to each category of recommendation you want to view. Leave the checkboxes for unnecessary categories clear.

Optional: Add additional filters to further refine the list.

Only recommendations from the selected categories are shown in the list.

Verification

- After applying filters, you can view the updated recommendations list. The applied filters are added next to the default filters.

4.3.5.2. Removing filters from Red Hat Lightspeed advisor service recommendations

You can apply multiple filters to the list of recommendations. When ready, you can remove them individually or completely reset them.

Procedure

Removing filters individually

- Click the X icon next to each filter, including the default filters, to remove them individually.

Removing all non-default filters

- Click Reset filters to remove only the filters that you applied, leaving the default filters in place.

4.3.6. Disabling Red Hat Lightspeed advisor service recommendations

You can disable specific recommendations that affect your clusters, so that they no longer appear in your reports. It is possible to disable a recommendation for a single cluster or all of your clusters.

Disabling a recommendation for all of your clusters also applies to any future clusters.

Prerequisites

- Remote health reporting is enabled, which is the default.

- Your cluster is registered on OpenShift Cluster Manager.

- You are logged in to OpenShift Cluster Manager.

Procedure

- Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

- Optional: Use the Clusters Impacted and Status filters as needed.

Disable an alert by using one of the following methods:

To disable an alert:

- Click the Options menu for that alert, and then click Disable recommendation.

- Enter a justification note and click Save.

To view the clusters affected by this alert before disabling the alert:

- Click the name of the recommendation to disable. You are directed to the single recommendation page.

- Review the list of clusters in the Affected clusters section.

- Click Actions → Disable recommendation to disable the alert for all of your clusters.

- Enter a justification note and click Save.

4.3.7. Enabling a previously disabled Red Hat Lightspeed advisor service recommendation

When a recommendation is disabled for all clusters, you no longer see the recommendation in the Red Hat Lightspeed advisor service. You can change this behavior.

Prerequisites

- Remote health reporting is enabled, which is the default.

- Your cluster is registered on OpenShift Cluster Manager.

- You are logged in to OpenShift Cluster Manager.

Procedure

- Navigate to Advisor → Recommendations on OpenShift Cluster Manager.

Filter the recommendations to display only the disabled recommendations:

- From the Status drop-down menu, select Status.

- From the Filter by status drop-down menu, select Disabled.

- Optional: Clear the Clusters impacted filter.

- Locate the recommendation to enable.

- Click the Options menu, and then click Enable recommendation.

4.3.8. About Red Hat Lightspeed advisor service recommendations for workloads

You can use the Red Hat Lightspeed advisor service to view and manage information about recommendations that affect not only your clusters, but also your workloads. The advisor service takes advantage of deployment validation and helps OpenShift cluster administrators to see all runtime violations of deployment policies. You can see recommendations for workloads at OpenShift > Advisor > Workloads on the Red Hat Hybrid Cloud Console. For more information, see these additional resources:

- Information about Kubernetes workloads

- Boost your cluster operations with Deployment Validation and Red Hat Lightspeed Advisor for Workloads

- Identifying workload recommendations for namespaces in your clusters

- Viewing workload recommendations for namespaces in your cluster

- Excluding objects from workload recommendations in your clusters

4.3.9. Displaying the Red Hat Lightspeed status in the web console

Red Hat Lightspeed repeatedly analyzes your cluster, and you can display the status of the potential issues that it identifies in the OpenShift Dedicated web console. This status shows the number of issues in each category and links to the reports in OpenShift Cluster Manager for further details.

Prerequisites

- Your cluster is registered in OpenShift Cluster Manager.

- Remote health reporting is enabled, which is the default.

- You are logged in to the OpenShift Dedicated web console.

Procedure

- Navigate to Home → Overview in the OpenShift Dedicated web console.

- Click Red Hat Lightspeed on the Status card.

The pop-up window lists potential issues grouped by risk. Click the individual categories or View all recommendations in Red Hat Lightspeed Advisor to display more details.

4.4. Using the Insights Operator

The Insights Operator periodically gathers configuration and component failure status and, by default, reports that data every two hours to Red Hat. This information enables Red Hat to assess configuration and deeper failure data than is reported through Telemetry. Users of OpenShift Dedicated can display the report in the Advisor service on Red Hat Hybrid Cloud Console.

4.4.2. Understanding Insights Operator alerts

The Insights Operator declares alerts through the Prometheus monitoring system to the Alertmanager. You can view these alerts in the Alerting UI in the OpenShift Dedicated web console by using one of the following methods:

- In the Administrator perspective, click Observe → Alerting.

- In the Developer perspective, click Observe → <project_name> → Alerts tab.

Currently, Insights Operator sends the following alerts when the conditions are met:

| Alert | Description |
|---|---|
| | Insights Operator is disabled. |
| | Simple content access is not enabled in Red Hat Subscription Management. |
| | Red Hat Lightspeed has an active recommendation for the cluster. |

4.4.3. Obfuscating Deployment Validation Operator data

By default, when you install the Deployment Validation Operator (DVO), the name and unique identifier (UID) of a resource are included in the data that is captured and processed by the Insights Operator for OpenShift Dedicated. If you are a cluster administrator, you can configure the Insights Operator to obfuscate data from the Deployment Validation Operator (DVO). For example, you can obfuscate workload names in the archive file that is then sent to Red Hat.

To obfuscate the name of resources, you must manually set the obfuscation attribute in the insights-config ConfigMap object to include the workload_names value, as outlined in the following procedure.

Prerequisites

- Remote health reporting is enabled, which is the default.

- You are logged in to the OpenShift Dedicated web console with the "cluster-admin" role.

- The insights-config ConfigMap object exists in the openshift-insights namespace.

- The cluster is self managed and the Deployment Validation Operator is installed.

Procedure

- Go to Workloads → ConfigMaps and select Project: openshift-insights.

- Click the insights-config ConfigMap object to open it.

- Click Actions and select Edit ConfigMap.

- Click the YAML view radio button.

- In the file, set the obfuscation attribute with the workload_names value.

- Click Save. The insights-config config-map details page opens.

- Verify that the value of the config.yaml obfuscation attribute is set to - workload_names.
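The final verification step can also be performed against a saved copy of the config.yaml value. The following is a minimal sketch and not part of the documented procedure: the helper name has_workload_obfuscation is hypothetical, and the sample fragment assumes that the obfuscation attribute sits under a dataReporting key, which you should confirm against your own ConfigMap.

```shell
# Hypothetical helper: returns 0 when the given file lists workload_names
# within the few lines that follow the obfuscation attribute.
has_workload_obfuscation() {
  grep -A 5 'obfuscation:' "$1" | grep -q -e '- workload_names'
}

# Sample fragment (assumed layout) written to a scratch file for the check.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
dataReporting:
  obfuscation:
    - workload_names
EOF

if has_workload_obfuscation "$sample"; then
  echo "obfuscation includes workload_names"
fi
```

To check a real cluster, copy the config.yaml value from the YAML view into a local file and pass that file to the helper instead of the sample.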

Chapter 5. Gathering data about your cluster

When opening a support case, it is helpful to provide debugging information about your cluster to Red Hat Support. You can use tools such as must-gather, sosreport, and cluster node journal logs to collect diagnostic data.

It is recommended to provide the data gathered by the must-gather tool and the unique ID of your cluster, both of which are described in the following sections.

5.1. About the must-gather tool

The oc adm must-gather CLI command collects the information from your cluster that is most likely needed for debugging issues, including:

- Resource definitions

- Service logs

By default, the oc adm must-gather command uses the default plugin image and writes into ./must-gather.local.

Alternatively, you can collect specific information by running the command with the appropriate arguments as described in the following sections:

- To collect data related to one or more specific features, use the --image argument with an image, as listed in a following section.

  For example:

  $ oc adm must-gather \
      --image=registry.redhat.io/container-native-virtualization/cnv-must-gather-rhel9:v4.21.0

- To collect the audit logs, use the -- /usr/bin/gather_audit_logs argument, as described in a following section.

  For example:

  $ oc adm must-gather -- /usr/bin/gather_audit_logs

Note:

- Audit logs are not collected as part of the default set of information to reduce the size of the files.

- On a Windows operating system, install the cwRsync client and add it to the PATH variable for use with the oc rsync command.

When you run oc adm must-gather, a new pod with a random name is created in a new project on the cluster. The data is collected on that pod and saved in a new directory that starts with must-gather.local in the current working directory.

For example:

NAMESPACE                     NAME                READY   STATUS    RESTARTS   AGE
...
openshift-must-gather-5drcj   must-gather-bklx4   2/2     Running   0          72s
openshift-must-gather-5drcj   must-gather-s8sdh   2/2     Running   0          72s
...

Optionally, you can run the oc adm must-gather command in a specific namespace by using the --run-namespace option.

For example:

$ oc adm must-gather --run-namespace <namespace> \
    --image=registry.redhat.io/container-native-virtualization/cnv-must-gather-rhel9:v4.21.0

5.1.1. Gathering data about your cluster for Red Hat Support

You can gather debugging information about your cluster by using the oc adm must-gather CLI command.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

  Note: In OpenShift Dedicated deployments, customers who are not using the Customer Cloud Subscription (CCS) model cannot use the oc adm must-gather command as it requires cluster-admin privileges.

- The OpenShift CLI (oc) is installed.

Procedure

- Navigate to the directory where you want to store the must-gather data.

- Run the oc adm must-gather command:

  $ oc adm must-gather

  Note: Because this command picks a random control plane node by default, the pod might be scheduled to a control plane node that is in the NotReady and SchedulingDisabled state.

  If this command fails, for example, if you cannot schedule a pod on your cluster, then use the oc adm inspect command to gather information for particular resources.

  Note: Contact Red Hat Support for the recommended resources to gather.

- Create a compressed file from the must-gather directory that was just created in your working directory. Make sure you provide the date and cluster ID for the unique must-gather data. For more information about how to find the cluster ID, see How to find the cluster-id or name on OpenShift cluster. For example, on a computer that uses a Linux operating system, run the following command:

  $ tar cvaf must-gather-`date +"%m-%d-%Y-%H-%M-%S"`-<cluster_id>.tar.gz <must_gather_local_dir>

  where:

  <must_gather_local_dir>: Replace with the actual directory name.

- Attach the compressed file to your support case on the Customer Support page of the Red Hat Customer Portal.
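The archive naming convention from the compression step can be wrapped in a small helper so that the timestamp and cluster ID are always embedded the same way. This is a minimal sketch: the function name must_gather_archive_name is illustrative only, and the placeholders must be replaced with your own values before the printed tar command is run.

```shell
# Build an archive name that embeds a timestamp and the cluster ID.
# The function name and its argument are illustrative only.
must_gather_archive_name() {
  cluster_id="$1"
  # Same timestamp layout as the tar example: month-day-year-hour-minute-second.
  stamp="$(date +"%m-%d-%Y-%H-%M-%S")"
  printf 'must-gather-%s-%s.tar.gz\n' "$stamp" "$cluster_id"
}

# Placeholder values; replace them with your cluster ID and directory name.
archive="$(must_gather_archive_name "<cluster_id>")"
echo "tar cvaf $archive <must_gather_local_dir>"
```

The sketch only prints the tar command, which leaves the decision to run it with you.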

5.2. Reducing the size of must-gather output

The oc adm must-gather command collects comprehensive cluster information. However, a full data collection can result in a large file that is difficult to upload and analyze and could result in timeouts.

To manage the output size and target your data collection for more effective troubleshooting, you can pass specific flags to the underlying gather script or scope the collection to particular resources.

5.2.1. Gathering data for specific resources

Instead of collecting data for the entire cluster, you can direct the must-gather tool to inspect a specific resource. This method is highly effective for isolating issues within a single project, Operator, or application.

The must-gather tool uses oc adm inspect internally. You can specify what to inspect by passing the inspect command and its arguments after the -- separator.

Procedure

- To gather data for a specific namespace, such as my-project, run the following command:

  $ oc adm must-gather --dest-dir=my-project-must-gather -- oc adm inspect ns/my-project

  This command collects all standard resources within the my-project namespace, including logs from pods in that namespace, but excludes cluster-scoped resources.

- To gather data related to a specific Cluster Operator, such as openshift-apiserver, run the following command:

  $ oc adm must-gather --dest-dir=apiserver-must-gather -- oc adm inspect clusteroperator/openshift-apiserver

- To exclude logs entirely and significantly reduce the size of the must-gather archive, add a double dash (--) after the oc adm must-gather command and add the --no-logs argument:

  $ oc adm must-gather -- /usr/bin/gather --no-logs
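When several projects are involved, the namespace-scoped command can be generated once per namespace and reviewed before anything is run. The following sketch only prints the commands; the function name print_scoped_gathers and the namespace my-other-project are hypothetical.

```shell
# Print one namespace-scoped must-gather command per namespace so that the
# commands can be reviewed before they are run. Nothing is executed here.
print_scoped_gathers() {
  for ns in "$@"; do
    printf 'oc adm must-gather --dest-dir=%s-must-gather -- oc adm inspect ns/%s\n' "$ns" "$ns"
  done
}

# my-project comes from the procedure; my-other-project is a placeholder.
print_scoped_gathers my-project my-other-project
```

Each namespace gets its own destination directory, which keeps the resulting archives small and easy to tell apart.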

5.2.2. Must-gather flags

The flags listed in the following table are available to use with the oc adm must-gather command.

| Flag | Example command | Description |
|---|---|---|
| | | Collect |
| | | Set a specific directory on the local machine where the gathered data is written. |
| | | Run |
| | | Specify a |
| | | Specify an `<image_stream>` using a namespace or name:tag value containing a |
| | | Set a specific node to use. If not specified, by default a random master is used. |
| | | Set a specific node selector to use. Only relevant when specifying a command and image which needs to capture data on a set of cluster nodes simultaneously. |
| | | An existing privileged namespace where |
| | | Only return logs newer than the specified duration. Defaults to all logs. Plugins are encouraged but not required to support this. Only one |
| | | Only return logs after a specific date and time, expressed in (RFC3339) format. Defaults to all logs. Plugins are encouraged but not required to support this. Only one |
| | | Set the specific directory on the pod where you copy the gathered data from. |
| | | The length of time to gather data before timing out, expressed as seconds, minutes, or hours, for example, 3s, 5m, or 2h. Time specified must be higher than zero. Defaults to 10 minutes if not specified. |
| | | Specify maximum percentage of pod’s allocated volume that can be used for |

5.2.3. Gathering data about specific features

You can gather debugging information about specific features by using the oc adm must-gather CLI command with the --image or --image-stream argument. The must-gather tool supports multiple images, so you can gather data about more than one feature by running a single command.

| Image | Purpose |
|---|---|
| | Data collection for OpenShift Virtualization. |
| | Data collection for OpenShift Serverless. |
| | Data collection for Red Hat OpenShift Service Mesh. |
| | Data collection for hosted control planes. |
| | Data collection for the Migration Toolkit for Containers. |
| | Data collection for logging. |
| | Data collection for the Network Observability Operator. |
| | Data collection for Red Hat OpenShift GitOps. |
| | Data collection for the Secrets Store CSI Driver Operator. |

To determine the latest version for an OpenShift Dedicated component’s image, see the OpenShift Operator Life Cycles web page on the Red Hat Customer Portal.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) is installed.

Procedure

- Navigate to the directory where you want to store the must-gather data.

- Run the oc adm must-gather command with one or more --image or --image-stream arguments.

  Note: To collect the default must-gather data in addition to specific feature data, add the --image-stream=openshift/must-gather argument.

  For example, the following command gathers both the default cluster data and information specific to OpenShift Virtualization:

  $ oc adm must-gather \
      --image-stream=openshift/must-gather \
      --image=registry.redhat.io/container-native-virtualization/cnv-must-gather-rhel9:v4.21.0

  You can use the must-gather tool with additional arguments to gather data that is specifically related to OpenShift Logging and the Cluster Logging Operator in your cluster. For OpenShift Logging, run the following command:

  $ oc adm must-gather --image=$(oc -n openshift-logging get deployment.apps/cluster-logging-operator \
      -o jsonpath='{.spec.template.spec.containers[?(@.name == "cluster-logging-operator")].image}')

  As a further example, the following command gathers both the default cluster data and information specific to KubeVirt:

  $ oc adm must-gather \
      --image-stream=openshift/must-gather \
      --image=quay.io/kubevirt/must-gather

- Create a compressed file from the must-gather directory that was just created in your working directory. Make sure you provide the date and cluster ID for the unique must-gather data. For more information about how to find the cluster ID, see How to find the cluster-id or name on OpenShift cluster. For example, on a computer that uses a Linux operating system, run the following command:

  $ tar cvaf must-gather-`date +"%m-%d-%Y-%H-%M-%S"`-<cluster_id>.tar.gz <must_gather_local_dir>

  where:

  <must_gather_local_dir>: Replace with the actual directory name.

- Attach the compressed file to your support case on the Customer Support page of the Red Hat Customer Portal.

5.4. About Support Log Gather

Support Log Gather Operator builds on the functionality of the traditional must-gather tool to automate the collection of debugging data. It streamlines troubleshooting by packaging the collected information into a single .tar file and automatically uploading it to the specified Red Hat Support case.

Support Log Gather is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

The key features of Support Log Gather include the following:

- No administrator privileges required: Enables you to collect and upload logs without needing elevated permissions, making it easier for non-administrators to gather data securely.

- Simplified log collection: Collects debugging data from the cluster, such as resource definitions and service logs.

- Configurable data upload: Provides configuration options to either automatically upload the .tar file to a support case, or store it locally for manual upload.

5.4.1. Installing Support Log Gather by using the web console

You can use the web console to install the Support Log Gather.


Prerequisites

- You have access to the cluster with cluster-admin privileges.

- You have access to the OpenShift Dedicated web console.

Procedure

- Log in to the OpenShift Dedicated web console.

- Navigate to Ecosystem → Software Catalog.

- In the filter box, enter Support Log Gather.

- Select Support Log Gather.

- From the Version list, select the Support Log Gather version, and click Install.

- On the Install Operator page, configure the installation settings:

  - Choose the Installed Namespace for the Operator. The default Operator namespace is must-gather-operator. The must-gather-operator namespace is created automatically if it does not exist.

  - Select an Update approval strategy:

    - Select Automatic to have the Operator Lifecycle Manager (OLM) update the Operator automatically when a newer version is available.

    - Select Manual if Operator updates must be approved by a user with appropriate credentials.

- Click Install.

Verification

- Verify that the Operator is installed successfully:

  - Navigate to Ecosystem → Software Catalog.

  - Verify that Support Log Gather is listed with a Status of Succeeded in the must-gather-operator namespace.

- Verify that Support Log Gather pods are running:

  - Navigate to Workloads → Pods.

  - Verify that the status of the Support Log Gather pods is Running.

You can use the Support Log Gather only after the pods are up and running.

5.4.2. Installing Support Log Gather by using the CLI

To enable automated log collection for support cases, you can install Support Log Gather from the command-line interface (CLI).


Prerequisites

- You have access to the cluster with cluster-admin privileges.

Procedure

- Create a new project named must-gather-operator by running the following command:

  $ oc new-project must-gather-operator

- Create an OperatorGroup object:

  - Create a YAML file, for example, operatorGroup.yaml, that defines the OperatorGroup object.

  - Create the OperatorGroup object by running the following command:

    $ oc create -f operatorGroup.yaml

- Create a Subscription object:

  - Create a YAML file, for example, subscription.yaml, that defines the Subscription object.

  - Create the Subscription object by running the following command:

    $ oc create -f subscription.yaml
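The contents of the two YAML files are not shown in the procedure, so the following is only a sketch of what they might contain. The Subscription name, package, source, and channel are taken from the example output in the verification section that follows. The OperatorGroup name, the empty OperatorGroup spec, and the openshift-marketplace source namespace are assumptions that you should confirm for your cluster. The files are written to a scratch directory so that they can be reviewed before you pass them to oc create -f.

```shell
# Scratch directory for the manifests; nothing is applied to the cluster here.
workdir="$(mktemp -d)"

# OperatorGroup for the must-gather-operator namespace.
# The metadata name and the empty spec are assumptions.
cat > "$workdir/operatorGroup.yaml" <<'EOF'
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: support-log-gather-operator
  namespace: must-gather-operator
spec: {}
EOF

# Subscription matching the example output (package, source, channel).
# The sourceNamespace value is an assumption.
cat > "$workdir/subscription.yaml" <<'EOF'
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: support-log-gather-operator
  namespace: must-gather-operator
spec:
  channel: tech-preview
  name: support-log-gather-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF

echo "Manifests written to $workdir"
```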

Verification

- Verify the status of the pods in the Operator namespace by running the following command:

  $ oc get pods

  Example output

  NAME                                    READY   STATUS    RESTARTS   AGE
  must-gather-operator-657fc74d64-2gg2w   1/1     Running   0          13m

  The status of all the pods must be Running.

- Verify that the subscription is created by running the following command:

  $ oc get subscription -n must-gather-operator

  Example output

  NAME                          PACKAGE                       SOURCE             CHANNEL
  support-log-gather-operator   support-log-gather-operator   redhat-operators   tech-preview

- Verify that the Operator is installed by running the following command:

  $ oc get csv -n must-gather-operator

  Example output

  NAME                                  DISPLAY              VERSION   REPLACES   PHASE
  support-log-gather-operator.v4.21.0   support log gather   4.21.0               Succeeded

5.4.3. Configuring a Support Log Gather instance

You must create a MustGather custom resource (CR) from the command-line interface (CLI) to automate the collection of diagnostic data from your cluster. This process also automatically uploads the data to a Red Hat Support case.


Prerequisites

- You have installed the OpenShift CLI (oc) tool.

- You have installed Support Log Gather in your cluster.

- You have a Red Hat Support case ID.

- You have created a Kubernetes secret containing your Red Hat Customer Portal credentials. The secret must contain a username field and a password field.

- You have created a service account.
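The credentials secret named in the prerequisites can be prepared as a manifest with the two required fields. The following is a minimal sketch: the secret name case-management-creds is hypothetical, the namespace assumes the default Operator namespace, and the credential values are placeholders. The file is written to a scratch location and is not applied to the cluster.

```shell
# Write a Secret manifest with the username and password fields that the
# prerequisites require. Name and namespace are assumptions; values are
# placeholders that must be edited before the secret is created.
secret_file="$(mktemp)"
cat > "$secret_file" <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: case-management-creds
  namespace: must-gather-operator
type: Opaque
stringData:
  username: <customer_portal_username>
  password: <customer_portal_password>
EOF

echo "Edit $secret_file, then create the secret with: oc create -f $secret_file"
```

Using stringData avoids base64-encoding the values by hand. Delete the file after the secret is created so that the credentials do not remain on disk.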

Procedure

- Create a YAML file for the MustGather CR, such as support-log-gather.yaml, that contains the basic configuration.

  For more information on the configuration parameters, see "Configuration parameters for MustGather custom resource".

- Create the MustGather object by running the following command:

  $ oc create -f support-log-gather.yaml

Verification

- Verify that the MustGather CR was created by running the following command:

  $ oc get mustgather

  Example output

  NAME         AGE
  example-mg   7s

- Verify the status of the pods in the Operator namespace by running the following command:

  $ oc get pods

  Example output

  NAME                                    READY   STATUS    RESTARTS   AGE
  must-gather-operator-657fc74d64-2gg2w   1/1     Running   0          13m
  example-mg-gk8m8                        2/2     Running   0          13s

  A new pod with a name based on the MustGather CR must be created. The status of all the pods must be Running.

- To monitor the progress of the file upload, view the logs of the upload container in the job pod by running the following command:

  $ oc logs -f pod/example-mg-gk8m8 -c upload

  When successful, the process creates an archive and uploads it to the Red Hat Secure File Transfer Protocol (SFTP) server for the specified case.

5.6. Obtaining your cluster ID

When providing information to Red Hat Support, it is helpful to provide the unique identifier for your cluster. You can have your cluster ID autofilled by using the OpenShift Dedicated web console. You can also manually obtain your cluster ID by using the web console or the OpenShift CLI (oc).

Prerequisites

- You have access to the cluster as a user with the dedicated-admin role.

- You have access to the web console, or you have installed the OpenShift CLI (oc).

Procedure

To manually obtain your cluster ID using OpenShift Cluster Manager:

- Navigate to Cluster List.

- Click on the name of the cluster you need to open a support case for.

- Find the value in the Cluster ID field of the Details section of the Overview tab.

To open a support case and have your cluster ID autofilled using the web console:

- From the toolbar, navigate to (?) Help and select Share Feedback from the list.

- Click Open a support case from the Tell us about your experience window.

To manually obtain your cluster ID using the web console:

- Navigate to Home → Overview.

- The value is available in the Cluster ID field of the Details section.

To obtain your cluster ID using the OpenShift CLI (oc), run the following command:

$ oc get clusterversion -o jsonpath='{.items[].spec.clusterID}{"\n"}'
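Before the cluster ID is pasted into a support case or an archive name, its format can be sanity-checked. The sketch below assumes that the cluster ID has the usual UUID layout of hexadecimal groups (8-4-4-4-12). The function name looks_like_cluster_id is hypothetical, and the sample value is made up for illustration.

```shell
# Returns 0 when the argument has the 8-4-4-4-12 hexadecimal UUID layout.
looks_like_cluster_id() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
}

# Made-up value; substitute the output of the oc get clusterversion command.
candidate="12345678-90ab-cdef-1234-567890abcdef"
if looks_like_cluster_id "$candidate"; then
  echo "cluster ID format looks valid"
else
  echo "unexpected cluster ID format" >&2
fi
```

The check catches copy errors such as a truncated value or stray quotation marks. It does not confirm that the ID belongs to a registered cluster.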

5.7. Querying cluster node journal logs

You can gather journald unit logs and other logs within /var/log on individual cluster nodes.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

  Note: In OpenShift Dedicated deployments, customers who are not using the Customer Cloud Subscription (CCS) model cannot use the oc adm node-logs command as it requires cluster-admin privileges.

- You have installed the OpenShift CLI (oc).

Procedure

- Query kubelet journald unit logs from OpenShift Dedicated cluster nodes. The following example queries control plane nodes only:

  $ oc adm node-logs --role=master -u kubelet

  kubelet: Replace as appropriate to query other unit logs.

- Collect logs from specific subdirectories under /var/log/ on cluster nodes.

  - Retrieve a list of logs contained within a /var/log/ subdirectory. The following example lists files in /var/log/openshift-apiserver/ on all control plane nodes:

    $ oc adm node-logs --role=master --path=openshift-apiserver

  - Inspect a specific log within a /var/log/ subdirectory. The following example outputs /var/log/openshift-apiserver/audit.log contents from all control plane nodes:

    $ oc adm node-logs --role=master --path=openshift-apiserver/audit.log
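To collect several unit logs in one pass, the node-logs command can be generated per unit and redirected to a separate file. The following sketch only prints the commands for review. The function name print_unit_log_commands, the crio unit, and the output file names are illustrative assumptions.

```shell
# Print one node-logs command per journald unit for the given node role.
# Each command redirects its output to <role>-<unit>.log. Nothing is executed.
print_unit_log_commands() {
  role="$1"
  shift
  for unit in "$@"; do
    printf 'oc adm node-logs --role=%s -u %s > %s-%s.log\n' "$role" "$unit" "$role" "$unit"
  done
}

# kubelet comes from the procedure; crio is an example of another unit.
print_unit_log_commands master kubelet crio
```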

5.8. Network trace methods

Collecting network traces, in the form of packet capture records, can assist Red Hat Support with troubleshooting network issues.

OpenShift Dedicated supports two ways of performing a network trace. Review the following table and choose the method that meets your needs.

| Method | Benefits and capabilities |
|---|---|
| Collecting a host network trace | You perform a packet capture for a duration that you specify on one or more nodes at the same time. The packet capture files are transferred from nodes to the client machine when the specified duration is met. You can troubleshoot why a specific action triggers network communication issues. Run the packet capture, perform the action that triggers the issue, and use the logs to diagnose the issue. |
| Collecting a network trace from an OpenShift Dedicated node or container | You perform a packet capture on one node or one container. You can start the packet capture manually, trigger the network communication issue, and then stop the packet capture manually. |

5.9. Collecting a host network trace

Sometimes, troubleshooting a network-related issue is simplified by tracing network communication and capturing packets on multiple nodes at the same time.

You can use a combination of the oc adm must-gather command and the registry.redhat.io/openshift4/network-tools-rhel8 container image to gather packet captures from nodes. Analyzing packet captures can help you troubleshoot network communication issues.

The oc adm must-gather command is used to run the tcpdump command in pods on specific nodes. The tcpdump command records the packet captures in the pods. When the tcpdump command exits, the oc adm must-gather command transfers the files with the packet captures from the pods to your client machine.

The sample command in the following procedure demonstrates performing a packet capture with the tcpdump command. However, you can run any command in the container image that is specified in the --image argument to gather troubleshooting information from multiple nodes at the same time.

Prerequisites

- You are logged in to OpenShift Dedicated as a user with the cluster-admin role.

  Note: In OpenShift Dedicated deployments, customers who are not using the Customer Cloud Subscription (CCS) model cannot use the oc adm must-gather command as it requires cluster-admin privileges.

- You have installed the OpenShift CLI (oc).

Procedure

Run a packet capture from the host network on some nodes by running the following command:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow where:

--dest-dir /tmp/captures: The --dest-dir argument specifies that oc adm must-gather stores the packet captures in directories that are relative to /tmp/captures on the client machine. You can specify any writable directory.

--source-dir '/tmp/tcpdump/': When tcpdump is run in the debug pod that oc adm must-gather starts, the --source-dir argument specifies that the packet captures are temporarily stored in the /tmp/tcpdump directory on the pod.

--image registry.redhat.io/openshift4/network-tools-rhel8:latest: The --image argument specifies a container image that includes the tcpdump command.

--node-selector 'node-role.kubernetes.io/worker': The --node-selector argument and example value specify that the packet captures are performed on the worker nodes. As an alternative, you can specify the --node-name argument to run the packet capture on a single node. If you omit both the --node-selector and the --node-name arguments, the packet captures are performed on all nodes.

--host-network=true: The --host-network=true argument is required so that the packet captures are performed on the network interfaces of the node.

--timeout 30s: The --timeout argument and value specify that the debug pod runs for 30 seconds. If you do not specify the --timeout argument and a duration, the debug pod runs for 10 minutes.

-i any: The -i any argument for the tcpdump command specifies that packets are captured on all network interfaces. As an alternative, you can specify a network interface name.
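The arguments described above can be assembled into a single invocation. The following is a minimal sketch rather than a definitive command: the capture_on_workers function name and the OC dry-run variable are hypothetical, and the tcpdump options after -i any (the -w file name pattern under /tmp/tcpdump, -W 1, and -G 300) are assumptions that are not described in the text above.

```shell
#!/bin/sh
# Sketch only: assembles the must-gather invocation from the arguments
# described above. capture_on_workers and OC are hypothetical names.
capture_on_workers() {
    # ${OC:-oc} runs the real oc client unless OC is overridden,
    # for example with OC='echo oc' for a dry run.
    ${OC:-oc} adm must-gather \
        --dest-dir /tmp/captures \
        --source-dir '/tmp/tcpdump/' \
        --image registry.redhat.io/openshift4/network-tools-rhel8:latest \
        --node-selector 'node-role.kubernetes.io/worker' \
        --host-network=true \
        --timeout 30s \
        -- \
        tcpdump -i any \
        -w /tmp/tcpdump/%Y-%m-%dT%H:%M:%S.pcap -W 1 -G 300
    # Assumption: the -w, -W, and -G options above are illustrative. The
    # capture file only needs to be written inside the --source-dir path.
}

# Dry run: print the assembled command instead of contacting the cluster.
OC='echo oc' capture_on_workers
```

Calling capture_on_workers without the OC override runs the capture against the cluster that you are logged in to.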

- Perform the action, such as accessing a web application, that triggers the network communication issue while the network trace captures packets.

Review the packet capture files that oc adm must-gather transferred from the pods to your client machine. In the transferred directory tree:

ip-10-0-192-217-ec2-internal, ip-10-0-201-178-ec2-internal: The packet captures are stored in directories that identify the hostname, container, and file name. If you did not specify the --node-selector argument, then the directory level for the hostname is not present.
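One way to review the transferred files is to list every capture under the destination directory. This is a minimal sketch: list_captures is a hypothetical helper name, and it assumes that the capture files end in .pcap and that /tmp/captures was the --dest-dir value.

```shell
#!/bin/sh
# Sketch only: list_captures is a hypothetical helper, not an oc subcommand.
list_captures() {
    # $1: the directory passed to --dest-dir (defaults to /tmp/captures)
    dest_dir=${1:-/tmp/captures}
    # Print each capture file with its full path, which includes the
    # hostname and container directory levels when they are present.
    find "$dest_dir" -type f -name '*.pcap' | sort
}
```

For example, run list_captures /tmp/captures after the oc adm must-gather command finishes.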

5.10. Collecting a network trace from an OpenShift Dedicated node or container

When investigating potential network-related OpenShift Dedicated issues, Red Hat Support might request a network packet trace from a specific OpenShift Dedicated cluster node or from a specific container. The recommended method to capture a network trace in OpenShift Dedicated is through a debug pod.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

  Note: In OpenShift Dedicated deployments, customers who are not using the Customer Cloud Subscription (CCS) model cannot use the oc debug command as it requires cluster-admin privileges.

- You have installed the OpenShift CLI (oc).

- You have an existing Red Hat Support case ID.

Procedure

Obtain a list of cluster nodes:

$ oc get nodes

Enter into a debug session on the target node. This step instantiates a debug pod called <node_name>-debug:

$ oc debug node/my-cluster-node

Set /host as the root directory within the debug shell. The debug pod mounts the host’s root file system in /host within the pod. By changing the root directory to /host, you can run binaries contained in the host’s executable paths:

# chroot /host

From within the chroot environment console, obtain the node’s interface names:

# ip ad

Start a toolbox container, which includes the required binaries and plugins to run sosreport:

# toolbox

Note: If an existing toolbox pod is already running, the toolbox command outputs 'toolbox-' already exists. Trying to start…. To avoid tcpdump issues, remove the running toolbox container with podman rm toolbox- and spawn a new toolbox container.

Initiate a tcpdump session on the cluster node and redirect output to a capture file. This example uses ens5 as the interface name:

$ tcpdump -nn -s 0 -i ens5 -w /host/var/tmp/my-cluster-node_$(date +%d_%m_%Y-%H_%M_%S-%Z).pcap

where:

/host/var/tmp/my-cluster-node_$(date +%d_%m_%Y-%H_%M_%S-%Z).pcap: The tcpdump capture file’s path is outside of the chroot environment because the toolbox container mounts the host’s root directory at /host.
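Because the capture file name embeds a timestamp, it can help to see how the date substitution expands before you need to locate the file later. The following minimal sketch reuses the date format from the command above. The capture_file function name is hypothetical.

```shell
#!/bin/sh
# Sketch only: capture_file is a hypothetical helper that prints the path
# produced by the $(date ...) substitution used in the tcpdump command.
capture_file() {
    # $1: a label for the capture, such as the node name
    # Format: day_month_year-hour_minute_second-timezone
    printf '/host/var/tmp/%s_%s.pcap\n' "$1" "$(date +%d_%m_%Y-%H_%M_%S-%Z)"
}

capture_file my-cluster-node
```

The printed path is as seen from inside the toolbox container. On the node itself, the same file is located under /var/tmp.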

If a tcpdump capture is required for a specific container on the node, follow these steps.

Determine the target container ID. The chroot /host command precedes the crictl command in this step because the toolbox container mounts the host’s root directory at /host:

# chroot /host crictl ps

Determine the container’s process ID. In this example, the container ID is a7fe32346b120:

# chroot /host crictl inspect --output yaml a7fe32346b120 | grep 'pid' | awk '{print $2}'

Initiate a tcpdump session on the container and redirect output to a capture file. This example uses 49628 as the container’s process ID and ens5 as the interface name. The nsenter command enters the namespace of a target process and runs a command in its namespace. Because the target process in this example is a container’s process ID, the tcpdump command is run in the container’s namespace from the host:

# nsenter -n -t 49628 -- tcpdump -nn -i ens5 -w /host/var/tmp/my-cluster-node-my-container_$(date +%d_%m_%Y-%H_%M_%S-%Z).pcap

where:

/host/var/tmp/my-cluster-node-my-container_$(date +%d_%m_%Y-%H_%M_%S-%Z).pcap: The tcpdump capture file’s path is outside of the chroot environment because the toolbox container mounts the host’s root directory at /host.

Provide the tcpdump capture file to Red Hat Support for analysis, using one of the following methods.

Upload the file to an existing Red Hat support case.

Concatenate the tcpdump capture file by running the oc debug node/<node_name> command and redirect the output to a file. This command assumes you have exited the previous oc debug session:

$ oc debug node/my-cluster-node -- bash -c 'cat /host/var/tmp/my-tcpdump-capture-file.pcap' > /tmp/my-tcpdump-capture-file.pcap

where:

/host/var/tmp/my-tcpdump-capture-file.pcap: The debug container mounts the host’s root directory at /host. Reference the absolute path from the debug container’s root directory, including /host, when specifying target files for concatenation.

- Navigate to an existing support case within the Customer Support page of the Red Hat Customer Portal.

- Select Attach files and follow the prompts to upload the file.

5.11. Providing diagnostic data to Red Hat Support

When investigating OpenShift Dedicated issues, Red Hat Support might ask you to upload diagnostic data to a support case. Files can be uploaded to a support case through the Red Hat Customer Portal.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

  Note: In OpenShift Dedicated deployments, customers who are not using the Customer Cloud Subscription (CCS) model cannot use the oc debug command as it requires cluster-admin privileges.

- You have installed the OpenShift CLI (oc).

- You have an existing Red Hat Support case ID.

Procedure

Upload diagnostic data to an existing Red Hat support case through the Red Hat Customer Portal.

Concatenate a diagnostic file contained on an OpenShift Dedicated node by using the oc debug node/<node_name> command and redirect the output to a file. The following example copies /host/var/tmp/my-diagnostic-data.tar.gz from a debug container to /var/tmp/my-diagnostic-data.tar.gz:

$ oc debug node/my-cluster-node -- bash -c 'cat /host/var/tmp/my-diagnostic-data.tar.gz' > /var/tmp/my-diagnostic-data.tar.gz

where:

/host/var/tmp/my-diagnostic-data.tar.gz: The debug container mounts the host’s root directory at /host. Reference the absolute path from the debug container’s root directory, including /host, when specifying target files for concatenation.
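If you retrieve files from nodes often, the concatenation step can be wrapped in a small function. This is a minimal sketch, not part of the oc client: fetch_node_file is a hypothetical name, and the check for an empty result is an added assumption about what a failed copy looks like.

```shell
#!/bin/sh
# Sketch only: fetch_node_file is a hypothetical wrapper around the
# oc debug concatenation command shown above.
fetch_node_file() {
    node=$1         # node name, for example my-cluster-node
    remote_path=$2  # path inside the debug container, including /host
    local_path=$3   # destination on the client machine
    # Stream the file through the debug pod and save it locally.
    oc debug "node/$node" -- bash -c "cat '$remote_path'" > "$local_path" || return 1
    # Treat an empty local file as a failure (assumption: an empty
    # result most likely means that the remote path was wrong).
    [ -s "$local_path" ]
}

# Example usage (requires a cluster login, so it is left commented out):
# fetch_node_file my-cluster-node /host/var/tmp/my-diagnostic-data.tar.gz /var/tmp/my-diagnostic-data.tar.gz
```

The function returns a nonzero status when the oc debug command fails or when nothing was written, so it can be used in a script before the upload step.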

- Navigate to an existing support case within the Customer Support page of the Red Hat Customer Portal.

- Select Attach files and follow the prompts to upload the file.

5.12. About toolbox

toolbox is a tool that starts a container on a Red Hat Enterprise Linux CoreOS (RHCOS) system. The tool is primarily used to start a container that includes the required binaries and plugins that are needed to run commands such as sosreport.

The primary purpose for a toolbox container is to gather diagnostic information and to provide it to Red Hat Support. However, if additional diagnostic tools are required, you can add RPM packages or run an image that is an alternative to the standard support tools image.

5.12.1. Installing packages to a toolbox container

By default, running the toolbox command starts a container with the registry.redhat.io/rhel9/support-tools:latest image. This image contains the most frequently used support tools. If you need to collect node-specific data that requires a support tool that is not part of the image, you can install additional packages.

Prerequisites

- You have accessed a node with the oc debug node/<node_name> command.

- You can access your system as a user with root privileges.

Procedure

Set /host as the root directory within the debug shell. The debug pod mounts the host’s root file system in /host within the pod. By changing the root directory to /host, you can run binaries contained in the host’s executable paths:

# chroot /host

Start the toolbox container:

# toolbox

Install the additional package, such as wget:

# dnf install -y <package_name>

5.12.2. Starting an alternative image with toolbox

By default, running the toolbox command starts a container with the registry.redhat.io/rhel9/support-tools:latest image.

You can start an alternative image by creating a .toolboxrc file and specifying the image to run. However, running an older version of the support-tools image, such as registry.redhat.io/rhel8/support-tools:latest, is not supported on OpenShift Dedicated 4.

Prerequisites

- You have accessed a node with the oc debug node/<node_name> command.

- You can access your system as a user with root privileges.

Procedure

Set /host as the root directory within the debug shell. The debug pod mounts the host’s root file system in /host within the pod. By changing the root directory to /host, you can run binaries contained in the host’s executable paths:

# chroot /host

Optional: If you need to use an alternative image instead of the default image, create a .toolboxrc file in the home directory for the root user ID, and specify the image metadata:

REGISTRY=quay.io
IMAGE=fedora/fedora:latest
TOOLBOX_NAME=toolbox-fedora-latest

where:

REGISTRY=quay.io: Optional: Specify an alternative container registry.

IMAGE=fedora/fedora:latest: Specify an alternative image to start.

TOOLBOX_NAME=toolbox-fedora-latest: Optional: Specify an alternative name for the toolbox container.
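The .toolboxrc file can also be written from the shell in one step. The following minimal sketch uses the example values above. The write_toolboxrc function name and its optional path argument are hypothetical.

```shell
#!/bin/sh
# Sketch only: write_toolboxrc is a hypothetical helper that writes the
# example image metadata shown above.
write_toolboxrc() {
    # $1: target file (defaults to .toolboxrc in the current user's home)
    rc_file=${1:-$HOME/.toolboxrc}
    # The quoted heredoc delimiter keeps the content literal.
    cat > "$rc_file" <<'EOF'
REGISTRY=quay.io
IMAGE=fedora/fedora:latest
TOOLBOX_NAME=toolbox-fedora-latest
EOF
}
```

Run write_toolboxrc as the root user inside the chroot environment so that the file lands in the root user's home directory, and then start the toolbox container.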

Start a toolbox container by entering the following command:

toolbox