This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 24. Configuring Persistent Storage

24.1. Overview

The Kubernetes persistent volume framework allows you to provision an OpenShift Container Platform cluster with persistent storage using networked storage available in your environment. This can be done after completing the initial OpenShift Container Platform installation depending on your application needs, giving users a way to request those resources without having any knowledge of the underlying infrastructure.

These topics show how to configure persistent volumes in OpenShift Container Platform using the following supported volume plug-ins:

24.2. Persistent Storage Using NFS

24.2.1. Overview

OpenShift Container Platform clusters can be provisioned with persistent storage using NFS. Persistent volumes (PVs) and persistent volume claims (PVCs) provide a convenient method for sharing a volume across a project. While the NFS-specific information contained in a PV definition could also be defined directly in a pod definition, doing so does not create the volume as a distinct cluster resource, making the volume more susceptible to conflicts.

This topic covers the specifics of using the NFS persistent storage type. Some familiarity with OpenShift Container Platform and NFS is beneficial. See the Persistent Storage concept topic for details on the OpenShift Container Platform persistent volume (PV) framework in general.

24.2.2. Provisioning

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform. To provision NFS volumes, a list of NFS servers and export paths is all that is required.

You must first create an object definition for the PV:

Example 24.1. PV Object Definition Using NFS

- 1: The name of the volume. This is the PV identity in various oc <command> pod commands.

- 2: The amount of storage allocated to this volume.

- 3: Though this appears to be related to controlling access to the volume, it is actually used similarly to labels and used to match a PVC to a PV. Currently, no access rules are enforced based on the accessModes.

- 4: The volume type being used, in this case the nfs plug-in.

- 5: The path that is exported by the NFS server.

- 6: The host name or IP address of the NFS server.

- 7: The reclaim policy for the PV. This defines what happens to a volume when released from its claim. Valid options are Retain (default) and Recycle. See Reclaiming Resources.
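A minimal sketch of a PV definition consistent with the callouts above. The name pv0001, the 5Gi capacity, and the ReadWriteOnce access mode follow the oc get pv output shown later in this section. The export path and server host name are placeholder assumptions to replace with values from your environment.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                          # callout 1: name of the volume
spec:
  capacity:
    storage: 5Gi                        # callout 2: storage allocated to the volume
  accessModes:
    - ReadWriteOnce                     # callout 3: used to match a PVC to a PV
  nfs:                                  # callout 4: the nfs volume plug-in
    path: /exports/pv0001               # callout 5: exported path (assumed value)
    server: nfs.example.com             # callout 6: NFS server host (assumed value)
  persistentVolumeReclaimPolicy: Retain # callout 7: reclaim policy
```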

Each NFS volume must be mountable by all schedulable nodes in the cluster.

Save the definition to a file, for example nfs-pv.yaml, and create the PV:

$ oc create -f nfs-pv.yaml

persistentvolume "pv0001" created

Verify that the PV was created:

# oc get pv

NAME LABELS CAPACITY ACCESSMODES STATUS CLAIM REASON AGE

pv0001 <none> 5368709120 RWO Available 31s

The next step can be to create a PVC, which binds to the new PV:

Example 24.2. PVC Object Definition
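A minimal sketch of a claim that could bind to a ReadWriteOnce PV such as pv0001. The claim name nfs-claim1 and the requested size of 1Gi are illustrative assumptions; any request up to the capacity of the PV would match.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-claim1          # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce         # must be compatible with the access modes of the PV
  resources:
    requests:
      storage: 1Gi          # assumed request; the PV must offer at least this much
```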

Save the definition to a file, for example nfs-claim.yaml, and create the PVC:

# oc create -f nfs-claim.yaml

24.2.3. Enforcing Disk Quotas

You can use disk partitions to enforce disk quotas and size constraints. Each partition can be its own export. Each export is one PV. OpenShift Container Platform enforces unique names for PVs, but the uniqueness of the NFS volume’s server and path is up to the administrator.

Enforcing quotas in this way allows the developer to request persistent storage by a specific amount (for example, 10Gi) and be matched with a corresponding volume of equal or greater capacity.

24.2.4. NFS Volume Security

This section covers NFS volume security, including matching permissions and SELinux considerations. The user is expected to understand the basics of POSIX permissions, process UIDs, supplemental groups, and SELinux.

See the full Volume Security topic before implementing NFS volumes.

Developers request NFS storage by referencing, in the volumes section of their pod definition, either a PVC by name or the NFS volume plug-in directly.
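Both ways of referencing NFS storage from a pod can be sketched as follows. The pod name, image, mount path, and the claim name nfs-claim1 are hypothetical; the commented-out alternative uses placeholder server details.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-app                       # hypothetical pod name
spec:
  containers:
    - name: app
      image: registry.example.com/example/app:latest   # placeholder image
      volumeMounts:
        - name: nfs-data
          mountPath: /data            # where the volume appears in the container
  volumes:
    - name: nfs-data
      persistentVolumeClaim:
        claimName: nfs-claim1         # reference a PVC by name
    # Alternative: reference the NFS volume plug-in directly
    # - name: nfs-data
    #   nfs:
    #     server: nfs.example.com
    #     path: /exports/pv0001
```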

The /etc/exports file on the NFS server contains the accessible NFS directories. The target NFS directory has POSIX owner and group IDs. The OpenShift Container Platform NFS plug-in mounts the container’s NFS directory with the same POSIX ownership and permissions found on the exported NFS directory. However, the container does not run with an effective UID equal to the owner of the NFS mount, and this is the desired behavior.

As an example, if the target NFS directory appears on the NFS server as:

# ls -lZ /opt/nfs -d

drwxrws---. nfsnobody 5555 unconfined_u:object_r:usr_t:s0 /opt/nfs

# id nfsnobody

uid=65534(nfsnobody) gid=65534(nfsnobody) groups=65534(nfsnobody)

Then the container must match SELinux labels, and either run with a UID of 65534 (nfsnobody owner) or with 5555 in its supplemental groups in order to access the directory.

The owner ID of 65534 is used as an example. Even though NFS’s root_squash maps root (0) to nfsnobody (65534), NFS exports can have arbitrary owner IDs. Owner 65534 is not required for NFS exports.

24.2.4.1. Group IDs

The recommended way to handle NFS access (assuming it is not an option to change permissions on the NFS export) is to use supplemental groups. Supplemental groups in OpenShift Container Platform are used for shared storage, of which NFS is an example. In contrast, block storage, such as Ceph RBD or iSCSI, uses the fsGroup SCC strategy and the fsGroup value in the pod’s securityContext.

It is generally preferable to use supplemental group IDs to gain access to persistent storage versus using user IDs. Supplemental groups are covered further in the full Volume Security topic.

Because the group ID on the example target NFS directory shown above is 5555, the pod can define that group ID using supplementalGroups under the pod-level securityContext definition. For example:
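A minimal sketch of such a pod definition, assuming a hypothetical pod name and placeholder image. Only the supplementalGroups entry is taken from the example directory above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-group-example             # hypothetical pod name
spec:
  securityContext:                    # pod-level, applies to all containers
    supplementalGroups: [5555]        # group ID that owns /opt/nfs in the example
  containers:
    - name: app
      image: registry.example.com/example/app:latest   # placeholder image
```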

Assuming there are no custom SCCs that might satisfy the pod’s requirements, the pod likely matches the restricted SCC. This SCC has the supplementalGroups strategy set to RunAsAny, meaning that any supplied group ID is accepted without range checking.

As a result, the above pod passes admission and is launched. However, if group ID range checking is desired, a custom SCC, as described in pod security and custom SCCs, is the preferred solution. A custom SCC can be created such that minimum and maximum group IDs are defined, group ID range checking is enforced, and a group ID of 5555 is allowed.

To use a custom SCC, you must first add it to the appropriate service account. For example, use the default service account in the given project unless another has been specified on the pod specification. See Add an SCC to a User, Group, or Project for details.
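The fragment below sketches what a custom SCC with group ID range checking might look like. It is not a complete, vetted definition: the SCC name and the 5000 to 6000 range are assumptions, and the remaining settings are modeled loosely on the restricted SCC and should be compared against it before use.

```yaml
apiVersion: v1
kind: SecurityContextConstraints
metadata:
  name: nfs-supplemental-groups       # hypothetical SCC name
allowPrivilegedContainer: false
runAsUser:
  type: MustRunAsRange                # keep UID range checking
seLinuxContext:
  type: MustRunAs
fsGroup:
  type: MustRunAs
supplementalGroups:
  type: MustRunAs                     # enforce group ID range checking
  ranges:
    - min: 5000                       # assumed range that includes 5555
      max: 6000
volumes:                              # volume types pods may use under this SCC
  - configMap
  - downwardAPI
  - emptyDir
  - persistentVolumeClaim
  - secret
```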

24.2.4.2. User IDs

User IDs can be defined in the container image or in the pod definition. The full Volume Security topic covers controlling storage access based on user IDs, and should be read prior to setting up NFS persistent storage.

It is generally preferable to use supplemental group IDs to gain access to persistent storage versus using user IDs.

In the example target NFS directory shown above, the container needs its UID set to 65534 (ignoring group IDs for the moment), so the following can be added to the pod definition:
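A minimal sketch of that addition, assuming a hypothetical pod name and placeholder image. Here runAsUser is set in the container-level securityContext.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-uid-example               # hypothetical pod name
spec:
  containers:
    - name: app
      image: registry.example.com/example/app:latest   # placeholder image
      securityContext:
        runAsUser: 65534              # UID of the nfsnobody owner in the example
```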

Assuming the default project and the restricted SCC, the pod’s requested user ID of 65534 is not allowed, and therefore the pod fails. The pod fails for the following reasons:

- It requests 65534 as its user ID.

- All SCCs available to the pod are examined to see which SCC allows a user ID of 65534 (actually, all policies of the SCCs are checked but the focus here is on user ID).

- Because all available SCCs use MustRunAsRange for their runAsUser strategy, UID range checking is required.

- 65534 is not included in the SCC or project’s user ID range.

It is generally considered a good practice not to modify the predefined SCCs. The preferred way to fix this situation is to create a custom SCC, as described in the full Volume Security topic. A custom SCC can be created such that minimum and maximum user IDs are defined, UID range checking is still enforced, and the UID of 65534 is allowed.

To use a custom SCC, you must first add it to the appropriate service account. For example, use the default service account in the given project unless another has been specified on the pod specification. See Add an SCC to a User, Group, or Project for details.
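The fragment below sketches a custom SCC that keeps UID range checking while allowing UID 65534. The SCC name and the choice of a single-value range are assumptions, and the other settings are modeled loosely on the restricted SCC; treat it as a starting point rather than a complete definition.

```yaml
apiVersion: v1
kind: SecurityContextConstraints
metadata:
  name: nfs-uid-range                 # hypothetical SCC name
allowPrivilegedContainer: false
runAsUser:
  type: MustRunAsRange                # UID range checking is still enforced
  uidRangeMin: 65534                  # assumed range containing only 65534
  uidRangeMax: 65534
seLinuxContext:
  type: MustRunAs
fsGroup:
  type: MustRunAs
supplementalGroups:
  type: RunAsAny
volumes:                              # volume types pods may use under this SCC
  - configMap
  - downwardAPI
  - emptyDir
  - persistentVolumeClaim
  - secret
```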

24.2.4.3. SELinux

See the full Volume Security topic for information on controlling storage access in conjunction with using SELinux.

By default, SELinux does not allow writing from a pod to a remote NFS server. The NFS volume mounts correctly, but is read-only.

To enable writing to NFS volumes with SELinux enforcing on each node, run:

# setsebool -P virt_use_nfs 1

The -P option above makes the bool persistent between reboots.

The virt_use_nfs boolean is defined by the docker-selinux package. If an error is seen indicating that this bool is not defined, ensure this package has been installed.

24.2.4.4. Export Settings

In order to enable arbitrary container users to read and write the volume, each exported volume on the NFS server should conform to the following conditions:

- Each export must be:

/<example_fs> *(rw,root_squash)

- The firewall must be configured to allow traffic to the mount point.

For NFSv4, configure the default port 2049 (nfs) and port 111 (portmapper).

NFSv4

# iptables -I INPUT 1 -p tcp --dport 2049 -j ACCEPT

# iptables -I INPUT 1 -p tcp --dport 111 -j ACCEPT

For NFSv3, there are three ports to configure: 2049 (nfs), 20048 (mountd), and 111 (portmapper).

NFSv3

# iptables -I INPUT 1 -p tcp --dport 2049 -j ACCEPT

# iptables -I INPUT 1 -p tcp --dport 20048 -j ACCEPT

# iptables -I INPUT 1 -p tcp --dport 111 -j ACCEPT

- The NFS export and directory must be set up so that it is accessible by the target pods. Either set the export to be owned by the container’s primary UID, or supply the pod group access using supplementalGroups, as shown in Group IDs above. See the full Volume Security topic for additional pod security information as well.

24.2.5. Reclaiming Resources

NFS implements the OpenShift Container Platform Recyclable plug-in interface. Automatic processes handle reclamation tasks based on policies set on each persistent volume.

By default, PVs are set to Retain. NFS volumes which are set to Recycle are scrubbed (i.e., rm -rf is run on the volume) after being released from their claim (i.e., after the user’s PersistentVolumeClaim bound to the volume is deleted). Once recycled, the NFS volume can be bound to a new claim.

Once the claim to a PV is released (that is, the PVC is deleted), the PV object should not be re-used. Instead, a new PV should be created with the same basic volume details as the original.

For example, the administrator creates a PV named nfs1:
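A minimal sketch of such a PV. Only the name nfs1 comes from the text; the capacity, access mode, server address, and path are illustrative assumptions.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs1
spec:
  capacity:
    storage: 1Mi                      # assumed capacity
  accessModes:
    - ReadWriteMany                   # assumed access mode
  nfs:
    server: 192.168.1.1               # assumed NFS server address
    path: "/"                         # assumed export path
```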

The user creates PVC1, which binds to nfs1. The user then deletes PVC1, releasing the claim to nfs1, which causes nfs1 to be Released. If the administrator wishes to make the same NFS share available, they should create a new PV with the same NFS server details, but a different PV name:
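A minimal sketch of the replacement PV, assuming the hypothetical name nfs2. The capacity, access mode, server address, and path are illustrative and must match the details of the original PV in your environment.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs2                          # different name from the original PV
spec:
  capacity:
    storage: 1Mi                      # assumed capacity, same as the original
  accessModes:
    - ReadWriteMany                   # assumed access mode, same as the original
  nfs:
    server: 192.168.1.1               # same NFS server as the original (assumed value)
    path: "/"                         # same export path as the original (assumed value)
```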

Deleting the original PV and re-creating it with the same name is discouraged. Attempting to manually change the status of a PV from Released to Available causes errors and potential data loss.

A PV with a retention policy of Recycle scrubs (rm -rf) the data and marks the PV as Available for claim. The Recycle retention policy is deprecated starting in OpenShift Container Platform 3.6 and should be avoided. Anyone using the recycler should use dynamic provisioning and volume deletion instead.

24.2.6. Automation

Clusters can be provisioned with persistent storage using NFS in the following ways:

- Enforce storage quotas using disk partitions.

- Enforce security by restricting volumes to the project that has a claim to them.

- Configure reclamation of discarded resources for each PV.

There are many ways that you can use scripts to automate the above tasks. You can use an example Ansible playbook to help you get started.

24.2.7. Additional Configuration and Troubleshooting

Depending on what version of NFS is being used and how it is configured, there may be additional configuration steps needed for proper export and security mapping. The following are some that may apply:

- NFSv4 mount incorrectly shows all files with ownership of nobody:nobody

- Disabling ID mapping on NFSv4

24.3. Persistent Storage Using Red Hat Gluster Storage

24.3.1. Overview

Red Hat Gluster Storage can be configured to provide persistent storage and dynamic provisioning for OpenShift Container Platform. It can be used both containerized within OpenShift Container Platform (converged mode) and non-containerized on its own nodes (independent mode).

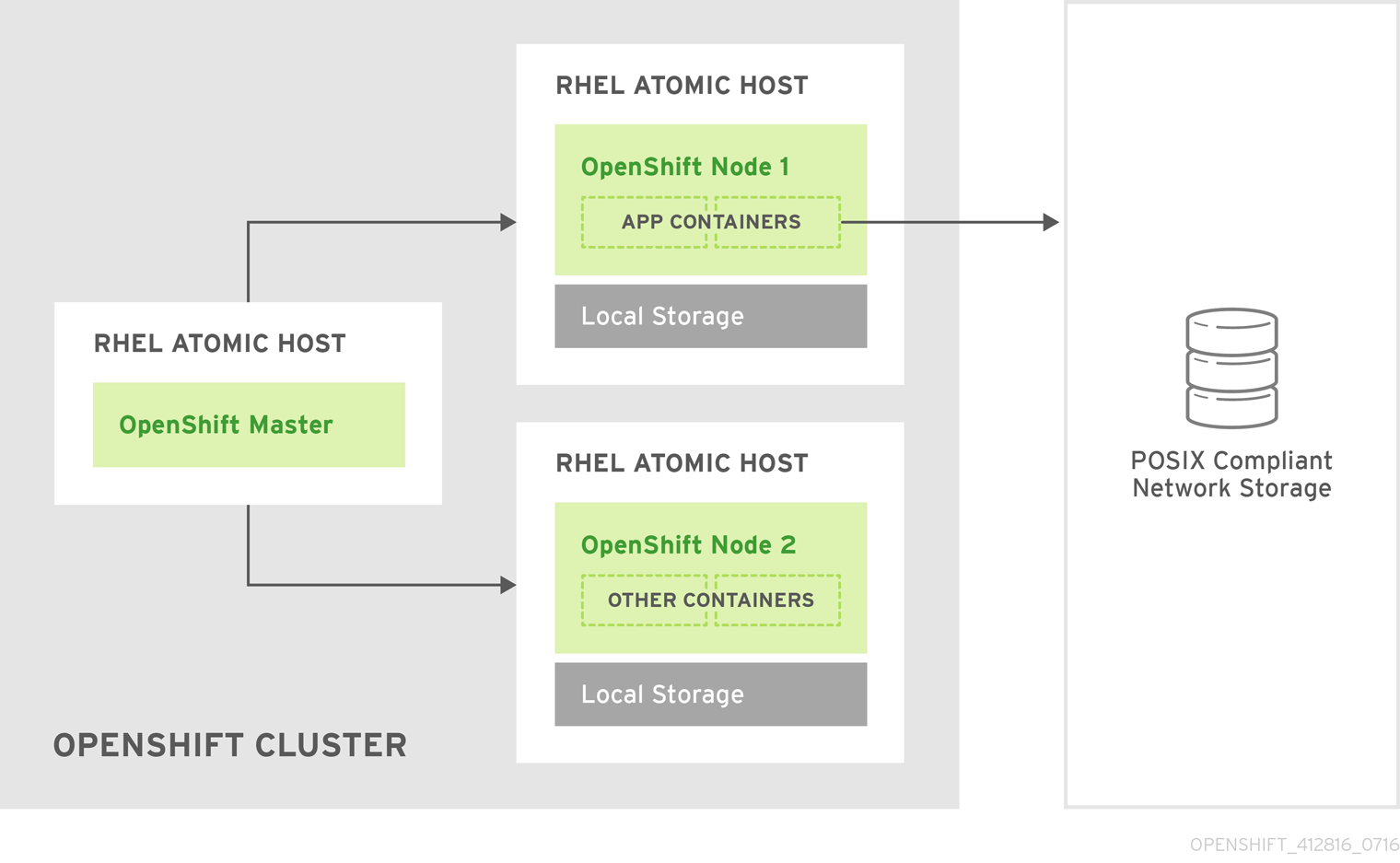

24.3.1.1. converged mode

With converged mode, Red Hat Gluster Storage runs containerized directly on OpenShift Container Platform nodes. This allows for compute and storage instances to be scheduled and run from the same set of hardware.

Figure 24.1. Architecture - converged mode

converged mode is available starting with Red Hat Gluster Storage 3.1 update 3. See converged mode for OpenShift Container Platform for additional documentation.

24.3.1.2. independent mode

With independent mode, Red Hat Gluster Storage runs on its own dedicated nodes and is managed by an instance of heketi, the GlusterFS volume management REST service. This heketi service can run either standalone or containerized. Containerization provides an easy mechanism for making the service highly available. This documentation focuses on the configuration where heketi is containerized.

24.3.1.3. Standalone Red Hat Gluster Storage

If you have a standalone Red Hat Gluster Storage cluster available in your environment, you can make use of volumes on that cluster using OpenShift Container Platform’s GlusterFS volume plug-in. This solution is a conventional deployment where applications run on dedicated compute nodes, an OpenShift Container Platform cluster, and storage is provided from its own dedicated nodes.

Figure 24.2. Architecture - Standalone Red Hat Gluster Storage Cluster Using OpenShift Container Platform's GlusterFS Volume Plug-in

See the Red Hat Gluster Storage Installation Guide and the Red Hat Gluster Storage Administration Guide for more on Red Hat Gluster Storage.

High availability of storage in the infrastructure is left to the underlying storage provider.

24.3.1.4. GlusterFS Volumes

GlusterFS volumes present a POSIX-compliant filesystem and consist of one or more "bricks" across one or more nodes in their cluster. A brick is just a directory on a given storage node and is typically the mount point for a block storage device. GlusterFS handles distribution and replication of files across a given volume’s bricks per that volume’s configuration.

It is recommended to use heketi for most common volume management operations such as create, delete, and resize. OpenShift Container Platform expects heketi to be present when using the GlusterFS provisioner. By default, heketi creates three-way replica volumes, that is, volumes where each file has three copies across three different nodes. As such, it is recommended that any Red Hat Gluster Storage clusters which will be used by heketi have at least three nodes available.

There are many features available for GlusterFS volumes, but they are beyond the scope of this documentation.

24.3.1.5. gluster-block Volumes

gluster-block volumes are volumes that can be mounted over iSCSI. This is done by creating a file on an existing GlusterFS volume and then presenting that file as a block device via an iSCSI target. Such GlusterFS volumes are called block-hosting volumes.

gluster-block volumes present a sort of trade-off. Being consumed as iSCSI targets, gluster-block volumes can only be mounted by one node/client at a time which is in contrast to GlusterFS volumes which can be mounted by multiple nodes/clients. Being files on the backend, however, allows for operations which are typically costly on GlusterFS volumes (e.g. metadata lookups) to be converted to ones which are typically much faster on GlusterFS volumes (e.g. reads and writes). This leads to potentially substantial performance improvements for certain workloads.

At this time, it is recommended to only use gluster-block volumes for OpenShift Logging and OpenShift Metrics storage.

24.3.1.6. Gluster S3 Storage

The Gluster S3 service allows user applications to access GlusterFS storage via an S3 interface. The service binds to two GlusterFS volumes, one for object data and one for object metadata, and translates incoming S3 REST requests into filesystem operations on the volumes. It is recommended to run the service as a pod inside OpenShift Container Platform.

At this time, use and installation of the Gluster S3 service is in tech preview.

24.3.2. Considerations

This section covers a few topics that should be taken into consideration when using Red Hat Gluster Storage with OpenShift Container Platform.

24.3.2.1. Software Prerequisites

To access GlusterFS volumes, the mount.glusterfs command must be available on all schedulable nodes. For RPM-based systems, the glusterfs-fuse package must be installed:

# yum install glusterfs-fuse

This package comes installed on every RHEL system. However, it is recommended to update to the latest available version from Red Hat Gluster Storage if your servers use x86_64 architecture. To do this, the following RPM repository must be enabled:

# subscription-manager repos --enable=rh-gluster-3-client-for-rhel-7-server-rpms

If glusterfs-fuse is already installed on the nodes, ensure that the latest version is installed:

# yum update glusterfs-fuse

24.3.2.2. Hardware Requirements

Any nodes used in a converged mode or independent mode cluster are considered storage nodes. Storage nodes can be grouped into distinct cluster groups, though a single node cannot be in multiple groups. For each group of storage nodes:

- A minimum of three storage nodes per group is required.

- Each storage node must have a minimum of 8 GB of RAM. This is to allow running the Red Hat Gluster Storage pods, as well as other applications and the underlying operating system.

  - Each GlusterFS volume also consumes memory on every storage node in its storage cluster, which is about 30 MB. The total amount of RAM should be determined based on how many concurrent volumes are desired or anticipated.

- Each storage node must have at least one raw block device with no present data or metadata. These block devices will be used in their entirety for GlusterFS storage. Make sure the following are not present:

  - Partition tables (GPT or MSDOS)

  - Filesystems or residual filesystem signatures

  - LVM2 signatures of former Volume Groups and Logical Volumes

  - LVM2 metadata of LVM2 physical volumes

  If in doubt, wipefs -a <device> should clear any of the above.

It is recommended to plan for two clusters: one dedicated to storage for infrastructure applications (such as an OpenShift Container Registry) and one dedicated to storage for general applications. This would require a total of six storage nodes. This recommendation is made to avoid potential impacts on performance in I/O and volume creation.

24.3.2.3. Storage Sizing

Every GlusterFS cluster must be sized based on the needs of the anticipated applications that will use its storage. For example, there are sizing guides available for both OpenShift Logging and OpenShift Metrics.

Some additional things to consider are:

- For each converged mode or independent mode cluster, the default behavior is to create GlusterFS volumes with three-way replication. As such, the total storage to plan for should be the desired capacity times three.

  - As an example, each heketi instance creates a heketidbstorage volume that is 2 GB in size, requiring a total of 6 GB of raw storage across three nodes in the storage cluster. This capacity is always required and should be taken into consideration for sizing calculations.

  - Applications like an integrated OpenShift Container Registry share a single GlusterFS volume across multiple instances of the application.

- gluster-block volumes require the presence of a GlusterFS block-hosting volume with enough capacity to hold the full size of any given block volume’s capacity.

  - By default, if no such block-hosting volume exists, one will be automatically created at a set size. The default for this size is 100 GB. If there is not enough space in the cluster to create the new block-hosting volume, the creation of the block volume will fail. Both the auto-create behavior and the auto-created volume size are configurable.

  - Applications with multiple instances that use gluster-block volumes, such as OpenShift Logging and OpenShift Metrics, will use one volume per instance.

- The Gluster S3 service binds to two GlusterFS volumes. In a default cluster installation, these volumes are 1 GB each, consuming a total of 6 GB of raw storage.

24.3.2.4. Volume Operation Behaviors

Volume operations, such as create and delete, can be impacted by a variety of environmental circumstances and can in turn affect applications as well.

If the application pod requests a dynamically provisioned GlusterFS persistent volume claim (PVC), then extra time might have to be considered for the volume to be created and bound to the corresponding PVC. This affects the startup time for an application pod.

Note: Creation time of GlusterFS volumes scales linearly depending on the number of volumes. As an example, given 100 volumes in a cluster using recommended hardware specifications, each volume took approximately 6 seconds to be created, allocated, and bound to a pod.
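For context, a dynamically provisioned claim of this kind might look like the following sketch. The claim name, requested size, and the StorageClass name glusterfs-storage are assumptions; use the StorageClass defined in your cluster.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gluster-dynamic-claim         # hypothetical claim name
spec:
  storageClassName: glusterfs-storage # assumed StorageClass backed by the GlusterFS provisioner
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi                    # assumed size; the volume is created on demand
```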

When a PVC is deleted, that action will trigger the deletion of the underlying GlusterFS volume. While PVCs will disappear immediately from the oc get pvc output, this does not mean the volume has been fully deleted. A GlusterFS volume can only be considered deleted when it does not show up in the command-line outputs for heketi-cli volume list and gluster volume list.

Note: The time to delete the GlusterFS volume and recycle its storage depends on and scales linearly with the number of active GlusterFS volumes. While pending volume deletes do not affect running applications, storage administrators should be aware of and be able to estimate how long they will take, especially when tuning resource consumption at scale.

24.3.2.5. Volume Security

This section covers Red Hat Gluster Storage volume security, including Portable Operating System Interface [for Unix] (POSIX) permissions and SELinux considerations. Understanding the basics of Volume Security, POSIX permissions, and SELinux is presumed.

24.3.2.5.1. POSIX Permissions

Red Hat Gluster Storage volumes present POSIX-compliant file systems. As such, access permissions can be managed using standard command-line tools such as chmod and chown.

For converged mode and independent mode, it is also possible to specify a group ID that will own the root of the volume at volume creation time. For static provisioning, this is specified as part of the heketi-cli volume creation command:

$ heketi-cli volume create --size=100 --gid=10001000

The PersistentVolume that will be associated with this volume must be annotated with the group ID so that pods consuming the PersistentVolume can have access to the file system. This annotation takes the form of:

pv.beta.kubernetes.io/gid: "<GID>"
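As a sketch, the annotation sits under metadata.annotations of the PersistentVolume. The group ID matches the heketi-cli example above; the PV name, Endpoints name, and GlusterFS volume name are hypothetical, and the Endpoints object is assumed to already exist.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: gluster-pv-example            # hypothetical PV name
  annotations:
    pv.beta.kubernetes.io/gid: "10001000"   # group ID given to heketi-cli with --gid
spec:
  capacity:
    storage: 100Gi                    # matches --size=100 in the example
  accessModes:
    - ReadWriteMany
  glusterfs:
    endpoints: glusterfs-cluster      # assumed Endpoints object listing the storage nodes
    path: vol_example                 # assumed GlusterFS volume name
    readOnly: false
  persistentVolumeReclaimPolicy: Retain
```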

For dynamic provisioning, the provisioner automatically generates and applies a group ID. It is possible to control the range from which this group ID is selected using the gidMin and gidMax StorageClass parameters (see Dynamic Provisioning). The provisioner also takes care of annotating the generated PersistentVolume with the group ID.
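A sketch of a StorageClass that restricts the generated group IDs to a range. The StorageClass name, heketi URL, user, secret reference, and the chosen range are all assumptions for illustration.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: glusterfs-storage             # hypothetical StorageClass name
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://heketi.example.com:8080"   # assumed heketi REST endpoint
  restuser: "admin"                   # assumed heketi user
  secretName: "heketi-secret"         # assumed secret holding the heketi user key
  secretNamespace: "default"
  gidMin: "40000"                     # lowest group ID the provisioner may assign
  gidMax: "50000"                     # highest group ID the provisioner may assign
```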

24.3.2.5.2. SELinux

By default, SELinux does not allow writing from a pod to a remote Red Hat Gluster Storage server. To enable writing to Red Hat Gluster Storage volumes with SELinux on, run the following on each node running GlusterFS:

$ sudo setsebool -P virt_sandbox_use_fusefs on

$ sudo setsebool -P virt_use_fusefs on

The -P option makes the boolean persistent between reboots.

The virt_sandbox_use_fusefs boolean is defined by the docker-selinux package. If you get an error saying it is not defined, ensure that this package is installed.

If you use Atomic Host, the SELinux booleans are cleared when you upgrade Atomic Host. When you upgrade Atomic Host, you must set these boolean values again.

24.3.3. Support Requirements

The following requirements must be met to create a supported integration of Red Hat Gluster Storage and OpenShift Container Platform.

For independent mode or standalone Red Hat Gluster Storage:

- Minimum version: Red Hat Gluster Storage 3.1.3

- All Red Hat Gluster Storage nodes must have valid subscriptions to Red Hat Network channels and Subscription Manager repositories.

- Red Hat Gluster Storage nodes must adhere to the requirements specified in the Planning Red Hat Gluster Storage Installation.

- Red Hat Gluster Storage nodes must be completely up to date with the latest patches and upgrades. Refer to the Red Hat Gluster Storage Installation Guide to upgrade to the latest version.

- A fully-qualified domain name (FQDN) must be set for each Red Hat Gluster Storage node. Ensure that correct DNS records exist, and that the FQDN is resolvable via both forward and reverse DNS lookup.

24.3.4. Installation

For standalone Red Hat Gluster Storage, there is no component installation required to use it with OpenShift Container Platform. OpenShift Container Platform comes with a built-in GlusterFS volume driver, allowing it to make use of existing volumes on existing clusters. See provisioning for more on how to make use of existing volumes.

For converged mode and independent mode, it is recommended to use the cluster installation process to install the required components.

24.3.4.1. independent mode: Installing Red Hat Gluster Storage Nodes

For independent mode, each Red Hat Gluster Storage node must have the appropriate system configurations (e.g. firewall ports, kernel modules) and the Red Hat Gluster Storage services must be running. The services should not be further configured, and should not have formed a Trusted Storage Pool.

The installation of Red Hat Gluster Storage nodes is beyond the scope of this documentation. For more information, see Setting Up independent mode.

24.3.4.2. Using the Installer

The cluster installation process can be used to install one or both of two GlusterFS node groups:

- glusterfs: A general storage cluster for use by user applications.

- glusterfs_registry: A dedicated storage cluster for use by infrastructure applications such as an integrated OpenShift Container Registry.

It is recommended to deploy both groups to avoid potential impacts on performance in I/O and volume creation. Both of these are defined in the inventory hosts file.

The definition of the clusters is done by including the relevant names in the [OSEv3:children] group, creating similarly named groups, and then populating the groups with the node information. The clusters can then be configured through a variety of variables in the [OSEv3:vars] group. glusterfs variables begin with openshift_storage_glusterfs_ and glusterfs_registry variables begin with openshift_storage_glusterfs_registry_. A few other variables, such as openshift_hosted_registry_storage_kind, interact with the GlusterFS clusters.

To prevent Red Hat Gluster Storage pods from upgrading after an outage, which would lead to a cluster running different Red Hat Gluster Storage versions, it is recommended to specify the image name and version tags for all containerized components. The relevant variables are:

- openshift_storage_glusterfs_image

- openshift_storage_glusterfs_block_image

- openshift_storage_glusterfs_s3_image

- openshift_storage_glusterfs_heketi_image

- openshift_storage_glusterfs_registry_image

- openshift_storage_glusterfs_registry_block_image

- openshift_storage_glusterfs_registry_s3_image

- openshift_storage_glusterfs_registry_heketi_image

The image variables for gluster-block and gluster-s3 are only necessary if the corresponding deployment variables (the variables ending in _block_deploy and _s3_deploy) are true.

A valid image tag is required for your deployment to succeed. Replace <tag> with the version of Red Hat Gluster Storage that is compatible with OpenShift Container Platform 3.10 as described in the interoperability matrix for the following variables in your inventory file:

- openshift_storage_glusterfs_image=registry.redhat.io/rhgs3/rhgs-server-rhel7:<tag>

- openshift_storage_glusterfs_block_image=registry.redhat.io/rhgs3/rhgs-gluster-block-prov-rhel7:<tag>

- openshift_storage_glusterfs_s3_image=registry.redhat.io/rhgs3/rhgs-s3-server-rhel7:<tag>

- openshift_storage_glusterfs_heketi_image=registry.redhat.io/rhgs3/rhgs-volmanager-rhel7:<tag>

- openshift_storage_glusterfs_registry_image=registry.redhat.io/rhgs3/rhgs-server-rhel7:<tag>

- openshift_storage_glusterfs_registry_block_image=registry.redhat.io/rhgs3/rhgs-gluster-block-prov-rhel7:<tag>

- openshift_storage_glusterfs_registry_s3_image=registry.redhat.io/rhgs3/rhgs-s3-server-rhel7:<tag>

- openshift_storage_glusterfs_registry_heketi_image=registry.redhat.io/rhgs3/rhgs-volmanager-rhel7:<tag>

For a complete list of variables, see the GlusterFS role README on GitHub.

Once the variables are configured, there are several playbooks available depending on the circumstances of the installation:

- The main playbook for cluster installations can be used to deploy the GlusterFS clusters in tandem with an initial installation of OpenShift Container Platform.

  - This includes deploying an integrated OpenShift Container Registry that uses GlusterFS storage.

  - This does not include OpenShift Logging or OpenShift Metrics, as that is currently still a separate step. See converged mode for OpenShift Logging and Metrics for more information.

- playbooks/openshift-glusterfs/config.yml can be used to deploy the clusters onto an existing OpenShift Container Platform installation.

- playbooks/openshift-glusterfs/registry.yml can be used to deploy the clusters onto an existing OpenShift Container Platform installation. In addition, this will deploy an integrated OpenShift Container Registry which uses GlusterFS storage.

  Important: There must not be a pre-existing registry in the OpenShift Container Platform cluster.

- playbooks/openshift-glusterfs/uninstall.yml can be used to remove existing clusters matching the configuration in the inventory hosts file. This is useful for cleaning up the OpenShift Container Platform environment in the case of a failed deployment due to configuration errors.

  Note: The GlusterFS playbooks are not guaranteed to be idempotent.

  Note: Running the playbooks more than once for a given installation is currently not supported without deleting the entire GlusterFS installation (including disk data) and starting over.

24.3.4.2.1. Example: Basic converged mode Installation

In your inventory file, include the following variables in the [OSEv3:vars] section, and adjust them as required for your configuration:

Add glusterfs in the [OSEv3:children] section to enable the [glusterfs] group:

[OSEv3:children]
masters
nodes
glusterfs

Add a [glusterfs] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs]
node11.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
node12.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
node13.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'

Add the hosts listed under [glusterfs] to the [nodes] group:

[nodes]
...
node11.example.com openshift_node_group_name="node-config-compute"
node12.example.com openshift_node_group_name="node-config-compute"
node13.example.com openshift_node_group_name="node-config-compute"

Note: The preceding steps only provide some of the options that must be added to the inventory file. Use the complete inventory file to deploy Red Hat Gluster Storage.

Run the installation playbook and provide the relative path for the inventory file as an option.

For a new OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For an installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

24.3.4.2.2. Example: Basic independent mode Installation

In your inventory file, include the following variables in the [OSEv3:vars] section, and adjust them as required for your configuration:

Add glusterfs in the [OSEv3:children] section to enable the [glusterfs] group:

[OSEv3:children]
masters
nodes
glusterfs

Add a [glusterfs] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Also, set glusterfs_ip to the IP address of the node. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_ip=<ip_address> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs]
gluster1.example.com glusterfs_ip=192.168.10.11 glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
gluster2.example.com glusterfs_ip=192.168.10.12 glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
gluster3.example.com glusterfs_ip=192.168.10.13 glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'

Note: The preceding steps only provide some of the options that must be added to the inventory file. Use the complete inventory file to deploy Red Hat Gluster Storage.

Run the installation playbook and provide the relative path for the inventory file as an option.

For a new OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For an installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

24.3.4.2.3. Example: converged mode with an Integrated OpenShift Container Registry

In your inventory file, set the following variables under the [OSEv3:vars] section, and adjust them as required for your configuration:

[OSEv3:vars]
...
openshift_hosted_registry_storage_kind=glusterfs
openshift_hosted_registry_storage_volume_size=5Gi
openshift_hosted_registry_selector='node-role.kubernetes.io/infra=true'

- 1

- Running the integrated OpenShift Container Registry on infrastructure nodes is recommended. Infrastructure nodes are nodes dedicated to running applications deployed by administrators to provide services for the OpenShift Container Platform cluster.

Add glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs_registry] group:

[OSEv3:children]
masters
nodes
glusterfs_registry

Add a [glusterfs_registry] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs_registry]
node11.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
node12.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
node13.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'

Add the hosts listed under [glusterfs_registry] to the [nodes] group:

[nodes]
...
node11.example.com openshift_node_group_name="node-config-infra"
node12.example.com openshift_node_group_name="node-config-infra"
node13.example.com openshift_node_group_name="node-config-infra"

Note: The preceding steps only provide some of the options that must be added to the inventory file. Use the complete inventory file to deploy Red Hat Gluster Storage.

Run the installation playbook and provide the relative path for the inventory file as an option.

For a new OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For an installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

24.3.4.2.4. Example: converged mode for OpenShift Logging and Metrics

In your inventory file, set the following variables under the [OSEv3:vars] section, and adjust them as required for your configuration:

- 1 2 3 5 6 7

- It is recommended to run the integrated OpenShift Container Registry, Logging, and Metrics on nodes dedicated to "infrastructure" applications, that is, applications deployed by administrators to provide services for the OpenShift Container Platform cluster.

- 4 10

- Specify the StorageClass to be used for Logging and Metrics. This name is generated from the name of the target GlusterFS cluster (for example, glusterfs-<name>-block). In this example, <name> defaults to registry.

- 8

- OpenShift Logging requires that a PVC size be specified. The supplied value is only an example, not a recommendation.

- 9

- If using Persistent Elasticsearch Storage, set the storage type to pvc.

Note: See the GlusterFS role README for details on these and other variables.

Add glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs_registry] group:

[OSEv3:children]
masters
nodes
glusterfs_registry

Add a [glusterfs_registry] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs_registry]
node11.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
node12.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
node13.example.com glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'

Add the hosts listed under [glusterfs_registry] to the [nodes] group:

[nodes]
...
node11.example.com openshift_node_group_name="node-config-infra"
node12.example.com openshift_node_group_name="node-config-infra"
node13.example.com openshift_node_group_name="node-config-infra"

Note: The preceding steps only provide some of the options that must be added to the inventory file. Use the complete inventory file to deploy Red Hat Gluster Storage.

Run the installation playbook and provide the relative path for the inventory file as an option.

For a new OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For an installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

24.3.4.2.5. Example: converged mode for Applications, Registry, Logging, and Metrics

In your inventory file, set the following variables under the [OSEv3:vars] section, and adjust them as required for your configuration:

- 1 2 3 4 6 7 8

- Running the integrated OpenShift Container Registry, Logging, and Metrics on infrastructure nodes is recommended. Infrastructure nodes are nodes dedicated to running applications deployed by administrators to provide services for the OpenShift Container Platform cluster.

- 5 11

- Specify the StorageClass to be used for Logging and Metrics. This name is generated from the name of the target GlusterFS cluster, for example glusterfs-<name>-block. In this example, <name> defaults to registry.

- 9

- Specifying a PVC size is required for OpenShift Logging. The supplied value is only an example, not a recommendation.

- 10

- If using Persistent Elasticsearch Storage, set the storage type to pvc.

- 12

- Size, in GB, of GlusterFS volumes that will be automatically created to host glusterblock volumes. This variable is used only if there is not enough space available for a glusterblock volume create request. This value represents an upper limit on the size of glusterblock volumes unless you manually create larger GlusterFS block-hosting volumes.

Add glusterfs and glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs] and [glusterfs_registry] groups:

[OSEv3:children]
...
glusterfs
glusterfs_registry

Add [glusterfs] and [glusterfs_registry] sections with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

Add the hosts listed under [glusterfs] and [glusterfs_registry] to the [nodes] group:

Note: The preceding steps only provide some of the options that must be added to the inventory file. Use the complete inventory file to deploy Red Hat Gluster Storage.

Run the installation playbook and provide the relative path for the inventory file as an option.

For a new OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For an installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

24.3.4.2.6. Example: independent mode for Applications, Registry, Logging, and Metrics

In your inventory file, set the following variables under the [OSEv3:vars] section, and adjust them as required for your configuration:

- 1 2 3 4 6 7 8

- It is recommended to run the integrated OpenShift Container Registry on nodes dedicated to "infrastructure" applications, that is, applications deployed by administrators to provide services for the OpenShift Container Platform cluster. It is up to the administrator to select and label nodes for infrastructure applications.

- 5 11

- Specify the StorageClass to be used for Logging and Metrics. This name is generated from the name of the target GlusterFS cluster (for example, glusterfs-<name>-block). In this example, <name> defaults to registry.

- 9

- OpenShift Logging requires that a PVC size be specified. The supplied value is only an example, not a recommendation.

- 10

- If using Persistent Elasticsearch Storage, set the storage type to pvc.

- 12

- Size, in GB, of GlusterFS volumes that will be automatically created to host glusterblock volumes. This variable is used only if there is not enough space available for a glusterblock volume create request. This value represents an upper limit on the size of glusterblock volumes unless you manually create larger GlusterFS block-hosting volumes.

Add glusterfs and glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs] and [glusterfs_registry] groups:

[OSEv3:children]
...
glusterfs
glusterfs_registry

Add [glusterfs] and [glusterfs_registry] sections with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Also, set glusterfs_ip to the IP address of the node. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_ip=<ip_address> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

Note: The preceding steps only provide some of the options that must be added to the inventory file. Use the complete inventory file to deploy Red Hat Gluster Storage.

Run the installation playbook and provide the relative path for the inventory file as an option.

For a new OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For an installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

24.3.5. Uninstall converged mode

For converged mode, an OpenShift Container Platform install comes with a playbook to uninstall all resources and artifacts from the cluster. To use the playbook, provide the original inventory file that was used to install the target instance of converged mode and run the following playbook:

# ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/uninstall.yml

In addition, the playbook supports the use of a variable called openshift_storage_glusterfs_wipe which, when enabled, destroys any data on the block devices that were used for Red Hat Gluster Storage backend storage. To use the openshift_storage_glusterfs_wipe variable:

# ansible-playbook -i <path_to_inventory_file> -e "openshift_storage_glusterfs_wipe=true" /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/uninstall.yml

This procedure destroys data. Proceed with caution.

24.3.6. Provisioning

GlusterFS volumes can be provisioned either statically or dynamically. Static provisioning is available with all configurations. Only converged mode and independent mode support dynamic provisioning.

24.3.6.1. Static Provisioning

To enable static provisioning, first create a GlusterFS volume. See the Red Hat Gluster Storage Administration Guide for information on how to do this using the gluster command-line interface or the heketi project site for information on how to do this using heketi-cli. For this example, the volume will be named myVol1.

Define the following Service and Endpoints in gluster-endpoints.yaml:

From the OpenShift Container Platform master host, create the Service and Endpoints:

$ oc create -f gluster-endpoints.yaml
service "glusterfs-cluster" created
endpoints "glusterfs-cluster" created

Verify that the Service and Endpoints were created:

Note: Endpoints are unique per project. Each project accessing the GlusterFS volume needs its own Endpoints.
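The contents of gluster-endpoints.yaml are not reproduced in this section. As a minimal sketch, assuming a GlusterFS cluster with two storage nodes, the file might look like the following. The IP addresses are hypothetical placeholders, and the port value is an arbitrary legal port number; only the glusterfs-cluster name is taken from the command output above.

```yaml
# gluster-endpoints.yaml (illustrative sketch, not the original listing)
---
apiVersion: v1
kind: Service
metadata:
  name: glusterfs-cluster      # name shown in the `oc create` output
spec:
  ports:
    - port: 1                  # assumed placeholder; any legal port number
---
apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs-cluster      # must match the Service name
subsets:
  - addresses:
      - ip: 192.168.122.221    # hypothetical GlusterFS node address
    ports:
      - port: 1
  - addresses:
      - ip: 192.168.122.222    # hypothetical GlusterFS node address
    ports:
      - port: 1
```

Pairing the Endpoints with a Service of the same name keeps the manually defined Endpoints from being cleaned up as orphaned.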

In order to access the volume, the container must run with either a user ID (UID) or group ID (GID) that has access to the file system on the volume. This information can be discovered in the following manner:

Define the following PersistentVolume (PV) in gluster-pv.yaml:

- 1

- The name of the volume.

- 2

- The GID on the root of the GlusterFS volume.

- 3

- The amount of storage allocated to this volume.

- 4

- accessModes are used as labels to match a PV and a PVC. They currently do not define any form of access control.

- 5

- The Endpoints resource previously created.

- 6

- The GlusterFS volume that will be accessed.
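The callouts above describe a PV definition whose listing is not shown here. A minimal sketch of gluster-pv.yaml, consistent with those six callouts, could look like this. The volume name, capacity, and access mode follow the `oc get pv` output later in this procedure; the GID value and the reclaim policy are assumptions for illustration.

```yaml
# gluster-pv.yaml (illustrative sketch, not the original listing)
apiVersion: v1
kind: PersistentVolume
metadata:
  name: gluster-default-volume          # 1: the name of the volume
  annotations:
    pv.beta.kubernetes.io/gid: "590"    # 2: GID on the volume root (hypothetical value)
spec:
  capacity:
    storage: 2Gi                        # 3: storage allocated to this volume
  accessModes:                          # 4: used as labels to match a PV and a PVC
    - ReadWriteMany
  glusterfs:
    endpoints: glusterfs-cluster        # 5: the Endpoints resource created earlier
    path: myVol1                        # 6: the GlusterFS volume to access
    readOnly: false
  persistentVolumeReclaimPolicy: Retain # assumed; choose per your retention needs
```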

From the OpenShift Container Platform master host, create the PV:

$ oc create -f gluster-pv.yaml

Verify that the PV was created:

$ oc get pv
NAME                     LABELS    CAPACITY     ACCESSMODES   STATUS      CLAIM     REASON    AGE
gluster-default-volume   <none>    2147483648   RWX           Available                       2s

Create a PersistentVolumeClaim (PVC) that will bind to the new PV in gluster-claim.yaml:

From the OpenShift Container Platform master host, create the PVC:

$ oc create -f gluster-claim.yaml

Verify that the PV and PVC are bound:

PVCs are unique per project. Each project accessing the GlusterFS volume needs its own PVC. PVs are not bound to a single project, so PVCs across multiple projects may refer to the same PV.
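The gluster-claim.yaml listing is not included in this section. As a hedged sketch, a claim that can bind to the 2Gi ReadWriteMany PV from this procedure might be written as follows. The claim name and the requested size are assumptions; the request only needs to be no larger than the PV capacity, and the access mode must match.

```yaml
# gluster-claim.yaml (illustrative sketch, not the original listing)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gluster-claim      # hypothetical name, derived from the file name
spec:
  accessModes:
    - ReadWriteMany        # must match the access mode offered by the PV
  resources:
    requests:
      storage: 1Gi         # assumed size; must not exceed the PV capacity (2Gi)
```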

24.3.6.2. Dynamic Provisioning

To enable dynamic provisioning, first create a StorageClass object definition. The definition below is based on the minimum requirements needed for this example to work with OpenShift Container Platform. See Dynamic Provisioning and Creating Storage Classes for additional parameters and specification definitions.

From the OpenShift Container Platform master host, create the StorageClass:

# oc create -f gluster-storage-class.yaml
storageclass "glusterfs" created

Create a PVC using the newly-created StorageClass. For example:
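The example PVC is not reproduced here. A minimal sketch of glusterfs-dyn-pvc.yaml, using the claim name, size, access mode, and StorageClass name that appear in the command output in this procedure, might look like this. Referencing the class through the storageClassName field is an assumption; older clusters may use a storage-class annotation instead.

```yaml
# glusterfs-dyn-pvc.yaml (illustrative sketch, not the original listing)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gluster1                 # claim name shown in the `oc create` output
spec:
  accessModes:
    - ReadWriteMany              # RWX, as reported by `oc get pvc`
  resources:
    requests:
      storage: 30Gi              # capacity reported by `oc get pvc`
  storageClassName: glusterfs    # the StorageClass created in the previous step
```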

From the OpenShift Container Platform master host, create the PVC:

# oc create -f glusterfs-dyn-pvc.yaml
persistentvolumeclaim "gluster1" created

View the PVC to see that the volume was dynamically created and bound to the PVC:

# oc get pvc
NAME       STATUS    VOLUME                                     CAPACITY   ACCESSMODES   STORAGECLASS   AGE
gluster1   Bound     pvc-78852230-d8e2-11e6-a3fa-0800279cf26f   30Gi       RWX           glusterfs      42s
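For reference, the StorageClass definition that this procedure starts from is not shown in this section. A minimal sketch of gluster-storage-class.yaml, assuming an unauthenticated heketi endpoint, could look like the following. The resturl value is a hypothetical placeholder for your heketi service address, and the parameter set is an assumption; see the referenced topics for the full list.

```yaml
# gluster-storage-class.yaml (illustrative sketch, not the original listing)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: glusterfs                       # name shown in the `oc create` output
provisioner: kubernetes.io/glusterfs    # in-tree GlusterFS dynamic provisioner
parameters:
  resturl: "http://10.42.0.0:8080"      # hypothetical heketi REST URL
  restauthenabled: "false"              # assumed: heketi authentication disabled
```

If heketi authentication is enabled in your environment, the class would also need credentials, typically supplied through a secret rather than inline.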

24.4. Persistent Storage Using OpenStack Cinder

24.4.1. Overview

You can provision your OpenShift Container Platform cluster with persistent storage using OpenStack Cinder. Some familiarity with Kubernetes and OpenStack is assumed.

Before you create persistent volumes (PVs) using Cinder, configure OpenShift Container Platform for OpenStack.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure. You can provision OpenStack Cinder volumes dynamically.

Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims, however, are specific to a project or namespace and can be requested by users.

High-availability of storage in the infrastructure is left to the underlying storage provider.

24.4.2. Provisioning Cinder PVs

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform. After ensuring that OpenShift Container Platform is configured for OpenStack, all that is required for Cinder is a Cinder volume ID and the PersistentVolume API.

24.4.2.1. Creating the Persistent Volume

Cinder does not support the 'Recycle' reclaim policy.

You must define your PV in an object definition before creating it in OpenShift Container Platform:

Save your object definition to a file, for example cinder-pv.yaml:

Important: Do not change the fsType parameter value after the volume is formatted and provisioned. Changing this value can result in data loss and pod failure.

Create the persistent volume:

# oc create -f cinder-pv.yaml
persistentvolume "pv0001" created

Verify that the persistent volume exists:

# oc get pv
NAME      LABELS    CAPACITY   ACCESSMODES   STATUS      CLAIM     REASON    AGE
pv0001    <none>    5Gi        RWO           Available                       2s

Users can then request storage using persistent volume claims, which can now utilize your new persistent volume.

Persistent volume claims exist only in the user’s namespace and can be referenced by a pod within that same namespace. Any attempt to access a persistent volume claim from a different namespace causes the pod to fail.
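The cinder-pv.yaml object definition is not reproduced above. As a minimal sketch, assuming an existing Cinder volume, it might look like the following. The name, capacity, and access mode follow the `oc get pv` output in this procedure; the file system type is an assumed example, and the volume ID is a placeholder that you must replace with the ID of your own Cinder volume.

```yaml
# cinder-pv.yaml (illustrative sketch, not the original listing)
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                        # name used by persistent volume claims and pods
spec:
  capacity:
    storage: 5Gi                      # storage allocated to this volume
  accessModes:
    - ReadWriteOnce                   # RWO, as reported by `oc get pv`
  cinder:                             # marks this volume as an OpenStack Cinder volume
    fsType: ext3                      # assumed file system; do not change after provisioning
    volumeID: "<cinder_volume_id>"    # placeholder: ID of the existing Cinder volume
```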

24.4.2.2. Cinder PV format

Before OpenShift Container Platform mounts the volume and passes it to a container, it checks that it contains a file system as specified by the fsType parameter in the persistent volume definition. If the device is not formatted with the file system, all data from the device is erased and the device is automatically formatted with the given file system.

This allows using unformatted Cinder volumes as persistent volumes, because OpenShift Container Platform formats them before the first use.

24.4.2.3. Cinder volume security

If you use Cinder PVs in your application, configure security for their deployment configurations.

Review the Volume Security information before implementing Cinder volumes.

Create an SCC that uses the appropriate fsGroup strategy.

Create a service account and add it to the SCC:

$ oc create serviceaccount <service_account>
$ oc adm policy add-scc-to-user <new_scc> -z <service_account> -n <project>

In your application’s deployment configuration, provide the service account name and securityContext:

- 1

- The number of copies of the pod to run.

- 2

- The label selector of the pod to run.

- 3

- A template for the pod the controller creates.

- 4

- The labels on the pod must include labels from the label selector.

- 5

- The maximum name length after expanding any parameters is 63 characters.

- 6

- Specify the service account you created.

- 7

- Specify an fsGroup for the pods.
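The definition that these callouts annotate is not reproduced here. As a minimal sketch only, the fields they describe could be arranged as follows. It is shown as a ReplicationController, since the same pod template fields apply inside a deployment configuration's template. The names frontend-1, frontend, and helloworld, the image, and the fsGroup value 7777 are illustrative assumptions, not values taken from this document.

```yaml
# Sketch only: object names, image, and fsGroup value are hypothetical.
apiVersion: v1
kind: ReplicationController
metadata:
  name: frontend-1
spec:
  replicas: 1                    # (1) number of copies of the pod to run
  selector:                      # (2) label selector of the pod to run
    name: frontend
  template:                      # (3) template for the pod the controller creates
    metadata:
      labels:                    # (4) must include the labels from the selector
        name: frontend           # (5) at most 63 characters after parameter expansion
    spec:
      containers:
        - name: helloworld
          image: openshift/hello-openshift
          ports:
            - containerPort: 8080
              protocol: TCP
      restartPolicy: Always
      serviceAccountName: <service_account>   # (6) the service account you created
      securityContext:
        fsGroup: 7777            # (7) fsGroup for the pods
```

The placeholder `<service_account>` should match the account that was added to the SCC in the earlier step.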

24.5. Persistent Storage Using Ceph Rados Block Device (RBD)

24.5.1. Overview

OpenShift Container Platform clusters can be provisioned with persistent storage using Ceph RBD.

Persistent volumes (PVs) and persistent volume claims (PVCs) can share volumes across a single project. While the Ceph RBD-specific information contained in a PV definition could also be defined directly in a pod definition, doing so does not create the volume as a distinct cluster resource, making the volume more susceptible to conflicts.

This topic presumes some familiarity with OpenShift Container Platform and Ceph RBD. See the Persistent Storage concept topic for details on the OpenShift Container Platform persistent volume (PV) framework in general.

Project and namespace are used interchangeably throughout this document. See Projects and Users for details on the relationship.

High-availability of storage in the infrastructure is left to the underlying storage provider.

24.5.2. Provisioning

To provision Ceph volumes, the following are required:

- An existing storage device in your underlying infrastructure.

- The Ceph key to be used in an OpenShift Container Platform secret object.

- The Ceph image name.

- The file system type on top of the block storage (e.g., ext4).

- ceph-common installed on each schedulable OpenShift Container Platform node in your cluster:

# yum install ceph-common

24.5.2.1. Creating the Ceph Secret

Define the authorization key in a secret configuration, which is then converted to base64 for use by OpenShift Container Platform.

In order to use Ceph storage to back a persistent volume, the secret must be created in the same project as the PVC and pod. The secret cannot simply be in the default project.

Run ceph auth get-key on a Ceph MON node to display the key value for the client.admin user.

Save the secret definition to a file, for example ceph-secret.yaml, then create the secret:

$ oc create -f ceph-secret.yaml

Verify that the secret was created:

# oc get secret ceph-secret
NAME          TYPE      DATA      AGE
ceph-secret   Opaque    1         23d
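For orientation, a secret definition consistent with the steps above might look like the following sketch. The name ceph-secret matches the name used in the verification command. The data field name key and the placeholder value are assumptions, and the placeholder must be replaced with the base64-encoded key obtained from the Ceph MON node.

```yaml
# Sketch only: replace the placeholder with your own base64-encoded Ceph key.
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
data:
  key: <base64_encoded_ceph_key>   # assumed field name; values under "data" must be base64
```

Because values under data are stored base64-encoded, the raw key must be encoded before it is placed in the file.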

24.5.2.2. Creating the Persistent Volume

Ceph RBD does not support the 'Recycle' reclaim policy.

Developers request Ceph RBD storage by referencing either a PVC or the Ceph RBD volume plug-in directly in the volumes section of a pod specification. A PVC exists only in the user’s namespace and can be referenced only by pods within that same namespace. Any attempt to access a PVC from a different namespace causes the pod to fail.

Define the PV in an object definition before creating it in OpenShift Container Platform:

Example 24.3. Persistent Volume Object Definition Using Ceph RBD

- 1

- The name of the PV that is referenced in pod definitions or displayed in various oc volume commands.

- 2

- The amount of storage allocated to this volume.

- 3

- accessModes are used as labels to match a PV and a PVC. They currently do not define any form of access control. All block storage is defined to be single user (non-shared storage).

- 4

- The volume type being used, in this case the rbd plug-in.

- 5

- An array of Ceph monitor IP addresses and ports.

- 6

- The Ceph secret used to create a secure connection from OpenShift Container Platform to the Ceph server.

- 7

- The file system type mounted on the Ceph RBD block device.
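The object definition that these callouts annotate is not reproduced here. A minimal sketch that matches the callouts, assuming a Ceph pool named rbd, an image named ceph-image, and a single monitor address placeholder (all hypothetical), could look like this:

```yaml
# Sketch only: monitor address, pool, and image names are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-pv                  # (1) name of the PV
spec:
  capacity:
    storage: 2Gi                 # (2) storage allocated to this volume
  accessModes:
    - ReadWriteOnce              # (3) used as a label to match a PV and a PVC
  rbd:                           # (4) volume type: the rbd plug-in
    monitors:                    # (5) Ceph monitor IP addresses and ports
      - <monitor_ip>:6789
    pool: rbd
    image: ceph-image
    user: admin
    secretRef:
      name: ceph-secret          # (6) Ceph secret used to connect to the Ceph server
    fsType: ext4                 # (7) file system type on the block device
    readOnly: false
  persistentVolumeReclaimPolicy: Retain
```

The reclaim policy is set to Retain in this sketch because Ceph RBD does not support the Recycle policy.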

Important

Changing the value of the fsType parameter after the volume has been formatted and provisioned can result in data loss and pod failure.

Save your definition to a file, for example ceph-pv.yaml, and create the PV:

# oc create -f ceph-pv.yaml

Verify that the persistent volume was created:

# oc get pv
NAME      LABELS    CAPACITY     ACCESSMODES   STATUS      CLAIM     REASON    AGE
ceph-pv   <none>    2147483648   RWO           Available                       2s

Create a PVC that will bind to the new PV. Save the definition to a file, for example ceph-claim.yaml, and create the PVC:

# oc create -f ceph-claim.yaml
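A claim definition for this step might be sketched as follows. The claim name ceph-claim is an assumption derived from the example file name, and the requested size of 2Gi is chosen to match the 2147483648-byte capacity shown for ceph-pv.

```yaml
# Sketch only: the claim name is hypothetical.
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-claim
spec:
  accessModes:
    - ReadWriteOnce      # must be compatible with the access modes of the PV
  resources:
    requests:
      storage: 2Gi       # no larger than the capacity of the PV it should bind to
```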

24.5.3. Ceph Volume Security

See the full Volume Security topic before implementing Ceph RBD volumes.

A significant difference between shared volumes (NFS and GlusterFS) and block volumes (Ceph RBD, iSCSI, and most cloud storage) is that the user and group IDs defined in the pod definition or container image are applied to the target physical storage. This is referred to as managing ownership of the block device. For example, if the Ceph RBD mount has its owner set to 123 and its group ID set to 567, and the pod sets runAsUser to 222 and fsGroup to 7777, then the ownership of the Ceph RBD physical mount is changed to 222:7777.

Even if the user and group IDs are not defined in the pod specification, the resulting pod may have defaults defined for these IDs based on its matching SCC, or its project. See the full Volume Security topic which covers storage aspects of SCCs and defaults in greater detail.

A pod defines the group ownership of a Ceph RBD volume using the fsGroup stanza under the pod’s securityContext definition:
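As an illustration, a pod that sets fsGroup might be sketched as follows. The pod name, container name, image placeholder, mount path, and claim name are all hypothetical, and the fsGroup value 7777 is taken from the ownership example in this section.

```yaml
# Sketch only: names, image, and mount path are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: ceph-pod
spec:
  containers:
    - name: app
      image: <image>
      volumeMounts:
        - name: ceph-vol
          mountPath: /mnt/ceph
  securityContext:
    fsGroup: 7777                # group ownership applied to the Ceph RBD volume
  volumes:
    - name: ceph-vol
      persistentVolumeClaim:
        claimName: ceph-claim    # assumed name of a PVC in the same namespace
```

The securityContext shown here is the pod-level one, which is where fsGroup is defined.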

24.6. Persistent Storage Using AWS Elastic Block Store

24.6.1. Overview

OpenShift Container Platform supports AWS Elastic Block Store volumes (EBS). You can provision your OpenShift Container Platform cluster with persistent storage using AWS EC2. Some familiarity with Kubernetes and AWS is assumed.

Before creating persistent volumes using AWS, OpenShift Container Platform must first be properly configured for AWS ElasticBlockStore.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure. AWS Elastic Block Store volumes can be provisioned dynamically. Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims, however, are specific to a project or namespace and can be requested by users.

High-availability of storage in the infrastructure is left to the underlying storage provider.

24.6.2. Provisioning

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform. After ensuring OpenShift Container Platform is configured for AWS Elastic Block Store, all that is required is an AWS EBS volume ID and the PersistentVolume API.

24.6.2.1. Creating the Persistent Volume

AWS does not support the 'Recycle' reclaim policy.

You must define your persistent volume in an object definition before creating it in OpenShift Container Platform:

Example 24.5. Persistent Volume Object Definition Using AWS

- 1

- The name of the volume. This will be how it is identified via persistent volume claims or from pods.

- 2

- The amount of storage allocated to this volume.

- 3

- This defines the volume type being used, in this case the awsElasticBlockStore plug-in.

- 4

- File system type to mount.

- 5

- This is the AWS volume that will be used.
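The object definition that these callouts annotate is not reproduced here. A minimal sketch consistent with them, using the name pv0001 and the 5Gi capacity that appear in the command output in this section, with a placeholder for the volume ID, could look like this:

```yaml
# Sketch only: replace the volume ID placeholder with your own EBS volume ID.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                   # (1) name of the volume
spec:
  capacity:
    storage: 5Gi                 # (2) storage allocated to this volume
  accessModes:
    - ReadWriteOnce
  awsElasticBlockStore:          # (3) volume type: the awsElasticBlockStore plug-in
    fsType: ext4                 # (4) file system type to mount (ext4 is an assumption)
    volumeID: "<aws_volume_id>"  # (5) the AWS volume to use
```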

Changing the value of the fsType parameter after the volume has been formatted and provisioned can result in data loss and pod failure.

Save your definition to a file, for example aws-pv.yaml, and create the persistent volume:

# oc create -f aws-pv.yaml
persistentvolume "pv0001" created

Verify that the persistent volume was created:

# oc get pv
NAME      LABELS    CAPACITY   ACCESSMODES   STATUS      CLAIM     REASON    AGE
pv0001    <none>    5Gi        RWO           Available                       2s

Users can then request storage using persistent volume claims, which can now utilize your new persistent volume.

Persistent volume claims exist only in the user’s namespace and can be referenced only by a pod within that same namespace. Any attempt to access a persistent volume claim from a different namespace causes the pod to fail.

24.6.2.2. Volume Format

Before OpenShift Container Platform mounts the volume and passes it to a container, it checks that it contains a file system as specified by the fsType parameter in the persistent volume definition. If the device is not formatted with the file system, all data from the device is erased and the device is automatically formatted with the given file system.

This allows using unformatted AWS volumes as persistent volumes, because OpenShift Container Platform formats them before the first use.

24.6.2.3. Maximum Number of EBS Volumes on a Node

By default, OpenShift Container Platform supports a maximum of 39 EBS volumes attached to one node. This limit is consistent with the AWS Volume Limits.

OpenShift Container Platform can be configured to have a higher limit by setting the environment variable KUBE_MAX_PD_VOLS. However, AWS requires a particular naming scheme (AWS Device Naming) for attached devices, which only supports a maximum of 52 volumes. This limits the number of volumes that can be attached to a node via OpenShift Container Platform to 52.

24.7. Persistent Storage Using GCE Persistent Disk

24.7.1. Overview

OpenShift Container Platform supports GCE Persistent Disk volumes (gcePD). You can provision your OpenShift Container Platform cluster with persistent storage using GCE. Some familiarity with Kubernetes and GCE is assumed.

Before creating persistent volumes using GCE, OpenShift Container Platform must first be properly configured for GCE Persistent Disk.

The Kubernetes persistent volume framework allows administrators to provision a cluster with persistent storage and gives users a way to request those resources without having any knowledge of the underlying infrastructure. GCE Persistent Disk volumes can be provisioned dynamically. Persistent volumes are not bound to a single project or namespace; they can be shared across the OpenShift Container Platform cluster. Persistent volume claims, however, are specific to a project or namespace and can be requested by users.

High-availability of storage in the infrastructure is left to the underlying storage provider.

24.7.2. Provisioning

Storage must exist in the underlying infrastructure before it can be mounted as a volume in OpenShift Container Platform. After ensuring OpenShift Container Platform is configured for GCE Persistent Disk, all that is required is a GCE Persistent Disk volume ID and the PersistentVolume API.

24.7.2.1. Creating the Persistent Volume

GCE does not support the 'Recycle' reclaim policy.

You must define your persistent volume in an object definition before creating it in OpenShift Container Platform:

Example 24.6. Persistent Volume Object Definition Using GCE

- 1

- The name of the volume. This will be how it is identified via persistent volume claims or from pods.

- 2

- The amount of storage allocated to this volume.

- 3

- This defines the volume type being used, in this case the gcePersistentDisk plug-in.

- 4

- File system type to mount.

- 5

- This is the GCE Persistent Disk volume that will be used.
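The object definition that these callouts annotate is not reproduced here. A minimal sketch consistent with them, using the name pv0001 and the 5Gi capacity that appear in the command output in this section, with a placeholder for the disk name, could look like this:

```yaml
# Sketch only: replace the disk name placeholder with your own GCE Persistent Disk.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001                   # (1) name of the volume
spec:
  capacity:
    storage: 5Gi                 # (2) storage allocated to this volume
  accessModes:
    - ReadWriteOnce
  gcePersistentDisk:             # (3) volume type: the gcePersistentDisk plug-in
    fsType: ext4                 # (4) file system type to mount (ext4 is an assumption)
    pdName: "<gce_disk_name>"    # (5) the GCE Persistent Disk to use
```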

Changing the value of the fsType parameter after the volume has been formatted and provisioned can result in data loss and pod failure.

Save your definition to a file, for example gce-pv.yaml, and create the persistent volume:

# oc create -f gce-pv.yaml
persistentvolume "pv0001" created

Verify that the persistent volume was created:

# oc get pv