Chapter 4. Additional Concepts

4.1. Authentication

4.1.1. Overview

The authentication layer identifies the user associated with requests to the OpenShift Container Platform API. The authorization layer then uses information about the requesting user to determine if the request should be allowed.

As an administrator, you can configure authentication using a master configuration file.

4.1.2. Users and Groups

A user in OpenShift Container Platform is an entity that can make requests to the OpenShift Container Platform API. Typically, this represents the account of a developer or administrator that is interacting with OpenShift Container Platform.

A user can be assigned to one or more groups, each of which represents a certain set of users. Groups are useful when managing authorization policies to grant permissions to multiple users at once, for example allowing access to objects within a project, versus granting them to users individually.

In addition to explicitly defined groups, there are also system groups, or virtual groups, that are automatically provisioned by OpenShift. These can be seen when viewing cluster bindings.

In the default set of virtual groups, note the following in particular:

| Virtual Group | Description |
|---|---|
| system:authenticated | Automatically associated with all authenticated users. |
| system:authenticated:oauth | Automatically associated with all users authenticated with an OAuth access token. |
| system:unauthenticated | Automatically associated with all unauthenticated users. |

4.1.3. API Authentication

Requests to the OpenShift Container Platform API are authenticated using the following methods:

- OAuth Access Tokens

  - Obtained from the OpenShift Container Platform OAuth server using the <master>/oauth/authorize and <master>/oauth/token endpoints.

  - Sent as an Authorization: Bearer… header.

  - Sent as a websocket subprotocol header in the form base64url.bearer.authorization.k8s.io.<base64url-encoded-token> for websocket requests.

- X.509 Client Certificates

  - Requires an HTTPS connection to the API server.

  - Verified by the API server against a trusted certificate authority bundle.

  - The API server creates and distributes certificates to controllers to authenticate themselves.
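The two ways of sending an OAuth access token can be illustrated with a short Python sketch. The function names are hypothetical, and stripping the base64 padding is an assumption made here because `=` is awkward in a subprotocol name; check the behavior of your client library before relying on it.

```python
import base64


def bearer_header(token: str) -> dict:
    """Return the HTTP header that carries an OAuth access token."""
    return {"Authorization": "Bearer " + token}


def websocket_bearer_subprotocol(token: str) -> str:
    """Return the websocket subprotocol value that carries the token.

    The token is base64url-encoded; trailing '=' padding is removed
    (assumption, see the lead-in above).
    """
    encoded = base64.urlsafe_b64encode(token.encode("utf-8")).rstrip(b"=")
    return "base64url.bearer.authorization.k8s.io." + encoded.decode("ascii")


if __name__ == "__main__":
    print(bearer_header("<token>"))
    print(websocket_bearer_subprotocol("<token>"))
```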

Any request with an invalid access token or an invalid certificate is rejected by the authentication layer with a 401 error.

If no access token or certificate is presented, the authentication layer assigns the system:anonymous virtual user and the system:unauthenticated virtual group to the request. This allows the authorization layer to determine which requests, if any, an anonymous user is allowed to make.
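The outcomes described above can be summarized in a small Python model. This is only an illustration of the documented behavior, not the server's implementation; the `credential_kind` labels and the `AuthOutcome` type are hypothetical names.

```python
from typing import NamedTuple, Optional, Tuple


class AuthOutcome(NamedTuple):
    rejected_status: Optional[int]  # 401 when rejected, None when passed on
    user: Optional[str]
    groups: Tuple[str, ...]


def authenticate(credential_kind: Optional[str],
                 valid: bool = True,
                 user: Optional[str] = None) -> AuthOutcome:
    """Model the result of the authentication layer for one request.

    credential_kind is None, "oauth-token", or "client-cert"
    (hypothetical labels used only in this sketch).
    """
    if credential_kind is None:
        # No credential: the request continues as the anonymous user.
        return AuthOutcome(None, "system:anonymous", ("system:unauthenticated",))
    if not valid:
        # Invalid token or certificate: rejected with a 401 error.
        return AuthOutcome(401, None, ())
    groups = ["system:authenticated"]
    if credential_kind == "oauth-token":
        groups.append("system:authenticated:oauth")
    return AuthOutcome(None, user, tuple(groups))
```

The authorization layer then decides what, if anything, the resulting user and groups are allowed to do.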

4.1.3.1. Impersonation

A request to the OpenShift Container Platform API can include an Impersonate-User header, which indicates that the requester wants to have the request handled as though it came from the specified user. You impersonate a user by adding the --as=<user> flag to requests.

Before User A can impersonate User B, User A is authenticated. Then, an authorization check occurs to ensure that User A is allowed to impersonate the user named User B. If User A is requesting to impersonate a service account, system:serviceaccount:namespace:name, OpenShift Container Platform confirms that User A can impersonate the serviceaccount named name in namespace. If the check fails, the request fails with a 403 (Forbidden) error code.
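As a rough illustration, the following Python sketch builds the impersonation headers for a request and splits a service account user name into its namespace and name. The Impersonate-Group header is the Kubernetes API counterpart of Impersonate-User and is assumed here to be what group impersonation sends; the helper names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def impersonation_headers(user: str, groups: Sequence[str] = ()) -> List[Tuple[str, str]]:
    """Return header pairs asking the API to handle the request as `user`."""
    headers = [("Impersonate-User", user)]
    # One header per group (assumed Kubernetes behavior, see lead-in).
    headers += [("Impersonate-Group", group) for group in groups]
    return headers


def parse_service_account(username: str) -> Optional[Tuple[str, str]]:
    """Split system:serviceaccount:<namespace>:<name> into (namespace, name).

    Returns None when the user name is not a service account.
    """
    prefix = "system:serviceaccount:"
    if not username.startswith(prefix):
        return None
    parts = username[len(prefix):].split(":")
    if len(parts) != 2 or not all(parts):
        return None
    return parts[0], parts[1]
```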

By default, project administrators and editors can impersonate service accounts in their namespace. The sudoer role allows a user to impersonate system:admin, which in turn has cluster administrator permissions. The ability to impersonate system:admin grants some protection against typos, but not security, for someone administering the cluster. For example, running oc delete nodes --all fails, but running oc delete nodes --all --as=system:admin succeeds. You can grant a user that permission by running this command:

$ oc create clusterrolebinding <any_valid_name> --clusterrole=sudoer --user=<username>

If you need to create a project request on behalf of a user, include the --as=<user> --as-group=<group1> --as-group=<group2> flags in your command. Because system:authenticated:oauth is the only bootstrap group that can create project requests, you must impersonate that group, as shown in the following example:

$ oc new-project <project> --as=<user> \
    --as-group=system:authenticated --as-group=system:authenticated:oauth

4.1.4. OAuth

The OpenShift Container Platform master includes a built-in OAuth server. Users obtain OAuth access tokens to authenticate themselves to the API.

When a person requests a new OAuth token, the OAuth server uses the configured identity provider to determine the identity of the person making the request.

It then determines what user that identity maps to, creates an access token for that user, and returns the token for use.

4.1.4.1. OAuth Clients

Every request for an OAuth token must specify the OAuth client that will receive and use the token. The following OAuth clients are automatically created when starting the OpenShift Container Platform API:

| OAuth Client | Usage |
|---|---|
| openshift-web-console | Requests tokens for the web console. |
| openshift-browser-client | Requests tokens at |
| openshift-challenging-client | Requests tokens with a user-agent that can handle |

To register additional clients:

- The name of the OAuth client is used as the client_id parameter when making requests to <master>/oauth/authorize and <master>/oauth/token.

- The secret is used as the client_secret parameter when making requests to <master>/oauth/token.

- The redirect_uri parameter specified in requests to <master>/oauth/authorize and <master>/oauth/token must be equal to (or prefixed by) one of the URIs in redirectURIs.

- The grantMethod is used to determine what action to take when this client requests tokens and has not yet been granted access by the user. Uses the same values seen in Grant Options.
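The fields described above could be assembled into a client definition along the following lines. This Python sketch only builds the object as JSON for inspection; the apiVersion value, the example grantMethod value `prompt`, and the client name `demo` are assumptions, so compare them against your cluster version before creating anything.

```python
import json
from typing import Iterable


def oauth_client_manifest(name: str, secret: str,
                          redirect_uris: Iterable[str],
                          grant_method: str) -> dict:
    """Build an OAuthClient object from the four fields described above."""
    return {
        "kind": "OAuthClient",
        "apiVersion": "oauth.openshift.io/v1",  # assumption: may differ by release
        "metadata": {"name": name},             # used as client_id
        "secret": secret,                       # used as client_secret
        "redirectURIs": list(redirect_uris),
        "grantMethod": grant_method,
    }


def redirect_uri_allowed(redirect_uri: str, registered: Iterable[str]) -> bool:
    """Apply the 'equal to (or prefixed by)' rule for redirect_uri."""
    return any(redirect_uri.startswith(uri) for uri in registered)


if __name__ == "__main__":
    manifest = oauth_client_manifest("demo", "<secret>",
                                     ["https://www.example.com/"], "prompt")
    print(json.dumps(manifest, indent=2))
```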

4.1.4.2. Service Accounts as OAuth Clients

A service account can be used as a constrained form of OAuth client. Service accounts can only request a subset of scopes that allow access to some basic user information and role-based power inside of the service account’s own namespace:

- user:info

- user:check-access

- role:<any_role>:<serviceaccount_namespace>

- role:<any_role>:<serviceaccount_namespace>:!

When using a service account as an OAuth client:

- client_id is system:serviceaccount:<serviceaccount_namespace>:<serviceaccount_name>.

- client_secret can be any of the API tokens for that service account. For example:

  $ oc sa get-token <serviceaccount_name>

- To get WWW-Authenticate challenges, set a serviceaccounts.openshift.io/oauth-want-challenges annotation on the service account to true.

- redirect_uri must match an annotation on the service account. Redirect URIs for Service Accounts as OAuth Clients provides more information.
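The client_id format and the scope restriction can be expressed as a short Python sketch. It is a simplified model rather than the server's validation logic, the function names are hypothetical, and it assumes role names that contain no colon.

```python
def service_account_client_id(namespace: str, name: str) -> str:
    """Return the client_id for a service account used as an OAuth client."""
    return f"system:serviceaccount:{namespace}:{name}"


def scope_allowed(scope: str, namespace: str) -> bool:
    """Check a requested scope against the subset a service account may request.

    Assumes role names without ':' so the scope can be split on colons.
    """
    if scope in ("user:info", "user:check-access"):
        return True
    parts = scope.split(":")
    # role:<any_role>:<serviceaccount_namespace> with an optional ":!" suffix
    if parts[0] == "role" and len(parts) in (3, 4) and parts[1]:
        if parts[2] != namespace:
            return False
        return len(parts) == 3 or parts[3] == "!"
    return False
```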

4.1.4.3. Redirect URIs for Service Accounts as OAuth Clients

Annotation keys must have the prefix serviceaccounts.openshift.io/oauth-redirecturi. or serviceaccounts.openshift.io/oauth-redirectreference., such as:

serviceaccounts.openshift.io/oauth-redirecturi.<name>

In its simplest form, the annotation can be used to directly specify valid redirect URIs. For example:

"serviceaccounts.openshift.io/oauth-redirecturi.first": "https://example.com"

"serviceaccounts.openshift.io/oauth-redirecturi.second": "https://other.com"

The first and second postfixes in the above example are used to separate the two valid redirect URIs.

In more complex configurations, static redirect URIs may not be enough. For example, perhaps you want all ingresses for a route to be considered valid. This is where dynamic redirect URIs via the serviceaccounts.openshift.io/oauth-redirectreference. prefix come into play.

For example:

"serviceaccounts.openshift.io/oauth-redirectreference.first": "{\"kind\":\"OAuthRedirectReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"Route\",\"name\":\"jenkins\"}}"

Since the value for this annotation contains serialized JSON data, it is easier to see in an expanded format:

Now you can see that an OAuthRedirectReference allows us to reference the route named jenkins. Thus, all ingresses for that route will now be considered valid. The full specification for an OAuthRedirectReference is:

- kind refers to the type of the object being referenced. Currently, only route is supported.

- name refers to the name of the object. The object must be in the same namespace as the service account.

- group refers to the group of the object. Leave this blank, as the group for a route is the empty string.
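To inspect a serialized oauth-redirectreference value, it can be decoded and pretty-printed. The Python sketch below uses the jenkins example value from this section and shows where kind and name sit inside the reference; it is only a reading aid and does not talk to a cluster.

```python
import json

# Annotation value copied from the jenkins example in this section.
annotation_value = (
    "{\"kind\":\"OAuthRedirectReference\",\"apiVersion\":\"v1\","
    "\"reference\":{\"kind\":\"Route\",\"name\":\"jenkins\"}}"
)

# Decode the serialized JSON and print it in an expanded, indented form.
reference = json.loads(annotation_value)
expanded = json.dumps(reference, indent=2)

if __name__ == "__main__":
    print(expanded)
    print("referenced object:",
          reference["reference"]["kind"], reference["reference"]["name"])
```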

Both annotation prefixes can be combined to override the data provided by the reference object. For example:

"serviceaccounts.openshift.io/oauth-redirecturi.first": "custompath"

"serviceaccounts.openshift.io/oauth-redirectreference.first": "{\"kind\":\"OAuthRedirectReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"Route\",\"name\":\"jenkins\"}}"

The first postfix is used to tie the annotations together. Assuming that the jenkins route had an ingress of https://example.com, now https://example.com/custompath is considered valid, but https://example.com is not. The format for partially supplying override data is as follows:

| Type | Syntax |
|---|---|
| Scheme | "https://" |
| Hostname | "//website.com" |
| Port | "//:8000" |
| Path | "examplepath" |

Specifying a host name override will replace the host name data from the referenced object, which is not likely to be desired behavior.

The syntax elements above can be combined in any combination using the following format:

<scheme:>//<hostname><:port>/<path>

The same object can be referenced more than once for more flexibility:

"serviceaccounts.openshift.io/oauth-redirecturi.first": "custompath"

"serviceaccounts.openshift.io/oauth-redirectreference.first": "{\"kind\":\"OAuthRedirectReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"Route\",\"name\":\"jenkins\"}}"

"serviceaccounts.openshift.io/oauth-redirecturi.second": "//:8000"

"serviceaccounts.openshift.io/oauth-redirectreference.second": "{\"kind\":\"OAuthRedirectReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"Route\",\"name\":\"jenkins\"}}"

Assuming that the route named jenkins has an ingress of https://example.com, then both https://example.com:8000 and https://example.com/custompath are considered valid.
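The override behavior in these examples can be approximated in Python. This is a sketch of the rule as described here (each part supplied by the override replaces the corresponding part of the route's ingress), not the server's actual code, and `apply_override` is a hypothetical name.

```python
from urllib.parse import urlsplit


def apply_override(ingress: str, override: str) -> str:
    """Combine a route ingress URL with partial override data.

    The override follows <scheme:>//<hostname><:port>/<path>, where any
    part may be omitted; omitted parts fall back to the ingress values.
    """
    base = urlsplit(ingress)
    over = urlsplit(override)
    scheme = over.scheme or base.scheme
    host = over.hostname or base.hostname
    port = over.port or base.port
    path = over.path or base.path
    if path and not path.startswith("/"):
        path = "/" + path
    netloc = host if port is None else f"{host}:{port}"
    return f"{scheme}://{netloc}{path}"


if __name__ == "__main__":
    print(apply_override("https://example.com", "custompath"))
    print(apply_override("https://example.com", "//:8000"))
```

With the jenkins ingress of https://example.com, the two overrides reproduce the URIs listed above.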

Static and dynamic annotations can be used at the same time to achieve the desired behavior:

"serviceaccounts.openshift.io/oauth-redirectreference.first": "{\"kind\":\"OAuthRedirectReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"Route\",\"name\":\"jenkins\"}}"

"serviceaccounts.openshift.io/oauth-redirecturi.second": "https://other.com"

4.1.4.3.1. API Events for OAuth

In some cases the API server returns an unexpected condition error message that is difficult to debug without direct access to the API master log. The underlying reason for the error is purposely obscured in order to avoid providing an unauthenticated user with information about the server’s state.

A subset of these errors is related to service account OAuth configuration issues. These issues are captured in events that can be viewed by non-administrator users. When encountering an unexpected condition server error during OAuth, run oc get events to view these events under ServiceAccount.

The following example warns of a service account that is missing a proper OAuth redirect URI:

$ oc get events | grep ServiceAccount

Example Output

1m 1m 1 proxy ServiceAccount Warning NoSAOAuthRedirectURIs service-account-oauth-client-getter system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>

Running oc describe sa/<service-account-name> reports any OAuth events associated with the given service account name.

$ oc describe sa/proxy | grep -A5 Events

Example Output

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

3m 3m 1 service-account-oauth-client-getter Warning NoSAOAuthRedirectURIs system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>

The following is a list of the possible event errors:

No redirect URI annotations or an invalid URI is specified

| Reason | Message |
|---|---|
| NoSAOAuthRedirectURIs | system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference> |

Invalid route specified

| Reason | Message |
|---|---|
| NoSAOAuthRedirectURIs | [routes.route.openshift.io "<name>" not found, system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>] |

Invalid reference type specified

| Reason | Message |
|---|---|
| NoSAOAuthRedirectURIs | [no kind "<name>" is registered for version "v1", system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>] |

Missing SA tokens

| Reason | Message |
|---|---|
| NoSAOAuthTokens | system:serviceaccount:myproject:proxy has no tokens |

4.1.4.3.1.1. Sample API Event Caused by a Possible Misconfiguration

The following steps represent one way a user could get into a broken state and how to debug or fix the issue:

1. Create a project utilizing a service account as an OAuth client.

   a. Create YAML for a proxy service account object and ensure it uses the route proxy:

      $ vi serviceaccount.yaml

      Add the sample code for the service account object.

   b. Create YAML for a route object to create a secure connection to the proxy:

      $ vi route.yaml

      Add the sample code for the route object.

   c. Create a YAML for a deployment configuration to launch a proxy as a sidecar:

      $ vi proxysidecar.yaml

      Add the sample code for the deployment configuration.

   d. Create the three objects:

      $ oc create -f serviceaccount.yaml

      $ oc create -f route.yaml

      $ oc create -f proxysidecar.yaml

2. Run oc edit sa/proxy to edit the service account and change the serviceaccounts.openshift.io/oauth-redirectreference annotation to point to a Route that does not exist.

3. Review the OAuth log for the service to locate the server error:

   The authorization server encountered an unexpected condition that prevented it from fulfilling the request.

4. Run oc get events to view the ServiceAccount event:

   $ oc get events | grep ServiceAccount

   Example Output

   23m 23m 1 proxy ServiceAccount Warning NoSAOAuthRedirectURIs service-account-oauth-client-getter [routes.route.openshift.io "notexist" not found, system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>]

4.1.4.4. Integrations

All requests for OAuth tokens involve a request to <master>/oauth/authorize. Most authentication integrations place an authenticating proxy in front of this endpoint, or configure OpenShift Container Platform to validate credentials against a backing identity provider. Requests to <master>/oauth/authorize can come from user-agents that cannot display interactive login pages, such as the CLI. Therefore, OpenShift Container Platform supports authenticating using a WWW-Authenticate challenge in addition to interactive login flows.

If an authenticating proxy is placed in front of the <master>/oauth/authorize endpoint, it should send unauthenticated, non-browser user-agents WWW-Authenticate challenges, rather than displaying an interactive login page or redirecting to an interactive login flow.

To prevent cross-site request forgery (CSRF) attacks against browser clients, Basic authentication challenges should only be sent if an X-CSRF-Token header is present on the request. Clients that expect to receive Basic WWW-Authenticate challenges should set this header to a non-empty value.

If the authenticating proxy cannot support WWW-Authenticate challenges, or if OpenShift Container Platform is configured to use an identity provider that does not support WWW-Authenticate challenges, users can visit <master>/oauth/token/request using a browser to obtain an access token manually.

4.1.4.5. OAuth Server Metadata

Applications running in OpenShift Container Platform may need to discover information about the built-in OAuth server. For example, they may need to discover what the address of the <master> server is without manual configuration. To aid in this, OpenShift Container Platform implements the IETF OAuth 2.0 Authorization Server Metadata draft specification.

Thus, any application running inside the cluster can issue a GET request to https://openshift.default.svc/.well-known/oauth-authorization-server to fetch the following information:

1. The authorization server’s issuer identifier, which is a URL that uses the https scheme and has no query or fragment components. This is the location where .well-known RFC 5785 resources containing information about the authorization server are published.

2. URL of the authorization server’s authorization endpoint. See RFC 6749.

3. URL of the authorization server’s token endpoint. See RFC 6749.

4. JSON array containing a list of the OAuth 2.0 RFC 6749 scope values that this authorization server supports. Note that not all supported scope values are advertised.

5. JSON array containing a list of the OAuth 2.0 response_type values that this authorization server supports. The array values used are the same as those used with the response_types parameter defined by "OAuth 2.0 Dynamic Client Registration Protocol" in RFC 7591.

6. JSON array containing a list of the OAuth 2.0 grant type values that this authorization server supports. The array values used are the same as those used with the grant_types parameter defined by "OAuth 2.0 Dynamic Client Registration Protocol" in RFC 7591.

7. JSON array containing a list of PKCE RFC 7636 code challenge methods supported by this authorization server. Code challenge method values are used in the code_challenge_method parameter defined in Section 4.3 of RFC 7636. The valid code challenge method values are those registered in the IANA PKCE Code Challenge Methods registry. See IANA OAuth Parameters.
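An application might read the discovered values as in the following Python sketch. The field names follow the OAuth 2.0 Authorization Server Metadata specification; the sample document, including the host name master.example.com and the listed scope values, is hypothetical and stands in for a real response from the well-known endpoint.

```python
import json
from typing import Tuple

# Hypothetical metadata document; a real one comes from
# https://openshift.default.svc/.well-known/oauth-authorization-server
SAMPLE_METADATA = """
{
  "issuer": "https://master.example.com:8443",
  "authorization_endpoint": "https://master.example.com:8443/oauth/authorize",
  "token_endpoint": "https://master.example.com:8443/oauth/token",
  "scopes_supported": ["user:info", "user:check-access"],
  "response_types_supported": ["code", "token"],
  "grant_types_supported": ["authorization_code", "implicit"],
  "code_challenge_methods_supported": ["plain", "S256"]
}
"""


def oauth_endpoints(metadata_json: str) -> Tuple[str, str]:
    """Return (authorization_endpoint, token_endpoint) from the metadata."""
    metadata = json.loads(metadata_json)
    return metadata["authorization_endpoint"], metadata["token_endpoint"]


def supports_code_challenge(metadata_json: str, method: str) -> bool:
    """Report whether a PKCE code challenge method is advertised."""
    metadata = json.loads(metadata_json)
    return method in metadata.get("code_challenge_methods_supported", [])
```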

4.1.4.6. Obtaining OAuth Tokens

The OAuth server supports standard authorization code grant and the implicit grant OAuth authorization flows.

Run the following command to request an OAuth token by using the authorization code grant method:

$ curl -H "X-Remote-User: <username>" \
     --cacert /etc/origin/master/ca.crt \
     --cert /etc/origin/master/admin.crt \
     --key /etc/origin/master/admin.key \
     -I https://<master-address>/oauth/authorize?response_type=token\&client_id=openshift-challenging-client | grep -oP "access_token=\K[^&]*"

When requesting an OAuth token using the implicit grant flow (response_type=token) with a client_id configured to request WWW-Authenticate challenges (like openshift-challenging-client), these are the possible server responses from /oauth/authorize, and how they should be handled:

| Status | Content | Client response |
|---|---|---|
| 302 | | Use the |
| 302 | | Fail, optionally surfacing the |
| 302 | Other | Follow the redirect, and process the result using these rules |
| 401 | | Respond to challenge if type is recognized (e.g. |
| 401 | | No challenge authentication is possible. Fail and show response body (which might contain links or details on alternate methods to obtain an OAuth token) |
| Other | Other | Fail, optionally surfacing response body to the user |
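A client could apply these rules roughly as in the following Python sketch. It assumes that a successful response carries the token as an access_token parameter in the fragment of the Location header (as in the RFC 6749 implicit grant) and that failures carry an error query parameter; both are assumptions of this sketch, and the action labels are hypothetical.

```python
from typing import Mapping, Tuple
from urllib.parse import parse_qs, urlsplit


def handle_authorize_response(status: int,
                              headers: Mapping[str, str]) -> Tuple[str, str]:
    """Map a response from /oauth/authorize to (action, detail)."""
    if status == 302:
        location = headers.get("Location", "")
        parts = urlsplit(location)
        fragment = parse_qs(parts.fragment)
        query = parse_qs(parts.query)
        if "access_token" in fragment:
            return ("use_token", fragment["access_token"][0])
        if "error" in query:
            return ("fail", query["error"][0])
        # Any other redirect: follow it and apply the same rules again.
        return ("follow_redirect", location)
    if status == 401:
        challenge = headers.get("WWW-Authenticate")
        if challenge:
            # The first word is the challenge type, for example Basic.
            return ("respond_to_challenge", challenge.split()[0])
        return ("fail", "no challenge authentication is possible")
    return ("fail", "unexpected response")
```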

To request an OAuth token using the implicit grant flow:

$ curl -u <username>:<password> \
    'https://<master-address>:8443/oauth/authorize?client_id=openshift-challenging-client&response_type=token' \
    -skv -H "X-CSRF-Token: xxx"

To view only the OAuth token value, run the following command:

$ curl -u <username>:<password> \
    'https://<master-address>:8443/oauth/authorize?client_id=openshift-challenging-client&response_type=token' \
    -skv -H "X-CSRF-Token: xxx" --stderr - | grep -oP "access_token=\K[^&]*"

Example Output

hvqxe5aMlAzvbqfM2WWw3D6tR0R2jCQGKx0viZBxwmc

You can also use the Code Grant method to request a token.

4.1.4.7. Authentication Metrics for Prometheus

OpenShift Container Platform captures the following Prometheus system metrics during authentication attempts:

- openshift_auth_basic_password_count counts the number of oc login user name and password attempts.

- openshift_auth_basic_password_count_result counts the number of oc login user name and password attempts by result (success or error).

- openshift_auth_form_password_count counts the number of web console login attempts.

- openshift_auth_form_password_count_result counts the number of web console login attempts by result (success or error).

- openshift_auth_password_total counts the total number of oc login and web console login attempts.

4.2. Authorization

4.2.1. Overview

Role-based Access Control (RBAC) objects determine whether a user is allowed to perform a given action within a project.

This allows platform administrators to use the cluster roles and bindings to control who has various access levels to OpenShift Container Platform itself and all projects.

It allows developers to use local roles and bindings to control who has access to their projects. Note that authorization is a separate step from authentication, which is more about determining the identity of who is taking the action.

Authorization is managed using:

| Rules | Sets of permitted verbs on a set of objects. For example, whether something can |
| Roles | Collections of rules. Users and groups can be associated with, or bound to, multiple roles at the same time. |
| Bindings | Associations between users and/or groups with a role. |

Cluster administrators can visualize rules, roles, and bindings using the CLI.

For example, consider the following excerpt that shows the rule sets for the admin and basic-user default cluster roles:

$ oc describe clusterrole.rbac admin basic-user

The following excerpt from viewing local role bindings shows the above roles bound to various users and groups:

oc describe rolebinding.rbac admin basic-user -n alice-project

$ oc describe rolebinding.rbac admin basic-user -n alice-projectExample Output

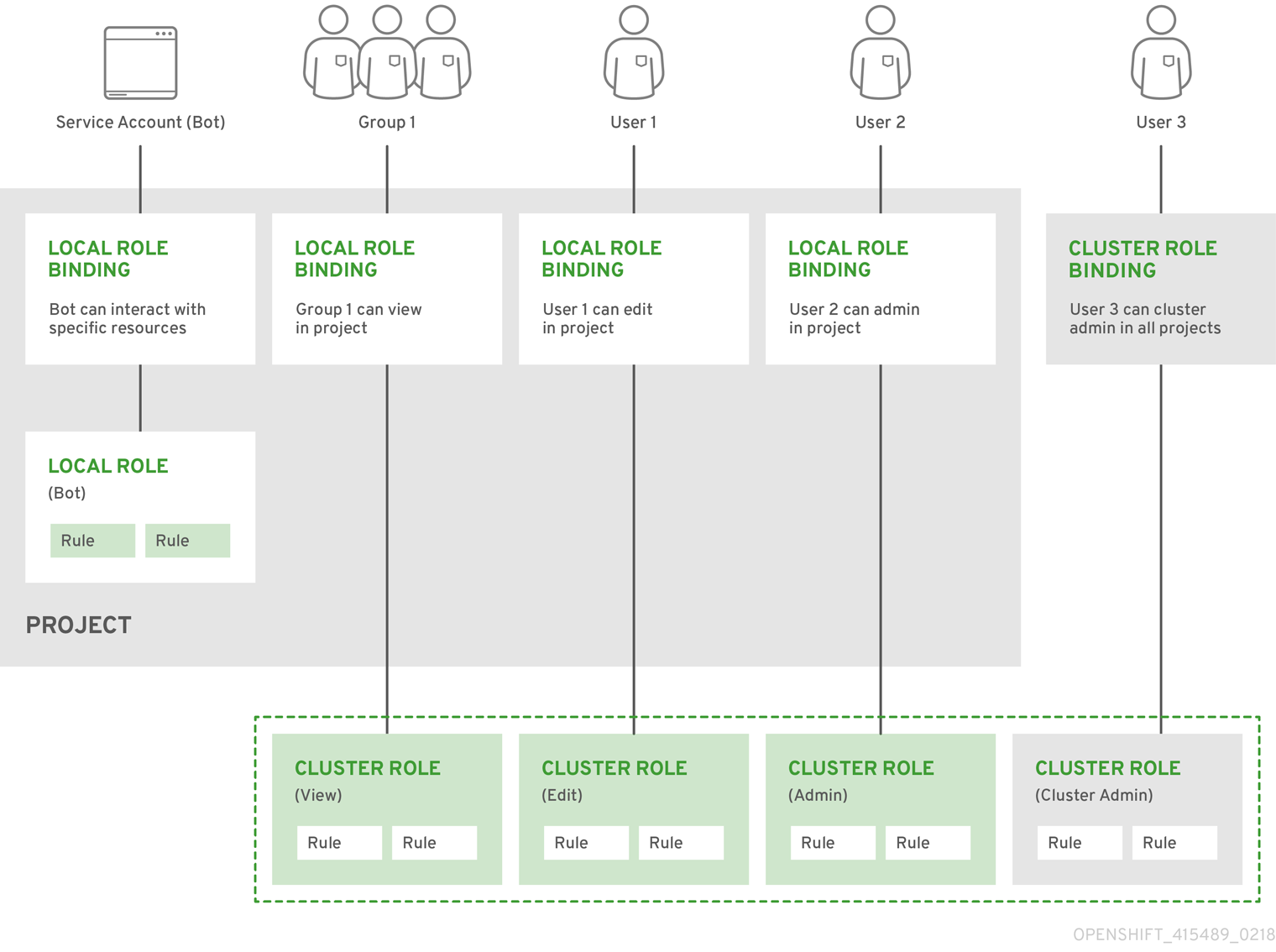

The relationships between cluster roles, local roles, cluster role bindings, local role bindings, users, groups and service accounts are illustrated below.

4.2.2. Evaluating Authorization

Several factors are combined to make the decision when OpenShift Container Platform evaluates authorization:

| Identity | In the context of authorization, both the user name and list of groups the user belongs to. |
| Action | The action being performed. In most cases, this consists of: |
| Bindings | The full list of bindings. |

OpenShift Container Platform evaluates authorizations using the following steps:

- The identity and the project-scoped action are used to find all bindings that apply to the user or their groups.

- Bindings are used to locate all the roles that apply.

- Roles are used to find all the rules that apply.

- The action is checked against each rule to find a match.

- If no matching rule is found, the action is then denied by default.
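The evaluation steps above can be modeled in a few lines of Python. This is a simplified illustration with hypothetical types (Rule, Binding) and a rule format reduced to verbs and resources; the real evaluation also takes the project scope and other attributes into account.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, List


@dataclass(frozen=True)
class Rule:
    verbs: FrozenSet[str]
    resources: FrozenSet[str]


@dataclass(frozen=True)
class Binding:
    role: str
    subjects: FrozenSet[str]  # user names and group names


def is_allowed(user: str, groups: Iterable[str], verb: str, resource: str,
               bindings: List[Binding], roles: Dict[str, List[Rule]]) -> bool:
    """Return True if any bound role has a rule matching the action."""
    identity = {user, *groups}
    for binding in bindings:
        if not identity & binding.subjects:
            continue  # binding does not apply to this user or their groups
        for rule in roles.get(binding.role, []):
            if verb in rule.verbs and resource in rule.resources:
                return True  # a matching rule allows the action
    return False  # no matching rule: denied by default
```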

4.2.3. Cluster and Local RBAC

There are two levels of RBAC roles and bindings that control authorization:

| Cluster RBAC | Roles and bindings that are applicable across all projects. Roles that exist cluster-wide are considered cluster roles. Cluster role bindings can only reference cluster roles. |
| Local RBAC | Roles and bindings that are scoped to a given project. Roles that exist only in a project are considered local roles. Local role bindings can reference both cluster and local roles. |

This two-level hierarchy allows re-usability over multiple projects through the cluster roles while allowing customization inside of individual projects through local roles.

During evaluation, both the cluster role bindings and the local role bindings are used. For example:

- Cluster-wide "allow" rules are checked.

- Locally-bound "allow" rules are checked.

- Deny by default.

4.2.4. Cluster Roles and Local Roles

Roles are collections of policy rules, which are sets of permitted verbs that can be performed on a set of resources. OpenShift Container Platform includes a set of default cluster roles that can be bound to users and groups cluster wide or locally.

| Default Cluster Role | Description |
|---|---|
| admin | A project manager. If used in a local binding, an admin user will have rights to view any resource in the project and modify any resource in the project except for quota. |
| basic-user | A user that can get basic information about projects and users. |
| cluster-admin | A super-user that can perform any action in any project. When bound to a user with a local binding, they have full control over quota and every action on every resource in the project. |
| cluster-status | A user that can get basic cluster status information. |
| edit | A user that can modify most objects in a project, but does not have the power to view or modify roles or bindings. |
| self-provisioner | A user that can create their own projects. |
| view | A user who cannot make any modifications, but can see most objects in a project. They cannot view or modify roles or bindings. |
| cluster-reader | A user who can read, but not modify, objects in the cluster. |

Remember that users and groups can be associated with, or bound to, multiple roles at the same time.

Project administrators can use the CLI to view local roles and bindings, including a matrix of the verbs and resources that each role is associated with.

The cluster role bound to the project administrator is limited in a project via a local binding. It is not bound cluster-wide like the cluster roles granted to the cluster-admin or system:admin.

Cluster roles are roles defined at the cluster level, but can be bound either at the cluster level or at the project level.

Learn how to create a local role for a project.

4.2.4.1. Updating Cluster Roles

After any OpenShift Container Platform cluster upgrade, the default roles are updated and automatically reconciled when the server is started. During reconciliation, any permissions that are missing from the default roles are added. If you added more permissions to the role, they are not removed.

If you customized the default roles and configured them to prevent automatic role reconciliation, you must manually update policy definitions when you upgrade OpenShift Container Platform.

4.2.4.2. Applying Custom Roles and Permissions

To update custom roles and permissions, it is strongly recommended to use the following command:

$ oc auth reconcile -f <file>

<file> is the absolute path to the roles and permissions to apply.

You can also use this command to add new custom roles and permissions. If the new custom role has the same name as an existing role, the existing role is updated. The cluster administrator is not notified that a custom role with the same name already exists.

This command ensures that new permissions are applied properly in a way that will not break other clients. This is done internally by computing logical cover operations between rule sets, which cannot be done with a JSON merge on RBAC resources.

4.2.4.3. Cluster Role Aggregation

The default admin, edit, view, and cluster-reader cluster roles support cluster role aggregation, where the cluster rules for each role are dynamically updated as new rules are created. This feature is relevant only if you extend the Kubernetes API by creating custom resources.

4.2.5. Security Context Constraints

In addition to the RBAC resources that control what a user can do, OpenShift Container Platform provides security context constraints (SCC) that control the actions that a pod can perform and what it has the ability to access. Administrators can manage SCCs using the CLI.

SCCs are also very useful for managing access to persistent storage.

SCCs are objects that define a set of conditions that a pod must run with in order to be accepted into the system. They allow an administrator to control the following:

- Running of privileged containers.

- Capabilities a container can request to be added.

- Use of host directories as volumes.

- The SELinux context of the container.

- The user ID.

- The use of host namespaces and networking.

- Allocating an FSGroup that owns the pod’s volumes

- Configuring allowable supplemental groups

- Requiring the use of a read only root file system

- Controlling the usage of volume types

- Configuring allowable seccomp profiles

Seven SCCs are added to the cluster by default, and are viewable by cluster administrators using the CLI:

$ oc get scc

Do not modify the default SCCs. Customizing the default SCCs can lead to issues when OpenShift Container Platform is upgraded. Instead, create new SCCs.

The definition for each SCC is also viewable by cluster administrators using the CLI. For example, for the privileged SCC:

$ oc get -o yaml --export scc/privileged

1. A list of capabilities that can be requested by a pod. An empty list means that none of the capabilities can be requested, while the special symbol * allows any capabilities.

2. A list of additional capabilities that will be added to any pod.

3. The FSGroup strategy, which dictates the allowable values for the Security Context.

4. The groups that have access to this SCC.

5. A list of capabilities that will be dropped from a pod.

6. The run as user strategy type, which dictates the allowable values for the Security Context.

7. The SELinux context strategy type, which dictates the allowable values for the Security Context.

8. The supplemental groups strategy, which dictates the allowable supplemental groups for the Security Context.

9. The users who have access to this SCC.

The users and groups fields on the SCC control which SCCs can be used. By default, cluster administrators, nodes, and the build controller are granted access to the privileged SCC. All authenticated users are granted access to the restricted SCC.

Docker has a default list of capabilities that are allowed for each container of a pod. The containers use the capabilities from this default list, but pod manifest authors can alter it by requesting additional capabilities or dropping some of the defaults. The allowedCapabilities, defaultAddCapabilities, and requiredDropCapabilities fields are used to control such requests from the pods, and to dictate which capabilities can be requested, which ones must be added to each container, and which ones must be forbidden.

The privileged SCC:

- allows privileged pods.

- allows host directories to be mounted as volumes.

- allows a pod to run as any user.

- allows a pod to run with any MCS label.

- allows a pod to use the host’s IPC namespace.

- allows a pod to use the host’s PID namespace.

- allows a pod to use any FSGroup.

- allows a pod to use any supplemental group.

- allows a pod to use any seccomp profiles.

- allows a pod to request any capabilities.

The restricted SCC:

- ensures pods cannot run as privileged.

- ensures pods cannot use host directory volumes.

- requires that a pod run as a user in a pre-allocated range of UIDs.

- requires that a pod run with a pre-allocated MCS label.

- allows a pod to use any FSGroup.

- allows a pod to use any supplemental group.

For more information about each SCC, see the kubernetes.io/description annotation available on the SCC.

SCCs are comprised of settings and strategies that control the security features a pod has access to. These settings fall into three categories:

| Category | Description |

|---|---|

| Controlled by a boolean | Fields of this type default to the most restrictive value. |

| Controlled by an allowable set | Fields of this type are checked against the set to ensure their value is allowed. |

| Controlled by a strategy | Items that have a strategy to generate a value provide a mechanism to generate the value and a mechanism to ensure that a specified value falls into the set of allowable values. |

4.2.5.1. SCC Strategies

4.2.5.1.1. RunAsUser

- MustRunAs - Requires a runAsUser to be configured. Uses the configured runAsUser as the default. Validates against the configured runAsUser.

- MustRunAsRange - Requires minimum and maximum values to be defined if not using pre-allocated values. Uses the minimum as the default. Validates against the entire allowable range.

- MustRunAsNonRoot - Requires that the pod be submitted with a non-zero runAsUser or have the USER directive defined in the image. No default provided.

- RunAsAny - No default provided. Allows any runAsUser to be specified.
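As a rough illustration of how the four RunAsUser strategies differ, the following Python sketch models the "default" and "validate" behavior described above. The function names and parameters are hypothetical, and the sketch only considers an explicit UID on the pod; it does not model the USER directive of an image or pre-allocated namespace values.

```python
def default_uid(strategy, uid=None, uid_min=None):
    """Return the UID generated when the pod does not set runAsUser."""
    if strategy == "MustRunAs":
        return uid        # the configured runAsUser is the default
    if strategy == "MustRunAsRange":
        return uid_min    # the minimum of the range is the default
    return None           # MustRunAsNonRoot and RunAsAny provide no default


def validate_uid(strategy, requested, uid=None, uid_min=None, uid_max=None):
    """Return True if an explicitly requested UID is acceptable."""
    if strategy == "MustRunAs":
        return requested == uid
    if strategy == "MustRunAsRange":
        return uid_min <= requested <= uid_max
    if strategy == "MustRunAsNonRoot":
        return requested != 0
    if strategy == "RunAsAny":
        return True
    raise ValueError(f"unknown strategy: {strategy}")
```

For example, under these assumptions a MustRunAsRange strategy with a range of 1000 to 1010 defaults to UID 1000 and rejects UID 2000.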

4.2.5.1.2. SELinuxContext

- MustRunAs - Requires seLinuxOptions to be configured if not using pre-allocated values. Uses seLinuxOptions as the default. Validates against seLinuxOptions.

- RunAsAny - No default provided. Allows any seLinuxOptions to be specified.

4.2.5.1.3. SupplementalGroups

- MustRunAs - Requires at least one range to be specified if not using pre-allocated values. Uses the minimum value of the first range as the default. Validates against all ranges.

- RunAsAny - No default provided. Allows any supplementalGroups to be specified.

4.2.5.1.4. FSGroup

- MustRunAs - Requires at least one range to be specified if not using pre-allocated values. Uses the minimum value of the first range as the default. Validates against the first ID in the first range.

- RunAsAny - No default provided. Allows any fsGroup ID to be specified.

4.2.5.2. Controlling Volumes

The usage of specific volume types can be controlled by setting the volumes field of the SCC. The allowable values of this field correspond to the volume sources that are defined when creating a volume:

- azureFile

- azureDisk

- flocker

- flexVolume

- hostPath

- emptyDir

- gcePersistentDisk

- awsElasticBlockStore

- secret

- nfs

- iscsi

- glusterfs

- persistentVolumeClaim

- rbd

- cinder

- cephFS

- downwardAPI

- fc

- configMap

- vsphereVolume

- quobyte

- photonPersistentDisk

- projected

- portworxVolume

- scaleIO

- storageos

- * (a special value to allow the use of all volume types)

- none (a special value to disallow the use of all volume types; it exists only for backwards compatibility)

The recommended minimum set of allowed volumes for new SCCs is configMap, downwardAPI, emptyDir, persistentVolumeClaim, secret, and projected.

The list of allowable volume types is not exhaustive because new types are added with each release of OpenShift Container Platform.

For backwards compatibility, the usage of allowHostDirVolumePlugin overrides settings in the volumes field. For example, if allowHostDirVolumePlugin is set to false but hostPath is allowed in the volumes field, then the hostPath value will be removed from volumes.

4.2.5.3. Restricting Access to FlexVolumes

OpenShift Container Platform provides additional control of FlexVolumes based on their driver. When an SCC allows the usage of FlexVolumes, pods can request any FlexVolumes. However, when the cluster administrator specifies driver names in the AllowedFlexVolumes field, pods can use only FlexVolumes with these drivers.

Example of Limiting Access to Only Two FlexVolumes

volumes:

- flexVolume

allowedFlexVolumes:

- driver: example/lvm

- driver: example/cifs

4.2.5.4. Seccomp

SeccompProfiles lists the allowed profiles that can be set for the pod or container’s seccomp annotations. An unset (nil) or empty value means that no profiles are specified by the pod or container. Use the wildcard * to allow all profiles. When used to generate a value for a pod, the first non-wildcard profile is used as the default.
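The wildcard and defaulting rules in the paragraph above can be illustrated with a short Python sketch. The function names are hypothetical and the profile names in the usage note are placeholders; the sketch only mirrors the two rules stated here (the wildcard allows everything, and the first non-wildcard entry becomes the default).

```python
def default_seccomp_profile(allowed_profiles):
    """Return the first non-wildcard profile, or None if there is none."""
    for profile in allowed_profiles or []:
        if profile != "*":
            return profile
    return None


def is_profile_allowed(profile, allowed_profiles):
    """Return True if the profile is listed or the wildcard is present."""
    allowed = allowed_profiles or []
    return "*" in allowed or profile in allowed
```

With a hypothetical list such as `["*", "profile-a", "profile-b"]`, the sketch picks `profile-a` as the generated default while still allowing any requested profile.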

Refer to the seccomp documentation for more information about configuring and using custom profiles.

4.2.5.5. Admission

Admission control with SCCs allows for control over the creation of resources based on the capabilities granted to a user.

In terms of the SCCs, this means that an admission controller can inspect the user information made available in the context to retrieve an appropriate set of SCCs. Doing so ensures the pod is authorized to make requests about its operating environment or to generate a set of constraints to apply to the pod.

The set of SCCs that admission uses to authorize a pod is determined by the user identity and the groups that the user belongs to. Additionally, if the pod specifies a service account, the set of allowable SCCs includes any constraints accessible to the service account.

Admission uses the following approach to create the final security context for the pod:

- Retrieve all SCCs available for use.

- Generate field values for security context settings that were not specified on the request.

- Validate the final settings against the available constraints.

If a matching set of constraints is found, then the pod is accepted. If the request cannot be matched to an SCC, the pod is rejected.

A pod must validate every field against the SCC. The following are examples for just two of the fields that must be validated:

These examples are in the context of a strategy using the preallocated values.

A FSGroup SCC Strategy of MustRunAs

If the pod defines a fsGroup ID, then that ID must equal the default fsGroup ID. Otherwise, the pod is not validated by that SCC and the next SCC is evaluated.

If the SecurityContextConstraints.fsGroup field has value RunAsAny and the pod specification omits the Pod.spec.securityContext.fsGroup, then this field is considered valid. Note that it is possible that during validation, other SCC settings will reject other pod fields and thus cause the pod to fail.
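The following Python sketch illustrates the generate-then-validate flow for the fsGroup field only. It is a deliberately narrow model under stated assumptions: each SCC is a plain dictionary with hypothetical keys (`name`, `strategy`, `default`), the list is already in evaluation order, and every other SCC field is ignored.

```python
def admit_fs_group(pod_fs_group, sccs):
    """Return (scc_name, effective_fs_group) for the first SCC that validates
    the pod, or None if the pod is rejected by every SCC."""
    for scc in sccs:
        fs_group = pod_fs_group
        # Generate: fill in the field when the pod did not specify it.
        if fs_group is None and scc["strategy"] == "MustRunAs":
            fs_group = scc.get("default")
        # Validate: RunAsAny accepts anything; MustRunAs requires the default ID.
        if scc["strategy"] == "RunAsAny" or fs_group == scc.get("default"):
            return scc["name"], fs_group
        # Otherwise this SCC does not validate the pod; try the next one.
    return None


# Hypothetical SCCs for illustration only.
example_sccs = [
    {"name": "restricted-like", "strategy": "MustRunAs", "default": 1000},
    {"name": "anyuid-like", "strategy": "RunAsAny"},
]
```

In this model a pod that requests fsGroup 2000 fails the first SCC and is accepted by the second, while a pod that omits fsGroup is accepted by the first SCC with the default ID filled in.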

A SupplementalGroups SCC Strategy of MustRunAs

If the pod specification defines one or more supplementalGroups IDs, then the pod’s IDs must equal one of the IDs in the namespace’s openshift.io/sa.scc.supplemental-groups annotation. Otherwise, the pod is not validated by that SCC and the next SCC is evaluated.

If the SecurityContextConstraints.supplementalGroups field has value RunAsAny and the pod specification omits the Pod.spec.securityContext.supplementalGroups, then this field is considered valid. Note that it is possible that during validation, other SCC settings will reject other pod fields and thus cause the pod to fail.

4.2.5.5.1. SCC Prioritization

SCCs have a priority field that affects the ordering when the admission controller attempts to validate a request. A higher priority SCC is moved to the front of the set when sorting. When the complete set of available SCCs is determined, they are ordered by:

- Highest priority first, nil is considered a 0 priority

- If priorities are equal, the SCCs will be sorted from most restrictive to least restrictive

- If both priorities and restrictions are equal the SCCs will be sorted by name
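The three ordering rules above map naturally onto a composite sort key. In the Python sketch below, the `SCC` class and the numeric `restrictiveness` score are assumptions made for illustration; the platform computes restrictiveness from the SCC's actual settings, which is not modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SCC:
    name: str
    priority: Optional[int] = None  # nil (None) is treated as priority 0
    restrictiveness: int = 0        # hypothetical score: higher is more restrictive


def sort_sccs(sccs):
    """Order SCCs by priority (highest first), then most restrictive, then name."""
    return sorted(
        sccs,
        key=lambda s: (-(s.priority or 0), -s.restrictiveness, s.name),
    )
```

An SCC with an explicit priority of 10 therefore sorts ahead of every SCC whose priority is unset, regardless of how restrictive those are.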

By default, the anyuid SCC granted to cluster administrators is given priority in their SCC set. This allows cluster administrators to run pods as any user without specifying a RunAsUser on the pod’s SecurityContext. The administrator may still specify a RunAsUser if they wish.

4.2.5.5.2. Role-Based Access to SCCs

Starting with OpenShift Container Platform 3.11, you can specify SCCs as a resource that is handled by RBAC. This allows you to scope access to your SCCs to a certain project or to the entire cluster. Assigning users, groups or service accounts directly to an SCC retains cluster-wide scope.

To include access to SCCs for your role, specify a rule in the definition of the role that grants the use verb on the named SCC resource.
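As a hedged illustration, a rule of this kind could be built as a Python dictionary and serialized to JSON. The `use` verb and the SCC name `myPermittingSCC` come from the surrounding text; the API group, resource name, role `apiVersion`, role name, and project name shown here are assumptions to verify against your cluster.

```python
import json

# Rule granting the "use" verb on a single named SCC.
scc_rule = {
    "apiGroups": ["security.openshift.io"],        # assumed API group for SCCs
    "resources": ["securitycontextconstraints"],   # assumed resource name
    "resourceNames": ["myPermittingSCC"],
    "verbs": ["use"],
}

# A local role carrying the rule; the name and namespace are hypothetical.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",  # assumed API version
    "kind": "Role",
    "metadata": {"name": "scc-user", "namespace": "my-project"},
    "rules": [scc_rule],
}

role_json = json.dumps(role, indent=2)
print(role_json)
```

Restricting `resourceNames` to one SCC keeps the grant narrow; omitting it would allow the bound subjects to use every SCC.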

A local or cluster role with such a rule allows the subjects that are bound to it with a rolebinding or a clusterrolebinding to use the user-defined SCC called myPermittingSCC.

Because RBAC is designed to prevent escalation, even project administrators will be unable to grant access to an SCC because they are not allowed, by default, to use the verb use on SCC resources, including the restricted SCC.

4.2.5.5.3. Understanding Pre-allocated Values and Security Context Constraints

The admission controller is aware of certain conditions in the security context constraints that trigger it to look up pre-allocated values from a namespace and populate the security context constraint before processing the pod. Each SCC strategy is evaluated independently of other strategies, with the pre-allocated values (where allowed) for each policy aggregated with pod specification values to make the final values for the various IDs defined in the running pod.

The following SCCs cause the admission controller to look for pre-allocated values when no ranges are defined in the pod specification:

- A RunAsUser strategy of MustRunAsRange with no minimum or maximum set. Admission looks for the openshift.io/sa.scc.uid-range annotation to populate range fields.

- An SELinuxContext strategy of MustRunAs with no level set. Admission looks for the openshift.io/sa.scc.mcs annotation to populate the level.

- A FSGroup strategy of MustRunAs. Admission looks for the openshift.io/sa.scc.supplemental-groups annotation.

- A SupplementalGroups strategy of MustRunAs. Admission looks for the openshift.io/sa.scc.supplemental-groups annotation.

During the generation phase, the security context provider will default any values that are not specifically set in the pod. Defaulting is based on the strategy being used:

- RunAsAny and MustRunAsNonRoot strategies do not provide default values. Thus, if the pod needs a field defined (for example, a group ID), this field must be defined inside the pod specification.

- MustRunAs (single value) strategies provide a default value which is always used. As an example, for group IDs: even if the pod specification defines its own ID value, the namespace’s default field will also appear in the pod’s groups.

- MustRunAsRange and MustRunAs (range-based) strategies provide the minimum value of the range. As with a single value MustRunAs strategy, the namespace’s default value will appear in the running pod. If a range-based strategy is configurable with multiple ranges, it will provide the minimum value of the first configured range.

FSGroup and SupplementalGroups strategies fall back to the openshift.io/sa.scc.uid-range annotation if the openshift.io/sa.scc.supplemental-groups annotation does not exist on the namespace. If neither exists, the SCC will fail to create.

By default, the annotation-based FSGroup strategy configures itself with a single range based on the minimum value for the annotation. For example, if your annotation reads 1/3, the FSGroup strategy will configure itself with a minimum and maximum of 1. If you want to allow more groups to be accepted for the FSGroup field, you can configure a custom SCC that does not use the annotation.

The openshift.io/sa.scc.supplemental-groups annotation accepts a comma-delimited list of blocks in the format of <start>/<length> or <start>-<end>. The openshift.io/sa.scc.uid-range annotation accepts only a single block.
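The block formats can be made concrete with a small parser. This Python sketch is illustrative only: the function names are hypothetical, and it assumes that a `<start>/<length>` block covers `length` consecutive IDs beginning at `start`, so that the inclusive end is `start + length - 1`.

```python
def parse_block(block):
    """Parse '<start>/<length>' or '<start>-<end>' into an inclusive (min, max)."""
    block = block.strip()
    if "/" in block:
        start, length = (int(part) for part in block.split("/", 1))
        return start, start + length - 1  # assumption: length counts IDs from start
    start, end = (int(part) for part in block.split("-", 1))
    return start, end


def parse_supplemental_groups(annotation):
    """Parse a comma-delimited list of blocks into a list of (min, max) ranges."""
    return [parse_block(b) for b in annotation.split(",") if b.strip()]


def annotation_fsgroup_range(annotation):
    """Single range used by the annotation-based FSGroup strategy:
    minimum and maximum both equal the minimum of the first block."""
    first_min = parse_supplemental_groups(annotation)[0][0]
    return first_min, first_min
```

Under these assumptions, the annotation value `1/3` parses to the range 1 through 3, while the annotation-based FSGroup strategy uses a minimum and maximum of 1, matching the example in the text.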

4.2.6. Determining What You Can Do as an Authenticated User

From within your OpenShift Container Platform project, you can determine what verbs you can perform against all namespace-scoped resources (including third-party resources). Run:

$ oc policy can-i --list --loglevel=8

The output will help you to determine what API request to make to gather the information.

To receive information back in a user-readable format, run:

$ oc policy can-i --list

The output will provide a full list.

To determine if you can perform specific verbs, run:

$ oc policy can-i <verb> <resource>

User scopes can provide more information about a given scope. For example:

$ oc policy can-i <verb> <resource> --scopes=user:info

4.3. Persistent Storage

4.3.1. Overview

Managing storage is a distinct problem from managing compute resources. OpenShift Container Platform uses the Kubernetes persistent volume (PV) framework to allow cluster administrators to provision persistent storage for a cluster. Developers can use persistent volume claims (PVCs) to request PV resources without having specific knowledge of the underlying storage infrastructure.

PVCs are specific to a project and are created and used by developers as a means to use a PV. PV resources on their own are not scoped to any single project; they can be shared across the entire OpenShift Container Platform cluster and claimed from any project. After a PV is bound to a PVC, however, that PV cannot then be bound to additional PVCs. This has the effect of scoping a bound PV to a single namespace (that of the binding project).

PVs are defined by a PersistentVolume API object, which represents a piece of existing, networked storage in the cluster that was provisioned by the cluster administrator. It is a resource in the cluster just like a node is a cluster resource. PVs are volume plug-ins like Volumes but have a lifecycle that is independent of any individual pod that uses the PV. PV objects capture the details of the implementation of the storage, be that NFS, iSCSI, or a cloud-provider-specific storage system.

High availability of storage in the infrastructure is left to the underlying storage provider.

PVCs are defined by a PersistentVolumeClaim API object, which represents a request for storage by a developer. It is similar to a pod in that pods consume node resources and PVCs consume PV resources. For example, pods can request specific levels of resources (e.g., CPU and memory), while PVCs can request specific storage capacity and access modes (e.g., they can be mounted once read/write or many times read-only).

4.3.2. Lifecycle of a volume and claim

PVs are resources in the cluster. PVCs are requests for those resources and also act as claim checks to the resource. The interaction between PVs and PVCs has the following lifecycle.

4.3.2.1. Provision storage

In response to requests from a developer defined in a PVC, a cluster administrator configures one or more dynamic provisioners that provision storage and a matching PV.

Alternatively, a cluster administrator can create a number of PVs in advance that carry the details of the real storage that is available for use. PVs exist in the API and are available for use.

4.3.2.2. Bind claims

When you create a PVC, you request a specific amount of storage, specify the required access mode, and create a storage class to describe and classify the storage. The control loop in the master watches for new PVCs and binds the new PVC to an appropriate PV. If an appropriate PV does not exist, a provisioner for the storage class creates one.

The capacity of the bound PV might exceed the capacity you requested. This is especially true with manually provisioned PVs. To minimize the excess, OpenShift Container Platform binds to the smallest PV that matches all other criteria.

Claims remain unbound indefinitely if a matching volume does not exist or cannot be created with any available provisioner servicing a storage class. Claims are bound as matching volumes become available. For example, a cluster with many manually provisioned 50Gi volumes would not match a PVC requesting 100Gi. The PVC can be bound when a 100Gi PV is added to the cluster.

4.3.2.3. Use pods and claimed PVs

Pods use claims as volumes. The cluster inspects the claim to find the bound volume and mounts that volume for a pod. For those volumes that support multiple access modes, you must specify which mode applies when you use the claim as a volume in a pod.

After you have a claim and that claim is bound, the bound PV belongs to you for as long as you need it. You can schedule pods and access claimed PVs by including persistentVolumeClaim in the pod’s volumes block. See below for syntax details.

4.3.2.4. PVC protection

PVC protection is enabled by default.

4.3.2.5. Release volumes

When you are finished with a volume, you can delete the PVC object from the API, which allows reclamation of the resource. The volume is considered "released" when the claim is deleted, but it is not yet available for another claim. The previous claimant’s data remains on the volume and must be handled according to policy.

4.3.2.6. Reclaim volumes

The reclaim policy of a PersistentVolume tells the cluster what to do with the volume after it is released. A PV’s reclaim policy can be either Retain or Delete.

- Retain reclaim policy allows manual reclamation of the resource for those volume plug-ins that support it.

- Delete reclaim policy deletes both the PersistentVolume object from OpenShift Container Platform and the associated storage asset in external infrastructure, such as AWS EBS, GCE PD, or Cinder volume.

Dynamically provisioned volumes have a default ReclaimPolicy value of Delete. Manually provisioned volumes have a default ReclaimPolicy value of Retain.

4.3.2.7. Reclaim a PersistentVolume Manually

When a PersistentVolumeClaim is deleted, the PersistentVolume still exists and is considered "released". However, the PV is not yet available for another claim because the previous claimant’s data remains on the volume.

To manually reclaim the PV as a cluster administrator:

- Delete the PV:

$ oc delete pv <pv-name>

The associated storage asset in the external infrastructure, such as an AWS EBS, GCE PD, Azure Disk, or Cinder volume, still exists after the PV is deleted.

- Clean up the data on the associated storage asset.

- Delete the associated storage asset. Alternatively, to reuse the same storage asset, create a new PV with the storage asset definition.

The reclaimed PV is now available for use by another PVC.

4.3.2.8. Change the reclaim policy

To change the reclaim policy of a PV:

List the PVs in your cluster:

$ oc get pv

Example Output

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-b6efd8da-b7b5-11e6-9d58-0ed433a7dd94 4Gi RWO Delete Bound default/claim1 manual 10s
pvc-b95650f8-b7b5-11e6-9d58-0ed433a7dd94 4Gi RWO Delete Bound default/claim2 manual 6s
pvc-bb3ca71d-b7b5-11e6-9d58-0ed433a7dd94 4Gi RWO Delete Bound default/claim3 manual 3s

Choose one of your PVs and change its reclaim policy:

$ oc patch pv <your-pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'

Verify that your chosen PV has the right policy:

$ oc get pv

Example Output

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-b6efd8da-b7b5-11e6-9d58-0ed433a7dd94 4Gi RWO Delete Bound default/claim1 manual 10s
pvc-b95650f8-b7b5-11e6-9d58-0ed433a7dd94 4Gi RWO Delete Bound default/claim2 manual 6s
pvc-bb3ca71d-b7b5-11e6-9d58-0ed433a7dd94 4Gi RWO Retain Bound default/claim3 manual 3s

In the preceding output, the PV bound to claim default/claim3 now has a Retain reclaim policy. The PV will not be automatically deleted when a user deletes claim default/claim3.

4.3.3. Persistent volumes

Each PV contains a spec and status, which is the specification and status of the volume, for example:

PV object definition example

4.3.3.1. Types of PVs

OpenShift Container Platform supports the following PersistentVolume plug-ins:

4.3.3.2. Capacity

Generally, a PV has a specific storage capacity. This is set by using the PV’s capacity attribute.

Currently, storage capacity is the only resource that can be set or requested. Future attributes may include IOPS, throughput, and so on.

4.3.3.3. Access modes

A PersistentVolume can be mounted on a host in any way supported by the resource provider. Providers will have different capabilities and each PV’s access modes are set to the specific modes supported by that particular volume. For example, NFS can support multiple read/write clients, but a specific NFS PV might be exported on the server as read-only. Each PV gets its own set of access modes describing that specific PV’s capabilities.

Claims are matched to volumes with similar access modes. The only two matching criteria are access modes and size. A claim’s access modes represent a request. Therefore, you might be granted more, but never less. For example, if a claim requests RWO, but the only volume available is an NFS PV (RWO+ROX+RWX), the claim would then match NFS because it supports RWO.

Direct matches are always attempted first. The volume’s modes must match or contain more modes than you requested. The size must be greater than or equal to what is expected. If two types of volumes (NFS and iSCSI, for example) have the same set of access modes, either of them can match a claim with those modes. There is no ordering between types of volumes and no way to choose one type over another.

All volumes with the same modes are grouped, and then sorted by size (smallest to largest). The binder gets the group with matching modes and iterates over each (in size order) until one size matches.
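The matching behavior described above can be approximated in Python. This is a simplified sketch, not the binder's code: the `PV` class and `find_match` function are hypothetical, storage classes and other criteria are ignored, and all volumes whose modes are a strict superset of the request are treated as a single fallback group.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PV:
    name: str
    size_gi: int
    modes: frozenset


def find_match(pvs, requested_modes, requested_gi):
    """Return the smallest suitable PV, trying exact mode matches first."""
    requested = frozenset(requested_modes)
    # Direct matches are attempted first, then volumes offering more modes.
    exact = [pv for pv in pvs if pv.modes == requested]
    wider = [pv for pv in pvs if requested < pv.modes]
    for group in (exact, wider):
        # Within a group, iterate smallest to largest until one is big enough.
        for pv in sorted(group, key=lambda p: p.size_gi):
            if pv.size_gi >= requested_gi:
                return pv
    return None  # the claim stays unbound until a matching PV appears


# Hypothetical volumes for illustration only.
example_pvs = [
    PV("nfs-a", 50, frozenset({"RWO", "ROX", "RWX"})),
    PV("ebs-a", 100, frozenset({"RWO"})),
    PV("ebs-b", 10, frozenset({"RWO"})),
]
```

With these example volumes, a 20Gi RWO claim skips the 10Gi volume and binds to the 100Gi one, because exact mode matches are tried before the larger-mode NFS volume.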

The following table lists the access modes:

| Access Mode | CLI abbreviation | Description |

|---|---|---|

| ReadWriteOnce | RWO | The volume can be mounted as read-write by a single node. |

| ReadOnlyMany | ROX | The volume can be mounted read-only by many nodes. |

| ReadWriteMany | RWX | The volume can be mounted as read-write by many nodes. |

A volume’s AccessModes are descriptors of the volume’s capabilities. They are not enforced constraints. The storage provider is responsible for runtime errors resulting from invalid use of the resource.

For example, Ceph offers ReadWriteOnce access mode. You must mark the claims as read-only if you want to use the volume’s ROX capability. Errors in the provider show up at runtime as mount errors.

iSCSI and Fibre Channel volumes do not currently have any fencing mechanisms. You must ensure the volumes are only used by one node at a time. In certain situations, such as draining a node, the volumes can be used simultaneously by two nodes. Before draining the node, first ensure the pods that use these volumes are deleted.

The following table lists the access modes supported by different PVs:

| Volume Plug-in | ReadWriteOnce | ReadOnlyMany | ReadWriteMany |

|---|---|---|---|

| AWS EBS | ✅ | - | - |

| Azure File | ✅ | ✅ | ✅ |

| Azure Disk | ✅ | - | - |

| Ceph RBD | ✅ | ✅ | - |

| Fibre Channel | ✅ | ✅ | - |

| GCE Persistent Disk | ✅ | - | - |

| GlusterFS | ✅ | ✅ | ✅ |

| gluster-block | ✅ | - | - |

| HostPath | ✅ | - | - |

| iSCSI | ✅ | ✅ | - |

| NFS | ✅ | ✅ | ✅ |

| OpenStack Cinder | ✅ | - | - |

| VMware vSphere | ✅ | - | - |

| Local | ✅ | - | - |

Use a recreate deployment strategy for pods that rely on AWS EBS, GCE Persistent Disk, or OpenStack Cinder PVs.

4.3.3.4. Reclaim policy

The following table lists current reclaim policies:

| Reclaim policy | Description |

|---|---|

| Retain | Allows manual reclamation. |

| Delete | Deletes both PV and associated external storage asset. |

If you do not want to retain all pods, use dynamic provisioning.

4.3.3.5. Phase

Volumes can be found in one of the following phases:

| Phase | Description |

|---|---|

| Available | A free resource not yet bound to a claim. |

| Bound | The volume is bound to a claim. |

| Released | The claim was deleted, but the resource is not yet reclaimed by the cluster. |

| Failed | The volume has failed its automatic reclamation. |

The CLI shows the name of the PVC bound to the PV.

4.3.3.6. Mount options

You can specify mount options while mounting a persistent volume by using the annotation volume.beta.kubernetes.io/mount-options.

For example:

Mount options example

1. Specified mount options are used while mounting the PV to the disk.
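As an illustration of where the annotation sits, the following Python sketch builds a PV definition as a dictionary and serializes it to JSON. Only the annotation key comes from the text above; the PV name, NFS server, export path, capacity, and the specific mount options are hypothetical values.

```python
import json

MOUNT_OPTIONS_KEY = "volume.beta.kubernetes.io/mount-options"

pv = {
    "apiVersion": "v1",
    "kind": "PersistentVolume",
    "metadata": {
        "name": "pv0001",  # hypothetical name
        "annotations": {
            # Comma-separated options applied when the PV is mounted.
            MOUNT_OPTIONS_KEY: "rw,nfsvers=4,noexec",
        },
    },
    "spec": {
        "capacity": {"storage": "1Gi"},
        "accessModes": ["ReadWriteOnce"],
        "persistentVolumeReclaimPolicy": "Retain",
        # Hypothetical NFS export backing the volume.
        "nfs": {"server": "nfs.example.com", "path": "/exports/pv0001"},
    },
}

mount_options = pv["metadata"]["annotations"][MOUNT_OPTIONS_KEY].split(",")
pv_json = json.dumps(pv, indent=2)
print(pv_json)
```

The resulting JSON could be saved to a file and passed to `oc create -f`, since the CLI accepts JSON as well as YAML definitions.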

The following persistent volume types support mount options:

- NFS

- GlusterFS

- Ceph RBD

- OpenStack Cinder

- AWS Elastic Block Store (EBS)

- GCE Persistent Disk

- iSCSI

- Azure Disk

- Azure File

- VMWare vSphere

Fibre Channel and HostPath persistent volumes do not support mount options.

4.3.3.7. Recursive chown

Each time a PV is mounted to a Pod, or when a Pod restart occurs, the volume’s ownership and permissions are recursively changed to match those of the Pod. Group read/write access is added to the volume when chown is performed on all files and directories in the PV. This enables the process running inside the Pod to access the PV file system. A user can prevent these recursive changes to ownership by not specifying the fsGroup in their Pods and security context constraints (SCCs).

SELinux labels are also changed recursively. A user cannot prevent recursive changes to SELinux labels.

If a user has a large number of files (e.g., > 100,000), a significant delay can occur before the PV is mounted to a Pod.
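For orientation, the sketch below shows where fsGroup sits in a Pod specification. The pod name, image, group ID, and claim name are hypothetical. Leaving the field out of the Pod, and out of the applicable SCC as described above, is what avoids the recursive ownership change.

```yaml
# Hypothetical Pod; name, image, group ID, and claim name are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: example-fsgroup-pod
spec:
  securityContext:
    fsGroup: 1001           # when set, volume contents are re-owned to this group at mount time
  containers:
  - name: app
    image: registry.example.com/example/app:latest
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: example-claim
```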

4.3.4. Persistent volume claims

Each PVC contains a spec and a status, which are the specification and status of the claim, for example:

PVC object definition example
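A minimal sketch of such a claim, assuming a storage class named gold exists in the cluster. The claim name, requested size, and class name are illustrative assumptions.

```yaml
# Hypothetical PVC; name, size, and storage class are placeholders.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-claim
spec:
  accessModes:
  - ReadWriteOnce           # requested access mode
  resources:
    requests:
      storage: 8Gi          # requested capacity
  storageClassName: gold    # optional: request a specific storage class
# The status section is populated by the cluster after the claim is created.
```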

4.3.4.1. Storage classes

Claims can optionally request a specific storage class by specifying the storage class’s name in the storageClassName attribute. Only PVs of the requested class, ones with the same storageClassName as the PVC, can be bound to the PVC. The cluster administrator can configure dynamic provisioners to service one or more storage classes. The cluster administrator can create a PV on demand that matches the specifications in the PVC.

The cluster administrator can also set a default storage class for all PVCs. When a default storage class is configured, a PVC that should be bound to a PV without a storage class must request this explicitly by setting the StorageClass or storageClassName annotations to "".
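As a sketch of the opt-out described above, the claim below sets storageClassName to an empty string so that it is matched only against PVs that have no storage class. The claim name and size are hypothetical.

```yaml
# Hypothetical PVC that opts out of the default storage class.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-claim-no-class
spec:
  storageClassName: ""      # empty string: bind only to PVs without a storage class
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```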

4.3.4.2. Access modes

Claims use the same conventions as volumes when requesting storage with specific access modes.

4.3.4.3. Resources

Claims, like pods, can request specific quantities of a resource. In this case, the request is for storage. The same resource model applies to volumes and claims.

4.3.4.4. Claims as volumes

Pods access storage by using the claim as a volume. Claims must exist in the same namespace as the pod that uses the claim. The cluster finds the claim in the pod’s namespace and uses it to get the PersistentVolume backing the claim. The volume is mounted to the host and into the pod, for example:

Mount volume to the host and into the pod example
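A minimal sketch of a pod that consumes a claim as a volume. The pod name, image, mount path, and claim name are hypothetical; the claim is assumed to exist in the same namespace as the pod.

```yaml
# Hypothetical Pod; name, image, path, and claim name are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  containers:
  - name: frontend
    image: registry.example.com/example/web:latest
    volumeMounts:
    - name: content                 # must match the volume name below
      mountPath: /var/www/html      # where the volume appears inside the container
  volumes:
  - name: content
    persistentVolumeClaim:
      claimName: example-claim      # PVC in the pod's own namespace
```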

4.3.5. Block volume support

Block volume support is a Technology Preview feature and it is only available for manually provisioned PVs.

Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about Red Hat Technology Preview features support scope, see https://access.redhat.com/support/offerings/techpreview/.

You can statically provision raw block volumes by including API fields in your PV and PVC specifications.

To use block volume, you must first enable the BlockVolume feature gate. To enable the feature gates for master(s), add feature-gates to apiServerArguments and controllerArguments. To enable the feature gates for node(s), add feature-gates to kubeletArguments. For example:

kubeletArguments:
  feature-gates:
  - BlockVolume=true

PV example
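A minimal sketch of a manually provisioned raw block PV, assuming a Fibre Channel backend. The PV name, capacity, WWN, and LUN are placeholder values; only the volumeMode field is taken from this section.

```yaml
# Hypothetical raw block PV; name, size, WWN, and LUN are placeholders.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-block-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  volumeMode: Block                 # marks this PV as a raw block volume
  persistentVolumeReclaimPolicy: Retain
  fc:
    targetWWNs: ["50060e801049cfd1"]
    lun: 0
    readOnly: false
```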

The volumeMode field indicates that this PV is a raw block volume.

PVC example
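A minimal sketch of a claim that requests a raw block volume. The claim name and size are hypothetical.

```yaml
# Hypothetical PVC requesting a raw block volume.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-block-pvc
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Block                 # request a raw block persistent volume
  resources:
    requests:
      storage: 10Gi
```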

The volumeMode field indicates that a raw block persistent volume is requested.

Pod specification example
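A minimal sketch of a pod that attaches a block device through a claim. The pod name, image, device path, and claim name are hypothetical; the claim is assumed to have volumeMode set to Block.

```yaml
# Hypothetical Pod using a raw block device; all names are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: example-block-pod
spec:
  containers:
  - name: app
    image: registry.example.com/example/app:latest
    command: ["/bin/sh", "-c"]
    args: ["tail -f /dev/null"]
    volumeDevices:                  # used instead of volumeMounts for block devices
    - name: data
      devicePath: /dev/xvda         # device path presented inside the container
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: example-block-pvc  # must match the name of the block PVC
```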

- volumeDevices (similar to volumeMounts) is used for block devices and can only be used with PersistentVolumeClaim sources.

- devicePath (similar to mountPath) represents the path to the physical device.

- The volume source must be of type persistentVolumeClaim and must match the name of the PVC as expected.

The volumeMode field accepts the following values:

| Value | Default |

|---|---|

| Filesystem | Yes |

| Block | No |

The following table shows the binding result for each combination of PV and PVC volumeMode values:

| PV VolumeMode | PVC VolumeMode | Binding Result |

|---|---|---|

| Filesystem | Filesystem | Bind |

| Unspecified | Unspecified | Bind |

| Filesystem | Unspecified | Bind |

| Unspecified | Filesystem | Bind |

| Block | Block | Bind |

| Unspecified | Block | No Bind |

| Block | Unspecified | No Bind |

| Filesystem | Block | No Bind |

| Block | Filesystem | No Bind |

Unspecified values result in the default value of Filesystem.

4.4. Ephemeral local storage

4.4.1. Overview

This topic applies only if the ephemeral storage technology preview is enabled. This feature is disabled by default. If enabled, the OpenShift Container Platform cluster uses ephemeral storage to store information that does not need to persist after the cluster is destroyed. To enable this feature, see configuring for ephemeral storage.

In addition to persistent storage, pods and containers may require ephemeral or transient local storage for their operation. The lifetime of this ephemeral storage does not extend beyond the life of the individual pod, and this ephemeral storage cannot be shared across pods.

Prior to OpenShift Container Platform 3.10, ephemeral local storage was exposed to pods using the container’s writable layer, logs directory, and EmptyDir volumes. Pods use ephemeral local storage for scratch space, caching, and logs. Issues related to the lack of local storage accounting and isolation include the following:

- Pods do not know how much local storage is available to them.

- Pods cannot request guaranteed local storage.

- Local storage is a best effort resource.

- Pods can be evicted due to other pods filling the local storage, after which new pods are not admitted until sufficient storage has been reclaimed.

Unlike persistent volumes, ephemeral storage is unstructured, and its space (not the actual data) is shared between all pods running on a node, in addition to other uses by the system, the container runtime, and OpenShift Container Platform. The ephemeral storage framework allows pods to specify their transient local storage needs, and OpenShift Container Platform to schedule pods where appropriate and protect the node against excessive use of local storage.

While the ephemeral storage framework allows administrators and developers to better manage this local storage, it does not provide any promises related to I/O throughput and latency.

4.4.2. Types of ephemeral storage

Ephemeral local storage is always made available in the primary partition. There are two basic ways of creating the primary partition: root and runtime.

4.4.2.1. Root

This partition holds the kubelet’s root directory, /var/lib/origin/ by default, and the /var/log/ directory. This partition may be shared between user pods, the OS, and Kubernetes system daemons. Pods can consume this partition through EmptyDir volumes, container logs, image layers, and container writable layers. The kubelet manages shared access and isolation of this partition. This partition is ephemeral, and applications cannot expect any performance SLAs, such as disk IOPS, from this partition.

4.4.2.2. Runtime

This is an optional partition that runtimes can use for overlay file systems. OpenShift Container Platform attempts to identify and provide shared access along with isolation to this partition. Container image layers and writable layers are stored here. If the runtime partition exists, the root partition does not hold any image layer or other writable storage.

When you use DeviceMapper to provide runtime storage, a container’s copy-on-write layer is not accounted for in ephemeral storage management. Use overlay storage to monitor this ephemeral storage.

4.4.3. Managing ephemeral storage

Cluster administrators can manage ephemeral storage within a project by setting quotas that define the limit ranges and number of requests for ephemeral storage across all Pods in a non-terminal state. Developers can also set requests and limits on this compute resource at the Pod and Container level.
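The two levels of control described above can be sketched as a project quota plus a pod that declares its own needs. The ephemeral-storage resource name is the standard Kubernetes one; the object names, image, and sizes are hypothetical.

```yaml
# Hypothetical project quota set by a cluster administrator.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: example-ephemeral-quota
spec:
  hard:
    requests.ephemeral-storage: 2Gi   # sum of requests across non-terminal pods
    limits.ephemeral-storage: 4Gi     # sum of limits across non-terminal pods
---
# Hypothetical pod in which a developer sets a request and a limit per container.
apiVersion: v1
kind: Pod
metadata:
  name: example-scratch-pod
spec:
  containers:
  - name: app
    image: registry.example.com/example/app:latest
    resources:
      requests:
        ephemeral-storage: 1Gi        # used for scheduling decisions
      limits:
        ephemeral-storage: 2Gi        # exceeding this can lead to eviction
```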

4.4.4. Monitoring ephemeral storage

You can use /bin/df to monitor ephemeral storage usage on the volume where ephemeral container data is located, which is /var/lib/origin and /var/lib/docker. If the cluster administrator has placed /var/lib/docker on a separate disk, the df command shows the available space for /var/lib/origin only.

Use the df -h command to show the human-readable values of used and available space in /var/lib:

$ df -h /var/lib

Example Output

Filesystem  Size  Used Avail Use% Mounted on
/dev/sda1    69G   32G   34G  49% /

4.5. Source Control Management

OpenShift Container Platform takes advantage of preexisting source control management (SCM) systems hosted either internally (such as an in-house Git server) or externally (for example, on GitHub, Bitbucket, etc.). Currently, OpenShift Container Platform only supports Git solutions.

SCM integration is tightly coupled with builds, the two points being:

- Creating a BuildConfig using a repository, which allows building your application inside of OpenShift Container Platform. You can create a BuildConfig manually or let OpenShift Container Platform create it automatically by inspecting your repository.

- Triggering a build upon repository changes.
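Both points can be sketched in a single manually written BuildConfig: a Git source that identifies the repository, and a webhook trigger that starts a build when the repository changes. The repository URL, object names, builder image, and secret value are hypothetical placeholders.

```yaml
# Hypothetical BuildConfig; URL, names, builder image, and secret are placeholders.
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: example-app
spec:
  source:
    type: Git
    git:
      uri: https://github.com/example/example-app.git
      ref: master
  strategy:
    type: Source
    sourceStrategy:
      from:
        kind: ImageStreamTag
        name: example-builder:latest
  output:
    to:
      kind: ImageStreamTag
      name: example-app:latest
  triggers:
  - type: GitHub                      # build when the repository reports a push
    github:
      secret: replace-with-a-secret
```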

4.6. Admission Controllers

4.6.1. Overview

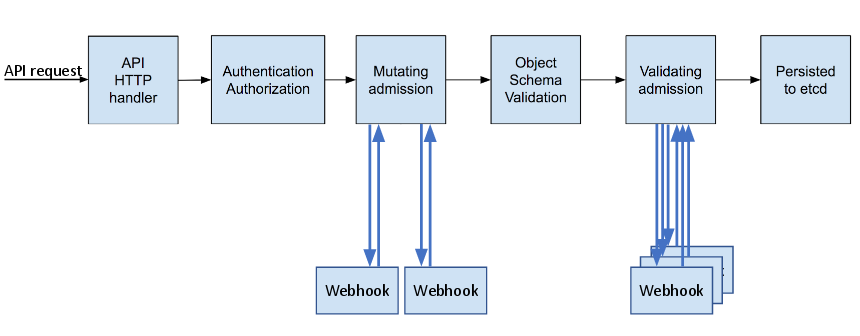

Admission control plug-ins intercept requests to the master API prior to persistence of a resource, but after the request is authenticated and authorized.

Each admission control plug-in is run in sequence before a request is accepted into the cluster. If any plug-in in the sequence rejects the request, the entire request is rejected immediately, and an error is returned to the end-user.

Admission control plug-ins may modify the incoming object in some cases to apply system configured defaults. In addition, admission control plug-ins may modify related resources as part of request processing to do things such as incrementing quota usage.

The OpenShift Container Platform master has a default list of plug-ins that are enabled for each type of resource (Kubernetes and OpenShift Container Platform). These are required for the proper functioning of the master. Modifying these lists is not recommended unless you know exactly what you are doing. Future versions of the product may use a different set of plug-ins and may change their ordering. If you do override the default list of plug-ins in the master configuration file, you are responsible for updating it to reflect requirements of newer versions of the OpenShift Container Platform master.

4.6.2. General Admission Rules

OpenShift Container Platform uses a single admission chain for Kubernetes and OpenShift Container Platform resources. This means that the top-level admissionConfig.pluginConfig element can now contain the admission plug-in configuration, which used to be contained in kubernetesMasterConfig.admissionConfig.pluginConfig.

The kubernetesMasterConfig.admissionConfig.pluginConfig should be moved and merged into admissionConfig.pluginConfig.

All the supported admission plug-ins are ordered in the single chain for you. You do not set admissionConfig.pluginOrderOverride or the kubernetesMasterConfig.admissionConfig.pluginOrderOverride. Instead, enable plug-ins that are off by default by either adding their plug-in-specific configuration, or adding a DefaultAdmissionConfig stanza like this:
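A minimal sketch of such a stanza in the master configuration file, assuming AlwaysPullImages as the plug-in to enable. The plug-in name is an illustrative choice; the element names follow the text above.

```yaml
admissionConfig:
  pluginConfig:
    AlwaysPullImages:                 # admission plug-in name (illustrative choice)
      configuration:
        kind: DefaultAdmissionConfig
        apiVersion: v1
        disable: false                # false enables a plug-in that is off by default
```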

Setting disable to true will disable an admission plug-in that defaults to on.

Admission plug-ins are commonly used to help enforce security on the API server. Be careful when disabling them.

If you were previously using admissionConfig elements that cannot be safely combined into a single admission chain, you will get a warning in your API server logs and your API server will start with two separate admission chains for legacy compatibility. Update your admissionConfig to resolve the warning.

4.6.3. Customizable Admission Plug-ins

Cluster administrators can configure some admission control plug-ins to control certain behavior.

4.6.4. Admission Controllers Using Containers

Admission controllers using containers also support init containers.