Chapter 18. Configuring NSX-T SDN

18.1. NSX-T SDN and OpenShift Container Platform

VMware NSX-T Data Center™ provides advanced software-defined networking (SDN), security, and visibility for container environments, which simplifies IT operations and extends native OpenShift Container Platform networking capabilities.

NSX-T Data Center supports virtual machine, bare metal, and container workloads across multiple clusters. This allows organizations to have complete visibility using a single SDN across the entire environment.

For more information on how NSX-T integrates with OpenShift Container Platform, see the NSX-T SDN section in Available SDN plug-ins.

18.2. Example Topology

One typical use case is to have a Tier-0 (T0) router that connects the physical system with the virtual environment and a Tier-1 (T1) router to act as a default gateway for the OpenShift Container Platform VMs.

Each VM has two vNICs. One vNIC connects to the Management Logical Switch for accessing the VMs. The other vNIC connects to a Dump Logical Switch and is used by nsx-node-agent to uplink the Pod networking. For further details, refer to NSX Container Plug-in for OpenShift.

The LoadBalancer used for configuring OpenShift Container Platform Routes, all project T1 routers, and all Logical Switches are created automatically during the OpenShift Container Platform installation.

In this topology, the default OpenShift Container Platform HAProxy Router is used for all infrastructure components such as Grafana, Prometheus, Console, Service Catalog, and others. Ensure that the DNS records for the infrastructure components point to the infrastructure node IP addresses, because HAProxy uses the host network namespace. This works for infrastructure routes, but to avoid exposing the infrastructure nodes' management IP addresses to the outside world, deploy application-specific routes to the NSX-T LoadBalancer.

This example topology assumes you are using three OpenShift Container Platform master virtual machines and four OpenShift Container Platform worker virtual machines (two for infrastructure and two for compute).

18.3. Installing VMware NSX-T

Prerequisites:

ESXi host requirements:

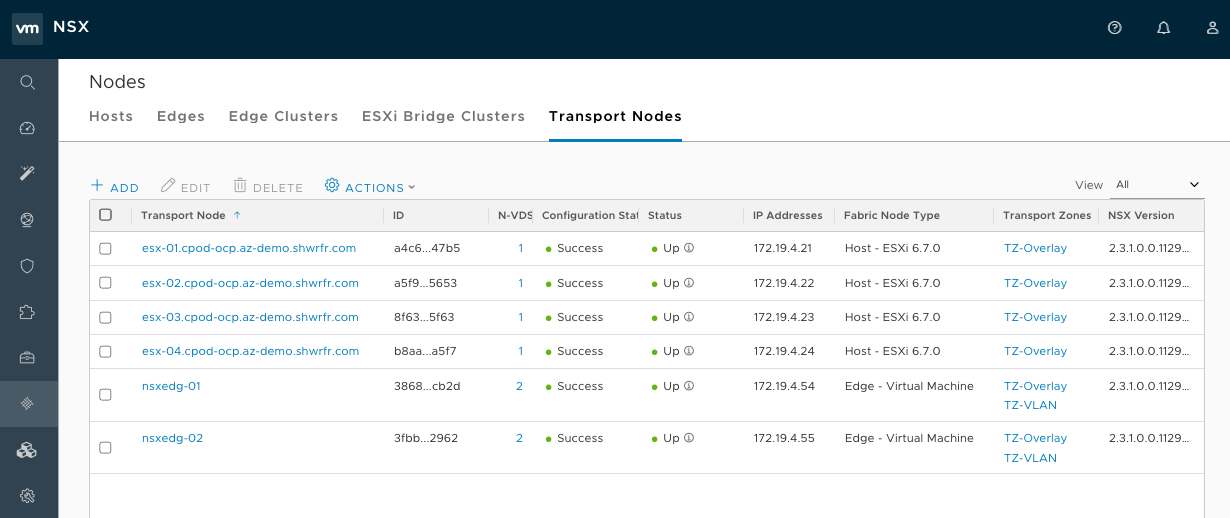

ESXi servers that host OpenShift Container Platform node VMs must be NSX-T Transport Nodes.

Figure 18.1. NSX UI displaying the Transport Nodes for a typical high availability environment

DNS requirements:

- You must add a new entry to your DNS server with a wildcard to the infrastructure nodes. This allows load balancing by NSX-T or another third-party LoadBalancer. In the hosts file, the entry is defined by the openshift_master_default_subdomain variable.

- You must update your DNS server with the openshift_master_cluster_hostname and openshift_master_cluster_public_hostname variables.

Virtual Machine requirements:

- The OpenShift Container Platform node VMs must have two vNICs:

- A Management vNIC must be connected to the Logical Switch that is uplinked to the management T1 router.

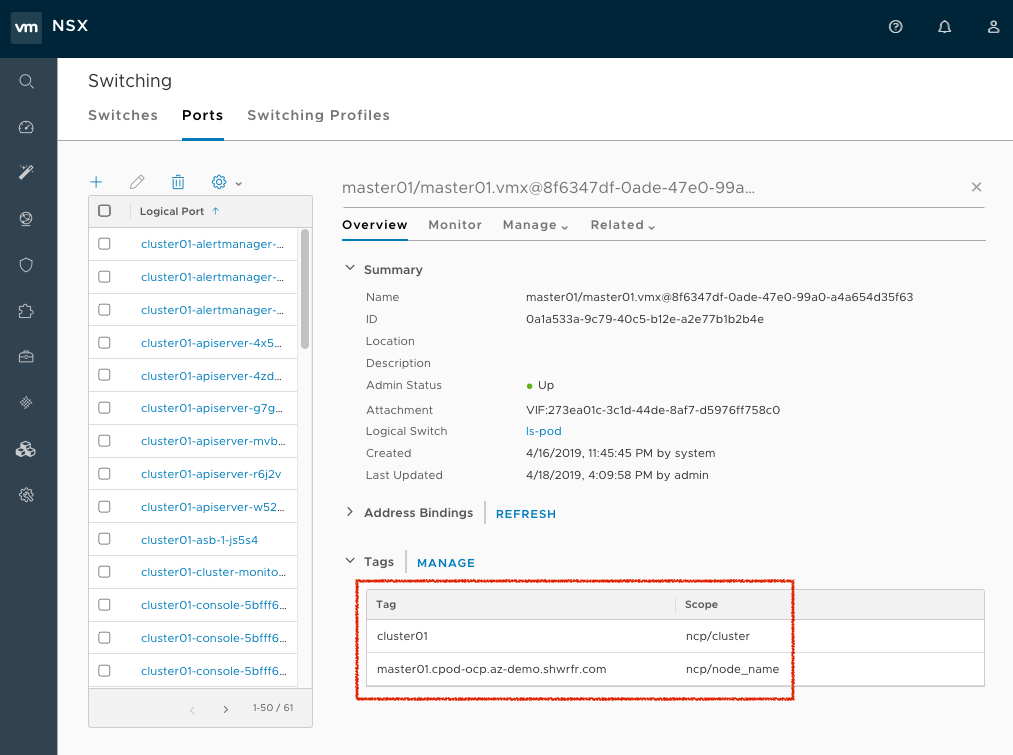

The second vNIC on all VMs must be tagged in NSX-T so that the NSX Container Plug-in (NCP) knows which port needs to be used as a parent VIF for all Pods running in a particular OpenShift Container Platform node. The tags must be the following:

{'ncp/node_name': 'node_name'}

{'ncp/cluster': 'cluster_name'}

The following image shows the tags in the NSX UI for all nodes. For a large-scale cluster, you can automate the tagging by using API calls or Ansible.

Figure 18.2. NSX UI displaying node tags

The order of the tags in the NSX UI is opposite from the API. The node name must be exactly as kubelet expects, and the cluster name must be the same as the nsx_openshift_cluster_name value in the Ansible hosts file. Ensure that the proper tags are applied on the second vNIC on every node.
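For large clusters, the tagging described above can be scripted. The following is a minimal sketch rather than a documented procedure: it only builds the tag list for one node, assuming the NSX-T API represents each tag as a scope and tag pair in JSON. The function name `ncp_tags_json` and the example node and cluster names are hypothetical, and sending the payload to NSX Manager is deliberately left out.

```shell
# Build the two NCP tags for one node as a JSON array.
# Assumption: the API expects each tag as {"scope": ..., "tag": ...}.
ncp_tags_json() {
  node_name="$1"
  cluster_name="$2"
  printf '[{"scope":"ncp/node_name","tag":"%s"},{"scope":"ncp/cluster","tag":"%s"}]\n' \
    "$node_name" "$cluster_name"
}

# Hypothetical usage: print the tags for one node.
ncp_tags_json "node1.example.com" "cluster01"
```

The node name passed in must match what kubelet expects, and the cluster name must match nsx_openshift_cluster_name, exactly as for tags applied by hand.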

NSX-T requirements:

The following prerequisites need to be met in NSX:

- A Tier-0 Router.

- An Overlay Transport Zone.

- An IP Block for Pod networking.

- Optionally, an IP Block for routed (NoNAT) Pod networking.

- An IP Pool for SNAT. By default, the subnet given per Project from the Pod networking IP Block is routable only inside NSX-T. NCP uses this IP Pool to provide connectivity to the outside.

- Optionally, the Top and Bottom firewall sections in a dFW (Distributed Firewall). NCP places the Kubernetes Network Policy rules between those two sections.

- The Open vSwitch and CNI plug-in RPMs need to be hosted on an HTTP server reachable from the OpenShift Container Platform node VMs (http://websrv.example.com in this example). Those files are included in the NCP tar file, which you can download from VMware at Download NSX Container Plug-in 2.4.0.

OpenShift Container Platform requirements:

Run the following command to install required software packages, if any, for OpenShift Container Platform:

$ ansible-playbook -i hosts openshift-ansible/playbooks/prerequisites.yml

- Ensure that the NCP container image is downloaded locally on all nodes.

After the prerequisites.yml playbook has successfully executed, run the following command on all nodes, replacing xxx with the NCP build version:

$ docker load -i nsx-ncp-rhel-xxx.tar

For example:

$ docker load -i nsx-ncp-rhel-2.4.0.12511604.tar

Get the image name and retag it:

$ docker images

$ docker image tag registry.local/xxxxx/nsx-ncp-rhel nsx-ncp

Replace xxxxx with the NCP build version. For example:

$ docker image tag registry.local/2.4.0.12511604/nsx-ncp-rhel nsx-ncp
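Because the load and retag steps above have to be repeated on every node, it can help to generate them from the build version. The sketch below only prints the two commands for a given version and does not run Docker. The function name `ncp_image_commands` is hypothetical, and the registry.local/<version>/nsx-ncp-rhel image name is taken from the example above, so confirm it against the `docker images` output before relying on it.

```shell
# Print the load and retag commands for one NCP build version.
# Assumption: the loaded image is named registry.local/<version>/nsx-ncp-rhel.
ncp_image_commands() {
  version="$1"
  printf 'docker load -i nsx-ncp-rhel-%s.tar\n' "$version"
  printf 'docker image tag registry.local/%s/nsx-ncp-rhel nsx-ncp\n' "$version"
}

# Hypothetical usage with the build version from the example above.
ncp_image_commands "2.4.0.12511604"
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere; the output can be reviewed and then run on each node.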

In the OpenShift Container Platform Ansible hosts file, specify the parameters that set up NSX-T as the network plug-in.

For information on the OpenShift Container Platform installation parameters, see Configuring Your Inventory File.
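Before running the playbooks, it may be useful to confirm that the hosts file sets the variables this chapter refers to. The sketch below checks only the four variable names mentioned in this section; it is not the full list of parameters that NSX-T needs, and the function name `missing_inventory_vars` is hypothetical.

```shell
# Print each variable named in this section that is not set in the given
# inventory file. Prints nothing when all of them are present.
missing_inventory_vars() {
  hosts_file="$1"
  for var in \
    openshift_master_default_subdomain \
    openshift_master_cluster_hostname \
    openshift_master_cluster_public_hostname \
    nsx_openshift_cluster_name
  do
    # Match "name=" at the start of a line, allowing spaces around "=".
    grep -Eq "^[[:space:]]*${var}[[:space:]]*=" "$hosts_file" || echo "$var"
  done
}

# Hypothetical usage against the inventory passed to ansible-playbook:
# missing_inventory_vars hosts
```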

Procedure

After meeting all of the prerequisites, you can deploy NSX-T Data Center and OpenShift Container Platform.

Deploy the OpenShift Container Platform cluster:

$ ansible-playbook -i hosts openshift-ansible/playbooks/deploy_cluster.yml

For more information on the OpenShift Container Platform installation, see Installing OpenShift Container Platform.

After the installation is complete, validate that the NCP and nsx-node-agent Pods are running.
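One way to perform this check is to list the Pods and flag any that are not in the Running state. The sketch below is an illustration under stated assumptions, not the documented command: it assumes the NCP and nsx-node-agent Pods run in a namespace named nsx-system (adjust this to your deployment) and that `oc get pods --no-headers` prints the Pod status in the third column. The helper name `not_running` is hypothetical.

```shell
# Read `oc get pods --no-headers` output on stdin and print the name and
# status of every NCP or nsx-node-agent Pod whose STATUS is not "Running".
not_running() {
  awk '$1 ~ /^nsx-(ncp|node-agent)/ && $3 != "Running" { print $1, $3 }'
}

# Hypothetical usage (the namespace name is an assumption):
# oc get pods -n nsx-system --no-headers | not_running
```

Empty output means every matching Pod reported Running; any line printed names a Pod to investigate.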

18.4. Check NSX-T after OpenShift Container Platform deployment

After installing OpenShift Container Platform and verifying the NCP and nsx-node-agent-* Pods:

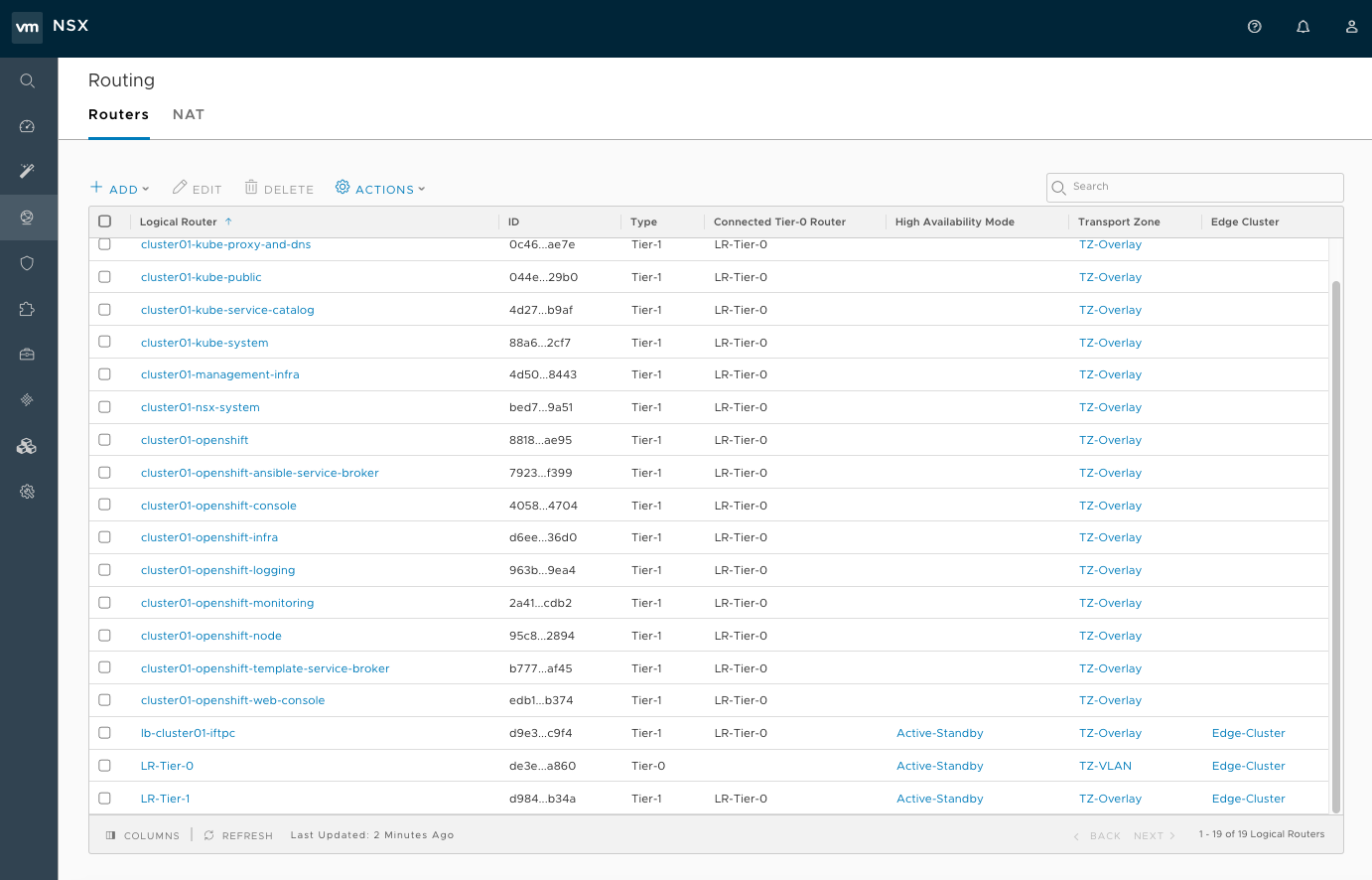

Check the routing. Ensure that the Tier-1 routers were created during the installation and are linked to the Tier-0 router:

Figure 18.3. NSX UI showing the T1 routers

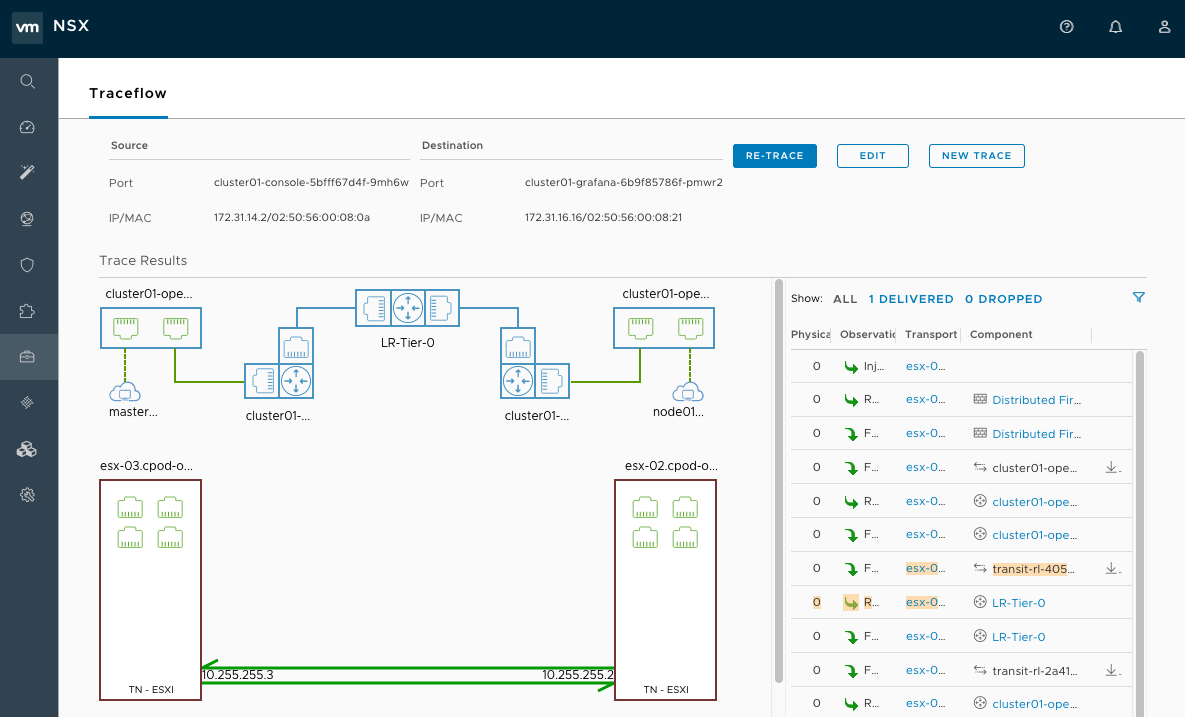

Observe the network traceflow and visibility. For example, check the connection between 'console' and 'grafana'.

For more information on securing and optimizing communications between Pods, Projects, virtual machines, and external services, see the following example:

Figure 18.4. NSX UI showing network traceflow

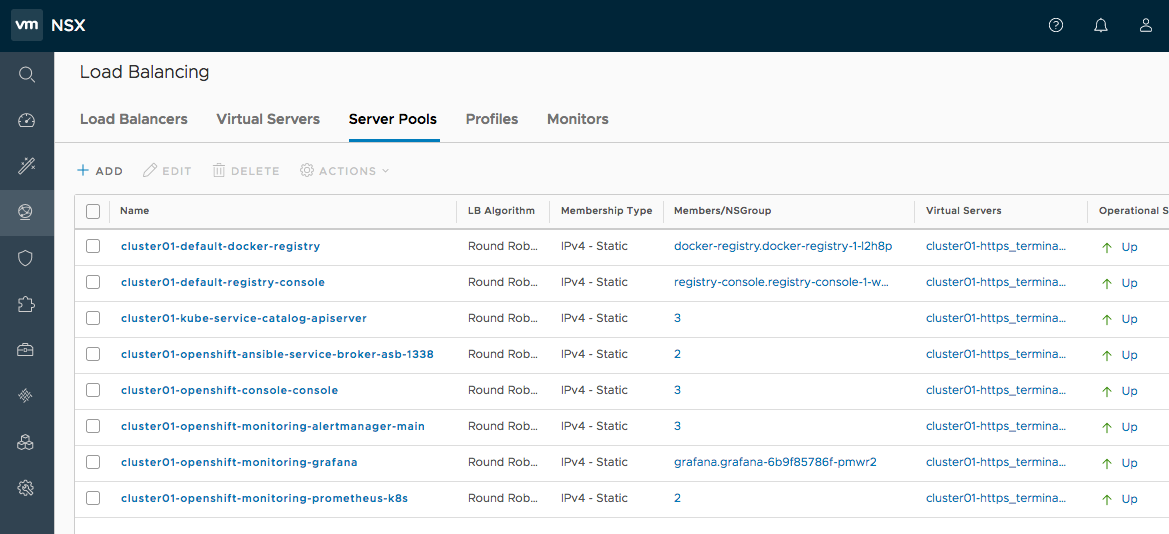

Check the load balancing. NSX-T Data Center offers Load Balancer and Ingress Controller capabilities, as shown in the following example:

Figure 18.5. NSX UI showing the load balancers

For additional configuration and options, refer to the VMware NSX-T v2.4 OpenShift Plug-In documentation.