Chapter 18. Managing cloud provider credentials

18.1. About the Cloud Credential Operator

The Cloud Credential Operator (CCO) manages cloud provider credentials as custom resource definitions (CRDs). The CCO syncs on CredentialsRequest custom resources (CRs) to allow OpenShift Container Platform components to request cloud provider credentials with the specific permissions that are required for the cluster to run.

By setting different values for the credentialsMode parameter in the install-config.yaml file, the CCO can be configured to operate in several different modes. If no mode is specified, or the credentialsMode parameter is set to an empty string (""), the CCO operates in its default mode.

18.1.1. Modes

By setting different values for the credentialsMode parameter in the install-config.yaml file, the CCO can be configured to operate in mint, passthrough, or manual mode. These options provide transparency and flexibility in how the CCO uses cloud credentials to process CredentialsRequest CRs in the cluster, and allow the CCO to be configured to suit the security requirements of your organization. Not all CCO modes are supported for all cloud providers.

- Mint: In mint mode, the CCO uses the provided admin-level cloud credential to create new credentials for components in the cluster with only the specific permissions that are required.

- Passthrough: In passthrough mode, the CCO passes the provided cloud credential to the components that request cloud credentials.

- Manual: In manual mode, a user manages cloud credentials instead of the CCO.

  - Manual with AWS Security Token Service: In manual mode, you can configure an AWS cluster to use Amazon Web Services Security Token Service (AWS STS). With this configuration, the CCO uses temporary credentials for different components.

  - Manual with GCP Workload Identity: In manual mode, you can configure a GCP cluster to use GCP Workload Identity. With this configuration, the CCO uses temporary credentials for different components.

| Cloud provider | Mint | Passthrough | Manual |
|---|---|---|---|
| Alibaba Cloud | | | X |
| Amazon Web Services (AWS) | X | X | X |
| Microsoft Azure | | X [1] | X |
| Google Cloud Platform (GCP) | X | X | X |
| IBM Cloud | | | X |
| Red Hat OpenStack Platform (RHOSP) | | X | |
| Red Hat Virtualization (RHV) | | X | |
| VMware vSphere | | X | |

[1] Manual mode is the only supported CCO configuration for Microsoft Azure Stack Hub.

18.1.2. Determining the Cloud Credential Operator mode

For platforms that support using the CCO in multiple modes, you can determine what mode the CCO is configured to use by using the web console or the CLI.

Figure 18.1. Determining the CCO configuration

18.1.2.1. Determining the Cloud Credential Operator mode by using the web console

You can determine what mode the Cloud Credential Operator (CCO) is configured to use by using the web console.

Only Amazon Web Services (AWS), global Microsoft Azure, and Google Cloud Platform (GCP) clusters support multiple CCO modes.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator permissions.

Procedure

- Log in to the OpenShift Container Platform web console as a user with the cluster-admin role.

- Navigate to Administration → Cluster Settings.

- On the Cluster Settings page, select the Configuration tab.

- Under Configuration resource, select CloudCredential.

- On the CloudCredential details page, select the YAML tab.

- In the YAML block, check the value of spec.credentialsMode. The following values are possible, though not all are supported on all platforms:

  - '': The CCO is operating in the default mode. In this configuration, the CCO operates in mint or passthrough mode, depending on the credentials provided during installation.
  - Mint: The CCO is operating in mint mode.
  - Passthrough: The CCO is operating in passthrough mode.
  - Manual: The CCO is operating in manual mode.

Important: To determine the specific configuration of an AWS or GCP cluster that has a spec.credentialsMode of '', Mint, or Manual, you must investigate further.

AWS and GCP clusters support using mint mode with the root secret deleted.

An AWS or GCP cluster that uses manual mode might be configured to create and manage cloud credentials from outside of the cluster using the AWS Security Token Service (STS) or GCP Workload Identity. You can determine whether your cluster uses this strategy by examining the cluster Authentication object.

- AWS or GCP clusters that use the default ('') only: To determine whether the cluster is operating in mint or passthrough mode, inspect the annotations on the cluster root secret:

  - Navigate to Workloads → Secrets and look for the root secret for your cloud provider.

    Note: Ensure that the Project dropdown is set to All Projects.

    | Platform | Secret name |
    |---|---|
    | AWS | aws-creds |
    | GCP | gcp-credentials |

  - To view the CCO mode that the cluster is using, click 1 annotation under Annotations, and check the value field. The following values are possible:

    - Mint: The CCO is operating in mint mode.
    - Passthrough: The CCO is operating in passthrough mode.

  If your cluster uses mint mode, you can also determine whether the cluster is operating without the root secret.

- AWS or GCP clusters that use mint mode only: To determine whether the cluster is operating without the root secret, navigate to Workloads → Secrets and look for the root secret for your cloud provider.

  Note: Ensure that the Project dropdown is set to All Projects.

  | Platform | Secret name |
  |---|---|
  | AWS | aws-creds |
  | GCP | gcp-credentials |

  - If you see one of these values, your cluster is using mint or passthrough mode with the root secret present.
  - If you do not see these values, your cluster is using the CCO in mint mode with the root secret removed.

- AWS or GCP clusters that use manual mode only: To determine whether the cluster is configured to create and manage cloud credentials from outside of the cluster, you must check the cluster Authentication object YAML values.

  - Navigate to Administration → Cluster Settings.

  - On the Cluster Settings page, select the Configuration tab.

  - Under Configuration resource, select Authentication.

  - On the Authentication details page, select the YAML tab.

  - In the YAML block, check the value of the .spec.serviceAccountIssuer parameter.

    - A value that contains a URL that is associated with your cloud provider indicates that the CCO is using manual mode with AWS STS or GCP Workload Identity to create and manage cloud credentials from outside of the cluster. These clusters are configured using the ccoctl utility.
    - An empty value ('') indicates that the cluster is using the CCO in manual mode but was not configured using the ccoctl utility.

18.1.2.2. Determining the Cloud Credential Operator mode by using the CLI

You can determine what mode the Cloud Credential Operator (CCO) is configured to use by using the CLI.

Only Amazon Web Services (AWS), global Microsoft Azure, and Google Cloud Platform (GCP) clusters support multiple CCO modes.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator permissions.

- You have installed the OpenShift CLI (oc).

Procedure

- Log in to oc on the cluster as a user with the cluster-admin role.

- To determine the mode that the CCO is configured to use, enter the following command:

      $ oc get cloudcredentials cluster \
          -o=jsonpath={.spec.credentialsMode}

  The following output values are possible, though not all are supported on all platforms:

  - '': The CCO is operating in the default mode. In this configuration, the CCO operates in mint or passthrough mode, depending on the credentials provided during installation.
  - Mint: The CCO is operating in mint mode.
  - Passthrough: The CCO is operating in passthrough mode.
  - Manual: The CCO is operating in manual mode.

Important: To determine the specific configuration of an AWS or GCP cluster that has a spec.credentialsMode of '', Mint, or Manual, you must investigate further.

AWS and GCP clusters support using mint mode with the root secret deleted.

An AWS or GCP cluster that uses manual mode might be configured to create and manage cloud credentials from outside of the cluster using the AWS Security Token Service (STS) or GCP Workload Identity. You can determine whether your cluster uses this strategy by examining the cluster Authentication object.

- AWS or GCP clusters that use the default ('') only: To determine whether the cluster is operating in mint or passthrough mode, run the following command:

      $ oc get secret <secret_name> \
          -n kube-system \
          -o jsonpath \
          --template '{ .metadata.annotations }'

  where <secret_name> is aws-creds for AWS or gcp-credentials for GCP.

  This command displays the value of the .metadata.annotations parameter in the cluster root secret object. The following output values are possible:

  - Mint: The CCO is operating in mint mode.
  - Passthrough: The CCO is operating in passthrough mode.

  If your cluster uses mint mode, you can also determine whether the cluster is operating without the root secret.

- AWS or GCP clusters that use mint mode only: To determine whether the cluster is operating without the root secret, run the following command:

      $ oc get secret <secret_name> \
          -n=kube-system

  where <secret_name> is aws-creds for AWS or gcp-credentials for GCP.

  If the root secret is present, the output of this command returns information about the secret. An error indicates that the root secret is not present on the cluster.

- AWS or GCP clusters that use manual mode only: To determine whether the cluster is configured to create and manage cloud credentials from outside of the cluster, run the following command:

      $ oc get authentication cluster \
          -o jsonpath \
          --template='{ .spec.serviceAccountIssuer }'

  This command displays the value of the .spec.serviceAccountIssuer parameter in the cluster Authentication object.

  - An output of a URL that is associated with your cloud provider indicates that the CCO is using manual mode with AWS STS or GCP Workload Identity to create and manage cloud credentials from outside of the cluster. These clusters are configured using the ccoctl utility.
  - An empty output indicates that the cluster is using the CCO in manual mode but was not configured using the ccoctl utility.
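The mapping from command output to CCO configuration in this procedure can be sketched as two small shell helpers. This is only an illustration: the function names describe_cco_mode and describe_manual_issuer are hypothetical, and the helpers interpret strings that you pass in rather than querying the cluster. The issuer check treats any non-empty value as an issuer URL; confirming that the URL is associated with your cloud provider is left to you.

```shell
#!/bin/sh
# Hypothetical helpers (not part of oc or the CCO): they interpret values
# that you have already read from the cluster.

# Map a spec.credentialsMode value to the meaning listed in this section.
describe_cco_mode() {
  case "$1" in
    "")          echo "default (mint or passthrough, depending on installation credentials)" ;;
    Mint)        echo "mint" ;;
    Passthrough) echo "passthrough" ;;
    Manual)      echo "manual" ;;
    *)           echo "unrecognized value: $1" ;;
  esac
}

# Interpret .spec.serviceAccountIssuer for a cluster that is in manual mode.
# Assumption: any non-empty value is an issuer URL for your cloud provider.
describe_manual_issuer() {
  if [ -n "$1" ]; then
    echo "manual mode with AWS STS or GCP Workload Identity (configured with ccoctl)"
  else
    echo "manual mode, not configured with ccoctl"
  fi
}

# Example usage against a live cluster (requires oc and cluster-admin access):
#   describe_cco_mode "$(oc get cloudcredentials cluster -o=jsonpath={.spec.credentialsMode})"
```

A result of default, mint, or manual on AWS or GCP still calls for the follow-up checks described in the Important note.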

18.1.3. Default behavior

For platforms on which multiple modes are supported (AWS, Azure, and GCP), when the CCO operates in its default mode, it checks the provided credentials dynamically to determine for which mode they are sufficient to process CredentialsRequest CRs.

By default, the CCO determines whether the credentials are sufficient for mint mode, which is the preferred mode of operation, and uses those credentials to create appropriate credentials for components in the cluster. If the credentials are not sufficient for mint mode, it determines whether they are sufficient for passthrough mode. If the credentials are not sufficient for passthrough mode, the CCO cannot adequately process CredentialsRequest CRs.
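The fallback order described above can be sketched as a small decision function. This is not the CCO implementation: the function name select_default_mode and its yes/no arguments are hypothetical, and the actual permission checks that the CCO performs against the cloud provider are not modeled here.

```shell
#!/bin/sh
# Hypothetical sketch of the default-mode fallback order.
# Arguments are "yes" or "no":
#   $1 - the credentials are sufficient for mint mode
#   $2 - the credentials are sufficient for passthrough mode
select_default_mode() {
  if [ "$1" = "yes" ]; then
    echo "mint"            # preferred mode of operation
  elif [ "$2" = "yes" ]; then
    echo "passthrough"     # used only when mint mode is not possible
  else
    echo "insufficient"    # the CCO cannot process CredentialsRequest CRs
    return 1
  fi
}
```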

If the provided credentials are determined to be insufficient during installation, the installation fails. For AWS, the installer fails early in the process and indicates which required permissions are missing. Other providers might not provide specific information about the cause of the error until errors are encountered.

If the credentials are changed after a successful installation and the CCO determines that the new credentials are insufficient, the CCO puts conditions on any new CredentialsRequest CRs to indicate that it cannot process them because of the insufficient credentials.

To resolve insufficient credentials issues, provide a credential with sufficient permissions. If an error occurred during installation, try installing again. For issues with new CredentialsRequest CRs, wait for the CCO to try to process the CR again. As an alternative, you can manually create IAM for AWS, Azure, and GCP.

18.2. Using mint mode

Mint mode is supported for Amazon Web Services (AWS) and Google Cloud Platform (GCP).

Mint mode is the default mode on the platforms for which it is supported. In this mode, the Cloud Credential Operator (CCO) uses the provided administrator-level cloud credential to create new credentials for components in the cluster with only the specific permissions that are required.

If the credential is not removed after installation, it is stored and used by the CCO to process CredentialsRequest CRs for components in the cluster and create new credentials for each with only the specific permissions that are required. The continuous reconciliation of cloud credentials in mint mode allows actions that require additional credentials or permissions, such as upgrading, to proceed.

Mint mode stores the administrator-level credential in the cluster kube-system namespace. If this approach does not meet the security requirements of your organization, see Alternatives to storing administrator-level secrets in the kube-system project for AWS or GCP.

18.2.1. Mint mode permissions requirements

When using the CCO in mint mode, ensure that the credential you provide meets the requirements of the cloud on which you are running or installing OpenShift Container Platform. If the provided credentials are not sufficient for mint mode, the CCO cannot create an IAM user.

18.2.1.1. Amazon Web Services (AWS) permissions

The credential you provide for mint mode in AWS must have the following permissions:

- iam:CreateAccessKey
- iam:CreateUser
- iam:DeleteAccessKey
- iam:DeleteUser
- iam:DeleteUserPolicy
- iam:GetUser
- iam:GetUserPolicy
- iam:ListAccessKeys
- iam:PutUserPolicy
- iam:TagUser
- iam:SimulatePrincipalPolicy
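As a rough aid for checking a credential against this list, the following sketch compares a list of granted permissions with the permissions named above. The helper name missing_aws_mint_permissions is hypothetical, and the comparison is an exact string match, so it assumes that the granted permissions are supplied one per line and does not expand wildcards such as iam:*.

```shell
#!/bin/sh
# Permissions that this section lists as required for mint mode on AWS.
REQUIRED_AWS_MINT_PERMISSIONS="iam:CreateAccessKey
iam:CreateUser
iam:DeleteAccessKey
iam:DeleteUser
iam:DeleteUserPolicy
iam:GetUser
iam:GetUserPolicy
iam:ListAccessKeys
iam:PutUserPolicy
iam:TagUser
iam:SimulatePrincipalPolicy"

# Hypothetical helper: reads granted permissions from stdin, one per line,
# and prints each required permission that is not in that list.
missing_aws_mint_permissions() {
  granted=$(cat)
  printf '%s\n' "$REQUIRED_AWS_MINT_PERMISSIONS" | while IFS= read -r perm; do
    # -x matches whole lines only, -F disables regular expression handling.
    printf '%s\n' "$granted" | grep -qxF -- "$perm" || printf '%s\n' "$perm"
  done
}
```

An empty result means that every listed permission appears in the input. It does not prove that the credential works, because policy conditions and boundaries are not considered.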

18.2.1.2. Google Cloud Platform (GCP) permissions

The credential you provide for mint mode in GCP must have the following permissions:

- resourcemanager.projects.get
- serviceusage.services.list
- iam.serviceAccountKeys.create
- iam.serviceAccountKeys.delete
- iam.serviceAccounts.create
- iam.serviceAccounts.delete
- iam.serviceAccounts.get
- iam.roles.get
- resourcemanager.projects.getIamPolicy
- resourcemanager.projects.setIamPolicy

18.2.2. Admin credentials root secret format

Each cloud provider uses a credentials root secret in the kube-system namespace by convention, which is then used to satisfy all credentials requests and create their respective secrets. This is done either by minting new credentials with mint mode, or by copying the credentials root secret with passthrough mode.

The format for the secret varies by cloud, and is also used for each CredentialsRequest secret.

Amazon Web Services (AWS) secret format

Google Cloud Platform (GCP) secret format

18.2.3. Mint mode with removal or rotation of the administrator-level credential

Currently, this mode is only supported on AWS and GCP.

In this mode, a user installs OpenShift Container Platform with an administrator-level credential just like the normal mint mode. However, this process removes the administrator-level credential secret from the cluster post-installation.

The administrator can have the Cloud Credential Operator make its own request for a read-only credential that allows it to verify if all CredentialsRequest objects have their required permissions, thus the administrator-level credential is not required unless something needs to be changed. After the associated credential is removed, it can be deleted or deactivated on the underlying cloud, if desired.

Prior to a non z-stream upgrade, you must reinstate the credential secret with the administrator-level credential. If the credential is not present, the upgrade might be blocked.

The administrator-level credential is not stored in the cluster permanently.

Following these steps still requires the administrator-level credential in the cluster for brief periods of time. It also requires manually re-instating the secret with administrator-level credentials for each upgrade.

18.2.3.1. Rotating cloud provider credentials manually

If your cloud provider credentials are changed for any reason, you must manually update the secret that the Cloud Credential Operator (CCO) uses to manage cloud provider credentials.

The process for rotating cloud credentials depends on the mode that the CCO is configured to use. After you rotate credentials for a cluster that is using mint mode, you must manually remove the component credentials that were created by the removed credential.

Prerequisites

- Your cluster is installed on a platform that supports rotating cloud credentials manually with the CCO mode that you are using:

  - For mint mode, Amazon Web Services (AWS) and Google Cloud Platform (GCP) are supported.

- You have changed the credentials that are used to interface with your cloud provider.

- The new credentials have sufficient permissions for the mode CCO is configured to use in your cluster.

Procedure

- In the Administrator perspective of the web console, navigate to Workloads → Secrets.

- In the table on the Secrets page, find the root secret for your cloud provider.

  | Platform | Secret name |
  |---|---|
  | AWS | aws-creds |
  | GCP | gcp-credentials |

- Click the Options menu in the same row as the secret and select Edit Secret.

- Record the contents of the Value field or fields. You can use this information to verify that the value is different after updating the credentials.

- Update the text in the Value field or fields with the new authentication information for your cloud provider, and then click Save.

- Delete each component secret that is referenced by the individual CredentialsRequest objects.

  - Log in to the OpenShift Container Platform CLI as a user with the cluster-admin role.

  - Get the names and namespaces of all referenced component secrets:

        $ oc -n openshift-cloud-credential-operator get CredentialsRequest \
            -o json | jq -r '.items[] | select (.spec.providerSpec.kind=="<provider_spec>") | .spec.secretRef'

    where <provider_spec> is the corresponding value for your cloud provider:

    - AWS: AWSProviderSpec
    - GCP: GCPProviderSpec

  - Delete each of the referenced component secrets:

        $ oc delete secret <secret_name> \
            -n <secret_namespace>

    where <secret_name> is the name of a secret and <secret_namespace> is the namespace that contains the secret.

    Example deletion of an AWS secret

        $ oc delete secret ebs-cloud-credentials -n openshift-cluster-csi-drivers

    You do not need to manually delete the credentials from your provider console. Deleting the referenced component secrets causes the CCO to delete the existing credentials from the platform and create new ones.
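When many component secrets are referenced, the delete step can be prepared as a dry run first. The following sketch assumes that the name and namespace pairs are already available as plain text; the helper name print_secret_delete_commands is hypothetical, and it only prints commands instead of running them.

```shell
#!/bin/sh
# Hypothetical dry-run helper: reads "<namespace> <name>" pairs from stdin and
# prints the oc delete command for each pair instead of running it.
print_secret_delete_commands() {
  while read -r namespace name; do
    # Skip blank or incomplete lines.
    [ -n "$namespace" ] && [ -n "$name" ] || continue
    printf 'oc delete secret %s -n %s\n' "$name" "$namespace"
  done
}

# Example input could be produced from the command in this procedure by
# changing the jq filter to emit one pair per line (assumes jq is installed):
#   oc -n openshift-cloud-credential-operator get CredentialsRequest -o json \
#     | jq -r '.items[] | select(.spec.providerSpec.kind=="AWSProviderSpec")
#              | .spec.secretRef | "\(.namespace) \(.name)"' \
#     | print_secret_delete_commands
```

Reviewing the printed commands before running them keeps the deletion limited to the secrets that the CredentialsRequest objects actually reference.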

Verification

To verify that the credentials have changed:

- In the Administrator perspective of the web console, navigate to Workloads → Secrets.

- Verify that the contents of the Value field or fields have changed.

18.2.3.2. Removing cloud provider credentials

After installing an OpenShift Container Platform cluster with the Cloud Credential Operator (CCO) in mint mode, you can remove the administrator-level credential secret from the kube-system namespace in the cluster. The administrator-level credential is required only during changes that require its elevated permissions, such as upgrades.

Prior to a non z-stream upgrade, you must reinstate the credential secret with the administrator-level credential. If the credential is not present, the upgrade might be blocked.

Prerequisites

- Your cluster is installed on a platform that supports removing cloud credentials from the CCO. Supported platforms are AWS and GCP.

Procedure

- In the Administrator perspective of the web console, navigate to Workloads → Secrets.

- In the table on the Secrets page, find the root secret for your cloud provider.

  | Platform | Secret name |
  |---|---|
  | AWS | aws-creds |
  | GCP | gcp-credentials |

- Click the Options menu in the same row as the secret and select Delete Secret.

18.3. Using passthrough mode

Passthrough mode is supported for Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), Red Hat OpenStack Platform (RHOSP), Red Hat Virtualization (RHV), and VMware vSphere.

In passthrough mode, the Cloud Credential Operator (CCO) passes the provided cloud credential to the components that request cloud credentials. The credential must have permissions to perform the installation and complete the operations that are required by components in the cluster, but does not need to be able to create new credentials. The CCO does not attempt to create additional limited-scoped credentials in passthrough mode.

Manual mode is the only supported CCO configuration for Microsoft Azure Stack Hub.

18.3.1. Passthrough mode permissions requirements

When using the CCO in passthrough mode, ensure that the credential you provide meets the requirements of the cloud on which you are running or installing OpenShift Container Platform. If the provided credentials the CCO passes to a component that creates a CredentialsRequest CR are not sufficient, that component will report an error when it tries to call an API that it does not have permissions for.

18.3.1.1. Amazon Web Services (AWS) permissions

The credential you provide for passthrough mode in AWS must have all the requested permissions for all CredentialsRequest CRs that are required by the version of OpenShift Container Platform you are running or installing.

To locate the CredentialsRequest CRs that are required, see Manually creating IAM for AWS.

18.3.1.2. Microsoft Azure permissions

The credential you provide for passthrough mode in Azure must have all the requested permissions for all CredentialsRequest CRs that are required by the version of OpenShift Container Platform you are running or installing.

To locate the CredentialsRequest CRs that are required, see Manually creating IAM for Azure.

18.3.1.3. Google Cloud Platform (GCP) permissions

The credential you provide for passthrough mode in GCP must have all the requested permissions for all CredentialsRequest CRs that are required by the version of OpenShift Container Platform you are running or installing.

To locate the CredentialsRequest CRs that are required, see Manually creating IAM for GCP.

18.3.1.4. Red Hat OpenStack Platform (RHOSP) permissions

To install an OpenShift Container Platform cluster on RHOSP, the CCO requires a credential with the permissions of a member user role.

18.3.1.5. Red Hat Virtualization (RHV) permissions

To install an OpenShift Container Platform cluster on RHV, the CCO requires a credential with the following privileges:

- DiskOperator
- DiskCreator
- UserTemplateBasedVm
- TemplateOwner
- TemplateCreator
- ClusterAdmin on the specific cluster that is targeted for OpenShift Container Platform deployment

18.3.1.6. VMware vSphere permissions

To install an OpenShift Container Platform cluster on VMware vSphere, the CCO requires a credential with the following vSphere privileges:

| Category | Privileges |
|---|---|
| Datastore | Allocate space |
| Folder | Create folder, Delete folder |
| vSphere Tagging | All privileges |
| Network | Assign network |
| Resource | Assign virtual machine to resource pool |
| Profile-driven storage | All privileges |
| vApp | All privileges |
| Virtual machine | All privileges |

18.3.2. Admin credentials root secret format

Each cloud provider uses a credentials root secret in the kube-system namespace by convention, which is then used to satisfy all credentials requests and create their respective secrets. This is done either by minting new credentials with mint mode, or by copying the credentials root secret with passthrough mode.

The format for the secret varies by cloud, and is also used for each CredentialsRequest secret.

Amazon Web Services (AWS) secret format

Microsoft Azure secret format

On Microsoft Azure, the credentials secret format includes two properties that must contain the cluster’s infrastructure ID, generated randomly for each cluster installation. This value can be found after running create manifests:

    $ cat .openshift_install_state.json | jq '."*installconfig.ClusterID".InfraID' -r

Example output

    mycluster-2mpcn

This value would be used in the secret data as follows:

    azure_resource_prefix: mycluster-2mpcn
    azure_resourcegroup: mycluster-2mpcn-rg
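The relationship between the infrastructure ID and the two secret properties can be sketched as follows. The helper name azure_secret_properties is hypothetical, and the -rg suffix is taken from the example above; it is an assumption that might not hold for a cluster that was installed into a resource group with a different name.

```shell
#!/bin/sh
# Hypothetical helper: prints the two Azure secret properties for a given
# infrastructure ID. The "-rg" suffix follows the example in this section.
azure_secret_properties() {
  infra_id=$1
  printf 'azure_resource_prefix: %s\n' "$infra_id"
  printf 'azure_resourcegroup: %s-rg\n' "$infra_id"
}

# Example usage with the infrastructure ID from the example output:
#   azure_secret_properties mycluster-2mpcn
```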

Google Cloud Platform (GCP) secret format

Red Hat OpenStack Platform (RHOSP) secret format

Red Hat Virtualization (RHV) secret format

VMware vSphere secret format

18.3.3. Passthrough mode credential maintenance

If CredentialsRequest CRs change over time as the cluster is upgraded, you must manually update the passthrough mode credential to meet the requirements. To avoid credentials issues during an upgrade, check the CredentialsRequest CRs in the release image for the new version of OpenShift Container Platform before upgrading. To locate the CredentialsRequest CRs that are required for your cloud provider, see Manually creating IAM for AWS, Azure, or GCP.

18.3.3.1. Rotating cloud provider credentials manually

If your cloud provider credentials are changed for any reason, you must manually update the secret that the Cloud Credential Operator (CCO) uses to manage cloud provider credentials.

The process for rotating cloud credentials depends on the mode that the CCO is configured to use. After you rotate credentials for a cluster that is using mint mode, you must manually remove the component credentials that were created by the removed credential.

Prerequisites

- Your cluster is installed on a platform that supports rotating cloud credentials manually with the CCO mode that you are using:

  - For passthrough mode, Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), Red Hat OpenStack Platform (RHOSP), Red Hat Virtualization (RHV), and VMware vSphere are supported.

- You have changed the credentials that are used to interface with your cloud provider.

- The new credentials have sufficient permissions for the mode CCO is configured to use in your cluster.

Procedure

- In the Administrator perspective of the web console, navigate to Workloads → Secrets.

- In the table on the Secrets page, find the root secret for your cloud provider.

  | Platform | Secret name |
  |---|---|
  | AWS | aws-creds |
  | Azure | azure-credentials |
  | GCP | gcp-credentials |
  | RHOSP | openstack-credentials |
  | RHV | ovirt-credentials |
  | VMware vSphere | vsphere-creds |

- Click the Options menu in the same row as the secret and select Edit Secret.

- Record the contents of the Value field or fields. You can use this information to verify that the value is different after updating the credentials.

- Update the text in the Value field or fields with the new authentication information for your cloud provider, and then click Save.

- If you are updating the credentials for a vSphere cluster that does not have the vSphere CSI Driver Operator enabled, you must force a rollout of the Kubernetes controller manager to apply the updated credentials.

  Note: If the vSphere CSI Driver Operator is enabled, this step is not required.

  To apply the updated vSphere credentials, log in to the OpenShift Container Platform CLI as a user with the cluster-admin role and run the following command:

      $ oc patch kubecontrollermanager cluster \
          -p='{"spec": {"forceRedeploymentReason": "recovery-'"$( date )"'"}}' \
          --type=merge

  While the credentials are rolling out, the status of the Kubernetes Controller Manager Operator reports Progressing=true. To view the status, run the following command:

      $ oc get co kube-controller-manager
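The quoting in the patch command is easy to get wrong, because the JSON is single-quoted while the date is spliced in with double quotes. One way to make the patch easier to inspect is to build it in a helper first. This is a sketch only; the function name build_redeploy_patch is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: builds the merge patch used in the rollout command from
# a reason suffix, so that the JSON can be inspected before it is applied.
build_redeploy_patch() {
  printf '{"spec": {"forceRedeploymentReason": "recovery-%s"}}' "$1"
}

# Example usage (requires oc and cluster-admin access):
#   oc patch kubecontrollermanager cluster \
#     -p="$(build_redeploy_patch "$(date)")" --type=merge
```

Because the reason string changes each time the command runs, the patch always differs from the stored value, which is what triggers the redeployment.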

Verification

To verify that the credentials have changed:

- In the Administrator perspective of the web console, navigate to Workloads → Secrets.

- Verify that the contents of the Value field or fields have changed.

18.3.4. Reducing permissions after installation

When using passthrough mode, each component has the same permissions used by all other components. If you do not reduce the permissions after installing, all components have the broad permissions that are required to run the installer.

After installation, you can reduce the permissions on your credential to only those that are required to run the cluster, as defined by the CredentialsRequest CRs in the release image for the version of OpenShift Container Platform that you are using.

To locate the CredentialsRequest CRs that are required for AWS, Azure, or GCP and learn how to change the permissions the CCO uses, see Manually creating IAM for AWS, Azure, or GCP.

18.4. Using manual mode

Manual mode is supported for Alibaba Cloud, Amazon Web Services (AWS), Microsoft Azure, IBM Cloud, and Google Cloud Platform (GCP).

In manual mode, a user manages cloud credentials instead of the Cloud Credential Operator (CCO). To use this mode, you must examine the CredentialsRequest CRs in the release image for the version of OpenShift Container Platform that you are running or installing, create corresponding credentials in the underlying cloud provider, and create Kubernetes Secrets in the correct namespaces to satisfy all CredentialsRequest CRs for the cluster’s cloud provider.

Using manual mode allows each cluster component to have only the permissions it requires, without storing an administrator-level credential in the cluster. This mode also does not require connectivity to the AWS public IAM endpoint. However, you must manually reconcile permissions with new release images for every upgrade.

For information about configuring your cloud provider to use manual mode, see the manual credentials management options for your cloud provider:

18.4.1. Manual mode with cloud credentials created and managed outside of the cluster

An AWS or GCP cluster that uses manual mode might be configured to create and manage cloud credentials from outside of the cluster using the AWS Security Token Service (STS) or GCP Workload Identity. With this configuration, the CCO uses temporary credentials for different components.

For more information, see Using manual mode with Amazon Web Services Security Token Service or Using manual mode with GCP Workload Identity.

18.4.2. Updating cloud provider resources with manually maintained credentials

Before upgrading a cluster with manually maintained credentials, you must create any new credentials for the release image that you are upgrading to. You must also review the required permissions for existing credentials and accommodate any new permissions requirements in the new release for those components.

Procedure

- Extract and examine the CredentialsRequest custom resource for the new release.

  The "Manually creating IAM" section of the installation content for your cloud provider explains how to obtain and use the credentials required for your cloud.

- Update the manually maintained credentials on your cluster:

  - Create new secrets for any CredentialsRequest custom resources that are added by the new release image.

  - If the CredentialsRequest custom resources for any existing credentials that are stored in secrets have changed permissions requirements, update the permissions as required.

Next steps

- Update the upgradeable-to annotation to indicate that the cluster is ready to upgrade.
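One way to see which CredentialsRequest custom resources a new release adds or changes is to extract the manifests for both releases into separate directories and compare them. The following is a minimal sketch, not a supported tool: the function name compare_credrequests and the two directory arguments are hypothetical, and both directories are assumed to have been filled beforehand with the oc adm release extract --credentials-requests command.

```shell
# Hypothetical helper: report CredentialsRequest manifests that differ
# between two already-extracted directories (old release, new release).
compare_credrequests() {
    old_dir=$1
    new_dir=$2
    status=0
    # -r walks both directory trees; -q prints one line per file that
    # differs or exists on only one side.
    diff -rq "$old_dir" "$new_dir" || status=$?
    # diff exits with 1 when differences exist; only 2 or more is an error.
    [ "$status" -le 1 ]
}
```

A line that begins with "Only in" the new directory points at a credential you need to create. A line that reports differing files points at permissions to review.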

18.4.2.1. Indicating that the cluster is ready to upgrade

The Cloud Credential Operator (CCO) Upgradeable status for a cluster with manually maintained credentials is False by default.

Prerequisites

- For the release image that you are upgrading to, you have processed any new credentials manually or by using the Cloud Credential Operator utility (ccoctl).

- You have installed the OpenShift CLI (oc).

Procedure

- Log in to oc on the cluster as a user with the cluster-admin role.

- Edit the CloudCredential resource to add an upgradeable-to annotation within the metadata field by running the following command:

  $ oc edit cloudcredential cluster

  Text to add

  ...
    metadata:
      annotations:
        cloudcredential.openshift.io/upgradeable-to: <version_number>
  ...

  Where <version_number> is the version that you are upgrading to, in the format x.y.z. For example, use 4.10.2 for OpenShift Container Platform 4.10.2.

  It may take several minutes after adding the annotation for the upgradeable status to change.
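If you prefer a non-interactive alternative to oc edit, the annotation can likely be set with oc annotate instead. The sketch below assumes that oc annotate is acceptable for the CloudCredential resource in your environment. The function names is_valid_version and annotate_upgradeable_to are hypothetical helpers, not part of oc.

```shell
# Succeeds only for versions in the x.y.z format, such as 4.10.2.
is_valid_version() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'
}

# Hypothetical wrapper: validate the version, then set the annotation.
annotate_upgradeable_to() {
    version=$1
    if ! is_valid_version "$version"; then
        echo "expected a version in the format x.y.z, got: $version" >&2
        return 1
    fi
    # --overwrite replaces an annotation left over from a previous upgrade.
    oc annotate cloudcredential cluster \
        "cloudcredential.openshift.io/upgradeable-to=$version" --overwrite
}
```

For example, annotate_upgradeable_to 4.10.2 sets the annotation for OpenShift Container Platform 4.10.2, and a value such as v4.10 is rejected before oc is called.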

Verification

- In the Administrator perspective of the web console, navigate to Administration → Cluster Settings.

- To view the CCO status details, click cloud-credential in the Cluster Operators list.

  - If the Upgradeable status in the Conditions section is False, verify that the upgradeable-to annotation is free of typographical errors.

- When the Upgradeable status in the Conditions section is True, begin the OpenShift Container Platform upgrade.
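The same condition can probably be read from the command line rather than the web console. The sketch below assumes that the cluster Operator is named cloud-credential, as it appears in the Cluster Operators list. The function names and the POLL_SECONDS variable are hypothetical.

```shell
# Print the Upgradeable condition status (True or False) that the
# cloud-credential cluster Operator reports.
cco_upgradeable_status() {
    oc get clusteroperator cloud-credential \
        -o jsonpath='{.status.conditions[?(@.type=="Upgradeable")].status}'
}

# Hypothetical polling loop: re-check until the status is True or the
# number of attempts (default 20) is used up.
wait_for_upgradeable() {
    attempts=${1:-20}
    while [ "$attempts" -gt 0 ]; do
        if [ "$(cco_upgradeable_status)" = "True" ]; then
            return 0
        fi
        attempts=$((attempts - 1))
        # Wait between checks; the status can take several minutes to change.
        sleep "${POLL_SECONDS:-30}"
    done
    return 1
}
```

Because the status can take several minutes to change after you add the annotation, a loop of this kind avoids repeated manual checks.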

18.5. Using manual mode with Amazon Web Services Security Token Service

Manual mode with STS is supported for Amazon Web Services (AWS).

This credentials strategy is supported for only new OpenShift Container Platform clusters and must be configured during installation. You cannot reconfigure an existing cluster that uses a different credentials strategy to use this feature.

18.5.1. About manual mode with AWS Security Token Service

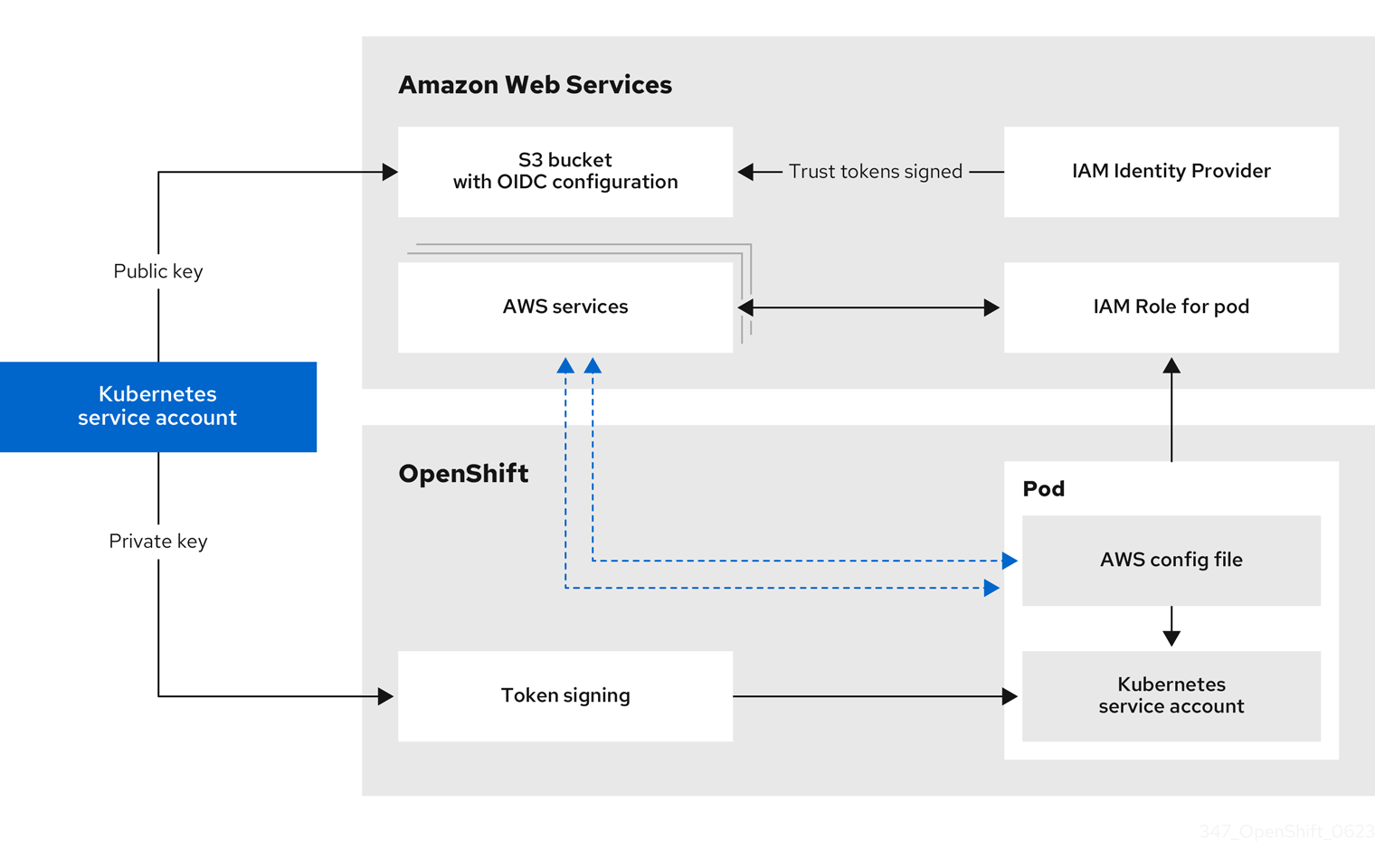

In manual mode with STS, the individual OpenShift Container Platform cluster components use AWS Security Token Service (STS) to assign components IAM roles that provide short-term, limited-privilege security credentials. These credentials are associated with IAM roles that are specific to each component that makes AWS API calls.

Requests for new and refreshed credentials are automated by using an appropriately configured AWS IAM OpenID Connect (OIDC) identity provider, combined with AWS IAM roles. OpenShift Container Platform signs service account tokens that are trusted by AWS IAM, and can be projected into a pod and used for authentication. Tokens are refreshed after one hour.

Figure 18.2. STS authentication flow

Using manual mode with STS changes the content of the AWS credentials that are provided to individual OpenShift Container Platform components.

AWS secret format using long-lived credentials

AWS secret format with STS

18.5.2. Installing an OpenShift Container Platform cluster configured for manual mode with STS

To install a cluster that is configured to use the Cloud Credential Operator (CCO) in manual mode with STS:

- Configure the Cloud Credential Operator utility.

- Create the required AWS resources individually, or with a single command.

- Run the OpenShift Container Platform installer.

- Verify that the cluster is using short-lived credentials.

Because the cluster is operating in manual mode when using STS, it is not able to create new credentials for components with the permissions that they require. When upgrading to a different minor version of OpenShift Container Platform, there are often new AWS permission requirements. Before upgrading a cluster that is using STS, the cluster administrator must manually ensure that the AWS permissions are sufficient for existing components and available to any new components.

18.5.2.1. Configuring the Cloud Credential Operator utility

To create and manage cloud credentials from outside of the cluster when the Cloud Credential Operator (CCO) is operating in manual mode, extract and prepare the CCO utility (ccoctl) binary.

The ccoctl utility is a Linux binary that must run in a Linux environment.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- You have installed the OpenShift CLI (oc).

- You have created an AWS account for the ccoctl utility to use with the following permissions:

  Table 18.3. Required AWS permissions

  iam permissions:

  - iam:CreateOpenIDConnectProvider
  - iam:CreateRole
  - iam:DeleteOpenIDConnectProvider
  - iam:DeleteRole
  - iam:DeleteRolePolicy
  - iam:GetOpenIDConnectProvider
  - iam:GetRole
  - iam:GetUser
  - iam:ListOpenIDConnectProviders
  - iam:ListRolePolicies
  - iam:ListRoles
  - iam:PutRolePolicy
  - iam:TagOpenIDConnectProvider
  - iam:TagRole

  s3 permissions:

  - s3:CreateBucket
  - s3:DeleteBucket
  - s3:DeleteObject
  - s3:GetBucketAcl
  - s3:GetBucketTagging
  - s3:GetObject
  - s3:GetObjectAcl
  - s3:GetObjectTagging
  - s3:ListBucket
  - s3:PutBucketAcl
  - s3:PutBucketPolicy
  - s3:PutBucketPublicAccessBlock
  - s3:PutBucketTagging
  - s3:PutObject
  - s3:PutObjectAcl
  - s3:PutObjectTagging

  cloudfront permissions:

  - cloudfront:ListCloudFrontOriginAccessIdentities
  - cloudfront:ListDistributions
  - cloudfront:ListTagsForResource

  If you plan to store the OIDC configuration in a private S3 bucket that is accessed by the IAM identity provider through a public CloudFront distribution URL, the AWS account that runs the ccoctl utility requires the following additional permissions:

  - cloudfront:CreateCloudFrontOriginAccessIdentity
  - cloudfront:CreateDistribution
  - cloudfront:DeleteCloudFrontOriginAccessIdentity
  - cloudfront:DeleteDistribution
  - cloudfront:GetCloudFrontOriginAccessIdentity
  - cloudfront:GetCloudFrontOriginAccessIdentityConfig
  - cloudfront:GetDistribution
  - cloudfront:TagResource
  - cloudfront:UpdateDistribution

  Note: These additional permissions support the use of the --create-private-s3-bucket option when processing credentials requests with the ccoctl aws create-all command.

Procedure

- Obtain the OpenShift Container Platform release image:

  $ RELEASE_IMAGE=$(./openshift-install version | awk '/release image/ {print $3}')

- Get the CCO container image from the OpenShift Container Platform release image:

  $ CCO_IMAGE=$(oc adm release info --image-for='cloud-credential-operator' $RELEASE_IMAGE)

  Note: Ensure that the architecture of the $RELEASE_IMAGE matches the architecture of the environment in which you will use the ccoctl tool.

- Extract the ccoctl binary from the CCO container image within the OpenShift Container Platform release image:

  $ oc image extract $CCO_IMAGE --file="/usr/bin/ccoctl" -a ~/.pull-secret

- Change the permissions to make ccoctl executable:

  $ chmod 775 ccoctl

Verification

- To verify that ccoctl is ready to use, display the help file:

  $ ccoctl --help

18.5.2.2. Creating AWS resources with the Cloud Credential Operator utility

You can use the CCO utility (ccoctl) to create the required AWS resources individually, or with a single command.

18.5.2.2.1. Creating AWS resources individually

If you need to review the JSON files that the ccoctl tool creates before modifying AWS resources, or if the process the ccoctl tool uses to create AWS resources automatically does not meet the requirements of your organization, you can create the AWS resources individually. For example, this option might be useful for an organization that shares the responsibility for creating these resources among different users or departments.

Otherwise, you can use the ccoctl aws create-all command to create the AWS resources automatically.

By default, ccoctl creates objects in the directory in which the commands are run. To create the objects in a different directory, use the --output-dir flag. This procedure uses <path_to_ccoctl_output_dir> to refer to this directory.

Some ccoctl commands make AWS API calls to create or modify AWS resources. You can use the --dry-run flag to avoid making API calls. Using this flag creates JSON files on the local file system instead. You can review and modify the JSON files and then apply them with the AWS CLI tool using the --cli-input-json parameters.

Prerequisites

- Extract and prepare the ccoctl binary.

Procedure

- Generate the public and private RSA key files that are used to set up the OpenID Connect provider for the cluster:

  $ ccoctl aws create-key-pair

  Example output:

  2021/04/13 11:01:02 Generating RSA keypair
  2021/04/13 11:01:03 Writing private key to /<path_to_ccoctl_output_dir>/serviceaccount-signer.private
  2021/04/13 11:01:03 Writing public key to /<path_to_ccoctl_output_dir>/serviceaccount-signer.public
  2021/04/13 11:01:03 Copying signing key for use by installer

  where serviceaccount-signer.private and serviceaccount-signer.public are the generated key files.

  This command also creates a private key that the cluster requires during installation in /<path_to_ccoctl_output_dir>/tls/bound-service-account-signing-key.key.

- Create an OpenID Connect identity provider and S3 bucket on AWS:

  $ ccoctl aws create-identity-provider \
      --name=<name> \
      --region=<aws_region> \
      --public-key-file=<path_to_ccoctl_output_dir>/serviceaccount-signer.public

  where:

  - <name> is the name used to tag any cloud resources that are created for tracking.
  - <aws_region> is the AWS region in which cloud resources will be created.
  - <path_to_ccoctl_output_dir> is the path to the public key file that the ccoctl aws create-key-pair command generated.

  Example output:

  2021/04/13 11:16:09 Bucket <name>-oidc created
  2021/04/13 11:16:10 OpenID Connect discovery document in the S3 bucket <name>-oidc at .well-known/openid-configuration updated
  2021/04/13 11:16:10 Reading public key
  2021/04/13 11:16:10 JSON web key set (JWKS) in the S3 bucket <name>-oidc at keys.json updated
  2021/04/13 11:16:18 Identity Provider created with ARN: arn:aws:iam::<aws_account_id>:oidc-provider/<name>-oidc.s3.<aws_region>.amazonaws.com

  where 02-openid-configuration is a discovery document and 03-keys.json is a JSON web key set file.

  This command also creates a YAML configuration file in /<path_to_ccoctl_output_dir>/manifests/cluster-authentication-02-config.yaml. This file sets the issuer URL field for the service account tokens that the cluster generates, so that the AWS IAM identity provider trusts the tokens.

- Create IAM roles for each component in the cluster.

  - Extract the list of CredentialsRequest objects from the OpenShift Container Platform release image:

    $ oc adm release extract --credentials-requests \
        --cloud=aws \
        --to=<path_to_directory_with_list_of_credentials_requests>/credrequests \
        --from=quay.io/<path_to>/ocp-release:<version>

    where credrequests is the directory where the list of CredentialsRequest objects is stored. This command creates the directory if it does not exist.

  - Use the ccoctl tool to process all CredentialsRequest objects in the credrequests directory:

    $ ccoctl aws create-iam-roles \
        --name=<name> \
        --region=<aws_region> \
        --credentials-requests-dir=<path_to_directory_with_list_of_credentials_requests>/credrequests \
        --identity-provider-arn=arn:aws:iam::<aws_account_id>:oidc-provider/<name>-oidc.s3.<aws_region>.amazonaws.com

    Note: For AWS environments that use alternative IAM API endpoints, such as GovCloud, you must also specify your region with the --region parameter.

    If your cluster uses Technology Preview features that are enabled by the TechPreviewNoUpgrade feature set, you must include the --enable-tech-preview parameter.

    For each CredentialsRequest object, ccoctl creates an IAM role with a trust policy that is tied to the specified OIDC identity provider, and a permissions policy as defined in each CredentialsRequest object from the OpenShift Container Platform release image.

Verification

- To verify that the OpenShift Container Platform secrets are created, list the files in the <path_to_ccoctl_output_dir>/manifests directory:

  $ ls <path_to_ccoctl_output_dir>/manifests

You can verify that the IAM roles are created by querying AWS. For more information, refer to AWS documentation on listing IAM roles.
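As an illustration of querying AWS, the following sketch lists IAM role names with the AWS CLI and filters them by a prefix. It assumes that the AWS CLI is installed and configured, and that the roles ccoctl created start with the value you passed to --name, which you should confirm against your own account. The function name list_roles_with_prefix is hypothetical.

```shell
# List the names of IAM roles whose names start with the given prefix.
# Assumption: ccoctl prefixed the roles it created with the --name value.
list_roles_with_prefix() {
    prefix=$1
    # The --query expression is JMESPath and is evaluated by the AWS CLI.
    aws iam list-roles \
        --query "Roles[?starts_with(RoleName, '${prefix}')].RoleName" \
        --output text
}
```

Calling list_roles_with_prefix <name> should print one role name for each CredentialsRequest object that was processed, if the prefix assumption holds.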

18.5.2.2.2. Creating AWS resources with a single command

If you do not need to review the JSON files that the ccoctl tool creates before modifying AWS resources, and if the process the ccoctl tool uses to create AWS resources automatically meets the requirements of your organization, you can use the ccoctl aws create-all command to automate the creation of AWS resources.

Otherwise, you can create the AWS resources individually.

By default, ccoctl creates objects in the directory in which the commands are run. To create the objects in a different directory, use the --output-dir flag. This procedure uses <path_to_ccoctl_output_dir> to refer to this directory.

Prerequisites

You must have:

- Extracted and prepared the ccoctl binary.

Procedure

- Extract the list of CredentialsRequest objects from the OpenShift Container Platform release image by running the following command:

  $ oc adm release extract \
      --credentials-requests \
      --cloud=aws \
      --to=<path_to_directory_with_list_of_credentials_requests>/credrequests \
      --from=quay.io/<path_to>/ocp-release:<version>

  where credrequests is the directory where the list of CredentialsRequest objects is stored. This command creates the directory if it does not exist.

  Note: This command can take a few moments to run.

- If your cluster uses cluster capabilities to disable one or more optional components, delete the CredentialsRequest custom resources for any disabled components.

  Example credrequests directory contents for OpenShift Container Platform 4.12 on AWS

  (1) The Machine API Operator CR is required.

  (2) The Cloud Credential Operator CR is required.

  (3) The Image Registry Operator CR is required.

  (4) The Ingress Operator CR is required.

  (5) The Network Operator CR is required.

  (6) The Storage Operator CR is an optional component and might be disabled in your cluster.

- Use the ccoctl tool to process all CredentialsRequest objects in the credrequests directory:

  $ ccoctl aws create-all \
      --name=<name> \
      --region=<aws_region> \
      --credentials-requests-dir=<path_to_directory_with_list_of_credentials_requests>/credrequests \
      --output-dir=<path_to_ccoctl_output_dir> \
      --create-private-s3-bucket

  where:

  - --name: Specify the name used to tag any cloud resources that are created for tracking.
  - --region: Specify the AWS region in which cloud resources will be created.
  - --credentials-requests-dir: Specify the directory containing the files for the component CredentialsRequest objects.
  - --output-dir: Optional: Specify the directory in which you want the ccoctl utility to create objects. By default, the utility creates objects in the directory in which the commands are run.
  - --create-private-s3-bucket: Optional: By default, the ccoctl utility stores the OpenID Connect (OIDC) configuration files in a public S3 bucket and uses the S3 URL as the public OIDC endpoint. To store the OIDC configuration in a private S3 bucket that is accessed by the IAM identity provider through a public CloudFront distribution URL instead, use the --create-private-s3-bucket parameter.

  Note: If your cluster uses Technology Preview features that are enabled by the TechPreviewNoUpgrade feature set, you must include the --enable-tech-preview parameter.

Verification

- To verify that the OpenShift Container Platform secrets are created, list the files in the <path_to_ccoctl_output_dir>/manifests directory:

  $ ls <path_to_ccoctl_output_dir>/manifests

You can verify that the IAM roles are created by querying AWS. For more information, refer to AWS documentation on listing IAM roles.

18.5.2.3. Running the installer

Prerequisites

- Configure an account with the cloud platform that hosts your cluster.

- Obtain the OpenShift Container Platform release image.

Procedure

- Change to the directory that contains the installation program and create the install-config.yaml file:

  $ openshift-install create install-config --dir <installation_directory>

  where <installation_directory> is the directory in which the installation program creates files.

- Edit the install-config.yaml configuration file so that it contains the credentialsMode parameter set to Manual.

- Create the required OpenShift Container Platform installation manifests:

  $ openshift-install create manifests

- Copy the manifests that ccoctl generated to the manifests directory that the installation program created:

  $ cp /<path_to_ccoctl_output_dir>/manifests/* ./manifests/

- Copy the private key that the ccoctl generated in the tls directory to the installation directory:

  $ cp -a /<path_to_ccoctl_output_dir>/tls .

- Run the OpenShift Container Platform installer:

  $ ./openshift-install create cluster
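Before the manifests are created, it can help to confirm that install-config.yaml really sets credentialsMode to Manual. The following sketch is a plain text check, not a YAML parser. It assumes that the parameter sits at the top level of the file, and the function name has_manual_credentials_mode is hypothetical.

```shell
# Succeeds only if the file contains a top-level line that sets
# credentialsMode to Manual (optionally quoted).
has_manual_credentials_mode() {
    grep -Eq '^credentialsMode:[[:space:]]*"?Manual"?[[:space:]]*$' "$1"
}
```

For example, has_manual_credentials_mode <installation_directory>/install-config.yaml returns a nonzero exit status when the parameter is missing or set to another mode.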

18.5.2.4. Verifying the installation

- Connect to the OpenShift Container Platform cluster.

- Verify that the cluster does not have root credentials:

  $ oc get secrets -n kube-system aws-creds

  The output should look similar to:

  Error from server (NotFound): secrets "aws-creds" not found

- Verify that the components are assuming the IAM roles that are specified in the secret manifests, instead of using credentials that are created by the CCO:

  Example command with the Image Registry Operator

  $ oc get secrets -n openshift-image-registry installer-cloud-credentials -o json | jq -r .data.credentials | base64 --decode

  The output should show the role and web identity token that are used by the component and look similar to:

  Example output with the Image Registry Operator

  [default]
  role_arn = arn:aws:iam::123456789:role/openshift-image-registry-installer-cloud-credentials
  web_identity_token_file = /var/run/secrets/openshift/serviceaccount/token
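The check on the decoded secret can be scripted. The sketch below reads the decoded credentials content on standard input and reports whether it looks like the STS format shown above, rather than a long-lived access key. The function name uses_sts_credentials is hypothetical. The check relies on aws_access_key_id being the key name that the AWS credentials file format uses for long-lived keys.

```shell
# Reads decoded AWS credentials content on standard input.
# Succeeds if the content names an IAM role and a web identity token file
# and contains no long-lived access key.
uses_sts_credentials() {
    content=$(cat)
    printf '%s\n' "$content" | grep -q '^[[:space:]]*role_arn' || return 1
    printf '%s\n' "$content" | grep -q '^[[:space:]]*web_identity_token_file' || return 1
    if printf '%s\n' "$content" | grep -q 'aws_access_key_id'; then
        return 1
    fi
    return 0
}
```

To use it, pipe the output of the example command with the Image Registry Operator into uses_sts_credentials and inspect the exit status.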

18.6. Using manual mode with GCP Workload Identity

Manual mode with GCP Workload Identity is supported for Google Cloud Platform (GCP).

This credentials strategy is supported for only new OpenShift Container Platform clusters and must be configured during installation. You cannot reconfigure an existing cluster that uses a different credentials strategy to use this feature.

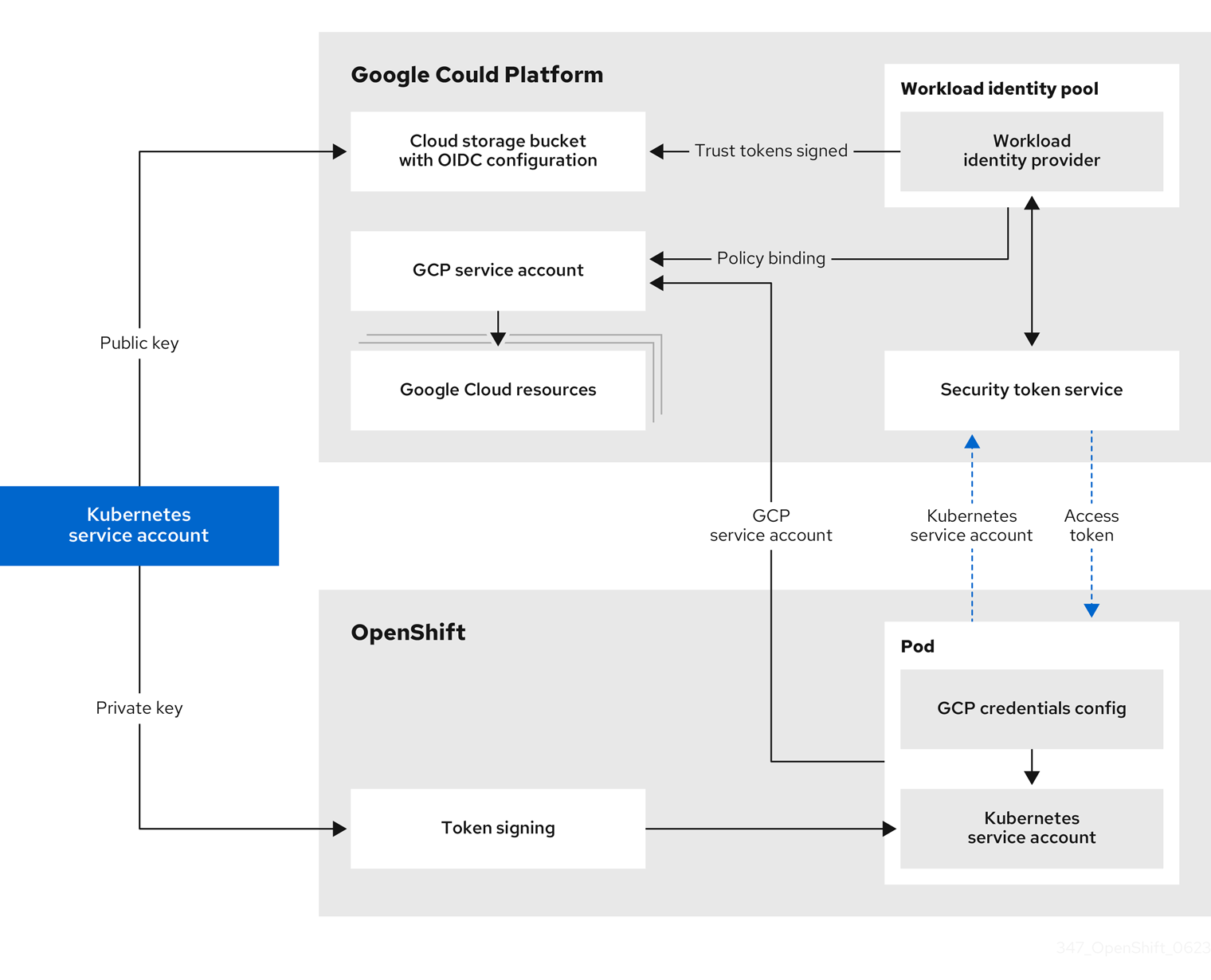

18.6.1. About manual mode with GCP Workload Identity

In manual mode with GCP Workload Identity, the individual OpenShift Container Platform cluster components can impersonate IAM service accounts using short-term, limited-privilege credentials.

Requests for new and refreshed credentials are automated by using an appropriately configured OpenID Connect (OIDC) identity provider, combined with IAM service accounts. OpenShift Container Platform signs service account tokens that are trusted by GCP, and can be projected into a pod and used for authentication. Tokens are refreshed after one hour by default.

Figure 18.3. Workload Identity authentication flow

Using manual mode with GCP Workload Identity changes the content of the GCP credentials that are provided to individual OpenShift Container Platform components.

GCP secret format

Content of the Base64 encoded service_account.json file using long-lived credentials

Content of the Base64 encoded service_account.json file using GCP Workload Identity

(1) The credential type is external_account.

(2) The target audience is the GCP Workload Identity provider.

(3) The resource URL of the service account that can be impersonated with these credentials.

(4) The path to the service account token inside the pod. By convention, this is /var/run/secrets/openshift/serviceaccount/token for OpenShift Container Platform components.

In OpenShift Container Platform 4.10.8, image registry support for using GCP Workload Identity was removed due to the discovery of an adverse impact to the image registry. To use the image registry on an OpenShift Container Platform 4.10.8 cluster that uses Workload Identity, you must configure the image registry to use long-lived credentials instead.

With OpenShift Container Platform 4.10.21, support for using GCP Workload Identity with the image registry is restored. For more information about the status of this feature between OpenShift Container Platform 4.10.8 and 4.10.20, see the related Knowledgebase article.

18.6.2. Installing an OpenShift Container Platform cluster configured for manual mode with GCP Workload Identity

To install a cluster that is configured to use the Cloud Credential Operator (CCO) in manual mode with GCP Workload Identity:

Because the cluster is operating in manual mode when using GCP Workload Identity, it is not able to create new credentials for components with the permissions that they require. When upgrading to a different minor version of OpenShift Container Platform, there are often new GCP permission requirements. Before upgrading a cluster that is using GCP Workload Identity, the cluster administrator must manually ensure that the GCP permissions are sufficient for existing components and available to any new components.

18.6.2.1. Configuring the Cloud Credential Operator utility

To create and manage cloud credentials from outside of the cluster when the Cloud Credential Operator (CCO) is operating in manual mode, extract and prepare the CCO utility (ccoctl) binary.

The ccoctl utility is a Linux binary that must run in a Linux environment.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- You have installed the OpenShift CLI (oc).

Procedure

- Obtain the OpenShift Container Platform release image:

  $ RELEASE_IMAGE=$(./openshift-install version | awk '/release image/ {print $3}')

- Get the CCO container image from the OpenShift Container Platform release image:

  $ CCO_IMAGE=$(oc adm release info --image-for='cloud-credential-operator' $RELEASE_IMAGE)

  Note: Ensure that the architecture of the $RELEASE_IMAGE matches the architecture of the environment in which you will use the ccoctl tool.

- Extract the ccoctl binary from the CCO container image within the OpenShift Container Platform release image:

  $ oc image extract $CCO_IMAGE --file="/usr/bin/ccoctl" -a ~/.pull-secret

- Change the permissions to make ccoctl executable:

  $ chmod 775 ccoctl

Verification

- To verify that ccoctl is ready to use, display the help file:

  $ ccoctl --help

18.6.2.2. Creating GCP resources with the Cloud Credential Operator utility

You can use the ccoctl gcp create-all command to automate the creation of GCP resources.

By default, ccoctl creates objects in the directory in which the commands are run. To create the objects in a different directory, use the --output-dir flag. This procedure uses <path_to_ccoctl_output_dir> to refer to this directory.

Prerequisites

You must have:

- Extracted and prepared the ccoctl binary.

Procedure

- Extract the list of CredentialsRequest objects from the OpenShift Container Platform release image by running the following command:

  $ oc adm release extract \
      --credentials-requests \
      --cloud=gcp \
      --to=<path_to_directory_with_list_of_credentials_requests>/credrequests \
      --from=quay.io/<path_to>/ocp-release:<version>

  where credrequests is the directory where the list of CredentialsRequest objects is stored. This command creates the directory if it does not exist.

  Note: This command can take a few moments to run.

- If your cluster uses cluster capabilities to disable one or more optional components, delete the CredentialsRequest custom resources for any disabled components.

  Example credrequests directory contents for OpenShift Container Platform 4.12 on GCP

  (1) The Cloud Controller Manager Operator CR is required.

  (2) The Machine API Operator CR is required.

  (3) The Cloud Credential Operator CR is required.

  (4) The Image Registry Operator CR is required.

  (5) The Ingress Operator CR is required.

  (6) The Network Operator CR is required.

  (7) The Storage Operator CR is an optional component and might be disabled in your cluster.

- Use the ccoctl tool to process all CredentialsRequest objects in the credrequests directory:

  $ ccoctl gcp create-all \
      --name=<name> \
      --region=<gcp_region> \
      --project=<gcp_project_id> \
      --credentials-requests-dir=<path_to_directory_with_list_of_credentials_requests>/credrequests

  where:

  - <name> is the user-defined name for all created GCP resources used for tracking.
  - <gcp_region> is the GCP region in which cloud resources will be created.
  - <gcp_project_id> is the GCP project ID in which cloud resources will be created.
  - <path_to_directory_with_list_of_credentials_requests>/credrequests is the directory containing the files of CredentialsRequest manifests to create GCP service accounts.

  Note: If your cluster uses Technology Preview features that are enabled by the TechPreviewNoUpgrade feature set, you must include the --enable-tech-preview parameter.

Verification

- To verify that the OpenShift Container Platform secrets are created, list the files in the <path_to_ccoctl_output_dir>/manifests directory:

  $ ls <path_to_ccoctl_output_dir>/manifests

You can verify that the IAM service accounts are created by querying GCP. For more information, refer to GCP documentation on listing IAM service accounts.
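As an illustration of querying GCP, the following sketch lists the service accounts in a project with the gcloud CLI and keeps only the entries that mention a given name. It assumes that gcloud is installed and authenticated, and that the display names of the service accounts ccoctl created contain the value you passed to --name, which you should confirm in your own project. The function name list_service_accounts_for is hypothetical.

```shell
# Print the display name and email of service accounts that mention the
# given name. Assumption: ccoctl put the --name value into the display name.
list_service_accounts_for() {
    name=$1
    project=$2
    # grep -F treats the name as a fixed string, not a regular expression.
    gcloud iam service-accounts list \
        --project="$project" \
        --format='value(displayName,email)' | grep -F -- "$name"
}
```

The function returns a nonzero exit status when no service account matches, which makes it usable as a simple post-installation check.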

18.6.2.3. Running the installer

Prerequisites

- Configure an account with the cloud platform that hosts your cluster.

- Obtain the OpenShift Container Platform release image.

Procedure

Change to the directory that contains the installation program and create the install-config.yaml file:

$ openshift-install create install-config --dir <installation_directory>

where <installation_directory> is the directory in which the installation program creates files.

Edit the install-config.yaml configuration file so that it contains the credentialsMode parameter set to Manual.

Example install-config.yaml configuration file

- 1: This line is added to set the credentialsMode parameter to Manual.
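Before creating manifests, it can help to confirm that the edit to install-config.yaml took effect. The following is a minimal sketch: the helper name has_manual_credentials_mode is hypothetical, and it assumes that credentialsMode appears as a top-level key on its own line, which is how the parameter is normally written.

```shell
#!/bin/sh
# Sketch only: has_manual_credentials_mode is a hypothetical helper.
# It succeeds when the file contains a top-level line "credentialsMode: Manual"
# (optionally quoted); it does not parse the YAML beyond that one line.
has_manual_credentials_mode() {
  grep -Eq '^credentialsMode:[[:space:]]*"?Manual"?[[:space:]]*$' "$1"
}
```

Call it with the path to the install-config.yaml file in your installation directory. A non-zero exit status means the parameter is missing or set to another mode.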

Create the required OpenShift Container Platform installation manifests:

$ openshift-install create manifests

Copy the manifests that ccoctl generated to the manifests directory that the installation program created:

$ cp /<path_to_ccoctl_output_dir>/manifests/* ./manifests/

Copy the private key that ccoctl generated in the tls directory to the installation directory:

$ cp -a /<path_to_ccoctl_output_dir>/tls .

Run the OpenShift Container Platform installer:

$ ./openshift-install create cluster
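The two copy steps above are easy to get wrong when a path is mistyped, and the installer runs regardless. As a minimal sketch, the hypothetical helper copy_ccoctl_output below performs the same two cp commands, but first checks that the expected directories exist. It takes the ccoctl output directory and the installation directory as arguments, rather than assuming the current directory.

```shell
#!/bin/sh
# Sketch only: copy_ccoctl_output is a hypothetical helper that mirrors the two cp steps.
# $1 = ccoctl output directory, $2 = installation directory (must already contain manifests/).
copy_ccoctl_output() {
  cco_out="${1:-}"
  cco_install="${2:-}"

  # Both ccoctl directories must exist, and the installer must have created manifests/.
  for cco_dir in "$cco_out/manifests" "$cco_out/tls" "$cco_install/manifests"; do
    if [ ! -d "$cco_dir" ]; then
      echo "missing directory: $cco_dir" >&2
      return 1
    fi
  done

  # Copy the generated manifests next to the installer's own manifests.
  cp "$cco_out"/manifests/* "$cco_install/manifests/" || return 1
  # Copy the tls directory into the installation directory, preserving file attributes.
  cp -a "$cco_out/tls" "$cco_install/" || return 1
}
```

If the helper returns a non-zero status, fix the reported path before you run the installer.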

18.6.2.4. Verifying the installation

- Connect to the OpenShift Container Platform cluster.

Verify that the cluster does not have root credentials:

$ oc get secrets -n kube-system gcp-credentials

The output should look similar to:

Error from server (NotFound): secrets "gcp-credentials" not found

Verify that the components are assuming the service accounts that are specified in the secret manifests, instead of using credentials that are created by the CCO:

Example command with the Image Registry Operator

$ oc get secrets -n openshift-image-registry installer-cloud-credentials -o json | jq -r '.data."service_account.json"' | base64 -d

The output should show the role and web identity token that are used by the component and look similar to:

Example output with the Image Registry Operator
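The first check in this section, that the gcp-credentials secret is absent from the kube-system namespace, can also be scripted. This is a minimal sketch that assumes the NotFound message shown earlier: the helper name root_secret_absent is hypothetical, and it only inspects the text that you pass to it, so it does not contact the cluster itself.

```shell
#!/bin/sh
# Sketch only: root_secret_absent is a hypothetical helper.
# It succeeds when the text passed in is the NotFound error for the gcp-credentials secret.
root_secret_absent() {
  case "${1:-}" in
    *'(NotFound)'*'"gcp-credentials" not found'*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, capture the command output with out=$(oc get secrets -n kube-system gcp-credentials 2>&1) and then call root_secret_absent "$out". Any other output, such as a table that lists the secret, makes the helper return a non-zero status.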