Chapter 6. Cluster Operators reference

This reference guide indexes the cluster Operators shipped by Red Hat that serve as the architectural foundation for OpenShift Container Platform. Cluster Operators are installed by default, unless otherwise noted, and are managed by the Cluster Version Operator (CVO). For more details on the control plane architecture, see Operators in OpenShift Container Platform.

Cluster administrators can view cluster Operators in the OpenShift Container Platform web console from the Administration

Cluster Operators are not managed by Operator Lifecycle Manager (OLM) and OperatorHub. OLM and OperatorHub are part of the Operator Framework used in OpenShift Container Platform for installing and running optional add-on Operators.

Some of the following cluster Operators can be disabled prior to installation. For more information, see cluster capabilities.

6.1. Cluster Baremetal Operator

The Cluster Baremetal Operator is an optional cluster capability that can be disabled by cluster administrators during installation. For more information about optional cluster capabilities, see "Cluster capabilities" in Installing.

Purpose

The Cluster Baremetal Operator (CBO) deploys all the components necessary to take a bare-metal server to a fully functioning worker node ready to run OpenShift Container Platform compute nodes. The CBO ensures that the metal3 deployment, which consists of the Bare Metal Operator (BMO) and Ironic containers, runs on one of the control plane nodes within the OpenShift Container Platform cluster. The CBO also listens for OpenShift Container Platform updates to resources that it watches and takes appropriate action.

Project

Additional resources

6.2. Bare Metal Event Relay

Purpose

The OpenShift Bare Metal Event Relay manages the lifecycle of the Bare Metal Event Relay. The Bare Metal Event Relay enables you to configure the types of cluster events that are monitored using Redfish hardware events.

Configuration objects

You can edit the configuration after installation, for example, to change the webhook port. Edit the configuration objects with the following command:

$ oc -n [namespace] edit cm hw-event-proxy-operator-manager-config

apiVersion: controller-runtime.sigs.k8s.io/v1alpha1
kind: ControllerManagerConfig
health:
  healthProbeBindAddress: :8081
metrics:
  bindAddress: 127.0.0.1:8080
webhook:
  port: 9443
leaderElection:
  leaderElect: true
  resourceName: 6e7a703c.redhat-cne.org

Project

CRD

The proxy enables applications running on bare-metal clusters to respond quickly to Redfish hardware changes and failures such as breaches of temperature thresholds, fan failure, disk loss, power outages, and memory failure, reported using the HardwareEvent CR.

hardwareevents.event.redhat-cne.org:

- Scope: Namespaced

- CR: HardwareEvent

- Validation: Yes

6.3. Cloud Credential Operator

Purpose

The Cloud Credential Operator (CCO) manages cloud provider credentials as Kubernetes custom resource definitions (CRDs). The CCO syncs on CredentialsRequest custom resources (CRs) to allow OpenShift Container Platform components to request cloud provider credentials with the specific permissions that are required for the cluster to run.

By setting different values for the credentialsMode parameter in the install-config.yaml file, the CCO can be configured to operate in several different modes. If no mode is specified, or the credentialsMode parameter is set to an empty string (""), the CCO operates in its default mode.

Project

openshift-cloud-credential-operator

CRDs

credentialsrequests.cloudcredential.openshift.io:

- Scope: Namespaced

- CR: CredentialsRequest

- Validation: Yes

Configuration objects

No configuration required.

Additional resources

6.4. Cluster Authentication Operator

Purpose

The Cluster Authentication Operator installs and maintains the Authentication custom resource in a cluster. You can view the status of the Operator with:

$ oc get clusteroperator authentication -o yaml

Project

6.5. Cluster Autoscaler Operator

Purpose

The Cluster Autoscaler Operator manages deployments of the OpenShift Cluster Autoscaler using the cluster-api provider.

Project

CRDs

- ClusterAutoscaler: This is a singleton resource, which controls the configuration of the autoscaler instance for the cluster. The Operator only responds to the ClusterAutoscaler resource named default in the managed namespace, which is the value of the WATCH_NAMESPACE environment variable.

- MachineAutoscaler: This resource targets a node group and manages the annotations that enable and configure autoscaling for that group, namely the min and max size. Currently only MachineSet objects can be targeted.

6.6. Cluster Cloud Controller Manager Operator

Purpose

This Operator is General Availability for Microsoft Azure Stack Hub, IBM Cloud, Nutanix, Red Hat OpenStack Platform (RHOSP), and VMware vSphere.

It is available as a Technology Preview for Alibaba Cloud, Amazon Web Services (AWS), Google Cloud Platform (GCP), IBM Cloud Power VS, and Microsoft Azure.

The Cluster Cloud Controller Manager Operator manages and updates the cloud controller managers deployed on top of OpenShift Container Platform. The Operator is based on the Kubebuilder framework and controller-runtime libraries. It is installed via the Cluster Version Operator (CVO).

It contains the following components:

- Operator

- Cloud configuration observer

By default, the Operator exposes Prometheus metrics through the metrics service.

Project

6.7. Cluster CAPI Operator

This Operator is available as a Technology Preview for Amazon Web Services (AWS) and Google Cloud Platform (GCP).

Purpose

The Cluster CAPI Operator maintains the lifecycle of Cluster API resources. This Operator is responsible for all administrative tasks related to deploying the Cluster API project within an OpenShift Container Platform cluster.

Project

CRDs

awsmachines.infrastructure.cluster.x-k8s.io:

- Scope: Namespaced

- CR: awsmachine

- Validation: No

gcpmachines.infrastructure.cluster.x-k8s.io:

- Scope: Namespaced

- CR: gcpmachine

- Validation: No

awsmachinetemplates.infrastructure.cluster.x-k8s.io:

- Scope: Namespaced

- CR: awsmachinetemplate

- Validation: No

gcpmachinetemplates.infrastructure.cluster.x-k8s.io:

- Scope: Namespaced

- CR: gcpmachinetemplate

- Validation: No

6.8. Cluster Config Operator

Purpose

The Cluster Config Operator performs the following tasks related to config.openshift.io:

- Creates CRDs.

- Renders the initial custom resources.

- Handles migrations.

Project

6.9. Cluster CSI Snapshot Controller Operator

The Cluster CSI Snapshot Controller Operator is an optional cluster capability that can be disabled by cluster administrators during installation. For more information about optional cluster capabilities, see "Cluster capabilities" in Installing.

Purpose

The Cluster CSI Snapshot Controller Operator installs and maintains the CSI Snapshot Controller. The CSI Snapshot Controller is responsible for watching the VolumeSnapshot CRD objects and managing the creation and deletion lifecycle of volume snapshots.

Project

cluster-csi-snapshot-controller-operator

Additional resources

6.10. Cluster Image Registry Operator

Purpose

The Cluster Image Registry Operator manages a singleton instance of the OpenShift image registry. It manages all configuration of the registry, including creating storage.

On initial start up, the Operator creates a default image-registry resource instance based on the configuration detected in the cluster. This indicates what cloud storage type to use based on the cloud provider.

If insufficient information is available to define a complete image-registry resource, then an incomplete resource is defined and the Operator updates the resource status with information about what is missing.

The Cluster Image Registry Operator runs in the openshift-image-registry namespace and it also manages the registry instance in that location. All configuration and workload resources for the registry reside in that namespace.

Project

6.11. Cluster Machine Approver Operator

Purpose

The Cluster Machine Approver Operator automatically approves the CSRs requested for a new worker node after cluster installation.

For the control plane node, the approve-csr service on the bootstrap node automatically approves all CSRs during the cluster bootstrapping phase.

Project

6.12. Cluster Monitoring Operator

Purpose

The Cluster Monitoring Operator (CMO) manages and updates the Prometheus-based cluster monitoring stack deployed on top of OpenShift Container Platform.

Project

CRDs

alertmanagers.monitoring.coreos.com:

- Scope: Namespaced

- CR: alertmanager

- Validation: Yes

prometheuses.monitoring.coreos.com:

- Scope: Namespaced

- CR: prometheus

- Validation: Yes

prometheusrules.monitoring.coreos.com:

- Scope: Namespaced

- CR: prometheusrule

- Validation: Yes

servicemonitors.monitoring.coreos.com:

- Scope: Namespaced

- CR: servicemonitor

- Validation: Yes

Configuration objects

$ oc -n openshift-monitoring edit cm cluster-monitoring-config

6.13. Cluster Network Operator

Purpose

The Cluster Network Operator installs and upgrades the networking components on an OpenShift Container Platform cluster.

6.14. Cluster Samples Operator

The Cluster Samples Operator is an optional cluster capability that can be disabled by cluster administrators during installation. For more information about optional cluster capabilities, see "Cluster capabilities" in Installing.

Purpose

The Cluster Samples Operator manages the sample image streams and templates stored in the openshift namespace.

On initial start up, the Operator creates the default samples configuration resource to initiate the creation of the image streams and templates. The configuration object is a cluster-scoped object with the key cluster and type configs.samples.

The image streams are the Red Hat Enterprise Linux CoreOS (RHCOS)-based OpenShift Container Platform image streams pointing to images on registry.redhat.io. Similarly, the templates are those categorized as OpenShift Container Platform templates.

The Cluster Samples Operator deployment is contained within the openshift-cluster-samples-operator namespace. On start up, the install pull secret is used by the image stream import logic in the OpenShift image registry and API server to authenticate with registry.redhat.io. An administrator can create any additional secrets in the openshift namespace if they change the registry used for the sample image streams. If created, those secrets contain the content of a config.json for docker needed to facilitate image import.

The image for the Cluster Samples Operator contains image stream and template definitions for the associated OpenShift Container Platform release. After the Cluster Samples Operator creates a sample, it adds an annotation that denotes the OpenShift Container Platform version that it is compatible with. The Operator uses this annotation to ensure that each sample matches the compatible release version. Samples outside of its inventory are ignored, as are skipped samples.

Modifications to any samples that are managed by the Operator are allowed as long as the version annotation is not modified or deleted. However, because the version annotation changes on an upgrade, those modifications can be replaced when the sample is updated to the newer version. The Jenkins images are part of the image payload from the installation and are tagged into the image streams directly.

The samples resource includes a finalizer, which cleans up the following upon its deletion:

- Operator-managed image streams

- Operator-managed templates

- Operator-generated configuration resources

- Cluster status resources

Upon deletion of the samples resource, the Cluster Samples Operator recreates the resource using the default configuration.

Project

Additional resources

6.15. Cluster Storage Operator

The Cluster Storage Operator is an optional cluster capability that can be disabled by cluster administrators during installation. For more information about optional cluster capabilities, see "Cluster capabilities" in Installing.

Purpose

The Cluster Storage Operator sets OpenShift Container Platform cluster-wide storage defaults. It ensures that a default storage class exists for OpenShift Container Platform clusters. It also installs Container Storage Interface (CSI) drivers, which enable your cluster to use various storage backends.

Project

Configuration

No configuration is required.

Notes

- The storage class that the Operator creates can be made non-default by editing its annotation, but this storage class cannot be deleted as long as the Operator runs.

Additional resources

6.16. Cluster Version Operator

Purpose

Cluster Operators manage specific areas of cluster functionality. The Cluster Version Operator (CVO) manages the lifecycle of cluster Operators, many of which are installed in OpenShift Container Platform by default.

The CVO also checks with the OpenShift Update Service to see the valid updates and update paths based on current component versions and information in the graph by collecting the status of both the cluster version and its cluster Operators. This status includes the condition type, which informs you of the health and current state of the OpenShift Container Platform cluster.

For more information regarding cluster version condition types, see "Understanding cluster version condition types".

Project

Additional resources

6.17. Console Operator

The Console Operator is an optional cluster capability that can be disabled by cluster administrators during installation. If you disable the Console Operator at installation, your cluster is still supported and upgradable. For more information about optional cluster capabilities, see "Cluster capabilities" in Installing.

Purpose

The Console Operator installs and maintains the OpenShift Container Platform web console on a cluster. The Console Operator is installed by default and automatically maintains a console.

Project

Additional resources

6.18. Control Plane Machine Set Operator

This Operator is available for Amazon Web Services (AWS), Google Cloud Platform (GCP), Microsoft Azure, and VMware vSphere.

Purpose

The Control Plane Machine Set Operator automates the management of control plane machine resources within an OpenShift Container Platform cluster.

Project

cluster-control-plane-machine-set-operator

CRDs

controlplanemachineset.machine.openshift.io:

- Scope: Namespaced

- CR: ControlPlaneMachineSet

- Validation: Yes

Additional resources

6.19. DNS Operator

Purpose

The DNS Operator deploys and manages CoreDNS to provide a name resolution service to pods that enables DNS-based Kubernetes Service discovery in OpenShift Container Platform.

The Operator creates a working default deployment based on the cluster’s configuration.

- The default cluster domain is cluster.local.

- Configuration of the CoreDNS Corefile or Kubernetes plugin is not yet supported.

The DNS Operator manages CoreDNS as a Kubernetes daemon set exposed as a service with a static IP. CoreDNS runs on all nodes in the cluster.

Project

6.20. etcd cluster Operator

Purpose

The etcd cluster Operator automates etcd cluster scaling, enables etcd monitoring and metrics, and simplifies disaster recovery procedures.

Project

CRDs

etcds.operator.openshift.io:

- Scope: Cluster

- CR: etcd

- Validation: Yes

Configuration objects

$ oc edit etcd cluster

6.21. Ingress Operator

Purpose

The Ingress Operator configures and manages the OpenShift Container Platform router.

Project

CRDs

clusteringresses.ingress.openshift.io:

- Scope: Namespaced

- CR: clusteringresses

- Validation: No

Configuration objects

Cluster config

- Type Name: clusteringresses.ingress.openshift.io

- Instance Name: default

- View Command:

$ oc get clusteringresses.ingress.openshift.io -n openshift-ingress-operator default -o yaml

- Type Name:

Notes

The Ingress Operator sets up the router in the openshift-ingress project and creates the deployment for the router:

$ oc get deployment -n openshift-ingress

The Ingress Operator uses the clusterNetwork[].cidr from the network/cluster status to determine which mode (IPv4, IPv6, or dual stack) the managed Ingress Controller (router) should operate in. For example, if clusterNetwork contains only an IPv6 CIDR, then the Ingress Controller operates in IPv6-only mode.

In the following example, Ingress Controllers managed by the Ingress Operator run in IPv4-only mode because only one cluster network exists and the network is an IPv4 CIDR:

$ oc get network/cluster -o jsonpath='{.status.clusterNetwork[*]}'

Example output

map[cidr:10.128.0.0/14 hostPrefix:23]
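The mode selection described above can be illustrated with a short Python sketch. This is not the Ingress Operator's implementation; the function name ingress_ip_mode and the list-of-dictionaries input shape are assumptions made for illustration, and the sketch only classifies the CIDR entries by IP version.

```python
import ipaddress


def ingress_ip_mode(cluster_network):
    """Classify clusterNetwork entries as 'IPv4', 'IPv6', or 'dual-stack'.

    cluster_network is a list of dicts that each carry a 'cidr' key,
    mirroring the shape of the status shown in the example output.
    """
    # Collect the IP version (4 or 6) of every CIDR in the list.
    versions = {
        ipaddress.ip_network(entry["cidr"]).version for entry in cluster_network
    }
    if versions == {4}:
        return "IPv4"
    if versions == {6}:
        return "IPv6"
    if versions == {4, 6}:
        return "dual-stack"
    raise ValueError("clusterNetwork contains no CIDR entries")


# The single IPv4 cluster network from the example output.
example = [{"cidr": "10.128.0.0/14", "hostPrefix": 23}]
print(ingress_ip_mode(example))  # prints "IPv4"
```

A list that contains both an IPv4 and an IPv6 CIDR would be classified as dual-stack by this sketch.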

6.22. Insights Operator

The Insights Operator is an optional cluster capability that can be disabled by cluster administrators during installation. For more information about optional cluster capabilities, see "Cluster capabilities" in Installing.

Purpose

The Insights Operator gathers OpenShift Container Platform configuration data and sends it to Red Hat. The data is used to produce proactive insights recommendations about potential issues that a cluster might be exposed to. These insights are communicated to cluster administrators through Insights Advisor on console.redhat.com.

Project

Configuration

No configuration is required.

Notes

Insights Operator complements OpenShift Container Platform Telemetry.

Additional resources

- Insights capability

- See About remote health monitoring for details about Insights Operator and Telemetry.

6.23. Kubernetes API Server Operator

Purpose

The Kubernetes API Server Operator manages and updates the Kubernetes API server deployed on top of OpenShift Container Platform. The Operator is based on the OpenShift Container Platform library-go framework and it is installed using the Cluster Version Operator (CVO).

Project

openshift-kube-apiserver-operator

CRDs

kubeapiservers.operator.openshift.io:

- Scope: Cluster

- CR: kubeapiserver

- Validation: Yes

Configuration objects

$ oc edit kubeapiserver

6.24. Kubernetes Controller Manager Operator

Purpose

The Kubernetes Controller Manager Operator manages and updates the Kubernetes Controller Manager deployed on top of OpenShift Container Platform. The Operator is based on the OpenShift Container Platform library-go framework and it is installed via the Cluster Version Operator (CVO).

It contains the following components:

- Operator

- Bootstrap manifest renderer

- Installer based on static pods

- Configuration observer

By default, the Operator exposes Prometheus metrics through the metrics service.

Project

6.25. Kubernetes Scheduler Operator

Purpose

The Kubernetes Scheduler Operator manages and updates the Kubernetes Scheduler deployed on top of OpenShift Container Platform. The Operator is based on the OpenShift Container Platform library-go framework and it is installed with the Cluster Version Operator (CVO).

The Kubernetes Scheduler Operator contains the following components:

- Operator

- Bootstrap manifest renderer

- Installer based on static pods

- Configuration observer

By default, the Operator exposes Prometheus metrics through the metrics service.

Project

cluster-kube-scheduler-operator

Configuration

The configuration for the Kubernetes Scheduler is the result of merging:

- a default configuration.

- an observed configuration from the spec schedulers.config.openshift.io.

All of these are sparse configurations, that is, unvalidated JSON snippets that are merged to form a valid configuration at the end.
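As a rough illustration of how sparse JSON snippets can be combined, the following Python sketch performs a recursive merge in which later snippets override earlier ones. The merge rules that the Operator actually applies are not described here, so the override behavior, the function name merge_sparse, and every key in the sample snippets are assumptions.

```python
import json


def merge_sparse(base, overlay):
    """Recursively merge overlay into base; overlay values win on conflicts."""
    merged = dict(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are objects: merge them key by key.
            merged[key] = merge_sparse(merged[key], value)
        else:
            # Scalars, lists, and type mismatches are replaced outright.
            merged[key] = value
    return merged


# Hypothetical snippets; the keys are illustrative, not real scheduler fields.
default_config = json.loads('{"sectionA": {"enabled": true}, "level": "normal"}')
observed_config = json.loads('{"sectionA": {"timeout": "30s"}}')

final_config = merge_sparse(default_config, observed_config)
print(json.dumps(final_config, sort_keys=True))
```

Neither snippet has to be complete on its own; only the merged result needs to be a valid configuration.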

6.26. Kubernetes Storage Version Migrator Operator

Purpose

The Kubernetes Storage Version Migrator Operator detects changes of the default storage version, creates migration requests for resource types when the storage version changes, and processes migration requests.

Project

6.27. Machine API Operator

Purpose

The Machine API Operator manages the lifecycle of specific-purpose custom resource definitions (CRDs), controllers, and RBAC objects that extend the Kubernetes API. This declares the desired state of machines in a cluster.

Project

CRDs

- MachineSet

- Machine

- MachineHealthCheck

6.28. Machine Config Operator

Purpose

The Machine Config Operator manages and applies configuration and updates of the base operating system and container runtime, including everything between the kernel and kubelet.

There are four components:

- machine-config-server: Provides Ignition configuration to new machines joining the cluster.

- machine-config-controller: Coordinates the upgrade of machines to the desired configurations defined by a MachineConfig object. Options are provided to control the upgrade for sets of machines individually.

- machine-config-daemon: Applies new machine configuration during update. Validates and verifies the state of the machine to the requested machine configuration.

- machine-config: Provides a complete source of machine configuration at installation, first start up, and updates for a machine.

Currently, there is no supported way to block or restrict the machine config server endpoint. The machine config server must be exposed to the network so that newly-provisioned machines, which have no existing configuration or state, are able to fetch their configuration. In this model, the root of trust is the certificate signing requests (CSR) endpoint, which is where the kubelet sends its certificate signing request for approval to join the cluster. Because of this, machine configs should not be used to distribute sensitive information, such as secrets and certificates.

To ensure that the machine config server endpoints, ports 22623 and 22624, are secured in bare metal scenarios, customers must configure proper network policies.

Additional resources

Project

6.29. Marketplace Operator

The Marketplace Operator is an optional cluster capability that can be disabled by cluster administrators if it is not needed. For more information about optional cluster capabilities, see "Cluster capabilities" in Installing.

Purpose

The Marketplace Operator simplifies the process for bringing off-cluster Operators to your cluster by using a set of default Operator Lifecycle Manager (OLM) catalogs on the cluster. When the Marketplace Operator is installed, it creates the openshift-marketplace namespace. OLM ensures catalog sources installed in the openshift-marketplace namespace are available for all namespaces on the cluster.

Project

Additional resources

6.30. Node Tuning Operator

Purpose

The Node Tuning Operator helps you manage node-level tuning by orchestrating the TuneD daemon and achieves low latency performance by using the Performance Profile controller. The majority of high-performance applications require some level of kernel tuning. The Node Tuning Operator provides a unified management interface to users of node-level sysctls and more flexibility to add custom tuning specified by user needs.

The Operator manages the containerized TuneD daemon for OpenShift Container Platform as a Kubernetes daemon set. It ensures the custom tuning specification is passed to all containerized TuneD daemons running in the cluster in the format that the daemons understand. The daemons run on all nodes in the cluster, one per node.

Node-level settings applied by the containerized TuneD daemon are rolled back on an event that triggers a profile change or when the containerized TuneD daemon is terminated gracefully by receiving and handling a termination signal.

The Node Tuning Operator uses the Performance Profile controller to implement automatic tuning to achieve low latency performance for OpenShift Container Platform applications.

The cluster administrator configures a performance profile to define node-level settings such as the following:

- Updating the kernel to kernel-rt.

- Choosing CPUs for housekeeping.

- Choosing CPUs for running workloads.

Currently, disabling CPU load balancing is not supported by cgroup v2. As a result, you might not get the desired behavior from performance profiles if you have cgroup v2 enabled. Enabling cgroup v2 is not recommended if you are using performance profiles.

The Node Tuning Operator is part of a standard OpenShift Container Platform installation in version 4.1 and later.

In earlier versions of OpenShift Container Platform, the Performance Addon Operator was used to implement automatic tuning to achieve low latency performance for OpenShift applications. In OpenShift Container Platform 4.11 and later, this functionality is part of the Node Tuning Operator.

Project

Additional resources

6.31. OpenShift API Server Operator

Purpose

The OpenShift API Server Operator installs and maintains the openshift-apiserver on a cluster.

Project

CRDs

openshiftapiservers.operator.openshift.io:

- Scope: Cluster

- CR: openshiftapiserver

- Validation: Yes

6.32. OpenShift Controller Manager Operator

Purpose

The OpenShift Controller Manager Operator installs and maintains the OpenShiftControllerManager custom resource in a cluster. You can view the status of the Operator with:

$ oc get clusteroperator openshift-controller-manager -o yaml

The custom resource definition (CRD) openshiftcontrollermanagers.operator.openshift.io can be viewed in a cluster with:

$ oc get crd openshiftcontrollermanagers.operator.openshift.io -o yaml

Project

6.33. Operator Lifecycle Manager Operators

Purpose

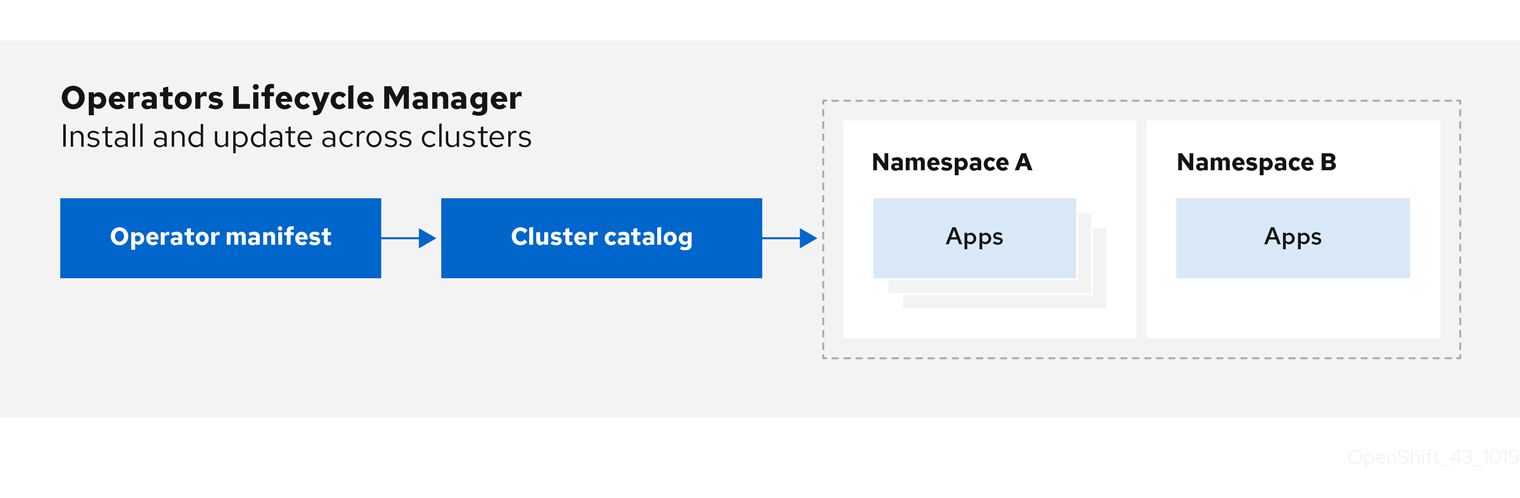

Operator Lifecycle Manager (OLM) helps users install, update, and manage the lifecycle of Kubernetes native applications (Operators) and their associated services running across their OpenShift Container Platform clusters. It is part of the Operator Framework, an open source toolkit designed to manage Operators in an effective, automated, and scalable way.

Figure 6.1. Operator Lifecycle Manager workflow

OLM runs by default in OpenShift Container Platform 4.13, which aids cluster administrators in installing, upgrading, and granting access to Operators running on their cluster. The OpenShift Container Platform web console provides management screens for cluster administrators to install Operators, as well as grant specific projects access to use the catalog of Operators available on the cluster.

For developers, a self-service experience allows provisioning and configuring instances of databases, monitoring, and big data services without having to be subject matter experts, because the Operator has that knowledge baked into it.

CRDs

Operator Lifecycle Manager (OLM) is composed of two Operators: the OLM Operator and the Catalog Operator.

Each of these Operators is responsible for managing the custom resource definitions (CRDs) that are the basis for the OLM framework:

| Resource | Short name | Owner | Description |
|---|---|---|---|
| ClusterServiceVersion (CSV) | csv | OLM | Application metadata: name, version, icon, required resources, installation, and so on. |
| InstallPlan | ip | Catalog | Calculated list of resources to be created to automatically install or upgrade a CSV. |
| CatalogSource | catsrc | Catalog | A repository of CSVs, CRDs, and packages that define an application. |
| Subscription | sub | Catalog | Used to keep CSVs up to date by tracking a channel in a package. |
| OperatorGroup | og | OLM | Configures all Operators deployed in the same namespace as the OperatorGroup object to watch for their CR in a list of namespaces or cluster-wide. |

Each of these Operators is also responsible for creating the following resources:

| Resource | Owner |
|---|---|
| | OLM |
| | |
| | |
| | |
| | Catalog |
| | |

OLM Operator

The OLM Operator is responsible for deploying applications defined by CSV resources after the required resources specified in the CSV are present in the cluster.

The OLM Operator is not concerned with the creation of the required resources; you can choose to manually create these resources using the CLI or using the Catalog Operator. This separation of concern allows users incremental buy-in in terms of how much of the OLM framework they choose to leverage for their application.

The OLM Operator uses the following workflow:

- Watch for cluster service versions (CSVs) in a namespace and check that requirements are met.

- If requirements are met, run the install strategy for the CSV.

Note: A CSV must be an active member of an Operator group for the install strategy to run.

Catalog Operator

The Catalog Operator is responsible for resolving and installing cluster service versions (CSVs) and the required resources they specify. It is also responsible for watching catalog sources for updates to packages in channels and upgrading them, automatically if desired, to the latest available versions.

To track a package in a channel, you can create a Subscription object configuring the desired package, channel, and the CatalogSource object you want to use for pulling updates. When updates are found, an appropriate InstallPlan object is written into the namespace on behalf of the user.

The Catalog Operator uses the following workflow:

- Connect to each catalog source in the cluster.

- Watch for unresolved install plans created by a user, and if found:

  - Find the CSV matching the name requested and add the CSV as a resolved resource.

  - For each managed or required CRD, add the CRD as a resolved resource.

  - For each required CRD, find the CSV that manages it.

- Watch for resolved install plans and create all of the discovered resources for it, if approved by a user or automatically.

- Watch for catalog sources and subscriptions and create install plans based on them.
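The resolution steps for an unresolved install plan can be sketched in Python as follows. This is a simplified model, not the Catalog Operator's code: the in-memory catalog dictionary, the owned and required keys, the function name resolve_install_plan, and the choice to also resolve the CSV that manages each required CRD are all assumptions made for illustration.

```python
def resolve_install_plan(requested_csv, catalog):
    """Return (resolved CSV names, resolved CRD names) for a requested CSV.

    catalog maps a CSV name to {"owned": [...], "required": [...]}, where
    both lists hold CRD names. This structure is hypothetical.
    """
    resolved_csvs, resolved_crds = [], []
    queue = [requested_csv]
    while queue:
        name = queue.pop(0)
        if name in resolved_csvs:
            continue
        csv = catalog[name]
        # Add the matching CSV as a resolved resource.
        resolved_csvs.append(name)
        # Add each managed or required CRD as a resolved resource.
        for crd in csv["owned"] + csv["required"]:
            if crd not in resolved_crds:
                resolved_crds.append(crd)
        # For each required CRD, find the CSV that manages it.
        for crd in csv["required"]:
            owner = next(
                (n for n, c in catalog.items() if crd in c["owned"]), None
            )
            if owner is None:
                raise LookupError(f"no CSV manages required CRD {crd}")
            queue.append(owner)
    return resolved_csvs, resolved_crds


# Hypothetical catalog: an application CSV that requires a database CRD.
catalog = {
    "app.v1": {"owned": ["apps.example.com"], "required": ["dbs.example.com"]},
    "db.v1": {"owned": ["dbs.example.com"], "required": []},
}
print(resolve_install_plan("app.v1", catalog))
```

In this model, creating the resolved resources is a separate step that runs only after the install plan is approved, as the workflow describes.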

Catalog Registry

The Catalog Registry stores CSVs and CRDs for creation in a cluster and stores metadata about packages and channels.

A package manifest is an entry in the Catalog Registry that associates a package identity with sets of CSVs. Within a package, channels point to a particular CSV. Because CSVs explicitly reference the CSV that they replace, a package manifest provides the Catalog Operator with all of the information that is required to update a CSV to the latest version in a channel, stepping through each intermediate version.
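To illustrate how the replacement references yield an update path, the following Python sketch walks backward from the CSV at the head of a channel to the installed CSV and returns the intermediate versions in order. The dictionary that maps each CSV name to the name of the CSV it replaces, the function name update_path, and the sample CSV names are hypothetical; the sketch does not reflect how the Catalog Registry stores its data.

```python
def update_path(replaces, current, channel_head):
    """List the CSVs to step through from current up to channel_head.

    replaces maps each CSV name to the CSV name it replaces, or None
    for the first CSV in the chain.
    """
    path = []
    seen = set()
    node = channel_head
    # Walk backward through the replacement references.
    while node is not None and node != current:
        if node in seen:
            raise ValueError("cycle in replacement references")
        seen.add(node)
        path.append(node)
        node = replaces.get(node)
    if node != current:
        raise ValueError(f"{current} is not reachable from {channel_head}")
    # Reverse so that the oldest intermediate version comes first.
    path.reverse()
    return path


# Hypothetical package with three CSVs in one channel.
replaces = {
    "example-operator.v1.0.0": None,
    "example-operator.v1.1.0": "example-operator.v1.0.0",
    "example-operator.v1.2.0": "example-operator.v1.1.0",
}
print(update_path(replaces, "example-operator.v1.0.0", "example-operator.v1.2.0"))
```

An empty list means that the installed CSV is already the one that the channel points to.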

Additional resources

- For more information, see the sections on understanding Operator Lifecycle Manager (OLM).

6.34. OpenShift Service CA Operator

Purpose

The OpenShift Service CA Operator mints and manages serving certificates for Kubernetes services.

Project

6.35. vSphere Problem Detector Operator

Purpose

The vSphere Problem Detector Operator checks clusters that are deployed on vSphere for common installation and misconfiguration issues that are related to storage.

The vSphere Problem Detector Operator is only started by the Cluster Storage Operator when the Cluster Storage Operator detects that the cluster is deployed on vSphere.

Configuration

No configuration is required.

Notes

- The Operator supports OpenShift Container Platform installations on vSphere.

- The Operator uses the vsphere-cloud-credentials to communicate with vSphere.

- The Operator performs checks that are related to storage.

Additional resources

- For more details, see Using the vSphere Problem Detector Operator.