Hosted control planes

Using hosted control planes with OpenShift Container Platform

Abstract

Chapter 1. Hosted control planes overview

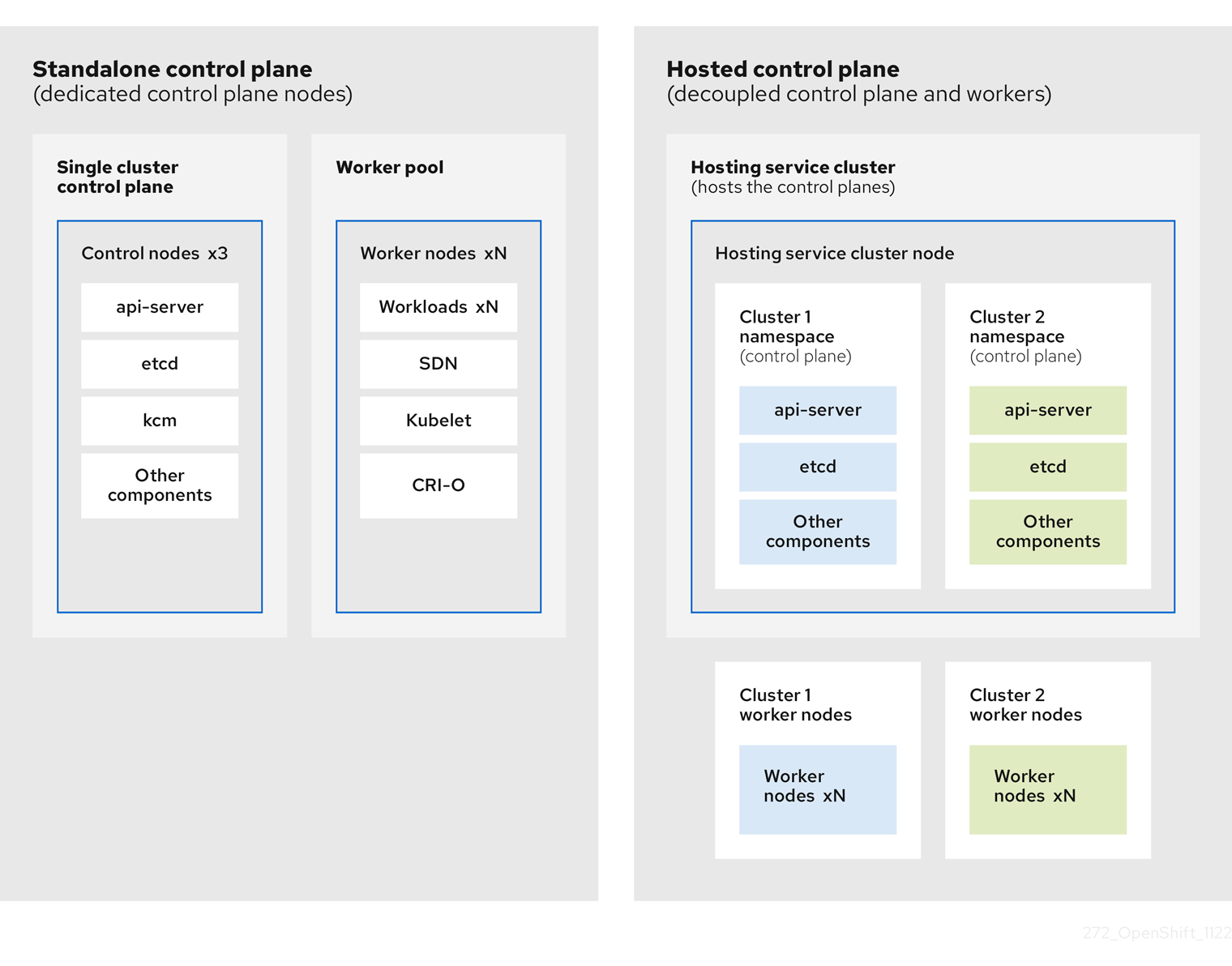

You can deploy OpenShift Container Platform clusters by using two different control plane configurations: standalone or hosted control planes. The standalone configuration uses dedicated virtual machines or physical machines to host the control plane. With hosted control planes for OpenShift Container Platform, you create control planes as pods on a hosting cluster without the need for dedicated virtual or physical machines for each control plane.

1.1. Glossary of common concepts and personas for hosted control planes

When you use hosted control planes for OpenShift Container Platform, it is important to understand the key concepts and the personas that are involved.

1.1.1. Concepts

- hosted cluster

- An OpenShift Container Platform cluster with its control plane and API endpoint hosted on a management cluster. The hosted cluster includes the control plane and its corresponding data plane.

- hosted cluster infrastructure

- Network, compute, and storage resources that exist in the tenant or end-user cloud account.

- hosted control plane

- An OpenShift Container Platform control plane that runs on the management cluster, which is exposed by the API endpoint of a hosted cluster. The components of a control plane include etcd, the Kubernetes API server, the Kubernetes controller manager, and a VPN.

- hosting cluster

- See management cluster.

- managed cluster

- A cluster that the hub cluster manages. This term is specific to the cluster lifecycle that the multicluster engine for Kubernetes Operator manages in Red Hat Advanced Cluster Management. A managed cluster is not the same thing as a management cluster. For more information, see Managed cluster.

- management cluster

- An OpenShift Container Platform cluster where the HyperShift Operator is deployed and where the control planes for hosted clusters are hosted. The management cluster is synonymous with the hosting cluster.

- management cluster infrastructure

- Network, compute, and storage resources of the management cluster.

- node pool

- A resource that contains the compute nodes. The control plane contains node pools. The compute nodes run applications and workloads.

1.1.2. Personas

- cluster instance administrator

- Users who assume this role are the equivalent of administrators in standalone OpenShift Container Platform. This user has the cluster-admin role in the provisioned cluster, but might not have power over when or how the cluster is updated or configured. This user might have read-only access to see some configuration projected into the cluster.

- cluster instance user

- Users who assume this role are the equivalent of developers in standalone OpenShift Container Platform. This user does not have a view into OperatorHub or machines.

- cluster service consumer

- Users who assume this role can request control planes and worker nodes, drive updates, or modify externalized configurations. Typically, this user does not manage or access cloud credentials or infrastructure encryption keys. The cluster service consumer persona can request hosted clusters and interact with node pools. Users who assume this role have RBAC to create, read, update, or delete hosted clusters and node pools within a logical boundary.

- cluster service provider

- Users who assume this role typically have the cluster-admin role on the management cluster and have RBAC to monitor and own the availability of the HyperShift Operator as well as the control planes for the tenant’s hosted clusters. The cluster service provider persona is responsible for several activities, including the following examples:

- Owning service-level objects for control plane availability, uptime, and stability

- Configuring the cloud account for the management cluster to host control planes

- Configuring the user-provisioned infrastructure, which includes the host awareness of available compute resources

1.2. Introduction to hosted control planes

You can use hosted control planes for Red Hat OpenShift Container Platform to reduce management costs, optimize cluster deployment time, and separate management and workload concerns so that you can focus on your applications.

Hosted control planes is available by using the multicluster engine for Kubernetes Operator version 2.0 or later on the following platforms:

- Bare metal by using the Agent provider

- OpenShift Virtualization, as a Generally Available feature in connected environments and a Technology Preview feature in disconnected environments

- Amazon Web Services (AWS), as a Technology Preview feature

- IBM Z, as a Technology Preview feature

- IBM Power, as a Technology Preview feature

1.2.1. Architecture of hosted control planes

OpenShift Container Platform is often deployed in a coupled, or standalone, model, where a cluster consists of a control plane and a data plane. The control plane includes an API endpoint, a storage endpoint, a workload scheduler, and an actuator that ensures state. The data plane includes compute, storage, and networking where workloads and applications run.

The standalone control plane is hosted by a dedicated group of nodes, which can be physical or virtual, with a minimum number to ensure quorum. The network stack is shared. Administrator access to a cluster offers visibility into the cluster’s control plane, machine management APIs, and other components that contribute to the state of a cluster.

Although the standalone model works well, some situations require an architecture where the control plane and data plane are decoupled. In those cases, the data plane is on a separate network domain with a dedicated physical hosting environment. The control plane is hosted by using high-level primitives such as deployments and stateful sets that are native to Kubernetes. The control plane is treated as any other workload.

1.2.2. Benefits of hosted control planes

With hosted control planes for OpenShift Container Platform, you can pave the way for a true hybrid-cloud approach and enjoy several other benefits.

- The security boundaries between management and workloads are stronger because the control plane is decoupled and hosted on a dedicated hosting service cluster. As a result, you are less likely to leak credentials for clusters to other users. Because infrastructure secret account management is also decoupled, cluster infrastructure administrators cannot accidentally delete control plane infrastructure.

- With hosted control planes, you can run many control planes on fewer nodes. As a result, clusters are more affordable.

- Because the control planes consist of pods that are launched on OpenShift Container Platform, control planes start quickly. The same principles apply to control planes and workloads, such as monitoring, logging, and auto-scaling.

- From an infrastructure perspective, you can push registries, HAProxy, cluster monitoring, storage nodes, and other infrastructure components to the tenant’s cloud provider account, isolating usage to the tenant.

- From an operational perspective, multicluster management is more centralized, which results in fewer external factors that affect the cluster status and consistency. Site reliability engineers have a central place to debug issues and navigate to the cluster data plane, which can lead to shorter Time to Resolution (TTR) and greater productivity.

1.3. Differences between hosted control planes and OpenShift Container Platform

Hosted control planes is a form factor of OpenShift Container Platform. Hosted clusters and standalone OpenShift Container Platform clusters are configured and managed differently. See the following tables to understand the differences between OpenShift Container Platform and hosted control planes:

1.3.1. Cluster creation and lifecycle

| OpenShift Container Platform | Hosted control planes |

|---|---|

| You install a standalone OpenShift Container Platform cluster by using the | You install a hosted cluster by using the |

1.3.2. Cluster configuration

| OpenShift Container Platform | Hosted control planes |

|---|---|

| You configure cluster-scoped resources such as authentication, API server, and proxy by using the | You configure resources that impact the control plane in the |

1.3.3. etcd encryption

| OpenShift Container Platform | Hosted control planes |

|---|---|

| You configure etcd encryption by using the | You configure etcd encryption by using the |

1.3.4. Operators and control plane

| OpenShift Container Platform | Hosted control planes |

|---|---|

| A standalone OpenShift Container Platform cluster contains separate Operators for each control plane component. | A hosted cluster contains a single Operator named Control Plane Operator that runs in the hosted control plane namespace on the management cluster. |

| etcd uses storage that is mounted on the control plane nodes. The etcd cluster Operator manages etcd. | etcd uses a persistent volume claim for storage and is managed by the Control Plane Operator. |

| The Ingress Operator, network related Operators, and Operator Lifecycle Manager (OLM) run on the cluster. | The Ingress Operator, network related Operators, and Operator Lifecycle Manager (OLM) run in the hosted control plane namespace on the management cluster. |

| The OAuth server runs inside the cluster and is exposed through a route in the cluster. | The OAuth server runs inside the control plane and is exposed through a route, node port, or load balancer on the management cluster. |

1.3.5. Updates

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The Cluster Version Operator (CVO) orchestrates the update process and monitors the | The hosted control planes update results in a change to the |

| After you update an OpenShift Container Platform cluster, both the control plane and compute machines are updated. | After you update the hosted cluster, only the control plane is updated. You perform node pool updates separately. |

1.3.6. Machine configuration and management

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The | The |

| A set of control plane machines is available. | A set of control plane machines does not exist. |

| You enable a machine health check by using the | You enable a machine health check through the |

| You enable autoscaling by using the | You enable autoscaling through the |

| Machines and machine sets are exposed in the cluster. | Machines, machine sets, and machine deployments from upstream Cluster CAPI Operator are used to manage machines but are not exposed to the user. |

| All machine sets are upgraded automatically when you update the cluster. | You update your node pools independently from the hosted cluster updates. |

| Only an in-place upgrade is supported in the cluster. | Both replace and in-place upgrades are supported in the hosted cluster. |

| The Machine Config Operator manages configurations for machines. | The Machine Config Operator does not exist in hosted control planes. |

| You configure machine Ignition by using the | You configure the |

| The Machine Config Daemon (MCD) manages configuration changes and updates on each of the nodes. | For an in-place upgrade, the node pool controller creates a run-once pod that updates a machine based on your configuration. |

| You can modify the machine configuration resources such as the SR-IOV Operator. | You cannot modify the machine configuration resources. |

1.3.7. Networking

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The Kube API server communicates with nodes directly, because the Kube API server and nodes exist in the same Virtual Private Cloud (VPC). | The Kube API server communicates with nodes through Konnectivity. The Kube API server and nodes exist in different Virtual Private Clouds (VPCs). |

| Nodes communicate with the Kube API server through the internal load balancer. | Nodes communicate with the Kube API server through an external load balancer or a node port. |

1.3.8. Web console

| OpenShift Container Platform | Hosted control planes |

|---|---|

| The web console shows the status of a control plane. | The web console does not show the status of a control plane. |

| You can update your cluster by using the web console. | You cannot update the hosted cluster by using the web console. |

| The web console displays the infrastructure resources such as machines. | The web console does not display the infrastructure resources. |

| You can configure machines through the | You cannot configure machines by using the web console. |

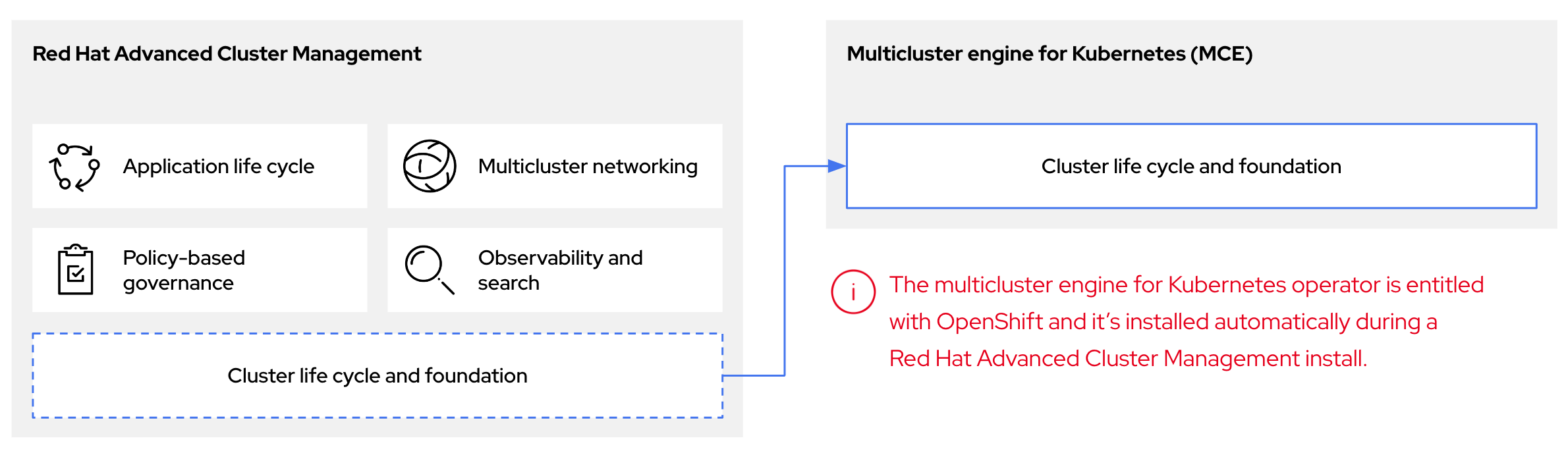

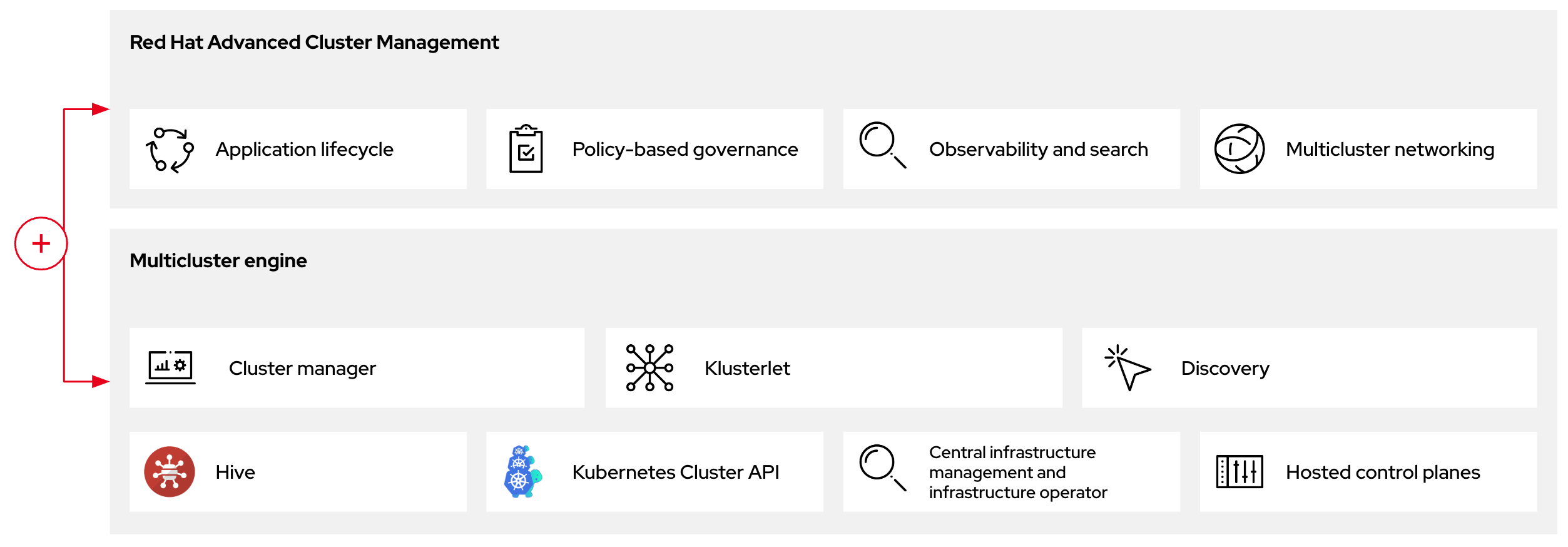

1.4. Relationship between hosted control planes, multicluster engine Operator, and RHACM

You can configure hosted control planes by using the multicluster engine for Kubernetes Operator. The multicluster engine is an integral part of Red Hat Advanced Cluster Management (RHACM) and is enabled by default with RHACM. The multicluster engine Operator cluster lifecycle defines the process of creating, importing, managing, and destroying Kubernetes clusters across various infrastructure cloud providers, private clouds, and on-premises data centers.

The multicluster engine Operator is the cluster lifecycle Operator that provides cluster management capabilities for OpenShift Container Platform and RHACM hub clusters. The multicluster engine Operator enhances cluster fleet management and supports OpenShift Container Platform cluster lifecycle management across clouds and data centers.

Figure 1.1. Cluster life cycle and foundation

You can use the multicluster engine Operator with OpenShift Container Platform as a standalone cluster manager or as part of a RHACM hub cluster.

A management cluster is also known as the hosting cluster.

You can deploy OpenShift Container Platform clusters by using two different control plane configurations: standalone or hosted control planes. The standalone configuration uses dedicated virtual machines or physical machines to host the control plane. With hosted control planes for OpenShift Container Platform, you create control planes as pods on a management cluster without the need for dedicated virtual or physical machines for each control plane.

Figure 1.2. RHACM and the multicluster engine Operator introduction diagram

1.5. Versioning for hosted control planes

With each major, minor, or patch version release of OpenShift Container Platform, two components of hosted control planes are released:

- The HyperShift Operator

- The hcp command-line interface (CLI)

The HyperShift Operator manages the lifecycle of hosted clusters that are represented by the HostedCluster API resources. The HyperShift Operator is released with each OpenShift Container Platform release. The HyperShift Operator creates the supported-versions config map in the hypershift namespace. The config map contains the supported hosted cluster versions.

You can host different versions of control planes on the same management cluster.

Example supported-versions config map object
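To illustrate the caption above, a supported-versions config map might resemble the following sketch. The name and namespace come from the surrounding text; the label key, the data key format, and the listed versions are assumptions for illustration only. The object that the HyperShift Operator actually creates can differ, so inspect the real one on your management cluster, for example with `oc get configmap supported-versions -n hypershift -o yaml`.

```yaml
# Illustrative sketch only; values are assumptions, not output from a real cluster.
apiVersion: v1
kind: ConfigMap
metadata:
  name: supported-versions      # name stated in the text above
  namespace: hypershift         # namespace stated in the text above
  labels:
    hypershift.openshift.io/supported-versions: "true"   # assumed label
data:
  # Assumed format: a JSON string that lists the supported hosted cluster versions
  supported-versions: '{"versions":["4.14","4.13"]}'
```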

You can use the hcp CLI to create hosted clusters.

You can use the hypershift.openshift.io API resources, such as HostedCluster and NodePool, to create and manage OpenShift Container Platform clusters at scale. A HostedCluster resource contains the control plane and common data plane configuration. When you create a HostedCluster resource, you have a fully functional control plane with no attached nodes. A NodePool resource is a scalable set of worker nodes that is attached to a HostedCluster resource.
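The relationship between the two resources can be sketched with a minimal NodePool object. This is a hedged illustration, not a complete manifest: the API version, the platform type, the replica count, and every value in angle brackets are assumptions or placeholders, and a real NodePool usually needs additional platform-specific fields.

```yaml
# Minimal NodePool sketch; placeholders and example values are assumptions.
apiVersion: hypershift.openshift.io/v1beta1   # assumed API version
kind: NodePool
metadata:
  name: <nodepool_name>
  namespace: <hosted_cluster_namespace>
spec:
  clusterName: <hosted_cluster_name>   # attaches the node pool to a HostedCluster resource
  replicas: 2                          # number of worker nodes to scale to (example value)
  management:
    upgradeType: Replace               # example value; in-place upgrades are also described in this document
  platform:
    type: Agent                        # example platform (bare metal by using the Agent provider)
  release:
    image: <release_image>             # placeholder for an OpenShift Container Platform release image
```

Scaling the set of worker nodes then amounts to changing the replicas value, while the HostedCluster resource that clusterName points to continues to hold the control plane configuration.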

The API version policy generally aligns with the policy for Kubernetes API versioning.

Chapter 2. Getting started with hosted control planes

To get started with hosted control planes for OpenShift Container Platform, you first configure your hosted cluster on the provider that you want to use. Then, you complete a few management tasks.

You can view the procedures by selecting from one of the following providers:

2.1. Bare metal

- Hosted control plane sizing guidance

- Installing the hosted control plane command line interface

- Distributing hosted cluster workloads

- Bare metal firewall and port requirements

- Bare metal infrastructure requirements: Review the infrastructure requirements to create a hosted cluster on bare metal.

Configuring hosted control plane clusters on bare metal:

- Configure DNS

- Create a hosted cluster and verify cluster creation

- Scale the NodePool object for the hosted cluster

- Handle ingress traffic for the hosted cluster

- Enable node auto-scaling for the hosted cluster

- Configuring hosted control planes in a disconnected environment

- To destroy a hosted cluster on bare metal, follow the instructions in Destroying a hosted cluster on bare metal.

- If you want to disable the hosted control plane feature, see Disabling the hosted control plane feature.

2.2. OpenShift Virtualization

- Hosted control plane sizing guidance

- Installing the hosted control plane command line interface

- Distributing hosted cluster workloads

- Managing hosted control plane clusters on OpenShift Virtualization: Create OpenShift Container Platform clusters with worker nodes that are hosted by KubeVirt virtual machines.

- Configuring hosted control planes in a disconnected environment

- To destroy a hosted cluster on OpenShift Virtualization, follow the instructions in Destroying a hosted cluster on OpenShift Virtualization.

- If you want to disable the hosted control plane feature, see Disabling the hosted control plane feature.

2.3. Amazon Web Services (AWS)

- AWS infrastructure requirements: Review the infrastructure requirements to create a hosted cluster on AWS.

- Configuring hosted control plane clusters on AWS: The tasks to configure hosted control plane clusters on AWS include creating the AWS S3 OIDC secret, creating a routable public zone, enabling external DNS, enabling AWS PrivateLink, and deploying a hosted cluster.

- Deploying the SR-IOV Operator for hosted control planes: After you configure and deploy your hosting service cluster, you can create a subscription to the Single Root I/O Virtualization (SR-IOV) Operator on a hosted cluster. The SR-IOV pod runs on worker machines rather than the control plane.

- To destroy a hosted cluster on AWS, follow the instructions in Destroying a hosted cluster on AWS.

- If you want to disable the hosted control plane feature, see Disabling the hosted control plane feature.

2.4. IBM Z

Hosted control planes on the IBM Z platform is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

2.5. IBM Power

Hosted control planes on the IBM Power platform is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

2.6. Non bare metal agent machines

Using hosted control planes clusters with non bare metal agent machines is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

- Installing the hosted control plane command line interface

- Configuring hosted control plane clusters using non bare metal agent machines (Technology Preview)

- To destroy a hosted cluster on non bare metal agent machines, follow the instructions in Destroying a hosted cluster on non bare metal agent machines.

- If you want to disable the hosted control plane feature, see Disabling the hosted control plane feature.

Chapter 3. Authentication and authorization for hosted control planes

The OpenShift Container Platform control plane includes a built-in OAuth server. You can obtain OAuth access tokens to authenticate to the OpenShift Container Platform API. After you create your hosted cluster, you can configure OAuth by specifying an identity provider.

3.1. Configuring the OAuth server for a hosted cluster by using the CLI

You can configure the internal OAuth server for your hosted cluster by using an OpenID Connect identity provider (oidc).

You can configure OAuth for the following supported identity providers:

- oidc

- htpasswd

- keystone

- ldap

- basic-authentication

- request-header

- github

- gitlab

- google

Adding any identity provider in the OAuth configuration removes the default kubeadmin user provider.

When you configure identity providers, you must configure at least one NodePool replica in your hosted cluster in advance. Traffic for DNS resolution is sent through the worker nodes. You do not need to configure the NodePool replicas in advance for the htpasswd and request-header identity providers.

Prerequisites

- You created your hosted cluster.

Procedure

- Edit the HostedCluster custom resource (CR) on the hosting cluster by running the following command:

$ oc edit hostedcluster <hosted_cluster_name> -n <hosted_cluster_namespace>

- Add the OAuth configuration in the HostedCluster CR. The numbered items that follow describe the fields of that configuration:

- 1

- Specifies your hosted cluster name.

- 2

- Specifies your hosted cluster namespace.

- 3

- This provider name is prefixed to the value of the identity claim to form an identity name. The provider name is also used to build the redirect URL.

- 4

- Defines a list of attributes to use as the email address.

- 5

- Defines a list of attributes to use as a display name.

- 6

- Defines a list of attributes to use as a preferred user name.

- 7

- Defines the ID of a client registered with the OpenID provider. You must allow the client to redirect to the https://oauth-openshift.apps.<cluster_name>.<cluster_domain>/oauth2callback/<idp_provider_name> URL.

- 8

- Defines a secret of a client registered with the OpenID provider.

- 9

- The Issuer Identifier described in the OpenID spec. You must use https without query or fragment component.

- 10

- Defines a mapping method that controls how mappings are established between identities of this provider and User objects.

- Save the file to apply the changes.
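The OAuth configuration that the numbered items in this procedure describe might look like the following sketch for an OpenID Connect identity provider. The numbers in the comments map to those items. The API version, the mapping method value, the issuer URL, and all values in angle brackets are assumptions or placeholders, so treat this as a starting point rather than a verified manifest.

```yaml
# Sketch of a HostedCluster OAuth configuration; placeholders are assumptions.
apiVersion: hypershift.openshift.io/v1beta1   # assumed API version
kind: HostedCluster
metadata:
  name: <hosted_cluster_name>             # (1) hosted cluster name
  namespace: <hosted_cluster_namespace>   # (2) hosted cluster namespace
spec:
  configuration:
    oauth:
      identityProviders:
      - name: <idp_provider_name>         # (3) prefixed to the identity claim; used in the redirect URL
        type: OpenID
        mappingMethod: claim              # (10) example value
        openID:
          claims:
            email:                        # (4) attributes to use as the email address
            - <email_claim>
            name:                         # (5) attributes to use as a display name
            - <display_name_claim>
            preferredUsername:            # (6) attributes to use as a preferred user name
            - <preferred_username_claim>
          clientID: <client_id>           # (7) client registered with the OpenID provider
          clientSecret:
            name: <client_secret_name>    # (8) name of the secret that holds the client secret
          issuer: https://example.com/identity   # (9) https URL without query or fragment
```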

3.2. Configuring the OAuth server for a hosted cluster by using the web console

You can configure the internal OAuth server for your hosted cluster by using the OpenShift Container Platform web console.

You can configure OAuth for the following supported identity providers:

- oidc

- htpasswd

- keystone

- ldap

- basic-authentication

- request-header

- github

- gitlab

- google

Adding any identity provider in the OAuth configuration removes the default kubeadmin user provider.

When you configure identity providers, you must configure at least one NodePool replica in your hosted cluster in advance. Traffic for DNS resolution is sent through the worker nodes. You do not need to configure the NodePool replicas in advance for the htpasswd and request-header identity providers.

Prerequisites

- You logged in as a user with cluster-admin privileges.

- You created your hosted cluster.

Procedure

- Navigate to Home → API Explorer.

- Use the Filter by kind box to search for your HostedCluster resource.

- Click the HostedCluster resource that you want to edit.

- Click the Instances tab.

- Click the Options menu next to your hosted cluster name entry and click Edit HostedCluster.

- Add the OAuth configuration in the YAML file. The numbered items that follow describe the fields of that configuration:

- 1

- This provider name is prefixed to the value of the identity claim to form an identity name. The provider name is also used to build the redirect URL.

- 2

- Defines a list of attributes to use as the email address.

- 3

- Defines a list of attributes to use as a display name.

- 4

- Defines a list of attributes to use as a preferred user name.

- 5

- Defines the ID of a client registered with the OpenID provider. You must allow the client to redirect to the https://oauth-openshift.apps.<cluster_name>.<cluster_domain>/oauth2callback/<idp_provider_name> URL.

- 6

- Defines a secret of a client registered with the OpenID provider.

- 7

- The Issuer Identifier described in the OpenID spec. You must use https without query or fragment component.

- 8

- Defines a mapping method that controls how mappings are established between identities of this provider and User objects.

- Click Save.

3.3. Assigning components IAM roles by using the CCO in a hosted cluster on AWS

You can assign components IAM roles that provide short-term, limited-privilege security credentials by using the Cloud Credential Operator (CCO) in hosted clusters on Amazon Web Services (AWS). By default, the CCO runs in a hosted control plane.

The CCO supports a manual mode only for hosted clusters on AWS. By default, hosted clusters are configured in a manual mode. The management cluster might use modes other than manual.

3.4. Verifying the CCO installation in a hosted cluster on AWS

You can verify that the Cloud Credential Operator (CCO) is running correctly in your hosted control plane.

Prerequisites

- You configured the hosted cluster on Amazon Web Services (AWS).

Procedure

- Verify that the CCO is configured in a manual mode in your hosted cluster by running the following command:

$ oc get cloudcredentials <hosted_cluster_name> -n <hosted_cluster_namespace> -o=jsonpath={.spec.credentialsMode}

Expected output

Manual

- Verify that the value for the serviceAccountIssuer resource is not empty by running the following command:

$ oc get authentication cluster --kubeconfig <hosted_cluster_name>.kubeconfig -o jsonpath --template '{.spec.serviceAccountIssuer }'

Example output

https://aos-hypershift-ci-oidc-29999.s3.us-east-2.amazonaws.com/hypershift-ci-29999

3.5. Enabling Operators to support CCO-based workflows with AWS STS

As an Operator author designing your project to run on Operator Lifecycle Manager (OLM), you can enable your Operator to authenticate against AWS on STS-enabled OpenShift Container Platform clusters by customizing your project to support the Cloud Credential Operator (CCO).

With this method, the Operator is responsible for creating the CredentialsRequest object, which means the Operator requires RBAC permission to create these objects. Then, the Operator must be able to read the resulting Secret object.

By default, pods related to the Operator deployment mount a serviceAccountToken volume so that the service account token can be referenced in the resulting Secret object.

Prerequisites

- OpenShift Container Platform 4.14 or later

- Cluster in STS mode

- OLM-based Operator project

Procedure

- Update your Operator project’s ClusterServiceVersion (CSV) object:

  - Ensure your Operator has RBAC permission to create CredentialsRequests objects:

    Example 3.1. Example clusterPermissions list

  - Add the following annotation to claim support for this method of CCO-based workflow with AWS STS:

        # ...
        metadata:
          annotations:
            features.operators.openshift.io/token-auth-aws: "true"
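The RBAC permission described in the first substep of this procedure could be expressed in the CSV roughly as follows. This is a sketch under assumptions: the service account name is a placeholder, and the list of verbs is broader than the create permission that the text strictly requires, so trim it to what your Operator needs.

```yaml
# Fragment of a ClusterServiceVersion; the service account name is a placeholder.
# ...
spec:
  install:
    spec:
      clusterPermissions:
      - serviceAccountName: <operator_service_account>
        rules:
        - apiGroups:
          - cloudcredential.openshift.io
          resources:
          - credentialsrequests
          verbs:
          - create
          - delete
          - get
          - list
          - patch
          - update
          - watch
```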

Update your Operator project code:

Get the role ARN from the environment variable set on the pod by the

Subscriptionobject. For example:// Get ENV var roleARN := os.Getenv("ROLEARN") setupLog.Info("getting role ARN", "role ARN = ", roleARN) webIdentityTokenPath := "/var/run/secrets/openshift/serviceaccount/token"// Get ENV var roleARN := os.Getenv("ROLEARN") setupLog.Info("getting role ARN", "role ARN = ", roleARN) webIdentityTokenPath := "/var/run/secrets/openshift/serviceaccount/token"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Ensure you have a

CredentialsRequestobject ready to be patched and applied. For example:Example 3.2. Example

CredentialsRequestobject creationCopy to Clipboard Copied! Toggle word wrap Toggle overflow Alternatively, if you are starting from a

CredentialsRequest object in YAML form (for example, as part of your Operator project code), you can handle it differently:

Example 3.3. Example CredentialsRequest object creation in YAML form

Note

Adding a CredentialsRequest object to the Operator bundle is not currently supported.

Add the role ARN and web identity token path to the credentials request and apply it during Operator initialization:

Example 3.4. Example applying CredentialsRequest object during Operator initialization

Ensure your Operator can wait for a Secret object to show up from the CCO, as shown in the following example, which is called along with the other items you are reconciling in your Operator:

Example 3.5. Example wait for Secret object

- 1

- The timeout value is based on an estimate of how fast the CCO might detect an added CredentialsRequest object and generate a Secret object. You might consider lowering the time or creating custom feedback for cluster administrators that could be wondering why the Operator is not yet accessing the cloud resources.

Set up the AWS configuration by reading the secret created by the CCO from the credentials request and creating the AWS config file containing the data from that secret:

Example 3.6. Example AWS configuration creation

Important

The secret is assumed to exist, but your Operator code should wait and retry when using this secret to give time to the CCO to create the secret.

Additionally, the wait period should eventually time out and warn users that the OpenShift Container Platform cluster version, and therefore the CCO, might be an earlier version that does not support the CredentialsRequest object workflow with STS detection. In such cases, instruct users that they must add a secret by using another method.

Configure the AWS SDK session, for example:

Example 3.7. Example AWS SDK session configuration
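The wait-and-retry guidance for the Secret object earlier in this procedure can be sketched generically. The following Python sketch is illustrative only: the wait_for_secret function, its parameters, and the fetch callable are hypothetical names and are not part of the CCO or of any Operator SDK. A real Operator would typically perform this check as part of its reconcile loop.

```python
import time
from typing import Callable, Optional


def wait_for_secret(
    fetch: Callable[[], Optional[dict]],
    timeout_s: float = 60.0,
    interval_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> dict:
    """Poll fetch() until it returns secret data or timeout_s elapses.

    fetch is a hypothetical callable that returns the secret data as a
    dict, or None while the secret has not been created yet.
    """
    deadline = clock() + timeout_s
    while True:
        secret = fetch()
        if secret is not None:
            return secret
        if clock() >= deadline:
            # Fail with an actionable message instead of waiting forever.
            raise TimeoutError(
                "Secret not found before the timeout. The CCO might be an "
                "earlier version that does not support this workflow; "
                "add the secret by using another method."
            )
        sleep(interval_s)
```

The sleep and clock parameters exist only so that the loop can be exercised without real delays; the default timeout of 60 seconds is an arbitrary placeholder that you would tune as the callout describes.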

Chapter 4. Handling a machine configuration for hosted control planes

In a standalone OpenShift Container Platform cluster, a machine config pool manages a set of nodes. You can handle a machine configuration by using the MachineConfigPool custom resource (CR).

You can reference any machineconfiguration.openshift.io resources in the nodepool.spec.config field of the NodePool CR.

In hosted control planes, the MachineConfigPool CR does not exist. A node pool contains a set of compute nodes. You can handle a machine configuration by using node pools.
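Because config maps are referenced from the nodepool.spec.config field, the reference can be expressed as a small JSON document. The following Python sketch builds a JSON merge patch for that field. It assumes that each entry in the list is an object with a name key, and the helper name nodepool_config_patch is hypothetical.

```python
import json
from typing import Iterable


def nodepool_config_patch(configmap_names: Iterable[str]) -> str:
    """Build a JSON merge patch that sets spec.config on a NodePool.

    Assumption: each spec.config entry is an object of the form
    {"name": "<configmap_name>"}.
    """
    names = list(configmap_names)
    if not names:
        raise ValueError("at least one config map name is required")
    # A merge patch replaces the whole list, so include every config map
    # that the node pool should continue to reference.
    patch = {"spec": {"config": [{"name": name} for name in names]}}
    return json.dumps(patch)
```

You could pass the resulting string to the oc patch command with the --type=merge option instead of editing the node pool interactively.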

4.1. Configuring node pools for hosted control planes

On hosted control planes, you can configure node pools by creating a MachineConfig object inside of a config map in the management cluster.

Procedure

To create a MachineConfig object inside of a config map in the management cluster, enter the following information:

- 1

- Sets the path on the node where the MachineConfig object is stored.

After you add the object to the config map, you can apply the config map to the node pool as follows:

$ oc edit nodepool <nodepool_name> --namespace <hosted_cluster_namespace>

- 1

- Replace <configmap_name> with the name of your config map.

4.2. Referencing the kubelet configuration in node pools

To reference your kubelet configuration in node pools, you add the kubelet configuration in a config map and then apply the config map in the NodePool resource.

Procedure

Add the kubelet configuration inside of a config map in the management cluster by entering the following information:

Example ConfigMap object with the kubelet configuration

Apply the config map to the node pool by entering the following command:

$ oc edit nodepool <nodepool_name> --namespace clusters

- 1

- Replace <nodepool_name> with the name of your node pool.

Example NodePool resource configuration

- 1

- Replace <configmap_name> with the name of your config map.

4.3. Configuring node tuning in a hosted cluster

To set node-level tuning on the nodes in your hosted cluster, you can use the Node Tuning Operator. In hosted control planes, you can configure node tuning by creating config maps that contain Tuned objects and referencing those config maps in your node pools.

Procedure

Create a config map that contains a valid tuned manifest, and reference the manifest in a node pool. In the following example, a Tuned manifest defines a profile that sets vm.dirty_ratio to 55 on nodes that contain the tuned-1-node-label node label with any value. Save the following ConfigMap manifest in a file named tuned-1.yaml:

Note

If you do not add any labels to an entry in the spec.recommend section of the Tuned spec, node-pool-based matching is assumed, so the highest priority profile in the spec.recommend section is applied to nodes in the pool. Although you can achieve more fine-grained node-label-based matching by setting a label value in the Tuned .spec.recommend.match section, node labels will not persist during an upgrade unless you set the .spec.management.upgradeType value of the node pool to InPlace.

Create the ConfigMap object in the management cluster:

$ oc --kubeconfig="$MGMT_KUBECONFIG" create -f tuned-1.yaml

Reference the ConfigMap object in the spec.tuningConfig field of the node pool, either by editing a node pool or creating one. In this example, assume that you have only one NodePool, named nodepool-1, which contains 2 nodes.

Note

You can reference the same config map in multiple node pools. In hosted control planes, the Node Tuning Operator appends a hash of the node pool name and namespace to the name of the Tuned CRs to distinguish them. Outside of this case, do not create multiple TuneD profiles of the same name in different Tuned CRs for the same hosted cluster.

Verification

Now that you have created the ConfigMap object that contains a Tuned manifest and referenced it in a NodePool, the Node Tuning Operator syncs the Tuned objects into the hosted cluster. You can verify which Tuned objects are defined and which TuneD profiles are applied to each node.

List the Tuned objects in the hosted cluster:

$ oc --kubeconfig="$HC_KUBECONFIG" get tuned.tuned.openshift.io -n openshift-cluster-node-tuning-operator

Example output

NAME       AGE
default    7m36s
rendered   7m36s
tuned-1    65s

List the Profile objects in the hosted cluster:

$ oc --kubeconfig="$HC_KUBECONFIG" get profile.tuned.openshift.io -n openshift-cluster-node-tuning-operator

Example output

NAME                  TUNED             APPLIED   DEGRADED   AGE
nodepool-1-worker-1   tuned-1-profile   True      False      7m43s
nodepool-1-worker-2   tuned-1-profile   True      False      7m14s

Note

If no custom profiles are created, the openshift-node profile is applied by default.

To confirm that the tuning was applied correctly, start a debug shell on a node and check the sysctl values:

$ oc --kubeconfig="$HC_KUBECONFIG" debug node/nodepool-1-worker-1 -- chroot /host sysctl vm.dirty_ratio

Example output

vm.dirty_ratio = 55
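If you want to automate the final check, the sysctl output can be parsed rather than read by eye. The following Python sketch assumes that the command output has already been captured as text; the sysctl_matches helper name is hypothetical.

```python
def sysctl_matches(output: str, key: str, expected: int) -> bool:
    """Return True if the output contains "key = expected".

    Lines without an equals sign, such as informational messages from
    the debug pod, are skipped.
    """
    for line in output.splitlines():
        name, sep, value = line.partition("=")
        if not sep:
            continue
        if name.strip() == key:
            try:
                return int(value.strip()) == expected
            except ValueError:
                # The value is not an integer, so it cannot match.
                return False
    return False
```

For the example in this procedure, the check would be sysctl_matches(output, "vm.dirty_ratio", 55).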

4.4. Deploying the SR-IOV Operator for hosted control planes

Hosted control planes on the AWS platform is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

After you configure and deploy your hosting service cluster, you can create a subscription to the SR-IOV Operator on a hosted cluster. The SR-IOV pod runs on worker machines rather than the control plane.

Prerequisites

You must configure and deploy the hosted cluster on AWS. For more information, see Configuring the hosting cluster on AWS (Technology Preview).

Procedure

Create a namespace and an Operator group:

Create a subscription to the SR-IOV Operator:

Verification

To verify that the SR-IOV Operator is ready, run the following command and view the resulting output:

$ oc get csv -n openshift-sriov-network-operator

Example output

NAME                                         DISPLAY                   VERSION               REPLACES                                     PHASE
sriov-network-operator.4.16.0-202211021237   SR-IOV Network Operator   4.16.0-202211021237   sriov-network-operator.4.16.0-202210290517   Succeeded

To verify that the SR-IOV pods are deployed, run the following command:

$ oc get pods -n openshift-sriov-network-operator

4.5. Configuring the NTP server for hosted clusters

You can configure the Network Time Protocol (NTP) server for your hosted clusters by using Butane.

Procedure

Create a Butane config file, 99-worker-chrony.bu, that includes the contents of the chrony.conf file. For more information about Butane, see "Creating machine configs with Butane".

Example 99-worker-chrony.bu configuration

- 1

- Specify an octal value mode for the mode field in the machine config file. After creating the file and applying the changes, the mode field is converted to a decimal value.

- 2

- Specify any valid, reachable time source, such as the one provided by your Dynamic Host Configuration Protocol (DHCP) server.

Note

For machine-to-machine communication, NTP uses User Datagram Protocol (UDP) port 123. If you configured an external NTP time server, you must open UDP port 123.

Use Butane to generate a MachineConfig object file, 99-worker-chrony.yaml, that contains a configuration that Butane sends to the nodes. Run the following command:

$ butane 99-worker-chrony.bu -o 99-worker-chrony.yaml

Example 99-worker-chrony.yaml configuration

Add the contents of the 99-worker-chrony.yaml file inside of a config map in the management cluster:

Example config map

- 1

- Replace <namespace> with the name of your namespace where you created the node pool, such as clusters.

Apply the config map to your node pool by running the following command:

$ oc edit nodepool <nodepool_name> --namespace <hosted_cluster_namespace>

Example NodePool configuration

- 1

- Replace <configmap_name> with the name of your config map.

Add the list of your NTP servers in the infra-env.yaml file, which defines the InfraEnv custom resource (CR):

Example infra-env.yaml file

- 1

- Replace <ntp_server> with the name of your NTP server. For more details about creating a host inventory and the InfraEnv CR, see "Creating a host inventory".

Apply the InfraEnv CR by running the following command:

$ oc apply -f infra-env.yaml

Verification

Check the following fields to know the status of your host inventory:

- conditions: The standard Kubernetes conditions indicating if the image was created successfully.

- isoDownloadURL: The URL to download the Discovery Image.

- createdTime: The time at which the image was last created. If you modify the InfraEnv CR, ensure that you have updated the timestamp before downloading a new image.

Verify that your host inventory is created by running the following command:

$ oc describe infraenv <infraenv_resource_name> -n <infraenv_namespace>

Note

If you modify the InfraEnv CR, confirm that the InfraEnv CR has created a new Discovery Image by looking at the createdTime field. If you already booted hosts, boot them again with the latest Discovery Image.
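The Butane callout in this procedure notes that an octal mode value is converted to a decimal value in the generated machine config. A minimal Python sketch of that conversion follows. The value 0644 is only an illustrative file mode, and the function names are hypothetical.

```python
def octal_mode_to_decimal(mode: str) -> int:
    """Convert an octal file mode string such as "0644" to decimal."""
    return int(mode, 8)


def decimal_mode_to_octal(mode: int) -> str:
    """Convert a decimal file mode back to a zero-padded octal string."""
    return format(mode, "04o")
```

For example, an octal mode of 0644 corresponds to the decimal value 420, which is why a generated file can show a number that looks different from the one you entered.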

Chapter 5. Using feature gates in a hosted cluster

You can use feature gates in a hosted cluster to enable features that are not part of the default set of features. You can enable the TechPreviewNoUpgrade feature set by using feature gates in your hosted cluster.

5.1. Enabling feature sets by using feature gates

You can enable the TechPreviewNoUpgrade feature set in a hosted cluster by editing the HostedCluster custom resource (CR) with the OpenShift CLI.

Prerequisites

- You installed the OpenShift CLI (oc).

Procedure

Open the HostedCluster CR for editing on the hosting cluster by running the following command:

$ oc edit hostedcluster <hosted_cluster_name> -n <hosted_cluster_namespace>

Define the feature set by entering a value in the featureSet field. For example:

Warning

Enabling the TechPreviewNoUpgrade feature set on your cluster cannot be undone and prevents minor version updates. This feature set allows you to enable these Technology Preview features on test clusters, where you can fully test them. Do not enable this feature set on production clusters.

Save the file to apply the changes.

Verification

Verify that the TechPreviewNoUpgrade feature gate is enabled in your hosted cluster by running the following command:

$ oc get featuregate cluster -o yaml
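To check the result in a script, you can inspect the featureSet value in the returned object. The following Python sketch makes two assumptions that are not stated in this procedure: that you request JSON output (the -o json option) so that the standard library can parse it, and that the value is stored in the spec.featureSet field of the FeatureGate object. The function name is hypothetical.

```python
import json


def feature_set_enabled(
    featuregate_json: str, expected: str = "TechPreviewNoUpgrade"
) -> bool:
    """Return True if the FeatureGate object has the expected feature set.

    Assumption: the feature set is stored under spec.featureSet.
    """
    doc = json.loads(featuregate_json)
    return doc.get("spec", {}).get("featureSet") == expected
```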

Chapter 6. Configuring certificates for hosted control planes

With hosted control planes, the steps to configure certificates differ from those of standalone OpenShift Container Platform.

6.1. Configuring a custom API server certificate in a hosted cluster

To configure a custom certificate for the API server, specify the certificate details in the spec.configuration.apiServer section of your HostedCluster configuration.

You can configure a custom certificate during either day-1 or day-2 operations. However, because the service publishing strategy is immutable after you set it during hosted cluster creation, you must know what the hostname is for the Kubernetes API server that you plan to configure.

Prerequisites

- You created a Kubernetes secret that contains your custom certificate in the management cluster. The secret contains the following keys:

- tls.crt: The certificate

- tls.key: The private key

- If your HostedCluster configuration includes a service publishing strategy that uses a load balancer, ensure that the Subject Alternative Names (SANs) of the certificate do not conflict with the internal API endpoint (api-int). The internal API endpoint is automatically created and managed by your platform. If you use the same hostname in both the custom certificate and the internal API endpoint, routing conflicts can occur. The only exception to this rule is when you use AWS as the provider with either Private or PublicAndPrivate configurations. In those cases, the SAN conflict is managed by the platform.

- The certificate must be valid for the external API endpoint.

- The validity period of the certificate aligns with your cluster’s expected life cycle.

Procedure

Create a secret with your custom certificate by entering the following command:

$ oc create secret tls sample-hosted-kas-custom-cert \
  --cert=path/to/cert.crt \
  --key=path/to/key.key \
  -n <hosted_cluster_namespace>

Update your HostedCluster configuration with the custom certificate details, as shown in the following example:

Apply the changes to your HostedCluster configuration by entering the following command:

$ oc apply -f <hosted_cluster_config>.yaml

Verification

- Check the API server pods to ensure that the new certificate is mounted.

- Test the connection to the API server by using the custom domain name.

- Verify the certificate details in your browser or by using tools such as openssl.
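The SAN prerequisite in this section can be checked before you create the secret. The following Python sketch is a rough aid, not a definitive rule: it assumes that you already extracted the SAN DNS names from the certificate, it treats a wildcard SAN that covers the internal API hostname as a conflict (an assumption), and the function name and hostnames are hypothetical.

```python
from typing import Iterable, List


def conflicting_sans(sans: Iterable[str], internal_api_hostname: str) -> List[str]:
    """Return the SANs that match the internal API (api-int) hostname."""
    host = internal_api_hostname.lower().rstrip(".")
    # A wildcard covers exactly one leftmost label, so compare parents.
    _, _, host_parent = host.partition(".")
    conflicts = []
    for san in sans:
        name = san.lower().rstrip(".")
        if name == host:
            conflicts.append(san)
        elif name.startswith("*.") and name[2:] == host_parent:
            conflicts.append(san)
    return conflicts
```

An empty result suggests that the certificate does not overlap with the internal endpoint. As the prerequisite notes, AWS with Private or PublicAndPrivate configurations is an exception.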

6.2. Configuring the Kubernetes API server for a hosted cluster

If you want to customize the Kubernetes API server for your hosted cluster, complete the following steps.

Prerequisites

- You have a running hosted cluster.

- You have access to modify the HostedCluster resource.

- You have a custom DNS domain to use for the Kubernetes API server.

- The custom DNS domain must be properly configured and resolvable.

- The DNS domain must have valid TLS certificates configured.

- Network access to the domain must be properly configured in your environment.

- The custom DNS domain must be unique across your hosted clusters.

- You have a configured custom certificate. For more information, see "Configuring a custom API server certificate in a hosted cluster".

Procedure

In your provider platform, configure the DNS record so that the kubeAPIServerDNSName URL points to the IP address on which the Kubernetes API server is exposed. The DNS record must be properly configured and resolvable from your cluster.

Example command to verify that the DNS record resolves

$ dig +short kubeAPIServerDNSName

In your HostedCluster specification, modify the kubeAPIServerDNSName field, as shown in the following example:

- 1

- The list of DNS names that the certificate is valid for. The names listed in this field cannot be the same as the names specified in the spec.servicePublishingStrategy.*hostname field.

- 2

- The name of the secret that contains the custom certificate.

- 3

- This field accepts a URI that will be used as the API server endpoint.

Apply the configuration by entering the following command:

$ oc apply -f <hosted_cluster_spec>.yaml

After the configuration is applied, the HyperShift Operator generates a new kubeconfig secret that points to your custom DNS domain.

Retrieve the kubeconfig secret by using the CLI or the console.

To retrieve the secret by using the CLI, enter the following command:

$ kubectl get secret <hosted_cluster_name>-custom-admin-kubeconfig \
  -n <cluster_namespace> \
  -o jsonpath='{.data.kubeconfig}' | base64 -d

To retrieve the secret by using the console, go to your hosted cluster and click Download Kubeconfig.

Note

You cannot consume the new kubeconfig secret by using the show login command option in the console.
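The CLI retrieval step reads the kubeconfig key of the secret and decodes it from base64. If you fetch the whole secret as JSON instead, the same decoding can be sketched in Python as follows. The function name is hypothetical, and the sketch assumes that the secret JSON has the usual data map with a kubeconfig key, as the jsonpath expression in the command implies.

```python
import base64
import json


def kubeconfig_from_secret(secret_json: str) -> str:
    """Decode the kubeconfig stored in a secret that was fetched as JSON."""
    secret = json.loads(secret_json)
    # Secret values under "data" are base64-encoded.
    encoded = secret["data"]["kubeconfig"]
    return base64.b64decode(encoded).decode("utf-8")
```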

6.3. Troubleshooting accessing a hosted cluster by using a custom DNS

If you encounter issues when you access a hosted cluster by using a custom DNS, complete the following steps.

Procedure

- Verify that the DNS record is properly configured and resolved.

- Check that the TLS certificates for the custom domain are valid, verifying that the SAN is correct for your domain, by entering the following command:

$ oc get secret \
  -n clusters <serving_certificate_name> \
  -o jsonpath='{.data.tls\.crt}' | base64 -d | openssl x509 -text -noout

- Ensure that network connectivity to the custom domain is working.

- In the HostedCluster resource, verify that the status shows the correct custom kubeconfig information, as shown in the following example:

Example HostedCluster status

status:
  customKubeconfig:
    name: sample-hosted-custom-admin-kubeconfig

- Check the kube-apiserver logs in the HostedControlPlane namespace by entering the following command:

$ oc logs -n <hosted_control_plane_namespace> \
  -l app=kube-apiserver -f -c kube-apiserver

Chapter 7. Updating hosted control planes

Updates for hosted control planes involve updating the hosted cluster and the node pools. For a cluster to remain fully operational during an update process, you must meet the requirements of the Kubernetes version skew policy while completing the control plane and node updates.

7.1. Requirements to upgrade hosted control planes

The multicluster engine for Kubernetes Operator can manage one or more OpenShift Container Platform clusters. After you create a hosted cluster on OpenShift Container Platform, you must import your hosted cluster in the multicluster engine Operator as a managed cluster. Then, you can use the OpenShift Container Platform cluster as a management cluster.

Consider the following requirements before you start updating hosted control planes:

- You must use the bare metal platform for an OpenShift Container Platform cluster when using OpenShift Virtualization as a provider.

- You must use bare metal or OpenShift Virtualization as the cloud platform for the hosted cluster. You can find the platform type of your hosted cluster in the spec.platform.type specification of the HostedCluster custom resource (CR).

You must upgrade the OpenShift Container Platform cluster, multicluster engine Operator, hosted cluster, and node pools by completing the following tasks:

- Upgrade an OpenShift Container Platform cluster to the latest version. For more information, see "Updating a cluster using the web console" or "Updating a cluster using the CLI".

- Upgrade the multicluster engine Operator to the latest version. For more information, see "Updating installed Operators".

- Upgrade the hosted cluster and node pools from the previous OpenShift Container Platform version to the latest version. For more information, see "Updating a control plane in a hosted cluster" and "Updating node pools in a hosted cluster".

7.2. Setting channels in a hosted cluster

You can see available updates in the HostedCluster.Status field of the HostedCluster custom resource (CR).

The available updates are not fetched from the Cluster Version Operator (CVO) of a hosted cluster. The list of the available updates can be different from the available updates from the following fields of the HostedCluster custom resource (CR):

- status.version.availableUpdates

- status.version.conditionalUpdates

The initial HostedCluster CR does not have any information in the status.version.availableUpdates and status.version.conditionalUpdates fields. After you set the spec.channel field to the stable OpenShift Container Platform release version, the HyperShift Operator reconciles the HostedCluster CR and updates the status.version field with the available and conditional updates.
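The channel name follows from the release version: for a release image tagged 4.16.4, the matching channel is stable-4.16. The following Python sketch derives the channel from a release image reference. The function name is hypothetical, and the sketch assumes that the image is referenced by a tag that starts with the version, not by a digest.

```python
import re


def channel_for_release(image: str, prefix: str = "stable") -> str:
    """Derive a channel such as "stable-4.16" from a release image tag."""
    # The tag is the text after the last colon, for example "4.16.4-multi".
    tag = image.rsplit(":", 1)[-1]
    match = re.match(r"(\d+)\.(\d+)\.", tag)
    if match is None:
        raise ValueError(f"cannot find a 4.y.z version in tag {tag!r}")
    return f"{prefix}-{match.group(1)}.{match.group(2)}"
```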

See the following example of the HostedCluster CR that contains the channel configuration:

- 1

- Replace <4.y> with the OpenShift Container Platform release version you specified in spec.release. For example, if you set the spec.release to ocp-release:4.16.4-multi, you must set spec.channel to stable-4.16.

After you configure the channel in the HostedCluster CR, to view the output of the status.version.availableUpdates and status.version.conditionalUpdates fields, run the following command:

$ oc get -n <hosted_cluster_namespace> hostedcluster <hosted_cluster_name> -o yaml

Example output

7.3. Updating the OpenShift Container Platform version in a hosted cluster

Hosted control planes enables the decoupling of updates between the control plane and the data plane.

As a cluster service provider or cluster administrator, you can manage the control plane and the data plane separately.

You can update a control plane by modifying the HostedCluster custom resource (CR) and a node by modifying its NodePool CR. Both the HostedCluster and NodePool CRs specify an OpenShift Container Platform release image in a .release field.
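Because both CRs carry the release image in a .release field, the same JSON merge patch shape can, in principle, update either one. The following Python sketch builds such a patch. The release_patch helper name is hypothetical; the optional nodeDrainTimeout field mirrors the node pool patch command shown later in this chapter and is assumed to apply to NodePool CRs only.

```python
import json
from typing import Optional


def release_patch(release_image: str, node_drain_timeout: Optional[str] = None) -> str:
    """Build a JSON merge patch that sets spec.release.image."""
    spec: dict = {"release": {"image": release_image}}
    if node_drain_timeout is not None:
        # Only meaningful when the patch targets a NodePool CR.
        spec["nodeDrainTimeout"] = node_drain_timeout
    return json.dumps({"spec": spec})
```

You could pass the result to the oc patch command with the --type=merge option, targeting either the hostedcluster or the nodepool resource.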

To keep your hosted cluster fully operational during an update process, the control plane and the node updates must follow the Kubernetes version skew policy.

7.3.1. The multicluster engine Operator hub management cluster

The multicluster engine for Kubernetes Operator requires a specific OpenShift Container Platform version for the management cluster to remain in a supported state. You can install the multicluster engine Operator from OperatorHub in the OpenShift Container Platform web console.

See the following support matrices for the multicluster engine Operator versions:

The multicluster engine Operator supports the following OpenShift Container Platform versions:

- The latest unreleased version

- The latest released version

- Two versions before the latest released version

You can also get the multicluster engine Operator version as a part of Red Hat Advanced Cluster Management (RHACM).

7.3.2. Supported OpenShift Container Platform versions in a hosted cluster

When deploying a hosted cluster, the OpenShift Container Platform version of the management cluster does not affect the OpenShift Container Platform version of a hosted cluster.

The HyperShift Operator creates the supported-versions ConfigMap in the hypershift namespace. The supported-versions ConfigMap describes the range of supported OpenShift Container Platform versions that you can deploy.

See the following example of the supported-versions ConfigMap:

To create a hosted cluster, you must use the OpenShift Container Platform version from the support version range. However, the multicluster engine Operator can manage only between n+1 and n-2 OpenShift Container Platform versions, where n defines the current minor version. You can check the multicluster engine Operator support matrix to ensure the hosted clusters managed by the multicluster engine Operator are within the supported OpenShift Container Platform range.
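The n+1 to n-2 rule can be expressed as a simple range check. The following Python sketch is one reading of that rule, under the assumption that n is the minor version that corresponds to your multicluster engine Operator release and that both versions share the same major version. The function names are hypothetical.

```python
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Return (major, minor) from a version such as "4.16" or "4.16.4"."""
    parts = version.split(".")
    return int(parts[0]), int(parts[1])


def within_managed_range(hosted_version: str, current_version: str) -> bool:
    """Check that the hosted minor version lies between n-2 and n+1."""
    h_major, h_minor = major_minor(hosted_version)
    n_major, n_minor = major_minor(current_version)
    return h_major == n_major and n_minor - 2 <= h_minor <= n_minor + 1
```

With n set to 4.16, the check accepts 4.14 through 4.17. Always confirm the result against the multicluster engine Operator support matrix.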

To deploy a higher version of a hosted cluster on OpenShift Container Platform, you must update the multicluster engine Operator to a new minor version release to deploy a new version of the HyperShift Operator. Upgrading the multicluster engine Operator to a new patch, or z-stream, release does not update the HyperShift Operator to the next version.

See the following example output of the hcp version command that shows the supported OpenShift Container Platform versions for OpenShift Container Platform 4.16 in the management cluster:

Client Version: openshift/hypershift: fe67b47fb60e483fe60e4755a02b3be393256343. Latest supported OCP: 4.17.0

Server Version: 05864f61f24a8517731664f8091cedcfc5f9b60d

Server Supports OCP Versions: 4.17, 4.16, 4.15, 4.14

7.4. Updates for the hosted cluster

The spec.release.image value dictates the version of the control plane. The HostedCluster object transmits the intended spec.release.image value to the HostedControlPlane.spec.releaseImage value and runs the appropriate Control Plane Operator version.

The hosted control plane manages the rollout of the new version of the control plane components along with any OpenShift Container Platform components through the new version of the Cluster Version Operator (CVO).

In hosted control planes, the NodeHealthCheck resource cannot detect the status of the CVO. A cluster administrator must manually pause the remediation triggered by NodeHealthCheck, before performing critical operations, such as updating the cluster, to prevent new remediation actions from interfering with cluster updates.

To pause the remediation, enter the array of strings, for example, pause-test-cluster, as a value of the pauseRequests field in the NodeHealthCheck resource. For more information, see About the Node Health Check Operator.

After the cluster update is complete, you can edit or delete the remediation. Navigate to the Compute → NodeHealthCheck page, click your node health check, and then click Actions, which shows a drop-down list.

7.5. Updates for node pools

With node pools, you can configure the software that is running in the nodes by exposing the spec.release and spec.config values. You can start a rolling node pool update in the following ways:

- Changing the spec.release or spec.config values.

- Changing any platform-specific field, such as the AWS instance type. The result is a set of new instances with the new type.

- Changing the cluster configuration, if the change propagates to the node.

Node pools support replace updates and in-place updates. The nodepool.spec.release value dictates the version of any particular node pool. A NodePool object completes a replace or an in-place rolling update according to the .spec.management.upgradeType value.

After you create a node pool, you cannot change the update type. If you want to change the update type, you must create a node pool and delete the other one.

7.5.1. Replace updates for node pools

A replace update creates instances in the new version while it removes old instances from the previous version. This update type is effective in cloud environments where this level of immutability is cost effective.

Replace updates do not preserve any manual changes because the node is entirely re-provisioned.

7.5.2. In place updates for node pools

An in-place update directly updates the operating systems of the instances. This type is suitable for environments where the infrastructure constraints are higher, such as bare metal.

In-place updates can preserve manual changes, but will report errors if you make manual changes to any file system or operating system configuration that the cluster directly manages, such as kubelet certificates.

7.6. Updating node pools in a hosted cluster

You can update your version of OpenShift Container Platform by updating the node pools in your hosted cluster. The node pool version must not surpass the hosted control plane version.

The .spec.release field in the NodePool custom resource (CR) shows the version of a node pool.
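Before you change the release image, you can confirm that the target node pool version does not surpass the control plane version. The following Python sketch checks only that one constraint; it does not encode the lower bound of the Kubernetes version skew policy. The function names are hypothetical.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse "4.16.4" or "4.16.4-multi" into a tuple of integers."""
    core = version.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def nodepool_version_allowed(nodepool_version: str, control_plane_version: str) -> bool:
    """Return True if the node pool version is not newer than the control plane."""
    # Tuples compare numerically, so 4.16.10 is correctly newer than 4.16.9.
    return parse_version(nodepool_version) <= parse_version(control_plane_version)
```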

Procedure

Change the spec.release.image value in the node pool by entering the following command:

$ oc patch nodepool <node_pool_name> -n <hosted_cluster_namespace> --type=merge -p '{"spec":{"nodeDrainTimeout":"60s","release":{"image":"<openshift_release_image>"}}}'

- 1

- Replace <node_pool_name> and <hosted_cluster_namespace> with your node pool name and hosted cluster namespace, respectively.

- 2

- The <openshift_release_image> variable specifies the new OpenShift Container Platform release image that you want to upgrade to, for example, quay.io/openshift-release-dev/ocp-release:4.y.z-x86_64. Replace <4.y.z> with the supported OpenShift Container Platform version.

Verification

To verify that the new version was rolled out, check the .status.conditions value in the node pool by running the following command:

$ oc get -n <hosted_cluster_namespace> nodepool <node_pool_name> -o yaml

In the output, <4.y.z> represents the supported OpenShift Container Platform version.
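To check the conditions programmatically, you can request the same resource with `-o json` and inspect `.status.conditions`. The following sketch assumes the usual Kubernetes condition shape (`type`, `status`, `reason`). The condition type names in the sample are assumptions for illustration, so confirm them against the output of your own cluster.

```python
from typing import Optional


def find_condition(resource: dict, condition_type: str) -> Optional[dict]:
    """Return the first status condition of the given type, or None."""
    conditions = resource.get("status", {}).get("conditions") or []
    for condition in conditions:
        if condition.get("type") == condition_type:
            return condition
    return None


def condition_is_true(resource: dict, condition_type: str) -> bool:
    """Return True only when the condition exists and its status is "True"."""
    condition = find_condition(resource, condition_type)
    return condition is not None and condition.get("status") == "True"


# Hypothetical, hand-written sample; real output contains more fields.
sample_node_pool = {
    "status": {
        "conditions": [
            {"type": "ValidReleaseImage", "status": "True", "reason": "AsExpected"},
            {"type": "UpdatingVersion", "status": "False"},
        ]
    }
}
```

In practice, the dictionary would come from `json.loads()` applied to the command output.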

7.7. Updating a control plane in a hosted cluster

On hosted control planes, you can upgrade your version of OpenShift Container Platform by updating the hosted cluster. The .spec.release field in the HostedCluster custom resource (CR) shows the version of the control plane. The HostedCluster resource propagates its .spec.release value to the HostedControlPlane.spec.release field and runs the appropriate Control Plane Operator version.

The HostedControlPlane resource orchestrates the rollout of the new version of the control plane components along with the OpenShift Container Platform component in the data plane through the new version of the Cluster Version Operator (CVO). The HostedControlPlane includes the following artifacts:

- CVO

- Cluster Network Operator (CNO)

- Cluster Ingress Operator

- Manifests for the Kube API server, scheduler, and manager

- Machine approver

- Autoscaler

- Infrastructure resources to enable ingress for control plane endpoints such as the Kube API server, ignition, and konnectivity

You can set the .spec.release field in the HostedCluster CR to update the control plane by using the information from the status.version.availableUpdates and status.version.conditionalUpdates fields.
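Before you choose a release image, it can help to confirm that the image is listed in one of those two status fields. The sketch below assumes that entries in `availableUpdates` carry an `image` key and that entries in `conditionalUpdates` nest it under `release`, as in the ClusterVersion API; verify the shape against your own `HostedCluster` output. The sample data is hypothetical.

```python
def classify_update(hosted_cluster: dict, target_image: str) -> str:
    """Classify a release image as "available", "conditional", or "not-listed"."""
    version = hosted_cluster.get("status", {}).get("version", {})
    for release in version.get("availableUpdates") or []:
        if release.get("image") == target_image:
            return "available"
    for update in version.get("conditionalUpdates") or []:
        if update.get("release", {}).get("image") == target_image:
            return "conditional"
    return "not-listed"


# Hypothetical sample; image references are placeholders.
sample_hosted_cluster = {
    "status": {
        "version": {
            "availableUpdates": [
                {"version": "4.y.z", "image": "registry.example/release:a"},
            ],
            "conditionalUpdates": [
                {"release": {"version": "4.y.z", "image": "registry.example/release:b"}},
            ],
        }
    }
}
```

An image that is classified as "conditional" has associated risks that you should review before you proceed, and an image that is "not-listed" is not a recommended target.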

Procedure

- Add the hypershift.openshift.io/force-upgrade-to=<openshift_release_image> annotation to the hosted cluster by entering the following command:

  $ oc annotate hostedcluster -n <hosted_cluster_namespace> <hosted_cluster_name> "hypershift.openshift.io/force-upgrade-to=<openshift_release_image>" --overwrite

  - Replace <hosted_cluster_name> and <hosted_cluster_namespace> with your hosted cluster name and hosted cluster namespace, respectively.

  - The <openshift_release_image> variable specifies the new OpenShift Container Platform release image that you want to upgrade to, for example, quay.io/openshift-release-dev/ocp-release:4.y.z-x86_64. Replace <4.y.z> with the supported OpenShift Container Platform version.

- Change the spec.release.image value in the hosted cluster by entering the following command:

  $ oc patch hostedcluster <hosted_cluster_name> -n <hosted_cluster_namespace> --type=merge -p '{"spec":{"release":{"image":"<openshift_release_image>"}}}'

Verification

To verify that the new version was rolled out, check the .status.conditions and .status.version values in the hosted cluster by running the following command:

$ oc get -n <hosted_cluster_namespace> hostedcluster <hosted_cluster_name> -o yaml
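A scripted check of `.status.version` might look like the following sketch. It assumes that `.status.version.history` lists the newest update first and that each entry has `image` and `state` keys with `Completed` marking a finished rollout, as in the ClusterVersion API. Treat those field names as assumptions and confirm them in your own output.

```python
def rollout_completed(hosted_cluster: dict, target_image: str) -> bool:
    """Return True when the newest history entry is the target image and is Completed."""
    history = hosted_cluster.get("status", {}).get("version", {}).get("history") or []
    if not history:
        return False
    latest = history[0]  # assumption: newest entry first
    return latest.get("image") == target_image and latest.get("state") == "Completed"


# Hypothetical sample; image references are placeholders.
sample_in_progress = {
    "status": {
        "version": {
            "history": [
                {"image": "registry.example/release:new", "state": "Partial"},
                {"image": "registry.example/release:old", "state": "Completed"},
            ]
        }
    }
}
```

A caller could poll this function on the parsed `-o json` output until it returns `True` or a timeout expires.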

7.8. Updating a hosted cluster by using the multicluster engine Operator console

You can update your hosted cluster by using the multicluster engine Operator console.

Before you update a hosted cluster, review its available and conditional updates. Choosing an incorrect release version might break the hosted cluster.

Procedure

- Select All clusters.

- Navigate to Infrastructure → Clusters to view managed hosted clusters.

- Click the Upgrade available link to update the control plane and node pools.

7.9. Limitations of managing imported hosted clusters

Unlike standalone OpenShift Container Platform clusters or third-party clusters, hosted clusters are automatically imported into the local multicluster engine for Kubernetes Operator. Hosted clusters run some of their agents in the hosted mode so that the agents do not use the resources of your cluster.

If you choose to automatically import hosted clusters, you can update node pools and the control plane in hosted clusters by using the HostedCluster resource on the management cluster. To update node pools and a control plane, see "Updating node pools in a hosted cluster" and "Updating a control plane in a hosted cluster".

You can import hosted clusters into a location other than the local multicluster engine Operator by using Red Hat Advanced Cluster Management (RHACM). For more information, see "Discovering multicluster engine for Kubernetes Operator hosted clusters in Red Hat Advanced Cluster Management".

In this topology, you must update your hosted clusters by using the command-line interface or the console of the local multicluster engine for Kubernetes Operator where the cluster is hosted. You cannot update the hosted clusters through the RHACM hub cluster.

Chapter 8. Hosted control planes Observability

You can gather metrics for hosted control planes by configuring metrics sets. The HyperShift Operator can create or delete monitoring dashboards in the management cluster for each hosted cluster that it manages.

8.1. Configuring metrics sets for hosted control planes

Hosted control planes for Red Hat OpenShift Container Platform creates ServiceMonitor resources in each control plane namespace that allow a Prometheus stack to gather metrics from the control planes. The ServiceMonitor resources use metrics relabelings to define which metrics are included or excluded from a particular component, such as etcd or the Kubernetes API server. The number of metrics that are produced by control planes directly impacts the resource requirements of the monitoring stack that gathers them.

Instead of producing a fixed number of metrics that apply to all situations, you can configure a metrics set that identifies a set of metrics to produce for each control plane. The following metrics sets are supported:

- Telemetry: These metrics are needed for telemetry. This set is the default set and is the smallest set of metrics.

- SRE: This set includes the necessary metrics to produce alerts and allow the troubleshooting of control plane components.

- All: This set includes all of the metrics that are produced by standalone OpenShift Container Platform control plane components.

To configure a metrics set, set the METRICS_SET environment variable in the HyperShift Operator deployment by entering the following command:

$ oc set env -n hypershift deployment/operator METRICS_SET=All
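Because the metrics set names are case-sensitive values from a fixed list, a small wrapper can reject typos before the command runs. This is an illustrative sketch only; the function name is hypothetical, and the command it builds is the one shown above with the chosen set substituted.

```python
SUPPORTED_METRICS_SETS = ("Telemetry", "SRE", "All")


def metrics_set_command(metrics_set: str) -> list:
    """Return the oc set env invocation for a supported metrics set."""
    if metrics_set not in SUPPORTED_METRICS_SETS:
        raise ValueError(
            f"unsupported metrics set {metrics_set!r}; "
            f"expected one of {', '.join(SUPPORTED_METRICS_SETS)}"
        )
    return [
        "oc", "set", "env",
        "-n", "hypershift",
        "deployment/operator",
        f"METRICS_SET={metrics_set}",
    ]
```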

8.1.1. Configuring the SRE metrics set

When you specify the SRE metrics set, the HyperShift Operator looks for a config map named sre-metric-set with a single key: config. The value of the config key must contain a set of RelabelConfigs that are organized by control plane component.

You can specify the following components:

- etcd

- kubeAPIServer

- kubeControllerManager

- openshiftAPIServer

- openshiftControllerManager

- openshiftRouteControllerManager

- cvo

- olm

- catalogOperator

- registryOperator

- nodeTuningOperator

- controlPlaneOperator

- hostedClusterConfigOperator

A configuration of the SRE metrics set is illustrated in the following example:
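As a rough sketch of how such a configuration could be assembled, the following code builds a `sre-metric-set` config map manifest whose `config` key holds relabel configurations that are grouped by component. Several details are assumptions: the relabel fields (`action`, `regex`, `sourceLabels`) follow the Prometheus Operator convention, the regular expression is a made-up example, the namespace is left to the caller, and the value is serialized as JSON on the assumption that the Operator parses it as YAML, of which JSON is a subset.

```python
import json

# Component names taken from the list of supported components.
SRE_COMPONENTS = {
    "etcd", "kubeAPIServer", "kubeControllerManager", "openshiftAPIServer",
    "openshiftControllerManager", "openshiftRouteControllerManager", "cvo",
    "olm", "catalogOperator", "registryOperator", "nodeTuningOperator",
    "controlPlaneOperator", "hostedClusterConfigOperator",
}


def sre_metric_set_config_map(relabel_configs: dict, namespace: str) -> dict:
    """Build a ConfigMap manifest with a single "config" key of per-component relabelings."""
    unknown = sorted(set(relabel_configs) - SRE_COMPONENTS)
    if unknown:
        raise ValueError(f"unknown components: {', '.join(unknown)}")
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "sre-metric-set", "namespace": namespace},
        "data": {"config": json.dumps(relabel_configs, indent=2)},
    }


# Hypothetical relabeling: drop etcd debugging metrics.
example_manifest = sre_metric_set_config_map(
    {"etcd": [{"action": "drop", "regex": "etcd_debugging_.*", "sourceLabels": ["__name__"]}]},
    namespace="hypershift",  # assumption: the Operator namespace
)
```

Writing `json.dumps(example_manifest)` to a file produces a manifest that `oc apply -f` accepts.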

8.2. Enabling monitoring dashboards in a hosted cluster

You can enable monitoring dashboards in a hosted cluster by creating a config map.

Procedure

- Create the hypershift-operator-install-flags config map in the local-cluster namespace. In the config map, specify the --monitoring-dashboards --metrics-set=All flag, which adds the monitoring dashboard for all metrics.

- Wait a couple of minutes for the HyperShift Operator deployment in the hypershift namespace to be updated to include the following environment variable:

      - name: MONITORING_DASHBOARDS
        value: "1"

  When monitoring dashboards are enabled, for each hosted cluster that the HyperShift Operator manages, the Operator creates a config map named cp-<hosted_cluster_namespace>-<hosted_cluster_name> in the openshift-config-managed namespace, where <hosted_cluster_namespace> is the namespace of the hosted cluster and <hosted_cluster_name> is the name of the hosted cluster. As a result, a new dashboard is added in the administrative console of the management cluster.

- To view the dashboard, log in to the management cluster’s console and go to the dashboard for the hosted cluster by clicking Observe → Dashboards.

- Optional: To disable monitoring dashboards in a hosted cluster, remove the --monitoring-dashboards --metrics-set=All flag from the hypershift-operator-install-flags config map. When you delete a hosted cluster, its corresponding dashboard is also deleted.
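The waiting step and the config map naming rule in this procedure can be sketched in code. The first function inspects a Deployment object, for example the parsed output of `oc get deployment operator -n hypershift -o json`, for the `MONITORING_DASHBOARDS` variable. The second function derives the dashboard config map name. Both function names are hypothetical.

```python
def dashboards_enabled(deployment: dict) -> bool:
    """Return True when any container sets MONITORING_DASHBOARDS to "1"."""
    pod_spec = deployment.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers") or []:
        for env in container.get("env") or []:
            if env.get("name") == "MONITORING_DASHBOARDS" and env.get("value") == "1":
                return True
    return False


def dashboard_config_map_name(hosted_cluster_namespace: str, hosted_cluster_name: str) -> str:
    """Derive the name of the per-cluster dashboard config map."""
    return f"cp-{hosted_cluster_namespace}-{hosted_cluster_name}"
```

For a hosted cluster named `example` in the `clusters` namespace, the derived config map name is `cp-clusters-example`.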

8.2.1. Dashboard customization

To generate dashboards for each hosted cluster, the HyperShift Operator uses a template that is stored in the monitoring-dashboard-template config map in the Operator namespace (hypershift). This template contains a set of Grafana panels that contain the metrics for the dashboard. You can edit the content of the config map to customize the dashboards.

When a dashboard is generated, the following strings are replaced with values that correspond to a specific hosted cluster:

| Name | Description |

|---|---|

|  | The name of the hosted cluster |

|  | The namespace of the hosted cluster |

|  | The namespace where the control plane pods of the hosted cluster are placed |

|  | The UUID of the hosted cluster, which matches the |
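The substitution step amounts to plain string replacement over the template. The following sketch illustrates the idea under a loud assumption: the placeholder tokens used here (`__NAME__` and `__NAMESPACE__`) are stand-ins chosen for illustration and are not confirmed by the text above, so check the actual tokens in the `monitoring-dashboard-template` config map.

```python
def render_dashboard(template: str, values: dict) -> str:
    """Replace each placeholder token in the template with its per-cluster value."""
    rendered = template
    # Replace longer tokens first so that one token cannot clobber part of another.
    for token in sorted(values, key=len, reverse=True):
        rendered = rendered.replace(token, values[token])
    return rendered


# Hypothetical template fragment and placeholder tokens.
example = render_dashboard(
    '{"title": "__NAME__ in __NAMESPACE__"}',
    {"__NAME__": "example", "__NAMESPACE__": "clusters"},
)
```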

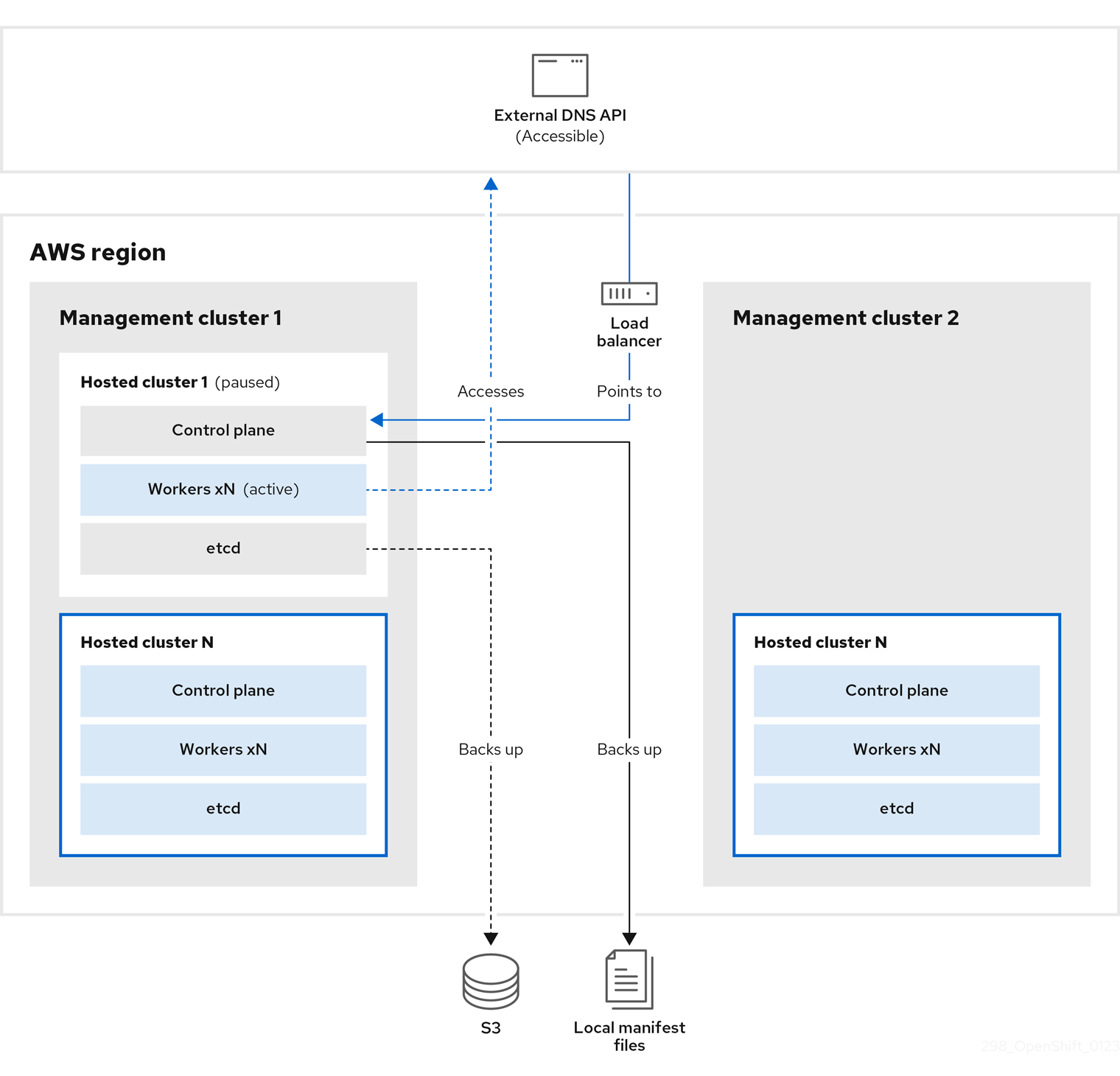

Chapter 9. High availability for hosted control planes

9.1. About high availability for hosted control planes

You can maintain high availability (HA) of hosted control planes by implementing the following actions:

- Recover etcd members for a hosted cluster.

- Back up and restore etcd for a hosted cluster.

- Perform a disaster recovery process for a hosted cluster.

9.1.1. Impact of the failed management cluster component

If the management cluster component fails, your workload remains unaffected. In the OpenShift Container Platform management cluster, the control plane is decoupled from the data plane to provide resiliency.

The following table covers the impact of a failed management cluster component on the control plane and the data plane. However, the table does not cover all scenarios for the management cluster component failures.

| Name of the failed component | Hosted control plane API status | Hosted cluster data plane status |

|---|---|---|

| Worker node | Available | Available |

| Availability zone | Available | Available |

| Management cluster control plane | Available | Available |

| Management cluster control plane and worker nodes | Not available | Available |

9.2. Recovering an unhealthy etcd cluster

In a highly available control plane, three etcd pods run as a part of a stateful set in an etcd cluster. To recover an etcd cluster, identify unhealthy etcd pods by checking the etcd cluster health.

9.2.1. Checking the status of an etcd cluster

You can check the health of the etcd cluster by logging in to any etcd pod.

Procedure

- Log in to an etcd pod by entering the following command:

  $ oc rsh -n openshift-etcd -c etcd <etcd_pod_name>

- Print the health status of an etcd cluster by entering the following command:

  sh-4.4# etcdctl endpoint status -w table
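If you prefer a scripted check, `etcdctl` can also emit JSON with `-w json`. The following sketch summarizes such output and applies a simple health heuristic: every expected member reports, all members agree on one leader, and no member reports errors. The JSON field names (`Endpoint`, `Status.header.member_id`, `Status.leader`, `Status.errors`) are assumptions based on typical etcd 3.x output, and the sketch assumes that the output covers every member. Verify both against your etcd version.

```python
import json


def summarize_endpoints(status_json: str) -> list:
    """Reduce etcdctl endpoint status JSON to the fields needed for a health check."""
    summary = []
    for entry in json.loads(status_json):
        status = entry.get("Status", {})
        member_id = status.get("header", {}).get("member_id")
        leader_id = status.get("leader")
        summary.append({
            "endpoint": entry.get("Endpoint"),
            "leader_id": leader_id,
            "is_leader": member_id is not None and member_id == leader_id,
            "errors": status.get("errors") or [],
        })
    return summary


def looks_healthy(summary: list, expected_members: int = 3) -> bool:
    """Heuristic: all members present, one agreed non-zero leader, no errors."""
    leader_ids = {item["leader_id"] for item in summary}
    return (
        len(summary) == expected_members
        and len(leader_ids) == 1
        and not leader_ids & {None, 0}
        and not any(item["errors"] for item in summary)
    )


# Hypothetical three-member sample in which member 2 is the leader.
sample_status = json.dumps([
    {"Endpoint": f"etcd-{i}:2379", "Status": {"header": {"member_id": i + 1}, "leader": 2}}
    for i in range(3)
])
```

A member that appears in the summary with a non-empty `errors` list, or that is missing from the summary entirely, is a candidate for recovery.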

9.2.2. Recovering a failing etcd pod

Each etcd pod of a 3-node cluster has its own persistent volume claim (PVC) to store its data. An etcd pod might fail because of corrupted or missing data. You can recover a failing etcd pod and its PVC.

Procedure