Chapter 6. Network security

6.1. Understanding network policy APIs

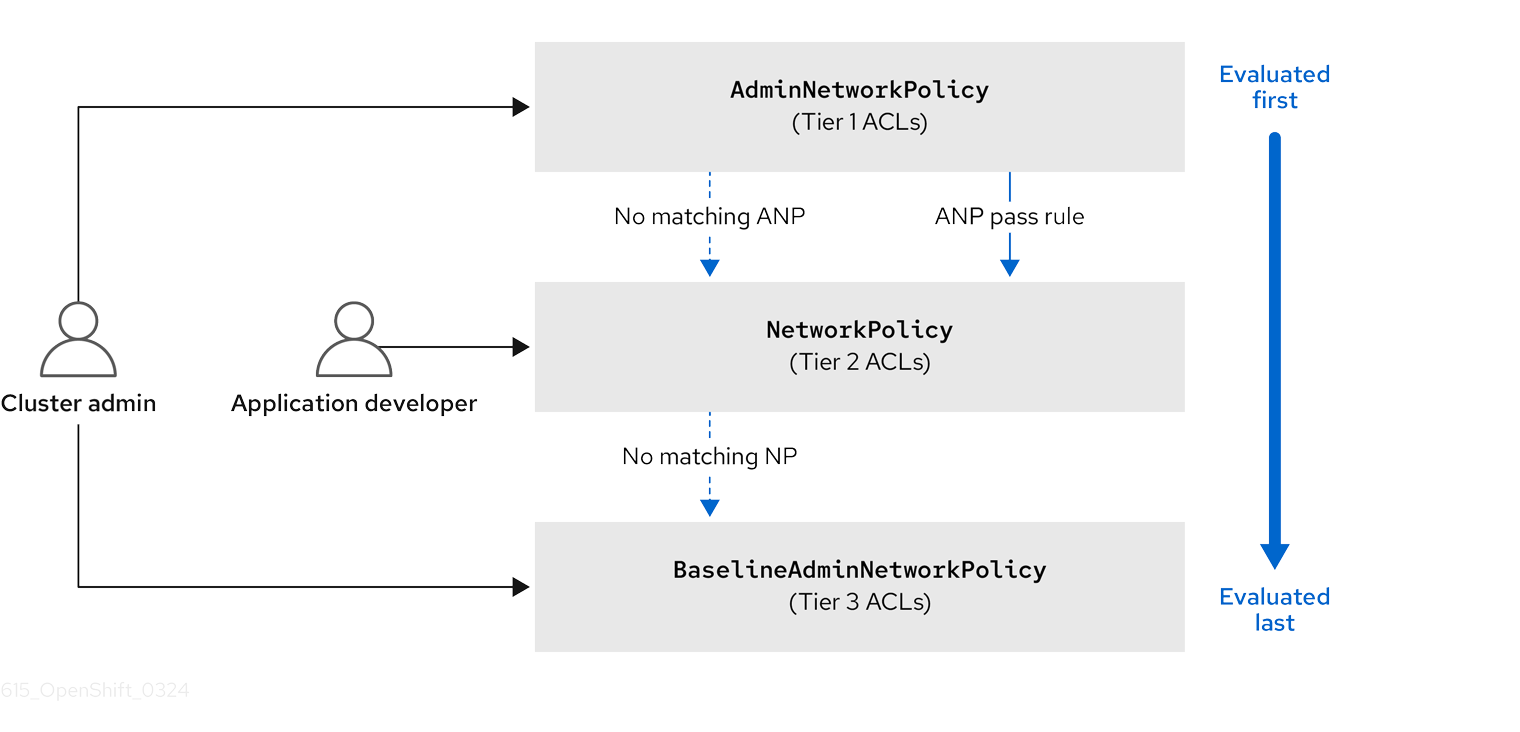

Kubernetes offers two features for enforcing network security. The first is the NetworkPolicy API, which is designed mainly for application developers and namespace tenants to protect their namespaces by creating namespace-scoped policies.

The second feature is AdminNetworkPolicy which consists of two APIs: the AdminNetworkPolicy (ANP) API and the BaselineAdminNetworkPolicy (BANP) API. ANP and BANP are designed for cluster and network administrators to protect their entire cluster by creating cluster-scoped policies. Cluster administrators can use ANPs to enforce non-overridable policies that take precedence over NetworkPolicy objects. Administrators can use BANP to set up and enforce optional cluster-scoped network policy rules that are overridable by users using NetworkPolicy objects when necessary. When used together, ANP, BANP, and network policy can achieve full multi-tenant isolation that administrators can use to secure their cluster.

OVN-Kubernetes CNI in OpenShift Container Platform implements these network policies using Access Control List (ACL) tiers to evaluate and apply them. ACLs are evaluated in order of precedence, from tier 1 to tier 3.

Tier 1 evaluates AdminNetworkPolicy (ANP) objects. Tier 2 evaluates NetworkPolicy objects. Tier 3 evaluates BaselineAdminNetworkPolicy (BANP) objects.

ANPs are evaluated first. When the match is an ANP allow or deny rule, any existing NetworkPolicy and BaselineAdminNetworkPolicy (BANP) objects in the cluster are skipped from evaluation. When the match is an ANP pass rule, evaluation moves from tier 1 of the ACL to tier 2, where NetworkPolicy objects are evaluated. If no NetworkPolicy matches the traffic, evaluation moves from tier 2 ACLs to tier 3 ACLs, where BANP is evaluated.

6.1.1. Key differences between AdminNetworkPolicy and NetworkPolicy custom resources

The following table explains key differences between the cluster-scoped AdminNetworkPolicy API and the namespace-scoped NetworkPolicy API.

| Policy elements | AdminNetworkPolicy | NetworkPolicy |
|---|---|---|
| Applicable user | Cluster administrator or equivalent | Namespace owners |
| Scope | Cluster | Namespaced |
| Drop traffic | Supported with an explicit `Deny` action. | Supported via implicit deny. |
| Delegate traffic | Supported with a `Pass` action. | Not applicable |
| Allow traffic | Supported with an explicit `Allow` action. | The default action for all rules is to allow. |
| Rule precedence within the policy | Depends on the order in which they appear within an ANP. The higher the rule’s position, the higher the precedence. | Rules are additive |
| Policy precedence | Among ANPs, the `priority` value sets the order of evaluation. The lower the value, the higher the precedence. | There is no policy ordering between policies. |
| Feature precedence | Evaluated first via tier 1 ACL and BANP is evaluated last via tier 3 ACL. | Enforced after ANP and before BANP, they are evaluated in tier 2 of the ACL. |
| Matching pod selection | Can apply different rules across namespaces. | Can apply different rules across pods in single namespace. |
| Cluster egress traffic | Supported via `nodes` and `networks` egress peers. | Supported through |
| Cluster ingress traffic | Not supported | Not supported |
| Fully qualified domain names (FQDN) peer support | Not supported | Not supported |
| Namespace selectors | Supports advanced selection of Namespaces with the use of | Supports label based namespace selection with the use of the `namespaceSelector` field. |

6.2. Admin network policy

6.2.1. OVN-Kubernetes AdminNetworkPolicy

6.2.1.1. AdminNetworkPolicy

An AdminNetworkPolicy (ANP) is a cluster-scoped custom resource definition (CRD). As an OpenShift Container Platform administrator, you can use ANP to secure your network by creating network policies before creating namespaces. Additionally, you can create network policies on a cluster-scoped level that are non-overridable by NetworkPolicy objects.

The key difference between AdminNetworkPolicy and NetworkPolicy objects is that the former is for administrators and is cluster scoped, while the latter is for tenant owners and is namespace scoped.

An ANP allows administrators to specify the following:

- A `priority` value that determines the order of its evaluation. The lower the value, the higher the precedence.
- A set of pods that consists of a set of namespaces or namespace on which the policy is applied.
- A list of ingress rules to be applied for all ingress traffic towards the `subject`.
- A list of egress rules to be applied for all egress traffic from the `subject`.

6.2.1.1.1. AdminNetworkPolicy example

Example 6.1. Example YAML file for an ANP
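As a minimal sketch of the structure that the callouts in this example describe, an ANP might look like the following. The API version `policy.networking.k8s.io/v1alpha1`, the policy name, the priority, the rule names, and every label key and value are illustrative assumptions rather than values taken from this document. The parenthesized numbers in the comments point to the matching callouts.

```yaml
# Illustrative sketch only: names, labels, and priority are hypothetical.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: sample-anp-deny-pass-rules        # (1) name of the ANP
spec:
  priority: 50                            # (2) 0-99; lower value means higher precedence
  subject:
    namespaces:                           # (3) namespaces that the ANP applies to
      matchLabels:
        kubernetes.io/metadata.name: example-namespace
  ingress:                                # (4) action is Pass, Deny, or Allow
  - name: "deny-all-ingress-tenant-1"     # (5) name of the ingress rule
    action: "Deny"
    from:
    - pods:
        namespaceSelector:
          matchLabels:
            custom-anp: tenant-1
        podSelector:                      # (6) pods within the selected namespaces
          matchLabels:
            custom-anp: tenant-1
  egress:                                 # (7) action is Pass, Deny, or Allow
  - name: "pass-all-egress-to-tenant-1"
    action: "Pass"
    to:
    - pods:
        namespaceSelector:
          matchLabels:
            custom-anp: tenant-1
        podSelector:
          matchLabels:
            custom-anp: tenant-1
```

Verify the field names against the AdminNetworkPolicy CRD installed in your cluster before applying anything based on this sketch.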

- 1: Specify a name for your ANP.
- 2: The `spec.priority` field supports a maximum of 100 ANPs in the range of values `0-99` in a cluster. The lower the value, the higher the precedence because the range is read in order from the lowest to highest value. Because there is no guarantee which policy takes precedence when ANPs are created at the same priority, set ANPs at different priorities so that precedence is deliberate.
- 3: Specify the namespace to apply the ANP resource.
- 4: ANPs have both ingress and egress rules. ANP rules for the `spec.ingress` field accept values of `Pass`, `Deny`, and `Allow` for the `action` field.
- 5: Specify a name for the `ingress.name`.
- 6: Specify `podSelector.matchLabels` to select pods within the namespaces selected by `namespaceSelector.matchLabels` as ingress peers.
- 7: ANPs have both ingress and egress rules. ANP rules for the `spec.egress` field accept values of `Pass`, `Deny`, and `Allow` for the `action` field.


6.2.1.1.2. AdminNetworkPolicy actions for rules

As an administrator, you can set Allow, Deny, or Pass as the action field for your AdminNetworkPolicy rules. Because OVN-Kubernetes uses tiered ACLs to evaluate network traffic rules, ANPs allow you to set very strong policy rules that can only be changed by an administrator modifying them, deleting the rule, or overriding them by setting a higher priority rule.

6.2.1.1.2.1. AdminNetworkPolicy Allow example

The following ANP that is defined at priority 9 ensures all ingress traffic is allowed from the monitoring namespace towards any tenant (all other namespaces) in the cluster.

Example 6.2. Example YAML file for a strong Allow ANP
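A strong Allow ANP of this kind could be sketched as follows. The priority of 9 comes from the description above; the policy name, the rule name, and the assumption that the monitoring namespace is literally named `monitoring` (and therefore carries the `kubernetes.io/metadata.name: monitoring` label) are illustrative.

```yaml
# Illustrative sketch only: policy and rule names are hypothetical.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: allow-monitoring
spec:
  priority: 9
  subject:
    # Empty selector: every namespace is a subject, including OpenShift
    # namespaces, so use it with caution.
    namespaces: {}
  ingress:
  - name: "allow-ingress-from-monitoring"
    action: "Allow"
    from:
    - namespaces:
        matchLabels:
          kubernetes.io/metadata.name: monitoring
```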

This is an example of a strong Allow ANP because it is non-overridable by all the parties involved. No tenants can block themselves from being monitored using NetworkPolicy objects and the monitoring tenant also has no say in what it can or cannot monitor.

6.2.1.1.2.2. AdminNetworkPolicy Deny example

The following ANP that is defined at priority 5 ensures all ingress traffic from the monitoring namespace is blocked towards restricted tenants (namespaces that have labels security: restricted).

Example 6.3. Example YAML file for a strong Deny ANP
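A strong Deny ANP matching this description might look like the following sketch. The name `block-monitoring`, the priority of 5, and the `security: restricted` label come from the surrounding text; the rule name and the `kubernetes.io/metadata.name: monitoring` selector are assumptions.

```yaml
# Illustrative sketch only: the rule name and monitoring selector are assumed.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: block-monitoring
spec:
  priority: 5                     # lower value than the Allow example, so it wins
  subject:
    namespaces:
      matchLabels:
        security: restricted      # restricted tenants
  ingress:
  - name: "deny-ingress-from-monitoring"
    action: "Deny"
    from:
    - namespaces:
        matchLabels:
          kubernetes.io/metadata.name: monitoring
```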

This is a strong Deny ANP that is non-overridable by all the parties involved. The restricted tenant owners cannot authorize themselves to allow monitoring traffic, and the infrastructure’s monitoring service cannot scrape anything from these sensitive namespaces.

When combined with the strong Allow example, the block-monitoring ANP has a lower priority value giving it higher precedence, which ensures restricted tenants are never monitored.

6.2.1.1.2.3. AdminNetworkPolicy Pass example

The following ANP that is defined at priority 7 ensures all ingress traffic from the monitoring namespace towards internal infrastructure tenants (namespaces that have labels security: internal) is passed on to tier 2 of the ACLs and evaluated by the namespaces’ NetworkPolicy objects.

Example 6.4. Example YAML file for a strong Pass ANP
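A Pass ANP of this shape could be sketched as follows. The name `pass-monitoring`, the priority of 7, and the `security: internal` label come from the surrounding text; the rule name and the monitoring namespace selector are assumptions.

```yaml
# Illustrative sketch only: the rule name and monitoring selector are assumed.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: pass-monitoring
spec:
  priority: 7
  subject:
    namespaces:
      matchLabels:
        security: internal        # internal infrastructure tenants
  ingress:
  - name: "pass-ingress-from-monitoring"
    action: "Pass"                # hand the decision to tier 2 (NetworkPolicy)
    from:
    - namespaces:
        matchLabels:
          kubernetes.io/metadata.name: monitoring
```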

This example is a strong Pass action ANP because it delegates the decision to NetworkPolicy objects defined by tenant owners. This pass-monitoring ANP allows all tenant owners grouped at security level internal to choose whether their metrics should be scraped by the infrastructure's monitoring service, by using namespace-scoped NetworkPolicy objects.

6.2.2. OVN-Kubernetes BaselineAdminNetworkPolicy

6.2.2.1. BaselineAdminNetworkPolicy

BaselineAdminNetworkPolicy (BANP) is a cluster-scoped custom resource definition (CRD). As an OpenShift Container Platform administrator, you can use BANP to set up and enforce optional baseline network policy rules that users can override with NetworkPolicy objects if necessary. Rule actions for BANP are allow or deny.

The BaselineAdminNetworkPolicy resource is a cluster singleton object that can be used as a guardrail policy in case a passed traffic policy does not match any NetworkPolicy objects in the cluster. A BANP can also be used as a default security model that ensures intra-cluster traffic is blocked by default, so that users must use NetworkPolicy objects to allow known traffic. You must use default as the name when creating a BANP resource.

A BANP allows administrators to specify:

- A `subject` that consists of a set of namespaces or namespace.
- A list of ingress rules to be applied for all ingress traffic towards the `subject`.
- A list of egress rules to be applied for all egress traffic from the `subject`.

6.2.2.1.1. BaselineAdminNetworkPolicy example

Example 6.5. Example YAML file for BANP
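As a minimal sketch of the structure that the callouts in this example describe, a BANP might look like the following. Only the name `default` is required by the text; the API version, rule names, and label keys and values are illustrative assumptions. The parenthesized numbers in the comments point to the matching callouts.

```yaml
# Illustrative sketch only: rule names and labels are hypothetical.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: BaselineAdminNetworkPolicy
metadata:
  name: default                               # (1) must be "default"
spec:
  subject:
    namespaces:                               # (2) namespaces the BANP applies to
      matchLabels:
        kubernetes.io/metadata.name: example-namespace
  ingress:                                    # (3) action is Deny or Allow
  - name: "deny-all-ingress-from-tenant-1"    # (4) name of the ingress rule
    action: "Deny"
    from:
    - pods:
        namespaceSelector:                    # (5) namespaces to select pods from
          matchLabels:
            custom-banp: tenant-1
        podSelector:                          # (6) pods within those namespaces
          matchLabels:
            custom-banp: tenant-1
  egress:                                     # (3) action is Deny or Allow
  - name: "allow-all-egress-to-tenant-1"
    action: "Allow"
    to:
    - pods:
        namespaceSelector:
          matchLabels:
            custom-banp: tenant-1
        podSelector:
          matchLabels:
            custom-banp: tenant-1
```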

- 1: The policy name must be `default` because BANP is a singleton object.
- 2: Specify the namespace to apply the BANP to.
- 3: BANPs have both ingress and egress rules. BANP rules for the `spec.ingress` and `spec.egress` fields accept values of `Deny` and `Allow` for the `action` field.
- 4: Specify a name for the `ingress.name`.
- 5: Specify the namespaces to select the pods from to apply the BANP resource.
- 6: Specify `podSelector.matchLabels` name of the pods to apply the BANP resource.

6.2.2.1.2. BaselineAdminNetworkPolicy Deny example

The following BANP singleton ensures that the administrator has set up a default deny policy for all ingress monitoring traffic coming into the tenants at internal security level. When combined with the "AdminNetworkPolicy Pass example", this deny policy acts as a guardrail policy for all ingress traffic that is passed by the ANP pass-monitoring policy.

Example 6.6. Example YAML file for a guardrail Deny rule
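A guardrail Deny rule of this kind might be sketched as follows. The name `default` and the `security: internal` label come from the text; the rule name and the monitoring namespace selector are assumptions.

```yaml
# Illustrative sketch only: the rule name and monitoring selector are assumed.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: BaselineAdminNetworkPolicy
metadata:
  name: default
spec:
  subject:
    namespaces:
      matchLabels:
        security: internal        # tenants at the internal security level
  ingress:
  - name: "deny-ingress-from-monitoring"
    action: "Deny"                # applies only if no NetworkPolicy matched in tier 2
    from:
    - namespaces:
        matchLabels:
          kubernetes.io/metadata.name: monitoring
```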

You can use an AdminNetworkPolicy resource with a Pass value for the action field in conjunction with the BaselineAdminNetworkPolicy resource to create a multi-tenant policy. This multi-tenant policy allows one tenant to collect monitoring data on their application while simultaneously not collecting data from a second tenant.

As an administrator, if you apply both the "AdminNetworkPolicy Pass example" and the "BaselineAdminNetworkPolicy Deny example", tenants can then choose to create a NetworkPolicy resource that is evaluated before the BANP.

For example, Tenant 1 can set up the following NetworkPolicy resource to monitor ingress traffic:

Example 6.7. Example NetworkPolicy
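A tenant-side policy of this kind could be sketched as follows. The namespace name `tenant-1`, the policy name, and the monitoring namespace selector are illustrative assumptions; the structure is the standard `networking.k8s.io/v1` NetworkPolicy.

```yaml
# Illustrative sketch only: namespace and policy names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-monitoring
  namespace: tenant-1
spec:
  podSelector: {}                 # all pods in the tenant namespace
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: monitoring
```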

In this scenario, Tenant 1’s policy would be evaluated after the "AdminNetworkPolicy Pass example" and before the "BaselineAdminNetworkPolicy Deny example", which denies all ingress monitoring traffic coming into tenants with security level internal. With Tenant 1’s NetworkPolicy object in place, they will be able to collect data on their application. Tenant 2, however, who does not have any NetworkPolicy objects in place, will not be able to collect data. As an administrator, you have not monitored internal tenants by default. Instead, you created a BANP that allows tenants to use NetworkPolicy objects to override the default behavior of your BANP.

6.2.3. Monitoring ANP and BANP

AdminNetworkPolicy and BaselineAdminNetworkPolicy resources have metrics that can be used for monitoring and managing your policies. See the following table for more details on the metrics.

6.2.3.1. Metrics for AdminNetworkPolicy

| Name | Description | Explanation |
|---|---|---|
| | Not applicable | The total number of |
| | Not applicable | The total number of |
| | | The total number of rules across all ANP policies in the cluster grouped by |
| | | The total number of rules across all BANP policies in the cluster grouped by |
| | | The total number of OVN Northbound database (nbdb) objects that are created by all the ANP in the cluster grouped by the |
| | | The total number of OVN Northbound database (nbdb) objects that are created by all the BANP in the cluster grouped by the |

6.2.4. Egress nodes and networks peer for AdminNetworkPolicy

This section explains nodes and networks peers. Administrators can use the examples in this section to design AdminNetworkPolicy and BaselineAdminNetworkPolicy to control northbound traffic in their cluster.

6.2.4.1. Northbound traffic controls for AdminNetworkPolicy and BaselineAdminNetworkPolicy

In addition to supporting east-west traffic controls, ANP and BANP also allow administrators to control their northbound traffic leaving the cluster or traffic leaving the node to other nodes in the cluster. End-users can do the following:

- Implement egress traffic control towards cluster nodes using `nodes` egress peer
- Implement egress traffic control towards Kubernetes API servers using `nodes` or `networks` egress peers
- Implement egress traffic control towards external destinations outside the cluster using `networks` peer

For ANP and BANP, nodes and networks peers can be specified for egress rules only.

6.2.4.1.1. Using nodes peer to control egress traffic to cluster nodes

Using the nodes peer, administrators can control egress traffic from pods to nodes in the cluster. A benefit of this is that you do not have to change the policy when nodes are added to or deleted from the cluster.

The following example allows egress traffic to the Kubernetes API server on port 6443 by any of the namespaces with a restricted, confidential, or internal level of security using the node selector peer. It also denies traffic to all worker nodes in your cluster from any of the namespaces with a restricted, confidential, or internal level of security.

Example 6.8. Example of ANP Allow egress using nodes peer
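The behavior described above might be sketched as the following ANP. The policy name, priority, rule names, and the node role label keys (`node-role.kubernetes.io/control-plane` and `node-role.kubernetes.io/worker`) are assumptions about a typical cluster, not values taken from this document. The parenthesized numbers in the comments point to the matching callouts.

```yaml
# Illustrative sketch only: names, priority, and node labels are assumed.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: egress-security-allow
spec:
  priority: 55
  subject:
    namespaces:                   # (3) subject namespaces
      matchExpressions:           # (4) key and values to match
      - key: security
        operator: In
        values:
        - restricted
        - confidential
        - internal
  egress:
  # Listed first so that it takes precedence over the Deny rule below.
  - name: "allow-to-kubernetes-api-server-and-engr-dept-pods"
    action: "Allow"
    ports:
    - portNumber:
        protocol: TCP
        port: 6443
    to:
    - nodes:                      # (1) nodes selected with matchExpressions
        matchExpressions:
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
    - pods:                       # (2) pods labeled dept: engr, in any namespace
        namespaceSelector: {}
        podSelector:
          matchLabels:
            dept: engr
  - name: "deny-to-worker-nodes"
    action: "Deny"
    to:
    - nodes:
        matchExpressions:
        - key: node-role.kubernetes.io/worker
          operator: Exists
```

Because the peers are label selectors, nodes that are added later with the same role labels are covered without editing the policy.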

- 1: Specifies a node or set of nodes in the cluster using the `matchExpressions` field.
- 2: Specifies all the pods labeled with `dept: engr`.
- 3: Specifies the subject of the ANP, which includes any namespaces that match the labels used by the network policy. The example matches any of the namespaces with `restricted`, `confidential`, or `internal` level of `security`.
- 4: Specifies key/value pairs for the `matchExpressions` field.

6.2.4.1.2. Using networks peer to control egress traffic towards external destinations

Cluster administrators can use CIDR ranges in the networks peer to apply a policy that controls egress traffic leaving pods for destinations whose IP addresses fall within the CIDR ranges specified in the networks field.

The following example uses networks peer and combines ANP and BANP policies to restrict egress traffic.

Use the empty selector ({}) in the namespace field for ANP and BANP with caution. When using an empty selector, it also selects OpenShift namespaces.

If you use values of 0.0.0.0/0 in an ANP or BANP Deny rule, you must set a higher priority ANP Allow rule to necessary destinations before setting the Deny to 0.0.0.0/0.

Example 6.9. Example of ANP and BANP using networks peers
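A combination of this kind might be sketched as follows. The names `network-as-egress-peer` and `default` come from the text; the priority, the rule names, and every CIDR other than `0.0.0.0/0` are hypothetical placeholders drawn from documentation address ranges, and the `kubernetes.io/hostname` node selector is an assumption used to match all nodes.

```yaml
# Illustrative sketch only: priority, rule names, and CIDRs are placeholders.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: network-as-egress-peer
spec:
  priority: 70
  subject:
    namespaces: {}                # use with caution: also selects OpenShift namespaces
  egress:
  - name: "deny-egress-to-external-dns-servers"
    action: "Deny"
    to:
    - networks:
      - 192.0.2.53/32             # placeholder for an external DNS server
    ports:
    - portNumber:
        protocol: UDP
        port: 53
  - name: "allow-all-egress-to-intranet"
    action: "Allow"
    to:
    - networks:
      - 198.51.100.0/24           # placeholder for a company intranet range
  - name: "allow-all-intra-cluster-traffic"
    action: "Allow"
    to:
    - namespaces: {}              # pods in any namespace
    - nodes:                      # every node that carries a hostname label
        matchExpressions:
        - key: kubernetes.io/hostname
          operator: Exists
  - name: "pass-all-egress-to-internet"
    action: "Pass"                # defer to NetworkPolicy, then to the BANP
    to:
    - networks:
      - 0.0.0.0/0
---
apiVersion: policy.networking.k8s.io/v1alpha1
kind: BaselineAdminNetworkPolicy
metadata:
  name: default
spec:
  subject:
    namespaces: {}
  egress:
  - name: "deny-all-egress-to-internet"
    action: "Deny"
    to:
    - networks:
      - 0.0.0.0/0
```

The Allow rules sit above the catch-all `0.0.0.0/0` rule so that essential destinations are matched first.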

- 1: Use `networks` to specify a range of CIDR networks outside of the cluster.
- 2: Specifies the CIDR ranges for the intra-cluster traffic from your resources.
- 3, 4: Specifies a `Deny` egress to everything by setting `networks` values to `0.0.0.0/0`. Make sure you have a higher priority `Allow` rule to necessary destinations before setting a `Deny` to `0.0.0.0/0` because this will deny all traffic including to Kubernetes API and DNS servers.

Collectively, the network-as-egress-peer ANP and the default BANP using networks peers enforce the following egress policy:

- All pods cannot talk to external DNS servers at the listed IP addresses.
- All pods can talk to the rest of the company’s intranet.
- All pods can talk to other pods, nodes, and services.
- All pods cannot talk to the internet. Combining the last ANP `Pass` rule and the strong BANP `Deny` rule creates a guardrail policy that secures traffic in the cluster.

6.2.4.1.3. Using nodes peer and networks peer together

Cluster administrators can combine nodes and networks peers in their ANP and BANP policies.

Example 6.10. Example of nodes and networks peer
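A policy that combines both peer types in one egress rule might be sketched as follows. The `apps: all-apps` label comes from the callouts; the policy name, priority, rule names, the `worker-group` node label, and the CIDRs (documentation address ranges) are hypothetical. The parenthesized numbers in the comments point to the matching callouts.

```yaml
# Illustrative sketch only: names, priority, node labels, and CIDRs are hypothetical.
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: egress-peer-1             # (1) name of the policy
spec:
  priority: 30                    # (3) 0-99; lower value means higher precedence
  subject:
    namespaces:                   # (4) subject selected by the apps: all-apps label
      matchLabels:
        apps: all-apps
  egress:                         # (2) nodes and networks peers are egress only
  - name: "allow-egress"
    action: "Allow"
    to:
    - nodes:
        matchExpressions:
        - key: worker-group
          operator: In
          values:
          - workloads
    - networks:
      - 203.0.113.10/32
  - name: "deny-egress"
    action: "Deny"
    to:
    - nodes:
        matchExpressions:
        - key: worker-group
          operator: In
          values:
          - infra
    - networks:
      - 203.0.113.20/32
```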

- 1: Specifies the name of the policy.
- 2: For `nodes` and `networks` peers, you can only use northbound traffic controls in ANP as `egress`.
- 3: Specifies the priority of the ANP, determining the order in which they should be evaluated. Lower priority values have higher precedence. ANP accepts values of 0-99, with 0 being the highest priority and 99 being the lowest.
- 4: Specifies the set of pods in the cluster on which the rules of the policy are to be applied. In the example, any pods with the `apps: all-apps` label across all namespaces are the `subject` of the policy.

6.2.5. Troubleshooting AdminNetworkPolicy

6.2.5.1. Checking creation of ANP

To check that your AdminNetworkPolicy (ANP) and BaselineAdminNetworkPolicy (BANP) are created correctly, check the status outputs of the following commands: oc describe anp or oc describe banp.

A good status indicates that OVN DB plumbing was successful and that the status reports SetupSucceeded.

Example 6.11. Example ANP with a good status

If plumbing is unsuccessful, an error is reported from the respective zone controller.

Example 6.12. Example of an ANP with a bad status and error message

See the following section for nbctl commands to help troubleshoot unsuccessful policies.

6.2.5.1.1. Using nbctl commands for ANP and BANP

To troubleshoot an unsuccessful setup, start by looking at OVN Northbound database (nbdb) objects including ACL, Address_Set, and Port_Group. To view the objects in a node’s database, you must be inside the pod on that node.

Prerequisites

- Access to the cluster as a user with the `cluster-admin` role.
- The OpenShift CLI (`oc`) installed.

To run `ovn-nbctl` commands in a cluster, you must open a remote shell into the `nbdb` container on the relevant node.

The following policy was used to generate outputs.

Example 6.13. AdminNetworkPolicy used to generate outputs

Procedure

1. List pods with node information by running the following command:

$ oc get pods -n openshift-ovn-kubernetes -owide

2. Navigate into a pod to look at the northbound database by running the following command:

$ oc rsh -c nbdb -n openshift-ovn-kubernetes ovnkube-node-524dt

3. Run the following command to look at the ACLs in the nbdb:

$ ovn-nbctl find ACL 'external_ids{>=}{"k8s.ovn.org/owner-type"=AdminNetworkPolicy,"k8s.ovn.org/name"=cluster-control}'

Where:

- `cluster-control`: Specifies the name of the `AdminNetworkPolicy` you are troubleshooting.
- `AdminNetworkPolicy`: Specifies the type: `AdminNetworkPolicy` or `BaselineAdminNetworkPolicy`.

Example 6.14. Example output for ACLs

Note: The outputs for ingress and egress show you the logic of the policy in the ACL. For example, every time a packet matches the provided `match`, the `action` is taken.

Examine the specific ACL for the rule by running the following command:

$ ovn-nbctl find ACL 'external_ids{>=}{"k8s.ovn.org/owner-type"=AdminNetworkPolicy,direction=Ingress,"k8s.ovn.org/name"=cluster-control,gress-index="1"}'

Where:

- `cluster-control`: Specifies the `name` of your ANP.
- `Ingress`: Specifies the `direction` of traffic, either of type `Ingress` or `Egress`.
- `1`: Specifies the rule you want to look at.

For the example ANP named `cluster-control` at `priority` `34`, the following is an example output for `Ingress` rule `1`:

Example 6.15. Example output

4. Run the following command to look at address sets in the nbdb:

$ ovn-nbctl find Address_Set 'external_ids{>=}{"k8s.ovn.org/owner-type"=AdminNetworkPolicy,"k8s.ovn.org/name"=cluster-control}'

Example 6.16. Example outputs for Address_Set

Examine the specific address set of the rule by running the following command:

$ ovn-nbctl find Address_Set 'external_ids{>=}{"k8s.ovn.org/owner-type"=AdminNetworkPolicy,direction=Egress,"k8s.ovn.org/name"=cluster-control,gress-index="5"}'

Example 6.17. Example outputs for Address_Set

_uuid : 8fd3b977-6e1c-47aa-82b7-e3e3136c4a72
addresses : ["0.0.0.0/0"]
external_ids : {direction=Egress, gress-index="5", ip-family=v4, "k8s.ovn.org/id"="default-network-controller:AdminNetworkPolicy:cluster-control:Egress:5:v4", "k8s.ovn.org/name"=cluster-control, "k8s.ovn.org/owner-controller"=default-network-controller, "k8s.ovn.org/owner-type"=AdminNetworkPolicy}
name : a11452480169090787059

5. Run the following command to look at the port groups in the nbdb:

$ ovn-nbctl find Port_Group 'external_ids{>=}{"k8s.ovn.org/owner-type"=AdminNetworkPolicy,"k8s.ovn.org/name"=cluster-control}'

Example 6.18. Example outputs for Port_Group

_uuid : f50acf71-7488-4b9a-b7b8-c8a024e99d21
acls : [04f20275-c410-405c-a923-0e677f767889, 0d5e4722-b608-4bb1-b625-23c323cc9926, 1a27d30e-3f96-4915-8ddd-ade7f22c117b, 1a68a5ed-e7f9-47d0-b55c-89184d97e81a, 4b5d836a-e0a3-4088-825e-f9f0ca58e538, 5a6e5bb4-36eb-4209-b8bc-c611983d4624, 5d09957d-d2cc-4f5a-9ddd-b97d9d772023, aa1a224d-7960-4952-bdfb-35246bafbac8, b23a087f-08f8-4225-8c27-4a9a9ee0c407, b7be6472-df67-439c-8c9c-f55929f0a6e0, d14ed5cf-2e06-496e-8cae-6b76d5dd5ccd]
external_ids : {"k8s.ovn.org/id"="default-network-controller:AdminNetworkPolicy:cluster-control", "k8s.ovn.org/name"=cluster-control, "k8s.ovn.org/owner-controller"=default-network-controller, "k8s.ovn.org/owner-type"=AdminNetworkPolicy}
name : a14645450421485494999
ports : [5e75f289-8273-4f8a-8798-8c10f7318833, de7e1b71-6184-445d-93e7-b20acadf41ea]

6.2.6. Best practices for AdminNetworkPolicy

This section provides best practices for the AdminNetworkPolicy and BaselineAdminNetworkPolicy resources.

6.2.6.1. Designing AdminNetworkPolicy

When building AdminNetworkPolicy (ANP) resources, you might consider the following when creating your policies:

- You can create ANPs that have the same priority. If you do create two ANPs at the same priority, ensure that they do not apply overlapping rules to the same traffic. Only one rule per value is applied, and there is no guarantee which rule is applied when there is more than one at the same priority value. Set ANPs at different priorities so that precedence is well defined.

- Administrators must create ANPs that apply to user namespaces, not system namespaces.

Applying ANP and BaselineAdminNetworkPolicy (BANP) to system namespaces (`default`, `kube-system`, any namespace whose name starts with `openshift-`, and so on) is not supported, and this can leave your cluster unresponsive and in a non-functional state.

- Because `0-99` is the supported priority range, you might design your ANP to use a middle range like `30-70`. This leaves room for priorities before and after. Even in the middle range, you might want to leave gaps so that as your infrastructure requirements evolve over time, you are able to insert new ANPs when needed at the right priority level. If you pack your ANPs, then you might need to recreate all of them to accommodate any changes in the future.

- When using `0.0.0.0/0` or `::/0` to create a strong `Deny` policy, ensure that you have higher priority `Allow` or `Pass` rules for essential traffic.

- Use `Allow` as your `action` field when you want to ensure that a connection is allowed no matter what. An `Allow` rule in an ANP means that the connection will always be allowed, and `NetworkPolicy` will be ignored.

- Use `Pass` as your `action` field to delegate the policy decision of allowing or denying the connection to the `NetworkPolicy` layer.

- Ensure that the selectors across multiple rules do not overlap so that the same IPs do not appear in multiple policies, which can cause performance and scale limitations.

- Avoid using `namedPorts` in conjunction with `PortNumber` and `PortRange` because this creates 6 ACLs and causes inefficiencies in your cluster.

6.2.6.1.1. Considerations for using BaselineAdminNetworkPolicy

- You can define only a single `BaselineAdminNetworkPolicy` (BANP) resource within a cluster. The following are supported uses for BANP that administrators might consider in designing their BANP:

  - You can set a default deny policy for cluster-local ingress in user namespaces. This BANP forces developers to add `NetworkPolicy` objects to allow the ingress traffic that they want, and if they do not add network policies for ingress, it is denied.

  - You can set a default deny policy for cluster-local egress in user namespaces. This BANP forces developers to add `NetworkPolicy` objects to allow the egress traffic that they want, and if they do not add network policies, it is denied.

  - You can set a default allow policy for egress to the in-cluster DNS service. Such a BANP ensures that the namespaced users do not have to set an allow egress `NetworkPolicy` to the in-cluster DNS service.

  - You can set an egress policy that allows internal egress traffic to all pods but denies access to all external endpoints (that is, `0.0.0.0/0` and `::/0`). This BANP allows user workloads to send traffic to other in-cluster endpoints, but not to external endpoints by default. Developers can then use `NetworkPolicy` to allow their applications to send traffic to an explicit set of external services.

- Ensure you scope your BANP so that it only denies traffic to user namespaces and not to system namespaces. This is because the system namespaces do not have `NetworkPolicy` objects to override your BANP.

6.2.6.1.2. Differences to consider between AdminNetworkPolicy and NetworkPolicy

- Unlike `NetworkPolicy` objects, you must use explicit labels to reference your workloads within ANP and BANP rather than using the empty (`{}`) catch-all selector, to avoid accidental traffic selection.

An empty namespace selector applied to an infrastructure namespace can make your cluster unresponsive and in a non-functional state.

- In API semantics for ANP, you have to explicitly define allow or deny rules when you create the policy, unlike `NetworkPolicy` objects, which have an implicit deny.

- Unlike `NetworkPolicy` objects, `AdminNetworkPolicy` ingress rules are limited to in-cluster pods and namespaces, so you cannot, and do not need to, set rules for ingress from the host network.

6.3. Network policy

6.3.1. About network policy

As a developer, you can define network policies that restrict traffic to pods in your cluster.

6.3.1.1. About network policy

In a cluster that uses a network plugin that supports a Kubernetes network policy, a NetworkPolicy object controls network isolation. In OpenShift Container Platform 4.16, OpenShift SDN supports using network policy in its default network isolation mode.

- On OpenShift SDN: A network policy does not apply to the host network namespace. Network policy rules do not impact pods configured with host networking enabled. Network policy rules might impact pods connected to host-networked pods.

- On OVN-Kubernetes: Network policies do impact pods that have host networking enabled, so you must explicitly allow a connection to these pods in your network policy rules. If a namespace has any network policy applied, traffic originating from system components, such as `openshift-ingress` or `openshift-kube-apiserver`, is dropped by default. You must explicitly enable this traffic to allow it through.

- Using the `namespaceSelector` field without the `podSelector` field set to `{}` will not include `hostNetwork` pods. You must use the `podSelector` set to `{}` with the `namespaceSelector` field in order to target `hostNetwork` pods when creating network policies.

- Network policies cannot block traffic from localhost or from their resident nodes.

By default, all pods in a project are accessible from other pods and network endpoints. To isolate one or more pods in a project, you can create NetworkPolicy objects in that project to indicate the allowed incoming connections. Project administrators can create and delete NetworkPolicy objects within their own project.

If a pod is matched by selectors in one or more NetworkPolicy objects, then the pod will accept only connections that are allowed by at least one of those NetworkPolicy objects. A pod that is not selected by any NetworkPolicy objects is fully accessible.

A network policy applies to only the Transmission Control Protocol (TCP), User Datagram Protocol (UDP), Internet Control Message Protocol (ICMP), and Stream Control Transmission Protocol (SCTP) protocols. Other protocols are not affected.

The following example NetworkPolicy objects demonstrate supporting different scenarios:

Deny all traffic:

To make a project deny by default, add a NetworkPolicy object that matches all pods but accepts no traffic.

Only allow connections from the OpenShift Container Platform Ingress Controller:

To make a project allow only connections from the OpenShift Container Platform Ingress Controller, add a NetworkPolicy object that admits ingress only from the Ingress Controller.

Only accept connections from pods within a project:

To make pods accept connections from other pods in the same project, but reject all other connections from pods in other projects, add a NetworkPolicy object that admits ingress only from pods in the same namespace.

Important: To allow ingress connections from hostNetwork pods in the same namespace, you need to apply the allow-from-hostnetwork policy together with the allow-same-namespace policy.

Only allow HTTP and HTTPS traffic based on pod labels:

To enable only HTTP and HTTPS access to the pods with a specific label (for example, role=frontend), add a NetworkPolicy object that selects those pods and admits ingress only on the HTTP and HTTPS ports.

Accept connections by using both namespace and pod selectors:

To match network traffic by combining namespace and pod selectors, you can use a NetworkPolicy object that specifies both selectors in the same rule.
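The five scenarios above can be sketched as the following NetworkPolicy objects. This is a minimal illustration under stated assumptions, not the original listings: the object names deny-by-default, allow-from-openshift-ingress, and allow-pod-and-namespace-both, and the test-pods and project labels, are hypothetical choices. The Ingress Controller namespace label is the one this chapter describes as preferred; verify it against the labels in your cluster.

```yaml
# Deny all traffic: select every pod and allow no ingress.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-by-default
spec:
  podSelector: {}
  ingress: []
---
# Only allow connections from the Ingress Controller namespace.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-from-openshift-ingress
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          policy-group.network.openshift.io/ingress: ""
---
# Only accept connections from pods within the same project.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-same-namespace
spec:
  podSelector: {}
  ingress:
  - from:
    - podSelector: {}
---
# Only allow HTTP and HTTPS traffic to pods labeled role=frontend.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-http-and-https
spec:
  podSelector:
    matchLabels:
      role: frontend
  ingress:
  - ports:
    - protocol: TCP
      port: 80
    - protocol: TCP
      port: 443
---
# Combine a namespace selector and a pod selector in one "from" entry,
# so that traffic must match both.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-pod-and-namespace-both
spec:
  podSelector:
    matchLabels:
      name: test-pods            # hypothetical label
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          project: project_name  # hypothetical label
      podSelector:
        matchLabels:
          name: test-pods        # hypothetical label
```

In the last object, namespaceSelector and podSelector sit in the same list element. Placing them in separate list elements would instead allow traffic that matches either selector.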

NetworkPolicy objects are additive, which means you can combine multiple NetworkPolicy objects together to satisfy complex network requirements.

For example, for the NetworkPolicy objects defined in previous samples, you can define both allow-same-namespace and allow-http-and-https policies within the same project. This configuration allows the pods with the label role=frontend to accept any connection allowed by each policy. That is, connections on any port from pods in the same namespace, and connections on ports 80 and 443 from pods in any namespace.

6.3.1.1.1. Using the allow-from-router network policy

Use the following NetworkPolicy to allow external traffic regardless of the router configuration:

The policy-group.network.openshift.io/ingress: "" label supports both OpenShift SDN and OVN-Kubernetes.
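A minimal sketch of an allow-from-router policy, assuming it selects all pods in the project and matches the router namespace by the label described above. The exact field layout is an illustration rather than the original listing.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-router
spec:
  podSelector: {}          # applies to every pod in the project
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          # Label that supports both OpenShift SDN and OVN-Kubernetes.
          policy-group.network.openshift.io/ingress: ""
```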

6.3.1.1.2. Using the allow-from-hostnetwork network policy

Add the following allow-from-hostnetwork NetworkPolicy object to direct traffic from the host network pods.
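A possible shape for the allow-from-hostnetwork policy is sketched here. The policy-group.network.openshift.io/host-network: "" namespace label is an assumption modeled on the ingress label used elsewhere in this chapter; confirm the label that your cluster applies to the host network namespace before relying on it.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-hostnetwork
spec:
  podSelector: {}          # applies to every pod in the project
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          # Assumed label for the host network namespace; verify in your cluster.
          policy-group.network.openshift.io/host-network: ""
```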

6.3.1.2. Optimizations for network policy with OpenShift SDN

Use a network policy to isolate pods that are differentiated from one another by labels within a namespace.

It is inefficient to apply NetworkPolicy objects to large numbers of individual pods in a single namespace. Pod labels do not exist at the IP address level, so a network policy generates a separate Open vSwitch (OVS) flow rule for every possible link between every pod selected with a podSelector.

For example, if the spec podSelector and the ingress podSelector within a NetworkPolicy object each match 200 pods, then 40,000 (200*200) OVS flow rules are generated. This might slow down a node.

When designing your network policy, refer to the following guidelines:

- Reduce the number of OVS flow rules by using namespaces to contain groups of pods that need to be isolated.

  NetworkPolicy objects that select a whole namespace, by using the namespaceSelector or an empty podSelector, generate only a single OVS flow rule that matches the VXLAN virtual network ID (VNID) of the namespace.

- Keep the pods that do not need to be isolated in their original namespace, and move the pods that require isolation into one or more different namespaces.

- Create additional targeted cross-namespace network policies to allow the specific traffic that you do want to allow from the isolated pods.

6.3.1.3. Optimizations for network policy with OVN-Kubernetes network plugin

Learn how to optimize OVN-Kubernetes network policies to reduce flow count and ensure external IP traffic is allowed when needed.

When designing your network policy, refer to the following guidelines:

- For network policies with the same spec.podSelector spec, it is more efficient to use one network policy with multiple ingress or egress rules than multiple network policies with subsets of ingress or egress rules.

- Every ingress or egress rule based on the podSelector or namespaceSelector spec generates a number of OVS flows proportional to the number of pods selected by the network policy plus the number of pods selected by the ingress or egress rule. Therefore, it is preferable to use a podSelector or namespaceSelector spec that can select as many pods as you need in one rule, instead of creating individual rules for every pod.

  For example, a policy that contains two rules can often express those same two rules as one.

  The same guideline applies to the spec.podSelector spec. If you have the same ingress or egress rules for different network policies, it might be more efficient to create one network policy with a common spec.podSelector spec. For example, two such policies can be expressed as one network policy.

  You can apply this optimization only when multiple selectors can be expressed as one. In cases where selectors are based on different labels, it might not be possible to apply this optimization. In those cases, consider applying some new labels specifically for network policy optimization.
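The rule-merging guideline can be illustrated with the following sketch. The policy names and the role label values are hypothetical; the technique shown is the standard Kubernetes matchExpressions selector with the In operator, which lets one selector match several values of the same label.

```yaml
# Before: two ingress rules, one per label value.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
spec:
  podSelector: {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: frontend
  - from:
    - podSelector:
        matchLabels:
          role: backend
---
# After: one ingress rule whose selector matches both label values.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
spec:
  podSelector: {}
  ingress:
  - from:
    - podSelector:
        matchExpressions:
        - {key: role, operator: In, values: [frontend, backend]}
---
# The same idea applied to spec.podSelector: one policy replaces two
# policies that selected different pods but shared the same ingress rule.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: merged-policy
spec:
  podSelector:
    matchExpressions:
    - {key: role, operator: In, values: [db, client]}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: tool
```

The merge works here because both selectors test the same label key. Selectors on different keys cannot be folded into a single In expression, which is why adding a dedicated label can help.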

6.3.1.3.1. NetworkPolicy CR and external IPs in OVN-Kubernetes

In OVN-Kubernetes, the NetworkPolicy custom resource (CR) enforces strict isolation rules. If a service is exposed using an external IP, a network policy can block access from other namespaces unless explicitly configured to allow traffic.

To allow access to external IPs across namespaces, create a NetworkPolicy CR that explicitly permits ingress from the required namespaces and ensures traffic is allowed to the designated service ports. Without allowing traffic to the required ports, access might still be restricted.

In the NetworkPolicy CR, set the following values:

- <policy_name>: Specifies your name for the policy.

- <my_namespace>: Specifies the name of the namespace where the policy is deployed.

For more details, see "About network policy".
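A NetworkPolicy CR that permits cross-namespace ingress to a service exposed on an external IP might look like the following sketch. The <client_namespace> placeholder and the ports 80 and 443 are hypothetical and not taken from this document; the kubernetes.io/metadata.name label is the standard label that Kubernetes sets on every namespace.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: <policy_name>
  namespace: <my_namespace>        # namespace where the policy is deployed
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          # Hypothetical placeholder: the namespace that needs access.
          kubernetes.io/metadata.name: <client_namespace>
    ports:                         # assumed service ports; adjust to your service
    - protocol: TCP
      port: 80
    - protocol: TCP
      port: 443
```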

6.3.1.4. Next steps

6.3.2. Creating a network policy

As a cluster administrator, you can create a network policy for a namespace.

6.3.2.1. Example NetworkPolicy object

The following configuration annotates an example NetworkPolicy object:

where:

- name: The name of the NetworkPolicy object.

- spec.podSelector: A selector that describes the pods to which the policy applies. The policy object can only select pods in the project that defines the NetworkPolicy object.

- ingress.from.podSelector: A selector that matches the pods from which the policy object allows ingress traffic. The selector matches pods in the same namespace as the NetworkPolicy.

- ingress.ports: A list of one or more destination ports on which to accept traffic.
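The fields described above fit together as in the following sketch. The policy name, the app labels, and the port number are hypothetical values chosen only to make the example concrete.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-27017            # name: hypothetical name of the NetworkPolicy object
spec:
  podSelector:                 # spec.podSelector: pods to which the policy applies
    matchLabels:
      app: mongodb             # hypothetical label
  ingress:
  - from:
    - podSelector:             # ingress.from.podSelector: allowed source pods,
        matchLabels:           # matched in the same namespace as the policy
          app: app             # hypothetical label
    ports:                     # ingress.ports: destination ports that accept traffic
    - protocol: TCP
      port: 27017
```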

6.3.2.2. Creating a network policy using the CLI

To define granular rules describing ingress or egress network traffic allowed for namespaces in your cluster, you can create a network policy.

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You logged in to the cluster with a user with admin privileges.

- You are working in the namespace that the network policy applies to.

Procedure

Create a policy rule.

Create a <policy_name>.yaml file:

    $ touch <policy_name>.yaml

where:

- <policy_name>: Specifies the network policy file name.

Define a network policy in the created file. Example policies include the following:

- A policy that denies ingress traffic from all pods in all namespaces. This is a fundamental policy, blocking all cross-pod networking other than cross-pod traffic allowed by the configuration of other network policies.

- A policy that allows ingress traffic from all pods in the same namespace.

- A policy that allows ingress traffic to one pod from a particular namespace. This policy allows traffic to pods that have the pod-a label from pods running in namespace-y.

- A policy that restricts traffic to a service. When applied, this policy ensures that every pod with both labels app=bookstore and role=api can only be accessed by pods with the label app=bookstore. In this example, the application could be a REST API server, marked with labels app=bookstore and role=api.

  This example configuration addresses the following use cases:

  - Restricting the traffic to a service to only the other microservices that need to use it.

  - Restricting the connections to a database to only permit the application using it.
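The pod-a and bookstore examples described in this step can be sketched as follows. The policy names allow-traffic-pod and api-allow, and the pod label key used for the pod-a label, are assumptions; the namespace-y name and the bookstore labels come from the description above.

```yaml
# Allow ingress to pods labeled pod-a from pods running in namespace-y.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-traffic-pod          # hypothetical name
spec:
  podSelector:
    matchLabels:
      pod: pod-a                   # assumed label key for the pod-a label
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: namespace-y
---
# Restrict traffic to a service: pods with app=bookstore and role=api
# accept ingress only from pods labeled app=bookstore.
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: api-allow                  # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: bookstore
      role: api
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: bookstore
```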

To create the network policy object, enter the following command. Successful output lists the name of the policy object and the created status.

    $ oc apply -f <policy_name>.yaml -n <namespace>

where:

- <policy_name>: Specifies the network policy file name.

- <namespace>: Optional parameter. If you defined the object in a different namespace than the current namespace, the parameter specifies the namespace.

Note: If you log in to the web console with cluster-admin privileges, you have a choice of creating a network policy in any namespace in the cluster directly in YAML or from a form in the web console.

6.3.2.3. Creating a default deny all network policy

The default deny all network policy blocks all cross-pod networking other than network traffic allowed by the configuration of other deployed network policies and traffic between host-networked pods. This procedure enforces a strong deny policy by applying a deny-by-default policy in the my-project namespace.

Without configuring a NetworkPolicy custom resource (CR) that allows traffic communication, the following policy might cause communication problems across your cluster.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You logged in to the cluster with a user with admin privileges.

- You are working in the namespace that the network policy applies to.

Procedure

Create YAML that defines a deny-by-default policy to deny ingress from all pods in all namespaces. Save the YAML in the deny-by-default.yaml file. The policy uses the following fields:

- namespace: Specifies the namespace in which to deploy the policy. For example, the my-project namespace.

- podSelector: If this field is empty, the configuration matches all the pods. Therefore, the policy applies to all pods in the my-project namespace.

- ingress: Where [] indicates that no ingress rules are specified. This causes incoming traffic to be dropped to all pods.
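The fields described above correspond to a file like the following sketch of deny-by-default.yaml. The layout is an illustration built from those field descriptions rather than the original listing.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-by-default
  namespace: my-project    # namespace in which to deploy the policy
spec:
  podSelector: {}          # empty selector: matches all pods in the namespace
  ingress: []              # no ingress rules: incoming traffic is dropped
```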

Apply the policy by entering the following command. Successful output lists the name of the policy object and the created status.

    $ oc apply -f deny-by-default.yaml

6.3.2.4. Creating a network policy to allow traffic from external clients

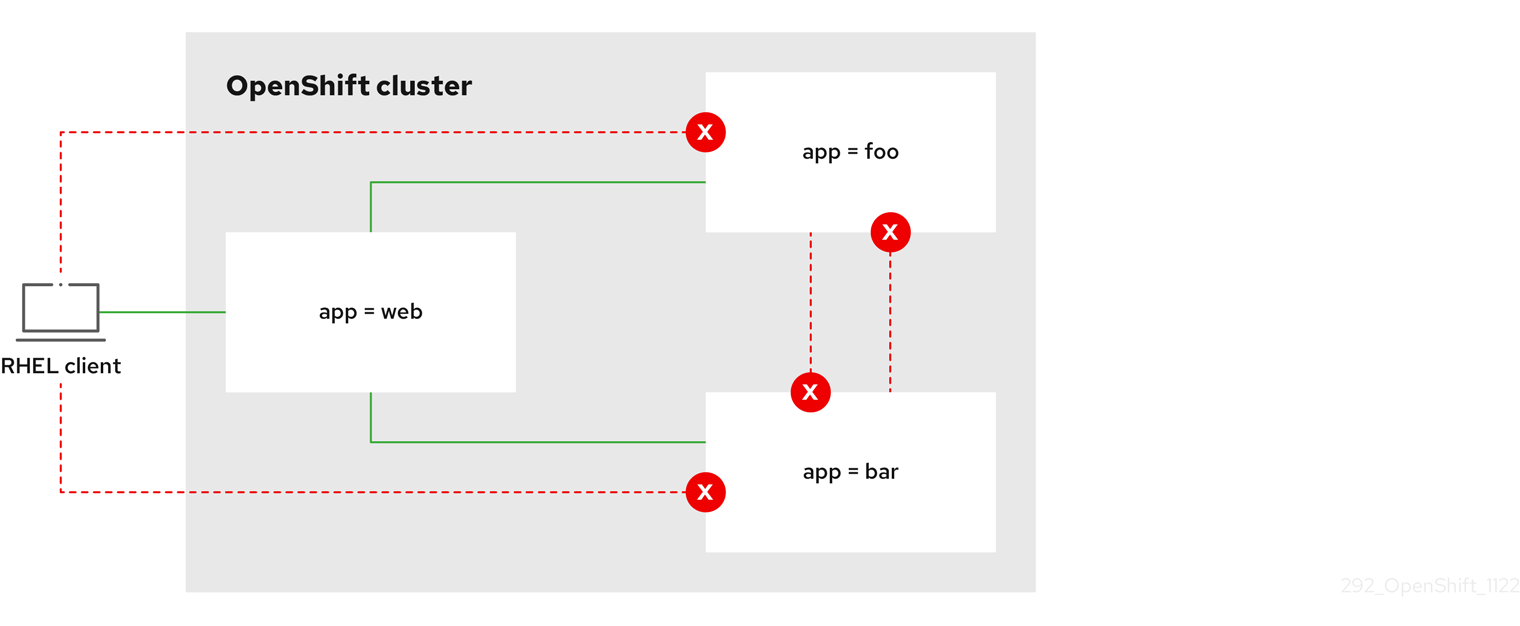

With the deny-by-default policy in place you can proceed to configure a policy that allows traffic from external clients to a pod with the label app=web.

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Follow this procedure to configure a policy that allows external services, either directly from the public Internet or through a load balancer, to access the pod. Traffic is only allowed to a pod with the label app=web.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You logged in to the cluster with a user with admin privileges.

- You are working in the namespace that the network policy applies to.

Procedure

Create a policy that allows traffic from the public Internet directly or by using a load balancer to access the pod. Save the YAML in the web-allow-external.yaml file.

Apply the policy by entering the following command. Successful output lists the name of the policy object and the created status.

    $ oc apply -f web-allow-external.yaml

This policy allows traffic from all resources, including external traffic, as illustrated in the diagram shown earlier in this section.
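The web-allow-external.yaml file from this procedure can be sketched as follows. The default namespace is an assumption carried over from the other procedures in this section. An ingress rule that is an empty object places no restriction on sources or ports, so it admits traffic from any origin to the selected pods.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: web-allow-external
  namespace: default       # assumed namespace
spec:
  policyTypes:
  - Ingress
  podSelector:
    matchLabels:
      app: web             # only pods with the label app=web are selected
  ingress:
  - {}                     # empty rule: allow ingress from all sources
```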

6.3.2.5. Creating a network policy allowing traffic to an application from all namespaces

You can configure a policy that allows traffic from all pods in all namespaces to a particular application.

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You logged in to the cluster with a user with admin privileges.

- You are working in the namespace that the network policy applies to.

Procedure

Create a policy that allows traffic from all pods in all namespaces to a particular application. Save the YAML in the web-allow-all-namespaces.yaml file. The policy uses the following fields:

- app: Applies the policy only to app:web pods in the default namespace.

- namespaceSelector: Selects all pods in all namespaces.

Note: By default, if you do not specify a namespaceSelector parameter in the policy object, no namespaces get selected. This means the policy allows traffic only from the namespace where the network policy is deployed.
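A sketch of the web-allow-all-namespaces.yaml file, assembled from the field descriptions above. An empty namespaceSelector matches every namespace, which is what opens the application to pods cluster-wide.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: web-allow-all-namespaces
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web               # applies only to app:web pods in the default namespace
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector: {}  # empty selector: all pods in all namespaces
```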

Apply the policy by entering the following command. Successful output lists the name of the policy object and the created status.

    $ oc apply -f web-allow-all-namespaces.yaml

Verification

Start a web service in the default namespace by entering the following command:

    $ oc run web --namespace=default --image=nginx --labels="app=web" --expose --port=80

Run the following command to deploy an alpine image in the secondary namespace and to start a shell:

    $ oc run test-$RANDOM --namespace=secondary --rm -i -t --image=alpine -- sh

Run the following command in the shell and observe that the service allows the request:

    # wget -qO- --timeout=2 http://web.default

6.3.2.6. Creating a network policy allowing traffic to an application from a namespace

You can configure a policy that allows traffic to a pod with the label app=web from a particular namespace. This configuration is useful in the following use cases:

- Restrict traffic to a production database only to namespaces that have production workloads deployed.

- Enable monitoring tools deployed to a particular namespace to scrape metrics from the current namespace.

If you log in with a user with the cluster-admin role, then you can create a network policy in any namespace in the cluster.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You logged in to the cluster with a user with admin privileges.

- You are working in the namespace that the network policy applies to.

Procedure

Create a policy that allows traffic from all pods in namespaces that have the label purpose=production. Save the YAML in the web-allow-prod.yaml file. The policy uses the following fields:

- app: Applies the policy only to app:web pods in the default namespace.

- purpose: Restricts traffic to only pods in namespaces that have the label purpose=production.
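A sketch of the web-allow-prod.yaml file, assembled from the field descriptions above. Only the namespaceSelector differs from a policy that admits all namespaces: it now requires the purpose=production label on the source namespace.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: web-allow-prod
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web                 # applies only to app:web pods in the default namespace
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          purpose: production  # only namespaces labeled purpose=production
```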

Apply the policy by entering the following command. Successful output lists the name of the policy object and the created status.

    $ oc apply -f web-allow-prod.yaml

Verification

Start a web service in the default namespace by entering the following command:

    $ oc run web --namespace=default --image=nginx --labels="app=web" --expose --port=80

Run the following command to create the prod namespace:

    $ oc create namespace prod

Run the following command to label the prod namespace:

    $ oc label namespace/prod purpose=production

Run the following command to create the dev namespace:

    $ oc create namespace dev

Run the following command to label the dev namespace:

    $ oc label namespace/dev purpose=testing

Run the following command to deploy an alpine image in the dev namespace and to start a shell:

    $ oc run test-$RANDOM --namespace=dev --rm -i -t --image=alpine -- sh

Run the following command in the shell and observe the reason for the blocked request. For example, expected output states wget: download timed out.

    # wget -qO- --timeout=2 http://web.default

Run the following command to deploy an alpine image in the prod namespace and start a shell:

    $ oc run test-$RANDOM --namespace=prod --rm -i -t --image=alpine -- sh

Run the following command in the shell and observe that the request is allowed:

    # wget -qO- --timeout=2 http://web.default

6.3.3. Viewing a network policy

As a cluster administrator, you can view a network policy for a namespace.

6.3.3.1. Example NetworkPolicy object

The following configuration annotates an example NetworkPolicy object:

where:

- name: The name of the NetworkPolicy object.

- spec.podSelector: A selector that describes the pods to which the policy applies. The policy object can only select pods in the project that defines the NetworkPolicy object.

- ingress.from.podSelector: A selector that matches the pods from which the policy object allows ingress traffic. The selector matches pods in the same namespace as the NetworkPolicy.

- ingress.ports: A list of one or more destination ports on which to accept traffic.

6.3.3.2. Viewing network policies using the CLI

You can examine the network policies in a namespace.

If you log in with cluster-admin privileges, you can edit network policies in any namespace in the cluster. In the web console, you can edit policies directly in YAML or by using the Actions menu.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with admin privileges.

- You are working in the namespace where the network policy exists.

Procedure

List network policies in a namespace.

To view network policy objects defined in a namespace, enter the following command:

    $ oc get networkpolicy

Optional: To examine a specific network policy, enter the following command:

    $ oc describe networkpolicy <policy_name> -n <namespace>

where:

- <policy_name>: Specifies the name of the network policy to inspect.

- <namespace>: Optional. Specifies the namespace if the object is defined in a different namespace than the current namespace.

For example:

    $ oc describe networkpolicy allow-same-namespace

6.3.4. Editing a network policy

As a cluster administrator, you can edit an existing network policy for a namespace.

6.3.4.1. Editing a network policy

To modify existing policy configurations, you can edit a network policy in a namespace. Edit policies by modifying the policy file and applying it with oc apply, or by using the oc edit command directly.

If you log in with cluster-admin privileges, you can edit network policies in any namespace in the cluster. In the web console, you can edit policies directly in YAML or by using the Actions menu.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with admin privileges.

- You are working in the namespace where the network policy exists.

Procedure

Optional: To list the network policy objects in a namespace, enter the following command:

    $ oc get networkpolicy -n <namespace>

where:

- <namespace>: Optional. Specifies the namespace if the object is defined in a different namespace than the current namespace.

Edit the network policy object.

If you saved the network policy definition in a file, edit the file and make any necessary changes, and then enter the following command:

    $ oc apply -n <namespace> -f <policy_file>.yaml

where:

- <namespace>: Optional. Specifies the namespace if the object is defined in a different namespace than the current namespace.

- <policy_file>: Specifies the name of the file containing the network policy.

If you need to update the network policy object directly, enter the following command:

    $ oc edit networkpolicy <policy_name> -n <namespace>

where:

- <policy_name>: Specifies the name of the network policy.

- <namespace>: Optional. Specifies the namespace if the object is defined in a different namespace than the current namespace.

Confirm that the network policy object is updated:

    $ oc describe networkpolicy <policy_name> -n <namespace>

where:

- <policy_name>: Specifies the name of the network policy.

- <namespace>: Optional. Specifies the namespace if the object is defined in a different namespace than the current namespace.

6.3.4.2. Example NetworkPolicy object

The following configuration annotates an example NetworkPolicy object:

where:

- name: The name of the NetworkPolicy object.

- spec.podSelector: A selector that describes the pods to which the policy applies. The policy object can only select pods in the project that defines the NetworkPolicy object.

- ingress.from.podSelector: A selector that matches the pods from which the policy object allows ingress traffic. The selector matches pods in the same namespace as the NetworkPolicy.

- ingress.ports: A list of one or more destination ports on which to accept traffic.

6.3.5. Deleting a network policy

As a cluster administrator, you can delete a network policy from a namespace.

6.3.5.1. Deleting a network policy using the CLI

You can delete a network policy in a namespace.

If you log in with cluster-admin privileges, you can delete network policies in any namespace in the cluster. In the web console, you can delete policies directly in YAML or by using the Actions menu.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You logged in to the cluster with a user with admin privileges.

- You are working in the namespace where the network policy exists.

Procedure

To delete a network policy object, enter the following command. Successful output lists the name of the policy object and the deleted status.

    $ oc delete networkpolicy <policy_name> -n <namespace>

where:

- <policy_name>: Specifies the name of the network policy.

- <namespace>: Optional parameter. If you defined the object in a different namespace than the current namespace, the parameter specifies the namespace.

6.3.6. Defining a default network policy for projects

As a cluster administrator, you can modify the new project template to automatically include network policies when you create a new project. If you do not yet have a customized template for new projects, you must first create one.

6.3.6.1. Modifying the template for new projects

As a cluster administrator, you can modify the default project template so that new projects are created using your custom requirements.

To create your own custom project template:

Prerequisites

- You have access to an OpenShift Container Platform cluster using an account with cluster-admin permissions.

Procedure

- Log in as a user with cluster-admin privileges.

- Generate the default project template:

      $ oc adm create-bootstrap-project-template -o yaml > template.yaml

- Use a text editor to modify the generated template.yaml file by adding objects or modifying existing objects.

- The project template must be created in the openshift-config namespace. Load your modified template:

      $ oc create -f template.yaml -n openshift-config

- Edit the project configuration resource using the web console or CLI.

  Using the web console:

  - Navigate to the Administration → Cluster Settings page.

  - Click Configuration to view all configuration resources.

  - Find the entry for Project and click Edit YAML.

  Using the CLI:

  - Edit the project.config.openshift.io/cluster resource:

        $ oc edit project.config.openshift.io/cluster

- Update the spec section to include the projectRequestTemplate and name parameters, and set the name of your uploaded project template. The default name is project-request.

- After you save your changes, create a new project to verify that your changes were successfully applied.
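The project configuration resource with a custom project template, as described in the spec update step of this procedure, can be sketched as follows. The apiVersion and metadata shown are what the project.config.openshift.io/cluster resource is expected to carry; treat them as assumptions and keep whatever values oc edit displays for your cluster.

```yaml
apiVersion: config.openshift.io/v1
kind: Project
metadata:
  name: cluster
spec:
  projectRequestTemplate:
    name: project-request    # name of the template loaded into openshift-config
```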

6.3.6.2. Adding network policies to the new project template

As a cluster administrator, you can add network policies to the default template for new projects. OpenShift Container Platform will automatically create all the NetworkPolicy objects specified in the template in the project.

Prerequisites

- Your cluster uses a default CNI network plugin that supports NetworkPolicy objects, such as the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.

- You installed the OpenShift CLI (oc).

- You must log in to the cluster with a user with cluster-admin privileges.

- You must have created a custom default project template for new projects.

Procedure

Edit the default template for a new project by running the following command:

    $ oc edit template <project_template> -n openshift-config

Replace <project_template> with the name of the default template that you configured for your cluster. The default template name is project-request.

In the template, add each NetworkPolicy object as an element to the objects parameter. The objects parameter accepts a collection of one or more objects. For example, the objects parameter collection can include several NetworkPolicy objects.

Optional: Create a new project and confirm the successful creation of your network policy objects.

Create a new project:

    $ oc new-project <project>

Replace <project> with the name for the project you are creating.

Confirm that the network policy objects in the new project template exist in the new project:

    $ oc get networkpolicy

Expected output:

    NAME                           POD-SELECTOR   AGE
    allow-from-openshift-ingress   <none>         7s
    allow-from-same-namespace      <none>         7s
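The objects parameter described in this procedure can be sketched as the following template excerpt. The two policy names match the expected output above; the rule bodies are assumptions based on the allow-same-namespace and Ingress Controller policies described earlier in this chapter. The excerpt shows only the added elements, not the complete template.

```yaml
objects:
# Objects generated by the bootstrap template stay in place;
# the NetworkPolicy objects are added as further list elements.
- apiVersion: networking.k8s.io/v1
  kind: NetworkPolicy
  metadata:
    name: allow-from-same-namespace
  spec:
    podSelector: {}
    ingress:
    - from:
      - podSelector: {}
- apiVersion: networking.k8s.io/v1
  kind: NetworkPolicy
  metadata:
    name: allow-from-openshift-ingress
  spec:
    podSelector: {}
    policyTypes:
    - Ingress
    ingress:
    - from:
      - namespaceSelector:
          matchLabels:
            policy-group.network.openshift.io/ingress: ""
```

Both policies use an empty podSelector, which is consistent with the <none> value in the POD-SELECTOR column of the expected output.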

6.3.7. Configuring multitenant isolation with network policy

As a cluster administrator, you can configure your network policies to provide multitenant network isolation.

If you are using the OpenShift SDN network plugin, configuring network policies as described in this section provides network isolation similar to multitenant mode but with network policy mode set.

6.3.7.1. Configuring multitenant isolation by using network policy

You can configure your project to isolate it from pods and services in other project namespaces.

Prerequisites

- Your cluster uses a network plugin that supports NetworkPolicy objects, such as the OVN-Kubernetes network plugin or the OpenShift SDN network plugin with mode: NetworkPolicy set. This mode is the default for OpenShift SDN.
- You installed the OpenShift CLI (oc).
- You are logged in to the cluster with a user with admin privileges.

Procedure

Create the following NetworkPolicy objects:

- A policy named allow-from-openshift-ingress.

  Note: policy-group.network.openshift.io/ingress: "" is the preferred namespace selector label for OpenShift SDN. You can use the network.openshift.io/policy-group: ingress namespace selector label, but this is a legacy label.

- A policy named allow-from-openshift-monitoring.
- A policy named allow-same-namespace.
- A policy named allow-from-kube-apiserver-operator.

  For more details, see New kube-apiserver-operator webhook controller validating health of webhook.
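As a rough illustration of two of the policies named above, the following Python sketch builds the allow-from-openshift-ingress and allow-same-namespace objects as dictionaries and prints them as a JSON list. The label key comes from the note above. The empty podSelector, the Ingress policy type, and the helper name network_policy are assumptions made for illustration and are not the documented manifests.

```python
import json


def network_policy(name, from_peers):
    """Return a NetworkPolicy that admits ingress from the given peers.

    Assumption: the policy selects every pod in the namespace (empty
    podSelector) and only restricts ingress traffic.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [{"from": from_peers}],
        },
    }


# Admit traffic from namespaces that carry the preferred ingress label.
allow_from_ingress = network_policy(
    "allow-from-openshift-ingress",
    [{"namespaceSelector": {"matchLabels": {
        "policy-group.network.openshift.io/ingress": ""}}}],
)

# Admit traffic from any pod in the same namespace.
allow_same_namespace = network_policy(
    "allow-same-namespace",
    [{"podSelector": {}}],
)

manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [allow_from_ingress, allow_same_namespace],
}

print(json.dumps(manifest, indent=2))
```

If the output is saved to a file, it could then be applied in the target project with a command such as oc apply -f <file>, where <file> is a placeholder for the file name that you chose.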

Optional: To confirm that the network policies exist in your current project, enter the following command:

$ oc describe networkpolicy

6.3.7.2. Next steps

6.4. Audit logging for network security

The OVN-Kubernetes network plugin uses Open Virtual Network (OVN) access control lists (ACLs) to manage AdminNetworkPolicy, BaselineAdminNetworkPolicy, NetworkPolicy, and EgressFirewall objects. Audit logging exposes allow and deny ACL events for NetworkPolicy, EgressFirewall and BaselineAdminNetworkPolicy custom resources (CR). Logging also exposes allow, deny, and pass ACL events for AdminNetworkPolicy (ANP) CR.

Audit logging is available for only the OVN-Kubernetes network plugin.

6.4.1. Audit configuration

The configuration for audit logging is specified as part of the OVN-Kubernetes cluster network provider configuration. The following YAML illustrates the default values for the audit logging:

Audit logging configuration

The following table describes the configuration fields for audit logging.

| Field | Type | Description |
|---|---|---|
| rateLimit | integer | The maximum number of messages to generate every second per node. |
| maxFileSize | integer | The maximum size for the audit log in bytes. |
| maxLogFiles | integer | The maximum number of log files that are retained. |
| destination | string | An additional audit log target, such as a syslog server or a UNIX domain socket. |
| syslogFacility | string | The syslog facility. The default value is local0. |
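To show where these fields sit in the cluster network configuration, the following Python sketch assembles a merge-patch body for the policyAuditConfig object. The field names (rateLimit, maxFileSize, maxLogFiles, destination, syslogFacility) and the nesting under spec.defaultNetwork.ovnKubernetesConfig are assumed to match the fields described in the table. Every value passed in the example call is illustrative only and is not a documented default, apart from the local0 facility. The function name build_audit_config_patch is hypothetical.

```python
import json


def build_audit_config_patch(rate_limit, max_file_size, max_log_files,
                             destination, syslog_facility):
    """Build a merge-patch body that sets the audit logging fields."""
    if rate_limit < 0 or max_file_size <= 0 or max_log_files <= 0:
        raise ValueError("numeric audit settings must be positive")
    return {
        "spec": {
            "defaultNetwork": {
                "ovnKubernetesConfig": {
                    "policyAuditConfig": {
                        "rateLimit": rate_limit,          # messages per second per node
                        "maxFileSize": max_file_size,     # bytes
                        "maxLogFiles": max_log_files,     # retained log files
                        "destination": destination,       # additional log target
                        "syslogFacility": syslog_facility,
                    }
                }
            }
        }
    }


# Illustrative values only; the destination string format is an assumption.
patch = build_audit_config_patch(
    rate_limit=10,
    max_file_size=1_000_000,
    max_log_files=3,
    destination="udp:192.0.2.10:514",
    syslog_facility="local0",
)

print(json.dumps(patch))
```

The printed JSON is the kind of body that could be supplied when you edit or patch the cluster network operator configuration. Check the field reference for your cluster version before you apply it.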

6.4.2. Audit logging

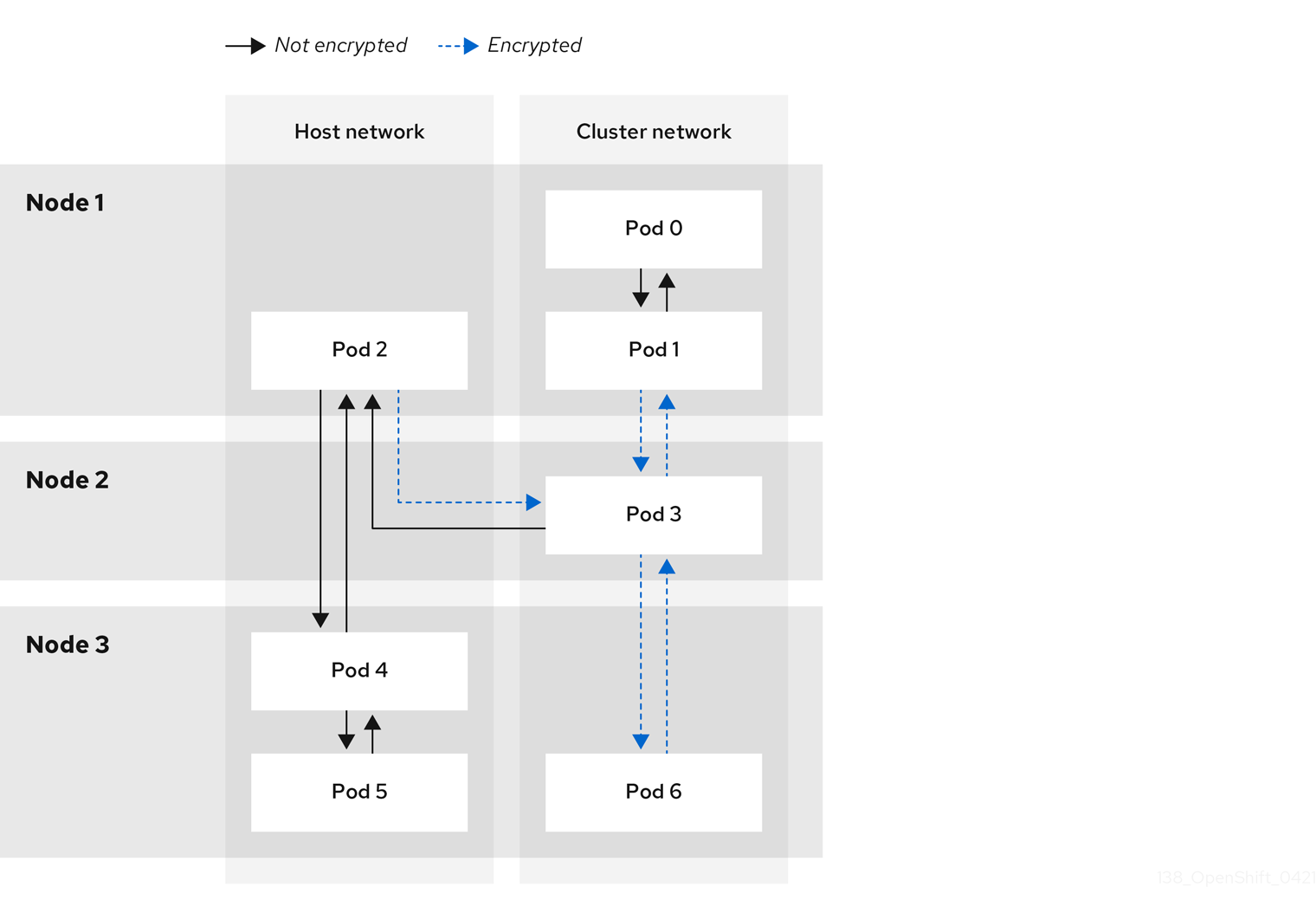

You can configure the destination for audit logs, such as a syslog server or a UNIX domain socket. Regardless of any additional configuration, an audit log is always saved to /var/log/ovn/acl-audit-log.log on each OVN-Kubernetes pod in the cluster.

You can enable audit logging for each namespace by annotating each namespace configuration with a k8s.ovn.org/acl-logging section. In the k8s.ovn.org/acl-logging section, you must specify allow, deny, or both values to enable audit logging for a namespace.

A network policy does not support setting the Pass action as a rule.

The ACL-logging implementation logs access control list (ACL) events for a network. You can view these logs to analyze any potential security issues.

Example namespace annotation
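As a minimal sketch of how such an annotation value could be assembled, the following Python function builds the k8s.ovn.org/acl-logging annotation as a JSON string with allow, deny, or both keys. The set of severity names (alert, warning, notice, info, debug) and the function name build_acl_logging_annotation are assumptions for illustration. Confirm the accepted severity values for your cluster version.

```python
import json

ANNOTATION_KEY = "k8s.ovn.org/acl-logging"
ACTIONS = {"allow", "deny"}
# Assumption: severity names accepted by the ACL logging annotation.
SEVERITIES = {"alert", "warning", "notice", "info", "debug"}


def build_acl_logging_annotation(**levels):
    """Return {annotation key: JSON value} for the given action severities.

    At least one of the allow or deny actions must be supplied.
    """
    if not levels:
        raise ValueError("specify allow, deny, or both")
    for action, severity in levels.items():
        if action not in ACTIONS:
            raise ValueError(f"unsupported action: {action}")
        if severity not in SEVERITIES:
            raise ValueError(f"unsupported severity: {severity}")
    return {ANNOTATION_KEY: json.dumps(levels, sort_keys=True)}


annotation = build_acl_logging_annotation(deny="alert", allow="notice")
print(annotation[ANNOTATION_KEY])
```

The resulting key and value pair belongs under metadata.annotations of the Namespace object for which you want audit logging.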

To view the default ACL logging configuration values, see the policyAuditConfig object in the cluster-network-03-config.yml file. If required, you can change the ACL logging configuration values for log file parameters in this file.

The logging message format is compatible with syslog as defined by RFC 5424. The syslog facility is configurable and defaults to local0. The following example shows the key parameters and their values that a log message outputs:

Example logging message that outputs parameters and their values

<timestamp>|<message_serial>|acl_log(ovn_pinctrl0)|<severity>|name="<acl_name>", verdict="<verdict>", severity="<severity>", direction="<direction>": <flow>

Where:

- <timestamp> states the time and date for the creation of a log message.
- <message_serial> lists the serial number for a log message.
- acl_log(ovn_pinctrl0) is a literal string that prints the location of the log message in the OVN-Kubernetes plugin.
- <severity> sets the severity level for a log message. If you enable audit logging that supports allow and deny tasks, two severity levels show in the log message output.
- <acl_name> states the name of the ACL-logging implementation in the OVN Northbound Database (nbdb) that was created by the network policy.
- <verdict> can be either allow or drop.
- <direction> can be either to-lport or from-lport to indicate that the policy was applied to traffic going to or away from a pod.
- <flow> shows packet information in a format equivalent to the OpenFlow protocol. This parameter comprises Open vSwitch (OVS) fields.
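The message layout described above can be split into its named parts with a regular expression. The following Python sketch is one possible way to do that and is not part of OVN-Kubernetes. The function name parse_acl_log is hypothetical, and the pattern accepts the verdict, severity, and direction values with or without surrounding quotation marks.

```python
import re

# One named group per parameter in the documented message layout.
ACL_LOG_PATTERN = re.compile(
    r'^(?P<timestamp>[^|]+)\|(?P<message_serial>[^|]+)\|'
    r'(?P<module>[^|]+)\|(?P<log_level>[^|]+)\|'
    r'name="(?P<acl_name>[^"]*)", '
    r'verdict="?(?P<verdict>[\w-]+)"?, '
    r'severity="?(?P<severity>\w+)"?, '
    r'direction="?(?P<direction>[\w-]+)"?: '
    r'(?P<flow>.*)$'
)


def parse_acl_log(line):
    """Return the parts of one ACL audit log line as a dictionary."""
    match = ACL_LOG_PATTERN.match(line.strip())
    if match is None:
        raise ValueError("line does not match the ACL log layout")
    return match.groupdict()


sample = (
    '2023-11-02T16:28:54.139Z|00004|acl_log(ovn_pinctrl0)|INFO|'
    'name="NP:verify-audit-logging:Ingress", verdict=drop, severity=alert, '
    'direction=to-lport: tcp,vlan_tci=0x0000,nw_src=10.131.0.39,'
    'nw_dst=10.129.2.35,tp_dst=8080,tcp_flags=syn'
)
entry = parse_acl_log(sample)
print(entry["acl_name"], entry["verdict"], entry["direction"])
```

Because the flow portion can itself contain a vertical bar when several TCP flags are set, the pattern anchors on the first four separators instead of splitting the whole line on that character.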

The following example shows OVS fields that the flow parameter uses to extract packet information from system memory:

Example of OVS fields used by the flow parameter to extract packet information

<proto>,vlan_tci=0x0000,dl_src=<src_mac>,dl_dst=<dst_mac>,nw_src=<source_ip>,nw_dst=<target_ip>,nw_tos=<tos_dscp>,nw_ecn=<tos_ecn>,nw_ttl=<ip_ttl>,nw_frag=<fragment>,tp_src=<tcp_src_port>,tp_dst=<tcp_dst_port>,tcp_flags=<tcp_flags>

Where:

- <proto> states the protocol. Valid values are tcp and udp.
- vlan_tci=0x0000 states the VLAN header as 0 because a VLAN ID is not set for internal pod network traffic.
- <src_mac> specifies the source Media Access Control (MAC) address.
- <dst_mac> specifies the destination MAC address.
- <source_ip> lists the source IP address.
- <target_ip> lists the target IP address.
- <tos_dscp> states Differentiated Services Code Point (DSCP) values to classify and prioritize certain network traffic over other traffic.
- <tos_ecn> states Explicit Congestion Notification (ECN) values that indicate any congested traffic in your network.
- <ip_ttl> states the Time To Live (TTL) information for a packet.
- <fragment> specifies what type of IP fragments or IP non-fragments to match.
- <tcp_src_port> shows the source port for TCP and UDP protocols.
- <tcp_dst_port> lists the destination port for TCP and UDP protocols.
- <tcp_flags> supports numerous flags such as SYN, ACK, PSH and so on. If you need to set multiple values, separate each value with a vertical bar (|). The UDP protocol does not support this parameter.

For more information about the previous field descriptions, go to the OVS manual page for ovs-fields.
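The flow portion of a log entry is a comma-separated list that starts with the protocol and continues with key=value pairs. As a small sketch, the following Python function turns that string into a dictionary and splits multiple TCP flags on the vertical bar. The function name parse_flow is hypothetical, and all values are kept as strings.

```python
def parse_flow(flow):
    """Split the flow part of an ACL log entry into a dictionary.

    The first element is the protocol. Every later element is a
    key=value pair. Multiple TCP flags are separated by a vertical bar.
    """
    proto, _, rest = flow.strip().partition(",")
    fields = {"proto": proto}
    for item in rest.split(","):
        key, sep, value = item.partition("=")
        if sep:  # skip anything that is not a key=value pair
            fields[key] = value
    if "tcp_flags" in fields:
        fields["tcp_flags"] = fields["tcp_flags"].split("|")
    return fields


flow = (
    "tcp,vlan_tci=0x0000,dl_src=0a:58:0a:81:02:01,dl_dst=0a:58:0a:81:02:23,"
    "nw_src=10.131.0.39,nw_dst=10.129.2.35,nw_tos=0,nw_ecn=0,nw_ttl=62,"
    "nw_frag=no,tp_src=58496,tp_dst=8080,tcp_flags=syn"
)
fields = parse_flow(flow)
print(fields["proto"], fields["nw_src"], fields["nw_dst"], fields["tp_dst"])
```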

Example ACL deny log entry for a network policy


2023-11-02T16:28:54.139Z|00004|acl_log(ovn_pinctrl0)|INFO|name="NP:verify-audit-logging:Ingress", verdict=drop, severity=alert, direction=to-lport: tcp,vlan_tci=0x0000,dl_src=0a:58:0a:81:02:01,dl_dst=0a:58:0a:81:02:23,nw_src=10.131.0.39,nw_dst=10.129.2.35,nw_tos=0,nw_ecn=0,nw_ttl=62,nw_frag=no,tp_src=58496,tp_dst=8080,tcp_flags=syn

2023-11-02T16:28:55.187Z|00005|acl_log(ovn_pinctrl0)|INFO|name="NP:verify-audit-logging:Ingress", verdict=drop, severity=alert, direction=to-lport: tcp,vlan_tci=0x0000,dl_src=0a:58:0a:81:02:01,dl_dst=0a:58:0a:81:02:23,nw_src=10.131.0.39,nw_dst=10.129.2.35,nw_tos=0,nw_ecn=0,nw_ttl=62,nw_frag=no,tp_src=58496,tp_dst=8080,tcp_flags=syn

2023-11-02T16:28:57.235Z|00006|acl_log(ovn_pinctrl0)|INFO|name="NP:verify-audit-logging:Ingress", verdict=drop, severity=alert, direction=to-lport: tcp,vlan_tci=0x0000,dl_src=0a:58:0a:81:02:01,dl_dst=0a:58:0a:81:02:23,nw_src=10.131.0.39,nw_dst=10.129.2.35,nw_tos=0,nw_ecn=0,nw_ttl=62,nw_frag=no,tp_src=58496,tp_dst=8080,tcp_flags=syn

The following table describes namespace annotation values:

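| Field | Description |
|---|---|
| deny | Blocks namespace access to any traffic that matches an ACL rule with the deny action. |
| allow | Permits namespace access to any traffic that matches an ACL rule with the allow action. |
| pass | A pass action applies only to an ACL rule of an admin network policy. A network policy does not support the pass action. |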

6.4.3. AdminNetworkPolicy audit logging

Audit logging is enabled per AdminNetworkPolicy CR by annotating the ANP with the k8s.ovn.org/acl-logging key, as in the following example:

Example 6.19. Example of annotation for AdminNetworkPolicy CR

Logs are generated whenever a specific OVN ACL is hit and meets the action criteria set in your logging annotation. For example, a log is generated when any of the namespaces with the label tenant: product-development accesses the namespaces with the label tenant: backend-storage.

ACL logging is limited to 60 characters. If your ANP name field is long, the rest of the log will be truncated.

The following is a direction index for the example log entries that follow:

| Direction | Rule |
|---|---|
| Ingress | |
| Egress | |

Example 6.20. Example ACL log entry for Allow action of the AdminNetworkPolicy named anp-tenant-log with Ingress:0 and Egress:0


2024-06-10T16:27:45.194Z|00052|acl_log(ovn_pinctrl0)|INFO|name="ANP:anp-tenant-log:Ingress:0", verdict=allow, severity=alert, direction=to-lport: tcp,vlan_tci=0x0000,dl_src=0a:58:0a:80:02:1a,dl_dst=0a:58:0a:80:02:19,nw_src=10.128.2.26,nw_dst=10.128.2.25,nw_tos=0,nw_ecn=0,nw_ttl=64,nw_frag=no,tp_src=57814,tp_dst=8080,tcp_flags=syn

2024-06-10T16:28:23.130Z|00059|acl_log(ovn_pinctrl0)|INFO|name="ANP:anp-tenant-log:Ingress:0", verdict=allow, severity=alert, direction=to-lport: tcp,vlan_tci=0x0000,dl_src=0a:58:0a:80:02:18,dl_dst=0a:58:0a:80:02:19,nw_src=10.128.2.24,nw_dst=10.128.2.25,nw_tos=0,nw_ecn=0,nw_ttl=64,nw_frag=no,tp_src=38620,tp_dst=8080,tcp_flags=ack