This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.Chapter 23. Load balancing with MetalLB

23.1. About MetalLB and the MetalLB Operator

As a cluster administrator, you can add the MetalLB Operator to your cluster so that when a service of type LoadBalancer is added to the cluster, MetalLB can add a fault-tolerant external IP address for the service. The external IP address is added to the host network for your cluster.

23.1.1. When to use MetalLB

Using MetalLB is valuable when you have a bare-metal cluster, or an infrastructure that is like bare metal, and you want fault-tolerant access to an application through an external IP address.

You must configure your networking infrastructure to ensure that network traffic for the external IP address is routed from clients to the host network for the cluster.

After deploying MetalLB with the MetalLB Operator, when you add a service of type LoadBalancer, MetalLB provides a platform-native load balancer.

23.1.2. MetalLB Operator custom resources

The MetalLB Operator monitors its own namespace for two custom resources:

MetalLB-

When you add a

MetalLBcustom resource to the cluster, the MetalLB Operator deploys MetalLB on the cluster. The Operator only supports a single instance of the custom resource. If the instance is deleted, the Operator removes MetalLB from the cluster. AddressPool-

MetalLB requires one or more pools of IP addresses that it can assign to a service when you add a service of type

LoadBalancer. When you add anAddressPoolcustom resource to the cluster, the MetalLB Operator configures MetalLB so that it can assign IP addresses from the pool. An address pool includes a list of IP addresses. The list can be a single IP address that is set using a range, such as 1.1.1.1-1.1.1.1, a range specified in CIDR notation, a range specified as a starting and ending address separated by a hyphen, or a combination of the three. An address pool requires a name. The documentation uses names likedoc-example,doc-example-reserved, anddoc-example-ipv6. An address pool specifies whether MetalLB can automatically assign IP addresses from the pool or whether the IP addresses are reserved for services that explicitly specify the pool by name.

After you add the MetalLB custom resource to the cluster and the Operator deploys MetalLB, the MetalLB software components, controller and speaker, begin running.

23.1.3. MetalLB software components

When you install the MetalLB Operator, the metallb-operator-controller-manager deployment starts a pod. The pod is the implementation of the Operator. The pod monitors for changes to the MetalLB custom resource and AddressPool custom resources.

When the Operator starts an instance of MetalLB, it starts a controller deployment and a speaker daemon set.

controllerThe Operator starts the deployment and a single pod. When you add a service of type

LoadBalancer, Kubernetes uses thecontrollerto allocate an IP address from an address pool. In case of a service failure, verify you have the following entry in yourcontrollerpod logs:Example output

"event":"ipAllocated","ip":"172.22.0.201","msg":"IP address assigned by controller

"event":"ipAllocated","ip":"172.22.0.201","msg":"IP address assigned by controllerCopy to Clipboard Copied! Toggle word wrap Toggle overflow speakerThe Operator starts a daemon set with one

speakerpod for each node in your cluster. If thecontrollerallocated the IP address to the service and service is still unavailable, read thespeakerpod logs. If thespeakerpod is unavailable, run theoc describe pod -ncommand.For layer 2 mode, after the

controllerallocates an IP address for the service, eachspeakerpod determines if it is on the same node as an endpoint for the service. An algorithm that involves hashing the node name and the service name is used to select a singlespeakerpod to announce the load balancer IP address. Thespeakeruses Address Resolution Protocol (ARP) to announce IPv4 addresses and Neighbor Discovery Protocol (NDP) to announce IPv6 addresses.Requests for the load balancer IP address are routed to the node with the

speakerthat announces the IP address. After the node receives the packets, the service proxy routes the packets to an endpoint for the service. The endpoint can be on the same node in the optimal case, or it can be on another node. The service proxy chooses an endpoint each time a connection is established.

23.1.4. MetalLB concepts for layer 2 mode

In layer 2 mode, the speaker pod on one node announces the external IP address for a service to the host network. From a network perspective, the node appears to have multiple IP addresses assigned to a network interface.

Since layer 2 mode relies on ARP and NDP, the client must be on the same subnet of the nodes announcing the service in order for MetalLB to work. Additionally, the IP address assigned to the service must be on the same subnet of the network used by the client to reach the service.

The speaker pod responds to ARP requests for IPv4 services and NDP requests for IPv6.

In layer 2 mode, all traffic for a service IP address is routed through one node. After traffic enters the node, the service proxy for the CNI network provider distributes the traffic to all the pods for the service.

Because all traffic for a service enters through a single node in layer 2 mode, in a strict sense, MetalLB does not implement a load balancer for layer 2. Rather, MetalLB implements a failover mechanism for layer 2 so that when a speaker pod becomes unavailable, a speaker pod on a different node can announce the service IP address.

When a node becomes unavailable, failover is automatic. The speaker pods on the other nodes detect that a node is unavailable and a new speaker pod and node take ownership of the service IP address from the failed node.

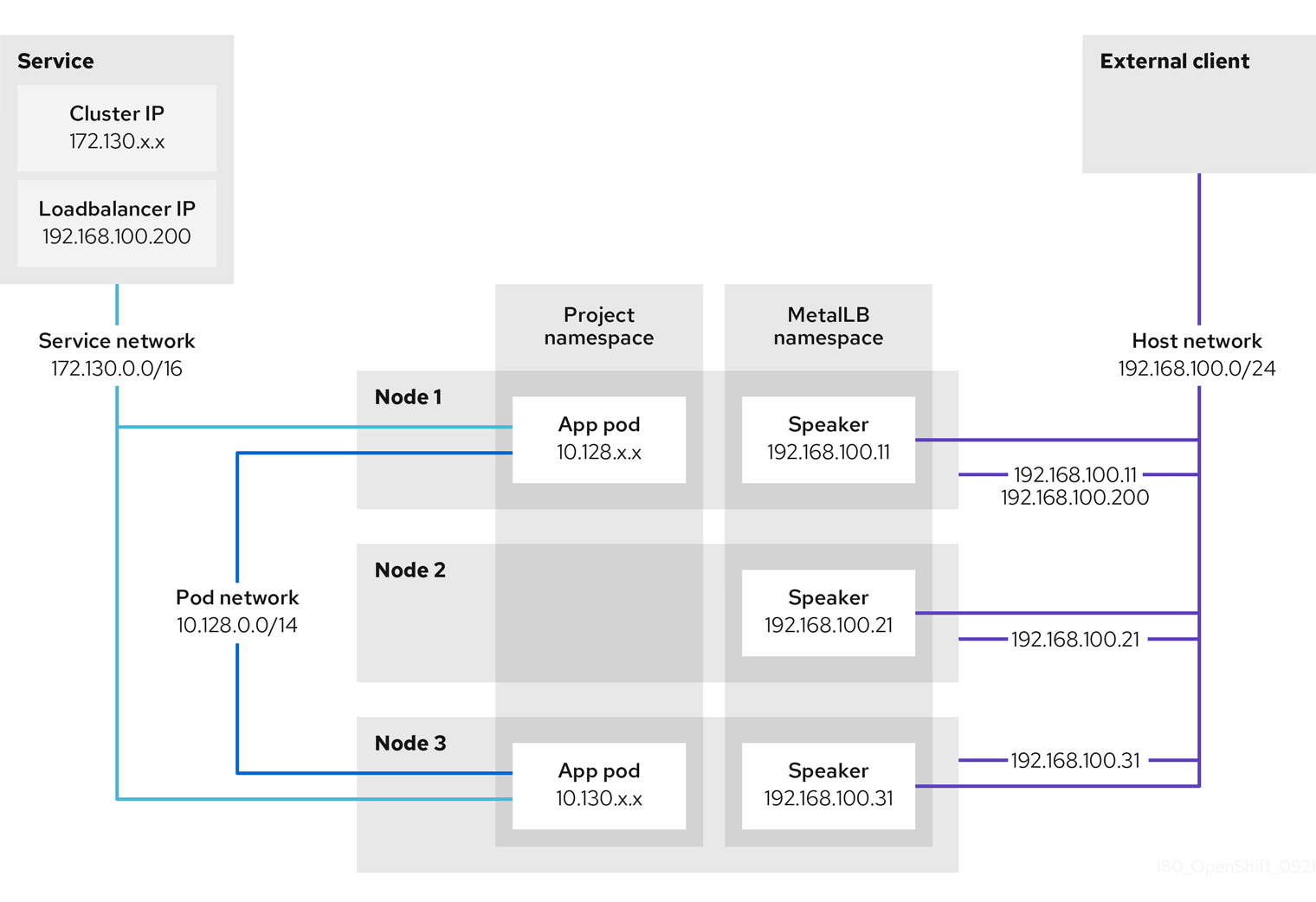

The preceding graphic shows the following concepts related to MetalLB:

-

An application is available through a service that has a cluster IP on the

172.130.0.0/16subnet. That IP address is accessible from inside the cluster. The service also has an external IP address that MetalLB assigned to the service,192.168.100.200. - Nodes 1 and 3 have a pod for the application.

-

The

speakerdaemon set runs a pod on each node. The MetalLB Operator starts these pods. -

Each

speakerpod is a host-networked pod. The IP address for the pod is identical to the IP address for the node on the host network. -

The

speakerpod on node 1 uses ARP to announce the external IP address for the service,192.168.100.200. Thespeakerpod that announces the external IP address must be on the same node as an endpoint for the service and the endpoint must be in theReadycondition. -

Client traffic is routed to the host network and connects to the

192.168.100.200IP address. After traffic enters the node, the service proxy sends the traffic to the application pod on the same node or another node according to the external traffic policy that you set for the service. -

If node 1 becomes unavailable, the external IP address fails over to another node. On another node that has an instance of the application pod and service endpoint, the

speakerpod begins to announce the external IP address,192.168.100.200and the new node receives the client traffic. In the diagram, the only candidate is node 3.

23.1.4.1. Layer 2 and external traffic policy

With layer 2 mode, one node in your cluster receives all the traffic for the service IP address. How your cluster handles the traffic after it enters the node is affected by the external traffic policy.

clusterThis is the default value for

spec.externalTrafficPolicy.With the

clustertraffic policy, after the node receives the traffic, the service proxy distributes the traffic to all the pods in your service. This policy provides uniform traffic distribution across the pods, but it obscures the client IP address and it can appear to the application in your pods that the traffic originates from the node rather than the client.localWith the

localtraffic policy, after the node receives the traffic, the service proxy only sends traffic to the pods on the same node. For example, if thespeakerpod on node A announces the external service IP, then all traffic is sent to node A. After the traffic enters node A, the service proxy only sends traffic to pods for the service that are also on node A. Pods for the service that are on additional nodes do not receive any traffic from node A. Pods for the service on additional nodes act as replicas in case failover is needed.This policy does not affect the client IP address. Application pods can determine the client IP address from the incoming connections.

23.1.5. Limitations and restrictions

23.1.5.1. Support for layer 2 only

When you install and configure MetalLB on OpenShift Container Platform 4.9 with the MetalLB Operator, support is restricted to layer 2 mode only. In comparison, the open source MetalLB project offers load balancing for layer 2 mode and a mode for layer 3 that uses border gateway protocol (BGP).

23.1.5.2. Support for single stack networking

Although you can specify IPv4 addresses and IPv6 addresses in the same address pool, MetalLB only assigns one IP address for the load balancer.

When MetalLB is deployed on a cluster that is configured for dual-stack networking, MetalLB assigns one IPv4 or IPv6 address for the load balancer, depending on the IP address family of the cluster IP for the service. For example, if the cluster IP of the service is IPv4, then MetalLB assigns an IPv4 address for the load balancer. MetalLB does not assign an IPv4 and an IPv6 address simultaneously.

IPv6 is only supported for clusters that use the OVN-Kubernetes network provider.

23.1.5.3. Infrastructure considerations for MetalLB

MetalLB is primarily useful for on-premise, bare metal installations because these installations do not include a native load-balancer capability. In addition to bare metal installations, installations of OpenShift Container Platform on some infrastructures might not include a native load-balancer capability. For example, the following infrastructures can benefit from adding the MetalLB Operator:

- Bare metal

- VMware vSphere

MetalLB Operator and MetalLB are supported with the OpenShift SDN and OVN-Kubernetes network providers.

23.1.5.4. Limitations for layer 2 mode

23.1.5.4.1. Single-node bottleneck

MetalLB routes all traffic for a service through a single node, the node can become a bottleneck and limit performance.

Layer 2 mode limits the ingress bandwidth for your service to the bandwidth of a single node. This is a fundamental limitation of using ARP and NDP to direct traffic.

23.1.5.4.2. Slow failover performance

Failover between nodes depends on cooperation from the clients. When a failover occurs, MetalLB sends gratuitous ARP packets to notify clients that the MAC address associated with the service IP has changed.

Most client operating systems handle gratuitous ARP packets correctly and update their neighbor caches promptly. When clients update their caches quickly, failover completes within a few seconds. Clients typically fail over to a new node within 10 seconds. However, some client operating systems either do not handle gratuitous ARP packets at all or have outdated implementations that delay the cache update.

Recent versions of common operating systems such as Windows, macOS, and Linux implement layer 2 failover correctly. Issues with slow failover are not expected except for older and less common client operating systems.

To minimize the impact from a planned failover on outdated clients, keep the old node running for a few minutes after flipping leadership. The old node can continue to forward traffic for outdated clients until their caches refresh.

During an unplanned failover, the service IPs are unreachable until the outdated clients refresh their cache entries.

23.1.5.5. Incompatibility with IP failover

MetalLB is incompatible with the IP failover feature. Before you install the MetalLB Operator, remove IP failover.

23.2. Installing the MetalLB Operator

As a cluster administrator, you can add the MetallB Operator so that the Operator can manage the lifecycle for an instance of MetalLB on your cluster.

The installation procedures use the metallb-system namespace. You can install the Operator and configure custom resources in a different namespace. The Operator starts MetalLB in the same namespace that the Operator is installed in.

MetalLB and IP failover are incompatible. If you configured IP failover for your cluster, perform the steps to remove IP failover before you install the Operator.

23.2.1. Installing from OperatorHub using the web console

You can install and subscribe to an Operator from OperatorHub using the OpenShift Container Platform web console.

Procedure

-

Navigate in the web console to the Operators

OperatorHub page. Scroll or type a keyword into the Filter by keyword box to find the Operator you want. For example, type

metallbto find the MetalLB Operator.You can also filter options by Infrastructure Features. For example, select Disconnected if you want to see Operators that work in disconnected environments, also known as restricted network environments.

Select the Operator to display additional information.

NoteChoosing a Community Operator warns that Red Hat does not certify Community Operators; you must acknowledge the warning before continuing.

- Read the information about the Operator and click Install.

On the Install Operator page:

- Select an Update Channel (if more than one is available).

- Select Automatic or Manual approval strategy, as described earlier.

Click Install to make the Operator available to the selected namespaces on this OpenShift Container Platform cluster.

If you selected a Manual approval strategy, the upgrade status of the subscription remains Upgrading until you review and approve the install plan.

After approving on the Install Plan page, the subscription upgrade status moves to Up to date.

- If you selected an Automatic approval strategy, the upgrade status should resolve to Up to date without intervention.

After the upgrade status of the subscription is Up to date, select Operators

Installed Operators to verify that the cluster service version (CSV) of the installed Operator eventually shows up. The Status should ultimately resolve to InstallSucceeded in the relevant namespace. NoteFor the All namespaces… installation mode, the status resolves to InstallSucceeded in the

openshift-operatorsnamespace, but the status is Copied if you check in other namespaces.If it does not:

-

Check the logs in any pods in the

openshift-operatorsproject (or other relevant namespace if A specific namespace… installation mode was selected) on the WorkloadsPods page that are reporting issues to troubleshoot further.

-

Check the logs in any pods in the

23.2.2. Installing from OperatorHub using the CLI

Instead of using the OpenShift Container Platform web console, you can install an Operator from OperatorHub using the CLI. Use the oc command to create or update a Subscription object.

Prerequisites

-

Install the OpenShift CLI (

oc). -

Log in as a user with

cluster-adminprivileges.

Procedure

Confirm that the MetalLB Operator is available:

oc get packagemanifests -n openshift-marketplace metallb-operator

$ oc get packagemanifests -n openshift-marketplace metallb-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME CATALOG AGE metallb-operator Red Hat Operators 9h

NAME CATALOG AGE metallb-operator Red Hat Operators 9hCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create the

metallb-systemnamespace:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create an Operator group custom resource in the namespace:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Confirm the Operator group is installed in the namespace:

oc get operatorgroup -n metallb-system

$ oc get operatorgroup -n metallb-systemCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME AGE metallb-operator 14m

NAME AGE metallb-operator 14mCopy to Clipboard Copied! Toggle word wrap Toggle overflow Subscribe to the MetalLB Operator.

Run the following command to get the OpenShift Container Platform major and minor version. You use the values to set the

channelvalue in the next step.OC_VERSION=$(oc version -o yaml | grep openshiftVersion | \ grep -o '[0-9]*[.][0-9]*' | head -1)$ OC_VERSION=$(oc version -o yaml | grep openshiftVersion | \ grep -o '[0-9]*[.][0-9]*' | head -1)Copy to Clipboard Copied! Toggle word wrap Toggle overflow To create a subscription custom resource for the Operator, enter the following command:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Confirm the install plan is in the namespace:

oc get installplan -n metallb-system

$ oc get installplan -n metallb-systemCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME CSV APPROVAL APPROVED install-wzg94 metallb-operator.4.9.0-nnnnnnnnnnnn Automatic true

NAME CSV APPROVAL APPROVED install-wzg94 metallb-operator.4.9.0-nnnnnnnnnnnn Automatic trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow To verify that the Operator is installed, enter the following command:

oc get clusterserviceversion -n metallb-system \ -o custom-columns=Name:.metadata.name,Phase:.status.phase

$ oc get clusterserviceversion -n metallb-system \ -o custom-columns=Name:.metadata.name,Phase:.status.phaseCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Name Phase metallb-operator.4.9.0-nnnnnnnnnnnn Succeeded

Name Phase metallb-operator.4.9.0-nnnnnnnnnnnn SucceededCopy to Clipboard Copied! Toggle word wrap Toggle overflow

23.2.3. Starting MetalLB on your cluster

After you install the Operator, you need to configure a single instance of a MetalLB custom resource. After you configure the custom resource, the Operator starts MetalLB on your cluster.

Prerequisites

-

Install the OpenShift CLI (

oc). -

Log in as a user with

cluster-adminprivileges. - Install the MetalLB Operator.

Procedure

Create a single instance of a MetalLB custom resource:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

Confirm that the deployment for the MetalLB controller and the daemon set for the MetalLB speaker are running.

Check that the deployment for the controller is running:

oc get deployment -n metallb-system controller

$ oc get deployment -n metallb-system controllerCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME READY UP-TO-DATE AVAILABLE AGE controller 1/1 1 1 11m

NAME READY UP-TO-DATE AVAILABLE AGE controller 1/1 1 1 11mCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check that the daemon set for the speaker is running:

oc get daemonset -n metallb-system speaker

$ oc get daemonset -n metallb-system speakerCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE speaker 6 6 6 6 6 kubernetes.io/os=linux 18m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE speaker 6 6 6 6 6 kubernetes.io/os=linux 18mCopy to Clipboard Copied! Toggle word wrap Toggle overflow The example output indicates 6 speaker pods. The number of speaker pods in your cluster might differ from the example output. Make sure the output indicates one pod for each node in your cluster.

23.2.4. Next steps

23.3. Configuring MetalLB address pools

As a cluster administrator, you can add, modify, and delete address pools. The MetalLB Operator uses the address pool custom resources to set the IP addresses that MetalLB can assign to services.

23.3.1. About the address pool custom resource

The fields for the address pool custom resource are described in the following table.

| Field | Type | Description |

|---|---|---|

|

|

|

Specifies the name for the address pool. When you add a service, you can specify this pool name in the |

|

|

| Specifies the namespace for the address pool. Specify the same namespace that the MetalLB Operator uses. |

|

|

|

Specifies the protocol for announcing the load balancer IP address to peer nodes. The only supported value is |

|

|

|

Optional: Specifies whether MetalLB automatically assigns IP addresses from this pool. Specify |

|

|

| Specifies a list of IP addresses for MetalLB to assign to services. You can specify multiple ranges in a single pool. Specify each range in CIDR notation or as starting and ending IP addresses separated with a hyphen. |

23.3.2. Configuring an address pool

As a cluster administrator, you can add address pools to your cluster to control the IP addresses that MetaLLB can assign to load-balancer services.

Prerequisites

-

Install the OpenShift CLI (

oc). -

Log in as a user with

cluster-adminprivileges.

Procedure

Create a file, such as

addresspool.yaml, with content like the following example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Apply the configuration for the address pool:

oc apply -f addresspool.yaml

$ oc apply -f addresspool.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

View the address pool:

oc describe -n metallb-system addresspool doc-example

$ oc describe -n metallb-system addresspool doc-exampleCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Confirm that the address pool name, such as doc-example, and the IP address ranges appear in the output.

23.3.3. Example address pool configurations

23.3.3.1. Example: IPv4 and CIDR ranges

You can specify a range of IP addresses in CIDR notation. You can combine CIDR notation with the notation that uses a hyphen to separate lower and upper bounds.

23.3.3.2. Example: Reserve IP addresses

You can set the autoAssign field to false to prevent MetalLB from automatically assigning the IP addresses from the pool. When you add a service, you can request a specific IP address from the pool or you can specify the pool name in an annotation to request any IP address from the pool.

23.3.3.3. Example: IPv6 address pool

You can add address pools that use IPv6. The following example shows a single IPv6 range. However, you can specify multiple ranges in the addresses list, just like several IPv4 examples.

23.3.4. Next steps

23.4. Configuring services to use MetalLB

As a cluster administrator, when you add a service of type LoadBalancer, you can control how MetalLB assigns an IP address.

23.4.1. Request a specific IP address

Like some other load-balancer implementations, MetalLB accepts the spec.loadBalancerIP field in the service specification.

If the requested IP address is within a range from any address pool, MetalLB assigns the requested IP address. If the requested IP address is not within any range, MetalLB reports a warning.

Example service YAML for a specific IP address

If MetalLB cannot assign the requested IP address, the EXTERNAL-IP for the service reports <pending> and running oc describe service <service_name> includes an event like the following example.

Example event when MetalLB cannot assign a requested IP address

... Events: Type Reason Age From Message ---- ------ ---- ---- ------- Warning AllocationFailed 3m16s metallb-controller Failed to allocate IP for "default/invalid-request": "4.3.2.1" is not allowed in config

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning AllocationFailed 3m16s metallb-controller Failed to allocate IP for "default/invalid-request": "4.3.2.1" is not allowed in config23.4.2. Request an IP address from a specific pool

To assign an IP address from a specific range, but you are not concerned with the specific IP address, then you can use the metallb.universe.tf/address-pool annotation to request an IP address from the specified address pool.

Example service YAML for an IP address from a specific pool

If the address pool that you specify for <address_pool_name> does not exist, MetalLB attempts to assign an IP address from any pool that permits automatic assignment.

23.4.3. Accept any IP address

By default, address pools are configured to permit automatic assignment. MetalLB assigns an IP address from these address pools.

To accept any IP address from any pool that is configured for automatic assignment, no special annotation or configuration is required.

Example service YAML for accepting any IP address

23.4.5. Configuring a service with MetalLB

You can configure a load-balancing service to use an external IP address from an address pool.

Prerequisites

-

Install the OpenShift CLI (

oc). - Install the MetalLB Operator and start MetalLB.

- Configure at least one address pool.

- Configure your network to route traffic from the clients to the host network for the cluster.

Procedure

Create a

<service_name>.yamlfile. In the file, ensure that thespec.typefield is set toLoadBalancer.Refer to the examples for information about how to request the external IP address that MetalLB assigns to the service.

Create the service:

oc apply -f <service_name>.yaml

$ oc apply -f <service_name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

service/<service_name> created

service/<service_name> createdCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

Describe the service:

oc describe service <service_name>

$ oc describe service <service_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow <.> The annotation is present if you request an IP address from a specific pool. <.> The service type must indicate

LoadBalancer. <.> The load-balancer ingress field indicates the external IP address if the service is assigned correctly. <.> The events field indicates the node name that is assigned to announce the external IP address. If you experience an error, the events field indicates the reason for the error.