Updating clusters

Updating OpenShift Container Platform clusters


Chapter 1. Understanding OpenShift updates

1.1. Introduction to OpenShift updates

With OpenShift Container Platform 4, you can update an OpenShift Container Platform cluster with a single operation by using the web console or the OpenShift CLI (oc).

Platform administrators can view new update options either by going to Administration → Cluster Settings in the web console or by looking at the output of the oc adm upgrade command.

1.1.1. Cluster update overview

OpenShift Container Platform updates involve several services, Operators, and processes working in tandem to change the cluster to the desired version.

Red Hat hosts a public OpenShift Update Service (OSUS), which serves a graph of update possibilities based on the OpenShift Container Platform release images in the official registry. The graph contains update information for any public release. OpenShift Container Platform clusters are configured to connect to the OSUS by default, and the OSUS responds to clusters with information about known update targets.

An update begins when either a cluster administrator or an automatic update controller edits the custom resource (CR) of the Cluster Version Operator (CVO) with a new version. To reconcile the cluster with the newly specified version, the CVO retrieves the target release image from an image registry and begins to apply changes to the cluster.

Operators previously installed through Operator Lifecycle Manager (OLM) follow a different process for updates. See Updating installed Operators for more information.

The target release image contains manifest files for all cluster components that form a specific OpenShift Container Platform version. When updating the cluster to a new version, the CVO applies manifests in separate stages called Runlevels. Most, but not all, manifests support one of the cluster Operators. As the CVO applies a manifest to a cluster Operator, the Operator might perform update tasks to reconcile itself with its new specified version.

The CVO monitors the state of each applied resource and the states reported by all cluster Operators. The CVO only proceeds with the update when all manifests and cluster Operators in the active Runlevel reach a stable condition. After the CVO updates the entire control plane through this process, the Machine Config Operator (MCO) updates the operating system and configuration of every node in the cluster.

1.1.2. Common questions about update availability

There are several factors that affect if and when an update is made available to an OpenShift Container Platform cluster.

The following list provides common questions regarding the availability of an update:

What are the differences between each of the update channels?

- A new release is initially added to the candidate channel.

- After successful final testing, a release on the candidate channel is promoted to the fast channel, an erratum is published, and the release is now fully supported.

- After a delay, a release on the fast channel is finally promoted to the stable channel. This delay represents the only difference between the fast and stable channels.

Note: For the latest z-stream releases, this delay may generally be a week or two. However, the delay for initial updates to the latest minor version may take much longer, generally 45-90 days.

- Releases promoted to the stable channel are simultaneously promoted to the eus channel. The primary purpose of the eus channel is to serve as a convenience for clusters performing a Control Plane Only update.

Is a release on the stable channel safer or more supported than a release on the fast channel?

- If a regression is identified for a release on a fast channel, it will be resolved and managed to the same extent as if that regression was identified for a release on the stable channel.

- The only difference between releases on the fast and stable channels is that a release only appears on the stable channel after it has been on the fast channel for some time, which provides more time for new update risks to be discovered.

- A release that is available on the fast channel always becomes available on the stable channel after this delay.

What does it mean if an update has known issues?

- Red Hat continuously evaluates data from multiple sources to determine whether updates from one version to another have any declared issues. Identified issues are typically documented in the version’s release notes. Even if the update path has known issues, customers are still supported if they perform the update.

Red Hat does not block users from updating to a certain version. Red Hat may declare conditional update risks, which may or may not apply to a particular cluster.

- Declared risks provide cluster administrators more context about a supported update. Cluster administrators can still accept the risk and update to that particular target version.

What if I see that an update to a particular release is no longer recommended?

- If Red Hat removes update recommendations from any supported release due to a regression, a superseding update recommendation will be provided to a future version that corrects the regression. There may be a delay while the defect is corrected, tested, and promoted to your selected channel.

How long until the next z-stream release is made available on the fast and stable channels?

While the specific cadence can vary based on a number of factors, new z-stream releases for the latest minor version are typically made available about every week. Older minor versions, which have become more stable over time, may take much longer for new z-stream releases to be made available.

Important: These are only estimates based on past data about z-stream releases. Red Hat reserves the right to change the release frequency as needed. Any number of issues could cause irregularities and delays in this release cadence.

- Once a z-stream release is published, it also appears in the fast channel for that minor version. After a delay, the z-stream release may then appear in that minor version’s stable channel.

1.1.3. About the OpenShift Update Service

The OpenShift Update Service (OSUS) provides update recommendations to OpenShift Container Platform, including Red Hat Enterprise Linux CoreOS (RHCOS). It provides a graph, or diagram, that contains the vertices of component Operators and the edges that connect them.

The edges in the graph show which versions you can safely update to. The vertices are update payloads that specify the intended state of the managed cluster components.

The Cluster Version Operator (CVO) in your cluster checks with the OpenShift Update Service to see the valid updates and update paths based on current component versions and information in the graph. When you request an update, the CVO uses the corresponding release image to update your cluster. The release artifacts are hosted in Quay as container images.
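The graph described above can be pictured as a small data structure. The following Python sketch is illustrative only: the version numbers and image references are made up, and the nodes/edges layout (each edge a pair of indices into the node list) is an assumption chosen to mirror the description, not a statement of the service's actual response format. The recommended_targets function is a hypothetical helper.

```python
# Illustrative update graph: vertices are release payloads, edges are
# recommended updates. All versions and image references are hypothetical.
GRAPH = {
    "nodes": [
        {"version": "4.17.1", "payload": "example.invalid/release@sha256:aaa"},
        {"version": "4.17.2", "payload": "example.invalid/release@sha256:bbb"},
        {"version": "4.17.3", "payload": "example.invalid/release@sha256:ccc"},
    ],
    # Each edge is (index of source node, index of target node).
    "edges": [(0, 1), (0, 2), (1, 2)],
}


def recommended_targets(graph, current_version):
    """Return the versions reachable from current_version by one edge."""
    versions = [node["version"] for node in graph["nodes"]]
    if current_version not in versions:
        return []
    source = versions.index(current_version)
    # Plain string sort for stable output; not a semantic version sort.
    return sorted(versions[dst] for src, dst in graph["edges"] if src == source)


print(recommended_targets(GRAPH, "4.17.1"))
```

In this model, a version with no outgoing edges simply has no recommended update targets.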

To allow the OpenShift Update Service to provide only compatible updates, a release verification pipeline drives automation. Each release artifact is verified for compatibility with supported cloud platforms and system architectures, as well as other component packages. After the pipeline confirms the suitability of a release, the OpenShift Update Service notifies you that it is available.

The OpenShift Update Service (OSUS) supports a single-stream release model, where only one release version is active and supported at any given time. When a new release is deployed, it fully replaces the previous release.

The updated release provides support for upgrades from all OpenShift Container Platform versions starting after 4.8 up to the new release version.

The OpenShift Update Service displays all recommended updates for your current cluster. If an update path is not recommended by the OpenShift Update Service, it might be because of a known issue related to the update path, such as incompatibility or availability.

Two controllers run during continuous update mode. The first controller continuously updates the payload manifests, applies the manifests to the cluster, and outputs the controlled rollout status of the Operators to indicate whether they are available, upgrading, or failed. The second controller polls the OpenShift Update Service to determine if updates are available.

Only updating to a newer version is supported. Reverting or rolling back your cluster to a previous version is not supported. If your update fails, contact Red Hat support.

During the update process, the Machine Config Operator (MCO) applies the new configuration to your cluster machines. The MCO cordons the number of nodes specified by the maxUnavailable field on the machine configuration pool and marks them unavailable. By default, this value is set to 1. The MCO updates the affected nodes alphabetically by zone, based on the topology.kubernetes.io/zone label. If a zone has more than one node, the oldest nodes are updated first. For nodes that do not use zones, such as in bare metal deployments, the nodes are updated by age, with the oldest nodes updated first. At any one time, the MCO updates no more nodes than the maxUnavailable field allows. On each of those nodes, the MCO applies the new configuration and then reboots the machine.
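The ordering rule in the preceding paragraph (alphabetical by zone, then oldest first) can be expressed as a sort key. This is a minimal Python sketch, not the MCO's implementation: the node records, names, and zone values are hypothetical, and treating unlabeled nodes as having an empty zone (so that they sort purely by age) is an assumption of the sketch.

```python
from datetime import datetime

# Hypothetical node records: name, value of the topology.kubernetes.io/zone
# label (None when the label is absent), and creation time.
NODES = [
    {"name": "worker-c", "zone": "us-east-1b", "created": datetime(2024, 1, 3)},
    {"name": "worker-a", "zone": "us-east-1a", "created": datetime(2024, 1, 5)},
    {"name": "worker-b", "zone": "us-east-1a", "created": datetime(2024, 1, 1)},
]


def update_order(nodes):
    """Order nodes alphabetically by zone, then oldest first within a zone."""
    return sorted(nodes, key=lambda n: (n["zone"] or "", n["created"]))


print([n["name"] for n in update_order(NODES)])
```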

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

If you use Red Hat Enterprise Linux (RHEL) machines as workers, the MCO does not update the kubelet because you must update the OpenShift API on the machines first.

With the specification for the new version applied to the old kubelet, the RHEL machine cannot return to the Ready state. You cannot complete the update until the machines are available. However, the maximum number of unavailable nodes is set to ensure that normal cluster operations can continue with that number of machines out of service.

The OpenShift Update Service is composed of an Operator and one or more application instances.

1.1.4. Understanding cluster Operator condition types

The status of cluster Operators includes their condition type, which informs you of the current state of your Operator’s health.

The following definitions cover a list of some common ClusterOperator condition types. Operators that have additional condition types and use Operator-specific language have been omitted.

The Cluster Version Operator (CVO) is responsible for collecting the status conditions from cluster Operators so that cluster administrators can better understand the state of the OpenShift Container Platform cluster.

- Available: The condition type Available indicates that an Operator is functional and available in the cluster. If the status is False, at least one part of the operand is non-functional and the condition requires an administrator to intervene.

- Progressing: The condition type Progressing indicates that an Operator is actively rolling out new code, propagating configuration changes, or otherwise moving from one steady state to another.

Operators do not report the condition type Progressing as True when they are reconciling a previous known state. If the observed cluster state has changed and the Operator is reacting to it, then the status reports back as True, since it is moving from one steady state to another.

- Degraded: The condition type Degraded indicates that an Operator has a current state that does not match its required state over a period of time. The period of time can vary by component, but a Degraded status represents persistent observation of an Operator’s condition. As a result, an Operator does not fluctuate in and out of the Degraded state.

There might be a different condition type if the transition from one state to another does not persist over a long enough period to report Degraded. An Operator does not report Degraded during the course of a normal update. An Operator may report Degraded in response to a persistent infrastructure failure that requires eventual administrator intervention.

Note: This condition type is only an indication that something may need investigation and adjustment. As long as the Operator is available, the Degraded condition does not cause user workload failure or application downtime.

- Upgradeable: The condition type Upgradeable indicates whether the Operator is safe to update based on the current cluster state. The message field contains a human-readable description of what the administrator needs to do for the cluster to successfully update. The CVO allows updates when this condition is True, Unknown or missing.

When the Upgradeable status is False, only minor updates are impacted, and the CVO prevents the cluster from performing impacted updates unless forced.
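The Upgradeable rule can be restated as a small predicate. The following Python sketch only models the behavior described above for minor updates; the function name, the forced parameter, and the list-of-dicts input are hypothetical stand-ins for an Operator's reported conditions.

```python
def minor_update_allowed(conditions, forced=False):
    """Decide whether a minor update may proceed for one Operator.

    conditions: list of {"type": ..., "status": ...} dicts.
    """
    status = next(
        (c["status"] for c in conditions if c["type"] == "Upgradeable"),
        None,  # the condition is missing
    )
    # True, Unknown, or a missing condition do not block the update.
    # Only an explicit False blocks it, unless the update is forced.
    return forced or status != "False"


print(minor_update_allowed([{"type": "Upgradeable", "status": "False"}]))
```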

1.1.5. Understanding cluster version condition types

The Cluster Version Operator (CVO) monitors cluster Operators and other components, and is responsible for collecting the status of both the cluster version and its Operators. This status includes the condition type, which informs you of the health and current state of the OpenShift Container Platform cluster.

In addition to Available, Progressing, and Upgradeable, there are condition types that affect cluster versions and Operators.

- Failing: The cluster version condition type Failing indicates that a cluster cannot reach its desired state, is unhealthy, and requires an administrator to intervene.

- Invalid: The cluster version condition type Invalid indicates that the cluster version has an error that prevents the server from taking action. The CVO only reconciles the current state as long as this condition is set.

- RetrievedUpdates: The cluster version condition type RetrievedUpdates indicates whether or not available updates have been retrieved from the upstream update server. The condition is Unknown before retrieval, False if the updates either recently failed or could not be retrieved, or True if the availableUpdates field is both recent and accurate.

- ReleaseAccepted: The cluster version condition type ReleaseAccepted with a True status indicates that the requested release payload was successfully loaded without failure during image verification and precondition checking.

- ImplicitlyEnabledCapabilities: The cluster version condition type ImplicitlyEnabledCapabilities with a True status indicates that there are enabled capabilities that the user is not currently requesting through spec.capabilities. The CVO does not support disabling capabilities if any associated resources were previously managed by the CVO.

1.1.6. Common terms

The following terms are commonly used in the context of OpenShift Container Platform updates.

- Control plane

- The control plane, which is composed of control plane machines, manages the OpenShift Container Platform cluster. The control plane machines manage workloads on the compute machines, which are also known as worker machines.

- Cluster Version Operator

- The Cluster Version Operator (CVO) starts the update process for the cluster. It checks with OSUS based on the current cluster version and retrieves the graph which contains available or possible update paths.

- Machine Config Operator

- The Machine Config Operator (MCO) is a cluster-level Operator that manages the operating system and machine configurations. Through the MCO, platform administrators can configure and update systemd, CRI-O and Kubelet, the kernel, NetworkManager, and other system features on the worker nodes.

- OpenShift Update Service

- The OpenShift Update Service (OSUS) provides over-the-air updates to OpenShift Container Platform, including to Red Hat Enterprise Linux CoreOS (RHCOS). It provides a graph, or diagram, that contains the vertices of component Operators and the edges that connect them.

- Channels

- Channels declare an update strategy tied to minor versions of OpenShift Container Platform. The OSUS uses this configured strategy to recommend update edges consistent with that strategy.

- Recommended update edge

- A recommended update edge is a recommended update between OpenShift Container Platform releases. Whether a given update is recommended can depend on the cluster’s configured channel, current version, known bugs, and other information. OSUS communicates the recommended edges to the CVO, which runs in every cluster.

1.2. How cluster updates work

The Cluster Version Operator (CVO) is the primary component that orchestrates the OpenShift Container Platform update process. During standard cluster operation, the CVO compares manifests of cluster Operators to in-cluster resources and reconciles discrepancies between the actual state of these resources and their desired state.

The following sections describe each major aspect of the OpenShift Container Platform (OCP) update process in detail. For a general overview of how updates work, see the Introduction to OpenShift updates.

1.2.1. The ClusterVersion object

One of the resources that the Cluster Version Operator (CVO) monitors is the ClusterVersion resource.

Administrators and OpenShift Container Platform components can communicate or interact with the CVO through the ClusterVersion object. The desired CVO state is declared through the ClusterVersion object and the current CVO state is reflected in the object’s status.

Do not directly modify the ClusterVersion object. Instead, use interfaces such as the oc CLI or the web console to declare your update target.

The CVO continually reconciles the cluster with the target state declared in the spec property of the ClusterVersion resource. When the desired release differs from the actual release, that reconciliation updates the cluster.

1.2.1.1. Update availability data

The ClusterVersion resource also contains information about updates that are available to the cluster. This includes updates that are available, but not recommended due to a known risk that applies to the cluster. These updates are known as conditional updates. To learn how the CVO maintains this information about available updates in the ClusterVersion resource, see the "Evaluation of update availability" section.

You can inspect all available updates with the following command:

$ oc adm upgrade --include-not-recommended

The additional --include-not-recommended parameter includes updates that are available with known issues that apply to the cluster.


The oc adm upgrade command queries the ClusterVersion resource for information about available updates and presents it in a human-readable format.

One way to directly inspect the underlying availability data created by the CVO is by querying the ClusterVersion resource with the following command:

$ oc get clusterversion version -o json | jq '.status.availableUpdates'

A similar command can be used to check conditional updates:

$ oc get clusterversion version -o json | jq '.status.conditionalUpdates'
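The same two fields can also be read programmatically from the JSON form of the ClusterVersion resource. The Python sketch below works on a hand-written sample rather than real cluster output: the versions, image references, and risk entry are hypothetical, and the field layout inside each entry (version and image, and release and risks for conditional updates) is an assumption that should be checked against your cluster's actual output.

```python
import json

# Hand-written sample shaped like `oc get clusterversion version -o json`.
# Versions, image references, and the risk entry are hypothetical.
SAMPLE = json.loads("""
{
  "status": {
    "availableUpdates": [
      {"version": "4.17.2", "image": "example.invalid/release@sha256:bbb"}
    ],
    "conditionalUpdates": [
      {
        "release": {"version": "4.17.3", "image": "example.invalid/release@sha256:ccc"},
        "risks": [{"name": "ExampleRisk", "message": "Hypothetical known issue."}]
      }
    ]
  }
}
""")


def summarize_updates(cluster_version):
    """Return (available versions, {conditional version: [risk names]})."""
    status = cluster_version.get("status", {})
    # Either field may be absent or null when no updates are known.
    available = [u["version"] for u in status.get("availableUpdates") or []]
    conditional = {
        u["release"]["version"]: [r["name"] for r in u.get("risks", [])]
        for u in status.get("conditionalUpdates") or []
    }
    return available, conditional


print(summarize_updates(SAMPLE))
```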

1.2.2. Evaluation of update availability

The Cluster Version Operator (CVO) periodically queries the OpenShift Update Service (OSUS) for the most recent data about update possibilities.

This data is based on the cluster’s subscribed channel. The CVO then saves information about update recommendations into either the availableUpdates or conditionalUpdates field of its ClusterVersion resource.

The CVO periodically checks the conditional updates for update risks. These risks are conveyed through the data served by the OSUS, which contains information for each version about known issues that might affect a cluster updated to that version. Most risks are limited to clusters with specific characteristics, such as clusters with a certain size or clusters that are deployed in a particular cloud platform.

The CVO continuously evaluates its cluster characteristics against the conditional risk information for each conditional update. If the CVO finds that the cluster matches the criteria, the CVO stores this information in the conditionalUpdates field of its ClusterVersion resource. If the CVO finds that the cluster does not match the risks of an update, or that there are no risks associated with the update, it stores the target version in the availableUpdates field of its ClusterVersion resource.
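The sorting of targets into the two fields can be sketched as follows. This is a simplified Python model under stated assumptions, not how the CVO evaluates risks: each risk carries a hypothetical applies callable that stands in for the real matching criteria, and the target versions, risk name, and platform values are invented for illustration.

```python
def classify_updates(targets, cluster):
    """Split targets into (available, conditional) for one cluster.

    targets: list of {"version": str,
                      "risks": [{"name": str, "applies": callable}]}
    cluster: dict of cluster characteristics passed to each risk check.
    """
    available, conditional = [], []
    for target in targets:
        matched = [r["name"] for r in target["risks"] if r["applies"](cluster)]
        if matched:
            # At least one declared risk matches this cluster.
            conditional.append((target["version"], matched))
        else:
            # No risks declared, or none of them match this cluster.
            available.append(target["version"])
    return available, conditional


TARGETS = [
    {"version": "4.17.2", "risks": []},
    {
        "version": "4.17.3",
        "risks": [
            {
                "name": "ExampleCloudRisk",
                "applies": lambda c: c["platform"] == "ExampleCloud",
            }
        ],
    },
]

print(classify_updates(TARGETS, {"platform": "ExampleCloud"}))
print(classify_updates(TARGETS, {"platform": "BareMetal"}))
```

The same target version lands in a different list depending on the cluster's characteristics, which is why two clusters on the same channel can see different recommendations.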

The user interface, either the web console or the OpenShift CLI (oc), presents this information in sectioned headings to the administrator. Each known issue associated with the update path contains a link to further resources about the risk so that the administrator can make an informed decision about the update.

1.2.3. Release images

A release image is the delivery mechanism for a specific OpenShift Container Platform (OCP) version.

It contains the release metadata, a Cluster Version Operator (CVO) binary matching the release version, every manifest needed to deploy individual cluster Operators, and a list of SHA digest-versioned references to all container images that make up this version.

You can extract a specific release image by running the following command:

$ oc adm release extract <release image>

Example command

$ oc adm release extract quay.io/openshift-release-dev/ocp-release:4.12.6-x86_64

Example output

Extracted release payload from digest sha256:800d1e39d145664975a3bb7cbc6e674fbf78e3c45b5dde9ff2c5a11a8690c87b created at 2023-03-01T12:46:29Z

After the release image is extracted, you can inspect its contents by running the following command:

$ ls

In this example output, the following contents can be seen:

- 0000_03_quota-openshift_01_clusterresourcequota.crd.yaml is the manifest for the ClusterResourceQuota CRD, to be applied on Runlevel 03.

- 0000_90_service-ca-operator_02_prometheusrolebinding.yaml is the manifest for the PrometheusRoleBinding resource for the service-ca-operator, to be applied on Runlevel 90.

- image-references is the list of SHA digest-versioned references to all required images.

1.2.4. Update process workflow

When you initiate a cluster update, the Cluster Version Operator (CVO) begins a specific sequence of events to orchestrate the update.

The following steps represent a detailed workflow of the OpenShift Container Platform update process:

- The target version is stored in the spec.desiredUpdate.version field of the ClusterVersion resource, which may be managed through the web console or the CLI.

- The CVO detects that the desiredUpdate field in the ClusterVersion resource differs from the current cluster version. Using graph data from the OpenShift Update Service, the CVO resolves the desired cluster version to a pull spec for the release image.

- The CVO validates the integrity and authenticity of the release image. Red Hat publishes cryptographically-signed statements about published release images at predefined locations by using image SHA digests as unique and immutable release image identifiers. The CVO utilizes a list of built-in public keys to validate the presence and signatures of the statement matching the checked release image.

- The CVO creates a job named version-$version-$hash in the openshift-cluster-version namespace. This job uses containers that are executing the release image, so the cluster downloads the image through the container runtime. The job then extracts the manifests and metadata from the release image to a shared volume that is accessible to the CVO.

- The CVO validates the extracted manifests and metadata.

- The CVO checks some preconditions to ensure that no problematic condition is detected in the cluster. Certain conditions can prevent updates from proceeding. These conditions are either determined by the CVO itself, or reported by individual cluster Operators that detect some details about the cluster that the Operator considers problematic for the update.

- The CVO records the accepted release in status.desired and creates a status.history entry about the new update.

- The CVO begins reconciling the manifests from the release image. Cluster Operators are updated in separate stages called Runlevels, and the CVO ensures that all Operators in a Runlevel finish updating before it proceeds to the next level.

- Manifests for the CVO itself are applied early in the process. When the CVO deployment is applied, the current CVO pod stops, and a CVO pod that uses the new version starts. The new CVO proceeds to reconcile the remaining manifests.

- The update proceeds until the entire control plane is updated to the new version. Individual cluster Operators might perform update tasks on their domain of the cluster, and while they do so, they report their state through the Progressing=True condition.

- The Machine Config Operator (MCO) manifests are applied towards the end of the process. The updated MCO then begins updating the system configuration and operating system of every node. Each node might be drained, updated, and rebooted before it starts to accept workloads again.

The cluster reports as updated after the control plane update is finished, usually before all nodes are updated. After the update, the CVO maintains all cluster resources to match the state delivered in the release image.

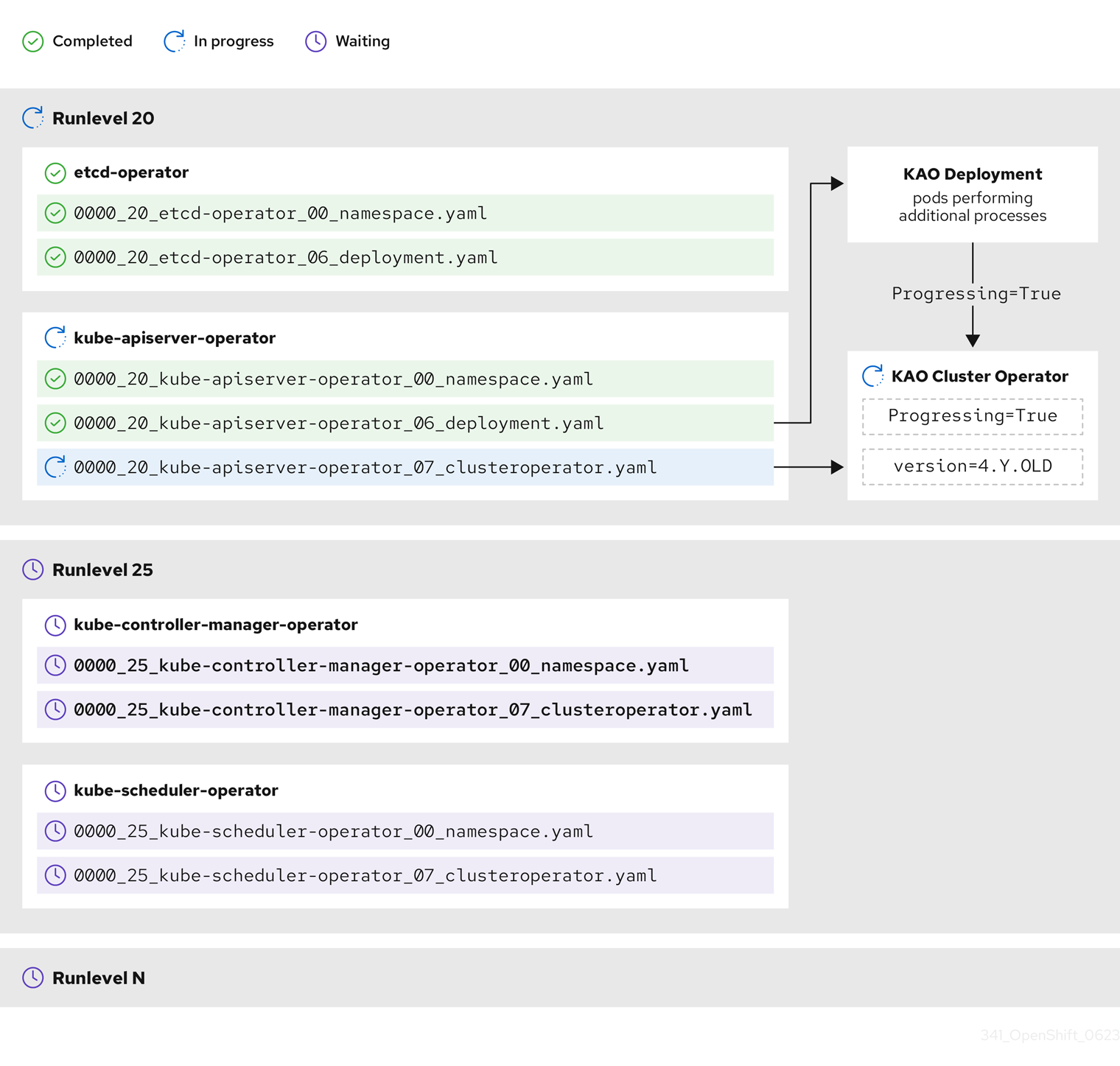

1.2.5. Understanding how manifests are applied during an update

Some manifests supplied in a release image must be applied in a certain order because of the dependencies between them.

For example, the CustomResourceDefinition resource must be created before the matching custom resources. Additionally, there is a logical order in which the individual cluster Operators must be updated to minimize disruption in the cluster. The Cluster Version Operator (CVO) implements this logical order through the concept of Runlevels.

These dependencies are encoded in the filenames of the manifests in the release image:

0000_<runlevel>_<component>_<manifest-name>.yaml

For example:

0000_03_config-operator_01_proxy.crd.yaml

The CVO internally builds a dependency graph for the manifests, where the CVO obeys the following rules:

- During an update, manifests at a lower Runlevel are applied before those at a higher Runlevel.

- Within one Runlevel, manifests for different components can be applied in parallel.

- Within one Runlevel, manifests for a single component are applied in lexicographic order.

The CVO then applies manifests following the generated dependency graph.
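The three ordering rules can be illustrated by parsing the filename convention and grouping the results. The Python sketch below is not the CVO's implementation: the plan function and its regular expression are hypothetical, and the pattern assumes that component names contain no underscores, which holds for the example filenames in this section but is not guaranteed in general.

```python
import re
from collections import defaultdict

# 0000_<runlevel>_<component>_<manifest-name>.yaml
# Assumption: the component part contains no underscore.
MANIFEST_RE = re.compile(r"^0000_(\d+)_([^_]+)_(.+)$")


def plan(filenames):
    """Group manifests by Runlevel, then by component.

    Returns [(runlevel, {component: [filenames in lexicographic order]})]
    with Runlevels in ascending order. Components inside one Runlevel are
    independent of each other, so they could be applied in parallel.
    """
    levels = defaultdict(lambda: defaultdict(list))
    for name in filenames:
        match = MANIFEST_RE.match(name)
        if match is None:
            continue  # not a Runlevel-ordered manifest, e.g. image-references
        runlevel, component, _ = match.groups()
        levels[int(runlevel)][component].append(name)
    return [
        (runlevel, {c: sorted(files) for c, files in components.items()})
        for runlevel, components in sorted(levels.items())
    ]


FILES = [
    "0000_90_service-ca-operator_02_prometheusrolebinding.yaml",
    "0000_03_quota-openshift_01_clusterresourcequota.crd.yaml",
    "0000_03_config-operator_01_proxy.crd.yaml",
    "image-references",
]

for runlevel, components in plan(FILES):
    print(runlevel, components)
```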

For some resource types, the CVO monitors the resource after its manifest is applied, and considers it to be successfully updated only after the resource reaches a stable state. Achieving this state can take some time. This is especially true for ClusterOperator resources, while the CVO waits for a cluster Operator to update itself and then update its ClusterOperator status.

The CVO waits until all cluster Operators in the Runlevel meet the following conditions before it proceeds to the next Runlevel:

- The cluster Operators have an Available=True condition.

- The cluster Operators have a Degraded=False condition.

- The cluster Operators declare they have achieved the desired version in their ClusterOperator resource.
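The three conditions above amount to a gate that every cluster Operator in the Runlevel must pass. The following Python sketch models that gate on plain dictionaries. The function names are hypothetical, the assumption that the Operator's own version is reported under an entry named operator is specific to this sketch, and a missing condition is treated as not ready.

```python
def operator_ready(cluster_operator, desired_version):
    """Check one ClusterOperator-like dict against the three conditions."""
    conditions = {c["type"]: c["status"] for c in cluster_operator["conditions"]}
    versions = {v["name"]: v["version"] for v in cluster_operator["versions"]}
    return (
        conditions.get("Available") == "True"
        and conditions.get("Degraded") == "False"
        # Assumption: the Operator's own version is listed as "operator".
        and versions.get("operator") == desired_version
    )


def runlevel_complete(cluster_operators, desired_version):
    """True only when every Operator in the Runlevel passes the gate."""
    return all(operator_ready(co, desired_version) for co in cluster_operators)


# Hypothetical Operator that is healthy but still reports the old version.
STILL_UPDATING = {
    "conditions": [
        {"type": "Available", "status": "True"},
        {"type": "Degraded", "status": "False"},
        {"type": "Progressing", "status": "True"},
    ],
    "versions": [{"name": "operator", "version": "4.17.1"}],
}

print(runlevel_complete([STILL_UPDATING], "4.17.2"))
```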

Some actions can take significant time to finish. The CVO waits for the actions to complete in order to ensure the subsequent Runlevels can proceed safely. Initially reconciling the new release’s manifests is expected to take 60 to 120 minutes in total; see Understanding OpenShift Container Platform update duration for more information about factors that influence update duration.

In the previous example diagram, the CVO is waiting until all work is completed at Runlevel 20. The CVO has applied all manifests to the Operators in the Runlevel, but the kube-apiserver-operator ClusterOperator performs some actions after its new version was deployed. The kube-apiserver-operator ClusterOperator declares this progress through the Progressing=True condition and by not declaring the new version as reconciled in its status.versions. The CVO waits until the ClusterOperator reports an acceptable status, and then it will start reconciling manifests at Runlevel 25.

1.2.6. Understanding how the Machine Config Operator updates nodes

The Machine Config Operator (MCO) applies a new machine configuration to each control plane node and compute node. During the machine configuration update, control plane nodes and compute nodes are organized into their own machine config pools, where the pools of machines are updated in parallel.

The .spec.maxUnavailable parameter, which has a default value of 1, determines how many nodes in a machine config pool can simultaneously undergo the update process.

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

When the machine configuration update process begins, the MCO checks the number of currently unavailable nodes in a pool. If there are fewer unavailable nodes than the value of .spec.maxUnavailable, the MCO initiates the following sequence of actions on available nodes in the pool:

- Cordon and drain the node

Note: When a node is cordoned, workloads cannot be scheduled to it.

- Update the system configuration and operating system (OS) of the node

- Reboot the node

- Uncordon the node

A node undergoing this process is unavailable until it is uncordoned and workloads can be scheduled to it again. The MCO begins updating nodes until the number of unavailable nodes is equal to the value of .spec.maxUnavailable.

As a node completes its update and becomes available, the number of unavailable nodes in the machine config pool is once again fewer than .spec.maxUnavailable. If there are remaining nodes that need to be updated, the MCO initiates the update process on a node until the .spec.maxUnavailable limit is once again reached. This process repeats until each control plane node and compute node has been updated.

The following example workflow describes how this process might occur in a machine config pool with 5 nodes, where .spec.maxUnavailable is 3 and all nodes are initially available:

- The MCO cordons nodes 1, 2, and 3, and begins to drain them.

- Node 2 finishes draining, reboots, and becomes available again. The MCO cordons node 4 and begins draining it.

- Node 1 finishes draining, reboots, and becomes available again. The MCO cordons node 5 and begins draining it.

- Node 3 finishes draining, reboots, and becomes available again.

- Node 5 finishes draining, reboots, and becomes available again.

- Node 4 finishes draining, reboots, and becomes available again.

Because the update process for each node is independent of other nodes, some nodes in the example above finish their update out of the order in which they were cordoned by the MCO.
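The example workflow above can be reproduced with a small event simulation. This Python sketch is a model of the described behavior, not MCO code: the simulate function is hypothetical, and the per-node durations are arbitrary time units chosen only so that the nodes finish in the same order as in the example.

```python
import heapq


def simulate(durations, max_unavailable):
    """Simulate a machine config pool update.

    durations: {node: time needed to drain, update, and reboot}. Nodes are
    cordoned in the dict's insertion order. Returns (events, peak), where
    events is a list of (time, action, node) and peak is the largest
    number of nodes that were unavailable at the same time.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    pending = list(durations)
    in_progress = []  # min-heap of (finish_time, node)
    events, now, peak = [], 0, 0
    while pending or in_progress:
        # Start updates while the pool is below the maxUnavailable limit.
        while pending and len(in_progress) < max_unavailable:
            node = pending.pop(0)
            heapq.heappush(in_progress, (now + durations[node], node))
            events.append((now, "cordon", node))
        peak = max(peak, len(in_progress))
        # Advance to the next node that finishes and uncordon it.
        now, node = heapq.heappop(in_progress)
        events.append((now, "uncordon", node))
    return events, peak


events, peak = simulate({1: 2, 2: 1, 3: 3, 4: 5, 5: 3}, max_unavailable=3)
for event in events:
    print(event)
```

With these durations, the nodes are uncordoned in the order 2, 1, 3, 5, 4, and the number of unavailable nodes never exceeds 3.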

You can check the status of the machine configuration update by running the following command:

$ oc get mcp

Example output

NAME     CONFIG                                             UPDATED   UPDATING   DEGRADED   MACHINECOUNT   READYMACHINECOUNT   UPDATEDMACHINECOUNT   DEGRADEDMACHINECOUNT   AGE
master   rendered-master-acd1358917e9f98cbdb599aea622d78b   True      False      False      3              3                   3                     0                      22h
worker   rendered-worker-1d871ac76e1951d32b2fe92369879826   False     True       False      2              1                   1                     0                      22h

1.3. Understanding update channels and releases

Update channels are the mechanism by which users declare the OpenShift Container Platform minor version they intend to update their clusters to. They also allow users to choose the timing and level of support their updates will have through the fast, stable, candidate, and eus channel options.

The Cluster Version Operator uses an update graph based on the channel declaration, along with other conditional information, to provide a list of recommended and conditional updates available to the cluster.

1.3.1. Overview of update channels

Update channels correspond to a minor version of OpenShift Container Platform. The version number in the channel represents the target minor version that the cluster will eventually be updated to, even if it is higher than the cluster’s current minor version.

For instance, OpenShift Container Platform 4.10 update channels provide the following recommendations:

- Updates within 4.10.

- Updates within 4.9.

- Updates from 4.9 to 4.10, allowing all 4.9 clusters to eventually update to 4.10, even if they do not immediately meet the minimum z-stream version requirements.

- eus-4.10 only: updates within 4.8.

- eus-4.10 only: updates from 4.8 to 4.9 to 4.10, allowing all 4.8 clusters to eventually update to 4.10.

4.10 update channels do not recommend updates to 4.11 or later releases. This strategy ensures that administrators must explicitly decide to update to the next minor version of OpenShift Container Platform.
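The rules listed above for 4.10 channels can be summarized as a small predicate. This is a simplified model written for illustration only: the function `channel_recommends` is hypothetical, it considers only minor versions, and it ignores z-stream requirements and conditional update risks.

```python
def channel_recommends(channel: str, current_minor: int, target_minor: int) -> bool:
    """Return True if a 4.y channel could recommend this minor-version hop.

    Simplified model: only minor versions are compared.
    """
    kind, version = channel.rsplit("-", 1)      # "eus-4.10" -> ("eus", "4.10")
    channel_minor = int(version.split(".")[1])  # "4.10" -> 10
    # EUS channels also cover clusters two minors back; other channels cover one.
    oldest = channel_minor - 2 if kind == "eus" else channel_minor - 1
    if not oldest <= current_minor <= channel_minor:
        return False
    # Stay on the same minor or move up one minor, never past the channel's minor.
    return current_minor <= target_minor <= min(current_minor + 1, channel_minor)

print(channel_recommends("stable-4.10", 9, 10))   # 4.9 to 4.10
print(channel_recommends("stable-4.10", 10, 11))  # 4.10 to 4.11
print(channel_recommends("eus-4.10", 8, 9))       # 4.8 to 4.9, EUS only
```

In this model, a 4.8 cluster on eus-4.10 reaches 4.10 through 4.9 in two hops, and no 4.10 channel ever points to 4.11.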

Update channels control only release selection and do not impact the version of the cluster that you install. The openshift-install binary file for a specific version of OpenShift Container Platform always installs that version.

OpenShift Container Platform 4.17 offers the following update channels:

- stable-4.17

- eus-4.y (only offered for EUS versions and meant to facilitate updates between EUS versions)

- fast-4.17

- candidate-4.17

If you do not want the Cluster Version Operator to fetch available updates from the update recommendation service, you can use the oc adm upgrade channel command in the OpenShift CLI to configure an empty channel. This configuration can be helpful if, for example, a cluster has restricted network access and there is no local, reachable update recommendation service.

Red Hat recommends updating only to versions suggested by OpenShift Update Service. For a minor version update, versions must be contiguous. Red Hat does not test updates to noncontiguous versions and cannot guarantee compatibility with earlier versions.

1.3.2. Update channels

OpenShift Container Platform offers several update channels for you to choose from, depending on your desired update strategy.

1.3.2.1. fast-4.17 channel

The fast-4.17 channel is updated with new versions of OpenShift Container Platform 4.17 as soon as Red Hat declares the version as a general availability (GA) release. As such, these releases are fully supported and intended for use in production environments.

1.3.2.2. stable-4.17 channel

While the fast-4.17 channel contains releases as soon as their errata are published, releases are added to the stable-4.17 channel after a delay. During this delay, data is collected from multiple sources and analyzed for indications of product regressions. Once a significant number of data points have been collected, these releases are added to the stable channel.

Because the time required to obtain a significant number of data points varies based on many factors, no Service Level Objective (SLO) is offered for the delay between promotion to the fast and stable channels. For more information, see "Choosing the correct channel for your cluster".

Newly installed clusters default to using stable channels.

1.3.2.3. eus-4.y channel

In addition to the stable channel, all even-numbered minor versions of OpenShift Container Platform offer Extended Update Support (EUS). Releases promoted to the stable channel are also simultaneously promoted to the EUS channels. The primary purpose of the EUS channels is to serve as a convenience for clusters performing a Control Plane Only update.

Both standard and non-EUS subscribers can access all EUS repositories and necessary RPMs (rhel-*-eus-rpms) to be able to support critical purposes such as debugging and building drivers.

1.3.2.4. candidate-4.17 channel

The candidate-4.17 channel offers unsupported early access to releases as soon as they are built. Releases present only in candidate channels may not contain the full feature set of eventual GA releases or features may be removed prior to GA. Additionally, these releases have not been subject to full Red Hat Quality Assurance and may not offer update paths to later GA releases. Given these caveats, the candidate channel is only suitable for testing purposes where destroying and recreating a cluster is acceptable.

1.3.3. Restricted network clusters

If you manage the container images for your OpenShift Container Platform clusters yourself, you must consult the Red Hat errata that is associated with product releases and note any comments that impact updates.

During an update, the user interface might warn you about switching between these versions, so you must ensure that you selected an appropriate version before you bypass those warnings.

1.3.4. Update recommendations in the channel

OpenShift Container Platform maintains an update recommendation service that knows your installed OpenShift Container Platform version and the path to take within the channel to get you to the next release.

Update paths are also limited to versions relevant to your currently selected channel and its promotion characteristics.

You can imagine seeing the following releases in your channel:

- 4.17.0

- 4.17.1

- 4.17.3

- 4.17.4

The service recommends only updates that have been tested and have no known serious regressions. For example, if your cluster is on 4.17.1 and OpenShift Container Platform suggests 4.17.4, then it is recommended to update from 4.17.1 to 4.17.4.

Do not rely on consecutive patch numbers. In this example, 4.17.2 is not and never was available in the channel, therefore updates to 4.17.2 are not recommended or supported.
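One way to picture the recommendation service is as a lookup in a directed graph of releases. The sketch below uses the four releases from the example; the edges between them are hypothetical and exist only to illustrate why a version that is absent from the channel is never a valid target.

```python
# Hypothetical update edges for the releases listed above.
# 4.17.2 does not appear because it was never available in the channel.
edges = {
    "4.17.0": ["4.17.1", "4.17.3", "4.17.4"],
    "4.17.1": ["4.17.3", "4.17.4"],
    "4.17.3": ["4.17.4"],
    "4.17.4": [],
}

def recommended_updates(current: str) -> list:
    """Return the targets the channel recommends from the current release."""
    return edges.get(current, [])

def is_recommended(current: str, target: str) -> bool:
    """Return True only if the channel has an edge from current to target."""
    return target in recommended_updates(current)

print(is_recommended("4.17.1", "4.17.4"))
print(is_recommended("4.17.1", "4.17.2"))
```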

1.3.5. Update recommendations and Conditional Updates

Red Hat monitors newly released versions and update paths associated with those versions before and after they are added to supported channels.

If Red Hat removes update recommendations from any supported release, a superseding update recommendation will be provided to a future version that corrects the regression. There may however be a delay while the defect is corrected, tested, and promoted to your selected channel.

Beginning in OpenShift Container Platform 4.10, when update risks are confirmed, they are declared as Conditional Update risks for the relevant updates. Each known risk may apply to all clusters or only clusters matching certain conditions. Some examples include having the Platform set to None or the CNI provider set to OpenShiftSDN. The Cluster Version Operator (CVO) continually evaluates known risks against the current cluster state. If no risks match, the update is recommended. If the risk matches, those update paths are labeled as updates with known issues, and a reference link to the known issues is provided. The reference link helps the cluster admin decide if they want to accept the risk and continue to update their cluster.

When Red Hat chooses to declare Conditional Update risks, that action is taken in all relevant channels simultaneously. Declaration of a Conditional Update risk may happen either before or after the update has been promoted to supported channels.

1.3.6. What to consider when choosing an update channel

Choosing the appropriate update channel for your cluster involves two decisions.

First, select the minor version you want for your cluster update. Selecting a channel which matches your current version ensures that you only apply z-stream updates and do not receive feature updates. Selecting an available channel which has a version greater than your current version will ensure that after one or more updates your cluster will have updated to that version. Your cluster will only be offered channels which match its current version, the next version, or the next EUS version.

Due to the complexity involved in planning updates between versions many minors apart, channels that assist in planning updates beyond a single Control Plane Only update are not offered.

Second, choose your desired rollout strategy. You can update as soon as Red Hat declares a release GA by selecting a fast channel, or you can wait for Red Hat to promote releases to the stable channel. Update recommendations offered in the fast-4.17 and stable-4.17 channels are both fully supported and benefit equally from ongoing data analysis. The promotion delay before a release reaches the stable channel is the only difference between the two channels. Updates to the latest z-streams are generally promoted to the stable channel within a week or two; however, the delay when initially rolling out updates to the latest minor version is much longer, generally 45-90 days. Consider the promotion delay when choosing your channel, because waiting for promotion to the stable channel might affect your scheduling plans.

Additionally, there are several factors which may lead an organization to move clusters to the fast channel either permanently or temporarily including the following:

- The desire to apply a specific fix known to affect your environment without delay.

- Application of CVE fixes without delay. CVE fixes may introduce regressions, so promotion delays still apply to z-streams with CVE fixes.

- Internal testing processes. If it takes your organization several weeks to qualify releases, it is best to test concurrently with our promotion process rather than waiting. This also ensures that any telemetry signal provided to Red Hat is factored into our rollout, so issues relevant to you can be fixed faster.

1.3.7. Considerations for switching between channels

You can switch your cluster’s update channel through the web console or the CLI, in order to access different update recommendations for your cluster.

You can switch the channel from the CLI by running the following command:

$ oc adm upgrade channel <channel>

The web console will display an alert if you switch to a channel that does not include the current release. The web console does not recommend any updates while on a channel without the current release. You can return to the original channel at any point, however.

Changing your channel might impact the supportability of your cluster. The following conditions might apply:

- Your cluster is still supported if you change from the stable-4.17 channel to the fast-4.17 channel.

- You can switch to the candidate-4.17 channel at any time, but some releases for this channel might be unsupported.

- You can switch from the candidate-4.17 channel to the fast-4.17 channel if your current release is a general availability release.

- You can always switch from the fast-4.17 channel to the stable-4.17 channel. There is a possible delay of up to a day for the release to be promoted to stable-4.17 if the current release was recently promoted.

1.4. Understanding OpenShift Container Platform update duration

OpenShift Container Platform update duration varies based on the deployment topology. You can understand the factors that affect update duration and use them to estimate how long the cluster update takes in your environment.

1.4.1. Factors affecting update duration

The duration of OpenShift Container Platform updates varies for several reasons.

The following factors can affect your cluster update duration:

- The reboot of compute nodes to the new machine configuration by the Machine Config Operator (MCO)

- The value of maxUnavailable in the machine config pool

  Warning

  The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

- The minimum number or percentages of replicas set in pod disruption budget (PDB)

- The number of nodes in the cluster

- The health of the cluster nodes

1.4.2. Cluster update phases

OpenShift Container Platform updates are done in multiple phases.

The cluster update happens in the following two phases:

- Cluster Version Operator (CVO) target update payload deployment

- Machine Config Operator (MCO) node updates

1.4.2.1. Cluster Version Operator target update payload deployment

In the first phase of the update, the Cluster Version Operator (CVO) retrieves the target update release image and applies it to the cluster.

All components which run as pods are updated during this phase, whereas the host components are updated by the Machine Config Operator (MCO). This process might take 60 to 120 minutes.

The CVO phase of the update does not restart the nodes.

1.4.2.2. Machine Config Operator node updates

In the second phase of the update, the Machine Config Operator (MCO) applies a new machine configuration to each control plane and compute node.

During this process, the MCO performs the following sequential actions on each node of the cluster:

- Cordon and drain the node

- Update the operating system (OS)

- Reboot the node

- Uncordon the node and schedule workloads on it

When a node is cordoned, workloads cannot be scheduled to it.

The time to complete this process depends on several factors including the node and infrastructure configuration. This process might take 5 or more minutes to complete per node.

In addition to MCO, you should consider the impact of the following parameters:

- The control plane node update duration is predictable and oftentimes shorter than compute nodes, because the control plane workloads are tuned for graceful updates and quick drains.

- You can update the compute nodes in parallel by setting the maxUnavailable field to greater than 1 in the Machine Config Pool (MCP). The MCO cordons the number of nodes specified in maxUnavailable and marks them unavailable for update.

- When you increase maxUnavailable on the MCP, it can help the pool to update more quickly. However, if maxUnavailable is set too high, and several nodes are cordoned simultaneously, the pod disruption budget (PDB) guarded workloads could fail to drain because a schedulable node cannot be found to run the replicas. If you increase maxUnavailable for the MCP, ensure that you still have sufficient schedulable nodes to allow PDB guarded workloads to drain.

Before you begin the update, you must ensure that all the nodes are available. Any unavailable nodes can significantly impact the update duration because the node unavailability affects the maxUnavailable and pod disruption budgets.

To check the status of nodes from the terminal, run the following command:

$ oc get node

If the status of the node is NotReady or SchedulingDisabled, then the node is not available and this impacts the update duration.

You can also check the status of nodes from the Administrator perspective in the web console by expanding Compute → Nodes.

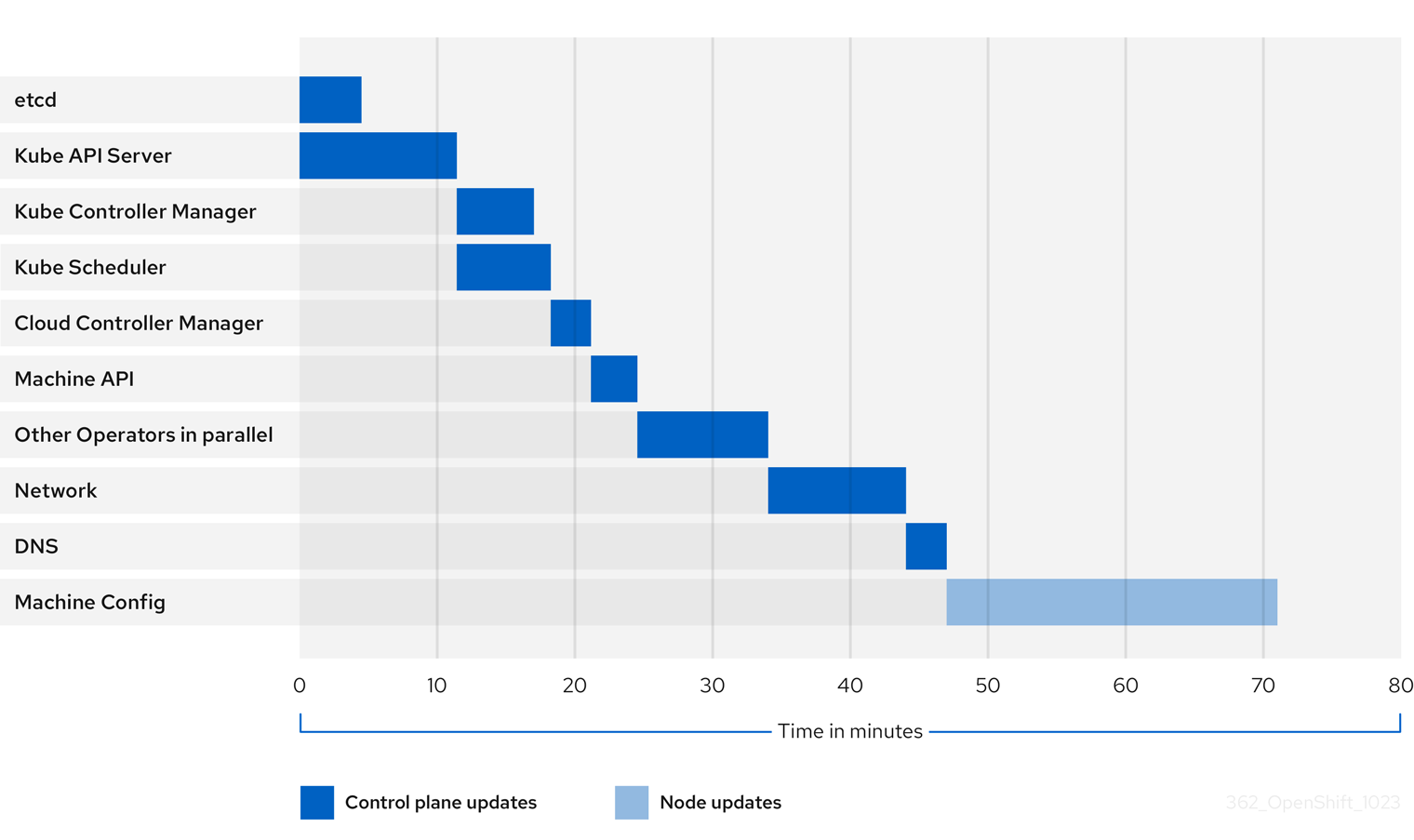

1.4.2.3. Example update duration of cluster Operators

You can review an example of the update duration for cluster Operators to better understand the factors that affect the duration of the update.

The previous diagram shows an example of the time that cluster Operators might take to update to their new versions. The example is based on a three-node AWS OVN cluster, which has a healthy compute MachineConfigPool and no workloads that take long to drain, updating from 4.13 to 4.14.

- The specific update duration of a cluster and its Operators can vary based on several cluster characteristics, such as the target version, the number of nodes, and the types of workloads scheduled to the nodes.

- Some Operators, such as the Cluster Version Operator, update themselves in a short amount of time. These Operators have either been omitted from the diagram or are included in the broader group of Operators labeled "Other Operators in parallel".

Each cluster Operator has characteristics that affect the time it takes to update itself. For instance, the Kube API Server Operator in this example took more than eleven minutes to update because kube-apiserver provides graceful termination support, meaning that existing, in-flight requests are allowed to complete gracefully. This might result in a longer shutdown of the kube-apiserver. In the case of this Operator, update speed is sacrificed to help prevent and limit disruptions to cluster functionality during an update.

Another characteristic that affects the update duration of an Operator is whether the Operator utilizes DaemonSets. The Network and DNS Operators utilize full-cluster DaemonSets, which can take time to roll out their version changes, and this is one of several reasons why these Operators might take longer to update themselves.

Additionally, the update duration for some Operators is heavily dependent on characteristics of the cluster itself. For example, the Machine Config Operator update applies machine configuration changes to each node in the cluster. A cluster with many nodes has a longer update duration for the Machine Config Operator compared to a cluster with fewer nodes.

Each cluster Operator is assigned a stage during which it can be updated. Operators within the same stage can update simultaneously, and Operators in a given stage cannot begin updating until all previous stages have been completed. For more information, see "Understanding how manifests are applied during an update".
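The staging rule has a direct consequence for timing: within a stage the slowest Operator sets the pace, and the stages add up. The following sketch illustrates that arithmetic only. The Operator names, stage assignments, and durations are hypothetical and do not reflect the real manifest ordering.

```python
def total_update_minutes(stages) -> int:
    """Sum the slowest Operator of each stage.

    Operators in one stage update simultaneously, and a stage starts only
    after every earlier stage has completed.
    """
    return sum(max(durations.values()) for durations in stages)

# Hypothetical stages: each dict maps an Operator name to its update minutes.
stages = [
    {"operator-a": 8},
    {"operator-b": 11, "operator-c": 3},
    {"operator-d": 9, "operator-e": 6},
]
total = total_update_minutes(stages)
print(total)
```

In this model, speeding up `operator-c` would not shorten the update, because `operator-b` in the same stage takes longer.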

1.4.3. How to estimate cluster update time

Historical update duration of similar clusters provides you the best estimate for the future cluster updates. If you do not have historical data, you can calculate an estimate of the update duration.

You can use the following convention to estimate your cluster update time:

Cluster update time = CVO target update payload deployment time + (# node update iterations x MCO node update time)

A node update iteration consists of one or more nodes updated in parallel. The control plane nodes are always updated in parallel with the compute nodes. In addition, one or more compute nodes can be updated in parallel based on the maxUnavailable value.

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

For example, to estimate the update time, consider an OpenShift Container Platform cluster with three control plane nodes and six compute nodes, where each host takes about 5 minutes to reboot.

The time it takes to reboot a particular node varies significantly. In cloud instances, the reboot might take about 1 to 2 minutes, whereas in physical bare metal hosts the reboot might take more than 15 minutes.

In a scenario where you set maxUnavailable to 1 for both the control plane and compute node Machine Config Pools (MCPs), all six compute nodes update one after another, one node in each iteration:

Cluster update time = 60 + (6 x 5) = 90 minutes

In a scenario where you set maxUnavailable to 2 for the compute node MCP, two compute nodes update in parallel in each iteration. Therefore, it takes a total of three iterations to update all the nodes:

Cluster update time = 60 + (3 x 5) = 75 minutes

The default setting for maxUnavailable is 1 for all the MCPs in OpenShift Container Platform. It is recommended that you do not change the maxUnavailable in the control plane MCP.
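The estimate above can be written as a short function. This is a sketch under stated assumptions: the function name and parameters are hypothetical, and because control plane nodes update in parallel with compute nodes, the number of iterations is taken here as the larger of the two pools' iteration counts.

```python
import math

def estimate_update_minutes(cvo_minutes, node_minutes, compute_nodes,
                            compute_max_unavailable=1,
                            control_plane_nodes=3,
                            control_plane_max_unavailable=1):
    """Estimate total update time in minutes using the convention above."""
    # Each iteration updates up to maxUnavailable nodes of a pool in parallel.
    compute_iterations = math.ceil(compute_nodes / compute_max_unavailable)
    control_iterations = math.ceil(control_plane_nodes / control_plane_max_unavailable)
    # The two pools progress in parallel, so the slower pool decides.
    iterations = max(compute_iterations, control_iterations)
    return cvo_minutes + iterations * node_minutes

# Six compute nodes, 60 minutes for the CVO phase, 5 minutes per node.
print(estimate_update_minutes(60, 5, compute_nodes=6))
print(estimate_update_minutes(60, 5, compute_nodes=6, compute_max_unavailable=2))
```

The two calls correspond to the two scenarios worked through above, with maxUnavailable set to 1 and to 2 for the compute pool.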

1.4.4. Red Hat Enterprise Linux (RHEL) compute nodes

Red Hat Enterprise Linux (RHEL) compute nodes require the additional use of openshift-ansible to update node binary components. The actual time spent updating RHEL compute nodes should not be significantly different from Red Hat Enterprise Linux CoreOS (RHCOS) compute nodes.

Chapter 2. Preparing to update a cluster

2.1. Preparing to update to OpenShift Container Platform 4.17

Learn more about administrative tasks that cluster admins must perform to successfully initialize an update, as well as optional guidelines for ensuring a successful update.

2.1.1. Kubernetes API removals

There are no Kubernetes API removals in OpenShift Container Platform 4.17.

2.1.2. Assessing the risk of conditional updates

A conditional update is an update target that is available but not recommended due to a known risk that applies to your cluster. The Cluster Version Operator (CVO) periodically queries the OpenShift Update Service (OSUS) for the most recent data about update recommendations, and some potential update targets might have risks associated with them.

The CVO evaluates the conditional risks, and if the risks are not applicable to the cluster, then the target version is available as a recommended update path for the cluster. If the risk is determined to be applicable, or if for some reason CVO cannot evaluate the risk, then the update target is available to the cluster as a conditional update.
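The decision described above can be sketched as a three-valued check per risk. This is an illustration, not the CVO implementation: the cluster dictionary, its keys, and the risk names are hypothetical, and each check returns True (the risk applies), False (it does not apply), or None (it cannot be evaluated).

```python
from typing import Callable, Dict, Optional

Cluster = Dict[str, str]
RiskCheck = Callable[[Cluster], Optional[bool]]

def classify_update(cluster: Cluster, risks: Dict[str, RiskCheck]) -> str:
    """Return "recommended" or "conditional" for one update target."""
    for check in risks.values():
        result = check(cluster)
        # A matching risk, or one that cannot be evaluated, makes the
        # target a conditional update.
        if result is None or result:
            return "conditional"
    return "recommended"

def uses_openshift_sdn(cluster: Cluster) -> Optional[bool]:
    # Hypothetical check; None means the information is unavailable.
    cni = cluster.get("cni")
    return None if cni is None else cni == "OpenShiftSDN"

risks = {"HypotheticalSDNRisk": uses_openshift_sdn}
print(classify_update({"cni": "OVNKubernetes"}, risks))
print(classify_update({"cni": "OpenShiftSDN"}, risks))
print(classify_update({}, risks))
```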

When you encounter a conditional update while you are trying to update to a target version, you must assess the risk of updating your cluster to that version. Generally, if you do not have a specific need to update to that target version, it is best to wait for a recommended update path from Red Hat.

However, if you have a strong reason to update to that version, for example, if you need to fix an important CVE, then the benefit of fixing the CVE might outweigh the risk of the update being problematic for your cluster. You can complete the following tasks to determine whether you agree with the Red Hat assessment of the update risk:

- Complete extensive testing in a non-production environment to the extent that you are comfortable completing the update in your production environment.

- Follow the links provided in the conditional update description, investigate the bug, and determine if it is likely to cause issues for your cluster. If you need help understanding the risk, contact Red Hat Support.

2.1.3. etcd backups before cluster updates

etcd backups record the state of your cluster and all of its resource objects. You can use backups to attempt restoring the state of a cluster in disaster scenarios where you cannot recover a cluster in its currently dysfunctional state.

In the context of updates, you can attempt an etcd restoration of the cluster if an update introduced catastrophic conditions that cannot be fixed without reverting to the previous cluster version. Because etcd restorations might be destructive and destabilizing to a running cluster, use them only as a last resort.

Due to their high consequences, etcd restorations are not intended to be used as a rollback solution. Rolling your cluster back to a previous version is not supported. If your update is failing to complete, contact Red Hat support.

There are several factors that affect the viability of an etcd restoration. For more information, see "Backing up etcd data" and "Restoring to a previous cluster state".

2.1.4. Best practices for cluster updates

OpenShift Container Platform provides a robust update experience that minimizes workload disruptions during an update. Updates will not begin unless the cluster is in an upgradeable state at the time of the update request.

This design enforces some key conditions before initiating an update, but there are a number of actions you can take to increase your chances of a successful cluster update.

2.1.4.1. Choose versions recommended by the OpenShift Update Service

The OpenShift Update Service (OSUS) provides update recommendations based on cluster characteristics such as the cluster’s subscribed channel. The Cluster Version Operator saves these recommendations as either recommended or conditional updates. While it is possible to attempt an update to a version that is not recommended by OSUS, following a recommended update path protects users from encountering known issues or unintended consequences on the cluster.

Choose only update targets that are recommended by OSUS to ensure a successful update.

2.1.4.2. Address all critical alerts on the cluster

Critical alerts must always be addressed as soon as possible, but it is especially important to address these alerts and resolve any problems before initiating a cluster update. Failing to address critical alerts before beginning an update can cause problematic conditions for the cluster.

In the Administrator perspective of the web console, navigate to Observe → Alerting to find critical alerts.

2.1.4.3. Ensure that the cluster is in an Upgradable state

When one or more Operators have not reported their Upgradeable condition as True for more than an hour, the ClusterNotUpgradeable warning alert is triggered in the cluster. In most cases this alert does not block patch updates, but you cannot perform a minor version update until you resolve this alert and all Operators report Upgradeable as True.

For more information about the Upgradeable condition, see "Understanding cluster Operator condition types" in the additional resources section.

2.1.4.3.1. SDN support removal

The OpenShift SDN network plugin was deprecated in versions 4.15 and 4.16. With this release, the SDN network plugin is no longer supported and the content has been removed from the documentation.

If your OpenShift Container Platform cluster is still using the OpenShift SDN CNI, see Migrating from the OpenShift SDN network plugin.

It is not possible to update a cluster to OpenShift Container Platform 4.17 if it is using the OpenShift SDN network plugin. You must migrate to the OVN-Kubernetes plugin before upgrading to OpenShift Container Platform 4.17.

2.1.4.4. Ensure that enough spare nodes are available

A cluster should not be running with little to no spare node capacity, especially when initiating a cluster update. Nodes that are not running and available may limit a cluster’s ability to perform an update with minimal disruption to cluster workloads.

Depending on the configured value of the cluster’s maxUnavailable spec, the cluster might not be able to apply machine configuration changes to nodes if there is an unavailable node. Additionally, if compute nodes do not have enough spare capacity, workloads might not be able to temporarily shift to another node while the first node is taken offline for an update.

Make sure that you have enough available nodes in each worker pool, as well as enough spare capacity on your compute nodes, to increase the chance of successful node updates.

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.
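The effect of an already unavailable node can be expressed as simple arithmetic. The helper below is a hypothetical sketch that assumes any unavailable node in a pool counts against that pool's maxUnavailable limit.

```python
def nodes_mco_can_start(max_unavailable: int, unavailable_now: int) -> int:
    """Return how many more nodes in the pool can begin updating right now."""
    # The MCO stops starting node updates once the limit is reached.
    return max(0, max_unavailable - unavailable_now)

# With the default maxUnavailable of 1, a single NotReady node stalls the pool.
print(nodes_mco_can_start(max_unavailable=1, unavailable_now=1))
print(nodes_mco_can_start(max_unavailable=1, unavailable_now=0))
```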

2.1.4.5. Ensure that the cluster’s PodDisruptionBudget is properly configured

You can use the PodDisruptionBudget object to define the minimum number or percentage of pod replicas that must be available at any given time. This configuration protects workloads from disruptions during maintenance tasks such as cluster updates.

However, it is possible to configure the PodDisruptionBudget for a given topology in a way that prevents nodes from being drained and updated during a cluster update.

When planning a cluster update, check the configuration of the PodDisruptionBudget object for the following factors:

- For highly available workloads, make sure there are replicas that can be temporarily taken offline without being prohibited by the PodDisruptionBudget.

- For workloads that are not highly available, make sure they are either not protected by a PodDisruptionBudget or have some alternative mechanism for draining these workloads eventually, such as periodic restart or guaranteed eventual termination.
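A quick way to reason about the factors above is to compute how many replicas a budget allows to be offline at once. The sketch below is an approximation for planning purposes: the function names are hypothetical, all replicas are assumed healthy, and a percentage minimum is assumed to round up to a whole pod.

```python
def required_available(replicas: int, min_available) -> int:
    """Convert a minimum given as a count or a "NN%" string to a pod count."""
    if isinstance(min_available, str) and min_available.endswith("%"):
        percent = int(min_available[:-1])
        # Integer ceiling of replicas * percent / 100 (assumed rounding up).
        return (replicas * percent + 99) // 100
    return int(min_available)

def allowed_disruptions(replicas: int, min_available) -> int:
    """Return how many replicas can be evicted without violating the budget."""
    return max(0, replicas - required_available(replicas, min_available))

print(allowed_disruptions(3, 2))      # highly available workload
print(allowed_disruptions(1, 1))      # single replica with a strict budget
print(allowed_disruptions(4, "50%"))
```

A result of 0 means that a node running one of these pods cannot be drained until the budget or the replica count changes.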

2.2. Preparing to update a cluster with manually maintained credentials

The Cloud Credential Operator (CCO) Upgradable status for a cluster with manually maintained credentials is False by default.

- For minor releases, for example, from 4.12 to 4.13, this status prevents you from updating until you have addressed any updated permissions and annotated the CloudCredential resource to indicate that the permissions are updated as needed for the next version. This annotation changes the Upgradable status to True.

- For z-stream releases, for example, from 4.13.0 to 4.13.1, no permissions are added or changed, so the update is not blocked.

Before updating a cluster with manually maintained credentials, you must accommodate any new or changed credentials in the release image for the version of OpenShift Container Platform you are updating to.
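The blocking rule for clusters with manually maintained credentials can be summarized in a few lines. This is a simplified sketch: the function names are hypothetical, and the boolean `permissions_annotated` stands in for the annotation on the CloudCredential resource rather than modeling its actual format.

```python
def minor_of(version: str) -> tuple:
    """Return (major, minor) from a version such as "4.13" or "4.13.1"."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)

def cco_blocks_update(current: str, target: str, permissions_annotated: bool) -> bool:
    """Return True if the CCO status would block this update."""
    is_minor_update = minor_of(current) != minor_of(target)
    # Only minor updates require the permissions to be reviewed and annotated.
    return is_minor_update and not permissions_annotated

print(cco_blocks_update("4.12", "4.13", permissions_annotated=False))
print(cco_blocks_update("4.12", "4.13", permissions_annotated=True))
print(cco_blocks_update("4.13.0", "4.13.1", permissions_annotated=False))
```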

2.2.1. Update requirements for clusters with manually maintained credentials

Before you update a cluster that uses manually maintained credentials with the Cloud Credential Operator (CCO), you must update the cloud provider resources for the new release.

If the cloud credential management for your cluster was configured using the CCO utility (ccoctl), use the ccoctl utility to update the resources. Clusters that were configured to use manual mode without the ccoctl utility require manual updates for the resources.

After updating the cloud provider resources, you must update the upgradeable-to annotation for the cluster to indicate that it is ready to update.

The process to update the cloud provider resources and the upgradeable-to annotation can only be completed by using command-line tools.

2.2.1.1. Cloud credential configuration options and update requirements by platform type

Some platforms only support using the CCO in one mode. For clusters that are installed on those platforms, the platform type determines the credentials update requirements.

For platforms that support using the CCO in multiple modes, you must determine which mode the cluster is configured to use and take the required actions for that configuration.

Figure 2.1. Credentials update requirements by platform type

- Red Hat OpenStack Platform (RHOSP) and VMware vSphere

These platforms do not support using the CCO in manual mode. Clusters on these platforms handle changes in cloud provider resources automatically and do not require an update to the

upgradeable-toannotation.Administrators of clusters on these platforms should skip the manually maintained credentials section of the update process.

- IBM Cloud and Nutanix

Clusters installed on these platforms are configured using the

ccoctlutility.Administrators of clusters on these platforms must take the following actions:

-

Extract and prepare the

CredentialsRequestcustom resources (CRs) for the new release. -

Configure the

ccoctlutility for the new release and use it to update the cloud provider resources. -

Indicate that the cluster is ready to update with the

upgradeable-toannotation.

-

Extract and prepare the

- Microsoft Azure Stack Hub

These clusters use manual mode with long-term credentials and do not use the ccoctl utility.

Administrators of clusters on these platforms must take the following actions:

  - Extract and prepare the CredentialsRequest custom resources (CRs) for the new release.

  - Manually update the cloud provider resources for the new release.

  - Indicate that the cluster is ready to update with the upgradeable-to annotation.

- Amazon Web Services (AWS), global Microsoft Azure, and Google Cloud

Clusters installed on these platforms support multiple CCO modes.

The required update process depends on the mode that the cluster is configured to use. If you are not sure what mode the CCO is configured to use on your cluster, you can use the web console or the CLI to determine this information.
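The per-platform requirements above can be read as a simple lookup. The following shell sketch restates them for illustration only: the function name credentials_update_actions and the short platform keys (rhosp, vsphere, ibmcloud, nutanix, azurestackhub, aws, azure, gcp) are hypothetical labels chosen for this example and are not values that oc or the cluster reports.

```shell
#!/bin/sh
# Sketch only: the function name and the platform keys are hypothetical.
# Prints the credentials-related actions required before an update.
credentials_update_actions() {
  case "$1" in
    rhosp|vsphere)
      # No manual mode on these platforms; nothing to prepare.
      echo "skip the manually maintained credentials section"
      ;;
    ibmcloud|nutanix)
      echo "extract CredentialsRequest CRs; update cloud resources with ccoctl; set upgradeable-to annotation"
      ;;
    azurestackhub)
      echo "extract CredentialsRequest CRs; update cloud resources manually; set upgradeable-to annotation"
      ;;
    aws|azure|gcp)
      # Multiple CCO modes are supported, so the mode decides the actions.
      echo "determine the CCO mode first"
      ;;
    *)
      echo "unknown platform: $1" >&2
      return 1
      ;;
  esac
}

credentials_update_actions nutanix
```

For the multi-mode platforms, the result only says that the CCO mode must be determined first, which is what the following two sections describe.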

2.2.1.2. Determining the Cloud Credential Operator mode by using the web console

You can determine what mode the Cloud Credential Operator (CCO) is configured to use by using the web console.

Only Amazon Web Services (AWS), global Microsoft Azure, and Google Cloud clusters support multiple CCO modes.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator permissions.

Procedure

- Log in to the OpenShift Container Platform web console as a user with the cluster-admin role.

- Navigate to Administration → Cluster Settings.

- On the Cluster Settings page, select the Configuration tab.

- Under Configuration resource, select CloudCredential.

- On the CloudCredential details page, select the YAML tab.

- In the YAML block, check the value of spec.credentialsMode. The following values are possible, though not all are supported on all platforms:

  - '': The CCO is operating in the default mode. In this configuration, the CCO operates in mint or passthrough mode, depending on the credentials provided during installation.

  - Mint: The CCO is operating in mint mode.

  - Passthrough: The CCO is operating in passthrough mode.

  - Manual: The CCO is operating in manual mode.

Important

To determine the specific configuration of an AWS, Google Cloud, or global Microsoft Azure cluster that has a spec.credentialsMode of '', Mint, or Manual, you must investigate further.

AWS and Google Cloud clusters support using mint mode with the root secret deleted. If the cluster is specifically configured to use mint mode or uses mint mode by default, you must determine if the root secret is present on the cluster before updating.

An AWS, Google Cloud, or global Microsoft Azure cluster that uses manual mode might be configured to create and manage cloud credentials from outside of the cluster with AWS STS, Google Cloud Workload Identity, or Microsoft Entra Workload ID. You can determine whether your cluster uses this strategy by examining the cluster Authentication object.

- AWS or Google Cloud clusters that use mint mode only: To determine whether the cluster is operating without the root secret, navigate to Workloads → Secrets and look for the root secret for your cloud provider.

Note

Ensure that the Project dropdown is set to All Projects.

Platform        Secret name
AWS             aws-creds
Google Cloud    gcp-credentials

  - If you see one of these values, your cluster is using mint or passthrough mode with the root secret present.

  - If you do not see these values, your cluster is using the CCO in mint mode with the root secret removed.

- AWS, Google Cloud, or global Microsoft Azure clusters that use manual mode only: To determine whether the cluster is configured to create and manage cloud credentials from outside of the cluster, you must check the cluster Authentication object YAML values.

  - Navigate to Administration → Cluster Settings.

  - On the Cluster Settings page, select the Configuration tab.

  - Under Configuration resource, select Authentication.

  - On the Authentication details page, select the YAML tab.

  - In the YAML block, check the value of the .spec.serviceAccountIssuer parameter.

    - A value that contains a URL that is associated with your cloud provider indicates that the CCO is using manual mode with short-term credentials for components. These clusters are configured using the ccoctl utility to create and manage cloud credentials from outside of the cluster.

    - An empty value ('') indicates that the cluster is using the CCO in manual mode but was not configured using the ccoctl utility.

Next steps

- If you are updating a cluster that has the CCO operating in mint or passthrough mode and the root secret is present, you do not need to update any cloud provider resources and can continue to the next part of the update process.

- If your cluster is using the CCO in mint mode with the root secret removed, you must reinstate the credential secret with the administrator-level credential before continuing to the next part of the update process.

- If your cluster was configured using the CCO utility (ccoctl), you must take the following actions:

  - Extract and prepare the CredentialsRequest custom resources (CRs) for the new release.

  - Configure the ccoctl utility for the new release and use it to update the cloud provider resources.

  - Update the upgradeable-to annotation to indicate that the cluster is ready to update.

- If your cluster is using the CCO in manual mode but was not configured using the ccoctl utility, you must take the following actions:

  - Extract and prepare the CredentialsRequest custom resources (CRs) for the new release.

  - Manually update the cloud provider resources for the new release.

  - Update the upgradeable-to annotation to indicate that the cluster is ready to update.

2.2.1.3. Determining the Cloud Credential Operator mode by using the CLI

You can determine what mode the Cloud Credential Operator (CCO) is configured to use by using the CLI.

Only Amazon Web Services (AWS), global Microsoft Azure, and Google Cloud clusters support multiple CCO modes.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator permissions.

- You have installed the OpenShift CLI (oc).

Procedure

- Log in to oc on the cluster as a user with the cluster-admin role.

- To determine the mode that the CCO is configured to use, enter the following command:

$ oc get cloudcredentials cluster \
    -o=jsonpath={.spec.credentialsMode}

The following output values are possible, though not all are supported on all platforms:

  - '': The CCO is operating in the default mode. In this configuration, the CCO operates in mint or passthrough mode, depending on the credentials provided during installation.

  - Mint: The CCO is operating in mint mode.

  - Passthrough: The CCO is operating in passthrough mode.

  - Manual: The CCO is operating in manual mode.

Important

To determine the specific configuration of an AWS, Google Cloud, or global Microsoft Azure cluster that has a spec.credentialsMode of '', Mint, or Manual, you must investigate further.

AWS and Google Cloud clusters support using mint mode with the root secret deleted. If the cluster is specifically configured to use mint mode or uses mint mode by default, you must determine if the root secret is present on the cluster before updating.

An AWS, Google Cloud, or global Microsoft Azure cluster that uses manual mode might be configured to create and manage cloud credentials from outside of the cluster with AWS STS, Google Cloud Workload Identity, or Microsoft Entra Workload ID. You can determine whether your cluster uses this strategy by examining the cluster Authentication object.

- AWS or Google Cloud clusters that use mint mode only: To determine whether the cluster is operating without the root secret, run the following command:

$ oc get secret <secret_name> \
    -n=kube-system

where <secret_name> is aws-creds for AWS or gcp-credentials for Google Cloud.

If the root secret is present, the output of this command returns information about the secret. An error indicates that the root secret is not present on the cluster.

- AWS, Google Cloud, or global Microsoft Azure clusters that use manual mode only: To determine whether the cluster is configured to create and manage cloud credentials from outside of the cluster, run the following command:

$ oc get authentication cluster \
    -o jsonpath \
    --template='{ .spec.serviceAccountIssuer }'

This command displays the value of the .spec.serviceAccountIssuer parameter in the cluster Authentication object.

  - An output of a URL that is associated with your cloud provider indicates that the CCO is using manual mode with short-term credentials for components. These clusters are configured using the ccoctl utility to create and manage cloud credentials from outside of the cluster.

  - An empty output indicates that the cluster is using the CCO in manual mode but was not configured using the ccoctl utility.

Next steps

- If you are updating a cluster that has the CCO operating in mint or passthrough mode and the root secret is present, you do not need to update any cloud provider resources and can continue to the next part of the update process.

- If your cluster is using the CCO in mint mode with the root secret removed, you must reinstate the credential secret with the administrator-level credential before continuing to the next part of the update process.

- If your cluster was configured using the CCO utility (ccoctl), you must take the following actions:

  - Extract and prepare the CredentialsRequest custom resources (CRs) for the new release.

  - Configure the ccoctl utility for the new release and use it to update the cloud provider resources.

  - Update the upgradeable-to annotation to indicate that the cluster is ready to update.

- If your cluster is using the CCO in manual mode but was not configured using the ccoctl utility, you must take the following actions:

  - Extract and prepare the CredentialsRequest custom resources (CRs) for the new release.

  - Manually update the cloud provider resources for the new release.

  - Update the upgradeable-to annotation to indicate that the cluster is ready to update.
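The decision that the preceding checks lead to can be sketched as a small shell function. This is an illustration under stated assumptions, not part of the product: the function name cco_next_step and its three-argument convention are hypothetical, any non-empty serviceAccountIssuer value is treated as a cloud provider URL, and the root secret check is assumed to have been done as described for AWS and Google Cloud. Passthrough mode without a root secret is not covered by this section, so the sketch reports it as an error.

```shell
#!/bin/sh
# Sketch only: cco_next_step and its argument convention are hypothetical.
# Usage: cco_next_step MODE ROOT_SECRET_PRESENT ISSUER
#   MODE                 value of spec.credentialsMode ("", Mint, Passthrough, Manual)
#   ROOT_SECRET_PRESENT  yes or no (result of the root secret check)
#   ISSUER               value of .spec.serviceAccountIssuer (may be empty)
cco_next_step() {
  mode=$1
  root_secret=$2
  issuer=$3
  case "$mode" in
    ""|Mint|Passthrough)
      if [ "$root_secret" = "yes" ]; then
        echo "continue: no cloud provider resource update needed"
      elif [ "$mode" = "Passthrough" ]; then
        echo "not covered: passthrough mode without a root secret" >&2
        return 1
      else
        echo "reinstate the root secret with the administrator-level credential"
      fi
      ;;
    Manual)
      # Assumption: any non-empty issuer counts as a cloud provider URL.
      if [ -n "$issuer" ]; then
        echo "extract CRs, update resources with ccoctl, set upgradeable-to"
      else
        echo "extract CRs, update resources manually, set upgradeable-to"
      fi
      ;;
    *)
      echo "unrecognized mode: $mode" >&2
      return 1
      ;;
  esac
}

# Possible wiring with the commands from this section (needs a logged-in oc):
#   mode=$(oc get cloudcredentials cluster -o=jsonpath={.spec.credentialsMode})
#   issuer=$(oc get authentication cluster -o jsonpath --template='{ .spec.serviceAccountIssuer }')
#   if oc get secret aws-creds -n=kube-system >/dev/null 2>&1; then present=yes; else present=no; fi
#   cco_next_step "$mode" "$present" "$issuer"
```

Keeping the decision separate from the oc calls means the logic can be exercised with literal values before it is run against a cluster.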

2.2.2. Extracting and preparing credentials request resources

Before updating a cluster that uses the Cloud Credential Operator (CCO) in manual mode, you must extract and prepare the CredentialsRequest custom resources (CRs) for the new release.

Prerequisites

- Install the version of the OpenShift CLI (oc) that matches the version that you are updating to.

- Log in to the cluster as a user with cluster-admin privileges.

Procedure

- Obtain the pull spec for the update that you want to apply by running the following command:

$ oc adm upgrade

The output of this command includes pull specs for the available updates.

- Set a $RELEASE_IMAGE variable with the release image that you want to use by running the following command:

$ RELEASE_IMAGE=<update_pull_spec>

where <update_pull_spec> is the pull spec for the release image that you want to use. For example:

quay.io/openshift-release-dev/ocp-release@sha256:6a899c54dda6b844bb12a247e324a0f6cde367e880b73ba110c056df6d018032

- Extract the list of CredentialsRequest custom resources (CRs) from the OpenShift Container Platform release image by running the following command:

$ oc adm release extract \
    --from=$RELEASE_IMAGE \
    --credentials-requests \
    --included \
    --to=<path_to_directory_for_credentials_requests>

This command creates a YAML file for each CredentialsRequest object.

- For each CredentialsRequest CR in the release image, ensure that a namespace that matches the text in the spec.secretRef.namespace field exists in the cluster. This field is where the generated secrets that hold the credentials configuration are stored, so the namespace must exist to hold the generated secret.
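Reading the spec.secretRef.namespace field from each extracted file can be partly scripted. The following sketch assumes that each file contains a single CredentialsRequest object with a block-style secretRef mapping. The function name secret_ref_namespace is hypothetical, and the trimmed sample manifest, including its resource names, is illustrative rather than copied from a release image.

```shell
#!/bin/sh
# Sketch only: secret_ref_namespace is a hypothetical helper, not part of oc.
# Prints the namespace found under secretRef in one CredentialsRequest YAML file.
secret_ref_namespace() {
  awk '
    /^[ \t]*$/ { next }                       # skip blank lines
    /^[ \t]*secretRef:[ \t]*$/ {              # entering the secretRef mapping
      in_ref = 1
      ref_indent = match($0, /[^ ]/)          # column of the secretRef key
      next
    }
    in_ref {
      if (match($0, /[^ ]/) <= ref_indent) {  # indentation dropped: mapping ended
        in_ref = 0
        next
      }
      if ($1 == "namespace:") { print $2; exit }
    }
  ' "$1"
}

# Illustrative, trimmed manifest (providerSpec omitted; names are examples).
sample=$(mktemp)
cat > "$sample" <<'EOF'
apiVersion: cloudcredential.openshift.io/v1
kind: CredentialsRequest
metadata:
  name: openshift-machine-api-aws
  namespace: openshift-cloud-credential-operator
spec:
  secretRef:
    name: aws-cloud-credentials
    namespace: openshift-machine-api
EOF

secret_ref_namespace "$sample"   # prints openshift-machine-api
rm -f "$sample"
```

The helper deliberately ignores metadata.namespace, which names where the CredentialsRequest object itself lives rather than where the generated secret is stored. Run over every file in the extraction directory, it yields the list of namespaces to compare against the cluster.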

The