Chapter 1. OpenShift Container Platform 4.8 release notes

Red Hat OpenShift Container Platform provides developers and IT organizations with a hybrid cloud application platform for deploying both new and existing applications on secure, scalable resources with minimal configuration and management overhead. OpenShift Container Platform supports a wide selection of programming languages and frameworks, such as Java, JavaScript, Python, Ruby, and PHP.

Built on Red Hat Enterprise Linux (RHEL) and Kubernetes, OpenShift Container Platform provides a more secure and scalable multi-tenant operating system for today’s enterprise-class applications, while delivering integrated application runtimes and libraries. OpenShift Container Platform enables organizations to meet security, privacy, compliance, and governance requirements.

1.1. About this release

OpenShift Container Platform (RHSA-2021:2438) is now available. This release uses Kubernetes 1.21 with CRI-O runtime. New features, changes, and known issues that pertain to OpenShift Container Platform 4.8 are included in this topic.

Red Hat did not publicly release OpenShift Container Platform 4.8.0 as the GA version and, instead, is releasing OpenShift Container Platform 4.8.2 as the GA version.

OpenShift Container Platform 4.8 clusters are available at https://console.redhat.com/openshift. The Red Hat OpenShift Cluster Manager application for OpenShift Container Platform allows you to deploy OpenShift clusters to either on-premises or cloud environments.

OpenShift Container Platform 4.8 is supported on Red Hat Enterprise Linux (RHEL) 7.9 or later, as well as on Red Hat Enterprise Linux CoreOS (RHCOS) 4.8.

You must use RHCOS machines for the control plane, and you can use either RHCOS or Red Hat Enterprise Linux (RHEL) 7.9 or later for compute machines.

Because only RHEL 7.9 or later is supported for compute machines, you must not upgrade the RHEL compute machines to RHEL 8.

OpenShift Container Platform 4.8 is an Extended Update Support (EUS) release. More information on Red Hat OpenShift EUS is available in OpenShift Life Cycle and OpenShift EUS Overview.

With the release of OpenShift Container Platform 4.8, version 4.5 is now end of life. For more information, see the Red Hat OpenShift Container Platform Life Cycle Policy.

1.2. Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties.

As part of that effort, with this release the following changes are in place:

- The OpenShift Docs GitHub repository master branch has been renamed to main.

- We have begun to progressively replace the terminology of "master" with "control plane". You will notice throughout the documentation that we use both terms, with "master" in parentheses. For example "… the control plane node (also known as the master node)". In a future release, we will update this to be "the control plane node".

1.3. OpenShift Container Platform layered and dependent component support and compatibility

The scope of support for layered and dependent components of OpenShift Container Platform changes independently of the OpenShift Container Platform version. To determine the current support status and compatibility for an add-on, refer to its release notes. For more information, see the Red Hat OpenShift Container Platform Life Cycle Policy.

1.4. New features and enhancements

This release adds improvements related to the following components and concepts.

1.4.1. Red Hat Enterprise Linux CoreOS (RHCOS)

1.4.1.1. RHCOS now uses RHEL 8.4

RHCOS now uses Red Hat Enterprise Linux (RHEL) 8.4 packages in OpenShift Container Platform 4.8, as well as in OpenShift Container Platform 4.7.24 and above. This enables you to have the latest fixes, features, and enhancements, as well as the latest hardware support and driver updates. OpenShift Container Platform 4.6 is an Extended Update Support (EUS) release that will continue to use RHEL 8.2 EUS packages for the entirety of its lifecycle.

1.4.1.2. Using stream metadata for improved boot image automation

Stream metadata provides a standardized JSON format for injecting metadata into the cluster during OpenShift Container Platform installation. For improved automation, the new openshift-install coreos print-stream-json command provides a method for printing stream metadata in a scriptable, machine-readable format.

For user-provisioned installations, the openshift-install binary contains references to the version of RHCOS boot images that are tested for use with OpenShift Container Platform, such as the AWS AMI. You can now parse the stream metadata from a Go program by using the official stream-metadata-go library at https://github.com/coreos/stream-metadata-go.

For more information, see Accessing RHCOS AMIs with stream metadata.
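As a rough illustration of the scriptable format, the following Python sketch looks up an AWS AMI in stream metadata. It assumes the layout used by CoreOS stream metadata (architectures, then images, then aws, then regions). The embedded sample, including the stream name, release string, and AMI ID, is a hypothetical placeholder and not real output.

```python
import json

# Hypothetical, heavily trimmed sample of stream metadata.
# The stream name, release string, and AMI ID are placeholders.
SAMPLE_STREAM_JSON = """
{
  "stream": "example-stream",
  "architectures": {
    "x86_64": {
      "images": {
        "aws": {
          "regions": {
            "us-east-1": {
              "release": "example-release",
              "image": "ami-0123456789abcdef0"
            }
          }
        }
      }
    }
  }
}
"""


def aws_ami_for_region(stream: dict, region: str, arch: str = "x86_64") -> str:
    """Return the AMI ID recorded for the given architecture and region."""
    regions = stream["architectures"][arch]["images"]["aws"]["regions"]
    if region not in regions:
        raise KeyError(f"no AWS image listed for region {region!r}")
    return regions[region]["image"]


stream = json.loads(SAMPLE_STREAM_JSON)
print(aws_ami_for_region(stream, "us-east-1"))
```

In practice, the JSON would come from the output of openshift-install coreos print-stream-json rather than from an embedded string, for example by reading it with json.load(sys.stdin).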

1.4.1.3. Butane config transpiler simplifies creation of machine configs

OpenShift Container Platform now includes the Butane config transpiler to assist with producing and validating machine configs. Documentation now recommends using Butane to create machine configs for LUKS disk encryption, boot disk mirroring, and custom kernel modules.

For more information, see Creating machine configs with Butane.

1.4.1.4. Change to custom chrony.conf default on cloud platforms

If a cloud administrator has already set a custom /etc/chrony.conf configuration, RHCOS no longer sets the PEERNTP=no option by default on cloud platforms. Otherwise, the PEERNTP=no option is still set by default. See BZ#1924869 for more information.

1.4.1.5. Enabling multipathing at bare metal installation time

Enabling multipathing during bare metal installation is now supported for nodes provisioned in OpenShift Container Platform 4.8 or higher. You can enable multipathing by appending kernel arguments to the coreos-installer install command so that the installed system itself uses multipath beginning from the first boot. While post-installation support is still available by activating multipathing via the machine config, enabling multipathing during installation is recommended for nodes provisioned starting in OpenShift Container Platform 4.8.

For more information, see Enabling multipathing with kernel arguments on RHCOS.

1.4.2. Installation and upgrade

1.4.2.1. Installing a cluster to an existing, empty resource group on Azure

You can now define an already existing resource group to install your cluster to on Azure by defining the platform.azure.resourceGroupName field in the install-config.yaml file. This resource group must be empty and only used for a single cluster; the cluster components assume ownership of all resources in the resource group.

If you limit the service principal scope of the installation program to this resource group, you must ensure all other resources used by the installation program in your environment have the necessary permissions, such as the public DNS zone and virtual network. Destroying the cluster using the installation program deletes the user-defined resource group.

1.4.2.2. Using existing IAM roles for clusters on AWS

You can now define a pre-existing Amazon Web Services (AWS) IAM role for your machine instance profiles by setting the compute.platform.aws.iamRole and controlPlane.platform.aws.iamRole fields in the install-config.yaml file. This allows you to do the following for your IAM roles:

- Match naming schemes

- Include predefined permissions boundaries

1.4.2.3. Using pre-existing Route53 hosted private zones on AWS

You can now define an existing Route 53 private hosted zone for your cluster by setting the platform.aws.hostedZone field in the install-config.yaml file. You can only use a pre-existing hosted zone when also supplying your own VPC.
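The dependency between the hosted zone and the VPC can be checked before running the installation program. The sketch below is a hypothetical pre-flight check, not part of the installer. It assumes that supplying your own VPC is expressed through a non-empty platform.aws.subnets list in install-config.yaml, and that the file has already been parsed into a Python dictionary. The zone and subnet IDs are placeholders.

```python
def hosted_zone_errors(install_config: dict) -> list:
    """Return a list of problems with the AWS hosted zone settings."""
    aws = install_config.get("platform", {}).get("aws", {})
    errors = []
    # Assumption: an existing VPC is indicated by listing its subnets.
    if aws.get("hostedZone") and not aws.get("subnets"):
        errors.append(
            "platform.aws.hostedZone is set, but no existing VPC "
            "(platform.aws.subnets) is supplied"
        )
    return errors


# Placeholder values for illustration only.
with_vpc = {
    "platform": {
        "aws": {
            "hostedZone": "Z0000000EXAMPLE",
            "subnets": ["subnet-0example1", "subnet-0example2"],
        }
    }
}
without_vpc = {"platform": {"aws": {"hostedZone": "Z0000000EXAMPLE"}}}

print(hosted_zone_errors(with_vpc))
print(hosted_zone_errors(without_vpc))
```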

1.4.2.4. Increasing the size of GCP subnets within the machine CIDR

The OpenShift Container Platform installation program for Google Cloud Platform (GCP) now creates subnets as large as possible within the machine CIDR. This allows the cluster to use a machine CIDR appropriately sized to accommodate the number of nodes in the cluster.

1.4.2.5. Improved upgrade duration

With this release, the upgrade duration for cluster Operators that deploy daemon sets to all nodes is significantly reduced. For example, the upgrade duration of a 250-node test cluster is reduced from 7.5 hours to 1.5 hours, resulting in upgrade duration scaling of less than one minute per additional node.

This change does not affect machine config pool rollout duration.
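The per-node figure follows from simple arithmetic on the numbers quoted above. The sketch below computes the average time per node, which is a simplification of the "per additional node" scaling and assumes the duration grows roughly linearly with node count.

```python
nodes = 250
before_hours = 7.5
after_hours = 1.5

# Average upgrade time per node, in minutes.
per_node_before = before_hours * 60 / nodes
per_node_after = after_hours * 60 / nodes

# Overall reduction factor for the 250-node test cluster.
speedup = before_hours / after_hours

print(f"before: {per_node_before:.2f} min/node")
print(f"after:  {per_node_after:.2f} min/node")
print(f"speedup: {speedup:.1f}x")
```

With these inputs, the average drops from 1.8 minutes per node to 0.36 minutes per node, a fivefold reduction.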

1.4.2.6. MCO waits for all machine config pools to update before reporting the update is complete

When updating, the Machine Config Operator (MCO) now reports an Upgradeable=False condition in the machine-config Cluster Operator if any machine config pool has not completed updating. This status blocks future minor updates, but does not block future patch updates, or the current update. Previously, the MCO reported the Upgradeable status based only on the state of the control plane machine config pool, even if the worker pools were not done updating.
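The difference between the previous and the new reporting can be modeled in a few lines. This is a simplified sketch of the decision described above, not the MCO implementation. The MachineConfigPool class and its fields are hypothetical, and the pool name "master" is assumed to identify the control plane pool.

```python
from dataclasses import dataclass


@dataclass
class MachineConfigPool:
    # Hypothetical, minimal stand-in for a machine config pool's status.
    name: str
    machine_count: int
    updated_machine_count: int

    @property
    def updated(self) -> bool:
        return self.updated_machine_count == self.machine_count


def upgradeable(pools) -> bool:
    """New behavior: every pool must have finished updating."""
    return all(pool.updated for pool in pools)


def upgradeable_previous(pools) -> bool:
    """Previous behavior: only the control plane pool was considered."""
    return all(pool.updated for pool in pools if pool.name == "master")


pools = [
    MachineConfigPool("master", machine_count=3, updated_machine_count=3),
    MachineConfigPool("worker", machine_count=5, updated_machine_count=2),
]

print("Upgradeable (4.8):", upgradeable(pools))
print("Upgradeable (previous):", upgradeable_previous(pools))
```

In this example the worker pool is still updating, so the new logic reports that the cluster is not upgradeable, while the previous logic would have reported that it is.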

1.4.2.7. Using Fujitsu iRMC for installation on bare metal nodes

In OpenShift Container Platform 4.8, you can use Fujitsu hardware and the Fujitsu iRMC baseboard management controller protocol when deploying installer-provisioned clusters on bare metal. Currently Fujitsu supports iRMC S5 firmware version 3.05P and above for installer-provisioned installation on bare metal. Enhancements and bug fixes for OpenShift Container Platform 4.8 include:

- Supporting soft power-off on iRMC hardware.

- Stopping the provisioning services once the installer deploys the control plane on the bare metal nodes. See BZ#1949859 for more information.

- Adding an Ironic health check to the bootstrap keepalived checks. See BZ#1949859 for more information.

- Verifying that the unicast peers list isn’t empty on the control plane nodes. See BZ#1957708 for more information.

- Updating the Bare Metal Operator to align the iRMC PowerInterface. See BZ#1957869 for more information.

- Updating the pyghmi library version. See BZ#1920294 for more information.

- Updating the Bare Metal Operator to address missing IPMI credentials. See BZ#1965182 for more information.

- Removing iRMC from enabled_bios_interfaces. See BZ#1969212 for more information.

- Adding ironicTlsMount and inspectorTlsMount to the bare metal pod definition. See BZ#1968701 for more information.

- Disabling the RAID feature for iRMC server. See BZ#1969487 for more information.

- Disabling RAID for all drivers. See BZ#1969487 for more information.

1.4.2.8. SR-IOV network support for clusters with installer-provisioned infrastructure on RHOSP

You can now deploy clusters on RHOSP that use single-root I/O virtualization (SR-IOV) networks for compute machines.

See Installing a cluster on OpenStack that supports SR-IOV-connected compute machines for more information.

1.4.2.9. Ironic Python Agent support for VLAN interfaces

With this update, the Ironic Python Agent now reports VLAN interfaces in the list of interfaces during introspection. Additionally, the IP address is included on the interfaces, which allows for proper creation of a CSR. As a result, a CSR can be obtained for all interfaces, including VLAN interfaces. For more information, see BZ#1888712.

1.4.2.10. Over-the-air updates with the OpenShift Update Service

The OpenShift Update Service (OSUS) provides over-the-air updates to OpenShift Container Platform, including Red Hat Enterprise Linux CoreOS (RHCOS). It was previously only accessible as a Red Hat hosted service located behind public APIs, but can now be installed locally. The OpenShift Update Service is composed of an Operator and one or more application instances and is now generally available in OpenShift Container Platform 4.6 and higher.

For more information, see Understanding the OpenShift Update Service.

1.4.3. Web console

1.4.3.1. Custom console routes now use the new CustomDomains cluster API

For console and downloads routes, custom routes functionality is now implemented to use the new ingress config route configuration API spec.componentRoutes. The Console Operator config already contained custom route customization, but for the console route only. The route configuration via console-operator config is being deprecated. Therefore, if the console custom route is set up in both the ingress config and console-operator config, then the new ingress config custom route configuration takes precedence.

For more information, see Customizing console routes.

1.4.3.2. Access a code snippet from a quick start

You can now execute a CLI snippet when it is included in a quick start from the web console. To use this feature, you must first install the Web Terminal Operator. The web terminal and code snippet actions that execute in the web terminal are not present if you do not install the Web Terminal Operator. Alternatively, you can copy a code snippet to the clipboard regardless of whether you have the Web Terminal Operator installed or not.

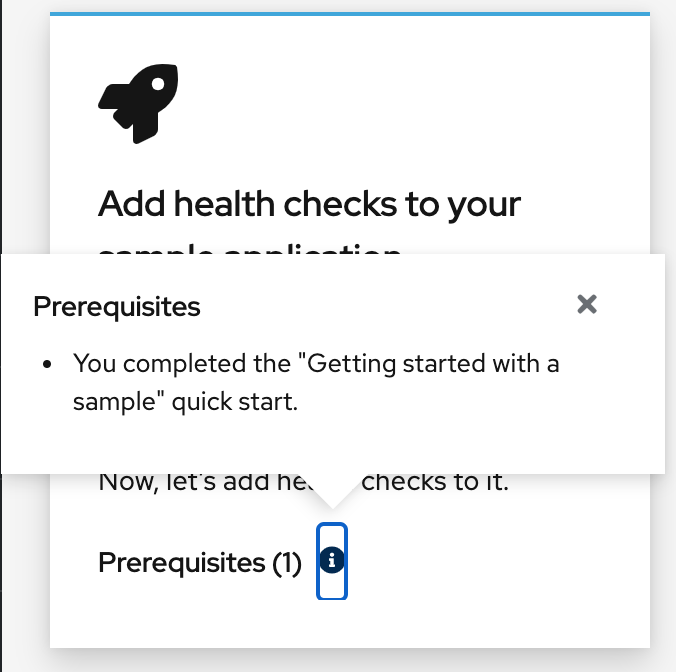

1.4.3.3. Improved presentation of quick start prerequisites

Previously, quick start prerequisites were displayed as combined text instead of a list on the quick start card. With scalability in mind, the prerequisites are now presented in a popover rather than on the card.

1.4.3.4. Developer perspective

With this release, you can:

- Use custom pipeline templates to create and deploy an application from a Git repository. These templates override the default pipeline templates provided by OpenShift Pipelines 1.5 and later.

- Filter Helm charts based on their certification level and see all Helm charts in the Developer Catalog.

- Use the options in the Add page to create applications and associated services and deploy these applications and services on OpenShift Container Platform. The Add page options include: Getting started resources, Creating applications using samples, Build with guided documentation, and Explore new developer features.

- Use workspaces when creating pipelines in the pipeline builder. You can also add a trigger to a pipeline so you can use tasks to support workspaces in pipelines.

- Use the JAR files in the Topology view of the Developer perspective to deploy your Java applications.

- Create multiple event source types in OpenShift Container Platform and connect these source types to sinks. You can take functions deployed as a Knative service on an OpenShift Container Platform cluster and connect them to a sink.

- Use the finally task in the pipeline to execute commands in parallel.

- Use code assistance in the Add Task form to access the task parameter values. To view the pipeline parameter, and the correct syntax for referencing that particular pipeline parameter, go to the respective text fields.

- Run a Task only after certain conditions are met. Use the when field to configure the executions of your task, and to list a series of references to when expressions.

1.4.4. IBM Z and LinuxONE

With this release, IBM Z and LinuxONE are now compatible with OpenShift Container Platform 4.8. The installation can be performed with z/VM or RHEL KVM. For installation instructions, see the following documentation:

Notable enhancements

The following new features are supported on IBM Z and LinuxONE with OpenShift Container Platform 4.8:

- KVM on RHEL 8.3 or later is supported as a hypervisor for user-provisioned installation of OpenShift Container Platform 4.8 on IBM Z and LinuxONE. Installation with static IP addresses as well as installation in a restricted network are now also supported.

- Encrypting data stored in etcd.

- 4K FCP block device.

- Three-node cluster support.

Supported features

The following features are also supported on IBM Z and LinuxONE:

- Multipathing

- Persistent storage using iSCSI

- Persistent storage using local volumes (Local Storage Operator)

- Persistent storage using hostPath

- Persistent storage using Fibre Channel

- Persistent storage using Raw Block

- OVN-Kubernetes with an initial installation of OpenShift Container Platform 4.8

- z/VM Emulated FBA devices on SCSI disks

These features are available only for OpenShift Container Platform on IBM Z for 4.8:

- HyperPAV enabled on IBM Z/LinuxONE for the virtual machines for FICON attached ECKD storage

Restrictions

Note the following restrictions for OpenShift Container Platform on IBM Z and LinuxONE:

OpenShift Container Platform for IBM Z does not include the following Technology Preview features:

- Precision Time Protocol (PTP) hardware

The following OpenShift Container Platform features are unsupported:

- Automatic repair of damaged machines with machine health checking

- CodeReady Containers (CRC)

- Controlling overcommit and managing container density on nodes

- CSI volume cloning

- CSI volume snapshots

- FIPS cryptography

- Helm command-line interface (CLI) tool

- Multus CNI plugin

- NVMe

- OpenShift Metering

- OpenShift Virtualization

- Tang mode disk encryption during OpenShift Container Platform deployment

The following restrictions also apply:

- Worker nodes must run Red Hat Enterprise Linux CoreOS (RHCOS)

- Persistent shared storage must be provisioned by using either NFS or other supported storage protocols

- Persistent non-shared storage must be provisioned using local storage, like iSCSI, FC, or using LSO with DASD, FCP, or EDEV/FBA

1.4.5. IBM Power Systems

With this release, IBM Power Systems are now compatible with OpenShift Container Platform 4.8. For installation instructions, see the following documentation:

Notable enhancements

The following new features are supported on IBM Power Systems with OpenShift Container Platform 4.8:

- Encrypting data stored in etcd

- Three-node cluster support

- Multus SR-IOV

Supported features

The following features are also supported on IBM Power Systems:

- Currently, five Operators are supported:

  - Cluster-Logging-Operator

  - Cluster-NFD-Operator

  - Elastic Search-Operator

  - Local Storage Operator

  - SR-IOV Network Operator

- Multipathing

- Persistent storage using iSCSI

- Persistent storage using local volumes (Local Storage Operator)

- Persistent storage using hostPath

- Persistent storage using Fibre Channel

- Persistent storage using Raw Block

- OVN-Kubernetes with an initial installation of OpenShift Container Platform 4.8

- 4K Disk Support

- NVMe

Restrictions

Note the following restrictions for OpenShift Container Platform on IBM Power Systems:

OpenShift Container Platform for IBM Power Systems does not include the following Technology Preview features:

- Precision Time Protocol (PTP) hardware

The following OpenShift Container Platform features are unsupported:

- Automatic repair of damaged machines with machine health checking

- CodeReady Containers (CRC)

- Controlling overcommit and managing container density on nodes

- FIPS cryptography

- Helm command-line interface (CLI) tool

- OpenShift Metering

- OpenShift Virtualization

- Tang mode disk encryption during OpenShift Container Platform deployment

The following restrictions also apply:

- Worker nodes must run Red Hat Enterprise Linux CoreOS (RHCOS)

- Persistent storage must be of the Filesystem type that uses local volumes, Network File System (NFS), or Container Storage Interface (CSI)

1.4.6. Security and compliance

1.4.6.1. Audit logging for OAuth access token logout requests

The Default audit log policy now logs request bodies for OAuth access token creation (login) and deletion (logout) requests. Previously, deletion request bodies were not logged.

For more information about audit log policies, see Configuring the node audit log policy.

1.4.6.2. Wildcard subject for service serving certificates for headless services

Generating a service serving certificate for headless services now includes a wildcard subject in the format of *.<service.name>.<service.namespace>.svc. This allows for TLS-protected connections to individual stateful set pods without having to manually generate certificates for these pods.

Because the generated certificates contain wildcard subjects for headless services, do not use the service CA if your client must differentiate between individual pods. In this case:

- Generate individual TLS certificates by using a different CA.

- Do not accept the service CA as a trusted CA for connections that are directed to individual pods and must not be impersonated by other pods. These connections must be configured to trust the CA that was used to generate the individual TLS certificates.

For more information, see Add a service certificate.
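To see why a client cannot tell pods apart with such a certificate, consider the following sketch. It builds the wildcard subject in the format given above and applies a simplified single-label wildcard match of the kind commonly used for TLS host names. The service name, namespace, and pod names are hypothetical, and the matching function is an illustration rather than the logic of any particular TLS library.

```python
def wildcard_subject(service_name: str, namespace: str) -> str:
    """Build the wildcard subject for a headless service."""
    return f"*.{service_name}.{namespace}.svc"


def subject_matches(subject: str, hostname: str) -> bool:
    """Simplified match: '*' covers exactly one leftmost DNS label."""
    subject_labels = subject.lower().split(".")
    host_labels = hostname.lower().split(".")
    if len(subject_labels) != len(host_labels):
        return False
    if subject_labels[0] != "*" and subject_labels[0] != host_labels[0]:
        return False
    return subject_labels[1:] == host_labels[1:]


# Hypothetical stateful set "db" behind a headless service "db" in "prod".
subject = wildcard_subject("db", "prod")
for host in ("db-0.db.prod.svc", "db-1.db.prod.svc", "db.prod.svc"):
    print(host, subject_matches(subject, host))
```

Both pod names match the same subject, so a certificate issued for the service is equally valid for every pod behind it. This is the reason for the guidance above to use a different CA when pods must not be able to impersonate each other.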

1.4.6.3. The oc-compliance plugin is now available

The Compliance Operator automates many of the checks and remediations for an OpenShift Container Platform cluster. However, the full process of bringing a cluster into compliance often requires administrator interaction with the Compliance Operator API and other components. The oc-compliance plugin is now available and makes the process easier.

For more information, see Using the oc-compliance plugin.

1.4.6.4. TLS security profile for the Kubernetes control plane

The Kubernetes API server TLS security profile setting is now also honored by the Kubernetes scheduler and Kubernetes controller manager.

For more information, see Configuring TLS security profiles.

1.4.6.5. TLS security profile for the kubelet as a server

You can now set a TLS security profile for kubelet when it acts as an HTTP server for the Kubernetes API server.

For more information, see Configuring TLS security profiles.

1.4.6.6. Support for bcrypt password hashing

Previously, the oauth-proxy command only allowed the use of SHA-1 hashed passwords in htpasswd files used for authentication. oauth-proxy now includes support for htpasswd entries that use bcrypt password hashing. For more information, see BZ#1874322.
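The two hashing schemes can be distinguished by the prefix of the hashed field in each htpasswd entry. The sketch below assumes the conventional Apache htpasswd formats: a {SHA} prefix followed by the Base64-encoded SHA-1 digest, and a $2a$, $2b$, or $2y$ prefix for bcrypt. The user names and the bcrypt string are placeholders. The helper functions are hypothetical and are not part of oauth-proxy.

```python
import base64
import hashlib


def sha1_htpasswd_entry(user: str, password: str) -> str:
    """Build a legacy SHA-1 htpasswd entry: user:{SHA}<base64 digest>."""
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    return f"{user}:{{SHA}}{base64.b64encode(digest).decode('ascii')}"


def hash_scheme(entry: str) -> str:
    """Classify an htpasswd entry by the prefix of its hashed field."""
    _, _, hashed = entry.partition(":")
    if hashed.startswith("{SHA}"):
        return "sha1"
    if hashed.startswith(("$2a$", "$2b$", "$2y$")):
        return "bcrypt"
    return "unknown"


legacy_entry = sha1_htpasswd_entry("alice", "example-password")
# Placeholder with a bcrypt-style prefix; not a real hash.
bcrypt_like_entry = "bob:$2y$05$" + "x" * 53

print(hash_scheme(legacy_entry))
print(hash_scheme(bcrypt_like_entry))
```

A check like this could help identify which entries in an existing htpasswd file still use SHA-1 and are candidates for regeneration with bcrypt.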

1.4.6.7. Enabling managed Secure Boot with installer-provisioned clusters

OpenShift Container Platform 4.8 supports automatically turning on UEFI Secure Boot mode for provisioned control plane and worker nodes and turning it back off when removing the nodes. To use this feature, set the node’s bootMode configuration setting to UEFISecureBoot in the install-config.yaml file. Red Hat only supports installer-provisioned installation with managed Secure Boot on 10th generation HPE hardware or 13th generation Dell hardware running firmware version 2.75.75.75 or greater. For additional details, see Configuring managed Secure Boot in the install-config.yaml file.

1.4.7. Networking

1.4.7.1. Dual-stack support on installer-provisioned bare metal infrastructure with the OVN-Kubernetes cluster network provider

For clusters on installer-provisioned bare metal infrastructure, the OVN-Kubernetes cluster network provider supports both IPv4 and IPv6 address families.

For installer-provisioned bare metal clusters upgrading from previous versions of OpenShift Container Platform, you must convert your cluster to support dual-stack networking. For more information, see Converting to IPv4/IPv6 dual stack networking.

1.4.7.2. Migrate from OpenShift SDN to OVN-Kubernetes on user-provisioned infrastructure

An OpenShift SDN cluster network provider migration to the OVN-Kubernetes cluster network provider is supported for user-provisioned clusters. For more information, see Migrate from the OpenShift SDN cluster network provider.

1.4.7.3. OpenShift SDN cluster network provider egress IP feature balances across nodes

The egress IP feature of OpenShift SDN now balances network traffic roughly equally across nodes for a given namespace, if that namespace is assigned multiple egress IP addresses. Each IP address must reside on a different node. For more information, refer to Configuring egress IPs for a project for OpenShift SDN.

1.4.7.4. Network policy supports selecting host network Ingress Controllers

When using the OpenShift SDN or OVN-Kubernetes cluster network providers, you can select traffic from Ingress Controllers in a network policy rule regardless of whether an Ingress Controller runs on the cluster network or the host network. In a network policy rule, the policy-group.network.openshift.io/ingress: "" namespace selector label matches traffic from an Ingress Controller. You can continue to use the network.openshift.io/policy-group: ingress namespace selector label, but this is a legacy label that can be removed in a future release of OpenShift Container Platform.

In earlier releases of OpenShift Container Platform, the following limitations existed:

- A cluster that uses the OpenShift SDN cluster network provider could select traffic from an Ingress Controller on the host network only by applying the network.openshift.io/policy-group: ingress label to the default namespace.

- A cluster that uses the OVN-Kubernetes cluster network provider could not select traffic from an Ingress Controller on the host network.

For more information, refer to About network policy.
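As an illustration of the namespace selector label described in this section, the following sketch builds a NetworkPolicy manifest as a Python dictionary and prints it as JSON, which can be applied in the same way as YAML. The policy name and namespace are hypothetical, and the empty pod selector (all pods in the namespace) is an assumption made for brevity.

```python
import json

INGRESS_LABEL = "policy-group.network.openshift.io/ingress"


def allow_from_ingress_policy(name: str, namespace: str) -> dict:
    """Build a policy that admits traffic from Ingress Controllers."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Assumption: the policy applies to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {
                            "namespaceSelector": {
                                "matchLabels": {INGRESS_LABEL: ""}
                            }
                        }
                    ]
                }
            ],
        },
    }


# Hypothetical policy name and project.
policy = allow_from_ingress_policy("allow-from-openshift-ingress", "my-project")
print(json.dumps(policy, indent=2))
```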

1.4.7.5. Network policy supports selecting host network traffic

When using either the OVN-Kubernetes cluster network provider or the OpenShift SDN cluster network provider, you can use the policy-group.network.openshift.io/host-network: "" namespace selector to select host network traffic in a network policy rule.

1.4.7.6. Network policy audit logs

If you use the OVN-Kubernetes cluster network provider, you can enable audit logging for network policies in a namespace. The logs are in a syslog compatible format and can be saved locally, sent over a UDP connection, or directed to a UNIX domain socket. You can specify whether to log allowed, dropped, or both allowed and dropped connections. For more information, see Logging network policy events.

1.4.7.7. Network policy support for macvlan additional networks

You can create network policies that apply to macvlan additional networks by using the MultiNetworkPolicy API, which implements the NetworkPolicy API. For more information, see Configuring multi-network policy.

1.4.7.8. Supported hardware for SR-IOV

OpenShift Container Platform 4.8 adds support for additional Intel and Mellanox network interface controllers.

- Intel X710, XL710, and E810

- Mellanox ConnectX-5 Ex

For more information, see the supported devices.

1.4.7.9. Enhancements to the SR-IOV Network Operator

The Network Resources Injector that is deployed with the Operator is enhanced to expose information about huge pages requests and limits with the Downward API. When a pod specification includes a huge pages request or limit, the information is exposed in the /etc/podnetinfo path.

For more information, see Huge pages resource injection for Downward API.

1.4.7.10. Tracking network flows

OpenShift Container Platform 4.8 adds support for sending the metadata about network flows on the pod network to a network flows collector. The following protocols are supported:

- NetFlow

- sFlow

- IPFIX

Packet data is not sent to the network flows collector. Packet-level metadata such as the protocol, source address, destination address, port numbers, number of bytes, and other packet-level information is sent to the network flows collector.

For more information, see Tracking network flows.

1.4.7.11. CoreDNS-mDNS no longer used to resolve node names to IP addresses

OpenShift Container Platform 4.8 and later releases include functionality that uses cluster membership information to generate A/AAAA records. This resolves the node names to their IP addresses. Once the nodes are registered with the API, the cluster can disperse node information without using CoreDNS-mDNS. This eliminates the network traffic associated with multicast DNS.

1.4.7.12. Converting HTTP header names to support upgrading to OpenShift Container Platform 4.8

OpenShift Container Platform updated to HAProxy 2.2, which changes HTTP header names to lowercase by default, for example, changing Host: xyz.com to host: xyz.com. For legacy applications that are sensitive to the capitalization of HTTP header names, use the Ingress Controller spec.httpHeaders.headerNameCaseAdjustments API field to accommodate legacy applications until they can be fixed. Make sure to add the necessary configuration by using spec.httpHeaders.headerNameCaseAdjustments before upgrading OpenShift Container Platform now that HAProxy 2.2 is available.

For more information, see Converting HTTP header case.
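The effect of a case adjustment can be pictured with a small model. The sketch below is not how the Ingress Controller is configured or implemented. It only illustrates the idea that lowercase header names are rewritten to a listed spelling, assuming the adjustments are given as a list of desired header names such as Host.

```python
def adjust_header_names(headers, adjustments):
    """Rewrite header names to the spelling listed in adjustments.

    headers: list of (name, value) pairs, names as emitted by the proxy.
    adjustments: list of desired spellings, for example ["Host"].
    """
    by_lower = {spelling.lower(): spelling for spelling in adjustments}
    return [(by_lower.get(name.lower(), name), value) for name, value in headers]


# Header names as HAProxy 2.2 would emit them by default (lowercase).
proxied = [("host", "xyz.com"), ("content-type", "text/plain")]

print(adjust_header_names(proxied, ["Host"]))
```

Only the listed header is restored to its capitalized form. Headers without an adjustment keep the lowercase names.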

1.4.7.13. Stricter HTTP header validation in OpenShift Container Platform 4.8

OpenShift Container Platform updated to HAProxy 2.2, which enforces some additional restrictions on HTTP headers. These restrictions are intended to mitigate possible security weaknesses in applications. In particular, HTTP client requests that specify a host name in an HTTP request line may be rejected if the request line and HTTP host header in a request do not both either specify or omit the port number. For example, a request GET http://hostname:80/path with the header host: hostname will be rejected with an HTTP 400 "Bad request" response because the request line specifies a port number while the host header does not. The purpose of this restriction is to mitigate request smuggling attacks.

This stricter HTTP header validation was also enabled in previous versions of OpenShift Container Platform when HTTP/2 was enabled. This means that you can test for problematic client requests by enabling HTTP/2 on an OpenShift Container Platform 4.7 cluster and checking for HTTP 400 errors. For information on how to enable HTTP/2, see Enabling HTTP/2 Ingress connectivity.
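The port rule described in this section can be approximated with a short check, which may help when scanning client code or logs for requests that are likely to be rejected. This sketch models only the rule as stated here (the request line and the host header must both specify or both omit the port) and makes no claim about how HAProxy implements it. The function names are hypothetical.

```python
from urllib.parse import urlsplit


def has_port(authority: str) -> bool:
    """Return True if a host[:port] string carries an explicit port."""
    return urlsplit("//" + authority).port is not None


def port_usage_consistent(request_target: str, host_header: str) -> bool:
    """Check that request target and host header agree on port presence."""
    target = urlsplit(request_target)
    if not target.netloc:
        # Origin-form target such as "/path": no host name to compare.
        return True
    return (target.port is not None) == has_port(host_header)


# The example from the text: port in the request line, none in the header.
print(port_usage_consistent("http://hostname:80/path", "hostname"))
print(port_usage_consistent("http://hostname:80/path", "hostname:80"))
print(port_usage_consistent("/path", "hostname"))
```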

1.4.7.14. Configuring global access for an Ingress Controller on GCP

OpenShift Container Platform 4.8 adds support for the global access option for Ingress Controllers created on GCP with an internal load balancer. When the global access option is enabled, clients in any region within the same VPC network and compute region as the load balancer can reach the workloads running on your cluster.

For more information, see Configuring global access for an Ingress Controller on GCP.

1.4.7.15. Setting Ingress Controller thread count

OpenShift Container Platform 4.8 adds support for setting the thread count to increase the number of incoming connections a cluster can handle.

For more information, see Setting Ingress Controller thread count.

1.4.7.16. Configuring the PROXY protocol for an Ingress Controller

OpenShift Container Platform 4.8 adds support for configuring the PROXY protocol for the Ingress Controller on non-cloud platforms, specifically for HostNetwork or NodePortService endpoint publishing strategy types.

For more information, see Configuring PROXY protocol for an Ingress Controller.

1.4.7.17. NTP servers on control plane nodes

In OpenShift Container Platform 4.8, installer-provisioned clusters can configure and deploy Network Time Protocol (NTP) servers on the control plane nodes and NTP clients on worker nodes. This enables workers to retrieve the date and time from the NTP servers on the control plane nodes, even when disconnected from a routable network. You can also configure and deploy NTP servers and NTP clients after deployment.

1.4.7.18. Changes to default API load balancer management for Kuryr

In OpenShift Container Platform 4.8 deployments on Red Hat OpenStack Platform (RHOSP) with Kuryr-Kubernetes, the API load balancer for the default/kubernetes service is no longer managed by the Cluster Network Operator (CNO), but instead by the kuryr-controller itself. This means that:

- When upgrading to OpenShift Container Platform 4.8, the default/kubernetes service will have downtime.

  Note: In deployments where no Open Virtual Network (OVN) Octavia is available, more downtime should be expected.

- The default/kubernetes load balancer is no longer required to use the Octavia Amphora driver. Instead, OVN Octavia will be used to implement the default/kubernetes service if it is available in the OpenStack cloud.

1.4.7.19. Enabling a provisioning network after installation

The assisted installer and installer-provisioned installation for bare metal clusters provide the ability to deploy a cluster without a provisioning network. In OpenShift Container Platform 4.8 and later, you can enable a provisioning network after installation by using the Cluster Baremetal Operator (CBO).

1.4.7.20. Configure network components to run on the control plane

If you need the virtual IP (VIP) addresses to run on the control plane nodes in a bare metal installation, you must configure the apiVIP and ingressVIP VIP addresses to run exclusively on the control plane nodes. By default, OpenShift Container Platform allows any node in the worker machine configuration pool to host the apiVIP and ingressVIP VIP addresses. Because many bare metal environments deploy worker nodes in separate subnets from the control plane nodes, configuring the apiVIP and ingressVIP virtual IP addresses to run exclusively on the control plane nodes prevents issues from arising due to deploying worker nodes in separate subnets. For additional details, see Configure network components to run on the control plane.

1.4.7.21. Configuring external load balancers for apiVIP and ingressVIP traffic

In OpenShift Container Platform 4.8, you can configure an external load balancer to handle apiVIP and ingressVIP traffic for Red Hat OpenStack Platform (RHOSP) and bare-metal installer-provisioned clusters. External load balancing services and the control plane nodes must run on the same L2 network, and on the same VLAN when using VLANs to route traffic between the load balancing services and the control plane nodes.

Configuring load balancers to handle apiVIP and ingressVIP traffic is not supported for VMware installer-provisioned clusters.

1.4.7.22. OVN-Kubernetes IPsec support for dual-stack networking

OpenShift Container Platform 4.8 adds OVN-Kubernetes IPsec support for clusters that are configured to use dual-stack networking.

1.4.7.23. Egress router CNI for OVN-Kubernetes

The egress router CNI plugin is generally available. The Cluster Network Operator is enhanced to support an EgressRouter API object. The process for adding an egress router on a cluster that uses OVN-Kubernetes is simplified. When you create an egress router object, the Operator automatically adds a network attachment definition and a deployment. The pod for the deployment acts as the egress router.

For more information, see Considerations for the use of an egress router pod.

1.4.7.24. IP failover support on OpenShift Container Platform

IP failover is now supported on OpenShift Container Platform clusters on bare metal. IP failover uses keepalived to host a set of externally accessible VIP addresses on a set of hosts. Each VIP is only serviced by a single host at a time. keepalived uses the Virtual Router Redundancy Protocol (VRRP) to determine which host, from the set of hosts, services which VIP. If a host becomes unavailable, or if the service that keepalived is watching does not respond, the VIP is switched to another host from the set. This means a VIP is always serviced as long as a host is available.

For more information, see Configuring IP failover.

1.4.7.25. Control DNS pod placement

In OpenShift Container Platform 4.8, you can use a custom node selector and tolerations to configure the daemon set for CoreDNS to run or not run on certain nodes.

Previous versions of OpenShift Container Platform configured the CoreDNS daemon set with a toleration for all taints so that DNS pods ran on all nodes in the cluster, irrespective of node taints. OpenShift Container Platform 4.8 no longer configures this toleration for all taints by default. Instead, the default is to tolerate only the node-role.kubernetes.io/master taint. To make DNS pods run on nodes with other taints, you must configure custom tolerations.

For more information, see Controlling DNS pod placement.
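A custom placement might be sketched as follows. The node selector and the taint key are hypothetical placeholders chosen for illustration. Substitute the labels and taints that exist in your cluster.

```yaml
apiVersion: operator.openshift.io/v1
kind: DNS
metadata:
  name: default
spec:
  nodePlacement:
    nodeSelector:
      node-role.kubernetes.io/worker: ""   # illustrative: schedule DNS pods on worker nodes only
    tolerations:
    - key: example.com/dedicated           # hypothetical taint key
      operator: Exists
      effect: NoSchedule
```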

1.4.7.26. Provider networks support for clusters that run on RHOSP

OpenShift Container Platform clusters on Red Hat OpenStack Platform (RHOSP) now support provider networks for all deployment types.

1.4.7.27. Configurable tune.maxrewrite and tune.bufsize for HAProxy

Cluster administrators can now set the headerBufferMaxRewriteBytes and headerBufferBytes Ingress Controller tuning parameters to configure the tune.maxrewrite and tune.bufsize HAProxy memory options for each Ingress Controller.

See Ingress Controller configuration parameters for more information.
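As a sketch, both parameters sit under the tuning options of an Ingress Controller. The values shown are illustrative assumptions, and the default Ingress Controller is assumed. Check the parameter reference for permitted ranges.

```yaml
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default                          # assumes the default Ingress Controller
  namespace: openshift-ingress-operator
spec:
  tuningOptions:
    headerBufferBytes: 32768             # maps to HAProxy tune.bufsize (illustrative value)
    headerBufferMaxRewriteBytes: 8192    # maps to HAProxy tune.maxrewrite (illustrative value)
```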

1.4.8. Storage

1.4.8.1. Persistent storage using GCP PD CSI driver operator is generally available

The Google Cloud Platform (GCP) persistent disk (PD) Container Storage Interface (CSI) driver is automatically deployed and managed on GCP environments, allowing you to dynamically provision these volumes without having to install the driver manually. This feature was previously introduced as a Technology Preview feature in OpenShift Container Platform 4.7 and is now generally available and enabled by default in OpenShift Container Platform 4.8.

For more information, see GCP PD CSI Driver Operator.

1.4.8.2. Persistent storage using the Azure Disk CSI Driver Operator (Technology Preview)

The Azure Disk CSI Driver Operator provides a storage class by default that you can use to create persistent volume claims (PVCs). The Azure Disk CSI Driver Operator that manages this driver is in Technology Preview.

For more information, see Azure Disk CSI Driver Operator.

1.4.8.3. Persistent storage using the vSphere CSI Driver Operator (Technology Preview)

The vSphere CSI Driver Operator provides a storage class by default that you can use to create persistent volume claims (PVCs). The vSphere CSI Driver Operator that manages this driver is in Technology Preview.

For more information, see vSphere CSI Driver Operator.

1.4.8.4. Automatic CSI migration (Technology Preview)

Starting with OpenShift Container Platform 4.8, automatic migration for the following in-tree volume plugins to their equivalent CSI drivers is available as a Technology Preview feature:

- Amazon Web Services (AWS) Elastic Block Storage (EBS)

- OpenStack Cinder

For more information, see Automatic CSI Migration.

1.4.8.5. External provisioner for AWS EFS (Technology Preview) feature has been removed

The Amazon Web Services (AWS) Elastic File System (EFS) Technology Preview feature has been removed and is no longer supported.

1.4.8.6. Improved control over Cinder volume availability zones for clusters that run on RHOSP

You can now select availability zones for Cinder volumes during installation. You can also use Cinder volumes in particular availability zones for your image registry.

1.4.9. Registry

1.4.10. Operator lifecycle

1.4.10.1. Enhanced error reporting for administrators

A cluster administrator using Operator Lifecycle Manager (OLM) to install an Operator can encounter error conditions that are related either to the current API or low-level APIs. Previously, there was little insight into why OLM could not fulfill a request to install or update an Operator. These errors could range from trivial issues like typos in object properties or missing RBAC, to more complex issues where items could not be loaded from the catalog due to metadata parsing.

Because administrators should not require understanding of the interaction process between the various low-level APIs or access to the OLM pod logs to successfully debug such issues, OpenShift Container Platform 4.8 introduces the following enhancements in OLM to provide administrators with more comprehensible error reporting and messages:

1.4.10.2. Retrying install plans

Install plans, defined by an InstallPlan object, can encounter transient errors, for example, due to API server availability or conflicts with other writers. Previously, these errors would result in the termination of partially-applied install plans that required manual cleanup. With this enhancement, the Catalog Operator now retries errors during install plan execution for up to one minute. The new .status.message field provides a human-readable indication when retries are occurring.

1.4.10.3. Indicating invalid Operator groups

Creating a subscription in a namespace with no Operator groups or multiple Operator groups would previously result in a stalled Operator installation with an install plan that stays in phase=Installing forever. With this enhancement, the install plan immediately transitions to phase=Failed so that the administrator can correct the invalid Operator group and then delete and re-create the subscription.
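For reference, a namespace in a valid state contains exactly one Operator group. A minimal sketch, using hypothetical names, might look like this:

```yaml
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: example-operatorgroup            # hypothetical name
  namespace: example-namespace           # hypothetical namespace that holds the subscription
spec:
  targetNamespaces:
  - example-namespace                    # the Operator watches only its own namespace
```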

1.4.10.4. Specific reporting when no candidate Operators found

ResolutionFailed events, which are created when dependency resolution in a namespace fails, now provide more specific text when the namespace contains a subscription that references a package or channel that does not exist in the referenced catalog source. Previously, this message was generic:

no candidate operators found matching the spec of subscription '<name>'

With this enhancement, the messages are more specific:

Operator does not exist:

no operators found in package <name> in the catalog referenced by subscription <name>

Catalog does not exist:

no operators found from catalog <name> in namespace openshift-marketplace referenced by subscription <name>

Channel does not exist:

no operators found in channel <name> of package <name> in the catalog referenced by subscription <name>

Cluster service version (CSV) does not exist:

no operators found with name <name>.<version> in channel <name> of package <name> in the catalog referenced by subscription <name>

1.4.11. Operator development

1.4.11.1. Migration of Operator projects from package manifest format to bundle format

Support for the legacy package manifest format for Operators is removed in OpenShift Container Platform 4.8 and later. The bundle format is the preferred Operator packaging format for Operator Lifecycle Manager (OLM) starting in OpenShift Container Platform 4.6. If you have an Operator project that was initially created in the package manifest format, which has been deprecated, you can now use the Operator SDK pkgman-to-bundle command to migrate the project to the bundle format.

For more information, see Migrating package manifest projects to bundle format.

1.4.11.2. Publishing a catalog containing a bundled Operator

To install and manage Operators, Operator Lifecycle Manager (OLM) requires that Operator bundles are listed in an index image, which is referenced by a catalog on the cluster. As an Operator author, you can use the Operator SDK to create an index containing the bundle for your Operator and all of its dependencies. This is useful for testing on remote clusters and publishing to container registries.

For more information, see Publishing a catalog containing a bundled Operator.

1.4.11.3. Enhanced Operator upgrade testing

The Operator SDK’s run bundle-upgrade subcommand automates triggering an installed Operator to upgrade to a later version by specifying a bundle image for the later version. Previously, the subcommand could only upgrade Operators that were initially installed using the run bundle subcommand. With this enhancement, the run bundle-upgrade subcommand also works with Operators that were initially installed with the traditional Operator Lifecycle Manager (OLM) workflow.

For more information, see Testing an Operator upgrade on Operator Lifecycle Manager.

1.4.11.4. Controlling Operator compatibility with OpenShift Container Platform versions

When an API is removed from an OpenShift Container Platform version, Operators running on that cluster version that are still using removed APIs will no longer work properly. As an Operator author, you should plan to update your Operator projects to accommodate API deprecation and removal to avoid interruptions for users of your Operator.

For more details, see Controlling Operator compatibility with OpenShift Container Platform versions.

Builds

1.4.11.5. New Telemetry metric for number of builds by strategy

Telemetry includes a new openshift:build_by_strategy:sum gauge metric, which sends the number of builds by strategy type to the Telemeter Client. This metric gives site reliability engineers (SREs) and product managers visibility into the kinds of builds that run on OpenShift Container Platform clusters.

1.4.11.6. Mount custom PKI certificate authorities

Previously, builds could not use the cluster PKI certificate authorities that were sometimes required to access corporate artifact repositories. Now, you can configure the BuildConfig object to mount cluster custom PKI certificate authorities by setting mountTrustedCA to true.
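A build configuration that opts in might be sketched as follows. The build name and Git URL are hypothetical, and the Docker strategy is chosen only for illustration.

```yaml
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: example-build                    # hypothetical name
spec:
  mountTrustedCA: true                   # mount the cluster custom PKI certificate authorities into the build
  source:
    git:
      uri: https://git.example.com/org/app.git   # hypothetical internal repository
  strategy:
    type: Docker
    dockerStrategy: {}
```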

1.4.12. Images

1.4.13. Machine API

1.4.13.1. Scaling machines running in vSphere to and from zero with the cluster autoscaler

When running machines in vSphere, you can now set the minReplicas value to 0 in the MachineAutoscaler resource definition. When this value is set to 0, the cluster autoscaler scales the machine set to and from zero depending on whether the machines are in use. For more information, see the MachineAutoscaler resource definition.
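A resource definition that permits scaling to zero might be sketched as follows. The autoscaler name and the maxReplicas value are illustrative assumptions, and <machine_set_name> is a placeholder for an existing vSphere machine set.

```yaml
apiVersion: autoscaling.openshift.io/v1beta1
kind: MachineAutoscaler
metadata:
  name: example-autoscaler               # hypothetical name
  namespace: openshift-machine-api
spec:
  minReplicas: 0                         # allows the machine set to scale down to zero
  maxReplicas: 6                         # illustrative upper bound
  scaleTargetRef:
    apiVersion: machine.openshift.io/v1beta1
    kind: MachineSet
    name: <machine_set_name>             # placeholder for your machine set
```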

1.4.13.2. Automatic rotation of kubelet-ca.crt does not require node draining or reboot

The automatic rotation of the /etc/kubernetes/kubelet-ca.crt certificate authority (CA) no longer requires the Machine Config Operator (MCO) to drain nodes or reboot the cluster.

As part of this change, the following modifications do not require the MCO to drain nodes:

- Changes to the SSH key in the spec.config.ignition.passwd.users.sshAuthorizedKeys parameter of a machine config

- Changes to the global pull secret or pull secret in the openshift-config namespace

When the MCO detects any of these changes, it applies the changes and uncordons the node.

For more information, see Understanding the Machine Config Operator.

1.4.13.3. Machine set policy enhancement

Previously, creating machine sets required users to manually configure their CPU pinning settings, NUMA pinning settings, and CPU topology changes to get better performance from the host. With this enhancement, users can select a policy in the MachineSet resource to populate settings automatically. For more information, see BZ#1941334.

1.4.13.4. Machine set hugepage enhancement

Providing a hugepages property into the MachineSet resource is now possible. This enhancement creates the MachineSet resource’s nodes with a custom property in oVirt and instructs those nodes to use the hugepages of the hypervisor. For more information, see BZ#1948963.

1.4.13.5. Machine Config Operator ImageContentSourcePolicy object enhancement

OpenShift Container Platform 4.8 avoids workload disruption for selected ImageContentSourcePolicy object changes. This feature helps users and teams add additional mirrors and registries without workload disruption. As a result, workload disruption no longer occurs for the following changes in /etc/containers/registries.conf files:

- Addition of a registry with mirror-by-digest-only=true

- Addition of a mirror in a registry with mirror-by-digest-only=true

- Appending items in the unqualified-search-registries list

For any other changes in /etc/containers/registries.conf files, the Machine Config Operator will default to draining nodes to apply changes. For more information, see BZ#1943315.

1.4.14. Nodes

1.4.14.1. Descheduler operator.openshift.io/v1 API group is now available

The operator.openshift.io/v1 API group is now available for the descheduler. Support for the operator.openshift.io/v1beta1 API group for the descheduler might be removed in a future release.

1.4.14.2. Prometheus metrics for the descheduler

You can now enable Prometheus metrics for the descheduler by adding the openshift.io/cluster-monitoring=true label to the openshift-kube-descheduler-operator namespace where you installed the descheduler.

The following descheduler metrics are available:

- descheduler_build_info - Provides build information about the descheduler.

- descheduler_pods_evicted - Provides the number of pods that have been evicted for each combination of strategy, namespace, and result. There must be at least one evicted pod for this metric to appear.
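Expressed declaratively, the labeled namespace might look like the following sketch. It assumes that the descheduler was installed in the openshift-kube-descheduler-operator namespace, as described above.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-kube-descheduler-operator
  labels:
    openshift.io/cluster-monitoring: "true"   # lets cluster monitoring scrape the descheduler metrics
```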

1.4.14.3. Support for huge pages with the Downward API

With this release, when you set requests and limits for huge pages in a pod specification, you can use the Downward API to view the allocation for the pod from within a container. This enhancement relies on the DownwardAPIHugePages feature gate. OpenShift Container Platform 4.8 enables the feature gate.

For more information, see Consuming huge pages resources using the Downward API.
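As a minimal sketch, a container can read its own huge page allocation through an environment variable. The pod name, container name, image, variable name, and sizes are all hypothetical, and the node is assumed to have 1Gi huge pages preallocated.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hugepages-example                # hypothetical name
spec:
  containers:
  - name: app                            # hypothetical container
    image: registry.example.com/app:latest   # hypothetical image
    env:
    - name: REQUESTS_HUGEPAGES_1GI       # hypothetical variable name
      valueFrom:
        resourceFieldRef:
          containerName: app
          resource: requests.hugepages-1Gi   # exposed through the Downward API
    resources:
      requests:
        hugepages-1Gi: 2Gi               # huge page requests must equal the limits
        memory: 1Gi
      limits:
        hugepages-1Gi: 2Gi
        memory: 1Gi
```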

1.4.14.4. New labels for the Node Feature Discovery Operator

The Node Feature Discovery (NFD) Operator detects hardware features available on each node in an OpenShift Container Platform cluster. Then, it modifies node objects with node labels. This enables the NFD Operator to advertise the features of specific nodes. OpenShift Container Platform 4.8 supports three additional labels for the NFD Operator.

- pstate intel-pstate: When the Intel pstate driver is enabled and in use, the pstate intel-pstate label reflects the status of the Intel pstate driver. The status is either active or passive.

- pstate scaling_governor: When the Intel pstate driver status is active, the pstate scaling_governor label reflects the scaling governor algorithm. The algorithm is either powersave or performance.

- cstate status: If the intel_idle driver has C-states or idle states, the cstate status label is true. Otherwise, it is false.

1.4.14.5. Remediate unhealthy nodes with the Poison Pill Operator

You can use the Poison Pill Operator to allow unhealthy nodes to reboot automatically. This minimizes downtime for stateful applications and ReadWriteOnce (RWO) volumes, and restores compute capacity if transient failures occur.

The Poison Pill Operator works with all cluster and hardware types.

For more information, see Remediating nodes with the Poison Pill Operator.

1.4.14.6. Automatic rotation of kubelet-ca.crt does not require reboot

The automatic rotation of the /etc/kubernetes/kubelet-ca.crt certificate authority (CA) no longer requires the Machine Config Operator (MCO) to drain nodes or reboot the cluster.

As part of this change, the following modifications do not require the MCO to drain nodes:

- Changes to the SSH key in the spec.config.ignition.passwd.users.sshAuthorizedKeys parameter of a machine config

- Changes to the global pull secret or pull secret in the openshift-config namespace

When the MCO detects any of these changes, it applies the changes and uncordons the node.

For more information, see Understanding the Machine Config Operator.

1.4.14.7. Vertical pod autoscaling is generally available

The OpenShift Container Platform vertical pod autoscaler (VPA) is now generally available. The VPA automatically reviews the historic and current CPU and memory resources for containers in pods and can update the resource limits and requests based on the usage values it learns.

You can also use the VPA with pods that require only one replica by modifying the VerticalPodAutoscalerController object as described below. Previously, the VPA worked only with pods that required two or more replicas.

For more information, see Automatically adjust pod resource levels with the vertical pod autoscaler.

1.4.14.8. Vertical pod autoscaling minimum can be configured

By default, workload objects must specify a minimum of two replicas in order for the VPA to automatically update pods. As a result, workload objects that specify fewer than two replicas are not acted upon by the VPA. You can change this cluster-wide minimum value by modifying the VerticalPodAutoscalerController object to add the minReplicas parameter.

For more information, see Automatically adjust pod resource levels with the vertical pod autoscaler.
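A sketch of the change, assuming the controller object uses the name default in the openshift-vertical-pod-autoscaler namespace, might look like this. The value 1 is illustrative and lets the VPA act on single-replica workloads.

```yaml
apiVersion: autoscaling.openshift.io/v1
kind: VerticalPodAutoscalerController
metadata:
  name: default                          # assumed name of the controller object
  namespace: openshift-vertical-pod-autoscaler
spec:
  minReplicas: 1                         # cluster-wide minimum replica count for VPA updates
```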

1.4.14.9. Automatically allocate CPU and memory resources for nodes

OpenShift Container Platform can automatically determine the optimal sizing value of the system-reserved setting when a node starts. Previously, the CPU and memory allocations in the system-reserved setting were fixed limits that you needed to manually determine and set.

When automatic resource allocation is enabled, a script on each node calculates the optimal values for the respective reserved resources based on the installed CPU and memory capacity on the node.

For more information, see Automatically allocating resources for nodes.
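Automatic allocation is enabled through a kubelet configuration. The following is a minimal sketch: the object name is hypothetical, and the selector assumes that the target machine config pool carries the worker pool label shown.

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: KubeletConfig
metadata:
  name: dynamic-node                     # hypothetical name
spec:
  autoSizingReserved: true               # let each node compute its own system-reserved values
  machineConfigPoolSelector:
    matchLabels:
      pools.operator.machineconfiguration.openshift.io/worker: ""   # assumed label on the worker pool
```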

1.4.14.10. Adding specific repositories to pull images

You can now specify an individual repository within a registry when creating lists of allowed and blocked registries for pulling and pushing images. Previously, you could specify only a registry.

For more information, see Adding specific registries and Blocking specific registries.
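As an illustration, an allowed list can now mix whole registries with a single repository. The example.com and reg1.io entries are hypothetical. Because defining an allowed list blocks everything that is not listed, this sketch also keeps the registries that the cluster itself depends on.

```yaml
apiVersion: config.openshift.io/v1
kind: Image
metadata:
  name: cluster
spec:
  registrySources:
    allowedRegistries:
    - example.com                                    # hypothetical whole registry
    - quay.io
    - registry.redhat.io
    - image-registry.openshift-image-registry.svc:5000
    - reg1.io/myrepo/myapp:latest                    # hypothetical individual repository
```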

1.4.14.11. Cron jobs are generally available

The cron job custom resource is now generally available. As part of this change, a new controller has been implemented that substantially improves the performance of cron jobs. For more information on cron jobs, see Understanding jobs and cron jobs.
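With the resource generally available, cron jobs can be defined against the batch/v1 API that ships with Kubernetes 1.21. The job below is a generic sketch with a hypothetical name, schedule, and image.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: example-cronjob                  # hypothetical name
spec:
  schedule: "*/5 * * * *"                # every five minutes (illustrative)
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: registry.example.com/tools/busybox:latest   # hypothetical image
            command: ["sh", "-c", "date; echo Hello from the cron job"]
          restartPolicy: OnFailure
```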

1.4.15. Red Hat OpenShift Logging

In OpenShift Container Platform 4.7, Cluster Logging became Red Hat OpenShift Logging. For more information, see Release notes for Red Hat OpenShift Logging.

1.4.16. Monitoring

1.4.16.1. Alerting rule changes

OpenShift Container Platform 4.8 includes the following alerting rule changes:

Example 1.1. Alerting rule changes

- The ThanosSidecarPrometheusDown alert severity is updated from critical to warning.

- The ThanosSidecarUnhealthy alert severity is updated from critical to warning.

- The ThanosQueryHttpRequestQueryErrorRateHigh alert severity is updated from critical to warning.

- The ThanosQueryHttpRequestQueryRangeErrorRateHigh alert severity is updated from critical to warning.

- The ThanosQueryInstantLatencyHigh critical alert is removed. This alert fired if Thanos Querier had a high latency for instant queries.

- The ThanosQueryRangeLatencyHigh critical alert is removed. This alert fired if Thanos Querier had a high latency for range queries.

- For all Thanos Querier alerts, the for duration is increased to 1 hour.

- For all Thanos sidecar alerts, the for duration is increased to 1 hour.

Red Hat does not guarantee backward compatibility for metrics, recording rules, or alerting rules.

1.4.16.2. Alerts and information on APIs in use that will be removed in the next release

OpenShift Container Platform 4.8 introduces two new alerts that fire when an API that will be removed in the next release is in use:

- APIRemovedInNextReleaseInUse - for APIs that will be removed in the next OpenShift Container Platform release.

- APIRemovedInNextEUSReleaseInUse - for APIs that will be removed in the next OpenShift Container Platform Extended Update Support (EUS) release.

You can use the new APIRequestCount API to track what is using the deprecated APIs. This allows you to plan whether any actions are required in order to upgrade to the next release.

1.4.16.3. Version updates to monitoring stack components and dependencies

OpenShift Container Platform 4.8 includes version updates to the following monitoring stack components and dependencies:

- The Prometheus Operator is now on version 0.48.1.

- Prometheus is now on version 2.26.1.

- The node-exporter agent is now on version 1.1.2.

- Thanos is now on version 0.20.2.

- Grafana is now on version 7.5.5.

1.4.16.4. kube-state-metrics upgraded to version 2.0.0

kube-state-metrics is upgraded to version 2.0.0. The following metrics were deprecated in kube-state-metrics version 1.9 and are effectively removed in version 2.0.0:

Non-generic resource metrics for pods:

- kube_pod_container_resource_requests_cpu_cores

- kube_pod_container_resource_limits_cpu_cores

- kube_pod_container_resource_requests_memory_bytes

- kube_pod_container_resource_limits_memory_bytes

Non-generic resource metrics for nodes:

- kube_node_status_capacity_pods

- kube_node_status_capacity_cpu_cores

- kube_node_status_capacity_memory_bytes

- kube_node_status_allocatable_pods

- kube_node_status_allocatable_cpu_cores

- kube_node_status_allocatable_memory_bytes

1.4.16.5. Removed Grafana and Alertmanager UI links

The link to the third-party Alertmanager UI is removed from the Monitoring openshift-monitoring project.

1.4.16.6. Monitoring dashboard enhancements in the web console

New enhancements are available for the Monitoring dashboards in the web console:

- When you zoom in on a single graph by selecting an area with the mouse, all other graphs now update to reflect the same time range.

- Dashboard panels are now organized into groups, which you can expand and collapse.

- Single-value panels now support changing color depending on their value.

- Dashboard labels now display in the Dashboard drop-down list.

- You can now specify a custom time range for a dashboard by selecting Custom time range in the Time Range drop-down list.

- When applicable, you can now select the All option in a dashboard filter drop-down menu to display data for all of the options in that filter.

1.4.17. Metering

The Metering Operator is deprecated as of OpenShift Container Platform 4.6, and is scheduled to be removed in the next OpenShift Container Platform release.

1.4.18. Scale

1.4.18.1. Running on a single node cluster

Running tests on a single node cluster causes longer timeouts for certain tests, including SR-IOV and SCTP tests, and tests requiring control plane and worker nodes are skipped. Reconfiguration requiring node reboots causes a reboot of the entire environment, including the OpenShift control plane, and therefore takes longer to complete. All PTP tests requiring a control plane node and a worker node are skipped. No additional configuration is needed because the tests check for the number of nodes at startup and adjust test behavior accordingly.

PTP tests can run in Discovery mode. The tests look for a PTP control plane configured outside of the cluster. The following parameters are required:

- ROLE_WORKER_CNF=master - Required because the control plane (master) is the only machine pool to which the node will belong.

- XT_U32TEST_HAS_NON_CNF_WORKERS=false - Required to instruct the xt_u32 negative test to skip because there are only nodes where the module is loaded.

- SCTPTEST_HAS_NON_CNF_WORKERS=false - Required to instruct the SCTP negative test to skip because there are only nodes where the module is loaded.

1.4.18.2. Reducing NIC queues using the Performance Addon Operator

The Performance Addon Operator allows you to adjust the Network Interface Card (NIC) queue count for each network device by configuring the performance profile. Device network queues allow packets to be distributed among different physical queues, and each queue gets a separate thread for packet processing.

For Data Plane Development Kit (DPDK) based workloads, it is important to reduce the NIC queues to only the number of reserved or housekeeping CPUs to ensure the desired low latency is achieved.

For more information, see Reducing NIC queues using the Performance Addon Operator.

1.4.18.3. Cluster maximums

Updated guidance around cluster maximums for OpenShift Container Platform 4.8 is now available.

No large-scale performance testing against OVN-Kubernetes was executed for this release.

Use the OpenShift Container Platform Limit Calculator to estimate cluster limits for your environment.

1.4.18.4. Creating a performance profile

You can now create a performance profile using the Performance Profile Creator (PPC) tool. The tool consumes must-gather data from the cluster and several user-supplied profile arguments, and using this information it generates a performance profile that is appropriate for your hardware and topology.

For more information, see Creating a Performance Profile.

1.4.18.5. Node Feature Discovery Operator

The Node Feature Discovery (NFD) Operator is now available. Use it to expose node-level information by orchestrating Node Feature Discovery, a Kubernetes add-on for detecting hardware features and system configuration.

1.4.18.6. The Driver Toolkit (Technology Preview)

You can now use the Driver Toolkit as a base image for driver containers so that you can enable special software and hardware devices on Kubernetes. This is currently a Technology Preview feature.

1.4.19. Backup and restore

1.4.19.1. etcd snapshot enhancement

A new enhancement validates the status of the etcd snapshot after backup and before restore. Previously, the backup process did not validate that the snapshot taken was complete, and the restore process did not verify that the snapshot being restored was valid and not corrupted. Now, if the disk is corrupted during backup or restore, the error is clearly reported to the administrator. For more information, see BZ#1965024.

1.4.20. Insights Operator

1.4.20.1. Insights Advisor recommendations for restricted networks

In OpenShift Container Platform 4.8, users operating in restricted networks can gather and upload Insights Operator archives to Insights Advisor to diagnose potential issues. Additionally, users can obfuscate sensitive data contained in the Insights Operator archive before upload.

For more information, see Using remote health reporting in a restricted network.

1.4.20.2. Insights Advisor improvements

Insights Advisor in the OpenShift Container Platform web console now correctly reports 0 issues found. Previously, Insights Advisor gave no information.

1.4.20.3. Insights Operator data collection enhancements

In OpenShift Container Platform 4.8, the Insights Operator collects the following additional information:

- Non-identifiable cluster workload information to find known security and version issues.

- The MachineHealthCheck and MachineAutoscaler definitions.

- The virt_platform and vsphere_node_hw_version_total metrics.

- Information about unhealthy SAP pods to assist in the installation of SAP Smart Data Integration.

- The datahubs.installers.datahub.sap.com resources to identify SAP clusters.

- A summary of failed PodNetworkConnectivityChecks to enhance networking.

- Information about the cluster-version pods and events from the openshift-cluster-operator namespace to debug issues with the cluster-version Operator.

With this additional information, Red Hat can provide improved remediation steps in Insights Advisor.

1.4.20.4. Insights Operator enhancement for unhealthy SAP pods

The Insights Operator can now gather data for unhealthy SAP pods. When the SDI installation fails, it is possible to detect the problem by looking at which of the initialization pods have failed. The Insights Operator now gathers information about failed pods in the SAP/SDI namespaces. For more information, see BZ#1930393.

1.4.20.5. Insights Operator enhancement for gathering SAP pod data

The Insights Operator can now gather Datahubs resources from SAP clusters. This data allows SAP clusters to be distinguished from non-SAP clusters in the Insights Operator archives, even in situations in which all of the data gathered exclusively from SAP clusters is missing and it would otherwise be impossible to determine if a cluster has an SDI installation. For more information, see BZ#1940432.

1.4.21. Authentication and authorization

1.4.21.1. Running OpenShift Container Platform using AWS Security Token Service (STS) for credentials is generally available

You can now use the Cloud Credential Operator (CCO) utility (ccoctl) to configure the CCO to use the Amazon Web Services Security Token Service (AWS STS). When the CCO is configured to use STS, it assigns IAM roles that provide short-term, limited-privilege security credentials to components.

This feature was previously introduced as a Technology Preview feature in OpenShift Container Platform 4.7, and is now generally available in OpenShift Container Platform 4.8.

For more information, see Using manual mode with STS.

1.4.22. OpenShift sandboxed containers

1.4.22.1. OpenShift sandboxed containers support on OpenShift Container Platform (Technology Preview)

To review OpenShift sandboxed containers new features, bug fixes, known issues, and asynchronous errata updates, see OpenShift sandboxed containers 1.0 release notes.

1.5. Notable technical changes

OpenShift Container Platform 4.8 introduces the following notable technical changes.

Kuryr service subnet creation changes

New installations of OpenShift Container Platform on Red Hat OpenStack Platform (RHOSP) with Open Virtual Network configured to use Kuryr no longer create a services subnet that is twice the size requested in networking.serviceCIDR. The subnet created is now the same as the requested size. For more information, see BZ#1955548.
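As a rough illustration of the size difference, the following Python sketch compares the number of addresses in a requested service CIDR with a range twice that size. The 172.30.0.0/16 value is only an example input and the helper name is hypothetical. The sketch does arithmetic only and does not reproduce how Kuryr allocates the subnet.

```python
import ipaddress


def service_subnet_sizes(service_cidr):
    """Return (requested, doubled) address counts for a service CIDR.

    'doubled' reflects the earlier behavior described above, where the
    created subnet was twice the size requested in networking.serviceCIDR.
    """
    requested = ipaddress.ip_network(service_cidr).num_addresses
    return requested, requested * 2


# Example input only; substitute the serviceCIDR from your own configuration.
requested, doubled = service_subnet_sizes("172.30.0.0/16")
print(f"requested: {requested} addresses, previously created: {doubled} addresses")
```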

OAuth tokens without a SHA-256 prefix can no longer be used

Prior to OpenShift Container Platform 4.6, OAuth access and authorize tokens used secret information for the object names.

Starting with OpenShift Container Platform 4.6, OAuth access token and authorize token object names are stored as non-sensitive object names, with a SHA-256 prefix. OAuth tokens that do not contain a SHA-256 prefix can no longer be used or created in OpenShift Container Platform 4.8.
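If you maintain scripts that audit token object names, a check along the following lines might help find names that lack the prefix. This is a minimal sketch: it assumes the prefix literal is sha256~ and that you have already collected the object names by other means. The function name is hypothetical.

```python
# Assumption: the SHA-256 prefix appears literally as "sha256~" at the start
# of the object name. Verify this against the token objects in your cluster.
SHA256_PREFIX = "sha256~"


def names_without_sha256_prefix(token_names):
    """Return the token object names that do not start with the prefix."""
    return [name for name in token_names if not name.startswith(SHA256_PREFIX)]
```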

The Federal Risk and Authorization Management Program (FedRAMP) moderate controls

In OpenShift Container Platform 4.8, the rhcos4-moderate profile is now complete. The ocp4-moderate profile will be completed in a future release.

Ingress Controller upgraded to HAProxy 2.2.13

The OpenShift Container Platform Ingress Controller is upgraded to HAProxy version 2.2.13.

CoreDNS update to version 1.8.1

In OpenShift Container Platform 4.8, CoreDNS uses version 1.8.1, which has several bug fixes, renamed metrics, and dual-stack IPv6 enablement.

etcd now uses the zap logger

In OpenShift Container Platform 4.8, etcd uses zap as the default logger instead of capnslog. Zap is a structured logger that provides machine-consumable JSON log messages. You can use jq to parse these log messages.

If you have a log consumer that is expecting the capnslog format, you might need to adjust it for the zap logger format.

Example capnslog format (OpenShift Container Platform 4.7)

2021-06-03 22:40:16.984470 W | etcdserver: read-only range request "key:\"/kubernetes.io/operator.openshift.io/clustercsidrivers/\" range_end:\"/kubernetes.io/operator.openshift.io/clustercsidrivers0\" count_only:true " with result "range_response_count:0 size:8" took too long (100.498102ms) to execute

Example zap format (OpenShift Container Platform 4.8)

{"level":"warn","ts":"2021-06-14T13:13:23.243Z","caller":"etcdserver/util.go:163","msg":"apply request took too long","took":"163.262994ms","expected-duration":"100ms","prefix":"read-only range ","request":"key:\"/kubernetes.io/namespaces/default\" serializable:true keys_only:true ","response":"range_response_count:1 size:53"}
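If a log consumer has to handle both formats during an upgrade, one possible approach is to try JSON first and fall back to a pattern for the older lines. The following Python sketch illustrates the idea. The regular expression is an assumption based only on the single capnslog example in this section, and the function name and returned keys are hypothetical.

```python
import json
import re

# Assumed capnslog layout, inferred from the example line above:
# "<date> <time> <level letter> | <package>: <message>"
CAPNSLOG_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+) "
    r"(?P<level>[A-Z]) \| (?P<package>[^:]+): (?P<msg>.*)$"
)


def parse_etcd_line(line):
    """Parse one etcd log line into a dict, or return None if unrecognized."""
    line = line.strip()
    if line.startswith("{"):
        # zap format: each line is a JSON object.
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            return None
        return {
            "format": "zap",
            "level": record.get("level"),
            "ts": record.get("ts"),
            "msg": record.get("msg"),
            "fields": record,
        }
    match = CAPNSLOG_RE.match(line)
    if match:
        # capnslog format: keep the raw level letter rather than guess a mapping.
        return {"format": "capnslog", **match.groupdict()}
    return None
```

Extra zap keys such as took and expected-duration remain available under the fields entry of the returned dictionary.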

Multiple daemon sets merged for LSO

In OpenShift Container Platform 4.8, multiple daemon sets are merged for the Local Storage Operator (LSO). When you create a local volume custom resource, only daemonset.apps/diskmaker-manager is created.

Bound service account token volumes are enabled

Previously, service account tokens were secrets that were mounted into pods. Starting with OpenShift Container Platform 4.8, projected volumes are used instead. As a result of this change, service account tokens no longer have an underlying corresponding secret.

Bound service account tokens are audience-bound and time-bound. For more information, see Using bound service account tokens.

Additionally, the kubelet refreshes tokens automatically after they reach 80% of their duration, and client-go watches for token changes and reloads them automatically. The combination of these two behaviors means that most usage of bound tokens is no different from usage of legacy tokens that never expire. Non-standard usage outside of client-go might cause issues.
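For code that reads the token file directly instead of using client-go, one way to avoid holding a stale token is to re-read the file whenever it changes. The following Python sketch shows that pattern. The class name is hypothetical, the default path is the conventional service account mount location, and detecting changes through the file modification time is an assumption of this sketch rather than a description of how client-go works.

```python
import os

# Conventional mount path for the service account token inside a pod.
DEFAULT_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


class ReloadingToken:
    """Return the current token, re-reading the file when it has changed."""

    def __init__(self, path=DEFAULT_TOKEN_PATH):
        self._path = path
        self._mtime_ns = None
        self._token = None

    def get(self):
        # os.stat follows symlinks, so a swapped projected-volume target
        # shows up as a new modification time.
        mtime_ns = os.stat(self._path).st_mtime_ns
        if self._token is None or mtime_ns != self._mtime_ns:
            with open(self._path, encoding="utf-8") as token_file:
                self._token = token_file.read().strip()
            self._mtime_ns = mtime_ns
        return self._token
```

Call get() each time you build a request header instead of caching the returned string for the lifetime of the process.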

Operator SDK v1.8.0

OpenShift Container Platform 4.8 supports Operator SDK v1.8.0. See Installing the Operator SDK CLI to install or update to this latest version.

Operator SDK v1.8.0 supports Kubernetes 1.20.

If you have any Operator projects that were previously created or maintained with Operator SDK v1.3.0, see Upgrading projects for newer Operator SDK versions to ensure your projects are upgraded to maintain compatibility with Operator SDK v1.8.0.

1.6. Deprecated and removed features

Some features available in previous releases have been deprecated or removed.

Deprecated functionality is still included in OpenShift Container Platform and continues to be supported; however, it will be removed in a future release of this product and is not recommended for new deployments. For the most recent list of major functionality deprecated and removed within OpenShift Container Platform 4.8, refer to the table below. Additional details for more fine-grained functionality that has been deprecated and removed are listed after the table.

In the table, features are marked with the following statuses:

- GA: General Availability

- TP: Technology Preview

- DEP: Deprecated

- REM: Removed

| Feature | OCP 4.6 | OCP 4.7 | OCP 4.8 |
|---|---|---|---|
|  | REM | REM | REM |
| Package manifest format (Operator Framework) | DEP | DEP | REM |
|  | DEP | DEP | REM |
|  | GA | DEP | REM |
| v1beta1 CRDs | DEP | DEP | DEP |
| Docker Registry v1 API | DEP | DEP | DEP |
| Metering Operator | DEP | DEP | DEP |
| Scheduler policy | GA | DEP | DEP |
|  | GA | DEP | DEP |
|  | GA | DEP | DEP |
| Use of | GA | DEP | DEP |
| Use of | DEP | DEP | DEP |
| Cluster Loader | GA | GA | DEP |
| Bring your own RHEL 7 compute machines | DEP | DEP | DEP |
| External provisioner for AWS EFS | REM | REM | REM |
|  | GA | GA | DEP |
| Jenkins Operator | TP | TP | DEP |
| HPA custom metrics adapter based on Prometheus | TP | TP | REM |
| The | GA | DEP | DEP |
| Minting credentials for Microsoft Azure clusters | GA | GA | REM |

1.6.1. Deprecated features

1.6.1.1. Descheduler operator.openshift.io/v1beta1 API group is deprecated

The operator.openshift.io/v1beta1 API group for the descheduler is deprecated and might be removed in a future release. Use the operator.openshift.io/v1 API group instead.

1.6.1.2. Use of dhclient in Red Hat Enterprise Linux CoreOS (RHCOS) is deprecated

Starting with OpenShift Container Platform 4.6, Red Hat Enterprise Linux CoreOS (RHCOS) switched to using NetworkManager in the initramfs to configure networking during early boot. As part of this change, the use of the dhclient binary for DHCP was deprecated. Use the NetworkManager internal DHCP client for networking configuration instead. The dhclient binary will be removed from Red Hat Enterprise Linux CoreOS (RHCOS) in a future release. See BZ#1908462 for more information.

1.6.1.3. Cluster Loader is deprecated

Cluster Loader is now deprecated and will be removed in a future release.

1.6.1.4. The lastTriggeredImageID parameter in builds is deprecated

This release deprecates the lastTriggeredImageID in the ImageChangeTrigger object, which is one of the BuildTriggerPolicy types that can be set on a BuildConfig spec.

The next release of OpenShift Container Platform will remove support for lastTriggeredImageID and ignore it. After that, image change triggers will not start a build based on changes to the lastTriggeredImageID field in the BuildConfig spec. Instead, the image IDs that trigger a build will be recorded in the status of the BuildConfig object, which most users cannot change.

Therefore, update scripts and jobs that inspect buildConfig.spec.triggers[i].imageChange.lastTriggeredImageID accordingly. (BUILD-213)
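A script that inspects this value could be adapted to prefer the status and fall back to the deprecated spec field only when the status carries nothing. The following Python sketch works on a BuildConfig that is already loaded as a dictionary, for example from JSON output. The status.imageChangeTriggers location is an assumption that you should verify against your cluster, and the function name is hypothetical.

```python
def last_triggered_image_ids(build_config):
    """Collect last-triggered image IDs from a BuildConfig given as a dict."""
    # Assumed status location for the recorded image IDs.
    status_triggers = build_config.get("status", {}).get("imageChangeTriggers") or []
    ids = [
        trigger["lastTriggeredImageID"]
        for trigger in status_triggers
        if trigger.get("lastTriggeredImageID")
    ]
    if ids:
        return ids
    # Fallback: the deprecated field in spec.triggers[i].imageChange.
    spec_triggers = build_config.get("spec", {}).get("triggers") or []
    return [
        trigger["imageChange"]["lastTriggeredImageID"]
        for trigger in spec_triggers
        if (trigger.get("imageChange") or {}).get("lastTriggeredImageID")
    ]
```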

1.6.1.5. The Jenkins Operator (Technology Preview) is deprecated

This release deprecates the Jenkins Operator, which was a Technology Preview feature. A future version of OpenShift Container Platform will remove the Jenkins Operator from OperatorHub in the OpenShift Container Platform web console interface. Then, upgrades for the Jenkins Operator will no longer be available, and the Operator will not be supported.

Customers can continue to deploy Jenkins on OpenShift Container Platform using the templates provided by the Samples Operator.

1.6.1.6. The instance_type_id installation configuration parameter for Red Hat Virtualization (RHV) is deprecated

The instance_type_id installation configuration parameter is deprecated and will be removed in a future release.

1.6.2. Removed features

1.6.2.1. Support for minting credentials for Microsoft Azure removed

Starting with OpenShift Container Platform 4.8.34, support for using the Cloud Credential Operator (CCO) in mint mode on Microsoft Azure clusters has been removed from OpenShift Container Platform 4.8. This change is due to the planned retirement of the Azure AD Graph API by Microsoft on 30 June 2022 and is being backported to all supported versions of OpenShift Container Platform in z-stream updates.

For previously installed Azure clusters that use mint mode, the CCO attempts to update existing secrets. If a secret contains the credentials of previously minted app registration service principals, it is updated with the contents of the secret in kube-system/azure-credentials. This behavior is similar to passthrough mode.

For clusters with the credentials mode set to its default value of "", the updated CCO automatically changes from operating in mint mode to operating in passthrough mode. If your cluster has the credentials mode explicitly set to mint mode ("Mint"), you must change the value to "" or "Passthrough".

In addition to the Contributor role that is required by mint mode, the modified app registration service principals now require the User Access Administrator role that is used for passthrough mode.

While the Azure AD Graph API is still available, the CCO in upgraded versions of OpenShift Container Platform attempts to clean up previously minted app registration service principals. Upgrading your cluster before the Azure AD Graph API is retired might avoid the need to clean up resources manually.

If the cluster is upgraded to a version of OpenShift Container Platform that no longer supports mint mode after the Azure AD Graph API is retired, the CCO sets an OrphanedCloudResource condition on the associated CredentialsRequest but does not treat the error as fatal. The condition includes a message similar to unable to clean up App Registration / Service Principal: <app_registration_name>. Cleanup after the Azure AD Graph API is retired requires manual intervention using the Azure CLI tool or the Azure web console to remove any remaining app registration service principals.

To clean up resources manually, you must find and delete the affected resources.

Using the Azure CLI tool, filter the app registration service principals that use the <app_registration_name> from an OrphanedCloudResource condition message by running the following command:

$ az ad app list --filter "displayname eq '<app_registration_name>'" --query '[].objectId'

Example output

[ "038c2538-7c40-49f5-abe5-f59c59c29244" ]

Delete the app registration service principal by running the following command:

$ az ad app delete --id 038c2538-7c40-49f5-abe5-f59c59c29244
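If several CredentialsRequest objects report the condition, it might be convenient to extract the app registration name from each message and build the lookup command programmatically. The following Python sketch does only that and does not run the Azure CLI. The message pattern is an assumption based on the example wording above, which the documentation describes as only similar to the real message. The function names are hypothetical, and the sketch assumes the name contains no whitespace or single quotes.

```python
import re

# Assumed wording, taken from the example condition message above.
MESSAGE_RE = re.compile(
    r"unable to clean up App Registration / Service Principal: (?P<name>\S+)"
)


def app_registration_name(condition_message):
    """Return the app registration name from a condition message, or None."""
    match = MESSAGE_RE.search(condition_message)
    return match.group("name") if match else None


def az_list_command(name):
    """Build the argument list for the 'az ad app list' lookup shown above."""
    return [
        "az", "ad", "app", "list",
        "--filter", f"displayname eq '{name}'",
        "--query", "[].objectId",
    ]
```

You can pass the returned list to subprocess.run without a shell, which avoids having to quote the filter expression by hand.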