Chapter 19. How the subscriptions service gets and refreshes data

The data collection tools gather and periodically send data, including data about subscription usage, to the Hybrid Cloud Console tools that analyze and process this data. After the data is processed, the data that is needed for the subscriptions service, including the data related to subscription usage and capacity, is sent to the subscriptions service for display. For Annual subscriptions, this data is sent once per day. For On-Demand subscriptions, this data can be updated more frequently, usually a few times per day. Therefore, the data that displays in the subscriptions service is a tally of the results in the form of a snapshot, either once per day or at a few intervals throughout the day, and is not a real-time, continuous usage monitor.

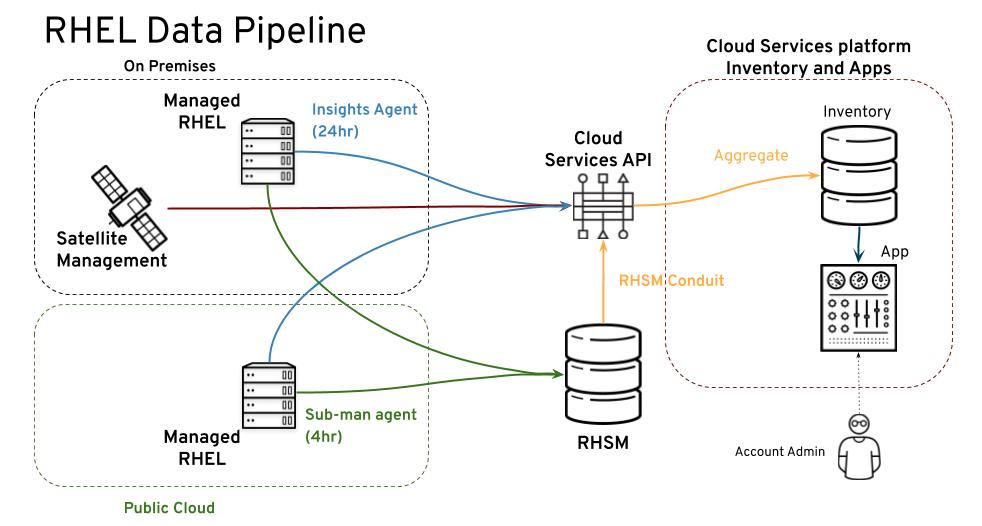

The Red Hat Enterprise Linux data pipeline

The following image provides additional detail about the data pipeline that moves Red Hat Enterprise Linux (RHEL) data from collection to display in the subscriptions service. The data collection tool, whether you are using Red Hat Insights, Satellite, or Red Hat Subscription Management with the Subscription Manager agent, sends data to the Hybrid Cloud Console processing tools. After data is processed, it is available to Hybrid Cloud Console tools such as the inventory service. The subscriptions service uses a subset of the data that is available to the inventory service to display data about subscription usage and capacity.

Figure 19.1. The RHEL data pipeline for the subscriptions service

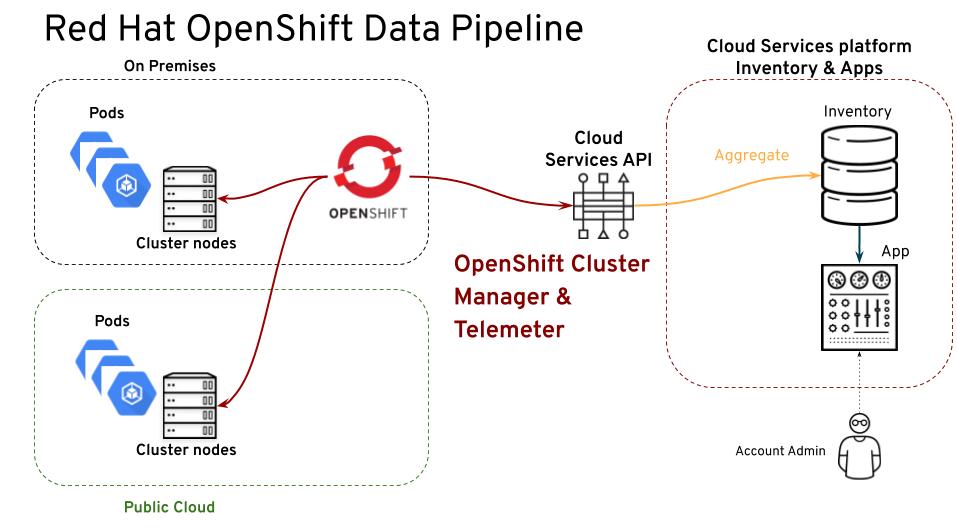

The Red Hat OpenShift data pipeline

Red Hat OpenShift can have nodes that are based on Red Hat Enterprise Linux or Red Hat Enterprise Linux CoreOS (RHCOS). Only nodes that are based on RHCOS report data through the tools in the Red Hat OpenShift data pipeline, such as OpenShift Cluster Manager and the monitoring stack. RHEL nodes report through the tools in the RHEL data pipeline, such as Red Hat Insights, Satellite, or Red Hat Subscription Management.

| Red Hat OpenShift version | Node operating system | Data pipeline used |
|---|---|---|
| Version 4 | RHCOS | Red Hat OpenShift pipeline. Nodes aggregated into cluster reporting. Node types and node labels analyzed to determine subscribed nodes. |
| Version 4 | RHEL | Red Hat OpenShift pipeline. Nodes report individually. Node types and node labels analyzed to determine subscribed nodes. |
| Version 3 | RHEL | RHEL pipeline. Nodes report individually. Subscribed nodes cannot be distinguished from nonsubscribed nodes. |

For data collection in Red Hat OpenShift version 4.1 and later, the tools available in the monitoring stack, including Telemetry, Prometheus, Thanos, and others, monitor and periodically sum the CPU activity of all subscribed nodes and ignore the activity of nonsubscribed nodes. To keep the data current, that data is sent to Red Hat OpenShift Cluster Manager at different intervals for new clusters, resized clusters, and clusters with deleted entities.
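The idea of summing only subscribed nodes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how the monitoring stack is implemented: the `Node` class, the `subscribed` flag, and the `subscribed_core_total` function are hypothetical names, and the flag is assumed to be derived beforehand from the node types and node labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Hypothetical view of a cluster node, for illustration only."""
    name: str
    cores: int
    subscribed: bool  # assumed to be derived from node types and node labels


def subscribed_core_total(nodes: list[Node]) -> int:
    """Sum cores across subscribed nodes; nonsubscribed nodes are ignored."""
    return sum(node.cores for node in nodes if node.subscribed)


# Example cluster with two subscribed nodes and one nonsubscribed node.
cluster = [
    Node("worker-0", cores=8, subscribed=True),
    Node("worker-1", cores=8, subscribed=True),
    Node("infra-0", cores=4, subscribed=False),
]

print(subscribed_core_total(cluster))  # 16
```

In this sketch the nonsubscribed node contributes nothing to the total, which mirrors the behavior described above.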

Red Hat OpenShift Cluster Manager then updates the cluster size attribute for existing clusters and creates entries for any new clusters in the Hybrid Cloud Console inventory tool.

Lastly, the subscriptions service analyzes the inventory data and creates account-wide usage information for each Red Hat OpenShift product in the subscription profile. That information is displayed in the subscriptions service interface, along with capacity data as applicable for the subscription type. For Red Hat OpenShift Container Platform with an Annual subscription, the usage information accounts for both core and socket usage. For Red Hat OpenShift Container Platform or OpenShift Dedicated with an On-Demand subscription, the usage information shows core hour usage.
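As a rough worked example of core hour usage, one core in use for one hour counts as one core hour, so a cluster's usage is the sum of cores multiplied by hours over each period. The following Python sketch assumes usage is already available as (cores, hours) pairs. The `core_hours` function and the sample figures are hypothetical, and the granularity and rounding that the service applies are not covered here.

```python
def core_hours(samples: list[tuple[int, float]]) -> float:
    """Return total core hours for (subscribed cores, hours at that size) pairs."""
    return sum(cores * hours for cores, hours in samples)


# Hypothetical day: 16 cores for 6 hours, then 24 cores for 18 hours.
usage = [(16, 6.0), (24, 18.0)]

print(core_hours(usage))  # 528.0
```

Here the first period contributes 96 core hours and the second contributes 432, for a total of 528 core hours.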

Figure 19.2. The Red Hat OpenShift data pipeline for the subscriptions service

Other Red Hat OpenShift families, products, and add-ons in the portfolio, such as Red Hat OpenShift AI, Red Hat Advanced Cluster Security for Kubernetes, or Red Hat OpenShift Service on AWS Hosted Control Planes, rely on Red Hat infrastructure. Part of that infrastructure is the monitoring stack tools that, among other jobs, supply data about subscription usage to the subscriptions service. Therefore, these services also report usage through the tools used in the Red Hat OpenShift data pipeline.

Heartbeats for data collection tools

The frequency at which the data collection tools send data for processing, also known as the heartbeat, varies by tool. This variance can affect the freshness of the data that the subscriptions service displays.

The following table shows default heartbeats for the data collection tools. In some cases, these values are configurable within that data collection tool.

| Tool | Configurable | Heartbeat interval |
|---|---|---|
| Insights | No | Daily, once every 24 hours |
| Red Hat Subscription Management | Yes | Multiple times per day, 4-hour default |
| Satellite | Yes | Monthly, configurable with the Satellite scheduler function. If used, the Satellite inventory upload plugin reports daily, with a manual send option. Additionally, to maintain accurate information about the mapping of virtual guests to hosts, a best practice is to run the virt-who utility daily. |
| Red Hat OpenShift | No | Several tools are involved in the data pipeline, including tools in the Red Hat OpenShift Container Platform monitoring stack and in the Hybrid Cloud Console, with differing intervals for the monitoring stack, Red Hat OpenShift Cluster Manager, and the subscriptions service. |
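To show how a heartbeat affects data freshness, the following Python sketch estimates when the next report from a tool is due, using only the two fixed defaults from the table (24 hours for Insights and 4 hours for Red Hat Subscription Management). The names `DEFAULT_HEARTBEAT`, `next_report_due`, and `is_overdue` are hypothetical. The sketch covers only the collection tool's heartbeat, so it does not account for processing time in the Hybrid Cloud Console or for any custom configuration.

```python
from datetime import datetime, timedelta

# Default heartbeats from the table. Satellite and Red Hat OpenShift are
# omitted because their intervals depend on configuration or on several tools.
DEFAULT_HEARTBEAT = {
    "Insights": timedelta(hours=24),
    "Red Hat Subscription Management": timedelta(hours=4),
}


def next_report_due(tool: str, last_report: datetime) -> datetime:
    """Return when the next report is due, assuming the default heartbeat."""
    return last_report + DEFAULT_HEARTBEAT[tool]


def is_overdue(tool: str, last_report: datetime, now: datetime) -> bool:
    """Return True if the tool has missed its default heartbeat."""
    return now > next_report_due(tool, last_report)


last = datetime(2024, 1, 1, 8, 0)
print(next_report_due("Red Hat Subscription Management", last))
print(is_overdue("Insights", last, now=datetime(2024, 1, 2, 9, 0)))
```

A check like this can help explain why a recently changed system does not yet appear in the subscriptions service: the data might simply be waiting for the next heartbeat.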