This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 6. Creating and managing serverless applications

6.1. Serverless applications using Knative services

To deploy a serverless application using OpenShift Serverless, you must create a Knative service. Knative services are Kubernetes services, defined by a route and a configuration, and contained in a YAML file.

Example Knative service YAML

You can create a serverless application by using one of the following methods:

- Create a Knative service from the OpenShift Container Platform web console.

- Create a Knative service using the kn CLI.

- Create and apply a YAML file.

6.2. Creating serverless applications using the OpenShift Container Platform web console

You can create a serverless application using either the Developer or Administrator perspective in the OpenShift Container Platform web console.

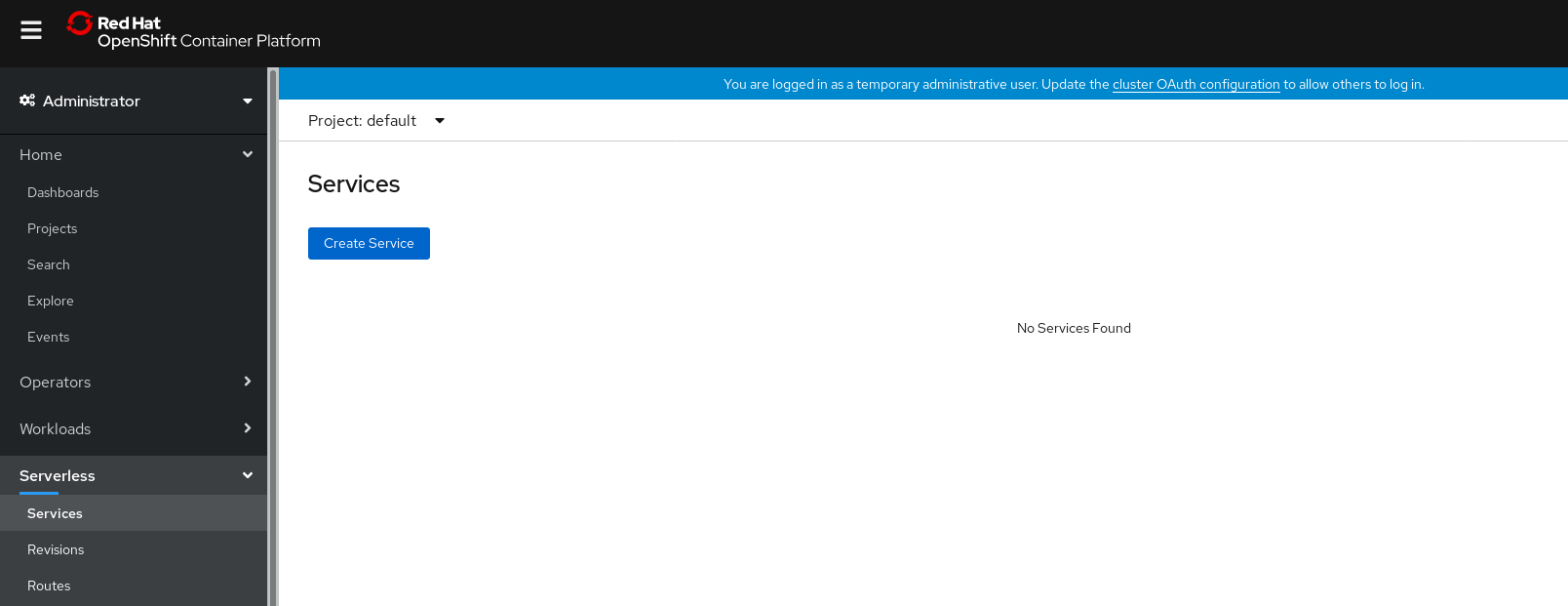

6.2.1. Creating serverless applications using the Administrator perspective

Prerequisites

To create serverless applications using the Administrator perspective, ensure that you have completed the following steps.

- The OpenShift Serverless Operator and Knative Serving are installed.

- You have logged in to the web console and are in the Administrator perspective.

Procedure

Navigate to the Serverless

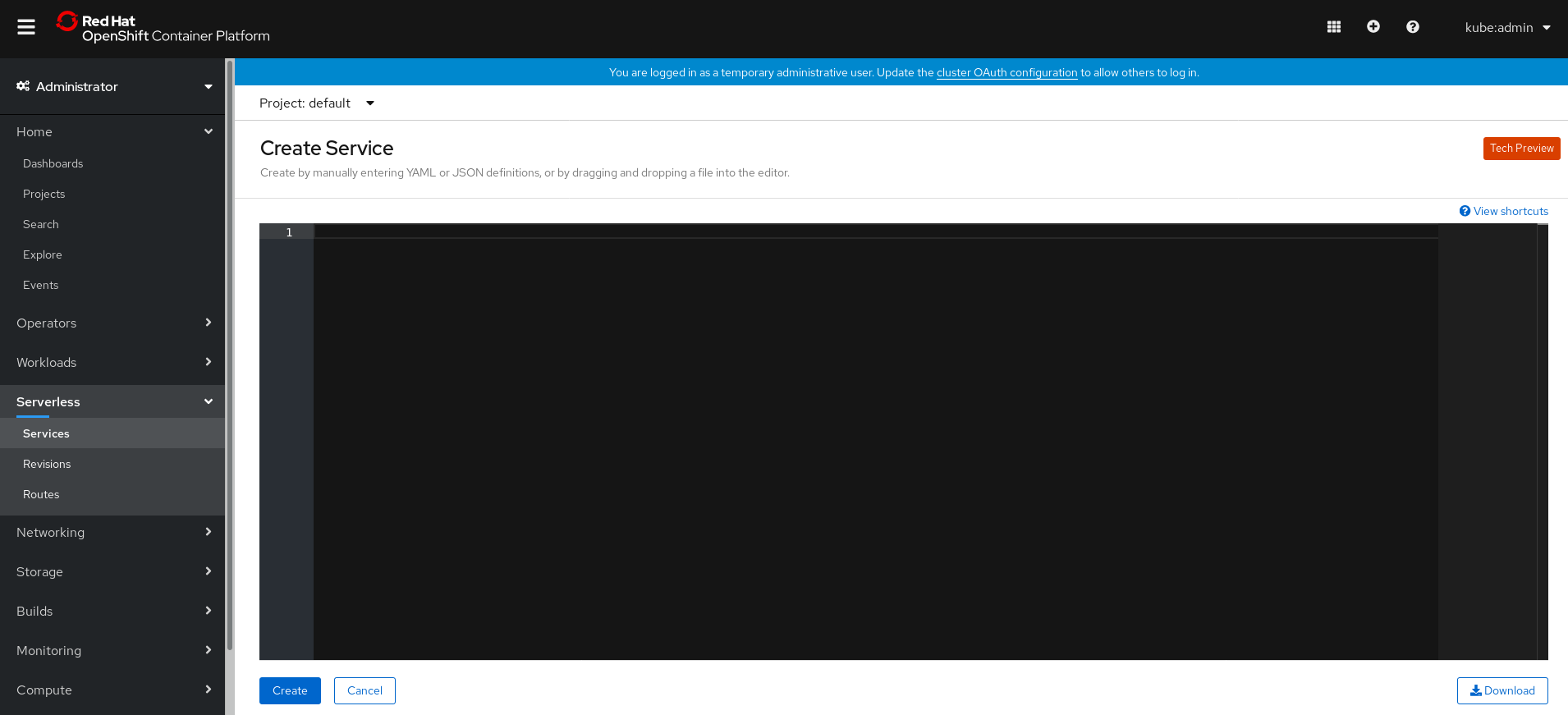

Services page. - Click Create Service.

Manually enter YAML or JSON definitions, or by dragging and dropping a file into the editor.

- Click Create.

6.2.2. Creating serverless applications using the Developer perspective

For more information about creating applications using the Developer perspective in OpenShift Container Platform, see the documentation on Creating applications using the Developer perspective.

6.3. Creating serverless applications using the kn CLI

The following procedure describes how you can create a basic serverless application using the kn CLI.

Prerequisites

- OpenShift Serverless Operator and Knative Serving are installed on your cluster.

- You have installed the kn CLI.

Procedure

- Create a Knative service:

$ kn service create <service_name> --image <image> --env <key=value>

Example command

$ kn service create hello --image docker.io/openshift/hello-openshift --env RESPONSE="Hello Serverless!"

6.4. Creating serverless applications using YAML

To create a serverless application, you can create a YAML file and apply it using oc apply.

Procedure

- Create a YAML file by copying the following example:

Example Knative service YAML
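A minimal sketch of such a file, written as a shell here-document so that it can be pasted into a terminal. The `serving.knative.dev/v1` API version and the `default` namespace are assumptions and may differ for your release and project. The service name, image, and environment variable mirror the kn example in this chapter.

```shell
# Write a Knative service definition to hello-service.yaml in the current directory.
# Assumptions: apiVersion serving.knative.dev/v1 and the "default" namespace.
cat > hello-service.yaml <<'EOF'
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
  namespace: default
spec:
  template:
    spec:
      containers:
        - image: docker.io/openshift/hello-openshift
          env:
            - name: RESPONSE
              value: "Hello Serverless!"
EOF
```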

In this example, the YAML file is named hello-service.yaml.

- Navigate to the directory where the hello-service.yaml file is contained, and deploy the application by applying the YAML file:

$ oc apply -f hello-service.yaml

After the service has been created and the application has been deployed, Knative will create a new immutable revision for this version of the application.

Knative will also perform network programming to create a route, ingress, service, and load balancer for your application, and will automatically scale your pods up and down based on traffic, including inactive pods.
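Knative creates the revision, route, and related resources asynchronously after the service is applied, so it can help to wait until the service reports that it is ready before sending requests. The following is a sketch only: `wait_for_ksvc` is a hypothetical helper name, the 120s default timeout is arbitrary, and it assumes you are logged in with `oc`.

```shell
# Hypothetical helper: block until the Knative service reports the Ready condition.
# Assumes `oc` is logged in to the cluster; the default timeout is an arbitrary choice.
wait_for_ksvc() {
  name="$1"
  timeout="${2:-120s}"
  oc wait "ksvc/${name}" --for=condition=Ready --timeout="${timeout}"
}

# Usage against a real cluster:
#   oc apply -f hello-service.yaml && wait_for_ksvc hello
```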

6.5. Verifying your serverless application deployment

To verify that your serverless application has been deployed successfully, you must get the application URL created by Knative, and then send a request to that URL and observe the output.

OpenShift Serverless supports the use of both HTTP and HTTPS URLs; however, the output from oc get ksvc <service_name> always prints URLs using the http:// format.
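If you want to script an HTTPS request, one option is to read the URL from the service status and rewrite its scheme. This is a sketch under assumptions: `ksvc_https_url` is a hypothetical helper name, and it assumes the service exposes its address in the `.status.url` field and that you are logged in with `oc`.

```shell
# Hypothetical helper: print the service URL with an https:// scheme.
# Assumes `oc` is logged in and that the URL is published in .status.url.
ksvc_https_url() {
  oc get ksvc "$1" -o jsonpath='{.status.url}' | sed 's|^http://|https://|'
}

# Usage against a real cluster:
#   curl "$(ksvc_https_url hello)"
```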

Procedure

- Find the application URL:

$ oc get ksvc <service_name>

Example output

NAME    URL                                LATESTCREATED   LATESTREADY   READY   REASON
hello   http://hello-default.example.com   hello-4wsd2     hello-4wsd2   True

- Make a request to your cluster and observe the output:

Example HTTP request

$ curl http://hello-default.example.com

Example HTTPS request

$ curl https://hello-default.example.com

Example output

Hello Serverless!

- Optional. If you receive an error relating to a self-signed certificate in the certificate chain, you can add the --insecure flag to the curl command to ignore the error.

Important: Self-signed certificates must not be used in a production deployment. This method is only for testing purposes.

Example command

$ curl https://hello-default.example.com --insecure

Example output

Hello Serverless!

- Optional. If your OpenShift Container Platform cluster is configured with a certificate that is signed by a certificate authority (CA) but not yet globally configured for your system, you can specify this with the curl command. The path to the certificate can be passed to the curl command by using the --cacert flag.

Example command

$ curl https://hello-default.example.com --cacert <file>

Example output

Hello Serverless!

6.6. Interacting with a serverless application using HTTP2 / gRPC

OpenShift Container Platform routes do not support HTTP2, and therefore do not support gRPC, which is transported over HTTP2. If you use these protocols in your application, you must call the application using the ingress gateway directly. To do this, you must find the ingress gateway’s public address and the application’s specific host.

Procedure

- Find the application host. See the instructions in Verifying your serverless application deployment.

- Get the public address of the ingress gateway:

$ oc -n knative-serving-ingress get svc kourier

Example output

NAME      TYPE           CLUSTER-IP      EXTERNAL-IP                                                             PORT(S)                      AGE
kourier   LoadBalancer   172.30.51.103   a83e86291bcdd11e993af02b7a65e514-33544245.us-east-1.elb.amazonaws.com   80:31380/TCP,443:31390/TCP   67m

The public address is surfaced in the EXTERNAL-IP field, and in this case would be:

a83e86291bcdd11e993af02b7a65e514-33544245.us-east-1.elb.amazonaws.com

- Manually set the host header of your HTTP request to the application’s host, but direct the request itself against the public address of the ingress gateway.

Here is an example, using the information obtained from the steps in Verifying your serverless application deployment:

$ curl -H "Host: hello-default.example.com" a83e86291bcdd11e993af02b7a65e514-33544245.us-east-1.elb.amazonaws.com
Hello Serverless!

You can also make a gRPC request by setting the authority to the application’s host, while directing the request against the ingress gateway directly.

Here is an example of what that looks like in the Golang gRPC client:

Note: Ensure that you append the respective port (80 by default) to both hosts as shown in the example.

grpc.Dial(
    "a83e86291bcdd11e993af02b7a65e514-33544245.us-east-1.elb.amazonaws.com:80",
    grpc.WithAuthority("hello-default.example.com:80"),
    grpc.WithInsecure(),
)
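The lookup of the gateway address and the host-header request described in this procedure can be combined in a small script. This is a sketch under assumptions: `ingress_address` and `call_via_ingress` are hypothetical helper names, and the load balancer is assumed to publish a hostname, as in the AWS example output in this section. On platforms that publish an IP address instead, read `.ip` rather than `.hostname`.

```shell
# Hypothetical helper: print the public address of the kourier ingress gateway.
# Assumes `oc` is logged in and that the load balancer publishes a hostname.
ingress_address() {
  oc -n knative-serving-ingress get svc kourier \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Hypothetical helper: send a request to the gateway while setting the
# Host header to the application's host.
call_via_ingress() {
  app_host="$1"
  curl -H "Host: ${app_host}" "http://$(ingress_address)"
}

# Usage against a real cluster:
#   call_via_ingress hello-default.example.com
```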