Introduction to ROSA

An overview of Red Hat OpenShift Service on AWS architecture

Abstract

Chapter 1. Red Hat OpenShift Service on AWS 4 Documentation

Welcome to the official Red Hat OpenShift Service on AWS documentation, where you can learn about Red Hat OpenShift Service on AWS and start exploring its features.

Chapter 2. Red Hat OpenShift Service on AWS overview

Red Hat OpenShift Service on AWS is a fully managed, turnkey application platform that lets you focus on what matters most: delivering value to your customers by building and deploying applications. Red Hat and AWS SRE experts manage the underlying platform so you do not have to worry about infrastructure management. Red Hat OpenShift Service on AWS provides seamless integration with a wide range of AWS compute, database, analytics, machine learning, networking, mobile, AI, and other services to further accelerate building and delivering differentiated experiences to your customers.

Red Hat OpenShift Service on AWS offers a reduced-cost solution to create a managed Red Hat OpenShift Service on AWS cluster with a focus on efficiency and security. You can quickly create a new cluster and deploy applications in minutes.

You subscribe to the service directly from your AWS account. After you create clusters, you can operate them with the OpenShift web console, the ROSA CLI (rosa), or Red Hat OpenShift Cluster Manager.

You receive OpenShift updates with new feature releases, built from a shared, common source that keeps the service aligned with OpenShift Container Platform. Red Hat OpenShift Service on AWS supports the same versions of OpenShift as Red Hat OpenShift Container Platform to achieve version consistency.

Red Hat OpenShift Service on AWS uses AWS Security Token Service (STS) with AWS IAM to obtain credentials to manage infrastructure in your AWS account. AWS STS is a global web service that creates temporary credentials for IAM users and roles or for federated users and roles. Red Hat OpenShift Service on AWS uses STS to assign short-term, limited-privilege security credentials. These credentials are associated with IAM roles that are specific to each component that makes AWS API calls. This method aligns with the principles of least privilege and secure practices in cloud service resource management. The ROSA command-line interface (CLI) tool manages the STS credentials that are assigned for unique tasks and takes action on AWS resources as part of OpenShift functionality. For a more detailed explanation, see AWS STS and Red Hat OpenShift Service on AWS explained.

2.1. Key features of Red Hat OpenShift Service on AWS

- Cluster node scaling: Red Hat OpenShift Service on AWS requires a minimum of only two nodes, making it ideal for smaller projects while still being able to scale to support larger projects and enterprises. Easily add or remove compute nodes to match resource demand. Autoscaling allows you to automatically adjust the size of the cluster based on the current workload. See About autoscaling nodes on a cluster for more details.

- Fully managed underlying control plane infrastructure: Control plane components, such as the API server and etcd database, are hosted in a Red Hat-owned AWS account.

- Rapid provisioning time: Provisioning time is approximately 10 minutes.

- Continued cluster operation during upgrades: Customers can upgrade the control plane and machine pools separately, ensuring the cluster remains operational during the upgrade process.

- Native AWS service: Access and use Red Hat OpenShift on-demand with a self-service onboarding experience through the AWS management console.

- Flexible, consumption-based pricing: Scale to your business needs and pay as you go with flexible pricing and an on-demand hourly or annual billing model.

- Single bill for Red Hat OpenShift and AWS usage: Customers receive a single bill from AWS for both Red Hat OpenShift and AWS consumption.

- Fully integrated support experience: Management, maintenance, and upgrades are performed by Red Hat site reliability engineers (SREs) with joint Red Hat and Amazon support and a 99.95% service-level agreement (SLA). See the Red Hat OpenShift Service on AWS support documentation for more details.

- AWS service integration: AWS has a robust portfolio of cloud services, such as compute, storage, networking, database, analytics, virtualization, and AI. All of these services are directly accessible through Red Hat OpenShift Service on AWS, which makes it easier to build, operate, and scale globally and on demand through a familiar management interface.

- Maximum availability: Deploy clusters across multiple availability zones in supported regions to maintain high availability for your most demanding mission-critical applications and data.

- Optimized clusters: Choose from memory-optimized, compute-optimized, general-purpose, or accelerated EC2 instance types to meet your needs.

- Global availability: Refer to the product regional availability page to see where Red Hat OpenShift Service on AWS is available globally.

2.2. Billing and pricing

Red Hat OpenShift Service on AWS is billed directly to your Amazon Web Services (AWS) account. ROSA pricing is consumption-based, with one-year or three-year commitments available for greater discounts. The total cost of ROSA consists of two components:

- ROSA service fees

- AWS infrastructure fees

Visit the Red Hat OpenShift Service on AWS Pricing page on the AWS website for more details.

2.3. Getting started with Red Hat OpenShift Service on AWS

Use the following sections to find content to help you learn about and use Red Hat OpenShift Service on AWS.

2.3.1. Architect

| Learn about Red Hat OpenShift Service on AWS | Plan Red Hat OpenShift Service on AWS deployment | Additional resources |
|---|---|---|
| Understanding process and security | | |

2.3.2. Cluster Administrator

| Learn about Red Hat OpenShift Service on AWS | Deploy Red Hat OpenShift Service on AWS | Manage Red Hat OpenShift Service on AWS | Additional resources |
|---|---|---|---|
| About Red Hat OpenShift Service on AWS monitoring | | | |

2.3.3. Developer

| Learn about application development in Red Hat OpenShift Service on AWS | Deploy applications | Additional resources |
|---|---|---|
| Red Hat OpenShift Dev Spaces (formerly Red Hat CodeReady Workspaces) | | |

2.3.4. Before creating your first Red Hat OpenShift Service on AWS cluster

For a quick introduction to the ROSA installation process, see the Installing Red Hat OpenShift Service on AWS interactive walkthrough.

Chapter 3. AWS STS and ROSA with HCP explained

Red Hat OpenShift Service on AWS uses Amazon Web Services (AWS) Security Token Service (STS) with AWS Identity and Access Management (IAM) to obtain the necessary credentials to interact with resources in your AWS account.

3.1. AWS STS credential method

As part of ROSA with HCP, Red Hat must be granted the necessary permissions to manage infrastructure resources in your AWS account. ROSA with HCP IAM STS policies grant the cluster’s automation software limited, short-term access to resources in your AWS account.

The STS method uses predefined roles and policies to grant temporary, least-privilege permissions to IAM roles. The credentials typically expire one hour after they are requested. After they expire, AWS no longer recognizes them, and they can no longer be used to make API requests. For more information, see the AWS documentation.
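To make the one-hour lifetime concrete, the following Python sketch shows how a client might decide when to request fresh credentials. It is an illustration only, not code from the ROSA CLI or an AWS SDK. It assumes an expiration timestamp like the `Expiration` field that AWS STS returns with temporary credentials, and the five-minute refresh margin is a hypothetical choice.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical safety margin: refresh a little before the credentials expire.
REFRESH_MARGIN = timedelta(minutes=5)


def needs_refresh(expiration: datetime, now: datetime,
                  margin: timedelta = REFRESH_MARGIN) -> bool:
    """Return True if the credentials are expired or will expire within `margin`."""
    return now >= expiration - margin


# Assumption: credentials issued at noon UTC with the typical one-hour lifetime.
issued_at = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
expiration = issued_at + timedelta(hours=1)

print(needs_refresh(expiration, issued_at + timedelta(minutes=30)))  # False
print(needs_refresh(expiration, issued_at + timedelta(minutes=57)))  # True
print(needs_refresh(expiration, issued_at + timedelta(hours=2)))     # True
```

Refreshing slightly before the expiration time, rather than exactly at it, avoids sending a request with credentials that expire while the request is in flight.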

AWS IAM STS roles must be created for each ROSA cluster. The ROSA command-line interface (CLI) (rosa) manages the STS roles and helps you attach the ROSA-specific, AWS managed policies to each role. The CLI provides the commands and files to create the roles and attach the AWS managed policies, as well as an option to let the CLI create the roles and attach the policies automatically. Alternatively, the ROSA CLI can provide you with the content to prepare the roles and attach the ROSA-specific AWS managed policies yourself.

3.2. AWS STS security

Security features for AWS STS include:

- An explicit and limited set of policies that the user creates ahead of time.

- The user can review every requested permission needed by the platform.

- The service cannot do anything outside of those permissions.

- There is no need to rotate or revoke credentials. Whenever the service needs to perform an action, it obtains credentials that expire in one hour or less.

- Credential expiration reduces the risk of leaked credentials being reused.

- The ROSA-specific AWS managed policies are tightly scoped to only allow actions on ROSA-specific AWS resources in your account, within the limits of the AWS API.

ROSA policies grant cluster software components least-privilege permissions through short-term security credentials that are assigned to specific, segregated IAM roles. The credentials are associated with IAM roles specific to each component and cluster that makes AWS API calls. This method aligns with principles of least privilege and secure practices in cloud service resource management.

3.3. Components of ROSA with HCP

- AWS infrastructure - The infrastructure required for the cluster, including the Amazon EC2 instances, Amazon EBS storage, and networking components. See AWS compute types for the instance types supported for compute nodes, and see Provisioned AWS infrastructure for more information on cloud resource configuration.

- AWS STS - A method for granting short-term, dynamic tokens to provide users the necessary permissions to temporarily interact with your AWS account resources.

- OpenID Connect (OIDC) - A mechanism for cluster Operators to authenticate with AWS, assume the cluster roles through a trust policy, and obtain temporary credentials from AWS IAM STS to make the required API calls.

- Roles and policies - The roles and policies used by ROSA with HCP can be divided into account-wide roles and policies and Operator roles and policies.

The policies determine the allowed actions for each of the roles. See About IAM resources for more details about the individual roles and policies. See Required IAM roles and resources for more details on preparing these resources in your cluster.

The following account-wide roles are required:

- <prefix>-HCP-ROSA-Worker-Role

- <prefix>-HCP-ROSA-Support-Role

- <prefix>-HCP-ROSA-Installer-Role

The following account-wide AWS-managed policies are required:

- ROSAInstallerPolicy

- ROSAWorkerInstancePolicy

- ROSASRESupportPolicy

- ROSAIngressOperatorPolicy

- ROSAAmazonEBSCSIDriverOperatorPolicy

- ROSACloudNetworkConfigOperatorPolicy

- ROSAControlPlaneOperatorPolicy

- ROSAImageRegistryOperatorPolicy

- ROSAKMSProviderPolicy

- ROSAKubeControllerPolicy

- ROSAManageSubscription

- ROSANodePoolManagementPolicy

Note: Certain policies are used by the cluster Operator roles, listed below. The Operator roles are created in a second step because they depend on an existing cluster name and cannot be created at the same time as the account-wide roles.

The Operator roles are:

- <operator_role_prefix>-openshift-cluster-csi-drivers-ebs-cloud-credentials

- <operator_role_prefix>-openshift-cloud-network-config-controller-cloud-credentials

- <operator_role_prefix>-openshift-machine-api-aws-cloud-credentials

- <operator_role_prefix>-openshift-cloud-credential-operator-cloud-credentials

- <operator_role_prefix>-openshift-image-registry-installer-cloud-credentials

- <operator_role_prefix>-openshift-ingress-operator-cloud-credentials

Trust policies are created for each account-wide role and each Operator role.
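Because the role names follow fixed templates, you can check which roles still need to be created before you deploy a cluster. The following Python sketch expands the role name templates listed in this section for a given `<prefix>` and `<operator_role_prefix>`. The helper names and example prefixes are hypothetical, and the sketch only builds names and compares them with a set you supply; it does not call AWS.

```python
# Role name templates copied from the lists in this section.
ACCOUNT_ROLE_SUFFIXES = [
    "HCP-ROSA-Worker-Role",
    "HCP-ROSA-Support-Role",
    "HCP-ROSA-Installer-Role",
]

OPERATOR_ROLE_SUFFIXES = [
    "openshift-cluster-csi-drivers-ebs-cloud-credentials",
    "openshift-cloud-network-config-controller-cloud-credentials",
    "openshift-machine-api-aws-cloud-credentials",
    "openshift-cloud-credential-operator-cloud-credentials",
    "openshift-image-registry-installer-cloud-credentials",
    "openshift-ingress-operator-cloud-credentials",
]


def expected_role_names(prefix: str, operator_role_prefix: str) -> dict[str, list[str]]:
    """Hypothetical helper: return the expected role names, grouped by role type."""
    return {
        "account": [f"{prefix}-{suffix}" for suffix in ACCOUNT_ROLE_SUFFIXES],
        "operator": [f"{operator_role_prefix}-{suffix}" for suffix in OPERATOR_ROLE_SUFFIXES],
    }


def missing_roles(existing: set[str], prefix: str, operator_role_prefix: str) -> list[str]:
    """Hypothetical helper: list expected role names that are not in `existing`."""
    names = expected_role_names(prefix, operator_role_prefix)
    return [name for group in names.values() for name in group if name not in existing]


roles = expected_role_names("my-prefix", "my-cluster")
print(roles["account"][0])  # my-prefix-HCP-ROSA-Worker-Role
```

In practice, the set of existing names could come from a listing of the IAM roles in your account; how you obtain that listing is outside the scope of this sketch.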

3.4. Deploying a ROSA with HCP cluster

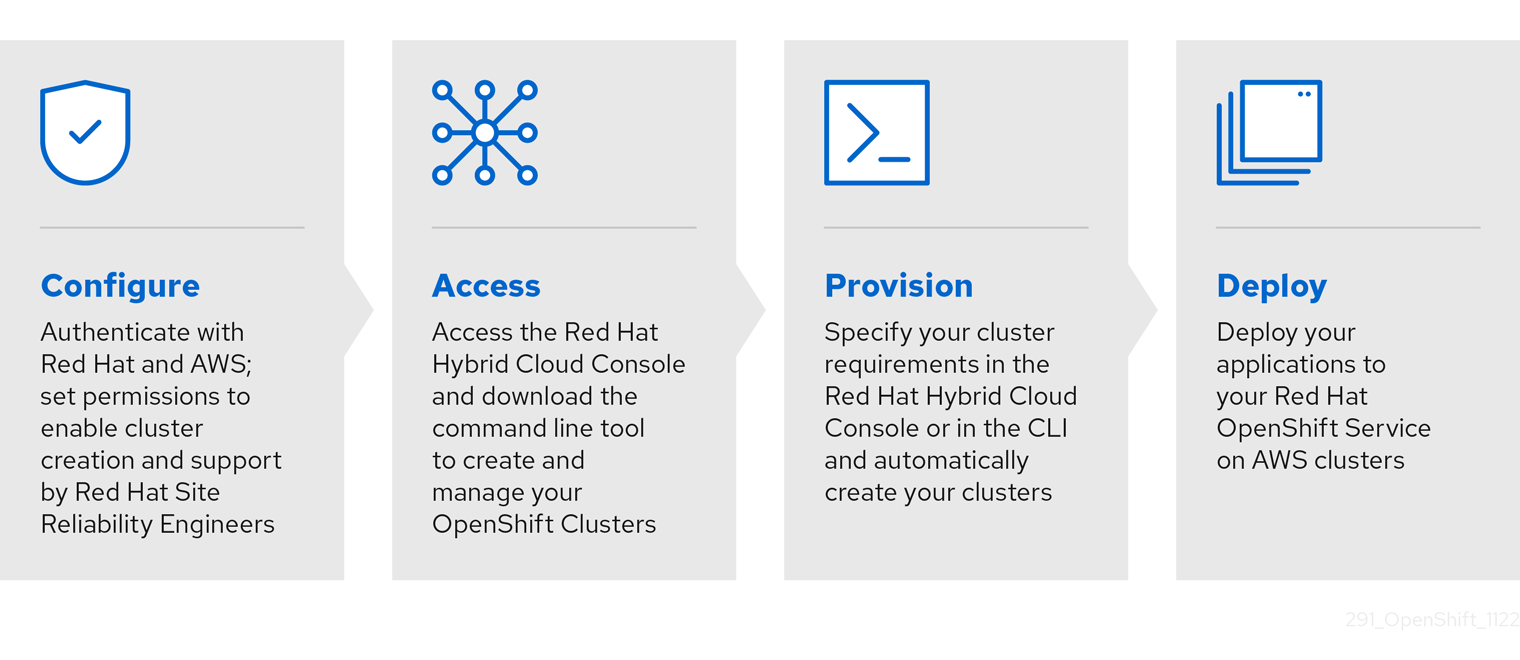

Deploying a ROSA with HCP cluster involves the following general steps:

- You create the account-wide roles.

- You create the Operator roles.

- Red Hat uses AWS IAM STS to send AWS the required permissions, which allow AWS to create and attach the corresponding AWS managed Operator policies.

- You create the OIDC provider.

- You create the cluster.

During the cluster creation process, the ROSA CLI creates the required JSON files for you and outputs the commands you need. If desired, the ROSA CLI can also run the commands for you.

You can let the ROSA CLI create the roles for you by using the --mode auto flag, or you can create them manually by using the --mode manual flag. For further details about deployment, see Creating a cluster with customizations.

3.5. ROSA with HCP workflow

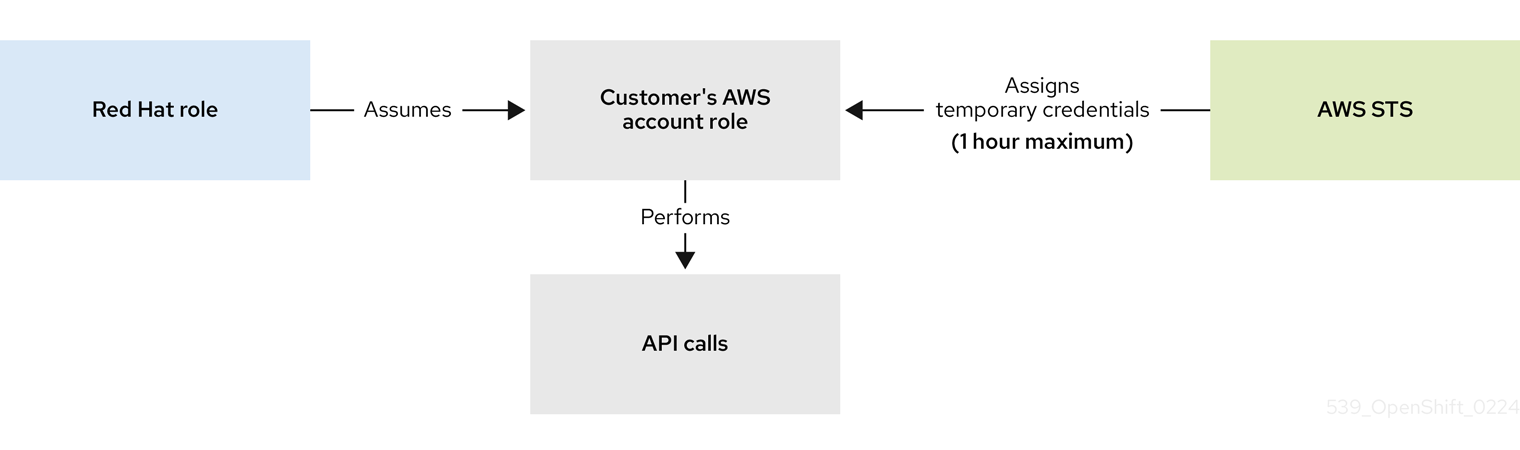

The user creates the required account-wide roles. During role creation, a trust policy, known as a cross-account trust policy, is created that allows a Red Hat-owned role to assume the roles. Trust policies are also created for the EC2 service, which allow workloads on EC2 instances to assume roles and obtain credentials. AWS assigns a corresponding permissions policy to each role.

After the account-wide roles and policies are created, the user can create a cluster. The Operator roles are assigned to the corresponding permissions policies that were created earlier and to a trust policy with an OIDC provider. The Operator roles differ from the account-wide roles in that they ultimately represent the in-cluster pods that need access to AWS resources. Because a user cannot attach IAM roles to pods, they must create a trust policy with an OIDC provider so that the Operator, and therefore the pods, can access the roles they need.

When a new role is needed, the workload currently using the Red Hat role assumes the role in the AWS account, obtains temporary credentials from AWS STS, and begins performing actions through API calls within the user’s AWS account, as permitted by the assumed role’s permissions policy. The credentials are temporary and have a maximum duration of one hour.
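The three kinds of trust policy described in this workflow differ mainly in their `Principal` and `Action` elements. The following Python sketch builds each shape as an IAM policy document by using standard IAM policy grammar. All account IDs, ARNs, issuer URLs, and service account names are placeholders, and the policies that ROSA actually generates can contain additional elements, such as extra conditions.

```python
import json

# Hypothetical sketch of the three trust policy shapes; all values are placeholders.


def _policy(statement: dict) -> dict:
    """Wrap a single statement in an IAM policy document."""
    return {"Version": "2012-10-17", "Statement": [statement]}


def cross_account_trust(red_hat_role_arn: str) -> dict:
    """Cross-account trust: allow a Red Hat-owned role to assume this account role."""
    return _policy({
        "Effect": "Allow",
        "Principal": {"AWS": red_hat_role_arn},
        "Action": "sts:AssumeRole",
    })


def ec2_trust() -> dict:
    """Allow workloads on EC2 instances to assume the role."""
    return _policy({
        "Effect": "Allow",
        "Principal": {"Service": "ec2.amazonaws.com"},
        "Action": "sts:AssumeRole",
    })


def oidc_trust(account_id: str, issuer: str, namespace: str, service_account: str) -> dict:
    """Allow one in-cluster service account, authenticated through the OIDC provider,
    to assume an Operator role. `issuer` is the provider URL without "https://"."""
    return _policy({
        "Effect": "Allow",
        "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"},
        "Action": "sts:AssumeRoleWithWebIdentity",
        "Condition": {
            "StringEquals": {
                f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}"
            }
        },
    })


print(json.dumps(oidc_trust("111122223333", "oidc.example.com/abc123",
                            "openshift-ingress-operator", "ingress-operator"), indent=2))
```

The `Condition` block in the OIDC shape is what restricts an Operator role to a single service account; without it, any token from the same provider could assume the role.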

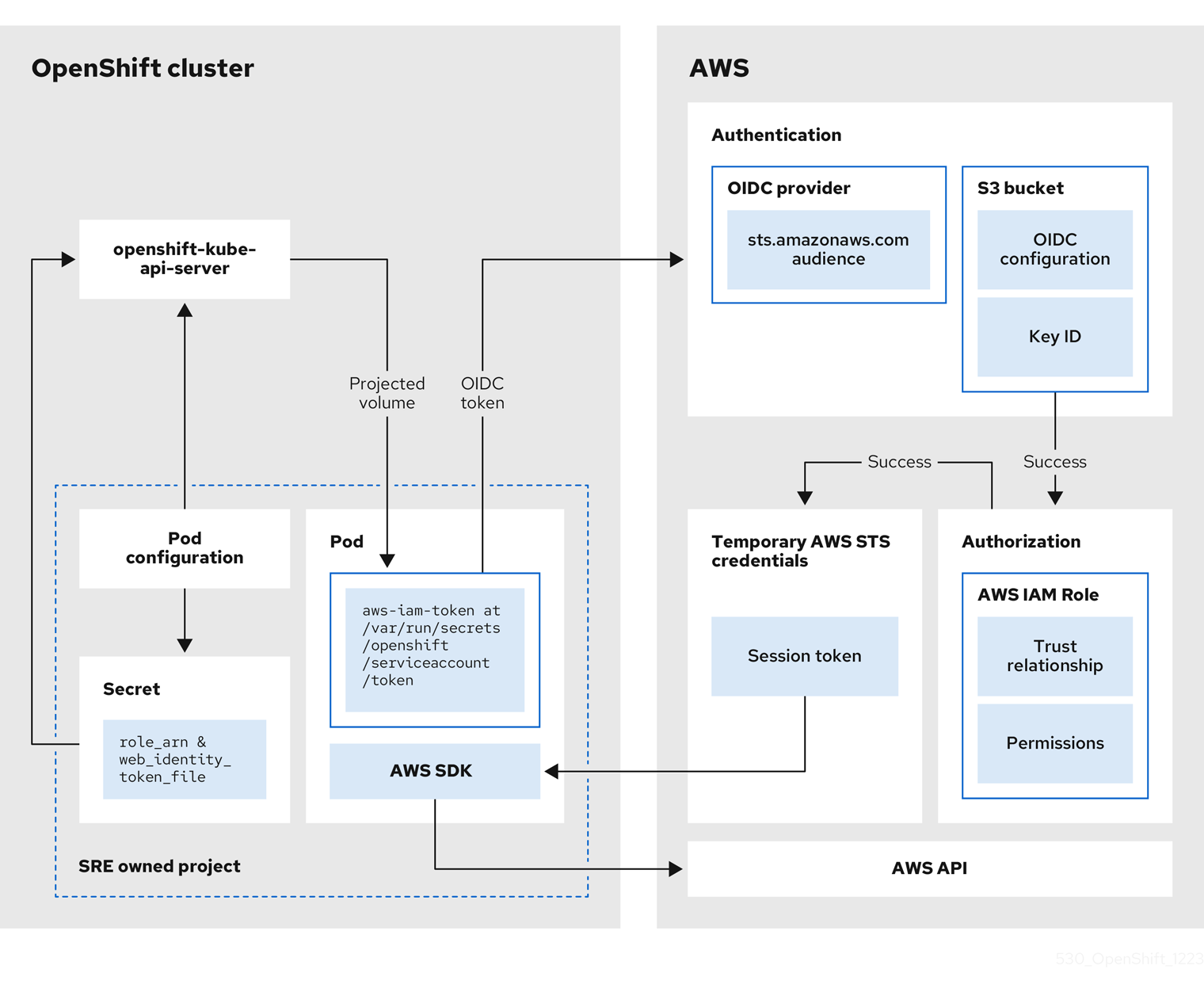

Operators use the following process to obtain the credentials they need to perform their tasks. Each Operator is assigned an Operator role, a permissions policy, and a trust policy with an OIDC provider. The Operator assumes the role by passing the role and a signed JSON web token, read from the token file (web_identity_token_file), to AWS STS. AWS validates the token signature with the OIDC provider's public key, which is created during cluster creation and stored in an S3 bucket. AWS then confirms that the subject in the signed token matches the subject allowed by the role trust policy, which ensures that the Operator can obtain only the allowed role. Finally, AWS STS returns temporary credentials to the Operator so that the Operator can make AWS API calls. For a visual representation, see the preceding diagram.
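The subject check in this process compares the `sub` claim of the service account token with the condition in the role trust policy. The following Python sketch illustrates only that comparison. It is a hypothetical simplification: it decodes the token payload without verifying the signature, a step AWS performs with the OIDC provider's public key, and it assumes the standard Kubernetes subject format `system:serviceaccount:<namespace>:<name>`. Never use unverified claims for real authorization decisions.

```python
import base64
import json

# Illustration only: signature verification is deliberately skipped here.


def b64url_decode(segment: str) -> bytes:
    """Decode base64url text, restoring the padding that JWTs omit."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(data: dict) -> str:
    """Encode a JSON object as unpadded base64url text (used to build a demo token)."""
    return base64.urlsafe_b64encode(json.dumps(data).encode()).rstrip(b"=").decode()


def token_claims(jwt: str) -> dict:
    """Return the unverified payload of a compact JWT (header.payload.signature)."""
    _header, payload, _signature = jwt.split(".")
    return json.loads(b64url_decode(payload))


def subject_allowed(jwt: str, trust_policy: dict, issuer: str) -> bool:
    """True if the token's `sub` matches a StringEquals condition in an Allow statement."""
    sub = token_claims(jwt).get("sub")
    if sub is None:
        return False
    for statement in trust_policy.get("Statement", []):
        if statement.get("Effect") != "Allow":
            continue
        allowed = statement.get("Condition", {}).get("StringEquals", {}).get(f"{issuer}:sub")
        # IAM condition values can be a single string or a list of strings.
        values = allowed if isinstance(allowed, list) else [allowed]
        if sub in values:
            return True
    return False


# Hypothetical unsigned demo token with a placeholder signature segment.
issuer = "oidc.example.com/abc123"
subject = "system:serviceaccount:openshift-ingress-operator:ingress-operator"
token = ".".join([
    b64url_encode({"alg": "RS256"}),
    b64url_encode({"iss": f"https://{issuer}", "sub": subject}),
    "signature",
])
policy = {"Statement": [{
    "Effect": "Allow",
    "Condition": {"StringEquals": {f"{issuer}:sub": subject}},
}]}
print(subject_allowed(token, policy, issuer))  # True
```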

Chapter 4. Architecture models

Red Hat OpenShift Service on AWS has the following cluster topology:

- Hosted control plane (HCP) - The control plane is hosted in a Red Hat account and the worker nodes are deployed in the customer’s AWS account.

4.1. Comparing Red Hat OpenShift Service on AWS and Red Hat OpenShift Service on AWS (classic architecture)

| | Hosted Control Plane (HCP) | Classic |
|---|---|---|
| Control plane hosting | Control plane components, such as the API server and etcd database, are hosted in a Red Hat-owned AWS account. | Control plane components, such as the API server and etcd database, are hosted in a customer-owned AWS account. |
| Virtual Private Cloud (VPC) | Worker nodes communicate with the control plane over AWS PrivateLink. | Worker nodes and control plane nodes are deployed in the customer’s VPC. |
| Multi-zone deployment | The control plane is always deployed across multiple availability zones (AZs). | The control plane can be deployed within a single AZ or across multiple AZs. |
| Machine pools | Each machine pool is deployed in a single AZ (private subnet). | Machine pools can be deployed in a single AZ or across multiple AZs. |
| Infrastructure nodes | Does not use any dedicated infrastructure nodes to host platform components, such as ingress and image registry. | Uses 2 (single-AZ) or 3 (multi-AZ) dedicated infrastructure nodes to host platform components. |
| OpenShift capabilities | Platform monitoring, image registry, and the ingress controller are deployed on the worker nodes. | Platform monitoring, image registry, and the ingress controller are deployed on the dedicated infrastructure nodes. |
| Cluster upgrades | The control plane and each machine pool can be upgraded separately. | The entire cluster must be upgraded at the same time. |
| Minimum EC2 footprint | 2 EC2 instances are needed to create a cluster. | 7 (single-AZ) or 9 (multi-AZ) EC2 instances are needed to create a cluster. |
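The minimum EC2 footprint row can be broken down by node role. The following Python sketch reproduces the totals from the table. The per-role split for classic clusters (three control plane nodes, two or three infrastructure nodes, and two or three worker nodes) is stated here as an assumption that matches those totals; the HCP control plane count is zero because that control plane runs in a Red Hat-owned account.

```python
# (control plane nodes, infrastructure nodes, worker nodes) in the customer account.
# The classic breakdown is an assumption consistent with the table's totals.
MIN_FOOTPRINT = {
    ("hcp", "any"): (0, 0, 2),
    ("classic", "single-az"): (3, 2, 2),
    ("classic", "multi-az"): (3, 3, 3),
}


def min_ec2_instances(architecture: str, zones: str = "any") -> int:
    """Total EC2 instances needed in the customer account to create a cluster."""
    key = (architecture, "any" if architecture == "hcp" else zones)
    return sum(MIN_FOOTPRINT[key])


for arch, zones in [("hcp", "any"), ("classic", "single-az"), ("classic", "multi-az")]:
    print(arch, zones, min_ec2_instances(arch, zones))
```

The difference between the two architectures comes almost entirely from the control plane and infrastructure nodes that HCP does not place in your account.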

Additional resources

4.2. Red Hat OpenShift Service on AWS with HCP architecture

Red Hat OpenShift Service on AWS hosts a highly available, single-tenant OpenShift control plane. The hosted control plane is deployed across 3 availability zones with 2 API server instances and 3 etcd instances.

You can create a Red Hat OpenShift Service on AWS cluster with an internet-facing API server, which is considered a “public” cluster, or without one, which is considered a “private” cluster. Private API servers are accessible only from your VPC subnets. Regardless of API privacy, you access the hosted control plane through an AWS PrivateLink endpoint.

The worker nodes are deployed in your AWS account and run on your VPC private subnets. You can add private subnets from one or more availability zones to ensure high availability. Worker nodes are shared by OpenShift components and applications. OpenShift components such as the ingress controller, image registry, and monitoring are deployed on the worker nodes hosted in your VPC.

Figure 4.1. Red Hat OpenShift Service on AWS architecture

4.2.1. Red Hat OpenShift Service on AWS architecture on public and private networks

With Red Hat OpenShift Service on AWS, you can create your clusters on public or private networks. The following images depict the architecture of both public and private networks.

Figure 4.2. Red Hat OpenShift Service on AWS deployed on a public network

Figure 4.3. Red Hat OpenShift Service on AWS deployed on a private network

Chapter 5. Policies and service definition

5.1. About availability for Red Hat OpenShift Service on AWS

Availability and disaster avoidance are extremely important aspects of any application platform. Although Red Hat OpenShift Service on AWS provides many protections against failures at several levels, customer-deployed applications must be appropriately configured for high availability. To account for outages that might occur with cloud providers, additional options are available such as deploying a cluster across multiple availability zones and maintaining multiple clusters with failover mechanisms.

5.1.1. Potential points of failure

Red Hat OpenShift Service on AWS (ROSA) provides many features and options for protecting your workloads against downtime, but applications must be architected appropriately to take advantage of these features.

With Red Hat site reliability engineering (SRE) support and the option to deploy machine pools into more than one availability zone, ROSA can further protect you against many common Kubernetes issues, but a container or infrastructure can still fail in several ways. By understanding potential points of failure, you can assess the risks and architect both your applications and your clusters to be as resilient as necessary at each level.

Worker node longevity is not guaranteed: worker nodes can be replaced at any time as part of the normal operation and management of OpenShift. For more details about the node lifecycle, see the additional resources.

An outage can occur at several different levels of infrastructure and cluster components.

5.1.1.1. Container or pod failure

By design, pods are meant to exist for a short time. Appropriately scaling services so that multiple instances of your application pods are running can protect against issues with any individual pod or container. The OpenShift node scheduler can also make sure these workloads are distributed across different worker nodes to further improve resiliency.

When accounting for possible pod failures, it is also important to understand how storage is attached to your applications. Single persistent volumes attached to single pods cannot leverage the full benefits of pod scaling, whereas replicated databases, database services, or shared storage can.

To avoid disrupting your applications during planned maintenance, such as upgrades, define a Pod Disruption Budget. Pod Disruption Budgets are part of the Kubernetes API and can be managed with oc commands like other object types. They let you specify safety constraints on pods during operations, such as draining a node for maintenance.
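A Pod Disruption Budget can be expressed as a small manifest. The following Python sketch builds a minimal `policy/v1` `PodDisruptionBudget` as a dictionary and prints it as JSON. The name, namespace, label, and `minAvailable` value are hypothetical placeholders that you would replace with your own.

```python
import json


def pod_disruption_budget(name: str, namespace: str, app_label: str, min_available: int) -> dict:
    """Build a minimal PodDisruptionBudget manifest (placeholder values)."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Voluntary evictions, such as those during a node drain, are refused
            # if they would leave fewer than this many matching pods available.
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


pdb = pod_disruption_budget("my-app-pdb", "my-namespace", "my-app", 2)
print(json.dumps(pdb, indent=2))
```

Because `oc` accepts JSON as well as YAML manifests, you could save the printed output to a file and create the object with `oc apply -f <file>`. Keep `minAvailable` lower than the number of replicas; otherwise, a node drain cannot evict any of the matching pods.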

5.1.1.2. Worker node failure

Worker nodes are the virtual machines (VMs) that contain your application pods. By default, a ROSA cluster has a minimum of two worker nodes. If a worker node fails, pods are relocated to functioning worker nodes, as long as there is enough capacity, until any issue with the existing node is resolved or the node is replaced. More worker nodes mean more protection against single-node outages and help ensure that the cluster has enough capacity for rescheduled pods in the event of a node failure.
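One way to reason about capacity for rescheduled pods is an N-1 check: the cluster should still fit all pod requests after losing its largest worker node. The following Python sketch is a hypothetical, simplified model that compares aggregate CPU requests, in millicores, with aggregate allocatable capacity. Real scheduling also considers memory, per-node fit, affinity rules, and other constraints, so passing this check is necessary but not sufficient.

```python
# Simplified N-1 check using aggregate CPU only (values in millicores are illustrative).


def survives_single_node_failure(node_allocatable: list[int], total_requests: int) -> bool:
    """True if total pod requests still fit after losing the largest node."""
    if len(node_allocatable) < 2:
        return False  # a single node leaves nowhere to reschedule pods
    remaining = sum(node_allocatable) - max(node_allocatable)
    return total_requests <= remaining


print(survives_single_node_failure([4000, 4000], 3500))        # True
print(survives_single_node_failure([4000, 4000], 5000))        # False
print(survives_single_node_failure([4000, 4000, 4000], 5000))  # True
```

The last two calls show the effect described above: the same 5000m of requests does not survive a node failure on two nodes but does on three.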

When accounting for possible node failures, it is also important to understand how storage is affected. EFS volumes are not affected by node failure. However, EBS volumes are not accessible if they are connected to a node that fails.

5.1.1.3. Zone failure

A zone failure from AWS affects all virtual components that are specific to a single availability zone, such as worker nodes, block or shared storage, and load balancers. To protect against a zone failure, ROSA provides the option to deploy machine pools across three availability zones. In the event of an outage, existing stateless workloads are then redistributed to unaffected zones, as long as there is enough capacity.
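Spreading the replicas of a stateless workload across zones helps ensure that a single zone failure does not take down every replica. The following Python sketch builds a Deployment spec fragment with a Kubernetes `topologySpreadConstraints` entry keyed on the well-known `topology.kubernetes.io/zone` node label. The labels, image, and replica count are hypothetical, and the constraint only has an effect if your machine pools place worker nodes in more than one zone.

```python
import json


def zone_spread_constraint(app_label: str, max_skew: int = 1) -> dict:
    """Ask the scheduler to keep replica counts per zone within `max_skew`."""
    return {
        "maxSkew": max_skew,
        # Well-known node label that holds the node's availability zone.
        "topologyKey": "topology.kubernetes.io/zone",
        # ScheduleAnyway treats the spread as a preference, so pods can still be
        # scheduled when a zone is unavailable; DoNotSchedule would enforce it strictly.
        "whenUnsatisfiable": "ScheduleAnyway",
        "labelSelector": {"matchLabels": {"app": app_label}},
    }


# Hypothetical Deployment spec fragment.
deployment_spec = {
    "replicas": 3,
    "selector": {"matchLabels": {"app": "my-app"}},
    "template": {
        "metadata": {"labels": {"app": "my-app"}},
        "spec": {
            "topologySpreadConstraints": [zone_spread_constraint("my-app")],
            "containers": [{"name": "my-app", "image": "registry.example.com/my-app:latest"}],
        },
    },
}
print(json.dumps(deployment_spec, indent=2))
```

`ScheduleAnyway` is used here so that replicas can still be rescheduled onto the surviving zones during an outage; `DoNotSchedule` keeps the spread strict but can leave pods pending while a zone is down.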

5.1.1.4. Storage failure

If you have deployed a stateful application, then storage is a critical component and must be accounted for when thinking about high availability. A single block storage PV is unable to withstand outages even at the pod level. The best ways to maintain availability of storage are to use replicated storage solutions, shared storage that is unaffected by outages, or a database service that is independent of the cluster.

Additional resources

5.2. Overview of responsibilities for Red Hat OpenShift Service on AWS

This documentation outlines Red Hat, Amazon Web Services (AWS), and customer responsibilities for the Red Hat OpenShift Service on AWS managed service.

5.2.1. Shared responsibilities for Red Hat OpenShift Service on AWS

While Red Hat and Amazon Web Services (AWS) manage the Red Hat OpenShift Service on AWS services, the customer shares certain responsibilities. The Red Hat OpenShift Service on AWS services are accessed remotely, hosted on public cloud resources, created in customer-owned AWS accounts, and have underlying platform and data security that is owned by Red Hat.

If the cluster-admin role is added to a user, see the responsibilities and exclusion notes in the Red Hat Enterprise Agreement Appendix 4 (Online Subscription Services).

| Resource | Incident and operations management | Change management | Access and identity authorization | Security and regulation compliance | Disaster recovery |
|---|---|---|---|---|---|
| Customer data | Customer | Customer | Customer | Customer | Customer |
| Customer applications | Customer | Customer | Customer | Customer | Customer |
| Developer services | Customer | Customer | Customer | Customer | Customer |
| Platform monitoring | Red Hat | Red Hat | Red Hat | Red Hat | Red Hat |
| Logging | Red Hat | Red Hat and Customer | Red Hat and Customer | Red Hat and Customer | Red Hat |
| Application networking | Red Hat and Customer | Red Hat and Customer | Red Hat and Customer | Red Hat | Red Hat |
| Cluster networking | Red Hat [1] | Red Hat and Customer [2] | Red Hat and Customer | Red Hat [1] | Red Hat [1] |
| Virtual networking management | Red Hat and Customer | Red Hat and Customer | Red Hat and Customer | Red Hat and Customer | Red Hat and Customer |
| Virtual compute management (control plane, infrastructure and worker nodes) | Red Hat | Red Hat | Red Hat | Red Hat | Red Hat |
| Cluster version | Red Hat | Red Hat and Customer | Red Hat | Red Hat | Red Hat |
| Capacity management | Red Hat | Red Hat and Customer | Red Hat | Red Hat | Red Hat |
| Virtual storage management | Red Hat | Red Hat | Red Hat | Red Hat | Red Hat |
| AWS software (public AWS services) | AWS | AWS | AWS | AWS | AWS |
| Hardware/AWS global infrastructure | AWS | AWS | AWS | AWS | AWS |

[1] If the customer chooses to use their own CNI plugin, the responsibility shifts to the customer.

[2] The customer must configure their firewall to grant access to the required OpenShift and AWS domains and ports before the cluster is provisioned. For more information, see "AWS firewall prerequisites".

5.2.3. Review and action cluster notifications

Cluster notifications (sometimes referred to as service logs) are messages about the status, health, or performance of your cluster.

Cluster notifications are the primary way that Red Hat Site Reliability Engineering (SRE) communicates with you about the health of your managed cluster. Red Hat SRE may also use cluster notifications to prompt you to perform an action in order to resolve or prevent an issue with your cluster.

Cluster owners and administrators must regularly review and action cluster notifications to ensure clusters remain healthy and supported.

You can view cluster notifications in the Red Hat Hybrid Cloud Console, in the Cluster history tab for your cluster. By default, only the cluster owner receives cluster notifications as emails. If other users need to receive cluster notification emails, add each user as a notification contact for your cluster.

5.2.3.1. Cluster notification policy

Cluster notifications are designed to keep you informed about the health of your cluster and high impact events that affect it.

Most cluster notifications are generated and sent automatically to ensure that you are immediately informed of problems or important changes to the state of your cluster.

In certain situations, Red Hat Site Reliability Engineering (SRE) creates and sends cluster notifications to provide additional context and guidance for a complex issue.

Cluster notifications are not sent for low-impact events, low-risk security updates, routine operations and maintenance, or minor, transient issues that are quickly resolved by Red Hat SRE.

Red Hat services automatically send notifications when:

- Remote health monitoring or environment verification checks detect an issue in your cluster, for example, when a worker node has low disk space.

- Significant cluster life cycle events occur, for example, when scheduled maintenance or upgrades begin, or cluster operations are impacted by an event, but do not require customer intervention.

- Significant cluster management changes occur, for example, when cluster ownership or administrative control is transferred from one user to another.

- Your cluster subscription is changed or updated, for example, when Red Hat makes updates to subscription terms or features available to your cluster.

SRE creates and sends notifications when:

- An incident results in a degradation or outage that impacts your cluster’s availability or performance, for example, your cloud provider has a regional outage. SRE sends subsequent notifications to inform you of incident resolution progress, and when the incident is resolved.

- A security vulnerability, security breach, or unusual activity is detected on your cluster.

- Red Hat detects that changes you have made are creating or may result in cluster instability.

- Red Hat detects that your workloads are causing performance degradation or instability in your cluster.

5.2.4. Incident and operations management

Red Hat is responsible for overseeing the service components required for default platform networking. AWS is responsible for protecting the hardware infrastructure that runs all of the services offered in the AWS Cloud. The customer is responsible for incident and operations management of customer application data and any custom networking the customer has configured for the cluster network or virtual network.

| Resource | Service responsibilities | Customer responsibilities |
|---|---|---|
| Application networking | Red Hat | |
| Cluster networking | Red Hat | |
| Virtual networking management | Red Hat | |
| Virtual storage management | Red Hat | |
| Platform monitoring | Red Hat | |
| Incident management | Red Hat | |
| Infrastructure and data resiliency | Red Hat | |
| Cluster capacity | Red Hat | |
| AWS software (public AWS services) | AWS | |
| Hardware/AWS global infrastructure | AWS | |

5.2.4.1. Platform monitoring

Platform audit logs are securely forwarded to a centralized security information and event management (SIEM) system, where they may trigger configured alerts to the Red Hat SRE team and are also subject to manual review. Audit logs are retained in the SIEM system for one year. Audit logs for a given cluster are not deleted at the time the cluster is deleted.

Red Hat monitors the cluster using monitoring and alerting systems that run on Red Hat managed infrastructure and operate independently of the cluster. Customers retain full access to the Cluster Monitoring Operator stack for their own in-cluster monitoring, alerting, and observability needs.

5.2.4.2. Incident management

An incident is an event that results in a degradation or outage of one or more Red Hat services.

An incident can be raised by a customer or a Customer Experience and Engagement (CEE) member through a support case, directly by the centralized monitoring and alerting system, or directly by a member of the SRE team.

Each incident is categorized by severity, depending on its impact on the service and the customer.

When managing a new incident, Red Hat uses the following general workflow:

- An SRE first responder is alerted to a new incident and begins an initial investigation.

- After the initial investigation, the incident is assigned an incident lead, who coordinates the recovery efforts.

- The incident lead manages all communication and coordination around recovery, including any relevant notifications and support case updates.

- When the incident is resolved, a brief summary of the incident and its resolution is provided in the customer-initiated support ticket. This summary helps customers understand the incident and its resolution in more detail.

If customers require more information in addition to what is provided in the support ticket, they can request the following workflow:

- The customer must make a request for the additional information within 5 business days of the incident resolution.

- Depending on the severity of the incident, Red Hat may provide customers with a root cause summary or a root cause analysis (RCA) in the support ticket. Red Hat provides a root cause summary within 7 business days, or a root cause analysis within 30 business days, of the incident resolution.
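The deadlines in this workflow are counted in business days from the incident resolution. The following Python sketch computes them under a simplifying assumption: business days are Monday through Friday, and public holidays are ignored. The function name and example dates are hypothetical.

```python
from datetime import date, timedelta

# Assumption: business days are Monday to Friday; public holidays are ignored.


def add_business_days(start: date, days: int) -> date:
    """Return the date that is `days` business days after `start`."""
    current = start
    added = 0
    while added < days:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            added += 1
    return current


resolved = date(2024, 3, 1)  # hypothetical resolution date (a Friday)
print(add_business_days(resolved, 5))   # 2024-03-08: last day to request more information
print(add_business_days(resolved, 7))   # 2024-03-12: root cause summary due
print(add_business_days(resolved, 30))  # 2024-04-12: root cause analysis due
```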

Red Hat also assists with customer incidents raised through support cases. Red Hat can assist with activities including but not limited to:

- Forensic gathering, including isolating virtual compute

- Guiding compute image collection

- Providing collected audit logs

5.2.4.3. Cluster capacity

The impact of a cluster upgrade on capacity is evaluated as part of the upgrade testing process to ensure that capacity is not negatively impacted by new additions to the cluster. During a cluster upgrade, additional worker nodes are added to make sure that total cluster capacity is maintained during the upgrade process.

Capacity evaluations by the Red Hat SRE staff also happen in response to alerts from the cluster, after usage thresholds are exceeded for a certain period of time. Such alerts can also result in a notification to the customer.

5.2.5. Change management

This section describes the policies about how cluster and configuration changes, patches, and releases are managed.

Red Hat is responsible for enabling changes to the cluster infrastructure and services that the customer will control, as well as maintaining versions for the control plane nodes, infrastructure nodes and services, and worker nodes. AWS is responsible for protecting the hardware infrastructure that runs all of the services offered in the AWS Cloud. The customer is responsible for initiating infrastructure change requests and installing and maintaining optional services and networking configurations on the cluster, as well as all changes to customer data and customer applications.

5.2.5.1. Customer-initiated changes

You can initiate changes using self-service capabilities such as cluster deployment, worker node scaling, or cluster deletion.

Change history is captured in the Cluster History section in the OpenShift Cluster Manager Overview tab, and is available for you to view. The change history includes, but is not limited to, logs from the following changes:

- Adding or removing identity providers

- Adding or removing users to or from the dedicated-admins group

- Scaling the cluster compute nodes

- Scaling the cluster load balancer

- Scaling the cluster persistent storage

- Upgrading the cluster

You can implement a maintenance exclusion by avoiding the following changes in OpenShift Cluster Manager:

- Deleting a cluster

- Adding, modifying, or removing identity providers

- Adding, modifying, or removing a user from an elevated group

- Installing or removing add-ons

- Modifying cluster networking configurations

- Adding, modifying, or removing machine pools

- Enabling or disabling user workload monitoring

- Initiating an upgrade

To enforce the maintenance exclusion, ensure that machine pool autoscaling and automatic upgrade policies are disabled. After the maintenance exclusion is lifted, re-enable machine pool autoscaling or automatic upgrade policies as needed.
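Before starting a maintenance exclusion, it can help to list the settings that would still change the cluster on their own. The following Python sketch is purely illustrative: `MachinePool`, `ClusterSettings`, and `exclusion_blockers` are hypothetical models, not OpenShift Cluster Manager or rosa CLI objects, and a real check would read these values from your actual cluster configuration.

```python
from dataclasses import dataclass, field


@dataclass
class MachinePool:
    # Hypothetical model of a machine pool; not an OpenShift Cluster Manager API type.
    name: str
    autoscaling_enabled: bool


@dataclass
class ClusterSettings:
    # Hypothetical model of the settings that matter for a maintenance exclusion.
    machine_pools: list = field(default_factory=list)
    automatic_upgrade_policy: bool = False


def exclusion_blockers(settings: ClusterSettings) -> list:
    """List the settings that could still change the cluster during an exclusion."""
    blockers = [
        f"machine pool '{pool.name}' has autoscaling enabled"
        for pool in settings.machine_pools
        if pool.autoscaling_enabled
    ]
    if settings.automatic_upgrade_policy:
        blockers.append("an automatic (recurring) upgrade policy is active")
    return blockers
```

An empty list means neither autoscaling nor an automatic upgrade policy would act during the exclusion window.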

5.2.5.2. Red Hat-initiated changes

Red Hat site reliability engineering (SRE) manages the infrastructure, code, and configuration of Red Hat OpenShift Service on AWS using a GitOps workflow and fully automated CI/CD pipelines. This process ensures that Red Hat can safely introduce service improvements on a continuous basis without negatively impacting customers.

Every proposed change undergoes a series of automated verifications immediately upon check-in. Changes are then deployed to a staging environment where they undergo automated integration testing. Finally, changes are deployed to the production environment. Each step is fully automated.

An authorized Red Hat SRE reviewer must approve advancement to each step. The reviewer cannot be the same individual who proposed the change. All changes and approvals are fully auditable as part of the GitOps workflow.

Some changes are released to production incrementally, using feature flags to control availability of new features to specified clusters or customers, such as private or public previews.
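The two-person rule for promotions can be expressed as a small gate. This Python sketch models only the review step described above, using hypothetical stage names and types. It does not represent Red Hat's actual GitOps tooling, and the automated verifications and tests that run at each stage are omitted.

```python
from dataclasses import dataclass, field

# Illustrative stage names mirroring the three steps described above.
STAGES = ["automated-verification", "staging", "production"]


@dataclass
class Change:
    author: str
    stage_index: int = 0
    audit_log: list = field(default_factory=list)


def promote(change: Change, reviewer: str, authorized_reviewers: set) -> str:
    """Advance a change by one stage, enforcing the separate-reviewer rule."""
    if change.stage_index >= len(STAGES) - 1:
        raise ValueError("change is already in production")
    if reviewer not in authorized_reviewers:
        raise PermissionError(f"{reviewer} is not an authorized reviewer")
    if reviewer == change.author:
        raise PermissionError("the reviewer cannot be the author of the change")
    change.stage_index += 1
    stage = STAGES[change.stage_index]
    # Record every approval so the promotion history can be audited later.
    change.audit_log.append((reviewer, stage))
    return stage
```

The audit log plays the role that the Git history plays in a GitOps workflow: each approval is attributable to a named reviewer.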

5.2.5.3. Patch management

OpenShift Container Platform software and the underlying immutable Red Hat Enterprise Linux CoreOS (RHCOS) operating system image are patched for bugs and vulnerabilities in regular z-stream upgrades. Read more about RHCOS architecture in the OpenShift Container Platform documentation.

5.2.5.4. Release management

Red Hat does not automatically upgrade your clusters. You can use the OpenShift Cluster Manager web console to schedule cluster upgrades at regular intervals (recurring upgrade) or just once (individual upgrade). Red Hat might forcefully upgrade a cluster to a new z-stream version only if the cluster is affected by a critical-impact CVE.

Because the required permissions can change between y-stream releases, the AWS managed policies are automatically updated before an upgrade can be performed.

You can review the history of all cluster upgrade events in the OpenShift Cluster Manager web console.

5.2.5.5. Service and Customer resource responsibilities

The following table defines the responsibilities for cluster resources.

| Resource | Service responsibilities | Customer responsibilities |
|---|---|---|
| Logging | Red Hat | |
| Application networking | Red Hat | |
| Cluster networking | Red Hat | |
| Virtual networking management | Red Hat | |
| Virtual compute management | Red Hat | |
| Cluster version | Red Hat | |
| Capacity management | Red Hat | |
| Virtual storage management | Red Hat | |
| AWS software (public AWS services) | AWS. Compute: Provide the Amazon EC2 service, used for ROSA-relevant resources. Storage: Provide Amazon EBS, used by ROSA to provision local node storage and persistent volume storage for the cluster. Storage: Provide Amazon S3, used for the ROSA built-in image registry. Networking: Provide the following AWS Cloud services, used by ROSA to satisfy virtual networking infrastructure needs: Networking: Provide the following AWS services, which customers can optionally integrate with ROSA: | |
| Hardware/AWS global infrastructure | AWS | |

- For more information on authentication flow for AWS STS, see Authentication flow for AWS STS.

- For more information on pruning images, see Automatically pruning Images.

5.2.6. Security and regulation compliance

The following table outlines the responsibilities for security and regulation compliance:

| Resource | Service responsibilities | Customer responsibilities |
|---|---|---|
| Logging | Red Hat | |
| Virtual networking management | Red Hat | |
| Virtual storage management | Red Hat | |
| Virtual compute management | Red Hat | |
| AWS software (public AWS services) | AWS. Compute: Secure Amazon EC2, used for ROSA control plane and worker nodes. For more information, see Infrastructure security in Amazon EC2 in the Amazon EC2 User Guide. Storage: Secure Amazon Elastic Block Store (EBS), used for ROSA control plane and worker node volumes, as well as Kubernetes persistent volumes. For more information, see Data protection in Amazon EC2 in the Amazon EC2 User Guide. Storage: Provide AWS KMS, which ROSA uses to encrypt control plane volumes, worker node volumes, and persistent volumes. For more information, see Amazon EBS encryption in the Amazon EC2 User Guide. Storage: Secure Amazon S3, used for the ROSA service’s built-in container image registry. For more information, see Amazon S3 security in the S3 User Guide. Networking: Provide security capabilities and services to increase privacy and control network access on AWS global infrastructure, including network firewalls built into Amazon VPC, private or dedicated network connections, and automatic encryption of all traffic on the AWS global and regional networks between AWS secured facilities. For more information, see the AWS Shared Responsibility Model and Infrastructure security in the Introduction to AWS Security whitepaper. | |
| Hardware/AWS global infrastructure | AWS | |

5.2.7. Disaster recovery

Disaster recovery includes data and configuration backup, replicating data and configuration to the disaster recovery environment, and failover on disaster events.

Red Hat OpenShift Service on AWS (ROSA) provides disaster recovery for failures that occur at the pod, node, and availability zone levels.

All disaster recovery requires that the customer use best practices for deploying highly available applications, storage, and cluster architecture, such as multiple machine pools across multiple availability zones, to account for the level of desired availability.

One cluster with a single machine pool will not provide disaster avoidance or recovery in the event of an availability zone or region outage. Multiple clusters with single machine pools with customer-maintained failover can account for outages at the zone or at the regional level.

One cluster with multiple machine pools across multiple availability zones will not provide disaster avoidance or recovery in the event of a full region outage. Multiple clusters in several regions, each with multiple machine pools in more than one availability zone and with customer-maintained failover, can account for outages at the regional level.
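The placement rules above can be summarized as a function that reports which outage levels a topology can absorb. This is a simplified Python sketch under stated assumptions: `ClusterPlacement` and `tolerated_outages` are hypothetical, pod- and node-level failures (which ROSA handles) are not modeled, and customer-maintained failover is assumed to work correctly whenever it is enabled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterPlacement:
    # Hypothetical description of where one cluster's machine pools run.
    region: str
    zones: frozenset  # availability zones that host this cluster's machine pools


def tolerated_outages(clusters: list, customer_failover: bool) -> set:
    """Return the outage levels ("zone", "region") that the topology can absorb."""
    tolerated = set()
    # A single cluster whose machine pools span two or more zones survives a zone loss.
    if any(len(c.zones) >= 2 for c in clusters):
        tolerated.add("zone")
    if customer_failover and len(clusters) >= 2:
        all_zones = {(c.region, zone) for c in clusters for zone in c.zones}
        if len(all_zones) >= 2:
            # Failing over to a cluster in another zone covers a zone outage.
            tolerated.add("zone")
        if len({c.region for c in clusters}) >= 2:
            # Only clusters in different regions can cover a regional outage.
            tolerated.add("region")
    return tolerated
```

A single-zone, single-cluster topology returns an empty set, which matches the statement that such a cluster provides no disaster avoidance for zone or region outages.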

| Resource | Service responsibilities | Customer responsibilities |
|---|---|---|
| Virtual networking management | Red Hat | |
| Virtual storage management | Red Hat | |
| Virtual compute management | Red Hat: Provide the ability for the customer to manually or automatically replace failed worker nodes. | |
| AWS software (public AWS services) | AWS. Compute: Provide Amazon EC2 features that support data resiliency, such as Amazon EBS snapshots and Amazon EC2 Auto Scaling. For more information, see Resilience in Amazon EC2 in the EC2 User Guide. Storage: Provide the ability for the ROSA service and customers to back up the Amazon EBS volumes on the cluster through Amazon EBS volume snapshots. Storage: For information about Amazon S3 features that support data resiliency, see Resilience in Amazon S3. Networking: For information about Amazon VPC features that support data resiliency, see Resilience in Amazon Virtual Private Cloud in the Amazon VPC User Guide. | |
| Hardware/AWS global infrastructure | AWS | |

5.2.8. Additional customer responsibilities for data and applications

The customer is responsible for the applications, workloads, and data that they deploy to Red Hat OpenShift Service on AWS. However, Red Hat and AWS provide various tools to help the customer manage data and applications on the platform.

| Resource | Red Hat and AWS | Customer responsibilities |
|---|---|---|
| Customer data | Red Hat, AWS | |
| Customer applications | Red Hat, AWS | |

5.3. Red Hat OpenShift Service on AWS service definition

This documentation outlines the service definition for the Red Hat OpenShift Service on AWS managed service.

5.3.1. Account management

This section provides information about the service definition for Red Hat OpenShift Service on AWS account management.

5.3.1.1. Billing and pricing

Red Hat OpenShift Service on AWS is billed directly to your Amazon Web Services (AWS) account. ROSA pricing is consumption-based, with one-year or three-year commitments available for greater discounts. The total cost of ROSA consists of two components:

- ROSA service fees

- AWS infrastructure fees

Visit the Red Hat OpenShift Service on AWS Pricing page on the AWS website for more details.

5.3.1.2. Cluster self-service

Customers can perform self-service tasks on their clusters, including, but not limited to:

- Create a cluster

- Delete a cluster

- Add or remove an identity provider

- Add or remove a user from an elevated group

- Configure cluster privacy

- Add or remove machine pools and configure autoscaling

- Define upgrade policies

You can perform these self-service tasks using the Red Hat OpenShift Service on AWS (ROSA) CLI, rosa.

5.3.1.3. Instance types

All ROSA with HCP clusters require a minimum of 2 worker nodes. Shutting down the underlying infrastructure (EC2 instances) through the cloud provider console is unsupported and can lead to data loss and other risks.

Approximately one vCPU core and 1 GiB of memory are reserved on each worker node and removed from allocatable resources. This reservation of resources is necessary to run processes required by the underlying platform. These processes include system daemons such as udev, kubelet, and the container runtime, among others. The reserved resources also account for kernel reservations.

OpenShift/ROSA core systems such as audit log aggregation, metrics collection, DNS, image registry, CNI/OVN-Kubernetes, and others might consume additional allocatable resources to maintain the stability and maintainability of the cluster. The additional resources consumed might vary based on usage.
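As a rough planning aid, the reservation described above can be subtracted from each node's capacity. This Python sketch assumes exactly 1 vCPU and 1 GiB reserved per node, which the text describes only as approximate, and it does not account for the additional resources consumed by platform components, so treat the result as an upper bound. The function name is hypothetical.

```python
# Approximate per-node reservation from the text; the real value can differ.
RESERVED_VCPU = 1.0
RESERVED_MEMORY_GIB = 1.0


def estimated_allocatable(vcpu: float, memory_gib: float, nodes: int) -> tuple:
    """Upper-bound estimate of allocatable (vCPU, GiB), summed over identical nodes."""
    per_node_cpu = max(vcpu - RESERVED_VCPU, 0.0)
    per_node_memory = max(memory_gib - RESERVED_MEMORY_GIB, 0.0)
    # Platform components such as metrics collection and DNS can consume more,
    # so the capacity that is schedulable in practice is lower than this estimate.
    return per_node_cpu * nodes, per_node_memory * nodes
```

For three m5.xlarge nodes (4 vCPU, 16 GiB each), `estimated_allocatable(4, 16, 3)` returns `(9.0, 45.0)`.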

For additional information, see the Kubernetes documentation.

5.3.1.4. Regions and availability zones

The following AWS regions are currently available for ROSA with HCP.

Regions in China are not supported, regardless of their support on OpenShift Container Platform.

For GovCloud (US) regions, you must submit an Access request for Red Hat OpenShift Service on AWS (ROSA) FedRAMP.

The following AWS GovCloud regions are supported:

- us-gov-west-1

- us-gov-east-1

For more information about AWS GovCloud regions, see the AWS GovCloud (US) User Guide.

| Region | Location | Minimum ROSA version required | AWS opt-in required |
|---|---|---|---|
| us-east-1 | N. Virginia | 4.14 | No |
| us-east-2 | Ohio | 4.14 | No |
| us-west-2 | Oregon | 4.14 | No |
| af-south-1 | Cape Town | 4.14 | Yes |
| ap-east-1 | Hong Kong | 4.14 | Yes |
| ap-south-2 | Hyderabad | 4.14 | Yes |
| ap-southeast-3 | Jakarta | 4.14 | Yes |
| ap-southeast-4 | Melbourne | 4.14 | Yes |
| ap-southeast-5 | Malaysia | 4.16.34; 4.17.15 | Yes |
| ap-southeast-6 | Auckland | 4.19.18 | Yes |
| ap-southeast-7 | Thailand | 4.18 | Yes |
| ap-south-1 | Mumbai | 4.14 | No |
| ap-northeast-3 | Osaka | 4.14 | No |
| ap-northeast-2 | Seoul | 4.14 | No |
| ap-southeast-1 | Singapore | 4.14 | No |
| ap-southeast-2 | Sydney | 4.14 | No |
| ap-northeast-1 | Tokyo | 4.14 | No |
| ca-central-1 | Central Canada | 4.14 | No |
| eu-central-1 | Frankfurt | 4.14 | No |
| mx-central-1 | Mexico | 4.18 | Yes |
| eu-north-1 | Stockholm | 4.14 | No |
| eu-west-1 | Ireland | 4.14 | No |
| eu-west-2 | London | 4.14 | No |
| eu-south-1 | Milan | 4.14 | Yes |
| eu-west-3 | Paris | 4.14 | No |
| eu-south-2 | Spain | 4.14 | Yes |
| eu-central-2 | Zurich | 4.14 | Yes |
| me-south-1 | Bahrain | 4.14 | Yes |
| me-central-1 | UAE | 4.14 | Yes |
| sa-east-1 | São Paulo | 4.14 | No |
| il-central-1 | Tel Aviv | 4.15 | Yes |
| ca-west-1 | Calgary | 4.14 | Yes |

Clusters can only be deployed in regions with at least 3 availability zones. For more information, see the Regions and Availability Zones section in the AWS documentation.
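To check a planned region and version against the region table, a lookup like the following Python sketch can be used. It copies only a few rows of the table, and it assumes that when a row lists more than one version (such as `4.16.34; 4.17.15`), each entry is the minimum patch release for its own minor stream and any later minor stream is also accepted. Confirm that interpretation against the current documentation before relying on it; all names here are hypothetical.

```python
# A few rows copied from the region table: region -> (minimum versions, opt-in required).
REGIONS = {
    "us-east-1": (["4.14"], False),
    "af-south-1": (["4.14"], True),
    "ap-southeast-5": (["4.16.34", "4.17.15"], True),
    "ap-southeast-6": (["4.19.18"], True),
    "il-central-1": (["4.15"], True),
}


def parse_version(version: str) -> tuple:
    """Turn "4.16.34" into (4, 16, 34); a missing patch number counts as 0."""
    parts = [int(p) for p in version.split(".")]
    return tuple(parts + [0] * (3 - len(parts)))


def requires_opt_in(region: str) -> bool:
    return REGIONS[region][1]


def region_supports(region: str, version: str) -> bool:
    """Check a cluster version against the region's minimum version or versions."""
    if region not in REGIONS:
        return False  # this sketch only knows the rows copied above
    wanted = parse_version(version)
    minimums = [parse_version(m) for m in REGIONS[region][0]]
    for minimum in minimums:
        if wanted[:2] == minimum[:2]:
            # Same minor stream: the patch release must meet the listed minimum.
            return wanted >= minimum
    # Assumption: a minor stream newer than every listed one is also supported.
    return wanted[:2] > max(minimums)[:2]
```

For example, `region_supports("ap-southeast-5", "4.16.30")` is `False` under this interpretation, while `"4.17.15"` and `"4.18.0"` are accepted.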

Each new ROSA with HCP cluster is installed within a preexisting Virtual Private Cloud (VPC) in a single region, with the option to deploy up to the total number of availability zones for the given region. This provides cluster-level network and resource isolation, and enables cloud-provider VPC settings, such as VPN connections and VPC Peering. Persistent volumes (PVs) are backed by Amazon Elastic Block Store (Amazon EBS), and are specific to the availability zone in which they are provisioned. Persistent volume claims (PVCs) do not bind to a volume until the associated pod is scheduled into a specific availability zone, which prevents unschedulable pods. Availability zone-specific resources are only usable by resources in the same availability zone.

The region cannot be changed after a cluster has been deployed.

5.3.1.5. Local Zones

Red Hat OpenShift Service on AWS does not support the use of AWS Local Zones.

5.3.1.6. Service Level Agreement (SLA)

Any SLAs for the service itself are defined in the Red Hat Enterprise Agreement Appendix 4 (Online Subscription Services).

5.3.1.7. Limited support status

When a cluster transitions to a Limited Support status, Red Hat no longer proactively monitors the cluster, the SLA is no longer applicable, and credits requested against the SLA are denied. It does not mean that you no longer have product support. In some cases, the cluster can return to a fully-supported status if you remediate the violating factors. However, in other cases, you might have to delete and recreate the cluster.

A cluster might move to a Limited Support status for many reasons, including the following scenarios:

- If you remove or replace any native Red Hat OpenShift Service on AWS components or any other component that is installed and managed by Red Hat

- If cluster administrator permissions were used, Red Hat is not responsible for any of your or your authorized users’ actions, including those that affect infrastructure services, service availability, or data loss. If Red Hat detects any such actions, the cluster might transition to a Limited Support status. Red Hat notifies you of the status change and you should either revert the action or create a support case to explore remediation steps that might require you to delete and recreate the cluster.

If you have questions about a specific action that might cause a cluster to move to a Limited Support status or need further assistance, open a support ticket.

5.3.1.8. Support

Red Hat OpenShift Service on AWS includes Red Hat Premium Support, which can be accessed by using the Red Hat Customer Portal.

See the Red Hat Production Support Terms of Service for support response times.

AWS support is subject to a customer’s existing support contract with AWS.

5.3.2. Logging

Red Hat OpenShift Service on AWS provides optional integrated log forwarding to Amazon CloudWatch.

5.3.2.1. Cluster audit logging

Cluster audit logs are available through AWS CloudWatch, if the integration is enabled. If the integration is not enabled, you can request the audit logs by opening a support case.

5.3.2.2. Application logging

Application logs sent to STDOUT are collected by Fluentd and forwarded to AWS CloudWatch through the cluster logging stack, if it is installed.

5.3.3. Monitoring

This section provides information about the service definition for Red Hat OpenShift Service on AWS monitoring.

5.3.3.1. Cluster metrics

Red Hat OpenShift Service on AWS clusters come with an integrated Prometheus stack for cluster monitoring including CPU, memory, and network-based metrics. This is accessible through the web console. These metrics also allow for horizontal pod autoscaling based on CPU or memory metrics provided by a ROSA user.
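Horizontal pod autoscaling on these metrics follows the upstream Kubernetes algorithm, which scales the replica count in proportion to how far the observed metric is from its target: desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric). The Python sketch below shows that calculation with a 10% tolerance band (the upstream default) and min/max clamping. It omits details such as pod readiness handling and scale-down stabilization, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified Kubernetes HPA calculation for a single utilization metric."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # within tolerance of the target: no scaling
    desired = math.ceil(current_replicas * ratio)
    # Respect the minimum and maximum replica counts configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas averaging 90% CPU utilization against a 60% target, `desired_replicas(4, 90, 60)` returns 6.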

5.3.3.2. Cluster notifications

Cluster notifications (sometimes referred to as service logs) are messages about the status, health, or performance of your cluster.

Cluster notifications are the primary way that Red Hat Site Reliability Engineering (SRE) communicates with you about the health of your managed cluster. Red Hat SRE may also use cluster notifications to prompt you to perform an action in order to resolve or prevent an issue with your cluster.

Cluster owners and administrators must regularly review and act on cluster notifications to ensure clusters remain healthy and supported.

You can view cluster notifications in the Red Hat Hybrid Cloud Console, in the Cluster history tab for your cluster. By default, only the cluster owner receives cluster notifications as emails. If other users need to receive cluster notification emails, add each user as a notification contact for your cluster.

5.3.4. Networking

This section provides information about the service definition for ROSA networking.

5.3.4.1. Custom domains for applications

Starting with Red Hat OpenShift Service on AWS 4.14, the Custom Domain Operator is deprecated. To manage Ingress in ROSA 4.14 or later, use the Ingress Operator.

To use a custom hostname for a route, you must update your DNS provider by creating a canonical name (CNAME) record. Your CNAME record should map your custom domain to the OpenShift canonical router hostname. The OpenShift canonical router hostname is shown on the Route Details page after a route is created. Alternatively, you can create a wildcard CNAME record once to route all subdomains for a given hostname to the cluster’s router.
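In zone-file notation, the CNAME record is owned by your custom hostname and points at the router hostname. The following Python sketch formats such records. The hostnames are hypothetical placeholders, the 300-second TTL is an arbitrary choice, and how you actually create the record depends on your DNS provider.

```python
def _fqdn(name: str) -> str:
    """Zone files expect fully qualified names that end with a dot."""
    return name if name.endswith(".") else name + "."


def cname_record(custom_host: str, router_host: str, ttl: int = 300) -> str:
    """Make custom_host an alias (CNAME) of the cluster's canonical router hostname."""
    return f"{_fqdn(custom_host)} {ttl} IN CNAME {_fqdn(router_host)}"


def wildcard_cname_record(domain: str, router_host: str, ttl: int = 300) -> str:
    """Route every subdomain of `domain` to the router with a single record."""
    return cname_record("*." + domain, router_host, ttl)


if __name__ == "__main__":
    # Hypothetical names; copy the real router hostname from the Route Details page.
    router = "router-default.apps.example-cluster.example.net"
    print(cname_record("shop.example.com", router))
    print(wildcard_cname_record("apps.example.com", router))
```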

5.3.4.2. Domain validated certificates

ROSA includes TLS security certificates needed for both internal and external services on the cluster. For external routes, there are two separate TLS wildcard certificates that are provided and installed on each cluster: one is for the web console and route default hostnames, and the other is for the API endpoint. Let’s Encrypt is the certificate authority used for certificates. Routes within the cluster, such as the internal API endpoint, use TLS certificates signed by the cluster’s built-in certificate authority and require the CA bundle available in every pod for trusting the TLS certificate.

5.3.4.3. Custom certificate authorities for builds

ROSA supports the use of custom certificate authorities to be trusted by builds when pulling images from an image registry.

5.3.4.4. Load balancers

Red Hat OpenShift Service on AWS only deploys load balancers from the default ingress controller. All other load balancers can be optionally deployed by a customer for secondary ingress controllers or service load balancers.

5.3.4.5. Cluster ingress

Project administrators can add route annotations for many different purposes, including ingress control through IP allow-listing.

Ingress policies can also be changed by using NetworkPolicy objects, which leverage the ovs-networkpolicy plugin. This allows for full control over the ingress network policy down to the pod level, including between pods on the same cluster and even in the same namespace.
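As an example of pod-level control within a namespace, the following Python sketch builds a standard `networking.k8s.io/v1` NetworkPolicy that admits ingress to pods labeled `app: web` only from pods labeled `role: frontend` in the same namespace. The namespace, labels, and policy name are hypothetical. The printed JSON can be saved to a file and applied with `oc apply -f`.

```python
import json


def same_namespace_ingress_policy(namespace: str, target_labels: dict,
                                  allowed_labels: dict,
                                  name: str = "allow-from-frontend") -> dict:
    """Build a NetworkPolicy that only admits ingress from selected pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods that this policy protects.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches pods in the
            # policy's own namespace only; all other ingress is denied.
            "ingress": [{"from": [{"podSelector": {"matchLabels": allowed_labels}}]}],
        },
    }


if __name__ == "__main__":
    policy = same_namespace_ingress_policy("shop", {"app": "web"}, {"role": "frontend"})
    print(json.dumps(policy, indent=2))
```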

All cluster ingress traffic will go through the defined load balancers. Direct access to all nodes is blocked by cloud configuration.

5.3.4.6. Cluster egress

Pod egress traffic control through EgressNetworkPolicy objects can be used to prevent or limit outbound traffic in ROSA with hosted control planes (HCP).

5.3.4.7. Cloud network configuration

Red Hat OpenShift Service on AWS allows for the configuration of a private network connection through AWS-managed technologies, such as:

- VPN connections

- VPC peering

- Transit Gateway

- Direct Connect

Red Hat site reliability engineers (SREs) do not monitor private network connections. Monitoring of these connections is the responsibility of the customer.

5.3.4.8. DNS forwarding

For ROSA clusters that have a private cloud network configuration, a customer can specify internal DNS servers available on that private connection that should be queried for explicitly provided domains.

5.3.4.9. Network verification

Network verification checks run automatically when you deploy a ROSA cluster into an existing Virtual Private Cloud (VPC) or create an additional machine pool with a subnet that is new to your cluster. The checks validate your network configuration and highlight errors, enabling you to resolve configuration issues prior to deployment.

You can also run the network verification checks manually to validate the configuration for an existing cluster.

5.3.5. Storage

This section provides information about the service definition for Red Hat OpenShift Service on AWS storage.

5.3.5.1. Encrypted-at-rest OS and node storage

Worker nodes use encrypted-at-rest Amazon Elastic Block Store (Amazon EBS) storage.

5.3.5.2. Encrypted-at-rest PV

EBS volumes that are used for PVs are encrypted-at-rest by default.

5.3.5.3. Block storage (RWO)

Persistent volumes (PVs) are backed by Amazon Elastic Block Store (Amazon EBS), which is Read-Write-Once.

PVs can be attached only to a single node at a time and are specific to the availability zone in which they were provisioned. However, PVs can be attached to any node in the availability zone.

Each cloud provider has its own limits for how many PVs can be attached to a single node. See AWS instance type limits for details.
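The block storage rules above (one node at a time, and only within the volume's availability zone) can be modeled as a small attach check. This Python sketch is hypothetical and only mirrors the constraints stated in the text; in a real cluster, Kubernetes and the storage driver enforce them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    name: str
    zone: str


@dataclass
class EbsVolume:
    # Hypothetical model of an EBS-backed ReadWriteOnce persistent volume.
    zone: str
    attached_node: Optional[str] = None


def attach(volume: EbsVolume, node: Node) -> None:
    """Attach the volume to a node, enforcing the same-zone and single-node rules."""
    if node.zone != volume.zone:
        raise ValueError(f"volume is in {volume.zone} but {node.name} is in {node.zone}")
    if volume.attached_node not in (None, node.name):
        raise ValueError(f"volume is already attached to {volume.attached_node}")
    volume.attached_node = node.name


def detach(volume: EbsVolume) -> None:
    volume.attached_node = None
```

Any node in the same zone can take over the volume once it has been detached from the previous node, which is what allows a pod to move between nodes within a zone.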

5.3.6. Platform

This section provides information about the service definition for the Red Hat OpenShift Service on AWS platform.

5.3.6.1. Autoscaling

Node autoscaling is available on ROSA with HCP. You can configure the autoscaler option to automatically scale the number of machines in a cluster.

5.3.6.2. Multiple availability zone

Control plane components are always deployed across multiple availability zones, regardless of a customer’s worker node configuration.

5.3.6.3. Node labels

Custom node labels are created by Red Hat during node creation and cannot be changed on ROSA with HCP clusters at this time. However, custom labels are supported when creating new machine pools.

5.3.6.4. Node lifecycle

Worker nodes are not guaranteed longevity, and may be replaced at any time as part of the normal operation and management of OpenShift.

A worker node might be replaced in the following circumstances:

- Machine health checks are deployed and configured so that a worker node with a NotReady status is replaced, keeping the cluster operating smoothly.

- AWS EC2 instances may be terminated when AWS detects irreparable failure of the underlying hardware that hosts the instance.

- During upgrades, a new, upgraded node is first created and joined to the cluster. Once this new node has been successfully integrated into the cluster via the previously described automated health checks, an older node is then removed from the cluster.

For all containerized workloads running on a Kubernetes-based system, it is best practice to configure applications to be resilient to node replacements.
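The upgrade behavior described above is a surge-style rolling replacement: capacity is added before it is removed. The Python sketch below illustrates that ordering with hypothetical callbacks standing in for node provisioning, the automated health checks, and node removal. It is not how the ROSA control plane is implemented.

```python
from typing import Callable, List


def rolling_replace(old_nodes: List[str],
                    create_node: Callable[[], str],
                    is_ready: Callable[[str], bool],
                    remove_node: Callable[[str], None]) -> List[str]:
    """Replace nodes one at a time, adding each replacement before removing anything."""
    current = list(old_nodes)
    for old in old_nodes:
        new = create_node()  # surge: the upgraded node joins the cluster first
        if not is_ready(new):  # stands in for the automated health checks
            remove_node(new)
            raise RuntimeError(f"{new} did not become Ready; {old} was kept")
        current.append(new)
        remove_node(old)  # only now is the older node taken out of the cluster
        current.remove(old)
    return current
```

Because each replacement joins before its predecessor leaves, the worker count never drops below its starting value, which is also why workloads should tolerate being rescheduled onto a new node.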

5.3.6.5. Cluster backup policy

Red Hat recommends object-level backup solutions for ROSA clusters. OpenShift API for Data Protection (OADP) is included in OpenShift but not enabled by default. Customers can configure OADP on their clusters to achieve object-level backup and restore capabilities.

Red Hat does not back up customer applications or application data. Customers are solely responsible for backing up and restoring their applications and application data, and must put their own backup and restore capabilities in place. For more information about customer responsibilities, see "Shared responsibility matrix".

5.3.6.6. OpenShift version

ROSA with HCP is run as a service. The Red Hat SRE team force-upgrades clusters when their version reaches end of life (EOL). You can schedule upgrades to the latest version.

5.3.6.7. Upgrades

Upgrades can be scheduled using the ROSA CLI, rosa, or through OpenShift Cluster Manager.

See the Red Hat OpenShift Service on AWS Life Cycle for more information on the upgrade policy and procedures.

5.3.6.8. Windows Containers

Red Hat OpenShift support for Windows Containers is not available on Red Hat OpenShift Service on AWS at this time. Alternatively, running Windows-based virtual machines on OpenShift Virtualization on a ROSA cluster is supported.

5.3.6.9. Container engine

ROSA with HCP runs on OpenShift 4 and uses CRI-O as the only available container engine (container runtime interface).

5.3.6.10. Operating system

ROSA with HCP runs on OpenShift 4 and uses Red Hat Enterprise Linux CoreOS (RHCOS) as the operating system for all cluster nodes.

5.3.6.11. Red Hat Operator support

Red Hat workloads typically refer to Red Hat-provided Operators made available through OperatorHub. Red Hat workloads are not managed by the Red Hat SRE team and must be deployed on worker nodes. These Operators may require additional Red Hat subscriptions and may incur additional cloud infrastructure costs. Examples of these Red Hat-provided Operators are:

- Red Hat Quay

- Red Hat Advanced Cluster Management

- Red Hat Advanced Cluster Security

- Red Hat OpenShift Service Mesh

- OpenShift Serverless

- Red Hat OpenShift Logging

- Red Hat OpenShift Pipelines

- OpenShift Virtualization

5.3.6.12. Kubernetes Operator support

All Operators listed in the software catalog marketplace should be available for installation. These Operators are considered customer workloads, and are neither monitored nor managed by Red Hat SRE. Operators authored by Red Hat are supported by Red Hat.

5.3.7. Security

This section provides information about the service definition for Red Hat OpenShift Service on AWS security.

5.3.7.1. Authentication provider

Authentication for the cluster can be configured by using OpenShift Cluster Manager, during the cluster creation process, or by using the ROSA CLI, rosa. ROSA is not an identity provider, and all access to the cluster must be managed by the customer as part of their integrated solution. Multiple identity providers can be provisioned at the same time. The following identity providers are supported:

- GitHub or GitHub Enterprise

- GitLab

- LDAP

- OpenID Connect

- htpasswd

5.3.7.2. Privileged containers

Privileged containers are available for users with the cluster-admin role. Usage of privileged containers as cluster-admin is subject to the responsibilities and exclusion notes in the Red Hat Enterprise Agreement Appendix 4 (Online Subscription Services).

5.3.7.3. Customer administrator user

In addition to normal users, Red Hat OpenShift Service on AWS provides access to a ROSA with HCP-specific group called dedicated-admin. Any users on the cluster that are members of the dedicated-admin group:

- Have administrator access to all customer-created projects on the cluster.

- Can manage resource quotas and limits on the cluster.

- Can add and manage NetworkPolicy objects.

- Are able to view information about specific nodes and PVs in the cluster, including scheduler information.

- Can access the reserved dedicated-admin project on the cluster, which allows for the creation of service accounts with elevated privileges and also gives the ability to update default limits and quotas for projects on the cluster.

- Can install Operators from the software catalog and perform all verbs in all *.operators.coreos.com API groups.

5.3.7.4. Cluster administration role

The administrator of Red Hat OpenShift Service on AWS has default access to the cluster-admin role for your organization’s cluster. While logged into an account with the cluster-admin role, users have increased permissions to run privileged security contexts.

5.3.7.5. Project self-service

By default, all users have the ability to create, update, and delete their projects. This can be restricted if a member of the dedicated-admin group removes the self-provisioner role from authenticated users:

oc adm policy remove-cluster-role-from-group self-provisioner system:authenticated:oauth

Restrictions can be reverted by applying:

oc adm policy add-cluster-role-to-group self-provisioner system:authenticated:oauth

5.3.7.6. Regulatory compliance

See the Compliance table in Understanding process and security for ROSA for the latest compliance information.

5.3.7.7. Network security

With Red Hat OpenShift Service on AWS, AWS provides standard DDoS protection, called AWS Shield, on all load balancers. This provides 95% protection against the most commonly used layer 3 and layer 4 attacks on all the public-facing load balancers used for ROSA. For additional protection, HTTP requests to the HAProxy router have a 10-second timeout: if no response is received within that time, the connection is closed.

5.3.7.8. etcd encryption

In Red Hat OpenShift Service on AWS, the control plane storage is encrypted at rest by default, including encryption of the etcd volumes. This storage-level encryption is provided through the storage layer of the cloud provider.

Customers can also opt to encrypt the etcd database at build time or provide their own custom AWS KMS keys for the purpose of encrypting the etcd database.

etcd encryption encrypts the following Kubernetes API server and OpenShift API server resources:

- Secrets

- Config maps

- Routes

- OAuth access tokens

- OAuth authorize tokens

5.4. Red Hat OpenShift Service on AWS instance types

ROSA with HCP offers the following worker node instance types and sizes.

Currently, ROSA with HCP supports a maximum of 500 worker nodes.
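When sizing machine pools, the node limits above and the instance sizes in the list that follows can be checked together. This Python sketch copies only four instance types from the x86-based list, treats the 2 to 500 range as applying to the total worker count across all machine pools, and uses hypothetical names throughout. It reports raw instance capacity before any per-node reservation.

```python
# A few entries from the x86-based instance list: type -> (vCPU, memory in GiB).
INSTANCE_TYPES = {
    "m5.xlarge": (4, 16),
    "m5.2xlarge": (8, 32),
    "m6i.4xlarge": (16, 64),
    "m6a.48xlarge": (192, 768),
}
MIN_WORKERS = 2    # minimum worker nodes per ROSA with HCP cluster
MAX_WORKERS = 500  # maximum worker nodes per ROSA with HCP cluster


def plan_capacity(pools: dict) -> tuple:
    """Validate pool sizes and return the total raw (vCPU, GiB) of the workers.

    `pools` maps a pool name to an (instance_type, replicas) pair.
    """
    total_nodes = sum(replicas for _, replicas in pools.values())
    if not MIN_WORKERS <= total_nodes <= MAX_WORKERS:
        raise ValueError(f"{total_nodes} worker nodes is outside {MIN_WORKERS}-{MAX_WORKERS}")
    vcpu = memory = 0
    for name, (instance_type, replicas) in pools.items():
        if instance_type not in INSTANCE_TYPES:
            raise ValueError(f"pool {name}: unknown instance type {instance_type}")
        cpu, mem = INSTANCE_TYPES[instance_type]
        vcpu += cpu * replicas
        memory += mem * replicas
    return vcpu, memory
```

For example, three m5.xlarge nodes plus two m6i.4xlarge nodes give `(44, 176)`: 44 vCPU and 176 GiB of raw capacity.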

5.4.1. AWS x86-based instance types

Example 5.1. General purpose

- m5.xlarge (4 vCPU, 16 GiB)

- m5.2xlarge (8 vCPU, 32 GiB)

- m5.4xlarge (16 vCPU, 64 GiB)

- m5.8xlarge (32 vCPU, 128 GiB)

- m5.12xlarge (48 vCPU, 192 GiB)

- m5.16xlarge (64 vCPU, 256 GiB)

- m5.24xlarge (96 vCPU, 384 GiB)

- m5.metal (96† vCPU, 384 GiB)

- m5a.xlarge (4 vCPU, 16 GiB)

- m5a.2xlarge (8 vCPU, 32 GiB)

- m5a.4xlarge (16 vCPU, 64 GiB)

- m5a.8xlarge (32 vCPU, 128 GiB)

- m5a.12xlarge (48 vCPU, 192 GiB)

- m5a.16xlarge (64 vCPU, 256 GiB)

- m5a.24xlarge (96 vCPU, 384 GiB)

- m5dn.metal (96 vCPU, 384 GiB)

- m5zn.metal (48 vCPU, 192 GiB)

- m5d.metal (96† vCPU, 384 GiB)

- m5n.metal (96 vCPU, 384 GiB)

- m6a.xlarge (4 vCPU, 16 GiB)

- m6a.2xlarge (8 vCPU, 32 GiB)

- m6a.4xlarge (16 vCPU, 64 GiB)

- m6a.8xlarge (32 vCPU, 128 GiB)

- m6a.12xlarge (48 vCPU, 192 GiB)

- m6a.16xlarge (64 vCPU, 256 GiB)

- m6a.24xlarge (96 vCPU, 384 GiB)

- m6a.32xlarge (128 vCPU, 512 GiB)

- m6a.48xlarge (192 vCPU, 768 GiB)

- m6a.metal (192 vCPU, 768 GiB)

- m6i.xlarge (4 vCPU, 16 GiB)

- m6i.2xlarge (8 vCPU, 32 GiB)

- m6i.4xlarge (16 vCPU, 64 GiB)

- m6i.8xlarge (32 vCPU, 128 GiB)

- m6i.12xlarge (48 vCPU, 192 GiB)

- m6i.16xlarge (64 vCPU, 256 GiB)

- m6i.24xlarge (96 vCPU, 384 GiB)

- m6i.32xlarge (128 vCPU, 512 GiB)

- m6i.metal (128 vCPU, 512 GiB)

- m6id.xlarge (4 vCPU, 16 GiB)

- m6id.2xlarge (8 vCPU, 32 GiB)

- m6id.4xlarge (16 vCPU, 64 GiB)

- m6id.8xlarge (32 vCPU, 128 GiB)

- m6id.12xlarge (48 vCPU, 192 GiB)

- m6id.16xlarge (64 vCPU, 256 GiB)

- m6id.24xlarge (96 vCPU, 384 GiB)

- m6id.32xlarge (128 vCPU, 512 GiB)

- m6id.metal (128 vCPU, 512 GiB)

- m6idn.xlarge (4 vCPU, 16 GiB)

- m6idn.2xlarge (8 vCPU, 32 GiB)

- m6idn.4xlarge (16 vCPU, 64 GiB)

- m6idn.8xlarge (32 vCPU, 128 GiB)

- m6idn.12xlarge (48 vCPU, 192 GiB)

- m6idn.16xlarge (64 vCPU, 256 GiB)

- m6idn.24xlarge (96 vCPU, 384 GiB)

- m6idn.32xlarge (128 vCPU, 512 GiB)

- m6in.xlarge (4 vCPU, 16 GiB)

- m6in.2xlarge (8 vCPU, 32 GiB)

- m6in.4xlarge (16 vCPU, 64 GiB)

- m6in.8xlarge (32 vCPU, 128 GiB)

- m6in.12xlarge (48 vCPU, 192 GiB)

- m6in.16xlarge (64 vCPU, 256 GiB)

- m6in.24xlarge (96 vCPU, 384 GiB)

- m6in.32xlarge (128 vCPU, 512 GiB)

- m7a.xlarge (4 vCPU, 16 GiB)

- m7a.2xlarge (8 vCPU, 32 GiB)

- m7a.4xlarge (16 vCPU, 64 GiB)

- m7a.8xlarge (32 vCPU, 128 GiB)

- m7a.12xlarge (48 vCPU, 192 GiB)

- m7a.16xlarge (64 vCPU, 256 GiB)

- m7a.24xlarge (96 vCPU, 384 GiB)

- m7a.32xlarge (128 vCPU, 512 GiB)

- m7a.48xlarge (192 vCPU, 768 GiB)

- m7a.metal-48xl (192 vCPU, 768 GiB)

- m7i-flex.xlarge (4 vCPU, 16 GiB)

- m7i-flex.2xlarge (8 vCPU, 32 GiB)

- m7i-flex.4xlarge (16 vCPU, 64 GiB)

- m7i-flex.8xlarge (32 vCPU, 128 GiB)

- m7i.xlarge (4 vCPU, 16 GiB)

- m7i.2xlarge (8 vCPU, 32 GiB)

- m7i.4xlarge (16 vCPU, 64 GiB)

- m7i.8xlarge (32 vCPU, 128 GiB)

- m7i.12xlarge (48 vCPU, 192 GiB)

- m7i.16xlarge (64 vCPU, 256 GiB)

- m7i.24xlarge (96 vCPU, 384 GiB)

- m7i.48xlarge (192 vCPU, 768 GiB)

- m7i.metal-24xl (96 vCPU, 384 GiB)

- m7i.metal-48xl (192 vCPU, 768 GiB)

- m8i.xlarge (4 vCPU, 16 GiB)

- m8i.2xlarge (8 vCPU, 32 GiB)

- m8i.4xlarge (16 vCPU, 64 GiB)

- m8i.8xlarge (32 vCPU, 128 GiB)

- m8i.12xlarge (48 vCPU, 192 GiB)

- m8i.16xlarge (64 vCPU, 256 GiB)

- m8i.24xlarge (96 vCPU, 384 GiB)

- m8i.32xlarge (128 vCPU, 512 GiB)

- m8i.48xlarge (192 vCPU, 768 GiB)

- m8i.96xlarge (384 vCPU, 1,536 GiB)

- m8i.metal-48xl (192 vCPU, 768 GiB)

- m8i.metal-96xl (384 vCPU, 1,536 GiB)

- m8i-flex.large (2 vCPU, 8 GiB)

- m8i-flex.xlarge (4 vCPU, 16 GiB)

- m8i-flex.2xlarge (8 vCPU, 32 GiB)

- m8i-flex.4xlarge (16 vCPU, 64 GiB)

- m8i-flex.8xlarge (32 vCPU, 128 GiB)

- m8i-flex.12xlarge (48 vCPU, 192 GiB)

- m8i-flex.16xlarge (64 vCPU, 256 GiB)

- m8a.xlarge (4 vCPU, 16 GiB)

- m8a.2xlarge (8 vCPU, 32 GiB)

- m8a.4xlarge (16 vCPU, 64 GiB)

- m8a.8xlarge (32 vCPU, 128 GiB)

- m8a.12xlarge (48 vCPU, 192 GiB)

- m8a.16xlarge (64 vCPU, 256 GiB)

- m8a.24xlarge (96 vCPU, 384 GiB)

- m8a.48xlarge (192 vCPU, 768 GiB)

- m8a.metal-24xl (96 vCPU, 384 GiB)

- m8a.metal-48xl (192 vCPU, 768 GiB)

† These instance types offer 96 logical processors on 48 physical cores. They run on single servers with two physical Intel sockets.
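Each entry in these lists follows the pattern name (vCPU count, memory in GiB). To compare families programmatically, you can use a small parser such as the following Python sketch to extract the numbers. The regular expression is an assumption written against the entry format shown here, not an official format. It tolerates the † footnote marker, thousands separators, and a missing space before "vCPU". Every general-purpose entry above works out to 4 GiB of memory per vCPU.

```python
import re

# Matches entries such as "- m5.xlarge (4 vCPU, 16 GiB)", "- m5d.metal (96† vCPU, 384 GiB)",
# or "- x2iezn.4xlarge (16vCPU, 512 GiB)". Commas are thousands separators ("1,536 GiB").
ENTRY = re.compile(
    r"^-?\s*(?P<name>[a-z0-9.-]+)\s*\((?P<vcpu>[\d,]+)†?\s*vCPU,\s*(?P<mem>[\d,.]+)\s*GiB\)"
)


def parse_entry(line: str) -> tuple[str, int, float]:
    """Split one list entry into (instance type, vCPU count, memory in GiB)."""
    match = ENTRY.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognized entry: {line!r}")
    vcpu = int(match["vcpu"].replace(",", ""))
    memory = float(match["mem"].replace(",", ""))
    return match["name"], vcpu, memory


def gib_per_vcpu(line: str) -> float:
    """Memory-to-vCPU ratio of one entry."""
    _, vcpu, memory = parse_entry(line)
    return memory / vcpu
```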

Example 5.2. Burstable general purpose

- t3.xlarge (4 vCPU, 16 GiB)

- t3.2xlarge (8 vCPU, 32 GiB)

- t3a.xlarge (4 vCPU, 16 GiB)

- t3a.2xlarge (8 vCPU, 32 GiB)

Example 5.3. Memory intensive

- u7i-6tb.112xlarge (448 vCPU, 6,144 GiB)

- u7i-8tb.112xlarge (448 vCPU, 8,192 GiB)

- u7i-12tb.224xlarge (896 vCPU, 12,288 GiB)

- u7in-16tb.224xlarge (896 vCPU, 16,384 GiB)

- u7in-24tb.224xlarge (896 vCPU, 24,576 GiB)

- u7in-32tb.224xlarge (896 vCPU, 32,768 GiB)

- u7inh-32tb.480xlarge (1920 vCPU, 32,768 GiB)

- x1.16xlarge (64 vCPU, 976 GiB)

- x1.32xlarge (128 vCPU, 1,952 GiB)

- x1e.xlarge (4 vCPU, 122 GiB)

- x1e.2xlarge (8 vCPU, 244 GiB)

- x1e.4xlarge (16 vCPU, 488 GiB)

- x1e.8xlarge (32 vCPU, 976 GiB)

- x1e.16xlarge (64 vCPU, 1,952 GiB)

- x1e.32xlarge (128 vCPU, 3,904 GiB)

- x2idn.16xlarge (64 vCPU, 1,024 GiB)

- x2idn.24xlarge (96 vCPU, 1,536 GiB)

- x2idn.32xlarge (128 vCPU, 2,048 GiB)

- x2iedn.xlarge (4 vCPU, 128 GiB)

- x2iedn.2xlarge (8 vCPU, 256 GiB)

- x2iedn.4xlarge (16 vCPU, 512 GiB)

- x2iedn.8xlarge (32 vCPU, 1,024 GiB)

- x2iedn.16xlarge (64 vCPU, 2,048 GiB)

- x2iedn.24xlarge (96 vCPU, 3,072 GiB)

- x2iedn.32xlarge (128 vCPU, 4,096 GiB)

- x2iezn.2xlarge (8 vCPU, 256 GiB)

- x2iezn.4xlarge (16 vCPU, 512 GiB)

- x2iezn.6xlarge (24 vCPU, 768 GiB)

- x2iezn.8xlarge (32 vCPU, 1,024 GiB)

- x2iezn.12xlarge (48 vCPU, 1,536 GiB)

- x2iezn.metal (48 vCPU, 1,536 GiB)

- x2idn.metal (128 vCPU, 2,048 GiB)

- x2iedn.metal (128 vCPU, 4,096 GiB)
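If a workload has a known memory requirement, you can choose from the memory-intensive types by filtering for sufficient memory. You then pick the entry with the fewest vCPUs. The following Python sketch copies a handful of entries from the list above. The function name is hypothetical. vCPU count is only a rough proxy for instance size. The sketch does not consider pricing or regional availability.

```python
# A subset of the memory-intensive entries above: (instance type, vCPU, GiB).
MEMORY_INTENSIVE = [
    ("x2iedn.xlarge", 4, 128),
    ("x1e.xlarge", 4, 122),
    ("x2iedn.2xlarge", 8, 256),
    ("x1.16xlarge", 64, 976),
    ("x2idn.16xlarge", 64, 1024),
    ("u7i-6tb.112xlarge", 448, 6144),
]


def smallest_fit(min_memory_gib, candidates=MEMORY_INTENSIVE):
    """Return the candidate with the fewest vCPUs that has at least min_memory_gib.

    Ties on vCPU count are broken by choosing the entry with less memory.
    Returns None if no candidate is large enough.
    """
    fits = [c for c in candidates if c[2] >= min_memory_gib]
    if not fits:
        return None
    return min(fits, key=lambda c: (c[1], c[2]))
```

For example, smallest_fit(200) returns ("x2iedn.2xlarge", 8, 256). smallest_fit(1000) returns ("x2idn.16xlarge", 64, 1024), because x1.16xlarge has only 976 GiB.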

Example 5.4. Memory optimized

- r4.xlarge (4 vCPU, 30.5 GiB)

- r4.2xlarge (8 vCPU, 61 GiB)

- r4.4xlarge (16 vCPU, 122 GiB)

- r4.8xlarge (32 vCPU, 244 GiB)

- r4.16xlarge (64 vCPU, 488 GiB)

- r5.xlarge (4 vCPU, 32 GiB)

- r5.2xlarge (8 vCPU, 64 GiB)

- r5.4xlarge (16 vCPU, 128 GiB)

- r5.8xlarge (32 vCPU, 256 GiB)

- r5.12xlarge (48 vCPU, 384 GiB)

- r5.16xlarge (64 vCPU, 512 GiB)

- r5.24xlarge (96 vCPU, 768 GiB)

- r5.metal (96 vCPU, 768 GiB). This instance type offers 96 logical processors on 48 physical cores and runs on a single server with two physical Intel sockets.

- r5a.xlarge (4 vCPU, 32 GiB)

- r5a.2xlarge (8 vCPU, 64 GiB)

- r5a.4xlarge (16 vCPU, 128 GiB)

- r5a.8xlarge (32 vCPU, 256 GiB)

- r5a.12xlarge (48 vCPU, 384 GiB)

- r5a.16xlarge (64 vCPU, 512 GiB)

- r5a.24xlarge (96 vCPU, 768 GiB)

- r5ad.xlarge (4 vCPU, 32 GiB)

- r5ad.2xlarge (8 vCPU, 64 GiB)

- r5ad.4xlarge (16 vCPU, 128 GiB)

- r5ad.8xlarge (32 vCPU, 256 GiB)

- r5ad.12xlarge (48 vCPU, 384 GiB)

- r5ad.16xlarge (64 vCPU, 512 GiB)

- r5ad.24xlarge (96 vCPU, 768 GiB)

- r5b.xlarge (4 vCPU, 32 GiB)

- r5b.2xlarge (8 vCPU, 64 GiB)

- r5b.4xlarge (16 vCPU, 128 GiB)

- r5b.8xlarge (32 vCPU, 256 GiB)

- r5b.12xlarge (48 vCPU, 384 GiB)

- r5b.16xlarge (64 vCPU, 512 GiB)

- r5b.24xlarge (96 vCPU, 768 GiB)

- r5b.metal (96 vCPU, 768 GiB)

- r5d.xlarge (4 vCPU, 32 GiB)

- r5d.2xlarge (8 vCPU, 64 GiB)

- r5d.4xlarge (16 vCPU, 128 GiB)

- r5d.8xlarge (32 vCPU, 256 GiB)

- r5d.12xlarge (48 vCPU, 384 GiB)

- r5d.16xlarge (64 vCPU, 512 GiB)

- r5d.24xlarge (96 vCPU, 768 GiB)

- r5d.metal (96 vCPU, 768 GiB). This instance type offers 96 logical processors on 48 physical cores and runs on a single server with two physical Intel sockets.

- r5n.xlarge (4 vCPU, 32 GiB)

- r5n.2xlarge (8 vCPU, 64 GiB)

- r5n.4xlarge (16 vCPU, 128 GiB)

- r5n.8xlarge (32 vCPU, 256 GiB)

- r5n.12xlarge (48 vCPU, 384 GiB)

- r5n.16xlarge (64 vCPU, 512 GiB)

- r5n.24xlarge (96 vCPU, 768 GiB)

- r5n.metal (96 vCPU, 768 GiB)

- r5dn.xlarge (4 vCPU, 32 GiB)

- r5dn.2xlarge (8 vCPU, 64 GiB)

- r5dn.4xlarge (16 vCPU, 128 GiB)

- r5dn.8xlarge (32 vCPU, 256 GiB)

- r5dn.12xlarge (48 vCPU, 384 GiB)

- r5dn.16xlarge (64 vCPU, 512 GiB)

- r5dn.24xlarge (96 vCPU, 768 GiB)

- r5dn.metal (96 vCPU, 768 GiB)

- r6a.xlarge (4 vCPU, 32 GiB)

- r6a.2xlarge (8 vCPU, 64 GiB)

- r6a.4xlarge (16 vCPU, 128 GiB)

- r6a.8xlarge (32 vCPU, 256 GiB)

- r6a.12xlarge (48 vCPU, 384 GiB)

- r6a.16xlarge (64 vCPU, 512 GiB)

- r6a.24xlarge (96 vCPU, 768 GiB)

- r6a.32xlarge (128 vCPU, 1,024 GiB)

- r6a.48xlarge (192 vCPU, 1,536 GiB)

- r6a.metal (192 vCPU, 1,536 GiB)

- r6i.xlarge (4 vCPU, 32 GiB)

- r6i.2xlarge (8 vCPU, 64 GiB)

- r6i.4xlarge (16 vCPU, 128 GiB)

- r6i.8xlarge (32 vCPU, 256 GiB)

- r6i.12xlarge (48 vCPU, 384 GiB)

- r6i.16xlarge (64 vCPU, 512 GiB)

- r6i.24xlarge (96 vCPU, 768 GiB)

- r6i.32xlarge (128 vCPU, 1,024 GiB)

- r6i.metal (128 vCPU, 1,024 GiB)

- r6id.xlarge (4 vCPU, 32 GiB)

- r6id.2xlarge (8 vCPU, 64 GiB)

- r6id.4xlarge (16 vCPU, 128 GiB)

- r6id.8xlarge (32 vCPU, 256 GiB)

- r6id.12xlarge (48 vCPU, 384 GiB)

- r6id.16xlarge (64 vCPU, 512 GiB)

- r6id.24xlarge (96 vCPU, 768 GiB)

- r6id.32xlarge (128 vCPU, 1,024 GiB)

- r6id.metal (128 vCPU, 1,024 GiB)

- r6idn.xlarge (4 vCPU, 32 GiB)

- r6idn.2xlarge (8 vCPU, 64 GiB)

- r6idn.4xlarge (16 vCPU, 128 GiB)

- r6idn.8xlarge (32 vCPU, 256 GiB)

- r6idn.12xlarge (48 vCPU, 384 GiB)

- r6idn.16xlarge (64 vCPU, 512 GiB)

- r6idn.24xlarge (96 vCPU, 768 GiB)

- r6idn.32xlarge (128 vCPU, 1,024 GiB)

- r6in.xlarge (4 vCPU, 32 GiB)

- r6in.2xlarge (8 vCPU, 64 GiB)

- r6in.4xlarge (16 vCPU, 128 GiB)

- r6in.8xlarge (32 vCPU, 256 GiB)

- r6in.12xlarge (48 vCPU, 384 GiB)

- r6in.16xlarge (64 vCPU, 512 GiB)

- r6in.24xlarge (96 vCPU, 768 GiB)

- r6in.32xlarge (128 vCPU, 1,024 GiB)

- r7a.xlarge (4 vCPU, 32 GiB)

- r7a.2xlarge (8 vCPU, 64 GiB)

- r7a.4xlarge (16 vCPU, 128 GiB)

- r7a.8xlarge (32 vCPU, 256 GiB)

- r7a.12xlarge (48 vCPU, 384 GiB)

- r7a.16xlarge (64 vCPU, 512 GiB)

- r7a.24xlarge (96 vCPU, 768 GiB)

- r7a.32xlarge (128 vCPU, 1,024 GiB)

- r7a.48xlarge (192 vCPU, 1,536 GiB)

- r7a.metal-48xl (192 vCPU, 1,536 GiB)

- r7i.xlarge (4 vCPU, 32 GiB)

- r7i.2xlarge (8 vCPU, 64 GiB)

- r7i.4xlarge (16 vCPU, 128 GiB)

- r7i.8xlarge (32 vCPU, 256 GiB)

- r7i.12xlarge (48 vCPU, 384 GiB)

- r7i.16xlarge (64 vCPU, 512 GiB)

- r7i.24xlarge (96 vCPU, 768 GiB)

- r7i.metal-24xl (96 vCPU, 768 GiB)

- r7iz.xlarge (4 vCPU, 32 GiB)

- r7iz.2xlarge (8 vCPU, 64 GiB)

- r7iz.4xlarge (16 vCPU, 128 GiB)

- r7iz.8xlarge (32 vCPU, 256 GiB)

- r7iz.12xlarge (48 vCPU, 384 GiB)

- r7iz.16xlarge (64 vCPU, 512 GiB)

- r7iz.32xlarge (128 vCPU, 1,024 GiB)

- r7iz.metal-16xl (64 vCPU, 512 GiB)

- r7iz.metal-32xl (128 vCPU, 1,024 GiB)

- r8i.xlarge (4 vCPU, 32 GiB)

- r8i.2xlarge (8 vCPU, 64 GiB)

- r8i.4xlarge (16 vCPU, 128 GiB)

- r8i.8xlarge (32 vCPU, 256 GiB)

- r8i.12xlarge (48 vCPU, 384 GiB)

- r8i.16xlarge (64 vCPU, 512 GiB)

- r8i.24xlarge (96 vCPU, 768 GiB)

- r8i.32xlarge (128 vCPU, 1,024 GiB)

- r8i.48xlarge (192 vCPU, 1,536 GiB)

- r8i.96xlarge (384 vCPU, 3,072 GiB)

- r8i.metal-48xl (192 vCPU, 1,536 GiB)

- r8i.metal-96xl (384 vCPU, 3,072 GiB)

- r8i-flex.xlarge (4 vCPU, 32 GiB)

- r8i-flex.2xlarge (8 vCPU, 64 GiB)

- r8i-flex.4xlarge (16 vCPU, 128 GiB)

- r8i-flex.8xlarge (32 vCPU, 256 GiB)

- r8i-flex.12xlarge (48 vCPU, 384 GiB)

- r8i-flex.16xlarge (64 vCPU, 512 GiB)

- z1d.xlarge (4 vCPU, 32 GiB)

- z1d.2xlarge (8 vCPU, 64 GiB)

- z1d.3xlarge (12 vCPU, 96 GiB)

- z1d.6xlarge (24 vCPU, 192 GiB)

- z1d.12xlarge (48 vCPU, 384 GiB)