Chapter 18. Hardware networks

18.1. About Single Root I/O Virtualization (SR-IOV) hardware networks

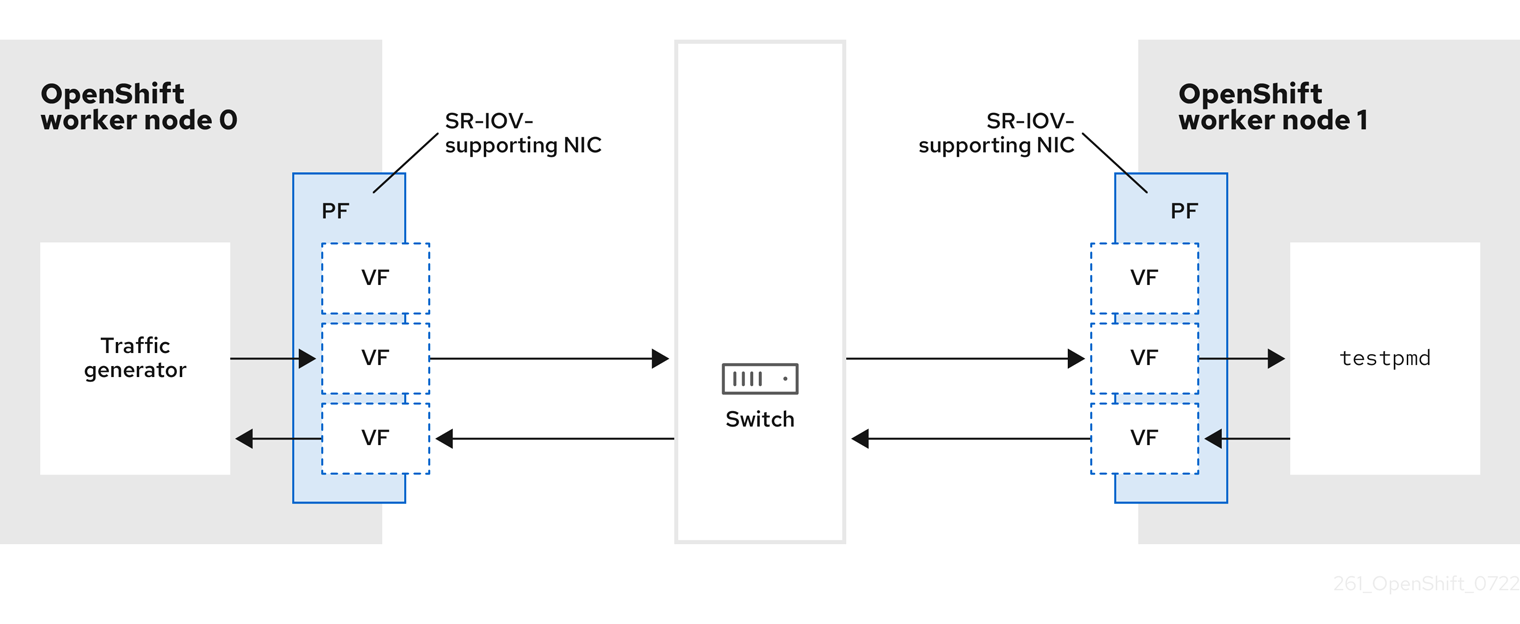

The Single Root I/O Virtualization (SR-IOV) specification is a standard for a type of PCI device assignment that can share a single device with multiple pods.

You can configure a Single Root I/O Virtualization (SR-IOV) device in your cluster by using the SR-IOV Operator.

SR-IOV can segment a compliant network device, recognized on the host node as a physical function (PF), into multiple virtual functions (VFs). The VF is used like any other network device. The SR-IOV network device driver for the device determines how the VF is exposed in the container:

- netdevice driver: A regular kernel network device in the netns of the container

- vfio-pci driver: A character device mounted in the container

You can use SR-IOV network devices with additional networks on your OpenShift Container Platform cluster installed on bare metal or Red Hat OpenStack Platform (RHOSP) infrastructure for applications that require high bandwidth or low latency.

You can configure multi-network policies for SR-IOV networks. Support for this feature is a Technology Preview, and multi-network policies for SR-IOV additional networks are supported only with kernel NICs. They are not supported for Data Plane Development Kit (DPDK) applications.

Creating multi-network policies on SR-IOV networks might not deliver the same performance to applications compared to SR-IOV networks without a multi-network policy configured.

Multi-network policies for SR-IOV networks are a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

You can enable SR-IOV on a node by using the following command:

$ oc label node <node_name> feature.node.kubernetes.io/network-sriov.capable="true"

Additional resources

18.1.1. Components that manage SR-IOV network devices

The SR-IOV Network Operator creates and manages the components of the SR-IOV stack. The Operator performs the following functions:

- Orchestrates discovery and management of SR-IOV network devices

- Generates NetworkAttachmentDefinition custom resources for the SR-IOV Container Network Interface (CNI)

- Creates and updates the configuration of the SR-IOV network device plugin

- Creates node-specific SriovNetworkNodeState custom resources

- Updates the spec.interfaces field in each SriovNetworkNodeState custom resource

The Operator provisions the following components:

- SR-IOV network configuration daemon: A daemon set that is deployed on worker nodes when the SR-IOV Network Operator starts. The daemon is responsible for discovering and initializing SR-IOV network devices in the cluster.

- SR-IOV Network Operator webhook: A dynamic admission controller webhook that validates the Operator custom resource and sets appropriate default values for unset fields.

- SR-IOV Network resources injector: A dynamic admission controller webhook that provides functionality for patching Kubernetes pod specifications with requests and limits for custom network resources such as SR-IOV VFs. The SR-IOV network resources injector automatically adds the resource field to only the first container in a pod.

- SR-IOV network device plugin: A device plugin that discovers, advertises, and allocates SR-IOV network virtual function (VF) resources. Device plugins are used in Kubernetes to enable the use of limited resources, typically in physical devices. Device plugins give the Kubernetes scheduler awareness of resource availability, so that the scheduler can schedule pods on nodes with sufficient resources.

- SR-IOV CNI plugin: A CNI plugin that attaches VF interfaces allocated from the SR-IOV network device plugin directly into a pod.

- SR-IOV InfiniBand CNI plugin: A CNI plugin that attaches InfiniBand (IB) VF interfaces allocated from the SR-IOV network device plugin directly into a pod.

The SR-IOV Network resources injector and SR-IOV Network Operator webhook are enabled by default and can be disabled by editing the default SriovOperatorConfig CR. Use caution when disabling the SR-IOV Network Operator Admission Controller webhook. You can disable the webhook under specific circumstances, such as troubleshooting, or if you want to use unsupported devices.

18.1.1.1. Supported platforms

The SR-IOV Network Operator is supported on the following platforms:

- Bare metal

- Red Hat OpenStack Platform (RHOSP)

18.1.1.2. Supported devices

OpenShift Container Platform supports the following network interface controllers:

| Manufacturer | Model | Vendor ID | Device ID |
|---|---|---|---|
| Broadcom | BCM57414 | 14e4 | 16d7 |
| Broadcom | BCM57508 | 14e4 | 1750 |
| Broadcom | BCM57504 | 14e4 | 1751 |
| Intel | X710 | 8086 | 1572 |
| Intel | X710 Backplane | 8086 | 1581 |
| Intel | X710 Base T | 8086 | 15ff |
| Intel | XL710 | 8086 | 1583 |
| Intel | XXV710 | 8086 | 158b |
| Intel | E810-CQDA2 | 8086 | 1592 |
| Intel | E810-2CQDA2 | 8086 | 1592 |
| Intel | E810-XXVDA2 | 8086 | 159b |
| Intel | E810-XXVDA4 | 8086 | 1593 |
| Intel | E810-XXVDA4T | 8086 | 1593 |
| Intel | Ice E810-XXV Backplane | 8086 | 1599 |
| Intel | Ice E823L Backplane | 8086 | 124c |
| Intel | Ice E823L SFP | 8086 | 124d |
| Marvell | OCTEON Fusion CNF105XX | 177d | ba00 |
| Marvell | OCTEON10 CN10XXX | 177d | b900 |
| Mellanox | MT27700 Family [ConnectX‑4] | 15b3 | 1013 |
| Mellanox | MT27710 Family [ConnectX‑4 Lx] | 15b3 | 1015 |
| Mellanox | MT27800 Family [ConnectX‑5] | 15b3 | 1017 |
| Mellanox | MT28880 Family [ConnectX‑5 Ex] | 15b3 | 1019 |
| Mellanox | MT28908 Family [ConnectX‑6] | 15b3 | 101b |
| Mellanox | MT2892 Family [ConnectX‑6 Dx] | 15b3 | 101d |
| Mellanox | MT2894 Family [ConnectX‑6 Lx] | 15b3 | 101f |
| Mellanox | MT2910 Family [ConnectX‑7] | 15b3 | 1021 |
| Mellanox | MT42822 BlueField‑2 in ConnectX‑6 NIC mode | 15b3 | a2d6 |
| Pensando [1] | DSC-25 dual-port 25G distributed services card for ionic driver | 1dd8 | 1002 |
| Pensando [1] | DSC-100 dual-port 100G distributed services card for ionic driver | 1dd8 | 1003 |
| Silicom | STS Family | 8086 | 1591 |

[1] OpenShift SR-IOV is supported, but when using SR-IOV you must set a static virtual function (VF) media access control (MAC) address by using the SR-IOV CNI config file.

For the most up-to-date list of supported cards and compatible OpenShift Container Platform versions, see OpenShift Single Root I/O Virtualization (SR-IOV) and PTP hardware networks Support Matrix.
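To illustrate how the Vendor ID and Device ID columns map to what a node reports, the following Python sketch looks up the bracketed vendor:device pair that lspci -nn prints against a hand-copied subset of the table above. SUPPORTED_NICS and supported_model are hypothetical names, the subset is deliberately incomplete, and the support matrix remains the authoritative source.

```python
import re
from typing import Optional

# Hypothetical, partial copy of the supported-device table, keyed by
# (vendor ID, device ID) as lowercase hexadecimal strings without "0x".
SUPPORTED_NICS = {
    ("14e4", "16d7"): "Broadcom BCM57414",
    ("8086", "1572"): "Intel X710",
    ("8086", "159b"): "Intel E810-XXVDA2",
    ("15b3", "1017"): "Mellanox MT27800 Family [ConnectX-5]",
    ("15b3", "101b"): "Mellanox MT28908 Family [ConnectX-6]",
    ("15b3", "101d"): "Mellanox MT2892 Family [ConnectX-6 Dx]",
}

# Matches a bracketed "[vvvv:dddd]" vendor:device pair as printed by lspci -nn.
_PCI_ID = re.compile(r"\[([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\]")


def supported_model(lspci_line: str) -> Optional[str]:
    """Return the model name if the line's vendor:device pair is in the subset, else None."""
    for vendor, device in _PCI_ID.findall(lspci_line):
        model = SUPPORTED_NICS.get((vendor.lower(), device.lower()))
        if model is not None:
            return model
    return None


line = ("3b:00.0 Ethernet controller [0200]: Mellanox Technologies "
        "MT27800 Family [ConnectX-5] [15b3:1017]")
print(supported_model(line))
```

The class code [0200] and the model text [ConnectX-5] are ignored because the pattern requires two four-digit hexadecimal fields separated by a colon.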

18.1.2. Additional resources

18.1.3. Next steps

- Configuring the SR-IOV Network Operator

- Configuring an SR-IOV network device

- If you use OpenShift Virtualization: Connecting a virtual machine to an SR-IOV network

- Configuring an SR-IOV network attachment

- Ethernet network attachment: Adding a pod to an SR-IOV additional network

- InfiniBand network attachment: Adding a pod to an SR-IOV additional network

18.2. Configuring an SR-IOV network device

You can configure a Single Root I/O Virtualization (SR-IOV) device in your cluster.

Before you perform any tasks in the following documentation, ensure that you installed the SR-IOV Network Operator.

18.2.1. SR-IOV network node configuration object

You specify the SR-IOV network device configuration for a node by creating an SR-IOV network node policy. The API object for the policy is part of the sriovnetwork.openshift.io API group.

The following YAML describes an SR-IOV network node policy:

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: <name> # 1
  namespace: openshift-sriov-network-operator # 2
spec:
  resourceName: <sriov_resource_name> # 3
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true" # 4
  priority: <priority> # 5
  mtu: <mtu> # 6
  needVhostNet: false # 7
  numVfs: <num> # 8
  externallyManaged: false # 9
  nicSelector: # 10
    vendor: "<vendor_code>" # 11
    deviceID: "<device_id>" # 12
    pfNames: ["<pf_name>", ...] # 13
    rootDevices: ["<pci_bus_id>", ...] # 14
    netFilter: "<filter_string>" # 15
  deviceType: <device_type> # 16
  isRdma: false # 17
  linkType: <link_type> # 18
  eSwitchMode: "switchdev" # 19
  excludeTopology: false # 20
```

- 1

- The name for the custom resource object.

- 2

- The namespace where the SR-IOV Network Operator is installed.

- 3

- The resource name of the SR-IOV network device plugin. You can create multiple SR-IOV network node policies for a resource name.

When specifying a name, be sure to use the accepted syntax expression ^[a-zA-Z0-9_]+$ in the resourceName.

- 4

- The node selector specifies the nodes to configure. Only SR-IOV network devices on the selected nodes are configured. The SR-IOV Container Network Interface (CNI) plugin and device plugin are deployed on selected nodes only.

Important: The SR-IOV Network Operator applies node network configuration policies to nodes in sequence. Before applying node network configuration policies, the SR-IOV Network Operator checks if the machine config pool (MCP) for a node is in an unhealthy state such as Degraded or Updating. If a node is in an unhealthy MCP, the process of applying node network configuration policies to all targeted nodes in the cluster pauses until the MCP returns to a healthy state.

To prevent a node in an unhealthy MCP from blocking the application of node network configuration policies to other nodes, including nodes in other MCPs, you must create a separate node network configuration policy for each MCP.

- 5

- Optional: The priority is an integer value between 0 and 99. A smaller value receives higher priority. For example, a priority of 10 is a higher priority than 99. The default value is 99.

- 6

- Optional: The maximum transmission unit (MTU) of the physical function and all its virtual functions. The maximum MTU value can vary for different network interface controller (NIC) models.

Important: If you want to create a virtual function on the default network interface, ensure that the MTU is set to a value that matches the cluster MTU.

If you want to modify the MTU of a single virtual function while the function is assigned to a pod, leave the MTU value blank in the SR-IOV network node policy. Otherwise, the SR-IOV Network Operator reverts the MTU of the virtual function to the MTU value defined in the SR-IOV network node policy, which might trigger a node drain.

- 7

- Optional: Set needVhostNet to true to mount the /dev/vhost-net device in the pod. Use the mounted /dev/vhost-net device with Data Plane Development Kit (DPDK) to forward traffic to the kernel network stack.

- 8

- The number of virtual functions (VFs) to create for the SR-IOV physical network device. For an Intel network interface controller (NIC), the number of VFs cannot be larger than the total VFs supported by the device. For a Mellanox NIC, the number of VFs cannot be larger than 127.

- 9

- The externallyManaged field indicates whether the SR-IOV Network Operator manages all, or only a subset of, virtual functions (VFs). With the value set to false, the SR-IOV Network Operator manages and configures all VFs on the PF.

Note: When externallyManaged is set to true, you must manually create the virtual functions (VFs) on the physical function (PF) before applying the SriovNetworkNodePolicy resource. If the VFs are not pre-created, the SR-IOV Network Operator webhook blocks the policy request.

When externallyManaged is set to false, the SR-IOV Network Operator automatically creates and manages the VFs, including resetting them if necessary.

To use VFs on the host system, you must create them through NMState and set externallyManaged to true. In this mode, the SR-IOV Network Operator does not modify the PF or the manually managed VFs, except for those explicitly defined in the nicSelector field of your policy. However, the SR-IOV Network Operator continues to manage VFs that are used as pod secondary interfaces.

- 10

- The NIC selector identifies the device to which this resource applies. You do not have to specify values for all the parameters. Identify the network device with enough precision to avoid selecting a device unintentionally.

If you specify rootDevices, you must also specify a value for vendor, deviceID, or pfNames. If you specify both pfNames and rootDevices at the same time, ensure that they refer to the same device. If you specify a value for netFilter, you do not need to specify any other parameter because a network ID is unique.

- 11

- Optional: The hexadecimal vendor identifier of the SR-IOV network device. The only allowed values are 8086 (Intel) and 15b3 (Mellanox).

- 12

- Optional: The hexadecimal device identifier of the SR-IOV network device. For example, 101b is the device ID for a Mellanox ConnectX-6 device.

- 13

- Optional: An array of one or more physical function (PF) names the resource must apply to.

- 14

- Optional: An array of one or more PCI bus addresses the resource must apply to. For example, 0000:02:00.1.

- 15

- Optional: The platform-specific network filter. The only supported platform is Red Hat OpenStack Platform (RHOSP). Acceptable values use the following format: openstack/NetworkID:xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx. Replace xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx with the value from the /var/config/openstack/latest/network_data.json metadata file. This filter ensures that VFs are associated with a specific OpenStack network. The Operator uses this filter to map the VFs to the appropriate network based on metadata provided by the OpenStack platform.

- 16

- Optional: The driver to configure for the VFs created from this resource. The only allowed values are netdevice and vfio-pci. The default value is netdevice.

For a Mellanox NIC to work in DPDK mode on bare metal nodes, use the netdevice driver type and set isRdma to true.

- 17

- Optional: Configures whether to enable remote direct memory access (RDMA) mode. The default value is false.

If the isRdma parameter is set to true, you can continue to use the RDMA-enabled VF as a normal network device. A device can be used in either mode.

Set isRdma to true and additionally set needVhostNet to true to configure a Mellanox NIC for use with Fast Datapath DPDK applications.

Note: You cannot set the isRdma parameter to true for Intel NICs.

- 18

- Optional: The link type for the VFs. The default value is eth for Ethernet. Change this value to ib for InfiniBand.

When linkType is set to ib, isRdma is automatically set to true by the SR-IOV Network Operator webhook. When linkType is set to ib, deviceType should not be set to vfio-pci.

Do not set linkType to eth for SriovNetworkNodePolicy, because this can lead to an incorrect number of available devices reported by the device plugin.

- 19

- Optional: To enable hardware offloading, you must set the eSwitchMode field to "switchdev". For more information about hardware offloading, see "Configuring hardware offloading".

- 20

- Optional: To exclude advertising an SR-IOV network resource’s NUMA node to the Topology Manager, set the value to true. The default value is false.
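Several of the callouts above state rules that you can check before applying a policy: the resourceName syntax, the priority range, the numVfs limits, the rootDevices pairing, and the isRdma restriction for Intel NICs. The following Python sketch checks a policy spec represented as a plain dictionary, for example the spec section of the YAML after loading it with a YAML parser. validate_policy_spec and its messages are hypothetical; the sketch covers only those documented rules and does not replace the SR-IOV Network Operator webhook.

```python
import re
from typing import Any, Dict, List, Optional

# Syntax required for resourceName (callout 3).
RESOURCE_NAME = re.compile(r"^[a-zA-Z0-9_]+$")
MELLANOX_VENDOR = "15b3"
INTEL_VENDOR = "8086"


def validate_policy_spec(spec: Dict[str, Any], total_vfs: Optional[int] = None) -> List[str]:
    """Return the problems found in an SriovNetworkNodePolicy spec; an empty list means none.

    total_vfs is the device's total VF count (for example, the totalvfs value in the
    SriovNetworkNodeState object). It is only used for the Intel limit.
    """
    errors: List[str] = []

    name = str(spec.get("resourceName", ""))
    if not RESOURCE_NAME.match(name):
        errors.append(f"resourceName {name!r} must match ^[a-zA-Z0-9_]+$")

    priority = spec.get("priority", 99)  # 99 is the documented default
    if not isinstance(priority, int) or not 0 <= priority <= 99:
        errors.append("priority must be an integer from 0 to 99")

    selector = spec.get("nicSelector", {})
    vendor = str(selector.get("vendor", "")).lower()
    num_vfs = int(spec.get("numVfs", 0))
    if vendor == MELLANOX_VENDOR and num_vfs > 127:
        errors.append("numVfs cannot be larger than 127 for a Mellanox NIC")
    if vendor == INTEL_VENDOR and total_vfs is not None and num_vfs > total_vfs:
        errors.append(f"numVfs cannot be larger than the {total_vfs} VFs the device supports")

    # rootDevices must be combined with vendor, deviceID, or pfNames (callout 10).
    if selector.get("rootDevices") and not any(
        selector.get(key) for key in ("vendor", "deviceID", "pfNames")
    ):
        errors.append("rootDevices requires vendor, deviceID, or pfNames")

    if vendor == INTEL_VENDOR and spec.get("isRdma") is True:
        errors.append("isRdma cannot be true for Intel NICs")

    return errors


spec = {
    "resourceName": "net-1",
    "priority": 10,
    "numVfs": 16,
    "nicSelector": {"vendor": "15b3", "rootDevices": ["0000:02:00.1"]},
}
# Only the resourceName is reported: a hyphen is not allowed by the syntax expression.
print(validate_policy_spec(spec))
```

Returning a list instead of raising on the first problem lets you report every violation in one pass.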

18.2.1.1. SR-IOV network node configuration examples

The following example describes the configuration for an InfiniBand device:

Example configuration for an InfiniBand device

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: <name>
  namespace: openshift-sriov-network-operator
spec:
  resourceName: <sriov_resource_name>
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  numVfs: <num>
  nicSelector:
    vendor: "<vendor_code>"
    deviceID: "<device_id>"
    rootDevices:
    - "<pci_bus_id>"
  linkType: <link_type>
  isRdma: true
# ...
```
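The InfiniBand example sets isRdma to true explicitly, but callout 18 states that the webhook sets it automatically when linkType is ib. The following Python sketch fills in the defaults documented in the callouts (priority 99, deviceType netdevice, isRdma false, linkType eth) and then applies that ib rule to a spec dictionary. apply_documented_defaults is a hypothetical helper that only mirrors the documented behavior; it is not the webhook's code.

```python
import copy
from typing import Any, Dict

# Defaults stated in the SriovNetworkNodePolicy callouts.
DEFAULTS = {"priority": 99, "deviceType": "netdevice", "isRdma": False, "linkType": "eth"}


def apply_documented_defaults(spec: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of spec with the documented defaults and the ib rule applied."""
    result = copy.deepcopy(spec)
    for key, value in DEFAULTS.items():
        result.setdefault(key, value)
    if result["linkType"] == "ib":
        # Documented webhook behavior: isRdma becomes true for InfiniBand.
        result["isRdma"] = True
        # Documented restriction: deviceType should not be vfio-pci with ib.
        if result["deviceType"] == "vfio-pci":
            raise ValueError("deviceType should not be vfio-pci when linkType is ib")
    return result


ib_spec = {"resourceName": "ib_net", "numVfs": 4, "linkType": "ib"}
print(apply_documented_defaults(ib_spec))
```

The function works on a deep copy so that the caller's original spec stays unchanged.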

The following example describes the configuration for an SR-IOV network device in a RHOSP virtual machine:

Example configuration for an SR-IOV device in a virtual machine

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: <name>
  namespace: openshift-sriov-network-operator
spec:
  resourceName: <sriov_resource_name>
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  numVfs: 1
  nicSelector:
    vendor: "<vendor_code>"
    deviceID: "<device_id>"
    netFilter: "openstack/NetworkID:ea24bd04-8674-4f69-b0ee-fa0b3bd20509"
# ...
```
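The netFilter value combines the fixed prefix openstack/NetworkID: with a network UUID. The following Python sketch builds and checks that string. It assumes that you have already extracted the network UUID from the /var/config/openstack/latest/network_data.json metadata file; the sketch does not parse that file. make_net_filter and is_valid_net_filter are hypothetical names, and the sketch normalizes the UUID to lowercase.

```python
import re
import uuid

PREFIX = "openstack/NetworkID:"
# Prefix followed by a lowercase 8-4-4-4-12 hexadecimal UUID, as in the example above.
_FILTER = re.compile(r"^openstack/NetworkID:[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}$")


def make_net_filter(network_id: str) -> str:
    """Build a netFilter string; uuid.UUID raises ValueError for a malformed ID."""
    canonical = str(uuid.UUID(network_id))  # canonical lowercase, hyphenated form
    return PREFIX + canonical


def is_valid_net_filter(value: str) -> bool:
    """Check that value has the openstack/NetworkID:<uuid> format."""
    return _FILTER.match(value) is not None


net_filter = make_net_filter("ea24bd04-8674-4f69-b0ee-fa0b3bd20509")
print(net_filter, is_valid_net_filter(net_filter))
```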

18.2.1.2. Automated discovery of SR-IOV network devices

The SR-IOV Network Operator searches your cluster for SR-IOV capable network devices on worker nodes. The Operator creates and updates a SriovNetworkNodeState custom resource (CR) for each worker node that provides a compatible SR-IOV network device.

The CR is assigned the same name as the worker node. The status.interfaces list provides information about the network devices on a node.

Do not modify a SriovNetworkNodeState object. The Operator creates and manages these resources automatically.

18.2.1.2.1. Example SriovNetworkNodeState object

The following YAML is an example of a SriovNetworkNodeState object created by the SR-IOV Network Operator:

An SriovNetworkNodeState object

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodeState
metadata:
  name: node-25 # same name as the worker node
  namespace: openshift-sriov-network-operator
  ownerReferences:
  - apiVersion: sriovnetwork.openshift.io/v1
    blockOwnerDeletion: true
    controller: true
    kind: SriovNetworkNodePolicy
    name: default
spec:
  dpConfigVersion: "39824"
status:
  interfaces: # network devices discovered on the node
  - deviceID: "1017"
    driver: mlx5_core
    mtu: 1500
    name: ens785f0
    pciAddress: "0000:18:00.0"
    totalvfs: 8
    vendor: 15b3
  - deviceID: "1017"
    driver: mlx5_core
    mtu: 1500
    name: ens785f1
    pciAddress: "0000:18:00.1"
    totalvfs: 8
    vendor: 15b3
  - deviceID: 158b
    driver: i40e
    mtu: 1500
    name: ens817f0
    pciAddress: 0000:81:00.0
    totalvfs: 64
    vendor: "8086"
  - deviceID: 158b
    driver: i40e
    mtu: 1500
    name: ens817f1
    pciAddress: 0000:81:00.1
    totalvfs: 64
    vendor: "8086"
  - deviceID: 158b
    driver: i40e
    mtu: 1500
    name: ens803f0
    pciAddress: 0000:86:00.0
    totalvfs: 64
    vendor: "8086"
  syncStatus: Succeeded
```
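To review the discovered devices without reading the full object, you can export the state as JSON with oc get sriovnetworknodestates -n openshift-sriov-network-operator <node_name> -o json and summarize status.interfaces. The following Python sketch does that for a dictionary with the same fields as the example above, and also reports syncStatus and, if present, the lastSyncError field that appears in the troubleshooting section. summarize_node_state is a hypothetical helper, and the sample data is a shortened copy of the example object.

```python
from typing import Any, Dict, List


def summarize_node_state(state: Dict[str, Any]) -> List[str]:
    """Return one line per discovered interface, followed by the sync status."""
    status = state.get("status", {})
    lines = []
    for iface in status.get("interfaces", []):
        lines.append(
            f"{iface['name']} {iface['pciAddress']} {iface['driver']} "
            f"vendor={iface['vendor']} device={iface['deviceID']} "
            f"totalvfs={iface.get('totalvfs', 0)}"
        )
    lines.append(f"syncStatus={status.get('syncStatus', 'unknown')}")
    if status.get("lastSyncError"):
        lines.append(f"lastSyncError={status['lastSyncError']}")
    return lines


# Shortened copy of the example object; in practice, use json.loads() on the oc output.
sample = {
    "metadata": {"name": "node-25"},
    "status": {
        "interfaces": [
            {"deviceID": "1017", "driver": "mlx5_core", "mtu": 1500, "name": "ens785f0",
             "pciAddress": "0000:18:00.0", "totalvfs": 8, "vendor": "15b3"},
            {"deviceID": "158b", "driver": "i40e", "mtu": 1500, "name": "ens817f0",
             "pciAddress": "0000:81:00.0", "totalvfs": 64, "vendor": "8086"},
        ],
        "syncStatus": "Succeeded",
    },
}
for entry in summarize_node_state(sample):
    print(entry)
```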

18.2.1.3. Virtual function (VF) partitioning for SR-IOV devices

In some cases, you might want to split virtual functions (VFs) from the same physical function (PF) into multiple resource pools. For example, you might want some of the VFs to load with the default driver and the remaining VFs to load with the vfio-pci driver. In such a deployment, you can use the pfNames selector in your SriovNetworkNodePolicy custom resource (CR) to specify a range of VFs for a pool by using the following format: <pfname>#<first_vf>-<last_vf>.

For example, the following YAML shows the selector for an interface named netpf0 with VF 2 through 7:

pfNames: ["netpf0#2-7"]

- netpf0 is the PF interface name.
- 2 is the first VF index (0-based) that is included in the range.
- 7 is the last VF index (0-based) that is included in the range.

You can select VFs from the same PF by using different policy CRs if the following requirements are met:

- The numVfs value must be identical for policies that select the same PF.

- The VF index must be in the range of 0 to <numVfs>-1. For example, if you have a policy with numVfs set to 8, then the <first_vf> value must not be smaller than 0, and the <last_vf> must not be larger than 7.

- The VF ranges in different policies must not overlap.

- The <first_vf> must not be larger than the <last_vf>.
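The four requirements above can be checked mechanically. The following Python sketch parses pfNames entries that use the <pfname>#<first_vf>-<last_vf> format and reports violations across a set of policies. The function names and the input shape (a mapping from policy name to numVfs and pfNames) are assumptions for illustration; plain PF names without a range are out of scope, and the SR-IOV Network Operator performs its own validation.

```python
import re
from typing import Dict, List, Tuple

_RANGE = re.compile(r"^(?P<pf>[^#]+)#(?P<first>\d+)-(?P<last>\d+)$")


def parse_pf_name(entry: str) -> Tuple[str, int, int]:
    """Split an entry such as "netpf0#2-7" into ("netpf0", 2, 7)."""
    match = _RANGE.match(entry)
    if match is None:
        raise ValueError(f"{entry!r} is not in <pfname>#<first_vf>-<last_vf> format")
    return match["pf"], int(match["first"]), int(match["last"])


def check_partitions(policies: Dict[str, Tuple[int, List[str]]]) -> List[str]:
    """policies maps a policy name to (numVfs, pfNames); return the rule violations found."""
    errors: List[str] = []
    num_vfs_per_pf: Dict[str, int] = {}
    ranges_per_pf: Dict[str, List[Tuple[int, int, str]]] = {}
    for policy, (num_vfs, pf_names) in policies.items():
        for entry in pf_names:
            pf, first, last = parse_pf_name(entry)
            # numVfs must be identical for policies that select the same PF.
            if num_vfs_per_pf.setdefault(pf, num_vfs) != num_vfs:
                errors.append(f"{policy}: numVfs differs from another policy on {pf}")
            # first_vf must not be larger than last_vf.
            if first > last:
                errors.append(f"{policy}: first VF {first} is larger than last VF {last}")
            # Indexes must stay within 0..numVfs-1; the regex already rejects negatives.
            if last > num_vfs - 1:
                errors.append(f"{policy}: VF {last} is outside 0-{num_vfs - 1}")
            # Ranges on the same PF must not overlap.
            for other_first, other_last, other in ranges_per_pf.get(pf, []):
                if first <= other_last and other_first <= last:
                    errors.append(f"{policy}: range {first}-{last} overlaps {other}")
            ranges_per_pf.setdefault(pf, []).append((first, last, policy))
    return errors


# The two policies from the example in this section: VF 0 and VFs 8-15 of netpf0.
print(check_partitions({
    "policy-net-1": (16, ["netpf0#0-0"]),
    "policy-net-1-dpdk": (16, ["netpf0#8-15"]),
}))  # []
```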

The following example illustrates NIC partitioning for an SR-IOV device.

The policy policy-net-1 defines a resource pool net1 that contains VF 0 of PF netpf0 with the default VF driver. The policy policy-net-1-dpdk defines a resource pool net1dpdk that contains VFs 8 to 15 of PF netpf0 with the vfio-pci VF driver.

Policy policy-net-1:

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: policy-net-1
  namespace: openshift-sriov-network-operator
spec:
  resourceName: net1
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  numVfs: 16
  nicSelector:
    pfNames: ["netpf0#0-0"]
  deviceType: netdevice
```

Policy policy-net-1-dpdk:

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: policy-net-1-dpdk
  namespace: openshift-sriov-network-operator
spec:
  resourceName: net1dpdk
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  numVfs: 16
  nicSelector:
    pfNames: ["netpf0#8-15"]
  deviceType: vfio-pci
```

Verifying that the interface is successfully partitioned

Confirm that the interface is partitioned into virtual functions (VFs) for the SR-IOV device by running the following command:

$ ip link show <interface>

where <interface> is the interface that you specified when partitioning to VFs for the SR-IOV device, for example, ens3f1.

Example output

```
5: ens3f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 3c:fd:fe:d1:bc:01 brd ff:ff:ff:ff:ff:ff
    vf 0 link/ether 5a:e7:88:25:ea:a0 brd ff:ff:ff:ff:ff:ff, spoof checking on, link-state auto, trust off
    vf 1 link/ether 3e:1d:36:d7:3d:49 brd ff:ff:ff:ff:ff:ff, spoof checking on, link-state auto, trust off
    vf 2 link/ether ce:09:56:97:df:f9 brd ff:ff:ff:ff:ff:ff, spoof checking on, link-state auto, trust off
    vf 3 link/ether 5e:91:cf:88:d1:38 brd ff:ff:ff:ff:ff:ff, spoof checking on, link-state auto, trust off
    vf 4 link/ether e6:06:a1:96:2f:de brd ff:ff:ff:ff:ff:ff, spoof checking on, link-state auto, trust off
```
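If you check the result from a script, you can collect the vf entries from the ip link show output. The following Python sketch assumes the output format shown above, in which each VF appears as vf <index> followed by link/ether <MAC>. vf_macs is a hypothetical helper, and the exact layout can differ between iproute2 versions.

```python
import re
from typing import Dict

# Each VF entry starts with "vf <index>", followed by "link/ether <MAC>".
_VF_LINE = re.compile(r"\bvf (\d+)\s+link/ether ([0-9a-f:]{17})")


def vf_macs(ip_link_output: str) -> Dict[int, str]:
    """Map each VF index found in the output to its MAC address."""
    return {int(index): mac for index, mac in _VF_LINE.findall(ip_link_output)}


sample = """5: ens3f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 3c:fd:fe:d1:bc:01 brd ff:ff:ff:ff:ff:ff
    vf 0     link/ether 5a:e7:88:25:ea:a0 brd ff:ff:ff:ff:ff:ff, spoof checking on, link-state auto, trust off
    vf 1     link/ether 3e:1d:36:d7:3d:49 brd ff:ff:ff:ff:ff:ff, spoof checking on, link-state auto, trust off
"""
macs = vf_macs(sample)
print(len(macs), macs[0])
```

The PF's own link/ether line is not counted because it is not preceded by a vf index.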

18.2.1.4. A test pod template for clusters that use SR-IOV on OpenStack

The following testpmd pod demonstrates container creation with huge pages, reserved CPUs, and the SR-IOV port.

An example testpmd pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: testpmd-sriov
  namespace: mynamespace
  annotations:
    cpu-load-balancing.crio.io: "disable"
    cpu-quota.crio.io: "disable"
  # ...
spec:
  containers:
  - name: testpmd
    command: ["sleep", "99999"]
    image: registry.redhat.io/openshift4/dpdk-base-rhel8:v4.9
    securityContext:
      capabilities:
        add: ["IPC_LOCK","SYS_ADMIN"]
      privileged: true
      runAsUser: 0
    resources:
      requests:
        memory: 1000Mi
        hugepages-1Gi: 1Gi
        cpu: '2'
        openshift.io/sriov1: 1
      limits:
        hugepages-1Gi: 1Gi
        cpu: '2'
        memory: 1000Mi
        openshift.io/sriov1: 1
    volumeMounts:
    - mountPath: /dev/hugepages
      name: hugepage
      readOnly: False
  runtimeClassName: performance-cnf-performanceprofile # 1
  volumes:
  - name: hugepage
    emptyDir:
      medium: HugePages
```

- 1

- This example assumes that the name of the performance profile is cnf-performanceprofile.

18.2.1.5. A test pod template for clusters that use OVS hardware offloading on OpenStack

The following testpmd pod demonstrates Open vSwitch (OVS) hardware offloading on Red Hat OpenStack Platform (RHOSP).

An example testpmd pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: testpmd-sriov
  namespace: mynamespace
  annotations:
    k8s.v1.cni.cncf.io/networks: hwoffload1
spec:
  runtimeClassName: performance-cnf-performanceprofile # 1
  containers:
  - name: testpmd
    command: ["sleep", "99999"]
    image: registry.redhat.io/openshift4/dpdk-base-rhel8:v4.9
    securityContext:
      capabilities:
        add: ["IPC_LOCK","SYS_ADMIN"]
      privileged: true
      runAsUser: 0
    resources:
      requests:
        memory: 1000Mi
        hugepages-1Gi: 1Gi
        cpu: '2'
      limits:
        hugepages-1Gi: 1Gi
        cpu: '2'
        memory: 1000Mi
    volumeMounts:
    - mountPath: /mnt/huge
      name: hugepage
      readOnly: False
  volumes:
  - name: hugepage
    emptyDir:
      medium: HugePages
```

- 1

- If your performance profile is not named cnf-performanceprofile, replace that string with the correct performance profile name.

18.2.1.6. Huge pages resource injection for Downward API

When a pod specification includes a resource request or limit for huge pages, the Network Resources Injector automatically adds Downward API fields to the pod specification to provide the huge pages information to the container.

The Network Resources Injector adds a volume that is named podnetinfo and is mounted at /etc/podnetinfo for each container in the pod. The volume uses the Downward API and includes a file for huge pages requests and limits. The file naming convention is as follows:

- /etc/podnetinfo/hugepages_1G_request_<container-name>
- /etc/podnetinfo/hugepages_1G_limit_<container-name>
- /etc/podnetinfo/hugepages_2M_request_<container-name>
- /etc/podnetinfo/hugepages_2M_limit_<container-name>

The paths specified in the previous list are compatible with the app-netutil library. By default, the library is configured to search for resource information in the /etc/podnetinfo directory. If you choose to specify the Downward API path items yourself, the app-netutil library searches for the following paths in addition to the paths in the previous list.

- /etc/podnetinfo/hugepages_request
- /etc/podnetinfo/hugepages_limit
- /etc/podnetinfo/hugepages_1G_request
- /etc/podnetinfo/hugepages_1G_limit
- /etc/podnetinfo/hugepages_2M_request
- /etc/podnetinfo/hugepages_2M_limit

As with the paths that the Network Resources Injector can create, the paths in the preceding list can optionally end with a _<container-name> suffix.
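To illustrate the naming convention from inside a container, the following Python sketch builds the candidate file names for a given container and page size, and reads the first one that exists. Trying the container-specific name before the generic name is an assumption made for this sketch, not the documented app-netutil search order. candidate_paths and read_hugepages are hypothetical helpers, and the value is returned as the raw string that the Downward API wrote.

```python
import os
from typing import List, Optional

PODNETINFO = "/etc/podnetinfo"


def candidate_paths(kind: str, size: Optional[str], container: str,
                    base: str = PODNETINFO) -> List[str]:
    """Return candidate paths, container-specific first.

    kind is "request" or "limit"; size is "1G", "2M", or None for the size-less names.
    """
    stem = f"hugepages_{size}_{kind}" if size else f"hugepages_{kind}"
    return [os.path.join(base, f"{stem}_{container}"), os.path.join(base, stem)]


def read_hugepages(kind: str, size: Optional[str], container: str,
                   base: str = PODNETINFO) -> Optional[str]:
    """Return the contents of the first existing candidate file, or None."""
    for path in candidate_paths(kind, size, container, base):
        try:
            with open(path, encoding="utf-8") as handle:
                return handle.read().strip()
        except FileNotFoundError:
            continue
    return None


print(candidate_paths("request", "1G", "testpmd"))
```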

18.2.2. Configuring SR-IOV network devices

The SR-IOV Network Operator adds the SriovNetworkNodePolicy.sriovnetwork.openshift.io CustomResourceDefinition to OpenShift Container Platform. You can configure an SR-IOV network device by creating a SriovNetworkNodePolicy custom resource (CR).

When applying the configuration specified in a SriovNetworkNodePolicy object, the SR-IOV Operator might drain the nodes, and in some cases, reboot nodes. A reboot happens only in the following cases:

- With Mellanox NICs (mlx5 driver), a node reboot happens every time the number of virtual functions (VFs) increases on a physical function (PF).

- With Intel NICs, a reboot happens only if the kernel parameters do not include intel_iommu=on and iommu=pt.
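When you plan a maintenance window, the two reboot cases can be expressed as a small decision function. The following Python sketch encodes only the cases listed above. It identifies the NIC by vendor ID (15b3 for Mellanox, 8086 for Intel) and takes the kernel arguments as a list, for example the words of /proc/cmdline on the node. will_reboot is a hypothetical helper; a node drain can still happen when it returns False.

```python
from typing import Iterable

MELLANOX_VENDOR = "15b3"
INTEL_VENDOR = "8086"
REQUIRED_INTEL_ARGS = {"intel_iommu=on", "iommu=pt"}


def will_reboot(vendor: str, current_vfs: int, requested_vfs: int,
                kernel_args: Iterable[str]) -> bool:
    """Predict a reboot from the documented cases only; this is not an exhaustive model."""
    vendor = vendor.lower()
    if vendor == MELLANOX_VENDOR:
        # Mellanox (mlx5): a reboot happens every time the VF count on a PF increases.
        return requested_vfs > current_vfs
    if vendor == INTEL_VENDOR:
        # Intel: a reboot happens only if intel_iommu=on and iommu=pt are not both present.
        return not REQUIRED_INTEL_ARGS.issubset(set(kernel_args))
    return False


cmdline = "BOOT_IMAGE=/vmlinuz root=/dev/sda1 intel_iommu=on iommu=pt".split()
print(will_reboot("8086", 0, 8, cmdline), will_reboot("15b3", 4, 8, cmdline))
```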

It might take several minutes for a configuration change to apply.

Prerequisites

- You installed the OpenShift CLI (oc).

- You have access to the cluster as a user with the cluster-admin role.

- You have installed the SR-IOV Network Operator.

- You have enough available nodes in your cluster to handle the evicted workload from drained nodes.

- You have not selected any control plane nodes for SR-IOV network device configuration.

Procedure

- Create an SriovNetworkNodePolicy object, and then save the YAML in the <name>-sriov-node-network.yaml file. Replace <name> with the name for this configuration.

- Optional: Label the SR-IOV capable cluster nodes with SriovNetworkNodePolicy.Spec.NodeSelector if they are not already labeled. For more information about labeling nodes, see "Understanding how to update labels on nodes".

- Create the SriovNetworkNodePolicy object:

$ oc create -f <name>-sriov-node-network.yaml

where <name> specifies the name for this configuration.

After you apply the configuration update, all the pods in the openshift-sriov-network-operator namespace transition to the Running status.

- To verify that the SR-IOV network device is configured, enter the following command. Replace <node_name> with the name of a node with the SR-IOV network device that you just configured.

$ oc get sriovnetworknodestates -n openshift-sriov-network-operator <node_name> -o jsonpath='{.status.syncStatus}'
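Because a configuration change can take several minutes to apply, you might poll the syncStatus field until it reports Succeeded. The following Python sketch runs the oc get command from the last step through subprocess and retries until a timeout expires. The function names, the default timeout and interval, and the treatment of every value other than Succeeded as still pending are assumptions. The status reader is passed in as a function so that you can exercise the loop without a cluster.

```python
import subprocess
import time
from typing import Callable


def read_sync_status(node_name: str) -> str:
    """Run the verification command from the procedure and return the syncStatus value."""
    result = subprocess.run(
        ["oc", "get", "sriovnetworknodestates", "-n", "openshift-sriov-network-operator",
         node_name, "-o", "jsonpath={.status.syncStatus}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def wait_for_sync(get_status: Callable[[], str], timeout: float = 900.0,
                  interval: float = 10.0) -> bool:
    """Poll get_status until it returns "Succeeded"; return False once the timeout would pass."""
    deadline = time.monotonic() + timeout
    while True:
        if get_status() == "Succeeded":
            return True
        if time.monotonic() + interval >= deadline:
            return False
        time.sleep(interval)


# Against a cluster (requires oc and a logged-in session):
# wait_for_sync(lambda: read_sync_status("<node_name>"))
```

In the Python argument list, the jsonpath expression needs no shell quoting because subprocess passes each list element to oc as a single argument.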

Additional resources

18.2.3. Creating a non-uniform memory access (NUMA) aligned SR-IOV pod

You can create a NUMA-aligned SR-IOV pod by restricting the SR-IOV and CPU resources allocated to the pod to the same NUMA node, using the restricted or single-numa-node Topology Manager policy.

Prerequisites

- You have installed the OpenShift CLI (oc).

- You have configured the CPU Manager policy to static. For more information on CPU Manager, see the "Additional resources" section.

- You have configured the Topology Manager policy to single-numa-node.

Note: When single-numa-node is unable to satisfy the request, you can configure the Topology Manager policy to restricted. For more flexible SR-IOV network resource scheduling, see Excluding SR-IOV network topology during NUMA-aware scheduling in the Additional resources section.

Procedure

Create the following SR-IOV pod spec, and then save the YAML in the <name>-sriov-pod.yaml file. Replace <name> with a name for this pod.

The following example shows an SR-IOV pod spec:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: sample-pod
  annotations:
    k8s.v1.cni.cncf.io/networks: <name> # 1
spec:
  containers:
  - name: sample-container
    image: <image> # 2
    command: ["sleep", "infinity"]
    resources:
      limits:
        memory: "1Gi" # 3
        cpu: "2" # 4
      requests:
        memory: "1Gi"
        cpu: "2"
```

- 1

- Replace <name> with the name of the SR-IOV network attachment definition CR.

- 2

- Replace <image> with the name of the sample-pod image.

- 3

- To create the SR-IOV pod with guaranteed QoS, set memory limits equal to memory requests.

- 4

- To create the SR-IOV pod with guaranteed QoS, set cpu limits equal to cpu requests.
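Callouts 3 and 4 follow the Kubernetes rule for the Guaranteed QoS class: every container sets CPU and memory limits, and any requests equal those limits (a missing request defaults to the limit). The following Python sketch checks a pod spec dictionary against that rule. It also flags a non-integer CPU value, because the static CPU Manager policy assigns exclusive CPUs only for whole-CPU requests. guaranteed_qos_problems is a hypothetical helper. It checks only regular containers, not init containers, and compares quantity strings literally, so it treats "1Gi" and "1024Mi" as different.

```python
from typing import Any, Dict, List


def guaranteed_qos_problems(pod: Dict[str, Any]) -> List[str]:
    """Return the reasons the pod would miss Guaranteed QoS or exclusive CPUs; empty if none."""
    problems: List[str] = []
    for container in pod["spec"]["containers"]:
        resources = container.get("resources", {})
        limits = resources.get("limits", {})
        requests = resources.get("requests", {})
        for name in ("cpu", "memory"):
            if name not in limits:
                problems.append(f"{container['name']}: no {name} limit")
            # Kubernetes defaults a missing request to the limit.
            elif str(requests.get(name, limits[name])) != str(limits[name]):
                problems.append(f"{container['name']}: {name} request differs from limit")
        cpu = str(limits.get("cpu", ""))
        if cpu and not cpu.isdigit():
            problems.append(f"{container['name']}: cpu {cpu!r} is not a whole number of CPUs")
    return problems


# The resources from the sample-pod spec above.
sample_pod = {
    "spec": {"containers": [{
        "name": "sample-container",
        "resources": {
            "limits": {"memory": "1Gi", "cpu": "2"},
            "requests": {"memory": "1Gi", "cpu": "2"},
        },
    }]}
}
print(guaranteed_qos_problems(sample_pod))  # []
```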

Create the sample SR-IOV pod by running the following command:

$ oc create -f <filename>

where <filename> is the name of the file that you created in the previous step.

Confirm that the sample-pod is configured with guaranteed QoS:

$ oc describe pod sample-pod

Confirm that the sample-pod is allocated exclusive CPUs:

$ oc exec sample-pod -- cat /sys/fs/cgroup/cpuset/cpuset.cpus

Confirm that the SR-IOV device and CPUs that are allocated for the sample-pod are on the same NUMA node:

$ oc exec sample-pod -- cat /sys/fs/cgroup/cpuset/cpuset.cpus
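The cpuset.cpus file lists CPUs in the kernel list format, such as 2-3,8. To compare those CPUs with the NUMA node of the SR-IOV device, one approach is to read the device's NUMA node from /sys/bus/pci/devices/<pci_address>/numa_node on the worker node, and that node's CPUs from /sys/devices/system/node/node<N>/cpulist. This approach is an assumption and is not part of the procedure above. The following Python sketch parses the list format and performs the comparison. parse_cpu_list and same_numa_node are hypothetical helpers, and the sample values are illustrative.

```python
from typing import Set


def parse_cpu_list(text: str) -> Set[int]:
    """Parse the kernel CPU list format, for example "0-3,8,10-11", into a set of CPU IDs."""
    cpus: Set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return cpus


def same_numa_node(pod_cpuset: str, node_cpulist: str) -> bool:
    """True if the pod has CPUs and every one of them belongs to the NUMA node's CPU list."""
    pod_cpus = parse_cpu_list(pod_cpuset)
    return bool(pod_cpus) and pod_cpus <= parse_cpu_list(node_cpulist)


# Illustrative values: the pod's cpuset.cpus and a node<N>/cpulist from the worker node.
print(same_numa_node("2-3", "0-15,32-47"))   # True
print(same_numa_node("2,20", "0-15,32-47"))  # False
```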

18.2.4. Exclude the SR-IOV network topology for NUMA-aware scheduling

You can exclude advertising the Non-Uniform Memory Access (NUMA) node for the SR-IOV network to the Topology Manager for more flexible SR-IOV network deployments during NUMA-aware pod scheduling.

In some scenarios, it is a priority to maximize CPU and memory resources for a pod on a single NUMA node. By not providing a hint to the Topology Manager about the NUMA node for the pod’s SR-IOV network resource, the Topology Manager can deploy the SR-IOV network resource and the pod CPU and memory resources to different NUMA nodes. This can increase network latency because of the data transfer between NUMA nodes. However, it is acceptable in scenarios where workloads require optimal CPU and memory performance.

For example, consider a compute node, compute-1, that features two NUMA nodes: numa0 and numa1. The SR-IOV-enabled NIC is present on numa0. The CPUs available for pod scheduling are present on numa1 only. By setting the excludeTopology specification to true, the Topology Manager can assign CPU and memory resources for the pod to numa1 and can assign the SR-IOV network resource for the same pod to numa0. This is only possible when you set the excludeTopology specification to true. Otherwise, the Topology Manager attempts to place all resources on the same NUMA node.

18.2.5. Troubleshooting SR-IOV configuration

If you encounter problems after following the procedure to configure an SR-IOV network device, the following sections address some error conditions.

To display the state of nodes, run the following command:

$ oc get sriovnetworknodestates -n openshift-sriov-network-operator <node_name>

where: <node_name> specifies the name of a node with an SR-IOV network device.

Error output: Cannot allocate memory

"lastSyncError": "write /sys/bus/pci/devices/0000:3b:00.1/sriov_numvfs: cannot allocate memory"

When a node indicates that it cannot allocate memory, check the following items:

- Confirm that global SR-IOV settings are enabled in the BIOS for the node.

- Confirm that VT-d is enabled in the BIOS for the node.

Additional resources

18.2.6. Next steps

18.3. Configuring an SR-IOV Ethernet network attachment

You can configure an Ethernet network attachment for a Single Root I/O Virtualization (SR-IOV) device in the cluster.

Before you perform any tasks in the following documentation, ensure that you installed the SR-IOV Network Operator.

18.3.1. Ethernet device configuration object

You can configure an Ethernet network device by defining an SriovNetwork object.

The following YAML describes an SriovNetwork object:

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: <name> # 1
  namespace: openshift-sriov-network-operator # 2
spec:
  resourceName: <sriov_resource_name> # 3
  networkNamespace: <target_namespace> # 4
  vlan: <vlan> # 5
  spoofChk: "<spoof_check>" # 6
  ipam: |- # 7
    {}
  linkState: <link_state> # 8
  maxTxRate: <max_tx_rate> # 9
  minTxRate: <min_tx_rate> # 10
  vlanQoS: <vlan_qos> # 11
  trust: "<trust_vf>" # 12
  capabilities: <capabilities> # 13

- 1: A name for the object. The SR-IOV Network Operator creates a NetworkAttachmentDefinition object with the same name.
- 2: The namespace where the SR-IOV Network Operator is installed.
- 3: The value for the spec.resourceName parameter from the SriovNetworkNodePolicy object that defines the SR-IOV hardware for this additional network.
- 4: The target namespace for the SriovNetwork object. Only pods in the target namespace can attach to the additional network.
- 5: Optional: A Virtual LAN (VLAN) ID for the additional network. The integer value must be from 0 to 4095. The default value is 0.
- 6: Optional: The spoof check mode of the VF. The allowed values are the strings "on" and "off". Important: You must enclose the value that you specify in quotes, or the SR-IOV Network Operator rejects the object.
- 7: A configuration object for the IPAM CNI plugin as a YAML block scalar. The plugin manages IP address assignment for the attachment definition.
- 8: Optional: The link state of the virtual function (VF). The allowed values are enable, disable, and auto.
- 9: Optional: A maximum transmission rate, in Mbps, for the VF.
- 10: Optional: A minimum transmission rate, in Mbps, for the VF. This value must be less than or equal to the maximum transmission rate. Note: Intel NICs do not support the minTxRate parameter. For more information, see BZ#1772847.
- 11: Optional: An IEEE 802.1p priority level for the VF. The default value is 0.
- 12: Optional: The trust mode of the VF. The allowed values are the strings "on" and "off". Important: You must enclose the value that you specify in quotes, or the SR-IOV Network Operator rejects the object.
- 13: Optional: The capabilities to configure for this additional network. You can specify '{ "ips": true }' to enable IP address support or '{ "mac": true }' to enable MAC address support.
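The following Python sketch encodes several of the field rules above as pre-flight checks on a parsed spec dictionary. The validate_sriov_network_spec helper is hypothetical and does not reproduce the SR-IOV Network Operator's own validation, which remains authoritative. The 0 to 7 range for vlanQoS is an assumption based on IEEE 802.1p priorities being 3-bit values.

```python
from typing import Any, Dict, List


def _is_int(value: Any) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool)


def validate_sriov_network_spec(spec: Dict[str, Any]) -> List[str]:
    """Check a subset of the SriovNetwork field rules; returns error messages."""
    errors = []
    vlan = spec.get("vlan", 0)
    if not _is_int(vlan) or not 0 <= vlan <= 4095:
        errors.append("vlan must be an integer from 0 to 4095")
    for field in ("spoofChk", "trust"):
        # An unquoted on/off becomes a boolean in YAML 1.1 parsers, which is rejected here.
        if field in spec and spec[field] not in ("on", "off"):
            errors.append(f'{field} must be the string "on" or "off"')
    if "linkState" in spec and spec["linkState"] not in ("enable", "disable", "auto"):
        errors.append("linkState must be enable, disable, or auto")
    max_rate, min_rate = spec.get("maxTxRate"), spec.get("minTxRate")
    if max_rate is not None and min_rate is not None and min_rate > max_rate:
        errors.append("minTxRate must be less than or equal to maxTxRate")
    qos = spec.get("vlanQoS", 0)
    if not _is_int(qos) or not 0 <= qos <= 7:
        # Assumption: IEEE 802.1p priorities are 3-bit values (0-7).
        errors.append("vlanQoS must be an integer from 0 to 7")
    return errors
```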

18.3.1.1. Creating a configuration for assignment of dual-stack IP addresses dynamically

You can configure dual-stack IP address assignment by using the ipRanges parameter for:

- IPv4 addresses
- IPv6 addresses
- Multiple IP address assignment

Procedure

1. Set type to whereabouts.
2. Use ipRanges to allocate IP addresses as shown in the following example:

apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  additionalNetworks:
  - name: whereabouts-shim
    namespace: default
    type: Raw
    rawCNIConfig: |-
      {
        "name": "whereabouts-dual-stack",
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": {
          "type": "whereabouts",
          "ipRanges": [
            {"range": "192.168.10.0/24"},
            {"range": "2001:db8::/64"}
          ]
        }
      }

3. Attach the network to a pod. For more information, see "Adding a pod to an additional network".
4. Verify that all IP addresses are assigned by running the following command:

$ oc exec -it mypod -- ip a
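Because rawCNIConfig is a JSON string embedded in YAML, a small syntax slip such as an unterminated string breaks the network definition. The following Python sketch generates the JSON with json.dumps instead of writing it by hand. It then checks that the configured ranges cover both IPv4 and IPv6. The helper names are hypothetical.

```python
import ipaddress
import json
from typing import List, Set


def whereabouts_dual_stack_config(name: str, ranges: List[str]) -> str:
    """Return a rawCNIConfig JSON string like the whereabouts-dual-stack example."""
    config = {
        "name": name,
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": {
            "type": "whereabouts",
            "ipRanges": [{"range": r} for r in ranges],
        },
    }
    # json.dumps always emits balanced quotes and braces.
    return json.dumps(config, indent=2)


def address_families(raw_config: str) -> Set[int]:
    """Return the IP versions (4 and/or 6) covered by the ipRanges entries."""
    ipam = json.loads(raw_config)["ipam"]
    return {ipaddress.ip_network(entry["range"]).version for entry in ipam["ipRanges"]}


raw = whereabouts_dual_stack_config("whereabouts-dual-stack", ["192.168.10.0/24", "2001:db8::/64"])
print(address_families(raw))  # {4, 6}
```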

18.3.1.2. Configuration of IP address assignment for a network attachment

For additional networks, IP addresses can be assigned using an IP Address Management (IPAM) CNI plugin, which supports various assignment methods, including Dynamic Host Configuration Protocol (DHCP) and static assignment.

The DHCP IPAM CNI plugin, which is responsible for the dynamic assignment of IP addresses, operates with two distinct components:

- CNI Plugin: Responsible for integrating with the Kubernetes networking stack to request and release IP addresses.

- DHCP IPAM CNI Daemon: A listener for DHCP events that coordinates with existing DHCP servers in the environment to handle IP address assignment requests. This daemon is not a DHCP server itself.

For networks requiring type: dhcp in their IPAM configuration, ensure the following:

- A DHCP server is available and running in the environment. The DHCP server is external to the cluster and is expected to be part of the customer’s existing network infrastructure.

- The DHCP server is appropriately configured to serve IP addresses to the nodes.

In cases where a DHCP server is unavailable in the environment, it is recommended to use the Whereabouts IPAM CNI plugin instead. The Whereabouts CNI provides similar IP address management capabilities without the need for an external DHCP server.

Use the Whereabouts CNI plugin when there is no external DHCP server or where static IP address management is preferred. The Whereabouts plugin includes a reconciler daemon to manage stale IP address allocations.

A DHCP lease must be periodically renewed throughout the container’s lifetime, so a separate daemon, the DHCP IPAM CNI Daemon, is required. To deploy the DHCP IPAM CNI daemon, modify the Cluster Network Operator (CNO) configuration to trigger the deployment of this daemon as part of the additional network setup.

18.3.1.2.1. Static IP address assignment configuration

The following table describes the configuration for static IP address assignment:

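| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value static is required. |
| addresses | array | An array of objects specifying IP addresses to assign to the virtual interface. Both IPv4 and IPv6 IP addresses are supported. |
| routes | array | An array of objects specifying routes to configure inside the pod. |
| dns | object | Optional: An object specifying the DNS configuration. |

The addresses array requires objects with the following fields:

| Field | Type | Description |
|---|---|---|
| address | string | An IP address and network prefix that you specify. For example, if you specify 10.10.21.10/24, then the additional network is assigned an IP address of 10.10.21.10 and the netmask is 255.255.255.0. |
| gateway | string | The default gateway to route egress network traffic to. |

The routes array requires objects with the following fields:

| Field | Type | Description |
|---|---|---|
| dst | string | The IP address range in CIDR format, such as 192.168.17.0/24 or 0.0.0.0/0 for the default route. |
| gw | string | The gateway where network traffic is routed. |

The dns object supports the following fields:

| Field | Type | Description |
|---|---|---|
| nameservers | array | An array of one or more IP addresses to send DNS queries to. |
| domain | string | The default domain to append to a hostname. For example, if the domain is set to example.com, a DNS lookup query for example-host is rewritten as example-host.example.com. |
| search | array | An array of domain names to append to an unqualified hostname, such as example-host, during a DNS lookup query. |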

Static IP address assignment configuration example

{
  "ipam": {
    "type": "static",
    "addresses": [
      {
        "address": "192.168.1.7/24"
      }
    ]
  }
}
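The following Python example uses the standard ipaddress module to show how an address and network prefix map to an assigned IP address and netmask. The static_ipam helper is hypothetical. It builds an ipam block shaped like the static example and rejects a gateway outside the address's network. That gateway check belongs to this sketch only; it is not a documented requirement of the static plugin.

```python
import ipaddress
import json
from typing import Dict, List, Optional


def static_ipam(addresses: List[str], gateway: Optional[str] = None) -> Dict:
    """Build a static IPAM block after checking each address/prefix and the gateway."""
    entries = []
    for cidr in addresses:
        iface = ipaddress.ip_interface(cidr)  # raises ValueError for malformed input
        entry = {"address": str(iface)}
        if gateway is not None:
            # A gateway of the other IP version is never "in" the network.
            if ipaddress.ip_address(gateway) not in iface.network:
                raise ValueError(f"gateway {gateway} is not in {iface.network}")
            entry["gateway"] = gateway
        entries.append(entry)
    return {"ipam": {"type": "static", "addresses": entries}}


iface = ipaddress.ip_interface("192.168.1.7/24")
print(iface.ip, iface.netmask)  # 192.168.1.7 255.255.255.0
print(json.dumps(static_ipam(["192.168.1.7/24"], gateway="192.168.1.1")))
```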

18.3.1.2.2. Dynamic IP address (DHCP) assignment configuration

A pod obtains its original DHCP lease when it is created. The lease must be periodically renewed by the DHCP IPAM CNI daemon, which runs as a minimal deployment on the cluster.

For an Ethernet network attachment, the SR-IOV Network Operator does not create this DHCP daemon deployment; the Cluster Network Operator is responsible for creating it.

To trigger the deployment of the DHCP daemon, you must create a shim network attachment by editing the Cluster Network Operator configuration, as in the following example:

Example shim network attachment definition

apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  additionalNetworks:
  - name: dhcp-shim
    namespace: default
    type: Raw
    rawCNIConfig: |-
      {
        "name": "dhcp-shim",
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": {
          "type": "dhcp"
        }
      }
  # ...

The following table describes the configuration parameters for dynamic IP address assignment with DHCP.

| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value dhcp is required. |

The following JSON example describes the configuration for dynamic IP address assignment with DHCP.

Dynamic IP address (DHCP) assignment configuration example

{
  "ipam": {
    "type": "dhcp"
  }
}

18.3.1.2.3. Dynamic IP address assignment configuration with Whereabouts

The Whereabouts CNI plugin allows the dynamic assignment of an IP address to an additional network without the use of a DHCP server.

The Whereabouts CNI plugin also supports overlapping IP address ranges and configuring the same CIDR range multiple times within separate NetworkAttachmentDefinition custom resources (CRs). This provides greater flexibility and management capabilities in multi-tenant environments.

18.3.1.2.3.1. Dynamic IP address configuration objects

The following table describes the configuration objects for dynamic IP address assignment with Whereabouts:

| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value whereabouts is required. |
| range | string | An IP address and range in CIDR notation. IP addresses are assigned from within this range of addresses. |
| exclude | array | Optional: A list of zero or more IP addresses and ranges in CIDR notation. IP addresses within an excluded address range are not assigned. |
| network_name | string | Optional: Helps ensure that each group or domain of pods gets its own set of IP addresses, even if they share the same range of IP addresses. Setting this field is important for keeping networks separate and organized, notably in multi-tenant environments. |

18.3.1.2.3.2. Dynamic IP address assignment configuration that uses Whereabouts

The following example shows a dynamic address assignment configuration that uses Whereabouts:

Whereabouts dynamic IP address assignment

{
  "ipam": {
    "type": "whereabouts",
    "range": "192.0.2.192/27",
    "exclude": [
      "192.0.2.192/30",
      "192.0.2.196/32"
    ]
  }
}
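The following Python sketch shows which addresses remain available for the configuration above by subtracting the exclude ranges from range. It assumes that network and broadcast addresses are never assigned, following the behavior of hosts() in the ipaddress module. Whereabouts' exact allocation rules might differ. The function name is hypothetical.

```python
import ipaddress
from typing import List


def assignable_addresses(cidr: str, exclude: List[str]) -> List[str]:
    """List the host addresses in cidr that are outside every excluded range."""
    network = ipaddress.ip_network(cidr)
    excluded = [ipaddress.ip_network(block) for block in exclude]
    # Assumption: hosts() skips the network and broadcast addresses.
    return [
        str(host)
        for host in network.hosts()
        if not any(host in block for block in excluded)
    ]


pool = assignable_addresses("192.0.2.192/27", ["192.0.2.192/30", "192.0.2.196/32"])
print(len(pool), pool[0], pool[-1])  # 26 192.0.2.197 192.0.2.222
```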

18.3.1.2.3.3. Dynamic IP address assignment that uses Whereabouts with overlapping IP address ranges

The following example shows a dynamic IP address assignment that uses overlapping IP address ranges for multi-tenant networks.

NetworkAttachmentDefinition 1

{
  "ipam": {
    "type": "whereabouts",
    "range": "192.0.2.192/29",
    "network_name": "example_net_common"
  }
}

The network_name field is optional. If set, it must match the network_name of NetworkAttachmentDefinition 2.

NetworkAttachmentDefinition 2

{
  "ipam": {
    "type": "whereabouts",
    "range": "192.0.2.192/24",
    "network_name": "example_net_common"
  }
}

The network_name field is optional. If set, it must match the network_name of NetworkAttachmentDefinition 1.
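The following Python toy model illustrates the intent of network_name. Allocations are tracked per network_name, so attachments that share a name draw from one pool, while attachments with different names can reuse the same addresses. The ToyAllocator class is hypothetical and does not reproduce how Whereabouts stores or reconciles its pools.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, Set


class ToyAllocator:
    """Toy model of address pools keyed by network_name."""

    def __init__(self) -> None:
        # Addresses already handed out, tracked separately for each network_name.
        self._used: Dict[str, Set[str]] = defaultdict(set)

    def allocate(self, cidr: str, network_name: str = "") -> str:
        used = self._used[network_name]
        for host in ipaddress.ip_network(cidr, strict=False).hosts():
            if str(host) not in used:
                used.add(str(host))
                return str(host)
        raise RuntimeError(f"range {cidr} is exhausted for network_name={network_name!r}")


alloc = ToyAllocator()
a = alloc.allocate("192.0.2.192/29", "tenant_a")
b = alloc.allocate("192.0.2.192/29", "tenant_b")  # separate pool, so the same address is reused
c = alloc.allocate("192.0.2.192/29", "tenant_a")  # shared pool, so the next address is used
print(a, b, c)  # 192.0.2.193 192.0.2.193 192.0.2.194
```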

18.3.2. Configuring SR-IOV additional network

You can configure an additional network that uses SR-IOV hardware by creating an SriovNetwork object. When you create an SriovNetwork object, the SR-IOV Network Operator automatically creates a NetworkAttachmentDefinition object.

Do not modify or delete an SriovNetwork object if it is attached to any pods in a running state.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

1. Create a SriovNetwork object, and then save the YAML in the <name>.yaml file, where <name> is a name for this additional network. The object specification might resemble the following example:

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: attach1
  namespace: openshift-sriov-network-operator
spec:
  resourceName: net1
  networkNamespace: project2
  ipam: |-
    {
      "type": "host-local",
      "subnet": "10.56.217.0/24",
      "rangeStart": "10.56.217.171",
      "rangeEnd": "10.56.217.181",
      "gateway": "10.56.217.1"
    }

2. To create the object, enter the following command, where <name> specifies the name of the additional network:

$ oc create -f <name>.yaml

3. Optional: To confirm that the NetworkAttachmentDefinition object associated with the SriovNetwork object that you created in the previous step exists, enter the following command. Replace <namespace> with the networkNamespace value that you specified in the SriovNetwork object.

$ oc get net-attach-def -n <namespace>
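If you generate network definitions from scripts, you might assemble and check the object before running oc create. The following Python sketch builds an SriovNetwork like the example above. It verifies that the host-local rangeStart, rangeEnd, and gateway addresses fall inside subnet and that the range is ordered. The helper names are hypothetical.

```python
import ipaddress
import json
from typing import Any, Dict


def host_local_ipam(subnet: str, range_start: str, range_end: str, gateway: str) -> str:
    """Return a host-local IPAM JSON string after basic consistency checks."""
    net = ipaddress.ip_network(subnet)
    bounds = {
        "rangeStart": ipaddress.ip_address(range_start),
        "rangeEnd": ipaddress.ip_address(range_end),
        "gateway": ipaddress.ip_address(gateway),
    }
    for label, addr in bounds.items():
        if addr not in net:
            raise ValueError(f"{label} {addr} is outside {net}")
    if bounds["rangeStart"] > bounds["rangeEnd"]:
        raise ValueError("rangeStart must not be greater than rangeEnd")
    return json.dumps({"type": "host-local", "subnet": subnet, "rangeStart": range_start,
                       "rangeEnd": range_end, "gateway": gateway}, indent=2)


def sriov_network(name: str, resource_name: str, network_namespace: str, ipam: str) -> Dict[str, Any]:
    """Assemble an SriovNetwork manifest shaped like the example above."""
    return {
        "apiVersion": "sriovnetwork.openshift.io/v1",
        "kind": "SriovNetwork",
        "metadata": {"name": name, "namespace": "openshift-sriov-network-operator"},
        "spec": {"resourceName": resource_name, "networkNamespace": network_namespace, "ipam": ipam},
    }


manifest = sriov_network(
    "attach1", "net1", "project2",
    host_local_ipam("10.56.217.0/24", "10.56.217.171", "10.56.217.181", "10.56.217.1"),
)
# oc create -f also accepts JSON, so this output can be saved to a file and created directly.
print(json.dumps(manifest, indent=2))
```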

18.3.3. Assigning an SR-IOV network to a VRF

As a cluster administrator, you can assign an SR-IOV network interface to your VRF domain by using the CNI VRF plugin.

To do this, add the VRF configuration to the optional metaPlugins parameter of the SriovNetwork resource.

Applications that use VRFs must bind to a specific device. A common approach is to set the SO_BINDTODEVICE option on a socket. SO_BINDTODEVICE binds the socket to the device that is specified by the passed interface name, for example, eth1. To use SO_BINDTODEVICE, the application must have the CAP_NET_RAW capability.

Using a VRF through the ip vrf exec command is not supported in OpenShift Container Platform pods. To use VRF, bind applications directly to the VRF interface.
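The following Python sketch shows an application binding a UDP socket to a device with SO_BINDTODEVICE. It assumes a Linux host. The function names are hypothetical, and the device name that you pass, such as a VRF device or an interface such as eth1, depends on your configuration.

```python
import socket

IFNAMSIZ = 16  # Linux interface-name buffer size, including the trailing NUL byte


def ifname_option(ifname: str) -> bytes:
    """Encode an interface name as the option value for SO_BINDTODEVICE."""
    raw = ifname.encode("ascii")
    if not raw or len(raw) >= IFNAMSIZ:
        raise ValueError(f"invalid interface name: {ifname!r}")
    return raw + b"\0"


def udp_socket_bound_to(ifname: str) -> socket.socket:
    """Create a UDP socket whose traffic is restricted to ifname (Linux only).

    Requires the CAP_NET_RAW capability, as described above.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # socket.SO_BINDTODEVICE is only defined on platforms that support it.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BINDTODEVICE, ifname_option(ifname))
    except Exception:
        sock.close()
        raise
    return sock
```

For example, udp_socket_bound_to("example-vrf-name") would restrict the socket to the VRF device created by the example configuration in the next section, if the process has the required capability.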

18.3.3.1. Creating an additional SR-IOV network attachment with the CNI VRF plugin

The SR-IOV Network Operator manages additional network definitions. When you specify an additional SR-IOV network to create, the SR-IOV Network Operator creates the NetworkAttachmentDefinition custom resource (CR) automatically.

Do not edit NetworkAttachmentDefinition custom resources that the SR-IOV Network Operator manages. Doing so might disrupt network traffic on your additional network.

To create an additional SR-IOV network attachment with the CNI VRF plugin, perform the following procedure.

Prerequisites

- Install the OpenShift Container Platform CLI (oc).

- Log in to the OpenShift Container Platform cluster as a user with cluster-admin privileges.

Procedure

1. Create the SriovNetwork custom resource (CR) for the additional SR-IOV network attachment and insert the metaPlugins configuration, as in the following example CR. Save the YAML as the file sriov-network-attachment.yaml.

Example SriovNetwork custom resource (CR)

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: example-network
  namespace: additional-sriov-network-1
spec:
  ipam: |
    {
      "type": "host-local",
      "subnet": "10.56.217.0/24",
      "rangeStart": "10.56.217.171",
      "rangeEnd": "10.56.217.181",
      "routes": [{
        "dst": "0.0.0.0/0"
      }],
      "gateway": "10.56.217.1"
    }
  vlan: 0
  resourceName: intelnics
  metaPlugins: |
    {
      "type": "vrf",
      "vrfname": "example-vrf-name"
    }

In metaPlugins, type must be set to vrf, and vrfname specifies the name of the VRF to which the interface is assigned.

2. Create the SriovNetwork resource:

$ oc create -f sriov-network-attachment.yaml

Verifying that the NetworkAttachmentDefinition CR is successfully created

Confirm that the SR-IOV Network Operator created the NetworkAttachmentDefinition CR by running the following command, replacing <namespace> with the namespace that you specified when configuring the network attachment, for example, additional-sriov-network-1:

$ oc get network-attachment-definitions -n <namespace>

Example output

NAME                         AGE
additional-sriov-network-1   14m

Note: There might be a delay before the SR-IOV Network Operator creates the CR.

Verifying that the additional SR-IOV network attachment is successful

To verify that the VRF CNI is correctly configured and that the additional SR-IOV network attachment is attached, do the following:

- Create an SR-IOV network that uses the VRF CNI.

- Assign the network to a pod.

Verify that the pod network attachment is connected to the SR-IOV additional network. Open a remote shell in the pod and run the following command:

$ ip vrf show

Example output

Name              Table
-----------------------
red                 10

Confirm that the VRF interface is the master of the secondary interface by running the following command:

$ ip link

Example output

...
5: net1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master red state UP mode
...
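To check the master relationship in scripts, the following Python sketch extracts interface-to-master pairs from ip link output. The regular expression assumes the single-line format shown in the example output above. The function name is hypothetical.

```python
import re
from typing import Dict

# Matches lines such as "5: net1: <...> mtu 1500 qdisc noqueue master red state UP".
MASTER_RE = re.compile(r"^\d+:\s+(?P<ifname>[^:@\s]+)(?:@\S+)?:.*?\bmaster\s+(?P<master>\S+)")


def interface_masters(ip_link_output: str) -> Dict[str, str]:
    """Map each enslaved interface in `ip link` output to its master device."""
    result = {}
    for line in ip_link_output.splitlines():
        match = MASTER_RE.search(line.strip())
        if match:
            result[match.group("ifname")] = match.group("master")
    return result


sample = "5: net1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master red state UP mode DEFAULT"
print(interface_masters(sample))  # {'net1': 'red'}
```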

18.3.4. Runtime configuration for an Ethernet-based SR-IOV attachment

When attaching a pod to an additional network, you can specify a runtime configuration to make specific customizations for the pod. For example, you can request a specific MAC hardware address.

You specify the runtime configuration by setting an annotation in the pod specification. The annotation key is k8s.v1.cni.cncf.io/networks, and it accepts a JSON array of objects that describe the runtime configuration.

The following JSON describes the runtime configuration options for an Ethernet-based SR-IOV network attachment.

[
  {
    "name": "<name>",
    "mac": "<mac_address>",
    "ips": ["<cidr_range>"]
  }
]

- name: The name of the SR-IOV network attachment definition CR.
- mac: Optional: The MAC address for the SR-IOV device that is allocated from the resource type defined in the SR-IOV network attachment definition CR. To use this feature, you must also specify { "mac": true } in the SriovNetwork object.
- ips: Optional: IP addresses for the SR-IOV device that is allocated from the resource type defined in the SR-IOV network attachment definition CR. Both IPv4 and IPv6 addresses are supported. To use this feature, you must also specify { "ips": true } in the SriovNetwork object.

Example runtime configuration

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod
  annotations:
    k8s.v1.cni.cncf.io/networks: |-
      [
        {
          "name": "net1",
          "mac": "20:04:0f:f1:88:01",
          "ips": ["192.168.10.1/24", "2001::1/64"]
        }
      ]
spec:
  containers:
  - name: sample-container
    image: <image>
    imagePullPolicy: IfNotPresent
    command: ["sleep", "infinity"]
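The following Python sketch builds the annotation value from the options above. It checks the MAC address format and the CIDR notation before you place the value in a pod specification. The runtime_config helper is hypothetical.

```python
import ipaddress
import json
import re
from typing import List, Optional

MAC_RE = re.compile(r"(?:[0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}")


def runtime_config(name: str, mac: Optional[str] = None, ips: Optional[List[str]] = None) -> str:
    """Build a k8s.v1.cni.cncf.io/networks annotation value for one SR-IOV attachment."""
    element: dict = {"name": name}
    if mac is not None:
        if not MAC_RE.fullmatch(mac):
            raise ValueError(f"invalid MAC address: {mac}")
        element["mac"] = mac  # requires { "mac": true } in the SriovNetwork object
    if ips:
        # ip_interface accepts IPv4 and IPv6 addresses with a prefix length.
        element["ips"] = [str(ipaddress.ip_interface(ip)) for ip in ips]  # requires { "ips": true }
    return json.dumps([element], indent=2)


print(runtime_config("net1", "20:04:0f:f1:88:01", ["192.168.10.1/24", "2001::1/64"]))
```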

18.3.5. Adding a pod to an additional network

You can add a pod to an additional network. The pod continues to send normal cluster-related network traffic over the default network.

Additional networks are attached to a pod when the pod is created. However, if a pod already exists, you cannot attach additional networks to it.

The pod must be in the same namespace as the additional network.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in to the cluster.

Procedure

1. Add an annotation to the Pod object. You can use only one of the following annotation formats:

a. To attach an additional network without any customization, add an annotation with the following format. Replace <network> with the name of the additional network to associate with the pod:

metadata:
  annotations:
    k8s.v1.cni.cncf.io/networks: <network>[,<network>,...]

To specify more than one additional network, separate the networks with commas. Do not include whitespace around the commas. If you specify the same additional network multiple times, the pod has multiple network interfaces attached to that network.

b. To attach an additional network with customizations, add an annotation with the following format:

metadata:
  annotations:
    k8s.v1.cni.cncf.io/networks: |-
      [
        {
          "name": "<network>",
          "namespace": "<namespace>",
          "default-route": ["<default-route>"]
        }
      ]

In this format, name is the name of the additional network, namespace is the namespace in which the network attachment definition is defined, and the optional default-route value is a gateway IP address that overrides the pod's default route.

2. To create the pod, enter the following command. Replace <name> with the name of the pod.

$ oc create -f <name>.yaml

3. Optional: To confirm that the annotation exists in the Pod CR, enter the following command, replacing <name> with the name of the pod.

$ oc get pod <name> -o yaml

In the following example, the example-pod pod is attached to the macvlan-bridge additional network, which appears in the pod as the net1 interface:

$ oc get pod example-pod -o yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    k8s.v1.cni.cncf.io/networks: macvlan-bridge
    k8s.v1.cni.cncf.io/network-status: |-
      [{
        "name": "ovn-kubernetes",
        "interface": "eth0",
        "ips": [
          "10.128.2.14"
        ],
        "default": true,
        "dns": {}
      },{
        "name": "macvlan-bridge",
        "interface": "net1",
        "ips": [
          "20.2.2.100"
        ],
        "mac": "22:2f:60:a5:f8:00",
        "dns": {}
      }]
  name: example-pod
  namespace: default
spec:
  ...
status:
  ...

The k8s.v1.cni.cncf.io/network-status parameter is a JSON array of objects. Each object describes the status of a network attached to the pod, including the default cluster network. The annotation value is stored as a plain text value.
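To inspect the network-status annotation in scripts, the following Python sketch indexes its JSON array by network name. It assumes that you have already extracted the annotation value, for example from the pod YAML in the preceding example. The function name is hypothetical.

```python
import json
from typing import Any, Dict


def attachments_by_network(network_status: str) -> Dict[str, Dict[str, Any]]:
    """Index a k8s.v1.cni.cncf.io/network-status annotation value by network name."""
    return {
        entry["name"]: {
            "interface": entry.get("interface"),
            "ips": entry.get("ips", []),
            "default": entry.get("default", False),
        }
        for entry in json.loads(network_status)
    }


example_status = (
    '[{"name": "ovn-kubernetes", "interface": "eth0", "ips": ["10.128.2.14"], "default": true, "dns": {}},'
    ' {"name": "macvlan-bridge", "interface": "net1", "ips": ["20.2.2.100"], "mac": "22:2f:60:a5:f8:00", "dns": {}}]'
)
print(attachments_by_network(example_status)["macvlan-bridge"])
```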

18.3.5.1. Exposing MTU for vfio-pci SR-IOV devices to pod

After adding a pod to an additional network, you can check that the MTU is available for the SR-IOV network.

Procedure

1. Check that the pod annotation includes the MTU by running the following command:

$ oc describe pod example-pod

Example output

"mac": "20:04:0f:f1:88:01",
"mtu": 1500,
"dns": {},
"device-info": {
  "type": "pci",
  "version": "1.1.0",
  "pci": {
    "pci-address": "0000:86:01.3"
  }
}

2. Verify that the MTU is available in /etc/podnetinfo/ inside the pod by running the following command:

$ oc exec example-pod -n sriov-tests -- cat /etc/podnetinfo/annotations | grep mtu

Example output

k8s.v1.cni.cncf.io/network-status="[{ \"name\": \"ovn-kubernetes\", \"interface\": \"eth0\", \"ips\": [ \"10.131.0.67\" ], \"mac\": \"0a:58:0a:83:00:43\", \"default\": true, \"dns\": {} },{ \"name\": \"sriov-tests/sriov-nic-1\", \"interface\": \"net1\", \"ips\": [ \"192.168.10.1\" ], \"mac\": \"20:04:0f:f1:88:01\", \"mtu\": 1500, \"dns\": {}, \"device-info\": { \"type\": \"pci\", \"version\": \"1.1.0\", \"pci\": { \"pci-address\": \"0000:86:01.3\" } } }]"
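The annotation values in /etc/podnetinfo/annotations are quoted and escaped. The following Python sketch decodes one annotation and reads the MTU values from it. It assumes one key="value" entry per line and escapes that JSON can decode, as in the output above. The function names are hypothetical.

```python
import json
from typing import Dict


def read_annotation(annotations_file_text: str, key: str) -> str:
    """Return the decoded value of one key from /etc/podnetinfo/annotations text."""
    for line in annotations_file_text.splitlines():
        name, sep, quoted = line.partition("=")
        if sep and name == key:
            # The quoted value is a valid JSON string literal, so json.loads unescapes it.
            return json.loads(quoted)
    raise KeyError(key)


def mtu_by_interface(annotations_file_text: str) -> Dict[str, int]:
    """Map interface names to MTU values reported in the network-status annotation."""
    status = json.loads(read_annotation(annotations_file_text, "k8s.v1.cni.cncf.io/network-status"))
    return {entry["interface"]: entry["mtu"] for entry in status if "mtu" in entry}
```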

18.3.6. Configuring parallel node draining during SR-IOV network policy updates

By default, the SR-IOV Network Operator drains workloads from a node before every policy change. The Operator drains one node at a time to ensure that no workloads are affected by the reconfiguration.

In large clusters, draining nodes sequentially can be time-consuming, taking hours or even days. In time-sensitive environments, you can enable parallel node draining in an SriovNetworkPoolConfig custom resource (CR) for faster rollouts of SR-IOV network configurations.

To configure parallel draining, use the SriovNetworkPoolConfig CR to create a node pool. You can then add nodes to the pool and define the maximum number of nodes in the pool that the Operator can drain in parallel. With this approach, you can enable parallel draining for faster reconfiguration while ensuring you still have enough nodes remaining in the pool to handle any running workloads.

A node can belong to only one SR-IOV network pool configuration. If a node is not part of a pool, it is added to a virtual default pool that is configured to drain only one node at a time.
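The number of nodes that a pool can drain in parallel follows from its maxUnavailable setting, which accepts an integer or a percentage. The following Python sketch shows that arithmetic. The function name is hypothetical. Rounding percentages down is an assumption borrowed from the Kubernetes Deployment maxUnavailable behavior, so confirm how the SR-IOV Network Operator rounds before you rely on edge cases.

```python
from typing import Union


def parallel_drain_limit(max_unavailable: Union[int, str], pool_size: int) -> int:
    """Return how many pool nodes may drain at once for a maxUnavailable value."""
    if isinstance(max_unavailable, str) and max_unavailable.endswith("%"):
        # Assumption: percentages round down.
        limit = pool_size * int(max_unavailable[:-1]) // 100
    else:
        limit = int(max_unavailable)
    # The limit can never exceed the number of nodes in the pool.
    return max(0, min(limit, pool_size))


nodes = 10
limit = parallel_drain_limit(2, nodes)
print(limit, nodes - limit)  # 2 8
```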

The node might restart during the draining process.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.
- Install the SR-IOV Network Operator.
- Nodes have hardware that supports SR-IOV.

Procedure

1. Create a SriovNetworkPoolConfig resource:

a. Create a YAML file that defines the SriovNetworkPoolConfig resource:

Example sriov-nw-pool.yaml file

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkPoolConfig
metadata:
  name: pool-1 # 1
  namespace: openshift-sriov-network-operator # 2
spec:
  maxUnavailable: 2 # 3
  nodeSelector: # 4
    matchLabels:
      node-role.kubernetes.io/worker: ""

- 1: Specify the name of the SriovNetworkPoolConfig object.
- 2: Specify the namespace where the SR-IOV Network Operator is installed.
- 3: Specify an integer number, or percentage value, for nodes that can be unavailable in the pool during an update. For example, if you have 10 nodes and you set the maximum unavailable to 2, then only 2 nodes can be drained in parallel at any time, leaving 8 nodes for handling workloads.
- 4: Specify the nodes to add to the pool by using the node selector. This example adds all nodes with the worker role to the pool.

b. Create the SriovNetworkPoolConfig resource by running the following command:

$ oc create -f sriov-nw-pool.yaml

2. Create the sriov-test namespace by running the following command:

$ oc create namespace sriov-test

3. Create a SriovNetworkNodePolicy resource:

a. Create a YAML file that defines the SriovNetworkNodePolicy resource:

Example sriov-node-policy.yaml file

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: sriov-nic-1
  namespace: openshift-sriov-network-operator
spec:
  deviceType: netdevice
  nicSelector:
    pfNames: ["ens1"]
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  numVfs: 5
  priority: 99
  resourceName: sriov_nic_1

b. Create the SriovNetworkNodePolicy resource by running the following command:

$ oc create -f sriov-node-policy.yaml

4. Create a SriovNetwork resource:

a. Create a YAML file that defines the SriovNetwork resource:

Example sriov-network.yaml file

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: sriov-nic-1
  namespace: openshift-sriov-network-operator
spec:
  linkState: auto
  networkNamespace: sriov-test
  resourceName: sriov_nic_1
  capabilities: '{ "mac": true, "ips": true }'
  ipam: '{ "type": "static" }'

b. Create the SriovNetwork resource by running the following command:

$ oc create -f sriov-network.yaml

Verification

View the node pool you created by running the following command:

$ oc get sriovNetworkpoolConfig -n openshift-sriov-network-operator

Example output

NAME     AGE
pool-1   67s

In this example, pool-1 contains all the nodes with the worker role.

To demonstrate the node draining process using the example scenario from the above procedure, complete the following steps:

1. Update the number of virtual functions in the SriovNetworkNodePolicy resource to trigger workload draining in the cluster:

$ oc patch SriovNetworkNodePolicy sriov-nic-1 -n openshift-sriov-network-operator --type merge -p '{"spec": {"numVfs": 4}}'

2. Monitor the draining status on the target cluster by running the following command:

$ oc get sriovNetworkNodeState -n openshift-sriov-network-operator

Example output

NAMESPACE                          NAME       SYNC STATUS   DESIRED SYNC STATE   CURRENT SYNC STATE   AGE
openshift-sriov-network-operator   worker-0   InProgress    Drain_Required       DrainComplete        3d10h
openshift-sriov-network-operator   worker-1   InProgress    Drain_Required       DrainComplete        3d10h

When the draining process is complete, the SYNC STATUS changes to Succeeded, and the DESIRED SYNC STATE and CURRENT SYNC STATE values return to Idle.

Example output

NAMESPACE                          NAME       SYNC STATUS   DESIRED SYNC STATE   CURRENT SYNC STATE   AGE
openshift-sriov-network-operator   worker-0   Succeeded     Idle                 Idle                 3d10h
openshift-sriov-network-operator   worker-1   Succeeded     Idle                 Idle                 3d10h
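To wait for the drain in a script, you might parse the table output shown above. The following Python sketch assumes that column layout and detects an optional leading NAMESPACE column. The function names are hypothetical. Structured output is more robust if your tooling supports it.

```python
from typing import Dict, Tuple

SyncState = Tuple[str, str, str]  # (SYNC STATUS, DESIRED SYNC STATE, CURRENT SYNC STATE)


def drain_states(table: str) -> Dict[str, SyncState]:
    """Parse `oc get sriovNetworkNodeState` table output into per-node sync states."""
    lines = [line for line in table.strip().splitlines() if line.strip()]
    # Skip the NAMESPACE column when the header includes it.
    offset = 1 if lines[0].split()[0] == "NAMESPACE" else 0
    states = {}
    for row in lines[1:]:
        fields = row.split()
        sync_status, desired, current = fields[offset + 1:offset + 4]
        states[fields[offset]] = (sync_status, desired, current)
    return states


def drain_finished(states: Dict[str, SyncState]) -> bool:
    """True when every node reports Succeeded with Idle desired and current states."""
    return all(state == ("Succeeded", "Idle", "Idle") for state in states.values())
```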

18.3.7. Excluding the SR-IOV network topology for NUMA-aware scheduling

To exclude advertising the SR-IOV network resource’s Non-Uniform Memory Access (NUMA) node to the Topology Manager, you can configure the excludeTopology specification in the SriovNetworkNodePolicy custom resource. Use this configuration for more flexible SR-IOV network deployments during NUMA-aware pod scheduling.

Prerequisites

- You have installed the OpenShift CLI (oc).
- You have configured the CPU Manager policy to static. For more information about CPU Manager, see the Additional resources section.
- You have configured the Topology Manager policy to single-numa-node.
- You have installed the SR-IOV Network Operator.

Procedure

1. Create the SriovNetworkNodePolicy CR:

a. Save the following YAML in the sriov-network-node-policy.yaml file, replacing values in the YAML to match your environment:

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: <policy_name>
  namespace: openshift-sriov-network-operator
spec:
  resourceName: sriovnuma0 # 1
  nodeSelector:
    kubernetes.io/hostname: <node_name>
  numVfs: <number_of_Vfs>
  nicSelector: # 2
    vendor: "<vendor_ID>"
    deviceID: "<device_ID>"
  deviceType: netdevice
  excludeTopology: true # 3

- 1: The resource name of the SR-IOV network device plugin. This YAML uses a sample resourceName value.
- 2: Identify the device for the Operator to configure by using the NIC selector.
- 3: To exclude advertising the NUMA node for the SR-IOV network resource to the Topology Manager, set the value to true. The default value is false.

Note: If multiple SriovNetworkNodePolicy resources target the same SR-IOV network resource, the SriovNetworkNodePolicy resources must have the same value for the excludeTopology specification. Otherwise, the conflicting policy is rejected.

b. Create the SriovNetworkNodePolicy resource by running the following command:

$ oc create -f sriov-network-node-policy.yaml

Example output

sriovnetworknodepolicy.sriovnetwork.openshift.io/policy-for-numa-0 created

2. Create the SriovNetwork CR:

a. Save the following YAML in the sriov-network.yaml file, replacing values in the YAML to match your environment:

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: sriov-numa-0-network # 1
  namespace: openshift-sriov-network-operator
spec:
  resourceName: sriovnuma0 # 2
  networkNamespace: <namespace> # 3
  ipam: |- # 4
    {
      "type": "<ipam_type>"
    }

- 1: Replace sriov-numa-0-network with the name for the SR-IOV network resource.
- 2: Specify the resource name for the SriovNetworkNodePolicy CR from the previous step. This YAML uses a sample resourceName value.
- 3: Enter the namespace for your SR-IOV network resource.
- 4: Enter the IP address management configuration for the SR-IOV network.

b. Create the SriovNetwork resource by running the following command:

$ oc create -f sriov-network.yaml

Example output

sriovnetwork.sriovnetwork.openshift.io/sriov-numa-0-network created

3. Create a pod and assign the SR-IOV network resource from the previous step:

a. Save the following YAML in the sriov-network-pod.yaml file, replacing values in the YAML to match your environment:

apiVersion: v1
kind: Pod
metadata:
  name: <pod_name>
  annotations:
    k8s.v1.cni.cncf.io/networks: |-
      [
        {
          "name": "sriov-numa-0-network"
        }
      ]
spec:
  containers:
  - name: <container_name>
    image: <image>
    imagePullPolicy: IfNotPresent
    command: ["sleep", "infinity"]

The name value is the name of the SriovNetwork resource that uses the SriovNetworkNodePolicy resource.

b. Create the Pod resource by running the following command:

$ oc create -f sriov-network-pod.yaml

Example output

pod/example-pod created
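SriovNetworkNodePolicy resources that target the same SR-IOV network resource must agree on excludeTopology, as noted in the procedure. You might therefore check manifests before you apply them. The following Python sketch groups parsed policies by spec.resourceName and treats a missing excludeTopology as false, the documented default. The function name is hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List, Set


def conflicting_resources(policies: List[Dict[str, Any]]) -> Set[str]:
    """Return resourceName values whose policies disagree on excludeTopology."""
    seen: Dict[str, Set[bool]] = defaultdict(set)
    for policy in policies:
        spec = policy["spec"]
        # excludeTopology defaults to false when it is omitted.
        seen[spec["resourceName"]].add(bool(spec.get("excludeTopology", False)))
    return {name for name, values in seen.items() if len(values) > 1}
```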

Verification

1. Verify the status of the pod by running the following command, replacing <pod_name> with the name of the pod:

$ oc get pod <pod_name>

Example output

NAME                                     READY   STATUS    RESTARTS   AGE
test-deployment-sriov-76cbbf4756-k9v72   1/1     Running   0          45h

2. Open a debug session with the target pod to verify that the SR-IOV network resources are deployed to a different NUMA node than the memory and CPU resources.

a. Open a debug session with the pod by running the following command, replacing <pod_name> with the target pod name:

$ oc debug pod/<pod_name>

b. Set /host as the root directory within the debug shell. The debug pod mounts the root file system from the host in /host within the pod. By changing the root directory to /host, you can run binaries from the host file system:

$ chroot /host

c. View information about the CPU allocation by running the following commands:

$ lscpu | grep NUMA

Example output

NUMA node(s):        2
NUMA node0 CPU(s):   0,2,4,6,8,10,12,14,16,18,...
NUMA node1 CPU(s):   1,3,5,7,9,11,13,15,17,19,...

$ cat /proc/self/status | grep Cpus

Example output

Cpus_allowed:   aa
Cpus_allowed_list:      1,3,5,7

$ cat /sys/class/net/net1/device/numa_node

Example output

0

In this example, CPUs 1, 3, 5, and 7 are allocated to NUMA node1, but the SR-IOV network resource can use the NIC in NUMA node0.

Even when the excludeTopology specification is set to true, the required resources might still be placed on the same NUMA node.
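The Cpus_allowed value is a hexadecimal bitmask in which bit n represents CPU n, so aa (binary 10101010) corresponds to CPUs 1, 3, 5, and 7. The following Python sketch decodes the mask and expands a CPU list so that you can compare the allocated CPUs with the NUMA node lists from lscpu. The function names are hypothetical.

```python
from typing import List, Set


def cpus_from_mask(mask: str) -> List[int]:
    """Decode a Cpus_allowed hex mask into CPU numbers.

    Large systems print the mask as comma-separated 32-bit groups,
    so commas are removed before conversion.
    """
    value = int(mask.replace(",", ""), 16)
    return [cpu for cpu in range(value.bit_length()) if value >> cpu & 1]


def parse_cpu_list(text: str) -> Set[int]:
    """Expand a CPU list such as "1,3,5,7" or "0-3,8" into a set of CPU numbers."""
    cpus: Set[int] = set()
    for part in filter(None, (p.strip() for p in text.split(","))):
        start, _, end = part.partition("-")
        cpus.update(range(int(start), int(end or start) + 1))
    return cpus


print(cpus_from_mask("aa"))  # [1, 3, 5, 7]
```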

18.3.8. Additional resources

18.4. Configuring an SR-IOV InfiniBand network attachment

You can configure an InfiniBand (IB) network attachment for a Single Root I/O Virtualization (SR-IOV) device in the cluster.

Before you perform any tasks in the following documentation, ensure that you installed the SR-IOV Network Operator.

18.4.1. InfiniBand device configuration object

You can configure an InfiniBand (IB) network device by defining an SriovIBNetwork object.

The following YAML describes an SriovIBNetwork object:

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovIBNetwork
metadata:
  name: <name> # 1
  namespace: openshift-sriov-network-operator # 2
spec:
  resourceName: <sriov_resource_name> # 3
  networkNamespace: <target_namespace> # 4
  ipam: |- # 5
    {}
  linkState: <link_state> # 6
  capabilities: <capabilities> # 7

1. A name for the object. The SR-IOV Network Operator creates a NetworkAttachmentDefinition object with the same name.
2. The namespace where the SR-IOV Network Operator is installed.
3. The value for the spec.resourceName parameter from the SriovNetworkNodePolicy object that defines the SR-IOV hardware for this additional network.
4. The target namespace for the SriovIBNetwork object. Only pods in the target namespace can attach to the network device.
5. Optional: A configuration object for the IPAM CNI plugin as a YAML block scalar. The plugin manages IP address assignment for the attachment definition.
6. Optional: The link state of the virtual function (VF). Allowed values are enable, disable, and auto.
7. Optional: The capabilities to configure for this network. You can specify '{ "ips": true }' to enable IP address support or '{ "infinibandGUID": true }' to enable IB Global Unique Identifier (GUID) support.
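The fields above can also be assembled programmatically, which makes it harder to mistype a value. The following Python sketch is an illustration only: make_sriov_ib_network and ALLOWED_LINK_STATES are hypothetical names, and the sketch assumes that ipam and capabilities are stored as JSON strings, as the block scalar and the quoted '{ "ips": true }' values suggest. Because oc create -f accepts JSON manifests as well as YAML, the printed output could be saved to a file and created in the same way.

```python
import json

# Allowed values for spec.linkState, as listed for callout 6.
ALLOWED_LINK_STATES = {"enable", "disable", "auto"}


def make_sriov_ib_network(name, resource_name, target_namespace,
                          ipam=None, link_state=None, capabilities=None):
    """Return an SriovIBNetwork manifest as a dict (hypothetical helper)."""
    spec = {
        "resourceName": resource_name,
        "networkNamespace": target_namespace,
        # ipam holds a JSON document as a string; {} when no IPAM settings are given.
        "ipam": json.dumps(ipam if ipam is not None else {}),
    }
    if link_state is not None:
        if link_state not in ALLOWED_LINK_STATES:
            raise ValueError(f"linkState must be one of {sorted(ALLOWED_LINK_STATES)}")
        spec["linkState"] = link_state
    if capabilities is not None:
        # capabilities is also a JSON string, for example '{"ips": true}'.
        spec["capabilities"] = json.dumps(capabilities)
    return {
        "apiVersion": "sriovnetwork.openshift.io/v1",
        "kind": "SriovIBNetwork",
        "metadata": {
            "name": name,
            # The namespace where the SR-IOV Network Operator is installed.
            "namespace": "openshift-sriov-network-operator",
        },
        "spec": spec,
    }


manifest = make_sriov_ib_network("attach1", "net1", "project2",
                                 link_state="auto", capabilities={"ips": True})
print(json.dumps(manifest, indent=2))
```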

18.4.1.1. Creating a configuration for assignment of dual-stack IP addresses dynamically

Dual-stack IP address assignment can be configured with the ipRanges parameter for:

- IPv4 addresses

- IPv6 addresses

- multiple IP address assignment

Procedure

1. Set the IPAM type to whereabouts and use ipRanges to allocate IP addresses, as shown in the following example:

apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  additionalNetworks:
  - name: whereabouts-shim
    namespace: default
    type: Raw
    rawCNIConfig: |-
      {
        "name": "whereabouts-dual-stack",
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": {
          "type": "whereabouts",
          "ipRanges": [
            {"range": "192.168.10.0/24"},
            {"range": "2001:db8::/64"}
          ]
        }
      }

2. Attach the network to a pod. For more information, see "Adding a pod to an additional network".

3. Verify that all IP addresses are assigned by running the following command, replacing mypod with the name of your pod:

$ oc exec -it mypod -- ip a
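Checking the ip a output by eye works for one pod. The following Python sketch automates the check, assuming that you have captured the command output as text. It extracts every inet and inet6 address and reports any ipRanges entry that has no matching address. The function names and the sample output are illustrative and do not come from a real cluster.

```python
import ipaddress
import re

# Matches the address on "inet" and "inet6" lines of `ip a` output.
ADDR_LINE = re.compile(r"^\s*inet6?\s+(\S+)", re.MULTILINE)


def assigned_addresses(ip_a_output):
    """Return every interface address listed in captured `ip a` output."""
    return [ipaddress.ip_interface(addr) for addr in ADDR_LINE.findall(ip_a_output)]


def missing_ranges(ip_a_output, ip_ranges):
    """Return the ipRanges entries that no assigned address falls into."""
    addrs = assigned_addresses(ip_a_output)
    # An IPv4 address is never "in" an IPv6 network, so mixed families are safe.
    return [r for r in ip_ranges
            if not any(a.ip in ipaddress.ip_network(r) for a in addrs)]


# Illustrative output for the net1 interface only.
sample = """3: net1@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 state UP
    link/ether 0a:58:c0:a8:0a:02 brd ff:ff:ff:ff:ff:ff
    inet 192.168.10.2/24 brd 192.168.10.255 scope global net1
       valid_lft forever preferred_lft forever
    inet6 2001:db8::2/64 scope global
       valid_lft forever preferred_lft forever"""

print(missing_ranges(sample, ["192.168.10.0/24", "2001:db8::/64"]))  # prints: []
```

An empty list means that each configured range contributed at least one address to the pod.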

18.4.1.2. Configuration of IP address assignment for a network attachment

For additional networks, IP addresses can be assigned using an IP Address Management (IPAM) CNI plugin, which supports various assignment methods, including Dynamic Host Configuration Protocol (DHCP) and static assignment.

The DHCP IPAM CNI plugin, which is responsible for the dynamic assignment of IP addresses, operates with two distinct components:

- CNI Plugin: Responsible for integrating with the Kubernetes networking stack to request and release IP addresses.

- DHCP IPAM CNI Daemon: A listener for DHCP events that coordinates with existing DHCP servers in the environment to handle IP address assignment requests. This daemon is not a DHCP server itself.

For networks requiring type: dhcp in their IPAM configuration, ensure the following:

- A DHCP server is available and running in the environment. The DHCP server is external to the cluster and is expected to be part of the customer’s existing network infrastructure.

- The DHCP server is appropriately configured to serve IP addresses to the nodes.

In cases where a DHCP server is unavailable in the environment, it is recommended to use the Whereabouts IPAM CNI plugin instead. The Whereabouts CNI provides similar IP address management capabilities without the need for an external DHCP server.

Use the Whereabouts CNI plugin when there is no external DHCP server or where static IP address management is preferred. The Whereabouts plugin includes a reconciler daemon to manage stale IP address allocations.

A DHCP lease must be periodically renewed throughout the container’s lifetime, so a separate daemon, the DHCP IPAM CNI Daemon, is required. To deploy the DHCP IPAM CNI daemon, modify the Cluster Network Operator (CNO) configuration to trigger the deployment of this daemon as part of the additional network setup.

18.4.1.2.1. Static IP address assignment configuration

The following table describes the configuration for static IP address assignment:

| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value static is required. |
| addresses | array | An array of objects specifying IP addresses to assign to the virtual interface. Both IPv4 and IPv6 IP addresses are supported. |
| routes | array | An array of objects specifying routes to configure inside the pod. |
| dns | object | Optional: An object specifying the DNS configuration. |

The addresses array requires objects with the following fields:

| Field | Type | Description |
|---|---|---|
| address | string | An IP address and network prefix that you specify in CIDR notation. The prefix length determines the netmask of the interface on the additional network. |
| gateway | string | The default gateway to route egress network traffic to. |

The routes array requires objects with the following fields:

| Field | Type | Description |
|---|---|---|
| dst | string | The destination IP address range in CIDR format. |
| gw | string | The gateway where network traffic is routed. |

The dns object supports the following fields:

| Field | Type | Description |
|---|---|---|
| nameservers | array | An array of one or more IP addresses to send DNS queries to. |
| domain | string | The default domain to append to a hostname. |
| search | array | An array of domain names to append to an unqualified hostname during a DNS lookup query. |

Static IP address assignment configuration example

{
  "ipam": {
    "type": "static",
    "addresses": [
      {
        "address": "191.168.1.7/24"
      }
    ]
  }
}
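To see how an address entry is interpreted, Python's standard ipaddress module can split 191.168.1.7/24 into its IP address, netmask, and network. The static_ipam helper below is hypothetical: it only checks that each value parses and then assembles the same ipam block as the example above. Field names follow the tables in this section.

```python
import ipaddress
import json


def static_ipam(addresses, routes=None, dns=None):
    """Assemble a static IPAM block, validating each value (hypothetical helper)."""
    for entry in addresses:
        # ip_interface raises ValueError for a malformed address or prefix.
        ipaddress.ip_interface(entry["address"])
        if "gateway" in entry:
            ipaddress.ip_address(entry["gateway"])
    ipam = {"type": "static", "addresses": addresses}
    if routes:
        for route in routes:
            ipaddress.ip_network(route["dst"])  # dst must be a CIDR range
            if "gw" in route:
                ipaddress.ip_address(route["gw"])
        ipam["routes"] = routes
    if dns:
        ipam["dns"] = dns
    return {"ipam": ipam}


iface = ipaddress.ip_interface("191.168.1.7/24")
print(iface.ip, iface.netmask, iface.network)
# prints: 191.168.1.7 255.255.255.0 191.168.1.0/24
print(json.dumps(static_ipam([{"address": "191.168.1.7/24"}]), indent=2))
```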

18.4.1.2.2. Dynamic IP address (DHCP) assignment configuration

A pod obtains its original DHCP lease when it is created. The lease must be periodically renewed by the DHCP IPAM CNI daemon running on the cluster.

For an Ethernet network attachment, the SR-IOV Network Operator does not deploy the DHCP IPAM CNI daemon; the Cluster Network Operator is responsible for deploying it.

To trigger the deployment of the DHCP IPAM CNI daemon, you must create a shim network attachment by editing the Cluster Network Operator configuration, as in the following example:

Example shim network attachment definition

apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  additionalNetworks:
  - name: dhcp-shim
    namespace: default
    type: Raw
    rawCNIConfig: |-
      {
        "name": "dhcp-shim",
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": {
          "type": "dhcp"
        }
      }
  # ...
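Because rawCNIConfig is a plain string, a JSON typo inside it, such as a missing quote, is not caught when the YAML itself is parsed. The following Python sketch builds one Raw additionalNetworks entry and serializes the CNI configuration with json.dumps, so the embedded JSON is always well formed. The raw_shim helper is hypothetical; it reuses the name, cniVersion, and type values from the example above.

```python
import json


def raw_shim(name, ipam, namespace="default"):
    """Return one spec.additionalNetworks entry of type Raw (hypothetical helper)."""
    cni_config = {
        "name": name,
        "cniVersion": "0.3.1",
        "type": "bridge",
        "ipam": ipam,
    }
    return {
        "name": name,
        "namespace": namespace,
        "type": "Raw",
        # rawCNIConfig is a string; json.dumps guarantees well-formed JSON inside it.
        "rawCNIConfig": json.dumps(cni_config, indent=2),
    }


dhcp_shim = raw_shim("dhcp-shim", {"type": "dhcp"})
print(json.dumps({"spec": {"additionalNetworks": [dhcp_shim]}}, indent=2))
```

The printed structure mirrors the spec section of the example; any entries that already exist in spec.additionalNetworks still need to be kept when you edit the configuration.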

The following table describes the configuration parameters for dynamic IP address assignment with DHCP.

| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value dhcp is required. |

The following JSON example describes the configuration for dynamic IP address assignment with DHCP.

Dynamic IP address (DHCP) assignment configuration example

{
  "ipam": {
    "type": "dhcp"
  }
}

18.4.1.2.3. Dynamic IP address assignment configuration with Whereabouts

The Whereabouts CNI plugin allows the dynamic assignment of an IP address to an additional network without the use of a DHCP server.

The Whereabouts CNI plugin also supports overlapping IP address ranges and configuration of the same CIDR range multiple times within separate NetworkAttachmentDefinition custom resources. This provides greater flexibility and management capabilities in multi-tenant environments.

18.4.1.2.3.1. Dynamic IP address configuration objects

The following table describes the configuration objects for dynamic IP address assignment with Whereabouts:

| Field | Type | Description |
|---|---|---|
| type | string | The IPAM address type. The value whereabouts is required. |
| range | string | An IP address and range in CIDR notation. IP addresses are assigned from within this range of addresses. |
| exclude | array | Optional: A list of zero or more IP addresses and ranges in CIDR notation. IP addresses within an excluded address range are not assigned. |
| network_name | string | Optional: Helps ensure that each group or domain of pods gets its own set of IP addresses, even if they share the same range of IP addresses. Setting this field is important for keeping networks separate and organized, notably in multi-tenant environments. |

18.4.1.2.3.2. Dynamic IP address assignment configuration that uses Whereabouts

The following example shows a dynamic address assignment configuration that uses Whereabouts:

Whereabouts dynamic IP address assignment

{
  "ipam": {
    "type": "whereabouts",
    "range": "192.0.2.192/27",
    "exclude": [
      "192.0.2.192/30",
      "192.0.2.196/32"
    ]
  }
}
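The exclude list in this example removes 192.0.2.192/30 (192.0.2.192 to 192.0.2.195) and 192.0.2.196 from the /27 range. The following Python sketch lists the addresses that remain as candidates. It uses the ipaddress hosts() method, which skips the network and broadcast addresses of an IPv4 subnet; whether Whereabouts skips those boundary addresses in the same way, and in which order it hands addresses out, are assumptions that this sketch does not model. The function name is hypothetical.

```python
import ipaddress


def assignable(range_cidr, exclude):
    """List candidate addresses in range_cidr outside every exclude entry."""
    network = ipaddress.ip_network(range_cidr)
    excluded = [ipaddress.ip_network(e) for e in exclude]
    # hosts() skips the network and broadcast addresses of an IPv4 subnet.
    return [ip for ip in network.hosts()
            if not any(ip in block for block in excluded)]


wb_pool = assignable("192.0.2.192/27", ["192.0.2.192/30", "192.0.2.196/32"])
print(wb_pool[0], wb_pool[-1], len(wb_pool))  # prints: 192.0.2.197 192.0.2.222 26
```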

18.4.1.2.3.3. Dynamic IP address assignment that uses Whereabouts with overlapping IP address ranges

The following example shows a dynamic IP address assignment that uses overlapping IP address ranges for multi-tenant networks.

NetworkAttachmentDefinition 1

{
  "ipam": {
    "type": "whereabouts",
    "range": "192.0.2.192/29",
    "network_name": "example_net_common"
  }
}

The network_name field is optional. If it is set, it must match the network_name of NetworkAttachmentDefinition 2.

NetworkAttachmentDefinition 2

{
  "ipam": {
    "type": "whereabouts",
    "range": "192.0.2.192/24",
    "network_name": "example_net_common"
  }
}

The network_name field is optional. If it is set, it must match the network_name of NetworkAttachmentDefinition 1.
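The two ranges in this example overlap: every address in the /29 range also lies inside the /24 range. The following Python sketch counts the shared addresses with the standard ipaddress module. It assumes that Whereabouts, like ip_network(..., strict=False), reads 192.0.2.192/24 as the network 192.0.2.0/24. The function name is hypothetical, and the sketch does not model how Whereabouts tracks reservations across overlapping ranges.

```python
import ipaddress


def overlapping_addresses(range_a, range_b):
    """Count the addresses shared by two CIDR ranges."""
    # strict=False accepts ranges written with host bits set, such as 192.0.2.192/24.
    a = ipaddress.ip_network(range_a, strict=False)
    b = ipaddress.ip_network(range_b, strict=False)
    if not a.overlaps(b):
        return 0
    # Two overlapping CIDR blocks always nest, so the overlap is the smaller block.
    return min(a.num_addresses, b.num_addresses)


print(overlapping_addresses("192.0.2.192/29", "192.0.2.192/24"))  # prints: 8
```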

18.4.2. Configuring SR-IOV additional network

You can configure an additional network that uses SR-IOV hardware by creating an SriovIBNetwork object. When you create an SriovIBNetwork object, the SR-IOV Network Operator automatically creates a NetworkAttachmentDefinition object.

Do not modify or delete an SriovIBNetwork object if it is attached to any pods in a running state.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in as a user with cluster-admin privileges.

Procedure

1. Create a SriovIBNetwork object, and then save the YAML in the <name>.yaml file, where <name> is a name for this additional network. The object specification might resemble the following example:

apiVersion: sriovnetwork.openshift.io/v1
kind: SriovIBNetwork
metadata:
  name: attach1
  namespace: openshift-sriov-network-operator
spec:
  resourceName: net1
  networkNamespace: project2
  ipam: |-
    {
      "type": "host-local",
      "subnet": "10.56.217.0/24",
      "rangeStart": "10.56.217.171",
      "rangeEnd": "10.56.217.181",
      "gateway": "10.56.217.1"
    }

2. To create the object, enter the following command:

$ oc create -f <name>.yaml

where <name> specifies the name of the additional network.

3. Optional: To confirm that the NetworkAttachmentDefinition object that is associated with the SriovIBNetwork object that you created in the previous step exists, enter the following command. Replace <namespace> with the networkNamespace you specified in the SriovIBNetwork object.

$ oc get net-attach-def -n <namespace>
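The host-local settings in this example hand out addresses from rangeStart through rangeEnd inside the subnet. The following Python sketch checks those bounds and lists the pool, which holds 11 addresses (10.56.217.171 to 10.56.217.181 inclusive). The helper name is hypothetical; the sketch only does address arithmetic and does not reproduce how host-local stores allocations.

```python
import ipaddress


def host_local_pool(subnet, range_start, range_end):
    """List the addresses from rangeStart to rangeEnd, inclusive (hypothetical helper)."""
    net = ipaddress.ip_network(subnet)
    start = ipaddress.ip_address(range_start)
    end = ipaddress.ip_address(range_end)
    if start not in net or end not in net or start > end:
        raise ValueError("rangeStart and rangeEnd must lie inside subnet, in order")
    # Adding an int to an address keeps the IP version, so IPv6 works the same way.
    return [start + offset for offset in range(int(end) - int(start) + 1)]


ib_pool = host_local_pool("10.56.217.0/24", "10.56.217.171", "10.56.217.181")
gateway = ipaddress.ip_address("10.56.217.1")
print(len(ib_pool), gateway in ib_pool)  # prints: 11 False
```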

18.4.3. Runtime configuration for an InfiniBand-based SR-IOV attachment

When attaching a pod to an additional network, you can specify a runtime configuration to make specific customizations for the pod. For example, you can request a specific MAC hardware address.

You specify the runtime configuration by setting an annotation in the pod specification. The annotation key is k8s.v1.cni.cncf.io/networks, and its value is a JSON array of objects that describe the runtime configuration.
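Because the annotation value is a string that holds JSON, it is easy to get the quoting wrong when writing it by hand. The following Python sketch serializes a list of attachment objects into the annotation value. The helper name and the network name ib-static-net are hypothetical, and requesting a specific address with ips assumes that the network enables the '{ "ips": true }' capability and uses an IPAM plugin that honors requested addresses.

```python
import json


def networks_annotation(*attachments):
    """Return pod annotations that attach the pod to the given networks (hypothetical helper)."""
    # The annotation value is a string containing a JSON array of objects.
    return {"k8s.v1.cni.cncf.io/networks": json.dumps(list(attachments))}


annotations = networks_annotation({"name": "ib-static-net", "ips": ["192.0.2.10/24"]})
print(annotations["k8s.v1.cni.cncf.io/networks"])
# prints: [{"name": "ib-static-net", "ips": ["192.0.2.10/24"]}]
```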

The following JSON describes the runtime configuration options for an InfiniBand-based SR-IOV network attachment.

[

{