This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.Chapter 22. Clusters at the network far edge

22.1. Challenges of the network far edge

Edge computing presents complex challenges when managing many sites in geographically displaced locations. Use zero touch provisioning (ZTP) and GitOps to provision and manage sites at the far edge of the network.

22.1.1. Overcoming the challenges of the network far edge

Today, service providers want to deploy their infrastructure at the edge of the network. This presents significant challenges:

- How do you handle deployments of many edge sites in parallel?

- What happens when you need to deploy sites in disconnected environments?

- How do you manage the lifecycle of large fleets of clusters?

Zero touch provisioning (ZTP) and GitOps meets these challenges by allowing you to provision remote edge sites at scale with declarative site definitions and configurations for bare-metal equipment. Template or overlay configurations install OpenShift Container Platform features that are required for CNF workloads. The full lifecycle of installation and upgrades is handled through the ZTP pipeline.

ZTP uses GitOps for infrastructure deployments. With GitOps, you use declarative YAML files and other defined patterns stored in Git repositories. Red Hat Advanced Cluster Management (RHACM) uses your Git repositories to drive the deployment of your infrastructure.

GitOps provides traceability, role-based access control (RBAC), and a single source of truth for the desired state of each site. Scalability issues are addressed by Git methodologies and event driven operations through webhooks.

You start the ZTP workflow by creating declarative site definition and configuration custom resources (CRs) that the ZTP pipeline delivers to the edge nodes.

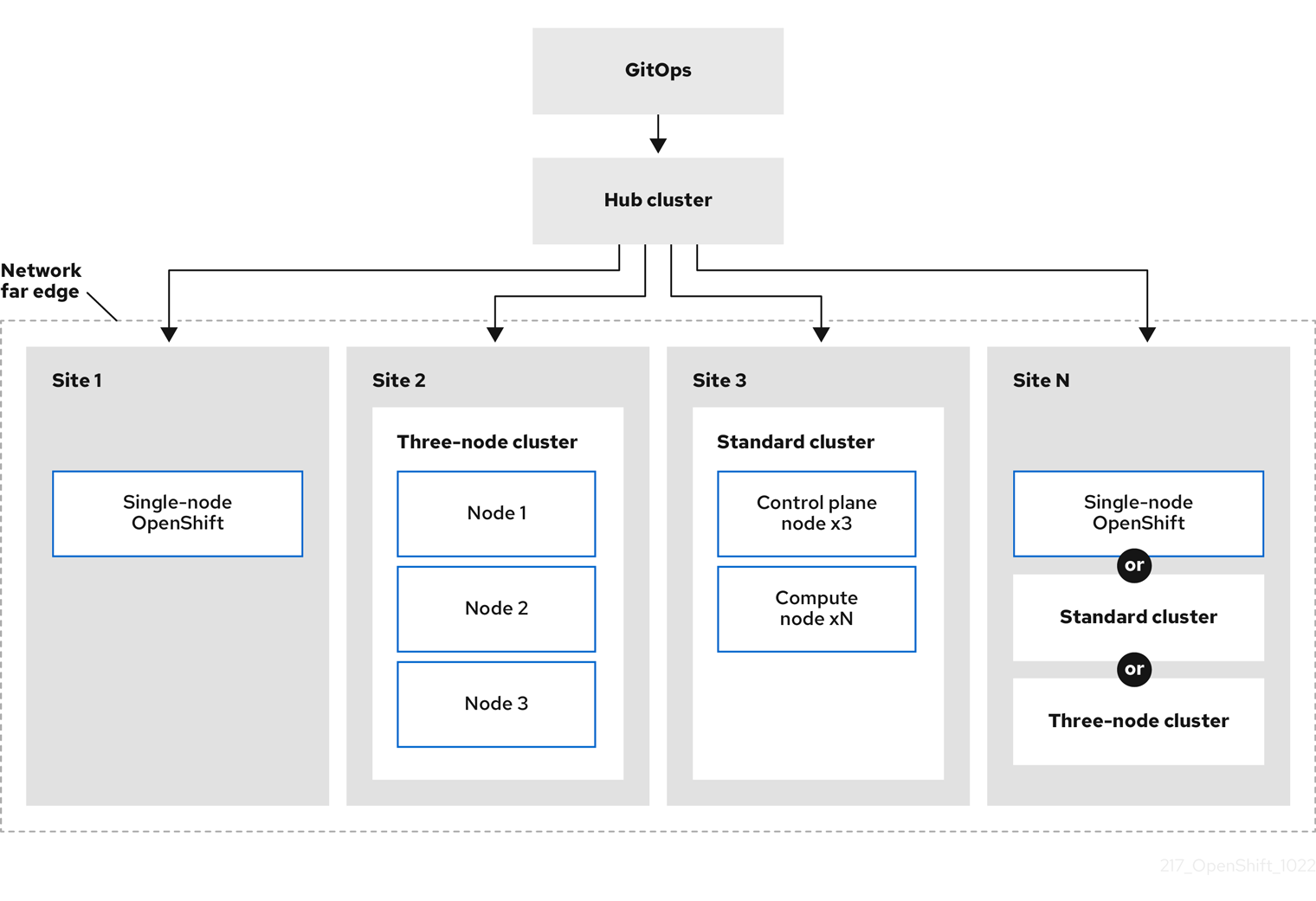

The following diagram shows how ZTP works within the far edge framework.

22.1.2. Using ZTP to provision clusters at the network far edge

Red Hat Advanced Cluster Management (RHACM) manages clusters in a hub-and-spoke architecture, where a single hub cluster manages many spoke clusters. Hub clusters running RHACM provision and deploy the managed clusters by using zero touch provisioning (ZTP) and the assisted service that is deployed when you install RHACM.

The assisted service handles provisioning of OpenShift Container Platform on single node clusters, three-node clusters, or standard clusters running on bare metal.

A high-level overview of using ZTP to provision and maintain bare-metal hosts with OpenShift Container Platform is as follows:

- A hub cluster running RHACM manages an OpenShift image registry that mirrors the OpenShift Container Platform release images. RHACM uses the OpenShift image registry to provision the managed clusters.

- You manage the bare-metal hosts in a YAML format inventory file, versioned in a Git repository.

- You make the hosts ready for provisioning as managed clusters, and use RHACM and the assisted service to install the bare-metal hosts on site.

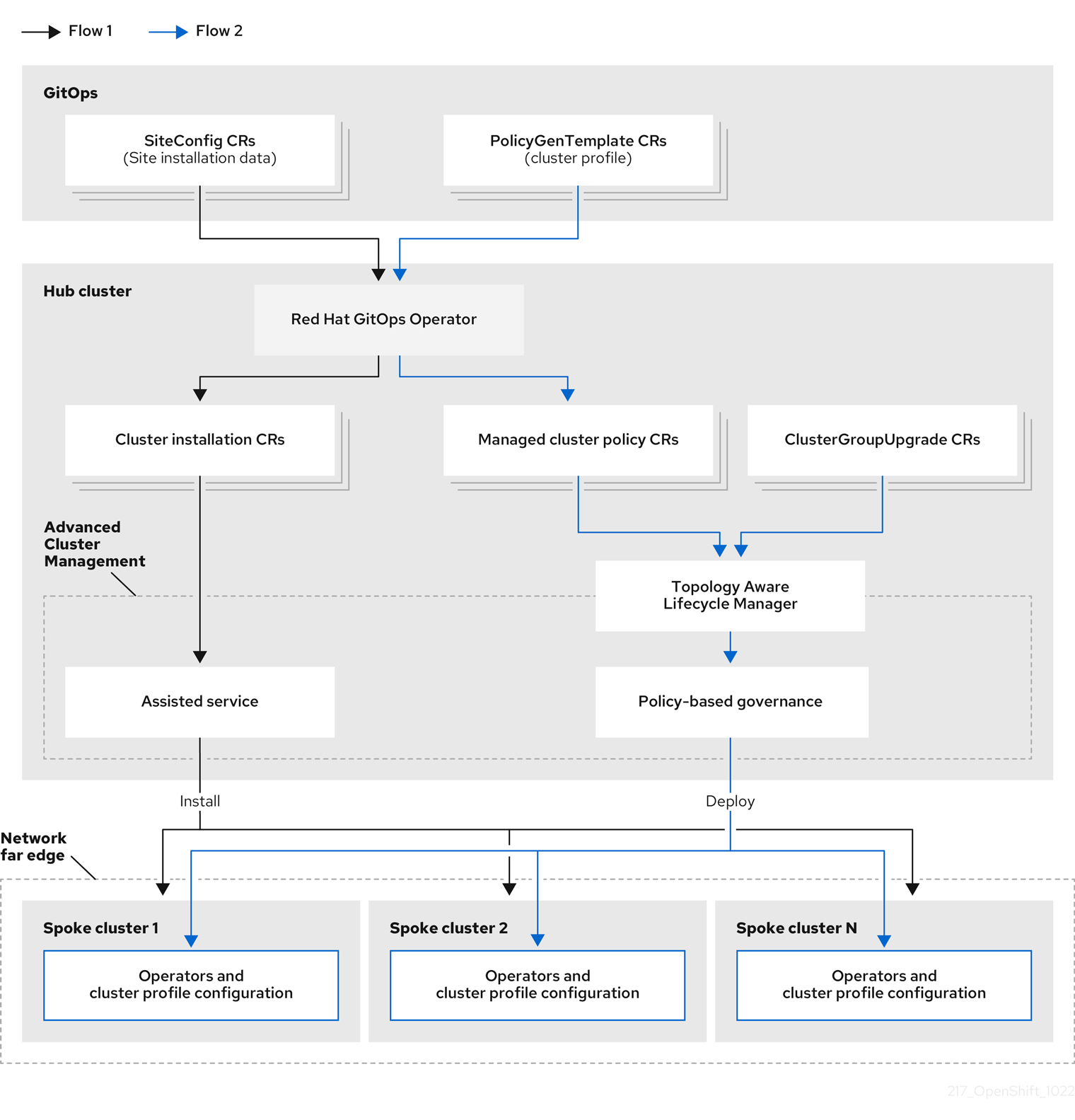

Installing and deploying the clusters is a two-stage process, involving an initial installation phase, and a subsequent configuration phase. The following diagram illustrates this workflow:

22.1.3. Installing managed clusters with SiteConfig resources and RHACM

GitOps ZTP uses SiteConfig custom resources (CRs) in a Git repository to manage the processes that install OpenShift Container Platform clusters. The SiteConfig CR contains cluster-specific parameters required for installation. It has options for applying select configuration CRs during installation including user defined extra manifests.

The ZTP GitOps plugin processes SiteConfig CRs to generate a collection of CRs on the hub cluster. This triggers the assisted service in Red Hat Advanced Cluster Management (RHACM) to install OpenShift Container Platform on the bare-metal host. You can find installation status and error messages in these CRs on the hub cluster.

You can provision single clusters manually or in batches with ZTP:

- Provisioning a single cluster

-

Create a single

SiteConfigCR and related installation and configuration CRs for the cluster, and apply them in the hub cluster to begin cluster provisioning. This is a good way to test your CRs before deploying on a larger scale. - Provisioning many clusters

-

Install managed clusters in batches of up to 400 by defining

SiteConfigand related CRs in a Git repository. ArgoCD uses theSiteConfigCRs to deploy the sites. The RHACM policy generator creates the manifests and applies them to the hub cluster. This starts the cluster provisioning process.

22.1.4. Configuring managed clusters with policies and PolicyGenTemplate resources

Zero touch provisioning (ZTP) uses Red Hat Advanced Cluster Management (RHACM) to configure clusters by using a policy-based governance approach to applying the configuration.

The policy generator or PolicyGen is a plugin for the GitOps Operator that enables the creation of RHACM policies from a concise template. The tool can combine multiple CRs into a single policy, and you can generate multiple policies that apply to various subsets of clusters in your fleet.

For scalability and to reduce the complexity of managing configurations across the fleet of clusters, use configuration CRs with as much commonality as possible.

- Where possible, apply configuration CRs using a fleet-wide common policy.

- The next preference is to create logical groupings of clusters to manage as much of the remaining configurations as possible under a group policy.

- When a configuration is unique to an individual site, use RHACM templating on the hub cluster to inject the site-specific data into a common or group policy. Alternatively, apply an individual site policy for the site.

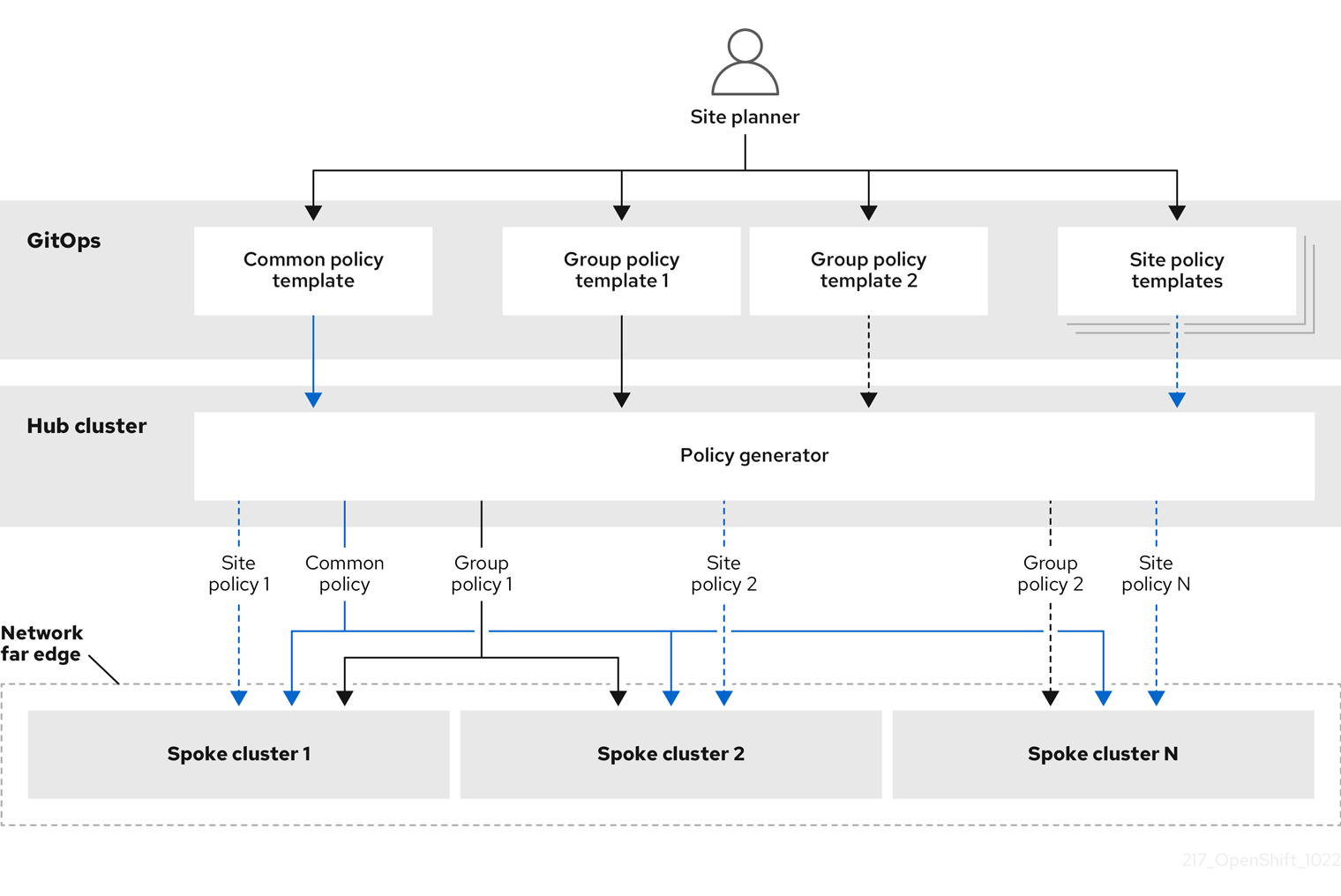

The following diagram shows how the policy generator interacts with GitOps and RHACM in the configuration phase of cluster deployment.

For large fleets of clusters, it is typical for there to be a high-level of consistency in the configuration of those clusters.

The following recommended structuring of policies combines configuration CRs to meet several goals:

- Describe common configurations once and apply to the fleet.

- Minimize the number of maintained and managed policies.

- Support flexibility in common configurations for cluster variants.

| Policy category | Description |

|---|---|

| Common |

A policy that exists in the common category is applied to all clusters in the fleet. Use common |

| Groups |

A policy that exists in the groups category is applied to a group of clusters in the fleet. Use group |

| Sites | A policy that exists in the sites category is applied to a specific cluster site. Any cluster can have its own specific policies maintained. |

22.2. Preparing the hub cluster for ZTP

To use RHACM in a disconnected environment, create a mirror registry that mirrors the OpenShift Container Platform release images and Operator Lifecycle Manager (OLM) catalog that contains the required Operator images. OLM manages, installs, and upgrades Operators and their dependencies in the cluster. You can also use a disconnected mirror host to serve the RHCOS ISO and RootFS disk images that are used to provision the bare-metal hosts.

22.2.1. Telco RAN 4.11 validated solution software versions

The Red Hat Telco Radio Access Network (RAN) version 4.11 solution has been validated using the following Red Hat software products versions.

| Product | Software version |

|---|---|

| Hub cluster OpenShift Container Platform version | 4.11 |

| GitOps ZTP plugin | 4.9, 4.10, or 4.11 |

| Red Hat Advanced Cluster Management (RHACM) | 2.5 or 2.6 |

| Red Hat OpenShift GitOps | 1.5 |

| Topology Aware Lifecycle Manager (TALM) | 4.10 or 4.11 |

22.2.2. Installing GitOps ZTP in a disconnected environment

Use Red Hat Advanced Cluster Management (RHACM), Red Hat OpenShift GitOps, and Topology Aware Lifecycle Manager (TALM) on the hub cluster in the disconnected environment to manage the deployment of multiple managed clusters.

Prerequisites

-

You have installed the OpenShift Container Platform CLI (

oc). -

You have logged in as a user with

cluster-adminprivileges. You have configured a disconnected mirror registry for use in the cluster.

NoteThe disconnected mirror registry that you create must contain a version of TALM backup and pre-cache images that matches the version of TALM running in the hub cluster. The spoke cluster must be able to resolve these images in the disconnected mirror registry.

Procedure

- Install RHACM in the hub cluster. See Installing RHACM in a disconnected environment.

- Install GitOps and TALM in the hub cluster.

22.2.3. Adding RHCOS ISO and RootFS images to the disconnected mirror host

Before you begin installing clusters in the disconnected environment with Red Hat Advanced Cluster Management (RHACM), you must first host Red Hat Enterprise Linux CoreOS (RHCOS) images for it to use. Use a disconnected mirror to host the RHCOS images.

Prerequisites

- Deploy and configure an HTTP server to host the RHCOS image resources on the network. You must be able to access the HTTP server from your computer, and from the machines that you create.

The RHCOS images might not change with every release of OpenShift Container Platform. You must download images with the highest version that is less than or equal to the version that you install. Use the image versions that match your OpenShift Container Platform version if they are available. You require ISO and RootFS images to install RHCOS on the hosts. RHCOS QCOW2 images are not supported for this installation type.

Procedure

- Log in to the mirror host.

Obtain the RHCOS ISO and RootFS images from mirror.openshift.com, for example:

Export the required image names and OpenShift Container Platform version as environment variables:

export ISO_IMAGE_NAME=<iso_image_name>

$ export ISO_IMAGE_NAME=<iso_image_name>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow export ROOTFS_IMAGE_NAME=<rootfs_image_name>

$ export ROOTFS_IMAGE_NAME=<rootfs_image_name>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow export OCP_VERSION=<ocp_version>

$ export OCP_VERSION=<ocp_version>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow Download the required images:

sudo wget https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.11/${OCP_VERSION}/${ISO_IMAGE_NAME} -O /var/www/html/${ISO_IMAGE_NAME}$ sudo wget https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.11/${OCP_VERSION}/${ISO_IMAGE_NAME} -O /var/www/html/${ISO_IMAGE_NAME}Copy to Clipboard Copied! Toggle word wrap Toggle overflow sudo wget https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.11/${OCP_VERSION}/${ROOTFS_IMAGE_NAME} -O /var/www/html/${ROOTFS_IMAGE_NAME}$ sudo wget https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.11/${OCP_VERSION}/${ROOTFS_IMAGE_NAME} -O /var/www/html/${ROOTFS_IMAGE_NAME}Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification steps

Verify that the images downloaded successfully and are being served on the disconnected mirror host, for example:

wget http://$(hostname)/${ISO_IMAGE_NAME}$ wget http://$(hostname)/${ISO_IMAGE_NAME}Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Saving to: rhcos-4.11.1-x86_64-live.x86_64.iso rhcos-4.11.1-x86_64-live.x86_64.iso- 11%[====> ] 10.01M 4.71MB/s

Saving to: rhcos-4.11.1-x86_64-live.x86_64.iso rhcos-4.11.1-x86_64-live.x86_64.iso- 11%[====> ] 10.01M 4.71MB/sCopy to Clipboard Copied! Toggle word wrap Toggle overflow

22.2.4. Enabling the assisted service and updating AgentServiceConfig on the hub cluster

Red Hat Advanced Cluster Management (RHACM) uses the assisted service to deploy OpenShift Container Platform clusters. The assisted service is deployed automatically when you enable the MultiClusterHub Operator with Central Infrastructure Management (CIM). When you have enabled CIM on the hub cluster, you then need to update the AgentServiceConfig custom resource (CR) with references to the ISO and RootFS images that are hosted on the mirror registry HTTP server.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges. - You have enabled the assisted service on the hub cluster. For more information, see Enabling CIM.

Procedure

Update the

AgentServiceConfigCR by running the following command:oc edit AgentServiceConfig

$ oc edit AgentServiceConfigCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add the following entry to the

items.spec.osImagesfield in the CR:- cpuArchitecture: x86_64 openshiftVersion: "4.11" rootFSUrl: https://<host>/<path>/rhcos-live-rootfs.x86_64.img url: https://<mirror-registry>/<path>/rhcos-live.x86_64.iso- cpuArchitecture: x86_64 openshiftVersion: "4.11" rootFSUrl: https://<host>/<path>/rhcos-live-rootfs.x86_64.img url: https://<mirror-registry>/<path>/rhcos-live.x86_64.isoCopy to Clipboard Copied! Toggle word wrap Toggle overflow where:

- <host>

- Is the fully qualified domain name (FQDN) for the target mirror registry HTTP server.

- <path>

- Is the path to the image on the target mirror registry.

Save and quit the editor to apply the changes.

22.2.5. Configuring the hub cluster to use a disconnected mirror registry

You can configure the hub cluster to use a disconnected mirror registry for a disconnected environment.

Prerequisites

- You have a disconnected hub cluster installation with Red Hat Advanced Cluster Management (RHACM) 2.6 installed.

-

You have hosted the

rootfsandisoimages on an HTTP server.

If you enable TLS for the HTTP server, you must confirm the root certificate is signed by an authority trusted by the client and verify the trusted certificate chain between your OpenShift Container Platform hub and managed clusters and the HTTP server. Using a server configured with an untrusted certificate prevents the images from being downloaded to the image creation service. Using untrusted HTTPS servers is not supported.

Procedure

Create a

ConfigMapcontaining the mirror registry config:Copy to Clipboard Copied! Toggle word wrap Toggle overflow This updates

mirrorRegistryRefin theAgentServiceConfigcustom resource, as shown below:Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

A valid NTP server is required during cluster installation. Ensure that a suitable NTP server is available and can be reached from the installed clusters through the disconnected network.

22.2.6. Configuring the hub cluster with ArgoCD

You can configure your hub cluster with a set of ArgoCD applications that generate the required installation and policy custom resources (CR) for each site based on a zero touch provisioning (ZTP) GitOps flow.

Prerequisites

- You have a OpenShift Container Platform hub cluster with Red Hat Advanced Cluster Management (RHACM) and Red Hat OpenShift GitOps installed.

-

You have extracted the reference deployment from the ZTP GitOps plugin container as described in the "Preparing the GitOps ZTP site configuration repository" section. Extracting the reference deployment creates the

out/argocd/deploymentdirectory referenced in the following procedure.

Procedure

Prepare the ArgoCD pipeline configuration:

- Create a Git repository with the directory structure similar to the example directory. For more information, see "Preparing the GitOps ZTP site configuration repository".

Configure access to the repository using the ArgoCD UI. Under Settings configure the following:

-

Repositories - Add the connection information. The URL must end in

.git, for example,https://repo.example.com/repo.gitand credentials. - Certificates - Add the public certificate for the repository, if needed.

-

Repositories - Add the connection information. The URL must end in

Modify the two ArgoCD applications,

out/argocd/deployment/clusters-app.yamlandout/argocd/deployment/policies-app.yaml, based on your Git repository:-

Update the URL to point to the Git repository. The URL ends with

.git, for example,https://repo.example.com/repo.git. -

The

targetRevisionindicates which Git repository branch to monitor. -

pathspecifies the path to theSiteConfigandPolicyGenTemplateCRs, respectively.

-

Update the URL to point to the Git repository. The URL ends with

To install the ZTP GitOps plugin you must patch the ArgoCD instance in the hub cluster by using the patch file previously extracted into the

out/argocd/deployment/directory. Run the following command:oc patch argocd openshift-gitops \ -n openshift-gitops --type=merge \ --patch-file out/argocd/deployment/argocd-openshift-gitops-patch.json

$ oc patch argocd openshift-gitops \ -n openshift-gitops --type=merge \ --patch-file out/argocd/deployment/argocd-openshift-gitops-patch.jsonCopy to Clipboard Copied! Toggle word wrap Toggle overflow Apply the pipeline configuration to your hub cluster by using the following command:

oc apply -k out/argocd/deployment

$ oc apply -k out/argocd/deploymentCopy to Clipboard Copied! Toggle word wrap Toggle overflow

22.2.7. Preparing the GitOps ZTP site configuration repository

Before you can use the ZTP GitOps pipeline, you need to prepare the Git repository to host the site configuration data.

Prerequisites

- You have configured the hub cluster GitOps applications for generating the required installation and policy custom resources (CRs).

- You have deployed the managed clusters using zero touch provisioning (ZTP).

Procedure

-

Create a directory structure with separate paths for the

SiteConfigandPolicyGenTemplateCRs. Export the

argocddirectory from theztp-site-generatecontainer image using the following commands:podman pull registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11

$ podman pull registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11Copy to Clipboard Copied! Toggle word wrap Toggle overflow mkdir -p ./out

$ mkdir -p ./outCopy to Clipboard Copied! Toggle word wrap Toggle overflow podman run --log-driver=none --rm registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11 extract /home/ztp --tar | tar x -C ./out

$ podman run --log-driver=none --rm registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11 extract /home/ztp --tar | tar x -C ./outCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check that the

outdirectory contains the following subdirectories:-

out/extra-manifestcontains the source CR files thatSiteConfiguses to generate extra manifestconfigMap. -

out/source-crscontains the source CR files thatPolicyGenTemplateuses to generate the Red Hat Advanced Cluster Management (RHACM) policies. -

out/argocd/deploymentcontains patches and YAML files to apply on the hub cluster for use in the next step of this procedure. -

out/argocd/examplecontains the examples forSiteConfigandPolicyGenTemplatefiles that represent the recommended configuration.

-

The directory structure under out/argocd/example serves as a reference for the structure and content of your Git repository. The example includes SiteConfig and PolicyGenTemplate reference CRs for single-node, three-node, and standard clusters. Remove references to cluster types that you are not using. The following example describes a set of CRs for a network of single-node clusters:

Keep SiteConfig and PolicyGenTemplate CRs in separate directories. Both the SiteConfig and PolicyGenTemplate directories must contain a kustomization.yaml file that explicitly includes the files in that directory.

This directory structure and the kustomization.yaml files must be committed and pushed to your Git repository. The initial push to Git should include the kustomization.yaml files. The SiteConfig (example-sno.yaml) and PolicyGenTemplate (common-ranGen.yaml, group-du-sno*.yaml, and example-sno-site.yaml) files can be omitted and pushed at a later time as required when deploying a site.

The KlusterletAddonConfigOverride.yaml file is only required if one or more SiteConfig CRs which make reference to it are committed and pushed to Git. See example-sno.yaml for an example of how this is used.

22.3. Installing managed clusters with RHACM and SiteConfig resources

You can provision OpenShift Container Platform clusters at scale with Red Hat Advanced Cluster Management (RHACM) using the assisted service and the GitOps plugin policy generator with core-reduction technology enabled. The zero touch priovisioning (ZTP) pipeline performs the cluster installations. ZTP can be used in a disconnected environment.

22.3.1. GitOps ZTP and Topology Aware Lifecycle Manager

GitOps zero touch provisioning (ZTP) generates installation and configuration CRs from manifests stored in Git. These artifacts are applied to a centralized hub cluster where Red Hat Advanced Cluster Management (RHACM), the assisted service, and the Topology Aware Lifecycle Manager (TALM) use the CRs to install and configure the managed cluster. The configuration phase of the ZTP pipeline uses the TALM to orchestrate the application of the configuration CRs to the cluster. There are several key integration points between GitOps ZTP and the TALM.

- Inform policies

-

By default, GitOps ZTP creates all policies with a remediation action of

inform. These policies cause RHACM to report on compliance status of clusters relevant to the policies but does not apply the desired configuration. During the ZTP process, after OpenShift installation, the TALM steps through the createdinformpolicies and enforces them on the target managed cluster(s). This applies the configuration to the managed cluster. Outside of the ZTP phase of the cluster lifecycle, this allows you to change policies without the risk of immediately rolling those changes out to affected managed clusters. You can control the timing and the set of remediated clusters by using TALM. - Automatic creation of ClusterGroupUpgrade CRs

To automate the initial configuration of newly deployed clusters, TALM monitors the state of all

ManagedClusterCRs on the hub cluster. AnyManagedClusterCR that does not have aztp-donelabel applied, including newly createdManagedClusterCRs, causes the TALM to automatically create aClusterGroupUpgradeCR with the following characteristics:-

The

ClusterGroupUpgradeCR is created and enabled in theztp-installnamespace. -

ClusterGroupUpgradeCR has the same name as theManagedClusterCR. -

The cluster selector includes only the cluster associated with that

ManagedClusterCR. -

The set of managed policies includes all policies that RHACM has bound to the cluster at the time the

ClusterGroupUpgradeis created. - Pre-caching is disabled.

- Timeout set to 4 hours (240 minutes).

The automatic creation of an enabled

ClusterGroupUpgradeensures that initial zero-touch deployment of clusters proceeds without the need for user intervention. Additionally, the automatic creation of aClusterGroupUpgradeCR for anyManagedClusterwithout theztp-donelabel allows a failed ZTP installation to be restarted by simply deleting theClusterGroupUpgradeCR for the cluster.-

The

- Waves

Each policy generated from a

PolicyGenTemplateCR includes aztp-deploy-waveannotation. This annotation is based on the same annotation from each CR which is included in that policy. The wave annotation is used to order the policies in the auto-generatedClusterGroupUpgradeCR. The wave annotation is not used other than for the auto-generatedClusterGroupUpgradeCR.NoteAll CRs in the same policy must have the same setting for the

ztp-deploy-waveannotation. The default value of this annotation for each CR can be overridden in thePolicyGenTemplate. The wave annotation in the source CR is used for determining and setting the policy wave annotation. This annotation is removed from each built CR which is included in the generated policy at runtime.The TALM applies the configuration policies in the order specified by the wave annotations. The TALM waits for each policy to be compliant before moving to the next policy. It is important to ensure that the wave annotation for each CR takes into account any prerequisites for those CRs to be applied to the cluster. For example, an Operator must be installed before or concurrently with the configuration for the Operator. Similarly, the

CatalogSourcefor an Operator must be installed in a wave before or concurrently with the Operator Subscription. The default wave value for each CR takes these prerequisites into account.Multiple CRs and policies can share the same wave number. Having fewer policies can result in faster deployments and lower CPU usage. It is a best practice to group many CRs into relatively few waves.

To check the default wave value in each source CR, run the following command against the out/source-crs directory that is extracted from the ztp-site-generate container image:

grep -r "ztp-deploy-wave" out/source-crs

$ grep -r "ztp-deploy-wave" out/source-crs- Phase labels

The

ClusterGroupUpgradeCR is automatically created and includes directives to annotate theManagedClusterCR with labels at the start and end of the ZTP process.When ZTP configuration postinstallation commences, the

ManagedClusterhas theztp-runninglabel applied. When all policies are remediated to the cluster and are fully compliant, these directives cause the TALM to remove theztp-runninglabel and apply theztp-donelabel.For deployments that make use of the

informDuValidatorpolicy, theztp-donelabel is applied when the cluster is fully ready for deployment of applications. This includes all reconciliation and resulting effects of the ZTP applied configuration CRs. Theztp-donelabel affects automaticClusterGroupUpgradeCR creation by TALM. Do not manipulate this label after the initial ZTP installation of the cluster.- Linked CRs

-

The automatically created

ClusterGroupUpgradeCR has the owner reference set as theManagedClusterfrom which it was derived. This reference ensures that deleting theManagedClusterCR causes the instance of theClusterGroupUpgradeto be deleted along with any supporting resources.

22.3.2. Overview of deploying managed clusters with ZTP

Red Hat Advanced Cluster Management (RHACM) uses zero touch provisioning (ZTP) to deploy single-node OpenShift Container Platform clusters, three-node clusters, and standard clusters. You manage site configuration data as OpenShift Container Platform custom resources (CRs) in a Git repository. ZTP uses a declarative GitOps approach for a develop once, deploy anywhere model to deploy the managed clusters.

The deployment of the clusters includes:

- Installing the host operating system (RHCOS) on a blank server

- Deploying OpenShift Container Platform

- Creating cluster policies and site subscriptions

- Making the necessary network configurations to the server operating system

- Deploying profile Operators and performing any needed software-related configuration, such as performance profile, PTP, and SR-IOV

Overview of the managed site installation process

After you apply the managed site custom resources (CRs) on the hub cluster, the following actions happen automatically:

- A Discovery image ISO file is generated and booted on the target host.

- When the ISO file successfully boots on the target host it reports the host hardware information to RHACM.

- After all hosts are discovered, OpenShift Container Platform is installed.

-

When OpenShift Container Platform finishes installing, the hub installs the

klusterletservice on the target cluster. - The requested add-on services are installed on the target cluster.

The Discovery image ISO process is complete when the Agent CR for the managed cluster is created on the hub cluster.

The target bare-metal host must meet the networking, firmware, and hardware requirements listed in Recommended single-node OpenShift cluster configuration for vDU application workloads.

22.3.3. Creating the managed bare-metal host secrets

Add the required Secret custom resources (CRs) for the managed bare-metal host to the hub cluster. You need a secret for the ZTP pipeline to access the Baseboard Management Controller (BMC) and a secret for the assisted installer service to pull cluster installation images from the registry.

The secrets are referenced from the SiteConfig CR by name. The namespace must match the SiteConfig namespace.

Procedure

Create a YAML secret file containing credentials for the host Baseboard Management Controller (BMC) and a pull secret required for installing OpenShift and all add-on cluster Operators:

Save the following YAML as the file

example-sno-secret.yaml:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

-

Add the relative path to

example-sno-secret.yamlto thekustomization.yamlfile that you use to install the cluster.

22.3.4. Deploying a managed cluster with SiteConfig and ZTP

Use the following procedure to create a SiteConfig custom resource (CR) and related files and initiate the zero touch provisioning (ZTP) cluster deployment.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges. - You configured the hub cluster for generating the required installation and policy CRs.

You created a Git repository where you manage your custom site configuration data. The repository must be accessible from the hub cluster and you must configure it as a source repository for the ArgoCD application. See "Preparing the GitOps ZTP site configuration repository" for more information.

NoteWhen you create the source repository, ensure that you patch the ArgoCD application with the

argocd/deployment/argocd-openshift-gitops-patch.jsonpatch-file that you extract from theztp-site-generatecontainer. See "Configuring the hub cluster with ArgoCD".To be ready for provisioning managed clusters, you require the following for each bare-metal host:

- Network connectivity

- Your network requires DNS. Managed cluster hosts should be reachable from the hub cluster. Ensure that Layer 3 connectivity exists between the hub cluster and the managed cluster host.

- Baseboard Management Controller (BMC) details

-

ZTP uses BMC username and password details to connect to the BMC during cluster installation. The GitOps ZTP plugin manages the

ManagedClusterCRs on the hub cluster based on theSiteConfigCR in your site Git repo. You create individualBMCSecretCRs for each host manually.

Procedure

Create the required managed cluster secrets on the hub cluster. These resources must be in a namespace with a name matching the cluster name. For example, in

out/argocd/example/siteconfig/example-sno.yaml, the cluster name and namespace isexample-sno.Export the cluster namespace by running the following command:

export CLUSTERNS=example-sno

$ export CLUSTERNS=example-snoCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create the namespace:

oc create namespace $CLUSTERNS

$ oc create namespace $CLUSTERNSCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Create pull secret and BMC

SecretCRs for the managed cluster. The pull secret must contain all the credentials necessary for installing OpenShift Container Platform and all required Operators. See "Creating the managed bare-metal host secrets" for more information.NoteThe secrets are referenced from the

SiteConfigcustom resource (CR) by name. The namespace must match theSiteConfignamespace.Create a

SiteConfigCR for your cluster in your local clone of the Git repository:Choose the appropriate example for your CR from the

out/argocd/example/siteconfig/folder. The folder includes example files for single node, three-node, and standard clusters:-

example-sno.yaml -

example-3node.yaml -

example-standard.yaml

-

Change the cluster and host details in the example file to match the type of cluster you want. For example:

Example single-node OpenShift cluster SiteConfig CR

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Create the

assisted-deployment-pull-secretCR with the same namespace as theSiteConfigCR. - 2

clusterImageSetNameRefdefines an image set available on the hub cluster. To see the list of supported versions on your hub cluster, runoc get clusterimagesets.- 3

- Configure the SSH public key used to access the cluster.

- 4

- Cluster labels must correspond to the

bindingRulesfield in thePolicyGenTemplateCRs that you define. For example,policygentemplates/common-ranGen.yamlapplies to all clusters withcommon: trueset,policygentemplates/group-du-sno-ranGen.yamlapplies to all clusters withgroup-du-sno: ""set. - 5

- Optional. The CR specifed under

KlusterletAddonConfigis used to override the defaultKlusterletAddonConfigthat is created for the cluster. - 6

- For single-node deployments, define a single host. For three-node deployments, define three hosts. For standard deployments, define three hosts with

role: masterand two or more hosts defined withrole: worker. - 7

- BMC address that you use to access the host. Applies to all cluster types.

- 8

- Name of the

bmh-secretCR that you separately create with the host BMC credentials. When creating thebmh-secretCR, use the same namespace as theSiteConfigCR that provisions the host. - 9

- Configures the boot mode for the host. The default value is

UEFI. UseUEFISecureBootto enable secure boot on the host. - 10

cpusetmust match the value set in the clusterPerformanceProfileCRspec.cpu.reservedfield for workload partitioning.- 11

- Specifies the network settings for the node.

- 12

- Configures the IPv6 address for the host. For single-node OpenShift clusters with static IP addresses, the node-specific API and Ingress IPs should be the same.

NoteFor more information about BMC addressing, see the "Additional resources" section.

-

You can inspect the default set of extra-manifest

MachineConfigCRs inout/argocd/extra-manifest. It is automatically applied to the cluster when it is installed. -

Optional: To provision additional install-time manifests on the provisioned cluster, create a directory in your Git repository, for example,

sno-extra-manifest/, and add your custom manifest CRs to this directory. If yourSiteConfig.yamlrefers to this directory in theextraManifestPathfield, any CRs in this referenced directory are appended to the default set of extra manifests.

-

Add the

SiteConfigCR to thekustomization.yamlfile in thegeneratorssection, similar to the example shown inout/argocd/example/siteconfig/kustomization.yaml. Commit the

SiteConfigCR and associatedkustomization.yamlchanges in your Git repository and push the changes.The ArgoCD pipeline detects the changes and begins the managed cluster deployment.

22.3.5. Monitoring managed cluster installation progress

The ArgoCD pipeline uses the SiteConfig CR to generate the cluster configuration CRs and syncs it with the hub cluster. You can monitor the progress of the synchronization in the ArgoCD dashboard.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

When the synchronization is complete, the installation generally proceeds as follows:

The Assisted Service Operator installs OpenShift Container Platform on the cluster. You can monitor the progress of cluster installation from the RHACM dashboard or from the command line by running the following commands:

Export the cluster name:

export CLUSTER=<clusterName>

$ export CLUSTER=<clusterName>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Query the

AgentClusterInstallCR for the managed cluster:oc get agentclusterinstall -n $CLUSTER $CLUSTER -o jsonpath='{.status.conditions[?(@.type=="Completed")]}' | jq$ oc get agentclusterinstall -n $CLUSTER $CLUSTER -o jsonpath='{.status.conditions[?(@.type=="Completed")]}' | jqCopy to Clipboard Copied! Toggle word wrap Toggle overflow Get the installation events for the cluster:

curl -sk $(oc get agentclusterinstall -n $CLUSTER $CLUSTER -o jsonpath='{.status.debugInfo.eventsURL}') | jq '.[-2,-1]'$ curl -sk $(oc get agentclusterinstall -n $CLUSTER $CLUSTER -o jsonpath='{.status.debugInfo.eventsURL}') | jq '.[-2,-1]'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

22.3.6. Troubleshooting GitOps ZTP by validating the installation CRs

The ArgoCD pipeline uses the SiteConfig and PolicyGenTemplate custom resources (CRs) to generate the cluster configuration CRs and Red Hat Advanced Cluster Management (RHACM) policies. Use the following steps to troubleshoot issues that might occur during this process.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

Check that the installation CRs were created by using the following command:

oc get AgentClusterInstall -n <cluster_name>

$ oc get AgentClusterInstall -n <cluster_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow If no object is returned, use the following steps to troubleshoot the ArgoCD pipeline flow from

SiteConfigfiles to the installation CRs.Verify that the

ManagedClusterCR was generated using theSiteConfigCR on the hub cluster:oc get managedcluster

$ oc get managedclusterCopy to Clipboard Copied! Toggle word wrap Toggle overflow If the

ManagedClusteris missing, check if theclustersapplication failed to synchronize the files from the Git repository to the hub cluster:oc describe -n openshift-gitops application clusters

$ oc describe -n openshift-gitops application clustersCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check for the

Status.Conditionsfield to view the error logs for the managed cluster. For example, setting an invalid value forextraManifestPath:in theSiteConfigCR raises the following error:Status: Conditions: Last Transition Time: 2021-11-26T17:21:39Z Message: rpc error: code = Unknown desc = `kustomize build /tmp/https___git.com/ran-sites/siteconfigs/ --enable-alpha-plugins` failed exit status 1: 2021/11/26 17:21:40 Error could not create extra-manifest ranSite1.extra-manifest3 stat extra-manifest3: no such file or directory 2021/11/26 17:21:40 Error: could not build the entire SiteConfig defined by /tmp/kust-plugin-config-913473579: stat extra-manifest3: no such file or directory Error: failure in plugin configured via /tmp/kust-plugin-config-913473579; exit status 1: exit status 1 Type: ComparisonErrorStatus: Conditions: Last Transition Time: 2021-11-26T17:21:39Z Message: rpc error: code = Unknown desc = `kustomize build /tmp/https___git.com/ran-sites/siteconfigs/ --enable-alpha-plugins` failed exit status 1: 2021/11/26 17:21:40 Error could not create extra-manifest ranSite1.extra-manifest3 stat extra-manifest3: no such file or directory 2021/11/26 17:21:40 Error: could not build the entire SiteConfig defined by /tmp/kust-plugin-config-913473579: stat extra-manifest3: no such file or directory Error: failure in plugin configured via /tmp/kust-plugin-config-913473579; exit status 1: exit status 1 Type: ComparisonErrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the

Status.Syncfield. If there are log errors, theStatus.Syncfield could indicate anUnknownerror:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

22.3.7. Troubleshooting {ztp} virtual media booting on Supermicro servers

SuperMicro X11 servers do not support virtual media installations when the image is served using the https protocol. As a result, single-node OpenShift deployments for this environment fail to boot on the target node. To avoid this issue, log in to the hub cluster and disable Transport Layer Security (TLS) in the Provisioning resource. This ensures the image is not served with TLS even though the image address uses the https scheme.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

Disable TLS in the

Provisioningresource by running the following command:oc patch provisioning provisioning-configuration --type merge -p '{"spec":{"disableVirtualMediaTLS": true}}'$ oc patch provisioning provisioning-configuration --type merge -p '{"spec":{"disableVirtualMediaTLS": true}}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Continue the steps to deploy your single-node OpenShift cluster.

22.3.8. Removing a managed cluster site from the ZTP pipeline

You can remove a managed site and the associated installation and configuration policy CRs from the ZTP pipeline.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

Remove a site and the associated CRs by removing the associated

SiteConfigandPolicyGenTemplatefiles from thekustomization.yamlfile.When you run the ZTP pipeline again, the generated CRs are removed.

-

Optional: If you want to permanently remove a site, you should also remove the

SiteConfigand site-specificPolicyGenTemplatefiles from the Git repository. -

Optional: If you want to remove a site temporarily, for example when redeploying a site, you can leave the

SiteConfigand site-specificPolicyGenTemplateCRs in the Git repository.

After removing the SiteConfig file from the Git repository, if the corresponding clusters get stuck in the detach process, check Red Hat Advanced Cluster Management (RHACM) on the hub cluster for information about cleaning up the detached cluster.

22.3.9. Removing obsolete content from the ZTP pipeline

If a change to the PolicyGenTemplate configuration results in obsolete policies, for example, if you rename policies, use the following procedure to remove the obsolete policies.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

-

Remove the affected

PolicyGenTemplatefiles from the Git repository, commit and push to the remote repository. - Wait for the changes to synchronize through the application and the affected policies to be removed from the hub cluster.

Add the updated

PolicyGenTemplatefiles back to the Git repository, and then commit and push to the remote repository.NoteRemoving zero touch provisioning (ZTP) policies from the Git repository, and as a result also removing them from the hub cluster, does not affect the configuration of the managed cluster. The policy and CRs managed by that policy remains in place on the managed cluster.

Optional: As an alternative, after making changes to

PolicyGenTemplateCRs that result in obsolete policies, you can remove these policies from the hub cluster manually. You can delete policies from the RHACM console using the Governance tab or by running the following command:oc delete policy -n <namespace> <policy_name>

$ oc delete policy -n <namespace> <policy_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

22.3.10. Tearing down the ZTP pipeline

You can remove the ArgoCD pipeline and all generated ZTP artifacts.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

- Detach all clusters from Red Hat Advanced Cluster Management (RHACM) on the hub cluster.

Delete the

kustomization.yamlfile in thedeploymentdirectory using the following command:oc delete -k out/argocd/deployment

$ oc delete -k out/argocd/deploymentCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Commit and push your changes to the site repository.

22.4. Configuring managed clusters with policies and PolicyGenTemplate resources

Applied policy custom resources (CRs) configure the managed clusters that you provision. You can customize how Red Hat Advanced Cluster Management (RHACM) uses PolicyGenTemplate CRs to generate the applied policy CRs.

22.4.1. About the PolicyGenTemplate CRD

The PolicyGenTemplate custom resource definition (CRD) tells the PolicyGen policy generator what custom resources (CRs) to include in the cluster configuration, how to combine the CRs into the generated policies, and what items in those CRs need to be updated with overlay content.

The following example shows a PolicyGenTemplate CR (common-du-ranGen.yaml) extracted from the ztp-site-generate reference container. The common-du-ranGen.yaml file defines two Red Hat Advanced Cluster Management (RHACM) policies. The polices manage a collection of configuration CRs, one for each unique value of policyName in the CR. common-du-ranGen.yaml creates a single placement binding and a placement rule to bind the policies to clusters based on the labels listed in the bindingRules section.

Example PolicyGenTemplate CR - common-du-ranGen.yaml

- 1

common: "true"applies the policies to all clusters with this label.- 2

- Files listed under

sourceFilescreate the Operator policies for installed clusters. - 3

OperatorHub.yamlconfigures the OperatorHub for the disconnected registry.- 4

DefaultCatsrc.yamlconfigures the catalog source for the disconnected registry.- 5

policyName: "config-policy"configures Operator subscriptions. TheOperatorHubCR disables the default and this CR replacesredhat-operatorswith aCatalogSourceCR that points to the disconnected registry.

A PolicyGenTemplate CR can be constructed with any number of included CRs. Apply the following example CR in the hub cluster to generate a policy containing a single CR:

Using the source file PtpConfigSlave.yaml as an example, the file defines a PtpConfig CR. The generated policy for the PtpConfigSlave example is named group-du-sno-config-policy. The PtpConfig CR defined in the generated group-du-sno-config-policy is named du-ptp-slave. The spec defined in PtpConfigSlave.yaml is placed under du-ptp-slave along with the other spec items defined under the source file.

The following example shows the group-du-sno-config-policy CR:

22.4.2. Recommendations when customizing PolicyGenTemplate CRs

Consider the following best practices when customizing site configuration PolicyGenTemplate custom resources (CRs):

-

Use as few policies as are necessary. Using fewer policies requires less resources. Each additional policy creates overhead for the hub cluster and the deployed managed cluster. CRs are combined into policies based on the

policyNamefield in thePolicyGenTemplateCR. CRs in the samePolicyGenTemplatewhich have the same value forpolicyNameare managed under a single policy. -

In disconnected environments, use a single catalog source for all Operators by configuring the registry as a single index containing all Operators. Each additional

CatalogSourceCR on the managed clusters increases CPU usage. -

MachineConfigCRs should be included asextraManifestsin theSiteConfigCR so that they are applied during installation. This can reduce the overall time taken until the cluster is ready to deploy applications. -

PolicyGenTemplatesshould override the channel field to explicitly identify the desired version. This ensures that changes in the source CR during upgrades does not update the generated subscription.

When managing large numbers of spoke clusters on the hub cluster, minimize the number of policies to reduce resource consumption.

Grouping multiple configuration CRs into a single or limited number of policies is one way to reduce the overall number of policies on the hub cluster. When using the common, group, and site hierarchy of policies for managing site configuration, it is especially important to combine site-specific configuration into a single policy.

22.4.3. PolicyGenTemplate CRs for RAN deployments

Use PolicyGenTemplate (PGT) custom resources (CRs) to customize the configuration applied to the cluster by using the GitOps zero touch provisioning (ZTP) pipeline. The PGT CR allows you to generate one or more policies to manage the set of configuration CRs on your fleet of clusters. The PGT identifies the set of managed CRs, bundles them into policies, builds the policy wrapping around those CRs, and associates the policies with clusters by using label binding rules.

The reference configuration, obtained from the GitOps ZTP container, is designed to provide a set of critical features and node tuning settings that ensure the cluster can support the stringent performance and resource utilization constraints typical of RAN (Radio Access Network) Distributed Unit (DU) applications. Changes or omissions from the baseline configuration can affect feature availability, performance, and resource utilization. Use the reference PolicyGenTemplate CRs as the basis to create a hierarchy of configuration files tailored to your specific site requirements.

The baseline PolicyGenTemplate CRs that are defined for RAN DU cluster configuration can be extracted from the GitOps ZTP ztp-site-generate container. See "Preparing the GitOps ZTP site configuration repository" for further details.

The PolicyGenTemplate CRs can be found in the ./out/argocd/example/policygentemplates folder. The reference architecture has common, group, and site-specific configuration CRs. Each PolicyGenTemplate CR refers to other CRs that can be found in the ./out/source-crs folder.

The PolicyGenTemplate CRs relevant to RAN cluster configuration are described below. Variants are provided for the group PolicyGenTemplate CRs to account for differences in single-node, three-node compact, and standard cluster configurations. Similarly, site-specific configuration variants are provided for single-node clusters and multi-node (compact or standard) clusters. Use the group and site-specific configuration variants that are relevant for your deployment.

| PolicyGenTemplate CR | Description |

|---|---|

|

| Contains a set of CRs that get applied to multi-node clusters. These CRs configure SR-IOV features typical for RAN installations. |

|

| Contains a set of CRs that get applied to single-node OpenShift clusters. These CRs configure SR-IOV features typical for RAN installations. |

|

| Contains a set of common RAN CRs that get applied to all clusters. These CRs subscribe to a set of operators providing cluster features typical for RAN as well as baseline cluster tuning. |

|

| Contains the RAN policies for three-node clusters only. |

|

| Contains the RAN policies for single-node clusters only. |

|

| Contains the RAN policies for standard three control-plane clusters. |

|

|

|

|

|

|

|

|

|

22.4.4. Customizing a managed cluster with PolicyGenTemplate CRs

Use the following procedure to customize the policies that get applied to the managed cluster that you provision using the zero touch provisioning (ZTP) pipeline.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges. - You configured the hub cluster for generating the required installation and policy CRs.

- You created a Git repository where you manage your custom site configuration data. The repository must be accessible from the hub cluster and be defined as a source repository for the Argo CD application.

Procedure

Create a

PolicyGenTemplateCR for site-specific configuration CRs.-

Choose the appropriate example for your CR from the

out/argocd/example/policygentemplatesfolder, for example,example-sno-site.yamlorexample-multinode-site.yaml. Change the

bindingRulesfield in the example file to match the site-specific label included in theSiteConfigCR. In the exampleSiteConfigfile, the site-specific label issites: example-sno.NoteEnsure that the labels defined in your

PolicyGenTemplatebindingRulesfield correspond to the labels that are defined in the related managed clustersSiteConfigCR.- Change the content in the example file to match the desired configuration.

-

Choose the appropriate example for your CR from the

Optional: Create a

PolicyGenTemplateCR for any common configuration CRs that apply to the entire fleet of clusters.-

Select the appropriate example for your CR from the

out/argocd/example/policygentemplatesfolder, for example,common-ranGen.yaml. - Change the content in the example file to match the desired configuration.

-

Select the appropriate example for your CR from the

Optional: Create a

PolicyGenTemplateCR for any group configuration CRs that apply to the certain groups of clusters in the fleet.Ensure that the content of the overlaid spec files matches your desired end state. As a reference, the out/source-crs directory contains the full list of source-crs available to be included and overlaid by your PolicyGenTemplate templates.

NoteDepending on the specific requirements of your clusters, you might need more than a single group policy per cluster type, especially considering that the example group policies each have a single PerformancePolicy.yaml file that can only be shared across a set of clusters if those clusters consist of identical hardware configurations.

-

Select the appropriate example for your CR from the

out/argocd/example/policygentemplatesfolder, for example,group-du-sno-ranGen.yaml. - Change the content in the example file to match the desired configuration.

-

Select the appropriate example for your CR from the

-

Optional. Create a validator inform policy

PolicyGenTemplateCR to signal when the ZTP installation and configuration of the deployed cluster is complete. For more information, see "Creating a validator inform policy". Define all the policy namespaces in a YAML file similar to the example

out/argocd/example/policygentemplates/ns.yamlfile.ImportantDo not include the

NamespaceCR in the same file with thePolicyGenTemplateCR.-

Add the

PolicyGenTemplateCRs andNamespaceCR to thekustomization.yamlfile in the generators section, similar to the example shown inout/argocd/example/policygentemplates/kustomization.yaml. Commit the

PolicyGenTemplateCRs,NamespaceCR, and associatedkustomization.yamlfile in your Git repository and push the changes.The ArgoCD pipeline detects the changes and begins the managed cluster deployment. You can push the changes to the

SiteConfigCR and thePolicyGenTemplateCR simultaneously.

22.4.5. Monitoring managed cluster policy deployment progress

The ArgoCD pipeline uses PolicyGenTemplate CRs in Git to generate the RHACM policies and then sync them to the hub cluster. You can monitor the progress of the managed cluster policy synchronization after the assisted service installs OpenShift Container Platform on the managed cluster.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

The Topology Aware Lifecycle Manager (TALM) applies the configuration policies that are bound to the cluster.

After the cluster installation is complete and the cluster becomes

Ready, aClusterGroupUpgradeCR corresponding to this cluster, with a list of ordered policies defined by theran.openshift.io/ztp-deploy-wave annotations, is automatically created by the TALM. The cluster’s policies are applied in the order listed inClusterGroupUpgradeCR.You can monitor the high-level progress of configuration policy reconciliation by using the following commands:

export CLUSTER=<clusterName>

$ export CLUSTER=<clusterName>Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc get clustergroupupgrades -n ztp-install $CLUSTER -o jsonpath='{.status.conditions[-1:]}' | jq$ oc get clustergroupupgrades -n ztp-install $CLUSTER -o jsonpath='{.status.conditions[-1:]}' | jqCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow You can monitor the detailed cluster policy compliance status by using the RHACM dashboard or the command line.

To check policy compliance by using

oc, run the following command:oc get policies -n $CLUSTER

$ oc get policies -n $CLUSTERCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow To check policy status from the RHACM web console, perform the following actions:

-

Click Governance

Find policies. - Click on a cluster policy to check it’s status.

-

Click Governance

When all of the cluster policies become compliant, ZTP installation and configuration for the cluster is complete. The ztp-done label is added to the cluster.

In the reference configuration, the final policy that becomes compliant is the one defined in the *-du-validator-policy policy. This policy, when compliant on a cluster, ensures that all cluster configuration, Operator installation, and Operator configuration is complete.

22.4.6. Validating the generation of configuration policy CRs

Policy custom resources (CRs) are generated in the same namespace as the PolicyGenTemplate from which they are created. The same troubleshooting flow applies to all policy CRs generated from a PolicyGenTemplate regardless of whether they are ztp-common, ztp-group, or ztp-site based, as shown using the following commands:

export NS=<namespace>

$ export NS=<namespace>oc get policy -n $NS

$ oc get policy -n $NSThe expected set of policy-wrapped CRs should be displayed.

If the policies failed synchronization, use the following troubleshooting steps.

Procedure

To display detailed information about the policies, run the following command:

oc describe -n openshift-gitops application policies

$ oc describe -n openshift-gitops application policiesCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check for

Status: Conditions:to show the error logs. For example, setting an invalidsourceFile→fileName:generates the error shown below:Status: Conditions: Last Transition Time: 2021-11-26T17:21:39Z Message: rpc error: code = Unknown desc = `kustomize build /tmp/https___git.com/ran-sites/policies/ --enable-alpha-plugins` failed exit status 1: 2021/11/26 17:21:40 Error could not find test.yaml under source-crs/: no such file or directory Error: failure in plugin configured via /tmp/kust-plugin-config-52463179; exit status 1: exit status 1 Type: ComparisonErrorStatus: Conditions: Last Transition Time: 2021-11-26T17:21:39Z Message: rpc error: code = Unknown desc = `kustomize build /tmp/https___git.com/ran-sites/policies/ --enable-alpha-plugins` failed exit status 1: 2021/11/26 17:21:40 Error could not find test.yaml under source-crs/: no such file or directory Error: failure in plugin configured via /tmp/kust-plugin-config-52463179; exit status 1: exit status 1 Type: ComparisonErrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check for

Status: Sync:. If there are log errors atStatus: Conditions:, theStatus: Sync:showsUnknownorError:Copy to Clipboard Copied! Toggle word wrap Toggle overflow When Red Hat Advanced Cluster Management (RHACM) recognizes that policies apply to a

ManagedClusterobject, the policy CR objects are applied to the cluster namespace. Check to see if the policies were copied to the cluster namespace:oc get policy -n $CLUSTER

$ oc get policy -n $CLUSTERCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow RHACM copies all applicable policies into the cluster namespace. The copied policy names have the format:

<policyGenTemplate.Namespace>.<policyGenTemplate.Name>-<policyName>.Check the placement rule for any policies not copied to the cluster namespace. The

matchSelectorin thePlacementRulefor those policies should match labels on theManagedClusterobject:oc get placementrule -n $NS

$ oc get placementrule -n $NSCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note the

PlacementRulename appropriate for the missing policy, common, group, or site, using the following command:oc get placementrule -n $NS <placementRuleName> -o yaml

$ oc get placementrule -n $NS <placementRuleName> -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow - The status-decisions should include your cluster name.

-

The key-value pair of the

matchSelectorin the spec must match the labels on your managed cluster.

Check the labels on the

ManagedClusterobject using the following command:oc get ManagedCluster $CLUSTER -o jsonpath='{.metadata.labels}' | jq$ oc get ManagedCluster $CLUSTER -o jsonpath='{.metadata.labels}' | jqCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check to see which policies are compliant using the following command:

oc get policy -n $CLUSTER

$ oc get policy -n $CLUSTERCopy to Clipboard Copied! Toggle word wrap Toggle overflow If the

Namespace,OperatorGroup, andSubscriptionpolicies are compliant but the Operator configuration policies are not, it is likely that the Operators did not install on the managed cluster. This causes the Operator configuration policies to fail to apply because the CRD is not yet applied to the spoke.

22.4.7. Restarting policy reconciliation

You can restart policy reconciliation when unexpected compliance issues occur, for example, when the ClusterGroupUpgrade custom resource (CR) has timed out.

Procedure

A

ClusterGroupUpgradeCR is generated in the namespaceztp-installby the Topology Aware Lifecycle Manager after the managed cluster becomesReady:export CLUSTER=<clusterName>

$ export CLUSTER=<clusterName>Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc get clustergroupupgrades -n ztp-install $CLUSTER

$ oc get clustergroupupgrades -n ztp-install $CLUSTERCopy to Clipboard Copied! Toggle word wrap Toggle overflow If there are unexpected issues and the policies fail to become complaint within the configured timeout (the default is 4 hours), the status of the

ClusterGroupUpgradeCR showsUpgradeTimedOut:oc get clustergroupupgrades -n ztp-install $CLUSTER -o jsonpath='{.status.conditions[?(@.type=="Ready")]}'$ oc get clustergroupupgrades -n ztp-install $CLUSTER -o jsonpath='{.status.conditions[?(@.type=="Ready")]}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow A

ClusterGroupUpgradeCR in theUpgradeTimedOutstate automatically restarts its policy reconciliation every hour. If you have changed your policies, you can start a retry immediately by deleting the existingClusterGroupUpgradeCR. This triggers the automatic creation of a newClusterGroupUpgradeCR that begins reconciling the policies immediately:oc delete clustergroupupgrades -n ztp-install $CLUSTER

$ oc delete clustergroupupgrades -n ztp-install $CLUSTERCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Note that when the ClusterGroupUpgrade CR completes with status UpgradeCompleted and the managed cluster has the label ztp-done applied, you can make additional configuration changes using PolicyGenTemplate. Deleting the existing ClusterGroupUpgrade CR will not make the TALM generate a new CR.

At this point, ZTP has completed its interaction with the cluster and any further interactions should be treated as an update and a new ClusterGroupUpgrade CR created for remediation of the policies.

22.4.8. Changing applied managed cluster CRs using policies

You can remove content from a custom resource (CR) that is deployed in a managed cluster through a policy.

By default, all Policy CRs created from a PolicyGenTemplate CR have the complianceType field set to musthave. A musthave policy without the removed content is still compliant because the CR on the managed cluster has all the specified content. With this configuration, when you remove content from a CR, TALM removes the content from the policy but the content is not removed from the CR on the managed cluster.

With the complianceType field to mustonlyhave, the policy ensures that the CR on the cluster is an exact match of what is specified in the policy.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges. - You have deployed a managed cluster from a hub cluster running RHACM.

- You have installed Topology Aware Lifecycle Manager on the hub cluster.

Procedure

Remove the content that you no longer need from the affected CRs. In this example, the

disableDrain: falseline was removed from theSriovOperatorConfigCR.Example CR

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Change the

complianceTypeof the affected policies tomustonlyhavein thegroup-du-sno-ranGen.yamlfile.Example YAML

# ... - fileName: SriovOperatorConfig.yaml policyName: "config-policy" complianceType: mustonlyhave # ...

# ... - fileName: SriovOperatorConfig.yaml policyName: "config-policy" complianceType: mustonlyhave # ...Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a

ClusterGroupUpdatesCR and specify the clusters that must receive the CR changes::Example ClusterGroupUpdates CR

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the

ClusterGroupUpgradeCR by running the following command:oc create -f cgu-remove.yaml

$ oc create -f cgu-remove.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow When you are ready to apply the changes, for example, during an appropriate maintenance window, change the value of the

spec.enablefield totrueby running the following command:oc --namespace=default patch clustergroupupgrade.ran.openshift.io/cgu-remove \ --patch '{"spec":{"enable":true}}' --type=merge$ oc --namespace=default patch clustergroupupgrade.ran.openshift.io/cgu-remove \ --patch '{"spec":{"enable":true}}' --type=mergeCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

Check the status of the policies by running the following command:

oc get <kind> <changed_cr_name>

$ oc get <kind> <changed_cr_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAMESPACE NAME REMEDIATION ACTION COMPLIANCE STATE AGE default cgu-ztp-group.group-du-sno-config-policy enforce 17m default ztp-group.group-du-sno-config-policy inform NonCompliant 15h

NAMESPACE NAME REMEDIATION ACTION COMPLIANCE STATE AGE default cgu-ztp-group.group-du-sno-config-policy enforce 17m default ztp-group.group-du-sno-config-policy inform NonCompliant 15hCopy to Clipboard Copied! Toggle word wrap Toggle overflow When the

COMPLIANCE STATEof the policy isCompliant, it means that the CR is updated and the unwanted content is removed.Check that the policies are removed from the targeted clusters by running the following command on the managed clusters:

oc get <kind> <changed_cr_name>

$ oc get <kind> <changed_cr_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow If there are no results, the CR is removed from the managed cluster.

22.4.9. Indication of done for ZTP installations

Zero touch provisioning (ZTP) simplifies the process of checking the ZTP installation status for a cluster. The ZTP status moves through three phases: cluster installation, cluster configuration, and ZTP done.

- Cluster installation phase

-

The cluster installation phase is shown by the

ManagedClusterJoinedandManagedClusterAvailableconditions in theManagedClusterCR . If theManagedClusterCR does not have these conditions, or the condition is set toFalse, the cluster is still in the installation phase. Additional details about installation are available from theAgentClusterInstallandClusterDeploymentCRs. For more information, see "Troubleshooting GitOps ZTP". - Cluster configuration phase

-

The cluster configuration phase is shown by a

ztp-runninglabel applied theManagedClusterCR for the cluster. - ZTP done

Cluster installation and configuration is complete in the ZTP done phase. This is shown by the removal of the

ztp-runninglabel and addition of theztp-donelabel to theManagedClusterCR. Theztp-donelabel shows that the configuration has been applied and the baseline DU configuration has completed cluster tuning.The transition to the ZTP done state is conditional on the compliant state of a Red Hat Advanced Cluster Management (RHACM) validator inform policy. This policy captures the existing criteria for a completed installation and validates that it moves to a compliant state only when ZTP provisioning of the managed cluster is complete.

The validator inform policy ensures the configuration of the cluster is fully applied and Operators have completed their initialization. The policy validates the following:

-

The target

MachineConfigPoolcontains the expected entries and has finished updating. All nodes are available and not degraded. -

The SR-IOV Operator has completed initialization as indicated by at least one

SriovNetworkNodeStatewithsyncStatus: Succeeded. - The PTP Operator daemon set exists.

-

The target

22.5. Manually installing a single-node OpenShift cluster with ZTP

You can deploy a managed single-node OpenShift cluster by using Red Hat Advanced Cluster Management (RHACM) and the assisted service.

If you are creating multiple managed clusters, use the SiteConfig method described in Deploying far edge sites with ZTP.

The target bare-metal host must meet the networking, firmware, and hardware requirements listed in Recommended cluster configuration for vDU application workloads.

22.5.1. Generating ZTP installation and configuration CRs manually

Use the generator entrypoint for the ztp-site-generate container to generate the site installation and configuration custom resource (CRs) for a cluster based on SiteConfig and PolicyGenTemplate CRs.

Prerequisites

-

You have installed the OpenShift CLI (

oc). -

You have logged in to the hub cluster as a user with

cluster-adminprivileges.

Procedure

Create an output folder by running the following command:

mkdir -p ./out

$ mkdir -p ./outCopy to Clipboard Copied! Toggle word wrap Toggle overflow Export the

argocddirectory from theztp-site-generatecontainer image:podman run --log-driver=none --rm registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11 extract /home/ztp --tar | tar x -C ./out

$ podman run --log-driver=none --rm registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11 extract /home/ztp --tar | tar x -C ./outCopy to Clipboard Copied! Toggle word wrap Toggle overflow The

./outdirectory has the referencePolicyGenTemplateandSiteConfigCRs in theout/argocd/example/folder.Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create an output folder for the site installation CRs:

mkdir -p ./site-install

$ mkdir -p ./site-installCopy to Clipboard Copied! Toggle word wrap Toggle overflow Modify the example

SiteConfigCR for the cluster type that you want to install. Copyexample-sno.yamltosite-1-sno.yamland modify the CR to match the details of the site and bare-metal host that you want to install, for example:Example single-node OpenShift cluster SiteConfig CR

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- Create the

assisted-deployment-pull-secretCR with the same namespace as theSiteConfigCR. - 2

clusterImageSetNameRefdefines an image set available on the hub cluster. To see the list of supported versions on your hub cluster, runoc get clusterimagesets.- 3

- Configure the SSH public key used to access the cluster.

- 4

- Cluster labels must correspond to the

bindingRulesfield in thePolicyGenTemplateCRs that you define. For example,policygentemplates/common-ranGen.yamlapplies to all clusters withcommon: trueset,policygentemplates/group-du-sno-ranGen.yamlapplies to all clusters withgroup-du-sno: ""set. - 5

- Optional. The CR specifed under

KlusterletAddonConfigis used to override the defaultKlusterletAddonConfigthat is created for the cluster. - 6

- For single-node deployments, define a single host. For three-node deployments, define three hosts. For standard deployments, define three hosts with

role: masterand two or more hosts defined withrole: worker. - 7

- BMC address that you use to access the host. Applies to all cluster types.

- 8

- Name of the

bmh-secretCR that you separately create with the host BMC credentials. When creating thebmh-secretCR, use the same namespace as theSiteConfigCR that provisions the host. - 9

- Configures the boot mode for the host. The default value is

UEFI. UseUEFISecureBootto enable secure boot on the host. - 10

cpusetmust match the value set in the clusterPerformanceProfileCRspec.cpu.reservedfield for workload partitioning.- 11

- Specifies the network settings for the node.

- 12

- Configures the IPv6 address for the host. For single-node OpenShift clusters with static IP addresses, the node-specific API and Ingress IPs should be the same.

Generate the day-0 installation CRs by processing the modified

SiteConfigCRsite-1-sno.yamlby running the following command:podman run -it --rm -v `pwd`/out/argocd/example/siteconfig:/resources:Z -v `pwd`/site-install:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator install site-1-sno.yaml /output

$ podman run -it --rm -v `pwd`/out/argocd/example/siteconfig:/resources:Z -v `pwd`/site-install:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator install site-1-sno.yaml /outputCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Optional: Generate just the day-0

MachineConfiginstallation CRs for a particular cluster type by processing the referenceSiteConfigCR with the-Eoption. For example, run the following commands:Create an output folder for the

MachineConfigCRs:mkdir -p ./site-machineconfig

$ mkdir -p ./site-machineconfigCopy to Clipboard Copied! Toggle word wrap Toggle overflow Generate the

MachineConfiginstallation CRs:podman run -it --rm -v `pwd`/out/argocd/example/siteconfig:/resources:Z -v `pwd`/site-machineconfig:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator install -E site-1-sno.yaml /output

$ podman run -it --rm -v `pwd`/out/argocd/example/siteconfig:/resources:Z -v `pwd`/site-machineconfig:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator install -E site-1-sno.yaml /outputCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

site-machineconfig └── site-1-sno ├── site-1-sno_machineconfig_02-master-workload-partitioning.yaml ├── site-1-sno_machineconfig_predefined-extra-manifests-master.yaml └── site-1-sno_machineconfig_predefined-extra-manifests-worker.yamlsite-machineconfig └── site-1-sno ├── site-1-sno_machineconfig_02-master-workload-partitioning.yaml ├── site-1-sno_machineconfig_predefined-extra-manifests-master.yaml └── site-1-sno_machineconfig_predefined-extra-manifests-worker.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Generate and export the day-2 configuration CRs using the reference

PolicyGenTemplateCRs from the previous step. Run the following commands:Create an output folder for the day-2 CRs:

mkdir -p ./ref

$ mkdir -p ./refCopy to Clipboard Copied! Toggle word wrap Toggle overflow Generate and export the day-2 configuration CRs:

podman run -it --rm -v `pwd`/out/argocd/example/policygentemplates:/resources:Z -v `pwd`/ref:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator config -N . /output

$ podman run -it --rm -v `pwd`/out/argocd/example/policygentemplates:/resources:Z -v `pwd`/ref:/output:Z,U registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.11.1 generator config -N . /outputCopy to Clipboard Copied! Toggle word wrap Toggle overflow The command generates example group and site-specific

PolicyGenTemplateCRs for single-node OpenShift, three-node clusters, and standard clusters in the./reffolder.Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

- Use the generated CRs as the basis for the CRs that you use to install the cluster. You apply the installation CRs to the hub cluster as described in "Installing a single managed cluster". The configuration CRs can be applied to the cluster after cluster installation is complete.

22.5.2. Creating the managed bare-metal host secrets

Add the required Secret custom resources (CRs) for the managed bare-metal host to the hub cluster. You need a secret for the ZTP pipeline to access the Baseboard Management Controller (BMC) and a secret for the assisted installer service to pull cluster installation images from the registry.

The secrets are referenced from the SiteConfig CR by name. The namespace must match the SiteConfig namespace.

Procedure