This documentation is for a release that is no longer maintained.

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 5. Using Container Storage Interface (CSI)

5.1. Configuring CSI volumes

The Container Storage Interface (CSI) allows OpenShift Container Platform to consume storage from storage back ends that implement the CSI interface as persistent storage.

OpenShift Container Platform 4.11 supports version 1.5.0 of the CSI specification.

5.1.1. CSI Architecture

CSI drivers are typically shipped as container images. These containers are not aware of the OpenShift Container Platform cluster in which they run. To use a CSI-compatible storage back end in OpenShift Container Platform, the cluster administrator must deploy several components that serve as a bridge between OpenShift Container Platform and the storage driver.

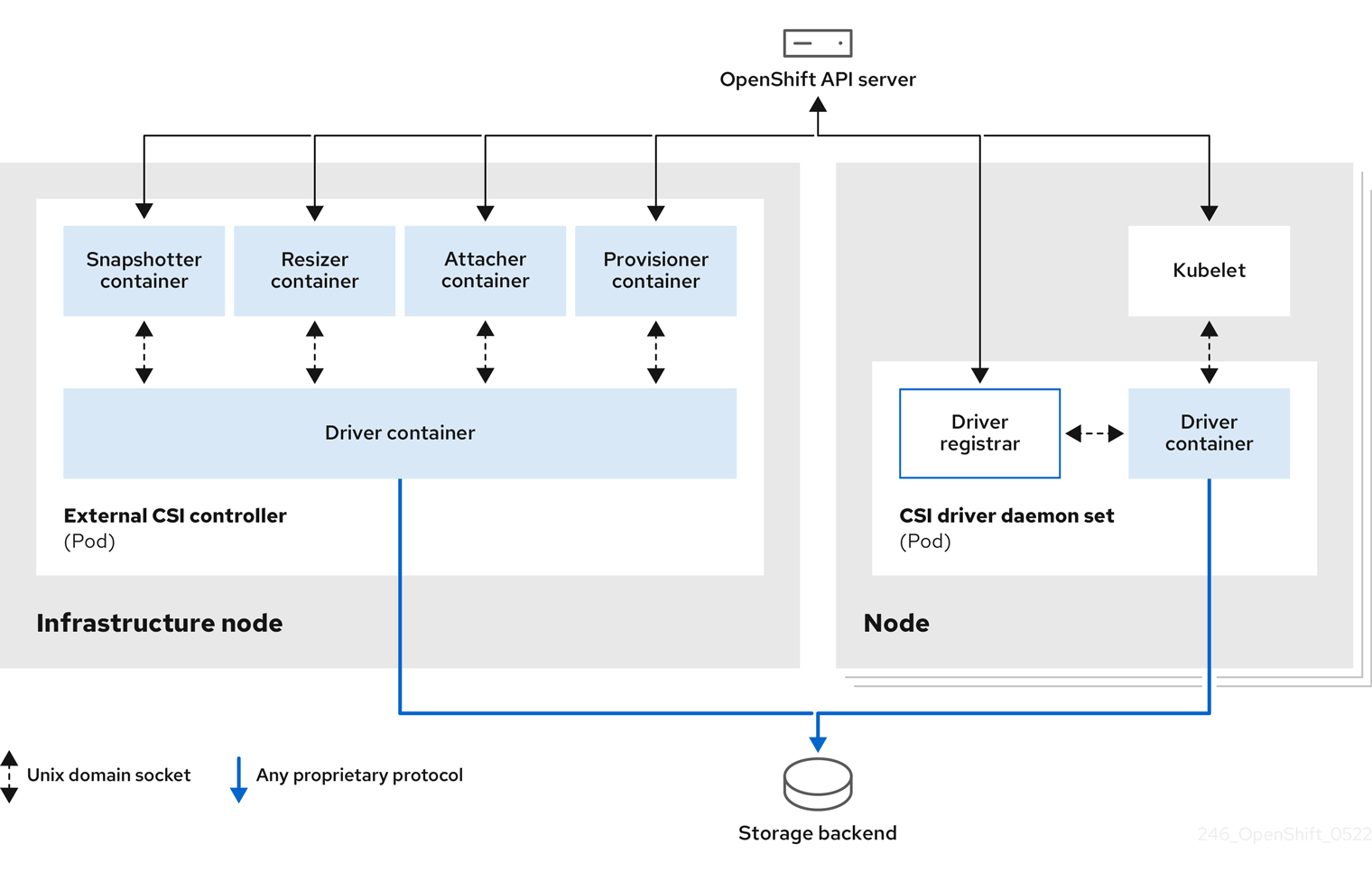

The following diagram provides a high-level overview about the components running in pods in the OpenShift Container Platform cluster.

It is possible to run multiple CSI drivers for different storage back ends. Each driver needs its own external controllers deployment and daemon set with the driver and CSI registrar.

5.1.1.1. External CSI controllers

The external CSI controllers component is a deployment that deploys one or more pods with five containers:

- The snapshotter container watches VolumeSnapshot and VolumeSnapshotContent objects and is responsible for the creation and deletion of VolumeSnapshotContent objects.

- The resizer container is a sidecar container that watches for PersistentVolumeClaim updates and triggers ControllerExpandVolume operations against a CSI endpoint if you request more storage on a PersistentVolumeClaim object.

- An external CSI attacher container translates attach and detach calls from OpenShift Container Platform to respective ControllerPublish and ControllerUnpublish calls to the CSI driver.

- An external CSI provisioner container translates provision and delete calls from OpenShift Container Platform to respective CreateVolume and DeleteVolume calls to the CSI driver.

- A CSI driver container.

The CSI attacher and CSI provisioner containers communicate with the CSI driver container using UNIX Domain Sockets, ensuring that no CSI communication leaves the pod. The CSI driver is not accessible from outside of the pod.

Attach, detach, provision, and delete operations typically require the CSI driver to use credentials to the storage back end. Run the CSI controller pods on infrastructure nodes so that the credentials are never leaked to user processes, even in the event of a catastrophic security breach on a compute node.

The external attacher must also run for CSI drivers that do not support third-party attach or detach operations. The external attacher will not issue any ControllerPublish or ControllerUnpublish operations to the CSI driver. However, it still must run to implement the necessary OpenShift Container Platform attachment API.

5.1.1.2. CSI driver daemon set

The CSI driver daemon set runs a pod on every node that allows OpenShift Container Platform to mount storage provided by the CSI driver to the node and use it in user workloads (pods) as persistent volumes (PVs). The pod with the CSI driver installed contains the following containers:

- A CSI driver registrar, which registers the CSI driver into the openshift-node service running on the node. The openshift-node process running on the node then directly connects with the CSI driver using the UNIX Domain Socket available on the node.

- A CSI driver.

The CSI driver deployed on the node should have as few credentials to the storage back end as possible. OpenShift Container Platform will only use the node plugin set of CSI calls such as NodePublish/NodeUnpublish and NodeStage/NodeUnstage, if these calls are implemented.

5.1.2. CSI drivers supported by OpenShift Container Platform

OpenShift Container Platform installs certain CSI drivers by default, giving users storage options that are not possible with in-tree volume plugins.

To create CSI-provisioned persistent volumes that mount to these supported storage assets, OpenShift Container Platform installs the necessary CSI driver Operator, the CSI driver, and the required storage class by default. For more details about the default namespace of the Operator and driver, see the documentation for the specific CSI Driver Operator.

The following table describes the CSI drivers that are installed with OpenShift Container Platform and which CSI features they support, such as volume snapshots, cloning, and resize.

| CSI driver | CSI volume snapshots | CSI cloning | CSI resize |
|---|---|---|---|
| AliCloud Disk | ✅ | - | ✅ |
| AWS EBS | ✅ | - | ✅ |
| AWS EFS | - | - | - |
| Google Cloud Platform (GCP) persistent disk (PD) | ✅ | ✅ | ✅ |
| IBM VPC Block | - | - | ✅ |
| Microsoft Azure Disk | ✅ | ✅ | ✅ |
| Microsoft Azure Stack Hub | ✅ | ✅ | ✅ |
| Microsoft Azure File | - | - | ✅ |
| OpenStack Cinder | ✅ | ✅ | ✅ |
| OpenShift Data Foundation | ✅ | ✅ | ✅ |
| OpenStack Manila | ✅ | - | - |
| Red Hat Virtualization (oVirt) | - | - | ✅ |
| VMware vSphere | ✅[1] | - | ✅[2] |

[1]

- Requires vSphere version 7.0 Update 3 or later for both vCenter Server and ESXi.

- Does not support fileshare volumes.

[2]

- Offline volume expansion: minimum required vSphere version is 6.7 Update 3 P06.

- Online volume expansion: minimum required vSphere version is 7.0 Update 2.

If your CSI driver is not listed in the preceding table, you must follow the installation instructions provided by your CSI storage vendor to use their supported CSI features.

5.1.3. Dynamic provisioning

Dynamic provisioning of persistent storage depends on the capabilities of the CSI driver and underlying storage back end. The provider of the CSI driver should document how to create a storage class in OpenShift Container Platform and the parameters available for configuration.

The created storage class can be configured to enable dynamic provisioning.

Procedure

Create a default storage class that ensures all PVCs that do not require any special storage class are provisioned by the installed CSI driver.
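A minimal sketch of such a default storage class follows, assuming a hypothetical class name and a hypothetical provisioner name (csi.example.com). Use the driver name and any parameters documented by your CSI driver provider. The storageclass.kubernetes.io/is-default-class annotation is the standard Kubernetes annotation that marks a storage class as the cluster default.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: my-csi-default              # hypothetical class name
  annotations:
    # Marks this class as the default for PVCs that omit storageClassName
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: csi.example.com        # hypothetical; use the name of your installed CSI driver
reclaimPolicy: Delete
```

You can save the definition to a file and create it with oc create -f <file_name>.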


5.1.4. Example using the CSI driver

The following example installs a default MySQL template without any changes to the template.

Prerequisites

- The CSI driver has been deployed.

- A storage class has been created for dynamic provisioning.

Procedure

Deploy the MySQL template:

# oc new-app mysql-persistent

Example output

--> Deploying template "openshift/mysql-persistent" to project default ...

Verify that a persistent volume claim (PVC) was created and bound:

# oc get pvc

Example output

NAME    STATUS   VOLUME                                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mysql   Bound    kubernetes-dynamic-pv-3271ffcb4e1811e8   1Gi        RWO            cinder         3s

5.2. CSI inline ephemeral volumes

Container Storage Interface (CSI) inline ephemeral volumes allow you to define a Pod spec that creates inline ephemeral volumes when a pod is deployed and delete them when a pod is destroyed.

This feature is only available with supported Container Storage Interface (CSI) drivers.

CSI inline ephemeral volumes is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

5.2.1. Overview of CSI inline ephemeral volumes

Traditionally, volumes that are backed by Container Storage Interface (CSI) drivers can only be used with a PersistentVolume and PersistentVolumeClaim object combination.

This feature allows you to specify CSI volumes directly in the Pod specification, rather than in a PersistentVolume object. Inline volumes are ephemeral and do not persist across pod restarts.

5.2.1.1. Support limitations

By default, OpenShift Container Platform supports CSI inline ephemeral volumes with these limitations:

- Support is only available for CSI drivers. In-tree and FlexVolumes are not supported.

- The Shared Resource CSI Driver supports inline ephemeral volumes as a Technology Preview feature.

- Community or storage vendors provide other CSI drivers that support these volumes. Follow the installation instructions provided by the CSI driver provider.

CSI drivers might not have implemented the inline volume functionality, including Ephemeral capacity. For details, see the CSI driver documentation.

Shared Resource CSI Driver is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

5.2.2. Embedding a CSI inline ephemeral volume in the pod specification

You can embed a CSI inline ephemeral volume in the Pod specification in OpenShift Container Platform. At runtime, nested inline volumes follow the ephemeral lifecycle of their associated pods so that the CSI driver handles all phases of volume operations as pods are created and destroyed.

Procedure

Create the Pod object definition and save it to a file. Embed the CSI inline ephemeral volume in the file.

my-csi-app.yaml
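For illustration, my-csi-app.yaml might look like the following sketch. The driver name inline.storage.kubernetes.io, the volumeAttributes key/value pair, the busybox image, and the mount path are hypothetical placeholders; use the driver name and attributes documented by a CSI driver that supports inline ephemeral volumes.

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: my-csi-app
spec:
  containers:
    - name: my-frontend
      image: busybox                           # placeholder image
      command: ["sleep", "1000000"]
      volumeMounts:
        - mountPath: "/data"                   # hypothetical mount path
          name: my-csi-inline-vol              # must match the volume name below
  volumes:
    - name: my-csi-inline-vol                  # the name of the volume that is used by pods
      csi:                                     # inline CSI volume, created and deleted with the pod
        driver: inline.storage.kubernetes.io   # hypothetical driver name
        volumeAttributes:                      # driver-specific; hypothetical key/value
          foo: bar
```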

[1] The name of the volume that is used by pods.

Create the object from the definition file that you saved in the previous step:

$ oc create -f my-csi-app.yaml

5.4. CSI volume snapshots

This document describes how to use volume snapshots with supported Container Storage Interface (CSI) drivers to help protect against data loss in OpenShift Container Platform. Familiarity with persistent volumes is suggested.

5.4.1. Overview of CSI volume snapshots

A snapshot represents the state of the storage volume in a cluster at a particular point in time. Volume snapshots can be used to provision a new volume.

OpenShift Container Platform supports Container Storage Interface (CSI) volume snapshots by default. However, a CSI driver that supports volume snapshots is required.

With CSI volume snapshots, a cluster administrator can:

- Deploy a third-party CSI driver that supports snapshots.

- Create a new persistent volume claim (PVC) from an existing volume snapshot.

- Take a snapshot of an existing PVC.

- Restore a snapshot as a different PVC.

- Delete an existing volume snapshot.

With CSI volume snapshots, an app developer can:

- Use volume snapshots as building blocks for developing application- or cluster-level storage backup solutions.

- Rapidly roll back to a previous development version.

- Use storage more efficiently by not having to make a full copy each time.

Be aware of the following when using volume snapshots:

- Support is only available for CSI drivers. In-tree and FlexVolumes are not supported.

- OpenShift Container Platform only ships with select CSI drivers. For CSI drivers that are not provided by an OpenShift Container Platform Driver Operator, it is recommended to use the CSI drivers provided by the community or by storage vendors. Follow the installation instructions furnished by the CSI driver provider.

- CSI drivers may or may not have implemented the volume snapshot functionality. CSI drivers that support volume snapshots will likely use the csi-external-snapshotter sidecar. See the documentation provided by the CSI driver for details.

5.4.2. CSI snapshot controller and sidecar

OpenShift Container Platform provides a snapshot controller that is deployed into the control plane. In addition, your CSI driver vendor provides the CSI snapshot sidecar as a helper container that is installed during the CSI driver installation.

The CSI snapshot controller and sidecar provide volume snapshotting through the OpenShift Container Platform API. These external components run in the cluster.

The external controller is deployed by the CSI Snapshot Controller Operator.

5.4.2.1. External controller

The CSI snapshot controller binds VolumeSnapshot and VolumeSnapshotContent objects. The controller manages dynamic provisioning by creating and deleting VolumeSnapshotContent objects.

5.4.2.2. External sidecar

Your CSI driver vendor provides the csi-external-snapshotter sidecar. This is a separate helper container that is deployed with the CSI driver. The sidecar manages snapshots by triggering CreateSnapshot and DeleteSnapshot operations. Follow the installation instructions provided by your vendor.

5.4.3. About the CSI Snapshot Controller Operator

The CSI Snapshot Controller Operator runs in the openshift-cluster-storage-operator namespace. It is installed by the Cluster Version Operator (CVO) in all clusters by default.

The CSI Snapshot Controller Operator installs the CSI snapshot controller, which runs in the openshift-cluster-storage-operator namespace.

5.4.3.1. Volume snapshot CRDs

During OpenShift Container Platform installation, the CSI Snapshot Controller Operator creates the following snapshot custom resource definitions (CRDs) in the snapshot.storage.k8s.io/v1 API group:

VolumeSnapshotContent

A snapshot taken of a volume in the cluster that has been provisioned by a cluster administrator.

Similar to the PersistentVolume object, the VolumeSnapshotContent CRD is a cluster resource that points to a real snapshot in the storage back end.

For manually pre-provisioned snapshots, a cluster administrator creates a number of VolumeSnapshotContent CRDs. These carry the details of the real volume snapshot in the storage system.

The VolumeSnapshotContent CRD is not namespaced and is for use by a cluster administrator.

VolumeSnapshot

Similar to the PersistentVolumeClaim object, the VolumeSnapshot CRD defines a developer request for a snapshot. The CSI Snapshot Controller Operator runs the CSI snapshot controller, which handles the binding of a VolumeSnapshot CRD with an appropriate VolumeSnapshotContent CRD. The binding is a one-to-one mapping.

The VolumeSnapshot CRD is namespaced. A developer uses the CRD as a distinct request for a snapshot.

VolumeSnapshotClass

Allows a cluster administrator to specify different attributes belonging to a VolumeSnapshot object. These attributes may differ among snapshots taken of the same volume on the storage system, in which case they would not be expressed by using the same storage class of a persistent volume claim.

The VolumeSnapshotClass CRD defines the parameters for the csi-external-snapshotter sidecar to use when creating a snapshot. This allows the storage back end to know what kind of snapshot to dynamically create if multiple options are supported.

Dynamically provisioned snapshots use the VolumeSnapshotClass CRD to specify storage-provider-specific parameters to use when creating a snapshot.

The VolumeSnapshotClass CRD is not namespaced and is for use by a cluster administrator to enable global configuration options for their storage back end.

5.4.4. Volume snapshot provisioning

There are two ways to provision snapshots: dynamically and manually.

5.4.4.1. Dynamic provisioning

Instead of using a preexisting snapshot, you can request that a snapshot be taken dynamically from a persistent volume claim. Parameters are specified using a VolumeSnapshotClass CRD.

5.4.4.2. Manual provisioning

As a cluster administrator, you can manually pre-provision a number of VolumeSnapshotContent objects. These carry the real volume snapshot details available to cluster users.
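A pre-provisioned VolumeSnapshotContent object might look like the following sketch. All names, the driver, and the snapshot handle are hypothetical placeholders: snapshotHandle must identify a snapshot that already exists on your storage back end, and volumeSnapshotRef names the VolumeSnapshot object that is expected to bind to this content.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotContent
metadata:
  name: mycontent                      # hypothetical; cluster-scoped, so no namespace
spec:
  deletionPolicy: Retain               # keep the back-end snapshot if this object is deleted
  driver: hostpath.csi.k8s.io          # hypothetical CSI driver name
  source:
    snapshotHandle: <snapshot_handle>  # ID of the existing snapshot on the storage back end
  volumeSnapshotRef:
    name: snapshot-demo                # hypothetical VolumeSnapshot expected to bind to this content
    namespace: default
```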

5.4.5. Creating a volume snapshot

When you create a VolumeSnapshot object, OpenShift Container Platform creates a volume snapshot.

Prerequisites

- Logged in to a running OpenShift Container Platform cluster.

- A persistent volume claim (PVC) created using a CSI driver that supports VolumeSnapshot objects.

- A storage class to provision the storage back end.

- No pods are using the PVC that you want to take a snapshot of.

Note: Do not create a volume snapshot of a PVC if a pod is using it. Doing so might cause data corruption because the PVC is not quiesced (paused). Be sure to first tear down a running pod to ensure consistent snapshots.

Procedure

To dynamically create a volume snapshot:

Create a file named volumesnapshotclass.yaml that defines a VolumeSnapshotClass object.
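A minimal sketch of volumesnapshotclass.yaml follows. The class name and the driver name hostpath.csi.k8s.io are hypothetical placeholders; substitute the name of the CSI driver that provisions your PVC. In the snapshot.storage.k8s.io/v1 API, deletionPolicy is a required field.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: csi-hostpath-snap        # hypothetical class name
driver: hostpath.csi.k8s.io      # hypothetical; must match the provisioner of the PVC's storage class
deletionPolicy: Delete           # required: Delete or Retain
```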

[1] The name of the CSI driver that is used to create snapshots of this VolumeSnapshotClass object. The name must be the same as the provisioner field of the storage class that is responsible for the PVC that is being snapshotted.

Note: Depending on the driver that you used to configure persistent storage, additional parameters might be required. You can also use an existing VolumeSnapshotClass object.

Create the object you saved in the previous step by entering the following command:

$ oc create -f volumesnapshotclass.yaml

Create a file named volumesnapshot-dynamic.yaml that defines a VolumeSnapshot object.
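A minimal sketch of volumesnapshot-dynamic.yaml, assuming a hypothetical PVC named myclaim and the hypothetical class csi-hostpath-snap. The name mysnap matches the snapshot inspected in the verification steps of this procedure.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: mysnap
spec:
  volumeSnapshotClassName: csi-hostpath-snap   # hypothetical; omit to use the default class
  source:
    persistentVolumeClaimName: myclaim         # hypothetical PVC to snapshot
```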

[1] The request for a particular class by the volume snapshot. If the volumeSnapshotClassName setting is absent and there is a default volume snapshot class, a snapshot is created with the default volume snapshot class name. But if the field is absent and no default volume snapshot class exists, then no snapshot is created.

[2] The name of the PersistentVolumeClaim object bound to a persistent volume. This defines what you want to create a snapshot of. Required for dynamically provisioning a snapshot.

Create the object you saved in the previous step by entering the following command:

$ oc create -f volumesnapshot-dynamic.yaml

To manually provision a snapshot:

Provide a value for the volumeSnapshotContentName parameter as the source for the snapshot, in addition to defining a volume snapshot class as shown above. Save the definition in a file named volumesnapshot-manual.yaml.
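A minimal sketch of volumesnapshot-manual.yaml, assuming a hypothetical pre-provisioned VolumeSnapshotContent object named mycontent. Because the content already exists, the source refers to it instead of to a PVC.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: snapshot-demo                      # hypothetical name
spec:
  source:
    volumeSnapshotContentName: mycontent   # hypothetical pre-provisioned VolumeSnapshotContent
```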

[1] The volumeSnapshotContentName parameter is required for pre-provisioned snapshots.

Create the object you saved in the previous step by entering the following command:

$ oc create -f volumesnapshot-manual.yaml

Verification

After the snapshot has been created in the cluster, additional details about the snapshot are available.

To display details about the volume snapshot that was created, enter the following command:

$ oc describe volumesnapshot mysnap

The following example displays details about the mysnap volume snapshot:

volumesnapshot.yaml
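As an illustration only, the status fields that the callouts describe might appear as follows in the object's YAML, which you can also view with oc get volumesnapshot mysnap -o yaml. Values in angle brackets are placeholders rather than real output, and the PVC name, class name, and size are hypothetical.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: mysnap
  namespace: default
spec:
  source:
    persistentVolumeClaimName: myclaim                 # hypothetical source PVC
  volumeSnapshotClassName: csi-hostpath-snap           # hypothetical class
status:
  boundVolumeSnapshotContentName: <snapcontent_name>   # pointer to the content created by the controller
  creationTime: "<creation_timestamp>"                 # when the snapshot was taken
  readyToUse: true                                     # true: can be restored as a new PVC
  restoreSize: 1Gi                                     # hypothetical size
```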

[1] The pointer to the actual storage content that was created by the controller.

[2] The time when the snapshot was created. The snapshot contains the volume content that was available at this indicated time.

[3] If the value is set to true, the snapshot can be used to restore as a new PVC. If the value is set to false, the snapshot was created. However, the storage back end needs to perform additional tasks to make the snapshot usable so that it can be restored as a new volume. For example, Amazon Elastic Block Store data might be moved to a different, less expensive location, which can take several minutes.

To verify that the volume snapshot was created, enter the following command:

$ oc get volumesnapshotcontent

The pointer to the actual content is displayed. If the boundVolumeSnapshotContentName field is populated, a VolumeSnapshotContent object exists and the snapshot was created.

To verify that the snapshot is ready, confirm that the VolumeSnapshot object has readyToUse: true.

5.4.6. Deleting a volume snapshot

You can configure how OpenShift Container Platform deletes volume snapshots.

Procedure

Specify the deletion policy that you require in the deletionPolicy field of the VolumeSnapshotClass object, for example in a file named volumesnapshotclass.yaml.
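As a sketch, a VolumeSnapshotClass that retains back-end snapshots could look like the following. The class and driver names are hypothetical placeholders; set deletionPolicy to Delete instead if the underlying snapshot should be removed together with the VolumeSnapshotContent object.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: csi-hostpath-snap-retain   # hypothetical class name
driver: hostpath.csi.k8s.io        # hypothetical CSI driver name
deletionPolicy: Retain             # keep the VolumeSnapshotContent and back-end snapshot on delete
```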

[1] When deleting the volume snapshot, if the Delete value is set, the underlying snapshot is deleted along with the VolumeSnapshotContent object. If the Retain value is set, both the underlying snapshot and VolumeSnapshotContent object remain.

If the Retain value is set and the VolumeSnapshot object is deleted without deleting the corresponding VolumeSnapshotContent object, the content remains. The snapshot itself is also retained in the storage back end.

Delete the volume snapshot by entering the following command:

$ oc delete volumesnapshot <volumesnapshot_name>

Example output

volumesnapshot.snapshot.storage.k8s.io "mysnapshot" deleted

If the deletion policy is set to Retain, delete the volume snapshot content by entering the following command:

$ oc delete volumesnapshotcontent <volumesnapshotcontent_name>

Optional: If the VolumeSnapshot object is not successfully deleted, enter the following command to remove any finalizers for the leftover resource so that the delete operation can continue:

Important: Only remove the finalizers if you are confident that there are no existing references from either persistent volume claims or volume snapshot contents to the VolumeSnapshot object. Even with the --force option, the delete operation does not delete snapshot objects until all finalizers are removed.

$ oc patch -n $PROJECT volumesnapshot/$NAME --type=merge -p '{"metadata": {"finalizers":null}}'

Example output

volumesnapshotclass.snapshot.storage.k8s.io "csi-ocs-rbd-snapclass" deleted

The finalizers are removed and the volume snapshot is deleted.

5.4.7. Restoring a volume snapshot

The VolumeSnapshot CRD content can be used to restore the existing volume to a previous state.

After your VolumeSnapshot CRD is bound and the readyToUse value is set to true, you can use that resource to provision a new volume that is pre-populated with data from the snapshot.

Prerequisites

- Logged in to a running OpenShift Container Platform cluster.

- A persistent volume claim (PVC) created using a Container Storage Interface (CSI) driver that supports volume snapshots.

- A storage class to provision the storage back end.

- A volume snapshot has been created and is ready to use.

Procedure

Specify a VolumeSnapshot data source on a PVC in a file named pvc-restore.yaml.
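A minimal sketch of pvc-restore.yaml, assuming a snapshot named mysnap and a hypothetical storage class name. The dataSource block points at the VolumeSnapshot object, and apiGroup is set because VolumeSnapshot is not in the core API group.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim-restore
spec:
  storageClassName: csi-hostpath-sc   # hypothetical storage class for the new volume
  dataSource:
    name: mysnap                      # VolumeSnapshot to restore from
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi                    # hypothetical; at least the snapshot's restoreSize
```

Request at least as much storage as the restoreSize reported in the snapshot status; the CSI provisioner typically rejects a smaller request.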

Create a PVC by entering the following command:

$ oc create -f pvc-restore.yaml

Verify that the restored PVC has been created by entering the following command:

$ oc get pvc

A new PVC such as myclaim-restore is displayed.

5.5. CSI volume cloning

Volume cloning duplicates an existing persistent volume to help protect against data loss in OpenShift Container Platform. This feature is only available with supported Container Storage Interface (CSI) drivers. You should be familiar with persistent volumes before you provision a CSI volume clone.

5.5.1. Overview of CSI volume cloning

A Container Storage Interface (CSI) volume clone is a duplicate of an existing persistent volume at a particular point in time.

Volume cloning is similar to volume snapshots, although it is more efficient. For example, a cluster administrator can duplicate a cluster volume by creating another instance of the existing cluster volume.

Cloning creates an exact duplicate of the specified volume on the back-end device, rather than creating a new empty volume. After dynamic provisioning, you can use a volume clone just as you would use any standard volume.

No new API objects are required for cloning. The existing dataSource field in the PersistentVolumeClaim object is expanded so that it can accept the name of an existing PersistentVolumeClaim in the same namespace.

5.5.1.1. Support limitations

By default, OpenShift Container Platform supports CSI volume cloning with these limitations:

- The destination persistent volume claim (PVC) must exist in the same namespace as the source PVC.

- Cloning is supported with a different storage class.

  - The destination volume can use the same storage class as the source or a different one.

  - You can use the default storage class and omit storageClassName in the spec.

- Support is only available for CSI drivers. In-tree and FlexVolumes are not supported.

- CSI drivers might not have implemented the volume cloning functionality. For details, see the CSI driver documentation.

5.5.2. Provisioning a CSI volume clone

When you create a cloned persistent volume claim (PVC) API object, you trigger the provisioning of a CSI volume clone. The clone pre-populates with the contents of another PVC, adhering to the same rules as any other persistent volume. The one exception is that you must add a dataSource that references an existing PVC in the same namespace.

Prerequisites

- You are logged in to a running OpenShift Container Platform cluster.

- Your PVC is created using a CSI driver that supports volume cloning.

- Your storage back end is configured for dynamic provisioning. Cloning support is not available for static provisioners.

Procedure

To clone a PVC from an existing PVC:

Create and save a file named pvc-clone.yaml that defines the PersistentVolumeClaim object.
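A minimal sketch of pvc-clone.yaml follows. The namespace, storage class name, size, and the source PVC name pvc-1 are hypothetical placeholders. The dataSource block references the existing PVC by kind and name; no apiGroup is needed because PersistentVolumeClaim is in the core API group.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-1-clone
  namespace: mynamespace          # hypothetical; must match the source PVC's namespace
spec:
  storageClassName: csi-cloning   # hypothetical; omit to use the default storage class
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi                # hypothetical; should be at least the size of the source PVC
  dataSource:
    kind: PersistentVolumeClaim
    name: pvc-1                   # hypothetical existing source PVC in the same namespace
```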

[1] The name of the storage class that provisions the storage back end. The default storage class can be used and storageClassName can be omitted in the spec.

Create the object you saved in the previous step by running the following command:

$ oc create -f pvc-clone.yaml

A new PVC pvc-1-clone is created.

Verify that the volume clone was created and is ready by running the following command:

$ oc get pvc pvc-1-clone

The pvc-1-clone PVC shows that it is Bound.

You are now ready to use the newly cloned PVC to configure a pod.

Create and save a file with a Pod object that uses the cloned PVC.
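A minimal sketch of such a Pod definition, in which the pod name, container name, image, and mount path are hypothetical placeholders. Only claimName refers to a name from this procedure (pvc-1-clone).

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: mypod                        # hypothetical
spec:
  containers:
    - name: myfrontend
      image: nginx                   # placeholder image
      volumeMounts:
        - mountPath: "/var/www/html" # hypothetical mount path
          name: mypd
  volumes:
    - name: mypd
      persistentVolumeClaim:
        claimName: pvc-1-clone       # the cloned PVC
```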

[1] The cloned PVC created during the CSI volume cloning operation.

The created Pod object is now ready to consume, clone, snapshot, or delete your cloned PVC independently of its original dataSource PVC.

5.6. CSI automatic migration

In-tree storage drivers that are traditionally shipped with OpenShift Container Platform are being deprecated and replaced by their equivalent Container Storage Interface (CSI) drivers. OpenShift Container Platform provides automatic migration for certain supported in-tree volume plugins to their equivalent CSI drivers.

5.6.1. Overview

Volumes that are provisioned by using in-tree storage plugins, and that are supported by this feature, are migrated to their counterpart Container Storage Interface (CSI) drivers. This process does not perform any data migration; OpenShift Container Platform only translates the persistent volume object in memory. As a result, the translated persistent volume object is not stored on disk, nor are its contents changed.

The following in-tree to CSI drivers are supported:

| In-tree/CSI drivers | Support level | CSI auto migration enabled automatically? |
|---|---|---|
| Azure Disk, OpenStack Cinder | Generally available (GA) | Yes. For more information, see "Automatic migration of in-tree volumes to CSI". |
| AWS Elastic Block Storage (EBS), Google Compute Engine Persistent Disk (GCE-PD), VMware vSphere Disk, Azure File | Technology Preview (TP) | No. To enable, see "Manually enabling CSI automatic migration". |

CSI automatic migration should be seamless. This feature does not change how you use all existing API objects: for example, PersistentVolumes, PersistentVolumeClaims, and StorageClasses.

Enabling CSI automatic migration for in-tree persistent volumes (PVs) or persistent volume claims (PVCs) does not enable any new CSI driver features, such as snapshots or expansion, if the original in-tree storage plugin did not support them.

5.6.2. Automatic migration of in-tree volumes to CSI

OpenShift Container Platform supports automatic and seamless migration for the following in-tree volume types to their Container Storage Interface (CSI) driver counterpart:

- Azure Disk

- OpenStack Cinder

CSI migration for these volume types is considered generally available (GA), and requires no manual intervention.

For new installations of OpenShift Container Platform 4.11 and later, the default storage class is the CSI storage class. All volumes provisioned using this storage class are CSI persistent volumes (PVs).

For clusters upgraded from 4.10 or earlier to 4.11 or later, the CSI storage class is created and is set as the default if no default storage class was set prior to the upgrade. In the very unlikely case that there is a storage class with the same name, the existing storage class remains unchanged. Any existing in-tree storage classes remain, and might be necessary for certain features, such as volume expansion, to work for existing in-tree PVs. While storage classes that reference the in-tree storage plugin continue to work, we recommend that you switch the default storage class to the CSI storage class.

5.6.3. Manually enabling CSI automatic migration

If you want to test Container Storage Interface (CSI) migration in development or staging OpenShift Container Platform clusters, you must manually enable in-tree to CSI migration for the following in-tree volume types:

- AWS Elastic Block Storage (EBS)

- Google Compute Engine Persistent Disk (GCE-PD)

- VMware vSphere Disk

- Azure File

CSI automatic migration for the preceding in-tree volume plugins and CSI driver pairs is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

After migration, the default storage class remains the in-tree storage class.

CSI automatic migration will be enabled by default for all in-tree storage plugins in a future OpenShift Container Platform release, so we highly recommend that you test it now and report any issues.

Enabling CSI automatic migration drains, and then restarts, all nodes in the cluster in sequence. This might take some time.

Procedure

Enable feature gates (see Nodes > Working with clusters > Enabling features using feature gates).

Important: After turning on Technology Preview features by using feature gates, you cannot turn them off. As a result, cluster upgrades are prevented.

The following configuration example enables CSI automatic migration for all CSI drivers supported by this feature that are currently in Technology Preview (TP) status:
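The following is a minimal sketch of such a configuration. It assumes that feature gates are set on the cluster-scoped FeatureGate object named cluster. The (1) comment marks the line that the callout below describes.

```yaml
apiVersion: config.openshift.io/v1
kind: FeatureGate
metadata:
  name: cluster
spec:
  # Turns on all Technology Preview features, including the CSI migration gates.
  featureSet: TechPreviewNoUpgrade  # (1)
```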

(1) Enables automatic migration for AWS EBS, GCP, Azure File, and VMware vSphere.

You can enable CSI automatic migration for a selected CSI driver by setting featureSet to CustomNoUpgrade and setting the enabled feature gates to one of the following:

- CSIMigrationAWS

- CSIMigrationAzureFile

- CSIMigrationGCE

- CSIMigrationvSphere

The following configuration example enables automatic migration to the AWS EBS CSI driver only:
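The following is a minimal sketch of such a configuration. As above, it assumes the FeatureGate object named cluster. The (1) comment marks the line that the callout below describes.

```yaml
apiVersion: config.openshift.io/v1
kind: FeatureGate
metadata:
  name: cluster
spec:
  featureSet: CustomNoUpgrade
  customNoUpgrade:
    # List only the migration gates you want to turn on.
    enabled:
      - CSIMigrationAWS  # (1)
```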

(1) Enables automatic migration for AWS EBS only.

5.7. AliCloud Disk CSI Driver Operator

5.7.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for Alibaba AliCloud Disk Storage.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

To create CSI-provisioned PVs that mount to AliCloud Disk storage assets, OpenShift Container Platform installs the AliCloud Disk CSI Driver Operator and the AliCloud Disk CSI driver, by default, in the openshift-cluster-csi-drivers namespace.

- The AliCloud Disk CSI Driver Operator provides a storage class (alicloud-disk) that you can use to create persistent volume claims (PVCs). The AliCloud Disk CSI Driver Operator supports dynamic volume provisioning by allowing storage volumes to be created on demand, eliminating the need for cluster administrators to pre-provision storage.

- The AliCloud Disk CSI driver enables you to create and mount AliCloud Disk PVs.
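For illustration only, a PVC that requests a volume from the alicloud-disk storage class might look like the following sketch. The claim name and size are hypothetical, so choose values that suit your workload.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-alicloud-pvc          # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce              # a block disk is mounted by one node at a time
  storageClassName: alicloud-disk
  resources:
    requests:
      storage: 20Gi              # illustrative size
```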

5.7.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.


5.8. AWS Elastic Block Store CSI Driver Operator

5.8.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for AWS Elastic Block Store (EBS).

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a Container Storage Interface (CSI) Operator and driver.

To create CSI-provisioned PVs that mount to AWS EBS storage assets, OpenShift Container Platform installs the AWS EBS CSI Driver Operator and the AWS EBS CSI driver by default in the openshift-cluster-csi-drivers namespace.

- The AWS EBS CSI Driver Operator provides a StorageClass by default that you can use to create PVCs. You also have the option to create the AWS EBS StorageClass as described in Persistent storage using AWS Elastic Block Store.

- The AWS EBS CSI driver enables you to create and mount AWS EBS PVs.

If you installed the AWS EBS CSI Operator and driver on an OpenShift Container Platform 4.5 cluster, you must uninstall the 4.5 Operator and driver before you update to OpenShift Container Platform 4.11.

5.8.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

OpenShift Container Platform defaults to using an in-tree (non-CSI) plugin to provision AWS EBS storage.

In future OpenShift Container Platform versions, volumes provisioned by using existing in-tree plugins are planned for migration to their equivalent CSI driver. CSI automatic migration should be seamless. Migration does not change how you use any existing API objects, such as persistent volumes, persistent volume claims, and storage classes. For more information about migration, see CSI automatic migration.

After full migration, in-tree plugins will eventually be removed in future versions of OpenShift Container Platform.

For information about dynamically provisioning AWS EBS persistent volumes in OpenShift Container Platform, see Persistent storage using AWS Elastic Block Store.

5.9. AWS Elastic File Service CSI Driver Operator

5.9.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for AWS Elastic File System (EFS).

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

After installing the AWS EFS CSI Driver Operator, OpenShift Container Platform installs the AWS EFS CSI Operator and the AWS EFS CSI driver by default in the openshift-cluster-csi-drivers namespace. This allows the AWS EFS CSI Driver Operator to create CSI-provisioned PVs that mount to AWS EFS assets.

- After it is installed, the AWS EFS CSI Driver Operator does not create a storage class by default that you can use to create persistent volume claims (PVCs). However, you can manually create the AWS EFS StorageClass. The AWS EFS CSI Driver Operator supports dynamic volume provisioning by allowing storage volumes to be created on demand. This eliminates the need for cluster administrators to pre-provision storage.

- The AWS EFS CSI driver enables you to create and mount AWS EFS PVs.

AWS EFS only supports regional volumes, not zonal volumes.

5.9.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

5.9.3. Installing the AWS EFS CSI Driver Operator

The AWS EFS CSI Driver Operator is not installed in OpenShift Container Platform by default. Use the following procedure to install and configure the AWS EFS CSI Driver Operator in your cluster.

Prerequisites

- Access to the OpenShift Container Platform web console.

Procedure

To install the AWS EFS CSI Driver Operator from the web console:

- Log in to the web console.

Install the AWS EFS CSI Operator:

- Click Operators > OperatorHub.

- Locate the AWS EFS CSI Operator by typing AWS EFS CSI in the filter box.

Click the AWS EFS CSI Driver Operator button.

Important: Be sure to select the AWS EFS CSI Driver Operator and not the AWS EFS Operator. The AWS EFS Operator is a community Operator and is not supported by Red Hat.

- On the AWS EFS CSI Driver Operator page, click Install.

On the Install Operator page, ensure that:

- All namespaces on the cluster (default) is selected.

- Installed Namespace is set to openshift-cluster-csi-drivers.

Click Install.

After the installation finishes, the AWS EFS CSI Operator is listed in the Installed Operators section of the web console.


- If you are using AWS EFS with AWS Security Token Service (STS), you must configure the AWS EFS CSI Driver with STS. For more information, see "Configuring AWS EFS CSI Driver with STS".

Install the AWS EFS CSI Driver:

- Click Administration > CustomResourceDefinitions > ClusterCSIDriver.

- On the Instances tab, click Create ClusterCSIDriver.

Use the following YAML file:
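The following is a minimal ClusterCSIDriver sketch. It assumes that the object is named after the driver, efs.csi.aws.com, which is the same name used in the troubleshooting command later in this section, and that Managed is the desired management state.

```yaml
apiVersion: operator.openshift.io/v1
kind: ClusterCSIDriver
metadata:
  name: efs.csi.aws.com      # must match the CSI driver name
spec:
  managementState: Managed   # lets the Operator deploy and manage the driver
```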

- Click Create.

Wait for the following Conditions to change to a "true" status:

- AWSEFSDriverCredentialsRequestControllerAvailable

- AWSEFSDriverNodeServiceControllerAvailable

- AWSEFSDriverControllerServiceControllerAvailable


5.9.4. Configuring AWS EFS CSI Driver Operator with Security Token Service

This procedure explains how to configure the AWS EFS CSI Driver Operator with OpenShift Container Platform on AWS Security Token Service (STS).

Perform this procedure after installing the AWS EFS CSI Operator, but before installing the AWS EFS CSI driver as part of the "Installing the AWS EFS CSI Driver Operator" procedure. If you perform this procedure after installing the driver and creating volumes, your volumes will fail to mount into pods.

Prerequisites

- AWS account credentials

Procedure

To configure the AWS EFS CSI Driver Operator with STS:

- Extract the CCO utility (ccoctl) binary from the OpenShift Container Platform release image that you used to install the cluster with STS. For more information, see "Configuring the Cloud Credential Operator utility".

- Create and save an EFS CredentialsRequest YAML file, such as the one shown in the following example, and then place it in the credrequests directory:
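A sketch of such a CredentialsRequest follows. The secret name and namespace are chosen to match the aws-efs-cloud-credentials secret and the openshift-cluster-csi-drivers namespace that appear later in this procedure. The service account names and the broad elasticfilesystem:* permission are assumptions. Verify them against your Operator version, and narrow the permission if your security policy requires it.

```yaml
apiVersion: cloudcredential.openshift.io/v1
kind: CredentialsRequest
metadata:
  name: openshift-aws-efs-csi-driver
  namespace: openshift-cloud-credential-operator
spec:
  providerSpec:
    apiVersion: cloudcredential.openshift.io/v1
    kind: AWSProviderSpec
    statementEntries:
      # Broad EFS permission; narrow it if your policy requires.
      - action:
          - elasticfilesystem:*
        effect: Allow
        resource: "*"
  # ccoctl writes a secret manifest for this name and namespace.
  secretRef:
    name: aws-efs-cloud-credentials
    namespace: openshift-cluster-csi-drivers
  # Assumed service accounts that use the credentials; verify for your version.
  serviceAccountNames:
    - aws-efs-csi-driver-operator
    - aws-efs-csi-driver-controller-sa
```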

- Run the ccoctl tool to generate a new IAM role in AWS, and create a YAML file for it in the local file system (<path_to_ccoctl_output_dir>/manifests/openshift-cluster-csi-drivers-aws-efs-cloud-credentials-credentials.yaml).

$ ccoctl aws create-iam-roles --name=<name> --region=<aws_region> --credentials-requests-dir=<path_to_directory_with_list_of_credentials_requests>/credrequests --identity-provider-arn=arn:aws:iam::<aws_account_id>:oidc-provider/<name>-oidc.s3.<aws_region>.amazonaws.com

where:

- --name=<name> is the name used to tag any cloud resources that are created for tracking.

- --region=<aws_region> is the AWS region where cloud resources are created.

- --credentials-requests-dir=<path_to_directory_with_list_of_credentials_requests>/credrequests is the directory that contains the EFS CredentialsRequest file from the previous step.

- <aws_account_id> is the AWS account ID.

Example

$ ccoctl aws create-iam-roles --name my-aws-efs --credentials-requests-dir credrequests --identity-provider-arn arn:aws:iam::123456789012:oidc-provider/my-aws-efs-oidc.s3.us-east-2.amazonaws.com

Example output

2022/03/21 06:24:44 Role arn:aws:iam::123456789012:role/my-aws-efs-openshift-cluster-csi-drivers-aws-efs-cloud- created
2022/03/21 06:24:44 Saved credentials configuration to: /manifests/openshift-cluster-csi-drivers-aws-efs-cloud-credentials-credentials.yaml
2022/03/21 06:24:45 Updated Role policy for Role my-aws-efs-openshift-cluster-csi-drivers-aws-efs-cloud-

- Create the AWS EFS cloud credentials and secret:

$ oc create -f <path_to_ccoctl_output_dir>/manifests/openshift-cluster-csi-drivers-aws-efs-cloud-credentials-credentials.yaml

Example

$ oc create -f /manifests/openshift-cluster-csi-drivers-aws-efs-cloud-credentials-credentials.yaml

Example output

secret/aws-efs-cloud-credentials created

5.9.5. Creating the AWS EFS storage class

Storage classes are used to differentiate and delineate storage levels and usages. By defining a storage class, users can obtain dynamically provisioned persistent volumes.

The AWS EFS CSI Driver Operator, after being installed, does not create a storage class by default. However, you can manually create the AWS EFS storage class.

5.9.5.1. Creating the AWS EFS storage class using the console

Procedure

- In the OpenShift Container Platform console, click Storage > StorageClasses.

- On the StorageClasses page, click Create StorageClass.

On the StorageClass page, perform the following steps:

- Enter a name to reference the storage class.

- Optional: Enter the description.

- Select the reclaim policy.

- Select efs.csi.aws.com from the Provisioner drop-down list.

- Optional: Set the configuration parameters for the selected provisioner.

- Click Create.

5.9.5.2. Creating the AWS EFS storage class using the CLI

Procedure

Create a StorageClass object:
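The following is a sketch of the StorageClass. The markers (1) through (6) match the callouts that follow. The name efs-sc and the file system ID fs-a5324911 are hypothetical placeholders, and the GID range and base path are example values.

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc                          # hypothetical name
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap              # (1) access-point based provisioning
  fileSystemId: fs-a5324911             # (2) placeholder EFS volume ID
  directoryPerms: "700"                 # (3) owner-only access to the PV root
  gidRangeStart: "1000"                 # (4)
  gidRangeEnd: "2000"                   # (5)
  basePath: "/dynamic_provisioning"     # (6) parent directory for PV subdirectories
```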

(1) provisioningMode must be efs-ap to enable dynamic provisioning.

(2) fileSystemId must be the ID of the EFS volume created manually.

(3) directoryPerms is the default permission of the root directory of the volume. In this example, the volume is accessible only by the owner.

(4) (5) gidRangeStart and gidRangeEnd set the range of POSIX Group IDs (GIDs) that are used to set the GID of the AWS access point. If not specified, the default range is 50000-7000000. Each provisioned volume, and thus each AWS access point, is assigned a unique GID from this range.

(6) basePath is the directory on the EFS volume that is used to create dynamically provisioned volumes. In this case, a PV is provisioned as “/dynamic_provisioning/<random uuid>” on the EFS volume. Only the subdirectory is mounted to pods that use the PV.

Note: A cluster administrator can create several StorageClass objects, each using a different EFS volume.

5.9.6. Creating and configuring access to EFS volumes in AWS

This procedure explains how to create and configure EFS volumes in AWS so that you can use them in OpenShift Container Platform.

Prerequisites

- AWS account credentials

Procedure

To create and configure access to an EFS volume in AWS:

- On the AWS console, open https://console.aws.amazon.com/efs.

Click Create file system:

- Enter a name for the file system.

- For Virtual Private Cloud (VPC), select the VPC of your OpenShift Container Platform cluster.

- Accept default settings for all other selections.

Wait for the volume and mount targets to finish being fully created:

- Go to https://console.aws.amazon.com/efs#/file-systems.

- Click your volume, and on the Network tab, wait for all mount targets to become available (about 1-2 minutes).

- On the Network tab, copy the Security Group ID (you will need this in the next step).

- Go to https://console.aws.amazon.com/ec2/v2/home#SecurityGroups, and find the Security Group used by the EFS volume.

On the Inbound rules tab, click Edit inbound rules, and then add a new rule with the following settings to allow OpenShift Container Platform nodes to access EFS volumes:

- Type: NFS

- Protocol: TCP

- Port range: 2049

- Source: Custom/IP address range of your nodes (for example, “10.0.0.0/16”)

This step allows OpenShift Container Platform to use NFS ports from the cluster.

- Save the rule.

5.9.7. Dynamic provisioning for AWS EFS

The AWS EFS CSI Driver supports a different form of dynamic provisioning than other CSI drivers. It provisions new PVs as subdirectories of a pre-existing EFS volume. The PVs are independent of each other. However, they all share the same EFS volume. When the volume is deleted, all PVs provisioned out of it are deleted too. The EFS CSI driver creates an AWS Access Point for each such subdirectory. Due to AWS AccessPoint limits, you can only dynamically provision 1000 PVs from a single StorageClass/EFS volume.

Note that PVC.spec.resources is not enforced by EFS.

In the following example, you request 5 GiB of space. However, the created PV is limitless and can store any amount of data (for example, petabytes). A broken or even rogue application can cause significant expenses when it stores too much data on the volume.

Monitoring EFS volume sizes in AWS is strongly recommended.

Prerequisites

- You have created AWS EFS volumes.

- You have created the AWS EFS storage class.

Procedure

To enable dynamic provisioning:

Create a PVC (or StatefulSet or Template) as usual, referring to the StorageClass created earlier.
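The following is a minimal PVC sketch. It assumes a storage class named efs-sc, which is a hypothetical name, so use the name of the storage class you created. The claim requests the 5 GiB mentioned earlier, which EFS does not enforce.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test                   # hypothetical claim name
spec:
  storageClassName: efs-sc     # replace with your EFS storage class
  accessModes:
    - ReadWriteMany            # EFS volumes can be shared across nodes
  resources:
    requests:
      storage: 5Gi             # used for binding only; EFS does not enforce it
```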

If you have problems setting up dynamic provisioning, see AWS EFS troubleshooting.

5.9.8. Creating static PVs with AWS EFS

It is possible to use an AWS EFS volume as a single PV without any dynamic provisioning. The whole volume is mounted to pods.

Prerequisites

- You have created AWS EFS volumes.

Procedure

Create the PV using the following YAML file:
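The following is a sketch of such a PV. The markers (1) through (3) match the callouts that follow. The PV name and the volume ID fs-ae66151a are hypothetical placeholders.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-pv                      # hypothetical name
spec:
  capacity:                         # (1) used only for PVC binding
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-ae66151a       # (2) placeholder EFS volume ID
    volumeAttributes:
      encryptInTransit: "false"     # (3) omit to keep encryption in transit enabled
```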

(1) spec.capacity does not have any meaning and is ignored by the CSI driver. It is used only when binding to a PVC. Applications can store any amount of data to the volume.

(2) volumeHandle must be the same ID as the EFS volume you created in AWS. If you are providing your own access point, volumeHandle should be <EFS volume ID>::<access point ID>. For example: fs-6e633ada::fsap-081a1d293f0004630.

(3) If desired, you can disable encryption in transit. Encryption is enabled by default.

If you have problems setting up static PVs, see AWS EFS troubleshooting.

5.9.9. AWS EFS security

The following information is important for AWS EFS security.

When using access points, for example, by using dynamic provisioning as described earlier, Amazon automatically replaces GIDs on files with the GID of the access point. In addition, EFS considers the user ID, group ID, and secondary group IDs of the access point when evaluating file system permissions. EFS ignores the NFS client’s IDs. For more information about access points, see https://docs.aws.amazon.com/efs/latest/ug/efs-access-points.html.

As a consequence, EFS volumes silently ignore FSGroup; OpenShift Container Platform is not able to replace the GIDs of files on the volume with FSGroup. Any pod that can access a mounted EFS access point can access any file on it.

Separately, encryption in transit is enabled by default. For more information, see https://docs.aws.amazon.com/efs/latest/ug/encryption-in-transit.html.

5.9.10. AWS EFS troubleshooting

The following information provides guidance on how to troubleshoot issues with AWS EFS:

- The AWS EFS Operator and CSI driver run in the openshift-cluster-csi-drivers namespace. To initiate gathering of logs of the AWS EFS Operator and CSI driver, run the following command:

$ oc adm must-gather

[must-gather ] OUT Using must-gather plugin-in image: quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:125f183d13601537ff15b3239df95d47f0a604da2847b561151fedd699f5e3a5
[must-gather ] OUT namespace/openshift-must-gather-xm4wq created
[must-gather ] OUT clusterrolebinding.rbac.authorization.k8s.io/must-gather-2bd8x created
[must-gather ] OUT pod for plug-in image quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:125f183d13601537ff15b3239df95d47f0a604da2847b561151fedd699f5e3a5 created

- To show AWS EFS Operator errors, view the ClusterCSIDriver status:

$ oc get clustercsidriver efs.csi.aws.com -o yaml

- If a volume cannot be mounted to a pod (as shown in the output of the following command):

(1) Warning message indicating volume not mounted.

This error is frequently caused by AWS dropping packets between an OpenShift Container Platform node and AWS EFS.

Check that the following are correct:

- AWS firewall and Security Groups

- Networking: port number and IP addresses

5.9.11. Uninstalling the AWS EFS CSI Driver Operator

All EFS PVs are inaccessible after uninstalling the AWS EFS CSI Driver Operator.

Prerequisites

- Access to the OpenShift Container Platform web console.

Procedure

To uninstall the AWS EFS CSI Driver Operator from the web console:

- Log in to the web console.

- Stop all applications that use AWS EFS PVs.

Delete all AWS EFS PVs:

- Click Storage > PersistentVolumeClaims.

- Select each PVC that is in use by the AWS EFS CSI Driver Operator, click the drop-down menu on the far right of the PVC, and then click Delete PersistentVolumeClaims.


Uninstall the AWS EFS CSI Driver:

Note: Before you can uninstall the Operator, you must remove the CSI driver first.

- Click Administration > CustomResourceDefinitions > ClusterCSIDriver.

- On the Instances tab, for efs.csi.aws.com, on the far left side, click the drop-down menu, and then click Delete ClusterCSIDriver.

- When prompted, click Delete.


Uninstall the AWS EFS CSI Operator:

- Click Operators > Installed Operators.

- On the Installed Operators page, scroll or type AWS EFS CSI into the Search by name box to find the Operator, and then click it.

- In the upper right of the Installed Operators > Operator details page, click Actions > Uninstall Operator.

- When prompted on the Uninstall Operator window, click the Uninstall button to remove the Operator from the namespace. Any applications deployed by the Operator on the cluster need to be cleaned up manually.

After uninstalling, the AWS EFS CSI Driver Operator is no longer listed in the Installed Operators section of the web console.


Before you can destroy a cluster (openshift-install destroy cluster), you must delete the EFS volume in AWS. An OpenShift Container Platform cluster cannot be destroyed when there is an EFS volume that uses the cluster’s VPC. Amazon does not allow deletion of such a VPC.

5.10. Azure Disk CSI Driver Operator

5.10.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for Microsoft Azure Disk Storage.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

To create CSI-provisioned PVs that mount to Azure Disk storage assets, OpenShift Container Platform installs the Azure Disk CSI Driver Operator and the Azure Disk CSI driver by default in the openshift-cluster-csi-drivers namespace.

- The Azure Disk CSI Driver Operator provides a storage class named managed-csi that you can use to create persistent volume claims (PVCs). The Azure Disk CSI Driver Operator supports dynamic volume provisioning by allowing storage volumes to be created on demand, eliminating the need for cluster administrators to pre-provision storage.

- The Azure Disk CSI driver enables you to create and mount Azure Disk PVs.

5.10.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

OpenShift Container Platform defaults to using an in-tree (non-CSI) plugin to provision Azure Disk storage.

In future OpenShift Container Platform versions, volumes provisioned by using existing in-tree plugins are planned for migration to their equivalent CSI driver. CSI automatic migration should be seamless. Migration does not change how you use any existing API objects, such as persistent volumes, persistent volume claims, and storage classes. For more information about migration, see CSI automatic migration.

After full migration, in-tree plugins will eventually be removed in later versions of OpenShift Container Platform.

5.10.3. Creating a storage class with storage account type

Storage classes are used to differentiate and delineate storage levels and usages. By defining a storage class, you can obtain dynamically provisioned persistent volumes.

When creating a storage class, you can designate the storage account type. This corresponds to your Azure storage account SKU tier. Valid options are Standard_LRS, Premium_LRS, StandardSSD_LRS, UltraSSD_LRS, Premium_ZRS, and StandardSSD_ZRS. For information about finding your Azure SKU tier, see SKU Types.

ZRS has some region limitations. For information about these limitations, see ZRS limitations.

Prerequisites

- Access to an OpenShift Container Platform cluster with administrator rights

Procedure

Use the following steps to create a storage class with a storage account type.

Create a storage class designating the storage account type using a YAML file similar to the following:
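The following is a sketch of such a storage class. It assumes the Premium_ZRS account type. The name sc-prem-zrs matches the example output that follows, and the reclaim policy, binding mode, and expansion settings mirror the values shown for that class there.

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: sc-prem-zrs                      # example name
provisioner: disk.csi.azure.com
parameters:
  skuName: Premium_ZRS                   # Azure storage account type (SKU)
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer  # provision in the zone of the consuming pod
allowVolumeExpansion: true
```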

Ensure that the storage class was created by listing the storage classes:

$ oc get storageclass

Example output

NAME                    PROVISIONER          RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
azurefile-csi           file.csi.azure.com   Delete          Immediate              true                   68m
managed-csi (default)   disk.csi.azure.com   Delete          WaitForFirstConsumer   true                   68m
sc-prem-zrs             disk.csi.azure.com   Delete          WaitForFirstConsumer   true                   4m25s (1)

(1) New storage class with storage account type.

5.10.4. Machine sets that deploy machines with ultra disks using PVCs

You can create a machine set running on Azure that deploys machines with ultra disks. Ultra disks are high-performance storage that are intended for use with the most demanding data workloads.

Both the in-tree plugin and CSI driver support using PVCs to enable ultra disks. You can also deploy machines with ultra disks as data disks without creating a PVC.

5.10.4.1. Creating machines with ultra disks by using machine sets

You can deploy machines with ultra disks on Azure by editing your machine set YAML file.

Prerequisites

- Have an existing Microsoft Azure cluster.

Procedure

Copy an existing Azure MachineSet custom resource (CR) and edit it by running the following command:

$ oc edit machineset <machine-set-name>

where <machine-set-name> is the machine set that you want to use to provision machines with ultra disks.

Add the following lines in the positions indicated:
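The following is a sketch of the relevant excerpt of the MachineSet CR, showing only the fields to add. The node label disk: ultrassd is a hypothetical label that a pod can later select with a nodeSelector. The ultraSSDCapability: Enabled setting is what enables ultra disks on the machines.

```yaml
apiVersion: machine.openshift.io/v1beta1
kind: MachineSet
spec:
  template:
    spec:
      metadata:
        labels:
          disk: ultrassd                # hypothetical node label for scheduling
      providerSpec:
        value:
          ultraSSDCapability: Enabled   # lets machines attach ultra disks
```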

Create a machine set using the updated configuration by running the following command:

$ oc create -f <machine-set-name>.yaml

Create a storage class that contains the following YAML definition:
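The following is a sketch of the storage class. The markers (1) through (5) match the callouts that follow. The IOPS and throughput values are illustrative. The class uses cachingMode: None because Azure ultra disks do not support host caching.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ultra-disk-sc                      # (1)
parameters:
  cachingMode: None                        # ultra disks do not support host caching
  diskIopsReadWrite: "2000"                # (2) illustrative IOPS
  diskMbpsReadWrite: "320"                 # (3) illustrative throughput in MBps
  kind: managed
  skuname: UltraSSD_LRS
provisioner: disk.csi.azure.com            # (4)
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer    # (5)
```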

(1) Specify the name of the storage class. This procedure uses ultra-disk-sc for this value.

(2) Specify the number of IOPS for the storage class.

(3) Specify the throughput in MBps for the storage class.

(4) For Azure Kubernetes Service (AKS) version 1.21 or later, use disk.csi.azure.com. For earlier versions of AKS, use kubernetes.io/azure-disk.

(5) Optional: Specify this parameter to wait for the creation of the pod that will use the disk.

Create a persistent volume claim (PVC) that references the ultra-disk-sc storage class, and create a pod that uses the PVC. Use YAML definitions similar to the following:
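The following is a sketch of both objects. It assumes an illustrative 4 GiB claim and the hypothetical node label disk: ultrassd on the machines created from the edited machine set. The object names and the alpine image are placeholders.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ultra-disk                 # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ultra-disk-sc
  resources:
    requests:
      storage: 4Gi                 # illustrative size
---
apiVersion: v1
kind: Pod
metadata:
  name: ultra-disk-consumer        # hypothetical pod name
spec:
  nodeSelector:
    disk: ultrassd                 # schedule onto ultra-disk-capable nodes
  containers:
    - name: ultra-disk-consumer
      image: alpine:latest
      # Keep the container running so that the mounted volume can be inspected.
      command: ["sh", "-c", "while true; do sleep 3600; done"]
      volumeMounts:
        - mountPath: "/mnt/azure"
          name: volume
  volumes:
    - name: volume
      persistentVolumeClaim:
        claimName: ultra-disk
```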

Verification

Validate that the machines are created by running the following command:

$ oc get machines

The machines should be in the Running state.

For a machine that is running and has a node attached, validate the partition by running the following command:

$ oc debug node/<node-name> -- chroot /host lsblk

In this command, oc debug node/<node-name> starts a debugging shell on the node <node-name> and passes a command with --. The passed command chroot /host provides access to the underlying host OS binaries, and lsblk shows the block devices that are attached to the host OS machine.

Next steps

To use an ultra disk from within a pod, create a workload that uses the mount point. Create a YAML file similar to the following example:


5.10.4.2. Troubleshooting resources for machine sets that enable ultra disks

Use the information in this section to understand and recover from issues you might encounter.

5.10.4.2.1. Unable to mount a persistent volume claim backed by an ultra disk

If there is an issue mounting a persistent volume claim backed by an ultra disk, the pod becomes stuck in the ContainerCreating state and an alert is triggered.

For example, if the additionalCapabilities.ultraSSDEnabled parameter is not set on the machine that backs the node that hosts the pod, the following error message appears:

StorageAccountType UltraSSD_LRS can be used only when additionalCapabilities.ultraSSDEnabled is set.

To troubleshoot this issue, describe the pod by running the following command:

$ oc -n <stuck_pod_namespace> describe pod <stuck_pod_name>

5.11. Azure File CSI Driver Operator

5.11.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) by using the Container Storage Interface (CSI) driver for Microsoft Azure File Storage.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

To create CSI-provisioned PVs that mount to Azure File storage assets, OpenShift Container Platform installs the Azure File CSI Driver Operator and the Azure File CSI driver by default in the openshift-cluster-csi-drivers namespace.

- The Azure File CSI Driver Operator provides a storage class named azurefile-csi that you can use to create persistent volume claims (PVCs).

- The Azure File CSI driver enables you to create and mount Azure File PVs. The Azure File CSI driver supports dynamic volume provisioning by allowing storage volumes to be created on demand, eliminating the need for cluster administrators to pre-provision storage.
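As an illustration of using the azurefile-csi storage class, a PVC might look like the following minimal sketch. The claim name and requested size are hypothetical. ReadWriteMany is chosen on the assumption that you want several pods to share the Azure File share.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azurefile-pvc              # hypothetical claim name
spec:
  accessModes:
  - ReadWriteMany                  # an Azure File share can be mounted by several pods at once
  storageClassName: azurefile-csi  # storage class provided by the Azure File CSI Driver Operator
  resources:
    requests:
      storage: 5Gi                 # hypothetical size
```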

Azure File CSI Driver Operator does not support:

- Virtual hard disks (VHD)

- Network File System (NFS): OpenShift Container Platform does not deploy an NFS-backed storage class.

- Running on nodes with FIPS mode enabled.

For more information about supported features, see Supported CSI drivers and features.

5.11.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.


5.12. Azure Stack Hub CSI Driver Operator

5.12.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for Azure Stack Hub Storage. Azure Stack Hub, which is part of the Azure Stack portfolio, allows you to run apps in an on-premises environment and deliver Azure services in your datacenter.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

To create CSI-provisioned PVs that mount to Azure Stack Hub storage assets, OpenShift Container Platform installs the Azure Stack Hub CSI Driver Operator and the Azure Stack Hub CSI driver by default in the openshift-cluster-csi-drivers namespace.

- The Azure Stack Hub CSI Driver Operator provides a storage class named managed-csi, with Standard_LRS as the default storage account type, that you can use to create persistent volume claims (PVCs). The Azure Stack Hub CSI Driver Operator supports dynamic volume provisioning by allowing storage volumes to be created on demand, eliminating the need for cluster administrators to pre-provision storage.

- The Azure Stack Hub CSI driver enables you to create and mount Azure Stack Hub PVs.
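A PVC that requests storage from the managed-csi storage class could be written as the following minimal sketch. The claim name and size are hypothetical. ReadWriteOnce is assumed because disk-backed volumes are normally attached to one node at a time.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azure-stack-hub-pvc        # hypothetical claim name
spec:
  accessModes:
  - ReadWriteOnce                  # a disk volume is attached to a single node
  storageClassName: managed-csi    # class provided by the Azure Stack Hub CSI Driver Operator
  resources:
    requests:
      storage: 10Gi                # hypothetical size
```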

5.12.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

5.13. GCP PD CSI Driver Operator

5.13.1. Overview

OpenShift Container Platform can provision persistent volumes (PVs) using the Container Storage Interface (CSI) driver for Google Cloud Platform (GCP) persistent disk (PD) storage.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a Container Storage Interface (CSI) Operator and driver.

To create CSI-provisioned persistent volumes (PVs) that mount to GCP PD storage assets, OpenShift Container Platform installs the GCP PD CSI Driver Operator and the GCP PD CSI driver by default in the openshift-cluster-csi-drivers namespace.

- GCP PD CSI Driver Operator: By default, the Operator provides a storage class that you can use to create PVCs. You also have the option to create the GCP PD storage class as described in Persistent storage using GCE Persistent Disk.

- GCP PD driver: The driver enables you to create and mount GCP PD PVs.

OpenShift Container Platform defaults to using an in-tree (non-CSI) plugin to provision GCP PD storage.

In future OpenShift Container Platform versions, volumes provisioned using existing in-tree plugins are planned for migration to their equivalent CSI driver. CSI automatic migration should be seamless. Migration does not change how you use any existing API objects, such as persistent volumes, persistent volume claims, and storage classes. For more information about migration, see CSI automatic migration.

After full migration, in-tree plugins will eventually be removed in future versions of OpenShift Container Platform.

5.13.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

5.13.3. GCP PD CSI driver storage class parameters

The Google Cloud Platform (GCP) persistent disk (PD) Container Storage Interface (CSI) driver uses the CSI external-provisioner sidecar as a controller. This is a separate helper container that is deployed with the CSI driver. The sidecar manages persistent volumes (PVs) by triggering the CreateVolume operation.

The GCP PD CSI driver uses the csi.storage.k8s.io/fstype parameter key to support dynamic provisioning. The following table describes all the GCP PD CSI storage class parameters that are supported by OpenShift Container Platform.

| Parameter | Values | Default | Description |
|---|---|---|---|
| type | pd-ssd or pd-standard | pd-standard | Allows you to choose between standard PVs or solid-state-drive PVs. |
| replication-type | none or regional-pd | none | Allows you to choose between zonal or regional PVs. |
| disk-encryption-kms-key | Fully qualified resource identifier for the key to use to encrypt new disks. | Empty string | Uses customer-managed encryption keys (CMEK) to encrypt new disks. |
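To show how the storage class parameters fit together, the following is a minimal sketch of a storage class that requests zonal solid-state-drive PVs formatted with ext4. The class name csi-gce-pd-ssd is hypothetical; pd.csi.storage.gke.io is the GCP PD CSI provisioner name used elsewhere in this chapter.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-gce-pd-ssd              # hypothetical class name
provisioner: pd.csi.storage.gke.io  # GCP PD CSI driver
parameters:
  type: pd-ssd                      # solid-state-drive PVs instead of the pd-standard default
  replication-type: none            # zonal PVs; regional-pd requests regional PVs
  csi.storage.k8s.io/fstype: ext4   # file system to create on newly provisioned volumes
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
```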

5.13.4. Creating a custom-encrypted persistent volume

When you create a PersistentVolumeClaim object, OpenShift Container Platform provisions a new persistent volume (PV) and creates a PersistentVolume object. You can add a custom encryption key in Google Cloud Platform (GCP) to protect a PV in your cluster by encrypting the newly created PV.

For encryption, the newly attached PV uses customer-managed encryption keys (CMEK) from a new or existing Google Cloud Key Management Service (KMS) key.

Prerequisites

- You are logged in to a running OpenShift Container Platform cluster.

- You have created a Cloud KMS key ring and key version.

For more information about CMEK and Cloud KMS resources, see Using customer-managed encryption keys (CMEK).

Procedure

To create a custom-encrypted PV, complete the following steps:

Create a storage class with the Cloud KMS key. The following example enables dynamic provisioning of encrypted volumes:
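A sketch of such a storage class follows. The class name csi-gce-pd-cmek and the provisioner pd.csi.storage.gke.io match the names used later in this procedure; the values in angle brackets are placeholders for your own Cloud KMS project, location, key ring, and key, and the remaining settings are assumptions you can adjust.

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: csi-gce-pd-cmek
provisioner: pd.csi.storage.gke.io
volumeBindingMode: "WaitForFirstConsumer"
allowVolumeExpansion: true
parameters:
  type: pd-standard
  # Fully qualified Cloud KMS key resource identifier (case-sensitive); replace every <placeholder>.
  disk-encryption-kms-key: projects/<key-project-id>/locations/<location>/keyRings/<key-ring>/cryptoKeys/<key>
```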

The disk-encryption-kms-key parameter must be the resource identifier for the key that will be used to encrypt new disks. Values are case-sensitive. For more information about providing key ID values, see Retrieving a resource’s ID and Getting a Cloud KMS resource ID.

Note: You cannot add the disk-encryption-kms-key parameter to an existing storage class. However, you can delete the storage class and recreate it with the same name and a different set of parameters. If you do this, the provisioner of the existing class must be pd.csi.storage.gke.io.

After you deploy the storage class on your OpenShift Container Platform cluster, verify it by running the following command:

$ oc describe storageclass csi-gce-pd-cmek

Create a file named pvc.yaml whose storageClassName field matches the name of the storage class object that you created in the previous step.

Note: If you marked the new storage class as default, you can omit the storageClassName field.

Apply the PVC on your cluster:

$ oc apply -f pvc.yaml

Get the status of your PVC and verify that it is created and bound to a newly provisioned PV:

$ oc get pvc

Example output

NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
podpvc   Bound    pvc-e36abf50-84f3-11e8-8538-42010a800002   10Gi       RWO            csi-gce-pd-cmek   9s

Note: If your storage class has the volumeBindingMode field set to WaitForFirstConsumer, you must create a pod to use the PVC before you can verify it.
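For reference, a pvc.yaml consistent with the example oc get pvc output above (claim podpvc, 10Gi, RWO, storage class csi-gce-pd-cmek) might look like the first document in the following sketch. The second document is a hypothetical pod that mounts the claim, which is what triggers provisioning and binding when volumeBindingMode is WaitForFirstConsumer; its name and image are assumptions.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: podpvc
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: csi-gce-pd-cmek   # can be omitted if this class is the default
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: podpvc-consumer               # hypothetical pod name
spec:
  containers:
  - name: app
    image: registry.access.redhat.com/ubi8/ubi-minimal   # placeholder image
    command: ["sleep", "infinity"]
    volumeMounts:
    - name: data
      mountPath: /data                # encrypted PV is mounted here
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: podpvc               # binds the pod to the claim above
```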

Your CMEK-protected PV is now ready to use with your OpenShift Container Platform cluster.

5.14. IBM VPC Block CSI Driver Operator

5.14.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for IBM Virtual Private Cloud (VPC) Block Storage.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

To create CSI-provisioned PVs that mount to IBM VPC Block storage assets, OpenShift Container Platform installs the IBM VPC Block CSI Driver Operator and the IBM VPC Block CSI driver by default in the openshift-cluster-csi-drivers namespace.

- The IBM VPC Block CSI Driver Operator provides three storage classes named ibmc-vpc-block-10iops-tier (default), ibmc-vpc-block-5iops-tier, and ibmc-vpc-block-custom for different tiers that you can use to create persistent volume claims (PVCs). The IBM VPC Block CSI Driver Operator supports dynamic volume provisioning by allowing storage volumes to be created on demand, eliminating the need for cluster administrators to pre-provision storage.

- The IBM VPC Block CSI driver enables you to create and mount IBM VPC Block PVs.
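To request a tier other than the default, a PVC can name the storage class explicitly, as in the following minimal sketch. The claim name and size are hypothetical; omitting storageClassName would fall back to the default class, ibmc-vpc-block-10iops-tier.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ibm-vpc-block-pvc                      # hypothetical claim name
spec:
  accessModes:
  - ReadWriteOnce                              # block volumes attach to one node at a time
  storageClassName: ibmc-vpc-block-5iops-tier  # non-default tier provided by the Operator
  resources:
    requests:
      storage: 10Gi                            # hypothetical size
```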

5.14.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.


5.15. OpenStack Cinder CSI Driver Operator

5.15.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for OpenStack Cinder.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a Container Storage Interface (CSI) Operator and driver.

To create CSI-provisioned PVs that mount to OpenStack Cinder storage assets, OpenShift Container Platform installs the OpenStack Cinder CSI Driver Operator and the OpenStack Cinder CSI driver in the openshift-cluster-csi-drivers namespace.

- The OpenStack Cinder CSI Driver Operator provides a CSI storage class that you can use to create PVCs.